Occlusion-Free Image-Based Visual Servoing using Probabilistic Control Barrier Certificates

Abstract

Image-based visual servoing (IBVS) is a widely used approach in robotics that employs visual information to guide robots towards desired positions. However, occlusions can obstruct the visual feature points that provide the visual feedback, which degrades control performance and can cause the servoing task to fail. In this paper, we propose a Control Barrier Function (CBF) based controller that enables occlusion-free IBVS tasks by automatically adjusting the robot’s configuration to keep the feature points in the field of view and away from obstacles. In particular, to account for measurement noise of the feature points, we develop Probabilistic Control Barrier Certificates (PrCBC) using control barrier functions that encode the chance-constrained occlusion avoidance constraints under uncertainty into a deterministic admissible control space for the robot, from which the resulting robot configuration ensures that the feature points stay free from occlusion by obstacles with a predefined satisfying probability. By integrating such constraints with a Model Predictive Control (MPC) framework, a sequence of optimized control inputs can be derived to achieve the primary IBVS task while enforcing occlusion avoidance during robot movements. Simulation results are provided to validate the performance of our proposed method.

1 Introduction

Image-Based Visual Servoing (IBVS) is a key problem in robotics that involves using image data to control the robot’s movement to a desired location. Specifically, feature points are selected in the images and used to generate a sequence of motions that move the robot in response to observations from a camera, ultimately reaching a goal configuration in the real world (Chaumette et al., 2016). Many IBVS methods have been proposed and used in real-world applications such as the Visual Servoing Platform (Marchand et al., 2005), the Amazon Picking Challenge (Huang and Mok, 2018), and surgery (Li et al., 2020). However, these methods often assume that no obstacles obstruct the camera’s field of view (FoV), so that the feature points remain visible during servoing. When obstacles enter the camera’s FoV, direct feedback from objects in the scene may be lost, making navigation more difficult.

To address the problem, some researchers use knowledge of the environment to design the robot controller for cases where occlusion occurs during visual servoing. One idea is to use feature estimation methods to recover control properties during occlusions, either by estimating point feature depth with nonlinear observers (De Luca et al., 2008), or by using a geometric approach to reconstruct a dynamic object characterized by point and line features (Fleurmond and Cadenat, 2016). Another idea is to plan a camera trajectory that avoids occlusion by obstacles altogether. Kazemi et al. (2010) presented an overview of the main visual servoing path-planning techniques, which can be used to guarantee occlusion-free and collision-free trajectories as well as to consider FoV limitations. However, these methods rely on accurate knowledge of the (partial) workspace and may require long computation time.

Other researchers used potential fields (Mezouar and Chaumette, 2002) or variable-weighting Linear Quadratic control laws (Kermorgant and Chaumette, 2013) to preserve visibility and avoid self-occlusions. Although these approaches are suitable for real-time implementation, they may exhibit local minima and unwanted oscillations. Moreover, the existing methods generally do not address uncertainty in the environment, which could easily jeopardize the performance guarantee in the presence of realistic factors such as measurement noise in the image.

On the other hand, Model Predictive Control (MPC) has been widely used in robotic systems to generate a sequence of control inputs by solving an optimization problem over a finite time horizon. It can deal with constraints and non-minimum phase processes, and can implement robust control even if the system dynamics are time-varying. MPC-based visual servoing has been studied extensively (Saragih et al., 2019; Nicolis et al., 2018).

Control Barrier Function (CBF) based methods have been used for safety-critical applications such as automobiles (Xu et al., 2017) and human-robot interaction (Landi et al., 2019), owing to their provable theoretical guarantees that render a desired set forward invariant. CBFs have also been used in visual servoing to keep the target in the FoV by Zheng et al. (2019). However, this method is not suitable for handling occlusion problems and does not account for possible measurement uncertainties.

In this paper, we present a control method that enables a chance-constrained occlusion-free guarantee for IBVS tasks under camera measurement uncertainty through the use of Probabilistic Control Barrier Certificates (PrCBC). The key idea of PrCBC, adopted in our IBVS problem, is to enforce the chance-constrained occlusion avoidance between the feature points and the obstacle in the camera view with deterministic constraints over an existing robotic controller, so that occlusion-free movements can be achieved with a satisfying probability. We further integrate the PrCBC control constraints into a standard model predictive control (MPC) framework. Without loss of generality, we take the general case of a 6-DOF robot arm with eye-in-hand configuration as an example for the IBVS task, and provide simulation results on such a platform to demonstrate the effectiveness of our proposed approach. Our key insight in this paper is that the proposed PrCBC can filter out unsatisfying robot control actions from the primary IBVS controller that may lead to occlusion by the obstacle, while leveraging the optimal controller from the integrated MPC framework. This insight leads us to the following contributions:

1. We present a novel chance-constrained occlusion avoidance method for visual servoing tasks using Probabilistic Control Barrier Certificates (PrCBC) under camera measurement uncertainty, with theoretical analysis on the performance guarantee.

2. We integrate PrCBC with Model Predictive Control (MPC) to provide a high-level planner with a minimally invasive control behavior to guarantee the occlusion avoidance for IBVS tasks, and validate it through extensive simulation results.

2 Preliminaries

2.1 Feature Points Dynamics

Consider a moving camera that is fixed on the robot end-effector in the eye-in-hand configuration and is viewing objects in the workspace. $\mathcal{F}_c$ is a right-handed orthogonal coordinate frame whose origin is at the principal point of the camera and whose $Z$ axis is collinear with the optical axis of the camera. Assuming $(u, v)$ is the pixel coordinate of a static point in the image plane, we can define its normalized image plane coordinate as $(x, y)$ according to the camera projection model (Corke and Khatib, 2011):

$$x = \frac{X}{Z} = \frac{u - u_0}{f_x}, \qquad y = \frac{Y}{Z} = \frac{v - v_0}{f_y} \tag{1}$$

where $u_0, v_0, f_x, f_y$ are camera intrinsic parameters and $(X, Y, Z)$ is the 3D coordinate of the point in $\mathcal{F}_c$.

The image vision feature can be constructed from the normalized image plane coordinates such that $s = [x, y]^T$. Assume there are $n$ feature points extracted from the image, and the state vectors of the current and target vision feature points are denoted as $s(t) \in \mathbb{R}^{2n}$ at time $t$ and $s^* \in \mathbb{R}^{2n}$ respectively. The IBVS task then aims to regulate the positional image feature error vector $e(t) = s(t) - s^*$ to zero through robot movements, which drives the robot to the desired position.

According to (Chaumette and Hutchinson, 2006), the dynamics of the feature points can be expressed as:

$$\dot{s} = L_s(Z)\, v_c \tag{2}$$

where $L_s \in \mathbb{R}^{2n \times 6}$ is the image interaction matrix, which can be computed as in (Chaumette and Hutchinson, 2006), $v_c \in \mathbb{R}^{6}$ is the robot motion control vector expressing the camera translational and rotational velocity in the workspace, and $Z$ denotes the depths of the feature points. In this paper, we assume the depth information of the feature points has been acquired. Therefore in IBVS, (2) represents the system dynamics of the feature points with $s$ as the system state and $v_c$ as the system controller.

Since $s^*$ is predefined and time independent, we have:

$$\dot{e} = \dot{s} = L_s v_c \tag{3}$$

According to (Chaumette and Hutchinson, 2006), the unconstrained gradient-based IBVS controller driving $e \to 0$ can be defined as:

$$v_c = -\lambda L_s^{+} e \tag{4}$$

where $\lambda > 0$ is a constant predefined control gain, and $L_s^{+}$ is the pseudo-inverse of $L_s$, which is given by $L_s^{+} = (L_s^T L_s)^{-1} L_s^T$. To ensure local asymptotic stability, $L_s$ should have full column rank 6, which requires $2n \geq 6$, i.e. at least three feature points should be available in the FoV.
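As a rough illustration of the classical control law referred to in (4) (camera velocity equals the negative gain times the pseudo-inverse of the interaction matrix times the feature error), the following Python sketch may help. The function name `ibvs_velocity`, the default gain, and the toy matrices are assumptions made for illustration only; they are not taken from the original implementation.

```python
import numpy as np

def ibvs_velocity(L, e, gain=0.5):
    """Classical IBVS law: v = -gain * pinv(L) @ e (6-vector camera twist)."""
    L = np.asarray(L, dtype=float)
    e = np.asarray(e, dtype=float)
    # Rank 6 is needed for the pseudo-inverse law; it requires >= 3 points.
    if np.linalg.matrix_rank(L) < 6:
        raise ValueError("interaction matrix must have rank 6")
    return -gain * np.linalg.pinv(L) @ e

# Toy check with an identity interaction matrix (hypothetical values).
L_toy = np.eye(6)
e_toy = np.array([0.2, -0.1, 0.0, 0.05, 0.0, 0.1])
v_toy = ibvs_velocity(L_toy, e_toy, gain=0.5)
```

With the identity matrix, the commanded velocity is simply the scaled negative error, which makes the exponential decay of the error easy to see.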

2.2 Model Predictive Control Policy

To optimize the IBVS controller over a finite time horizon, the MPC policy is used to construct a step time horizon planner rendering a sequence of candidate control actions. Consider the problem of IBVS that seeks to regulate the current feature points to the target feature points through robot movements. According to the error dynamics (3), the discrete-time control system can be described by:

| (5) |

where is an identity matrix and represents the state of the image feature error of feature points at time step . The system state is with as the control input and is locally Lipschitz.

Therefore, the finite-time optimal control problem can be solved at time step using the following policy :

| (6) | ||||

| s.t. | (7) | |||

| (8) | ||||

| (9) |

where is the prediction time horizon, and , and are weighting matrices which represent a trade-off between the small magnitude of the control input (with larger value of ) and fast response (with larger value of and ). denotes the error vector at time step predicted at time step , which is obtained from the current image feature error and the control input of . Finally, the optimized control sequence can be obtained as .
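To make the structure of such a finite-horizon problem concrete, here is a minimal Python sketch under simplifying assumptions that are ours, not the paper's: the error dynamics are taken as `e[k+1] = e[k] + dt * L @ v[k]` with the interaction matrix `L` frozen over the horizon, the cost is quadratic with stage weights `Q`, `R` and terminal weight `P`, and no input bounds are imposed, so the problem reduces to a linear solve. The original work uses a nonlinear solver; all names below are hypothetical.

```python
import numpy as np

def mpc_sequence(e0, L, dt, N, Q, R, P):
    """Unconstrained finite-horizon plan for e[k+1] = e[k] + dt * L @ v[k].

    Minimizes sum_{j=1}^{N-1} e_j'Q e_j + e_N'P e_N + sum_{j=0}^{N-1} v_j'R v_j
    with L held constant over the horizon (an assumption of this sketch).
    Returns an (N, p) array whose first row is the control to apply now.
    """
    e0 = np.asarray(e0, dtype=float)
    L = np.asarray(L, dtype=float)
    m, p = e0.size, L.shape[1]
    B = dt * L
    # Stacked prediction E = E0 + S @ V, where block row j holds e_{j+1}.
    S = np.zeros((N * m, N * p))
    for j in range(N):
        for i in range(j + 1):
            S[j * m:(j + 1) * m, i * p:(i + 1) * p] = B
    E0 = np.tile(e0, N)
    Wq = np.kron(np.eye(N), Q)
    Wq[-m:, -m:] = P                      # terminal weight on e_N
    Wr = np.kron(np.eye(N), R)
    # Setting the gradient of the quadratic cost to zero gives a linear system.
    H = S.T @ Wq @ S + Wr
    g = S.T @ Wq @ E0
    return np.linalg.solve(H, -g).reshape(N, p)

# Scalar toy problem (hypothetical numbers) that can be checked by hand.
I1 = np.eye(1)
plan = mpc_sequence([2.0], [[1.0]], dt=1.0, N=2, Q=I1, R=I1, P=I1)
```

Only the first row of the returned sequence would be passed on to the occlusion-avoidance step; the remaining rows are discarded and recomputed at the next time step.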

2.3 Obstacle Model and Occlusion Avoidance

Without loss of generality, consider an obstacle that is moving in the workspace and may occlude the feature points in the camera view. We model the obstacle as a rigid sphere with the radius in the workspace. Similar to the feature points, the normalized image plane coordinates of the obstacle center and radius of the obstacle can be defined as and respectively. To simplify the notation, we will use and to denote the real-time state of the obstacle center and obstacle radius in the normalized image plane.

In this way, for any pair-wise occlusion avoidance between the feature point and the obstacle in the normalized image plane, the occlusion-free condition and state set can be defined as follows:

| (10) |

| (11) |

Then for all feature points, the desired set for occlusion-free states is thus defined as:

| (12) |
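The following Python sketch illustrates one plausible form of such a pair-wise condition, assuming (our assumption, since the exact expression is not reproduced here) that a feature point is occlusion free when its squared distance to the obstacle center in the normalized image plane is at least the squared obstacle radius. The function names are hypothetical.

```python
import numpy as np

def barrier(s_i, s_o, r_o):
    """Assumed barrier: squared center distance minus squared radius."""
    d = np.asarray(s_i, dtype=float) - np.asarray(s_o, dtype=float)
    return float(d @ d - r_o ** 2)

def occlusion_free(features, s_o, r_o):
    """True when every feature point lies on or outside the obstacle disc."""
    return all(barrier(s, s_o, r_o) >= 0.0 for s in features)
```

The set over all feature points is then simply the intersection of the pair-wise sets, which is what `occlusion_free` checks.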

2.4 Occlusion-free Constraints using CBF

Control Barrier Functions (CBF) (Ames et al., 2019) have been widely applied to generate control constraints that render a set forward invariant, i.e. if the system state starts inside a set, it will never leave this set under the satisfying controller. The main idea of CBF is summarized as the following Lemma.

Lemma 1

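[summarized from Ames et al. (2019)] Given a dynamical system affine in control $\dot{x} = f(x) + g(x)u$ and a desired set $\mathcal{H}$ as the 0-superlevel set of a continuously differentiable function $h: \mathcal{X} \to \mathbb{R}$, the function $h$ is a control barrier function if there exists an extended class-$\mathcal{K}$ function $\kappa(\cdot)$ such that $\sup_{u \in \mathcal{U}} \{\dot{h}(x, u) + \kappa(h(x))\} \geq 0$ for all $x \in \mathcal{X}$. The admissible control space for any Lipschitz continuous controller $u$ rendering $\mathcal{H}$ forward invariant (i.e. keeping the system state $x$ in $\mathcal{H}$ over time) thus becomes:

$$\mathcal{B}(x) = \{u \in \mathcal{U} \mid \dot{h}(x, u) + \kappa(h(x)) \geq 0\} \tag{13}$$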

Based on the occlusion avoidance condition (10)-(11) and the Lemma 1, if the feature points are not occluded by the obstacle initially, then the admissible control space for the robot to keep the feature points free from occlusion can be represented by the following control constraints over :

| (14) |

where defines the Control Barrier Certificates (CBC) for the feature point-obstacle occlusion avoidance, and is a user-defined parameter in the particular choice of as in (Luo et al., 2020b). It will render the occlusion-free set forward invariant, i.e. as long as we can guarantee the control input lies in the set , the feature points will not be occluded by the obstacle at all times.
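As a brief illustration of why a constraint of this type keeps the state inside the safe set, consider a scalar toy system with a linear class-$\mathcal{K}$ function. The toy system and the symbols $x$, $u$, $\gamma$ below are assumptions chosen for illustration and are not the system studied in this paper:

```latex
\begin{align*}
  &\dot{x} = u, \qquad h(x) = x, \qquad \kappa(h) = \gamma h, \quad \gamma > 0, \\
  &\dot{h} + \gamma h \ge 0 \;\Longleftrightarrow\; u \ge -\gamma x, \\
  &\Rightarrow\; h(x(t)) \ge h(x(0))\, e^{-\gamma t} \ge 0
   \quad \text{whenever } h(x(0)) \ge 0 .
\end{align*}
```

The last line follows from the comparison lemma: any control satisfying the constraint lets $h$ decay no faster than an exponential that never crosses zero, so a state that starts in the safe set stays there.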

2.5 Chance Constraints for Measurement Uncertainty

Although (14) indicates an explicit condition for occlusion avoidance with the perfect knowledge of the actual position , the presence of measurement uncertainty on makes it challenging to enforce (14), or even impossible when the measurement noise is unbounded, e.g. Gaussian noise. In this paper, we consider the realistic situation where the pixel coordinates of the extracted feature points acquired by the camera are corrupted by Gaussian-distributed noise, formulated as follows:

| (15) |

where are the Gaussian measurement noises and can be considered as independent random variables with zero mean and as the variances. With that, for the rest of the paper we assume only the noisy positions and of extracted feature points are available when the uncertainty is considered.

Then the occlusion avoidance condition in (10)-(11) can be considered in a chance-constrained setting. Formally, given the user-defined satisfying probability threshold of the occlusion avoidance as , we have:

| (16) |

where Pr(.) indicates the probability of an event. Note that when the is approaching 1, it will lead to a more conservative controller to maintain the probabilistic occlusion-free set. In Section 3.2, we will discuss how to transfer the chance constraints on the feature points states into a deterministic admissible control space w.r.t. , so that the occlusion-free performance could be guaranteed with satisfying probability as implied in (16).
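To give a feel for what such a chance constraint measures, the Python sketch below estimates the occlusion-free probability of a single feature point by Monte Carlo sampling. It assumes, for illustration only, isotropic zero-mean Gaussian noise with standard deviation `sigma` around the measured position and a disc-shaped obstacle in the normalized image plane; the function name and default parameters are hypothetical.

```python
import numpy as np

def occlusion_free_probability(s_hat, s_o, r_o, sigma, n_samples=10000, seed=0):
    """Monte Carlo estimate of Pr(||s - s_o|| >= r_o) with s ~ N(s_hat, sigma^2 I)."""
    rng = np.random.default_rng(seed)
    s_hat = np.asarray(s_hat, dtype=float)
    s_o = np.asarray(s_o, dtype=float)
    # Draw plausible true positions around the noisy measurement.
    samples = s_hat + sigma * rng.standard_normal((n_samples, 2))
    dist_sq = np.sum((samples - s_o) ** 2, axis=1)
    return float(np.mean(dist_sq >= r_o ** 2))
```

A chance constraint with threshold close to 1 would require this estimate to stay above the threshold. Sampling is only a diagnostic here; the method in this paper replaces it with a deterministic sufficient condition.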

2.6 Problem Statement

To achieve occlusion-free IBVS, we first use the MPC as a planner to generate the unconstrained control sequence at each time step . To address the occlusion problem caused by the obstacle, we discuss two situations to define the optimization problem.

Without Noise: First, we assume that the camera can obtain accurate pixel coordinates from the image, i.e. we can extract the feature points precisely. We also assume the feature points are not occluded by the obstacle at the initial location. Then we can formally define this occlusion-free constraint with the following step-wise Quadratic Program (QP) at each time step :

| (17) | |||

| (18) |

where is the first step of the control sequence generated from MPC at time , and is the maximum velocity. Hence the resulting is the step-wise optimized controller to be executed at each that renders the occlusion-free set in (12) forward invariant.
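For intuition, a step-wise QP of this kind has a closed-form solution in the special case of a single linear constraint `a @ v >= b` and no velocity bound: the nominal control is projected onto the constraint half-space. The Python sketch below shows that special case only. It is an assumption-laden simplification, since several feature points or a velocity bound would require a general QP solver, and the names `safety_filter`, `a`, and `b` are hypothetical.

```python
import numpy as np

def safety_filter(v_nom, a, b):
    """Closest velocity to v_nom (Euclidean norm) satisfying a @ v >= b."""
    v_nom = np.asarray(v_nom, dtype=float)
    a = np.asarray(a, dtype=float)
    violation = b - a @ v_nom
    if violation <= 0.0:
        return v_nom.copy()               # nominal control is already safe
    norm_sq = a @ a
    if norm_sq == 0.0:
        raise ValueError("constraint is infeasible: a is zero and b > 0")
    # Minimal correction along the constraint normal.
    return v_nom + (violation / norm_sq) * a
```

The filter leaves the planner's control untouched whenever it is already admissible, which is the minimally invasive behavior described above.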

With Noise: In the presence of camera measurement noise, only the inaccurate vision feature information of the normalized image plane and can be obtained for the IBVS task. In this case, the chance-constrained occlusion-free problem can be formally defined as:

| (19) | |||

| (20) |

3 Method

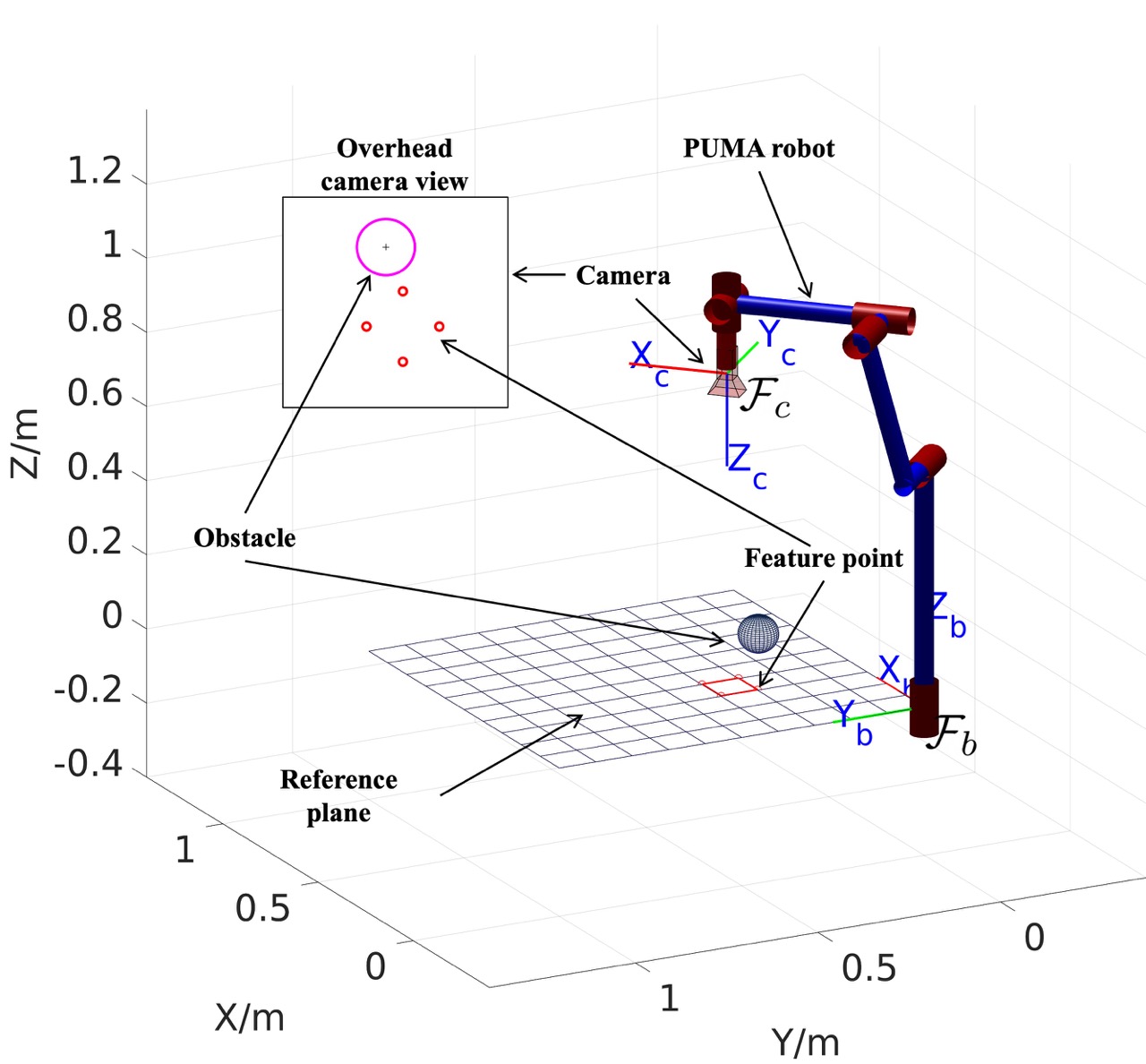

We consider the IBVS task scenario as shown in Fig. 1, where the camera is attached to a 6-DOF PUMA robot end-effector and four feature points are set (i.e. ) as the extracted image feature for guiding the IBVS task as commonly assumed e.g. (Chaumette and Hutchinson, 2006). There is one moving obstacle with radius in the workspace which may occlude the feature points during the execution of the primary IBVS task. Therefore, the objective of the IBVS task for the robot to achieve a desired configuration is to move the PUMA robotic arm in a way that the feature points positions in the camera view eventually converge to the desired positions without occlusion from the obstacle at all times.

With that, we have the feature points state as and the corresponding control input that expresses the 6-DOF motion controller of the camera. and are the vector of the linear and angular velocities. is the interaction matrix which is detailed later in (21). Similar to , we can acquire the state of the obstacle center as . According to (Chaumette and Hutchinson, 2006), we can calculate interaction matrix of -th feature point:

| (21) |

where and is the depth of -th feature point in . Similarly, the interaction matrix of the obstacle center and obstacle radius, and can be derived as:

| (22) |

| (23) |

where and is obstacle depth in .
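For reference, the standard point-feature interaction matrix from Chaumette and Hutchinson (2006) can be sketched in Python as follows. The function names and the stacking helper are illustrative assumptions; only the two-row point-feature form is shown, and the obstacle radius interaction matrix is not reproduced.

```python
import numpy as np

def point_interaction_matrix(x, y, Z):
    """2x6 interaction matrix of a normalized image point (x, y) at depth Z."""
    return np.array([
        [-1.0 / Z, 0.0, x / Z, x * y, -(1.0 + x ** 2), y],
        [0.0, -1.0 / Z, y / Z, 1.0 + y ** 2, -x * y, -x],
    ])

def stacked_interaction_matrix(points, depths):
    """Stack the per-point matrices into a (2n)x6 matrix for n points."""
    return np.vstack([point_interaction_matrix(x, y, Z)
                      for (x, y), Z in zip(points, depths)])
```

For four feature points the stacked matrix has shape 8x6, matching the feature state and the 6-DOF camera velocity used in this section.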

Intuitively, the distance will be used to derive the analytical form of the occlusion-free set. According to the different situations discussed in Section 2.6, the CBC and PrCBC for occlusion-free performance are detailed as follows.

3.1 Control Barrier Certificates for Occlusion Avoidance

First, we consider the condition where there is no measurement noise and the camera can acquire accurate pixel coordinates. Given the occlusion-free condition in (10)-(11) and the form of CBC in (14), we now formally define the CBC for the occlusion avoidance condition as follows:

Theorem 3.1

We demonstrate that our proposed control barrier function in (10) is a valid control barrier function. As summarized in (Capelli and Sabattini, 2020), it follows from Lemma 1 that a function must satisfy the following three conditions to be a valid CBF: (a) it is continuously differentiable, (b) its first-order time derivative depends explicitly on the control input (i.e. it is of relative degree one), and (c) it is possible to find an extended class-$\mathcal{K}$ function such that the CBF condition of Lemma 1 holds at every state.

Hence, consider our proposed candidate CBF in (10). From its time derivative in (25), it is straightforward that the first-order derivative of (10) depends explicitly on the control input. Thus, the function in (10) is (a) continuously differentiable and (b) of relative degree one.

For condition (c), we need to prove that the following inequality has at least one solution.

| (26) |

Given the form of in (25), then (26) can be re-written as:

| (27) |

where , and . Given a specific state of and , will be a constant. It is also straightforward that the six terms of cannot all be zero at the same time. Therefore, if we consider an unbounded control input , we can always find a solution such that .

In the case that is bounded, several existing approaches could be employed to enforce the feasibility of the condition in (27). For example, in (Lyu et al., 2021), we provided an optimization solution for parameter over time to guarantee that the admissible control set is never empty when a feasible solution exists. In addition, the authors in (Xiao et al., 2022) provided a novel method to find sufficient conditions, which are captured by a single constraint and enforced by an additional CBF, to guarantee the feasibility of the original CBF control constraint. Readers are referred to (Xiao et al., 2022) for further details. With that, we conclude the proof.
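To see why a condition of this kind is linear in the control input, the following worked derivation may help. It rests on assumptions of ours rather than on the paper's exact expressions: the barrier is taken as $h_i = \|s_i - s_o\|^2 - r_o^2$, the class-$\mathcal{K}$ function as $\kappa(h) = \gamma h$, the obstacle's own motion is ignored, and $L_{s_i}$, $L_{s_o}$, $L_{r_o}$ denote the interaction matrices of the feature point, obstacle center, and obstacle radius.

```latex
\begin{align*}
  \dot{h}_i &= 2 (s_i - s_o)^{T} (\dot{s}_i - \dot{s}_o) - 2 r_o \dot{r}_o \\
            &= \underbrace{\left[ 2 (s_i - s_o)^{T} (L_{s_i} - L_{s_o})
               - 2 r_o L_{r_o} \right]}_{A_i \,\in\, \mathbb{R}^{1 \times 6}} v_c , \\
  \dot{h}_i + \gamma h_i \ge 0
            &\;\Longleftrightarrow\; A_i v_c \ge -\gamma h_i .
\end{align*}
```

Under these assumptions the constraint is a single half-space in the six-dimensional velocity space, so it is feasible for unbounded $v_c$ whenever the row vector $A_i$ is nonzero, which mirrors the argument in the proof above.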

3.2 Probabilistic Control Barrier Certificates (PrCBC) for Occlusion Avoidance

In presence of uncertainty, similar to (Luo et al., 2020a), we have the sufficient condition of (16) as

| (28) |

Following (Luo et al., 2020a), we define our PrCBC for the chance-constrained occlusion free condition as follows:

Probabilistic Control Barrier Certificates (PrCBC): Given a confidence level , the admissible control space determined as below enforces the chance-constrained condition in (16) at all times.

| (29) |

The analytical form of will be given later in this section.

Computation of PrCBC: Given the confidence level , the chance constraints of can be transformed into a deterministic quadratic constraint over the controller in the form of (31). We denote as one solution that makes the joint cumulative distribution function (CDF) of random variable of equal to , where represents the side length of the square that covers the cumulative distribution probability with . Then, the deterministic constraints below become the sufficient condition for :

| (30) |

where and . Therefore, we formally construct the PrCBC as the following deterministic quadratic constraints:

| (31) |

With that, we have , and . Due to the size limitation of the physical workspace in the visual servoing scenario, the quadratic control constraint may not be feasible, e.g. when the moving obstacle is very large, it is impossible to avoid occlusion. In this extreme case, one alternative solution is to hold the robot static and wait until the obstacle no longer occludes the feature points.
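The idea of a square that covers a given probability mass can be sketched numerically. The Python code below assumes, purely for illustration, a two-dimensional noise vector with independent zero-mean Gaussian components of equal standard deviation `noise_std`, and returns the half side length of the centered square whose probability equals the confidence level. The function names are hypothetical and the paper's exact construction may differ.

```python
from math import sqrt
from statistics import NormalDist

def half_side_for_confidence(confidence, noise_std):
    """Half side d with Pr(|w_x| <= d and |w_y| <= d) = confidence."""
    if not 0.0 < confidence < 1.0:
        raise ValueError("confidence must lie strictly between 0 and 1")
    # Independence: the joint probability is the product of two equal
    # per-axis probabilities, so each axis must cover sqrt(confidence).
    per_axis = sqrt(confidence)
    return noise_std * NormalDist().inv_cdf(0.5 * (1.0 + per_axis))

def box_probability(d, noise_std):
    """Probability that both noise components fall within [-d, d]."""
    p_axis = 2.0 * NormalDist().cdf(d / noise_std) - 1.0
    return p_axis ** 2
```

Inflating the obstacle region by such a bound turns the probabilistic condition into a worst-case deterministic one, at the price of a more conservative controller as the confidence level approaches 1.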

3.3 MPC Planner with Occlusion Avoidance

To combine the advantages of the Model Predictive Control policy for high-level planning with those of the CBC/PrCBC for enforcing the occlusion-free condition, we interleave the MPC with the proposed CBC/PrCBC to generate an optimized control sequence. Specifically, after executing the CBC/PrCBC to minimally modify the control to at time step , the process is repeated at the time step : the policy in Section 2.2 generates the control sequence , the optimized control is obtained, and the occlusion-free control to execute next is calculated.
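The interleaving described above can be sketched as a receding-horizon loop. In the Python sketch below, `plan`, `safety_filter`, and `step` are hypothetical callables standing in for the MPC policy, the CBC/PrCBC step, and the system update; the toy stand-ins at the bottom are assumptions used only to exercise the loop.

```python
import numpy as np

def run_receding_horizon(e0, plan, safety_filter, step, n_steps):
    """Alternate planning and safety filtering, applying one control per step."""
    e = np.asarray(e0, dtype=float)
    history = [e.copy()]
    for _ in range(n_steps):
        v_seq = plan(e)                       # nominal control sequence
        v_safe = safety_filter(e, v_seq[0])   # minimally modify the first control
        e = step(e, v_safe)                   # apply it and observe the new error
        history.append(e.copy())
    return np.array(history)

# Toy stand-ins (hypothetical): proportional planner, clipping filter,
# and single-integrator error dynamics with unit time step.
toy_plan = lambda e: np.tile(-0.5 * e, (3, 1))
toy_filter = lambda e, v: np.clip(v, -0.2, 0.2)
toy_step = lambda e, v: e + v
trace = run_receding_horizon([1.0, -1.0], toy_plan, toy_filter, toy_step, 5)
```

Only the first element of each planned sequence is ever executed, so the safety step needs to be evaluated once per time step rather than over the whole horizon.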

4 Experiment Results and Discussion

In this section, we use MATLAB as the simulation platform and present simulation results to validate the effectiveness of our proposed method. The Robotics Toolbox for MATLAB (Corke, 2017) is used to construct the 6-DOF robot simulation model, and the solver IPOPT (Wächter and Biegler, 2006) is used to generate a high-level planner.

4.1 Simulation Performance

To validate the performance of our method, we implement our algorithm under different experiment setups:

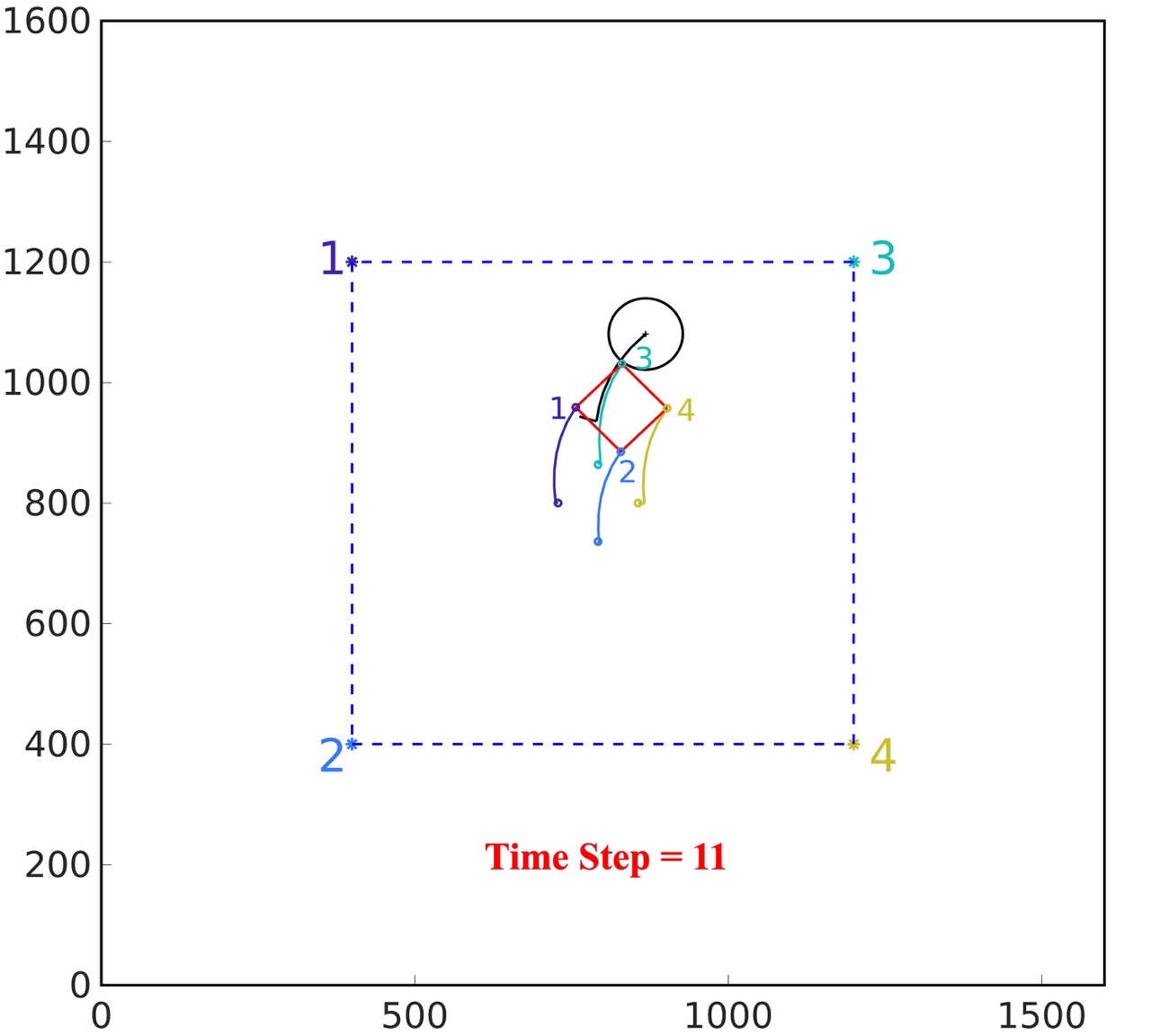

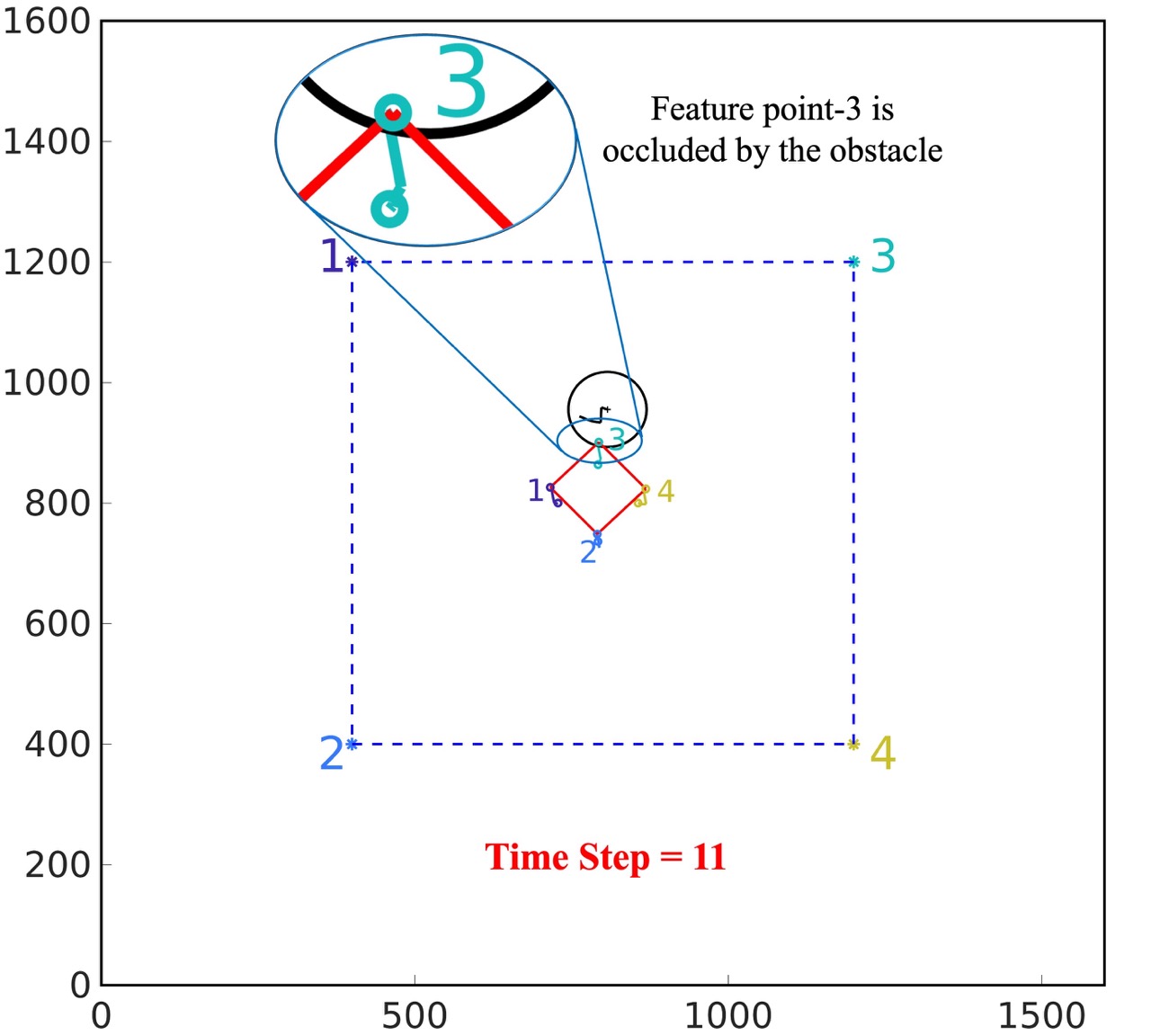

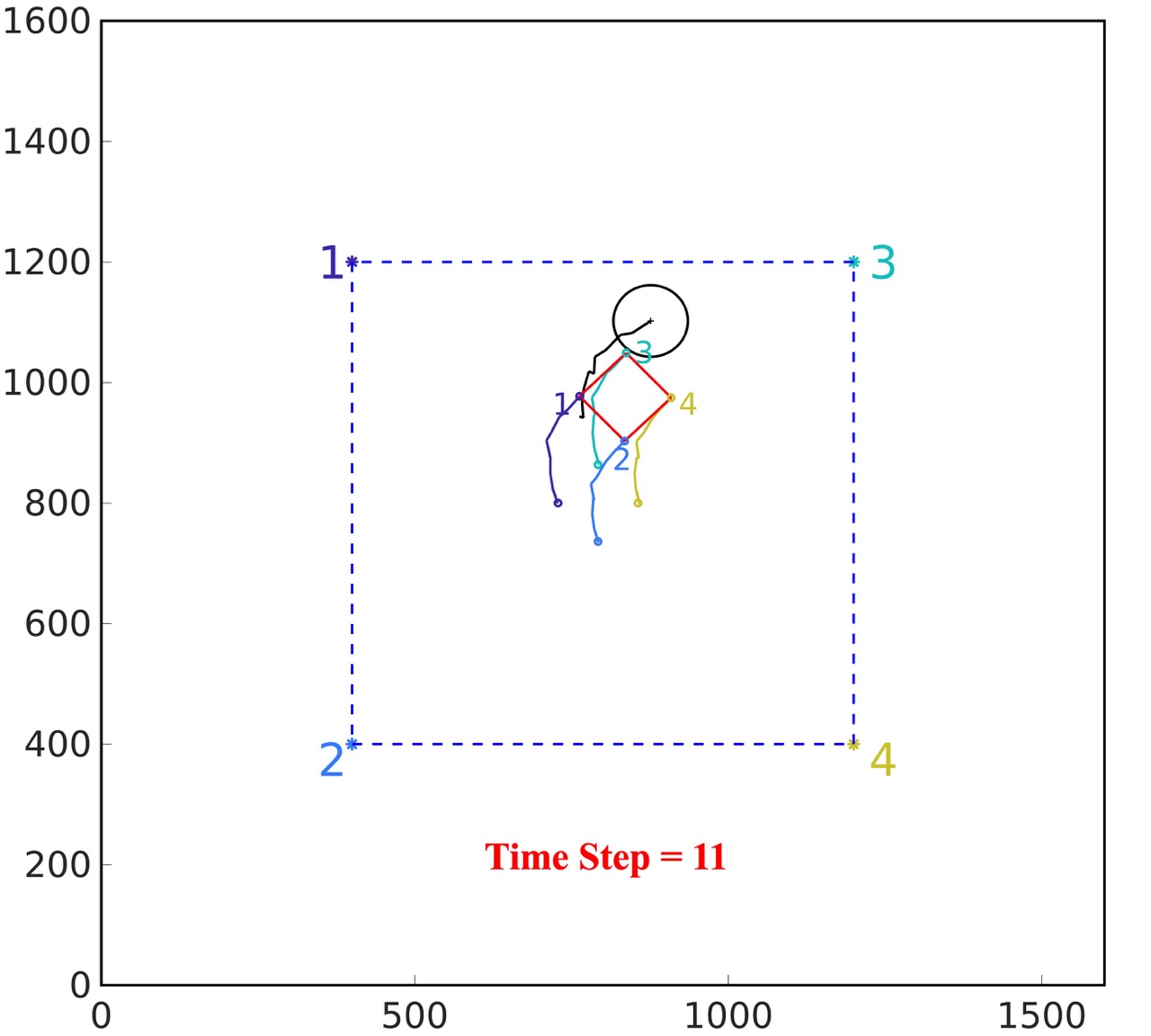

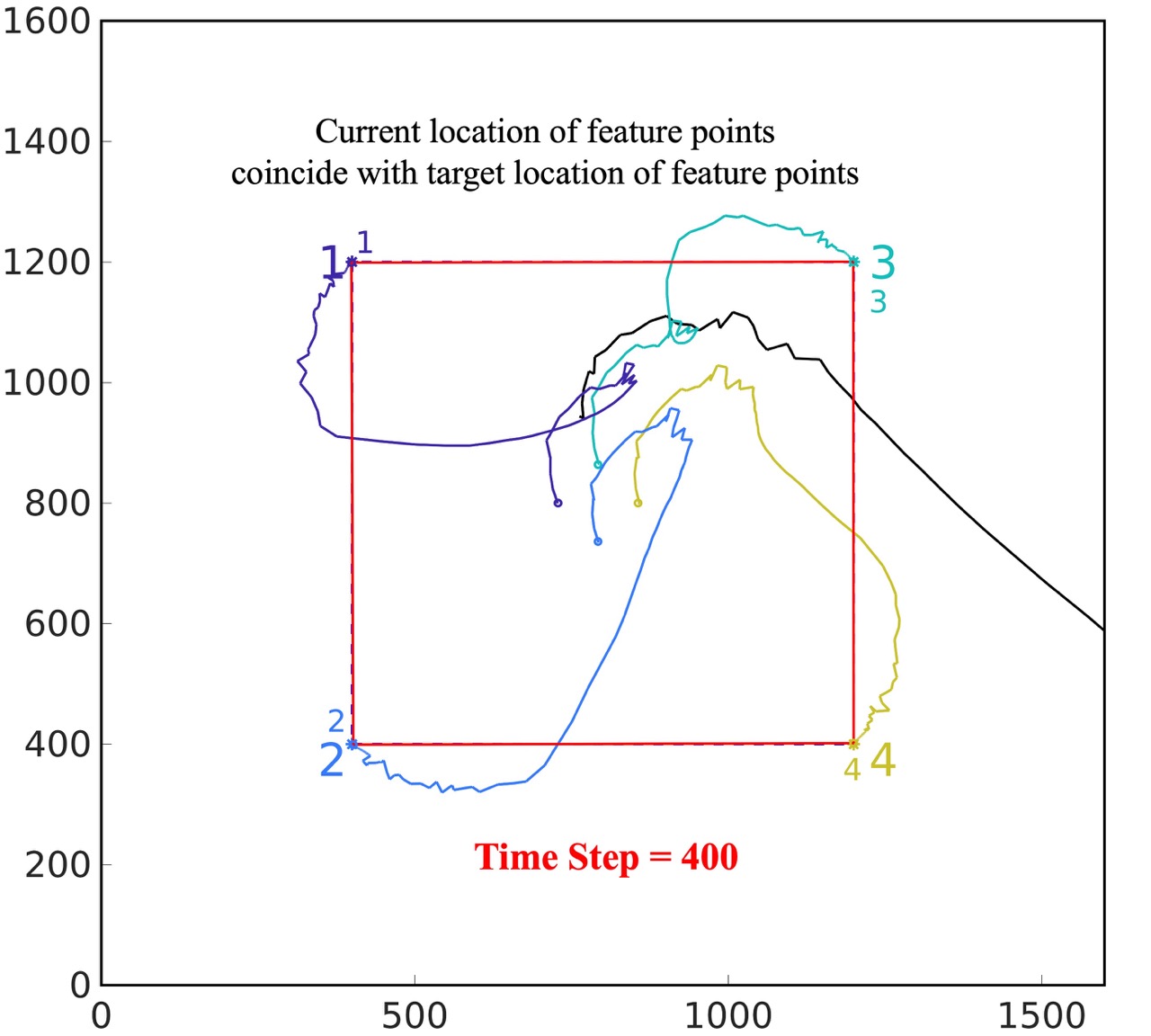

CBC without noise: According to the experiment setup shown in Fig. 2(a), the obstacle is moving in the workspace with initial location . In this experiment, we assume the camera can acquire the accurate coordinates of the feature points and obstacle center. The results with the designed CBC controller are shown in Fig. 2(b) and Fig. 2(c): the feature points avoid occlusion by the obstacle and converge to the pre-defined target locations successfully.

CBC with noise: With the same initial condition of Fig. 2(a), we assume the pixel coordinates of the feature points and obstacle center are acquired by the camera with Gaussian distributed noise . From Fig. 2(d), it can be observed that the feature point has been occluded by the obstacle in the camera view.

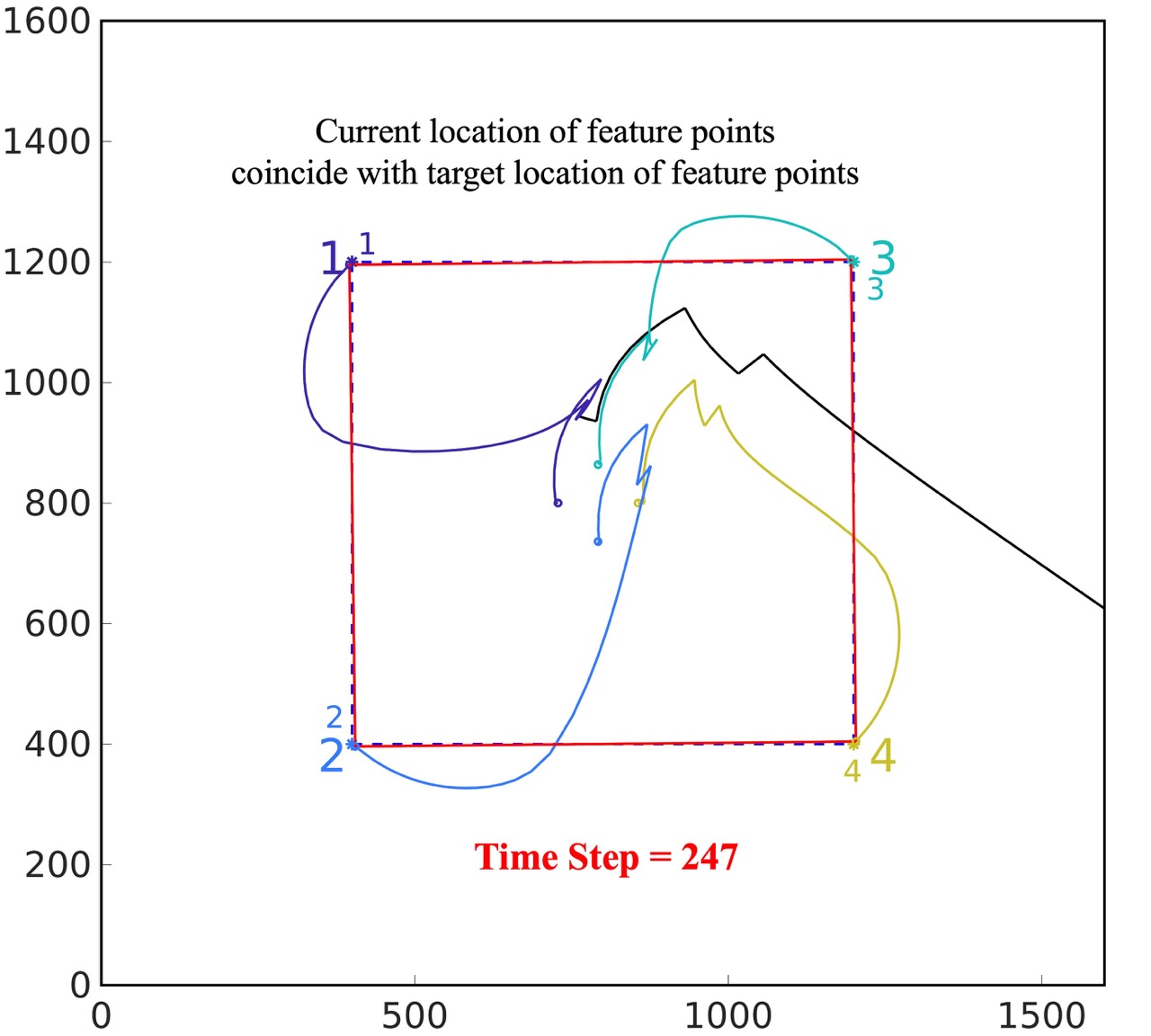

PrCBC with noise: With the same initial condition and measurement noise as in Fig. 2(a), the confidence level is set to be . As shown in Fig. 2(e), the feature points can avoid occlusion by the obstacle in the camera view. As shown in Fig. 2(f), the robot can navigate the camera to the desired location where the four feature points converge to the pre-defined target locations in the camera view.

Hence, the CBC could enforce an occlusion-free IBVS only when accurate feature points information is available. In comparison, the PrCBC is able to guarantee chance-constrained probabilistic occlusion avoidance even in presence of the measurement noise.

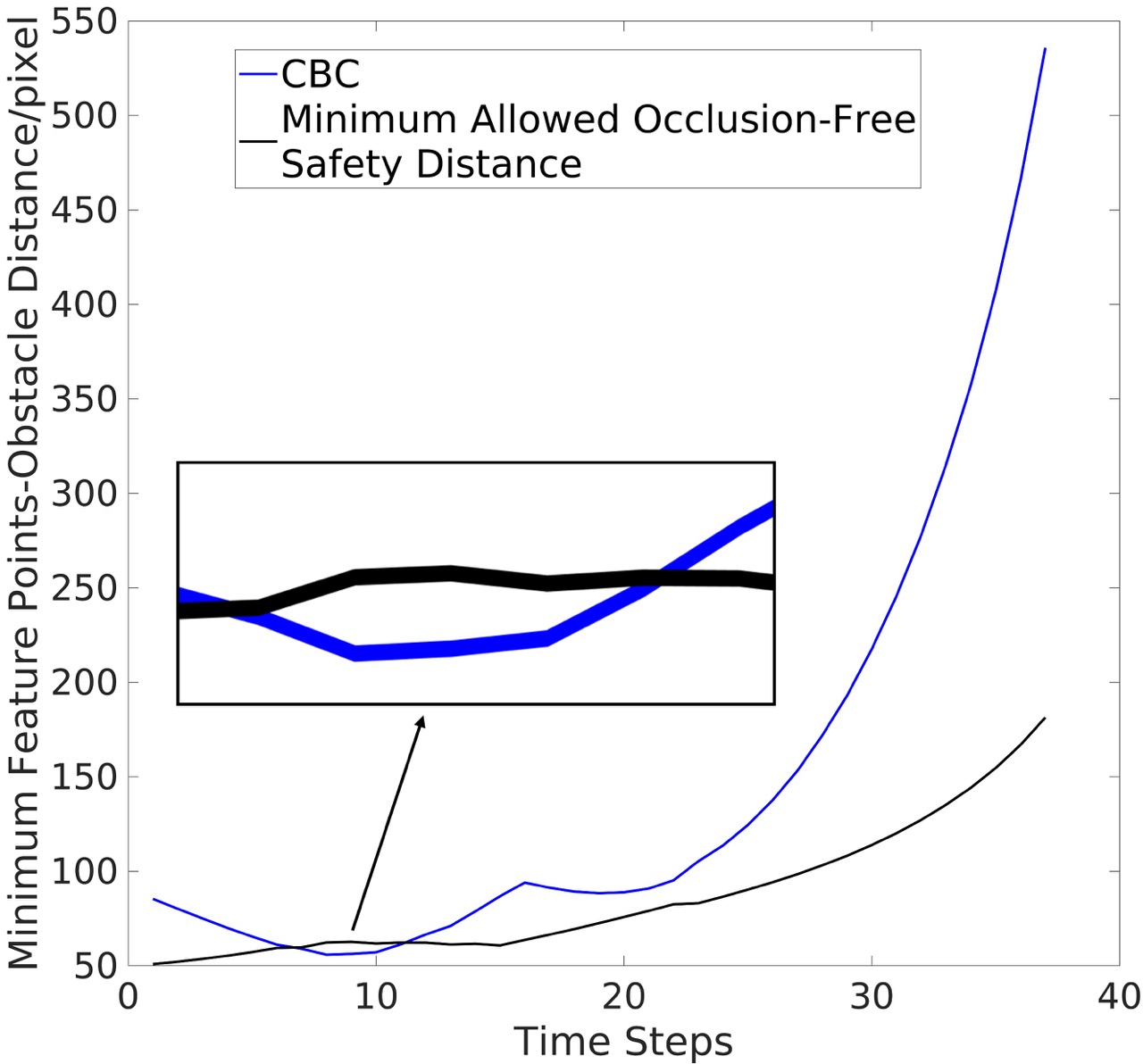

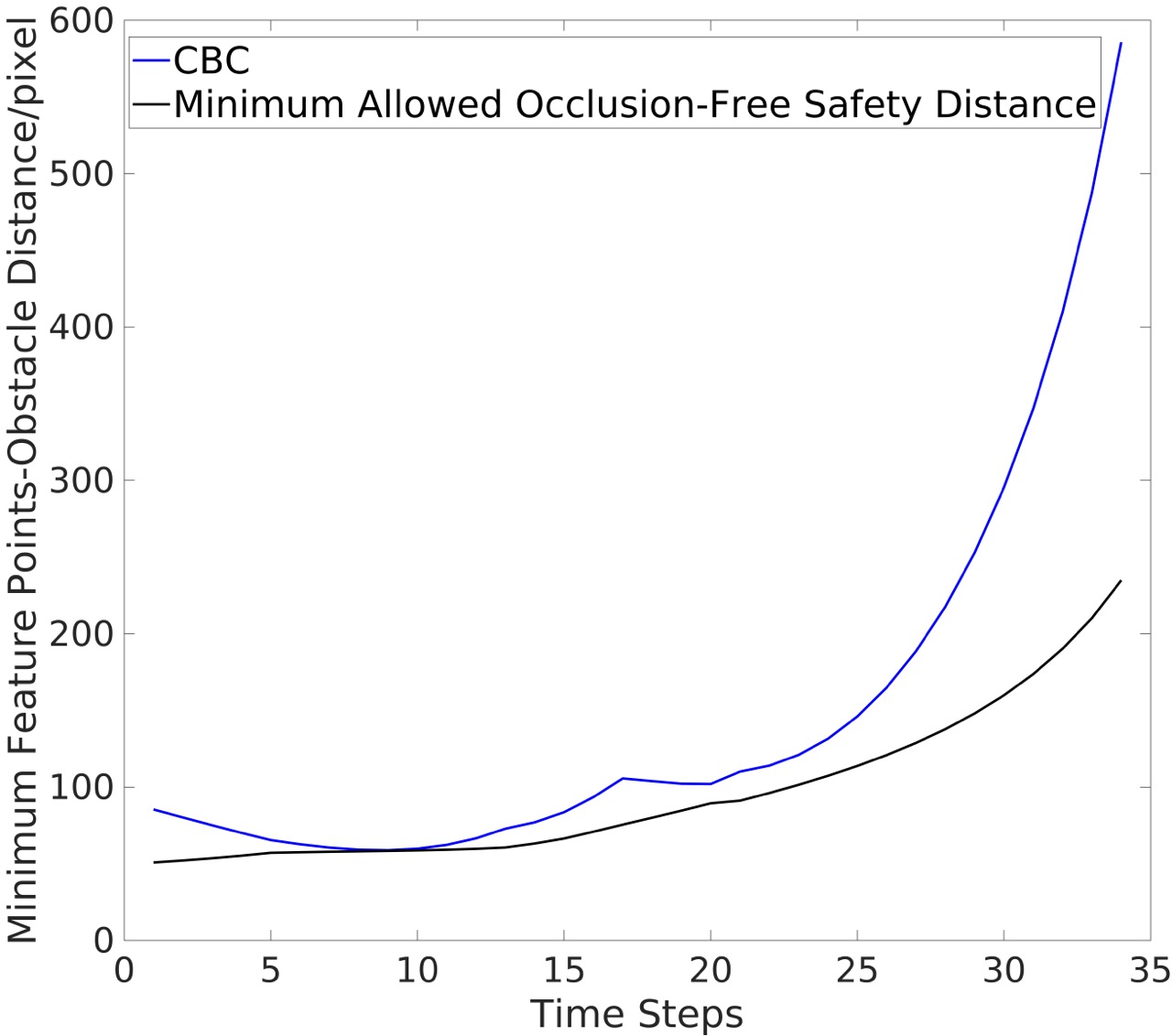

4.2 Quantitative Results

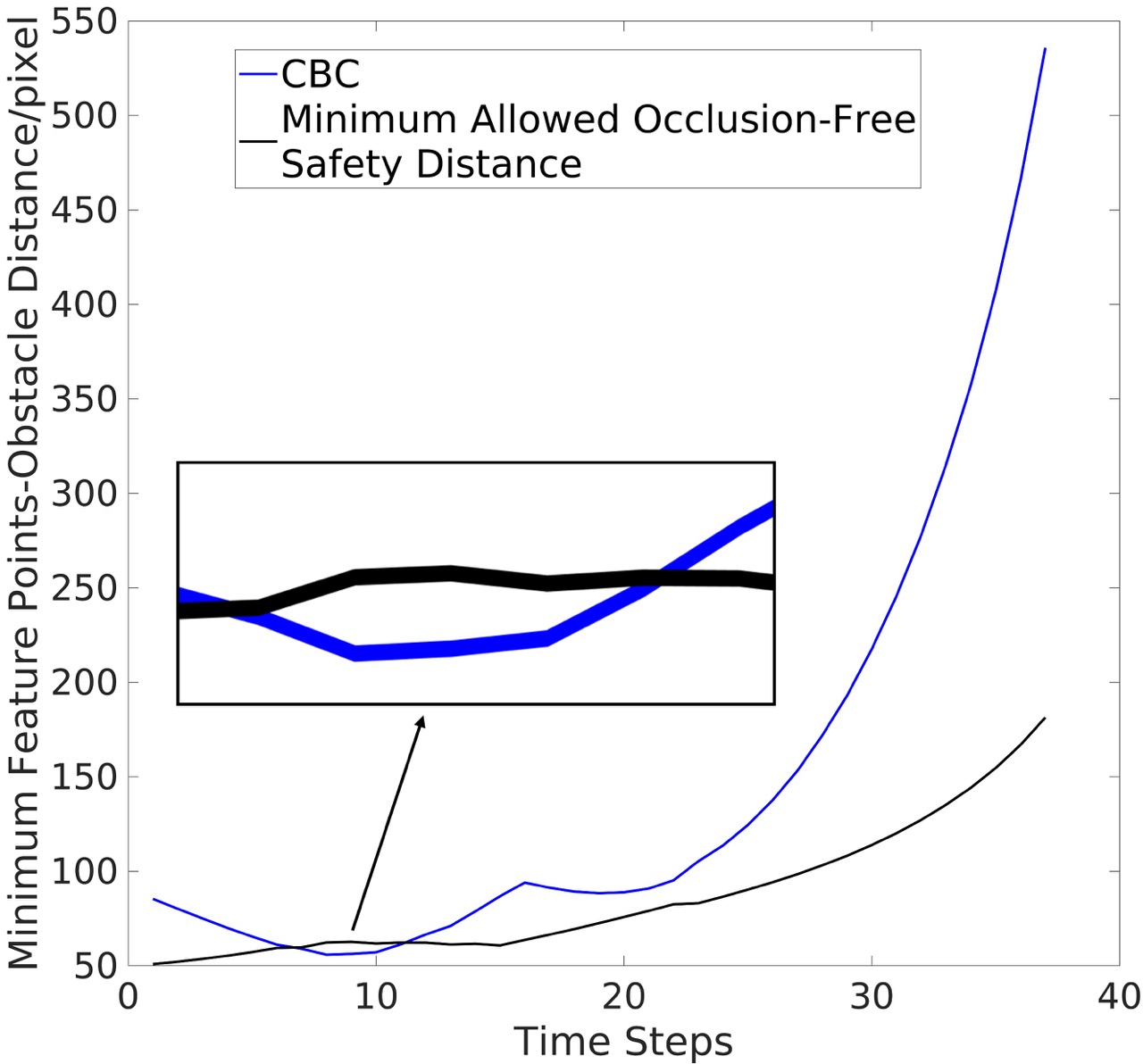

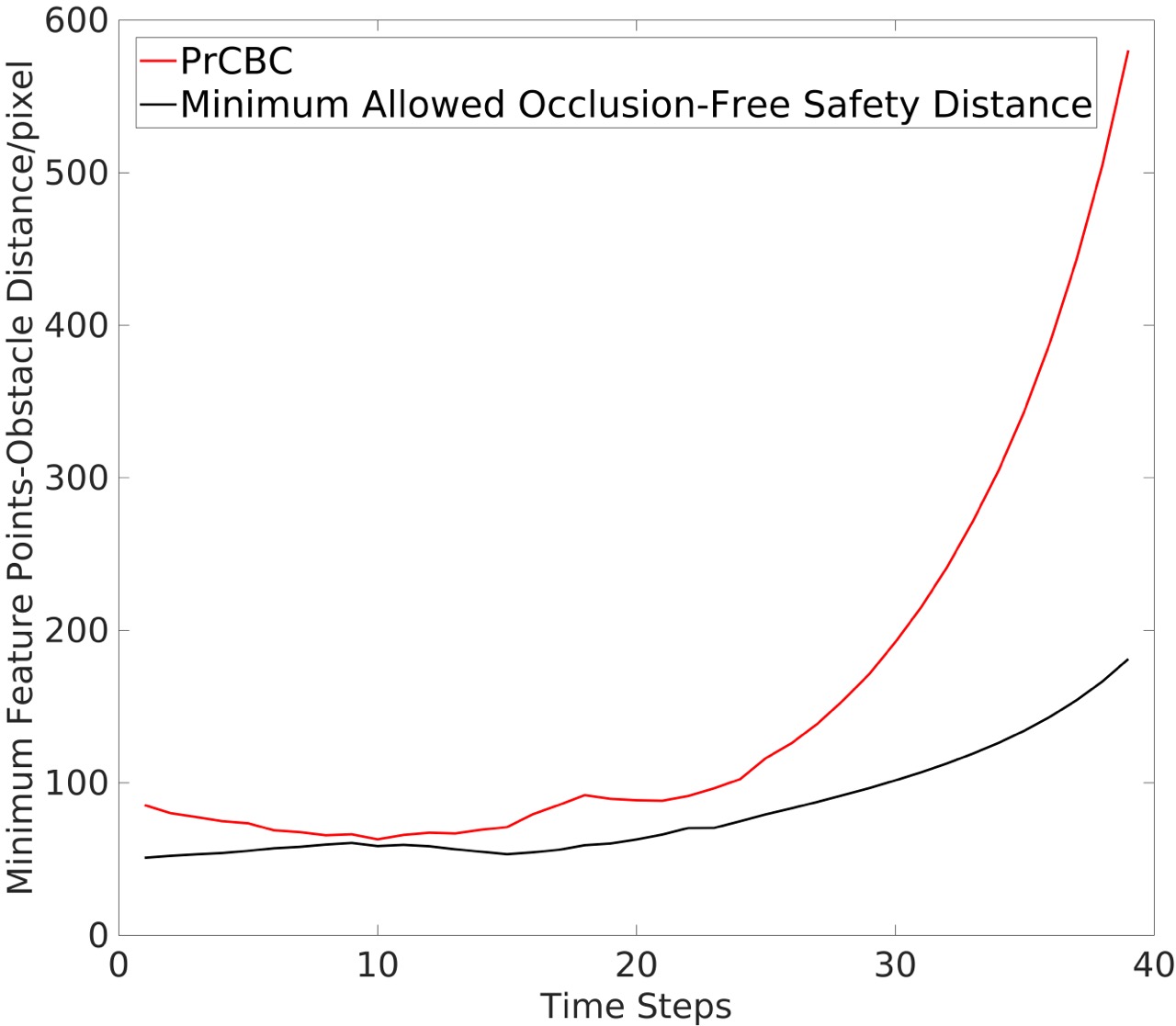

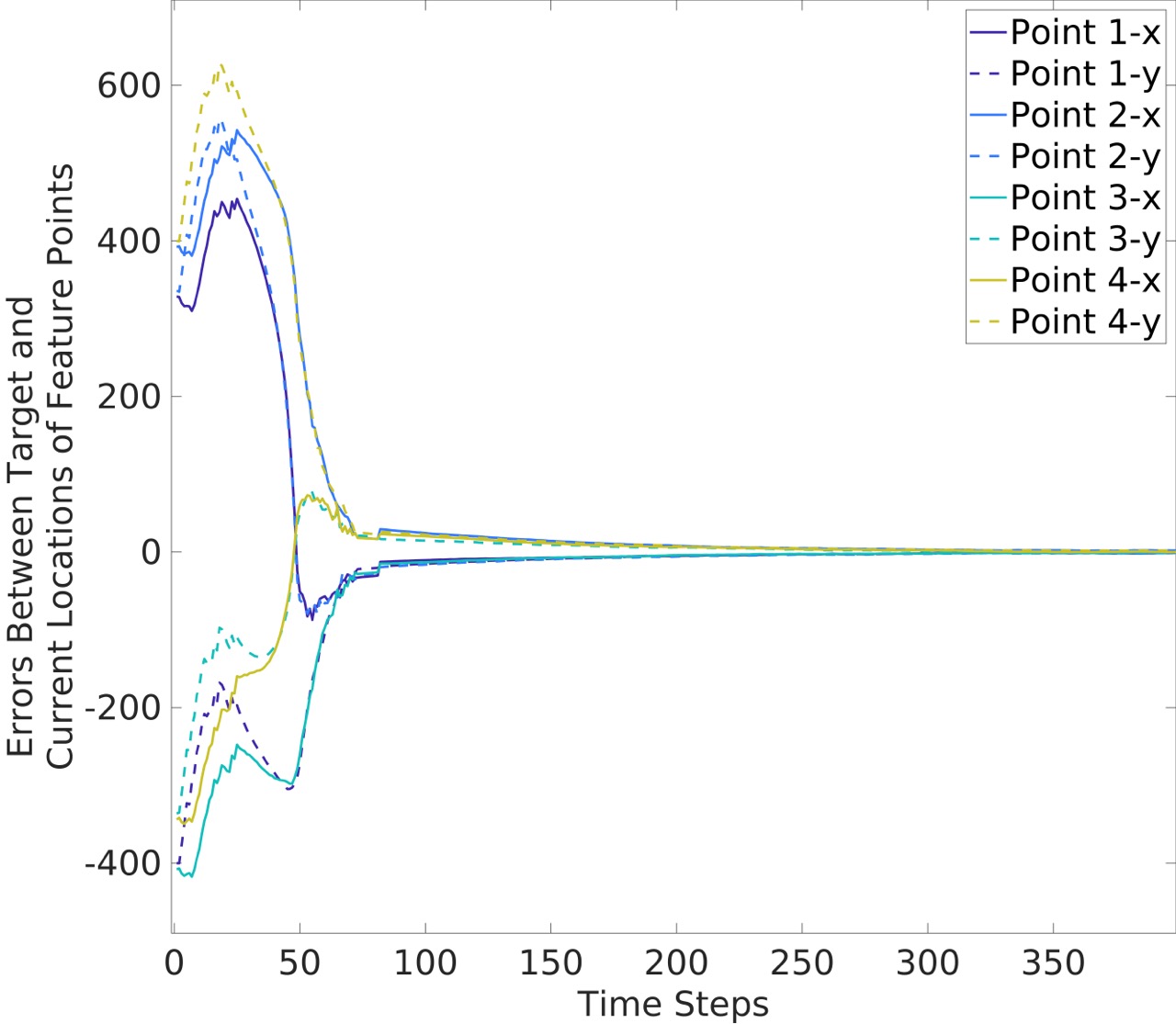

Next, we present quantitative results from the simulation experiments. As shown in Fig. 3(a), without noise in the pixel coordinates, the CBC method performs well, always ensuring that the minimum feature point-obstacle distance exceeds the occlusion-free safety distance. However, when noise is added, the CBC method is unable to guarantee this, as shown in Fig. 3(b) (here we continue running the simulation until the obstacle moves out of the camera view). The PrCBC method, on the other hand, consistently maintains the minimum feature point-obstacle distance above the safety distance, even in the presence of observation noise. Fig. 3(d) shows the convergence of the feature point locations in the camera view. It validates that our method can accomplish the IBVS task with occlusion-free performance.

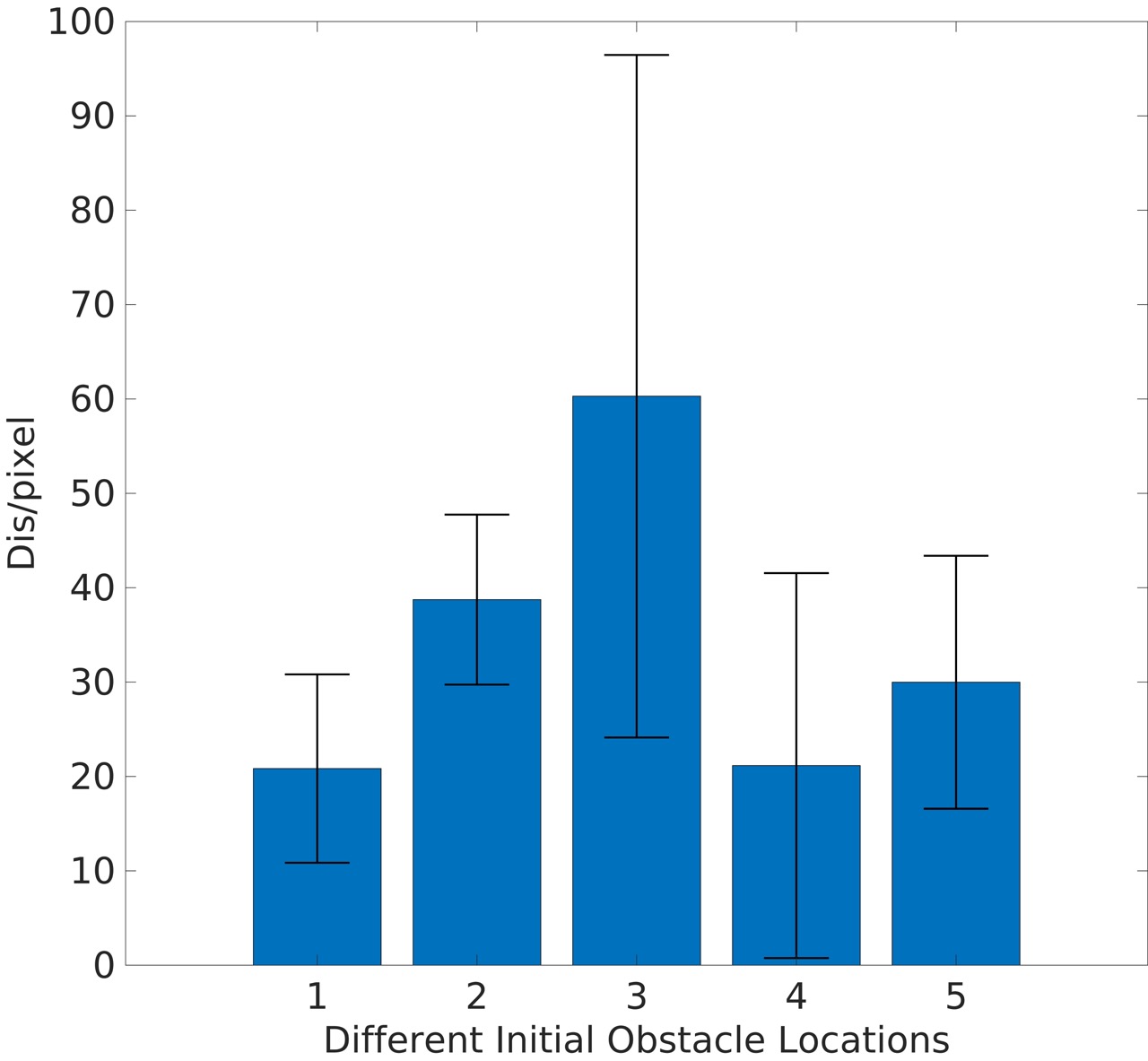

We also performed 50 random trials (5 different initial locations, each with 10 random trials) under the confidence level of to validate the effectiveness of the PrCBC controller in the presence of random camera measurement noise. We define to express the distance between the feature point and the obstacle edge in the image plane with denoted as:

| (32) |

where is the obstacle radius in the image plane (pixel scale). Fig. 4 shows the mean with its corresponding variance, which verifies that the feature points do not collide with the obstacle under the proposed PrCBC, indicating occlusion-free performance.
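A metric of this kind can be computed as in the Python sketch below, which assumes (our reading of the description, not the paper's exact formula) that the distance to the obstacle edge is the Euclidean pixel distance to the obstacle center minus the obstacle radius in pixels. The function name is hypothetical.

```python
import numpy as np

def min_edge_distance(feature_px, obstacle_px, radius_px):
    """Smallest distance from any feature point to the obstacle edge (pixels)."""
    pts = np.asarray(feature_px, dtype=float)
    center = np.asarray(obstacle_px, dtype=float)
    # A negative value means at least one point lies inside the obstacle disc.
    d = np.linalg.norm(pts - center, axis=1) - radius_px
    return float(d.min())
```

A positive value over a whole trial indicates that no feature point entered the obstacle region in the image.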

5 Conclusion

In this paper, we present a control method to address the chance-constrained occlusion avoidance problem between the feature points and the obstacle in Image-Based Visual Servoing (IBVS) tasks. By adopting the Probabilistic Control Barrier Certificates (PrCBC), we transform the probabilistic occlusion-free conditions into deterministic control constraints, which formally guarantee the performance with satisfying probability under measurement uncertainty. We then integrate the control constraints with a Model Predictive Control (MPC) policy to generate a sequence of optimized controls for high-level planning with enforced occlusion avoidance. The simulation results verify the effectiveness of the proposed method. Future work will further explore real-world applications of the proposed method using feature points such as Scale-Invariant Feature Transform (SIFT), Oriented FAST and Rotated BRIEF (ORB), etc.

References

- Ames et al. (2019) Ames, A.D., Coogan, S., Egerstedt, M., Notomista, G., Sreenath, K., and Tabuada, P. (2019). Control barrier functions: Theory and applications. In 18th European Control Conference (ECC), 3420–3431. IEEE.

- Capelli and Sabattini (2020) Capelli, B. and Sabattini, L. (2020). Connectivity maintenance: Global and optimized approach through control barrier functions. In IEEE International Conference on Robotics and Automation (ICRA), 5590–5596. IEEE.

- Chaumette and Hutchinson (2006) Chaumette, F. and Hutchinson, S. (2006). Visual servo control. I. Basic approaches. IEEE Robotics & Automation Magazine, 13(4), 82–90.

- Chaumette et al. (2016) Chaumette, F., Hutchinson, S., and Corke, P. (2016). Visual servoing. In Springer Handbook of Robotics, 841–866. Springer.

- Corke (2017) Corke, P.I. (2017). Robotics, Vision & Control: Fundamental Algorithms in MATLAB. Springer, second edition. ISBN 978-3-319-54413-7.

- Corke and Khatib (2011) Corke, P.I. and Khatib, O. (2011). Robotics, vision and control: fundamental algorithms in MATLAB, volume 73. Springer.

- De Luca et al. (2008) De Luca, A., Oriolo, G., and Robuffo Giordano, P. (2008). Feature depth observation for image-based visual servoing: Theory and experiments. The International Journal of Robotics Research, 27(10), 1093–1116.

- Fleurmond and Cadenat (2016) Fleurmond, R. and Cadenat, V. (2016). Handling visual features losses during a coordinated vision-based task with a dual-arm robotic system. In 2016 European Control Conference (ECC), 684–689. IEEE.

- Huang and Mok (2018) Huang, P.C. and Mok, A.K. (2018). A case study of cyber-physical system design: Autonomous pick-and-place robot. In 2018 IEEE 24th international conference on embedded and real-time computing systems and applications (RTCSA), 22–31. IEEE.

- Kazemi et al. (2010) Kazemi, M., Gupta, K., and Mehrandezh, M. (2010). Path-planning for visual servoing: A review and issues. Visual Servoing via Advanced Numerical Methods, 189–207.

- Kermorgant and Chaumette (2013) Kermorgant, O. and Chaumette, F. (2013). Dealing with constraints in sensor-based robot control. IEEE Transactions on Robotics, 30(1), 244–257.

- Landi et al. (2019) Landi, C.T., Ferraguti, F., Costi, S., Bonfè, M., and Secchi, C. (2019). Safety barrier functions for human-robot interaction with industrial manipulators. In 2019 18th European Control Conference (ECC), 2565–2570. IEEE.

- Li et al. (2020) Li, W., Chiu, P.W.Y., and Li, Z. (2020). An accelerated finite-time convergent neural network for visual servoing of a flexible surgical endoscope with physical and RCM constraints. IEEE Transactions on Neural Networks and Learning Systems, 31(12), 5272–5284.

- Luo et al. (2020a) Luo, W., Sun, W., and Kapoor, A. (2020a). Multi-robot collision avoidance under uncertainty with probabilistic safety barrier certificates. Advances in Neural Information Processing Systems, 33, 372–383.

- Luo et al. (2020b) Luo, W., Yi, S., and Sycara, K. (2020b). Behavior mixing with minimum global and subgroup connectivity maintenance for large-scale multi-robot systems. In 2020 IEEE International Conference on Robotics and Automation (ICRA), 9845–9851. IEEE.

- Lyu et al. (2021) Lyu, Y., Luo, W., and Dolan, J.M. (2021). Probabilistic safety-assured adaptive merging control for autonomous vehicles. In 2021 IEEE International Conference on Robotics and Automation (ICRA), 10764–10770. IEEE.

- Marchand et al. (2005) Marchand, E., Spindler, F., and Chaumette, F. (2005). ViSP for visual servoing: a generic software platform with a wide class of robot control skills. IEEE Robotics and Automation Magazine, 12(4), 40–52.

- Mezouar and Chaumette (2002) Mezouar, Y. and Chaumette, F. (2002). Avoiding self-occlusions and preserving visibility by path planning in the image. Robotics and Autonomous Systems, 41(2-3), 77–87.

- Nicolis et al. (2018) Nicolis, D., Palumbo, M., Zanchettin, A.M., and Rocco, P. (2018). Occlusion-free visual servoing for the shared autonomy teleoperation of dual-arm robots. IEEE Robotics and Automation Letters, 3(2), 796–803.

- Saragih et al. (2019) Saragih, C.F.D., Kinasih, F.M.T.R., Machbub, C., Rusmin, P.H., and Rohman, A.S. (2019). Visual servo application using model predictive control (MPC) method on pan-tilt camera platform. In 2019 6th International Conference on Instrumentation, Control, and Automation (ICA), 1–7. IEEE.

- Wächter and Biegler (2006) Wächter, A. and Biegler, L.T. (2006). On the implementation of an interior-point filter line-search algorithm for large-scale nonlinear programming. Mathematical programming, 106(1), 25–57.

- Xiao et al. (2022) Xiao, W., Belta, C.A., and Cassandras, C.G. (2022). Sufficient conditions for feasibility of optimal control problems using control barrier functions. Automatica, 135, 109960.

- Xu et al. (2017) Xu, X., Waters, T., Pickem, D., Glotfelter, P., Egerstedt, M., Tabuada, P., Grizzle, J.W., and Ames, A.D. (2017). Realizing simultaneous lane keeping and adaptive speed regulation on accessible mobile robot testbeds. In 2017 IEEE Conference on Control Technology and Applications (CCTA), 1769–1775. IEEE.

- Zheng et al. (2019) Zheng, D., Wang, H., Wang, J., Zhang, X., and Chen, W. (2019). Toward visibility guaranteed visual servoing control of quadrotor UAVs. IEEE/ASME Transactions on Mechatronics, 24(3), 1087–1095.