Object-Aware Impedance Control for Human-Robot Collaborative Task with Online Object Parameter Estimation

Abstract

Physical human-robot interactions (pHRIs) can improve robot autonomy and reduce physical demands on humans. In this paper, we consider a collaborative task with a considerably long object and no prior knowledge of the object’s parameters. An integrated control framework with an online object parameter estimator and a Cartesian object-aware impedance controller is proposed to realize complicated scenarios. During the transportation task, the object parameters are estimated online while a robot and human lift an object. The perturbation motion is incorporated into the null space of the desired trajectory to enhance the estimator accuracy. An object-aware impedance controller is designed using the real-time estimation results to effectively transmit the intended human motion to the robot through the object. Experimental demonstrations of collaborative tasks, including object transportation and assembly tasks, are implemented to show the effectiveness of our proposed method.

Note to Practitioners

This research was motivated by the need to facilitate collaboration between humans and robots in handling heavy or considerably long objects, which can be challenging for a single operator. This paper proposes a physical human-robot interaction (pHRI) approach that enables physical interaction between the human and the robot through the object without additional sensors such as cameras or human-machine interfaces. To achieve a collaborative task, it is essential to separate the intended human motion from the object dynamics. Most real-world situations involve uncertain or unknown objects, making it difficult to assume prior knowledge of the target object’s properties. Therefore, this research introduces a real-time approach for estimating the dynamic parameters of unknown objects during collaboration, without requiring additional operational time. Consequently, an object-aware impedance controller can be designed by incorporating the object dynamics in real time. Collaborative transportation and assembly tasks are demonstrated with a whole-body controlled mobile manipulator and a 1.5 m long object. In future research, we will focus on enhancing the estimation precision through the use of physics-informed neural network methods.

Index Terms:

Physical human-robot interaction, Online object parameter estimation, Object-aware control, Cartesian impedance control, Mobile manipulation

I Introduction

Robots have been increasingly used in human environments for repetitive and simple operations with the notable improvements in the safety and performance of hardware and controllers. Nevertheless, their applicability is still limited in industrial and service-related scenarios in which flexibility is needed, including manufacturing, logistics, and construction. On the other hand, robots have several advantages over humans, such as high precision in repetitive tasks and the lack of increasing fatigue during payload lifting. Therefore, the development of robotic technologies for physical interactions between humans and robots is important to improve the autonomy of robots with human decision-making capabilities and reduce the excessive physical demand on humans during payload lifting [1, 2, 3, 4, 5, 6, 7]. In physical human-robot interactions (pHRIs), two or more agents are physically coupled and interact and communicate to perform tasks. pHRIs enable collaborative transportation of heavy or bulky objects and manipulations involving multiple grasping points, which would be difficult to achieve for either humans or robots alone [9, 11].

In this paper, we focus on human-robot collaborative transportation and assembly of bulky objects without prior knowledge of the object’s parameters. Due to the considerable length of the target object, the human cannot directly contact the robot in the leader-follower collaboration task. Consequently, the intended human motion can be transmitted to the robot only indirectly through the object. Additionally, due to the lack of knowledge regarding the object’s parameters, an online object parameter estimation process is necessary to accurately determine the intended human motion.

Human-robot collaborative transportation tasks have been studied in various applications [12, 13, 14]. These tasks are mainly performed under a leader-follower architecture, in which the desired trajectory of the robot is explicitly determined by humans based on the estimated intended human motion. The effects of dynamic role allocation on physical robotic assistants have been investigated, and the load distribution between the partners was efficiently determined with redundancies of the control inputs [4, 13]. However, only a planar task was performed without considering the inertial effects of the object. In pHRI-based cooperative object manipulation tasks, the performance estimator-based intention was presented to reduce human effort during collaborative tasks [7, 8]. However, the demonstration with a human was performed with direct manipulation of the robot, and the geometry of the object was not considered. Online adaptation of the admittance control parameters reduced human physical effort during collaborative transportation tasks [14]. Human intention was inferred by monitoring the magnitude and direction of the desired acceleration and the present velocity value. However, the inertial parameters and shapes of the objects have not yet been considered.

The use of external sensors has also been studied for collaborative tasks with physical interactions. Human-robot collaborative transportation tasks with unknown deformable objects have been performed by utilizing both haptic and kinematic information to estimate the intended human motion [2]. A low-cost cover made of soft, large-area capacitive tactile sensors was placed around the platform base to measure the interaction forces applied to the robot base, and an expanded Cartesian impedance controller and follow-me controllers were implemented [5]. Collaborative lifting of an object by a human and a robot was presented using biceps activity, which was measured based on surface electromyography (EMG), to estimate the changes in the user’s hand height [15]. Invariance control was also presented for safe human-robot interactions in dynamic environments by enforcing dynamic constraints even in the presence of external forces [16]. Safety-aware human-robot collaborative transportation tasks considering aerial robots with cable-suspended payloads have also been demonstrated, in which a safe distance is maintained between the human operator and each agent without affecting performance [17]. The use of external sensors enables direct acquisition of motion and wrench information for the human participant, enhancing the performance and stability of collaborative tasks. However, in practice, it may not always be feasible to equip systems with additional sensors. Therefore, in collaborative tasks, estimating the intended human motion without such sensors is a noteworthy approach, even though some inaccuracy in the estimation results must be accepted.

Since the dynamic object parameters are mostly unknown in unstructured environments, online identification strategies for estimating object dynamics should be developed to accurately understand the intended human motion during collaborative tasks. Bias in the object dynamics leads to inaccurately calculated robot wrenches, which may disturb the human during interactions and affect the trust and interaction behavior of the human partner [10, 13, 4]. An online payload identification approach was presented based on the momentum observer using proprioceptive sensors with a calibration scheme that compensates for static offset between the virtual and real object [18]. However, directly applying this method in collaborative tasks is not straightforward due to the presence of dynamically coupled human partners. Alternatively, in some studies, only the weight of the object was estimated during the lifting phase of robot only [11] or human-robot collaborative tasks [19]. However, other dynamic parameters, such as the center of mass and moments of inertia, are also needed depending on the task. An online grasp pose and object parameter estimation strategy for pHRI was also presented [10, 9]. This strategy aims to induce identification-relevant motions that minimally disturb the desired human motion while preventing undesired interaction torques with the human. The use of probing signals for precise estimation and online estimation strategies is similar to our objective; however, these strategies require measurements of interaction torques for both the human and the robot and tracking of human motion, which may not always be possible in real-life scenarios. Indeed, without acquiring the wrench and motion information on the human side, it can be difficult to precisely estimate the object’s parameters. However, the objective of this study is to accurately determine the intended human motion while the human and robot are collaboratively lifting the object. 
Thus, it is sufficient to estimate the effective object parameters, which correspond to the load endured by the robot during the collaboration task, in this specific configuration. Consequently, in this study, we estimate the effective object parameters without the use of additional sensors on the human side. Note that researchers have mainly adopted estimators based on least squares methods ([9, 20, 18]) or Kalman filter-based methods ([21, 22]) for parameter estimation. We adopted the extended Kalman filter (EKF) because the estimation system varies over time.

In this paper, a Cartesian object-aware impedance controller with online object parameter estimation is proposed. Object parameter estimation enables a precise understanding of the intended human motion during human-robot collaborative tasks. Furthermore, to effectively transmit the intended human motion to the robot through long objects, the desired inertia shaping of the impedance is performed by considering the geometry of the object.

The contributions of this paper can be summarized as follows:

• The effective object parameters are estimated online by inducing identification motions in the null space of the Cartesian trajectory without the use of additional sensors on the human side during load-sharing conditions.

• A Cartesian object-aware impedance controller is designed that effectively transmits the intended human motion to the robot through the object by reflecting the object dynamics in the impedance relations.

• Experimental demonstrations of the collaborative transportation and assembly of a long object using a mobile manipulator are presented.

The remainder of the paper is organized as follows. In Section II, an online object parameter estimation method is presented by deriving the object dynamic equations. Based on a whole-body control architecture, a Cartesian object-aware impedance controller is designed in Section III. Experimental demonstrations and results are presented in Section IV, and the conclusions are discussed in Section V.

II Online Object Parameter Estimation

II-A Object Dynamics

We investigate a human-robot collaborative task scenario involving an elongated object in which parameters such as the mass, center of mass, and moment of inertia are not provided in advance.

,

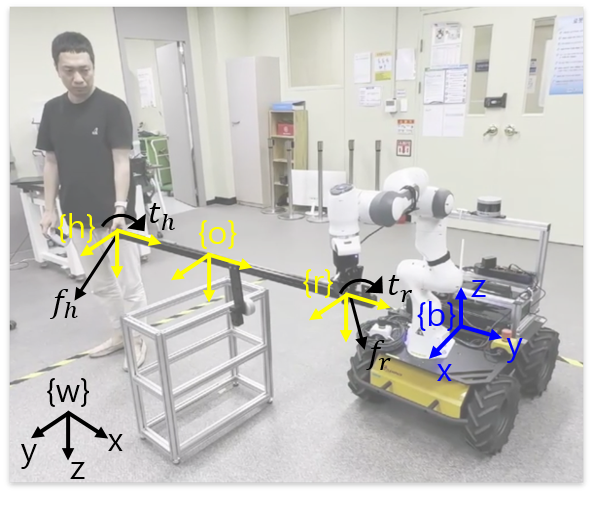

is the state of the object expressed in the world frame {w}, as illustrated in Fig. 1, and represent translation and orientation, respectively. Let and be twist and acceleration vectors, respectively. is the total object wrench, where is the force and is the torque acting on the object. and are the inertia matrix and nonlinear matrix containing Coriolis effects and gravity, respectively.

Because human and robot wrenches both act on the object, the relative kinematics can be represented as follows:

| (4) |

where and are the robot and human wrenches at each grasping point, respectively. represents the kinematic relations in the robot and human frames with respect to the object frame and is defined as follows:

| (6) |

where and are vectors from the object to the robot/human grasp points, respectively. denotes the skew matrix, where vector represents the cross product. and are partial grasp matrices from the object to the robot/human grasp points, respectively.
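The role of the skew matrix and the partial grasp matrices can be illustrated with a short numerical sketch. The function names, the wrench ordering (force first, then torque), and the block layout of the grasp matrix below are assumptions chosen for illustration; they follow the common textbook form and are not taken from the original equations.

```python
import numpy as np

def skew(r):
    """Return S(r) such that S(r) @ v equals np.cross(r, v)."""
    x, y, z = r
    return np.array([[0.0, -z, y],
                     [z, 0.0, -x],
                     [-y, x, 0.0]])

def partial_grasp_matrix(r):
    """6x6 map from a wrench [f; tau] applied at a grasp point to the object frame.

    r is the vector from the object frame origin to the grasp point,
    expressed in the object frame (assumed convention).
    """
    G = np.eye(6)
    G[3:, :3] = skew(r)  # a force f at offset r adds the torque r x f
    return G

def object_wrench(r_robot, w_robot, r_human, w_human):
    """Total object wrench as the sum of both grasp wrenches mapped to the object frame."""
    return (partial_grasp_matrix(r_robot) @ w_robot
            + partial_grasp_matrix(r_human) @ w_human)

# Hypothetical example: both partners push up with 10 N at opposite ends
# of a 1.5 m object whose frame sits at the midpoint.
lift = np.array([0.0, 0.0, 10.0, 0.0, 0.0, 0.0])
total = object_wrench(np.array([0.0, 0.75, 0.0]), lift,
                      np.array([0.0, -0.75, 0.0]), lift)
```

In this symmetric example the two induced torques cancel, so the total wrench is a pure 20 N force along z.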

The kinematic relations between the object frame and robot frame can be represented in terms of the velocity:

| (8) |

They can also be represented in terms of the acceleration as follows:

| (10) |

The variable denotes the wrench exerted on the object with respect to the robot frame and can be directly estimated based on the robot’s sensory feedback and measurement capabilities.

II-B Unknown Object Parameter Estimation

For human-robot collaboration tasks, obtaining human motion and wrench measurements requires that the human participant be equipped with additional sensors for motion tracking or force/torque feedback [9]. However, in most real-world scenarios, it is challenging to use additional sensors, leading to limited available human information. Therefore, we estimated the object parameters in a scenario without human motion information and wrench measurements. In this study, the objective of the parameter estimation process is not to precisely obtain the real parameters of the object. Instead, the aim is to accurately recognize the intended human motion by compensating for the effects of the object dynamics, thereby enabling safe and precise human-robot collaboration. Consequently, in the proposed approach, we focus on estimating the effective object parameters that the robot needs during the collaborative task.

As previously mentioned, since we do not account for the human wrench during the estimation process, is set to zero as follows:

| (19) |

By substituting (19) into (15), the object dynamics affecting the robot can be represented as follows:

| (21) |

where is a data matrix containing the linear and angular accelerations and angular velocities [18]. The parameter vector consists of the object’s dynamic parameters and is defined as follows:

| (23) |

where is the object mass, is the center of mass between the robot and object frames, and is the moment of inertia, with .

The extended Kalman filter (EKF) is adopted as an estimator because is time varying. With the filter state , the prediction of the EKF is formulated as follows:

| (25) |

| (27) |

where is the process noise covariance matrix. Then, the correction process is formulated with the effective object dynamics as follows:

| (30) |

| (33) |

| (35) |

where is the measurement noise covariance matrix, is the estimated error covariance matrix, and is obtained by backward differentiation. is directly measured based on the robot sensory feedback, and is the value estimated according to this filter.
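The prediction and correction steps described above can be sketched as follows. This is a minimal illustration under several assumptions that are not stated in the original equations: the filter state is a random walk over the parameters [m, m c_x, m c_y, m c_z], the inertia terms are neglected, the regressor follows the standard rigid-body load model, and all noise covariances and excitation signals are hypothetical. Because the measurement is linear in the parameters for a given data matrix, the EKF correction takes the same form as a Kalman filter with a time-varying measurement matrix.

```python
import numpy as np

def skew(v):
    x, y, z = v
    return np.array([[0.0, -z, y], [z, 0.0, -x], [-y, x, 0.0]])

def regressor(acc_minus_g, omega, alpha):
    """6x4 data matrix Y with wrench = Y @ [m, m*cx, m*cy, m*cz] (inertia neglected)."""
    Y = np.zeros((6, 4))
    Y[:3, 0] = acc_minus_g                              # force from the mass
    Y[:3, 1:] = skew(alpha) + skew(omega) @ skew(omega)  # force from the CoM offset
    Y[3:, 1:] = -skew(acc_minus_g)                      # torque: (m c) x (a - g)
    return Y

def ekf_step(theta, P, Y, wrench, Q, R):
    """One prediction and correction step for a random-walk parameter state."""
    P_pred = P + Q                                      # prediction (state unchanged)
    S = Y @ P_pred @ Y.T + R                            # innovation covariance
    K = P_pred @ Y.T @ np.linalg.inv(S)                 # Kalman gain
    theta_new = theta + K @ (wrench - Y @ theta)        # correction
    P_new = (np.eye(len(theta)) - K @ Y) @ P_pred
    return theta_new, P_new

def run_demo(steps=500, seed=0):
    """Estimate hypothetical parameters from simulated, noisy wrench data."""
    rng = np.random.default_rng(seed)
    m_true, c_true = 1.115, np.array([0.0, 0.0, 0.049])
    theta_true = np.concatenate(([m_true], m_true * c_true))
    theta, P = np.zeros(4), np.eye(4)
    Q, R = 1e-10 * np.eye(4), 1e-4 * np.eye(6)
    for _ in range(steps):
        a_g = np.array([0.0, 0.0, 9.81]) + rng.normal(0.0, 0.5, 3)
        omega, alpha = rng.normal(0.0, 0.5, 3), rng.normal(0.0, 0.5, 3)
        Y = regressor(a_g, omega, alpha)
        wrench = Y @ theta_true + rng.normal(0.0, 0.01, 6)
        theta, P = ekf_step(theta, P, Y, wrench, Q, R)
    return theta[0], theta[1:] / theta[0]               # mass, center of mass
```

Calling `run_demo()` returns the estimated mass and center of mass for the simulated data; the excitation in the demo is random, whereas a real experiment would use a designed perturbation signal.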

With the estimated effective object dynamics, the real object dynamics can be expressed according to (17) as follows:

| (37) |

Here, denotes the intended human motion transmitted to the robot through . Therefore, the real object dynamics are expressed as a sum of the effective object dynamics and the intended human motion projected by the object geometry.

III Object-Aware Impedance Controller

An object-aware impedance controller is designed based on the estimated effective object dynamics for human-robot collaborative mobile manipulation tasks.

III-A Dynamics of the Mobile Manipulator

The dynamics of the nonholonomic mobile manipulator are briefly reviewed [23]. The mobile manipulator consists of a four-wheel differential-drive mobile base and manipulator, as depicted in Fig. 1. The generalized coordinates include 3-degree-of-freedom (DOF) rigid body motion () due to the presence of a floating base, wheel angular motion () and arm joint motion () and are written as follows:

| (39) |

where , and . Note that has only two components despite the presence of the four wheels because the left and right pairs of wheels are dependent on each other. As the manipulator used in our system has 7-DOF motion, the total DOF of the generalized coordinates is .

The differential-drive mobile base is characterized by two nonholonomic constraints: no lateral slip and pure rolling motion. The constraint matrix can be represented as follows:

| (41) |

where . The transformation matrix is in the null space of the constraint matrix [24].

| (43) |

where satisfies and is the reduced velocity vector of the generalized coordinate of the robot. The Jacobian matrix between the Cartesian velocity space and the reduced joint velocity space can be derived as follows:

| (45) |

where . The nonholonomic constraint can be included in the equation of motion of the mobile manipulator by introducing a Lagrangian multiplier as follows:

| (47) |

where and are the joint torque input vector and the external disturbance vector, respectively. , , and are the inertia matrix, Coriolis and centrifugal matrix, and gravity vector, respectively. is the Lagrangian multiplier for the nonholonomic constraints.

By substituting (43) and its time derivative into (47) and multiplying by on both sides of the equation, the nonconstrained equation of motion can be reformulated as follows:

| (49) |

where

Consequently, the 3-DOF rigid body motion is eliminated from the state vector of the reformulated equation, enabling the use of the nonholonomic constraints.
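The relation between the constraint matrix and the null-space transformation can be checked numerically. The sketch below uses a standard textbook differential-drive model with generalized velocities (x, y, heading, right wheel angle, left wheel angle); the matrices, the wheel radius `r`, and the half track width `b` are assumptions for illustration and do not reproduce the matrices of this paper.

```python
import numpy as np

def constraint_matrix(theta, r, b):
    """Rows: no lateral slip, pure rolling (right wheel), pure rolling (left wheel)."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([
        [-s, c, 0.0, 0.0, 0.0],
        [c, s, b, -r, 0.0],
        [c, s, -b, 0.0, -r],
    ])

def null_space_basis(theta, r, b):
    """Matrix S mapping wheel rates [right, left] to generalized velocities."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([
        [0.5 * r * c, 0.5 * r * c],
        [0.5 * r * s, 0.5 * r * s],
        [0.5 * r / b, -0.5 * r / b],
        [1.0, 0.0],
        [0.0, 1.0],
    ])

# Hypothetical geometry: wheel radius 0.165 m, half track width 0.28 m.
r, b = 0.165, 0.28
A = constraint_matrix(0.3, r, b)
S = null_space_basis(0.3, r, b)
residual = A @ S  # zero (up to rounding) when S spans the null space of A
```

Any generalized velocity of the form `S @ wheel_rates` satisfies the constraints by construction, which is why the constraint force drops out after projecting the equation of motion with the transpose of `S`.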

III-B QP-Based Whole-Body Controller

Due to the redundancy of the whole-body robot, quadratic programming (QP) optimization can be introduced to develop a multipriority control framework. The redundancy is due to the difference between the number of joints and the number of Cartesian DOFs, and the Jacobian can be introduced to address this redundancy. The QP optimization can be formulated as follows:

| (54) |

where and are the weight vectors in the cost function. and are the joint and Cartesian space desired accelerations introduced in the cost function, and and are the desired accelerations introduced in the constraints of the QP formulation. and are the lower and upper limits of the joint acceleration, respectively.

If a solution of (54) exists, it must satisfy the equality and inequality constraints. On the other hand, the goal of the optimization problem is to find a solution that minimizes the resultant cost of the loss function under the given constraints. Consequently, it is not necessary for each term in the loss function to converge to zero. From this perspective, the tasks designated by the constraints must be satisfied, and they have the highest priority (priority = 0), while the tasks in the loss function have lower priority (priority = 1). In summary, the QP optimization process can be formulated by selecting tasks based on the candidates, such as priority 0 tasks and/or priority 1 tasks ().
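The two-priority structure can be sketched with a simplified problem in which the priority 0 task is an equality constraint and the priority 1 task is a weighted least-squares cost. The inequality constraints (acceleration limits) are omitted here so that the problem can be solved directly from its KKT system; the function name, argument names, and dimensions are assumptions for illustration, not the formulation in (54).

```python
import numpy as np

def solve_two_priority_qp(W, qdd_des, J, xdd_task):
    """Minimize 0.5*(qdd - qdd_des)^T W (qdd - qdd_des) subject to J @ qdd = xdd_task.

    The equality constraint plays the role of the priority 0 task and the
    cost plays the role of the priority 1 task. Limits are not handled.
    """
    n, m = W.shape[0], J.shape[0]
    kkt = np.block([[W, J.T], [J, np.zeros((m, m))]])
    rhs = np.concatenate([W @ qdd_des, xdd_task])
    solution = np.linalg.solve(kkt, rhs)
    return solution[:n]  # the remaining entries are Lagrange multipliers

# Hypothetical redundant robot: 9 reduced joint coordinates, 6 Cartesian DOFs.
rng = np.random.default_rng(1)
J = rng.normal(size=(6, 9))
xdd_task = rng.normal(size=6)
qdd_des = rng.normal(size=9)
qdd = solve_two_priority_qp(np.eye(9), qdd_des, J, xdd_task)
```

The constraint is met exactly, while the joint-space term is only approached as closely as the remaining redundancy allows, which mirrors the statement that the terms of the loss function need not converge to zero.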

In the joint space, the desired acceleration can be obtained through a PD controller as follows:

| (57) |

where denotes the priorities. and are the proportional and derivative gains in joint space, respectively, and denotes the desired joint angle. Additionally, the desired acceleration in Cartesian space can be obtained as follows:

| (59) |

where and are the proportional and derivative gains in Cartesian space, respectively, and are the desired pose and velocity, respectively. In this paper, instead of (59), we adopt a Cartesian space object-aware impedance controller.

According to the solution shown in (54), , the desired input torque for the real robot, can be obtained with the feedback linearization technique as follows:

| (61) |

III-C Object-Aware Impedance Controller Formulation

For a human-robot collaborative task with an object, the external force is determined by the real object dynamics as follows:

| (63) |

By substituting (37) into (49), the equation of motion considering the object dynamics can be represented as follows:

| (66) |

The external forces acting on the robot include the human wrench, which is exerted through the object, and the effective object dynamics that the robot endures under the specific configuration. If there is no object, becomes an identity matrix, reducing the external forces to , signifying that the intended human motion is applied directly to the robot. On the other hand, in the absence of human-robot collaboration, in which only the robot performs the task, the robot bears all the object dynamics. Hence, (37) becomes , representing that the real object dynamics are manifested as external forces.

For human-robot collaborative tasks, the target impedance can be designed as follows:

| (68) |

The crucial point is that the external force in the desired impedance is and not . When the human and the robot are cooperatively holding an object, the desired impedance represents the relationship between the robot motion and the intended human motion, excluding the influence of the object. Note that if the stiffness of the desired impedance is set to zero, the robot can actively follow the human operator based on the estimated human motion.

To implement the impedance controller using the QP formulation, the Cartesian space task with priority 0 can be expressed as follows:

| (71) |

The above equation is similar to (59); however, instead of a PD controller, an impedance controller including the desired inertia and external force is employed.
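The effect of setting the stiffness to zero can be illustrated with a small simulation of a generic mass-damper-spring target impedance. The sign convention, the gain values, and the constant force standing in for the intended human motion are all assumptions for illustration; the sketch does not include the object geometry or the QP layer.

```python
import numpy as np

def impedance_acceleration(M_d, D_d, K_d, x, xd, x_ref, xd_ref, xdd_ref, f_h):
    """Commanded acceleration from M_d*edd + D_d*ed + K_d*e = f_h, with e = x - x_ref."""
    e, ed = x - x_ref, xd - xd_ref
    return xdd_ref + np.linalg.solve(M_d, f_h - D_d @ ed - K_d @ e)

def simulate(K_d, f_h, duration, dt=1e-3):
    """Integrate the target impedance with a fixed reference at the origin."""
    M_d, D_d = 2.0 * np.eye(3), 40.0 * np.eye(3)  # hypothetical gains
    x, xd, ref = np.zeros(3), np.zeros(3), np.zeros(3)
    for _ in range(int(round(duration / dt))):
        xdd = impedance_acceleration(M_d, D_d, K_d, x, xd, ref, ref, ref, f_h)
        xd = xd + dt * xdd  # semi-implicit Euler integration
        x = x + dt * xd
    return x, xd

push = np.array([10.0, 0.0, 0.0])
x_follow, v_follow = simulate(np.zeros((3, 3)), push, duration=2.0)
x_hold, v_hold = simulate(100.0 * np.eye(3), push, duration=5.0)
```

With zero stiffness the simulated end effector keeps moving in the direction of the applied force at a speed set by the damping, which corresponds to the follow-me behavior; with nonzero stiffness it settles at a finite offset from the reference.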

By assigning the impedance controller as the highest priority task and solving the QP problem, the object-aware impedance controller can be represented as follows:

| (74) |

where is the pseudoinverse of . Without loss of generality, the existence of a feasible solution for the impedance controller can be guaranteed due to its highest priority. Therefore, the desired input torque can be represented by substituting (74) into (66) as follows:

| (80) |

Alternatively, by gathering the terms related to , (80) can be represented as follows:

| (86) |

where and , which is the inertia in Cartesian space [25]. For a general impedance controller, external force feedback can be avoided when inertia shaping is not performed, as expressed by [26]. However, in scenarios in which an object is present between the robot and human, avoiding external force feedback requires specific inertia shaping that considers the object geometry, which is expressed as , thereby resulting in . Accordingly, the desired impedance is formulated as follows:

| (89) |

where . However, understanding the intended human motion, denoted as , necessitates the use of external force measurements. Consequently, further shaping of the desired inertia is employed to enable efficient interactions between the human and the robot through the object as follows:

| (91) |

where is the desired weighting matrix of the external wrenches, which is determined in the subsequent section.

IV Experiments

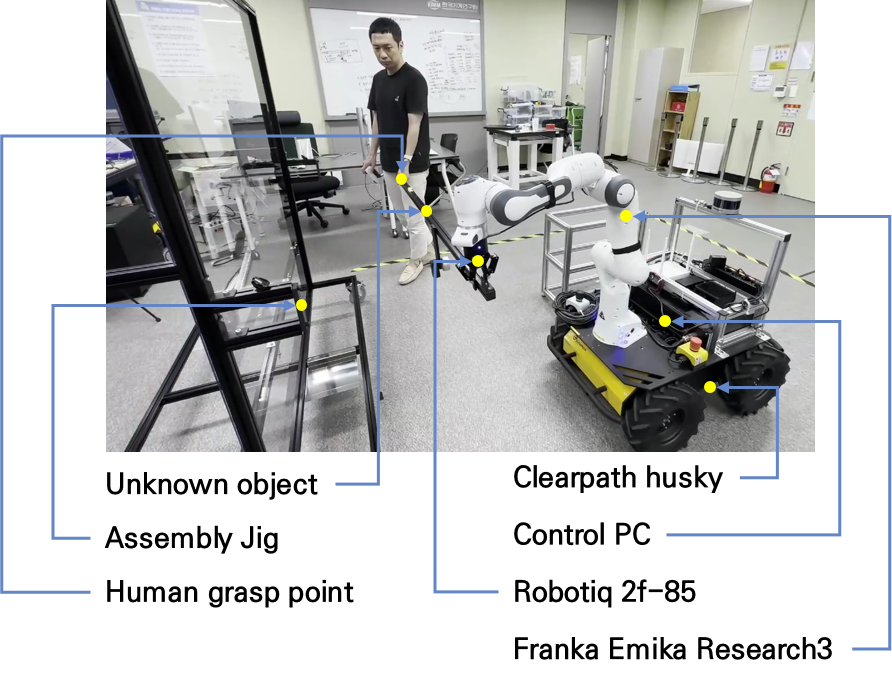

To experimentally verify the performance of the proposed method in human-robot collaborative task scenarios, the mobile manipulator illustrated in Fig. 2 was realized by integrating a 7-DOF torque-controlled manipulator, Research3, manufactured by Franka Emika, and a velocity-controlled four-wheel mobile base, Husky, manufactured by Clearpath Robotics. The mobile base is operated by a differential drive maneuver, and the left and right pairs of wheels are dependent on each other, resulting in 2-DOF motion. A 1-DOF two-fingered gripper, 2F-85 by Robotiq, is attached to the end effector of the robot arm. The control algorithms are implemented on an Intel Core i7 (4.7 GHz) PC with 16 GB of RAM, using a Robot Operating System (ROS) interface and a PREEMPT_RT kernel.

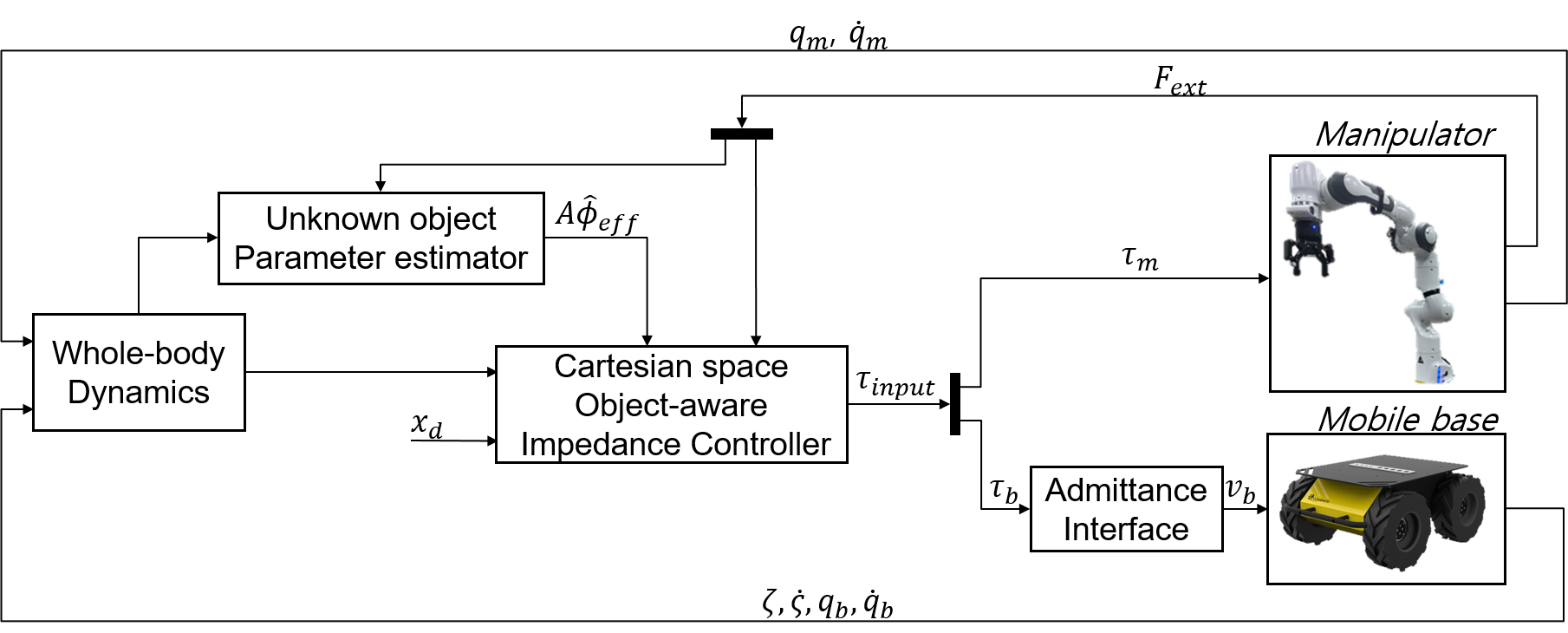

A schematic diagram of the proposed human-robot collaborative task, including the unknown object parameter estimation process and the Cartesian space object-aware impedance controller, is shown in Fig. 3. The parameter estimation process is carried out during the estimation phase, and after the parameters are estimated, the object dynamics, , can be transmitted to the controller. To enhance the estimation precision, a perturbation signal is introduced into the null space of , as detailed in the next section. The measurements are the states of the manipulator, , , and the mobile base, , ; the position and velocity of the mobile manipulator, , , are provided by a localization algorithm [27]. The external force, , exerted on the end effector is also estimated based on the torque sensors equipped at each joint of the manipulator. The desired torque input computed by our controller is directly transmitted to the robot arm, while for the velocity-controlled mobile base, the torque inputs are transformed into velocity inputs with the admittance interface [28]. The manipulator is controlled in real time with a 1 kHz control frequency, and the mobile base is controlled by ROS topic communication with a 10 Hz sampling rate.

IV-A Unknown Object Parameter Estimation Results

For parameter estimation, the development of estimation methods such as least squares or adaptive algorithms is crucial. However, to enhance accuracy and precision, the design of a probing signal is also important [29]. The object should be perturbed to satisfy the persistent excitation condition, which is achieved by noncollinear angular motion. However, the input perturbation signal should not affect human motion during collaborative tasks to guarantee safety. Pivoted angular motion with respect to the human grasp point enables the object to be perturbed without significantly affecting the human motion.

Similar to (8), the kinematic relation between the robot and hand grasping frames can be represented as follows:

| (93) |

The pivoted perturbation motion can be realized with reduced kinematics considering only translational motion as follows:

| (95) |

where . The robot end-effector motion in Cartesian space is redundant with respect to the task of producing only translational motion of the human grasping frame, which allows the desired robot motion to be constructed as follows:

| (97) |

where is an arbitrary vector projected in the null space of . By introducing angular perturbation motion to the vector , collaborative transportation (translational motion) and estimation with object perturbations can be performed simultaneously, with the human grasping point maintained as a pivot [9]. In this paper, the angular motions are chosen as , where [rad/s], with [Hz]. The object to be estimated is a 1.5 m long aluminum object with additional attached weights, and the true parameters are represented in Table I. The coordinates representing the center of mass are illustrated in Fig. 1; the y-axes of the human and robot frames are aligned with the longitudinal direction of the object, expressed as .
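The pivoted perturbation can be sketched numerically as a null-space projection. In the sketch below, the 3x6 reduced Jacobian, the offset from the robot grasp to the human grasp, and the perturbation amplitude and frequency are all hypothetical choices for illustration; the original values and matrices are not reproduced.

```python
import numpy as np

def skew(r):
    x, y, z = r
    return np.array([[0.0, -z, y], [z, 0.0, -x], [-y, x, 0.0]])

def human_point_jacobian(r_rh):
    """3x6 map from the robot grasp twist [v; w] to the human grasp linear velocity.

    Uses v_h = v_r + w x r_rh, where r_rh points from the robot grasp to the human grasp.
    """
    return np.hstack([np.eye(3), -skew(r_rh)])

def robot_twist(v_h_des, xi, r_rh):
    """Robot twist that realizes v_h_des and projects xi into the null space."""
    J = human_point_jacobian(r_rh)
    J_pinv = np.linalg.pinv(J)
    N = np.eye(6) - J_pinv @ J  # null-space projector of the reduced kinematics
    return J_pinv @ v_h_des + N @ xi

def angular_perturbation(t, amplitude=0.1, freq_hz=0.5):
    """Hypothetical two-axis (noncollinear) sinusoidal angular velocity command."""
    phase = 2.0 * np.pi * freq_hz * t
    w = amplitude * np.array([np.sin(phase), np.cos(phase), 0.0])
    return np.concatenate([np.zeros(3), w])

r_rh = np.array([0.0, -1.5, 0.0])    # assumed 1.5 m offset along the object
v_h_des = np.array([0.2, 0.0, 0.0])  # assumed transport velocity of the human grasp
twist = robot_twist(v_h_des, angular_perturbation(0.1), r_rh)
```

Whatever vector is projected, the linear velocity at the human grasp point equals the desired transport velocity, so the object rotates about that point while the robot end effector also translates. The realized angular velocity is the projection of the commanded one and is therefore not identical to it.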

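Table I: True, effective, and estimated object parameters.

| | Mass [kg] | Center of mass [mm] | Center of mass [mm] | Center of mass [mm] |
| --- | --- | --- | --- | --- |
| True | 2.23 | -683 | 0 | 49 |
| Effective | 1.115 | 0 | 0 | 49 |
| Estimated | 1.131 | 8 | -7 | 44 |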

In this paper, the object length is assumed to be known before the object is held by the human and robot; well-known artificial intelligence (AI)-based algorithms such as the region-based convolutional neural network (R-CNN) can be used to estimate the object length.

The effective object parameters depend on the human-robot grasping configuration. In this experiment, the human and the robot grasp both ends of the object as rigidly as possible; additionally, the human participant attempts to hold the object as parallel to the robot as possible. Accordingly, the effective parameters of the object are presented in Table I. Additional sensors are not employed to estimate human information, such as the human wrench or motion.

The objective of this paper is to effectively eliminate the object dynamics during collaborative tasks and not to estimate the true object parameters; thus, the moment of inertia of the object is not estimated because this parameter is less important than the mass and center of mass if motion with large acceleration does not occur during the task. Moreover, perturbing the object with large accelerations is undesirable because such motion can potentially affect human safety. In this section, the human and the robot remain static to accurately verify the parameter estimation performance, and in the following section, the estimation is performed during the collaborative transportation task.

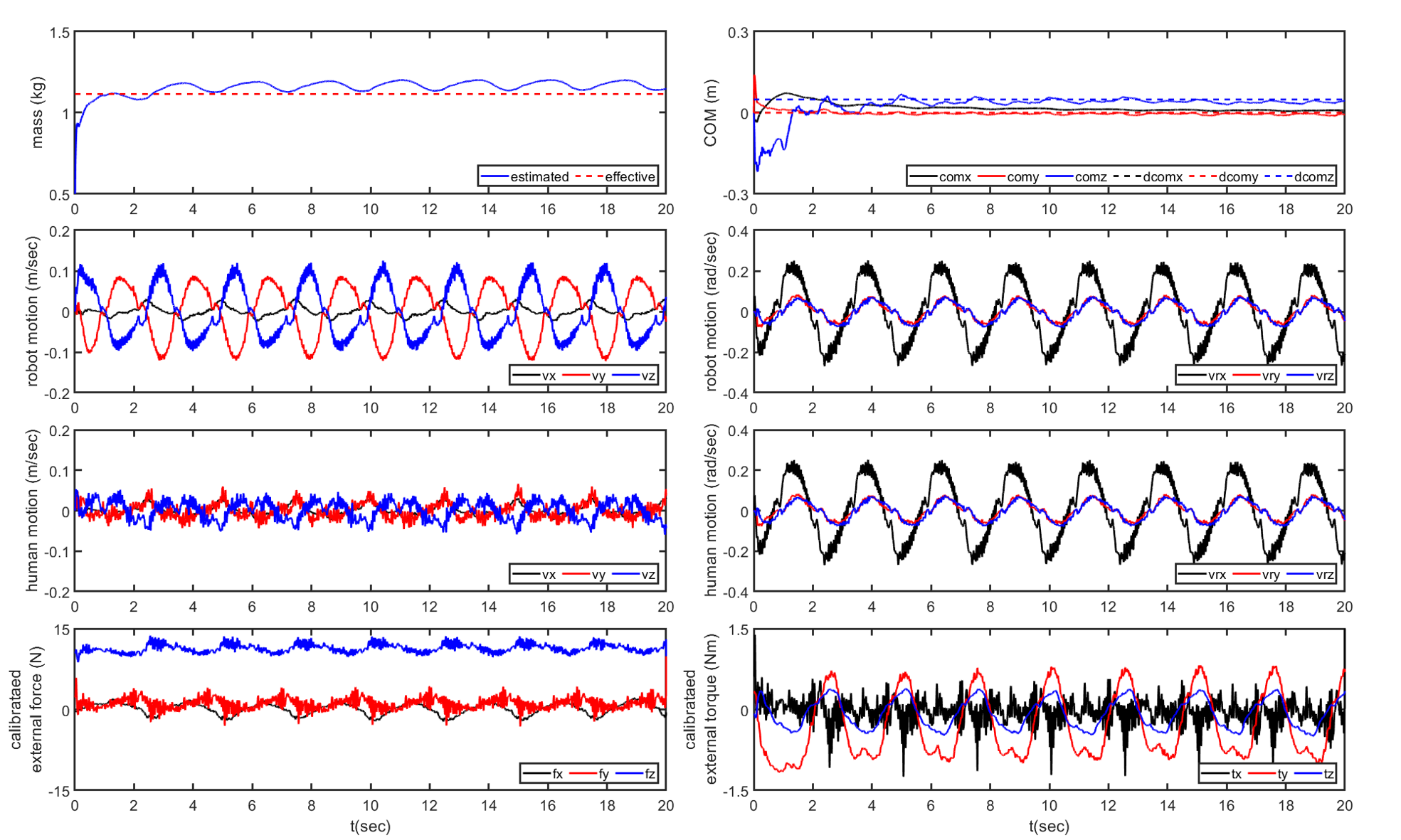

The initial values of the estimator are and . The weighting matrices of the estimator are set to and . The results are represented in Fig. 4 and Table I. Since angular perturbation motion was realized in the null space of , the robot also showed translational motion. On the other hand, the hand motion, estimated with (95), was well pivoted, as the linear motion remained static, including only some fluctuations, which may be introduced due to the difficulty of humans holding the object consistently throughout the estimation. The angular velocities of the human, robot and object are the same, as they are connected rigidly during the task.

For the estimation, most unmodeled bias and disturbance terms are subtracted from the calibration motion based on the measured external wrench, resulting in the calibrated external wrench depicted in Fig. 4. The result of converges within to , with a relative error and an effective mass of . The results of converge within to , with a relative error and an effective mass of . Because the estimation results for the mass and the center of mass both converge within 10 seconds, the required estimation time for our collaborative task scenario is set to 10 seconds.

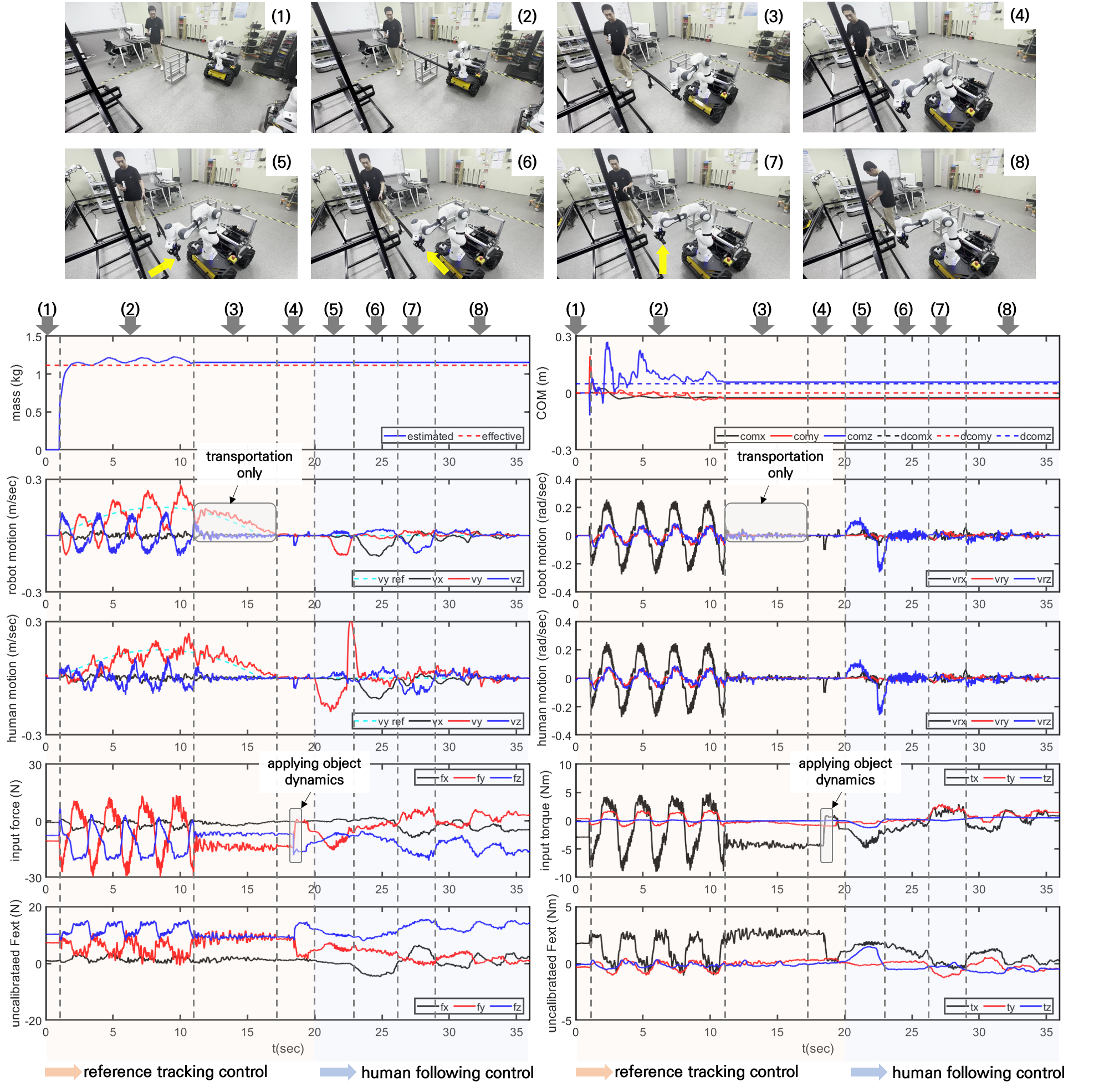

IV-B Human-Robot Collaborative Task Results

A scenario was selected to demonstrate the effectiveness of our proposed methods, including the online parameter estimation strategy and the Cartesian impedance controller considering object dynamics. The scenario involves human-robot collaborative transportation and assembly tasks with an unknown object. A jig, as depicted in Fig. 2, is utilized for the object assembly task. The specific steps of the scenario are as follows:

1. Object lifting: The human and the robot lift an object together.

2. Object parameter estimation during the collaborative transportation task: The human and robot transport the object and approach the jig. During the transportation task, the parameter estimation is performed while inducing an object perturbation signal in the null space of the desired robot motion.

3. Continuation of the collaborative transportation task: Once the estimation process is completed, the perturbation signal is no longer applied, regardless of whether the transportation task was completed. In this scenario, the transportation task continues even after the estimation is completed.

4. Object dynamics compensation: After the transportation phase, the object dynamics are compensated for to complete the design of the object-aware impedance controller, as represented in (86).

5. Following the intended human motion: The human intention following control, also called follow-me control [5], is implemented by setting the stiffness of the desired impedance, represented in (68), to zero. This enables active tracking of the intended human motion to locate the end of the object on the robot grasping side at the assembly point of the jig.

6. Object assembly onto the jig: The human accomplishes the assembly task by securely attaching both ends of the object to the jig. Meanwhile, the robot returns to its initial position.

As reflected in the above scenario, we attempted to minimize the duration of the transportation task by conducting the parameter estimation during this task employing a nonthreatening perturbation signal. Therefore, by incorporating the object dynamics into the impedance controller in real time, the intended human motion can be transmitted to the robot without any additional action during the scenario. Note that the human worker performs the task with one hand holding the object, and their other hand is used to manipulate the joystick for the step transition. Therefore, the human can successfully perform the scenario without additional control PCs or coworkers. The whole scenario is available at https://www.youtube.com/watch?v=bGH6GAFlRgA, where the task was captured in a single continuous take.

After several practice runs of the collaborative task, it was observed that transmitting the intended human motion to the robot through the object becomes more challenging as the object becomes longer. The results showed that the rotational wrench measured on the robot side is a more effective indicator of the translational human motion. To incorporate this observation into the weighting matrix of the external wrenches presented in (86), we defined it as follows:

| (99) |

With the coordinate system illustrated in Fig. 1, the translational motion of the robot along the +y and +z directions can be adjusted based on the rotational motion of the human about the -z and -y axes, respectively. Moreover, the robot’s +x translational motion can be adjusted based on the +x translational motion of the human, because the x-axis of the robot’s end effector, the human grasping point, and the object are aligned.
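The axis mapping described above can be illustrated with a small selection matrix. This is a sketch under stated assumptions and not the matrix of (99): the 3-by-6 shape, the wrench ordering `[fx, fy, fz, tx, ty, tz]`, and the gains `gain_y` and `gain_z` (torque-to-force scaling) are all hypothetical choices made for illustration.

```python
def wrench_weighting(gain_y=1.0, gain_z=1.0):
    """Hypothetical 3x6 weighting: rows are robot x, y, z translation.

    Columns follow the assumed wrench ordering [fx, fy, fz, tx, ty, tz].
    """
    return [
        [1.0, 0.0, 0.0, 0.0, 0.0, 0.0],       # +x motion from +x force
        [0.0, 0.0, 0.0, 0.0, 0.0, -gain_y],   # +y motion from -z torque
        [0.0, 0.0, 0.0, 0.0, -gain_z, 0.0],   # +z motion from -y torque
    ]


def apply_weighting(matrix, wrench):
    """Matrix-vector product giving the effective translational input."""
    return [sum(m * w for m, w in zip(row, wrench)) for row in matrix]


# A pull along +x combined with torques about -y and -z.
effective = apply_weighting(wrench_weighting(), [2.0, 0.0, 0.0, 0.0, -1.0, -3.0])
```

In this sketch the measured lateral forces `fy` and `fz` are ignored entirely, which reflects the observation that the torques on the robot side carry the translational intent for long objects.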

The overall results of the scenario are presented in Fig. 5. The object parameter estimation results during the nonstationary transportation task are and . These results exhibited larger errors than those obtained in the stationary task. The bias may stem from the human’s inability to maintain the alignment between the robot’s grasping position and the object during the transportation phase; in particular, the negative bias in the x- and y-axis center-of-gravity estimates indicates that, in the coordinate system of Fig. 1, the human participant tended to walk faster and to raise the object higher than the robot. Nevertheless, the estimation errors are acceptable, as the robot could maintain its posture without tilting downward during the human-intention-following control.

During the transportation phase, an impedance controller with nonzero stiffness and damping was employed to move the robot’s end effector from the initial position to the final position by tracking the desired trajectory. In the whole-body controller defined in (54), the high-priority tasks (priority 0) include the trajectory tracking control in the Cartesian space and the limiting of the input torques, while the low-priority task (priority 1) is to maintain the initial robot arm configuration. Notably, in the tracking control task, the important point is that the human is able to follow the desired trajectory, even though the end effector does not follow it exactly owing to the presence of the identification input. After the transportation phase is completed, the input forces and torques are modulated by the feedforward incorporation of the object dynamics, as shown in Fig. 5.
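One simple way to keep an identification input from disturbing the tracked direction is to remove its component along the desired motion. The sketch below shows that orthogonal projection; it is only one possible reading of a perturbation placed in the null space of the desired robot motion, and the function name and the three-dimensional vectors are assumptions rather than the paper's formulation.

```python
import math


def project_out(perturbation, direction):
    """Remove from `perturbation` its component along `direction`.

    Returns the part of the perturbation orthogonal to the desired
    motion direction. If the direction is zero, nothing is removed.
    """
    norm = math.sqrt(sum(d * d for d in direction))
    if norm == 0.0:
        return list(perturbation)
    unit = [d / norm for d in direction]
    along = sum(p * u for p, u in zip(perturbation, unit))
    return [p - along * u for p, u in zip(perturbation, unit)]


# Desired motion along +x: only the y and z parts of the signal remain.
residual = project_out([1.0, 2.0, 3.0], [1.0, 0.0, 0.0])
```

With such a projection, the end effector deviates from the reference only in directions orthogonal to the commanded motion, which is consistent with the tracking behavior described above.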

To attach the object to the jig, precise alignment is necessary. To achieve this, the human-intention-following control strategy was executed by configuring the highest-priority task of the whole-body controller to maintain the initial Cartesian position using an impedance controller with zero translational stiffness. The priority 1 task attempts to maintain the initial configuration of the robot arm, as in the previous experiment. The results show that the external wrench does not significantly increase during the object alignment, indicating that the robot successfully follows the intended human motion. Moreover, the x-, y-, and z-axis components of the object position are adjusted by the x-axis external force and the z- and y-axis external torques, respectively, as represented in (99). Note that some vibrations appear during the transportation phase due to the motion of the mobile base; these vibrations can be eliminated by tuning the velocity controller.
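The effect of zeroing the translational stiffness can be seen in a minimal per-axis spring-damper law. This sketch is a simplification and not the controller of (68) or (86): it assumes diagonal gains, omits the desired inertia, the object dynamics, and the external wrench weighting, and uses hypothetical gain values.

```python
def impedance_force(stiffness, damping, pos_error, vel_error):
    """Per-axis command k * e + d * e_dot for diagonal gains."""
    return [
        k * e + d * de
        for k, d, e, de in zip(stiffness, damping, pos_error, vel_error)
    ]


# Transportation phase: nonzero stiffness pulls toward the reference.
tracking = impedance_force(
    [100.0, 100.0, 100.0], [10.0, 10.0, 10.0],
    [0.5, 0.0, 0.0], [0.0, 0.0, 0.0],
)

# Follow-me phase: zero stiffness, so a position offset alone
# produces no restoring force and only damping remains.
follow_me = impedance_force(
    [0.0, 0.0, 0.0], [10.0, 10.0, 10.0],
    [0.5, 0.25, 0.0], [0.0, 0.0, 0.0],
)
```

Because the follow-me command contains no position term, the end effector stays wherever the human moves it through the object, while the damping term still limits the velocity.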

Consequently, the proposed human-robot collaborative method can realize transportation and assembly tasks in a single operation.

V Conclusions

In this paper, an approach for physical human-robot interactions that accounts for unknown object dynamics is proposed, including an online estimation method and a Cartesian impedance controller. The EKF estimator with an object perturbation input aims to estimate effective object parameters in a specific configuration involving a human, robot, and object. The object-aware impedance control strategy incorporates the object dynamics in real time, allowing exact transmission of the intended human motion.

Notably, a key feature of this approach is that the human-intention-following control scheme is realized through physical contact with the object rather than direct contact between the robot and the human. To achieve this, the direction of the intended human motion is transformed from rotational to linear motion by shaping the desired inertia matrix. To demonstrate the feasibility of the proposed method in real-world scenarios, transportation and assembly tasks were performed. The results showed that safe and accurate interactions with long objects can be achieved.

In future work, we will focus on enhancing the parameter estimation performance by effectively removing the uncertainties of human motion and the bias in the external force estimators with a physics-informed neural network (PINN).

References

- [1] D. Sirintuna, A. Giammarino, and A. Ajoudani, “An Object Deformation-Agnostic Framework for Human-Robot Collaborative Transportation,” arXiv preprint arXiv:2207.13427, 2022.

- [2] D. Sirintuna, A. Giammarino, and A. Ajoudani, “Human-Robot Collaborative Carrying of Objects with Unknown Deformation Characteristics,” IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2022.

- [3] A. De Santis, B. Siciliano, A. De Luca, and A. Bicchi, “An atlas of physical human-robot interaction,” Mech. Mach. Theory, vol. 43, no. 3, pp. 253–270, 2008.

- [4] Alexander Mörtl, Martin Lawitzky, Ayse Kucukyilmaz, Metin Sezgin, Cagatay Basdogan and Sandra Hirche, “The role of roles: Physical cooperation between humans and robots,” International Journal of Robotics Research, vol. 31, no. 13, pp. 1657–1675, 2012.

- [5] M. Leonori, J. M. Gandarias, and A. Ajoudani, “MOCA-S: A Sensitive Mobile Collaborative Robotic Assistant exploiting Low-Cost Capacitive Tactile Cover and Whole-Body Control,” IEEE Robot. Autom. Lett., pp. 1–8, 2022.

- [6] L. Rapetti, C. Sartore, M. Elobaid, Y. Tirupachuri, F. Draicchio, T. Kawakami, T. Yoshiike and D. Pucci, “A Control Approach for Human-Robot Ergonomic Payload Lifting,” arXiv preprint arXiv:2305.08499, 2023.

- [7] K. I. Alevizos, C. P. Bechlioulis, and K. J. Kyriakopoulos, “Physical Human-Robot Cooperation Based on Robust Motion Intention Estimation,” Robotica, vol. 38, no. 10, pp. 1842-1866, 2021.

- [8] K. I. Alevizos, C. P. Bechlioulis, and K. J. Kyriakopoulos, “Bounded Energy Collisions in Human-Robot Cooperative Transportation,” IEEE Trans. Mechatronics, 2021.

- [9] Denis Ćehajić, Pablo Budde gen. Dohmann, and Sandra Hirche, “Estimating unknown object dynamic in human-robot manipulation tasks,” IEEE International Conference on Robotics and Automation (ICRA), 2017, pp. 1730-1737.

- [10] Denis Ćehajić, Sebastian Erhart, and Sandra Hirche, “Grasp pose estimation in human-robot manipulation tasks using wearable motion sensors,” IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), pp. 1031–1036, 2015.

- [11] D. Torielli, L. Muratore, A. D. Luca and N. Tsagarakis, “A Shared Telemanipulation Interface to Facilitate Bimanual Grasping and Transportation of Objects of Unknown Mass,” IEEE-RAS 21st International Conference on Humanoid Robots (Humanoids), 2022.

- [12] T. Asfour et al., “ARMAR-6: A Collaborative Humanoid Robot for Industrial Environments,” IEEE-RAS Int. Conf. Humanoid Robot., vol. 2018-Novem, pp. 447–454, 2019.

- [13] Martin Lawitzky, Alexander Mörtl and Sandra Hirche, “Load sharing in human-robot cooperative manipulation,” 19th IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN), 2010, pp. 185–191.

- [14] F. Benzi, C. Mancus, and C. Secchi, “Whole-Body Control of a Mobile Manipulator for Passive Collaborative Transportation,” arXiv preprint arXiv:2211.13680, 2022.

- [15] J. Delpreto and D. Rus, “Sharing the Load: Human-Robot Team Lifting Using Muscle Activity,” IEEE International Conference on Robotics and Automation (ICRA), 2019.

- [16] M. Kimmel and S. Hirche, “Invariance control for safe human-robot interaction in dynamic environments,” IEEE Trans. Robot., vol. 33, no. 6, pp. 1327–1342, 2017.

- [17] X. Liu, G. Li, and G. Loianno, “Safety-Aware Human-Robot Collaborative Transportation and Manipulation with Multiple MAVs,” arXiv preprint arXiv:2210.05894, 2022.

- [18] A. Kurdas, M. Hamad, J. Vorndamme, N. Mansfeld, S. Abdolshah, and S. Haddadin, “Online Payload Identification for Tactile Robots Using the Momentum Observer,” IEEE International Conference on Robotics and Automation (ICRA), pp. 5953–5959, 2022.

- [19] Antoine Bussy, Abderrahmane Kheddar, A. Crosnier, and F. Keith, “Human-Humanoid Haptic Joint Object Transportation Case Study,” IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), pp. 3683–3638, 2012.

- [20] Antonio Franchi, Antonio Petitti, and Alessandro Rizzo, “Decentralized parameter estimation and observation for cooperative mobile manipulation of an unknown load using noisy measurements,” IEEE International Conference on Robotics and Automation (ICRA), 2015.

- [21] V. Wüest, V. Kumar, and G. Loianno, “Online Estimation of Geometric and Inertia Parameters for Multirotor Aerial Vehicles,” IEEE International Conference on Robotics and Automation (ICRA), 2019.

- [22] J. Jang and J. H. Park, “Parameter Identification of an Unknown Object in Human-Robot Collaborative Manipulation,” 20th International Conference on Control, Automation and Systems (ICCAS), 2020.

- [23] S. Kim, K. Jang, S. Park, Y. Lee, S-Y. Lee and J. Park, “Whole-body Control of Non-holonomic Mobile Manipulator Based on Hierarchical Quadratic Programming and Continuous Task Transition,” IEEE 4th International Conference on Advanced Robotics and Mechatronics (ICARM), 2019.

- [24] R. Dhaouadi and A-A. Hatab, “Dynamic Modelling of Differential-Drive Mobile Robots using Lagrange and Newton-Euler Methodologies: A Unified Framework,” Advances in Robotics & Automation, vol. 2, no. 2, 2013.

- [25] K. Bussmann, A. Dietrich and C. Ott, “Whole-Body Impedance Control for a Planetary Rover with Robotic Arm: Theory, Control Design, and Experimental Validation,” IEEE International Conference on Robotics and Automation (ICRA), 2018.

- [26] Christian Ott, “Cartesian Impedance Control of Redundant and Flexible-Joint Robots,” Springer, 2008.

- [27] http://wiki.ros.org/robots/husky.

- [28] A. Dietrich, K. Bussmann, F. Petit, P. Kotyczka, C. Ott, B. Lohmann and A. Albu-Schäffer, “Whole-body impedance control of wheeled mobile manipulators,” Autonomous Robots, vol. 40, no. 3, pp. 505-517, 2016.

- [29] J. Park and Y. Park, “Optimal Input Design for Fault Identification of Overactuated Electric Ground Vehicles,” IEEE Transactions on Vehicular Technology, vol. 65, no. 4, pp. 1912-1923, 2016.

Jinseong Park (Member, IEEE) received B.S., M.S. and Ph.D. degrees in mechanical engineering from the Korea Advanced Institute of Science and Technology (KAIST), Daejeon, Republic of Korea, in 2008, 2010 and 2016, respectively. He was a senior researcher at Hyundai Robotics Co., Ltd., Ulsan, Republic of Korea, from 2016 to 2017. He has been a senior researcher at the Korea Institute of Machinery and Materials (KIMM), Daejeon, Republic of Korea, since 2017. His research interests include optimal control, active vibration control, and fault diagnosis, with an emphasis on mobile manipulators, vehicles, and magnetic levitation systems.

Young-Sik Shin (Member, IEEE) received a B.S. degree in electrical engineering from Inha University, Incheon, South Korea, in 2013, and M.S. and Ph.D. degrees in civil and environmental engineering with a dual degree from the Robotics Program, Korea Advanced Institute of Science and Technology (KAIST), Daejeon, South Korea, in 2015 and 2020, respectively. He is currently a senior researcher with the Korea Institute of Machinery and Materials (KIMM), Daejeon. His research interests include robust simultaneous localization and mapping and navigation using heterogeneous sensors.

Sanghyun Kim (Member, IEEE) received a B.S. degree in mechanical engineering and a Ph.D. degree in intelligent systems from Seoul National University, South Korea, in 2012 and 2020, respectively. He was a postdoctoral researcher at the University of Edinburgh, U.K., in 2020, and a senior researcher at the Korea Institute of Machinery and Materials (KIMM), South Korea, from 2020 to 2023. He is currently an assistant professor of mechanical engineering at Kyung Hee University. His main research interests include the optimal control of mobile manipulators.