Numerical Approximations of the Allen-Cahn-Ohta-Kawasaki (ACOK) Equation with Modified Physics Informed Neural Networks (PINNs)

Abstract

The physics informed neural networks (PINNs) has been widely utilized to numerically approximate PDE problems. While PINNs has achieved good results in producing solutions for many partial differential equations, studies have shown that it does not perform well on phase field models. In this paper, we partially address this issue by introducing a modified physics informed neural networks. In particular, they are used to numerically approximate Allen-Cahn-Ohta-Kawasaki (ACOK) equation with a volume constraint. Technically, the inverse of Laplacian in the ACOK model presents many challenges to the baseline PINNs. To take the zero-mean condition of the inverse of Laplacian, as well as the volume constraint, into consideration, we also introduce a separate neural network, which takes the second set of sampling points in the approximation process. Numerical results are shown to demonstrate the effectiveness of the modified PINNs. An additional benefit of this research is that the modified PINNs can also be applied to learn more general nonlocal phase-field models, even with an unknown nonlocal kernel.

keywords:

Physics Informed Neural Networks, Allen-Cahn-Ohta-Kawasaki Equation, Phase Field Models1 Introduction

Ohta-Kawasaki(OK) free energy[22], usually associated with a volume constraint, has been used to simulate the phase separation of diblock copolymers, i.e., polymers consisting of two types of monomers, and , that are chemically incompatible and connected by a covalent chemical bond. It is formulated as

| (1.1) |

Here is a periodic domain, and is a phase field labeling function representing the density of species with interfacial width . Function is a double well potential. The nonlinear function keeps the structure of as and . More importantly it also has the property that , which localize the force and avoid possible non-zeros and non-ones near the boundary of the interface [29] when considering gradient flow dynamics of (1.1). The first integral is a local surface energy, the second term represents the long-range interaction between the molecules with controlling its strength and being the fraction of species , and the third term is a penalty term to fulfill the volume constraint

| (1.2) |

Allen-Cahn-Ohta-Kawasaki(ACOK) model [29], which is the focus of this paper, takes the gradient dynamics of the OK free energy in (1.1) as

| (1.3) | ||||

| (1.4) |

Various successful and efficient PDE solvers can be applied to the model, such as Finite Difference, Finite Element [20], Spectral method [10, 15], etc. In recent years, some novel numerical methods have also been studied for Cahn-Hilliard dynamics, i.e., the gradient flow dynamics of OK energy, such as the midpoint spectral method [1], and the Invariant Energy Quadratization (IEQ) method [5]. In [29], a first-order energy stable linear semi-implicit method is introduced and analyzed for the ACOK equation. Our goal in this paper is to study the approximation of the solution of the ACOK equation with neural networks.

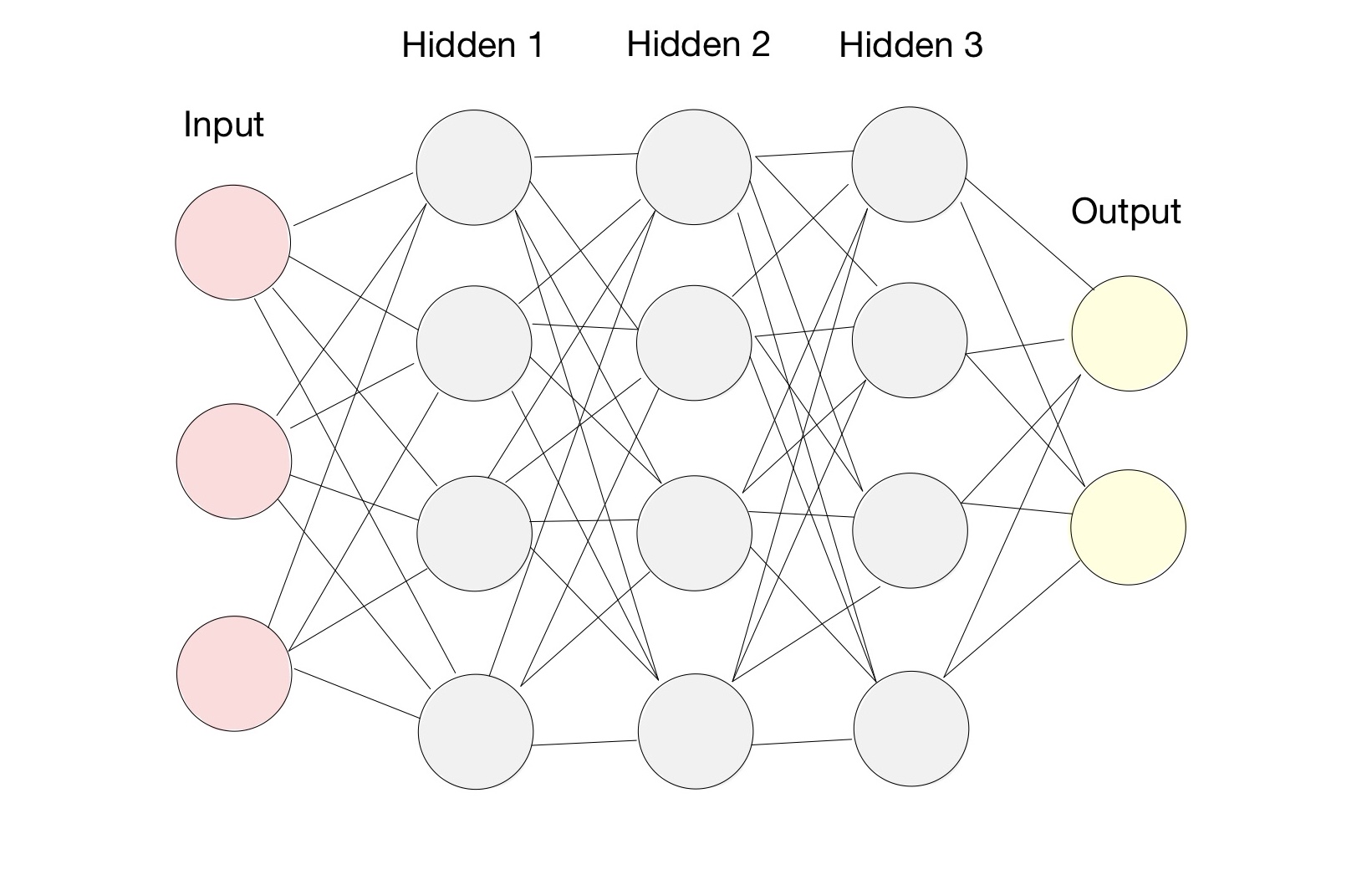

Recently, there has been an increasing interest in solving PDEs with machine learning methods, among which the physics-informed neural networks(PINNs) [24] have gained tremendous popularity. PINNs are based on a fully-connected feed-forward deep neural network (DNN)[14, 26], which is often interpreted utilizing universal approximation theorem [6, 18, 12, 11]. The structure of a fully-connected DNN, consisting of an input layer, hidden layers, and an output layer, is shown in Figure 1. Every node in one layer is connected with every node in another by a series of computations. The nodes in the input layer are the specific data studied, and the nodes in the output are the expected outcome. For example, in the case of facial recognition, the input could be the RBG values (features) of each pixel of the sample pictures, while the output could be the assigned numerical values representing the individuals (classes). The nodes in the hidden layers are called neurons, and they are responsible for the performance of the neural network. There can be many hidden layers in a neural network; in the case of Figure 1, the number of hidden layers is three.

A column vector is fed to each node of the input layer. Then in the first hidden layer, we multiply it by another row vector, i.e., weight, to get the product. This is the case that there is only one neuron in the relevant hidden layer. If there are more neurons, the row vector will be a weight matrix instead, where the number of rows of the matrix represents the number of neurons. A bias vector is then added to the product, and an activation function is applied to the new vector, which contributes to the output of the current hidden layer, which is also the input of the next layer. In a nutshell, let be the number of hidden layers, be the input of the neural network, then the output of the neural network will be , with

| (1.5) | ||||

| (1.6) |

where is the input, represents the weights, represents the bias, and is the activation function in the layer.

To obtain the proper weights and bias, we perform an optimization process, for example, gradient descent, on the weight and bias to update them. To calculate the derivatives involved in the optimization process, the network goes through a process called back-propagation [25], which is essentially a chain rule applied on each layer starting from the final one. We start with a certain loss function:

where is the object, the network is approximating, and denotes the weights and bias of the DNN. The loss function, depending on the specific problem, could be a mean square error (MSE), mean absolute error (MAE), log loss function, etc. We then perform back-propagation on the loss function, trace all the way back to get the derivatives of weights and bias for each layer, and then use the optimization tool to update the weights and bias. A gradient descent update is as follows:

where is the learning rate, and is the direction of update. It works essentially like a directing system, telling you which direction to go and how far to go in that direction based on your current location and destination. The optimization process will be repeated several times until the prescribed accuracy is achieved. We call this number of repetitions the number of epochs.

Furthermore, there are a variety of optimizers that can be selected. The previously mentioned gradient descent is one of them. While it has many advantages, it does not work well in our non-convex problem due to its notoriously poor performance on escaping local minimums [7]. Therefore, other optimizers, such as momentum [23], ADAM [16], Adadelta [30], RMSprop [13], etc are developed to better address this issue. The momentum method adds an accelerator in the relevant direction. For example, think about rolling a ball down a ramp. As the ball goes down, it gains momentum and travels faster and faster. Other methods, like Adadelta, use different forms of adaptive learning rates. It is worth mentioning that RMSprop works by dividing “the learning rate for a weight by a running average of the magnitudes of recent gradients for that weight” [13].

In our case, we adopt ADAM as our optimizer for each epoch and L-BFGS-B with a certain stopping condition as the optimizer afterward. Introduced in [16], ADAM can be viewed as a combination of the momentum method and RMSprop. It uses the mean and uncentered variance of the gradient with regards to time to adapt the learning rate for each weight and bias of the neural network:

where is the gradient of the loss function at time step , and the hyper-parameters direct the decay rates of and . A bias correction is also applied to and , which leads to

and to update the weights and bias, we perform , where is the learning rate, and is arbitrary. On the other hand, the L-BFGS-B optimizer [3] uses a “limited memory” Broyden–Fletcher– Goldfarb–Shanno (BFGS) [2, 8, 9, 27] matrix to represent the Hessian matrix of the objective function, making it great for optimization problems with a large number of variables. Stems from the L-BFGS method [19, 21], L-BFGS-B places upper and lower bound constraints on variables. It identifies fixed and free variables for each step with a basic gradient method. It then performs the L-BFGS method on the free variables to achieve better accuracy.

For PINNS, sampled collocation points are fed to the DNN, and the function value is returned to the output layer. Weight and bias in each layer can be learned or updated by optimizing the error function to reach the prescribed accuracy, in which the back-propagation technique is needed to compute the first-order derivatives. Different from traditional machine learning DNN, which requires a very large amount of ground truth data to make an accurate prediction, PINNs take advantage of the physics information and make up for the lack of sufficient ground truth data. It divides the error function into two components, one corresponding to the known ground truth data and the other corresponding to the physics information. Consequently, our goal is to minimize the error between the limited amount of ground truth and the prediction, as well as how much the prediction ”fits” the physics information. Furthermore, as shown in [4], PINNs can be employed to solve inverse problems, in particular, inverse scatter problems in photonic metamaterials and nano-optics technologies, by transforming them into parameter retrieving problems. However, PINNs also have their downsides. As suggested in [17], “existing PINN methodologies can learn good models for relatively trivial problems”, and it can fail when predicting solutions for a more complex model, which is likely due to the fact that the setup of PINNs makes it hard to optimize the less-smooth loss landscape. They have, therefore, proposed a “curriculum regularization”, which is finding a good initialization for weights and training the model gradually. Notably, this has made the optimization process easier. They also mentioned a sequence-to-sequence learning method, which is similar to the Time-Adaptive Method proposed in [28]. This method can make learning easier by learning in small intervals.

While many successful and efficient traditional numerical methods exist, our motivation to study the ACOK equation using machine learning methods is that they can learn the unknown operators in a family of generalized equations from a set of data samples, i.e., the inverse problem. This would benefit future studies focusing on nonlocal PDEs with general nonlocal operators. It can also potentially help tackle real-world challenges in fields such as physics, medicine, fluid dynamics, aerospace, etc. Our contribution to this work is to tackle the challenges that the complexity of the ACOK model has imposed on PINNs. As shown in 1.1, the long-range interaction term, the volume constraint, and the zero-mean condition make it necessary to apply computational and structural modifications to the basic PINNs. Our solution for the long-range interaction term is to apply the Laplace operator on a second neural network output and ensure its accuracy. We introduce a separate neural network into the model for the volume constraint and the zero-mean condition, which takes a separate set of uniform sampling points as its input.

The rest of this paper is organized as follows. In Section 2, we present the numerical methods. Specifically, we briefly revisit the baseline PINNs in Section 2.1. Then we elaborate on the modified PINNs, including the application of the periodic boundary condition, the approximation of the long-range interaction term, the volume constraint, and the zero-mean condition, in Section 2.2. We will then present and discuss some 1-dimensional results in Section 3. In the end, we give a detailed discussion and explain our future work in Section 4.

2 Numerical Methods

Our goal in this paper is to take the ground truth data as input, and predict the solution of ACOK equation with neural networks.

2.1 Baseline PINNs

For the basic setup, we follow the basic PINNs. Let us recall (1.1) and define the residual function as

| (2.1) |

where can be approximated by a deep neural network , and can be calculated by a shared parameter neural network based on the predicted . The network can be learned by minimizing the mean square error:

where

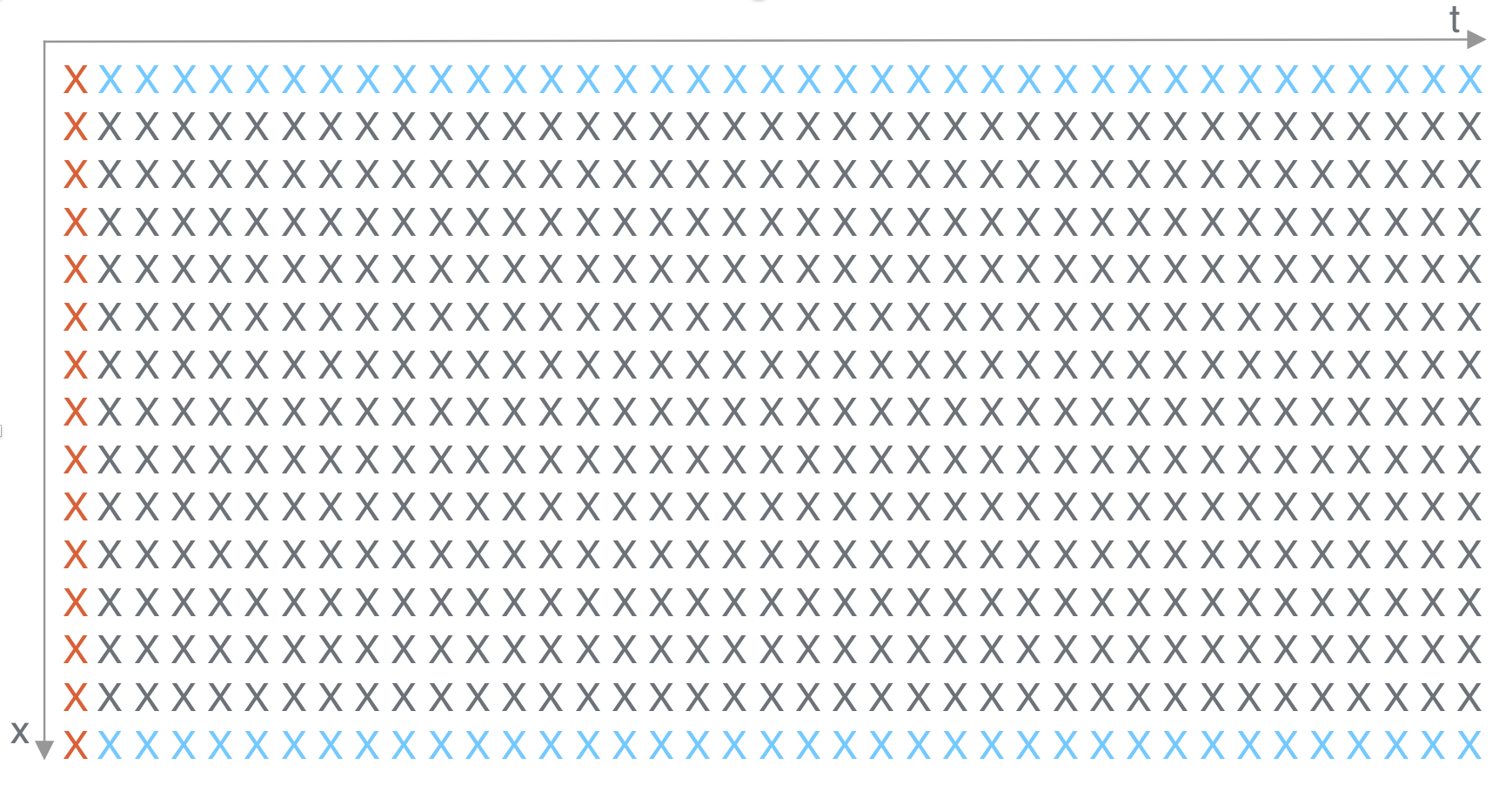

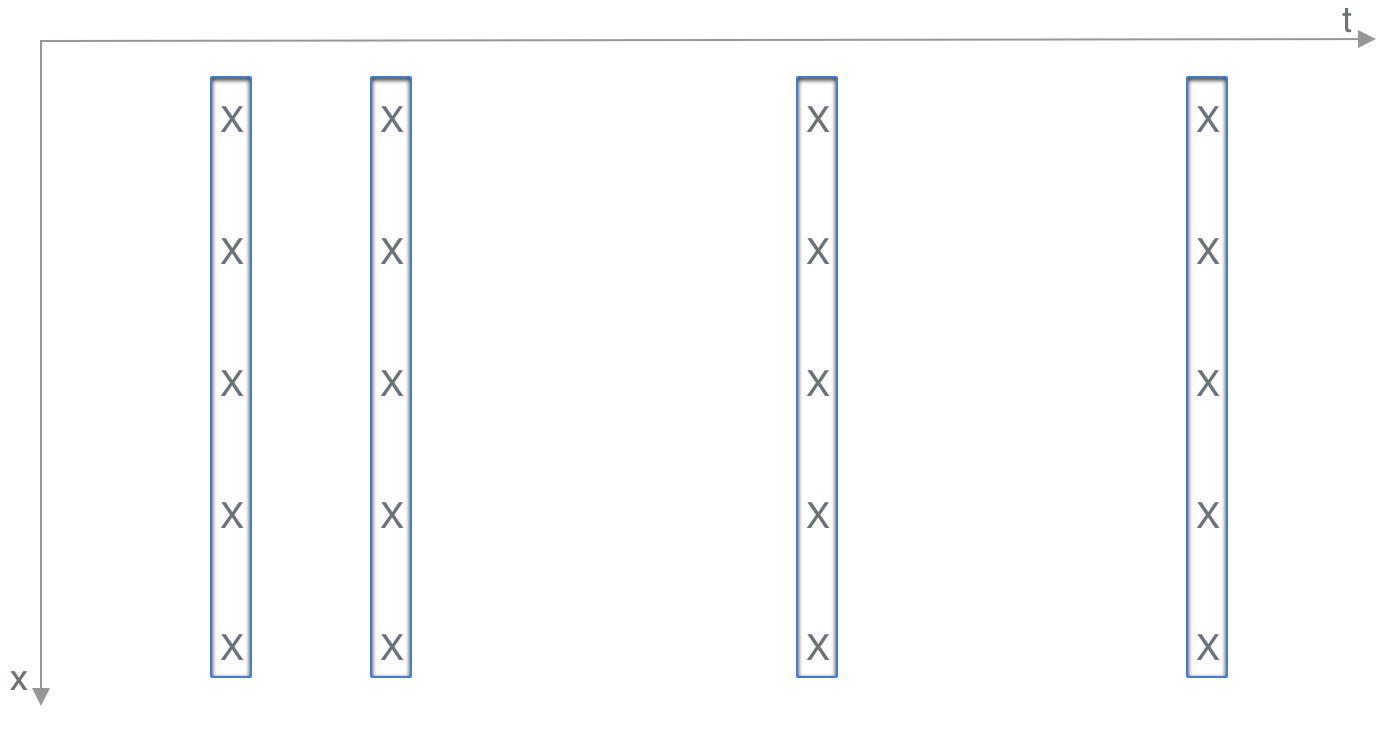

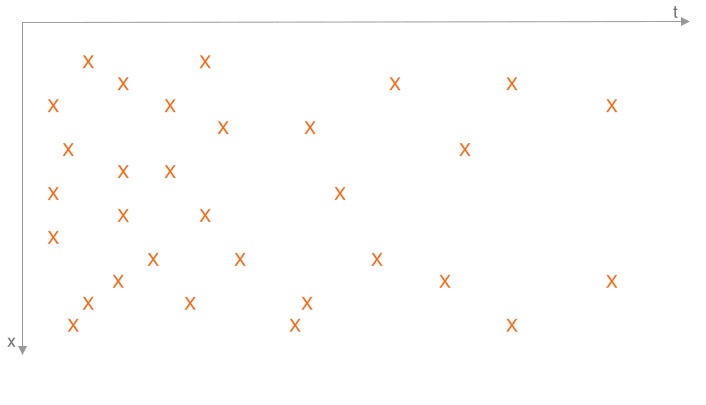

As shown in Figure 2, are selected from the collocation points that are along the boundary, i.e. the blue points, and at the initial time, i.e. the red points. Also, are sampled from the internal collocation points, i.e. the black points.

For the purpose of computational efficiency and prediction accuracy, a random sample is taken from the initial and boundary collocation points. Moreover, the internal points are selected near-randomly using Latin Hypercube Sampling(LHS) method.

We take pairs of and as the input, and employ the feed forward deep neural network(DNN) to approximate the value at these collocation points: and . To check the accuracy of the prediction, we take the mean square error between the ground truth and the prediction . Note that we take the mean square error of obtained through to ensure that the approximation by the DNN satisfies the physics, which is described by the ACOK equation.

As previously mentioned, ADAM is selected as our optimizer for each epoch, and L-BFGS-B is employed in the last step. Both of them have built-in packages developed in Python for implementation. We also use as the activation function for each hidden layer.

2.2 Modified PINNs

Next, we demonstrate how we develop the modified PINNs. There are several difficulties in applying the baseline PINNs directly to the ACOK equation, which motivate us to propose the modified PINNs to overcome these issues.

2.2.1 Periodic boundary conditions

As we have the periodic boundary condition in the ACOK equation, is separated further into two components, one corresponding to the initial collocation points, i.e. , and the other corresponding to the boundary collocation points, i.e. . Hence, we can update the loss function as:

where

and

Since we have the ground truth at the initial time, we take the mean square error between the value predicted by the DNN, , and the ground truth . However, the periodic boundary condition means that we will calculate by taking the mean square error between the value on the lower bound, i.e. , and the value on the upper bound, i.e. , at the same time , since the ground truth value of is unknown.

2.2.2 Approximation of the inverse of Laplacian

One major difficulty is how to approximate the long-range interaction term in our model. As stated above, the purpose of the shared parameter neural network is to approximate given . Recall (2.1):

While , can be obtained by back-propagation, and , and can be directly calculated using the explicit expression, the difficulty lies with the approximation of and .

To approximate the inverse of the Laplacian

we add a second output to to approximate

If a operator is applied on both sides, we recover:

Since has already been approximated by the DNN, can be calculated by back-propagation. To ensure the accuracy of , a mean square error can be taken between and , as the latter is achieved by the explicit expression:

and the first four terms of can be calculated by:

Furthermore, as the ground truth of and the periodic boundary condition on are available, the total mean square loss is modified as:

where

2.2.3 Integral approximation

Next, we propose a structural modification to approximate the integral term. The integral term

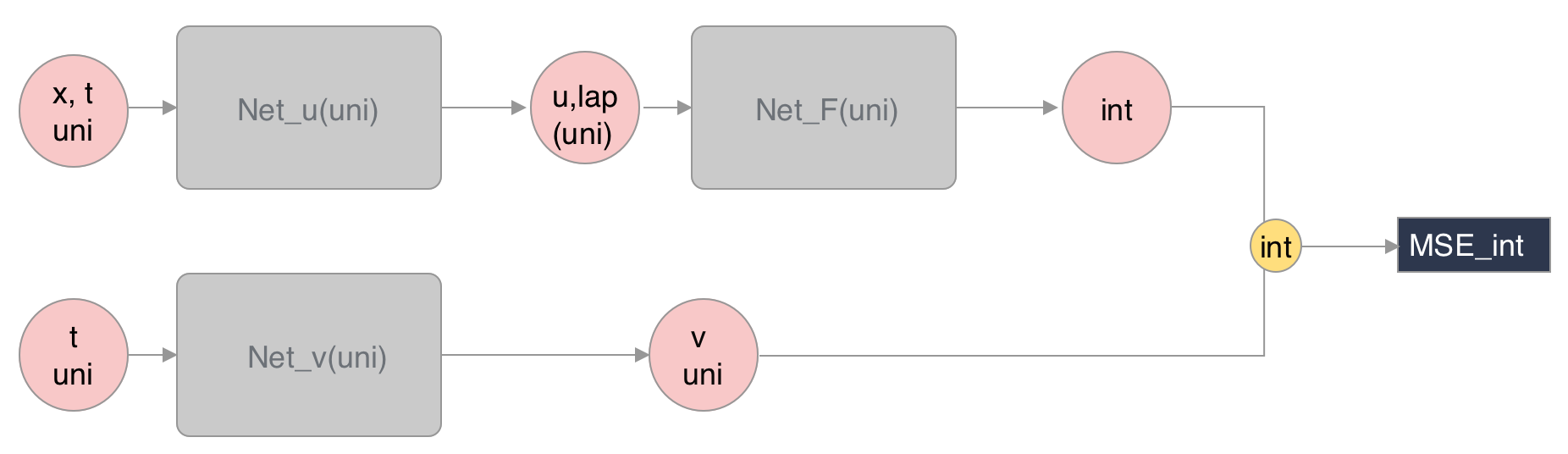

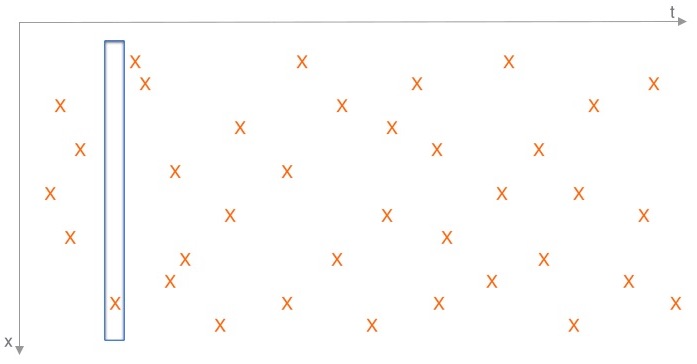

imposes a problem to the modified PINNs, as the near-randomly sampled collocation points are not evenly distributed along each . It is usually the case that at some there are one or no points selected, shown in Figure 4. As a result, the integral can not be calculated accurately for each . To resolve this issue, a separate shallow neural network is introduced, which only takes as input and has a built-in uniform mesh on . This network aims to output the value of the integral term for each randomly sampled . After the network is trained, the updated optimal weights and bias ensure that for any input , the network will produce the corresponding , even if the new input is different from the used for training.

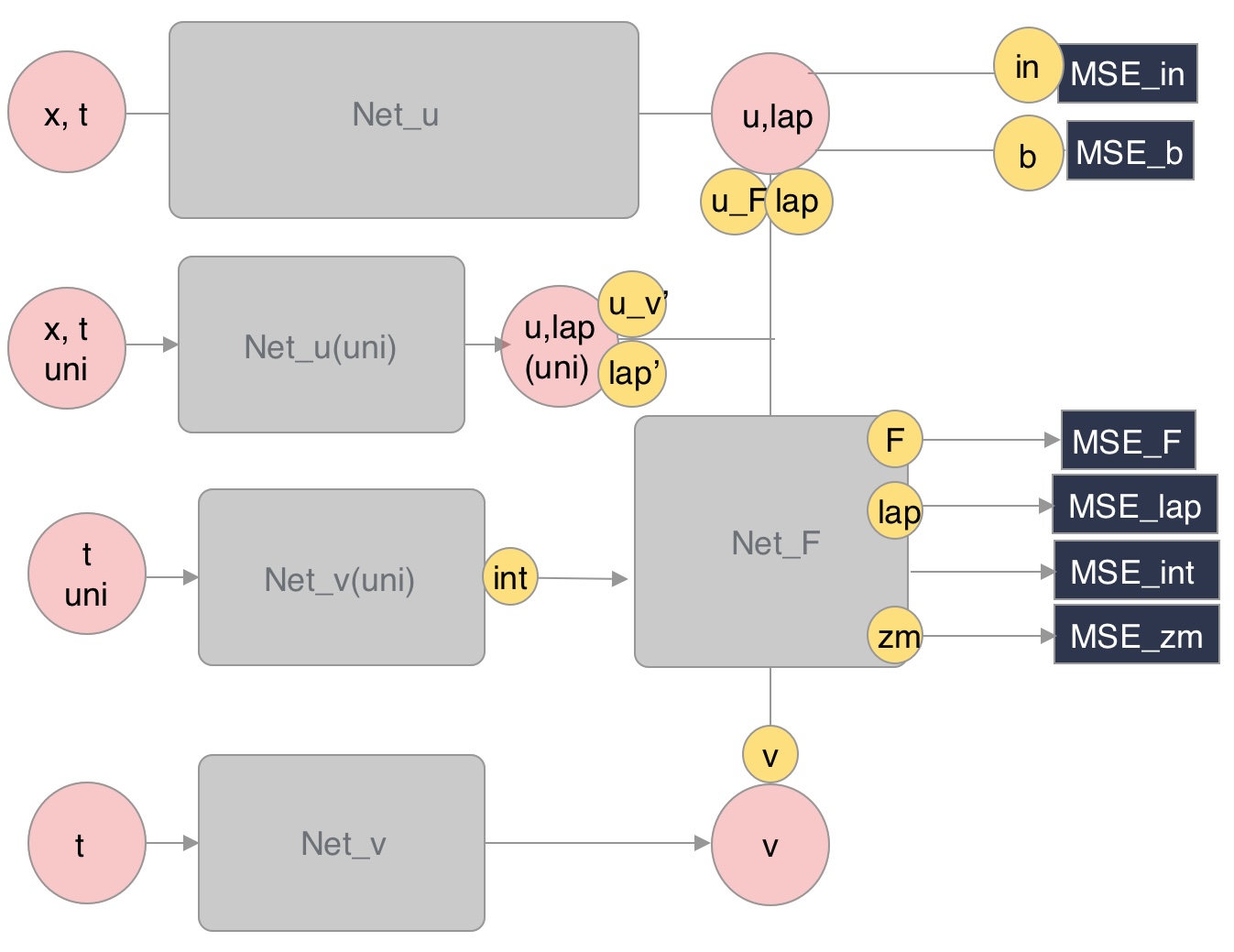

As demonstrated in Figure 3, to train , the mean square error between the output of and the discrete form of the integral

is taken:

where denotes the new uniform and random mesh as in Figure 4, and can be output by with the same set of input. Furthermore, is obtained by taking the column sum of output of along each sampled direction.

After the optimal weight and bias are achieved, the part of the original LHS sampled points is fed to , and outputs

and can be approximated by

which is calculated in . Taking the mean square error will obtain the complete .

Therefore, the total mean square loss is modified as:

where

2.2.4 Zero-mean constraint

For the zero-mean condition on the inverse of the Laplacian, since the calculation of the mean is involved, the uniform and random meshgrid created for the integral approximation can be used as input of , and the column sum of the output , which corresponds to each time , is minimized in a loss function

The complete modified PINNs structure is shown in Figure 5.

Hence, the modified PINNs is learned by minimizing the mean square loss:

Moreover, since certain components have more importance over the others, such as the mean square error on the initial condition for its ground truth availability, adjustable weights are applied to each component:

3 Numerical Results

Now we have all the pieces regarding how to apply PINNs on ACOK. We can combine them and implement them in Python. The code is based on the work of Maziar Raissi et al. [24]. We now show the results of the modified PINNs on 1-dimensional ACOK with different time intervals, ranging from s to s. Fine-tuning on hyper-parameter is needed to reach the best results, but it is out of our current research scope.

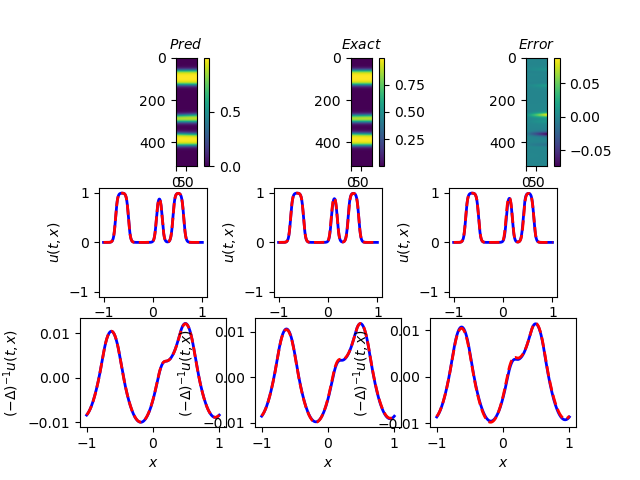

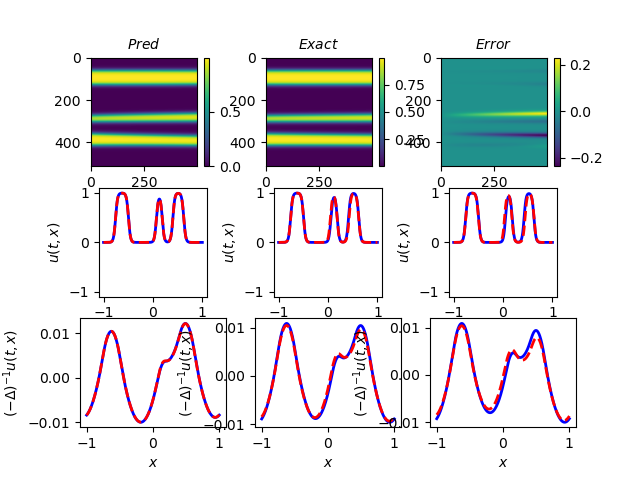

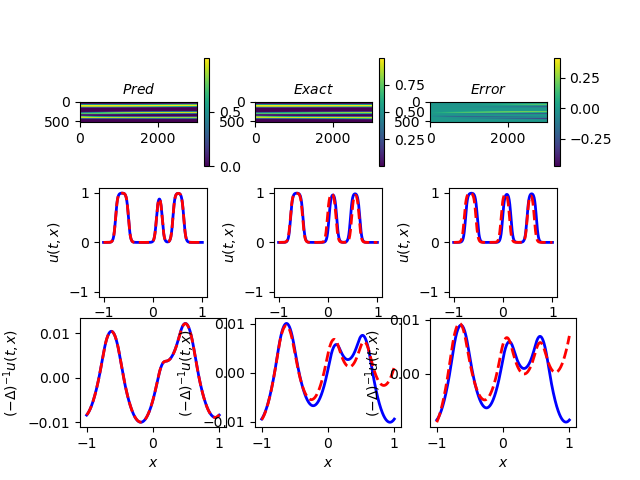

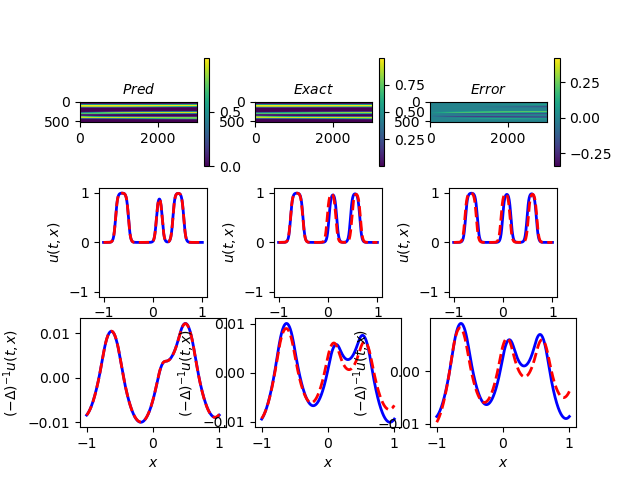

Figure 6 are run for epochs, with weights , , , , , , , . There are hidden layers in , each containing 20 neurons, while only hidden layers with neurons for each layer were needed for , since it is a much simpler neural network.

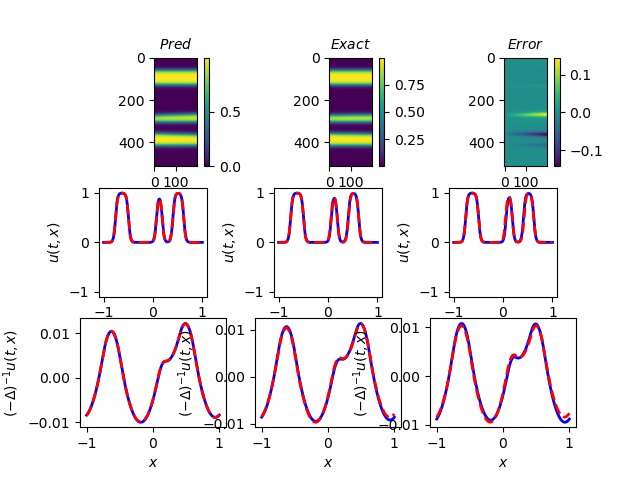

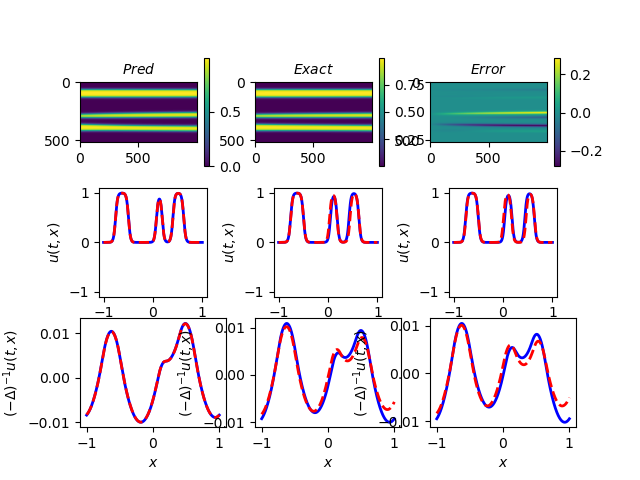

We can see that the modified PINNs perform remarkably well in smaller time periods. However, as the time interval increases, the prediction of at the right tail is getting notably worse. There are several ways to address this problem. One approach is the Time-Adaptive Method [28], or similarly, the sequence-to-sequence learning [17], which learns only one small-time period at a time and then uses the prediction as to the initial data for the next period. Since our model approximates well with short time intervals, we can compile all the sub-results together to get the entire solution. For example, we could use the data of the last time column in Figure 6(a) as the initial data for the next 100 columns and repeat the process in 100 column increments to achieve good results.

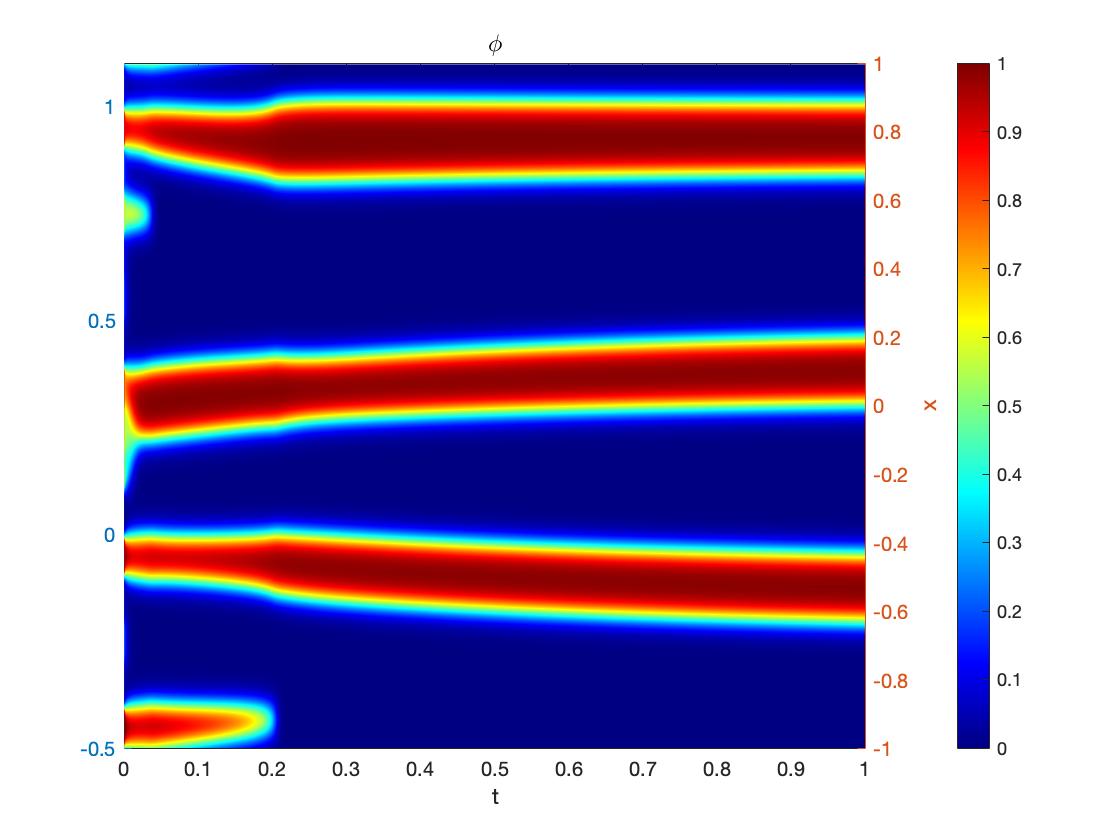

Furthermore, the problem can be improved by rescaling the sampling points. As shown in Figure 7(a), changes in phase separation occur most rapidly during the earlier time period. Therefore, we propose rescaling the sampling points to be denser in earlier time columns. Recall Figure 4, we continue to use the LHS sampling, but rescale the points polynomially to achieve the denser in the beginning effect, as in Figure 7(b).

The results are shown as follows, Figure 8(a) is the result with original LHS sampling, with time interval being s. Notice that the right tail on fits quite badly in this case due to the long time interval. Figure 8(b) is the result with a rescaling on the points. Although it does not fix the problem entirely, we can see that the tail on fits much better with a rescaling. In some cases, rescaling does not improve the result much. Therefore, rescaling could potentially be used to improve the fitting further.

4 Discussion and future work

During implementation, we have discovered that our modified PINNs are challenging to train due to their many hyper-parameters. Some hyper-parameters, such as learning rate, number of hidden layers, and number of neurons of each layer, are fixed during our training. However, the weights, number of epochs, and number of sampling points need fine-tuning to achieve the best results.

The most challenging part of our fine-tuning is finding a well-balanced set of weights. Each weight controls a different part of the estimation. For instance, , controls the fitting of initial data, which is undoubtedly an essential part of our loss function since the initial data is the only ground truth data we have. But they differ from each other, as we later find out that the scale of is 100 times smaller than that of . Therefore, we also consider that by increasing to counter the scale issue. A similar scaling technique is applied to and , which controls the periodic boundary conditions.

dominates the physics information in our model, while controls the accuracy of the approximation of the long-range interaction term. We have learned that the accuracy of the long-range interaction term is one of the key players in our approximation. directs the volume constraint and, therefore, can be increased if the predicted result has an incorrect volume. applies only to the , which means the scaling problem also needs to be taken into consideration. We have also discovered that the interval of a number of epochs is quite important. The model will not train well with too few epochs. However, if we boost the number of epochs to the scale of the thousands, the model is over-trained and does not perform well.

Moreover, we tune the number of the sampling points using the principle that we want to keep the randomness while at the same time preserving as much of the known information as possible. We also remain attentive to the efficiency of the program. Again, the initial data, being the only piece of ground truth information available, need to have the most sampling points proportionally. We do not take all the initial data because we want to keep the randomness. We also sample a relatively large percentage of the boundary points for the same reason. As the total number of the grid points is quite large for the internal points, we cannot take as many as the sampling points while keeping the program’s efficiency. Randomness also plays a crucial part here. Therefore, we only select less than half of the points. The number of randomly selected columns in the uniform mesh, , follows the same principle.

To increase the randomness, we also implemented a mini-batch method. The idea is that instead of considering all the points simultaneously, we consider a small subset of the points at a time. In theory, it should need fewer epochs and converge faster. However, as the computation in our case is quite complex, we have discovered that the mini-batch method takes significantly longer time than the regular approach.

We are currently working on the 2-dimensional case of this problem. As the number of points has drastically increased for the same time period, we have run into technical issues, in particular, insufficient memory capacity. To address this issue, we have to dramatically decrease the sampling points, even on the initial data. Also, we are keeping the time interval relatively small for the same reason. Additionally, for the boundary condition, we now have the upper and lower bound and the front and back bound. A more complicated back-propagation further lengthens the training time due to the second-order derivative. These are the difficulties we have encountered in the 2-dimensional case.

Possible future work includes investigating the inverse problem for nonlocal phase-field models with unknown nonlocal operators. We could train our model to learn the dynamics using the existing data and obtain a set of trained weights and bias representing the unknown operator to compare with a number of known possible operators. Furthermore, we could also explore other methods to improve the efficiency of the training process. The current one-dimensional results run within an hour. However, as the structure of the DNN becomes more complicated, training time increases drastically, likely caused by the more complex computations and the increased number of epochs. One possible solution is to start the currently randomized initial weights and bias closer to the solution, which will significantly lower the number of epochs, and by extension, decrease the training time overall. Additionally, various other machine learning methods, such as FNO, conventional neural networks, and transformer-based methods, can be applied to PDEs. These are also some exciting directions to explore.

Acknowledgements

The work of J. Xu and Y. Zhao is supported by a grant from the Simons Foundation through Grant No. 357963 and NSF grant DMS-2142500. J. Zhao would like to acknowledge the support from National Science Foundation, United States, with grant DMS-2111479.

References

- [1] Barbora Benešová, Christof Melcher, and Endre Süli. An implicit midpoint spectral approximation of nonlocal Cahn–Hilliard equations. SIAM Journal on Numerical Analysis, 52:1466–1496, 01 2014.

- [2] C. G. BROYDEN. The Convergence of a Class of Double-rank Minimization Algorithms 1. General Considerations. IMA Journal of Applied Mathematics, 6(1):76–90, 03 1970.

- [3] Richard H. Byrd, Peihuang Lu, Jorge Nocedal, and Ciyou Zhu. A limited memory algorithm for bound constrained optimization. SIAM Journal on Scientific Computing, 16(5):1190–1208, 1995.

- [4] Yuyao Chen, Lu Lu, George Karniadakis, and Luca Negro. Physics-informed neural networks for inverse problems in nano-optics and metamaterials. Optics Express, 28, 03 2020.

- [5] Qing Cheng, Xiaofeng Yang, and Jie Shen. Efficient and accurate numerical schemes for a hydro-dynamically coupled phase field diblock copolymer model. J. Comput. Phys., 341:44–60, 2017.

- [6] George Cybenko. Approximation by superpositions of a sigmoidal function. Technical report, Department of computer Science, Tufts University, Medford, MA, October 1988.

- [7] Yann N. Dauphin, Razvan Pascanu, Çaglar Gülçehre, Kyunghyun Cho, Surya Ganguli, and Yoshua Bengio. Identifying and attacking the saddle point problem in high-dimensional non-convex optimization. CoRR, abs/1406.2572, 2014.

- [8] R. Fletcher. A new approach to variable metric algorithms. The Computer Journal, 13(3):317–322, 01 1970.

- [9] Donald Goldfarb. A family of variable-metric methods derived by variational means. Mathematics of Computation, 24:23–26, 1970.

- [10] David Gottlieb and Steven A. Orszag. Numerical Analysis of Spectral Methods. Society for Industrial and Applied Mathematics, 1977.

- [11] Mohamad H. Hassoun. Fundamentals of Artificial Neural Networks. MIT Press, Cambridge, MA, 1995.

- [12] Simon Haykin. Neural Networks: A Comprehensive Foundation. Prentice Hall, 1999.

- [13] Geoffrey Hinton, Nitish Srivastava, and Kevin Swersky. Neural networks for machine learning, lecture 6a overview of mini-batch gradient descent, 2012.

- [14] J J Hopfield. Neural networks and physical systems with emergent collective computational abilities. Proceedings of the National Academy of Sciences, 79(8):2554–2558, 1982.

- [15] M. Yousuff. Hussaini, Thomas A. Zang, Institute for Computer Applications in Science, and Engineering. Spectral methods in fluid dynamics. Institute for Computer Applications in Science and Engineering, NASA Langley Research Center ; National Technical Information Service, 1986.

- [16] Diederik Kingma and Jimmy Ba. Adam: A method for stochastic optimization. International Conference on Learning Representations, 12 2014.

- [17] Aditi S. Krishnapriyan, Amir Gholami, Shandian Zhe, Robert M. Kirby, and Michael W. Mahoney. Characterizing possible failure modes in physics-informed neural networks. CoRR, abs/2109.01050, 2021.

- [18] Kurt and Hornik. Approximation capabilities of multilayer feedforward networks. Neural Networks, 4(2):251–257, 1991.

- [19] Hermann G. Matthies and Gilbert Strang. The solution of nonlinear finite element equations. International Journal for Numerical Methods in Engineering, 14:1613–1626, 1979.

- [20] K. W. Morton and D. F. Mayers. Numerical Solution of Partial Differential Equations: An Introduction. Cambridge University Press, 2 edition, 2005.

- [21] Jorge Nocedal. Updating quasi-newton matrices with limited storage. Mathematics of Computation, 35(151):773–782, 1980.

- [22] Takao Ohta and Kyozi Kawasaki. Equilibrium morphology of block copolymer melts. Macromolecules, 19:2621–2632, 1986.

- [23] Ning Qian. On the momentum term in gradient descent learning algorithms. Neural Networks, 12(1):145–151, January 1999.

- [24] Maziar Raissi, Paris Perdikaris, and George Karniadakis. Physics-informed neural networks: A deep learning framework for solving forward and inverse problems involving nonlinear partial differential equations. Journal of Computational Physics, 378, 11 2018.

- [25] David E. Rumelhart, Geoffrey E. Hinton, and Ronald J. Williams. Learning Representations by Back-propagating Errors. Nature, 323(6088):533–536, 1986.

- [26] David E. Rumelhart, James L. McClelland, and CORPORATE PDP Research Group, editors. Parallel Distributed Processing: Explorations in the Microstructure of Cognition, Vol. 1: Foundations. MIT Press, Cambridge, MA, USA, 1986.

- [27] D. F. Shanno. Conditioning of quasi-newton methods for function minimization. Mathematics of Computation, 24(111):647–656, 1970.

- [28] Colby L. Wight and Jia Zhao. Solving Allen-Cahn and Cahn-Hilliard equations using the adaptive physics informed neural networks. CoRR, abs/2007.04542, 2020.

- [29] Xiang Xu and Yanxiang Zhao. Energy stable semi-implicit schemes for Allen–Cahn–Ohta–Kawasaki model in binary system. Journal of Scientific Computing, 80, 09 2019.

- [30] Matthew D. Zeiler. Adadelta: An adaptive learning rate method. CoRR, abs/1212.5701, 2012.