Numerical analysis of American option pricing in a two-asset jump-diffusion model

Abstract

This paper addresses an important gap in rigorous numerical treatments for pricing American options under correlated two-asset jump-diffusion models using the viscosity solution framework, with a particular focus on the Merton model. The pricing of these options is governed by complex two-dimensional (2-D) variational inequalities that incorporate cross-derivative terms and nonlocal integro-differential terms due to the presence of jumps. Existing numerical methods, primarily based on finite differences, often struggle with preserving monotonicity in the approximation of cross-derivatives-a key requirement for ensuring convergence to the viscosity solution. In addition, these methods face challenges in accurately discretizing 2-D jump integrals.

We introduce a novel approach to effectively tackle the aforementioned variational inequalities while seamlessly handling cross-derivative terms and nonlocal integro-differential terms through an efficient and straightforward-to-implement monotone integration scheme. Within each timestep, our approach explicitly enforces the inequality constraint, resulting in a 2-D Partial Integro-Differential Equation (PIDE) to solve. Its solution is then expressed as a 2-D convolution integral involving the Green’s function of the PIDE. We derive an infinite series representation of this Green’s function, where each term is strictly positive and computable. This series facilitates the numerical approximation of the PIDE solution through a monotone integration method, such as the composite quadrature rule. To further enhance efficiency, we develop an efficient implementation of this monotone integration scheme via Fast Fourier Transforms, exploiting the Toeplitz matrix structure.

The proposed method is proved to be both -stable and consistent in the viscosity sense, ensuring its convergence to the viscosity solution of the variational inequality. Extensive numerical results validate the effectiveness and robustness of our approach, highlighting its practical applicability and theoretical soundness.

Keywords: American option pricing, two-asset Merton jump-diffusion model, variational inequality, viscosity solution, monotone scheme, numerical integration

AMS Subject Classification: 65D30, 65M12, 90C39, 49L25, 93E20, 91G20

1 Introduction

In stochastic control problems, such as those arising in financial mathematics, value functions are often non-smooth, prompting the use of viscosity solutions [20, 22, 31, 59]. The framework for provable convergence numerical methods, established by Barles and Souganidis in [9], requires them to be (i) -stable, (ii) consistent, and (iii) monotone in the viscosity sense, assuming a strong comparison principle holds. Achieving monotonicity is often the most difficult criterion, and non-monotone schemes can fail to converge to viscosity solutions, leading to violations of the fundamental no-arbitrage principle in finance [57, 61].

While monotonicity is a well-established sufficient condition for convergence to the viscosity solution [9], its necessity remains an open question. In some specialized settings (see, for example, [68, 14]), non-monotone schemes can still converge, yet no general result analogous to Barles–Souganidis [9] exists for such methods. Determining whether monotonicity is strictly required for convergence remains an important open problem in viscosity-solution-based numerical analysis. Nonetheless, monotone schemes remain the most reliable approach for ensuring convergence in complex settings.

Monotone finite difference schemes are typically constructed using positive coefficient discretization techniques [67], and rigorous convergence results exist for one-dimensional (1D) models, both with and without jumps [27, 33]. However, extending these results to multi-dimensional settings presents significant challenges, particularly when the underlying assets are correlated. In such cases, the local coordinate rotation of the computational stencil improves stability and accuracy, but this technique is fairly complex and introduces significant computational overhead [53, 17, 15]. Moreover, accurate discretization of the nonlocal integro-differential terms arising from jumps remains a difficult task.

Fourier-based integration methods often rely on an analytical expression for the Fourier transform of the underlying transition density function or of an associated Green’s function, as demonstrated in various studies [65, 2, 39, 32, 51, 30, 62, 63]. Among these, the Fourier-cosine (COS) method [30, 63, 62] is particularly notable for achieving high-order convergence in piecewise smooth problems. However, for general stochastic optimal control problems, such as asset allocation [69, 25], which often involve complex and non-smooth structures, this high-order convergence is generally unattainable [32, 51].

When applicable, Fourier-based integration methods offer advantages such as eliminating timestepping errors between intervention dates (e.g. rebalancing dates in asset allocation) and naturally handling complex dynamics like jump-diffusion and regime-switching. However, a key drawback is their potential loss of monotonicity, which can lead to violations of the no-arbitrage principle in numerical value functions. This poses significant challenges in stochastic optimal control, where accuracy is crucial for optimal decision-making [32]. In the same vein of research, recent works on -monotone Fourier methods for control problems in finance merit attention [32, 52, 51, 50].

These challenges have motivated the development of strictly monotone numerical integration methods, such as the approach in [69] for asset allocation under jump-diffusion models within an impulse control framework with discrete rebalancing. Here, the stock index follows the 1D Merton [55] or Kou [48] jump-diffusion dynamics, while the bond index evolves deterministically with a constant drift rate. Since the bond index dynamics lack a diffusion or jump operator, the asset allocation problem reduces to a weakly coupled system of one-dimensional (1D) equations linked through impulse control.

Akin to other Fourier-based integration methods, the approach in [69] leverages a known closed-form expression for the Fourier transform of an associated 1D conditional density function. However, a key feature of this method is the derivation of an infinite series representation of this density function, in which each term is nonnegative and explicitly computable. This representation is used to advance each 1D equation in time, ensuring strict monotonicity. However, the method remains primarily suited for 1D problems within a discrete rebalancing setting where the other dimension is weakly coupled.

This work generalizes the strictly monotone integration methodology of [69] to a fully 2-D jump-diffusion setting, addressing the key challenge of cross-derivative terms and 2-D jumps. Furthermore, we establish its convergence to the viscosity solution in continuous time. We apply this framework to American option pricing under a correlated two-asset jump-diffusion model—an important yet computationally demanding problem in financial mathematics. While American options exhibit relatively weak nonlinearity, they provide a suitable setting to develop and assess strictly monotone numerical integration techniques in a fully 2-D framework, without the added complexity of more advanced financial applications, such as that of fully 2-D asset allocation.

1.1 American options

American options play a crucial role in financial markets and are widely traded for both hedging and speculative purposes. Unlike European options, which can only be exercised at expiration, American options allow exercise at any time before expiration. While this flexibility enhances their appeal across equities, commodities, and bonds, it also introduces substantial mathematical and computational challenges due to the absence of closed-form solutions in most cases.

From a mathematical perspective, American option pricing is formulated as a variational inequality due to its optimal stopping feature. The problem becomes particularly challenging when incorporating jumps, as the governing equations involve nonlocal integro-differential terms [70, 60]. These complexities necessitate advanced numerical methods to achieve accurate valuations.

A common approach to tackling the variational inequality in American option pricing is to reformulate it as a partial (integro-)differential complementarity problem [16, 71, 17, 33, 13]. This reformulation captures the early exercise feature through time-dependent complementarity conditions, effectively handling the boundary between the exercise and continuation regions. Finite difference techniques are widely used to solve these problems, resulting in nonlinear discretized equations that require iterative solvers such as the projected successive over-relaxation method (PSOR) [23] and penalty methods [71]. For models incorporating jumps, fixed-point iterations can be used to address the integral terms, as shown in [17] for two-asset jump-diffusion models. Additionally, efficient operator splitting schemes—including implicit-explicit and alternating direction implicit types—have recently been proposed for American options under the two-asset Merton jump-diffusion model [13], while the method of lines has been applied to the same problem under the two-asset Kou model [37].

An alternative approach to pricing American options is to approximate them using Bermudan options, a discrete-time counterpart, and analyze the convergence of the solution as the early exercise intervals shrink to zero. In this context, Fourier-based COS methods [62] have been applied to 2-D Bermudan options. However, for this application, the COS method requires particularly careful boundary tracking despite its high-order convergence for piecewise smooth problems. Notably, as observed in [32, 28], the COS method may produce negative option prices for short maturities, akin to cases where early exercise opportunities become more frequent. This phenomenon is closely tied to its potential loss of monotonicity, a limitation highlighted in previous discussions. Consequently, this approach may struggle to ensure convergence from Bermudan to American options.

1.2 Main contributions

This paper bridges the gap in existing numerical methods by introducing an efficient, straightforward-to-implement monotone integration scheme for the variational inequalities governing American options under the two-asset Merton jump-diffusion model. Our approach simultaneously handles cross-derivative terms and nonlocal integro-differential terms, simplifying the design of monotone schemes and ensuring convergence to the viscosity solution. In doing so, we address key computational challenges in current numerical techniques.

The main contributions of our paper are outlined below.

-

(i)

We present the localized variational inequality for pricing American options under the two-asset Merton jump-diffusion model, formulated on an infinite domain in log-price variables with a finite interior and artificial boundary conditions. Using a probabilistic technique, we demonstrate that the difference between the solutions of the localized and full-domain variational inequalities decreases exponentially with respect to the log-price domain size. In addition, we establish that the localized variational inequality satisfies a comparison result.

-

(ii)

We develop a monotone scheme for the variational inequality that explicitly enforces the inequality constraint. We solve a 2-D Partial Integro-Differential Equation (PIDE) at each timestep to approximate the continuation value, followed by an intervention action applied at the end of the timestep. Using the closed-form Fourier transform of the Green’s function, we derive an infinite series representation where each term is non-negative. This enables the direct approximation of the PIDE’s solutions via 2-D convolution integrals, using a monotone numerical integration method.

-

(iii)

We implement the monotone integration scheme efficiently by exploiting the Toeplitz matrix structure and using Fast Fourier Transforms (FFTs) combined with circulant convolution. The implementation process includes expanding the inner summation’s convolution kernel into a circulant matrix, followed by transforming the double summation kernel into a circulant block structure. This allows the circulant matrix-vector product to be efficiently computed as a circulant convolution using 2-D FFTs.

-

(iv)

We prove that the proposed monotone scheme is both -stable and consistent in the viscosity sense, ensuring pointwise convergence to the viscosity solution of the variational inequality as the discretization parameter approaches zero.

-

(v)

Extensive numerical results demonstrate strong agreement with benchmark solutions from published test cases, including those obtained via operator splitting methods, establishing our method as a reference for validating numerical techniques.

While this work focuses on American option pricing under a correlated two-asset Merton jump-diffusion model, the core methodology—particularly the infinite series representation of the Green’s function, where each term is non-negative—can be extended to other stochastic control problems. One such application is asset allocation with a stock index and a bond index. For discrete rebalancing, the 2-D extension is straightforward, as time advancement can be handled using a similar infinite series representation. For continuous rebalancing, a natural approach is to start from the discrete setting and leverage insights from [69] to analyze the limit as the rebalancing interval approaches zero.

The extension to the 2-D Kou model presents significant additional challenges due to its piecewise-exponential structure, leading to complex multi-region double integrals. We leave this extension for future work and refer the reader to Subsection 3.2, where we discuss these difficulties and outline a potential neural network-based approach.

The remainder of the paper is organized as follows. In Section 2, we provide an overview of the two-asset Merton jump-diffusion model and present the corresponding variational inequality. We then define a localized version of this problem, incorporating boundary conditions for the sub-domains. Section 3 introduces the associated Green’s function and its infinite series representation. In Section 4, we describe a simple, yet effective, monotone integration scheme based on a composite 2-D quadrature rule. Section 5 establishes the mathematical convergence of the proposed scheme to the viscosity solution of the localized variational inequality. Numerical results are discussed in Section 6, and finally, Section 7 concludes the paper and outlines directions for future research.

2 Variational inequalities and viscosity solution

We consider a complete filtered probability space , which includes a sample space , a sigma-algebra , a filtration for a finite time horizon , and a risk-neutral measure . For each , and represent the prices of two distinct underlying assets. These price processes are modeled under the risk-neutral measure to follow jump-diffusion dynamics given by

| (2.1a) | ||||

| (2.1b) | ||||

Here, denotes the risk-free interest rate, and and represent the instantaneous volatility of the respective underlying asset. The processes and are two correlated Brownian motions, with , where is the correlation parameter. The process is a Poisson process with a constant finite intensity rate . The random variables and , representing the jump multipliers, are two correlated positive random variables with correlation coefficient . In (2.1), and are independent and identically distributed (i.i.d.) random variables having the same distribution as and , respectively; the quantities and , where is the expectation operator taken under the risk-neutral measure .

In this paper, we focus our attention on the Merton jump-diffusion model [55], where the jump multiplier and subject to log-normal distribution, respectively. Specifically, we denote by the joint density function of the random variable and with correlation . Consequently, the joint probability density function (PDF) is given by

| (2.2) |

2.1 Formulation

For the underlying process , let be the state of the system. We denoted by the time- no-arbitrage price of a two-asset American option contract with maturity and payoff . It is established that is given by the optimal stopping problem [41, 45, 54, 59, 42, 70]

| (2.3) |

Here, represents a stopping time; denotes the conditional expectation under the risk-neutral measure , conditioned on . We focus on the put option case, where the payoff function is bounded and continuous.

The methods of variational inequalities, originally developed in [11], are widely used for pricing American options, as evidenced by [70, 42, 60, 59], among many other publications. The value function , defined in (2.3), is known to be non-smooth, which prompts the use of the notion of viscosity solutions. This approach provides a powerful means for characterizing the value functions in stochastic control problems [20, 22, 21, 31, 59].

It is well-established that the value function , defined in (2.3), is the unique viscosity solution of a variational inequality as noted in [58, 60, 59]. While the original references describe the variational inequality using the spatial variables , our approach employs a logarithmic transformation for theoretical analysis and numerical method development. Specifically, with , and given positive values for and , we apply the transformation and . With , we define and . Consequently, is the unique viscosity solution of the variational inequality given by

| (2.4a) | ||||

| (2.4b) | ||||

Here, the differential and jump operators and are defined as follows

| (2.5) |

where is the joint probability density function of .

2.2 Localization

Under the log transformation, the formulation (2.4) is posed on an infinite spatial domain . For problem statement and convergence analysis of numerical schemes, we define a localized pricing problem with a finitem, open, spatial interior sub-domain, denoted by . More specifically, with and , where , , , and are sufficiently large, and its complement are respectively defined as follows

| (2.6) |

Since the jump operator is non-local, computing the integral (2.5) for typically requires knowledge of within the infinite outer boundary sub-domain . Therefor, appropriate boundary conditions must be established for . In the following, we define the definition domain and its sub-domains, discuss boundary conditions, and investigate the impact of artificial boundary conditions on .

The definition domain comprises a finite sub-domain and an infinite boundary sub-domain, defined as follows.

| (2.7) | ||||

For subsequent use, we also define the following region: . An illustration of the sub-domains for the localized problem corresponding to a fixed is given in Figure 2.1.

For the outer boundary sub-domain , boundary conditions are generally informed by financial reasonings or derived from the asymptotic behavior of the solution. In this study, we implement a straightforward Dirichlet boundary condition using a known bounded function for . Specifically, belongs to the space of bounded functions , which is defined as follows [7, 64]

| (2.8) |

We denote by the function that solves the localized problem on with the initial and boundary condition given below

| (2.9a) | ||||

| (2.9b) | ||||

| (2.9c) | ||||

The impact of the artificial boundary condition in , as specified in (2.9b), on the solution within is established in Lemma 2.1 below. For simplicity, in the lemma, we assume that . The proof can be generalized in a straightforward manner to accommodate different values for , , , and .

Lemma 2.1.

A proof of Lemma 2.1 is provided in Appendix A. This lemma establishes that, in log-price coordinates, the error between the localized and full-domain variational inequality solutions decays exponentially as the spatial interior domain size increases, i.e. the truncation error is of order as .111Equivalently, if represents the spatial domain size in the original price scale for both assets, the error is . The result is a local pointwise estimate, indicating that the localization error is more pronounced near the boundary. This rapid decay implies that smaller computational domains can be used, thereby significantly reducing computational costs.

The boundedness conditions and are satisfied for standard put options and Dirichlet boundary conditions. However, for payoffs that grow unbounded as (e.g. standard calls), a more refined analysis is required. Large-deviation techniques have been employed in pure-diffusion models (see [10]) to estimate the probability of large excursions beyond the truncated domain. These techniques can also be adapted to certain (finite-activity) jump processes (see, for example, [44]). A rigorous extension of these ideas to jump-diffusion models with unbounded payoffs, while theoretically possible, is considerably more involved and lies beyond the scope of this work. We therefore concentrate on bounded-payoff options—such as puts—where remains finite.

For the remainder of the analysis, we choose the Dirichlet condition based on discounted payoff as follows

| (2.10) |

While more sophisticated boundary conditions might involve the asymptotic properties of the variational inequality (2.4a) as or , our observations indicate that these sophisticated boundary conditions do not significantly impact the accuracy of the numerical solution within . This will be illustrated through numerical experiments in Subsection 6.2.4.

2.3 Viscosity solution and a comparison result

We now write (2.1) in a compact form, which includes the terminal and boundary conditions in a single equation. We let and represent the first-order and second-order partial derivatives of . The variational inequality (2.9) can be expressed compactly as

| (2.11) |

where

| (2.12a) | |||||

| (2.12b) | |||||

| (2.12c) | |||||

For a locally bounded function , where is a closed subset of , we recall its upper semicontinuous (u.s.c. in short) and the lower semicontinuous (l.s.c. in short) envelopes given by

| (2.17) |

Definition 2.1 (Viscosity solution of (2.11)).

(i) A locally bounded function is a viscosity supersolution of (2.11) in if and only if for all test function and for all points such that has a global minimum on at and , we have

| (2.18) |

Viscosity subsolutions are defined symmetrically.

(ii) A locally bounded function is a viscosity solution of (2.11) in if is a viscosity subsolution and a viscosity supersolution in .

In the context of numerical solutions to degenerate parabolic equations in finance, the convergence to viscosity solutions is ensured when the scheme is stable, consistent, and monotone, provided that a comparison result holds [43, 8, 7, 9, 5, 6, 4]. Specifically, stability, consistency and monotonicity facilitate the identification of u.s.c. subsolutions and l.s.c. supersolutions through the respective use of and of the numerical solutions as the discretization parameter approaches zero.

Suppose and respectively denote such subsolution and supersolution within a region, referred to as , where for an open set . By construction using for and for , and the nature of , we have for all . If a comparison result holds in , it means that for all . Therefore, is the unique, continuous viscosity solution within the region .

It is established that the full-domain variational inequality defined in (2.4) satisfies a comparison result in [64, 59, 3, 40]. Similarly, the localized variational inequality (2.11) also satisfies a comparison result, as detailed in the lemma below. We recall defined in (2.1).

Lemma 2.2.

The proof of the comparison result follows a similar approach to that in [51], and is therefore omitted here for brevity.

3 An associated Green’s function

Central to our numerical scheme for the variational inequality (2.11) is the Green’s function of an associated PIDE in the variables , analyzed independently of the constraints dictated by the variational inequality. To facilitate this analysis, for a fixed , let be such that , and proceed to consider the 2-D PIDE:

| (3.1) |

subject to the time- initial condition specified by a generic function . We denote by the function the Green’s function associated with the PIDE (3.1). The stochastic system described in (2.1) exhibits spatial homogeneity, which leads to the spatial translation-invariance of both the differential operator and the jump operator . As a result, the Green’s function depends only on the relative displacement between starting and ending spatial points, thereby simplifying to .

3.1 An infinite series representation of

We let be the Fourier transform of with respect to the spatial variables, i.e.

| (3.2) |

A closed-form expression for is given as follows [62]

| (3.3) | ||||

Here, , where is the joint probability density function of random variables and given in (2.2).

For convenience, we define , and are the column vectors, is the dot product of vectors and , is the transpose of a vector , and is the covariance matrix of and . The covariance matrix and its inverse are respectively given as follows

| (3.4) |

For subsequent use, we express the function given in (3.3) in a compact matrix-vector form as follows

| (3.5) |

where , and is the column vector. For brevity, we use to represent the 2-D integral .

Lemma 3.1.

A proof of Lemma 3.1 is provided in Appendix B. We emphasize that the infinite series representation in Lemma 3.1 does not rely on the specific form of the joint probability density function , and thus it applies broadly to general two-asset jump-diffusion model. In the specific case of the two-asset Merton jump-diffusion model, where the joint probability density function is given by (2.2), the terms of the series can be explicitly evaluated, as detailed in the corollary below.

Corollary 3.1.

3.2 Extension to the 2-D Kou model

In the 2-D Merton model of Corollary 3.1, are assumed to follow a bivariate normal distribution. Consequently, the sum of i.i.d. random vectors, each following this bivariate normal distribution, is also bivariate normal. Convolution with the 2-D diffusion kernel , which is itself Gaussian, then reduces to a straightforward Gaussian–Gaussian double integral. Summing over all possible numbers of jumps according to the Poisson distribution gives an infinite series of tractable Gaussian double integrals, thus leading to the closed‐form solution presented in Corollary 3.1.

However, extending this approach to the 2-D Kou model is much more challenging—even in the simplest case where and are independent. In this setting, the joint PDF of and is simply the product of two 1-D Kou PDFs, resulting in a four‐region, piecewise‐exponential density on . Summing i.i.d. random vectors, each following this independent 2-D Kou jump distribution, leads to multi‐region double integrals, since each dimension can exhibit positive or negative jumps. As grows, the number of piecewise‐exponential components increases combinatorially. Moreover, these sums produce factors that often combine polynomial or gamma‐type terms with exponentials in two variables.

When convolved with the 2-D Gaussian diffusion kernel, the resulting integrals become exponential–Gaussian double integrals, which generally do not reduce to standard special functions (unlike the 1-D case, which sometimes admits the family from [1], as shown in [48]). Although one can still construct a series expansion over the Poisson‐distributed jumps, the individual terms would require intricate generalized special functions or numerous piecewise integrals, making the final expression far more cumbersome and less recognizable as a “closed‐form” solution.

A possible way forward is inspired by [28], where a single-hidden-layer neural network (NN) with Gaussian activation functions is used to approximate an unknown transition density or Green’s function via a closed-form expression of its Fourier transform. However, this approach can lead to potential loss of nonnegativity and requires retraining the neural network for different .

For the general 2-D Kou model, an alternative approach is to instead approximate the joint PDF of and using a NN akin to that in [28], where Gaussian activation functions are employed. Once trained, the joint PDF is represented as a finite sum of 2-D Gaussians, effectively forming a Gaussian mixture model. Notably, the nonnegativity of this approximation can also be enforced, aligning with the monotonicity requirements of numerical schemes.222While we focus on the 2-D Kou model, this approach can, in principle, be applied to other 2-D jump-diffusion dynamics where the joint jump density can be effectively approximated by a Gaussian mixture.

This formulation transforms the convolution with the 2-D diffusion kernel into a tractable Gaussian–Gaussian double integral, allowing the same efficient techniques developed in this paper for the 2-D Merton model to be applied. Notably, because the NN does not depend on , it can be trained only once and reused for all , ensuring computational efficiency by eliminating the need for retraining at different time step sizes. We plan to explore this approach in future work in the context of fully 2-D asset allocation.

3.3 Truncated series and error

For subsequent analysis, we study the truncation error in the infinite series representation of the Green’s function as given in (3.6). Notably, this truncation error bound is derived independently of the specific form of the joint probability density function , ensuring its applicability to a broad range of two-asset jump-diffusion models.

Specifically, for a fixed , we denote by an approximation of the Green’s function using the first terms from the series (3.6). As approaches , the approximation becomes exact with no loss of information. However, with a finite , the approximation incurs an error due to the truncation of the series. This truncation error can be bounded as follows:

| (3.7) |

Here, in (i), we apply the following fact: if denotes a complex number, then the modulus of the complex exponential is equivalent to the exponential of the real part of , i.e and , (ii) is due to the Chernoff-Hoeffding bound for the tails of a Poisson distribution , which reads as , for [56].

Therefore, from (3.7), as , we have , resulting in no loss of information. For a given , we can choose such that the error . This can be achieved by enforcing

| (3.8) |

It is straightforward to see that, if , then , as . In summary, we can attain

| (3.9) |

4 Numerical methods

A common approach to handling the constraint posed by variational inequalities is to explicitly determine the optimal decision between immediate exercise and holding the contract for potential future exercise [66, 33, 47]. We define , , as an equally spaced partition of , where and . We denote by the continuation value of the option. For a fixed , the solution to the variational inequality (2.11) at , can be approximated by explicitly handling the constraint as follows

| (4.1) |

Here, the continuation value is given by the solution of the 2-D PIDE of the form (3.1), i.e.

| (4.2) |

subject to the initial condition at time given by a function , where

| (4.3) |

The solution for can be represented as the convolution integral of the Green’s function and the initial condition as follows [34, 29]

| (4.4) |

The solution for is given by the boundary condition (2.9b). In the analysis below, we focus on the convolution integral (4.4).

4.1 Computational domain

For computational purposes, we truncate the infinite region of integration of (4.4) to the finite region defined as follows

| (4.5) |

Here, where, for , and and are sufficiently large. This results in the approximation for the continuation value

| (4.6) |

We note that, in approximating the above truncated 2-D convolution integral (4.6) over the finite integration domain , it is also necessary to obtain values of the Green’s function at spatial points which fall outside . More specifically, it is straightforward to see that, with and , the point defined as follows

| (4.7) |

We emphasize that computing the solutions for is not necessary, nor are they required for our convergence analysis. The primary purpose of is to ensure the well-definedness of the Green’s function used in the convolution integral (4.6).

We now have a finite computational domain, denoted by , and its sub-domains defined as follows

| (4.8) | ||||

Here, and are respectively defined in (2.6) and (4.5). An illustration of the spatial computational sub-domains corresponding each is given in Figure 4.1. We note that and , where region is defined in (4.7).

4.2 Discretization

We denote by (resp. and ) the number of intervals of a uniform partition of (resp. and ). For convenience, we typically choose and so that only one set of -coordinates is needed. Also, let , , and . We define . We use an equally spaced partition in the -direction, denoted by , and is defined as follows

| (4.11) | |||||

Similarly, for the -dimension, with , , , , and , we denote by , an equally spaced partition in the -direction defined as follows

| (4.12) | |||||

We use the previously defined uniform partition , , with .333While it is straightforward to generalize the numerical method to non-uniform partitioning of the -dimension, to prove convergence, uniform partitioning suffices.

For convenience, we let and we also define the following index sets:

| (4.13) |

With , , and , we denote by (resp. ) a numerical approximation to the exact solution (resp. ) at the reference node . For , nodes having are in . Those with either or are in . For double summations, we adopt the short-hand notation: , unless otherwise noted. Lastly, it’s important to note that references to indices or pertain to points within . As noted earlier, no numerical solutions are required for these points.

4.3 Numerical schemes

For , we impose the initial condition

| (4.14) |

For , we impose the boundary condition (2.10) as follow

| (4.15) |

For subsequent use, we adopt the following notational convention: (i) , for and , and (ii) , for and . In addition, we denote by an approximation to using the first terms of the infinite series representation in Corollary 3.1. When the role of is important, we explicitly write , omitting for brevity.

The continuation value at node is approximated through the 2-D convolution integral (4.6) using a 2-D composite trapezoidal rule. This approximation, denoted by , is computed as follows:

| (4.16) |

Here, the coefficients in (4.16), where and , are the weights of the 2-D composite trapezoidal rule. Finally, the discrete solution is computed as follow

| (4.17) |

Remark 4.1 (Monotonicity).

We note that the Green’s function , as given by the infinite series in Corollary 3.1, is defined and non-negative for all , , , . Therefore, scheme (4.16)-(4.17) is monotone. We highlight that for computational purposes, is truncated to . However, since each term of the series is non-negative, this truncation ensures no loss of monotonicity, which is a key advantage of the proposed approach.

Remark 4.2 (Rescaled weights and convention).

In the scheme (4.16), the weights are multiplied by the grid area . As , the Green’s function approaches a Dirac delta function, becoming increasingly peaked and unbounded. However, as proved subsequently, with absorbed into the numerators of the terms in the (truncated) series representation of , the resulting rescaled weights remain bounded.

To formalize this, we define the rescaled weights of our scheme as follows:

| (4.18) |

Here, indicates that is effectively absorbed into the numerators of the terms in the (truncated) series representation of , ensuring that remains bounded as .

For subsequent use, we also define the infinite series counterpart as follows:

| (4.19) |

A formal proof of the boundedness of both and its truncated counterpart is provided in Lemma 5.1.

Convention: For the rest of the paper, we adopt the convention of continuing to write and in our scheme, implementation descriptions, and subsequent analysis. These expressions should respectively be understood as shorthand for and , where is the rescaled weight defined in (4.18). The same convention applies to expressions involving .

We note that the scheme in (4.17) explicitly enforces the American option’s early exercise constraint by taking at each timestep. While this approach is standard in computational finance and preserves the monotonicity of the scheme, it limits the global accuracy in time to first order due to the non-smooth operation.

A possible approach to achieving higher-order time accuracy is to adopt a penalty formulation (such as that in [33]), which leads to a system of nonlinear equations that must be solved iteratively at each timestep, typically using fixed-point or Newton-type methods [38]. Exploring these iterative methods in conjunction with the integral operator, while preserving the advantages of our convolution-based Green’s function framework in a fully 2-D jump-diffusion setting, is an interesting, but challenging, direction for future work. However, this lies beyond the scope of the present paper. We focus here on an explicit enforcement approach, which is simpler to implement and preserves the monotonicity of the scheme, thereby ensuring convergence to the viscosity solution.

4.4 Efficient implementation and complexity

In this section, we discuss an efficient implementation of the 2-D discrete convolution (4.16) using FFT. Initially developed for 1-D problems [69] and extended to 2-D cases [26], this technique enables efficient computation of the convolution as a circular product. Specifically, the goal of this technique is to represent (4.16) for , , and as a 2-D circular convolution product of the form

| (4.20) |

Here, is the first column of an associated circulant block matrix constructed from , where is sufficiently large, reshaped into a matrix, while is reshaped from an associated augmented block vector into a matrix. The notation denotes the circular convolution product. Full details on constructing and can be found in [26].

The resulting circular convolution product (4.20) can then be computed efficiently using FFT and inverse FFT (iFFT) as:

| (4.21) |

After computation, we discard components in for indices or , obtaining discrete solutions for .

As explained in Remark 4.2, the factor is incorporated into , yielding . Following our convention, we continue to write in (4.20)-(4.21) for simplicity.

The implementation outlined in (4.21) indicates that the rescaled weight array needs to be computed only once using the infinite series expression from Corollary 3.1, after which they can be reused across all time intervals. Specifically, for a given user-defined tolerance , we use (3.8) to determine a sufficiently large number of terms in the series representation (3.6) for these weights. The resulting rescaled weight array is then calculated. For the two-asset Merton jump-diffusion model, the rescaled weight array needs only be computed once as per (4.21), and can subsequently be reused across all time intervals. This step is detailed in Algorithm 4.1.

Putting everything together, the proposed numerical scheme for the American options under two-asset Merton jump-diffusion model is presented in Algorithm 4.2 below.

Remark 4.3 (Complexity).

Our algorithm involves, for , the following key steps:

-

•

Compute , , via the proposed 2-D FFT algorithm. The complexity of this step is , considering that and .

-

•

Finding the optimal control for each node by directly comparing with the payoff requires complexity. Thus, with a total of interior nodes, this gives a complexity .

-

•

Therefore, the major cost of our algorithm is determined by the step of FFT algorithm. With timesteps, the total complexity is .

5 Convergence to viscosity solution

In this section, we demonstrate that, as a discretization paremeter approaches zero, our numerical scheme in the interior sub-domain converges to the viscosity solution of the variational inequality (2.11) in the sense of Definition 2.1. To achieve this, we examine three critical properties: -stability, consistency, and monotonicity [20].

5.1 Error analysis

To commence, we shall identify errors arising in our numerical scheme and make assumptions needed for subsequent proofs. In the discussion below, is a test function in .

-

•

Truncating the infinite region of integration of the convolution integral (4.4) between the Green’s function and to results in a boundary truncation error , where

(5.1) It has been established that for general jump diffusion models, such as those considered in this paper, the error bound is bounded by [19, 18]

(5.2) where , and . For fixed and , (5.2) shows , as . However, as typical required for showing consistency, one would need to ensure as . Therefore, from (5.2), we need and as , which can be achieved by letting and , for finite independent of .

-

•

The next source of error is identified in approximating the truncated 2-D convolution integral

by the composite trapezoidal rule: where represents the exact Green’s function. We denote by the numerical integration error associated with this approximation. For a fixed integration domain , due to the smoothness of the test function , we have that as .

- •

Motivated by the above discussions, for convergence analysis, we make the assumption below about the discretization parameter.

Assumption 5.1.

We assume that there is a discretization parameter such that

| (5.3) |

where the positive constants , , , and are independent of .

Under Assumption 5.1, and for a test function , we have

| (5.4) |

It is also straightforward to ensure the theoretical requirement as . For example, with in (5.3), we can quadruple and as we halve . We emphasize that, for practical purposes, if and are chosen sufficiently large, both can be kept constant for all refinement levels (as we let ). The effectiveness of this practical approach is demonstrated through numerical experiments in Section 6. Finally, we note that, the total complexity of the proposed algorithm, as outlined in Remark 4.3, is .

We now present a lemma on the boundedness of the rescaled weights defined in (4.18).

Lemma 5.1 (Boundedness of the rescaled weights).

Let be the two-asset Merton jump-diffusion Green’s function from Corollary 3.1, and recall the rescaled weights and its infinite series counterpart , respectively defined in (4.18) and (4.19).

Under Assumption 5.1, there exists a finite constant , independent of the discretization parameter , such that for all sufficiently small ,

Consequently, the rescaled weights satisfy

A proof of Lemma 5.1 is provided in Appendix D. We reiterate that, as explained in Remark 4.2, we adopt the convention of writing and even though the factor can be interpreted as folded into . Under this convention, the discrete rescaled weights, as well as its full series counter parts, remain bounded as by Lemma 5.1.

For subsequent use, we present a result about in the form of a lemma.

Lemma 5.2.

Suppose the discretization parameter satisfies (5.3). For sufficiently small , we have

| (5.5) |

where with being a bounded constant independently of .

Proof of Lemma 5.2.

In this proof, we let be a generic positive constant independent of , which may take different values from line to line. We note that is an approximation to using the first terms of the infinite series. Recall that , and also, by (3.3), . Hence, setting in the above gives

| (5.6) |

As , we have:

Here, (i) is due to the fact that all terms of the infinite series are non-negative, so are the weights of the composite trapezoidal rule; in (ii), as previously discussed, the error is due to the boundary truncation error (5.2), together with as , as in (5.3); the error arises from the trapezoidal rule approximation of the double integral, as noted in (5.4); (iii) follows from (5.6); (iv) is due to and (5.3). Letting gives (iv). This concludes the proof. ∎

5.2 Stability

Our scheme consists of the following equations: (4.14) for , (4.15) for , and finally (4.17) for . We start by verifying -stability of our scheme.

Lemma 5.3 (-stability).

Proof of Lemma 5.3.

Since the function is a bounded, for any fixed , we have

| (5.7) |

Hence, . Motivated by this observation and Lemma 5.2, to demonstrate -stability of our scheme, we will show that, for a fixed , at any , we have

| (5.8) |

from which, we obtain for all , as wanted, noting .

For the rest of the proof, we will show the key inequality (5.8) when is fixed. We will address -stability for the boundary and interior sub-domains (together with their respective initial conditions) separately, starting with the boundary sub-domains and . It is straightforward to see that both (4.14) (for ) and (4.15) (for ) satisfy (5.8), respectively due to (5.7) and the following

| (5.9) |

We now demonstrate the bound (5.8) for using induction on , . For the base case, , the bound (5.8) holds for all and , which follows from (5.7). Assume that (5.8) holds for , , and , i.e. , for and . We now show that (5.8) also holds for . The continuation value , for and , satisfies

5.3 Consistency

While equations (4.14), (4.15), and (4.17) are convenient for computation, they are not well-suited for analysis. To verify consistency in the viscosity sense, it is more convenient to rewrite them in a single equation that encompasses the interior and boundary sub-domains. To this end, for grid point , we define operator

| (5.11) |

Using defined in (5.11), our numerical scheme at the reference node can be rewritten in an equivalent form as follows

| (5.17) |

where the sub-domains , and are defined in (4.1).

To establish convergence of the numerical scheme to the viscosity solution in , we first consider an intermediate result presented in Lemma 5.4 below.

Lemma 5.4.

Suppose the discretization parameter satisfies (5.3). Let be a test function in . For , where , , and , with , and for sufficiently small , we have

| (5.18) |

Here, and .

Proof of Lemma 5.4.

Lemma 5.4 can be proved using similar techniques in [51][Lemmas 5.2]. Starting from the discrete convolution on the left-hand-side (lhs) of (5.18), we need to recover an associated convolution integral of the form (4.4) which is posed on an infinite integration region. Since for an arbitrary fixed , is not necessarily in , standard mollification techniques can be used to obtain a mollifier which agrees with on [49], and has bounded derivatives up to second order across . For brevity, instead of , we will write . Recalling different errors outlined in (5.4), we have

| (5.19) |

Here, in (i), the error is the series truncation error; in (ii), two additional errors and are due to the boundary truncation error and the numerical integration error, respectively; in (iii) denotes the convolution of and ; in addition, , and as previously discussed in (5.4). In (5.3), with given in (3.3), expanding using a Taylor series gives

| (5.20) |

Therefore,

| (5.21) | |||||

Here, the first term in (5.21), namely is simply by construction of . For the second term in (5.21), we focus on . Recalling the closed-form expression for in (3.3), we obtain

| (5.22) |

Therefore, the second term in (5.21) becomes

| (5.23) |

For the third term in (5.21), we have

| (5.24) |

Noting , as shown in (5.20), where a closed-form expression for is given in (3.3), we obtain

The term can be written in the form , where are bounded coefficients. This is a quartic polynomial in and . Furthermore, the exponent of exponential term is bounded by

For , we have . Therefore, we conclude that for , the term is bounded since

is also bounded. Together with , the rhs of (5.3) is , i.e.

| (5.25) |

Substituting (5.23) and (5.25) back into (5.21), noting (5.3) and for all , we have

This concludes the proof. ∎

Below, we state the key supporting lemma related to local consistency of our numerical scheme (5.17).

Lemma 5.5 (Local consistency).

Proof of Lemma 5.5.

We now show that the first equation of (5.31) is true, that is,

where operators is defined in (5.11). In this case, the first argument of the operator in is written as follows

| arg | ||||

| (5.32) |

Here, (i) follows from Lemma 5.4, where the error term is divided by , yielding . Regarding the second term of (5.3), we have

| (5.33) |

The first term of (5.33) is simply , due to (5.6). The second term of (5.33) is simply due to infinite series truncation error, numerical integration error, and boundary truncation error, as noted earlier. Thus, the second term of (5.3) becomes

Substituting this result into (5.3) gives

| arg |

Here, in (i), we use ; for , ; and for the cross derivative term .

We now formally state a lemma regarding the consistency of scheme (5.17) in the viscosity sense.

Lemma 5.6.

5.4 Monotonicity

Below, we present a result concerning the monotonicity of our scheme (5.17).

Lemma 5.7.

(Monotonicity) Scheme (5.17) satisfies

| (5.44) |

for bounded and having , where the inequality is understood in the component-wise sense.

Proof of Lemma 5.7.

Since scheme (5.17) is defined case-by-case, to establish (5.44), we show that each case satisfies (5.44). It is straightforward that the scheme satisfies (5.44) in ) and . We now establish that , as defined in (5.11) for , also satisfies (5.44). We have

| (5.45) |

Here, (i) is due to the fact that for real numbers ; (ii) follows from , since and for all , , , and . This concludes the proof. ∎

5.5 Main convergence result

We have demonstrated that the scheme 5.17 satisfies three key properties in : (i) -stability (Lemma 5.3), (ii) consistency in the viscosity sense (Lemma 5.6) and (iii) monotonicity (Lemma 5.7). With a provable strong comparison principle result for in Theorem 2.2, we now present the main convergence result of the paper.

Theorem 5.1 (Convergence to viscosity solution in ).

Proof of Theorem 5.1.

6 Numerical experiments

In this section, we present the selected numerical results of our monotone integration method (MI) applied to the American options under a two-asset Merton jump-diffusion model pricing problem.

6.1 Preliminary

For our numerical experiments, we evaluate three parameter sets for the two-asset Merton jump-diffusion model, labeled as Case I, Case II, and Case III. The modeling parameters for these tests, reproduced from [36][Table 1], are provided in Table 6.1. Notably, the parameters in Cases I, II, and III feature progressively larger jump intensity rates . As previously mentioned, we can choose sufficiently large to remain constant across all refinement levels (as ). Due to the varying jump intensity rates, we select computational domains of appropriate size for each case, listed in Table 6.3, and confirm that these domains are sufficiently large through numerical validation in Subsection 6.2.3. Furthermore, values for , , , and were chosen according to (4.9). Unless specified otherwise, the details on mesh size and timestep refinement levels (“Refine. level”) used in all experiments are summarized in Table 6.2.

| Case I | Case II | Case III | |

| Diffusion parameters | |||

| 0.12 | 0.30 | 0.20 | |

| 0.15 | 0.30 | 0.30 | |

| 0.30 | 0.50 | 0.70 | |

| Jump parameters | |||

| 0.60 | 2 | 8 | |

| -0.10 | -0.50 | -0.05 | |

| 0.10 | 0.30 | -0.20 | |

| -0.20 | -0.60 | 0.50 | |

| 0.17 | 0.40 | 0.45 | |

| 0.13 | 0.10 | 0.06 | |

| 100 | 40 | 40 | |

| (years) | 1 | 0.5 | 1 |

| 0.05 | 0.05 | 0.05 |

| Refine. level | ||||

|---|---|---|---|---|

| () | () | () | ||

| 0 | 50 | |||

| 1 | 100 | |||

| 2 | 200 | |||

| 3 | 400 | |||

| 4 | 800 |

| Case I | Case II | Case III | |

|---|---|---|---|

6.2 Validation examples

For the numerical experiments, we analyze two types of options: an American put-on-the-min option and an American put-on-the-average option, each with a strike price , as described in [36, 13].

6.2.1 Put-on-the-min option

Our first test case examines an American put option on the minimum of two assets, as described in [36, 13]. The payoff function is defined as

| (6.1) |

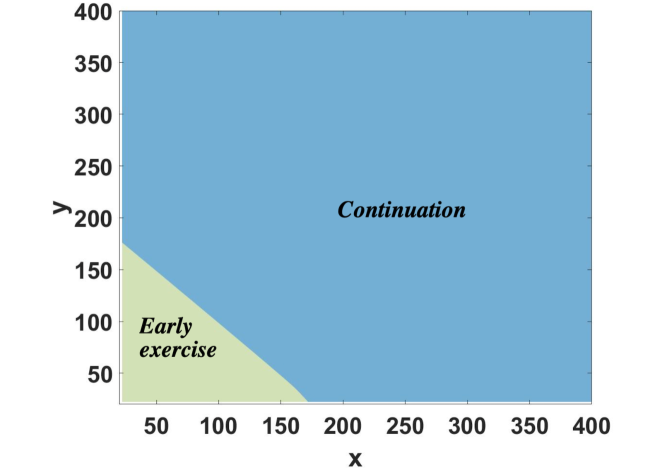

As a representative example, we utilize the parameters specified in Case I, with initial asset values and , for the put-on-the-min option. Computed option prices for this test case are presented in Table LABEL:tab:result01. To estimate the convergence rate of the proposed method, we calculate the “Change” as the difference between computed option prices at successive refinement levels and the “Ratio” as the quotient of these changes between consecutive levels. As shown, these computed option prices exhibit first-order convergence and align closely with results obtained using the operator splitting method in [13]. In addition, Figure 6.1 displays the early exercise regions at for this test case.

Tests conducted under Cases II and III demonstrate similar convergence behavior. Numerical results for American put-on-the-min options with various initial asset values and parameter sets are summarized in Section 6.2.5 [Table LABEL:tab:result06].

6.2.2 Put-on-the-average option

For the second test case, we examine an American option based on the arithmetic average of two assets. The payoff function, , is defined as:

| (6.2) |

As a representative example, we use the modeling parameters from Case I, with initial asset values set at and to illustrate the put-on-the-average option. The computed option prices, presented in Table LABEL:tab:result02, demonstrate a first order of convergence and show strong agreement with the results reported in [13]. In addition, the early exercise regions at for this case are depicted in Figure 6.2. Similar experiments conducted for Cases II and III yield comparable results. Further numerical results for American put-on-the-average options, encompassing various initial asset values and parameter sets, are presented in Section 6.2.5 [Table 6.10].

It is important to note that the convergence rates in our numerical experiments are not directly comparable to those reported in [62]. As highlighted earlier, the method presented in this work is more general and can be extended in a straightforward manner to stochastic control problems, such as asset allocation, where no continuation boundary exists. Furthermore, our method directly converges to the viscosity solution of the true American option pricing problem, unlike [62], which focuses on Bermudan options and does not examine the limiting case as .

6.2.3 Impact of spatial domain sizes

In this subsection, we validate the adequacy of the chosen spatial domain for our experiments, focusing on Case I for brevity. Similar tests for Cases II and III yield consistent results and are omitted here.

To assess domain sufficiency, we revisit the setup from Table LABEL:tab:result01 and double the sizes of the interior sub-domain , extending , , , and to , , , and . The boundary sub-domains are adjusted accordingly as in (4.9). We also double the intervals and to preserve and as in the setup from Table LABEL:tab:result01.

The computed option prices for this larger domain, presented in Table 6.6 under “Larger ” show minimal differences from the original results (shown under “Table LABEL:tab:result01), with discrepancies only appearing at the 8th decimal place. These differences are recorded in the “Diff.” column, which represents the absolute difference between the computed option prices from Table LABEL:tab:result01 and those obtained with either an extended or contracted interior sub-domain . This indicates that further enlarging the spatial computational domain has a negligible effect on accuracy.

| Refine. | Table LABEL:tab:result01 | (Larger ) | (Smaller ) | ||

|---|---|---|---|---|---|

| level | Price | Price | Diff. | Price | Diff. |

| 0 | 16.374702 | 16.374702 | 1.64e-08 | 16.374210 | 4.92e-04 |

| 1 | 16.383298 | 16.383298 | 1.60e-08 | 16.382820 | 4.78e-04 |

| 2 | 16.387210 | 16.387210 | 1.60e-08 | 16.386736 | 4.74e-04 |

| 3 | 16.389079 | 16.389079 | 1.62e-08 | 16.388605 | 4.74e-04 |

| 4 | 16.389991 | 16.389991 | 1.62e-08 | 16.389515 | 4.76e-04 |

In addition, we test a smaller interior domain with boundaries , , , and , while keeping and constant. The results, shown in Table 6.6 under “Smaller ”, reveal differences starting at the third decimal place compared to the original setup. This indicates that the selected domain size is essential for achieving accurate results; further expansion of the domain size offers negligible benefit, whereas any reduction may introduce noticeable errors.

In Table 6.7, we present the test results for extending and contracting for the American put-on-average option, which yield similar conclusions to those observed previously.

| Refine. | Table LABEL:tab:result02 | (Larger ) | (Smaller ) | ||

|---|---|---|---|---|---|

| level | Price | Price | Diff. | Price | Diff. |

| 0 | 3.431959 | 3.431959 | 2.91e-07 | 3.431348 | 6.12e-04 |

| 1 | 3.436727 | 3.436727 | 3.09e-07 | 3.436080 | 6.37e-04 |

| 2 | 3.439096 | 3.439096 | 3.21e-07 | 3.436080 | 6.69e-04 |

| 3 | 3.440278 | 3.440278 | 3.26e-07 | 3.438426 | 6.82e-04 |

| 4 | 3.440868 | 3.440868 | 3.29e-07 | 3.440178 | 6.91e-04 |

6.2.4 Impact of boundary conditions

In this subsection, we numerically demonstrate that our straightforward approach of employing discounted payoffs for boundary sub-domains is adequate. For brevity, we show the tests of impact of boundary conditions for Case I. Similar experiments for Cases II and III yield the same results.

We revisited previous experiments reported in Tables LABEL:tab:result01, introducing more sophisticated boundary conditions based on the asymptotic behavior of the PIDEs (3.1) as and for as proposed in [17]. Specifically, the PIDEs (3.1) simplifies to the 1D PDEs shown in (6.3) when or tends to :

| (6.3) | ||||

That can be justified based on the properties of the Green’s function of the PIDE [35]. As , the PIDEs (3.1) simplifies to the ordinary differential equation .

To adhere to these asymptotic boundary conditions, we choose a much large spatial domain: , , , , and adjust the number of intervals and accordingly to maintain the same grid resolution ( and ). Employing the monotone integration technique, tailored for the 1D case, we effectively solved the 1D PDEs in (6.3). The ordinary differential equation is solved directly and efficiently. The scheme’s convergence to the viscosity solution can be rigorously established in the same fashion as the propose scheme.

| Put-on-the-min | Put-on-the-average | |||

| Refine. | Price | Price | Price | Price |

| level | (Table LABEL:tab:result01) | (Table LABEL:tab:result02) | ||

| 0 | 16.374702 | 16.374702 | 3.431959 | 3.431959 |

| 1 | 16.383298 | 16.383298 | 3.436727 | 3.436727 |

| 2 | 16.387210 | 16.387210 | 3.439096 | 3.439096 |

| 3 | 16.389079 | 16.389079 | 3.440278 | 3.440278 |

| 4 | 16.389991 | 16.389991 | 3.440868 | 3.440868 |

The computed option prices for the put-on-the-min option, as shown in Table 6.8, are virtually identical with those from the original setup (see column marked “Table. LABEL:tab:result01”). In addition, Table 6.8 presents results for the put-on-the-average option using the similar sophisticated boundary condition, with findings consistent with the put-on-the-min option. These results confirm that our simple boundary conditions are not only easy to implement but also sufficient to meet the theoretical and practical requirements of our numerical experiments.

6.2.5 Comprehensive tests

In the following, we present a detailed study of two types of options: an American put-on-the-min option and an American put-on-the-average option, tested with various strike prices and initial asset values. Across all three parameter cases, our computed prices closely align with the reference prices given in [13][Tables 5 and 6].

| Put-on-average | ||||

|---|---|---|---|---|

| Case I | ||||

| 90 | 100 | 110 | ||

| MI | 90 | 10.000000 | 5.987037 | 3.440343 |

| 100 | 6.028929 | 3.440868 | 1.886527 | |

| 110 | 3.490665 | 1.890874 | 0.992933 | |

| Ref. [13] | 10.003 | 5.989 | 3.441 | |

| 6.030 | 3.442 | 1.877 | ||

| 3.491 | 1.891 | 0.993 | ||

| Case II | ||||

| 36 | 40 | 44 | ||

| MI | 36 | 5.405825 | 4.363340 | 3.547399 |

| 40 | 4.213899 | 3.338840 | 2.669076 | |

| 44 | 3.224979 | 2.506688 | 1.969401 | |

| Ref. [13] | 5.406 | 4.363 | 3.547 | |

| 4.214 | 3.339 | 2.669 | ||

| 3.225 | 2.507 | 1.969 | ||

| Case III | ||||

| 36 | 40 | 44 | ||

| MI | 36 | 12.472058 | 11.935904 | 11.446078 |

| 40 | 11.439979 | 10.948971 | 10.500581 | |

| 44 | 10.499147 | 10.049777 | 9.639534 | |

| Ref. [13] | 12.466 | 11.930 | 11.440 | |

| 11.434 | 10.943 | 10.495 | ||

| 10.493 | 10.043 | 9.633 | ||

7 Conclusion and future work

In this paper, we address an important gap in the numerical analysis of two-asset American options under the Merton jump-diffusion model by introducing an efficient and straightforward-to-implement monotone scheme based on numerical integration. The pricing of these options involves solving complex 2-D variational inequalities that include cross derivative and nonlocal integro-differential terms due to jumps. Traditional finite difference methods often struggle to maintain monotonicity in cross derivative approximations—crucial for ensuring convergence to the viscosity solution-and accurately discretize 2-D jump integrals. Our approach overcomes these challenges by leveraging an infinite series representation of the Green’s function, where each term is non-negative and computable, enabling efficient approximation of 2-D convolution integrals through a monotone integration method. In addition, we rigorously establish the stability and consistency of the proposed scheme in the viscosity sense and prove its convergence to the viscosity solution of the variational inequality. This overcomes several significant limitations associated with previous numerical techniques.

Extensive numerical results demonstrate strong agreement with benchmark solutions from published test cases, including those obtained via operator splitting methods, highlighting the utility of our approach as a valuable reference for verifying other numerical techniques.

Although our focus has been on American option pricing under a correlated two-asset Merton jump-diffusion model, the core methodology—particularly the infinite series representation of the Green’s function, where each term is non-negative—has broader applicability. One such application is asset allocation with a stock index and a bond index in both discrete and continuous rebalancing settings. Extending this approach to the 2-D Kou model introduces significant additional challenges, which could be addressed using a neural network-based approximation of the joint PDF of the jumps, along with a Gaussian activation function, as previously discussed. While we utilize the closed-form Fourier transform of the Green’s function for the Merton model, iterative techniques for differential-integral operators, such as those discussed in [34], could be employed to extend this framework to more general jump-diffusion models. Exploring such extensions and applying our framework to a wider range of financial models remains an exciting direction for future research.

Acknowledgments

The authors are grateful to the two anonymous referees for their constructive comments and suggestions, which have significantly improved the quality of this work.

References

- [1] M. Abramowitz and I. A. Stegun. Handbook of mathematical functions. Dover, New York, 1972.

- [2] J. Alonso-Garcca, O. Wood, and J. Ziveyi. Pricing and hedging Guaranteed Minimum Withdrawal Benefits under a general Lévy framework using the COS method. Quantitative Finance, 18:1049–1075, 2018.

- [3] S. Awatif. Equqtions D’Hamilton-Jacobi Du Premier Ordre Avec Termes Intégro-Différentiels: Partie II: Unicité Des Solutions De Viscosité. Communications in Partial Differential Equations, 16(6-7):1075–1093, 1991.

- [4] G. Barles. Convergence of numerical schemes for degenerate parabolic equations arising in finance. In L. C. G. Rogers and D. Talay, editors, Numerical Methods in Finance, pages 1–21. Cambridge University Press, Cambridge, 1997.

- [5] G. Barles and J. Burdeau. The Dirichlet problem for semilinear second-order degenerate elliptic equations and applications to stochastic exit time control problems. Communications in Partial Differential Equations, 20:129–178, 1995.

- [6] G. Barles, CH. Daher, and M. Romano. Convergence of numerical shemes for parabolic eqations arising in finance theory. Mathematical Models and Methods in Applied Sciences, 5:125–143, 1995.

- [7] G. Barles and C. Imbert. Second-order elliptic integro-differential equations: viscosity solutions’ theory revisited. Ann. Inst. H. Poincaré Anal. Non Linéaire, 25(3):567–585, 2008.

- [8] G. Barles and E. Rouy. A strong comparison result for the Bellman equation arising in stochastic exit time control problems and applications. Communications in Partial Differential Equations, 23:1945–2033, 1998.

- [9] G. Barles and P.E. Souganidis. Convergence of approximation schemes for fully nonlinear equations. Asymptotic Analysis, 4:271–283, 1991.

- [10] Guy Barles, C Daher, and Marc Romano. Convergence of numerical schemes for parabolic equations arising in finance theory. Mathematical Models and Methods in Applied Sciences, 5(01):125–143, 1995.

- [11] Alain Bensoussan and Jacques-Louis Lions. Applications of Variational Inequalities in Stochastic Control, volume 12 of Studies in Mathematics and its Applications. North-Holland Publishing Co., Amsterdam, 1982.

- [12] E. Berthe, D.M. Dang, and L. Ortiz-Gracia. A Shannon wavelet method for pricing foreign exchange options under the Heston multi-factor CIR model. Applied Numerical Mathematics, 136:1–22, 2019.

- [13] Lynn Boen and Karel J In’t Hout. Operator splitting schemes for American options under the two-asset Merton jump-diffusion model. Applied Numerical Mathematics, 153:114–131, 2020.

- [14] Olivier Bokanowski, Nadia Megdich, and Hasnaa Zidani. Convergence of a non-monotone scheme for Hamilton–Jacobi–Bellman equations with discontinous initial data. Numerische Mathematik, 115:1–44, 2010.

- [15] J Frédéric Bonnans and Housnaa Zidani. Consistency of generalized finite difference schemes for the stochastic HJB equation. SIAM Journal on Numerical Analysis, 41(3):1008–1021, 2003.

- [16] C. Christara and D.M. Dang. Adaptive and high-order methods for valuing American options. Journal of Computational Finance, 14(4):73–113, 2011.

- [17] Simon S Clift and Peter A Forsyth. Numerical solution of two asset jump diffusion models for option valuation. Applied Numerical Mathematics, 58(6):743–782, 2008.

- [18] R. Cont and P. Tankov. Financial Modelling with Jump Processes. Chapman and Hall/CRC Financial Mathematics Series. CRC Press, 2003.

- [19] R. Cont and E. Voltchkova. A finite difference scheme for option pricing in jump diffusion and exponential Lévy models. SIAM Journal on Numerical Analysis, 43(4):1596–1626, 2005.

- [20] M. G. Crandall, H. Ishii, and P. L. Lions. User’s guide to viscosity solutions of second order partial differential equations. Bulletin of the American Mathematical Society, 27:1–67, 1992.

- [21] Michael G Crandall, Lawrence C Evans, and P-L Lions. Some properties of viscosity solutions of Hamilton-Jacobi equations. Transactions of the American Mathematical Society, 282(2):487–502, 1984.

- [22] Michael G Crandall and Pierre-Louis Lions. Viscosity solutions of Hamilton-Jacobi equations. Transactions of the American mathematical society, 277(1):1–42, 1983.

- [23] Colin W Cryer. The solution of a quadratic programming problem using systematic overrelaxation. SIAM Journal on Control, 9(3):385–392, 1971.

- [24] D. M. Dang, K. R. Jackson, and S. Sues. A dimension and variance reduction Monte Carlo method for pricing and hedging options under jump-diffusion models. Applied Mathematical Finance, 24:175–215, 2017.

- [25] D.M. Dang and P.A. Forsyth. Continuous time mean-variance optimal portfolio allocation under jump diffusion: A numerical impulse control approach. Numerical Methods for Partial Differential Equations, 30:664–698, 2014.

- [26] D.M. Dang and H. Zhou. A monotone piecewise constant control integration approach for the two-factor uncertain volatility model. arXiv preprint arXiv:2402.06840, 2024.

- [27] Y. d’Halluin, P.A. Forsyth, and G. Labahn. A penalty method for American options with jump diffusion. Numeriche Mathematik, 97(2):321–352, 2004.

- [28] R. Du and D. M. Dang. Fourier neural network approximation of transition densities in finance. SIAM Journal on Scientific Computing, 2024. Accepted for publication, December 2024.

- [29] Dean G. Duffy. Green’s Functions with Applications. Chapman and Hall/CRC, New York, 2nd edition, 2015.

- [30] F. Fang and C.W. Oosterlee. A novel pricing method for European options based on Fourier-Cosine series expansions. SIAM Journal on Scientific Computing, 31:826–848, 2008.

- [31] Wendell H Fleming and Halil Mete Soner. Controlled Markov processes and viscosity solutions, volume 25. Springer Science & Business Media, 2006.

- [32] P. A. Forsyth and G. Labahn. -monotone Fourier methods for optimal stochastic control in finance. Journal of Computational Finance, 22(4):25–71, 2019.

- [33] P.A. Forsyth and K.R. Vetzal. Quadratic convergence of a penalty method for valuing American options. SIAM Journal on Scientific Computing, 23:2095–2122, 2002.

- [34] M. G. Garroni and J. L. Menaldi. Green functions for second order parabolic integro-differential problems. Number 275 in Pitman Research Notes in Mathematics. Longman Scientific and Technical, Harlow, Essex, UK, 1992.

- [35] Maria Giovanna Garroni and José Luis Menaldi. Green functions for second order parabolic integro-differential problems. (No Title), 1992.

- [36] Abhijit Ghosh and Chittaranjan Mishra. High-performance computation of pricing two-asset American options under the Merton jump-diffusion model on a GPU. Computers & Mathematics with Applications, 105:29–40, 2022.

- [37] Karel J Hout. An efficient numerical method for American options and their Greeks under the two-asset Kou jump-diffusion model. arXiv preprint arXiv:2410.10444, 2024.

- [38] Y. Huang, P.A. Forsyth, and G. Labahn. Combined fixed point and policy iteration for HJB equations in finance. SIAM Journal on Numerical Analysis, 50:1861–1882, 2012.

- [39] Y.T. Huang, P. Zeng, and Y.K. Kwok. Optimal initiation of Guaranteed Lifelong Withdrawal Benefit with dynamic withdrawals. SIAM Journal on Financial Mathematics, 8:804–840, 2017.

- [40] H. Ishii. On uniqueness and existence of viscosity solutions of fully nonlinear second-order elliptic PDE’s. Communications on pure and applied mathematics, 42(1):15–45, 1989.

- [41] S.D. Jacka. Optimal stopping and the American put. Mathematical Finance, 1(2):1–14, 1991.

- [42] P. Jaillet, D. Lamberton, and B. Lapeyre. Variational inequalities and the pricing of American options. Acta Applicandae Mathematica, 21:263–289, 1990.

- [43] Espen R Jakobsen and Kenneth H Karlsen. A “maximum principle for semicontinuous functions” applicable to integro-partial differential equations. Nonlinear Differential Equations and Applications NoDEA, 13:137–165, 2006.

- [44] Adam Jakubowski and Marek Kobus. Lévy processes: Large deviations. Probability and Mathematical Statistics, 25:165–176, 2005.

- [45] I. Karatzas. On the pricing of American options. Applied mathematics and optimization, 17(1):37–60, 1988.

- [46] Sato Ken-Iti. Lévy processes and infinitely divisible distributions, volume 68. Cambridge University Press, 1999.

- [47] I.J. Kim. The analytic valuation of American options. The Review of Financial Studies, 3(4):547–572, 1990.

- [48] S.G. Kou. A jump diffusion model for option pricing. Management Science, 48:1086–1101, August 2002.

- [49] J.M. Lee. Introduction to smooth manifolds. Springer-Verlag, New York, 2 edition, 2012.

- [50] Y. Lu and D. M. Dang. A pointwise convergent numerical integration method for Guaranteed Lifelong Withdrawal Benefits under stochastic volatility. Technical report, School of Mathematics and Physics, The University of Queensland, August 2023. 34 pages.

- [51] Y. Lu and D. M. Dang. A semi-Lagrangian -monotone Fourier method for continuous withdrawal GMWBs under jump-diffusion with stochastic interest rate. Numerical Methods for Partial Differential Equations, 40(3):e23075, 2024.

- [52] Y. Lu, D. M. Dang, P. A. Forsyth, and G. Labahn. An -monotone Fourier method for Guaranteed Minimum Withdrawal Benefit (GMWB) as a continuous impulse control problem. Technical report, School of Mathematics and Physics, The University of Queensland, June 2022. 45 pages.

- [53] K. Ma and P.A. Forsyth. An unconditionally monotone numerical scheme for the two-factor uncertain volatility model. IMA Journal of Numerical Analysis, 37(2):905–944, 2017.

- [54] C. Martini. American option prices as unique viscosity solutions to degenerated Hamilton-Jacobi-Bellman equations. PhD thesis, INRIA, 2000.

- [55] R.C. Merton. Option pricing when underlying stock returns are discontinuous. Journal of Financial Economics, 3:125–144, 1976.

- [56] M. Mitzenmacher and E. Upfal. Probability and computing: Randomization and probabilistic techniques in algorithms and data analysis. Cambridge university press, 2017.

- [57] A.M. Oberman. Convergent difference schemes for degenerate elliptic and parabolic equations: Hamilton–Jacobi Equations and free boundary problems. SIAM Journal Numerical Analysis, 44(2):879–895, 2006.

- [58] Bernt Øksendal and Kristin Reikvam. Viscosity solutions of optimal stopping problems. Preprint series: Pure mathematics http://urn. nb. no/URN: NBN: no-8076, 1997.

- [59] H. Pham. Optimal stopping of controlled jump diffusion processes: a viscosity solution approach. Journal of Mathematical Systems Estimation and Control, 8(1):127–130, 1998.

- [60] Huyên Pham. Optimal stopping, free boundary, and American option in a jump-diffusion model. Applied mathematics and optimization, 35:145–164, 1997.

- [61] D.M. Pooley, P.A. Forsyth, and K.R. Vetzal. Numerical convergence properties of option pricing PDEs with uncertain volatility. IMA Journal of Numerical Analysis, 23:241–267, 2003.

- [62] Marjon J Ruijter and Cornelis W Oosterlee. Two-dimensional Fourier cosine series expansion method for pricing financial options. SIAM Journal on Scientific Computing, 34(5):B642–B671, 2012.

- [63] M.J. Ruijter, C.W. Oosterlee, and R.F.T. Aalbers. On the Fourier cosine series expansion (COS) method for stochastic control problems. Numerical Linear Algebra with Applications, 20:598–625, 2013.

- [64] R.C. Seydel. Existence and uniqueness of viscosity solutions for QVI associated with impulse control of jump-diffusions. Stochastic Processes and Their Applications, 119:3719–3748, 2009.

- [65] P.V. Shevchenko and X. Luo. A unified pricing of variable annuity guarantees under the optimal stochastic control framework. Risks, 4(3):1–31, 2016.

- [66] D. Tavella and C. Randall. Pricing Financial Instruments: The Finite Difference Method. John Wiley & Sons, Inc, 2000.

- [67] J. Wang and P.A. Forsyth. Maximal use of central differencing for Hamilton-Jacobi-Bellman PDEs in finance. SIAM Journal on Numerical Analysis, 46:1580–1601, 2008.

- [68] Xavier Warin. Some non-monotone schemes for time dependent Hamilton-Jacobi-Bellman equations in stochastic control. Journal of Scientific Computing, 66(3):1122–1147, 2016.

- [69] H. Zhang and D.M. Dang. A monotone numerical integration method for mean-variance portfolio optimization under jump-diffusion models. Mathematics and Computers in Simulation, 219:112–140, 2024.

- [70] X. L. Zhang. Numerical analysis of American option pricing in a jump-diffusion model. Mathematics of Operations Research, 22(3):668–690, 1997.

- [71] R. Zvan, P.A. Forsyth, and K.R. Vetzal. Penalty methods for American options with stochastic volatility. Journal of Computational and Applied Mathematics, 91:199–218, 1998.

Appendices

Appendix A Proof of Lemma 2.1

We extend the methods from [19], originally developed for 1-D European options, to address 2-D variational inequalities (2.4) and (2.9). For simplicity, we denote by the discounting factor. The solution to the full-domain variational inequality (2.4) is simply

which comes from (2.3) with a change of variables from to and from to . To obtain a probabilistic representation of the solution to the localized variational inequality (2.9), for fixed , we define the random variables and to respectively represent the maximum deviation of processes and from and over the interval . We also define the random variable as the first exit time of the process from . Similarly, the random variable is defined for the process . Using these random variables, can be expressed as

Subtracting from gives