Nucleus-aware Self-supervised Pretraining Using Unpaired Image-to-image Translation for Histopathology Images

Abstract

Self-supervised pretraining attempts to enhance model performance by obtaining effective features from unlabeled data, and has demonstrated its effectiveness in the field of histopathology images. Despite its success, few works concentrate on the extraction of nucleus-level information, which is essential for pathologic analysis. In this work, we propose a novel nucleus-aware self-supervised pretraining framework for histopathology images. The framework aims to capture the nuclear morphology and distribution information through unpaired image-to-image translation between histopathology images and pseudo mask images. The generation process is modulated by both conditional and stochastic style representations, ensuring the reality and diversity of the generated histopathology images for pretraining. Further, an instance segmentation guided strategy is employed to capture instance-level information. The experiments on 7 datasets show that the proposed pretraining method outperforms supervised ones on Kather classification, multiple instance learning, and 5 dense-prediction tasks with the transfer learning protocol, and yields superior results than other self-supervised approaches on 8 semi-supervised tasks. Our project is publicly available at https://github.com/zhiyuns/UNITPathSSL.

Histopathology image, Self-supervised pretraining, Unpaired image-to-image translation, Co-modulation, Segmentation guided strategy

1 Introduction

Histopathology serves as a crucial element for the diagnosis, prognosis, and analysis of therapeutic response for nearly all cancer types discovered[1, 2]. In the field of computer-aided pathologic diagnosis (CAPD), fully supervised deep models dominate various pathology-related tasks, including cancer classification[3], nuclei segmentation[4], and molecular subtype identification[5]. However, these models rely on extensive annotation, especially for dense-prediction tasks that require instance-level annotations. Fortunately, it is easier to obtain unlabeled histopathology images, which are expected to be utilized correctly to reduce the annotation burden for professional annotators.

One of the most popular approaches meeting the criteria is self-supervised pretraining, which learns generalized representation from unlabeled data. It can be roughly categorized into discriminative and generative ones. Currently, discriminative self-supervised pretraining is dominated by contrastive learning methods such as SimCLR[6], MoCo v2[7], and SimSiam[8]. On the other hand, the most recent development in generative self-supervised pretraining is based on denoising autoencoders[9], which use an encoder-decoder architecture to reconstruct corrupted images[10, 11, 12]. While the corrupting procedure might work well for natural images that often contain large foreground objects against varying backgrounds, it is not ideal for histopathology images. Simply corrupting histopathology images results in the loss of nuclei, which are often small but crucial for cancer identification, grading, and prognosis[1]. For CAPD-related tasks, especially dense-prediction tasks, the importance of nuclei can also hardly be overstated[4, 13, 14, 15, 16, 17]. Therefore, it is important to be aware of these small instances when tailoring self-supervised pretraining to histopathology images.

As an extension of generative pretraining, adversarial pretraining with Generative Adversarial Networks (GAN) offers the possibility of nucleus-aware pretraining. It not only avoids the corrupting procedure, but also learns the data distribution prior for downstream tasks. Most of the relevant works utilize a bidirectional framework that extends GAN with an extra encoder[18, 19, 20], as shown in Fig. 1 (a). However, the latent space of GAN is too abstract and redundant for fine-grained representation learning, hindering their performance on dense-prediction tasks. Given that GAN can be employed as a semantic segmenter[21, 14], whose procedure can also be viewed as image-to-image translation from images to their corresponding masks, the original latent space can be replaced with more specific representation, i.e., nucleus mask images. This design is possible for histopathology images because of the low-cost feasibility of synthesizing mask images [14]. In this way, the framework transforms naturally into unpaired image-to-image translation (UNIT) and is expected to capture nucleus-level information. As illustrated in Fig. 1 (b), CycleGAN[22] serves as a simple yet effective UNIT framework to implement the design. It also consists of two networks that learn in an inverse manner, similar to the bidirectional framework. The main difference lies in the cycle consistency, which regulates the translation and directs the network to capture semantic information. Moreover, both generators are designed with an encoder-decoder architecture, which allows initializing the decoder for dense-prediction tasks.

It has been explored that the performance of adversarial pretraining is closely related to the quality of generated images[20]. Therefore, it is hard to yield optimal performance if we only pretrain with vanilla CycleGAN whose stochasticity is highly limited. To overcome this challenge, a solution is to modulate the synthesis process with both conditional and stochastic style representations, which ensure the conditional correspondence and the intra-conditioning variety. This co-modulation design was first proposed by CoModGAN[23], the most advanced large-scale image completion network. By incorporating this design into the adversarial pretraining framework, the performance is expected to be significantly improved due to the enhanced quality of generated images.

It is noteworthy that another generator is taken as the pretrained model. Although cycle consistency is employed to enforce conditional constraints, it may not guarantee perfect matching between mask images and generated images. Similar to previous works that enhanced the generation process with the guidance of segmentation[24, 25, 26], it is advantageous to incorporate the segmentation task into the pretraining framework. However, relying solely on semantic segmentation may not provide effective information because the translation from histopathology images to mask images can be viewed as a form of semantic segmentation, as discussed previously. Noticing the fact that we can conveniently obtain instance-level ground truth from mask images, it is possible to augment the pretraining framework with instance segmentation, which can lead to a more accurate interpretation of instance objects. Moreover, unlike many existing works which only pretrain the encoder, implementing instance segmentation in the pretraining process can yield a stronger initialization for downstream dense-prediction tasks.

Based on the analysis, we propose a novel nucleus-aware self-supervised pretraining framework for histopathology images using a CycleGAN-based UNIT between the mask domain and the histopathology domain. Pseudo mask images are first synthesized as the mask domain containing rich semantic information. We integrate co-modulated generator into our framework to get realistic and diverse images for pretraining. We also guide the framework with an instance segmentation task, which makes the constraints stricter and puts more focus on instance objects.

The novelties and contributions of this paper are summarized as follows:

-

•

We propose a novel adversarial self-supervised pretraining method within the framework of unpaired image-to-image translation, which is aware of nuclear instances in histopathology images.

-

•

Co-modulation of both conditional and stochastic style representations is introduced for high-quality histopathology image generation conditioned on pseudo mask images, ensuring that the generated images provided for the pretrained network are realistic and diverse enough.

-

•

We couple the framework with an instance segmentation task that offers instance-level representation, and is more effective for nucleus-aware pertaining than the common semantic segmentation guidance.

-

•

The results for 7 transfer learning experiments and 8 semi-supervised experiments demonstrate that our method provides more effective and robust initialization for 5 different networks on 7 datasets, compared with other pretraining methods.

2 Related Work

Our work is related to four categories: (1) Self-supervised pretraining for histopathology images, (2) self-supervised pretraining with GAN, (3) unpaired image-to-image translation (UNIT), and (4) segmentation-guided synthesis.

2.1 Self-supervised Pretraining for Histopathology Images

Self-supervised pretraining has been an established topic in natural images for years, but studies attended to histopathology images are limited, especially in terms of generative pretraining. Koohbanani et al.[13] designed three pathology-specific pretext tasks based on the multi-scale nature and the special staining property of digital pathology. Yang et al.[27] tailored contrastive learning to histopathology with stain vector perturbation and combined it with a cross-staining prediction task. Luo et al.[28] enhanced the encoder of MAE[10] with a self-distillation scheme that used tokens from visible histopathology patches. All of the above methods pretrained the encoder solely for classification tasks. Pretraining approaches which are also suitable for pathology-specific dense-prediction tasks are still under-explored. Generative pretraining is believed to provide low-level representation that is advantageous for dense-prediction tasks[29, 30]. However, the direct application of generative pretraining to histopathology is not as effective as it is for natural images. In this paper, we tailor generative self-supervised pretraining to histopathology. Unlike previous works which ignore nuclear morphology and distribution, we guide the network to capture cellular information with pseudo mask images. Moreover, unlike most previous studies that only pretrain the encoder for classification tasks, we simultaneously initialize the encoder and decoder for dense-prediction tasks.

2.2 Self-supervised Pretraining with GAN

First proposed by Goodfellow et al.[31], Generative Adversarial Networks (GAN) is one of the most popular generative learning frameworks. Recent works modified the architecture of GAN to generate high-quality images[32, 33, 34, 35], with StyleGAN[33, 35] achieving impressive results for unconditional image generation. Furthermore, CoModGAN[23] tailored StyleGAN to image-to-image translation, achieving leading results for image completion.

Because of the unsupervised property of adversarial training, GAN also provides the possibility for adversarial pretraining[29]. Previous works have considered the generator to be an implicit autoencoder by incorporating an additional encoder that maps images to latent representation[18, 19, 20]. DiRA[36] combined adversarial learning with discriminative and restorative learning, effectively guiding the encoder to capture more informative aspects of medical images. Tao et al. [37] embedded adversarial training to Rubik’s Cube restoration, which adopts volume-wise transformations for context permutation. In this work, we also pretrain a generator to provide powerful visual representation with adversarial self-supervised learning. Different from previous methods, we not only guide the generator to learn representation from original data, but also help it capture semantic information from high-quality images generated by CoModGAN within the framework of UNIT.

2.3 Unpaired Image-to-image Translation

To learn the mapping between two domains without paired images, CycleGAN[22] proposed a novel cycle consistency loss that preserves the structural information between domains. The bidirectional constraint has been tailored to several tasks. For instance, Mondal et al.[38] proposed a semi-supervised segmentation method by learning a bidirectional mapping between unlabeled images and available ground truth masks with CycleGAN. Hoffman et al.[39] proposed an effective domain adaptation method by adapting pixel-level and feature-level representation using the cycle consistency loss and the task loss. These studies highlight that the cycle consistency constraint leads the generator to be aware of the semantic meaningful structure when applying style transfer between domains. In this paper, we also use the cycle consistency constraint to preserve structural information, while enhancing the generation quality using CoModGAN and the instance segmentation guided strategy. These approaches are incorporated into self-supervised pretraining. To the best of our knowledge, none have additionally explored UNIT in the field of self-supervised pretraining for digital pathology at all.

2.4 Segmentation Guided Synthesis

Studies have shown that the segmentation-guided strategy (SG) improves the performance of GANs by imposing spatial limitations on generated images [24, 25, 26, 16]. Bazazian et al.[24] guided dual-domain image synthesis with part-based segmentation. Aakerberg et al.[25] utilized an auxiliary segmentation task to help produce accurate super-resolution results. Ardino et al.[26] leveraged the predicted segmentation map to facilitate the inpainting process, thereby improving the generation quality of images in complex scenes. Most of the studies utilized semantic segmentation to provide guidance. However, the strategy might not perform well in our framework because the information provided by semantic segmentation is similar to that in image-to-mask translation. Therefore, we incorporate instance segmentation to provide more efficient guidance. The most relevant method for our purposes has been proposed by Gong et al.[16], who fused an extra instance segmentation model into the generation procedure in an adversarial manner. However, this approach required a memory-consuming extra segmentation network. In this paper, we further improve the method by sharing the backbone of the generator with an instance segmentation network, thereby increasing the pretraining efficiency.

3 Methods

Our goal is to develop a novel nucleus-aware self-supervised pretraining method for histopathology images. The framework learns the mapping between the mask image domain and histopathology image domain with the CycleGAN-based UNIT, as shown in Fig. 2. Generator produces histopathology image conditioned by mask image . In order to generate images that are diverse enough for sufficient pretraining, we co-modulate by both the mask image and style representation . Generator learns the reverse mapping from to , extracting semantic representation from and . An auxiliary instance segmentation branch is implemented to provide instance-level information.

After self-supervised pretraining, we selectively take parts of to initialize the network for downstream tasks. For classification tasks, we take the encoder of as the pretrained model. For dense-prediction tasks, we are able to use the pretrained encoder, decoder, and even the segmentation branch to provide more comprehensive initialization for the segmenter, as we will detail in our experiments.

3.1 Self-supervised Pretraining with UNIT

We propose to capture the semantic information contained in histopathology images in an adversarial manner. Similar to BiGAN, CycleGAN can also be regarded as a generalized bidirectional framework, as shown in Fig. 1. The generators and respectively translate mask images and histopathology images to synthesized histopathology images and mask images. Discriminators and learn to distinguish real and synthesized images generated from and with an adversarial loss . For , the objective is defined as a non-saturation loss with regularization[33], which can be expressed respectively for the discriminator and the generator as:

| (1) | ||||

For , least square loss[40] with regularization is adopted as , whose objective functions are:

| (2) | ||||

The adversarial loss helps the generators produce realistic images which match the distribution of real data. As a result, is able to capture the nuclei distribution prior contained in mask images, yielding more reasonable nucleus-aware pretraining results.

Cycle consistency constraints are implemented to maintain structural information during the translation. The main idea is to ensure that one of the generators reconstructs the original inputs given samples generated by another generator, which can also be denoted as (the forward cycle consistency) and (the backward cycle consistency). The L1 norm is used to ensure the constraint, whose loss can be expressed as:

| (3) | ||||

With the constraint of forward cycle consistency, i.e. , generator learns to extract semantic features from high-quality histopathology images generated by . Specifically, the network learns to identify nuclei, recognize their boundaries, and distinguish the epithelial ones. Combining the adversarial loss and the cycle consistency loss together, our framework learns semantic representation from both real and synthetic histopathology images.

3.2 Unpaired Data Preparation

The unsupervised translation between domain and domain requires a substantial number of images in each domain. We can easily acquire sufficient histopathology images for domain by cropping patches from the scanned WSIs. Due to the inability to obtain sufficient real nuclei masks for domain , we arrange random polygons, representing nuclei, on a grid in order to generate arbitrary numbers of mask images. The preparation of mask images is similar to that described in [14], but we add more details to match the distribution of the nuclei and provide more information for high-quality generation. The preparation of mask images can be roughly split into two parts: How the nuclei are distributed and how they are stylized.

3.2.1 Distribution

Recognizing the glandular structures is beneficial for the diagnosis and grading of carcinomas originating in several organs, including the prostate, breast, and colon[41]. In these organs, nuclei are specially distributed due to the existence of glands. We simulate the phenomenon by positioning some of the nuclei around the lumens, which are represented by deformed, empty, and overlapping ovals. To be specified, we randomly determine the center point, orientation angle, and length of the axis for each oval. A polar coordinate system is then built upon the oval center, with nucleus centers being evenly distributed around the oval at each polar angle. The radial distance of each nucleus is perturbed, and more nuclei are randomly added at each polar angle.

3.2.2 Stylization

We stylize the masks of nuclei and place them on the centers determined by nuclei distribution. Nuclei masks are generated as random polygons smoothed with Bézier interpolation, and we distinguish epithelial cells which form the glandular structures and other cells by placing their nuclei in different channels. The intensity values of nuclei are perturbed to add stochasticity, and the nuclei boundaries are drawn in a separate channel in order to guide the network to focus more on boundary recognition. Moreover, we allow some of the nuclei to slightly overlap with each other, which is a common phenomenon in histopathology. Examples of the prepared mask images are shown in Fig. 2.

3.3 Co-Modulated GAN

The performance of our pretraining framework is directly related to the quality of generated histopathology images. Recent works advance the generation quality of GANs with style-based modulation[33, 35], but the unconditional property of these GANs makes it hard to constrain the contents. To bridge the gap between the image-conditioned and the style-based generator, one of the solutions is to modulate the generation process with both the stochastic style and conditional representations. The strategy has been explored in CoModGAN[23] and is proven to generate high-quality and diverse images conditioned by limited information. Following this, we utilize the co-modulation strategy to improve the quality and stochasticity of generated histopathology images. Moreover, we extend CoModGAN by adopting co-modulated StyleGAN2-ADA[42] to stabilize the pretraining in the limited data regime.

The main architecture of the co-modulated generator is shown in Fig. 3. Given a mask image , encodes it to a feature map, which is flattened into conditional style representation with the dropout rate of 0.5. In addition, a mapping network produces the stochastic style representation by transforming a noise vector . The two representations are then concatenated and produce a style vector with the affine transform:

| (4) |

The style vector is then used to modulate the synthesis network via the ”demodulation” operation applied to the convolution weight, as described in StyleGAN2. Note that the original constant input of the first synthesis blocks is replaced by a feature map, which is reshaped from the output of the fully connected layer following . To further preserve the structure of mask images, skipping residual connections are implemented between and following CoModGAN [23]. and are designed to be the same as those in StyleGAN2, and the architecture of is implemented the same with that in CoModGAN. We represent the whole generation as for simplification in the paper.

To further stabilize training, ADA[42] is adopted in the process of discriminating and . Specifically, augmentations including pixel blitting, geometric transforms, and color transforms are applied for both and before feeding them to the discriminator. In order to avoid the leakage of the augmentations to the generator, the strength of conducting each augmentation is constrained by the scalar , which is determined by the degree of overfitting. We use as the overfitting heuristic, and adjust once every four minibatchs:

| (5) |

where N is the batch size, and is initialized to be 0. Then the augmentation is applied in sequence to the images with the probability of .

The design of CoModGAN-ADA enhances the quality of generated histopathology images, and substantially improves the pretraining performance by providing more diverse synthesized images to in the forward cycle.

3.4 Segmentation Guided Strategy

We guide our pretraining framework with an auxiliary segmentation task, which has been proven to enhance the generation quality because of the extra attention to the region of interest[24, 25, 26, 16]. In contrast to the semantic segmentation-guided strategy in most of the previous works, we utilize instance segmentation to provide the guidance. Given a pseudo mask image generated by our algorithm, it is possible to obtain its instance-level label for nucleus segmentation. In conjunction with the forward cycle consistency, we perform instance segmentation by adding an extra instance segmentation branch to so that it can also predict the instance-level label for , which should be identical to the label derived from .

To make the generator be agnostic with different architectures of FPN-based instance segmentation networks, is designed to be similar to the generator of DeblurGAN-v2[34], which is based on Feature Pyramid Network (FPN) and works well with different backbones. Five final feature maps in the bottom-up pathway of FPN are all up-sampled to the input size and summed up into one tensor. The end of the network is designed the same as DeblurGAN-v2 to recover the original shape, except that batch normalization is replaced by instance normalization.

In order to conduct instance segmentation, we implement the region proposal network, bounding-box recognition head, and mask prediction head on top of the FPN structure of . The design of the instance segmentation branch is identical to those in Mask R-CNN[43], whose loss is expressed as:

| (6) | ||||

in which and are the classification loss and bounding-box regression loss for the region proposal network, ,, and are the classification loss, bounding-box regression loss, and mask loss for the region of interest (RoI). Note that the instance segmentation loss can be back-propagated to through . Hence, the constraint augments the cycle consistency loss and guides to generate histopathology images that correspond more closely to their masks. Moreover, the auxiliary task substantially improves the awareness of the nuclei in and the ability to capture the semantic representation.

3.5 Overall Losses and Training

The proposed framework is composed of four parts: Generator , , and their corresponding discriminator , . They are trained coherently so that: (1) takes pseudo mask images as conditional inputs and produces realistic translated images. With adversarial learning, leads the generated images to match the data distribution of histopathology images; (2) tries to generate mask images conditioned on histopathology images with the guidance of ; (3) Structural information is preserved during the translation with cycle consistency constraints; and (4) is able to capture instance-level representation with an auxiliary segmentation task. The overall losses corresponding to the above requirements can be written as:

| (7) | ||||

where the hyperparameters , , and are used to balance different parts. Note that instance segmentation is only introduced to the generator and is optimized with multi-task learning.

Due to the low generation quality of in the early training stage, we also design a two-stage training strategy to alleviate the mismatched convergence speed between and . After several iterations of training, when begins to overfit to generated images, and are reinitialized and another stage of training is performed. Additional training stages will not improve the pretraining quality, as demonstrated in our subsequent experiment.

4 Experiments

4.1 Datasets

Various datasets are included in our experiments for pretraining and for downstream tasks. The basic information of each dataset is summarized in Table 1.

4.1.1 In-house

We collect 1093 WSIs scanned from H&E stained colorectal tissues in the First Affiliated Hospital of Zhejiang University. Both normal and malignant slices are included and cropped into patches with a size of 512512 pixels. Finally, a total of 160,000 unlabeled histopathology images at 40 objective magnification (0.25 m/pixel) are prepared for pretraining.

4.1.2 Kumar

Kumar[44] is a public nuclear instance segmentation dataset containing 30 annotated tiles extracted from 30 patients in 18 institutes. These images are scanned and cropped from H&E stained tissues at 40 objective magnification (0.25 m/pixel) from 7 different organs (prostate, breast, colon, liver, kidney, stomach, and bladder) with the size of 10001000. According to the original work, 16 images are used for training and 14 for testing. We only use the dataset for the downstream task in the transfer learning protocol due to the extremely small amounts of data.

4.1.3 Kather

This dataset consists of colorectal histopathology images covering 9 classes for classification[45]. All images with the size of 224224 pixels at 20 objective magnification (0.5 m/pixel) are obtained from the tissue bank of the National Center for Tumor diseases (NCT). We only utilize two of the classes, namely the colorectal adenocarcinoma epithelium (TUM) and normal colon mucosa (NORM), resulting in 23,082 images for training and 1,976 images for testing. Similar to [13], we randomly sample 20% of the training data for validation.

4.1.4 Lizard

Lizard[46] is the largest nuclear instance segmentation and classification dataset currently available. It consists of 6 subsets (GlaS, CRAG, CoNSeP, DPath, PanNuke, and TCGA) of colorectal histopathology images with an average of 1,016917 pixels at 20 objective magnification ( 0.5m/pixel). Nearly half a million nuclei are boundary-labeled and classified into 6 subtypes (epithelial, neutrophil, lymphocyte, eosinophil, plasma, and connective tissue cells). The subset TCGA is currently not available and is excluded from our study, resulting in a total of 238 images. The two largest subsets (the Dpath subset with 69 images and the CRAG subset with 64 images) are used for downstream tasks. We equally divide the two subsets into 3 parts for training, validating, and testing.

4.1.5 Crag

Crag [47] is a public dataset proposed for segmenting colorectal adenocarcinoma glands. It is composed of 213 H&E images with a size of approximately 1,5121,516 pixels at 20 objective magnification ( 0.5m/pixel). We follow the official settings and divide the dataset into 173 images for training and 40 images for testing.

4.1.6 Pannuke

The PanNuke dataset [48] comprises 7,901 tiles with 256256 pixels at either 20 or 40 magnification, obtained from over 20,000 whole slide images (WSIs) of 19 organs. The dataset includes annotations of 189,744 nuclei belonging to 5 different cell types, namely neoplastic, non-neoplastic epithelial, inflammatory, connective, and dead cells. Following the official settings, the dataset is divided into a training set, a validation set, and a test set.

4.1.7 In-house-MIL

We also collect 260 images with the size of 10,000 10,000 pixels at 40 magnification from the same source with the In-house dataset. The dataset is composed of 130 malignant images and 130 normal images, which are annotated by an expert pathologist. A 65%-15%-20% split was used to split data for training, validation, and testing. The dataset is used for multiple instance learning. A bag indicates a set of patches with the size of 512512 extracted from an image Positive bags are malignant images that contain cancerous cells, and negative bags are images that only contain normal cells.

| Datasets | Size | Mag. | Num of Images | Nuclei | Protocol | |||

|---|---|---|---|---|---|---|---|---|

| Train | Val | Test | TL | SSL | ||||

| Datasets for Pretraining | ||||||||

| In-house | 512512 | 40 | 160,000 | - | - | - | ✓ | |

| Lizard | 1,055934 | 20 | 194 | - | - | - | ✓ | |

| Kather | 224224 | 20 | 23,082 | - | - | - | ✓ | |

| Datasets for downstream Tasks | ||||||||

| Kumar | 1,0001,000 | 40 | 16 | - | 14 | 16,954 | ✓ | |

| Lizard-Dpath | 1,2551,042 | 20 | 23 | 23 | 23 | 168,510 | ✓ | ✓ |

| Lizard-CRAG | 1,5031,516 | 20 | 22 | 21 | 21 | 189,043 | ✓ | ✓ |

| Kather | 224224 | 20 | 18,638 | 4,444 | 1,976 | - | ✓ | ✓ |

| Crag | 1,5121,516 | 20 | 173 | - | 40 | - | ✓ | |

| Pannuke | 256256 | 2040 | 2,656 | 2,523 | 2,722 | 189,744 | ✓ | |

| In-house-MIL | 10,00010,000 | 40 | 169 | 39 | 52 | - | ✓ | |

4.2 Evaluation

4.2.1 Evaluation Protocols

The pretraining quality is accessed using the transfer learning (TL) and semi-supervised learning (SSL) protocol. For TL, the framework is pretrained from scratch on the in-house dataset, followed by supervised training on various downstream tasks with end-to-end fine-tuning. For SSL, models are pretrained on all training data and fine-tuned on parts of the dataset.

4.2.2 Evaluation Metrics

(1) Classification. Accuracy (Acc) and F1 score are used for classification tasks, including patch-wise classification and multiple instance learning. (2) Nuclear Segmentation and Detection. Aggregated Jaccard Index (AJI)[44] is reported to evaluate the segmentation quality, and F1 score with an IoU threshold of 0.5 is used to evaluate the detection quality. (3) Multi-class Nuclear Segmentation and Detection. If the further classification of nuclei is required, the segmentation quality is evaluated with multi-class panoptic quality (mPQ+) proposed in the CoNIC challenge[17]. The metric is the average across per-class PQ, whose statics are calculated over all images. F1 score averaged across all classes is reported to quantify the detection quality. (4) Gland Segmentation. We report object-level Dice and Hausdorff distance, which is used in the GlaS challenge[49] to evaluate the instance-level gland segmentation quality. We run each downstream task five times with different random seeds and report the mean, standard deviation, and statistical analysis based on independent two-sample t-test.

4.3 Experiment Setting

4.3.1 Pretraining

ResNet-50 is used as the feature extractor in the bottom-up pathway of . The normalization layers in the FPN and the segmentation branch are implemented with Group Normalization (GN) instead of Batch Normalization (BN) to alleviate the problem caused by the small batch size[50]. and maintain the design in StyleGAN2-ADA and CycleGAN, respectively. We rescale the input images to be 40 in order so that networks can concentrate on fine-grained details. Images are then resized in a range of 0.8 1.0 of original size and cropped to 256256 before feeding to our framework. We optimize the framework with batch size 12 and Adam optimizer with , , and . Hyperparameters for the instance segmentation branch are set following Mask-RCNN. We also follow the settings of StyleGAN2 when training , except that the lazy regularization and the path length regularization are disabled. The weight-balancing hyperparameters , , and are empirically set to 2.0, 10.0, and 2.0. Both the and for regularization are set to be 1.0. For the two-stage training strategy, we train the framework for 40k iterations with learning rate of in the first stage, and 25k iterations with learning rate of in the second stage. The whole pretraining process is conducted on 4 GeForce RTX 2080 Ti GPUs.

| Method | Kumar | Kather | Lizard-Dpath | Lizard-CRAG | PanNuke | Crag | In-house-MIL | |||||||||||||||||||||||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| AJI | F1 | Acc | F1 | mPQ+ | F1 | mPQ+ | F1 | mPQ+ | F1 | Obj Dice | Obj Haus | Acc | F1 | |||||||||||||||||||||||||||||

| Baselines | ||||||||||||||||||||||||||||||||||||||||||

| Random |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

||||||||||||||||||||||||||||

| ImageNet* |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

||||||||||||||||||||||||||||

| Generalized Pretraining Approaches | ||||||||||||||||||||||||||||||||||||||||||

| MoCo v2[7] |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

||||||||||||||||||||||||||||

| Simsiam[8] |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

||||||||||||||||||||||||||||

| Pretraining Approaches for Medical Images | ||||||||||||||||||||||||||||||||||||||||||

| Mormont*[51] |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

||||||||||||||||||||||||||||

| DiRA[36] |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

||||||||||||||||||||||||||||

| Ciga[52] |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

||||||||||||||||||||||||||||

| Ours |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

||||||||||||||||||||||||||||

| Kumar | |||

| Dice | AJI | PQ | |

| Segmenters | |||

| UNet [53] | 0.758 | 0.556 | 0.478 |

| Mask-RCNN [43] | 0.760 | 0.546 | 0.509 |

| Micro-Net [54] | 0.797 | 0.560 | 0.519 |

| Panoptic FPN [55] | 0.805 | 0.573 | 0.557 |

| CIA-Net [56] | 0.818 | 0.620 | 0.577 |

| Hover-Net [4] | 0.826 | 0.618 | 0.597 |

| DSF-CNN [57] | 0.826 | - | 0.600 |

| REU-Net [58] | 0.826 | 0.636 | 0.604 |

| Pretrained Hover-Net | |||

| Hover-Net (MoCo v2) | 0.842 | 0.640 | 0.612 |

| Hover-Net (Simsiam) | 0.841 | 0.628 | 0.602 |

| Hover-Net (Mormont) | 0.845 | 0.645 | 0.615 |

| Hover-Net (DiRA) | 0.836 | 0.624 | 0.586 |

| Hover-Net (Ciga) | 0.837 | 0.631 | 0.597 |

| Hover-Net (Ours) | 0.853 | 0.657 | 0.625 |

4.3.2 Fine-tuning

For classification tasks on Kather, we initialize the ResNet backbone with our pretrained model and build a classifier head with adaptive average pooling, fully-connected layer, and softmax on top of the backbone. For the nuclear detection and segmentation task on Kumar, we follow [15] and use Panoptic FPN[55] as the segmenter. The weight of FPN is initialized by our pretraining approach, whereas the semantic segmentation branch and instance segmentation branch are initialized randomly. For multi-class nuclear segmentation and detection on PanNuke and the subsets of Lizard, we utilize Mask-RCNN, whose FPN is initialized by our method. For gland segmentation on Crag, we take UperNet[59] as the semantic segmenter and initialize the ResNet-50 backbone. In this task, both the objects and contours are predicted to help separate the touching instances following [57]. Multiple instance learning experiment is implemented following C2C [60], whose backbone is replaced with ResNet-50. The learning rate, batch size, and optimizer of each experiment are determined with grid search.

| Organ | Baseline | ImageNet | MoCo v2 | Simsiam | Mormont | DiRA | Ciga | Proposed | ||||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Stomach | 0.4821 | 0.5440 | 0.6590 | 0.6332 | 0.6678 | 0.6572 | 0.6317 | 0.6206 | ||||||||||||||||

| Colon | 0.3933 | 0.4939 | 0.5001 | 0.4066 | 0.4545 | 0.4967 | 0.4813 | 0.5008 | ||||||||||||||||

| Bladder | 0.5333 | 0.6364 | 0.5768 | 0.5652 | 0.5957 | 0.5847 | 0.5505 | 0.6404 | ||||||||||||||||

| Prostate | 0.5360 | 0.6139 | 0.6304 | 0.5953 | 0.6234 | 0.6257 | 0.6280 | 0.6302 | ||||||||||||||||

| Liver | 0.4405 | 0.5370 | 0.5455 | 0.4789 | 0.5253 | 0.5206 | 0.5190 | 0.5323 | ||||||||||||||||

| Kidney | 0.5216 | 0.6025 | 0.6075 | 0.6176 | 0.6185 | 0.6145 | 0.6090 | 0.6226 | ||||||||||||||||

| Breast | 0.4639 | 0.5795 | 0.5922 | 0.5652 | 0.6021 | 0.5960 | 0.5659 | 0.6056 | ||||||||||||||||

| Overall |

|

|

|

|

|

|

|

|

4.4 Transfer Learning Experiments

We experiment on our in-house dataset and prepare mask images with equivalent amounts of data for pretraining. We compare our approach against the two most recent self-supervised pretraining methods for medical images (DiRA[36] and Ciga-SimCLR[52]), and two methods for natural images (MoCo v2[7] and Simsiam[8]). The primary hyperparameters of these pretraining methods have been tuned referring to the ablation study of their original work on Kumar (e.g., the momentum value and the softmax temperature of MoCo v2). Supervised pretraining on ImageNet and Mormont et al.[51] are also included for comparison. Note that we can only initialize the ResNet backbone for downstream tasks with these approaches.

4.4.1 Multi-organ Nuclear Detection and Segmentation

As shown in Table 2, although no extra annotation is used in our pretraining framework, we outperform supervised pretraining on ImageNet (p0.05) and Mormont et al. (p0.05) on Kumar. Moreover, our method produces better results than previous SOTA pretraining approaches (p0.05, compared with MoCo v2), indicating that our nucleus-aware method is more effective for the dense-prediction task and generalizes better to histopathology images from various organs. The per-organ results reported in Table 4 indicate that our method consistently benefits nuclear segmentation across various organs. We not only improve the performance on the organs with glandular structures (e.g., colon, prostate, and breast), but also benefit that without the special structure (e.g., bladder). We also compare with the SOTA methods for nuclear segmentation on Kumar. To this end, we use HoVer-Net[4] as the segmenter, and replace the Preact-ResNet-50 backbone with the pretrained ResNet-50, similar to the previous work [61]. As reported in Table 3, it is interesting to find that all the pretraining methods improve the performance of Hover-Net (Dice), among which we achieve new SOTA results on Kumar.

| Organ | Baseline | ImageNet | MoCo v2 | Simsiam | Mormont | DiRA | Ciga | Proposed | ||||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Adrenal | 0.3303 | 0.3698 | 0.3835 | 0.3807 | 0.3854 | 0.3774 | 0.3561 | 0.3807 | ||||||||||||||||

| Bile Duct | 0.3328 | 0.3696 | 0.3560 | 0.3510 | 0.3630 | 0.3648 | 0.3161 | 0.3613 | ||||||||||||||||

| Bladder | 0.3170 | 0.2918 | 0.3330 | 0.3397 | 0.3221 | 0.3161 | 0.3245 | 0.3566 | ||||||||||||||||

| Breast | 0.3773 | 0.3890 | 0.4022 | 0.4040 | 0.3956 | 0.4001 | 0.3690 | 0.4190 | ||||||||||||||||

| Cervix | 0.2820 | 0.3244 | 0.3166 | 0.3586 | 0.3435 | 0.3362 | 0.2688 | 0.3344 | ||||||||||||||||

| Colon | 0.3301 | 0.3482 | 0.3852 | 0.3527 | 0.3751 | 0.3817 | 0.3378 | 0.3932 | ||||||||||||||||

| Esophagus | 0.3651 | 0.3860 | 0.4017 | 0.3768 | 0.3989 | 0.3873 | 0.3539 | 0.3977 | ||||||||||||||||

| H&N* | 0.3113 | 0.3251 | 0.3817 | 0.3430 | 0.3725 | 0.3884 | 0.3622 | 0.3548 | ||||||||||||||||

| Kidney | 0.2473 | 0.2417 | 0.2506 | 0.2356 | 0.2726 | 0.2609 | 0.2045 | 0.2335 | ||||||||||||||||

| Liver | 0.3698 | 0.3996 | 0.4068 | 0.4112 | 0.4003 | 0.4098 | 0.3695 | 0.4242 | ||||||||||||||||

| Lung | 0.2480 | 0.2826 | 0.2834 | 0.2719 | 0.2700 | 0.2905 | 0.2547 | 0.2886 | ||||||||||||||||

| Ovarian | 0.3509 | 0.3849 | 0.4296 | 0.4185 | 0.4037 | 0.4258 | 0.3852 | 0.4278 | ||||||||||||||||

| Pancreatic | 0.2379 | 0.2952 | 0.3442 | 0.3020 | 0.3492 | 0.3332 | 0.2469 | 0.2530 | ||||||||||||||||

| Prostate | 0.2465 | 0.3187 | 0.3260 | 0.2950 | 0.3415 | 0.3089 | 0.2526 | 0.3039 | ||||||||||||||||

| Skin | 0.2093 | 0.2787 | 0.2718 | 0.2920 | 0.2780 | 0.3060 | 0.2624 | 0.3070 | ||||||||||||||||

| Stomach | 0.3211 | 0.3232 | 0.3659 | 0.3323 | 0.3567 | 0.3725 | 0.3427 | 0.3506 | ||||||||||||||||

| Testis | 0.3306 | 0.3544 | 0.3894 | 0.3876 | 0.3864 | 0.3752 | 0.3347 | 0.4051 | ||||||||||||||||

| Thyroid | 0.2989 | 0.3250 | 0.3397 | 0.3007 | 0.3474 | 0.3441 | 0.3111 | 0.3312 | ||||||||||||||||

| Uterus | 0.2354 | 0.2420 | 0.2575 | 0.2512 | 0.2548 | 0.2590 | 0.2385 | 0.2578 | ||||||||||||||||

| Overall |

|

|

|

|

|

|

|

|

| Method | Lizard-Dpath | Lizard-CRAG | Kather | |||||||||||||||||||||||||||||||||||||||||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 10% (2) | 25% (5) | 50% (10) | 10% (2) | 25% (5) | 50% (10) | 0.25% (50) | 0.50% (88) | 1.00% (178) | ||||||||||||||||||||||||||||||||||||||||||||||||

| mPQ+ | F1 | mPQ+ | F1 | mPQ+ | F1 | mPQ+ | F1 | mPQ+ | F1 | mPQ+ | F1 | Acc | F1 | Acc | F1 | Acc | F1 | |||||||||||||||||||||||||||||||||||||||

| Baseline | ||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| Random |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

||||||||||||||||||||||||||||||||||||||

| Generalized Pretraining Approaches | ||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| MoCo v2[7] |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

||||||||||||||||||||||||||||||||||||||

| Simsiam[8] |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

||||||||||||||||||||||||||||||||||||||

| Pretraining Approaches for Medical Images | ||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| DiRA[36] |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

||||||||||||||||||||||||||||||||||||||

| Ciga[52] |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

||||||||||||||||||||||||||||||||||||||

| Ours |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

||||||||||||||||||||||||||||||||||||||

4.4.2 Colorectal Cancer Classification

Cancer classification is performed on the Kather dataset. We only fine-tune on 1% training data to make the task more challenging and exacerbate the differences amongst pretraining approaches. Results in Table 2 indicate that ImageNet provides more effective initialization than most of the pretraining methods. The results are in line with the finding that the self-supervised methods do not always achieve superior results against ImageNet [52]. Despite this, our method not only significantly outperforms other self-supervised methods with an increase of at least 1.12% for accuracy and 1.08% for F1 score (0.9590 vs. 0.9478 and 0.9564 vs. 0.9456, compared with MoCo v2, p0.05), but also achieves slightly better results than ImageNet. One possible explanation for these results could be that the cancer classification task is highly dependent on the properties of nuclei, which our pretraining method can help recognize better.

4.4.3 Gland Segmentation

We perform gland segmentation tasks on the Crag dataset. It is noteworthy that although our pretraining method is nucleus-aware, we also provide stronger initialization for gland segmentation than supervised counterparts (ImageNet, p0.05 and Mormont, p0.05, Obj Dice). We also achieve superior results than other self-supervised methods, although the improvement is not significant (0.8649 vs. 0.8616, p=0.28, compared with Ciga). The improvements can be explained by our special design of the nuclear distribution in our mask images, which is similar to that of epithelial cells around the glands.

4.4.4 Multi-class Nuclear Detection and Segmentation

On the Dpath subset of Lizard, the proposed method outperforms other pretraining methods for mPQ+. However, no improvement in F1 score is observed compared to MoCo v2. We attribute the results to the trade-off between nuclear detection and subtyping, which is more obvious in the proposed approach because we do not classify the instances in our segmentation branch during pretraining. When experimenting on the CRAG subset of Lizard, we achieve the best results in terms of both mPQ+ and F1.

4.4.5 Multi-organ and Multi-class Nuclear Detection and Segmentation

We also experiment on a more diverse dataset to further evaluate the transferability of our pretrained model in case multi-organ and multi-class nuclei need to be segmented. The experiments performed on PanNuke indicate that our method outperforms DiRA (p0.05), which is the previous SOTA pretraining method. We can learn from the per-organ results in Table 5 that both the epithelial-like structures (e.g., those in breast, colon, and liver) and non-epithelial-like structures (e.g., those in bladder) benefit from our pretraining methods. The results suggest the robustness of our pretrained model that benefits various downstream situations.

4.4.6 Multiple Instance Learning

The multiple instance learning experiment is performed on the In-house-MIL dataset to evaluate whether our method benefits the special two-stage model for MIL. The original work [60] used ImageNet-pretrained backbone for sampling and aggregation. We also find the strategy effective compared with initializing the backbone randomly, which can hardly provide discriminative features after sampling. Moreover, it is observed that the generalized self-supervised pretraining methods fail to provide stronger initialization, whereas the pertaining methods for medical images benefit more compared with ImageNet. In such a special task, our method still demonstrates its superiority compared with ImageNet (p0.05), and outperforms other pretraining approaches.

4.5 Semi-supervised Learning Experiments

Let and denote the labeled samples and unlabeled samples from the same distribution (). Semi-supervised learning (SSL) aims to utilize to boost the model performance on . We conduct SSL following the protocol that self-supervised pretraining is performed on the whole training set and fine-tuning is later performed on parts of them[13]. We compare with other self-supervised pretraining approaches described in 4.4 with the same protocol. To evaluate the performance, we vary the annotation budget for the training set, keep the validation set fixed and report the performance on the test set.

4.5.1 Multi-class Nuclear Detection and Segmentation

We use the CRAG subset and the Dpath subset of Lizard for the nuclear object detection and segmentation experiment. We fine-tune Mask-RCNN with different annotation budgets (10%, 25%, and 50%) and present the results for each subset in Table 6. Our pretraining approach improves the performance on both subsets and all of the metrics over prior methods significantly (p0.05). On the CRAG subset, it is striking to find that we just need 5% of labeled data to match the best performance obtained from other methods that need 25% annotation budgets (0.2478 vs. 0.2522, mPQ+). Our method also performs well on the Dpath subset, where MoCo v2, Simsiam, and DiRA fail to provide effective pretrained models in most cases, whose results are even worse than random initialization. We attribute the decreased performance of these approaches to insufficient pretraining data. Specifically, these approaches may be overfitted to the pretext tasks in the Lizard training dataset, which contains only 194 images for pretraining. The proposed pretraining method, on the other hand, does not have this issue and yields more robust results.

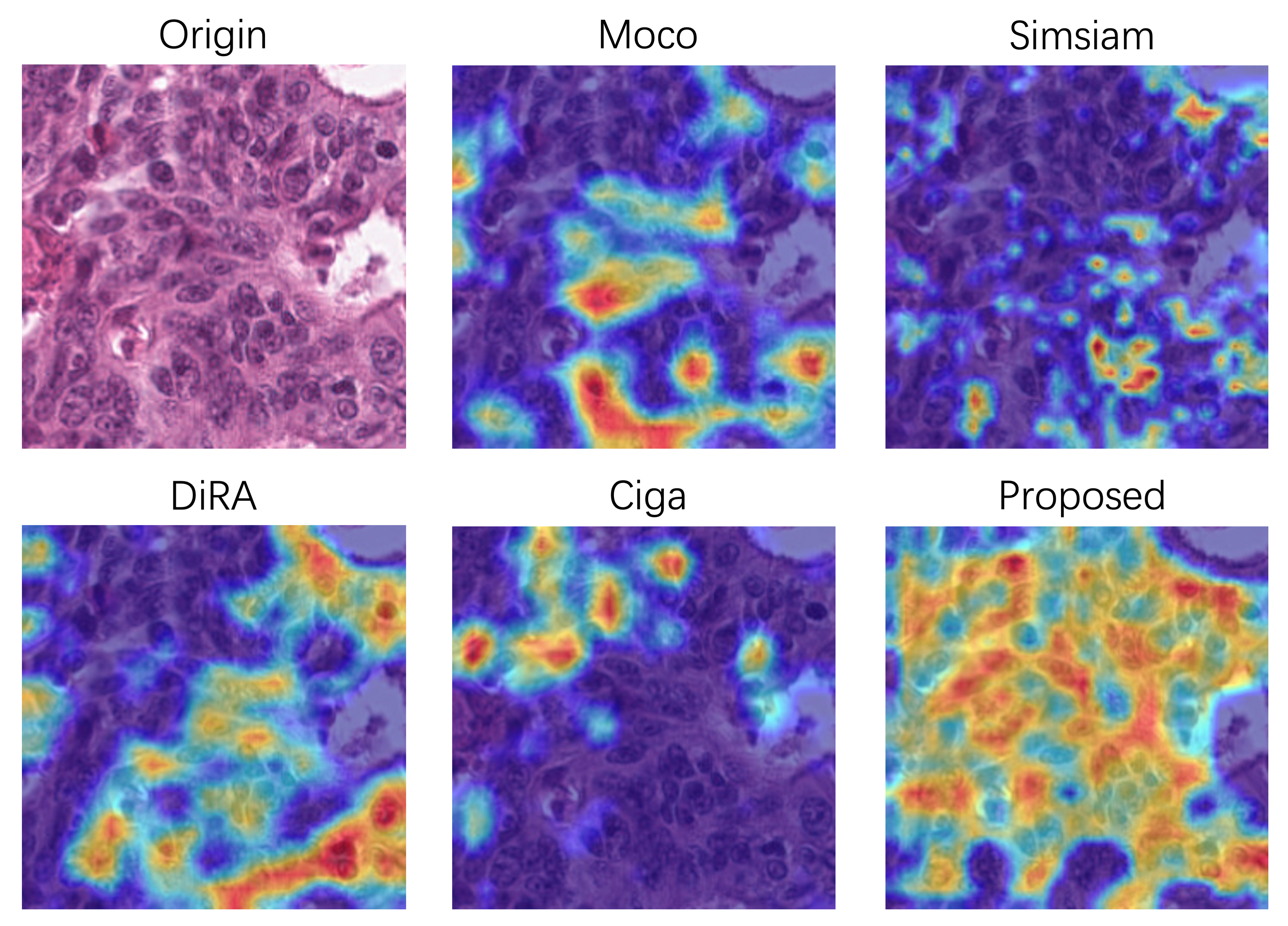

4.5.2 Cancer Classification

We pretrain on the whole training set and validation set of Kather without any annotations, and fine-tune the classifier with different annotation budgets (0.2%, 0.5%, and 1%). Similar to [13], all of the data regimes are extremely low due to the high baseline performance (0.9532 for Acc with 2% training data). Table 6 demonstrates that we outperform the baseline whose weights are initialized randomly (p0.05). It is noteworthy that our pretraining method focuses on fine-grained details where most of the nuclei are recognized. This is confirmed in Fig. 5 with Grad-Cam [62] visualization algorithm which generates a heatmap that highlights supportive pixels in the histopathology image for the classifier. It is also interesting to find that we not only focus on nucleus-level features, but also capture cell/tissue-level features, which may interpret the superior performance on gland segmentation in 4.4.3. Such multi-level features are beneficial for classification, where we outperform other discriminative self-supervised learning approaches (i.e., MoCo, Simsiam, Ciga) significantly (p0.05). However, the performance boost is not as significant as segmentation tasks compared with DiRA, which captures stronger global representation by incorporating discriminative self-supervised learning with generative and adversarial ones.

4.6 Ablation Study

A thorough ablation study is conducted to justify the benefit of our design by progressively applying each component for our transfer learning and semi-supervised learning protocols (25% training data). We modify the method[14] as the baseline, which also uses a CycleGAN-based framework to translate between mask images and histopathology images. It is noteworthy that their intention is to augment the training data for nuclear segmentation, which cannot be directly used for comparison. Therefore, we replace their mask generator with our ResNet-based generator and only implement their CycleGAN stage for a fair comparison.

| Configuration | Transfer Learning | Semi-supervised Learning | |||||||||||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Kumar | Kather | Lizard-Dpath | Lizard-CRAG | ||||||||||||||||||||||

| AJI | F1 | Acc | F1 | mPQ+ | F1 | mPQ+ | F1 | ||||||||||||||||||

| Mask Images | |||||||||||||||||||||||||

| Baseline[14] |

|

|

|

|

|

|

|

|

|||||||||||||||||

| +Distribution |

|

|

|

|

|

|

|

|

|||||||||||||||||

| +Stylization |

|

|

|

|

|

|

|

|

|||||||||||||||||

| Architecture and Training Strategy | |||||||||||||||||||||||||

| +Two-stage |

|

|

|

|

|

|

|

|

|||||||||||||||||

| +Co-modulation |

|

|

|

|

|

|

|

|

|||||||||||||||||

| +ADA |

|

|

|

|

|

|

|

|

|||||||||||||||||

| +ISG |

|

|

|

|

|

|

|

|

|||||||||||||||||

| Configuration | Transfer Learning | Semi-supervised Learning | |||||||||||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Kumar | Kather | Lizard-Dpath | Lizard-CRAG | ||||||||||||||||||||||

| AJI | F1 | Acc | F1 | mPQ+ | F1 | mPQ+ | F1 | ||||||||||||||||||

| w/o SG |

|

|

|

|

|

|

|

|

|||||||||||||||||

| w/ SSG |

|

|

|

|

|

|

|

|

|||||||||||||||||

| w/ ISG |

|

|

|

|

|

|

|

|

|||||||||||||||||

4.6.1 Mask Image Quality

We examine the effect of the quality of synthesized mask images. As illustrated in the corresponding section in Table 7, extra information brought by the nuclei distribution and stylization is introduced. We begin with a random distribution and binary nuclei mask design following [14], then progressively add the glandular structure and stylize the nuclei. Generally, we can observe the performance gain for both the transfer learning and semi-supervised learning with the introduction of distribution priors (p0.05). Stylization benefits most cases, but slightly degrades the segmentation performance on Kumar.

4.6.2 Architecture design

To analyze the effect of the network design, we investigate different architectures of our framework. As summarized in Table 7, we divide the design into three main parts: The co-modulation design for pathology generator , the adaptive discriminator augmentation strategy (ADA) for , and the segmentation guided strategy. For the first part, we find it effective in our transfer learning protocols. However, in our semi-supervised learning protocols where the data for pretraining is limited, the complex generator design is not beneficial due to the over-fitting problem. We alleviate the problem by augmenting the pretraining data with ADA, which effectively boosts the performance for semi-supervised learning, while also further improving the performance for transfer learning. Moreover, we discuss the benefits of instance segmentation guided strategy (ISG). The results in Table 7 and Table 8 show that the semantic segmentation guided strategy fails to offer useful information, supporting our analysis in 3.4. On the contrary, the instance segmentation guided strategy effectively improves the performance, especially for transfer learning experiments where the improvement of 1.68% is observed on Kumar and the improvement of 1.31 % is observed on Kather (both in terms of F1 score, p0.05).

4.6.3 Pretraining Schedule

Due to the inconsistent convergence rates of and , we design a two-stage pretraining strategy. Table 7 and Table 9 investigate the effect of the extra training stages. Overall, the additional training stage yields superior performance than pretraining for a single stage (p0.05 for transfer learning). We observe no significant performance improvement with more training stages.

| Configuration | Transfer Learning | Semi-supervised Learning | |||||||||||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Kumar | Kather | Lizard-Dpath | Lizard-CRAG | ||||||||||||||||||||||

| AJI | F1 | Acc | F1 | mPQ+ | F1 | mPQ+ | F1 | ||||||||||||||||||

| One-stage |

|

|

|

|

|

|

|

|

|||||||||||||||||

| Two-stage |

|

|

|

|

|

|

|

|

|||||||||||||||||

| Three-stage |

|

|

|

|

|

|

|

|

|||||||||||||||||

| Initialized | Transfer Learning | Semi-supervised Learning | |||||||||||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Kumar | Kather | Lizard-Dpath | Lizard-CRAG | ||||||||||||||||||||||

| AJI | F1 | Acc | F1 | mPQ+ | F1 | mPQ+ | F1 | ||||||||||||||||||

| ResNet |

|

|

|

|

|

|

|

|

|||||||||||||||||

| FPN |

|

|

- | - |

|

|

|

|

|||||||||||||||||

| All available |

|

|

- | - |

|

|

|

|

|||||||||||||||||

4.6.4 Network Initialization for Dense-prediction Tasks

We evaluate the effect of initializing the ResNet backbone, FPN, and all the structures we can initialize (including the FPN and the instance segmentation branch) in Table 10. Initializing FPN demonstrates better performance than merely initializing the encoder backbone. It is also inspiring to find that the performance can be further improved by further initializing the instance segmentation head on Kumar. However, when additional instance segmentation heads are further initialized, we observe a minor improvement on Kather but degraded performance on Lizard. This may be attributed to the different roles of the segmentation branch during pretraining and fine-tuning. In downstream tasks, when the segmentation branch further classifies the nuclei, initializing the heads of the segmenter might cause the network to ignore the differences among nuclei. Therefore, we only initialize the FPN for all the dense-prediction tasks.

5 Conclusion and Discussion

In this paper, a nucleus-aware self-supervised framework based on UNIT is introduced for histopathology images. Due to the importance of nuclear distribution and morphology for pathologic analysis, it is a requirement that a self-supervised learning framework includes these priors. In our method, the cycle consistency between histopathology images and pseudo mask images containing rich information about the nuclei is intended to impose the model’s awareness of nuclear instances. The whole framework is enhanced by the CoModGAN-ADA generator, which ensures the quality and variety of generated histopathology images. Moreover, the introduction of instance segmentation guided strategy improves the model’s ability to extract instance-level information.

The proposed self-supervised learning method is effective in extracting fine-grained features, which are more helpful in dense-prediction tasks than other pretraining methods. This is confirmed in 7 transfer learning experiments and 9 semi-supervised learning experiments, where our method significantly outperforms the SOTA methods in most cases.

We also investigate whether our method provides discriminative representation for classification tasks. Although the heatmap visualization in Fig. 5 and the semi-supervised (0.25%) results in Table 6 indicates that our method might get biased to nucleus-level features, which could be not as effective as global ones obtained by discriminative pretraining, the transfer learning results show that we can adapt to the classification task with high-quality. Moreover, we also perform the linear evaluation protocol [63] on Kather. As shown in Table 11, we observe that our features are more linear separable than randomly initialized ones (p0.05). However, the classification tasks on Kather described in the paper are only conducted with the TUM and NORM classes. In order to make a more comprehensive analysis of the pretraining methods, we perform an additional multi-class classification task on Kather. It is noteworthy that we exclude adipose, background, debris, and mucus here, because these classes are nuclei-free, which can hardly benefit from our nucleus-aware pretraining method. We report the performance on the remaining 5 classes of Kather in Table 12. The results show that our method is still more effective for differentiating various classes besides TUM and NORM, indicated by the highest Acc over other pretraining methods, although the macro F1 score is slightly lower than the previous SOTA method. In the future, we will focus on extending our method with more discriminative features which supplement the local ones for its border usage in classification tasks.

| Kather | ||

|---|---|---|

| Acc | F1 | |

| Random | 0.5936±0.0158 | 0.4966±0.0147 |

| Proposed | 0.7700±0.0146 | 0.7589±0.0146 |

| Method | Acc | F1 |

|---|---|---|

| Baseline | 0.6827±0.0134 | 0.6666±0.0098 |

| ImageNet | 0.8266±0.0041 | 0.7603±0.0021 |

| MoCo v2 | 0.8489±0.0089 | 0.8255±0.0042 |

| Simsiam | 0.7666±0.0030 | 0.7376±0.0029 |

| Mormont | 0.8479±0.0102 | 0.8235±0.0057 |

| DiRA | 0.8412±0.0057 | 0.8270±0.0021 |

| Ciga | 0.8390±0.0083 | 0.8225±0.0063 |

| Proposed | 0.8511±0.0073 | 0.8234±0.0024 |

It is also inspiring to find that the proposed pretraining method is robust to various situations. First of all, our method is robust to different tissues for pretraining. We validate this by pretraining on different tissue types and different tumor types. We collect equal amounts of histopathology images from breast tissues other than colorectal histopathology images for pretraining. As shown in Table 13, we observe insignificant differences between the models pretrained on colon or breast (p0.42, AJI). We also pretrain on both the colon and breast tissues to assess the potential benefits of leveraging more diverse pretraining sources for our method. In this case, we double the training iterations, ensuring that the number of times each image was presented to the network remains unchanged. It is inspiring to find that the performance on Kumar (AJI) and Kather is slightly improved with the incorporation of such diverse datasets. The observed improvement can be explained in Table 14, which shows that a more diverse pretraining source can mitigate biases towards specific organs. For example, when only using colon tissues for pretraining, the performance on the stomach, liver, and breast slightly decreased compared to pretraining on breast tissues alone. However, this bias was alleviated when employing the combined dataset, resulting in performance on these organs that match those achieved through pretraining on breast tissues. Similar results can also be found on the Pannuke dataset reported in Table 15, where the decreased metrics on adrenal, breast, cervix, liver, pancreatic, and uterus recover after adding breast tissues for pretraining. Therefore, more stable results may be achieved when we collect more diverse datasets for pretraining.

| Organ | Kumar | Kather | ||

|---|---|---|---|---|

| AJI | F1 | Acc | F1 | |

| Breast | 0.5928±0.0033 | 0.7547±0.0051 | 0.9554±0.0039 | 0.9526±0.0052 |

| Colon | 0.5932±0.0025 | 0.7591±0.0044 | 0.9588±0.0028 | 0.9560±0.0041 |

| Breast+Colon | 0.5961±0.0052 | 0.7582±0.0061 | 0.9611±0.0049 | 0.9584±0.0062 |

| Organ | Breast | Colon | Breast+Colon | ||||||

|---|---|---|---|---|---|---|---|---|---|

| Stomach | 0.6275 | 0.6206 | 0.6278 | ||||||

| Colon | 0.4895 | 0.5008 | 0.5006 | ||||||

| Bladder | 0.6270 | 0.6404 | 0.6338 | ||||||

| Prostate | 0.6352 | 0.6302 | 0.6407 | ||||||

| Liver | 0.5495 | 0.5323 | 0.5451 | ||||||

| Kidney | 0.6056 | 0.6226 | 0.6223 | ||||||

| Breast | 0.6157 | 0.6056 | 0.6087 | ||||||

| Overall |

|

|

|

| Organ | Breast | Colon | Breast+Colon | ||||||

|---|---|---|---|---|---|---|---|---|---|

| Adrenal | 0.4061 | 0.3807 | 0.3978 | ||||||

| Bile Duct | 0.3673 | 0.3613 | 0.3813 | ||||||

| Bladder | 0.3464 | 0.3566 | 0.3470 | ||||||

| Breast | 0.4203 | 0.4190 | 0.4201 | ||||||

| Cervix | 0.3711 | 0.3344 | 0.3567 | ||||||

| Colon | 0.3704 | 0.3932 | 0.3836 | ||||||

| Esophagus | 0.3998 | 0.3977 | 0.4087 | ||||||

| H&N* | 0.3698 | 0.3548 | 0.3866 | ||||||

| Kidney | 0.2377 | 0.2335 | 0.2412 | ||||||

| Liver | 0.4296 | 0.4242 | 0.4279 | ||||||

| Lung | 0.2662 | 0.2886 | 0.2790 | ||||||

| Ovarian | 0.4080 | 0.4278 | 0.4032 | ||||||

| Pancreatic | 0.2840 | 0.2530 | 0.2576 | ||||||

| Prostate | 0.3108 | 0.3039 | 0.3336 | ||||||

| Skin | 0.2819 | 0.3070 | 0.3284 | ||||||

| Stomach | 0.3523 | 0.3506 | 0.3538 | ||||||

| Testis | 0.3692 | 0.4051 | 0.3811 | ||||||

| Thyroid | 0.3374 | 0.3312 | 0.3203 | ||||||

| Uterus | 0.2677 | 0.2578 | 0.2671 | ||||||

| Overall |

|

|

|

Furthermore, we collect histopathology images scanned from poorly differentiated colorectal tumors, which have less obvious glandular patterns. It is expected that the glandular distribution of nuclei in the pseudo mask performs worst than the random distribution in such a case. However, as reported in Table 16, we observe comparable results of our method and the baseline (p=0.35), which indicate that our framework can robustly handle the distribution gap between the histopathology images and the mask images. Secondly, our method is robust to various downstream tasks, which can be seen in Table 2 where our method is effective in classification, instance segmentation, semantic segmentation, and multiple instance learning tasks. Thirdly, our method benefits various architectures for downstream tasks. We experiment on Panoptic FPN, Mask-RCNN, Hover-Net, UperNet, and C2C for various tasks, and report new SOTA results on Kumar using Hover-Net. Furthermore, our method is robust to the selection of hyperparameters. We conducted an additional ablation study to examine the sensitivity of our method to the selection of key hyperparameters. Specifically, we evaluated the impact of changing a weight-balancing hyperparameter while keeping the other hyperparameters fixed. The results are presented in Fig. 6, which reveal that the performance remains relatively stable across various weight-balancing hyperparameter settings within a specific range.

| Mask Image Design | Kumar | Kather | ||

|---|---|---|---|---|

| AJI | F1 | Acc | F1 | |

| Baseline | 0.5929±0.0054 | 0.7546±0.0067 | 0.9539±0.0048 | 0.9514±0.0054 |

| +Distribution | 0.5920±0.0042 | 0.7537±0.0050 | 0.9501±0.0046 | 0.9485±0.0052 |

Although the proposed self-supervised pretraining approach has demonstrated promising results for various tasks, some extensions remain to be made. First of all, the mask images are fixed and limited by the hand-crafted design, where we randomly redistribute parts of the nuclei to match the glandular structures. However, a better solution is to make the nuclear distribution vary with different datasets for pertaining, so that the aligned data distribution and the possibility of adopting more diverse datasets for pretraining may lead to better performance. Strategies such as pseudo-labeling or using pretrained nuclei segmenters may be useful, which will be investigated in the future. Moreover, the proposed method only focuses on the extraction of local features, which is orthogonal with pretraining approaches that obtain global representation. It is desirable to research for approaches to embed these methods. We hope that our method can serve as a baseline for nucleus-aware self-supervised pretraining methods.

References

- [1] M. N. Gurcan, L. E. Boucheron, A. Can, A. Madabhushi, N. M. Rajpoot, and B. Yener, “Histopathological image analysis: A review,” IEEE Rev. Biomed. Eng, vol. 2, pp. 147–171, 2009.

- [2] J. A. Ludwig and J. N. Weinstein, “Biomarkers in cancer staging, prognosis and treatment selection,” Nat. Rev. Cancer, vol. 5, no. 11, pp. 845–856, 2005.

- [3] Z. Yang, L. Ran, S. Zhang, Y. Xia, and Y. Zhang, “Ems-net: Ensemble of multiscale convolutional neural networks for classification of breast cancer histology images,” Neurocomputing, vol. 366, pp. 46–53, 2019.

- [4] S. Graham et al., “Hover-net: Simultaneous segmentation and classification of nuclei in multi-tissue histology images,” Med. Image Anal., vol. 58, p. 101563, 2019.

- [5] K. Sirinukunwattana et al., “Image-based consensus molecular subtype (imcms) classification of colorectal cancer using deep learning,” Gut, vol. 70, no. 3, pp. 544–554, 2021.

- [6] T. Chen, S. Kornblith, M. Norouzi, and G. Hinton, “A simple framework for contrastive learning of visual representations,” in Int. Conf. Learn. Represent. PMLR, 2020, pp. 1597–1607.

- [7] X. Chen, H. Fan, R. Girshick, and K. He, “Improved baselines with momentum contrastive learning,” arXiv:2003.04297, 2020.

- [8] X. Chen and K. He, “Exploring simple siamese representation learning,” in Proc. IEEE/CVF Conf. Comput. Vis. Pattern Recognit., 2021, pp. 15 750–15 758.

- [9] P. Vincent, H. Larochelle, Y. Bengio, and P.-A. Manzagol, “Extracting and composing robust features with denoising autoencoders,” in Int. Conf. Mach. Learn., 2008, p. 1096–1103.

- [10] K. He, X. Chen, S. Xie, Y. Li, P. Dollár, and R. Girshick, “Masked autoencoders are scalable vision learners,” in Proc. IEEE/CVF Conf. Comput. Vis. Pattern Recognit., 2022, pp. 16 000–16 009.

- [11] Z. Xie et al., “Simmim: A simple framework for masked image modeling,” in Proc. IEEE/CVF Conf. Comput. Vis. Pattern Recognit., 2022, pp. 9653–9663.

- [12] J. Zhou et al., “ibot: Image bert pre-training with online tokenizer,” in Int. Conf. Learn. Represent., 2022.

- [13] N. A. Koohbanani, B. Unnikrishnan, S. A. Khurram, P. Krishnaswamy, and N. Rajpoot, “Self-path: Self-supervision for classification of pathology images with limited annotations,” IEEE Trans. Med. Imag., vol. 40, no. 10, pp. 2845–2856, 2021.

- [14] F. Mahmood et al., “Deep adversarial training for multi-organ nuclei segmentation in histopathology images,” IEEE Trans. Med. Imag., vol. 39, no. 11, pp. 3257–3267, 2020.

- [15] D. Liu et al., “Unsupervised instance segmentation in microscopy images via panoptic domain adaptation and task re-weighting,” in Proc. IEEE/CVF Conf. Comput. Vis. Pattern Recognit., 2020.

- [16] X. Gong, S. Chen, B. Zhang, and D. Doermann, “Style consistent image generation for nuclei instance segmentation,” in Proc. IEEE Winter Conf. Appl. Comput. Vis., 2021, pp. 3994–4003.

- [17] S. Graham et al., “Conic: Colon nuclei identification and counting challenge 2022,” arXiv:2111.14485, 2021.

- [18] V. Dumoulin et al., “Adversarially learned inference,” in Int. Conf. Learn. Represent., 2017.

- [19] J. Donahue, P. Krähenbühl, and T. Darrell, “Adversarial feature learning,” arXiv:1605.09782, 2016.

- [20] J. Donahue and K. Simonyan, “Large scale adversarial representation learning,” in Adv. Neural Inf. Process. Syst., vol. 32. Curran Associates, Inc., 2019.

- [21] X. Zhu, X. Zhang, X.-Y. Zhang, Z. Xue, and L. Wang, “A novel framework for semantic segmentation with generative adversarial network,” J. Vis. Commun. Image R., vol. 58, pp. 532–543, 2019.

- [22] J.-Y. Zhu, T. Park, P. Isola, and A. A. Efros, “Unpaired image-to-image translation using cycle-consistent adversarial networks,” in Proc. IEEE Int. Conf. Comput. Vis., 2017.

- [23] S. Zhao et al., “Large scale image completion via co-modulated generative adversarial networks,” in Int. Conf. Learn. Represent., 2021.

- [24] D. Bazazian, A. Calway, and D. Damen, “Dual-domain image synthesis using segmentation-guided gan,” in Proc. IEEE/CVF Conf. Comput. Vis. Pattern Recognit. Workshops, 2022, pp. 507–516.

- [25] A. Aakerberg, A. S. Johansen, K. Nasrollahi, and T. B. Moeslund, “Semantic segmentation guided real-world super-resolution,” in Proc. IEEE Winter Conf. Appl. Comput. Vis. Workshops, 2022, pp. 449–458.

- [26] P. Ardino, Y. Liu, E. Ricci, B. Lepri, and M. de Nadai, “Semantic-guided inpainting network for complex urban scenes manipulation,” in Int. Conf. on Pattern Recognit., 2021, pp. 9280–9287.

- [27] P. Yang et al., “Cs-co: A hybrid self-supervised visual representation learning method for h&e-stained histopathological images,” Med. Image Anal., vol. 81, p. 102539, 2022.

- [28] Y. Luo, Z. Chen, and X. Gao, “Self-distillation augmented masked autoencoders for histopathological image classification,” arXiv:2203.16983, 2022.

- [29] X. Liu et al., “Self-supervised learning: Generative or contrastive,” IEEE Trans. Knowl. Data Eng., pp. 1–1, 2021.

- [30] X. Chen et al., “Context autoencoder for self-supervised representation learning,” in Int. Conf. Learn. Represent, 2023.

- [31] I. Goodfellow et al., “Generative adversarial nets,” in Adv. Neural Inf. Process. Syst., vol. 27, 2014.

- [32] A. Brock, J. Donahue, and K. Simonyan, “Large scale GAN training for high fidelity natural image synthesis,” in Int. Conf. Learn. Represent., 2019.

- [33] T. Karras, S. Laine, and T. Aila, “A style-based generator architecture for generative adversarial networks,” in Proc. IEEE/CVF Conf. Comput. Vis. Pattern Recognit., 2019.

- [34] O. Kupyn, T. Martyniuk, J. Wu, and Z. Wang, “Deblurgan-v2: Deblurring (orders-of-magnitude) faster and better,” in Proc. IEEE/CVF Conf. Comput. Vis. Pattern Recognit., 2019.

- [35] T. Karras, S. Laine, M. Aittala, J. Hellsten, J. Lehtinen, and T. Aila, “Analyzing and improving the image quality of stylegan,” in Proc. IEEE/CVF Conf. Comput. Vis. Pattern Recognit., 2020.

- [36] F. Haghighi, M. R. H. Taher, M. B. Gotway, and J. Liang, “Dira: Discriminative, restorative, and adversarial learning for self-supervised medical image analysis,” in Proc. IEEE/CVF Conf. Comput. Vis. Pattern Recognit., 2022, pp. 20 824–20 834.

- [37] X. Tao, Y. Li, W. Zhou, K. Ma, and Y. Zheng, “Revisiting rubik’s cube: Self-supervised learning with volume-wise transformation for 3d medical image segmentation,” in Med. Image Comput. Comput. Assist. Interv, 2020, pp. 238–248.

- [38] A. K. Mondal, A. Agarwal, J. Dolz, and C. Desrosiers, “Revisiting cyclegan for semi-supervised segmentation,” arXiv:1908.11569, 2019.

- [39] J. Hoffman et al., “CyCADA: Cycle-consistent adversarial domain adaptation,” in Int. Conf. Mach. Learn., 2018, pp. 1989–1998.

- [40] X. Mao, Q. Li, H. Xie, R. Y. Lau, and Z. Wang, “Multi-class generative adversarial networks with the l2 loss function,” arXiv:1611.04076, 2016.

- [41] K. Sirinukunwattana, D. R. J. Snead, and N. M. Rajpoot, “A stochastic polygons model for glandular structures in colon histology images,” IEEE Trans. Med. Imag., vol. 34, no. 11, pp. 2366–2378, 2015.

- [42] T. Karras, M. Aittala, J. Hellsten, S. Laine, J. Lehtinen, and T. Aila, “Training generative adversarial networks with limited data,” in Adv. Neural Inf. Process. Syst., 2020, pp. 12 104–12 114.

- [43] K. He, G. Gkioxari, P. Dollar, and R. Girshick, “Mask r-cnn,” in Proc. IEEE Int. Conf. Comput. Vis., 2017.

- [44] N. Kumar, R. Verma, S. Sharma, S. Bhargava, A. Vahadane, and A. Sethi, “A dataset and a technique for generalized nuclear segmentation for computational pathology,” IEEE Trans. Med. Imag., vol. 36, no. 7, pp. 1550–1560, 2017.

- [45] J. N. Kather et al., “Predicting survival from colorectal cancer histology slides using deep learning: A retrospective multicenter study,” PLoS medicine, vol. 16, no. 1, p. e1002730, 2019.

- [46] S. Graham et al., “Lizard: A large-scale dataset for colonic nuclear instance segmentation and classification,” in Proc. IEEE/CVF Conf. Comput. Vis. Pattern Recognit. Workshops, 2021, pp. 684–693.

- [47] S. Graham et al., “Mild-net: Minimal information loss dilated network for gland instance segmentation in colon histology images,” Med. Image Anal., vol. 52, pp. 199–211, 2019.

- [48] J. Gamper, N. Alemi Koohbanani, K. Benet, A. Khuram, and N. Rajpoot, “Pannuke: An open pan-cancer histology dataset for nuclei instance segmentation and classification,” in Eur. Congr. Digit. Pathol., 2019, pp. 11–19.

- [49] K. Sirinukunwattana et al., “Gland segmentation in colon histology images: The glas challenge contest,” Med. Image Anal., vol. 35, pp. 489–502, 2017.

- [50] K. He, R. Girshick, and P. Dollar, “Rethinking imagenet pre-training,” in Proc. IEEE/CVF Conf. Comput. Vis. Pattern Recognit., 2019.

- [51] R. Mormont, P. Geurts, and R. Marée, “Multi-task pre-training of deep neural networks for digital pathology,” IEEE Journal of Biomedical and Health Informatics, vol. 25, no. 2, pp. 412–421, 2021.

- [52] O. Ciga, T. Xu, and A. L. Martel, “Self supervised contrastive learning for digital histopathology,” Mach. Learn. Appl., vol. 7, p. 100198, 2022.

- [53] O. Ronneberger, P. Fischer, and T. Brox, “U-net: Convolutional networks for biomedical image segmentation,” in Med. Image Comput. Comput. Assist. Interv, 2015, pp. 234–241.

- [54] S. E. A. Raza et al., “Micro-net: A unified model for segmentation of various objects in microscopy images,” Med. Image Anal., vol. 52, pp. 160–173, 2019.

- [55] A. Kirillov, R. Girshick, K. He, and P. Dollar, “Panoptic feature pyramid networks,” in Proc. IEEE/CVF Conf. Comput. Vis. Pattern Recognit., 2019.

- [56] Y. Zhou et al., “Cia-net: Robust nuclei instance segmentation with contour-aware information aggregation,” in Inf. Process Med. Imag., 2019, pp. 682–693.

- [57] S. Graham, D. Epstein, and N. Rajpoot, “Dense steerable filter cnns for exploiting rotational symmetry in histology images,” IEEE Trans. Med. Imag., vol. 39, no. 12, pp. 4124–4136, 2020.