NSSI-Net: Multi-Concept Generative Adversarial Network for Non-Suicidal Self-Injury Detection Using High-Dimensional EEG Signals in a Semi-Supervised Learning Framework

Abstract

Non-suicidal self-injury (NSSI) is a serious threat to the physical and mental health of adolescents, significantly increasing the risk of suicide and attracting widespread public concern. Electroencephalography (EEG), as an objective tool for identifying brain disorders, holds great promise. However, extracting meaningful and reliable features from high-dimensional EEG data, especially by integrating spatiotemporal brain dynamics into informative representations, remains a major challenge. In this study, we introduce a semi-supervised adversarial network, NSSI-Net, to model EEG features related to NSSI. NSSI-Net consists of two key modules: a spatial-temporal feature extraction module and a multi-concept discriminator. In the spatial-temporal feature extraction module, an integrated two-dimensional convolutional neural network (2D-CNN) and a bidirectional gated recurrent unit (BiGRU) capture both spatial and temporal dynamics in EEG data. In the multi-concept discriminator, signal, gender, domain, and disease levels are explored to extract meaningful EEG features, accounting for individual, demographic, and disease variations across a diverse population. On self-collected NSSI data (n=114), the model’s effectiveness is demonstrated, with an accuracy improvement of 7.44 percentage points over the best-performing of the compared machine learning and deep learning methods. This study advances the understanding and early detection of NSSI in adolescents with depression, which may support timely intervention. The source code is available at https://github.com/Vesan-yws/NSSINet.

Index Terms:

Electroencephalography, NSSI, Decoding Model, Multi-Concept Discriminator, Domain Adaptation

I Introduction

Major depressive disorder (MDD) is a widespread chronic mental illness that manifests across all age groups [1]. Adolescents, in particular, exhibit a notably high prevalence of MDD, with approximately 13% of this population affected [2]. Among those diagnosed with depression, nearly 50% are reported to engage in non-suicidal self-injury (NSSI) behaviors [3]. NSSI involves intentionally harming oneself, such as by cutting or burning, without the intent to die. It has drawn attention due to its connection to long-term psychological problems and an increased risk of suicide [4, 5]. The rising occurrence of NSSI among adolescents with depression [6, 7] highlights an urgent need for effective tools and methods to understand, diagnose, and intervene in these behaviors. Early detection of NSSI behavior is vital for effective treatment and for preventing further severe harm. An automated detection approach could not only aid in the management of underlying psychological issues but also reduce the risk of the individual engaging in more dangerous or life-threatening actions in the future.

Electroencephalography (EEG) provides a direct method for reflecting neuronal dynamics originating in the central nervous system [8], making it highly valuable for detecting abnormal brain activity. EEG has been widely used across various disease fields due to its non-invasive nature, high temporal resolution, and sensitivity to brain dynamics. For example, it plays a crucial role in epilepsy research [9], Alzheimer’s disease (AD) studies [10], depression diagnosis and monitoring [11], autism spectrum disorder (ASD) analysis [12], and schizophrenia research [13]. These applications underscore the versatility and importance of EEG in understanding and managing neurological and psychiatric conditions. In addition, the extraction of spatiotemporal features from EEG signals is crucial for revealing brain activity patterns related to diseases. For example, in seizure detection, EEGWaveNet uses a multiscale convolutional neural network (CNN) to extract spatiotemporal features [14], leading to significant improvements in detection accuracy. Liu et al. proposed a three-dimensional convolutional attention neural network (3DCANN) for EEG emotion recognition, integrating spatiotemporal feature extraction with an EEG channel attention module to capture dynamic inter-channel relationships over time [15]. Zhang et al. introduced two deep learning-based frameworks that use spatiotemporal preserving representations of raw EEG streams to accurately identify human intentions. These frameworks combine convolutional and recurrent neural networks, exploring spatial and temporal information in either a cascade or a parallel configuration [16]. Gao et al. developed an EEG-based spatial-temporal convolutional neural network (ESTCNN) designed to detect driver fatigue by leveraging the spatial-temporal structure of multichannel EEG signals [17]. 
These studies highlight the effectiveness of spatiotemporal feature extraction in enhancing both the interpretability and performance of EEG-based disease studies.

However, in existing EEG-based disease studies, the inherent individual variability in EEG signals and the heavy reliance on labeling information present considerable challenges. This individual variability, which can arise from differences in brain anatomy, age, gender, and even the state of disease, often leads to inconsistencies in data interpretation and can significantly impact the generalization of intelligent models. The dependence on accurate and extensive labeling requires large, well-annotated datasets that are often time-consuming and costly to obtain. Moreover, labeling is subject to human error and bias, which can introduce noise into the training process, leading to models that may perform well on specific datasets but fail to generalize effectively to new, unseen data. To address the challenges present in existing EEG-based disease studies and to develop a robust, clinically applicable method for NSSI detection across diverse patient populations, we propose a novel multi-concept Generative Adversarial Network (GAN) framework, named NSSI-Net. This framework is developed using a semi-supervised learning approach, allowing it to effectively handle the variability in EEG signals and reduce dependency on extensive labeled data. The main contributions of this study are summarized as follows.

• We propose a novel framework, NSSI-Net, which integrates a multi-concept discriminator architecture. This architecture incorporates discriminators at the signal, gender, domain, and disease levels, accounting for variations in individual and demographic characteristics and enabling efficient extraction of spatiotemporal EEG features across a diverse population.

• NSSI-Net operates within a semi-supervised learning paradigm, reducing the model’s reliance on manually labeled data. This not only mitigates the challenges associated with obtaining large, fully labeled datasets but also enhances the model’s generalizability across different patient populations.

• The proposed NSSI-Net is validated on a self-collected EEG dataset consisting of recordings from 114 adolescents diagnosed with depression. Experimental results demonstrate the effectiveness of NSSI-Net in detecting NSSI behaviors and suggest its potential for clinical application.

II RELATED WORK

II-A Neural Mechanisms in Depressed Adolescents with NSSI

NSSI in adolescents with MDD has garnered significant research interest due to its rising prevalence and associated long-term psychological consequences. The neural underpinnings of NSSI in depressed youth are complex, involving both structural and functional alterations in brain regions critical for emotion regulation, impulse control, and self-referential processing. Recent neuroimaging studies, using techniques including EEG, magnetic resonance imaging (MRI), functional MRI (fMRI), and diffusion MRI (dMRI), have shed light on these neural mechanisms. Iznak et al. [18] used EEG to identify notable differences in brain activity between depressive female adolescents with suicidal and non-suicidal auto-aggressive behaviors, particularly highlighting disruptions in regions involved in emotional regulation and impulse control. Auerbach et al. [19] reviewed MRI studies on NSSI in youth and identified significant brain alterations, including reduced volume in the anterior cingulate cortex and other regions linked to emotion regulation. Functional MRI studies also showed altered frontolimbic connectivity and blunted striatal activation in adolescents with NSSI. Melinda et al. [20] used diffusion MRI to identify significant white matter microstructural deficits in adolescents and young adults with NSSI compared to healthy controls. These deficits may contribute to an increased vulnerability to maladaptive coping strategies like NSSI, with lower white matter integrity linked to longer NSSI duration and higher impulsivity. Collectively, these studies suggest that NSSI behaviors in depressed adolescents are driven by intricate neural mechanisms that are closely related to abnormal neural patterns. An efficient and reliable NSSI detection method based on neural signals could therefore help reduce the occurrence and recurrence of NSSI and prevent escalation to more severe outcomes, such as suicidal behavior.

II-B EEG Studies Exploring NSSI in Depressed Adolescents

In recent years, researchers have increasingly used EEG to explore the neural underpinnings of NSSI. In 2021, a study highlighted significant differences in EEG frequency and spatial components between depressive female adolescents who had attempted suicide and those who engaged in NSSI, demonstrating the potential of EEG as a diagnostic tool to distinguish between these two groups [21]. Subsequent research revealed notable alterations in emotion-related EEG components and brain lateralization in response to negative emotional stimuli among adolescents with NSSI, suggesting that these neural changes could be crucial for understanding the emotional dysregulation associated with NSSI [22]. Further investigations have explored the effects of therapeutic interventions on EEG patterns in NSSI patients. For example, Zhao et al. observed significant changes in EEG microstate patterns before and after repetitive transcranial magnetic stimulation (rTMS) treatment, indicating that EEG can not only reflect the abnormal neural activity related to NSSI but also monitor the impact of therapeutic interventions [23]. These findings underscore the importance of EEG as a non-invasive, real-time measure that can provide insights into the neural dynamics associated with NSSI, potentially leading to more targeted and effective treatments.

On the other hand, machine learning and deep learning approaches have also been investigated for analyzing EEG signals to predict NSSI behaviors. For example, Marti et al. used a classification tree model that leverages EEG features to identify young adults at risk for NSSI [24]. Kim et al. developed a graph theory-based model using resting-state EEG to analyze cortical functional networks and classify patients with MDD into those who have attempted suicide and those with suicidal ideation [25]. Kentopp et al. developed an adaptive transfer learning-based EEG classification model specifically designed to classify EEG signals of adolescents with and without a history of NSSI [26]. However, these approaches often depend heavily on prior knowledge for model parameter adjustment and require comprehensive label information for fully supervised learning. This reliance poses challenges in clinical settings, where data may be sparse, labels may be incomplete, and manual intervention in model tuning is impractical. These existing methods may therefore fall short of meeting the complex demands of clinical practice, which calls for a more robust and adaptable end-to-end semi-supervised learning model capable of handling the complexities of clinical data.

II-C Deep Learning for Feature Extraction from High-Dimensional EEG Data

Efficient feature extraction from high-dimensional EEG signals is essential for unraveling the complexities of brain function and advancing accurate computational modeling. Traditional machine learning methods often struggle to capture the complex and dynamic nature of EEG signals [24]. Deep learning methods can automatically identify and characterize informative spatiotemporal features within EEG data, which not only enhances the precision of feature extraction but also allows dynamic adaptation to the non-linear and non-stationary characteristics of brain activity [27, 28, 29]. For example, a three-dimensional convolutional neural network (3D-CNN) was introduced that processes EEG data as volumetric sequences and enhances feature representation [30]. Considering the temporal dynamics in EEG series, a hierarchical spatial-temporal neural network was proposed to analyze the transition from regional to global brain activity, demonstrating the efficacy of deep learning in capturing detailed spatiotemporal information [31]. Beyond supervised learning, Liang et al. introduced a deep unsupervised autoencoder (AE) model, EEGFuseNet, designed to automatically characterize the interplay between spatial and temporal information in EEG data [32]. The latent features extracted by EEGFuseNet showed great potential for generalization across various applications. These works underscore the transformative potential of deep learning in EEG research, providing a more comprehensive, accurate, and reliable understanding of brain activity.

II-D Semi-Supervised Learning in EEG Modeling

Current deep learning approaches in EEG analysis predominantly depend on fully labeled datasets. However, in clinical environments, acquiring such well-annotated data is both challenging and expensive. Semi-supervised learning frameworks offer a promising solution by making use of both labeled and unlabeled data. Preliminary investigations into semi-supervised learning have shown encouraging results in various EEG studies. For example, a semi-supervised clustering method was proposed to group EEG signals based on their underlying patterns, even with minimal labeled data [33]. Further, Zhang et al. introduced pairwise alignment of representations in semi-supervised EEG learning (PARSE), which enhances the consistency of feature representations across different data domains by aligning similar features from labeled and unlabeled data [34]. Recently, Ye et al. proposed a semi-supervised dual-stream self-attentive adversarial graph contrastive learning model that leverages limited labeled data and large amounts of unlabeled data for cross-subject EEG-based emotion recognition [35]. These methods use semi-supervised techniques to learn from a reduced amount of labeled data while achieving accuracy comparable to fully supervised models. This indicates the potential of semi-supervised learning for advancing EEG-based diagnostics and interventions, especially in resource-constrained clinical settings.

III PARTICIPANTS AND DATA

III-A Participants

We recruited a total of 114 adolescent patients, aged 13 to 18 years, from Shenzhen Kangning Hospital, all of whom had at least five years of education. These patients were diagnosed with MDD based on DSM-5 criteria and were further screened using the Mini-International Neuropsychiatric Interview (MINI) to exclude other psychiatric disorders. The study received approval from the Research Ethics Committee of Shenzhen Kangning Hospital (Approval No: 2020-K021-04-1). Informed consent was obtained from all participants and their guardians prior to enrollment.

In this study, NSSI behavior was defined based on the following criteria: (1) intentional self-inflicted injury to body tissue without suicidal intent within the past year; (2) the behavior served at least one of the following purposes: alleviating or relieving negative emotions, resolving interpersonal problems, or achieving a desired emotional state; (3) the self-injurious behavior was linked to at least one of the following: it occurred after interpersonal rejection or in response to negative emotions, there was an inability to resist the urge to self-harm, or thoughts of self-harm were present; (4) the behavior involved socially unacceptable actions, such as repeated cutting or picking at wounds. According to the NSSI criteria, the recruited patients were divided into two groups: Depression with NSSI (DN+), consisting of 77 subjects (65 females), and Depression without NSSI (DN-), consisting of 37 subjects (18 females).

III-B Data Acquisition and Preprocessing

Each participant completed a total of 35 trials, with each trial involving the viewing of a natural image related to social pain. Each trial lasted 5 seconds. Throughout these trials, EEG data were simultaneously recorded using a 63-channel BrainAmp system, following the international 10-20 system. The data were captured at a sampling rate of 500 Hz.

A standard EEG preprocessing pipeline was applied to the raw EEG signals to remove artifacts, including eye movements, muscle activity, and environmental noise. After artifact correction, the signals were downsampled from 500 Hz to 384 Hz, reducing data dimensionality and making subsequent processing and analysis more efficient. To increase the sample size for modeling, each 5-second trial was segmented into five non-overlapping 1-second samples. As a result, the final data format can be represented as 114 participants × 35 trials × 5 seconds × 63 channels × 384 sampling points.
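The segmentation step and the resulting data size can be checked with a small sketch. It assumes non-overlapping 1-second windows, which is consistent with the 5-second dimension of the final data format, and uses zero-filled Python lists in place of real EEG; the helper name `segment_trial` is hypothetical. The resampling method is not stated in the text: since 384/500 reduces to 96/125, a polyphase resampler such as SciPy's `scipy.signal.resample_poly(x, 96, 125)` is one option, but that is an assumption.

```python
from typing import List

# Dataset dimensions stated in the text.
N_SUBJECTS = 114
N_TRIALS = 35
TRIAL_SECONDS = 5
N_CHANNELS = 63
FS = 384  # sampling rate after downsampling (Hz)

Trial = List[List[float]]  # channels x samples


def segment_trial(trial: Trial, fs: int = FS) -> List[Trial]:
    """Split one trial into non-overlapping 1-s windows (channels x fs each).

    Samples that do not fill a complete second are dropped (an assumption).
    """
    n_windows = len(trial[0]) // fs
    return [
        [channel[w * fs:(w + 1) * fs] for channel in trial]
        for w in range(n_windows)
    ]


# A dummy 5-s trial of zeros stands in for real preprocessed EEG.
dummy_trial = [[0.0] * (TRIAL_SECONDS * FS) for _ in range(N_CHANNELS)]
windows = segment_trial(dummy_trial)
print(len(windows), len(windows[0]), len(windows[0][0]))  # 5 63 384

# Total number of 1-s samples across the whole dataset.
total_samples = N_SUBJECTS * N_TRIALS * TRIAL_SECONDS
print(total_samples)  # 19950
```

Under these assumptions the full dataset yields 19,950 one-second samples before the subject-level sampling described in Section IV-A.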

IV METHODOLOGY

This paper presents a novel semi-supervised learning framework, NSSI-Net, which incorporates a multi-concept GAN to characterize informative EEG patterns related to NSSI. NSSI-Net tackles the clinical challenge of label scarcity in EEG data through two key modules, an encoder-decoder-based feature extraction module and a multi-concept discriminator module, as illustrated in Fig. 1.

Encoder-Decoder-based Feature Extraction Module. The encoder-decoder architecture is constructed using a CNN and a BiGRU to efficiently capture both spatial and temporal representations of NSSI-related brain activity. This module extracts meaningful patterns from the EEG signals by modeling their spatial dependencies and temporal dynamics.

Multi-Concept Discriminator Module. The extracted EEG features are further refined to enhance the robustness and generalizability of the representations. This refinement operates across four concepts: signal, gender, domain, and disease. The multi-concept discriminators are described in detail in Section IV-C.

IV-A Input Data

Based on the collected data, we categorize it into two domains: the source domain $\mathcal{D}_S$ and the target domain $\mathcal{D}_T$. Within the semi-supervised learning framework, the source domain $\mathcal{D}_S$ is further subdivided into a labeled subset, denoted as $\mathcal{D}_S^{l}$, and an unlabeled subset, denoted as $\mathcal{D}_S^{u}$. A division ratio $r$ determines that a fraction $r$ of the source data forms the labeled subset $\mathcal{D}_S^{l}$, while the remaining fraction $1 - r$ constitutes the unlabeled subset $\mathcal{D}_S^{u}$.
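A minimal sketch of a subject-level labeled/unlabeled split follows. The function `partition_source`, the seed, and rounding the labeled count down are illustrative assumptions rather than the authors' code; the ratio of 0.75 matches the setting reported in Section V-A, and the 54 hypothetical subject IDs correspond to one training split if 60 sampled participants are divided into ten folds.

```python
import random
from typing import List, Sequence, Tuple


def partition_source(
    subject_ids: Sequence[str], r: float, seed: int = 0
) -> Tuple[List[str], List[str]]:
    """Split source-domain subjects into labeled and unlabeled subsets.

    A fraction r of subjects (rounded down here, an assumption) keeps its
    labels; the rest are treated as unlabeled. Splitting by subject rather
    than by sample keeps every window of a participant in one subset.
    """
    if not 0.0 < r < 1.0:
        raise ValueError("r must lie strictly between 0 and 1")
    ids = list(subject_ids)
    random.Random(seed).shuffle(ids)
    n_labeled = int(len(ids) * r)
    return ids[:n_labeled], ids[n_labeled:]


# Hypothetical subject IDs for one training split of 54 participants.
source = [f"S{i:03d}" for i in range(54)]
labeled, unlabeled = partition_source(source, r=0.75)
print(len(labeled), len(unlabeled))  # 40 14
```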

Considering the data imbalance (NSSI: 77 patients, including 65 females and 12 males; non-NSSI: 37 patients, including 18 females and 19 males) and the gender distribution, a data sampling strategy is implemented across the labeled source, unlabeled source, and target data ($\mathcal{D}_S^{l}$, $\mathcal{D}_S^{u}$, and $\mathcal{D}_T$). Fig. 2 illustrates the sampling process for both the NSSI and non-NSSI groups. For the NSSI group (denoted as DN+), 18 females are randomly selected from the 65 available candidates (represented by the red hatched figures), and all 12 males are included. For the non-NSSI group (denoted as DN-), all 18 females (solid blue figures) are included, along with 12 males randomly selected from the 19 available candidates, so that the gender composition of DN- matches that of DN+. This sampling process results in a dataset of 30 NSSI patients (DN+) and 30 non-NSSI patients (DN-), balanced across conditions and with identical gender composition (18 females and 12 males) in each group.
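The gender-matched sampling can be expressed in a few lines. This sketch uses placeholder subject IDs with the group sizes reported above and a fixed seed; `balanced_sample` is a hypothetical helper, not code from the NSSI-Net repository. Because sampling without replacement returns the whole pool when the requested size equals its size, all 12 DN+ males and all 18 DN- females are always kept.

```python
import random
from typing import Dict, List

# Group -> sex -> placeholder subject IDs, with the counts reported in the text.
cohort: Dict[str, Dict[str, List[str]]] = {
    "DN+": {"F": [f"P_F{i}" for i in range(65)], "M": [f"P_M{i}" for i in range(12)]},
    "DN-": {"F": [f"N_F{i}" for i in range(18)], "M": [f"N_M{i}" for i in range(19)]},
}


def balanced_sample(
    groups: Dict[str, Dict[str, List[str]]],
    n_female: int,
    n_male: int,
    seed: int = 0,
) -> Dict[str, List[str]]:
    """Draw n_female females and n_male males from every diagnostic group."""
    rng = random.Random(seed)
    selected: Dict[str, List[str]] = {}
    for label, by_sex in groups.items():
        females, males = by_sex["F"], by_sex["M"]
        if len(females) < n_female or len(males) < n_male:
            raise ValueError(f"not enough subjects in group {label}")
        # Sampling without replacement; a full-size draw keeps the whole pool.
        selected[label] = rng.sample(females, n_female) + rng.sample(males, n_male)
    return selected


subset = balanced_sample(cohort, n_female=18, n_male=12)
print({label: len(ids) for label, ids in subset.items()})  # {'DN+': 30, 'DN-': 30}
```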

IV-B Spatial-Temporal Feature Extraction

To effectively extract spatial and temporal features from input EEG signals, we designed a hybrid neural network that combines a CNN with a BiGRU. This architecture captures interactions both among different brain regions and across time points, enhancing the representation of complex EEG dynamics.

Inspired by the EEGNet structure [36], we develop a deep encoder-decoder network based on CNNs. The CNN-based encoder for spatial information extraction is shown in Fig. 2, represented by the green horizontal arrow. The input EEG sample is denoted as $X \in \mathbb{R}^{C \times T}$, where $C$ is the number of EEG channels and $T$ represents the number of sampling points per second. The CNN-based feature extractor adopts 2D convolution to map the input EEG samples into a more expressive feature space, providing a compact yet comprehensive representation of the spatial dynamics of the EEG signals. The obtained feature map is represented as $\mathbf{F} \in \mathbb{R}^{K \times L}$, where $K$ is the number of feature maps and $L$ is the feature dimensionality.
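To make the tensor sizes concrete, the following sketch tracks shapes through an EEGNet-style encoder. The filter counts of 16 and 32 come from Section V-A; the 'same' padding, the average-pooling factors of 4 and 8 (the defaults of the original EEGNet design), and the separable filter count are assumptions, since the kernel sizes are not recoverable here. Under these assumptions a 63 × 384 window becomes 32 feature maps of length 12, which the BiGRU could then read as a 12-step sequence.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class EEGNetStyleShapes:
    """Shape bookkeeping for an EEGNet-style encoder (illustrative only)."""

    n_channels: int = 63          # electrodes, from the text
    n_samples: int = 384          # 1-s window at 384 Hz
    temporal_filters: int = 16    # first convolution, from Section V-A
    depthwise_filters: int = 32   # depthwise convolution, from Section V-A
    separable_filters: int = 32   # assumption: separable conv keeps 32 maps
    pool1: int = 4                # assumption: EEGNet's first pooling factor
    pool2: int = 8                # assumption: EEGNet's second pooling factor

    def feature_map_shape(self) -> Tuple[int, int]:
        # Temporal conv ('same' padding): (temporal_filters, n_channels, n_samples).
        # Depthwise conv spanning all electrodes: (depthwise_filters, 1, n_samples).
        t = self.n_samples // self.pool1   # after the first average pooling
        # Separable conv ('same' padding) keeps the length; pool once more.
        t = t // self.pool2
        return self.separable_filters, t   # (number of maps, length per map)


shapes = EEGNetStyleShapes()
print(shapes.feature_map_shape())  # (32, 12)
```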

To further uncover the relationships among brain patterns over time and encapsulate the key temporal dynamics of the entire EEG signal sequence, a BiGRU-based encoder-decoder structure is designed. The BiGRU-based encoder for temporal information extraction is illustrated in Fig. 2, represented by the green vertical arrow. In our implementation, a BiGRU processes the sequence of feature vectors generated by the CNN encoder. The forward and backward passes of the BiGRU produce corresponding hidden states, which are concatenated to form the deep feature representation vector $\mathbf{h}$. Once $\mathbf{h}$ is obtained, it is fed into a multi-layer perceptron (MLP) to derive $\mathbf{z}$. This MLP transforms the feature representation into a more refined feature space, capturing non-linear relationships between the elements of $\mathbf{h}$. A flatten operation is then applied to $\mathbf{z}$, resulting in the output vector $\mathbf{f}$. The output $\mathbf{f}$ is a rich representation that integrates both spatial features (extracted by the CNN layers) and temporal features (learned through the recurrent layers), providing a comprehensive description of the EEG signal’s dynamics.
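The bidirectional step can be illustrated with a dependency-free sketch. It follows the standard GRU update (reset gate, update gate, candidate state), omits biases, and assumes that the concatenated hidden states are the final forward and backward states; the actual model may instead concatenate per-time-step states, and it uses 128 units in 2 layers rather than the toy single layer of 8 units shown here. The MLP and flatten steps are omitted, and all names are hypothetical.

```python
import math
import random
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def matvec(m: Matrix, v: Vector) -> Vector:
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


class GRUCell:
    """Minimal GRU cell; biases are omitted to keep the sketch short."""

    def __init__(self, input_size: int, hidden_size: int, rng: random.Random):
        def init(rows: int, cols: int) -> Matrix:
            return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]

        self.hidden_size = hidden_size
        # Input (w_*) and recurrent (u_*) weights for reset, update, candidate.
        self.w_r, self.u_r = init(hidden_size, input_size), init(hidden_size, hidden_size)
        self.w_z, self.u_z = init(hidden_size, input_size), init(hidden_size, hidden_size)
        self.w_n, self.u_n = init(hidden_size, input_size), init(hidden_size, hidden_size)

    def step(self, x: Vector, h: Vector) -> Vector:
        r = [sigmoid(a + b) for a, b in zip(matvec(self.w_r, x), matvec(self.u_r, h))]
        z = [sigmoid(a + b) for a, b in zip(matvec(self.w_z, x), matvec(self.u_z, h))]
        n = [math.tanh(a + ri * b)
             for a, ri, b in zip(matvec(self.w_n, x), r, matvec(self.u_n, h))]
        # New state interpolates between the candidate n and the old state h.
        return [(1.0 - zi) * ni + zi * hi for zi, ni, hi in zip(z, n, h)]


def final_state(cell: GRUCell, seq: List[Vector]) -> Vector:
    h = [0.0] * cell.hidden_size
    for x in seq:
        h = cell.step(x, h)
    return h


def bigru_features(seq: List[Vector], fwd: GRUCell, bwd: GRUCell) -> Vector:
    """Concatenate the last forward state with the last backward state."""
    return final_state(fwd, seq) + final_state(bwd, seq[::-1])


rng = random.Random(0)
# Toy sequence: 12 time steps of 32-dim CNN features (sizes are illustrative).
sequence = [[rng.gauss(0.0, 1.0) for _ in range(32)] for _ in range(12)]
forward_cell = GRUCell(32, 8, rng)
backward_cell = GRUCell(32, 8, rng)
h = bigru_features(sequence, forward_cell, backward_cell)
print(len(h))  # 16 = 2 directions x 8 hidden units
```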

IV-C Multi-Concept Discriminator

Traditional encoder-decoder networks, though easy to train, often produce lower-quality features, limiting their effectiveness in complex tasks [37]. In contrast, GANs have shown great potential for generating high-quality features from time-series data [38, 39, 40]. To enhance the robustness and accuracy of EEG signal classification, particularly for complex, non-stationary time-series data, we build a multi-concept discriminator on top of our hybrid CNN-BiGRU encoder-decoder structure. This multi-concept discriminator is designed to improve the quality and generalizability of the learned features by addressing multiple aspects of the data: signal quality, gender differences, domain variability, and disease-specific characteristics.

IV-C1 Signal-Specific Discriminator

To assess the quality of the signals reconstructed by the CNN-BiGRU encoder-decoder architecture, a signal-specific discriminator is designed. This discriminator enhances the feature representation capability by distinguishing between generated (reconstructed) signals and authentic ones. Through adversarial feedback, it strengthens the feature characterization capacity of the entire model and encourages the generated signals to be of high quality and to preserve the characteristics of the input. Specifically, the generator $G$ extracts latent features from the input EEG signals $X$ and then reconstructs the EEG signals $\hat{X} = G(X)$. The signal-specific discriminator is denoted as $D_s$. The loss function of the signal-specific discriminator is given as

$$\mathcal{L}_{sig} = \mathcal{L}_{GAN} + \lambda \, \mathcal{L}_{rec} \qquad (1)$$

$\mathcal{L}_{GAN}$ is the GAN loss, defined as

$$\mathcal{L}_{GAN} = \mathbb{E}_{X}\left[\log D_s(X)\right] + \mathbb{E}_{X}\left[\log\left(1 - D_s(\hat{X})\right)\right] \qquad (2)$$

$\mathcal{L}_{rec}$ is the reconstruction loss, defined as

$$\mathcal{L}_{rec} = \mathbb{E}_{X}\left[\left\lVert X - \hat{X} \right\rVert_2^2\right] \qquad (3)$$

$\lambda$ is a hyperparameter balancing the GAN loss $\mathcal{L}_{GAN}$ and the reconstruction loss $\mathcal{L}_{rec}$, encouraging the generation of high-quality EEG signals.
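A minimal numeric sketch of the signal-level objective follows. It assumes the common binary cross-entropy form of the GAN loss, a non-saturating generator term (the usual practical substitute for minimizing the log of one minus the discriminator output), and a mean-squared reconstruction error; these forms and the balancing weight `lam = 10.0` are assumptions, not the authors' settings.

```python
import math
from typing import Sequence

EPS = 1e-12  # keeps log() away from 0


def bce(p: float, target: float) -> float:
    """Binary cross-entropy for one probability p and a 0/1 target."""
    p = min(max(p, EPS), 1.0 - EPS)
    return -(target * math.log(p) + (1.0 - target) * math.log(1.0 - p))


def discriminator_loss(d_real: Sequence[float], d_fake: Sequence[float]) -> float:
    """D_s learns to output 1 for real EEG and 0 for reconstructions."""
    terms = [bce(p, 1.0) for p in d_real] + [bce(p, 0.0) for p in d_fake]
    return sum(terms) / len(terms)


def mse(x: Sequence[float], x_hat: Sequence[float]) -> float:
    return sum((a - b) ** 2 for a, b in zip(x, x_hat)) / len(x)


def generator_signal_loss(
    d_fake: Sequence[float], x: Sequence[float], x_hat: Sequence[float], lam: float
) -> float:
    """Non-saturating adversarial term plus lam-weighted reconstruction error."""
    adversarial = sum(bce(p, 1.0) for p in d_fake) / len(d_fake)
    return adversarial + lam * mse(x, x_hat)


# Toy numbers: D_s scores, a flattened EEG snippet and its reconstruction.
print(round(discriminator_loss([0.9], [0.2]), 4))                            # 0.1643
print(round(generator_signal_loss([0.2], [1.0, 2.0], [1.0, 1.0], 10.0), 4))  # 6.6094
```

In a framework with automatic differentiation, the discriminator and generator terms would be minimized in alternating steps with respect to their own parameters.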

IV-C2 Gender-Specific Discriminator

Considering the gender imbalance among NSSI patients and the variations in EEG pattern representations between females and males, a gender-specific discriminator is designed to capture and leverage gender-specific EEG features. By integrating a gender-specific discriminator, the model accounts for gender-based differences and aims to improve its overall classification accuracy, ensuring that the subtleties in EEG signals attributable to gender are adequately represented and used for prediction. The loss function of the gender-specific discriminator is given as

$$\mathcal{L}_{gen}(\theta_f, \theta_g) = -\frac{1}{N_M}\sum_{i=1}^{N_M}\log D_g\left(\mathbf{f}_i^{M}\right) - \frac{1}{N_F}\sum_{j=1}^{N_F}\log\left(1 - D_g\left(\mathbf{f}_j^{F}\right)\right) \qquad (4)$$

Here, $\theta_f$ represents the parameters of the feature extractor (generator $G$), and $\theta_g$ refers to the parameters of the gender classifier $D_g$. $\mathbf{f}$ refers to the features extracted as described in Section IV-B, where $\mathbf{f}^{M}$ and $\mathbf{f}^{F}$ represent the features of male and female samples, respectively. $N_M$ and $N_F$ denote the number of male and female samples in each batch, respectively. This loss function is designed to ensure that the model effectively captures gender-specific EEG features, which are crucial, particularly in addressing the gender bias present in the data.
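The gender-level term can be sketched as a sex-balanced binary cross-entropy. This assumes that male samples are labeled 1 and female samples 0, and that the loss is minimized with respect to both the feature extractor and the gender classifier (so that features retain gender information, as the text describes); `gender_loss` is a hypothetical name.

```python
import math
from typing import Sequence

EPS = 1e-12


def _clip(p: float) -> float:
    return min(max(p, EPS), 1.0 - EPS)


def gender_loss(p_male_for_males: Sequence[float], p_male_for_females: Sequence[float]) -> float:
    """Sex-balanced BCE for the gender classifier D_g.

    Inputs are D_g's predicted probability of "male" for the male and the
    female samples of a batch. Each term is averaged over its own count,
    so a batch dominated by one sex cannot swamp the other.
    """
    male_term = -sum(math.log(_clip(p)) for p in p_male_for_males) / len(p_male_for_males)
    female_term = -sum(math.log(1.0 - _clip(p)) for p in p_male_for_females) / len(p_male_for_females)
    return male_term + female_term


# An uninformative classifier (0.5 everywhere) costs 2 * ln 2.
print(round(gender_loss([0.5, 0.5], [0.5]), 4))  # 1.3863
```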

IV-C3 Domain-Specific Discriminator

Due to the inherent individual differences in EEG signals, a domain-specific discriminator is designed to align the feature distributions across the labeled source domain ($\mathcal{D}_S^{l}$), the unlabeled source domain ($\mathcal{D}_S^{u}$), and the target domain ($\mathcal{D}_T$). This discriminator aims to reduce the variability between these domains by ensuring that the extracted features from different sources are more consistent. By aligning these distributions, the domain-specific discriminator helps in minimizing the domain gap, thereby enhancing the model’s ability to generalize effectively to new and unseen data. The loss function of the domain-specific discriminator is given as

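$$\mathcal{L}_{dom}(\theta_f, \theta_d) = -\frac{1}{N}\sum_{i=1}^{N}\sum_{k=1}^{3}\mathbb{1}\left[d_i = k\right]\log D_d\left(\mathbf{f}_i\right)_k \qquad (5)$$

Here, $\theta_f$ represents the parameters of the feature extractor, $\theta_d$ refers to the parameters of the domain classifier $D_d$, and $D_d(\mathbf{f}_i)_k$ is the predicted probability that the feature vector $\mathbf{f}_i$ of the $i$-th sample belongs to domain $k$. $d_i$ is the domain label indicating whether $\mathbf{f}_i$ is from the labeled source, unlabeled source, or target domain, and $\mathbb{1}[\cdot]$ is the indicator function. $N$ denotes the number of samples in each batch. This loss function encourages the feature extractor to generate domain-invariant features by minimizing the ability of the domain classifier to distinguish between domains. As a result, the model learns to produce features that are robust across different domains, improving cross-subject generalization.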
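The text does not state how the feature extractor is pushed to confuse the domain classifier. One common mechanism is the gradient reversal layer used in DANN [48]; the sketch below assumes that mechanism and shows only the 3-class domain cross-entropy and the reversal rule for gradients, without an autograd engine. The label encoding and function names are hypothetical.

```python
import math
from typing import List, Sequence

# Domain labels used below (hypothetical encoding).
LABELED_SOURCE, UNLABELED_SOURCE, TARGET = 0, 1, 2


def log_softmax(logits: Sequence[float]) -> List[float]:
    m = max(logits)  # subtract the max for numerical stability
    log_sum = m + math.log(sum(math.exp(v - m) for v in logits))
    return [v - log_sum for v in logits]


def domain_loss(logits_batch: Sequence[Sequence[float]], domain_labels: Sequence[int]) -> float:
    """Mean 3-class cross-entropy of the domain classifier D_d."""
    total = 0.0
    for logits, d in zip(logits_batch, domain_labels):
        total -= log_softmax(logits)[d]
    return total / len(domain_labels)


def reverse_gradient(grad: Sequence[float], lam: float = 1.0) -> List[float]:
    """Backward rule of a gradient reversal layer: the forward pass is the
    identity, and the incoming gradient is multiplied by -lam before it
    reaches the feature extractor."""
    return [-lam * g for g in grad]


# A classifier that cannot tell domains apart outputs equal logits: loss = ln 3.
print(round(domain_loss([[0.0, 0.0, 0.0]], [TARGET]), 4))  # 1.0986
print(reverse_gradient([1.0, -2.0], lam=0.5))              # [-0.5, 1.0]
```

With this design, the domain classifier descends the loss while the feature extractor effectively ascends it, which drives the features toward the uninformative ln 3 regime.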

IV-C4 Disease-Specific Discriminator

To effectively capture the differential EEG features between DN+ and DN-, a disease-specific discriminator is designed. This discriminator plays a crucial role in distinguishing patients based on their EEG patterns, ensuring that the unique characteristics associated with each condition are accurately identified. The loss function of the disease-specific discriminator is given as

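$$\mathcal{L}_{dis} = -\frac{1}{N_l}\sum_{i=1}^{N_l}\left[y_i \log \sigma\left(D_c(\mathbf{f}_i)\right) + \left(1 - y_i\right)\log\left(1 - \sigma\left(D_c(\mathbf{f}_i)\right)\right)\right] \qquad (6)$$

where $y_i$ represents the binary label indicating the presence or absence of NSSI (DN+ or DN-) for the $i$-th sample with feature vector $\mathbf{f}_i$. $N_l$ denotes the number of samples in a batch from the labeled source domain, $D_c$ is the disease classifier, and $\sigma(\cdot)$ is the sigmoid activation function. This loss function aims to drive the model to accurately classify patients based on their EEG features, enhancing its predictive capability for identifying NSSI.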
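The disease-level loss can be computed stably from raw classifier outputs (logits) by folding the sigmoid into the cross-entropy. The sketch below uses the standard identity max(x, 0) - x·y + log(1 + exp(-|x|)) and marks unlabeled samples with `None` so that only labeled source samples contribute; the `None` convention and the function names are assumptions made for illustration.

```python
import math
from typing import Optional, Sequence


def bce_with_logits(logit: float, y: int) -> float:
    """Numerically stable -[y log sigma(x) + (1 - y) log(1 - sigma(x))]."""
    return max(logit, 0.0) - logit * y + math.log1p(math.exp(-abs(logit)))


def disease_loss(logits: Sequence[float], labels: Sequence[Optional[int]]) -> float:
    """Mean BCE over labeled samples only (y = 1 for DN+, 0 for DN-).

    Samples whose label is None (unlabeled source or target) are skipped,
    mirroring the restriction of this loss to the labeled source domain.
    """
    pairs = [(x, y) for x, y in zip(logits, labels) if y is not None]
    return sum(bce_with_logits(x, y) for x, y in pairs) / len(pairs)


batch_logits = [2.0, -1.0, 0.3]
batch_labels = [1, 0, None]  # third sample is unlabeled
print(round(disease_loss(batch_logits, batch_labels), 4))  # 0.2201
```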

IV-C5 Overall Loss Function

The overall objective of the multi-concept discriminator is formulated as a weighted combination of the individual loss functions as

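$$\mathcal{L} = \mathcal{L}_{dis} + \alpha\,\mathcal{L}_{sig} + \beta\,\mathcal{L}_{gen} + \gamma\,\mathcal{L}_{dom} \qquad (7)$$

where $\mathcal{L}_{dis}$, $\mathcal{L}_{sig}$, $\mathcal{L}_{gen}$, and $\mathcal{L}_{dom}$ denote the disease-, signal-, gender-, and domain-specific losses, and $\alpha$, $\beta$, and $\gamma$ are weighting factors for the corresponding loss terms. The overall loss function ensures that the model learns robust, multi-dimensional EEG feature representations that are invariant to signal noise, sensitive to gender differences, adaptable across domains, and effective in classifying NSSI behaviors. By jointly optimizing these objectives, the model is better equipped to handle the complexities involved in EEG signal analysis for studies on depression and NSSI.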
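The combination step can be sketched as follows, assuming the reading in which the disease loss enters unweighted and the three weighting factors scale the signal, gender, and domain terms. The weight values and loss inputs below are hypothetical; in practice, the adversarial effect of the domain term on the feature extractor would come from a mechanism such as gradient reversal rather than from the sign in this sum.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LossWeights:
    alpha: float  # signal-specific term
    beta: float   # gender-specific term
    gamma: float  # domain-specific term

    def __post_init__(self) -> None:
        if min(self.alpha, self.beta, self.gamma) < 0:
            raise ValueError("loss weights must be non-negative")


def total_loss(l_dis: float, l_sig: float, l_gen: float, l_dom: float, w: LossWeights) -> float:
    """Disease loss plus the weighted signal, gender and domain losses."""
    return l_dis + w.alpha * l_sig + w.beta * l_gen + w.gamma * l_dom


weights = LossWeights(alpha=0.1, beta=0.5, gamma=1.0)  # hypothetical values
print(round(total_loss(0.22, 6.61, 1.39, 1.10, weights), 3))  # 2.676
```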

V Experimental Results

V-A Implementation details and model setting

In the proposed NSSI-Net, the CNN encoder begins with an initial convolutional layer that employs 16 filters and a kernel size of 1 . This layer is followed by a depthwise convolution with 32 filters. Subsequent separable convolutions further refine these features, using kernel sizes of 1 . The BiGRU encoder is bidirectional, with each direction containing 128 units per layer, spread across 2 layers. Following the BiGRU layers, fully connected layers are employed to condense the feature representation, which systematically reduce and refine the feature dimensionality before passing it to the final classification and adversarial networks. The adversarial discriminators incorporate fully connected layers with ReLU activation functions.
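The stated layer sizes can be collected into a small configuration object. In PyTorch, the recurrent part would roughly correspond to `torch.nn.GRU(input_size, 128, num_layers=2, bidirectional=True, batch_first=True)`; the parameter count below follows PyTorch's GRU layout (input and recurrent weight matrices plus two bias vectors per direction and layer). The per-step input size of 32 is a hypothetical value, and the convolution kernel sizes are not reproduced because they are not recoverable here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NSSINetConfig:
    temporal_filters: int = 16   # initial convolution
    depthwise_filters: int = 32  # depthwise convolution
    gru_hidden: int = 128        # units per direction
    gru_layers: int = 2
    bidirectional: bool = True

    @property
    def gru_output_dim(self) -> int:
        return self.gru_hidden * (2 if self.bidirectional else 1)

    def gru_param_count(self, input_size: int) -> int:
        """Weights + biases of a PyTorch-style GRU stack."""
        directions = 2 if self.bidirectional else 1
        h, total, layer_in = self.gru_hidden, 0, input_size
        for _ in range(self.gru_layers):
            # 3 gates: input weights, recurrent weights, and two bias vectors.
            per_direction = 3 * h * (layer_in + h) + 2 * 3 * h
            total += directions * per_direction
            layer_in = h * directions  # the next layer sees both directions
        return total


cfg = NSSINetConfig()
print(cfg.gru_output_dim)       # 256
print(cfg.gru_param_count(32))  # 420864, assuming 32-dim CNN features per step
```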

For model training, the RMSprop optimizer is used with a learning rate of 1e-3. The mini-batch size is set to 48, and L2 regularization with a coefficient of 1e-5 is applied to mitigate overfitting. Dropout with a rate of 0.25 is applied in the fully connected layers to improve generalization. The model is validated using semi-supervised cross-subject ten-fold cross-validation. The division into labeled and unlabeled source data is based on subjects rather than data samples. Specifically, within the training data, 75% of the source-domain subjects are randomly assigned as labeled source data, and the remaining 25% are treated as unlabeled source data. All parameters are randomly initialized, and training is conducted on a single GPU.
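The validation protocol can be sketched as a generator of subject-level splits. This assumes that the held-out fold serves as the target domain and that the 60 participants retained after sampling are folded without stratification; `subject_kfold` and the seed are hypothetical, and only the 75% labeled ratio and the ten folds come from the text.

```python
import random
from typing import Iterator, List, Sequence, Tuple

Split = Tuple[List[str], List[str], List[str]]  # labeled, unlabeled, target


def subject_kfold(
    subjects: Sequence[str], k: int = 10, labeled_ratio: float = 0.75, seed: int = 0
) -> Iterator[Split]:
    """Yield subject-level splits for semi-supervised cross-subject k-fold CV.

    Each fold's held-out subjects form the target (test) set; the remaining
    training subjects are divided into labeled and unlabeled source subsets.
    """
    rng = random.Random(seed)
    ids = list(subjects)
    rng.shuffle(ids)
    folds = [ids[i::k] for i in range(k)]
    for i, target in enumerate(folds):
        train = [s for j, fold in enumerate(folds) if j != i for s in fold]
        rng.shuffle(train)
        n_labeled = int(len(train) * labeled_ratio)
        yield train[:n_labeled], train[n_labeled:], target


subjects = [f"S{i:02d}" for i in range(60)]  # 30 DN+ and 30 DN- after sampling
splits = list(subject_kfold(subjects))
labeled, unlabeled, target = splits[0]
print(len(splits), len(labeled), len(unlabeled), len(target))  # 10 40 14 6
```

Stratifying the folds by diagnosis and sex would be a natural refinement; whether the original protocol does so is not stated.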

V-B Model Results under Semi-Supervised Learning

We compare the proposed NSSI-Net with various machine learning and deep learning models using a semi-supervised cross-subject ten-fold cross-validation protocol. As shown in Table I, NSSI-Net achieves the highest accuracy, 70.00 ± 13.90%, an improvement of 7.44 percentage points over the best-performing compared method (DDC [45], 62.56 ± 2.52%).
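The reported gain is a difference in percentage points; relative to the DDC baseline it is larger. A short check using the mean accuracies from Table I:

```python
nssi_net_acc = 70.00  # mean accuracy (%) of NSSI-Net, Table I
ddc_acc = 62.56       # mean accuracy (%) of DDC, the strongest baseline

absolute_gain = nssi_net_acc - ddc_acc         # percentage points
relative_gain = absolute_gain / ddc_acc * 100  # percent of the baseline

print(f"{absolute_gain:.2f} pp absolute, {relative_gain:.2f}% relative")
# 7.44 pp absolute, 11.89% relative
```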

The table can be divided into two parts: traditional machine learning methods and deep learning methods. Among traditional machine learning approaches, Adaboost [44] achieves the highest accuracy at 57.85 ± 6.57%, followed closely by CORAL [42] (57.07 ± 3.45%) and Support Vector Machines (SVM [43], 56.87 ± 4.96%). The remaining traditional methods, GFK [52], Random Forest (RF [41]), TCA [49], KPCA [51], and SA [50], perform lower, with accuracies ranging from 48.09% (SA) to 56.33% (GFK). Deep learning methods offer more competitive results. The best performance among the compared methods is achieved by DDC [45], with an accuracy of 62.56 ± 2.52%. DAN [46] achieves 61.46 ± 3.86%, DCORAL [47] reaches 60.91 ± 2.95%, and DANN [48] records 58.20 ± 2.80%. All four deep learning methods outperform every traditional method but still fall short of NSSI-Net. Overall, NSSI-Net is the top-performing model, surpassing both traditional and deep learning methods. Its 7.44-percentage-point improvement over the next best model (DDC [45]) highlights its effectiveness in capturing the complex patterns associated with NSSI behaviors in adolescents, although its larger standard deviation (13.90) indicates greater variability across folds than the baselines. These results suggest that NSSI-Net is a promising tool for early detection and intervention.

| Methods | Accuracy (%) | Methods | Accuracy (%) |
|---|---|---|---|
| Traditional machine learning methods | | | |
| SVM*[43] | 56.87±04.96 | TCA*[49] | 53.02±04.41 |
| SA*[50] | 48.09±06.85 | KPCA*[51] | 51.28±05.96 |
| RF*[41] | 55.39±07.04 | Adaboost*[44] | 57.85±06.57 |
| CORAL*[42] | 57.07±03.45 | GFK*[52] | 56.33±06.52 |
| Deep learning methods | | | |
| DAN*[46] | 61.46±03.86 | DANN*[48] | 58.20±02.80 |
| DCORAL*[47] | 60.91±02.95 | DDC*[45] | 62.56±02.52 |
| NSSI-Net | 70.00±13.90 (+7.44) | | |
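The "+7.44" in Table I is an absolute gain in percentage points over the strongest baseline. The short Python check below, using the mean accuracies transcribed from Table I, recomputes it and also expresses it relative to DDC's accuracy (about 11.9%); the relative figure is derived here for illustration and is not reported in the paper.

```python
# Mean accuracies (%) transcribed from Table I.
baselines = {
    "SVM": 56.87, "TCA": 53.02, "SA": 48.09, "KPCA": 51.28,
    "RF": 55.39, "Adaboost": 57.85, "CORAL": 57.07, "GFK": 56.33,
    "DAN": 61.46, "DANN": 58.20, "DCORAL": 60.91, "DDC": 62.56,
}
nssi_net = 70.00

# Strongest baseline by mean accuracy.
best_name = max(baselines, key=baselines.get)
best_acc = baselines[best_name]

abs_gain = round(nssi_net - best_acc, 2)                    # percentage points
rel_gain = round(100 * (nssi_net - best_acc) / best_acc, 1)  # % of baseline accuracy
print(best_name, abs_gain, rel_gain)
```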

V-C Analysis of Confusion Matrices

To evaluate performance across subgroups and identify possible biases or inconsistencies in the predictions, we analyze the confusion matrices for the entire dataset and for the female and male subgroups. As shown in Fig. 3, DN+ is treated as the positive class and DN- as the negative class.

The overall confusion matrix summarizes performance on the entire dataset. The true negative (TN) rate is 78.60% and the true positive (TP) rate is 60.70%, indicating that the model distinguishes DN+ from DN- cases reasonably well. However, the false positive (FP) rate of 21.40% and, in particular, the false negative (FN) rate of 39.30% reveal limitations: roughly two in five DN+ cases are misclassified as DN-, possibly because of the inherent inter-individual variability of EEG signals.

For the female subgroup, the TN rate is 82.86% and the TP rate is 59.35%, so the model identifies DN- cases considerably better than DN+ cases. The FP rate is lower than in the entire dataset (17.14% vs. 21.40%), while the FN rate is 40.65%. One possible explanation is that the higher prevalence of NSSI among females makes the EEG patterns associated with DN- more distinguishable. However, the relatively high FN rate leaves considerable room for improvement in detecting DN+ cases in this subgroup.

For the male subgroup, the pattern differs: the TN rate is lower (71.92%) but the TP rate is higher (62.85%). Correspondingly, the FP rate rises to 28.08% and the FN rate falls to 37.15%. The model is therefore more sensitive to DN+ cases in males, at the cost of more false positives, which points to a potential gender-specific bias. This bias may be related to the generally lower prevalence of NSSI among males, which could make DN- and DN+ cases harder to separate in this subgroup.
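Each pair of rates above comes from a row-normalized confusion matrix: the DN- row is divided by the number of true DN- cases and the DN+ row by the number of true DN+ cases, so the TN and FP rates sum to 100%, as do the TP and FN rates. The Python sketch below shows this normalization on hypothetical counts and, as a derived summary not reported in the paper, the balanced accuracy (mean of the TN and TP rates) of each group: 69.65% overall, about 71.1% for females, and about 67.4% for males.

```python
def rates_from_counts(tn, fp, fn, tp):
    """Row-normalized confusion-matrix rates in %, with DN+ as the positive class."""
    tnr = 100 * tn / (tn + fp)  # specificity: share of true DN- predicted DN-
    tpr = 100 * tp / (tp + fn)  # sensitivity: share of true DN+ predicted DN+
    return {"TNR": tnr, "FPR": 100 - tnr, "TPR": tpr, "FNR": 100 - tpr}

def balanced_accuracy(tnr, tpr):
    """Mean of the two per-class recalls, in %."""
    return (tnr + tpr) / 2

# (TN rate, TP rate) per group, transcribed from Section V-C.
groups = {"overall": (78.60, 60.70), "female": (82.86, 59.35), "male": (71.92, 62.85)}
for name, (tnr, tpr) in groups.items():
    print(name, round(balanced_accuracy(tnr, tpr), 2))
```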

In summary, the confusion matrices show that although the model performs reasonably well overall, its performance differs notably between the gender subgroups. Relative to the other gender, it is more accurate at identifying DN- cases in females and DN+ cases in males, which may reflect underlying biological and psychological differences between genders. The higher FP rate in males and the higher FN rate in females highlight where further improvement is needed, particularly in mitigating potential gender bias in the model's predictions. Future work could refine the gender-specific discriminator or use a larger, more balanced dataset to improve the model's robustness and accuracy.

VI Discussion and Conclusion

To assess the performance and robustness of NSSI-Net, we conduct a series of experiments that evaluate the contribution of each component of the model and the impact of key experimental settings, namely the ratio of labeled source data and the data sampling process. We also examine what the model reveals about the neural mechanisms associated with NSSI. Together, these analyses clarify the model's capabilities and identify the factors most important to its success in detecting NSSI.

VI-A Ablation Study

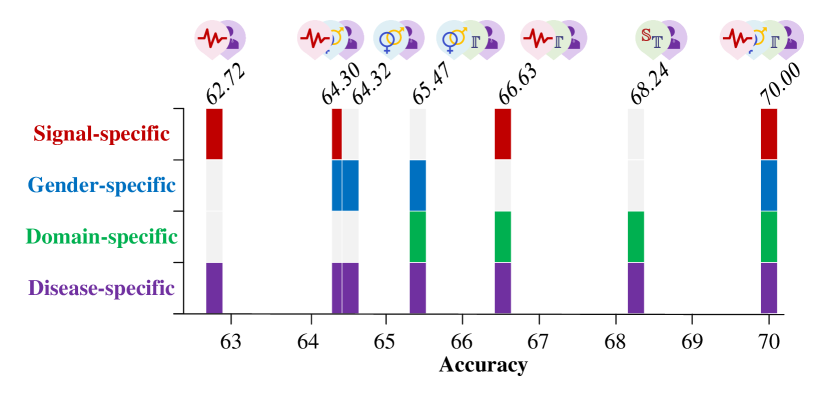

In this section, we conduct an ablation study to evaluate the contribution of each discriminator in the proposed model. Table II reports the results under semi-supervised cross-subject ten-fold cross-validation for different discriminator configurations. (1) Signal-Specific Discriminator Contribution. Removing the signal-specific discriminator reduces the accuracy to 65.47±10.22, highlighting its role in extracting relevant signal-level features. (2) Gender-Specific Discriminator Contribution. Without the gender-specific discriminator, the accuracy drops to 66.63±09.66, indicating that gender-specific features contribute to classification performance, though less than signal-specific features. (3) Domain-Specific Discriminator Contribution. Removing the domain-specific discriminator lowers the accuracy to 64.30±09.88, the largest drop among the ablations, which shows the importance of domain adaptation for the model's performance. (4) Comparison with Traditional Domain Discriminator. Traditional adversarial domain adaptation follows the DANN framework [48]. In line with previous approaches [53, 54], we treat both labeled and unlabeled source data as a single domain for adaptation. Replacing the proposed discriminators with such a traditional domain discriminator yields an accuracy of 67.17±12.99, lower than the 70.00±13.90 of NSSI-Net, which supports the effectiveness of the proposed multi-concept discriminator framework. Overall, each discriminator contributes to performance, with the signal-specific and domain-specific discriminators having the largest impact.

In addition to removing individual discriminators, we evaluate different combinations of the signal-specific, gender-specific, and domain-specific discriminators, with the disease-specific discriminator always included, to assess their collective impact on prediction accuracy. The results are presented in Fig. 4. (1) Signal + Disease-Specific. With only the signal-specific module, the model achieves a baseline accuracy of 62.72%, highlighting the importance of signal-related features for distinguishing between classes. (2) Signal + Gender + Disease-Specific. Adding the gender-specific module raises the accuracy to 64.30%, suggesting that gender-specific EEG patterns contribute additional predictive information. (3) Gender + Domain + Disease-Specific. Combining the gender-specific and domain-specific modules yields an accuracy of 65.47%, suggesting that accounting for domain variation further improves classification. (4) Signal + Gender + Domain + Disease-Specific. Combining all discriminators yields the highest accuracy, 70.00%, suggesting that the discriminators capture complementary aspects of the data and that their integration gives the most accurate predictions.

These analyses suggest that the signal-specific module captures foundational features, while the gender-specific and domain-specific modules refine them by accounting for gender- and domain-related variation. Although each module is informative on its own, their combination yields the best predictive performance among the configurations tested.

| Methods | Accuracy (%) |
|---|---|
| Without signal-specific discriminator | 65.47±10.22 |
| Without gender-specific discriminator | 66.63±09.66 |
| Without domain-specific discriminator | 64.30±09.88 |
| With traditional domain discriminator | 67.17±12.99 |
| NSSI-Net | 70.00±13.90 |
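Reading Table II as accuracy drops relative to the full model makes the ranking stated in the ablation text explicit. The Python check below uses the mean accuracies transcribed from Table II; the drop values are derived here for illustration.

```python
full_model = 70.00
# Mean accuracies (%) transcribed from Table II.
ablations = {
    "without signal-specific": 65.47,
    "without gender-specific": 66.63,
    "without domain-specific": 64.30,
    "traditional domain discriminator": 67.17,
}

# Accuracy lost relative to the full NSSI-Net, in percentage points.
drops = {name: round(full_model - acc, 2) for name, acc in ablations.items()}
for name, drop in sorted(drops.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name}: -{drop:.2f} pp")
```

Removing the domain-specific discriminator costs the most (5.70 points), followed by the signal-specific one (4.53 points).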

VI-B Evaluating Model Performance under Varying Labeled Source Data Ratios in Semi-Supervised Learning

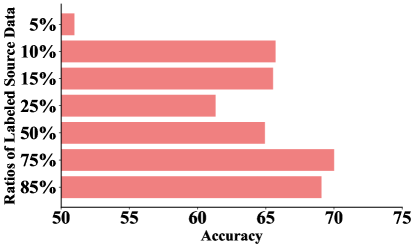

The amount of labeled source data available during semi-supervised training strongly affects the effectiveness of model training, especially for complex EEG signals. Examining different labeled-data ratios reveals the trade-off between labeling effort and model performance and indicates how much labeled data is needed for good results. As shown in Fig. 5, we vary the ratio of labeled source data from 5% to 85%.

With only 5% labeled source data, the model struggles to learn, reaching an accuracy of just 50.97%. Increasing the ratio to 10% raises the average accuracy markedly, to 65.71%, suggesting that the model needs a minimum amount of labeled source data before it can capture meaningful patterns in the EEG signals. Beyond 10%, accuracy improves steadily and plateaus at 70.00% once the ratio reaches 75%. These results suggest that the semi-supervised framework makes effective use of both labeled and unlabeled data, maintaining robust performance even when labeled source data are relatively limited.
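To make the ratios concrete, the sketch below splits a hypothetical pool of 100 already-shuffled training subjects at the subject level for several of the ratios in Fig. 5; at 5%, only five subjects carry labels. The helper `split_labeled` and its one-subject minimum are assumptions for illustration, not part of the paper's protocol.

```python
def split_labeled(train_subjects, labeled_ratio):
    """Split training subjects into (labeled, unlabeled) at the subject level.

    Assumption for this sketch: at least one subject is kept labeled so that
    very small ratios still provide some supervision.
    """
    n_labeled = max(1, round(labeled_ratio * len(train_subjects)))
    return train_subjects[:n_labeled], train_subjects[n_labeled:]

train_subjects = list(range(100))  # hypothetical, already shuffled subject IDs
for ratio in (0.05, 0.10, 0.25, 0.50, 0.75, 0.85):
    labeled, unlabeled = split_labeled(train_subjects, ratio)
    print(f"{ratio:.0%} labeled -> {len(labeled)} labeled / {len(unlabeled)} unlabeled subjects")
```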

VI-C The Effect of Sampling Process

To balance the data distribution and gender representation, we adopt the data sampling strategy described in Section IV-A. Here, we evaluate how this sampling process affects model performance. As illustrated in Fig. 6, we randomly form several sample groups and repeat the semi-supervised cross-subject ten-fold cross-validation separately for each group. Mean accuracy across these groups ranges from 65.21% to 70.48%, with standard deviations between 13.18% and 14.59%. These results suggest that the model performs consistently across sampling configurations and is robust to variations in data selection during sampling.
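The strategy of Section IV-A is not restated here, so the sketch below is a hypothetical stand-in: it draws one balanced sample group by undersampling every (label, gender) cell to the size of the smallest cell, and different seeds produce the different sample groups over which the cross-validation would be repeated. The function `balanced_sample` and its input format are illustrative assumptions.

```python
import random
from collections import defaultdict

def balanced_sample(subjects, seed):
    """Draw one sample group with equal counts per (label, gender) cell.

    `subjects` is a list of (subject_id, label, gender) tuples. Every cell is
    randomly undersampled to the size of the smallest cell. Hypothetical
    stand-in for the paper's sampling strategy, not a reproduction of it.
    """
    rng = random.Random(seed)
    cells = defaultdict(list)
    for sid, label, gender in subjects:
        cells[(label, gender)].append(sid)
    n = min(len(ids) for ids in cells.values())
    group = []
    for key in sorted(cells):  # fixed cell order keeps results reproducible per seed
        group.extend(rng.sample(cells[key], n))
    return sorted(group)
```

Calling `balanced_sample(cohort, seed=s)` for several seeds `s` yields the alternative sample groups.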

VI-D Topographic Analysis of Channel Contributions to NSSI Classification

To better understand the neural mechanisms underlying NSSI detection, we analyze how much each EEG channel contributes to the model's ability to distinguish between DN+ and DN-. Instead of using signals from all brain regions as input, we feed data from each channel into the model independently. For each channel, we compute the model's classification accuracy and then normalize these accuracies across all channels.

The normalized accuracies are visualized as a topographic map, which reveals spatial patterns of channel importance. As shown in Fig. 7, channels over the parietal, frontal, and occipital regions contribute more to predicting NSSI behaviors than those over other brain areas. These regions are known to play key roles in emotional processing [55, 56, 57], visuospatial functions [58, 59], and self-referential thought [60, 61, 62], processes that may be critical to the development and maintenance of self-injurious behaviors.
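The per-channel procedure in this subsection can be sketched as below. The callback `evaluate_channel` is hypothetical and stands for training and validating the model on a single channel; the paper does not specify the normalization, so min-max scaling to [0, 1] is an assumption.

```python
from typing import Callable, Dict, Sequence

def channel_importance(
    channels: Sequence[str],
    evaluate_channel: Callable[[str], float],
) -> Dict[str, float]:
    """Score each EEG channel by the accuracy of a model trained on it alone.

    `evaluate_channel` is a hypothetical callback returning the single-channel
    accuracy. Scores are min-max normalized to [0, 1] (assumed normalization).
    """
    acc = {ch: evaluate_channel(ch) for ch in channels}
    lo, hi = min(acc.values()), max(acc.values())
    span = hi - lo
    if span == 0:
        # All channels perform identically: no channel stands out.
        return {ch: 0.0 for ch in channels}
    return {ch: (a - lo) / span for ch, a in acc.items()}
```

The normalized scores can then be passed to any topographic plotting routine that maps values onto electrode positions.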

VI-E Conclusion

In this paper, we introduce NSSI-Net, a novel semi-supervised framework with a multi-concept discriminator for detecting NSSI from EEG signals. NSSI-Net captures spatial-temporal EEG patterns through a hybrid CNN-BiGRU encoder-decoder, which allows it to characterize the complex neural activity of adolescents with depression. The multi-concept discriminator addresses the heterogeneous and complex nature of EEG data by capturing signal-specific, gender-specific, domain-specific, and disease-specific characteristics, improving the model's ability to classify NSSI-related features. On a self-collected dataset of 114 adolescents diagnosed with depression, NSSI-Net is validated using semi-supervised cross-subject cross-validation, and analyses of model components and hyperparameters assess the framework's robustness. The results show that NSSI-Net outperforms the compared machine learning and deep learning methods in detecting NSSI and provides interpretable insights into the neural mechanisms associated with NSSI. Future work will focus on handling class imbalance and limited sample sizes to ensure consistent performance across a broader range of real-world applications.

VII Acknowledgments

This work was supported in part by the National Natural Science Foundation of China under Grant 62276169, in part by the Medical-Engineering Interdisciplinary Research Foundation of Shenzhen University under Grant 2024YG008, in part by the Shenzhen University-Lingnan University Joint Research Programme, in part by the Shenzhen-Hong Kong Institute of Brain Science-Shenzhen Fundamental Research Institutions under Grant 2022SHIBS0003, and in part by the STI 2030-Major Projects 2021ZD0200500.

References

- [1] D. Xu, Y.-L. Wang, K.-T. Wang, Y. Wang, X.-R. Dong, J. Tang, and Y.-L. Cui, “A scientometrics analysis and visualization of depressive disorder,” Current Neuropharmacology, vol. 19, no. 6, pp. 766–786, 2021.

- [2] M. W. Flores, A. Sharp, N. J. Carson, and B. L. Cook, “Estimates of major depressive disorder and treatment among adolescents by race and ethnicity,” JAMA pediatrics, vol. 177, no. 11, pp. 1215–1223, 2023.

- [3] S. V. Swannell, G. E. Martin, A. Page, P. Hasking, and N. J. St John, “Prevalence of nonsuicidal self-injury in nonclinical samples: Systematic review, meta-analysis and meta-regression,” Suicide and Life-Threatening Behavior, vol. 44, no. 3, pp. 273–303, 2014.

- [4] K. R. Cullen, M. W. Schreiner, B. Klimes-Dougan, L. E. Eberly, L. L. LaRiviere, K. O. Lim, J. Camchong, and B. A. Mueller, “Neural correlates of clinical improvement in response to n-acetylcysteine in adolescents with non-suicidal self-injury,” Progress in neuro-psychopharmacology and biological psychiatry, vol. 99, p. 109778, 2020.

- [5] I. Levkovich and B. Stregolev, “Non-suicidal self-injury among adolescents: Effect of knowledge, attitudes, role perceptions, and barriers in mental health care on teachers’ responses,” Behavioral Sciences, vol. 14, no. 7, p. 617, 2024.

- [6] M. Guan, J. Liu, X. Li, M. Cai, J. Bi, P. Zhou, Z. Wang, S. Wu, L. Guo, and H. Wang, “The impact of depressive and anxious symptoms on non-suicidal self-injury behavior in adolescents: a network analysis,” BMC psychiatry, vol. 24, no. 1, p. 229, 2024.

- [7] Y. Zhang, L. Gong, Q. Feng, K. Hu, C. Liu, T. Jiang, and Q. Zhang, “Association between negative life events through mental health and non-suicidal self-injury with young adults: evidence for sex moderate correlation,” BMC psychiatry, vol. 24, no. 1, p. 466, 2024.

- [8] W. Ye, J. Wang, L. Chen, L. Dai, Z. Sun, and Z. Liang, “Adaptive spatial–temporal aware graph learning for EEG-based emotion recognition,” Cyborg and Bionic Systems, vol. 5, p. 0088, 2024.

- [9] S. J. Smith, “EEG in the diagnosis, classification, and management of patients with epilepsy,” Journal of Neurology, Neurosurgery & Psychiatry, vol. 76, no. suppl 2, pp. ii2–ii7, 2005.

- [10] J. Jeong, “EEG dynamics in patients with Alzheimer’s disease,” Clinical neurophysiology, vol. 115, no. 7, pp. 1490–1505, 2004.

- [11] F. S. de Aguiar Neto and J. L. G. Rosa, “Depression biomarkers using non-invasive EEG: A review,” Neuroscience & Biobehavioral Reviews, vol. 105, pp. 83–93, 2019.

- [12] W. Bosl, A. Tierney, H. Tager-Flusberg, and C. Nelson, “EEG complexity as a biomarker for autism spectrum disorder risk,” BMC medicine, vol. 9, pp. 1–16, 2011.

- [13] N. N. Boutros, C. Arfken, S. Galderisi, J. Warrick, G. Pratt, and W. Iacono, “The status of spectral EEG abnormality as a diagnostic test for schizophrenia,” Schizophrenia research, vol. 99, no. 1-3, pp. 225–237, 2008.

- [14] P. Thuwajit, P. Rangpong, P. Sawangjai, P. Autthasan, R. Chaisaen, N. Banluesombatkul, P. Boonchit, N. Tatsaringkansakul, T. Sudhawiyangkul, and T. Wilaiprasitporn, “EEGWaveNet: Multiscale CNN-based spatiotemporal feature extraction for EEG seizure detection,” IEEE Transactions on Industrial Informatics, vol. 18, no. 8, pp. 5547–5557, 2021.

- [15] S. Liu, X. Wang, L. Zhao, B. Li, W. Hu, J. Yu, and Y.-D. Zhang, “3DCANN: A spatio-temporal convolution attention neural network for EEG emotion recognition,” IEEE Journal of Biomedical and Health Informatics, vol. 26, no. 11, pp. 5321–5331, 2021.

- [16] D. Zhang, L. Yao, K. Chen, S. Wang, X. Chang, and Y. Liu, “Making sense of spatio-temporal preserving representations for EEG-based human intention recognition,” IEEE transactions on cybernetics, vol. 50, no. 7, pp. 3033–3044, 2019.

- [17] Z. Gao, X. Wang, Y. Yang, C. Mu, Q. Cai, W. Dang, and S. Zuo, “EEG-based spatio–temporal convolutional neural network for driver fatigue evaluation,” IEEE transactions on neural networks and learning systems, vol. 30, no. 9, pp. 2755–2763, 2019.

- [18] E. Iznak, E. Damyanovich, I. Oleichik, and N. Levchenko, “EEG features in depressive female adolescents with suicidal and non-suicidal auto-aggressive behavior,” European Psychiatry, vol. 64, no. S1, pp. S175–S175, 2021.

- [19] R. P. Auerbach, D. Pagliaccio, G. O. Allison, K. L. Alqueza, and M. F. Alonso, “Neural correlates associated with suicide and nonsuicidal self-injury in youth,” Biological psychiatry, vol. 89, no. 2, pp. 119–133, 2021.

- [20] M. Westlund Schreiner, B. A. Mueller, B. Klimes-Dougan, E. D. Begnel, M. Fiecas, D. Hill, K. O. Lim, and K. R. Cullen, “White matter microstructure in adolescents and young adults with non-suicidal self-injury,” Frontiers in psychiatry, vol. 10, p. 1019, 2020.

- [21] A. F. Iznak, E. V. Iznak, E. V. Damyanovich, and I. V. Oleichik, “Differences of EEG frequency and spatial parameters in depressive female adolescents with suicidal attempts and non-suicidal self-injuries,” Clinical EEG and neuroscience, vol. 52, no. 6, pp. 406–413, 2021.

- [22] L. Zhao, D. Zhou, L. Ma, J. Hu, R. Chen, X. He, X. Peng, Z. Jiang, L. Ran, J. Xiang et al., “Changes in emotion-related EEG components and brain lateralization response to negative emotions in adolescents with nonsuicidal self-injury: an ERP study,” Behavioural brain research, vol. 445, p. 114324, 2023.

- [23] L. Zhao, D. Zhou, J. Hu, X. He, X. Peng, L. Ma, X. Liu, W. Tao, R. Chen, Z. Jiang et al., “Changes in microstates of first-episode untreated nonsuicidal self-injury adolescents exposed to negative emotional stimuli and after receiving rTMS intervention,” Frontiers in psychiatry, vol. 14, p. 1151114, 2023.

- [24] P. Marti-Puig, C. Capra, D. Vega, L. Llunas, and J. Solé-Casals, “A machine learning approach for predicting non-suicidal self-injury in young adults,” Sensors, vol. 22, no. 13, p. 4790, 2022.

- [25] S. Kim, K.-I. Jang, H. S. Lee, S.-H. Shim, and J. S. Kim, “Differentiation between suicide attempt and suicidal ideation in patients with major depressive disorder using cortical functional network,” Progress in Neuro-Psychopharmacology and Biological Psychiatry, vol. 132, p. 110965, 2024.

- [26] S. Kentopp, “Deep transfer learning for prediction of health risk behaviors in adolescent psychiatric patients,” Ph.D. dissertation, Colorado State University, 2021.

- [27] T. Zhang, W. Zheng, Z. Cui, Y. Zong, and Y. Li, “Spatial–temporal recurrent neural network for emotion recognition,” IEEE transactions on cybernetics, vol. 49, no. 3, pp. 839–847, 2018.

- [28] W. Li, Z. Zhang, B. Hou, and X. Li, “A novel spatio-temporal field for emotion recognition based on EEG signals,” IEEE Sensors Journal, vol. 21, no. 23, pp. 26941–26950, 2021.

- [29] W.-C. L. Lew, D. Wang, K. Shylouskaya, Z. Zhang, J.-H. Lim, K. K. Ang, and A.-H. Tan, “EEG-based emotion recognition using spatial-temporal representation via Bi-GRU,” 2020 42nd annual international conference of the IEEE engineering in medicine & biology society (EMBC), pp. 116–119, 2020.

- [30] J. Cho and H. Hwang, “Spatio-temporal representation of an electoencephalogram for emotion recognition using a three-dimensional convolutional neural network,” Sensors, vol. 20, no. 12, p. 3491, 2020.

- [31] Y. Li, W. Zheng, L. Wang, Y. Zong, and Z. Cui, “From regional to global brain: A novel hierarchical spatial-temporal neural network model for EEG emotion recognition,” IEEE Transactions on Affective Computing, vol. 13, no. 2, pp. 568–578, 2019.

- [32] Z. Liang, R. Zhou, L. Zhang, L. Li, G. Huang, Z. Zhang, and S. Ishii, “EEGFuseNet: Hybrid unsupervised deep feature characterization and fusion for high-dimensional EEG with an application to emotion recognition,” IEEE Transactions on Neural Systems and Rehabilitation Engineering, vol. 29, pp. 1913–1925, 2021.

- [33] Y. Dan, J. Tao, J. Fu, and D. Zhou, “Possibilistic clustering-promoting semi-supervised learning for EEG-based emotion recognition,” Frontiers in Neuroscience, vol. 15, p. 690044, 2021.

- [34] G. Zhang, V. Davoodnia, and A. Etemad, “PARSE: Pairwise alignment of representations in semi-supervised EEG learning for emotion recognition,” IEEE Transactions on Affective Computing, vol. 13, no. 4, pp. 2185–2200, 2022.

- [35] W. Ye, Z. Zhang, F. Teng, M. Zhang, J. Wang, D. Ni, F. Li, P. Xu, and Z. Liang, “Semi-supervised dual-stream self-attentive adversarial graph contrastive learning for cross-subject EEG-based emotion recognition,” IEEE Transactions on Affective Computing, 2024.

- [36] V. J. Lawhern, A. J. Solon, N. R. Waytowich, S. M. Gordon, C. P. Hung, and B. J. Lance, “EEGNet: a compact convolutional neural network for EEG-based brain–computer interfaces,” Journal of neural engineering, vol. 15, no. 5, p. 056013, 2018.

- [37] M. Akbari and J. Liang, “Semi-recurrent CNN-based VAE-GAN for sequential data generation,” 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pp. 2321–2325, 2018.

- [38] A. Makhzani, J. Shlens, N. Jaitly, I. Goodfellow, and B. Frey, “Adversarial autoencoders,” arXiv preprint arXiv:1511.05644, 2015.

- [39] X. Chen and E. Konukoglu, “Unsupervised detection of lesions in brain MRI using constrained adversarial auto-encoders,” arXiv preprint arXiv:1806.04972, 2018.

- [40] S. Sahu, R. Gupta, G. Sivaraman, W. AbdAlmageed, and C. Espy-Wilson, “Adversarial auto-encoders for speech based emotion recognition,” arXiv preprint arXiv:1806.02146, 2018.

- [41] L. Breiman, “Random forests,” Machine Learning, vol. 45, no. 1, pp. 5–32, 2001.

- [42] B. Sun, J. Feng, and K. Saenko, “Return of frustratingly easy domain adaptation,” Proceedings of the Thirtieth AAAI Conference on Artificial Intelligence, pp. 2058–2065, 2016.

- [43] J. Suykens and J. Vandewalle, “Least squares support vector machine classifiers,” Neural Processing Letters, vol. 9, no. 3, pp. 293–300, 1999.

- [44] J. Zhu, H. Zou, S. Rosset, and T. Hastie, “Multi-class AdaBoost,” Statistics and Its Interface, vol. 2, no. 3, pp. 349–360, 2009.

- [45] E. Tzeng, J. Hoffman, N. Zhang, K. Saenko, and T. Darrell, “Deep domain confusion: Maximizing for domain invariance,” CoRR, vol. abs/1412.3474, 2014. [Online]. Available: http://arxiv.org/abs/1412.3474

- [46] H. Li, Y.-M. Jin, W.-L. Zheng, and B.-L. Lu, “Cross-subject emotion recognition using deep adaptation networks,” Neural Information Processing, pp. 403–413, 2018.

- [47] B. Sun and K. Saenko, “Deep CORAL: Correlation alignment for deep domain adaptation,” Computer Vision – ECCV 2016 Workshops, pp. 443–450, 2016.

- [48] Y. Ganin, E. Ustinova, H. Ajakan, P. Germain, H. Larochelle, F. Laviolette, M. Marchand, and V. Lempitsky, “Domain-adversarial training of neural networks,” Journal of Machine Learning Research, vol. 17, no. 59, pp. 1–35, 2016.

- [49] S. J. Pan, I. W. Tsang, J. T. Kwok, and Q. Yang, “Domain adaptation via transfer component analysis,” IEEE Transactions on Neural Networks, vol. 22, no. 2, pp. 199–210, 2011.

- [50] B. Fernando, A. Habrard, M. Sebban, and T. Tuytelaars, “Unsupervised visual domain adaptation using subspace alignment,” 2013 IEEE International Conference on Computer Vision, pp. 2960–2967, 2013.

- [51] S. Mika, B. Schölkopf, A. Smola, K.-R. Müller, M. Scholz, and G. Rätsch, “Kernel PCA and de-noising in feature spaces,” Advances in neural information processing systems, vol. 11, 1999.

- [52] B. Gong, Y. Shi, F. Sha, and K. Grauman, “Geodesic flow kernel for unsupervised domain adaptation,” 2012 IEEE Conference on Computer Vision and Pattern Recognition, pp. 2066–2073, 2012.

- [53] J. Li, S. Qiu, Y.-Y. Shen, C.-L. Liu, and H. He, “Multisource transfer learning for cross-subject EEG emotion recognition,” IEEE transactions on cybernetics, vol. 50, no. 7, pp. 3281–3293, 2019.

- [54] H. Chen, M. Jin, Z. Li, C. Fan, J. Li, and H. He, “MS-MDA: Multisource marginal distribution adaptation for cross-subject and cross-session EEG emotion recognition,” Frontiers in Neuroscience, vol. 15, 2021.

- [55] M. Esslen, R. D. Pascual-Marqui, D. Hell, K. Kochi, and D. Lehmann, “Brain areas and time course of emotional processing,” Neuroimage, vol. 21, no. 4, pp. 1189–1203, 2004.

- [56] J. Li, C. Xu, X. Cao, Q. Gao, Y. Wang, Y. Wang, J. Peng, and K. Zhang, “Abnormal activation of the occipital lobes during emotion picture processing in major depressive disorder patients,” Neural Regeneration Research, vol. 8, no. 18, pp. 1693–1701, 2013.

- [57] P. Fusar-Poli, A. Placentino, F. Carletti, P. Landi, P. Allen, S. Surguladze, F. Benedetti, M. Abbamonte, R. Gasparotti, F. Barale et al., “Functional atlas of emotional faces processing: a voxel-based meta-analysis of 105 functional magnetic resonance imaging studies,” Journal of psychiatry and neuroscience, vol. 34, no. 6, pp. 418–432, 2009.

- [58] D. J. Kravitz, K. S. Saleem, C. I. Baker, and M. Mishkin, “A new neural framework for visuospatial processing,” Nature Reviews Neuroscience, vol. 12, no. 4, pp. 217–230, 2011.

- [59] W. Chai, P. Zhang, X. Zhang, J. Wu, C. Chen, F. Li, X. Xie, G. Shi, J. Liang, C. Zhu et al., “Feasibility study of functional near-infrared spectroscopy in the ventral visual pathway for real-life applications,” Neurophotonics, vol. 11, no. 1, p. 015002, 2024.

- [60] G. Northoff, A. Heinzel, M. De Greck, F. Bermpohl, H. Dobrowolny, and J. Panksepp, “Self-referential processing in our brain—a meta-analysis of imaging studies on the self,” Neuroimage, vol. 31, no. 1, pp. 440–457, 2006.

- [61] R. G. Benoit, S. J. Gilbert, E. Volle, and P. W. Burgess, “When I think about me and simulate you: medial rostral prefrontal cortex and self-referential processes,” Neuroimage, vol. 50, no. 3, pp. 1340–1349, 2010.

- [62] G. Wagner, C. Schachtzabel, G. Peikert, and K.-J. Bär, “The neural basis of the abnormal self-referential processing and its impact on cognitive control in depressed patients,” Human brain mapping, vol. 36, no. 7, pp. 2781–2794, 2015.