Not So Robust After All: Evaluating the Robustness of Deep Neural Networks to Unseen Adversarial Attacks

Abstract

Deep neural networks (DNNs) have gained prominence in various applications, such as classification, recognition, and prediction, prompting increased scrutiny of their properties. A fundamental attribute of traditional DNNs is their vulnerability to modifications in input data, which has resulted in the investigation of adversarial attacks. These attacks manipulate the data in order to mislead a DNN. This study aims to challenge the efficacy and generalization of contemporary defense mechanisms against adversarial attacks. Specifically, we explore the hypothesis proposed by Ilyas et al. [11], which posits that DNN image features can be either robust or non-robust, with adversarial attacks targeting the latter. This hypothesis suggests that training a DNN on a dataset consisting solely of robust features should produce a model resistant to adversarial attacks. However, our experiments demonstrate that this is not universally true. To gain further insights into our findings, we analyze the impact of adversarial attack norms on DNN representations, focusing on samples subjected to $L_2$ and $L_\infty$ norm attacks. Further, we employ canonical correlation analysis, visualize the representations, and calculate the mean distance between these representations and various DNN decision boundaries. Our results reveal a significant difference between $L_2$ and $L_\infty$ norms, which could provide insights into the potential dangers posed by $L_\infty$ norm attacks, previously underestimated by the research community.

Keywords: machine learning, deep learning, adversarial attacks

1 Introduction

The growth of computing power and data availability has led to the development of more efficient pattern recognition techniques, such as machine learning and its subclasses, neural networks and deep learning. When a sample accurately represents a data population and is of sufficient size, machine learning methods can yield impressive results, even on unseen data, making them suitable for tasks such as classification and prediction [14, 21]. Although these methods offer significant advantages, they also come with limitations, such as the sensitivity of deep neural networks (DNNs) to data quality and source, and overfitting when trained on insufficient amounts of data. Researchers continue to develop new approaches and techniques to address these challenges and improve the overall effectiveness of pattern recognition [3, 2, 24, 13]. Despite these attempts, variations in input samples from different domains or insufficient training data can still significantly impact DNNs' performance. As DNNs are increasingly employed in critical applications such as medicine and transportation, enhancing their robustness is essential due to the potentially severe consequences of their unpredictability.

One approach to examine DNN robustness to diverse inputs is through the use of adversarial attacks for analysis or training. Adversarial attacks aim to generate the smallest adversarial perturbation - a change in input that results in a misclassification by the model.

This study investigates the abilities and drawbacks of modern defense techniques against adversarial attacks, such as adversarial and robust training. The latter refers to the hypothesis proposed by Ilyas et al. [11], which suggests that adversarial attacks exploit non-robust features inherent to the dataset rather than the objects in the images. According to this hypothesis, removing these features from the dataset and training a model on the modified data should render adversarial attacks ineffective. We replicate the experiments from the original paper, training a model on robust features and testing it on unseen attacks. Our tests reveal that the models trained on robust features are generally not resistant to $L_\infty$ norm perturbations.

To explain this behavior, we compare the representations of adversarial examples using canonical correlation analysis (CCA) and discover that $L_\infty$ norm attacks cause the most dispersion in the latent representation. We visualize neural network representations under $L_2$ and $L_\infty$ norm attacks to illustrate the impact of norms on the distributions of representations. Additionally, we test the corresponding adversarially trained models used in robust training, which provides insight into the relationship between robust and adversarial training and suggests that robust training is a specific case of adversarial training.

These insights may help researchers develop more robust neural networks through adversarial training: while $L_2$ and $L_\infty$ norm perturbations look similar, the $L_\infty$ norm ones are much harder to resist. To the best of our knowledge, the differences between attack norms have not received such attention before.

The structure of this paper is as follows. Section 2 presents a brief overview of various attacks on image classifiers and discusses potential defense strategies that we use in our experiments. Section 3 explores current hypotheses regarding the nature of adversarial examples. Section 4 details the experimental methodology and the results. The conclusion of our study is presented in Section 5.

2 Related works

We focus on attacks on image classifiers, as they are the most widespread and mature; however, adversarial attacks are not limited by the type of input or task. The reader may find examples of attacks in other domains, such as malicious URL classification [23], communication systems [15], time series classification [12], and malware detection in PDF files [4].

In this research, we suppose that an adversary has complete information about the neural network, including weights, gradients, and other internal details (white-box scenario). We also assume that in most of the cases the adversary’s goal is to simply cause the classifier to produce an incorrect output, without specifying a particular target class (untargeted attack scenario). Other possible scenarios can be found, for example, in [6].

2.0.1 Fast gradient sign method (FGSM)

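FGSM was proposed by Goodfellow et al. in [10]. The adversarial example $x_{adv}$ for an image $x$ is calculated as:

$$x_{adv} = x + \epsilon \cdot \mathrm{sign}\left(\nabla_x J(\theta, x, y)\right) \qquad (1)$$

where $\epsilon$ is the perturbation magnitude and $J(\theta, x, y)$ is the cost function of the neural network with weights $\theta$, calculated for the input image $x$ with true classification label $y$.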
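As an illustration of the one-step update, the following is a minimal sketch of FGSM on a two-feature logistic-regression model, for which the input gradient of the cross-entropy loss has a closed form. The model, its weights, and the helper names (`loss_gradient_wrt_input`, `fgsm`, `predict`) are assumptions made for this example only and are not the networks used in the experiments.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def sign(v):
    # Returns -1, 0 or 1.
    return (v > 0) - (v < 0)

def loss_gradient_wrt_input(w, b, x, y):
    """Gradient of the binary cross-entropy loss with respect to the input x
    for the toy model p = sigmoid(w . x + b)."""
    p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
    return [(p - y) * wi for wi in w]

def fgsm(w, b, x, y, eps):
    """One FGSM step: move every input coordinate by eps in the direction
    of the sign of the loss gradient."""
    grad = loss_gradient_wrt_input(w, b, x, y)
    return [xi + eps * sign(gi) for xi, gi in zip(x, grad)]

def predict(w, b, x):
    return int(sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) >= 0.5)

# Hypothetical toy model and sample.
w, b = [2.0, -1.0], 0.0
x, y = [0.3, 0.1], 1
x_adv = fgsm(w, b, x, y, eps=0.3)

print(predict(w, b, x), predict(w, b, x_adv))
```

Because every coordinate moves by exactly the same amount, the perturbation produced this way is bounded in the maximum norm by the chosen step size.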

2.0.2 Projected gradient descent (PGD)

2.0.3 Carlini-Wagner (C-W) attack

Carlini and Wagner [5] introduced a targeted attack which solves the adversarial optimization problem without using . The authors derived a new optimization task for adversarial attack which could be solved by a regular optimizer (for example, SGD) and does not constrain .

2.0.4 DeepFool

Moosavi-Dezfooli et al. [19] provided a simple iterative algorithm that perturbs images towards the closest wrong class. In other words, DeepFool approximates the orthogonal projection of a sample onto the classifier's decision boundary. This property makes it possible to use the attack to test the robustness of a model:

$$\hat{\rho}_{adv}(f) = \frac{1}{|D|} \sum_{x \in D} \frac{\|\hat{r}(x)\|_2}{\|x\|_2} \qquad (3)$$

where $\hat{\rho}_{adv}$ is the average robustness, $f$ is the classifier, $\hat{r}(x)$ is the successful perturbation from DeepFool, and $D$ is the dataset. DeepFool can be used to calculate the distance from a data point to the closest point on the decision boundary [18].
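To make the average robustness measure concrete, here is a small sketch for an affine binary classifier, where the minimal perturbation is known in closed form (the orthogonal projection onto the separating hyperplane) and no iteration is needed. The classifier, the two-point dataset, and the function names are hypothetical choices for illustration.

```python
import math

def l2_norm(v):
    return math.sqrt(sum(c * c for c in v))

def affine_score(w, b, x):
    return sum(wi * xi for wi, xi in zip(w, x)) + b

def deepfool_affine(w, b, x):
    """Minimal L2 perturbation that moves x onto the hyperplane w . x + b = 0.
    For an affine binary classifier this is the exact projection."""
    f = affine_score(w, b, x)
    norm_sq = sum(wi * wi for wi in w)
    return [-f * wi / norm_sq for wi in w]

def average_robustness(w, b, dataset):
    """Mean of ||r(x)||_2 / ||x||_2 over the dataset."""
    ratios = [l2_norm(deepfool_affine(w, b, x)) / l2_norm(x) for x in dataset]
    return sum(ratios) / len(ratios)

# Hypothetical classifier and data.
w, b = [3.0, 4.0], 0.0
data = [[1.0, 0.0], [0.0, 2.0]]
rho = average_robustness(w, b, data)
print(rho)
```

For deep networks the same quantity is obtained by running the iterative attack on every sample; a larger value means that larger relative perturbations are needed to change the prediction.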

2.1 Adversarial training as a defense method

One of the most popular approaches to defending neural networks against attacks is called adversarial training. It proposes to "include" possible adversarial examples in the training data set to prepare a model for the attacks. The following min-max optimization problem [17] should be solved to obtain the adversarially trained model:

$$\min_{\theta} \; \mathbb{E}_{(x, y) \sim D} \left[ \max_{\delta \in S} J(\theta, x + \delta, y) \right] \qquad (4)$$

where $\theta$ are the weights of the neural network, $J$ is its cost function, $D$ is the training data set, and $S = \{\delta : \|\delta\|_p \le \epsilon\}$ is the space of possible perturbations for a given radius $\epsilon$.

Adversarial training was introduced by Goodfellow et al. in [10]. Madry et al. [17] proposed using a PGD attack during the training procedure and presented better robustness against adversarial attacks. However, Wong et al. [28] achieved about the same accuracy against adversarial attacks, using simple one-step FGSM.
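The min-max structure can be sketched on a toy problem. The example below trains a logistic-regression classifier, approximating the inner maximization with a single FGSM step (the one-step variant mentioned above) and performing the outer minimization with plain stochastic gradient descent. The data, hyperparameters, and function names are assumptions for illustration and do not reproduce the training setup of the cited works.

```python
import math

def sigmoid(z):
    z = max(-60.0, min(60.0, z))  # clamp to keep exp() in range
    return 1.0 / (1.0 + math.exp(-z))

def sign(v):
    return (v > 0) - (v < 0)

def score(w, b, x):
    return sum(wi * xi for wi, xi in zip(w, x)) + b

def fgsm_example(w, b, x, y, eps):
    """Inner maximization, approximated by one FGSM step on the input."""
    p = sigmoid(score(w, b, x))
    return [xi + eps * sign((p - y) * wi) for xi, wi in zip(x, w)]

def adversarial_train(data, eps, lr=0.1, epochs=100):
    w, b = [0.0] * len(data[0][0]), 0.0
    for _ in range(epochs):
        for x, y in data:
            x_adv = fgsm_example(w, b, x, y, eps)
            p = sigmoid(score(w, b, x_adv))
            # Outer minimization: SGD step on the loss at the adversarial point.
            w = [wi - lr * (p - y) * xi for wi, xi in zip(w, x_adv)]
            b -= lr * (p - y)
    return w, b

def accuracy(w, b, data, eps=0.0):
    hits = 0
    for x, y in data:
        x_eval = fgsm_example(w, b, x, y, eps) if eps > 0 else x
        hits += int((score(w, b, x_eval) >= 0) == bool(y))
    return hits / len(data)

# Hypothetical, linearly separable toy data: (input, label).
toy_data = [([2.0, 2.0], 1), ([-2.0, -2.0], 0), ([2.0, 1.0], 1), ([-1.0, -2.0], 0)]
w, b = adversarial_train(toy_data, eps=0.5)
print(accuracy(w, b, toy_data), accuracy(w, b, toy_data, eps=0.5))
```

Replacing `fgsm_example` with a multi-step attack would give the PGD-based variant of the same loop.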

It is important to note that the provable defense (i.e. "certified robust") against any small- attack has already been studied, for example, by Wong and Kolter [27] and Wong et al. [29]. However, the experiments in these works were conducted with relatively small perturbations: in [29] the maximum radius of perturbation is , while the same norm in adversarial training package [9] is . Thus, we do not include certified robust methods in our research.

3 Hypothesis about the cause of adversarial attacks

The exact reason why neural networks are susceptible to small changes in input data remains unclear. Goodfellow et al. [10] argued that adversarial examples result from models being overly linear rather than nonlinear. However, another perspective considers poor generalization as the source of attacks. Ilyas et al. [11] hypothesized that neural networks’ vulnerability to adversarial attacks arises from their data representation. Classifiers aim to extract useful features from data to minimize a cost function. Ideally, these features should be related to object classification (robust features), but neural networks may utilize unexpected properties specific to a particular dataset (non-robust features), which can be manipulated by an adversary. If a classifier can be trained on a dataset containing only robust features, it should be resistant to adversarial attacks. We refer to this process as robust training.

We decided to challenge this hypothesis for several reasons. First, it is not fully proven, except for the toy example in [11] and experiments on robust and non-robust dataset creation. Second, subsequent works such as [25] and [30] build on this hypothesis, although it might not be entirely accurate. For example, Zhang et al. [30] proposed a similar experiment, referring to [11], and extended it to universal perturbations. Although the results of [11] were discussed in [8], the accuracy of the robustly trained model has not been tested anywhere but in the original work, and we would like to fill this gap.

By testing this hypothesis, we discover certain properties of adversarial attacks and further explore them through our experiments. We found not only cases where robust features can be corrupted, but also that $L_\infty$-norm attacks are more dangerous than those with the $L_2$ norm. We hypothesize that even if perturbations of these two norms are both imperceptible to humans, $L_\infty$-norm attacks have a greater impact on model representations.

4 Experiments

In this work, we establish the following Research Aims (RAs):

- (RA 1) Our research aims to explore the generalization of robust and adversarial training methods. Specifically, we investigate the classification accuracy of robust and adversarially trained models when subjected to various unseen attacks. Additionally, we aim to compare the behavior of these models under identical attack conditions and develop a methodology to measure such differences.

- (RA 2) Our study also seeks to identify potential shortcomings of robustly trained models. We aim to determine the proximity of adversarial and benign samples in the latent space of a robustly trained model, i.e. measure the distance between the representations. The degree of similarity between representations of samples perturbed with small $L_2$ or $L_\infty$ norms would indicate the stability of a model.

- (RA 3) Addressing the previous RA, we aim to investigate the impact of robust and adversarial training methods on the decision boundary of a model. We seek to determine how the mean distance between samples and decision boundaries varies for different models when exposed to adversarial attacks.

To address the research aims, we present a series of experiments. In the first experiment, we replicate robust training from [11], employing a broader testing setup that includes various attack norms, data sets, and model architectures. To compare the performance of adversarially and robustly trained models, we conduct the Kolmogorov-Smirnov test. Next, we analyze the representations of neural networks from the first experiment by singular vector canonical correlation analysis (SVCCA) and perform principal component analysis (PCA) for their visualization. Finally, we assess the mean distance from samples to the decision boundaries of different models using the DeepFool attack. In the rest of the section, we describe the experimental setups and the achieved results in detail.

4.1 The broad testing of robust and adversarial training (RA 1)

Here and later, we refer to training based on empirical risk minimization as "regular" since it does not involve any adversarial attack and is traditionally used to train neural networks.

The motivation for these experiments stems from the work of Tramer et al. [26], which demonstrated that even adversarially trained models could be compromised by unseen attacks. The core premise of this experiment is to take an adversarially trained deep model, trained to defend against a specific attack on a given dataset, use it to develop the robust version of this model, and then evaluate the generalisation performance of both models against unseen attacks. This process is undertaken as follows:

1. We select every image within the dataset and use the process outlined in [11] to extract an image that only possesses robust features. The adversarially trained model aids in this extraction. Initially, we generate a random noise image for each target image. Then, we iteratively compute the representations of both the random and target images as interpreted by the pre-trained model. At each iteration, we slightly adjust the random image to minimize the distance between the vectors of the two images. After a set number of steps, this method produces a modified dataset.

2. We then proceed to train the regular version of our selected adversarially trained model on this modified dataset, ultimately resulting in a robustly trained model.

3. Subsequently, we test the generalisation capacity of both the adversarial and robust models against attacks which were not considered during the adversarial training phase, and hence, not used in the formation of the robust model.
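The first step of this procedure can be sketched as a small optimization problem. In the example below a fixed linear map stands in for the representation layer of the adversarially trained model, which is an assumption made purely to keep the sketch self-contained; the names `features` and `robustify`, the step size, and the number of steps are likewise hypothetical, and details such as clipping to the valid pixel range are omitted.

```python
import random

def features(W, x):
    """Stand-in for the representation g(x) of the pre-trained model:
    here simply the linear map W x."""
    return [sum(wij * xj for wij, xj in zip(row, x)) for row in W]

def robustify(W, x_target, steps=500, lr=0.05, seed=0):
    """Start from random noise and run gradient descent on
    ||g(x_r) - g(x_target)||^2 with respect to the image x_r."""
    rng = random.Random(seed)
    x_r = [rng.random() for _ in x_target]
    g_target = features(W, x_target)
    for _ in range(steps):
        diff = [a - t for a, t in zip(features(W, x_r), g_target)]
        # Gradient of the squared distance for a linear g: 2 * W^T diff.
        grad = [2.0 * sum(W[i][j] * diff[i] for i in range(len(W)))
                for j in range(len(x_r))]
        x_r = [xj - lr * gj for xj, gj in zip(x_r, grad)]
    return x_r

# Hypothetical 3-pixel "image" and 2-dimensional representation.
W = [[1.0, 0.0, 1.0], [0.0, 1.0, -1.0]]
x_target = [0.2, 0.9, 0.4]
x_robust = robustify(W, x_target)
gap = sum((a - t) ** 2
          for a, t in zip(features(W, x_robust), features(W, x_target)))
print(x_robust, gap)
```

The resulting image matches the target in representation space but generally differs from it in input space, which reflects the idea that only the features the model relies on are carried over to the modified dataset.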

This entire experiment is implemented for two distinct models: ResNet50 and InceptionV3. For ResNet50, we create both the adversarially trained and robust versions on the CIFAR-10 [16] dataset, defending against the PGD attack with $L_2$ and $L_\infty$ norms. We then test their performance against FGSM and PGD attacks (three norms each), as well as C-W and DeepFool attacks. The performance results of the two trained variants are outlined in Tables 1 and 2.

The adversarially trained and robust versions of InceptionV3, on the other hand, were trained against the PGD attack with only one norm on the CIFAR-10 dataset. The testing parameters for these models were similar to those used with ResNet50, and the results are displayed in Table 3. All tables list the corresponding values of $\epsilon$ and the number of steps for iterative attacks used in each case. Note that $\epsilon$ for some attacks differs from the values used for ResNet50 because the model has a larger input shape (224x224 vs 32x32, respectively).

| Attack | Norm | Epsilon | Steps | Robust acc. | Adv. acc. |
|---|---|---|---|---|---|
| No attack | - | - | - | 0.813 | 0.91 |
| FGSM | | 0.5 | 1 | 0.81 | 0.91 |
| FGSM | | 0.25 | 1 | 0.59 | 0.87 |
| FGSM | | 0.25 | 1 | 0.1 | 0.13 |
| FGSM | | 0.025 | 1 | 0.22 | 0.62 |
| PGD | | 0.5 | 100 | 0.81 | 0.91 |
| PGD | | 0.25 | 1000 | 0.483 | 0.82 |
| PGD | | 0.5 | 100 | 0.202 | 0.75 |
| PGD | | 0.025 | 5 | 0.168 | 0.54 |
| PGD | | 0.25 | 5 | 0.08 | 0.06 |
| C-W | | 0.25 | 10 | 0.219 | 0.86 |
| DeepFool | | 0.25 | - | 0.124 | 0.127 |

| Attack | Norm | Epsilon | Steps | Robust acc. | Adv. acc. |
|---|---|---|---|---|---|
| No attack | - | - | - | 0.73 | 0.87 |
| FGSM | | 0.5 | 1 | 0.73 | 0.87 |
| FGSM | | 0.25 | 1 | 0.504 | 0.826 |
| FGSM | | 0.25 | 1 | 0.07 | 0.19 |
| FGSM | | 0.025 | 1 | 0.2 | 0.724 |
| PGD | | 0.5 | 100 | 0.731 | 0.87 |
| PGD | | 0.25 | 1000 | 0.414 | 0.813 |
| PGD | | 0.5 | 100 | 0.195 | 0.663 |
| PGD | | 0.025 | 5 | 0.155 | 0.683 |
| PGD | | 0.25 | 5 | 0.11 | 0.052 |
| C-W | | 0.25 | 10 | 0.51 | 0.81 |
| DeepFool | | 0.25 | - | 0.13 | 0.111 |

| Attack | Norm | Epsilon | Steps | Robust acc. | Adv. acc. |
|---|---|---|---|---|---|
| No attack | - | - | - | 0.89 | 0.94 |
| FGSM | | 0.25 | 1 | 0.49 | 0.83 |
| FGSM | | 0.25 | 1 | 0.10 | 0.415 |
| FGSM | | 0.025 | 1 | 0.63 | 0.79 |
| PGD | | 100 | 5 | 0.87 | 0.912 |
| PGD | | 45 | 100 | 0.88 | 0.903 |
| PGD | | 0.5 | 100 | 0.89 | 0.94 |
| PGD | | 0.5 | 5 | 0.06 | 0.378 |
| PGD | | 1.0 | 5 | 0.04 | 0.281 |
| C-W | | 0.25 | 10 | 0.51 | 0.85 |
| DeepFool | | 0.25 | - | 0.48 | 0.784 |

On examination, both tested models demonstrate reasonable stability against some variations of PGD and FGSM attacks. However, all attacks with an $L_\infty$ norm significantly undermined the accuracy of the models. Hence, the models do not generalise to all attack types, primarily because a simple increase in the norm or size of the perturbation has a drastic impact. Despite this, the models did demonstrate resistance to certain unseen attacks, exhibiting only a minor drop in accuracy. This resistance is important for these models in terms of generalisation, given that they were not exposed to such attacks during training.

The accuracy of adversarially and robustly trained models might vary, but a similar ratio between them implies similar behavior. We evaluate this using the Kolmogorov-Smirnov goodness-of-fit test. The null hypothesis, tested at a significance level of 0.05, asserts that the samples, i.e., the accuracies of robust and adversarially trained models under different attacks, are from the same distribution. For the accuracies presented in Table 1, the p-value exceeds the significance level, so we cannot reject the null hypothesis. Therefore, robust training may be considered a specific case of adversarial training, with its strengths and weaknesses.
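For readers who wish to repeat this kind of comparison, the sketch below computes the two-sample Kolmogorov-Smirnov statistic, i.e. the largest gap between the empirical distribution functions of two samples. Treating the test as the two-sample variant is an assumption, since the exact variant is not specified here; the function name is hypothetical, and the p-value computation is left to a statistics library. The input values are the two accuracy columns of Table 1.

```python
def ks_statistic(sample_a, sample_b):
    """Two-sample Kolmogorov-Smirnov statistic: the maximum absolute
    difference between the two empirical CDFs."""
    def ecdf(sample, t):
        return sum(1 for v in sample if v <= t) / len(sample)

    points = sorted(set(sample_a) | set(sample_b))
    return max(abs(ecdf(sample_a, t) - ecdf(sample_b, t)) for t in points)

# Accuracy columns of Table 1 (robust and adversarially trained models).
robust_acc = [0.813, 0.81, 0.59, 0.1, 0.22, 0.81,
              0.483, 0.202, 0.168, 0.08, 0.219, 0.124]
adv_acc = [0.91, 0.91, 0.87, 0.13, 0.62, 0.91,
           0.82, 0.75, 0.54, 0.06, 0.86, 0.127]

print(ks_statistic(robust_acc, adv_acc))
```

The statistic lies between 0 (identical empirical distributions) and 1 (completely separated samples); the decision to reject is then made by comparing the associated p-value with the significance level.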

To further extend this experiment, we run the entire adversarial training pipeline ourselves, performing the process from scratch. Owing to computational constraints, we select the smaller ResNet18 architecture. The models are trained on the PGD attack with 5 iterations and $L_2$ and $L_\infty$ norms. For datasets, we use CIFAR-10 (the results are displayed in Table 4) and CINIC-10 [7] (refer to Table 5), and for test attacks, we use PGD and FGSM. Consistent with the previous pattern, attacks with the $L_\infty$ norm result in a more significant drop in accuracy than similar ones with the $L_2$ norm.

| | Regular model | Adv. trained, norm | Adv. trained, norm |
|---|---|---|---|
| Accuracy on CINIC-10 (no attack) | 76% | 75% | 72% |
| Accuracy on CIFAR-10 (no attack) | 95% | 94% | 91% |
| Accuracy on CINIC-10 (PGD attack) | 7% - attack, 11% - attack | 37% - attack, 36% - attack | 40% - attack, 43% - attack |
| Accuracy on CIFAR-10 (PGD attack) | 7% - attack, 27% - attack | 57% - attack, 55% - attack | 61% - attack, 64% - attack |
| Accuracy on CINIC-10 (FGSM attack) | 49% - attack | 67% - attack | 66% - attack |
| Accuracy on CIFAR-10 (FGSM attack) | 72% - attack | 89% - attack | 87% - attack |

| | Regular model | Adv. trained, norm | Adv. trained, norm |
|---|---|---|---|
| Accuracy on CINIC-10 (no attack) | 86% | 84% | 80% |
| Accuracy on CIFAR-10 (no attack) | 94% | 93% | 90% |
| Accuracy on CINIC-10 (PGD attack) | 3% - attack, 6% - attack | 33% - attack, 30% - attack | 42% - attack, 46% - attack |
| Accuracy on CIFAR-10 (PGD attack) | 4% - attack, 7% - attack | 45% - attack, 41% - attack | 55% - attack, 59% - attack |
| Accuracy on CINIC-10 (FGSM attack) | 50% - attack | 75% - attack | 73% - attack |
| Accuracy on CIFAR-10 (FGSM attack) | 63% - attack | 87% - attack | 85% - attack |

4.2 Comparison of representations under adversarial attacks (RA 2)

Canonical correlation analysis (CCA) is a method used to compare the representations of neural networks. Its objective is to identify linear combinations of two sets of random variables that maximize their correlation. In previous studies [20], [1], CCA has been employed to compare activations from different layers of neural networks. Raghu et al. [22] proposed an extension of CCA, singular vector canonical correlation analysis (SVCCA), for the analysis of neural networks.

In this study, we employ SVCCA to compare the representations of the original and corresponding adversarial images. For each experiment, we take a batch of 128 images, compute the related adversarial examples under some attack, calculate SVCCA for the representations, and take the mean. We test three models in each experiment: a regular ResNet50 and two adversarially trained ResNet50 models trained with PGD using $L_2$ and $L_\infty$ norms. In this experiment, a high mean correlation coefficient indicates that the representations are similar to each other and that small perturbations do not impact the model.
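A compact way to see what this comparison computes is the NumPy sketch below: each activation matrix is reduced to the singular directions that explain most of its variance, and the canonical correlations between the two reduced subspaces are then averaged. The function name, the 99% variance threshold, and the random stand-in activations are assumptions for illustration; this is not the exact implementation used for the reported numbers.

```python
import numpy as np

def svcca_mean_correlation(X, Y, keep_variance=0.99):
    """Mean canonical correlation between two activation matrices of shape
    (n_samples, n_features) computed on the same inputs."""
    def reduce(A):
        A = A - A.mean(axis=0)                      # center every feature
        U, s, _ = np.linalg.svd(A, full_matrices=False)
        explained = np.cumsum(s ** 2) / np.sum(s ** 2)
        k = int(np.searchsorted(explained, keep_variance) + 1)
        return U[:, :k] * s[:k]                     # top-k singular directions

    Qx, _ = np.linalg.qr(reduce(X))                 # orthonormal bases
    Qy, _ = np.linalg.qr(reduce(Y))
    # Singular values of Qx^T Qy are the canonical correlations.
    rho = np.linalg.svd(Qx.T @ Qy, compute_uv=False)
    return float(np.clip(rho, 0.0, 1.0).mean())

# Random stand-ins for the representations of 128 clean images and of
# their perturbed counterparts (hypothetical data).
rng = np.random.default_rng(0)
clean = rng.normal(size=(128, 16))
perturbed = clean + 0.1 * rng.normal(size=(128, 16))
similarity = svcca_mean_correlation(clean, perturbed)
print(similarity)
```

A value close to 1 means the perturbed representations span nearly the same subspace as the clean ones, while lower values indicate that the attack has moved the representations substantially.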

While the attack norms are the primary variables in these experiments, we also test two different attacks (FGSM and PGD) to mitigate threats to validity. The SVCCA means for the attacks are presented in Table 6, while Table 7 shows the same for the best and worst cases from Tables 1 and 2.

| Attack | Parameters | Regular model | Adv. trained, | Adv. trained, |
|---|---|---|---|---|
| PGD | | 0.686 | 0.981 | 0.989 |
| PGD | | 0.587 | 0.751 | 0.822 |
| FGSM | | 0.833 | 0.982 | 0.991 |
| FGSM | | 0.618 | 0.78 | 0.837 |

| Attack | Parameters | Regular model | Adv. trained, | Adv. trained, |
|---|---|---|---|---|
| PGD, best | | 0.682 | 0.989 | 0.99 |
| PGD, worst | | 0.46 | 0.532 | 0.598 |
| FGSM, best | | 0.833 | 0.982 | 0.991 |
| FGSM, worst | | 0.422 | 0.346 | 0.411 |

Overall, the results presented in Tables 6 and 7 demonstrate that the impact of adversarial attacks on models is most significant when using $L_\infty$-norm perturbations. This effect is evident even in models that have been trained specifically to handle $L_\infty$-norm attacks. These findings emphasize the importance of considering the norms of adversarial attacks when evaluating the robustness of models, because testing only on the $L_2$ norm may provide a false sense of security.

4.3 Visualization of representations (RA 2)

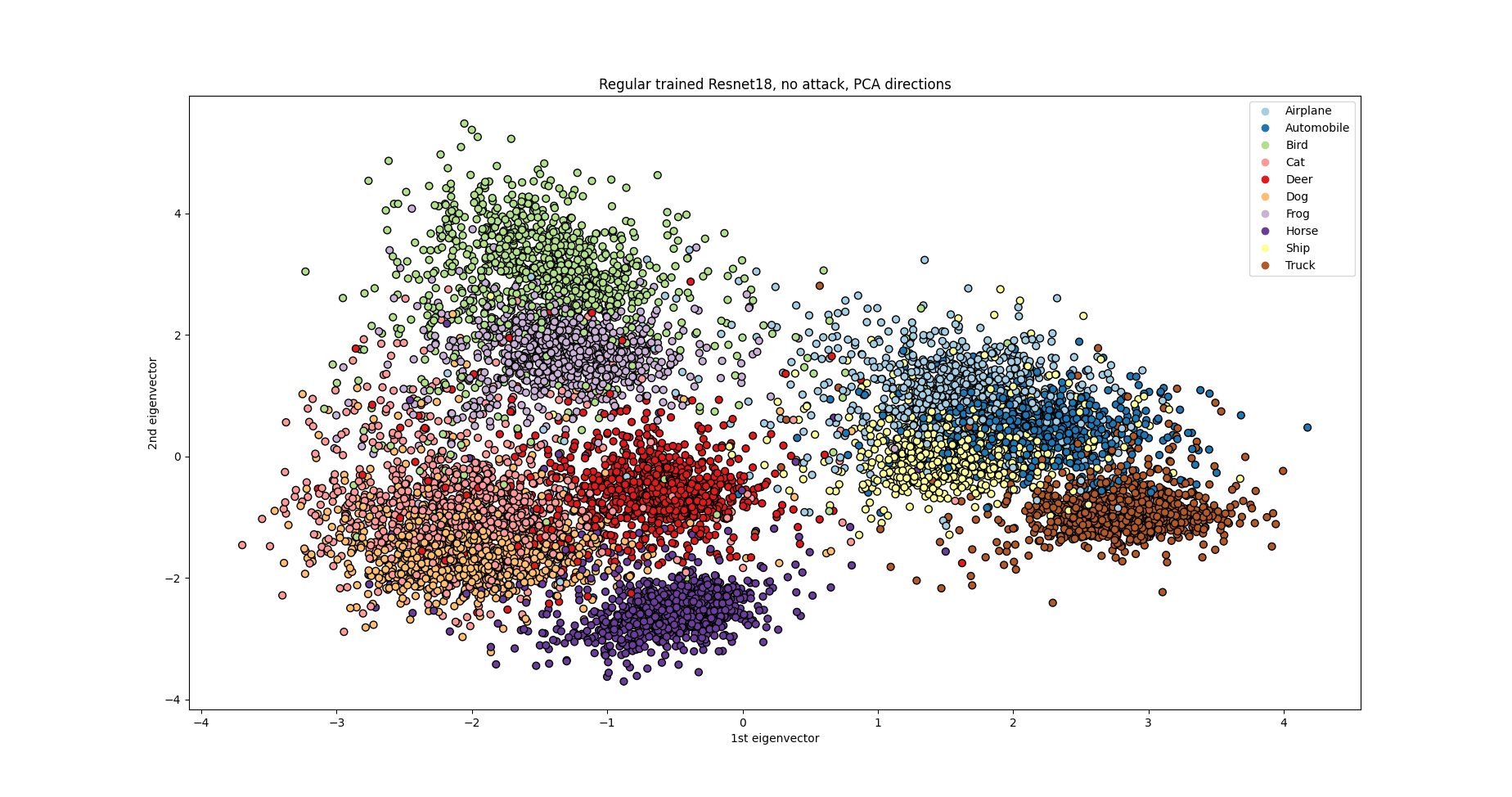

The visualization of representations presents a challenge due to the high dimensionality of representation vectors. Nonetheless, such visualization can be useful for analysis purposes. To address this issue, we employ Principal Component Analysis (PCA) to reduce the dimensionality of representations from 512 to 2. We limit our experimentation to ResNet18 due to computational constraints.
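The dimensionality reduction step can be sketched with a singular value decomposition. In the example below random vectors stand in for the 512-dimensional representations, and the function name `pca_2d` is a hypothetical choice; only the reduction from 512 to 2 dimensions follows the description above.

```python
import numpy as np

def pca_2d(representations):
    """Project (n_samples, n_features) representations onto their first
    two principal components."""
    centered = representations - representations.mean(axis=0)
    # Rows of Vt are the principal directions, ordered by explained variance.
    _, _, Vt = np.linalg.svd(centered, full_matrices=False)
    return centered @ Vt[:2].T

# Random stand-ins for 200 representation vectors of dimension 512.
rng = np.random.default_rng(0)
reps = rng.normal(size=(200, 512))
points = pca_2d(reps)
print(points.shape)
```

The two resulting columns can be plotted directly as a scatter plot, with one color per class.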

We present the visualization of representations for different combinations of models and norms of PGD attacks in Figures 1 - 5. Figure 1 depicts the representations of samples from CIFAR-10 for a regularly trained ResNet18, which serves as a baseline case. In Figures 2 and 3, we visualize the representations of adversarial samples with $L_2$ and $L_\infty$ norms of attack, respectively. Furthermore, we examine the representations of adversarial samples for adversarially trained models in Figures 4 and 5. To challenge the models, we attack each of them with a norm different from the one used in training: the model trained on PGD with one norm is tested on a PGD attack with the other norm (Figure 5), and vice versa (Figure 4). We group the representations of different classes by colors in all figures to show how the representations of different classes intermingle under adversarial attacks.

The representations of the regularly trained network are clustered according to the classes in the data set, as depicted in Figure 1. However, when subjected to adversarial attacks, all representations become heavily mixed, resulting in a more challenging classification task due to overlapping representations of different classes. The most mixed representations were observed on the regularly trained network under the $L_\infty$-norm PGD attack (Figure 3). In contrast, adversarial training, as shown in Figures 4 and 5, resulted in some class representations (e.g., "Automobile" or "Truck") remaining clustered while becoming closer to each other than those without attacks. The same pattern was observed for the $L_\infty$-norm attack (Figure 3).

Both $L_2$ and $L_\infty$ norm attacks cause a marked decrease in accuracy, as evidenced by the aforementioned experiments (Tables 4 and 5). However, the impact of these norms on representations is not apparent from a plain accuracy analysis. The visualization of the distribution of representations reveals patterns that warrant further investigation.

4.4 Distance to decision boundary (RA 3)

This experiment investigates the impact of adversarial training on the decision boundaries of models. Specifically, the mean distance between samples and the decision boundary is examined to determine how it differs for adversarially trained models. We follow Mickisch et al. [18], who used the DeepFool attack (Section 2.0.4) to measure this distance.

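The decision boundary is defined as the set of input images where two or more classes share the same maximum probability, indicating that the classifier is uncertain about the class of the image:

$$B = \left\{ x : \exists\, i \neq j,\ f_i(x) = f_j(x) = \max_{k \in \{1, \dots, K\}} f_k(x) \right\}$$

where $f_k(x)$ is the probability the classifier assigns to class $k$ and $K$ is the number of classes. Under this definition, the use of the DeepFool attack is natural because it aims to find a perturbation to the closest wrong class. The distance of a sample $x$ to the decision boundary can be measured as:

$$d(x) = \min_{\delta} \|\delta\|_p \quad \text{subject to} \quad x + \delta \in B$$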

The pretrained ResNet50 models were adversarially trained with 20 steps of the PGD attack, while the ResNet18 models are trained under PGD with only 5 steps. The testing dataset is CIFAR-10. The outcomes of ResNet18 and ResNet50 models with different training configurations are presented in Table 8. The table displays the mean distance between the decision boundaries of the models and images from CIFAR-10, calculated using both $L_2$ and $L_\infty$ distances. The "Steps" column reports the mean number of DeepFool iterations spent during attack generation. The comparison is made between the models from Tables 1, 2, and 4.

| Model | Mean $L_2$ distance | Mean $L_\infty$ distance | Steps |
|---|---|---|---|
| Regular trained ResNet18 | 0.1793 | 0.0227 | 1.92 |
| Adversarial trained ResNet18 ( norm) | 0.659 | 0.0824 | 1.98 |
| Adversarial trained ResNet18 ( norm) | 0.1018 | 0.0139 | 2.5 |
| Regular trained ResNet50 | 0.17 | 0.02 | 2.58 |
| Adversarial trained ResNet50 ( norm) | 1.3728 | 0.162 | 1.77 |
| Adversarial trained ResNet50 ( norm) | 1.18 | 0.303 | 2.66 |

The results indicate that the mean distance to the decision boundary for the ResNet18 models (for all types of training) is relatively small, especially for the $L_\infty$ norm. A small distance from a sample to the decision boundary implies that it is easier to misclassify this sample because it does not require a significant perturbation. Interestingly, the distance for one of the adversarially trained ResNet18 models (third row of Table 8) is actually smaller than for the regularly trained one. However, testing of this model under the PGD attack (Table 4) suggests that it has some robustness. Therefore, the reliability of this popular method of model testing is called into question.
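The distance measurement used in this section can be illustrated on an affine multi-class classifier, for which the distance to the nearest decision boundary has a closed form in both norms: the score gap divided by the $L_2$ norm of the weight difference gives the $L_2$ distance, and the same gap divided by the $L_1$ norm of the weight difference gives the $L_\infty$ distance. The classifier, the sample, and the function names are hypothetical; scores are used instead of probabilities, which leaves the argmax boundaries unchanged for a softmax output.

```python
import math

def scores(W, b, x):
    return [sum(wi * xi for wi, xi in zip(row, x)) + bk
            for row, bk in zip(W, b)]

def boundary_distances(W, b, x):
    """Return (L2 distance, L-infinity distance) from x to the nearest
    decision boundary of the classifier argmax_k (W x + b)_k."""
    f = scores(W, b, x)
    k = max(range(len(f)), key=f.__getitem__)   # predicted class
    best_l2 = best_linf = math.inf
    for j in range(len(f)):
        if j == k:
            continue
        gap = f[k] - f[j]
        w_diff = [a - c for a, c in zip(W[k], W[j])]
        best_l2 = min(best_l2, gap / math.sqrt(sum(v * v for v in w_diff)))
        best_linf = min(best_linf, gap / sum(abs(v) for v in w_diff))
    return best_l2, best_linf

# Hypothetical three-class classifier on two-dimensional inputs.
W = [[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]]
b = [0.0, 0.0, 0.0]
d_l2, d_linf = boundary_distances(W, b, [2.0, 1.0])
print(d_l2, d_linf)
```

The $L_\infty$ distance is never larger than the $L_2$ distance for the same sample, which is consistent with the ordering of the two distance columns in Table 8.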

5 Conclusion

The experiments demonstrate that a model trained on a "robust" data set is still vulnerable to some attacks; thus, adversarial attacks do not compromise only non-robust features. Therefore, the robust features do not generalize well, especially under $L_\infty$ norm attacks. We assume that the small difference between clean and adversarial inputs for the $L_\infty$ attack leads to a huge gap in latent space between them; SVCCA analysis of different attack representations supports this assumption. Our visualization of neural network representations also displays the difference between $L_2$ and $L_\infty$ norms of attack.

In light of these results, we recommend that researchers in the field of adversarial attacks and defense mechanisms pay closer attention to $L_\infty$-norm attacks to avoid a false sense of security. It is crucial to consider this norm in their tests to ensure the robustness of models against potential attacks.

References

- [1] An, S., Bhat, G., Gumussoy, S., Ogras, Ü.Y.: Transfer learning for human activity recognition using representational analysis of neural networks. ACM Transactions on Computing for Healthcare 4, 1 – 21 (2020)

- [2] Batanina, E., Bekkouch, I.E.I., Khan, A., Khattak, A.M., Bortnikov, M.: Domain adaptation for car accident detection in videos. In: Ninth International Conference on Image Processing Theory, Tools and Applications, IPTA 2019, Istanbul, Turkey, November 6-9, 2019. pp. 1–6. IEEE (2019). https://doi.org/10.1109/IPTA.2019.8936124

- [3] Bekkouch, I.E.I., Youssry, Y., Gafarov, R., Khan, A., Khattak, A.M.: Triplet loss network for unsupervised domain adaptation. Algorithms 12(5), 96 (2019). https://doi.org/10.3390/a12050096

- [4] Biggio, B., Corona, I., Maiorca, D., Nelson, B., Šrndić, N., Laskov, P., Giacinto, G., Roli, F.: Evasion attacks against machine learning at test time. In: Machine Learning and Knowledge Discovery in Databases: European Conference, ECML PKDD 2013, Prague, Czech Republic, September 23-27, 2013, Proceedings, Part III 13. pp. 387–402. Springer (2013)

- [5] Carlini, N., Wagner, D.A.: Towards evaluating the robustness of neural networks. 2017 IEEE Symposium on Security and Privacy (SP) pp. 39–57 (2016)

- [6] Chakraborty, A., Alam, M., Dey, V., Chattopadhyay, A., Mukhopadhyay, D.: Adversarial attacks and defences: A survey. arXiv preprint arXiv:1810.00069 (2018)

- [7] Darlow, L.N., Crowley, E.J., Antoniou, A., Storkey, A.J.: Cinic-10 is not imagenet or cifar-10. arXiv preprint arXiv:1810.03505 (2018)

- [8] Engstrom, L., Gilmer, J., Goh, G., Hendrycks, D., Ilyas, A., Madry, A., Nakano, R., Nakkiran, P., Santurkar, S., Tran, B., Tsipras, D., Wallace, E.: A discussion of ’adversarial examples are not bugs, they are features’. Distill (2019). https://doi.org/10.23915/distill.00019, https://distill.pub/2019/advex-bugs-discussion

- [9] Engstrom, L., Ilyas, A., Salman, H., Santurkar, S., Tsipras, D.: Robustness (python library) (2019), https://github.com/MadryLab/robustness

- [10] Goodfellow, I.J., Shlens, J., Szegedy, C.: Explaining and harnessing adversarial examples. In: 3rd International Conference on Learning Representations, ICLR 2015, San Diego, CA, USA, May 7-9, 2015, Conference Track Proceedings (2015)

- [11] Ilyas, A., Santurkar, S., Tsipras, D., Engstrom, L., Tran, B., Madry, A.: Adversarial examples are not bugs, they are features. In: Advances in Neural Information Processing Systems 32: Annual Conference on Neural Information Processing Systems 2019, NeurIPS 2019, December 8-14, 2019, Vancouver, BC, Canada. pp. 125–136 (2019)

- [12] Karim, F., Majumdar, S., Darabi, H.: Adversarial attacks on time series. IEEE Transactions on Pattern Analysis and Machine Intelligence 43, 3309–3320 (2019)

- [13] Khan, A., Fraz, K.: Post-training iterative hierarchical data augmentation for deep networks. In: Larochelle, H., Ranzato, M., Hadsell, R., Balcan, M., Lin, H. (eds.) Advances in Neural Information Processing Systems 33: Annual Conference on Neural Information Processing Systems 2020, NeurIPS 2020, December 6-12, 2020, virtual (2020)

- [14] Khan, A.M., Tufail, A., Khattak, A.M., Laine, T.H.: Activity recognition on smartphones via sensor-fusion and kda-based svms. Int. J. Distributed Sens. Networks 10 (2014). https://doi.org/10.1155/2014/503291

- [15] Kim, B., Sagduyu, Y.E., Davaslioglu, K., Erpek, T., Ulukus, S.: Channel-aware adversarial attacks against deep learning-based wireless signal classifiers. IEEE Transactions on Wireless Communications PP, 1–1 (2020)

- [16] Krizhevsky, A.: Learning multiple layers of features from tiny images (2009)

- [17] Madry, A., Makelov, A., Schmidt, L., Tsipras, D., Vladu, A.: Towards deep learning models resistant to adversarial attacks. In: 6th International Conference on Learning Representations, ICLR 2018, Vancouver, BC, Canada, April 30 - May 3, 2018, Conference Track Proceedings. OpenReview.net (2018)

- [18] Mickisch, D., Assion, F., Greßner, F., Günther, W., Motta, M.: Understanding the decision boundary of deep neural networks: An empirical study. ArXiv abs/2002.01810 (2020)

- [19] Moosavi-Dezfooli, S.M., Fawzi, A., Frossard, P.: Deepfool: A simple and accurate method to fool deep neural networks. 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR) pp. 2574–2582 (2015)

- [20] Morcos, A.S., Raghu, M., Bengio, S.: Insights on representational similarity in neural networks with canonical correlation. In: Neural Information Processing Systems (2018)

- [21] Protasov, S., Khan, A., Sozykin, K., Ahmad, M.: Using deep features for video scene detection and annotation. Signal, Image and Video Processing 12 (07 2018). https://doi.org/10.1007/s11760-018-1244-6

- [22] Raghu, M., Gilmer, J., Yosinski, J., Sohl-Dickstein, J.: Svcca: Singular vector canonical correlation analysis for deep learning dynamics and interpretability. Advances in neural information processing systems 30 (2017)

- [23] Rasheed, B., Khan, A., Kazmi, S., Hussain, R., Jalil Piran, M., Suh, D.: Adversarial attacks on featureless deep learning malicious urls detection. Computers, Materials & Continua 680, 921–939 (01 2021). https://doi.org/10.32604/cmc.2021.015452

- [24] Rivera, A.R., Khan, A., Bekkouch, I.E.I., Sheikh, T.S.: Anomaly detection based on zero-shot outlier synthesis and hierarchical feature distillation. IEEE Transactions on Neural Networks and Learning Systems 33, 281–291 (2020)

- [25] Salman, H., Ilyas, A., Engstrom, L., Kapoor, A., Madry, A.: Do adversarially robust imagenet models transfer better? Advances in Neural Information Processing Systems 33, 3533–3545 (2020)

- [26] Tramèr, F., Kurakin, A., Papernot, N., Goodfellow, I.J., Boneh, D., McDaniel, P.D.: Ensemble adversarial training: Attacks and defenses. In: 6th International Conference on Learning Representations, ICLR 2018, Vancouver, BC, Canada, April 30 - May 3, 2018, Conference Track Proceedings. OpenReview.net (2018)

- [27] Wong, E., Kolter, Z.: Provable defenses against adversarial examples via the convex outer adversarial polytope. In: International conference on machine learning. pp. 5286–5295. PMLR (2018)

- [28] Wong, E., Rice, L., Kolter, J.Z.: Fast is better than free: Revisiting adversarial training. In: 8th International Conference on Learning Representations, ICLR 2020, Addis Ababa, Ethiopia, April 26-30, 2020. OpenReview.net (2020)

- [29] Wong, E., Schmidt, F.R., Metzen, J.H., Kolter, J.Z.: Scaling provable adversarial defenses. In: Neural Information Processing Systems (2018)

- [30] Zhang, C., Benz, P., Imtiaz, T., Kweon, I.S.: Understanding adversarial examples from the mutual influence of images and perturbations. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. pp. 14521–14530 (2020)