Non-Markovian Reduced Models to Unravel Transitions in Non-equilibrium Systems

Abstract

This work proposes a general framework for analyzing noise-driven transitions in spatially extended non-equilibrium systems and explains the emergence of coherent patterns beyond the instability onset. The framework relies on stochastic parameterization formulas to reduce the complexity of the original equations while preserving the essential dynamical effects of unresolved scales. The approach is flexible and applies to both Gaussian noise and non-Gaussian noise with jumps.

Our stochastic parameterization formulas offer two key advantages. First, they can approximate stochastic invariant manifolds when these manifolds exist. Second, even when such manifolds break down, our formulas can be adapted through a simple optimization of their constitutive parameters. This allows us to handle scenarios with weak time-scale separation where the system has undergone multiple transitions, resulting in large-amplitude solutions not captured by invariant manifolds or other time-scale separation methods.

The optimized stochastic parameterizations then capture how small-scale noise impacts larger scales through the system’s nonlinear interactions. This is achieved because our parameterizations incorporate, by construction, non-Markovian (memory-dependent) coefficients into the reduced equation. These coefficients account for the noise’s past influence, not just its current value, using a finite memory length that is selected for optimal performance. The specific “memory” function, which determines how this past influence is weighted, depends on both the strength of the noise and how it interacts with the system’s nonlinearities.

Remarkably, training our theory-guided reduced models on a single noise path effectively learns the optimal memory length for out-of-sample predictions. This approach indeed retains good accuracy in predicting noise-induced transitions, including rare events, when tested against a large ensemble of different noise paths. This success stems from our “hybrid” approach, which combines analytical understanding with data-driven learning. This combination avoids a key limitation of purely data-driven methods: their difficulty in generalizing to unseen scenarios, also known as the “extrapolation problem.”

pacs:

05.45.-a, 89.75.-k

I Introduction

Non-equilibrium systems are irreversible systems characterized by a continuous flow of energy driven by external forces or internal fluctuations. They come in many different types and are found in a wide variety of fields, including physics, chemistry, biology, and engineering. Non-equilibrium systems can exhibit complex behavior, including self-organization, pattern formation, and chaos. These complex behaviors arise from the interplay of nonlinear dynamics and statistical fluctuations. The emergence of coherent, complex patterns is ubiquitous in many spatially extended non-equilibrium systems; see e.g. [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]. Mechanisms explaining the emergence of these patterns include the development of an instability saturated by nonlinear effects, whose calculation can typically be carried out near the onset of linear instability; see e.g. [11, 12]. Historically, hydrodynamic systems have commonly been used as prototypes to study instabilities out of equilibrium in spatially extended systems, from both an experimental and a theoretical point of view [13, 3].

To describe how a physical instability develops in such systems with infinitely many degrees of freedom, we often focus on the temporal evolution of the amplitudes of specific normal modes. These typically correspond to modes that are mildly unstable or only slightly damped in linear theory. When the number of these nearly marginal modes is finite, their amplitudes are governed by ordinary differential equations (ODEs) in which the growth rates of the linear theory have been renormalized by nonlinear terms [1, 11, 3, 12]. Intuitively, this reduction rests on a simple separation of time scales: modes that have just crossed the imaginary axis have a small real part and evolve slowly on long time scales, while all the other, fast modes rapidly adapt themselves to these slow modes.
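The time-scale separation argument can be illustrated on a hypothetical two-mode toy model (not one of the systems studied in this work). A slow mode x with small growth rate ε is coupled to a fast, damped mode y through dx/dt = εx − xy and dy/dt = −γy + x². Slaving the fast mode to the slow one, y ≈ x²/γ, yields the reduced amplitude equation dx/dt = εx − x³/γ, in which the linear growth rate is saturated by a cubic term. The sketch below uses assumed parameter values. It integrates both models with forward Euler and checks that they settle on the same amplitude √(εγ).

```python
import math

def simulate(eps=0.05, gamma=1.0, x0=0.01, y0=0.0, dt=0.01, T=300.0):
    """Forward-Euler integration of the toy slow-fast model and of its
    adiabatically reduced version; returns the final states."""
    x_full, y_full, x_red = x0, y0, x0
    for _ in range(int(T / dt)):
        # Full model: slow mode x (growth rate eps) coupled to fast mode y (decay rate gamma).
        dx = eps * x_full - x_full * y_full
        dy = -gamma * y_full + x_full**2
        x_full, y_full = x_full + dt * dx, y_full + dt * dy
        # Reduced model: y replaced by its slaved value x**2 / gamma.
        x_red += dt * (eps * x_red - x_red**3 / gamma)
    return x_full, y_full, x_red

eps, gamma = 0.05, 1.0
x_full, y_full, x_red = simulate(eps, gamma)
x_star = math.sqrt(eps * gamma)  # equilibrium amplitude of the reduced equation
print(f"full: {x_full:.4f}, reduced: {x_red:.4f}, predicted: {x_star:.4f}")
```

In this toy the two equilibria coincide exactly. The slaving error shows up only in the transients, where the fast mode lags behind x²/γ.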

However, for non-equilibrium systems away from the onset of linear instability, such as when the Reynolds number of a fluid flow increases far beyond the laminar regime, the emergence of coherent patterns does not fit within this instability/nonlinear saturation theory, because the reduction principle of the fast modes onto the slow ones breaks down [11]. New reduction techniques are then needed to explain emergence. Furthermore, in the presence of random fluctuations, reduced equations in the form of deterministic ODEs are inherently incapable of capturing phenomena like noise-induced transitions. Noise can have unexpected outcomes, such as altering the sequence of transitions, possibly suppressing instabilities [14] or the onset of turbulence [15], or, conversely, exciting transitions [16, 7]. Examples in which noise-induced transitions play an important role include the generation of convective rolls [17, 18], electroconvection in nematic liquid crystals [16, 19], certain oceanic flows [20, 21], and climate phenomena [22, 23, 24, 25].

This work aims to gain deeper insights into the efficient derivation of reduced models for such non-equilibrium systems subject to random fluctuations [3, 7]. In that respect, we seek reduced models able to capture the emergence of noise-driven spatiotemporal patterns and predict their transitions triggered by the subtle coupling between noise and the nonlinear dynamics. Our overall goal is to develop a unified framework for identifying the key variables and their interactions (parameterization) in complex systems undergoing noise-driven transitions. We specifically focus on systems that have experienced multiple branching points (bifurcations), leading to high-multiplicity regimes with numerous co-existing metastable states whose amplitude is relatively large (order one or more) resulting from a combination of strong noise, inherent nonlinear dynamics, or both. The purpose is thus to address limitations of existing approaches like amplitude equations and center manifold techniques in dealing with such regimes.

To address these limitations, the framework of optimal parameterizing manifolds (OPMs) introduced in [26, 27, 28] for forced-dissipative systems offers a powerful solution. This framework allows for the efficient derivation of parameterizations away from the onset of instability, achieved through continuous deformations of near-onset parameterizations that are optimized by data-driven minimization. Typically, the loss function involves a natural discrepancy metric measuring the defect of parameterization of the unresolved variables by the resolved ones. The parameterizations to be optimized are derived from the governing equations by means of backward-forward systems. These systems provide useful approximations of the unstable growth saturated by the nonlinear terms, even when the spectral gap between the stable and unstable modes is small [27, 29, 28]. Once optimized, these approximations indeed do not suffer from the restrictions of invariant manifolds (the spectral gap condition [27, Eq. (2.18)]). This allows handling situations with, e.g., weak time-scale separation between the resolved and unresolved modes.

In this article, we carry over this variational approach to spatially extended non-equilibrium systems within the framework of stochastic partial differential equations (SPDEs). In particular, we extend the parameterization formulas obtained in [30, 29, 31] for SPDEs driven by multiplicative noise (parameter noise [18, 7]) to SPDEs driven, beyond this onset, by more realistic spatio-temporal noise of either Gaussian or non-Gaussian nature with jumps. For this class of SPDEs, our framework can deal with the important case of cutoff scales larger than the scales forced stochastically.

For this forcing scenario, the stochastic parameterizations derived in this work give rise to reduced systems that take the form of stochastic differential equations (SDEs) with non-Markovian, path-dependent coefficients depending on the noise’s past; see e.g. Section V.2 below. Many time series records from real-world datasets are known to exhibit long-term memory. This is the case for the long-range memory features assumed to represent the internal variability of the climate on time scales from years to centuries [32, 33, 34, 35]. There, the surface temperature is considered as a superposition of internal variability (the response to stochastic forcing) and a forced signal, which is the linear response to external forcing. The background variability may be modeled using a stochastic process with memory, or a different process that incorporates non-Gaussianity if this is considered more appropriate [36]. As will become apparent at the end of this paper, our reduction approach is adaptable to PDEs under the influence of stochastic forcing with long-range dependence; see Section IX.

In parallel, and since Hasselmann’s seminal work [37], several climate models have incorporated stochastic forcing to represent unresolved processes [38, 39, 40, 41, 42, 43]. Our approach shows that reduced models derived from these complex systems are expected to exhibit finite-range memory effects depending on the past history of the stochastic forcing, even if the latter is white in time. These exogenous memory effects, stemming from the forcing noise’s history, are distinct from the endogenous memory effects encountered in the reduction of nonlinear, unforced systems [44, 45, 43]. The latter, predicted by the Mori-Zwanzig (MZ) theory, are functionals of the past of the resolved state variables. Endogenous memory effects often appear when the validity of the conditional expectation in the MZ expansion breaks down [46, 26, 47, 43]. The exogenous memory effects dealt with in this work have a different origin: they arise as soon as the cutoff scale is larger than the scales forced stochastically.

Reduced models with non-Markovian coefficients responsible for such exogenous memory effects have already been encountered in the reduction of stochastic systems driven by white noise, albeit near a change of stability. These are obtained from reduction approaches that benefit from timescale separation, such as stochastic normal forms [48, 49, 50], stochastic invariant manifold approximations [51, 30, 29, 31], or multiscale methods [52, 53, 54, 55]. Our reduction approach is not restricted to settings with timescale separation.

In that respect, our reduction framework is tested against a stochastic Allen-Cahn equation (sACE), a powerful tool for modeling non-equilibrium phase transitions in various scientific fields [1, 3, 56, 57]. The latter model is set in a parameter regime with weak timescale separation and far from the instability onset, in which the system exhibits multiple coexisting metastable states connected to the basic state through rare or typical stochastic transition paths.

We show that our OPM reduced systems, when trained on a single path, not only retain a remarkable predictive power to emulate ensemble statistics (correlations, power spectra, etc.) obtained as averages over a large ensemble of noise realizations, but also anticipate the system’s typical and rare metastable states and their statistics of occurrence. The ability of the OPM reduced system to accurately reproduce such transitions is rooted in its very structure. Its coefficients are nonlinear functionals of the aforementioned non-Markovian terms (see Eq. (85) below), allowing for an accurate representation of the genuine nonlinear interactions between noise and nonlinear terms in the original sACE, which drive fluctuations in the large-mode amplitudes.

Because the cutoff scale defining the retained large-scale dynamics is chosen here to be larger than the forcing scale, any deterministic reduction (e.g. Galerkin, invariant manifold, etc.) would filter out the effects of the underlying noise. Such reductions are then blind to the subtle fluctuations that drive the system’s behavior and eventually lead to stochastic transitions. In contrast, our stochastic parameterization approach sees right through this filter: it tracks how the noise, acting in the “unseen” part of the system, interacts with the resolved modes through the non-Markovian coefficients of our reduced models.

To demonstrate the broad applicability of our reduction approach, we apply it in Section VI to another significant class of spatially extended non-equilibrium systems: jump-driven SPDEs. The unforced, nonlinear dynamics is chosen here to exhibit an S-shaped solution curve, a feature typically indicative of multistability, tipping points, and hysteresis. Such behaviors are observed in various fields, including combustion theory [58, 59], plasma physics [60, 61], ecology [9], neuroscience [62], climate science [63, 64], and oceanography [65, 66]. Understanding the complex interactions between noise and nonlinearity in these phenomena gives insights into critical transitions and tipping points, which are increasingly relevant to addressing global challenges like climate change [67, 68, 69, 70, 43].

Jump processes offer a powerful tool for modeling complex systems with non-smooth dynamics. They provide valuable insights into multistable behaviors [71, 72] and have been applied to various real-world phenomena, such as paleoclimate events [73, 36], chaotic transport [74], atmospheric dynamics [75, 76, 77, 78, 79, 80], and cloud physics [81, 82].

SPDEs driven by jump processes are gaining increasing attention in applications [83]. For instance, jump processes can replace (non-smooth) “if-then” conditions in such models, enabling more efficient simulations [80]. Nevertheless, efficient reduction techniques to disentangle the jump interactions from other, smoother nonlinear components of the model are still under development [84, 85, 86]. Our stochastic parameterization framework provides a promising solution in this direction, as demonstrated in Section VI. By capturing the intricate interplay between jump noise and nonlinear dynamics within the reduced equations, our approach can significantly simplify the analysis of such complex systems.

II Invariance Equation and Approximations

II.1 Spatially extended non-equilibrium systems

This article is concerned with the efficient reduction of spatially extended non-equilibrium systems. To do so, we work within the framework of Stochastic Equations in Infinite Dimensions [87] and its Ergodic Theory [88]. Formally, these equations take the following form

| (1) |

in which the forcing term is a stochastic process, composed either of Brownian motions or of jump processes, whose exact structure is specified below. This formalism provides a convenient way to analyze stochastic partial differential equations (SPDEs) by means of semigroup theory [89, 90], in which the unknown typically evolves in a Hilbert space.

In Eq. (1), the linear operator is a differential operator, while the nonlinear operator accounts for the nonlinear terms. Both operators may involve a loss of spatial regularity when applied to a function of the ambient Hilbert space. A consistent existence theory for the solutions of SPDEs and for their stochastic invariant manifolds must take such loss-of-regularity effects into account [87, 88].

General assumptions encountered in applications are made on the linear operator following [89]. More specifically, we assume

| (2) |

where is sectorial with domain which is compactly and densely embedded in . We also assume that is stable, while is a low-order perturbation of , i.e. is a bounded linear operator such that for some in . We refer to [89, Sec. 1.4] for an intuitive presentation of fractional powers of an operator and the characterization of the so-called operator domain . In practice, the choice of should match the loss of regularity effects caused by the nonlinear terms so that

is a well-defined -smooth mapping that satisfies , (tangency condition), and

| (3) |

with , and denoting the leading-order operator (on ) in the Taylor expansion of , near the origin. A broad class of spatially extended stochastic equations from physics can be recast into this framework (see [91, 87, 30, 92, 31] for examples), as can time-delay systems subject to stochastic disturbances [82, 93].

Throughout this article, we assume that the driving noise in Eq. (1) is an -valued stochastic process that takes the form

| (4) |

where the are eigenmodes of , the denote either a finite family of mutually independent jump processes, or mutually independent Brownian motions, , over their relevant probability space endowed with its canonical probability measure and filtration ; see e.g. [50, Appendix A.3].

Within this framework, the reduction of spatially extended non-equilibrium systems is organized in terms of resolved and unresolved spatial scales. The resolved scales are typically the large spatial scales (small wavenumbers), and the unresolved ones the smaller scales (larger wavenumbers). The eigenmodes of the linear operator therefore play a central role throughout this paper in ranking the spatial scales. Typically, we assume that the state space of resolved variables is spanned by the following modes

| (5) |

where the correspond to large-scale modes up to a cutoff-scale associated with some index (see Remark II.1). The subspace of unresolved modes is then the orthogonal complement of in , namely

| (6) |

To these subspaces, we associate their respective canonical projectors denoted by

| (7) |
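The resolved/unresolved splitting can be made concrete on a grid. The minimal sketch below assumes, for illustration only, a 1D domain (0, π) with Dirichlet boundary conditions, for which the eigenmodes of a Laplacian-type operator are sine modes. The discrete sine transform (DST-I) matrix S is orthonormal and symmetric (S·S = I). The projector onto the first m modes is therefore S·diag(mask)·S, and its complement is I − P_c. The helper names and the choice J = 64, m = 2 are hypothetical.

```python
import numpy as np

def dst_matrix(J):
    """Orthonormal, symmetric DST-I matrix: its columns are discrete sine modes."""
    j = np.arange(1, J + 1)
    return np.sqrt(2.0 / (J + 1)) * np.sin(np.pi * np.outer(j, j) / (J + 1))

def projectors(J, m):
    """Projectors onto the first m sine modes (resolved) and onto the rest (unresolved)."""
    S = dst_matrix(J)
    mask = (np.arange(1, J + 1) <= m).astype(float)
    P_c = S @ np.diag(mask) @ S      # S is its own inverse
    P_s = np.eye(J) - P_c
    return P_c, P_s

J, m = 64, 2
x = np.pi * np.arange(1, J + 1) / (J + 1)          # interior grid points of (0, pi)
f = np.sin(x) + 0.1 * np.sin(5 * x)                # large-scale part + small-scale part
P_c, P_s = projectors(J, m)
print(np.max(np.abs(P_c @ f - np.sin(x))))         # resolved part recovers sin(x)
```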

Throughout Section III below and the remainder of this section, we focus on the case of driving Brownian motions; the case of driving jump processes is dealt with in Section VI.

Our goal is to provide a general approach to derive reduced models that preserve the essential features of the large-scale dynamics without resolving the small scales.

We present hereafter, and in Section III below, the formulas of the underlying small-scale parameterizations in the Gaussian noise case. The formalism is flexible and easily adaptable to non-Gaussian noise with jumps, as discussed in Sections VI and VII below.

Remark II.1

Within our working assumptions, the spectrum consists only of isolated eigenvalues with finite multiplicities. This combined with the sectorial property of implies that there are at most finitely many eigenvalues with a given real part. The sectorial property of also implies that the real part of the spectrum, , is bounded above (see also [94, Thm. II.4.18]). These two properties of allow us in turn to label elements in according to the lexicographical order which we adopt throughout this article:

| (8) |

such that for any we have either

| (9) |

or

| (10) |

This way, we can rely on a simple labeling of the eigenvalues/eigenmodes by positive integers to organize the resolved and unresolved scales and the corresponding parameterization formulas derived hereafter. In practice, when the spatial domain is 2D or 3D, it is usually physically more intuitive to label the eigenelements by wave vectors. The parameterization formulas presented below can be easily recast within this convention; see e.g. [95].
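In practice, the labeling of Remark II.1 amounts to a sort. The sketch below assumes the ordering described there: decreasing real part, with ties broken by decreasing imaginary part. Equations (9) and (10) are not reproduced in this text, so treat that tie-breaking rule as an assumption. Real and imaginary parts are rounded before comparison, so that eigenvalues computed in floating point with equal real parts are recognized as ties. The spectrum used below is hypothetical.

```python
def lexicographic_order(eigenvalues, decimals=12):
    """Label eigenvalues 1, 2, 3, ... by decreasing real part, then by
    decreasing imaginary part (assumed tie-breaking convention)."""
    ordered = sorted(
        eigenvalues,
        key=lambda b: (-round(b.real, decimals), -round(b.imag, decimals)),
    )
    return {n: beta for n, beta in enumerate(ordered, start=1)}

spectrum = [-4.0, 1.0, -1 - 2j, 0.5, -1 + 2j]      # hypothetical eigenvalues
labels = lexicographic_order(spectrum)
m = 2                                              # cutoff index of the resolved modes
resolved = [labels[n] for n in range(1, m + 1)]
unresolved = [labels[n] for n in range(m + 1, len(labels) + 1)]
print(labels)
```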

II.2 Invariance equation and backward-forward systems

As mentioned in the Introduction, this work extends the parameterization formulas in [27, 28] (designed for constant or time-dependent forcing) to the stochastic setting. In both deterministic and stochastic contexts, deriving the relevant parameterizations and reduced systems relies on solving Backward-Forward (BF) systems arising in the theory of invariant manifolds. Similar to [27, 28], our BF framework allows us to overcome limitations of traditional invariant manifolds, particularly the spectral gap condition ([27, Eq. (2.18)]). This restrictive condition, which requires large gaps in the spectrum of the linear operator, is bypassed through data-driven optimization (detailed in Section III.3) of the backward integration time over which the BF systems are integrated.

Before diving into the parameterization formulas retained to address these limitations (Section II.3), we recall the basics of reducing an SPDE to a Stochastic Invariant Manifold (SIM). In particular, this detour enables us to better appreciate the emergence of BF systems as a natural framework for parameterizations. For that purpose, adapting the approach from [30], we transform the SPDE reduction problem into the more tractable problem of reducing a PDE with random coefficients. This simplification makes the problem significantly more amenable to analysis than its original SPDE form.

To do so, consider the stationary solution to the Langevin equation

| (11) |

(Ornstein-Uhlenbeck (OU) process), where is defined in Eq. (4) with the corresponding mutually independent Brownian motions, , in place of the , i.e.:

| (12) |
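To build intuition for the Ornstein-Uhlenbeck process of Eq. (11), consider the simplest case, assumed here for illustration: the linear part is diagonal in the eigenbasis, with strictly negative eigenvalues β_j on the forced modes. Each mode then obeys dz_j = β_j z_j dt + σ_j dW_j. This equation admits an exact one-step update and a Gaussian stationary law with variance σ_j²/(2|β_j|). The sketch samples that stationary law for many independent paths. The function name and parameter values are hypothetical.

```python
import numpy as np

def simulate_ou_modes(betas, sigmas, dt, n_steps, n_paths, rng):
    """Exact-in-distribution simulation of independent OU modes
    dz_j = beta_j z_j dt + sigma_j dW_j (beta_j < 0), started in the stationary law."""
    betas = np.asarray(betas, dtype=float)
    sigmas = np.asarray(sigmas, dtype=float)
    a = np.exp(betas * dt)                                   # one-step decay factor
    step_std = sigmas * np.sqrt((1.0 - a**2) / (-2.0 * betas))
    var_stat = sigmas**2 / (-2.0 * betas)                    # stationary variance
    z = rng.standard_normal((n_paths, betas.size)) * np.sqrt(var_stat)
    for _ in range(n_steps):
        z = a * z + step_std * rng.standard_normal(z.shape)
    return z, var_stat

rng = np.random.default_rng(0)
z, var_stat = simulate_ou_modes([-1.0, -4.0, -9.0], [0.5, 0.5, 0.5],
                                dt=0.01, n_steps=200, n_paths=20000, rng=rng)
print(z.var(axis=0), var_stat)
```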

Then, for each noise path, the change of variable

| (13) |

transforms the SPDE,

into the following PDE with random coefficients in the -variable:

| (14) |
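The change of variable (13) removes the noise increment from the equation for the new unknown, leaving a differential equation with random coefficients. A scalar caricature, assumed purely for illustration, makes this visible. Take du = (a u − u³) dt + σ dW and the OU process dz = a z dt + σ dW, both driven by the same Brownian path. Then v = u − z solves dv/dt = a v − (v + z)³, which contains no dW term. With Euler-Maruyama steps sharing the same increments, the identity holds step by step, up to round-off.

```python
import numpy as np

rng = np.random.default_rng(0)
a, sigma, dt, n = -0.5, 0.3, 1e-3, 5000
dW = np.sqrt(dt) * rng.standard_normal(n)      # one Brownian path, shared by u and z

u, z, v = np.empty(n + 1), np.empty(n + 1), np.empty(n + 1)
u[0], z[0] = 0.8, 0.0                          # z need not start in its stationary law here
v[0] = u[0] - z[0]
for k in range(n):
    u[k + 1] = u[k] + (a * u[k] - u[k] ** 3) * dt + sigma * dW[k]   # stochastic equation
    z[k + 1] = z[k] + a * z[k] * dt + sigma * dW[k]                 # OU process, same noise
    v[k + 1] = v[k] + (a * v[k] - (v[k] + z[k]) ** 3) * dt          # random ODE: no dW term

max_err = np.max(np.abs((u - z) - v))
print(f"max |(u - z) - v| = {max_err:.2e}")
```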

Under standard conditions involving the spectral gap between the resolved and unresolved modes [96], the path-dependent PDE (14) admits a SIM, and the underlying stochastic SIM mapping, (), satisfies the stochastic invariance equation:

| (15) |

where denotes the operator acting on differentiable mappings from into , as follows:

| (16) |

with and .

To simplify, assume that and are chosen such that . Then in particular . Consider now the Lyapunov-Perron integral

| (17) |

where . In what follows, we denote by the Hermitian inner product on defined as , while denotes the leading-order term of order in the Taylor expansion of around , and denotes the -th eigenmode of the adjoint operator of adopting the same labelling convention as for ; see Remark II.1. To the order we associate the set of indices , and denote by any subset of indices made of (possibly empty) of disjoint elements of .

We have then the following result.

Theorem II.1

Under the previous assumptions, assume furthermore that the following non-resonance condition holds, for any in , , and any subset such as defined above:

| (18) | ||||

with .

Then, the Lyapunov-Perron integral (Eq. (17)) is well-defined almost surely, and is a solution to the following homological equation with random coefficients:

| (19) |

Moreover, we observe that the integral is (formally) obtained as the limit, when goes to infinity, of the -solution to the following backward-forward (BF) auxiliary system:

| (20a) | |||

| (20b) | |||

| (20c) | |||

That is

| (21) |

where denotes the solution to Eq. (20b) at time , when initialized with at .

For a proof of this Theorem, see Appendix A. This theorem extends to the stochastic context Theorem III.1 of [28]. To simplify the notations, we omit below the -dependence in certain notations unless specified otherwise.
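A deterministic two-mode toy, assumed here for illustration and not taken from this work, shows what a BF system of the type (20) computes. Take one resolved mode with eigenvalue β1, one unresolved mode with eigenvalue β2, and a quadratic forcing p² of the unresolved mode. First integrate dp/ds = β1 p backward from p(0) = ξ. Then integrate dq/ds = β2 q + p² forward from q(−τ) = 0. This gives q(0) = ξ²(1 − e^{−δτ})/δ with δ = 2β1 − β2. When δ > 0 (a non-resonance condition for this toy), the limit τ → ∞ is ξ²/δ. The sketch checks the numerical BF solution against this closed form using SciPy's `solve_ivp`, which supports integration backward in time.

```python
import numpy as np
from scipy.integrate import solve_ivp

def bf_parameterization(xi, tau, beta1, beta2):
    """Solve the toy BF system numerically and return q(0)."""
    # Backward step: dp/ds = beta1 * p on [-tau, 0], with p(0) = xi.
    back = solve_ivp(lambda s, p: beta1 * p, (0.0, -tau), [xi],
                     dense_output=True, rtol=1e-10, atol=1e-12)
    # Forward step: dq/ds = beta2 * q + p(s)**2 on [-tau, 0], with q(-tau) = 0.
    fwd = solve_ivp(lambda s, q: beta2 * q + back.sol(s)[0] ** 2, (-tau, 0.0), [0.0],
                    rtol=1e-10, atol=1e-12)
    return fwd.y[0, -1]

def bf_closed_form(xi, tau, beta1, beta2):
    """Closed-form q(0) for the toy model."""
    delta = 2.0 * beta1 - beta2
    return xi**2 * (1.0 - np.exp(-delta * tau)) / delta

xi, beta1, beta2 = 0.3, 0.1, -1.0
for tau in (0.5, 2.0, 5.0):
    print(tau, bf_parameterization(xi, tau, beta1, beta2), bf_closed_form(xi, tau, beta1, beta2))
print("tau -> infinity:", xi**2 / (2 * beta1 - beta2))
```

Increasing τ in this toy shows how finite backward times deform the τ → ∞ limit. This is the degree of freedom exploited later when the backward time is optimized.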

Theorem II.1 shows that solving the BF system (20) gives the solution, namely the Lyapunov-Perron integral (17), to the homological equation (19), which is an approximation of the full invariance equation (15). In the deterministic setting, solutions to the homological equation are known to provide actual approximations of the invariant manifolds with rigorous estimates; see [27, Theorem 1] and [28, Theorem III.1]. Such results extend to the case of SPDEs driven by multiplicative (parameter) noise; see [31, Theorem 2.1] and [30, Theorem 6.1 and Corollary 7.1]. Rigorous error estimates for the approximation of stochastic invariant manifolds of SPDEs driven by additive noise are beyond the scope of this article. The rationale nevertheless stays the same: solutions to the homological equation (19), or equivalently to the BF system (20), provide actual approximations of the underlying stochastic invariant manifolds in the additive noise case as well.

Going back to the SPDE variable , the BF system (20) becomes

| (22a) | |||

| (22b) | |||

| (22c) | |||

Note that in Eq. (22), only the low-mode variable is forced stochastically, and thus from what precedes, , provides a legitimate approximation of the SIM at time when in the original SPDE, only the low modes are forced stochastically, i.e. in (12). In the next section, we consider the general case when the low and high modes are stochastically forced.

II.3 Approximations of fully coupled backward-forward systems

Backward-forward systems have actually been proposed in the literature for the construction of SIMs [97], through a different route than the one presented above, i.e. without exploiting the invariance equation. The idea pursued in [97] is to view SIMs as a fixed point of an integral form of the following fully coupled BF system

| (23a) | |||

| (23b) | |||

| (23c) | |||

where . Note that in Eq. (23), we do not assume the noise term to be a finite sum of independent Brownian motions. With an infinite sum, one can thus also cover the case of space-time white noise [98].

In any case, fully coupled nonlinear problems of this type, which involve backward stochastic equations, are known not to always have solutions. We refer to [97, Proposition 3.1] for conditions ensuring the existence of solutions, and thus of a SIM. This (nonlinear) BF approach to SIMs is also subject to a spectral gap condition that requires the gap to be large enough, as in [96].

Denoting by the solution to Eq. (23b) at , Proposition 3.4 of [97] ensures then that exists in and that this limit gives the sought SIM, i.e.

| (24) |

The SIM is thus obtained, almost surely, as the asymptotic graph of this mapping when the backward integration horizon is sent to infinity.

Instead of relying on an integral form of Eq. (23), one can, however, address the existence and construction of a SIM via a more direct approach, exploiting iteration schemes built directly from Eq. (23). Analyzing the convergence of such iterative schemes (also subject to a spectral gap condition, as in [97]) is beyond the purpose of this article. We rather illustrate how informative such schemes can be in designing stochastic parameterizations in practice.

To solve (23), given an initial guess, , we propose the following iterative scheme:

| (25a) | |||

| (25b) | |||

where and with in and . Here again, the first equation (Eq. (25a)) is integrated backward over , followed by a forward integration of the second equation (Eq. (25b)) over the same interval. To simplify the notation, we often omit the interval in the BF systems below.
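The alternation of backward and forward solves in an iterative scheme of the type (25) can be sketched on a coupled deterministic two-mode toy, assumed purely for illustration and not taken from this work. The resolved mode obeys dx/ds = β1 x − x y and the unresolved one dy/ds = β2 y + x². Starting from the guess y⁽⁰⁾ = 0, each iteration does two things. First, it integrates x backward from x(0) = ξ using the previous iterate of y. Second, it integrates y forward from y(−τ) = 0. The first iterate then reduces to ξ²(1 − e^{−δτ})/δ with δ = 2β1 − β2. Plain explicit Euler steps are used for transparency, and the function name is hypothetical.

```python
import numpy as np

def bf_iterates(xi, tau, beta1=0.1, beta2=-1.0, h=1e-3, n_iter=4):
    """Hypothetical sketch: alternate backward solves of the resolved mode x and
    forward solves of the unresolved mode y on the grid s_i = -tau + i*h."""
    n = int(round(tau / h))
    y = np.zeros(n + 1)                      # initial guess y^(0) = 0
    values = []
    for _ in range(n_iter):
        # Backward step: dx/ds = beta1*x - x*y(s), x(0) = xi, from s = 0 down to s = -tau.
        x = np.empty(n + 1)
        x[n] = xi
        for i in range(n, 0, -1):
            x[i - 1] = x[i] - h * (beta1 * x[i] - x[i] * y[i])
        # Forward step: dy/ds = beta2*y + x(s)**2, y(-tau) = 0, from s = -tau up to s = 0.
        y_new = np.empty(n + 1)
        y_new[0] = 0.0
        for i in range(n):
            y_new[i + 1] = y_new[i] + h * (beta2 * y_new[i] + x[i] ** 2)
        y = y_new
        values.append(y[n])                  # parameterization value at s = 0
    return np.array(values)

xi, tau, beta1, beta2 = 0.3, 5.0, 0.1, -1.0
vals = bf_iterates(xi, tau, beta1, beta2)
delta = 2 * beta1 - beta2
closed = xi**2 * (1 - np.exp(-delta * tau)) / delta   # exact first iterate
print(vals, closed)
```

For small ξ, the successive corrections shrink quickly in this toy. The first iterate already carries most of the parameterization, in line with the small-noise grouping of terms discussed next.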

To help interpret the parameterization produced by such an iterative scheme, we momentarily restrict ourselves to a quadratic nonlinearity and to noise terms scaled by a parameter. This case includes the important example of the Kardar–Parisi–Zhang equation [99]. The scaling factor allows us to group the terms of the stochastic parameterization according to its powers, which provides in particular useful small-noise expansions; see Eq. (30) below.

Under this working framework, if we start with , we get then

| (26) | ||||

which leads to

and

| (27) | ||||

To further make explicit that provides the stochastic parameterization we are seeking (after 2 iterations), we introduce

| (28) | ||||

We can then rewrite the first iterate given in Eq. (26) as follows:

| (29) | ||||

By introducing additionally

we get for any the following explicit expressions

and

Let us denote by the projector onto the mode . Using the above identities in Eq. (27), and setting , we get for ,

| (30) | ||||

where

| (31) | ||||

| (32) | ||||

and

with .

In the classical approximation theory of SIM, one is interested in conditions ensuring convergence of the integrals involved in the random coefficients and as , since (and its higher-order analogues with ) aims to approximate the SIM defined in Eq. (24). In our approach to stochastic parameterization, we do not restrict ourselves to such a limiting case but rather seek optimal backward integration time that minimizes a parameterization defect as explained below in Section III.3.

However, computing this parameterization defect requires computing the random coefficients over the course of time (see Eq. (53) below). The challenge is that these coefficients involve repeated stochastic convolutions in time, for which a direct computation by quadrature should be avoided. We propose below an alternative, efficient way to compute such random coefficients for a simpler, cruder approximation of the parameterization. As shown in Sections V and VI, this other class of stochastic parameterizations turns out to perform very well in the important case where the stochastically forced scales belong exclusively to the neglected scales.

This approximation consists of setting in Eq. (27), which leads to the stochastic parameterization

| (33) | ||||

after restoring as a more general nonlinearity than .

Note also that this parameterization is exactly the solution at of the following BF system

| (34a) | |||

| (34b) | |||

| (34c) | |||

with .

Section III.2 details an efficient computation of the single stochastic convolution in equation (33) using auxiliary ODEs with random coefficients. It is worth noting that previous SPDE reduction approaches have, in certain circumstances or under specific assumptions (e.g., as seen in [54, Eq. (2.2)] and [40, Eq. (2.10)]), deliberately opted to avoid directly computing these convolutions via quadrature [100, 101, 102].
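The auxiliary equations of Section III.2 are not reproduced here. The following is one standard device of the same kind, offered as an assumption rather than as the scheme of this work. A windowed stochastic convolution Z_τ(t) = ∫_{t−τ}^{t} e^{λ(t−s)} dW(s) satisfies dZ_τ = λ Z_τ dt + dW(t) − e^{λτ} dW(t − τ). It can therefore be updated recursively with O(1) work per step, instead of re-summing the whole memory window by quadrature. The sketch compares both discretizations (left-point weights e^{λ(t_k − t_j)}) and checks that they agree. Function names and parameter values are hypothetical.

```python
import numpy as np

def conv_direct(dW, lam, dt, M):
    """Quadrature: Z_k = sum_{j=k-M}^{k-1} exp(lam (t_k - t_j)) dW_j."""
    r = np.exp(lam * dt)
    Z = np.zeros(dW.size + 1)
    for k in range(1, dW.size + 1):
        j = np.arange(max(0, k - M), k)
        Z[k] = np.sum(r ** (k - j) * dW[j])
    return Z

def conv_recursive(dW, lam, dt, M):
    """Recursion Z_{k+1} = r (Z_k + dW_k - r^M dW_{k-M}): a discrete analogue of
    dZ = lam Z dt + dW(t) - exp(lam tau) dW(t - tau)."""
    r = np.exp(lam * dt)
    rM = r ** M
    Z = np.zeros(dW.size + 1)
    for k in range(dW.size):
        dropped = dW[k - M] if k >= M else 0.0   # increment leaving the memory window
        Z[k + 1] = r * (Z[k] + dW[k] - rM * dropped)
    return Z

rng = np.random.default_rng(1)
dt, lam, tau = 0.01, -2.0, 1.0
M = int(round(tau / dt))                         # memory window length in steps
dW = np.sqrt(dt) * rng.standard_normal(2000)
Z_direct = conv_direct(dW, lam, dt, M)
Z_rec = conv_recursive(dW, lam, dt, M)
print(np.max(np.abs(Z_direct - Z_rec)))
```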

The stochastic convolution in Eq. (33) accounts for finite-range memory effects stemming from the stochastic forcing of the original equation. Such terms have been encountered in the approximation of low-mode amplitudes, albeit in their asymptotic form, i.e. in the limit of infinite backward integration time; see e.g. [54, 103]. As shown in our examples below (Sections V and VI), stochastic convolution terms become crucial for efficient reduction when the cutoff scale defining the retained large-scale dynamics is larger than the forcing scale. There, we further show that the finite-range memory content (measured by the backward integration time) is a key factor for a skillful reduction, provided it is properly optimized. Also, as exemplified in applications, optimizing the nonlinear terms in Eq. (33) may turn out to be of utmost importance to accurately reproduce the average motion of the SPDE dynamics; see Section V.5 below.

The efficient computation of the repeated convolutions involved in the random coefficients of Eq. (30) is, however, more challenging and will be reported elsewhere. This is not merely a technical issue. These repeated convolutions, given by Eq. (31), characterize important triad interactions that reflect how the noise interacts through the nonlinear terms. They fall into three groups: low-low, low-high, and high-high interactions. The simpler parameterization defined in Eq. (33) does not keep these interactions at the parameterization level. However, since it approximates the high-mode amplitude, it still allows us to account for triad interactions in the corresponding reduced models; see e.g. Eq. (85) below.

III Non-Markovian Parameterizations: Formulas and Optimization

The previous discussion leads us to consider, for each , the following scale-aware BF systems

| (35a) | |||

| (35b) | |||

| (35c) | |||

where denotes the projector onto the mode . Here, the backward integration time is a free parameter to be adjusted, no longer required to tend to infinity as in the approximation theory of SIMs discussed above. Note that, compared to Eq. (34), we break down the forcing mode by mode. The free backward parameter can thus be adjusted separately for each high mode, i.e. for each scale to be parameterized (scale-awareness). This strategy provides a greater degree of freedom to calibrate useful parameterizations, as will become apparent in the applications dealt with in Sections V and VI.

Also, compared to Eq. (34), the initial condition for the forward integration in Eq. (35) is a scale-aware parameter in Eq. (35c). It is aimed at resolving the time-mean of the -th high-mode amplitude, with solving Eq. (1). In many applications, however, it suffices to set .
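The backward-forward mechanism can be illustrated on a hypothetical two-mode toy problem in which the noise is omitted: a resolved amplitude obeying the linear flow $\dot p=\beta_c p$ is integrated backward from $p(0)=\xi$ over $[-\tau,0]$, and an unresolved amplitude obeying $\dot q=\beta_s q+c\,p^3$ is then integrated forward from $q(-\tau)=q_0$. In this toy, $\tau$ and $q_0$ play the roles of the free backward-integration time and of the scale-aware initial condition; the cubic coupling $c\,p^3$ is an assumption standing in for the actual nonlinear terms. The sketch, which uses SciPy's `solve_ivp`, compares $q(0)$ with its closed form $e^{\beta_s\tau}q_0+c\,\xi^3(1-e^{-\delta\tau})/\delta$, where $\delta=3\beta_c-\beta_s$.

```python
import numpy as np
from scipy.integrate import solve_ivp

def bf_parameterization(xi, tau, beta_c, beta_s, c, q0=0.0):
    """Toy backward-forward (BF) parameterization of a single high mode.

    Hypothetical model: resolved amplitude p' = beta_c * p (linear part only),
    unresolved amplitude q' = beta_s * q + c * p**3 (noise omitted).
    """
    # Backward pass: linear resolved flow from s = 0 (p = xi) down to s = -tau.
    back = solve_ivp(lambda s, p: beta_c * p, (0.0, -tau), [xi],
                     dense_output=True, rtol=1e-10, atol=1e-12)
    # Forward pass: the high mode, started at q0, is driven by that history.
    fwd = solve_ivp(lambda s, q: beta_s * q + c * back.sol(s)[0] ** 3,
                    (-tau, 0.0), [q0], rtol=1e-10, atol=1e-12)
    return fwd.y[0, -1]

def bf_closed_form(xi, tau, beta_c, beta_s, c, q0=0.0):
    """Same quantity in closed form, with delta = 3*beta_c - beta_s (assumed nonzero)."""
    delta = 3.0 * beta_c - beta_s
    return np.exp(beta_s * tau) * q0 + c * xi**3 * (1.0 - np.exp(-delta * tau)) / delta

print(bf_parameterization(0.7, 2.0, 0.3, -2.0, -1.0, q0=0.1),
      bf_closed_form(0.7, 2.0, 0.3, -2.0, -1.0, q0=0.1))
```

In this toy, letting $\tau\to\infty$ (with $\beta_s<0$ and $\delta>0$) sends the result to $c\,\xi^3/\delta$ and erases the influence of $q_0$, which mirrors how a finite backward time turns a fixed approximation into a tunable one.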

In the following, we make explicit the stochastic parameterization obtained by integration of the BF system (35) for SPDEs with cubic nonlinear terms, for which the stochastically forced scales are part of the neglected scales.

III.1 Stochastic parameterizations for systems with cubic interactions

We consider

| (36) |

Here, is a partial differential operator as defined in (2) for a suitable ambient Hilbert space ; see also [29, Chap. 2]. The operators and are quadratic and cubic, respectively. Here again, we assume the eigenmodes of and its adjoint to form a bi-orthonormal basis of ; namely, the set of eigenmodes of and of each forms a Hilbert basis of , and they satisfy the bi-orthogonality condition , where denotes the Kronecker delta function. The noise term takes the form defined in Eq. (12). Stochastic equations such as Eq. (36) arise in many areas of physics such as in the description of phase separation [104, 105, 56], pattern formations [3, 7] or transitions in geophysical fluid models [20, 106, 107, 108, 25, 21, 31].

We assume that Eq. (36) is well-posed in the sense that, for any in , it possesses a unique mild solution, i.e. there exists a stopping time such that the integral equation,

| (37) | ||||

possesses, almost surely, a unique solution in (continuous path in ) for all stopping time .

Here the stochastic integral can be represented as a series of one-dimensional Itô integrals

| (38) |

where the are the independent Brownian motions in (12). It is known that Eq. (36) admits a unique mild solution in under suitable conditions on the coefficients of and (confining potential) [87]; see also [109, Prop. 3.4]. We refer to [88, 87] for background on the stochastic convolution term .

Throughout this section we assume that only a subset of unresolved modes in the decomposition (6) of are stochastically forced according to Eq. (12), namely that the following condition holds:

-

(H)

for in (12), and that there exists at least one index in such that .

In this case, the BF system (35) becomes

| (39) | ||||

The solution to Eq. (39) at provides a parameterization aimed at approximating the th high-mode amplitude, , of the SPDE solution when is equal to the low-mode amplitude . The BF system (39) can be solved analytically. Its solution provides the stochastic parameterization, , expressed, for , as the following stochastic nonlinear mapping of in :

| (40) | ||||

with

The stochastic term is given by

| (41) |

and as such, is dependent on the noise’s path and the “past” of the Wiener process forcing the -th scale: it conveys exogenous memory effects, i.e. it is non-Markovian in the sense of [110].

The coefficients and in (40) are given respectively by

| (42) |

while the coefficients and are given by

| (43) |

and

| (44) |

with and . From Eqns. (43) and (44), one observes that the parameter (depending on ) allows, in principle, for balancing the small denominators due to small spectral gaps, i.e. when the or the are small. This attribute is shared with the parameterization formulas obtained by the BF approach in the deterministic context; see [28, Remark III.1]. It plays an important role in the ability of our parameterizations to handle physically relevant situations with a weak time-scale separation; see Section V.5 below.
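The mechanism can be seen on the elementary factor $\int_{-\tau}^{0}e^{\delta s}\,ds=(1-e^{-\delta\tau})/\delta$, which appears when a linear mode is driven over a window of length $\tau$ by a forcing growing like $e^{\delta s}$; here $\delta$ stands for a generic combination of eigenvalues playing the role of a spectral gap (an illustrative assumption, since the precise expressions (43)–(44) are not reproduced). For fixed $\tau$, the factor tends to $\tau$ as $\delta\to0$ instead of blowing up like $1/\delta$, and it recovers $1/\delta$ as $\tau\to\infty$ when $\delta>0$. A numerically careful evaluation:

```python
import numpy as np

def memory_factor(delta, tau):
    """(1 - exp(-delta*tau)) / delta = int_{-tau}^{0} exp(delta*s) ds.

    The delta -> 0 limit is tau; expm1 avoids cancellation when delta*tau is tiny.
    """
    delta = np.asarray(delta, dtype=float)
    small = np.abs(delta * tau) < 1e-12
    d = np.where(small, 1.0, delta)                  # dummy value where small
    return np.where(small, tau, -np.expm1(-d * tau) / d)

for delta in (1.0, 1e-3, 1e-9, 0.0):
    # Stays close to tau for small gaps, whereas 1/delta blows up.
    print(delta, float(memory_factor(delta, 2.0)))
```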

Remark III.1

In connection with the stochastic convolution in Eq. (33), we note that the stochastic terms in Eq. (40), involving the processes , , and , can be rewritten as a scalar stochastic integral, more precisely:

| (45) |

Note that the RHS in Eq. (45) is simply the projection of onto mode (Eq. (38)).

Equation (45) is a direct consequence of Itô’s formula applied to the product followed by integration over . Indeed, we have (dropping the -dependence),

by treating as zero due to Itô calculus [111].

By now introducing and denoting by the stochastic convolution , we arrive, thanks to Eq. (41), at

| (46) |

which gives the relation , where we have set to simplify.
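For a scalar illustration with a deterministic kernel, take $f(s)=e^{\beta(t-s)}$ and a scalar Brownian motion $W$ (generic notation, not that of Eqs. (41) and (45)). Itô's product rule, in which $df\,dW_s=0$ because $f$ is deterministic and differentiable, turns the stochastic integral into an expression involving only values of the path and an ordinary time integral:

```latex
\begin{aligned}
d\big(e^{\beta(t-s)}W_s\big) &= -\beta\,e^{\beta(t-s)}W_s\,ds + e^{\beta(t-s)}\,dW_s,\\
\int_{t-\tau}^{t} e^{\beta(t-s)}\,dW_s &= W_t - e^{\beta\tau}W_{t-\tau}
  + \beta\int_{t-\tau}^{t} e^{\beta(t-s)}W_s\,ds .
\end{aligned}
```

The second line follows by integrating the first over $[t-\tau,t]$.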

In the expression of given by Eq. (40), we opt for the expression involving the Riemann–Stieltjes integral for practical purposes. Indeed, the latter can be readily generalized to the case of jump noise (Eqns. (99)–(100) below) without relying on stochastic calculus involving a jump measure (Section VII).
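Because an integration-by-parts form only uses values of the driving path, the same recipe applies unchanged to a path with jumps. The sketch below assumes a hypothetical driving path $L$ made of a Brownian part plus compound-Poisson jumps with Gaussian sizes, and compares a left-point (Itô-type) sum for $\int_{t-\tau}^{t}e^{\beta(t-s)}\,dL_s$ with $L_t-e^{\beta\tau}L_{t-\tau}+\beta\int_{t-\tau}^{t}e^{\beta(t-s)}L_s\,ds$, evaluated with the trapezoidal rule; the two agree up to an $O(\Delta t)$ discretization error. The path model, function names and parameter values are illustrative assumptions.

```python
import numpy as np

def levy_like_path(n_steps, dt, jump_rate, jump_scale, seed=0):
    """Hypothetical driving path on a grid: Brownian motion plus compound-Poisson
    jumps with Gaussian sizes. Returns L_0 = 0, L_1, ..., L_n."""
    rng = np.random.default_rng(seed)
    dB = rng.standard_normal(n_steps) * np.sqrt(dt)
    n_jumps = rng.poisson(jump_rate * dt, n_steps)           # jumps in each step
    dJ = rng.normal(0.0, jump_scale * np.sqrt(n_jumps))       # sum of Gaussian sizes
    return np.concatenate(([0.0], np.cumsum(dB + dJ)))

def stieltjes_sum(L, dt, beta):
    """Left-point sum for int_{t-tau}^{t} exp(beta*(t-s)) dL_s; window = whole grid, t - tau = 0."""
    tau = (len(L) - 1) * dt
    s = dt * np.arange(len(L) - 1)                            # left endpoints
    return np.sum(np.exp(beta * (tau - s)) * np.diff(L))

def by_parts(L, dt, beta):
    """L_t - exp(beta*tau) L_{t-tau} + beta * int exp(beta*(t-s)) L_s ds (trapezoid)."""
    tau = (len(L) - 1) * dt
    s = dt * np.arange(len(L))
    g = np.exp(beta * (tau - s)) * L
    integral = dt * (np.sum(g) - 0.5 * (g[0] + g[-1]))
    return L[-1] - np.exp(beta * tau) * L[0] + beta * integral

L = levy_like_path(1000, 1e-3, 5.0, 0.5, seed=1)
print(stieltjes_sum(L, 1e-3, -2.0), by_parts(L, 1e-3, -2.0))
```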

In what follows we drop the dependence of on and sometimes on , unless specified. The parameterization of the neglected scales then reads

| (47) |

with , and defined in Eq. (40).

We observe that letting approach infinity in Eq. (47) recovers Eq. (33) when . This implies that the manifold constructed from Eq. (47) can be viewed as a homotopic deformation of the SIM approximation defined in Eq. (33), controlled by the parameters .

This connection motivates using the variational framework presented in [27, 28] to identify the “optimal” stochastic manifold within the family defined by Eq. (47). In Section III.3, we demonstrate that a simple data-driven minimization of a least-squares parameterization error, applied to a single training path, yields trained parameterizations (in terms of ) with remarkable predictive power. For instance, these parameterizations can be used to infer ensemble statistics of SPDEs for a large set of paths not seen during training.

However, unlike the deterministic case, the stochastic framework necessitates addressing the efficient simulation of the coefficients involved in Eq. (47) for optimization purposes. This challenge is tackled in the following subsection.

III.2 Non-Markovian path-dependent coefficients: Efficient simulation

Our stochastic parameterization given by (47) contains random coefficients , each of them involving an integral over the history of the Brownian motion, which makes them non-Markovian in the sense of [110], i.e. dependent on the noise path history. We present below an efficient means to compute these random coefficients by solving auxiliary ODEs with path-dependent coefficients, thereby avoiding the cumbersome computation of integrals over noise paths that would need to be updated at each time . This approach is used for the following purposes:

-

(i)

To find the optimal vector made of optimal backward-forward integration times and form in turn the stochastic optimal parameterization , and

-

(ii)

To efficiently simulate the corresponding optimal reduced system built from along with its path-dependent coefficients.

According to (41), the computation of boils down to the computation of the random integral

which is of the following form:

| (48) |

where , and due to assumption (H). We only need to consider the case where , since we consider here only cases in which the unstable modes are included within the space of resolved scales.

By taking the time derivative on both sides of (48), we obtain that satisfies the following ODE with path-dependent coefficients, also called a random differential equation (RDE):

| (49) |

Since , the linear part in (49) brings a stable contribution to (49). As a result, can be computed by integrating (49) forward in time starting at with initial datum given by:

| (50) |

where is computed using (48).

Now, to determine in (41) it is sufficient to observe that

| (51) |

The random coefficient is thus computed by using (51) after is computed by solving, forward in time, the corresponding RDE of the form (49) with initial datum

| (52) |

This way, we compute only the integral (52) and then propagate it through Eq. (49) to evaluate , instead of computing for each the integral in the definition (41). The resulting procedure is thus much more efficient to handle numerically than a direct computation of based on the integral formulation (41) which would involve a careful and computationally more expensive quadrature at each time-step. Note that a similar treatment has been adopted in [29, Chap. 5.3] for the computation of the time-dependent coefficients arising in stochastic parameterizations of SPDEs driven by linear multiplicative noise, and in [28, Sec. III.C.2] for the case of non-autonomous forcing.
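The gain can be seen on a hypothetical integral of the assumed form $M(t)=\int_{t-\tau}^{t}e^{\gamma(t-s)}W_s\,ds$ with $\gamma<0$ (illustrative notation; the exact integrands of (48) are not reproduced here). Differentiating in $t$ gives the RDE $\dot M=\gamma M+W_t-e^{\gamma\tau}W_{t-\tau}$, whose forcing only needs the path at two times. On a uniform grid, the left-point discretization of $M$ obeys an exact one-step recursion (a discrete counterpart of this RDE), so each update costs $O(1)$ instead of the $O(\tau/\Delta t)$ cost of re-evaluating the quadrature; the sketch checks that both routes give the same numbers.

```python
import numpy as np

def direct_quadrature(W, k, lag, dt, gamma):
    """M_k = dt * sum_{j=k-lag}^{k-1} exp(gamma*(k-j)*dt) * W_j (left-point rule)."""
    j = np.arange(k - lag, k)
    return dt * np.sum(np.exp(gamma * (k - j) * dt) * W[j])

def rde_propagation(W, k0, lag, dt, gamma, n_steps):
    """Propagate M forward from grid index k0 with the exact one-step recursion of the
    left-point sum, a discrete version of dM/dt = gamma*M + W_t - exp(gamma*tau)*W_{t-tau}."""
    a = np.exp(gamma * dt)
    b = dt * np.exp(gamma * (lag + 1) * dt)
    M = np.empty(n_steps + 1)
    M[0] = direct_quadrature(W, k0, lag, dt, gamma)       # one quadrature: initial datum
    for i in range(n_steps):
        k = k0 + i
        # Slide the window by one step: decay, drop the oldest sample, add the newest.
        M[i + 1] = a * M[i] - b * W[k - lag] + dt * a * W[k]
    return M

rng = np.random.default_rng(0)
dt, lag, gamma = 1e-3, 500, -1.5                           # memory tau = lag * dt = 0.5
W = np.concatenate(([0.0], np.cumsum(rng.standard_normal(3000) * np.sqrt(dt))))
M = rde_propagation(W, lag, lag, dt, gamma, 2000)
```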

III.3 Data-informed optimization and training path

Thanks to Section III.2, we are now in a position to propose an efficient variational approach to optimize the stochastic parameterizations of Section III.1, with a view to handling parameter regimes located away from the instability onset and thus a greater wealth of possible stochastic transitions.

Given a solution path to Eq. (36) available over an interval of length , we aim to determine the optimal parameterization (given by (40)) that minimizes, in the -variable, the following parameterization defect

| (53) |

for each . Here denotes the time-mean over while and denote the projections of onto the high-mode and the reduced state space , respectively. Figure 1 provides a schematic of the stochastic optimal parameterizing manifold (OPM) found this way.
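A minimal sketch of this minimization, for one high mode at a time, by brute-force search over a grid of $\tau$ values. It assumes that the training path has already been projected into arrays `x` (resolved amplitudes) and `y_n` (the $n$-th unresolved amplitude), that `phi_n(tau, x)` is a user-supplied, hypothetical implementation of the parameterization, and it uses the normalized defect $\overline{|y_n-\Phi_n(\tau,x)|^2}/\overline{|y_n|^2}$; the normalization is our assumption and does not change the minimizer.

```python
import numpy as np

def parameterization_defect(tau, x, y_n, phi_n):
    """Normalized time-mean squared error of phi_n(tau, .) along one training path."""
    residual = y_n - phi_n(tau, x)
    return np.mean(residual**2) / np.mean(y_n**2)

def optimize_tau(x, y_n, phi_n, tau_grid):
    """Grid search: return the minimizing tau and the defect at every grid value."""
    defects = np.array([parameterization_defect(t, x, y_n, phi_n) for t in tau_grid])
    return tau_grid[np.argmin(defects)], defects

# Hypothetical usage on synthetic data generated with tau = 0.6.
def toy_phi(tau, x):
    return -(1.0 - np.exp(-2.5 * tau)) / 2.5 * x**3

rng = np.random.default_rng(0)
x = rng.standard_normal(5000)
y = toy_phi(0.6, x) + 0.01 * rng.standard_normal(x.size)
tau_star, Q = optimize_tau(x, y, toy_phi, np.linspace(0.05, 3.0, 60))
```

A bounded scalar minimizer such as `scipy.optimize.minimize_scalar(..., method="bounded")` could replace the grid; the grid has the advantage of exposing the whole defect curve, which is useful when it has several local minima.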

The objective is obviously not to optimize the parameterizations for every solution path, but rather to optimize on a single solution path, called the training path, and then use the optimized parameterization for predicting dynamical behaviors for any other noise path, or at least in some statistical sense. To do so, the optimized -values (denoted by below) are used to build the resulting stochastic OPM given by (47), whose optimized non-Markovian coefficients are aimed at efficiently encoding the interactions between the nonlinear terms and the noise over the course of time.

Sections V and VI below illustrate how this single-path training strategy can be used efficiently to predict from the corresponding reduced systems the statistical behavior as time and/or the noise’s path is varied. The next section delves into the justification of this variational approach, and the theoretical characterization of the notion of stochastic OPM by relying on ergodic arguments. The reader interested in applications can jump to Sections V and VI.

IV Non-Markovian Optimal Parameterizations and Invariant Measures

The problem of ergodicity and mixing properties of dissipative PDEs subject to a stochastic external force has been an active research topic over the last two decades. It is rather well understood in the case when all deterministic modes are forced; see e.g. [112, 113]. The situation in which only finitely many modes are forced, as considered in this study, is much more subtle when it comes to proving unique ergodicity. The works [114, 109] provide answers in such a degenerate situation based on a theory of hypoellipticity for nonlinear SPDEs. In parallel, the work [115], which generalizes the asymptotic coupling method introduced in [116], covers a broad class of nonlinear stochastic evolution equations, including stochastic delay equations.

Building upon these results, we proceed under the assumption of a unique ergodic invariant measure, denoted by . Given a reduced state space , we demonstrate in this section the following:

-

(i)

The path-dependence of the non-Markovian optimal reduced model arises from the random measure denoted by that is obtained through the disintegration of over the underlying probability space .

-

(ii)

The non-Markovian optimal reduced model provides an averaged system in that is still path-dependent. For a given noise path , it provides the reduced system in that averages out the unresolved variables with respect to the disintegration of over . A detailed explanation is provided in Theorem IV.2.

IV.1 Theoretical insights

Adopting the framework of random dynamical systems (RDSs) [50], recall that an RDS, , is said to be white noise if the associated “past” and “future” -algebras (see (56)) and are independent. Given a white noise RDS, , the relations between random invariant measures for and invariant measures for the associated Markov semigroup are well known [117]. We recall these relationships below and enrich the discussion with Lemma IV.1 from [118].

Assume that Eq. (1) generates a white noise RDS, , associated with a Markov semigroup having as an invariant measure, then the following limit taken in the weak∗ topology:

| (54) |

exists -a.s., and provides a random probability measure that is -invariant in the sense that

| (55) |

A random probability measure that satisfies (55) is called below a (random) statistical equilibrium. Recall that here denotes the standard ergodic transformation on the set of Wiener paths defined through the helix identity [50, Def. 2.3.6], , in .

A statistical equilibrium as defined above is furthermore Markovian [119], in the sense that it is measurable with respect to the past -algebra

| (56) |

see [117, Prop. 4.2]; see also [50, Theorems 1.7.2 and 2.3.45].

Note that the limit (54) exists in the sense that for every bounded measurable function , the real-valued stochastic process

| (57) |

is a bounded martingale, and therefore converges -a.s. by Doob's first martingale convergence theorem; see e.g. [111, Thm. C.5 p. 302]. This implies, in particular, the -almost sure convergence of the measure-valued random variable in the topology of weak convergence of , which in turn implies convergence in the narrow topology of ; see [120, Chap. 3].

Reciprocally, given a Markovian -invariant random measure of an RDS for which the -algebras and are independent, the probability measure, , defined by

| (58) |

is an invariant measure of the Markov semigroup ; see [121, Thm. 1.10.1].

In case of uniqueness of (and thus ergodicity) satisfying then this one-to-one correspondence property implies that any other random probability measure different from the random measure obtained from (54), is non-Markovian which means in particular that otherwise we would have , -a.s.

In case a random attractor exists, since the latter is a forward invariant compact random set, the Markovian random measure must be supported by ; see [120, Thm. 6.17] (see also [122, Prop. 4.5]). We thus conclude, in the case of a unique ergodic measure for , that there exists a unique Markov -invariant random measure supported by , and that all other -invariant random measures (also necessarily supported by ) are non-Markovian.

RDSs where the future and past -algebras are independent are generated by many stochastic systems in practice. This is the case, for instance, for a broad class of stochastic differential equations (SDEs); see [50, Sect. 2.3]. The generation of white noise RDSs in infinite dimensions is much less well understood, and addressing this question is beyond the scope of this article.

Instead, we point out below that there exist other ways to associate with an RDS that is not necessarily of white-noise type a meaningful random probability measure that still satisfies, for instance, (58). This is indeed the case if the RDS satisfies some weak mixing property with respect to a (non-random) probability on , as the following lemma from [118] shows:

Lemma IV.1

Assume there exists in Pr() satisfying the following weak mixing property

| (59) |

for each bounded, continuous .

Then there exists an -measurable random probability measure given by

| (60) |

that satisfies

| (61) |

and is -invariant in the sense of (55), i.e. that is a statistical equilibrium.

Thus, whether built from an invariant measure of the Markov semigroup, in the case of a white-noise RDS, or from a weakly mixing measure in the sense of (59), a statistical equilibrium satisfying (58) can be naturally associated with Eq. (36) as long as the appropriate assumptions are satisfied. At this level of generality, we do not address the important question of sufficient conditions on the linear part and and nonlinear terms that ensure, for an SPDE like Eq. (36), the generation of an RDS that is either of white-noise type or weakly mixing in the sense of (59); see [118] for examples in the latter case.

We also introduce the following notion of pullback parameterization defect.

Definition IV.1

Given a parameterization that is measurable (in the measure-theoretic sense), and a mild solution of Eq. (36), the pullback parameterization defect associated with over the interval and for a noise-path , is defined as:

| (62) |

Given the statistical equilibrium , we denote by the push-forward of by the projector onto , namely

| (63) |

where denotes the family of Borel sets of ; i.e. the family of sets that can be formed from open sets (for the topology on induced by the norm ) through the operations of countable union, countable intersection, and relative complement.

The random measure allows us to consider the following functional space of parameterizations, defined as

| (64) | ||||

namely the Hilbert space constituted by -valued random functions of the resolved variables in , that are square-integrable with respect to , almost surely.

We are now in a position to formulate the main result of this section, namely the following generalization to the stochastic context of Theorem 4 from [27].

Theorem IV.1

Assume that one of the following properties holds:

- (i)

- (ii)

Let us denote by the weak∗-limit of defined in (54) for case (i), and by the statistical equilibrium ensured by Lemma IV.1, in case (ii). We denote by the disintegration of on the small-scale subspace , conditioned on the coarse-scale variable .

Assume that the small-scale variable has a finite energy in the sense that

| (65) |

Then the minimization problem

| (66) |

possesses a unique solution whose argmin is given by

| (67) |

Furthermore, if the RDS possesses a pullback attractor in , and is pullback mixing in the sense that for all in ,

| (68) | ||||

then -almost surely

| (69) |

Proof. The proof follows the same lines as [27, Theorem 4]. It consists of replacing:

-

(i)

The probability measure therein by the probability measure on that is naturally associated with the family of random measures and whose marginal on is given by ; see [117, Prop. 3.6],

-

(ii)

The function space therein by the function space .

By applying the standard projection theorem onto closed convex sets [123, Theorem 5.2] to the ambient Hilbert space , one defines (given ) the conditional expectation of as the unique function in that satisfies the inequality

| (70) |

Now, by applying the general disintegration theorem of probability measures to (see [27, Eq. (3.18)]), we obtain the following explicit representation of the random conditional expectation

| (71) |

with denoting the disintegrated measure of over . By noting that this disintegrated measure is the same as the disintegration (over ) of the random measure , we conclude by taking as for the proof of [27, Theorem 4] that given by (67) solves the minimization problem (66).
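A finite-sample caricature of Theorem IV.1 may help fix ideas. It drops the dependence on the noise path and uses a purely illustrative data model with one scalar resolved variable $x$ and one scalar unresolved variable $y$: among all functions of $x$, the mean-square defect $\overline{|y-\Phi(x)|^2}$ is minimized by the conditional mean $\mathbb{E}[y\mid x]$, which the sketch approximates by averages over equal-count bins of $x$.

```python
import numpy as np

def binned_conditional_mean(x, y, n_bins):
    """Piecewise-constant estimate of E[y | x] from samples, using equal-count bins."""
    edges = np.quantile(x, np.linspace(0.0, 1.0, n_bins + 1))
    idx = np.clip(np.searchsorted(edges, x, side="right") - 1, 0, n_bins - 1)
    sums = np.bincount(idx, weights=y, minlength=n_bins)
    counts = np.bincount(idx, minlength=n_bins)
    return (sums / counts)[idx]

rng = np.random.default_rng(0)
x = rng.uniform(-1.0, 1.0, 100_000)                  # "resolved" variable
y = x**2 + 0.3 * rng.standard_normal(x.size)        # "unresolved" variable
phi = binned_conditional_mean(x, y, 50)
defect = np.mean((y - phi) ** 2)                     # near the irreducible part, 0.3**2
```

Within the class of functions that are constant on the bins, the bin averages are exactly the least-squares minimizer, so any perturbation that is constant on each bin can only increase the defect.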

IV.2 Non-Markovian optimal reduced model, and conditional expectation

The mathematical framework of Section IV.1 allows us to provide a useful interpretation of the non-Markovian optimal reduced model for SPDEs of the type of Eq. (36). To simplify the presentation, we restrict ourselves here to the case .

We denote then by the vector field in , formed by gathering the linear and nonlinear parts of Eq. (36) in this case, namely

| (72) |

The theorem formulated below characterizes the relationships between the non-Markovian optimal reduced model and the random conditional expectation associated with the projector onto the reduced state space ; i.e. the resolved modes. Its proof is almost identical to that of [27, Theorem 5], with only slight amendments, and the details are thus omitted.

Theorem IV.2

Consider the SPDE of type Eq. (36) with . Let be a (random) statistical equilibrium satisfying either (54) (in case (i) of Theorem IV.1) or ensured by Lemma IV.1 (in case (ii) of Theorem IV.1).

Then, under the conditions of Theorem IV.1, the random conditional expectation associated with ,

satisfies

| (73) | ||||

with

| (74) |

for which denotes the disintegration of over .

Hereafter, we simply refer to Eq. (75) as the (random) conditional expectation.

IV.3 Practical implications

Thus, Theorem IV.2 teaches us that an approximation of the (actual) non-Markovian optimal parameterization, given by (67), ultimately provides an approximation of the conditional expectation involved in Eq. (75).

The non-Markovian optimal parameterization involves, from its definition, averaging with respect to the unknown probability measure . As such, designing a practical approximation scheme with rigorous error estimates remains challenging. Near the instability onset, the non-Markovian optimal parameterization simplifies to the stochastic invariant manifold. In this regime, probabilistic error estimates have been established for a wide range of SPDEs, including those relevant to fluid dynamics [31]. However, obtaining such guarantees becomes significantly more difficult for scenarios away from the instability onset, even for low-dimensional SDEs [124].

This is where the data-informed optimization approach from Section III.3 offers a practical solution within a relevant class of parameterizations. Variational inequality (69) provides a key insight: the parameterization defect serves as a good measure of a parameterization’s quality. Lower defect values indicate a better parameterization, one that is expected to yield a reduced model closer to the (theoretical) non-Markovian optimal reduced model defined by Eq. (75).

Even with a very good approximation of the actual non-Markovian optimal parameterization, , a key question remains: under what conditions does the theoretical conditional expectation (Eq. (75)) provide, on its own, a sufficient closure of the system? We address this question in Sections V and VI through concrete examples. Analyzing two universal models for non-equilibrium phase transitions, we demonstrate that the non-Markovian reduced models constructed using the formulas from Section III become particularly relevant for understanding and predicting the underlying stochastic transitions when the cutoff scale exceeds the scales forced stochastically (Sections V.3, V.4, V.5 and VI.4).

To deal with these non-equilibrium systems, we consider the class of parameterizations for approximating the true non-Markovian optimal parameterization, . This class consists of continuous deformations away from the instability onset point of parameterizations that are valid near this onset (as discussed in Section III.1).

Our numerical results demonstrate that this class effectively models and approximates the true non-Markovian terms in the optimal reduced model (Eq. (75)) across various scenarios. After learning an optimal parameterization within class using a single noise realization, the resulting approximation of the optimal reduced model typically becomes an ODE system with coefficients that depend on the specific noise path. These path-dependent coefficients encode the interactions between noise and nonlinearities, as exemplified by the reduced model (85) below and by its counterpart derived in Section VI, together with their ability to predict noise-induced transitions within the reduced state spaces.

V Predicting Stochastic Transitions with Non-Markovian Reduced Models

V.1 Noise-induced transitions in a stochastic Allen-Cahn model

We consider now the following stochastic Allen-Cahn Equation (sACE) [125, 126, 56]

| (76) |

with homogeneous Dirichlet boundary conditions. The ambient Hilbert space is endowed with its natural inner product denoted by .

This equation and variants have a long history. The deterministic part of the equation provides the gradient flow of the Ginzburg-Landau free energy functional [127]

| (77) |

with the potential given by the standard double-well function . Indeed, one can readily check that , where denotes the Fréchet derivative of at . The sACE provides a general framework for studying pattern formation and interface dynamics in systems ranging from liquid crystals and ferromagnets to tumor growth and cell membranes [1, 57].
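Assuming the standard unit-coefficient form $\mathcal{E}(u)=\int_0^L\big(\tfrac12|\partial_x u|^2+V(u)\big)\,dx$ with $V(u)=\tfrac14(u^2-1)^2$ and homogeneous Dirichlet conditions (our assumption on the scaling of (77), which is not reproduced here), one has $-D\mathcal{E}(u)=\partial_x^2u+u-u^3$. The sketch checks the discrete counterpart of this identity: the gradient of the discretized energy equals $-h$ times the finite-difference right-hand side, tested through a central difference along a random direction.

```python
import numpy as np

def energy(u, h):
    """Discrete Ginzburg-Landau energy with zero Dirichlet values at both ends."""
    padded = np.concatenate(([0.0], u, [0.0]))
    grad_term = 0.5 * np.sum(np.diff(padded) ** 2) / h       # h * sum (du/h)^2 / 2
    potential = h * np.sum(0.25 * (u**2 - 1.0) ** 2)
    return grad_term + potential

def allen_cahn_rhs(u, h):
    """Discrete u_xx + u - u^3 with zero Dirichlet boundary values."""
    padded = np.concatenate(([0.0], u, [0.0]))
    lap = (padded[2:] - 2.0 * padded[1:-1] + padded[:-2]) / h**2
    return lap + u - u**3

rng = np.random.default_rng(0)
n, L = 64, 10.0
h = L / (n + 1)
u, v = rng.standard_normal(n), rng.standard_normal(n)
eps = 1e-6
dE = (energy(u + eps * v, h) - energy(u - eps * v, h)) / (2.0 * eps)
print(dE, -h * np.dot(allen_cahn_rhs(u, h), v))            # should nearly coincide
```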

Its universality makes it relevant across diverse fields, contributing to a unified understanding of these complex phenomena. By incorporating the spatio-temporal noise term, , into the deterministic Allen-Cahn equation, the sACE accounts for the inherent fluctuations and uncertainty present in real systems [56]. This allows for a more realistic description of transitions between phases, providing valuable insights beyond what purely deterministic models can offer. Near critical points, where phases coexist, the sACE reveals the delicate balance between deterministic driving forces and stochastic fluctuations. Studying these transitions helps us understand critical phenomena in diverse systems, from superfluid transitions to magnetization reversal in magnets [1, 56].

Phase transitions for the sACE and its variants have indeed been analyzed both theoretically utilizing the large deviation principle [128] and also numerically through different rare event algorithms. For instance, in [129] the expectation of the transition time between two metastable states is rigorously derived for a class of 1D parabolic SPDEs with bistable potential that include sACE as a special case. The phase diagram and various transition paths have also been computed by either an adaptive multilevel splitting algorithm [130] or a minimum-action method [131], where the latter deals with a nongradient flow generalization of the sACE that includes a constant force and also a nonlocal term.

Despite its importance in numerous physical applications, the reduction problem for the accurate reproduction of transition paths in the sACE has received limited attention, with existing works focusing primarily on scenarios near instability onset and low noise intensity (e.g., [132, 103, 102, 133, 134]).

In this study, we consider the sACE placed away from instability onset, after several bifurcations have taken place where multiple steady states coexist; see Fig. 2. It corresponds to the parameter regime

| (78) |

in which the parameter and indicates the modes forced stochastically in Eq. (76), according to

| (79) |

where we set for . Note that as in Section III.1, , denote the eigenmodes of the operator (with homogeneous Dirichlet boundary conditions), given here by

| (80) |

with corresponding eigenvalues . We refer to Appendix B for the numerical details regarding the simulation of Eq. (76).
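Under the assumption that the linear part is $\partial_x^2+1$ on $(0,L)$ with homogeneous Dirichlet conditions (consistent with the unit-coefficient form of the energy, but an assumption since Eqs. (76) and (80) are not reproduced), the eigenpairs are $e_n(x)=\sqrt{2/L}\,\sin(n\pi x/L)$ and $\beta_n=1-(n\pi/L)^2$. The $n$-th mode is then unstable exactly when $L>n\pi$, so three unstable modes correspond to $3\pi<L<4\pi$. The sketch counts unstable modes for a given domain size and checks orthonormality numerically.

```python
import numpy as np

def dirichlet_modes(L, n_modes, n_grid=2001):
    """Grid on [0, L], eigenvalues beta_n = 1 - (n*pi/L)**2, modes sqrt(2/L) sin(n*pi*x/L)."""
    x = np.linspace(0.0, L, n_grid)
    n = np.arange(1, n_modes + 1)
    betas = 1.0 - (n * np.pi / L) ** 2
    modes = np.sqrt(2.0 / L) * np.sin(np.outer(n, x) * np.pi / L)
    return x, betas, modes

def count_unstable(L, n_max=100):
    """Number of positive eigenvalues beta_n, i.e. of integers n < L / pi."""
    n = np.arange(1, n_max + 1)
    return int(np.sum(1.0 - (n * np.pi / L) ** 2 > 0.0))

x, betas, modes = dirichlet_modes(3.5 * np.pi, 8)
gram = (x[1] - x[0]) * modes @ modes.T        # trapezoid rule; modes vanish at both ends
print(count_unstable(3.5 * np.pi), np.abs(gram - np.eye(8)).max())
```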

More specifically, the domain size is chosen so that, when , the trivial steady state has experienced three successive bifurcations, leading to a total of seven steady states with two stable nodes ( and ) and five saddle points (, , , , and ). These steady states are characterized here by their zeros: the saddle states are of sign-change type, while the stable ones are of constant sign; see [126, 135]. Note also that the same conclusion holds with either Neumann or periodic boundary conditions; see e.g. [129, Figure 2]. In our notation, and denote the string made of sign changes over , with the leftmost symbol in being . For instance, for (resp. ), (resp. ), while (resp. ) for (resp. ).

By analyzing how the energy of these steady states is distributed among the eigenmodes (see Table 1), one observes that are nearly collinear to , while are nearly collinear to , and to .

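| Mode 1 | Mode 2 | Mode 3 | Mode 4 | Mode 5 | Mode 6 | Mode 7 | Mode 8 |
|---|---|---|---|---|---|---|---|
| 94.69% | 0 | 4.79% | 0 | 0.46% | 0 | 0.05% | 0 |
| 0 | 99.16% | 0 | 0 | 0 | 0.83% | 0 | 0 |
| 0 | 0 | 99.93% | 0 | 0 | 0 | 0 | 0 |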
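Percentages such as those in Table 1 can be obtained by projecting a profile onto the eigenmodes and normalizing the squared coefficients by the squared $L^2$ norm of the profile. The sketch assumes Dirichlet sine modes $\sqrt{2/L}\,\sin(n\pi x/L)$ and trapezoidal quadrature; the test profile is illustrative and is not one of the steady states of Table 1.

```python
import numpy as np

def energy_fractions(u, x, n_modes):
    """Fraction of ||u||^2 carried by each of the first n_modes Dirichlet sine modes."""
    L = x[-1] - x[0]
    dx = x[1] - x[0]
    w = np.full(x.size, dx)
    w[[0, -1]] = 0.5 * dx                                  # trapezoidal weights
    n = np.arange(1, n_modes + 1)
    modes = np.sqrt(2.0 / L) * np.sin(np.outer(n, x - x[0]) * np.pi / L)
    coeffs = modes @ (w * u)                               # <u, e_n>
    return coeffs**2 / np.sum(w * u**2)

x = np.linspace(0.0, 10.0, 4001)
u = np.sin(np.pi * x / 10.0) + 0.1 * np.sin(3 * np.pi * x / 10.0)
print(np.round(100 * energy_fractions(u, x, 8), 2))       # percentages per mode
```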

A sketch of the bifurcation diagram as is varied (for ) is shown in Fig. 2A; the vertical dashed line marks the domain size considered in our numerical simulations. For this domain size, the system exhibits three unstable modes whose interplay with the nonlinear terms yields the deterministic flow structure depicted in Fig. 2B. There, the deterministic global attractor consists of the seven steady states mentioned above (black filled/empty circles), connected by heteroclinic orbits shown by solid/dashed curves with arrows. On top of this attractor, we superimposed a solution path of the stochastic model (76) emanating from (light grey “rough” curve). We adopt Arnol’d’s convention for this representation: black filled circles indicate the stable steady states, while empty ones indicate the saddle/unstable steady states.

These steady states organize the stochastic dynamics when the noise is turned on: a solution path of Eq. (76) typically transits from one metastable state to another, the latter corresponding to profiles in physical space that are random perturbations of an underlying steady state’s profile; see the insets of Fig. 4 below. For the parameter setting (78), we observe, however, over a large ensemble of noise paths, that the SPDE’s dynamics exhibit transition paths connecting to neighborhoods of the steady states and , but do not meander near over the interval considered ().

Noteworthy is the visual rendering of the sojourn time of the solution path shown in Fig. 2B: it is more substantial in the neighborhood of than in that of . Over , most of this trajectory is spent near a sign-change metastable state () rather than near the constant-sign one (). Large-ensemble simulations of the sACE for the parameter regime (78) reveal that what is shown here for a single path is actually observed at the final time , when the noise path is varied: the sign-change states are the rule, whereas the constant-sign states are the exception, corresponding here to rare events. Figure 3 shows two solution paths in the space-time domain, connecting to either a sign-change metastable state (typical) or a constant-sign metastable state (rare event). In Section V.3 below, we discuss the mechanisms behind these patterns and their transition phenomenology, and the extent to which the non-Markovian reduced models described below are able to reproduce them.
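Since the steady states are distinguished by their sign patterns, one simple way to label the state reached at the final time in an ensemble of runs is to read off the sign pattern of the profile, after discarding near-zero values where noise can create spurious sign flips. The helper `sign_pattern` and the threshold `tol` are hypothetical choices, not the classification procedure used for the figures.

```python
import numpy as np

def sign_pattern(u, tol=0.1):
    """String of signs of u, e.g. '+-+', after discarding samples with |u| <= tol
    and merging consecutive samples of equal sign."""
    s = np.sign(u[np.abs(u) > tol])
    if s.size == 0:
        return ""
    keep = np.concatenate(([True], s[1:] != s[:-1]))
    return "".join("+" if v > 0 else "-" for v in s[keep])

x = np.linspace(0.0, 10.0, 501)
rng = np.random.default_rng(0)
noisy = np.sin(3 * np.pi * x / 10.0) + 0.05 * rng.uniform(-1.0, 1.0, x.size)
pattern = sign_pattern(noisy)
n_sign_changes = len(pattern) - 1     # 0 for a constant-sign state
```

A profile is then of constant sign when the pattern has a single symbol, and the number of sign changes is the pattern length minus one.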

V.2 Non-Markovian optimal reduced models

Recall that our general goal is the derivation of reduced models able to reproduce the emergence of noise-driven spatiotemporal patterns and predict their transitions triggered by the subtle coupling between noise and the nonlinear dynamics.

For the sACE (76) at hand, this goal takes the following form: derive reduced models that are able not only to accurately estimate ensemble statistics (averages across many realizations) of the states reached at a given time , but also to anticipate the system’s typical metastable states as well as those reached through the rarest transition paths pointed out above.

To address this issue, we place ourselves in the challenging situation where the cutoff scale is larger than the scales forced stochastically, i.e. the case where noise acts only on the unresolved part of the system. We show below that the stochastic parameterization framework of Section III allows us to derive such an effective reduced model. This model is built from the stochastic parameterization (40), obtained from the BF system (39) applied to Eqns. (76) and (79), and optimized on a single training path following Section III.3. We describe below the details of this derivation.

Thus, we take the reduced state space to be spanned by the first unforced eigenmodes, i.e.

| (81) |

In other words, the reduced state space is spanned by low modes that are not forced stochastically; i.e. in (79). The subspace is taken to be the orthogonal complement of in and contains the stochastically forced modes; see (79).

Equation (76) fits into the stochastic parameterization framework of Section III (see Eq. (36)) with subject to homogeneous Dirichlet boundary conditions, and with denoting the cubic Nemytskii operator defined as for any and in , in .

The resulting stochastic parameterization given by (40) (with ) takes then the following form:

| (82) | ||||

Since the linear operator is here self-adjoint, we have , and the coefficients are given by

| (83) | ||||

where , and .
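Coefficients of the type in (83) involve Galerkin projections of the cubic nonlinearity onto the eigenmodes. The following is a minimal sketch, assuming an orthonormal sine basis on (0, pi) and trapezoidal quadrature, of how the interaction tensor C[i, j, k, n] = <e_i e_j e_k, e_n> can be tabulated once and reused; the names `cubic_tensor` and `project_cubic` are hypothetical, the sign convention of the Nemytskii operator is left out, and the spectral factors that (83) attaches to these projections are not reproduced.

```python
import numpy as np

L, N_INT, N_MODES = np.pi, 512, 8
xs = np.linspace(0.0, L, N_INT + 1)
w = np.full(xs.size, L / N_INT)                   # trapezoidal weights
w[0] = w[-1] = 0.5 * L / N_INT
n = np.arange(1, N_MODES + 1)[:, None]
E = np.sqrt(2.0 / L) * np.sin(n * np.pi * xs / L)  # orthonormal Dirichlet modes

def cubic_tensor(E, w):
    """C[i, j, k, n] = <e_i e_j e_k, e_n>, symmetric in all four indices."""
    return np.einsum("ax,bx,cx,dx,x->abcd", E, E, E, E, w)

def project_cubic(a, C):
    """Galerkin projection <u^3, e_n> for u = sum_i a_i e_i."""
    return np.einsum("ijkn,i,j,k->n", C, a, a, a)

C = cubic_tensor(E, w)
a = np.array([0.7, 0.0, -0.2, 0.0, 0.1, 0.0, 0.0, 0.05])
u = a @ E
direct = E @ (u**3 * w)            # quadrature of <u^3, e_n> directly on the grid
via_tensor = project_cubic(a, C)   # same projection from the precomputed tensor
```

For sine modes, <e_i e_j e_k, e_n> vanishes whenever i + j + k + n is odd, which is a useful sanity check on the tabulated tensor.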

For each in , the free parameter in (82) is optimized over a single, common training path, , solving the sACE, by minimizing in (53) with given by (82); see Section III.3. To do so, we follow the procedure described in Section III.2 to efficiently simulate the required random coefficients (here the -terms), solving in particular the random ODEs (49).
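The non-Markovian coefficients must be updated at every time step, so their cost matters. A minimal sketch, assuming the memory term is a finite-window, exponentially weighted noise integral Z(t) = int_{t-tau}^{t} exp(beta (t - s)) dW_s with a decay rate beta < 0 (an assumption; the paper's exact terms and their random ODEs (49) are not reproduced here). Instead of the random-ODE route, it uses a sliding-window recursion costing O(1) per step and checks it against the brute-force left-point Ito sum; all names and parameter values are hypothetical.

```python
import numpy as np

def memory_integral_direct(dW, beta, m, dt):
    """Brute force: Z_k = sum_{j=k-m}^{k-1} exp(beta*(k-j)*dt) * dW_j for k >= m (O(m) per step)."""
    K = dW.size
    Z = np.full(K + 1, np.nan)                          # undefined until a full window exists
    weights = np.exp(beta * np.arange(m, 0, -1) * dt)   # lags m, m-1, ..., 1 (in steps)
    for k in range(m, K + 1):
        Z[k] = weights @ dW[k - m:k]
    return Z

def memory_integral_sliding(dW, beta, m, dt):
    """Same quantity via an O(1) sliding-window update."""
    K = dW.size
    Z = np.full(K + 1, np.nan)
    a, a_m = np.exp(beta * dt), np.exp(beta * m * dt)
    Z[m] = np.exp(beta * np.arange(m, 0, -1) * dt) @ dW[:m]
    for k in range(m, K):
        # add the newest increment dW_k, drop the oldest dW_{k-m}, then decay by one step
        Z[k + 1] = a * (Z[k] + dW[k] - a_m * dW[k - m])
    return Z

rng = np.random.default_rng(0)
dt, K = 1e-3, 4000
dW = np.sqrt(dt) * rng.standard_normal(K)   # Brownian increments on [0, K*dt]
beta, tau = -5.0, 0.2                       # hypothetical decay rate and memory length
m = int(round(tau / dt))                    # memory length in time steps
Z_direct = memory_integral_direct(dW, beta, m, dt)
Z_fast = memory_integral_sliding(dW, beta, m, dt)
```

Because |exp(beta * dt)| < 1, round-off in the recursion stays bounded, and the two computations agree to near machine precision.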

Once is obtained, the corresponding -dimensional optimal reduced system in the class (see Section IV.3) is given componentwise by

| (84) |

where and .

The optimal reduced system (84), also referred to as the OPM closure hereafter, can be further expanded as

| (85) | ||||

Here again, the coefficients are those defined in (83) for . Note that the -terms required to simulate Eq. (85) are advanced in time by solving the corresponding Eq. (49). A total of such equations are solved, each corresponding to a single random coefficient per mode to parameterize. Thus, a total of ODEs with random coefficients are solved to obtain a reduced model of the amplitudes of the first modes. We call Eq. (85), together with its auxiliary equations (49), the optimal reduced model for the sACE.

A compact form of Eq. (85) can be written as follows:

| (86) | |||

with defined in Eq. (82). We refer to Appendix B for the numerical details regarding the simulation of Eq. (86).
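The reduced system is an ODE whose only randomness enters through its coefficients. The following toy scalar caricature is not Eq. (86): the resolved amplitude x is unstable at zero, saturates cubically, and is not forced by noise. It only feels the small scales through a hypothetical coefficient -c z(t)^3, where z stands in for a non-Markovian term. The constants, the pulse-shaped z path, and the function names are assumptions chosen for illustration, and forward Euler is used for simplicity.

```python
import numpy as np

def integrate_reduced(x0, rhs, z_path, dt):
    """Forward-Euler integration of dx/dt = rhs(x, z_k) driven by a given coefficient path."""
    x = np.empty(len(z_path) + 1)
    x[0] = x0
    for k, z in enumerate(z_path):
        x[k + 1] = x[k] + dt * rhs(x[k], z)
    return x

BETA, C_COUPLING = 1.0, 1.0   # hypothetical: unstable resolved mode, coupling strength

def toy_rhs(x, z):
    """Linear growth, cubic saturation, and a random coefficient -c z^3 standing in
    for the cubic projection of the parameterized small scales."""
    return BETA * x - x**3 - C_COUPLING * z**3

dt, n_steps = 0.01, 3000
pulse = np.zeros(n_steps)
pulse[:100] = 0.5   # small-scale "pattern" active only during an initial transient
x_plus = integrate_reduced(0.0, toy_rhs, -pulse, dt)
x_minus = integrate_reduced(0.0, toy_rhs, pulse, dt)
x_none = integrate_reduced(0.0, toy_rhs, np.zeros(n_steps), dt)
```

In this toy, the sign of the small-scale input during the initial transient alone selects which state (+1 or -1) is reached, and without it the unforced amplitude stays at zero.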

Note that an approximation of the sACE solution paths can be obtained from the solutions to the optimal reduced model. It consists of simply lifting the surrogate low-mode amplitude simulated from Eq. (86) to via the optimal parameterization, , defined in Eq. (86). This approximation then takes the form

| (87) | ||||

The insets of Fig. 7A below show the approximation skill of this formula, which requires only solving the reduced equation (86).
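The lifting step amounts to a modal synthesis. A minimal sketch, assuming the resolved amplitudes and the parameterized unresolved amplitudes are already available and the modes are orthonormal Dirichlet sine functions on (0, pi); `lift` and the numerical values are hypothetical, and the parameterization itself is not evaluated here.

```python
import numpy as np

def lift(x_c, phi, E):
    """u_approx(x) = sum_{i <= m_c} x_i e_i(x) + sum_{n > m_c} phi_n e_n(x).

    x_c: (..., m_c) resolved amplitudes from the reduced model,
    phi: (..., n_modes - m_c) parameterized unresolved amplitudes,
    E:   (n_modes, nx) eigenmodes sampled on the grid.
    Leading dimensions (e.g. time) broadcast through the matrix products.
    """
    m_c = x_c.shape[-1]
    return x_c @ E[:m_c] + phi @ E[m_c:]

L, nx = np.pi, 257
xs = np.linspace(0.0, L, nx)
n = np.arange(1, 9)[:, None]
E = np.sqrt(2.0 / L) * np.sin(n * np.pi * xs / L)

x_c = np.array([0.9, 0.0, -0.1, 0.0])       # hypothetical reduced-model output
phi = np.array([0.02, -0.01, 0.0, 0.005])   # hypothetical parameterization values
u_approx = lift(x_c, phi, E)
u_series = lift(np.tile(x_c, (3, 1)), np.tile(phi, (3, 1)), E)   # 3 time instants
```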

V.3 Why energy flows in a certain direction: The role of non-Markovian effects

The sACE (76) is integrated over a large ensemble of noise paths ( members), from at up to a time . In our simulations with a fixed time horizon (), reaching a constant-sign state at the end () is a rare event, even though these states correspond to the deeper energy wells in the system’s landscape (as shown in Fig. 4). Conversely, sign-change states, which reside in shallower wells, are more common. This might seem counterintuitive from an energy perspective. It can, however, be explained by the degenerate noise (Eq. (79)) used to force the sACE: only a specific group of unresolved (stable) modes is excited by noise, which may limit the system’s ability to explore the deeper energy wells efficiently within the simulation timeframe.

Over time (as increases), the noise term in the sACE interacts with the nonlinear terms, gradually affecting the unforced resolved modes. Our experiments show that, for most noise paths, the solution amplitude carried by grows the fastest. Because is nearly aligned with (as shown in Table 1), this means that for most noise paths the solution is expected to reach a metastable state close to when the nonlinear effects become significant (i.e., when nonlinear saturation kicks in).

Understanding why energy primarily accumulates in the direction is complex. It stems from how noise initially affecting unresolved modes (modes to ) propagates through nonlinear interactions, gradually influencing larger-scale resolved modes. As the initial state () lacks energy, this noise-induced energy transfer to larger scales takes some time. Our analysis reveals that during the interval , the solution is dominated by these small-scale features inherited from the noise forcing (Table 2 and Fig. 3).

This finding has significant implications for closure methods: to accurately reproduce both rare and typical transitions, the underlying parameterization must effectively represent the system’s small-scale response to stochastic forcing before the transition occurs.

Figures 5 and 6 illustrate how the optimal parameterization and reduced model (Eq. (86)) capture the subtle differences in the small-scale component that ultimately lead to rare or typical events in the full sACE system. Notably, for both rare (Figure 5) and typical paths (Figure 6), the low-mode amplitudes of the sACE solution are near zero during the interval (Panels C-F and Table 2). Therefore, it is the spatial patterns of the small-scale component, rather than its energy content, that control the triggering of rare or typical events (Figure 3A and 3D). The successful parameterization of these small-scale features (Figures 5B and 6B) is crucial for the optimal reduced model’s ability to reproduce the key behaviors of the sACE’s large-scale dynamics.

The non-Markovian terms are essential for accurately capturing these small-scale patterns before a rare or typical event occurs. When large-scale mode amplitudes are near zero during interval I (Table 2), the optimal parameterization (Eq. (86)) is dominated by the (optimized) non-Markovian field given by:

| (88) | ||||

After this initial transient period, nonlinear effects become significant, and the large-scale mode amplitudes undergo substantial fluctuations. Panels C-F in Figures 5 and 6 demonstrate this behavior and the optimal reduced model’s ability to predict these large-scale fluctuations beyond interval I. The OPM reduced system’s ability to capture such transitions is rooted in its very structure. Its coefficients in Eq. (85) are nonlinear functionals of the non-Markovian -terms within , allowing for an accurate representation of the genuine interactions between the noise and the nonlinear terms in the original sACE, which drive fluctuations in the large-scale mode amplitudes (Modes 1 to 4).

After a transition occurs and energy is transferred to the low modes, the stochastic component and its non-Markovian terms become secondary while the nonlinear terms in the stochastic parameterization (82) become crucial for capturing the average behavior of the sACE dynamics, as highlighted in Section V.5 below.

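| Path | Mode 1 | Mode 2 | Mode 3 | Mode 4 | Mode 5 | Mode 6 | Mode 7 | Mode 8 |
|---|---|---|---|---|---|---|---|---|
| Rare path | 0 | 0.05% | 0.01% | 0 | 49.6% | 26.1% | 15.1% | 9.2% |
| Typical path | 0 | 0.22% | 0 | 0 | 34.8% | 33% | 19.7% | 12.4% |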
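The percentages in each row sum to about 100%. A minimal sketch of how such a per-mode breakdown can be computed from a time series of modal amplitudes, assuming (this is an assumption, not stated in the text) that each entry is the share of the time-accumulated squared amplitude over interval I; the function name and the sample data are hypothetical.

```python
import numpy as np

def energy_shares(amplitudes):
    """Percentage of the time-accumulated squared amplitude carried by each mode.

    amplitudes: array (n_times, n_modes) of modal amplitudes over the interval.
    A uniform time step cancels in the ratio, so it is omitted.
    """
    energy = (amplitudes**2).sum(axis=0)
    return 100.0 * energy / energy.sum()

# Hypothetical check: constant amplitudes whose squares are 0, 0, 0, 0, 4, 3, 2, 1
amps = np.tile(np.sqrt([0, 0, 0, 0, 4.0, 3.0, 2.0, 1.0]), (50, 1))
shares = energy_shares(amps)
```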

V.4 Rare and typical events statistics from non-Markovian reduced models

Here, we evaluate the ability of our non-Markovian reduced model (Eq. (86)) to statistically predict the rare and typical transitions discussed in the previous section. We test the reduced model’s performance in this task using a vast ensemble of one million noise paths.

Recall that the optimal parameterization (, defined in (82)) is trained on a single, “typical” noise path (see Fig. 8A). In other words, we minimized the parameterization defect in equation (53) for this specific path. Our key objective here is to assess how well the reduced model (Eq. (86)) can predict the distribution of final states (metastable states at time ) across a much larger set of out-of-sample noise paths.

To do so, we consider a natural “min+max” metric, obtained by adding the minimum and maximum values of the sACE’s solution profile at time . As the probability distribution of this metric shows, a value close to zero corresponds to a typical sign-change metastable state, while a value close to 1 or -1 corresponds to a rare constant-sign metastable state.
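The metric and its ensemble distribution are straightforward to compute. A minimal sketch on synthetic profiles (the tanh-shaped profiles, the classification threshold of 0.5, and all names are hypothetical): a sign-change profile reaching about -1 and +1 gives a metric near 0, while a constant-sign profile pinned to 0 at the Dirichlet boundaries gives a metric near +1 or -1.

```python
import numpy as np

def min_plus_max(profiles):
    """min_x u(x) + max_x u(x) for each final-time profile (rows of `profiles`)."""
    return profiles.min(axis=1) + profiles.max(axis=1)

def label_states(metric, threshold=0.5):
    """Hypothetical threshold: |metric| >= threshold flags a constant-sign (rare) state."""
    return np.where(np.abs(metric) >= threshold, "constant-sign", "sign-change")

xs = np.linspace(0.0, np.pi, 201)
profiles = np.array([
    np.tanh(10.0 * np.sin(2.0 * xs)),   # sign-change: min ~ -1, max ~ +1
    np.tanh(10.0 * np.sin(xs)),         # constant positive sign: min ~ 0, max ~ +1
    -np.tanh(10.0 * np.sin(xs)),        # constant negative sign: min ~ -1, max ~ 0
])
metric = min_plus_max(profiles)
labels = label_states(metric)
density, edges = np.histogram(metric, bins=24, range=(-1.2, 1.2), density=True)
```

For a real ensemble, `profiles` would hold one final-time profile per noise path, and `density` gives the empirical distribution to compare between the full and reduced models.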

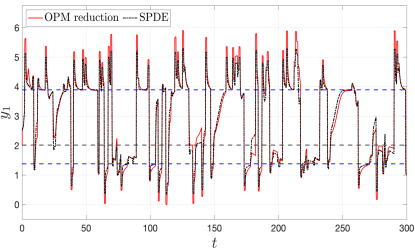

Figure 7A demonstrates the effectiveness of the non-Markovian optimal reduced model in capturing rare events. The optimal reduced system (Eq. (86)) can predict noise-driven trajectories connecting an initial state () to rare final states (sACE solution’s profile with a constant sign at time ) with good statistical accuracy (see Fig. 7A). Although these rare events occur less frequently in the reduced system than in the full sACE (smaller red bumps compared to black ones near 1 and -1), the ability of the reduced equation (86) to predict them highlights the optimal parameterization’s success in capturing the subtle interactions between noise and nonlinear effects responsible for these rare events.

Thus, the optimal reduced system faithfully reproduces the probability distribution of both common (frequently occurring sign-change profiles) and rare (constant-sign profiles) final states at time (Fig. 7A). This is particularly notable given that the model was trained on a single path, yet generalizes well to a large ensemble of out-of-sample paths.

Figure 8A shows the training stage over a typical transition path. The free parameter in the stochastic parameterization (see Eq. (82)) is optimized over this path by minimizing the normalized parameterization defects ( defined in Eq. (53)) for all the relevant (here ). We observe that and exhibit clear minima, whereas for the other modes the parameterization defect at finite -values is not substantially lower than its asymptotic value (as ). The same features are observed over a rare transition path; see Figure 8B. Recall that our reduced system is an ODE system whose stochasticity comes exclusively from its random coefficients, since the resolved modes in the full system are not directly forced by noise. It is this specificity that enables the reduced equations to effectively predict noise-induced transitions, including rare events. This capability relies on the optimized memory content embedded within the path-dependent, non-Markovian coefficients.
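A toy version of this training step: a single unresolved amplitude whose "true" value is a cubic function of a resolved amplitude with an unknown memory-dependent coefficient. Everything here is hypothetical: the factor (1 - exp(-delta tau)) / delta is an assumed form (not Eq. (44)), the data are synthetic, and the names are illustrative. The normalized defect is evaluated on a tau-grid and minimized, while the tau -> infinity value plays the role of the asymptotic defect.

```python
import numpy as np

def memory_factor(tau, delta):
    """ASSUMED tau-dependent factor (1 - exp(-delta*tau)) / delta, with delta > 0 a spectral gap."""
    return -np.expm1(-delta * tau) / delta

def normalized_defect(y, y_param):
    """Q = mean |y - Phi|^2 / mean |y|^2 over the training path."""
    return np.mean((y - y_param) ** 2) / np.mean(y**2)

rng = np.random.default_rng(1)
delta = 0.1                                   # small spectral gap (hypothetical)
x_path = 0.5 * rng.standard_normal(20_000)    # stand-in for resolved amplitudes on one path
tau_true = 3.0
y_path = memory_factor(tau_true, delta) * x_path**3 + 0.05 * rng.standard_normal(x_path.size)

tau_grid = np.linspace(0.0, 10.0, 101)
Q = np.array([normalized_defect(y_path, memory_factor(t, delta) * x_path**3) for t in tau_grid])
tau_opt = tau_grid[np.argmin(Q)]
Q_large_tau = normalized_defect(y_path, x_path**3 / delta)   # tau -> infinity limit
```

With a small gap, the tau -> infinity coefficient 1/delta badly overshoots, so the defect has a clear interior minimum near `tau_true`.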

Thus, the optimized, non-Markovian and nonlinear reduced equation (85) efficiently tracks how the noise, acting on the “unseen” part of the system, interacts with the resolved modes through these non-Markovian coefficients. While these coefficients effectively encode the fluctuations for a good reduction of the sACE system, they alone are insufficient to handle regimes with weak time-scale separation such as the one considered here. Understanding the average behavior of the resolved dynamics is equally important. The next section explores how our parameterization, particularly its nonlinear terms, plays a crucial role in capturing this average behavior.

V.5 Weak time-scale separation, nonlinear terms and conditional expectation

We assess the ability of our reduced system to capture this average dynamical behavior in the physical space. In that respect, Figures 7B and 7D show the expected profiles of the sACE’s states obtained at (solid black curves) from the sACE (76), along with their standard deviation (grey shaded areas), for the ensemble used for Fig. 7A. Similarly, Figures 7C and 7E show the expected profiles (solid red curves) with their standard deviation as obtained at from defined in Eq. (87), once the low-mode amplitude is simulated by the optimal reduced model (86) and lifted to by using the stochastic parameterization .
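The mean and spread shown in these panels are pointwise ensemble statistics. A minimal sketch, assuming each ensemble is stored as an array of profiles (one row per noise path); the tiny synthetic ensembles, the names, and the relative L2 comparison are hypothetical illustrations, not the paper's diagnostics.

```python
import numpy as np

def ensemble_mean_std(profiles):
    """Pointwise mean and population standard deviation (ddof=0) over members (axis 0)."""
    return profiles.mean(axis=0), profiles.std(axis=0)

def relative_l2(a, b):
    """Relative discrepancy ||a - b|| / ||b||, e.g. reduced versus full mean profiles."""
    return np.linalg.norm(a - b) / np.linalg.norm(b)

xs = np.linspace(0.0, np.pi, 101)
p = np.sin(xs)
full = np.array([p, p + 2.0, p - 2.0])      # hypothetical full-model ensemble (3 members)
reduced = np.array([p, p + 1.0, p - 1.0])   # hypothetical reduced-model ensemble
mean_full, std_full = ensemble_mean_std(full)
mean_red, std_red = ensemble_mean_std(reduced)
mean_error = relative_l2(mean_red, mean_full)
```

In this example the means coincide while the spreads differ, which is why both first- and second-order statistics are worth comparing.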

By conducting large-ensemble (online) simulations of our reduced model (Eq. (86)), we accurately reproduce the mean motion and second-order statistics of the original sACE. This success stems from our parameterization’s ability to effectively represent the average behavior of the unresolved variables during the offline training phase, as defined by the probability measure in (58). Figure 9 illustrates this point for the fifth and sixth modes’ amplitudes of the sACE system. Notably, the nonlinear nature of the Markovian optimal parameterization, evident after taking the expectation (see Eq. (89) below), highlights the crucial role of nonlinear terms in our stochastic parameterization (Eq. (82)) in achieving this accurate representation.

The manifold shown in Fig. 9A (resp. Fig. 9B) is obtained as the graph of the following Markovian OPM for the 6th (resp. 5th) mode’s amplitude, given by the expectation of Eq. (82) with (resp. ) after optimization (Fig. 8),

| (89) | ||||

for with and , where and denote the most probable values of the 3rd and 4th solution components, and , chosen to enable visualization as a 2D surface while favoring a certain “typicalness” of the visualized manifold. Note that the Markovian OPM corresponds simply to the deterministic cubic term in (82) after replacing by the optimal , since the remaining stochastic terms are equal to (see Remark III.1), an Itô stochastic integral whose expectation is zero.
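The vanishing of the stochastic terms under expectation can be checked numerically. A minimal Monte Carlo sketch, assuming that at a fixed resolved state the stochastic part of the parameterization is a finite-memory Itô integral with exponential weights (an assumption), and using a hypothetical cubic coefficient `D`: averaging over noise paths leaves only the deterministic cubic term.

```python
import numpy as np

rng = np.random.default_rng(2)
beta, tau, dt = -2.0, 1.0, 0.01            # hypothetical decay rate and memory length
m = int(round(tau / dt))
lags = np.arange(m, 0, -1) * dt            # t - s_j for the m increments in the window
weights = np.exp(beta * lags)

n_paths = 20_000
dW = np.sqrt(dt) * rng.standard_normal((n_paths, m))
Z = dW @ weights                           # left-point Ito sums, one per noise path

var_exact = dt * np.sum(weights**2)        # exact variance of the discrete sum
D, x = 2.5, 0.8                            # hypothetical cubic coefficient and resolved value
phi_samples = D * x**3 + Z                 # stochastic parameterization at fixed x
phi_markov = phi_samples.mean()            # approximately D * x**3, the Markovian part
```

Left-point (Itô) sums have increments independent of their weights, which is why the sample mean of `Z` tends to zero while its variance approaches `var_exact`.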

Without the optimization stage, the parameterizations of Section II lose accuracy when the memory content is taken to be , because of the small spectral gaps appearing in denominators. As a reminder, small spectral gaps are a known limitation of traditional invariant manifold techniques, often leading to inaccurate results. Optimizing the parameter helps address this issue: it introduces corrective terms such as in the cubic coefficients (, Eq. (44)) that effectively compensate for these small gaps. This is especially important for the fifth and sixth (unresolved) modes, whose parameterization defects show a clear minimum during optimization (Figure 8).
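To see how a finite memory can tame small gaps, consider a corrective factor of the following form. This form is an assumption made for illustration (the actual terms are those of Eq. (44)), with $\delta > 0$ denoting a spectral gap:

$$
\frac{1 - e^{-\delta\tau}}{\delta} \;\xrightarrow[\tau \to \infty]{}\; \frac{1}{\delta},
\qquad
\frac{1 - e^{-\delta\tau}}{\delta} \;\le\; \tau \quad \text{for all } \delta > 0,
\qquad
\frac{1 - e^{-\delta\tau}}{\delta} \;\xrightarrow[\delta \to 0]{}\; \tau .
$$

The $\tau \to \infty$ limit recovers an invariant-manifold-type coefficient $1/\delta$, which blows up as the gap closes, whereas at finite $\tau$ the coefficient stays bounded by $\tau$, since $1 - e^{-s} \le s$ for $s \ge 0$.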