Noise and Edge Based Dual Branch Image Manipulation Detection

Abstract

Unlike ordinary computer vision tasks, which focus mainly on the semantic content of images, image manipulation detection pays more attention to the subtle traces left by manipulation. In this paper, the noise image extracted by an improved constrained convolution is used as the model input instead of the original image, in order to obtain more subtle traces of manipulation. Meanwhile, a dual-branch network, consisting of a high-resolution branch and a context branch, is used to capture as many artifact traces as possible. Most manipulation operations leave artifacts along the manipulation edge, so a specially designed manipulation edge detection module is built on the dual-branch network to better identify these artifacts. The correlation between two pixels in an image is closely related to their distance: the farther apart they are, the weaker the correlation. We therefore add a distance factor to the self-attention module to better describe the correlation between pixels. Experimental results on four publicly available image manipulation datasets demonstrate the effectiveness of our model.

Index Terms:

Image forensics, image manipulation detection, image noise extraction, edge detection, self-attention with distance.

I Introduction

With the development of technology, image editing software such as Photoshop and GIMP makes it easy to manipulate images, and the traces of manipulation are often difficult for the human eye to discern. While image editing technology brings convenience, it also brings problems: if it is used for unlawful purposes, it can have serious negative consequences. A general-purpose image manipulation detection technique is therefore needed.

Image manipulation detection differs from general computer vision (CV) tasks such as object detection and semantic segmentation. Ordinary CV tasks focus on learning the semantic content of images, while image manipulation detection pays more attention to the details left by manipulation operations. Traditional image manipulation detection methods use cues such as color consistency [1], photo response non-uniformity [2], keypoint similarity of image blocks [3], and blur type inconsistency [4] to identify differences between manipulated and non-manipulated regions. However, each of these methods can detect only a specific type of manipulation and therefore does not generalize well. In addition, they cannot provide accurate pixel-level detection results.

Recent studies on image manipulation detection are mainly based on convolutional neural networks (CNNs). As the network depth increases, existing CNN architectures tend to learn features that represent the semantic content of images, which does not fully match the goal of image manipulation detection. To address this, the image is first processed by the spatial-domain rich model (SRM) [5] or by constrained convolution [6] to obtain the corresponding noise image, which is then fed into the subsequent neural network. However, the weights of SRM are fixed and cannot adapt well to different datasets, and, because of the constraint process, the weights of constrained convolution are prone to drastic changes in practical training (explained in detail in Section III-A). We therefore propose an improved constrained convolution to obtain the noise image.

The manipulation edge carries critical information for manipulation detection, because the three basic manipulation operations (copy-move, splicing, and removal) all leave artifacts on the manipulation boundary. To capture such subtle information, the features in the CNN need to maintain a high resolution. However, because convolution is local, a certain degree of downsampling is indispensable for obtaining sufficient image context. For this reason, both the bilateral segmentation network (BiSeNet) [7] and the deep dual-resolution network (DDRNet) [8] are built with two branches: one branch obtains the context information of the image, and the other maintains a certain resolution to avoid losing too much detail. We build our model on this dual-branch structure and design an edge prediction module that detects manipulation edges from the features of both branches. With manipulation edge supervision, the overall detection performance improves considerably.

Global correlation information is necessary for manipulation detection, but because convolution is local, it is difficult for CNN-based models to obtain global correlations. Similar to self-attention [9] in natural language processing (NLP), the non-local module [10] is therefore used in CNNs to obtain the correlation between each pixel and all other pixels, i.e., the global correlation. However, the spatial correlations among image pixels are richer than those in NLP: the spatial correlations among words are one-dimensional, whereas those among pixels are two-dimensional. The non-local module overcomes the distance limit of convolution when calculating the correlation between pixels, but at the same time it ignores the distance relationship between them. We therefore add a distance metric to the non-local module so that it can better describe the global correlations among pixels.

Overall, the main contributions of this work are as follows.

- We optimize the constrained convolution process to make constrained convolution more stable and easier to train.
- We design a dual-branch network and a corresponding manipulation edge detection module; edge detection improves the overall manipulation detection performance.
- We add a distance factor to the non-local module so that it can better capture the global correlations among pixels.
- Results on four publicly available manipulation datasets demonstrate that our model has advantages over state-of-the-art manipulation detection models.

II Related Work

Image manipulation includes three primary methods: copy-move, splicing, and removal. Some works target a specific manipulation method. Wu et al. [11] designed a parallel dual-branch network, consisting of a manipulation detection branch and a similarity detection branch, to detect copy-move manipulation; the network can distinguish the source region from the target region. Islam et al. [12] proposed a generative adversarial network based on a two-order attention mechanism to detect and localize copy-move manipulation. Mayer et al. [13] extracted features from two blocks of an image with a CNN and computed the similarity of the blocks to judge whether the two blocks carry different forensic traces. Such forensic traces are independent of semantic content; if the traces differ, the image contains a splicing operation.

The generality of the above works is limited. Although the three types of manipulation are quite different, the traces they leave behind have commonalities. As shown in Table I, in copy-move manipulation the manipulated region and the non-manipulated region generally come from the same image, so the two are similar in both the original image and the noise image. In splicing manipulation the two regions come from different images, so they are dissimilar in both the original image and the noise image. Because removal can be performed in many ways, the two regions may be either similar or dissimilar in the original image and the noise image. In addition, all three types of manipulation leave inconsistent traces at the manipulation edge. An inconsistency in the original image (i.e., in semantic content) does not by itself indicate manipulation, whereas an inconsistency in noise or along the edge can serve as evidence of manipulation. Generic image manipulation detection can therefore be achieved by exploiting the image’s noise and edge information.

| Manipulation type | Original image | Noise | Edge |
|---|---|---|---|
| Copy-move | Y | Y | N |
| Splicing | N | N | N |
| Removal | Y / N | Y / N | N |

Zhou et al. [14] used SRM to extract the noise of an image; the original image and the noise are fed simultaneously into Faster R-CNN [15] for general-type manipulation detection. Because the SRM weights are fixed, Bayar et al. [6] impose constraints on an ordinary convolution kernel after each back-propagation update, so that the kernel remains trainable while suppressing the semantic content of the image. Because Faster R-CNN is not suited to producing pixel-level results, Yang et al. [16] added constrained convolution to Mask R-CNN [17] for more accurate predictions. Wu et al. [18] used the original image together with two noise images, obtained by SRM and constrained convolution respectively, as the model’s input, and used a long short-term memory (LSTM) cell to simulate the law of “near big and far small”. As a result, more fine-grained manipulation operations can be identified.

Because the three basic manipulation methods leave traces on the manipulation edge, detecting the manipulation edge correctly benefits the overall detection performance. Salloum et al. [19] added a manipulation edge detection task to a fully convolutional network, which can simultaneously predict the manipulated region and its edge. Zhou et al. [20] selected features from the middle three blocks of DeepLab [21] to construct an edge detection task and used the predicted manipulation edge to refine the overall manipulation region prediction. Chen et al. [22] added the traditional Sobel edge detection operator to the progressive edge extraction structure of the Border Network [23], which yields better edge predictions.

In addition, the global correlations between each pixel and the other pixels are significant for image manipulation detection. Wang et al. [10] implemented in the CV domain a self-attention mechanism similar to that used in NLP. Because this method is computationally intensive, Zhu et al. [24] reduced the computation by compressing the dimensions of the key and value or by using the key directly as the value. Huang et al. [25] computed the correlations of each pixel using only the pixels at its cross-shaped positions, thus excluding most relatively unimportant pixels; this reduces computation while maintaining performance. The methods above compute correlations only in the spatial dimension. Fu et al. [26] additionally calculated correlations along the channel dimension and fused them with the spatial correlations.

III Proposed Method

As shown in Fig. 1, we propose a model named NEDB-Net. The improved constrained convolution processes the input image to obtain the corresponding noise image, which is then fed into a dual-branch network with ResNet-34 [27] as the backbone. The high-resolution branch maintains the feature resolution to preserve more detail, and the context branch obtains richer correlations among pixels. The edge extraction block (EEB) extracts a manipulation edge prediction from the features output by each layer of the model, and all the edge predictions are then fused into the final edge prediction. Features of the context branch are refined by the attention modules and fused with the features of the high-resolution branch. The fused feature is upsampled and convolved to obtain the final mask of the manipulated regions.

III-A Improved Constrained Convolution

Ordinary CNNs tend to learn features that represent the semantic information of images rather than manipulation details. To address this, some works use SRM, shown in Fig. 2, to obtain the noise image corresponding to the input image and use it as the model input. SRM is essentially a set of high-pass filters whose different weights extract different high-frequency information from the image.
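As a minimal illustration of why such high-pass filters suppress semantic content, the Python sketch below applies a 3×3 kernel of the kind used in SRM (its weights sum to zero) to toy grayscale images. The kernel values, the helper `conv2d_valid`, and the toy images are assumptions for illustration and are not necessarily the filters shown in Fig. 2.

```python
# Minimal sketch: high-pass filtering with a 3x3 SRM-style kernel.
# Images are lists of lists of floats; no padding ("valid" output).

# Second-order kernel whose weights sum to 0 (illustrative choice).
SRM_3X3 = [
    [-0.25, 0.5, -0.25],
    [0.5, -1.0, 0.5],
    [-0.25, 0.5, -0.25],
]


def conv2d_valid(image, kernel):
    """Cross-correlate `image` with `kernel`, as CNN layers do."""
    kh, kw = len(kernel), len(kernel[0])
    out = []
    for i in range(len(image) - kh + 1):
        row = []
        for j in range(len(image[0]) - kw + 1):
            row.append(sum(kernel[u][v] * image[i + u][j + v]
                           for u in range(kh) for v in range(kw)))
        out.append(row)
    return out


# Smooth content (a horizontal intensity ramp) is filtered out completely...
ramp = [[10.0 + 3.0 * j for j in range(6)] for _ in range(6)]
print(conv2d_valid(ramp, SRM_3X3)[0])  # [0.0, 0.0, 0.0, 0.0]

# ...while an isolated bright pixel, a high-frequency trace, is kept.
spike = [[0.0] * 5 for _ in range(5)]
spike[2][2] = 1.0
print(conv2d_valid(spike, SRM_3X3)[1][1])  # -1.0
```

Because every row of this kernel is a second difference, any content that varies linearly across the image produces a zero response, which is the sense in which the filter keeps only high-frequency residue.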

Because the weights of SRM are predefined and cannot be learned, some works use constrained convolution instead. Constrained convolution imposes constraints after each weight update, so that the weight distribution of the kernels resembles that of a high-pass filter. The constraints are as follows:

$$\begin{cases} w_k(0,0) = -1, \\ \sum_{(m,n) \neq (0,0)} w_k(m,n) = 1, \end{cases} \tag{1}$$

where $w_k$ represents the $k$-th convolution kernel, $(0,0)$ is the coordinate of the center position of $w_k$, and $(m,n)$ is a non-center position coordinate. After the constraints are applied, the center weight of the convolution kernel is -1 and the weights of the other positions sum to 1. The constraints can be implemented with the following steps:

1. Calculate the sum of the weights of the non-center positions.
2. Divide the weights of the non-center positions by the sum from step 1.
3. Set the center weight to -1.

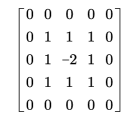

Although constrained convolution solves the problem that SRM cannot be trained, it is unstable in actual training, which leads to poor results. As shown in Fig. 3, assume that the matrix in Fig. 3(a) is the weight of a convolution kernel after a back-propagation update, and Fig. 3(b) is the result after the constraints are applied. Because the weights before the constraints may be positive or negative, their sum may be negative, and its absolute value may be relatively small. In this case, dividing all non-center weights by this sum, as the constraint rules require, causes three problems:

- Dividing by a relatively small sum greatly amplifies the weights, while the center weight remains -1. The non-center weights and the center weight then differ by orders of magnitude, which degrades the high-pass filtering.
- If the sum is negative, the division turns positive weights negative and negative weights positive, causing the model’s input to change drastically.
- The weights of the constrained convolution can differ by orders of magnitude from the weights of the subsequent ordinary convolutions, which disturbs the weights already trained and causes training to fluctuate.
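The three steps of the original constraint, and the failure cases listed above, can be reproduced with a few lines of plain Python. This is a minimal sketch assuming a single 3×3 kernel stored as nested lists with the center at index (1, 1); the function name `original_constraint` and the example weights are hypothetical and do not come from the authors' code.

```python
# Minimal sketch of the original constraint of Eq. (1) on one 3x3 kernel.

def original_constraint(kernel):
    c = len(kernel) // 2  # index of the centre row/column
    # Step 1: plain sum of the non-centre weights (may be negative or tiny).
    s = sum(w for i, row in enumerate(kernel) for j, w in enumerate(row)
            if (i, j) != (c, c))
    # Step 2: divide every weight by that sum (the centre is overwritten next).
    out = [[w / s for w in row] for row in kernel]
    # Step 3: force the centre weight to -1.
    out[c][c] = -1.0
    return out


# Well-behaved update: all non-centre weights positive (sum 1.2).
good = [[0.1, 0.2, 0.1], [0.2, 0.7, 0.2], [0.1, 0.2, 0.1]]
print([round(w, 3) for w in original_constraint(good)[0]])  # [0.083, 0.167, 0.083]

# Problematic update: mixed signs whose sum is small and negative (-0.02).
bad = [[0.3, -0.5, 0.2], [-0.4, 0.9, 0.1], [0.2, -0.3, 0.38]]
for row in original_constraint(bad):
    print([round(w, 1) for w in row])
# Signs flip and magnitudes explode (about -15, 25, -10, ...), while the
# centre stays at -1.
```

Note that the problematic kernel still satisfies Eq. (1) exactly; the instability comes from how the constraint is enforced, not from the constraint itself.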

The kernel weights shown in Fig. 4 are the result of training with the original constrained convolution; the weights differ greatly in magnitude. To address the above issues, we impose new constraints on the convolution. The training process of the improved constrained convolution is shown in Algorithm 1.

First, as shown in Fig. 5(a), we draw on the traditional Laplacian edge detection operator to initialize the convolution kernel. Unlike the Laplacian operator, we set the center weight to -1 and every other weight to 1 divided by the number of non-center positions. The resulting initial weights are shown in Fig. 5(b). On this basis, a distance factor is further added: each non-center weight is made inversely proportional to its distance from the center, while the non-center weights still sum to 1. For example, for a 3 × 3 convolution kernel, if the Euclidean distance is used to measure the distance between the center position and the other positions, there are only two distances, 1 and 1.414, and each occurs at 4 positions. Letting $x$ denote the weight at distance 1, the following equation can be constructed:

$$4 \cdot \frac{x}{1} + 4 \cdot \frac{x}{1.414} = 1 \tag{2}$$

Solving the equation gives $x \approx 0.146$, so the weight of each position at distance 1 is 0.146, and the weight of each position at distance 1.414 is $0.146 / 1.414 \approx 0.104$. The overall weights are shown in Fig. 5(c). We initialize the constrained convolution in this way.
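The distance-weighted initialization can be checked numerically. The sketch below assumes, as the numbers in Eq. (2) imply, that each non-center weight is inversely proportional to its Euclidean distance from the center and that the non-center weights sum to 1; it uses the exact value √2 instead of 1.414, and the helper name `laplace_like_distance_init` and its generalization to k × k kernels are illustrative assumptions.

```python
import math


def laplace_like_distance_init(k=3):
    """Centre weight -1; every other weight is x / d, where d is its
    Euclidean distance to the centre and x makes those weights sum to 1."""
    c = k // 2
    inv_dist = [[0.0 if (i, j) == (c, c) else 1.0 / math.hypot(i - c, j - c)
                 for j in range(k)] for i in range(k)]
    # Solve sum_over_positions(x / d) = 1 for x (Eq. (2) when k = 3).
    x = 1.0 / sum(sum(row) for row in inv_dist)
    kernel = [[x * v for v in row] for row in inv_dist]
    kernel[c][c] = -1.0
    return kernel


for row in laplace_like_distance_init():
    print([round(w, 3) for w in row])
# [0.104, 0.146, 0.104]
# [0.146, -1.0, 0.146]
# [0.104, 0.146, 0.104]
```

The whole kernel sums to 0, so, like SRM, it gives no response on constant image regions from the very first training step.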

Second, the divisor is the sum of the absolute values of the weights rather than their plain sum, which prevents it from being negative or very small in absolute value. Third, after the division, small positive values and all negative values are set to 0.001, so that their effect is small but the values do not become vanishingly small. Finally, the center weight is not strictly set to -1 but to the negative of the sum of all non-center weights. With these constraints, the convolution acts as a high-pass filter and fluctuations during training are reduced.
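The improved constraint can be sketched in the same style. This is an illustration, not the authors' Algorithm 1: it assumes that the absolute-value sum is taken over the non-center weights (the positions that are divided), and that "small positive values" means values below 0.001, so that both these and all negative values are raised to 0.001. The function name `improved_constraint` and the example kernel are hypothetical.

```python
# Minimal sketch of the improved constraint on one square kernel.

def improved_constraint(kernel, floor=0.001):
    c = len(kernel) // 2
    non_centre = [(i, j) for i in range(len(kernel))
                  for j in range(len(kernel)) if (i, j) != (c, c)]
    # Divide by the sum of absolute values: positive, so no sign flips.
    s = sum(abs(kernel[i][j]) for i, j in non_centre)
    out = [row[:] for row in kernel]
    for i, j in non_centre:
        # Negative and very small values are raised to `floor` (assumed threshold).
        out[i][j] = max(kernel[i][j] / s, floor)
    # Centre weight is minus the sum of the others, so the kernel sums to 0.
    out[c][c] = -sum(out[i][j] for i, j in non_centre)
    return out


# A mixed-sign update whose plain non-centre sum is -0.02, which the
# original constraint would amplify and sign-flip.
bad = [[0.3, -0.5, 0.2], [-0.4, 0.9, 0.1], [0.2, -0.3, 0.38]]
for row in improved_constraint(bad):
    print([round(w, 3) for w in row])
# [0.126, 0.001, 0.084]
# [0.001, -0.499, 0.042]
# [0.084, 0.001, 0.16]
```

All weights stay within [-1, 1] and the kernel still sums to 0, so it remains a high-pass filter whose scale is comparable to that of the subsequent ordinary convolutions.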

III-B Dual Branch Network and Edge Extraction Block

For image manipulation detection, the traces of manipulation are very subtle. Besides the traces in the manipulated regions, the subtle differences between the manipulation edge and the surrounding non-manipulated region are also very important, as described in Section II. To capture this subtle information, features in the CNN need to be kept at a relatively high resolution. However, because convolution is local, it is difficult to obtain sufficient contextual information from high-resolution features.

To solve this problem, we draw on BiSeNet and DDRNet to design a dual-branch network with ResNet-34 as the backbone. As shown in Fig. 1, the high-resolution branch keeps the feature’s height and width at 1/8 of the input image to retain more detail, while the context branch reduces the feature’s height and width to 1/32 of the input image through multiple convolutions to obtain richer contextual information. Finally, the features of the two branches are fused to obtain features with both sufficient context and sufficient detail.

Because each layer of the CNN learns different feature content, we extract the manipulation edge from the features output by each layer of the network. To extract edges better, we propose a specially designed edge extraction block (EEB), whose flow is shown in Fig. 6. To reduce computation while making full use of the feature information, EEB first uses a convolution to reduce the number of channels to 1/4 of the original and then applies residual learning. Finally, a convolution reduces the number of channels to 1.

III-C Self-attention Mechanism with the Distance Factor

The global correlations of pixels in features are essential both for common CV tasks and for image manipulation detection. Because convolution is local, it is difficult to obtain a complete global relationship with it. Therefore, similar to the self-attention mechanism in NLP, the non-local module is applied to CV tasks; it breaks through the distance limitation of convolution and obtains the correlations between each pixel and all other pixels.

However, ignoring the channel dimension, an image is two-dimensional and contains much richer spatial information than one-dimensional natural language. The influence of one pixel on another is closely related to their distance: the closer two pixels are, the stronger their correlation (pixels of the same class are positively correlated, and pixels of different classes are negatively correlated). As shown in Fig. 7, assume that the yellow part of the figure is class A and the rest is class B. Points a, b, and c belong to class A, so they are positively correlated. Because point a is closer to point b than to point c, the correlation between a and b is stronger. Points a and d belong to different classes, so they are negatively correlated; but because point a is closer to point d than to point b, the correlation between a and d is stronger in magnitude.

The non-local module calculates correlations among pixels regardless of distance, thereby ignoring the effect of distance on correlation. To reflect the distance relationship between pixels, we use the Euclidean distance to construct a matrix of the distances from each pixel to every other pixel. As shown in Fig. 8(a), for an image with a height of 2 and a width of 2, the corresponding distance matrix D, shown in Fig. 8(b), has a size of 4 × 4, because the image has 4 pixels in total. The element $D_{ij}$ of the distance matrix represents the distance from the i-th pixel to the j-th pixel, where i is the row index and j is the column index. As shown in Fig. 9, the matrix Cor obtained by multiplying Q and K in the non-local module represents the basic correlations between each pixel and the other pixels. We obtain the matrix Cor_D by dividing the basic correlation matrix Cor element-wise by the distance matrix D; Cor_D represents the distance-adjusted correlation. The final relationship among pixels is then obtained with a Softmax operation. As shown in Fig. 8(c), to avoid division by 0, we add 1 to every element of the distance matrix D.
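The distance adjustment can be sketched in plain Python. This minimal version assumes that the Q·K correlation matrix `cor` has already been computed and that pixels are indexed in row-major order; the helper names are hypothetical, and a real implementation would operate on batched tensors.

```python
import math


def distance_matrix(h, w):
    """(h*w) x (h*w) Euclidean distances between pixels in row-major order."""
    coords = [(i, j) for i in range(h) for j in range(w)]
    return [[math.dist(p, q) for q in coords] for p in coords]


def softmax(row):
    m = max(row)  # subtract the maximum for numerical stability
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def distance_attention(cor, h, w):
    """Divide Cor element-wise by (D + 1), then apply a row-wise Softmax."""
    d = distance_matrix(h, w)
    cor_d = [[c / (dist + 1.0) for c, dist in zip(c_row, d_row)]
             for c_row, d_row in zip(cor, d)]
    return [softmax(row) for row in cor_d]


# 2 x 2 image from Fig. 8: 4 pixels, so D is 4 x 4.
for row in distance_matrix(2, 2):
    print([round(v, 3) for v in row])
# [0.0, 1.0, 1.0, 1.414]
# [1.0, 0.0, 1.414, 1.0]
# [1.0, 1.414, 0.0, 1.0]
# [1.414, 1.0, 1.0, 0.0]

# With identical raw correlations, nearer pixels now receive larger weights.
attn = distance_attention([[2.0] * 4 for _ in range(4)], 2, 2)
print([round(a, 3) for a in attn[0]])
```

Because D depends only on the feature-map size, it can be computed once per resolution and reused for every image; its size, (HW) × (HW), matches that of the attention matrix itself.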

III-D Manipulation Regions and Manipulation Edges Prediction

Because the features of the high-resolution branch and the context branch contain information of different granularities, we use BiSeNet’s attention refinement module (ARM) and feature fusion module (FFM) to fuse them for predicting the manipulation mask. ARM and FFM are shown in Fig. 10(a) and Fig. 10(b), respectively.

First, ARM is used to refine the features output by layer 3l and layer 4l along the channel dimension:

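$$F_{3l}^{a} = \mathrm{ARM}(F_{3l}), \qquad F_{4l}^{a} = \mathrm{ARM}(F_{4l}) \tag{3}$$

where $F_{3l}$ and $F_{4l}$ are the features output by layer 3l and layer 4l, and $F_{3l}^{a}$ and $F_{4l}^{a}$ are the ARM-refined features. The non-local module is then used to perform self-attention in the spatial dimension:

$$F_{3l}^{n} = \mathrm{NL}(F_{3l}^{a}), \qquad F_{4l}^{n} = \mathrm{NL}(F_{4l}^{a}) \tag{4}$$

where $F_{3l}^{n}$ and $F_{4l}^{n}$ are the features refined by the non-local module. We then add the feature output by layer 4h, $F_{4h}$, element-wise to $F_{3l}^{n}$ and to $F_{4l}^{n}$, respectively. Because these features differ in resolution and number of channels, they are first aligned using bilinear upsampling and convolution. Finally, FFM is used to obtain the fused feature:

$$\begin{aligned} S_{3} &= F_{4h} + \mathcal{U}\big(\mathcal{C}(F_{3l}^{n})\big), \qquad S_{4} = F_{4h} + \mathcal{U}\big(\mathcal{C}(F_{4l}^{n})\big), \\ ff &= \mathrm{FFM}(S_{3}, S_{4}) \end{aligned} \tag{5}$$

where $\mathcal{U}(\cdot)$ represents spatial upsampling, $\mathcal{C}(\cdot)$ represents a convolution, and $ff$ represents the fused feature, which has 256 channels.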

A convolution is applied after upsampling $ff$ by a factor of 2, and this operation is repeated three times to obtain the final prediction of the manipulated regions. The three upsampling steps bring the resolution of $ff$ to 1/4, 1/2, and 1/1 of the original input, so the prediction has the same resolution as the input. The three convolutions reduce the number of channels to 1/4, 1/16, and 1/256 of that of the initial $ff$, so the final prediction has a single channel. These operations prevent the number of channels from decreasing too drastically during upsampling, thereby increasing the accuracy of the manipulation prediction.
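The resolution and channel schedule of the prediction head can be traced with simple arithmetic. The sketch assumes a 512 × 512 input (Section IV-A) and that ff starts at the high-resolution branch's 1/8 scale with 256 channels; the function name `head_shapes` is hypothetical.

```python
def head_shapes(input_size=512, ff_channels=256, ff_scale=8,
                channel_divisors=(4, 16, 256)):
    """Return (channels, height, width) of ff and of the output of each
    upsample-by-2 + convolution stage of the prediction head."""
    size = input_size // ff_scale
    shapes = [(ff_channels, size, size)]
    for divisor in channel_divisors:
        size *= 2  # x2 upsampling doubles height and width
        shapes.append((ff_channels // divisor, size, size))
    return shapes


print(head_shapes())
# [(256, 64, 64), (64, 128, 128), (16, 256, 256), (1, 512, 512)]

# For reference, the two branches of a 512 x 512 input:
print(512 // 8, 512 // 32)  # 64 16  (high-resolution and context branch sizes)
```

The channel count shrinks by a factor of 4, then 4, then 16, rather than collapsing from 256 to 1 in a single step.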

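We use the EEB described in Section III-B to predict manipulation edges separately from the features output by the six layers of the model. Because the feature output by layer 1 is 1/4 of the original input size, we upsample the other five edge predictions to the same size. After the six predictions are concatenated along the channel dimension, a convolution produces the final manipulation edge prediction:

$$\begin{aligned} E_i &= \mathrm{EEB}(F_i), \quad i = 1, \dots, 6, \\ E &= \mathcal{C}\big(\mathrm{Cat}(E_1, \mathcal{U}(E_2), \dots, \mathcal{U}(E_6))\big) \end{aligned} \tag{6}$$

where $F_i$ is the feature output by the i-th of the six layers, $\mathrm{Cat}(\cdot)$ represents concatenation along the channel dimension, $E_1, \dots, E_6$ represent the six edge predictions, $\mathcal{U}(\cdot)$ represents upsampling to the size of $E_1$, and $\mathcal{C}(\cdot)$ represents the convolution applied to the concatenated predictions.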
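Eq. (6) can be sketched for single-channel edge maps stored as nested lists. This illustration assumes nearest-neighbour upsampling (the upsampling method for the edge maps is not specified here), map sizes that divide the 1/4-scale size evenly, and a 1 × 1 fusing convolution, which reduces to a per-pixel weighted sum over the six channels; the helper names, weights, and map sizes are hypothetical.

```python
def upsample_nearest(m, factor):
    """Repeat each value `factor` times along both axes."""
    return [[m[i // factor][j // factor] for j in range(len(m[0]) * factor)]
            for i in range(len(m) * factor)]


def fuse_edges(edge_maps, weights, bias=0.0):
    """edge_maps[0] is at the target (1/4) scale; the others are upsampled to
    it, stacked as channels, and mixed by a 1x1 convolution."""
    h, w = len(edge_maps[0]), len(edge_maps[0][0])
    stacked = [edge_maps[0]] + [upsample_nearest(m, h // len(m))
                                for m in edge_maps[1:]]
    return [[bias + sum(wt * ch[i][j] for wt, ch in zip(weights, stacked))
             for j in range(w)] for i in range(h)]


print(upsample_nearest([[1, 2], [3, 4]], 2))
# [[1, 1, 2, 2], [1, 1, 2, 2], [3, 3, 4, 4], [3, 3, 4, 4]]

# Six toy predictions at 8x8 (layer 1) and coarser sizes, equal weights.
sizes = [8, 4, 4, 4, 2, 2]
maps = [[[1.0] * s for _ in range(s)] for s in sizes]
fused = fuse_edges(maps, weights=[1 / 6] * 6)
print(len(fused), len(fused[0]))  # 8 8
```

In a trained model the six fusion weights would be learned, letting the network decide how much each layer's edge prediction contributes.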

| Dataset | Copy-move | Splicing | Removal | Total |
|---|---|---|---|---|
| CASIAv1 | 459 | 461 | 0 | 920 |
| CASIAv2 | 3263 | 1843 | 0 | 5106 |
| COVERAGE | 93 | 0 | 0 | 93 |
| COLUMBIA | 0 | 180 | 0 | 180 |
| NIST16 | 68 | 288 | 208 | 564 |

The manipulated regions and manipulation edges occupy only small parts of the image. To alleviate the imbalance between the numbers of manipulated and non-manipulated pixels, we use Dice loss [28] as the loss function. The ground truth of the manipulated regions is dilated and eroded to obtain $G_d$ and $G_e$, respectively, and the difference $G_d - G_e$ gives the ground truth of the manipulation edges. The overall loss consists of the manipulation region prediction loss and the manipulation edge prediction loss, and is calculated as follows:

| (7) |

where represents the loss of the manipulation regions, represents the loss of the manipulation edges, and is the weight.
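The construction of the edge ground truth and a Dice loss can be sketched for binary masks stored as nested lists. This minimal version assumes a 3 × 3 square structuring element (its size is not stated in the text) and uses one common soft-Dice formulation with a small smoothing term `eps`; it does not reproduce the weighting in Eq. (7), and the helper names are hypothetical.

```python
def _morph(mask, reduce_fn):
    """3x3 binary dilation (reduce_fn=any) or erosion (reduce_fn=all).
    Pixels outside the image count as background (0)."""
    h, w = len(mask), len(mask[0])

    def val(i, j):
        return mask[i][j] if 0 <= i < h and 0 <= j < w else 0

    return [[1 if reduce_fn(val(i + di, j + dj)
                            for di in (-1, 0, 1) for dj in (-1, 0, 1)) else 0
             for j in range(w)] for i in range(h)]


def edge_ground_truth(mask):
    """Dilated mask minus eroded mask: a thin band around the region boundary."""
    dilated, eroded = _morph(mask, any), _morph(mask, all)
    return [[d - e for d, e in zip(dr, er)] for dr, er in zip(dilated, eroded)]


def dice_loss(pred, target, eps=1e-6):
    """Soft Dice loss between predicted probabilities and a binary mask."""
    p = [v for row in pred for v in row]
    g = [v for row in target for v in row]
    intersection = sum(a * b for a, b in zip(p, g))
    return 1.0 - (2.0 * intersection + eps) / (sum(p) + sum(g) + eps)


# 5x5 mask with a 3x3 manipulated square in the middle.
mask = [[1 if 1 <= i <= 3 and 1 <= j <= 3 else 0 for j in range(5)]
        for i in range(5)]
edge = edge_ground_truth(mask)
print(sum(map(sum, edge)))  # 24: every pixel except the eroded centre
print(dice_loss(mask, mask))  # 0.0 for a perfect prediction
```

Dice loss depends on the overlap relative to the total predicted and true area, so a small manipulated region is not swamped by the many background pixels, which is the imbalance the text refers to.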

IV Experiment

IV-A Experimental Setup

Implementation Details. We implement our model with the PyTorch framework. The high-resolution branch and the context branch are initialized with the weights of ResNet-34 pre-trained on ImageNet [29]. Input images are resized to 512 × 512. Pixel values are divided by 255 for normalization and then standardized by subtracting the mean and dividing by the standard deviation. The means of the three BGR channels are 0.406, 0.456, and 0.485, and the standard deviations are 0.225, 0.224, and 0.229, respectively. Flipping and mirroring are used for simple data augmentation. The training batch size is 48, and the weight in the loss function is set to 0.3. We train the model for 12K steps. The learning rate is initially set to 0.01 and reduced to 0.0075, 0.005, and 0.0025 after 5K, 7.5K, and 10K steps, respectively. All experiments are performed on a single NVIDIA Tesla V100 GPU with 32 GB of memory. The source code is available at: https://github.com/kakashiz/NEDB-Net.
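The preprocessing and learning-rate schedule described above can be summarized in a short sketch. The listed divisors (0.225, 0.224, 0.229 in BGR order) match the usual ImageNet per-channel standard deviations, so the sketch divides by them directly; treating each decay as taking effect exactly at step 5K, 7.5K, and 10K is an assumption, and the function names are hypothetical.

```python
MEAN_BGR = (0.406, 0.456, 0.485)
STD_BGR = (0.225, 0.224, 0.229)


def normalize_pixel(bgr):
    """Scale a BGR pixel from [0, 255] to [0, 1], then standardize per channel."""
    return tuple((v / 255.0 - m) / s for v, m, s in zip(bgr, MEAN_BGR, STD_BGR))


def learning_rate(step):
    """Piecewise-constant schedule over the 12K training steps."""
    if step < 5000:
        return 0.01
    if step < 7500:
        return 0.0075
    if step < 10000:
        return 0.005
    return 0.0025


print([learning_rate(s) for s in (0, 5000, 7500, 10000, 11999)])
# [0.01, 0.0075, 0.005, 0.0025, 0.0025]
```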

Evaluation Criteria. We evaluate the predictions with pixel-level precision, recall, F1, and AUC. Because the optimal threshold cannot be determined for data without ground truth, we use a fixed threshold of 0.5, the midpoint of the output range, to separate the positive and negative classes.
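For reference, the pixel-level metrics can be computed as in the sketch below. It assumes that scores greater than or equal to 0.5 count as positive and that the pixels of an image are pooled into flat lists; whether results are averaged per image or pooled across a dataset is not stated here, and the helper names are hypothetical. AUC is computed by the pairwise (Mann-Whitney) definition, which is quadratic and only suitable for small examples.

```python
def precision_recall_f1(scores, labels, threshold=0.5):
    preds = [1 if s >= threshold else 0 for s in scores]
    tp = sum(1 for p, y in zip(preds, labels) if p == 1 and y == 1)
    fp = sum(1 for p, y in zip(preds, labels) if p == 1 and y == 0)
    fn = sum(1 for p, y in zip(preds, labels) if p == 0 and y == 1)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return precision, recall, f1


def pixel_auc(scores, labels):
    """Probability that a random positive pixel outscores a random negative
    one (ties count 1/2)."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0
               for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


scores = [0.9, 0.8, 0.3, 0.6, 0.2]  # predicted manipulation probabilities
labels = [1, 1, 1, 0, 0]            # ground truth per pixel
print(precision_recall_f1(scores, labels))  # approximately (0.667, 0.667, 0.667)
print(pixel_auc(scores, labels))            # 5 of 6 pairs ranked correctly
```

Precision, recall, and F1 depend on the 0.5 threshold, whereas AUC is threshold-free, which is why the two kinds of metric are reported together.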

| Method | CASIAv1 | COVERAGE | COLUMBIA | NIST16 | Mean |
|---|---|---|---|---|---|
| FCN | 0.441 | 0.199 | 0.223 | 0.167 | 0.258 |
| HP-FCN | 0.154 | 0.003 | 0.067 | 0.121 | 0.086 |
| ManTra-Net | 0.155 | 0.286 | 0.364 | 0.000 | 0.201 |
| CR-CNN | 0.405 | 0.291 | 0.436 | 0.238 | 0.343 |
| GSR-Net | 0.387 | 0.285 | 0.613 | 0.283 | 0.392 |
| MVSS-Net | 0.452 | 0.453 | 0.638 | 0.292 | 0.459 |
| D-FCN (pre-train) | 0.007 | 0.194 | 0.376 | 0.402 | 0.245 |
| D-FCN (re-train) | 0.331 | 0.260 | 0.233 | 0.136 | 0.240 |
| Ours | 0.516 | 0.461 | 0.756 | 0.288 | 0.505 |

IV-B Comparison with the State of the Art

Datasets. We use five publicly available image manipulation datasets in our experiments: CASIAv1 [30], CASIAv2 [31], COVERAGE [32], COLUMBIA [33], and NIST16 [34]. Table II lists the number of images of each manipulation type in each dataset. Note that some manipulated images lack a corresponding ground truth or have a ground truth whose shape does not match the image; Table II counts only the valid manipulated images.

Evaluation method. Many studies train the model on other large datasets and then test it on the above datasets, or fine-tune on part of each dataset and test on the rest. We do not consider this comparison particularly convincing, because it does not adequately demonstrate the model’s ability to generalize to unseen data. We therefore adopt the same evaluation protocol as [22]: the model is trained only on CASIAv2 and then tested directly on the remaining datasets. This protocol directly reflects whether the model has learned to detect manipulation rather than merely fitting the dataset.

Baselines. We compare against the following models: Fully Convolutional Networks (FCN) [35], High-Pass Fully Convolutional Network (HP-FCN) [36], Manipulation Tracing Network (ManTra-Net) [18], Constrained R-CNN (CR-CNN) [16], Generate, Segment, Refine Network (GSR-Net) [20], Multi-View Multi-Scale Supervision Network (MVSS-Net) [22], and Dense Fully Convolutional Network (D-FCN) [37]. For HP-FCN and ManTra-Net, we directly use the models provided by the authors, because the training code and private datasets are not available. For D-FCN, the authors provide weights trained on a private manipulation dataset and 10% of the NIST16 dataset. We further train on CASIAv2 from these pre-trained weights and report two results: D-FCN (pre-train) uses the authors’ pre-trained weights directly, and D-FCN (re-train) uses our retrained model. The other methods either follow the same evaluation protocol or are retrained on CASIAv2. The results are shown in Table III (some of them are taken from [22]).

Manipulation detection performance comparison. As Table III shows, our model outperforms the other models on all datasets except NIST16, where it is worse than D-FCN (pre-train) and MVSS-Net. In particular, its results on COLUMBIA and CASIAv1 are well ahead of those of the other models. These results verify the detection performance of our model and show that it also achieves good results on unseen datasets.

Robustness evaluation. To compare the robustness of the models, we follow the same approach as [22] and apply different levels of JPEG compression and Gaussian blur to CASIAv1. The results are shown in Fig. 11. Our model’s robustness is not ideal: as the distortion of the original image increases, the detection performance of CR-CNN and of our model decays more than that of the other models. One possible reason is that both CR-CNN and our model use only the noise image as input, and compression and blurring damage noise information more than semantic information. On the other hand, if the entire image is compressed or blurred, should the whole image be considered a manipulated region? If so, the above results are not accurate. However, many studies’ robustness experiments apply post-processing to the whole image. We think a better protocol for robustness experiments is needed.

IV-C Ablation Study

To illustrate the effect of each module of our model, we add the components one by one to ResNet-34 and compare the results. Because the datasets mentioned in Section IV-B contain few images, we use the COCO [38] dataset to generate a large number of simple manipulated images for the ablation experiments, so that the model can be fully trained. The COCO dataset contains a large number of images together with annotations of the objects in them, and these object annotations make it easy to create manipulated images. The manipulated images are generated as follows:

1. Randomly select one image (copy-move) or two images (splicing) from the dataset as the source and destination images. If only one image is selected, the source and destination images are the same.
2. Randomly select an object from the source image and apply operations such as rotation and scaling to it.
3. Paste the object at a random position in the destination image.
4. Apply post-processing operations such as blurring.
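Steps 1 and 3 of this procedure can be sketched for single-channel images stored as nested lists. This is a simplified illustration: it assumes that the object mask comes from a COCO-style annotation already rasterized to the source image size and that source and destination images have the same size, and it omits the rotation, scaling, and blurring of steps 2 and 4; the function name `paste_object` is hypothetical.

```python
import random


def paste_object(src, obj_mask, dst, rng):
    """Paste the pixels of `src` selected by `obj_mask` at a random position
    in a copy of `dst`. Returns (manipulated image, ground-truth mask)."""
    h, w = len(dst), len(dst[0])
    cells = [(i, j) for i, row in enumerate(obj_mask)
             for j, v in enumerate(row) if v]
    top = min(i for i, _ in cells)
    left = min(j for _, j in cells)
    oh = max(i for i, _ in cells) - top + 1   # object bounding-box height
    ow = max(j for _, j in cells) - left + 1  # object bounding-box width
    dy, dx = rng.randint(0, h - oh), rng.randint(0, w - ow)
    out = [row[:] for row in dst]
    gt = [[0] * w for _ in range(h)]
    for i, j in cells:
        y, x = dy + i - top, dx + j - left
        out[y][x] = src[i][j]
        gt[y][x] = 1
    return out, gt


rng = random.Random(0)
src = [[9] * 8 for _ in range(8)]   # source image (pixel value 9)
dst = [[0] * 8 for _ in range(8)]   # destination image (pixel value 0)
obj = [[1 if 2 <= i <= 3 and 1 <= j <= 3 else 0 for j in range(8)]
       for i in range(8)]           # 2 x 3 "object" annotation
fake, gt = paste_object(src, obj, dst, rng)   # splicing: src differs from dst
print(sum(map(sum, gt)))  # 6 manipulated pixels
```

For copy-move, the same image is passed as both `src` and `dst`; the function writes into a copy, so the source pixels are read before anything is overwritten.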

A total of 76K synthetic manipulation images were generated using the above method. We train models using these manipulation images and test them on the COLUMBIA dataset and the CASIAv1 dataset. The models for the ablation experiment are as follows:

- SB: ResNet-34 as the base model.
- DB: a dual-branch network built on ResNet-34, with the ARM and FFM modules for feature fusion.
- DB+origin-CC: the dual-branch network with the original constrained convolution.
- DB+CC: the dual-branch network with the improved constrained convolution.
- DB+CC+Edge: the dual-branch network with the improved constrained convolution and edge detection.
- DB+CC+Edge+NL: the dual-branch network with the improved constrained convolution, edge detection, and the non-local module.
- DB+CC+Edge+NL-D: the dual-branch network with the improved constrained convolution, edge detection, and the non-local module with the distance factor, i.e., the final model.

The results of the ablation experiments are shown in Table IV. The comparison of SB and DB shows that the dual-branch network captures more detailed information that benefits manipulation detection. The comparison of DB, DB+origin-CC, and DB+CC confirms the problem of the original constrained convolution described in Section III-A and illustrates the benefit of our improved constrained convolution. The comparison of DB+CC and DB+CC+Edge shows that manipulation edge detection substantially improves the overall detection performance. The results of DB+CC+Edge, DB+CC+Edge+NL, and DB+CC+Edge+NL-D show that the global correlations computed by the self-attention mechanism help distinguish manipulated from non-manipulated pixels, and that the self-attention mechanism works better with the distance factor.

TABLE IV: Results of the ablation experiments.

| Models | COLUMBIA Precision | COLUMBIA Recall | COLUMBIA F1 | COLUMBIA AUC | COVERAGE Precision | COVERAGE Recall | COVERAGE F1 | COVERAGE AUC |
|---|---|---|---|---|---|---|---|---|
| SB | 0.821 | 0.655 | 0.719 | 0.808 | 0.610 | 0.438 | 0.474 | 0.714 |
| DB | 0.814 | 0.719 | 0.750 | 0.831 | 0.632 | 0.498 | 0.505 | 0.739 |
| DB+origin-CC | 0.766 | 0.717 | 0.741 | 0.822 | 0.651 | 0.533 | 0.553 | 0.752 |
| DB+CC | 0.803 | 0.765 | 0.771 | 0.845 | 0.632 | 0.591 | 0.568 | 0.771 |
| DB+CC+Edge | 0.831 | 0.755 | 0.784 | 0.851 | 0.665 | 0.613 | 0.602 | 0.796 |
| DB+CC+Edge+NL | 0.801 | 0.809 | 0.794 | 0.862 | 0.696 | 0.718 | 0.676 | 0.831 |
| DB+CC+Edge+NL-D | 0.848 | 0.800 | 0.815 | 0.872 | 0.682 | 0.769 | 0.688 | 0.851 |

TABLE V: Results with different initialization methods of the constrained convolution.

| Initialization method | COLUMBIA Precision | COLUMBIA Recall | COLUMBIA F1 | COLUMBIA AUC | COVERAGE Precision | COVERAGE Recall | COVERAGE F1 | COVERAGE AUC |
|---|---|---|---|---|---|---|---|---|
| Random1 | 0.830 | 0.741 | 0.769 | 0.848 | 0.717 | 0.587 | 0.604 | 0.785 |
| Random2 | 0.830 | 0.766 | 0.786 | 0.853 | 0.721 | 0.681 | 0.645 | 0.822 |
| Random3 | 0.816 | 0.795 | 0.798 | 0.858 | 0.687 | 0.703 | 0.640 | 0.824 |
| Random-sum1 | 0.807 | 0.809 | 0.799 | 0.856 | 0.701 | 0.757 | 0.688 | 0.855 |
| Random-sum2 | 0.817 | 0.760 | 0.781 | 0.849 | 0.693 | 0.566 | 0.576 | 0.773 |
| Random-sum3 | 0.824 | 0.797 | 0.799 | 0.859 | 0.707 | 0.636 | 0.636 | 0.807 |
| Laplace-like | 0.807 | 0.809 | 0.798 | 0.856 | 0.701 | 0.757 | 0.688 | 0.849 |
| Laplace-like-D | 0.848 | 0.800 | 0.815 | 0.872 | 0.682 | 0.769 | 0.688 | 0.851 |

IV-D Constrained convolution initialization

Since our model uses the noise image extracted by the constrained convolution, instead of the original image, as the manipulation detection cue, the initialization of the constrained convolution is critical to the model. We compare the following initialization methods:

- Random: the center weight is -1, and the other weights are randomly initialized to values between 0 and 1.
- Random-Sum: the center weight is -1, and the other weights are randomly initialized to values between 0 and 1 and then normalized so that they sum to 1.


The training and test sets are the same as in Section IV-C. Because Random and Random-Sum are initialized randomly, we train each of them three times. The results are shown in Table V. The results of both Random and Random-Sum vary considerably across runs because of the random initialization. However, the Random-Sum weights sum to zero (a center weight of -1 and other weights summing to 1), so the kernel suppresses smooth image content much like a high-pass filter, and its overall performance is better than that of Random. Laplace-like and Laplace-like-D perform better than the two random initialization methods, and Laplace-like-D performs best overall because its initialization better reflects the correlation between pixels according to their distance.
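The high-pass argument for Random-Sum can be checked numerically. The sketch below assumes single-channel k×k kernels and uses hypothetical helper names; it builds one kernel with each random scheme and applies both to a perfectly flat patch. Because the Random-Sum kernel sums to zero, its response to constant content vanishes, while the Random kernel passes a large constant offset.

```python
import numpy as np


def init_random(k, rng):
    """'Random': centre weight -1, other weights uniform in [0, 1)."""
    w = rng.uniform(0.0, 1.0, size=(k, k))
    w[k // 2, k // 2] = -1.0
    return w


def init_random_sum(k, rng):
    """'Random-Sum': as 'Random', but off-centre weights rescaled to sum to 1."""
    c = k // 2
    w = rng.uniform(0.0, 1.0, size=(k, k))
    w[c, c] = 0.0
    w /= w.sum()      # off-centre weights now sum to 1
    w[c, c] = -1.0    # so the whole kernel sums to 0
    return w


def correlate_valid(img, w):
    """2-D correlation of a single-channel image, 'valid' region only."""
    k = w.shape[0]
    h, wd = img.shape[0] - k + 1, img.shape[1] - k + 1
    out = np.zeros((h, wd))
    for dy in range(k):
        for dx in range(k):
            out += w[dy, dx] * img[dy:dy + h, dx:dx + wd]
    return out


rng = np.random.default_rng(0)
flat = np.full((16, 16), 0.5)                        # a perfectly smooth patch
r = correlate_valid(flat, init_random(5, rng))
rs = correlate_valid(flat, init_random_sum(5, rng))
print(np.abs(r).max() > 1.0, np.allclose(rs, 0.0))   # True True
```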

IV-E Kernel size of constrained convolution

The kernel size strongly influences the effect of the constrained convolution. If the kernel is too small, its receptive field is too small to capture complete information; if it is too large, it captures a lot of irrelevant information and slows down computation. We therefore increase the kernel size of the constrained convolution step by step from 3×3 to 11×11. The training and test sets are the same as in Section IV-C, and the results are shown in Table VI. The 5×5 kernel gives the best overall detection performance. The larger 9×9 and 11×11 kernels also perform well (the 11×11 kernel achieves the highest F1 and AUC on COVERAGE), but such large kernels slow down computation.
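The speed cost of larger kernels grows quadratically with k. As a rough illustration, assuming a dense convolution with three input and three output channels (the RGB input and three-channel noise image) and counting only this layer, the multiply-accumulates (MACs) per output pixel are:

```python
def constrained_conv_macs(k, c_in=3, c_out=3):
    """Multiply-accumulates per output pixel of a dense k x k convolution layer."""
    return c_in * c_out * k * k


base = constrained_conv_macs(5)
for k in (3, 5, 7, 9, 11):
    macs = constrained_conv_macs(k)
    print(f"{k}x{k}: {macs} MACs/pixel, {macs / base:.2f}x the 5x5 cost")
# 3x3: 81 (0.36x), 5x5: 225 (1.00x), 7x7: 441 (1.96x),
# 9x9: 729 (3.24x), 11x11: 1089 (4.84x)
```

So an 11×11 kernel costs roughly 4.8 times as much as a 5×5 kernel in this layer, even though the remaining layers of the network are unaffected.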

TABLE VI: Results with different kernel sizes of the constrained convolution.

| Kernel size | COLUMBIA Precision | COLUMBIA Recall | COLUMBIA F1 | COLUMBIA AUC | COVERAGE Precision | COVERAGE Recall | COVERAGE F1 | COVERAGE AUC |
|---|---|---|---|---|---|---|---|---|
| 3×3 | 0.810 | 0.722 | 0.751 | 0.835 | 0.667 | 0.589 | 0.583 | 0.786 |
| 5×5 | 0.848 | 0.800 | 0.815 | 0.872 | 0.682 | 0.769 | 0.688 | 0.851 |
| 7×7 | 0.823 | 0.775 | 0.791 | 0.852 | 0.690 | 0.626 | 0.620 | 0.795 |
| 9×9 | 0.836 | 0.811 | 0.813 | 0.873 | 0.693 | 0.680 | 0.648 | 0.818 |
| 11×11 | 0.773 | 0.853 | 0.794 | 0.862 | 0.725 | 0.778 | 0.694 | 0.857 |

Since the input image has three channels, the constrained convolution uses three kernels (output channels) so that the noise image also has three channels. Kernels of different sizes capture different information, which raises the question of whether assigning a different size to each of the three kernels gives better results. To answer this question, we conduct experiments with the following settings:

- 3, 5, 7: the three kernels are set to 3×3, 5×5, and 7×7, respectively.
- 5, 7, 9: the three kernels are set to 5×5, 7×7, and 9×9, respectively.
- 5, 5, 5: all three kernels are set to 5×5.
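The three configurations above can be sketched in NumPy as one constrained kernel per output channel, each spanning all three input channels. To stack responses from kernels of different sizes, each map must keep the input's spatial size, which the sketch achieves with reflect padding. The padding mode, the per-input-channel Random-Sum-style constraint, and the function names are our assumptions for illustration, not the authors' implementation.

```python
import numpy as np


def constrained_kernel(k, c_in, rng):
    """Hypothetical Random-Sum-style kernel of shape (c_in, k, k).

    Assumption: the constraint (centre -1, off-centre weights summing to 1)
    is applied separately to each input-channel slice.
    """
    c = k // 2
    w = rng.uniform(0.0, 1.0, size=(c_in, k, k))
    w[:, c, c] = 0.0
    w /= w.sum(axis=(1, 2), keepdims=True)
    w[:, c, c] = -1.0
    return w


def correlate_same(img, w):
    """Correlate an (H, W, C) image with a (C, k, k) kernel into one (H, W) map.

    Reflect padding keeps the output size independent of k, so maps produced
    by kernels of different sizes can be stacked.
    """
    c_in, k, _ = w.shape
    p = k // 2
    padded = np.pad(img, ((p, p), (p, p), (0, 0)), mode="reflect")
    h, wd = img.shape[:2]
    out = np.zeros((h, wd))
    for ch in range(c_in):
        for dy in range(k):
            for dx in range(k):
                out += w[ch, dy, dx] * padded[dy:dy + h, dx:dx + wd, ch]
    return out


def noise_image(img, sizes, rng):
    """One output channel per kernel size, e.g. sizes=(3, 5, 7) or (5, 5, 5)."""
    kernels = [constrained_kernel(k, img.shape[2], rng) for k in sizes]
    return np.stack([correlate_same(img, w) for w in kernels], axis=-1)


rng = np.random.default_rng(0)
img = rng.random((32, 32, 3))
mixed = noise_image(img, (3, 5, 7), rng)
same = noise_image(img, (5, 5, 5), rng)
print(mixed.shape, same.shape)  # (32, 32, 3) (32, 32, 3)
```

In the mixed setting, each channel of the noise image summarizes a neighbourhood of a different size, so the three channels describe the noise at different scales rather than as three comparable views.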

The experimental results are shown in Table VII. Contrary to our expectation, combining kernels of different sizes does not improve detection but markedly degrades it: on COLUMBIA, F1 drops from 0.815 to 0.682 (3, 5, 7) and 0.684 (5, 7, 9), and on COVERAGE from 0.688 to 0.462 and 0.451. One possible reason is that the noise features extracted by kernels of different sizes differ considerably, and these differences hinder the subsequent prediction.

TABLE VII: Results with combinations of kernel sizes in the constrained convolution.

| Kernel sizes | COLUMBIA Precision | COLUMBIA Recall | COLUMBIA F1 | COLUMBIA AUC | COVERAGE Precision | COVERAGE Recall | COVERAGE F1 | COVERAGE AUC |
|---|---|---|---|---|---|---|---|---|
| 3, 5, 7 | 0.753 | 0.661 | 0.682 | 0.795 | 0.494 | 0.526 | 0.462 | 0.725 |
| 5, 7, 9 | 0.765 | 0.656 | 0.684 | 0.791 | 0.502 | 0.492 | 0.451 | 0.713 |
| 5, 5, 5 | 0.848 | 0.800 | 0.815 | 0.872 | 0.682 | 0.769 | 0.688 | 0.851 |

TABLE VIII: Results with edge ground-truth generated by kernels of different shapes and sizes.

| Edge kernel | COLUMBIA Precision | COLUMBIA Recall | COLUMBIA F1 | COLUMBIA AUC | COVERAGE Precision | COVERAGE Recall | COVERAGE F1 | COVERAGE AUC |
|---|---|---|---|---|---|---|---|---|
| ELLIPSE_ | 0.845 | 0.777 | 0.799 | 0.859 | 0.740 | 0.635 | 0.645 | 0.808 |
| ELLIPSE_ | 0.848 | 0.800 | 0.815 | 0.872 | 0.682 | 0.769 | 0.688 | 0.851 |
| ELLIPSE_ | 0.830 | 0.798 | 0.803 | 0.867 | 0.707 | 0.661 | 0.638 | 0.816 |
| ELLIPSE_ | 0.836 | 0.797 | 0.807 | 0.864 | 0.681 | 0.665 | 0.645 | 0.813 |
| RECT_ | 0.827 | 0.808 | 0.805 | 0.868 | 0.689 | 0.693 | 0.660 | 0.827 |
| RECT_ | 0.831 | 0.769 | 0.791 | 0.857 | 0.727 | 0.707 | 0.675 | 0.837 |
| RECT_ | 0.809 | 0.787 | 0.787 | 0.854 | 0.682 | 0.693 | 0.647 | 0.825 |
| RECT_ | 0.837 | 0.784 | 0.801 | 0.863 | 0.669 | 0.624 | 0.598 | 0.797 |
| CROSS_ | 0.834 | 0.793 | 0.801 | 0.865 | 0.701 | 0.640 | 0.625 | 0.804 |
| CROSS_ | 0.847 | 0.782 | 0.804 | 0.864 | 0.668 | 0.634 | 0.615 | 0.802 |
| CROSS_ | 0.798 | 0.777 | 0.775 | 0.846 | 0.707 | 0.716 | 0.670 | 0.840 |
| CROSS_ | 0.851 | 0.767 | 0.796 | 0.862 | 0.661 | 0.611 | 0.607 | 0.796 |

IV-F Generation method of manipulation edge ground-truth

As mentioned earlier, the manipulation edge is a vital clue. We apply OpenCV's dilation and erosion operations to the ground-truth of manipulated regions to obtain a dilated mask and an eroded mask; the difference between the dilated and the eroded masks gives the ground-truth of manipulation edges. In OpenCV's dilation and erosion operations, both the shape and the size of the kernel can be set. The shape can be elliptical, rectangular, or cross-shaped, and the width of the manipulation edges in the ground-truth is controlled by the kernel size. If the edge is too narrow, it is hard to learn and predict; if it is too wide, it includes many irrelevant pixels that degrade the prediction.

We therefore generate the ground-truth of manipulation edges with kernels of different shapes and sizes. The training and test sets are the same as in Section IV-C, and the results are shown in Table VIII. On average, elliptical and rectangular kernels outperform cross-shaped kernels, and elliptical kernels perform best. One possible reason is that the cross-shaped kernel covers too few pixels, whereas the rectangular kernel covers more irrelevant pixels. In addition, detection performance does not improve monotonically as the kernel size increases; that is, the width of the edge ground-truth should be moderate. In our experiments, the edges produced by the elliptical kernel adopted in the final model performed best.
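The edge ground-truth construction can be sketched as follows. OpenCV provides this through `cv2.getStructuringElement` (with `cv2.MORPH_ELLIPSE`, `cv2.MORPH_RECT`, or `cv2.MORPH_CROSS`), `cv2.dilate`, and `cv2.erode`. The NumPy sketch below reimplements the same idea so it runs without OpenCV. Its "ellipse" is a digital disk that approximates, but does not match pixel for pixel, OpenCV's `MORPH_ELLIPSE`. The default size `k=5` is an arbitrary placeholder, not the size used in the final model, and the function names are hypothetical.

```python
import numpy as np


def structuring_element(shape, k):
    """Binary k x k kernel approximating OpenCV's MORPH_RECT/ELLIPSE/CROSS."""
    c = k // 2
    if shape == "rect":
        return np.ones((k, k), dtype=bool)
    if shape == "cross":
        se = np.zeros((k, k), dtype=bool)
        se[c, :] = True
        se[:, c] = True
        return se
    if shape == "ellipse":
        yy, xx = np.mgrid[:k, :k] - c
        return xx ** 2 + yy ** 2 <= c ** 2
    raise ValueError(f"unknown shape: {shape}")


def _morph(mask, se, erode):
    """Binary erosion (AND) or dilation (OR) of `mask` over the offsets in `se`."""
    c = se.shape[0] // 2
    # Pad with True for erosion and False for dilation, so a region touching
    # the image border does not produce an edge along the border itself.
    padded = np.pad(mask, c, mode="constant", constant_values=erode)
    h, w = mask.shape
    out = np.full(mask.shape, erode, dtype=bool)
    for dy, dx in zip(*np.nonzero(se)):
        window = padded[dy:dy + h, dx:dx + w]
        out = (out & window) if erode else (out | window)
    return out


def edge_ground_truth(mask, shape="ellipse", k=5):
    """Edge GT = dilated mask minus eroded mask (a morphological gradient)."""
    se = structuring_element(shape, k)
    return _morph(mask, se, erode=False) & ~_morph(mask, se, erode=True)


mask = np.zeros((20, 20), dtype=bool)
mask[5:15, 5:15] = True                        # a 10 x 10 manipulated region
for k in (3, 5):
    print(k, edge_ground_truth(mask, "rect", k).sum())   # 3 80, then 5 160
```

The usage lines show how the kernel size controls the edge width: the 3×3 rectangle yields a band one pixel wide on each side of the boundary (80 pixels), while the 5×5 rectangle doubles it (160 pixels).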

V Conclusion

In this paper, we propose an image manipulation detection model based on image noise and manipulation edges. The improved constrained convolution better extracts the noise information of the image while addressing the training stability problem. When computing correlations between distant pixels, the Non-local module ignores the distance between them; we add a distance factor to better capture the global relationships among pixels. The manipulation edge detection task, built on the features of the high-resolution branch and the context branch, greatly improves the overall manipulation detection performance. Experiments on public and synthetic manipulation datasets demonstrate the state-of-the-art detection performance of our model.

Several problems remain open. First, the noise information of an image is not robust to JPEG compression and Gaussian blur, and it is still unclear how other cues of manipulation inconsistency, such as illumination and chromaticity, can be better exploited in neural networks. Second, the labeling of large manipulated regions is ambiguous: if 90% of an image is spliced, should the model identify the remaining 10% from the original image as manipulated? In future work, we will further optimize the structure of the model.

Acknowledgments

The authors would like to thank the Jiutian deep learning platform of China Mobile. This work used the platform for project construction and model training.

References

- [1] T. J. de Carvalho, C. Riess, E. Angelopoulou, H. Pedrini, and A. de Rezende Rocha, “Exposing digital image forgeries by illumination color classification,” IEEE Transactions on Information Forensics and Security, vol. 8, no. 7, pp. 1182–1194, 2013.

- [2] G. Chierchia, G. Poggi, C. Sansone, and L. Verdoliva, “A Bayesian-MRF approach for PRNU-based image forgery detection,” IEEE Transactions on Information Forensics and Security, vol. 9, no. 4, pp. 554–567, 2014.

- [3] J. Li, X. Li, B. Yang, and X. Sun, “Segmentation-based image copy-move forgery detection scheme,” IEEE Transactions on Information Forensics and Security, vol. 10, no. 3, pp. 507–518, 2015.

- [4] K. Bahrami, A. C. Kot, L. Li, and H. Li, “Blurred image splicing localization by exposing blur type inconsistency,” IEEE Transactions on Information Forensics and Security, vol. 10, no. 5, pp. 999–1009, 2015.

- [5] J. Fridrich and J. Kodovsky, “Rich models for steganalysis of digital images,” IEEE Transactions on Information Forensics and Security, vol. 7, no. 3, pp. 868–882, 2012.

- [6] B. Bayar and M. C. Stamm, “Constrained convolutional neural networks: A new approach towards general purpose image manipulation detection,” IEEE Transactions on Information Forensics and Security, vol. 13, no. 11, pp. 2691–2706, 2018.

- [7] C. Yu, J. Wang, C. Peng, C. Gao, G. Yu, and N. Sang, “BiSeNet: Bilateral segmentation network for real-time semantic segmentation,” in Proceedings of the European Conference on Computer Vision (ECCV), September 2018.

- [8] Y. Hong, H. Pan, W. Sun, and Y. Jia, “Deep dual-resolution networks for real-time and accurate semantic segmentation of road scenes,” arXiv preprint arXiv:2101.06085, 2021.

- [9] A. Vaswani, N. Shazeer, N. Parmar, J. Uszkoreit, L. Jones, A. N. Gomez, Ł. Kaiser, and I. Polosukhin, “Attention is all you need,” Advances in Neural Information Processing Systems, vol. 30, 2017.

- [10] X. Wang, R. Girshick, A. Gupta, and K. He, “Non-local neural networks,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2018, pp. 7794–7803.

- [11] Y. Wu, W. Abd-Almageed, and P. Natarajan, “BusterNet: Detecting copy-move image forgery with source/target localization,” in Proceedings of the European Conference on Computer Vision (ECCV), September 2018.

- [12] A. Islam, C. Long, A. Basharat, and A. Hoogs, “DOA-GAN: Dual-order attentive generative adversarial network for image copy-move forgery detection and localization,” in 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2020, pp. 4675–4684.

- [13] O. Mayer and M. C. Stamm, “Forensic similarity for digital images,” IEEE Transactions on Information Forensics and Security, vol. 15, pp. 1331–1346, 2020.

- [14] P. Zhou, X. Han, V. I. Morariu, and L. S. Davis, “Learning rich features for image manipulation detection,” in 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2018, pp. 1053–1061.

- [15] S. Ren, K. He, R. Girshick, and J. Sun, “Faster R-CNN: Towards real-time object detection with region proposal networks,” in Advances in Neural Information Processing Systems, C. Cortes, N. Lawrence, D. Lee, M. Sugiyama, and R. Garnett, Eds., vol. 28. Curran Associates, Inc., 2015. [Online]. Available: https://proceedings.neurips.cc/paper/2015/file/14bfa6bb14875e45bba028a21ed38046-Paper.pdf

- [16] C. Yang, H. Li, F. Lin, B. Jiang, and H. Zhao, “Constrained R-CNN: A general image manipulation detection model,” in 2020 IEEE International Conference on Multimedia and Expo (ICME), 2020, pp. 1–6.

- [17] K. He, G. Gkioxari, P. Dollar, and R. Girshick, “Mask R-CNN,” in Proceedings of the IEEE International Conference on Computer Vision (ICCV), Oct 2017.

- [18] Y. Wu, W. AbdAlmageed, and P. Natarajan, “ManTra-Net: Manipulation tracing network for detection and localization of image forgeries with anomalous features,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), June 2019.

- [19] R. Salloum, Y. Ren, and C.-C. Jay Kuo, “Image splicing localization using a multi-task fully convolutional network (MFCN),” Journal of Visual Communication and Image Representation, vol. 51, pp. 201–209, 2018. [Online]. Available: https://www.sciencedirect.com/science/article/pii/S1047320318300178

- [20] P. Zhou, B.-C. Chen, X. Han, M. Najibi, A. Shrivastava, S.-N. Lim, and L. Davis, “Generate, segment, and refine: Towards generic manipulation segmentation,” in Proceedings of the AAAI Conference on Artificial Intelligence, vol. 34, no. 07, 2020, pp. 13058–13065.

- [21] L.-C. Chen, G. Papandreou, I. Kokkinos, K. Murphy, and A. L. Yuille, “DeepLab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected CRFs,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 40, no. 4, pp. 834–848, 2018.

- [22] X. Chen, C. Dong, J. Ji, J. Cao, and X. Li, “Image manipulation detection by multi-view multi-scale supervision,” in Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), October 2021, pp. 14185–14193.

- [23] C. Yu, J. Wang, C. Peng, C. Gao, G. Yu, and N. Sang, “Learning a discriminative feature network for semantic segmentation,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), June 2018.

- [24] Z. Zhu, M. Xu, S. Bai, T. Huang, and X. Bai, “Asymmetric non-local neural networks for semantic segmentation,” in Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), October 2019.

- [25] Z. Huang, X. Wang, L. Huang, C. Huang, Y. Wei, and W. Liu, “CCNet: Criss-cross attention for semantic segmentation,” in Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), October 2019.

- [26] J. Fu, J. Liu, H. Tian, Y. Li, Y. Bao, Z. Fang, and H. Lu, “Dual attention network for scene segmentation,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), June 2019.

- [27] K. He, X. Zhang, S. Ren, and J. Sun, “Deep residual learning for image recognition,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), June 2016.

- [28] F. Milletari, N. Navab, and S.-A. Ahmadi, “V-Net: Fully convolutional neural networks for volumetric medical image segmentation,” in 2016 Fourth International Conference on 3D Vision (3DV), 2016, pp. 565–571.

- [29] J. Deng, W. Dong, R. Socher, L.-J. Li, K. Li, and L. Fei-Fei, “ImageNet: A large-scale hierarchical image database,” in 2009 IEEE Conference on Computer Vision and Pattern Recognition, 2009, pp. 248–255.

- [30] J. Dong, W. Wang, and T. Tan, “CASIA image tampering detection evaluation database 2010,” doi, vol. 10, pp. 422–426, 2010.

- [31] J. Dong, W. Wang, and T. Tan, “CASIA image tampering detection evaluation database,” in 2013 IEEE China Summit and International Conference on Signal and Information Processing. IEEE, 2013, pp. 422–426.

- [32] B. Wen, Y. Zhu, R. Subramanian, T.-T. Ng, X. Shen, and S. Winkler, “COVERAGE – a novel database for copy-move forgery detection,” in 2016 IEEE International Conference on Image Processing (ICIP). IEEE, 2016, pp. 161–165.

- [33] J. Hsu and S. Chang, “Columbia uncompressed image splicing detection evaluation dataset,” Columbia DVMM Research Lab, 2006.

- [34] H. Guan, M. Kozak, E. Robertson, Y. Lee, A. N. Yates, A. Delgado, D. Zhou, T. Kheyrkhah, J. Smith, and J. Fiscus, “MFC datasets: Large-scale benchmark datasets for media forensic challenge evaluation,” in 2019 IEEE Winter Applications of Computer Vision Workshops (WACVW). IEEE, 2019, pp. 63–72.

- [35] J. Long, E. Shelhamer, and T. Darrell, “Fully convolutional networks for semantic segmentation,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), June 2015.

- [36] H. Li and J. Huang, “Localization of deep inpainting using high-pass fully convolutional network,” in Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), October 2019.

- [37] P. Zhuang, H. Li, S. Tan, B. Li, and J. Huang, “Image tampering localization using a dense fully convolutional network,” IEEE Transactions on Information Forensics and Security, vol. 16, pp. 2986–2999, 2021.

- [38] T.-Y. Lin, M. Maire, S. Belongie, J. Hays, P. Perona, D. Ramanan, P. Dollár, and C. L. Zitnick, “Microsoft COCO: Common objects in context,” in European Conference on Computer Vision. Springer, 2014, pp. 740–755.