No-Regret Learning for Stackelberg Equilibrium Computation in Newsvendor Pricing Games

Abstract

We introduce the application of online learning in a Stackelberg game pertaining to a system with two learning agents in a dyadic exchange network, consisting of a supplier and retailer, specifically where the parameters of the demand function are unknown. In this game, the supplier is the first-moving leader, and must determine the optimal wholesale price of the product. Subsequently, the retailer who is the follower, must determine both the optimal procurement amount and selling price of the product. In the perfect information setting, this is known as the classical price-setting Newsvendor problem, and we prove the existence of a unique Stackelberg equilibrium when extending this to a two-player pricing game. In the framework of online learning, the parameters of the reward function for both the follower and leader must be learned, under the assumption that the follower will best respond with optimism under uncertainty. A novel algorithm based on contextual linear bandits with a measurable uncertainty set is used to provide a confidence bound on the parameters of the stochastic demand. Consequently, optimal finite time regret bounds on the Stackelberg regret, along with convergence guarantees to an approximate Stackelberg equilibrium, are provided.

††footnotetext: A extended abstract was accepted at the 8th International Conference on Algorithmic Decision Theory (ADT 2024).Keywords: Stackelberg Games, Online Learning, Dynamic Pricing

1 Introduction

We study the problem of dynamic pricing in a two-player general sum Stackelberg game. An economic interaction between learning agents in a dyadic exchange network is modelled as a form of economic competition between two firms in a connected supply chain. Economic competition can arise between self-interested firms in the intermediate stages of a supply chain, especially when they are non-centralized or not fully coordinated, operating as individual entities [Per07] [CZ99].

From a single agent perspective, online learning for dynamic pricing has been studied extensively, and known algorithms exist guaranteeing sub-linear regret [GP21] [BSW22] [Bab+15]. [KL03] first presented the problem of demand learning, where the firm must optimally learn the parameters of demand in an online learning setting. From a single-agent perspective, this has been previously studied in [BP06] and [PK ̵01], to name a few. When more than one agent is involved, the dynamic pricing environment becomes more complex. [Bra+18] investigates the problem setting where a seller is selling to a regret-minimizing buyer. Under the assumption that the buyer is running a contextual bandit algorithm, a reward-maximizing algorithm for the seller upper-bounded by a margin above the monopoly revenue is provided. [GP21] applied the use of linear contextual bandits to model multinomial logit demand in the multi-assortment dynamic pricing problem. [BSW22] investigates online learning for dynamic pricing with a partially linear demand function. Multi-agent dynamic pricing has also been previously studied in [DD00] and [KUP03], to name a few. Nevertheless, these aforementioned works do not consider the context of agents in a supply chain setting, where the inventory policy has an impact on the expected profits.

Dynamic pricing can also be considered in scenarios with limited supply [Bab+15], thereby limiting the retailer from profiting from the theoretical revenue maximizing price for the product. In situations where the retailer must order in advance the product to be sold, and bear the risk of limited supply, or supply surplus, this is known as a Newsvendor firm [AHM51] [Mil59] [HW63]. Specifically, learning and optimization within the Newsvendor framework have also been studied in [BR19]. When the Newsvendor must also dynamically price the item, this is referred to as the price-setting Newsvendor [JK13] [PD99] [Mil59], and methods to compute such optimal solutions under perfect information were first provided in [Mil59]. We further extend the well-known problem of online learning in a single agent dynamic pricing environment to a two agent competitive learning environment. One example of such competition is the rivalry between independent self-interested suppliers and retailers within a supply chain. Research by [Per07] and [Ma+22] has demonstrated that this type of competition can lead to a degradation in the general social welfare.

In our case we model the competition in a dyadic exchange network as a repeated Stackelberg game, which finds numerous applications in market economics [DD90] [AE92] and supply chain analysis [NKS19]. In previous work within the domain of supply chain management, the elements of parameter learning and regret minimization remain under-investigated. Specifically, in scenarios where suppliers and retailers engage in myopic supply chain competition aimed at profit maximization through the exchange of demand function information, as in [ZC13], equilibrium is often established straightforwardly under a perfect information setting. Most saliently, the ramifications of worst-case scenarios and suboptimal deviations in the face of uncertainty remain wholly unquantified. Therefore, addressing this challenge with mathematical rigor constitutes one of the focal points of our work.

In the framing of online learning for a supply chain Stackelberg game, [Ces+23] considered Newsvendor competition between supplier and retailer, under perfect information and limited information settings. Utilizing bandit learning techniques, such as explore-then-commit (ETC) and follow-the-leader (FTL), they are able to produce bounds on regret under mild assumptions [GLK16].

Our supply chain game consists of a leader (supplier) and a follower (retailer). The leader dynamically prices the item to sell to the follower, and the follower must thereafter dynamically price the item for the market.

The objective of this repeated game is to determine the optimal policy to maximize the expected profit in the face of stochastic demand for the leader, and determine the best response of the follower (under the assumptions in Section 2.4.2), leading to an approximate Stackelberg equilibrium. The rationale behind this is that, often times, decisions between firms competing with one another do not occur simultaneously, but rather turn-based. We define this Newsvendor pricing game more concretely in Section 2.4.

In most cases, given the known parameters of the model, the best response of the follower can be computed for any of the leader’s first action. Assuming rationality of both agents, it follows from the best response of the follower that the optimal policy of the leader can be computed. However, the sample-efficient learning of the parameters of the joint reward function presents a genuine problem for Stackelberg gamess, as the leader is learning both the joint reward function of the system and computing the best response of the follower. To increase the sample efficiency of parameter learning in Stackelberg games, bandit algorithms have been proposed to address this problem [Bai+21] [FCR19] [FCR20] [Bal+15]. Our paper proposes online learning algorithms for Stackelberg games in a dyadic exchange network utilizing linear contextual bandits [APS11] [Chu+11], borrowing bounds on misspecification from these bandit methods to achieve theoretical guarantees on the behaviour of the algorithm in terms of regret and optimality, later discussed in Section 3.

1.1 Key Contributions

Our study integrates established economic theory with online learning theory within a practical game theoretic framework. Specifically, we employ a linear contextual bandit algorithm within a Stackelberg game to address a well-defined economic scenario. The primary technical challenge lies in optimizing for both contextual and Stackelberg regret, particularly when facing stochastic environmental parameters, as well as uncertainty in agent strategies. Incorporating economic theory into online learning theory is inherently challenging due to the unique characteristics of reward functions and best response functions, as expounded in Section 2.4.3. In some cases, closed-form solutions are unattainable, requiring innovative approaches for optimization and bounding functions, as expounded in Section 3.5, in order to derive theoretical worst-case bounds on regret.

To summarize, we provide technical contributions in the following areas:

-

1.

We prove the existence of a unique Stackelberg equilibrium under perfect information for a general sum game within a Newsvendor pricing game (described in Section 2.4).

-

2.

An online learning algorithm for the Newsvendor pricing game, leveraging stochastic linear contextual bandits, is outlined, which is integrated with established economic theory.

-

3.

The convergence properties of an online learning algorithm to approximate Stackelberg equilibrium, as well theoretical guarantees for bounds on finite-time regret, are provided.

-

4.

We demonstrate our theoretical results via economic simulations, to show that our learning algorithm outperforms baseline algorithms in terms of finite-time cumulative regret.

2 Model Formulation

Definitions: We define two players in a repeated Stackelberg game. Player A is the leader, she acts first with action . Player B is the follower, he acts second with action . The follower acts in response to the leaders action, and both players earn a joint payoff as a function of the reward function. In the abstract sense, and denote the actions of the leader and follower in action spaces and respectively. To be precise, given an action space with dimension , and are vectors of different dimensions (we later illustrate that and in Section 2.4.1). This is due to the fact that the leader’s and follower’s actions do not necessarily follow the same form.

2.1 Repeated Stackelberg Games

In a repeated Stackelberg game, the leader takes actions , and the follower takes actions in reaction to the leader. The joint action is represented as for each time step from to . The joint utility (reward) functions for the leader and follower are denoted as and , respectively. From an economic standpoint, the application of pure strategies aligns with the prevalent strategic behaviours observed in many firms, emphasizing practicality and realism [PS80] [Tir88].

Strategy Definition: Let and denote the pure strategies of both agents represented as standard maps,

| (2.1) |

The strategy of the leader at time is denoted by , which maps from the filtration to the set of possible actions . Where the filtration consists of all possible -algebras of observations up until time , i.e., . To clarify, belongs to the set of integers such that , where is a fixed finite-time endpoint. The action taken by the leader at time , denoted as , is determined by the leader’s strategy , which depends on the observed actions up to time . Therefore, is a morphism from the set of filtrations to the set of actions in discrete time, . On the other hand, the strategy of the follower is formulated as a response to the leader’s action; therefore, it is a morphism, .

Best Response Function: To be specific, is expressed as a parametric function with exogenous sub-Gaussian noise (the exact parametric form for is provided in Eq. (2.14)). Conversely, for the leader, is a deterministic function solely driven by the direct action of the leader followed by the reaction of the follower . At each discrete time interval, the best response of the follower, , is defined as follows:

| (2.2) |

Eq. (2.2) makes the assumption that there exists a unique best response to . We demonstrate the uniqueness of later in Theorem 1. is the best response function of to , as defined in Equation (2.2) under perfect information. Given is unique, the objective for the leader is to maximize her reward based on the expectation of the follower’s best response. In a Stackelberg game, the leader takes actions, from time , and receives rewards , as the follower is always reacting to the leader with his perfect or approximate best response, he receives rewards . We shall use the notation to represent the joint strategy of both players.

Contextual Regret (Follower): In the bandit setting with no state transitions, the leader’s strategy represents a sequence consisting of actions, or contexts, , for the follower. The contextual regret is defined as,

| (2.3) |

Contextual regret, defined as in Eq. (2.3), is the difference between cumulative follower rewards from the best-responding follower and any other strategy adhered to by the follower in response to a series of actions form the leader, . Given context and environmental parameters , the follower attempts to maximize his rewards via some no-regret learner (we choose an optimistic stochastic linear contextual bandit algorithm as outlined in Section 3). indicates that expectation over uncertainty is with respect to the stochastic demand environment, governed by parameter .

Stackelberg Regret (Leader): The Stackelberg regret, defined as for the leader, is the difference in cumulative rewards when players optimize their strategies in hindsight, versus via any joint strategy . Stackelberg regret, also known as the external regret [Hag+22] [Zha+23] is defined from the leader’s perspective in Eq. (2.4) as the cumulative difference in leader’s rewards between the maximization of under a best responding follower, versus any other joint strategy adhered to by both agents. This definition assumes that the rational leader must commit to a strategy at the beginning of the game, which she believes is optimal on expectation. The leader maximizes their reward taking into account their anticipated uncertainty of the follower’s response . When computing the expectation of , the randomness of is fully strategic, that is, it arises from the follower’s action according to the strategy . Our algorithm seeks to minimize the Stackelberg regret, constituting a no-regret learner from the leader’s perspective.

| (2.4) |

For convenience of notation, moving forward, we will establish the equivalences for and .

2.2 Literature Review on Learning in Stackelberg Games

| Source | Prob. Setting | Info. | Methodology | Theorem | Regret |

|---|---|---|---|---|---|

| [Zha+23] | Noisy omniscient follower. with noise | Partial Info. | UCB with expert information. | Thm. 3.3 | |

| [Hag+22] | Stackelberg Security Game. | Partial Info. | Batched binary search, with discounting, and delayed feedback. | Thm. 3.13 | |

| [Hag+22] | Dynamic pricing. | Partial Info. | Successive elimination with upper-confidence bound. | Thm. 4.7 | or |

| [FKM04] | Convex joint reward function* | Partial Info. | Gradient approximation. | Thm. 2 | |

| [CLP19] | Spam Classification | Full Info. | Adaptive polytope partitioning. | Thm. 4.1 | |

| [Bal+15] | Stackelberg Security Game | Full Info. | Follow the leader. | Thm. 5.1 |

We classify game theoretic learning as consisting of two key aspects. The first is the learning of the opposing player’s strategy. In particular, the strategy of the follower can be optimal, in the case of an omniscient follower, or -optimal [CLP19] [Bal+15] [Gan+23], in the case of an imperfect best response stemming from hindered information sharing or suboptimal follower policies. In this setting, the parameters of the reward function are known, and the players are learning an approximate or perfect equilibrium strategy. Notably, [Bal+15] demonstrated that there always exists an algorithm that will produce a worst case leader regret bound of . In another example, [CLP19] presents a method where polytopes constructed within the joint action space of the players can be adaptively partitioned to produce a learning algorithm achieving sublinear regret under perfect information, demonstrated in a spam classification setting.

The second aspect of online learning in SG’s pertains to the learning of the parameters of the joint reward function which corresponds to and influences . In this environment, is unknown or only partially known, and must be learned over time in a bandit setting. Several approaches address this problem setting. For example, a worst case regret bound of was proven in [Zha+23], where the authors use a -covering method to achieve the bound on regret. Where is the -covering over the joint action space. [Hag+22] produce a worst-case regret bound of by leveraging batched binary search in a demand learning problem setting. Furthermore, they investigate the problem of a non-myopic follower, where the anticipation of future rewards ameliorated by a discount factor , induces the follower to suboptimally best respond. represents a parameter proportional to the delay in the revelation of the follower’s actions by a third party. Under this mechanism, the regret is proportional to both as well as , where for very small values of (close to non-discounting), the could potentially dominate the regret. [FKM04] provide a bound for a convex joint reward function using approximate gradient descent, where represents a polynomial function with respect to . Note that in the partial information setting, the bound on worst-case leader regret is typically superlinear.

2.3 Approximate Stackelberg Equilibrium

The Stackelberg Equilibrium of this game is defined as any joint strategy which,

| (2.5) |

Where is the best response of the follower, as defined in Eq. (2.2). Let denote a probability simplex over possible strategies , thus the expectation on is with respect to . From the follower’s perspective, suppose he adopts an approximate best response strategy . This implies that there exists some , such that,

| (2.6) |

This formalization allows for an deviation, potentially making the problem computationally tractable, particularly when optimization over certain functions lacks simple closed-form solutions or is computationally intensive (as we explore in detail in Section 2.4.3). It’s worth noting that the expectation for the leader reflects uncertainty about the follower’s strategy, while the expectation for the follower relates to the randomness of the environment. Acknowledging the presence of the error of best response function resulting from the possibility of the follower to act imperfectly, or strategically with purpose influence the leader’s strategy, we propose an -approximate equilibrium with full support, [Rou10] [DGP09].

Upper-Bounding Approximation Function: Let represent a Lipschitz function, such that it is consistently greater than or equal to . The selection of this upper-bounding function is appropriate within our problem framework for two key reasons. Firstly, it leverages on established theory on economic pricing, allowing us to derive a rational best response for the follower (see Section 2.4.3). Secondly, its adoption aligns with our implementation of an optimistic bandit algorithm, ensuring an inherent tendency to overestimate reward potential in the face of uncertainty.

| (2.7) |

Therefore, we define the approximate follower best response as,

| (2.8) |

In accordance with Eq. (2.7), we note that the follower reward utilizing the approximate best response is less than that of the optimal best response as a consequence, where . Furthermore, in our problem definition, the follower approximates his best response with such that, across its domain of , the image of the approximating function, , is greater or equal than the image of ]. The motivation behind this is that, can represent a simple parametric function with a known analytical solution, whereas could represent an more elaborate function, with no known exact analytical solution. Therefore it is possible that, and may not necessarily belong to the same parametric family, nor adhere to the same functional form.

Approximate SE: As a consequence, we can therefore infer that there exists some additive which upper-bounds this approximation error in the image of . We define an approximate -Stackelberg equilibrium with approximation error the follower’s perspective as follows,

| (2.9) |

Effectively, defines an upper bound of the range on where could lie, given the approximate best response under perfect information, defined as . represents the worst case additive approximation error of , where .

| (2.10) |

The suboptimality of is attributed to the error in the approximation of the theoretically optimal response with , in response to the leader’s action (the economic definitions of , and are later illustrated in Section 2.4). In our learning scenario, the follower has access to , which shares the same parameters but differs from the functional form of . This allows us devise a learning algorithm which converges to . Thus, any algorithm which learns an -approximate Stackelberg equilibrium, is formally defined as an algorithm that converges to an equilibrium solution with approximation error, as defined in Eq. (2.10).

2.4 Multi-Agent Economic Setting - the Newsvendor Pricing Game (NPG)

We model the two learning agents in a Newsvendor pricing game, involving a supplier and a retailer . The leader, a supplier, is learning to dynamically price the product for the follower, a retailer, aiming to maximize her reward. To achieve this, the follower adheres to classical Newsvendor theory, which involves finding the optimal order quantity given a known demand distribution before the realization of the demand. We provide further economic details in Section 2.4.3.

Differing from the closely related work of [Ces+23], we do make some relaxations to the problem in their setting. Firstly, we assume no production cost for the item, which provides a non-consequential change to the economic theory. Secondly, we assume full observability of the realized demand of both the supplier and retailer. This is commonplace in settings where a disclosure of the realized sales must be disclosed to an upstream supplier, which could occur as a stipulation as a part of the supplier contract, and/or when the sales ecosystem is part of an integrated eCommerce platform.

Nevertheless, our problem setting further sophisticates the solution from [Ces+23] in multiple ways. Firstly, in addition to determining the optimal order amount (known as the Newsvendor problem), the follower must also dynamically determine the optimal retail price for the product in the face of demand uncertainty (known as the price-setting Newsvendor). Thereby, we introduce the problem of demand learning coupled with inventory risk. Secondly, in our problem setting, although the linear relation of price to demand is assumed, the distribution of neither demand nor market price is explicitly given. Third, we apply the optimism under uncertainty linear bandit (OFUL) [APS11] to the Stackelberg game, whereas in [Ces+23] the Piyavsky-Schubert [Bou+20] algorithm is applied. Lastly, we provide Stackelberg regret guarantees on the cumulative regret, whereas [Ces+23] provides guarantees on the simple regret.

2.4.1 Rules of the Newsvendor Pricing Game

Moving forward, and away from abstraction, we explicitly denote , and . Where denotes wholesale price from the supplier firm, and denote the retail price and order amount of the retail firm.

-

1.

The supplier selects wholesale price , and provides it to the retailer.

-

2.

Given wholesale cost , the retailer reacts with his best response , consisting of retail price , and order amount .

-

3.

As the retailer determines the optimal order amount , he pays to the supplier.

-

4.

At time , nature draws demand , and it is revealed to the retailer.

-

5.

The retailer makes a profit of .

- 6.

2.4.2 Economic Game Assumptions

From the price setting Newsvendor, the majority of the assumptions from [Mil59] are inherited as standard practice. In our current problem setting, we explicitly state the assumptions of the Newsvendor pricing game as follows:

-

1.

Expected demand, , is an additive linear model, as illustrated later in Eq. (2.11).

-

2.

Inventory is perishable, and we assume no goodwill costs and/or salvage value.

-

3.

Market demand, , and action history, , , is fully observable to both retailer and supplier.

-

4.

The follower is rational and greedy, he maximizes for his immediate reward under the available information and best response computation.

-

5.

No deviation, stochastic or otherwise, exists in , denoting the amount of goods ordered and goods received, from the supplier by the retailer.

-

6.

Decisions are made during fixed periodic discrete time intervals.

At each time period the leader dynamically selects a wholesale price, . The follower must make two decisions in response to , the order amount, , and the market price , (which we show later by consequence of Eq. 2.15 are unique bijections). The uniqueness of the optimal , given , is proven in Theorem 1. This game can be viewed as an ultimatum game, where the retailer is constrained to accept or reject the the terms dictated by the supplier. However, the inclusion of inventory risk and demand parameter uncertainty in our model complicates this dynamic. The retailer must weigh his ordering decision against his risk assessment in the face of these uncertainties. Thus the problem setting can be interpreted as a variant of the ultimatum game, where a learning agent operates under market uncertainty.

2.4.3 Economic Theory of the Newsvendor Pricing Game

Stochastic demand is represented in Eq. 2.12, which is governed by a linear additive demand function representing the expected demand, , as a function of in Eq. 2.12. The demand function is governed by parameters .

| (2.11) | ||||

| (2.12) |

We stipulate that noise is zero mean and -sub-Gaussian. The retailer profit, is restricted by the order quantity , is therefore equal to, given wholesale price ,

| (2.13) | ||||

| (2.14) |

The retailer’s profit should be greater than the supplier’s wholesale price multiplied by the order quantity, denoted as . Let be the demand probability function given expected demand , and be the cumulative demand function. As the noise with respect to is -sub-Gaussian, consequently by the additive property so is the noise with respect to the expected retailer profit given supplier cost is . Given any price , we can compute expected profit of the retailer as,

| (2.15) |

Given and we can obtain a probability distribution , which has a unique solution [AHM51]. The retailer can select generating , and retailer must decide on to order from the supplier. Suppose we express demand as , then the retailer must order an optimal amount as determined by Eq. (2.16). The general solution to the Newsvendor order quantity is to maximize profit via , the unique closed form solution is presented in Eq. (2.16). In classical Newsvendor theory, the optimal solution is a function of the value , referred to as the critical fractile, where .

| (2.16) |

Unlike typical dynamic pricing, in a price-setting Newsvendor problem setting, the reward maximizing price is not necessarily the same as the profit maximizing price under no inventory risk, also known as the riskless price. From [Mil59] we know that the optimal price to set in the price-setting Newsvendor with linear additive demand is,

| (2.17) |

Where is the probability distribution of demand, such that .

Lemma 2.1.

Invariance of : Assuming symmetric behaviour of with respect to and constant standard deviation , the term representing supply shortage probability defined as , is invariant of the estimate of . (Proof in Appendix A.2.)

The optimal price , has the relation . expresses the probability of demand equal to , under demand parameters , and price . This defines the unique relation of to as evidenced by Eq. (2.17), and is a unique function. Knowing this relation, it is also logical to see that the riskless price, , of the retailer must be always above the wholesale price, , as introduced in the constraint, thus, .

Theorem 1.

Uniqueness of Best Response under Perfect Information: In the perfect information NPG (from Sec. 2.4), with a known demand function, under the assumptions listed in Sec. 2.4.2, the optimal order amount which maximizes expected profit of the retailer is and is a surjection from , and a bijection when . Furthermore, within the range , as monotonically increases, is monotonically decreasing. Where denotes the optimistic estimate of the riskless price . (Proof in Appendix A.1.)

Theorem 2.

Unique Stackelberg Equilibrium: A unique pure strategy Stackelberg equilibrium exists in the full-information Newsvendor pricing game. (Proof in Appendix A.4.)

Sketch of Proof (Theorem 1 and 2): Under perfect information, the unique solution to in Eq. (2.16) can be obtained, so long as . If each leader action elicits a unique action , then there must exist at least one optimal action for the leader in . Note that, under perfect information, the follower would always act in accordance to their best approximation of , which is unique under the assumptions in Section 2.4.2. This determines a unique best response. (Mathematical details are given in Appendix A.1 and A.4).

Evident from the previous discourse, , representing the optimal expected reward (profit) function for the follower under perfect information, does not lend itself to a straightforward computation. Instead, it necessitates inverse look up of a probability density function, from Eq. (2.16), which has no parametric representation nor closed form solution. In addition, the solution to an integral equation from Eq. (2.17) is required, which also lacks a closed-form solution. Consequently, this motivates the introduction of an upper-bounding approximation function, such as (represented in Eq. (2.18)), which both bounds , and is characterized by a straightforward parametric structure, . Observing the absence of the variable from the argument is noteworthy, albeit without consequence, as effectively provides an upper bound for across all admissible values of in . Subsequently, we can then leverage this approximation within an online learning framework.

| (2.18) |

Note that the linear parametric form is preserved under this representation, where , with and .

Economic Interpretation of : in Eq. (2.18) represents the theoretical reward the follower could achieve if all realized demand was met, and no cost of inventory surplus or shortage is considered. This is simply the unit profit margin, denoted as , multiplied by the expected demand generated by the price, denoted as , assuming all inventory is sold. Therefore, this riskless profit, , is effectively a concave polynomial with respect to , and is always greater or equal than the expected reward with inventory risk (a key result from [Mil59] [PD99]).

3 Online Learning Algorithms

We present a learning algorithm for the Newsvendor Pricing Game (NPG) involving two learning agents, namely two firms representing a supplier (leader) and retailer (follower), in a repeated Stackelberg game. In this online learning setting, both agents must learn the parameters of a reward function that is derived from a linear demand function. This presents additional challenge for both agents. For the leader, she bears the opportunity costs of suboptimal pricing given the best response of the retailer. For the retailer, he bears the cost of additional surplus or shortage when inaccurately estimating the demand function. These factors create additional complexity in comparison with a pure dynamic pricing problem setting.

More specifically, we adapt a contextual linear bandit learning algorithm [APS11] [Chu+11], which already enjoys robust theoretical guarantees in a single-agent setting, to a multi-agent setting. Furthermore, we extend its application to the economic domain by integrating classical economic theory from the price-setting Newsvendor, as discussed in Section 2.4.3, with contextual linear bandits. In this game, the follower optimizes to maximize , an optimistic upper-bounding concave function on his perspective reward function, while the leader aims to learn an optimal strategy by leveraging information about the follower’s policy. We present technically novel analytical techniques for quantifying bounds on estimated parameters and best response functions. For example, we introduce an innovative argument via analysis in Euclidean space in Theorem 3 and approximation methods for non-elementary functions in Theorem 5. Given this, we successfully extend the robust theoretical guarantees of single-agent contextual linear bandits to an economic multi-agent setting.

3.1 Optimism in the Face of Uncertainty

We propose an adaptation of the optimism-under-uncertainty (OFUL) algorithm from [APS11] (illustrated in Appendix B.1 for reference) to learn the parameters of demand from Eq. (2.11). The OFUL algorithm is a contextual linear bandit algorithm, also previously studied in [DHK08] and [RT10], which constructs a confidence ball where the parameters of an estimated reward function fall within.

| (3.1) |

Given context , we have two parameters to estimate and , leading us to learning of the optimal riskless price optimization. From Eq. (3.1) we see that grows logarithmically, matching the covariance of , where is defined as the self-normalizing norm [PKL04]. Lemma 3.1 extends this relationship to a shrinking finite 2-norm bound, which we later use to derive bounds on regret.

3.2 Online Learning Algorithm for Newsvendor Pricing Game

Let . We introduce a learning algorithm where both the leader and the follower optimistically estimates , while the follower learns . We denote by , the confidence set, wherein the true parameters lie with high probability (i.e. ). Our intuition, supported by Lemma 3.1, suggests that the size of decreases linearly with the sample size . Moreover, the linear structure of enables Lemma 3.1 to remain valid during estimation of via linear contextual bandits. To highlight, the follower executes the optimistic linear contextual bandit, while the leader selects her actions maximizing over the follower’s optimistic best response.

Given leader action , the optimal follower, is unique from Theorem 2. Thus there there exists a unique solution where is maximized for any estimate of . Although Theorem 2 illustrates the uniqueness of the SE under perfect information, there still exists uncertainty under imperfect information, when the parameters of demand are unknown. From the leader’s perspective, she is rewarded depending on the reaction of the follower. The follower’s economic reaction is determined by , which is affected by estimate . We present empirical results of Algorithm 1 in Appendix E.

3.3 Measuring the Best Response under Optimism

To characterize the best response of the follower, we must first establish a bound on the optimistic, yet uncertain, pricing behaviour of the follower given leader action . From Theorem (1) there exists a direct relationship between and . As the follower is learning parameters via OFUL, its certainty about the model parameters is bounded by . For each given action , and a confidence bound on the parameters , denoted as , we can have an optimistic and pessimistic estimate of the best response, , denoted as respectively. We define the pessimistic estimate of as,

| (3.2) |

The pessimistic best response to occurs when the optimistic parameter estimate is equal to the maximum likelihood estimate itself, denoted as . Thus for realism, we set a lower bound of 0 for . Additionally, we recognize that the riskless price estimate is always greater than or equal to , represented as in Eq. (3.2). Later, in Lemma 3.3, we will demonstrate that when the algorithm converges to , the learned parameters enable the follower to calculate and respectively.

The upper bound of the optimistic best response, (Eq. (3.4)), can be determined by estimating the optimistic riskless price . Let denote the expected profit under parameter , give . Both the left and right terms of Eq. (3.3) are optimistic estimates of expected profit, , using the riskless price as a surrogate for the optimal price. The follower’s pricing with , and ordering with , under an optimistic estimate of the demand parameters given data , can be summarized from Eq. (3.3) to Eq. (3.4). (The derivation from Eq. (3.3) to Eq. (3.4) is in Appendix C.2.)

| (3.3) | ||||

| (3.4) |

Theorem 3.

Bounding the Optimistic Follower Pricing: Let represent the deviation in pricing action of the follower (retailer) at time . Then for some and . (Proof in Appendix B.4.)

| (3.5a) | |||||

| subject to | (3.5b) | ||||

Sketch of Proof: As illustrated in Fig. 3, we specify the optimization problem, where with probability , the 2-norm error of the parameter estimates for lie within the confidence ball characterized by a radius less than . The general maximization problem is expressed in Eq. (3.5a) and (3.5b). The optimistic estimate of the , denoted as (which we can denote in short as ) lies in the extreme point between a ray and the boundary of .

| (3.6) | ||||

| (3.7) |

Leveraging Eq. (3.7), we can construct a bound on . Theorem 3 characterizes the shrinking nature of with respect to , as more samples are observed. Similar to Lemma 3.1, there also exists some , where bounds as expressed in Theorem 3. The establishment of this bound characterizes the pricing deviation of the follower pricing strategy, and is used to prove Theorem 4. To obtain a closed form solution to this optimization problem, we note the closed form expression of the Euclidean distance between a point in 2-space to a line described in Eq. (3.6). This distance measure is expressed in Eq. (3.7).

From Lemma 3.2 we can see that the cumulative optimistic pricing deviation of the follower is . This will have further ramifications when we compute the Stackelberg regret in Theorem 5. For interest to the reader, the current bound in Lemman 3.2 would naturally extend to non-linear settings such as exponential class General Linear Models (GLM’s) (we provide a description of this Appendix C.6).

Lemma 3.2.

Bounding the Cumulation of : Suppose we wish to measure the cumulative value of from to , denoted as . Then . (Proof in Appendix B.5.)

Lemma 3.3.

Convergence of to : Suppose the solution to Eq. (2.17) is computable. For any symmetric demand distribution, the optimistic estimate of the optimal market price from Eq. (2.17), denoted as which is the solution to under optimistic parameters , is decreasing with respect to such that , where the difference is bounded by . (Proof in Appendix A.5 )

In Algorithm 1 we propose that the follower prices the product at the optimistic riskless price , and not necessarily the optimistic optimal price . The profits derived from pricing with any of these suboptimal prices would result in suboptimal follower rewards (we characterize this regret later in Theorem 4).

3.4 Analysis from the Follower’s Perspective

For any approximately best responding follower, we can always expect a worst case error of , defined in Eq. (2.10), for the follower at any discrete time interval. Thus, by intuition alone, we can presume the regret to be at least . Nevertheless, it is worth noting that there exists these two distinct components to the regret, one caused specifically by the approximation of the best response, and one caused by the parameter uncertainty of the learning algorithm itself. The problem ultimately reduces to a contextual linear bandit problem.

Given our bounds on the optimistic pricing behaviour of the follower under context and uncertainty , for any sequence of actions, , the expected regret of the follower is expressed in Eq. (2.3).

Theorem 4.

Sketch of Proof: As the follower implements the OFUL algorithm (see Appendix B.1) to estimate the demand parameters , we propose as the theoretically maximum achievable expected reward. We quantify the variation in , represented by , across a finite number of samples . Furthermore, we provide an upper bound on the instantaneous regret using the larger upper-bounding discrepancy . It is worth noting that although is a concave function, may not be. Under perfect information, the follower may incur an approximation error derived from this discrepancy, rendering it -suboptimal in the worst case scenario. Nonetheless, this error is independent of , and the learning error for . Next, we establish a bound for the linear function estimation to compute optimal riskless pricing, for this we can apply the results of Theorem 3, and establish the bound of . We then partition the learning time period into two phases: Case 2 denotes the period where , occurring within the time interval , and Case 1 denotes when , occurring within the interval (assuming sufficiently large values of ). We note the differences in regret behaviour within these regimes and focus on the asymptotic regime as approaches , where the cumulative regret is bounded between the worst-case approximation error for , denoted as , and the logarithmic term pertaining to linear stochastic bandit learning, presented as . (Complete details are provided in Appendix C.3.)

To provide further insight, Theorem 4 suggests that the regret is bounded by the linear worst case approximation error , subtracted by the sublinear term, , for sufficiently large values of . As the follower forms an approximate best response to the leader’s action based on optimistic riskless pricing, we can expect linear regret in the worst-case. Nevertheless, knowing the characteristic behaviour of this regret is useful when we can quantify the learning error caused by the approximation of with .

3.5 Stackelberg Regret in the Newsvendor Pricing Game

We focus on providing a theoretical worst-case guarantee of the regret from the leader’s perspective as defined in Eq. (2.4), also known as Stackelberg regret under an approximate best responding follower. We see that given the equation Eq. (2.16), the critical fractile used to compute is decreasing with respect to . This makes also strictly decreasing with respect to , so is strictly decreasing with respect to . Therefore there exists a unique , under the assumptions provided in Section 2.4.2, which maximizes the upper bound on the leader profit , given the best response of the follower, , for any leader action .

| (3.8) |

To bound the Stackelberg regret, we must effectively characterize the best response of the follower given the assumptions outlined in Sec. 2.4.2. The key idea is that the follower will 1) act optimistically in the face of uncertainty, and rationally and be greedy under his best approximation of the best response. Meaning he will not try to deceive or misplay its own actions in order to influence the leader’s future actions.

If the follower’s strategy is known to the leader, and that the information available to both agents are the same, then in theory there exists no regret from the leader’s perspective, as the leader has perfect premonition over the actions of the follower. In our case, regret comes from the existence of information asymmetry, for example when the follower has received more observations about the demand than the leader, and therefore tends to be more confident in their estimate of , and less optimistic in their ordering and pricing strategies. Algorithm 1 (LNPG) seeks to minimize the Stackelberg regret, as defined in Eq. (2.4), constituting a no-regret learner from the over the follower’s strategy from the leader’s perspective. Where denotes the optimal action from the leader’s perspective.

| (3.9) |

Theorem 5.

Sketch of Proof: The technicalities in the proof for Theorem 5 are best illustrated in Fig. 4. The change in the best response of the follower are affected by two components. reflects the change in due to changes in the critical fractile , and reflects the change in due to the overall shift in the expected demand due to the increasing confidence on . We construct linear function approximator, with a bounded estimation error. One existing challenge is that has no closed form solution in the form of natural expression. We utilize the function to provide an estimation for the value of , given our confidence set . The core technical challenges lie in securing a sufficiently accurate approximation for while imposing upper bounds on the error arising from such a function approximation. To address this, we employ a Taylor series expansion to approximate , subsequently applying the Wasserstein approximation theorem for polynomials to confine the approximation error. Following this, we measure this error within the function’s domain, exploiting certain inherent properties such as the concavity of the upper-bounding function (refer to details in Lemma C.1 in Appendix). Consequently, the approximation of establishes of a bound on , while can be bounded using the outcome derived from Theorem 3 (the mathematical details for the proof of Theorem 3 are outlined in Appendix C.4).

Corollary 3.1.

As one of our key assumptions in Section 2.4.2 was that the follower acts via risk-free pricing due to the lack of a closed-form solution for the price-setting Newsvendor problem, a prudent reader might question what would happen if an alternative approximation for the price-setting Newsvendor were devised and applied in this Stackelberg game setting, thereby violating one of the assumptions in Section 2.4.2. Corollary 3.1 states that, in this case, a penalty term of , which can be computed in advance, would be added to the Stackelberg regret from Theorem 5. This notion is relatively intuitive, as the two objective functions are converging to different solutions for the leader, and , while maintaining the alternate best response . This is an interesting notion that relaxes the constraint of assuming a specific unique best response for the leader. Nevertheless, we stress that this serves only as an extension of the theoretical framework, and all Stackelberg games require some form of assumptions on the structure of the follower’s best response.

3.6 Convergence to Stackelberg Equilibrium

Theorem 6.

4 Empirical Results

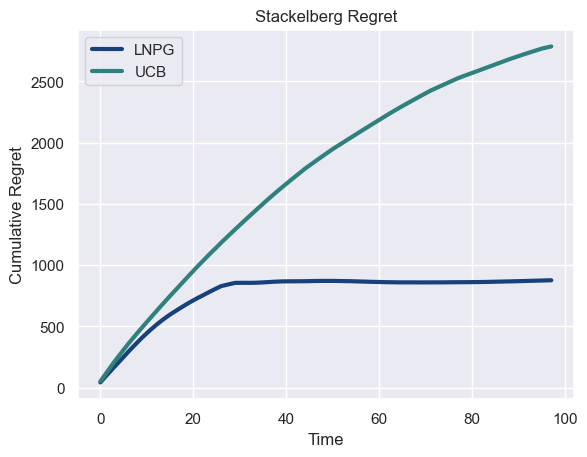

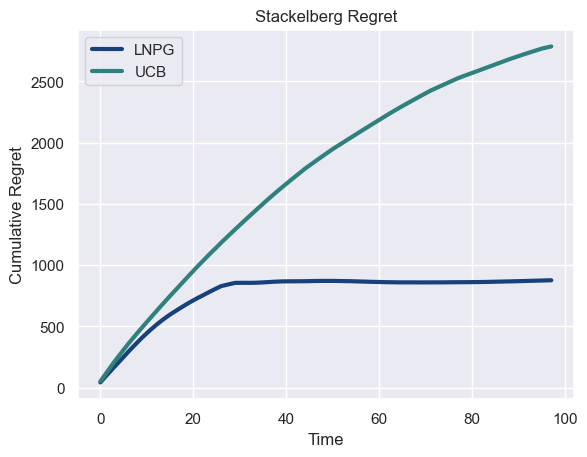

We compare the performance of our proposed algorithm, LNPG, with a well-known and widely used algorithm in the field of multi-armed bandit problems: Upper Confidence Bound (UCB) [Sze10]. UCB follows a straightforward exploration-exploitation trade-off strategy by selecting the action with the highest upper confidence bound. It requires discretization over the action space, and we use this as a baseline measure when computing empirical Stackelberg regret. The results are presented in Fig. 5, and additional results and configurations are presented in Appendix E.

5 Conclusion

We demonstrated the effective use of an optimistic online learning algorithm in a dyadic exchange network. We define a Newsvendor pricing game (NPG) as an economic game between two learning agents, representing firms in a supply chain. Both agents are learning the parameters of an additive demand function. The follower, or retailer, is a Newsvendor firm, meaning it must bear the cost of surplus and shortages when subject to stochastic demand. The follower must also determine the optimal price for the product, constituting the classical price-setting Newsvendor problem. We establish that, under perfect information, the NPG contains a unique follower best response and that a Stackelberg equilibrium must exist. In the context of online learning, we combine established economic theory of the price-setting Newsvendor with that of linear contextual bandits to learn the parameters of reward function. We demonstrate that from the leader’s perspective, the this algorithm achieves a Stackelberg regret for finite samples, and is guaranteed to converge to an approximate Stackelberg equilibrium. One limitation of this work is the structure of the demand function. Currently, the demand function is a linear function of price. To better represent reality, we propose in future work to expand the structure of the demand function to accommodate, in addition to price, multiple features (such as customer demographic features, product features etc.). This implies the extension of the Lemma 3.1 to p-norm rather than 2-norm, and a restructuring of Theorem 3 to accommodate a more general solution to the maximization problem from Eq. (3.5a).

References

- [AE92] Simon P Anderson and Maxim Engers “Stackelberg versus Cournot oligopoly equilibrium” In International Journal of Industrial Organization 10.1 Elsevier, 1992, pp. 127–135

- [AHM51] Kenneth J Arrow, Theodore Harris and Jacob Marschak “Optimal inventory policy” In Econometrica: Journal of the Econometric Society JSTOR, 1951, pp. 250–272

- [APS11] Yasin Abbasi-Yadkori, Dávid Pál and Csaba Szepesvári “Improved algorithms for linear stochastic bandits” In Advances in neural information processing systems 24, 2011

- [Bab+15] Moshe Babaioff, Shaddin Dughmi, Robert Kleinberg and Aleksandrs Slivkins “Dynamic pricing with limited supply” ACM New York, NY, USA, 2015

- [Bai+21] Yu Bai, Chi Jin, Huan Wang and Caiming Xiong “Sample-efficient learning of stackelberg equilibria in general-sum games” In Advances in Neural Information Processing Systems 34, 2021, pp. 25799–25811

- [Bal+15] Maria-Florina Balcan, Avrim Blum, Nika Haghtalab and Ariel D Procaccia “Commitment without regrets: Online learning in stackelberg security games” In Proceedings of the sixteenth ACM conference on economics and computation, 2015, pp. 61–78

- [Bou+20] Clément Bouttier, Tommaso Cesari, Mélanie Ducoffe and Sébastien Gerchinovitz “Regret analysis of the Piyavskii-Shubert algorithm for global Lipschitz optimization” In arXiv preprint arXiv:2002.02390, 2020

- [BP06] Dimitris Bertsimas and Georgia Perakis “Dynamic pricing: A learning approach” In Mathematical and computational models for congestion charging Springer, 2006, pp. 45–79

- [BR19] Gah-Yi Ban and Cynthia Rudin “The big data newsvendor: Practical insights from machine learning” In Operations Research 67.1 INFORMS, 2019, pp. 90–108

- [Bra+18] Mark Braverman, Jieming Mao, Jon Schneider and Matt Weinberg “Selling to a no-regret buyer” In Proceedings of the 2018 ACM Conference on Economics and Computation, 2018, pp. 523–538

- [BSW22] Jinzhi Bu, David Simchi-Levi and Chonghuan Wang “Context-Based Dynamic Pricing with Partially Linear Demand Model” In Advances in Neural Information Processing Systems 35, 2022, pp. 23780–23791

- [Ces+23] Nicolò Cesa-Bianchi et al. “Learning the stackelberg equilibrium in a newsvendor game” In Proceedings of the 2023 International Conference on Autonomous Agents and Multiagent Systems, 2023, pp. 242–250

- [Chu+11] Wei Chu, Lihong Li, Lev Reyzin and Robert Schapire “Contextual bandits with linear payoff functions” In Proceedings of the Fourteenth International Conference on Artificial Intelligence and Statistics, 2011, pp. 208–214 JMLR WorkshopConference Proceedings

- [CLP19] Yiling Chen, Yang Liu and Chara Podimata “Grinding the space: Learning to classify against strategic agents” In arXiv preprint arXiv:1911.04004, 2019

- [CZ99] Gérard P Cachon and Paul H Zipkin “Competitive and cooperative inventory policies in a two-stage supply chain” In Management science 45.7 INFORMS, 1999, pp. 936–953

- [DD00] Prithviraj Dasgupta and Rajarshi Das “Dynamic pricing with limited competitor information in a multi-agent economy” In Cooperative Information Systems: 7th International Conference, CoopIS 2000 Eilat, Israel, September 6-8, 2000. Proceedings 7, 2000, pp. 299–310 Springer

- [DD90] Giovanni De Fraja and Flavio Delbono “Game theoretic models of mixed oligopoly” In Journal of economic surveys 4.1 Wiley Online Library, 1990, pp. 1–17

- [DGP09] Constantinos Daskalakis, Paul W Goldberg and Christos H Papadimitriou “The complexity of computing a Nash equilibrium” In Communications of the ACM 52.2 ACM New York, NY, USA, 2009, pp. 89–97

- [DHK08] Varsha Dani, Thomas P Hayes and Sham M Kakade “Stochastic linear optimization under bandit feedback”, 2008

- [FCR19] Tanner Fiez, Benjamin Chasnov and Lillian J Ratliff “Convergence of learning dynamics in stackelberg games” In arXiv preprint arXiv:1906.01217, 2019

- [FCR20] Tanner Fiez, Benjamin Chasnov and Lillian Ratliff “Implicit learning dynamics in stackelberg games: Equilibria characterization, convergence analysis, and empirical study” In International Conference on Machine Learning, 2020, pp. 3133–3144 PMLR

- [FKM04] Abraham D. Flaxman, Adam Tauman Kalai and H. McMahan “Online convex optimization in the bandit setting: gradient descent without a gradient” arXiv:cs/0408007 arXiv, 2004 URL: http://arxiv.org/abs/cs/0408007

- [Gan+23] Jiarui Gan, Minbiao Han, Jibang Wu and Haifeng Xu “Robust Stackelberg Equilibria” In Proceedings of the 24th ACM Conference on Economics and Computation, EC ’23 London, United Kingdom: Association for Computing Machinery, 2023, pp. 735 DOI: 10.1145/3580507.3597680

- [GLK16] Aurélien Garivier, Tor Lattimore and Emilie Kaufmann “On explore-then-commit strategies” In Advances in Neural Information Processing Systems 29, 2016

- [GP21] Vineet Goyal and Noemie Perivier “Dynamic pricing and assortment under a contextual MNL demand” In arXiv preprint arXiv:2110.10018, 2021

- [Hag+22] Nika Haghtalab, Thodoris Lykouris, Sloan Nietert and Alexander Wei “Learning in Stackelberg Games with Non-myopic Agents” In Proceedings of the 23rd ACM Conference on Economics and Computation, 2022, pp. 917–918

- [HW63] George Hadley and Thomson M Whitin “Analysis of inventory systems”, 1963

- [JK13] Werner Jammernegg and Peter Kischka “The price-setting newsvendor with service and loss constraints” In Omega 41.2 Elsevier, 2013, pp. 326–335

- [KL03] Robert Kleinberg and Tom Leighton “The value of knowing a demand curve: Bounds on regret for online posted-price auctions” In 44th Annual IEEE Symposium on Foundations of Computer Science, 2003. Proceedings., 2003, pp. 594–605 IEEE

- [KUP03] Erich Kutschinski, Thomas Uthmann and Daniel Polani “Learning competitive pricing strategies by multi-agent reinforcement learning” In Journal of Economic Dynamics and Control 27.11-12 Elsevier, 2003, pp. 2207–2218

- [LS20] Tor Lattimore and Csaba Szepesvári “Bandit algorithms” Cambridge University Press, 2020

- [Ma+22] Zu-Jun Ma, Yu-Sen Ye, Ying Dai and Hong Yan “The price of anarchy in closed-loop supply chains” In International Transactions in Operational Research 29.1 Wiley Online Library, 2022, pp. 624–656

- [Mil59] Edwin S Mills “Uncertainty and price theory” In The Quarterly Journal of Economics 73.1 MIT Press, 1959, pp. 116–130

- [NKS19] Jiseong Noh, Jong Soo Kim and Biswajit Sarkar “Two-echelon supply chain coordination with advertising-driven demand under Stackelberg game policy” In European journal of industrial engineering 13.2 Inderscience Publishers (IEL), 2019, pp. 213–244

- [PD99] Nicholas C Petruzzi and Maqbool Dada “Pricing and the newsvendor problem: A review with extensions” In Operations research 47.2 INFORMS, 1999, pp. 183–194

- [Per07] Georgia Perakis “The “price of anarchy” under nonlinear and asymmetric costs” In Mathematics of Operations Research 32.3 INFORMS, 2007, pp. 614–628

- [PK ̵01] Praveen K Kopalle PK Kannan “Dynamic pricing on the Internet: Importance and implications for consumer behavior” In International Journal of Electronic Commerce 5.3 Taylor & Francis, 2001, pp. 63–83

- [PKL04] Victor H Pena, Michael J Klass and Tze Leung Lai “Self-normalized processes: exponential inequalities, moment bounds and iterated logarithm laws”, 2004

- [PS80] Michael E Porter and Competitive Strategy “Techniques for analyzing industries and competitors” In Competitive Strategy. New York: Free, 1980

- [Rou10] Tim Roughgarden “Algorithmic game theory” In Communications of the ACM 53.7 ACM New York, NY, USA, 2010, pp. 78–86

- [RT10] Paat Rusmevichientong and John N Tsitsiklis “Linearly parameterized bandits” In Mathematics of Operations Research 35.2 INFORMS, 2010, pp. 395–411

- [Sze10] Csaba Szepesvári “Algorithms for reinforcement learning” In Synthesis lectures on artificial intelligence and machine learning 4.1 Morgan & Claypool Publishers, 2010, pp. 1–103

- [Tir88] Jean Tirole “The theory of industrial organization” MIT press, 1988

- [Wei85] Karl Weierstrass “Über die analytische Darstellbarkeit sogenannter willkürlicher Functionen einer reellen Veränderlichen” In Verl. d. Kgl. Akad. d. Wiss 2, 1885

- [ZC13] Juliang Zhang and Jian Chen “Coordination of information sharing in a supply chain” In International Journal of Production Economics 143.1 Elsevier, 2013, pp. 178–187

- [Zha+23] Geng Zhao, Banghua Zhu, Jiantao Jiao and Michael I. Jordan “Online Learning in Stackelberg Games with an Omniscient Follower” arXiv:2301.11518 [cs] arXiv, 2023 URL: http://arxiv.org/abs/2301.11518

Appendix A Economic Theory

A.1 Proof of Theorem 1

Uniqueness of Best Response under Perfect Information: In the perfect information NPG (from Sec. 2.4) with a known demand function, under the assumptions listed in Sec. 2.4.2, the optimal order amount which maximizes expected profit of the retailer is and is a surjection from , and a bijection when . Furthermore, within the range , as monotonically increases, is monotonically decreasing. Where denotes the optimistic estimate of the riskless price .

Proof.

Under the assumptions in Section 2.4.2, given any , there exists a unique maximizing solution to Eq. (2.15). From [Mil59] we know that the optimal price to set in the price-setting Newsvendor, with linear additive demand, is of the form,

| (A.1) |

Where is the riskless price, the price which maximizes the linear expected demand. And therefore, the optimal price , has the relation . expresses the probability of demand equal to , under demand parameters , and price . This defines the unique relation of to from [Mil59] and as evidenced by Eq. (2.17) is a unique function. Knowing this relation, it is also logical to see that the riskless price of the retailer must be always above the wholesale price of the product which, as introduced in the constraint in Eq. (A.2).

| (A.2) |

Given the unique relation from to , represents the optimal pricing for ordering quantity above the expected demand from . Having this unique relation, we are ultimately faced with the optimization problem in Eq. (A.3).

| (A.3) |

is unique under certain conditions outlined in [PD99] and [Mil59], and there exists a unique optimal price for each problem defined by . Examining the behaviour of the critical fractile , and we see that is monotonically decreasing with respect to .

| (A.4) |

We see from Eq. (A.4) that is unique function of , as is strictly monotonic increasing, as is strictly monotonic decreasing.

∎

A.2 Proof of Lemma 2.1

Invariance of : Assuming symmetric behaviour of with respect to and constant standard deviation , the term representing supply shortage probability defined as , is invariant of the estimate of .

Proof.

First we define the properties of the probability density function of demand and . Where is the invariant version of the demand function, and is the probabilistic demand function with respect to the realized value of demand, such that Eq. (A.5) holds. is the difference between realized demand and the expected demand , and is the difference between the order amount expected demand and the expected demand .

| (A.5) | ||||

| (A.6) | ||||

| (A.7) |

For the price-setting Newsvendor, the optimal price is the is the solution to the equation denoting the expected profit, given retail price , , is expressed in Eq. (2.15).

| (A.8) |

From [Mil59] and [PD99] we know that under perfect information, the optimal price for the price setting Newsvendor is always the risk-free price subtracted by a term proportional to for expected inventory loss. It follows that is concave in given , and conversely is also concave given . Therefore, there exists a unique solution to given , as illustrated in Eq. (2.17).

| (A.9) | ||||

| (A.10) |

Given this relation we can then maximize over a subspace of more efficiently.

| (A.11) |

We wish to show that remains the same despite variation in .

| (A.12) | ||||

| (A.13) | ||||

| (A.14) |

We show the equivalance of and via equivalence of the integral definitions from Eq. (A.13) and Eq. (A.14) respectively, utilizing the relation from Eq. (A.5). For convience, let .

| (A.15) | ||||

| (A.16) | ||||

| (A.17) | ||||

| (A.18) |

Because can only vary by updating samples with respect to , it follows that is also invariant with respect to .

∎

A.3 Theorem A.3

Theorem A.3. Strictly decreasing : Given the condition , is strictly decreasing with respect to .

Proof.

Sketch of idea, as increases, the boundary between decreases.

This boundary due to symmetry.

| (A.19) | ||||

| (A.20) | ||||

| (A.21) |

Therefore given , we know that is decreasing w.r.t increasing , holding constant.

Given , and ,

| (A.22) |

The behaviour of , where will also be decreasing with increasing .

∎

A.4 Proof of Theorem 2

Theorem 2. Unique Stackelberg Equilibrium: A unique pure strategy Stackelberg Equilibrium exists in the full-information Newsvendor pricing game.

Proof.

Let us define an arbitrary probability density function which operates on the of , and is strictly positive. The cumulative probability function function is therefore,

| (A.23) |

Therefore, from Eq. (A.23), its cumulative distribution, defined as the integral of such a probability distribution function is strictly monotonically increasing from . Therefore the inverse of , defined as , is an injection from . We also that the critical fractile is strictly decreasing with respect to . Therefore, from the follower’s perspective, since is an injection from , there is always a best response function defined by given and is an injection. As is unique given , therefore the Eq. (A.24) has a unique maximum.

| (A.24) |

∎

A.5 Proof of Lemma 3.3

Lemma 3.3. Convergence of to : Suppose the solution to Eq. (2.17) is computable. For any symmetric demand distribution, the optimistic estimate of the optimal market price from Eq. (2.17), denoted as which is the solution to under optimistic parameters , is decreasing with respect to such that , where the difference is bounded by .

Proof.

Suppose the solution to Eq. (2.17) is computable. We examine the equation for the optimal price function, from Eq. (2.17), and we make the assumption that the term is computable,

| (A.25) |

Due to Lemma 2.1 we know that is invariant of , for symmetric probability distributions with constant variance. Thus using this advantage, we know from Eq. (B.43) that the rate of convergence to is . We then look at the behaviour of , from Eq. (3.5b), we can obtain an optimistic upper bound on denoted as as , when given the confidence ball , by setting , this allows for the slack, denoted as, to be completely maximized for maximizing . In other words, we assume that the estimate of is exact. Subsequently, we have a 1-dimensional optimization problem.

| (A.26) |

Let , we maximize by setting , and this maximum value is shrinking at a rate of . We examine the asymptotic behaviour of as .

| (A.27) |

Examining Eq. (A.27) we know that ultimately, , and as . And thus, asymptotically as .

∎

Appendix B Online Learning Theory

B.1 Optimism Under Uncertainty (OFUL) Algorithm

B.2 Optimization Under Uncertainty (OFUL)

The OFUL algorithm presented in Appendix B.1 is a linear bandit optimization function. It works by constructing a confidence ball which provides a bound on the variation of estimated parameters . This confidence bound shrinks as more observations are obtained. When running this algorithm, the error of estimated parameters is bounded by the self-normalizing norm, , where by definition,

| (B.1) |

Where is the identity matrix, for some . With these definitions in Eq. (B.1), the OFUL algorithm, with respect to the shrinking confidence ball admits a bound of the confidence of the parameters as,

| (B.2) |

Where the random variables , and filtration are -sub Gaussian, for some , such that,

| (B.3) |

Where is the dimension of the parameters. is a condition for the random variables where . And is a constraint on the parameters such that .

B.3 Proof of Lemma 3.1

OFUL - Bound on 2-Norm: With probability , and , the constraint from [APS11] implies the finite -norm bound , and also thereby the existence of such that (Proof in Appendix B.3.)

Proof.

We assume is full rank, and that sufficient sufficient exploration has occurred. Let . From [APS11], we define as,

| (B.4) |

Let be a positive definite real-valued matrix defined on , represented as a diagonal matrix with entries , where . Moreover, is the vector corresponding to the arm selected (specifically in our case, this corresponds to the pricing action of the follower). Furthermore, we define the self-normalizing norm of as,

| (B.5) |

Fundamentally, we wish to demonstrate that given there exists such that,

| (B.6) | ||||

| (B.7) |

We note that with non-decreasing eivengalues of with respect to , this generally denotes a volume decreasing ellipsoid [LS20]. Suppose there exists a scalar , where we impose that the minimum eigenvalue of scales as for all . This would result in the inequality,

| (B.8) |

The inequality in Eq. (B.8) holds independent of , as is constant and is non-decreasing with respect to time. Therefore, the existence of a scalar constant implies that,

| (B.9) |

Next suppose we select some , such that obeys the constraint in Eq. B.10, we show the existence of next.

| (B.10) |

Existence of : We wish to show the existence of , such that,

| (B.11) |

Let us define constants,

| (B.12) | ||||

| (B.13) |

We first look at the relation where,

| (B.14) |

We wish to find an value for such that Eq. (B.14) holds. Let , so for , we have,

| (B.15) | ||||

| (B.16) |

Suppose can be arbitrarily large, and we want to infer the existence of a such that Eq. (B.14) holds.

| (B.17) | ||||

| (B.18) | ||||

| (B.19) |

Therefore we select as,

| (B.20) | ||||

| (B.21) |

Such that Eq. (B.19) holds, as long as . The second condition is that,

| (B.22) |

For this we can argue that so long as , can choose , such that Eq. (B.22) holds. Therefore, we can select as,

| (B.23) |

Which holds when , allowing for an upper bound for in Eq. (B.23). We combine our bound with Eq. (B.9), where

| (B.24) | ||||

| (B.25) |

Where .

∎

B.4 Proof of Theorem 3

Bounding the Optimistic Follower Action: Let represent the deviation in pricing action of the follower (retailer) at time . Then for some and .

Proof.

We connect the bound on estimated parameters with bounds on the optimal expected follower rewards . Under the optimism principle, we therefore solve the optimization problem to maximize defined in Eq. (B.26a) and (B.26b). In this optimization problem, we optimize for the optimistic estimate of by maximizing .

By Lemma 3.1, we have an exact bound on the inequality Eq. (B.26b). Therefore we can solve for the maximization problem for the value to give the optimistic estimate of the upper bound on follower reward. With probability ,

| (B.26a) | |||||

| subject to | (B.26b) | ||||

Where and can describe a hyperplane, (a line in the 2D case), and the confidence ball constraint in Eq. (B.26b) can denote the parameter constraints. The optimization problem can be visualized in 2D space by maximizing the distance a from point to line, and solving for the parameters which maximizes the slope , as illustrated in Fig. 3.

| (B.27) | ||||

| (B.28) |

Solving for Eq. (3.7) for gives us a closed form expression for in terms of as expressed in Eq. (B.29).

| (B.29) | ||||

| (B.30) | ||||

| (B.31) |

When we obtain extreme points for we obtain the bounds for . The extremum of always occurs at the tangent of the confidence ball constraints, where the slope of the line denoted by is maximized or minimized. We note is convex in both and .

As denotes the optimistic estimate of , we see from the bounded inequality expressed in Eq. (B.31) that is a decreasing function as the estimate of improves. Therefore, the critical fractile is also decreasing, with respect to , where , with reference to Eq. (B.32).

| (B.32) |

The learning algorithms converges to a gap, we define this gap as, at large values of ,

| (B.33) |

As the for sufficiently large values of , approaches 0, as approaches 0.

| (B.34) | ||||

| (B.35) | ||||

| (B.36) | ||||

| (B.37) | ||||

| (B.38) |

We have decreasing behaviour, at sufficiently large values of , such that , which is not difficult to obtain, as the function , is always decreasing for , and upper-bounded by (See Appendix B.7). The upper-bound on is denoted in Eq. (B.39).

| (B.39) |

Essentially Eq. (B.39) is a linear combination of and , where for . We denote two constants and such that . Therefore,

| (B.40) |

Where,

| (B.41) |

We can see that from the inequality Eq. (B.40), that for some , then,

| (B.42) |

Therefore, we can deduce that the decreasing order of magnitude of is for sufficiently large values of , where the bound in Eq. (B.39).

| (B.43) |

describes the behaviour of the convergence of towards the true with respect to , and this decreases with .

∎

B.5 Proof of Lemma 3.2

Bounding the Cumulation of : Suppose we wish to measure the cumulative value of from to , denoted as . Then .

Proof.

To quantify we take the integral of noting that the integral of from to will always be greater than the discrete sum so longs as .

| (B.44) | ||||

| (B.45) | ||||

| (B.46) | ||||

| (B.47) | ||||

| (B.48) | ||||

| (B.49) |

∎

From Lemma 3.2 we can see that the optimistic pricing deviation of the follower is,

| (B.50) |

B.6 Proof of Lemma B.1

Lemma B.1.

Demand estimate reduction: Given any price , the maximum optimistic demand estimate is , and . (Proof in Appendix B.6.)

B.7 Notes on the Bound on

We want to show that for .

Therefore,

| (B.53) |

Appendix C Regret Bounds

C.1 Proof for Lemma C.1

Lemma C.1.

Approximation of Smooth Concave Function: Suppose any smooth differentiable concave function , and its approximation, , which is also smooth and differentiable (but not guaranteed to be concave), if exhibits an decreasing upper-bound on the approximation error , then consequently implies almost surely, where , , and denotes the supremum norm.

Proof.

Suppose some controllable function is monotonically decreasing with respect to increasing bounding the supremum norm of the approximation error of , then the following statements hold true for any .

| (C.1) |

Summing these conditions, we have the new relation,

| (C.2) |

By triangle inequality,

| (C.3) |

Note: the order of versus can be swapped and will yield the same result. The right left hand side of Eq. (C.3), allows us to rearrange the terms to obtain the equivalent expression rearranging the terms inide the norm on the left hand side,

| (C.4) |

By definition, for any smooth function,

| (C.5) |

Thus we have equality,

| (C.6) |

Thus we have the new inequality,

| (C.7) |

As , therefore this implies both,

| (C.8) | |||

| (C.9) |

Concavity of : This is where it is important to note the concavity of particularly in Eq. (C.9). Due to the concavity of , as the function approximation error approaches 0, so does .

| (C.10) |

∎

C.2 Notes on Optimistic Best Response

The upper-bound of the optimistic best response, , can be determined by estimating the optimistic riskless price , while both sides of Eq. (3.3) provide optimistic estimates of expected profit, , assuming the optimal price is at the riskless price, . These expressions summarize follower pricing and ordering under a greedy strategy, accounting for optimistic parameter estimation. In principle, although the follower may never converge to the optimal given the limitations of the approximation tools, aiming for convergence towards serves as a practical approach. represents not only a simplifying mathematical assumption, but also aligns with the idea of optimization under optimism - as . By pricing as the riskless price, the follower aims to price optimistically, serving as a reasonable economic strategy for dynamic pricing.

| (C.11) | ||||

| (C.12) | ||||

| (C.13) | ||||

| (C.14) | ||||

| (C.15) |

C.3 Proof of Theorem 4

Follower Regret in Online Learning for NPG: Follower Regret in Online Learning for NPG: Given pure leader strategy , the worst-case regret of the follower, , as defined in Eq. (2.3) when adopting the LNPG algorithm, is bounded by , for sufficiently large values of , where are positive real constants in .

Proof.

Let represent the riskless profit for the follower at follower action (retail price) , give leader action (supplier price) .

| (C.16) |

We argue that given any pure strategy , the contextual regret , is bounded by , for well-defined positive real value constants . For the sake of the argument, we assume that given , which is the solution to Eq. (A.3) is known. Where represents the quantity above the expected demand from (refer to Appendix A.1). For the mechanics of this proof, this allows us to consider a constant value.

Riskless Pricing Difference : First we begin by analyzing the convergence properties of the upper-bounding function . We show that . The difference in exact profit between pricing at the riskless price and some optimistic riskless price , denoted as is such that .

For convenience, in equations where no ambiguity exists, we may omit from explicitly including the variable in the subsequent equations, denoting them as , for and so forth.

| (C.17) | ||||

| (C.18) | ||||

| (C.19) | ||||

| (C.20) | ||||

| (C.21) |

The difference in the riskless price, note , combining Theorem 3,

| (C.22) | ||||

| (C.23) | ||||

| (C.24) | ||||

| (C.25) |

We integrate the term to obtain obtain a closed form expression for Eq. (C.25).

| (C.26) |

Regret Analysis: We introduce back again into the notation, as the expected profit, and upper-bounding functions , are conditioned on the leader action . We present the definition of regret conditioned upon the policy of , which is a pure strategy consisting of a sequence of action. As we assume a rational and greedy follower; he does not attempt to alter the strategy of the leader.

| (C.27) | ||||

| (C.28) |

We also know that if we use the riskless price estimate.

| (C.29) | ||||

| (C.30) |

Where is the upper estimate of the riskless price . And in the general case,

| (C.31) | ||||

| where, | (C.32) | |||

| (C.33) |

For any price , we know that the profits under no inventory risk is always higher than when inventory risk exists, . We make note of the relation . Thus we define as,

| (C.34) |

As a consequence of this approximation error, , we note there must be a constant term which upper bounds the regret of the follower.

| (C.35) |

Our approach involves analyzing two cases.

Case 1: ,

| (C.36) | ||||

| (C.37) | ||||

| (C.38) |

Case 2: ,

| (C.39) | ||||

| (C.40) | ||||

| (C.41) | ||||

| (C.42) |

We define as,

| (C.43) | ||||

| (C.44) | ||||

| (C.45) |

We take the definition of and insert it back to the definition in Eq. (C.42).

| (C.46) | ||||

| (C.47) | ||||

| (C.48) |

We note the peculiarity that and . Thus in the worst case,

| (C.49) |

We upper bound by , where,

| (C.50) | ||||

| (C.51) |

We note that can be expressed in linear form, with parameters , whereas requires the solution to Eq. (A.3), which may not always have a closed form solution.

| (C.52) |

We can see that there are two contributions to the contextual regret. is the worst-case error term of the approximation of via . represents the learning error of learning to achieve riskless pricing under imperfect information. Economically, represents the upper-bound on the difference between expected profit with and without potential inventory risk. Thus using this approximation, we learn to price at , and converge to a constant error term .

In Case 2, where , the follower learns to price at due to misspecification. Nevertheless, in terms of asymptotic behaviour, as , and almost surely. Since by definition , we know that the system will approach Case 1 as . In this case, the learning error is vanishing, while the riskless inventory approximation error is persistent, and bounded by . We can now we can impose a worst case bound on expected regret, assuming is sufficiently large, and (which is true in Case 1). We consider the behaviour of as it approaches infinity. When considering relatively small values of , the regret may exhibit a worst-case regret spanning the entire range of the , given the absence of constraints on the magnitude of . Therefore, it becomes imperative to establish the assumption that the temporal horizon is sufficiently large.

| (C.53) |

Therefore, when examining the asymptotic behaviour for Case 2, when letting be the maximum value of for all , we observe that,

| (C.54) | ||||

| (C.55) |

Where Eq. (C.55) serves as an alternative expression for Eq. (2.10). As recounted in Eq. (C.51) and Eq. (C.53), the system will approach Case 1 almost surely as . We note that the constant worst-case linear scaling via due to the approximate best response of the follower is external to the learning algorithm, and thus is dependent on the error of the approximation of the solution to Eq. (A.3), via .