NN-Steiner: A Mixed Neural-algorithmic Approach for the Rectilinear Steiner Minimum Tree Problem

Abstract

Recent years have witnessed rapid advances in the use of neural networks to solve combinatorial optimization problems. Nevertheless, designing the “right” neural model that can effectively handle a given optimization problem can be challenging, and often there is no theoretical understanding or justification of the resulting neural model. In this paper, we focus on the rectilinear Steiner minimum tree (RSMT) problem, which is of critical importance in IC layout design and as a result has attracted numerous heuristic approaches in the VLSI literature. Our contributions are two-fold. On the methodology front, we propose NN-Steiner, which is a novel mixed neural-algorithmic framework for computing RSMTs that leverages the celebrated PTAS algorithmic framework of Arora to solve this problem (and other geometric optimization problems). Our NN-Steiner replaces key algorithmic components within Arora’s PTAS by suitable neural components. In particular, NN-Steiner only needs four neural network (NN) components that are called repeatedly within an algorithmic framework. Crucially, each of the four NN components is only of bounded size independent of input size, and thus easy to train. Furthermore, as the NN component is learning a generic algorithmic step, once learned, the resulting mixed neural-algorithmic framework generalizes to much larger instances not seen in training. Our NN-Steiner, to our best knowledge, is the first neural architecture of bounded size that has capacity to approximately solve RSMT (and variants). On the empirical front, we show how NN-Steiner can be implemented and demonstrate the effectiveness of our resulting approach, especially in terms of generalization, by comparing with state-of-the-art methods (both neural and non-neural based).

1 Introduction

Given a set of points in , a Steiner tree spanning is a tree whose vertex set is together with a set of additional points called Steiner points. A rectilinear Steiner tree is a Steiner tree where all edges are axis-parallel. Given , the rectilinear Steiner minimum tree (RSMT) problem aims to compute the rectilinear Steiner tree spanning with smallest possible cost, defined as the total length of all edges in the tree. The RSMT problem has fundamental importance in VLSI physical design, as minimum wiring is correlated with key figures of merit including dynamic power, congestion, and timing delay. Hence, RSMT constructions have been well-studied for interconnect planning and estimation, timing estimation, global routing, and other applications (Kahng et al. 2022).

The existence of an optimal RSMT whose Steiner points are restricted to the Hanan grid, which is formed by intersections of all axis-parallel lines passing through points in , was established in (Hanan 1966). The RSMT problem was subsequently shown to be NP-complete (Garey and Johnson 1977). It was proved by (Hwang 1976) that the rectilinear minimum spanning tree gives a -approximation of the RSMT. A series of results leveraging Zelikovsky’s method led to a -approximation (Berman et al. 1994). Theoretically, the best known approximation algorithm for RSMT in fixed-dimensional Euclidean space is obtained via the PTAS (polynomial-time approximation scheme) proposed by Arora (Arora 1998). Arora’s method provides a -approximation for a range of problems, such as the traveling salesperson problem, in addition to RSMT. Unfortunately, while this algorithm runs in time polynomial in the number of points, its time complexity depends exponentially on and, consequently, the method has not yet found its way to practice.

On one hand, given the importance of the RSMT problem in chip design and its intractability, a large number of heuristics have been developed in the VLSI CAD community, aiming to improve the quality of RSMT computation with practical running time, e.g., (Kahng, Măndoiu, and Zelikovsky 2003; Liu, Chen, and Young 2021; Hu et al. 2006; Fallin et al. 2022; Wang et al. 2005; Cinel and Bazlamaçci 2008). The current state-of-the-art (SOTA) heuristic algorithm is FLUTE (Wong and Chu 2008; Chu and Wong 2007, 2005; Chu 2004). FLUTE constructs a lookup table encoding optimal RSMTs for all instances smaller than and then constructs RSMTs for larger pointsets by partitioning the input pointset into subsets of size or smaller, and combining the optimal trees over these subsets. FLUTE has been shown to be very close to optimal for small pointsets and is widely used in practice in VLSI CAD. GeoSteiner (Juhl et al. 2018) is the SOTA algorithm for exact RSMT computation, and is an ILP-based approach.

On the other hand, with recent success of deep neural networks (NNs) in many applications, there has been a surge in use of NNs to help tackle combinatorial optimization problems (Bengio, Lodi, and Prouvost 2021; Khalil et al. 2017; Li, Chen, and Koltun 2018; Selsam et al. 2018; Gasse et al. 2019; Sato, Yamada, and Kashima 2019), such as traveling salesperson or other routing-related problems, using reinforcement learning (RL) (Vinyals, Fortunato, and Jaitly 2015; Bello et al. 2017; Deudon et al. 2018; Prates et al. 2019). Recently, REST (Liu, Chen, and Young 2021) achieved the first NN-based approach for RSMT by finding so-called rectilinear edge sequences using RL. (Chen et al. 2022) designed an RL framework to find obstacle-avoiding Steiner minimum trees. Significant challenges in neural combinatorial optimization (NCO) remain. NNs are often used in an ad-hoc manner with limited theoretical understanding of the resulting framework. It is also often not known if machine-learning pipelines have the capacity to solve a given combinatorial optimization problem, or how network-architecture design could leverage problem structure to design more effective and efficient neural models.

One potential way to inject theoretical justification into the design of neural approaches for combinatorial problems is to leverage the vast literature on approximation algorithms. In particular, instead of using one NN to solve an optimization problem in an end-to-end manner, one can use neural components in a high-level algorithmic framework. An exemplary thread of works uses NNs to learn variable-selection decisions in branch-and-bound frameworks solving mixed integer-linear programming problems (Gasse et al. 2019; Gupta et al. 2020; Nair et al. 2020). (McCarty et al. 2021) propose a mixed neural-algorithmic framework NN-Baker to solve problems such as maximum independent set in the geometric setting by using Baker’s technique to decompose problems into small instances of bounded size, and then training a single NN to solve these instances.

This Work.

In this paper, we develop an approach to compute RSMTs in . (While we use and Manhattan geometry, the framework extends to for any constant value of .) Specifically, we develop NN-Steiner111Code is open-sourced at https://github.com/ABKGroup/NN-Steiner., a mixed neural-algorithmic framework that leverages the ideas behind Arora’s PTAS for RSMT (Arora 1998).

At a high level, Arora’s PTAS partitions the input domain in a hierarchical manner, then solves the problem via a bottom-up dynamic programming (DP) procedure. One key result of (Arora 1998) is that each DP subproblem is of only bounded size, and thus, can be solved in time independent of the number of input points. However, the complexity of this DP step is prohibitive in practice.

In Section 3.2, we develop a mixed neural-algorithmic approach to simulate Arora’s PTAS; Fig. 4 gives a high-level illustration. The costly DP step is replaced by a single NN component that outputs a learned embedding of the solutions to the DP subproblems. This single NN module is called repeatedly within the algorithmic framework. Other NN components simulate the backward retrieval of Steiner points in a top-down manner. As each of the four NN components is of size independent of input problem size, the model complexity of each component is bounded.

On the theoretical front, we show in Theorem 3.1 that this framework has the capacity to produce approximate RSMTs using only NNs of bounded complexity. On the practical front, NNs replace a key but costly component in Arora’s PTAS, leading to an efficient architecture. Furthermore, since the neural component only needs to handle fixed-size instances, training is easier. Once trained, NN-Steiner generalizes well to problems of much larger sizes than seen in training, as we demonstrate in Section 2. Indeed, extensive experimental results show that NN-Steiner achieves better performance than NN-based and non-NN-based SOTA methods for sufficiently large problem sizes. NN-Steiner outperforms the existing RL-based neural approach significantly for large pointsets. As input size increases, the RL-based policy must handle a larger action space, which makes it challenging both to learn the policy and to generalize. NN-Steiner learns an algorithmic component of fixed size, leading to superior generalization.

In summary, we propose NN-Steiner, a novel neural-algorithmic framework for the RSMT problem, which leverages the algorithmic idea of Arora’s PTAS (which is the theoretical best approximation algorithm for this problem). NN-Steiner is, to our best knowledge, the first neural architecture of bounded size that has capacity to approximately solve the RSMT problem. Moreover, the algorithmic alignment of NN-Steiner leads to better practical performance than existing SOTA methods for large instances. While we focus on the RSMT problem in this paper due to its practical importance in VLSI, the versatility of Arora’s framework means our methodology can be potentially applied to other geometric optimization problems, such as the obstacle-avoiding RSMT problem (Ajwani, Chu, and Mak 2010).

Our work is one of the first NCO frameworks to use algorithmic alignment to remove dependence on problem size. To our best knowledge, the only other work in this direction is NN-Baker (McCarty et al. 2021), which is limited to a very simple algorithmic setup (a flat partitioning of the input domain). The dynamic programming (DP) framework we consider is much more general: for example, a similar DP framework exists for many optimization problems (e.g., max independent set) for graphs with bounded tree width (Cygan et al. 2015).

Removing problem-size dependence is important, as size generalization is a fundamental obstacle in NCO (Garmendia, Ceberio, and Mendiburu 2022) that is challenging to overcome (Liu et al. 2022). Training on large instances is prohibitively expensive: for supervised learning this requires computation of exact solutions to large instances, and for RL and unsupervised learning, training becomes exponentially more challenging as size increases. Thus, size generalization is essential for performance on large instances. In some cases, such generalization is even provably hard (Yehudai et al. 2020). Notably, our experiments show NN-Steiner exhibits strong size generalization on a hard optimization problem that has practical implications.

2 Preliminaries

We now introduce the RSMT problem, then briefly describe Arora’s PTAS for this problem (Arora 1998). For simplicity, we henceforth treat the case where input points lie in . Our definitions and Arora’s algorithm can both be extended to , as well as to the standard Euclidean Steiner minimum tree problem (without rectilinear constraints).

Definition 1 (RSMT).

Given a set of points , the rectilinear Steiner minimum tree (RSMT) for is a tree with vertex set with minimum total edge length under the norm. The set is the set of Steiner points.

|

|

2.1 Arora’s PTAS

We now describe the high-level idea behind Arora’s polynomial-time approximation algorithm, which we refer to as Arora’s PTAS. For simplicity, we assume that the input points have integral coordinates, and are contained in a bounding box of side length with . A perturbation process given in (Arora 1998) rounds input coordinates so that this assumption holds without changing theoretical guarantees for the algorithm.

Step 1: Construct a shifted quadtree.

First, we pick integers uniformly at random and then translate the input pointset by the vector . We then construct a quadtree, where the splitting of quadtree cells terminates if a cell contains 1 or 0 points. The quadtree is a tree where each internal vertex has degree 4. The root has level , and is associated with a cell of side length . Any vertex at level is associated with a cell of size (i.e., side length) . Bisecting a level- cell with a horizontal line and with a vertical line decomposes it into four level- child cells, each of size , corresponding to the four children of . As the side length of the bounding box is , and points have integral coordinates, the height (max-level) of the quadtree is at most and the total number of vertices in (and thus the number of cells across all levels) is .

We now consider a special family of Steiner trees for which the crossing of quadtree cells is constrained.

Definition 2.

Let be positive integers. The -regular set of portals is the set of points such that each cell has a portal at each of its 4 corners, and other equally-spaced portals on each of its four sides. A Steiner tree is -light if it crosses each edge of each cell at most times and always at a portal.

Note that if a side of a cell is contained in the sides of multiple cells, then the portals on are spaced according to the cell with side containing that has the lowest level . We say that the portals on have level . See Fig. 2 for an example of quadtree decomposition and a -light rectilinear Steiner tree.

Step 2: Dynamic programming.

The following theorem guarantees that the minimal -light rectilinear Steiner tree is an approximate RSMT (Arora 1998). A proof is given in the supplemental (Kahng et al. 2023).

Theorem 2.1 (Structure Theorem).

If shifts are chosen uniformly randomly in , then with probability at least , the minimum-length -light rectilinear Steiner tree has length at most , where is the length an RSMT.

Hence, our goal is to compute a minimum -light rectilinear Steiner tree. In particular, Arora proposed to use DP in a bottom-up construction. We sketch the idea here.

We process all quadtree cells in a bottom-up manner. For a fixed quadtree cell , consider an -light Steiner tree restricted to ; this gives rise to some Steiner forest in , which can exit this cell only via portals on sides of . In particular, the portion of the Steiner tree outside can be solved independently as long as we know the following portal configuration: (i) the set of exiting portals on the side of this cell that are used by (which connect points outside with those inside), and (ii) how these exiting portals are connected by trees in the Steiner forest .

Let be the set of portal configurations for a cell , and let . Each cell has subsets of portals, and each subset of portals can be partitioned ways. As the Bell number is bounded above by we have . Our goal is to compute, for each portal configuration , the minimum cost of any rectilinear Steiner forest within that gives rise to this boundary condition. Assuming an arbitrary but fixed order of portal configurations in , the costs of all configurations can then be represented by a vector , where . We call the cost-vector for .

We now describe the DP algorithm to compute this cost-vector for all cells in a bottom-up manner (decreasing order of levels).

Base case: is a leaf cell. In this case, there is at most one point from contained in . We enumerate all configurations, and compute directly, which requires solving RSMT instances of bounded size.

Inductive step: is not a leaf cell. The four child-cells of are from the level below ’s level, and thus, by the inductive hypothesis, we have already computed the cost-vectors for . Consider any portal configuration . We simply need to enumerate all choices of portal configurations for child-cells that are consistent with , meaning that the portals on common sides are the same, and the connected components of portals from the 4 child-cells do not form cycles (hence, still induce a valid Steiner forest). We have

| (1) |

Final construction of approximate RSMT. At the end of DP, after we compute the cost-vector for the root cell, we identify the portal configuration with lowest cost. To obtain the corresponding rectilinear Steiner tree, we perform a top-down backtracking: (i) for the root cell, from we can retrieve the set of child-cell configurations generating ; (ii) we repeat this until we reach all leaf cells. At each leaf cell we once again compute the optimal Steiner forest for the chosen configuration. Combining these Steiner forests yields an optimal -light RSMT.

3 NN-Steiner

Although the running time of Arora’s PTAS is polynomial in the problem size, its computation is prohibitively expensive in practice as we compute all possible portal configurations. Instead of brute-force enumeration of portal configurations, we propose a framework, NN-Steiner, which infuses neural networks (NNs) into Arora’s PTAS to select Steiner points from portals to build the output Steiner tree. In Sec. 3.1, we show that the key components within the DP algorithm can be simulated exactly by certain NNs. However, such NNs are not efficient either, as their size depends exponentially on and . In Sec. 3.2, we show a practical instantiation of NN-Steiner and demonstrate its performance in Sec. 2.

3.1 Theoretical NN-Steiner to simulate Arora’s PTAS

We can simulate key components in the DP framework of Arora’s PTAS. Specifically, we use four neural networks: and respectively implement the base case and inductive step of DP to compute encodings of cost-vectors in a bottom-up manner, while and obtain optimal portal configurations from cells in a top-down manner.

There exist designs and parameters of these NNs simulating Arora’s DP algorithm exactly; moreover, these NNs are each of only bounded size independent of (depending only on parameters and , which are set to be constant in practice). Here, we describe how to construct to simulate one inductive step in the DP algorithm. For brevity, we leave the other NN cases to a full version.

Recall that, as described in Sec. 2, the inductive step of the DP algorithm can be rewritten as applying a function , where is the total number of portal configurations for a single cell. In particular, for any quadtree cell with child-cells , the input to is the four cost-vectors , and the output is the cost-vector .

Eqn. (1) gives how to compute each entry in the output vector . That is, has a simple form modeled as a certain linear function followed by a min-pooling, which can be simulated by a NN as shown in Fig. 3. In particular, to compute , each neuron takes in a set of four portal configurations , , consistent with , and simply does a sum operation. Then the output takes a min-pooling over all values at s. As there are at most sets of four portal configurations for each , the entire model has complexity . (Recall that is independent of the input pointset size .) That is, there is a of bounded complexity simulating the DP step exactly. The following theorem summarizes the existence of NNs to implement Arora’s PTAS.

Theorem 3.1.

There exist four NNs, each of only bounded size depending only on and , that can simulate the DP algorithm of Arora’s PTAS, such that the resulting mixed neural-algorithmic framework can find a -approximate rectilinear Steiner tree (i.e., with length at most ). The framework calls these NNs only times.

3.2 Practical Instantiation of NN-Steiner

The theoretical neural-algorithmic framework described in the previous section is not practical as it is explicitly encoding the exponential number of portal configurations (exponential in ). We now present NN-Steiner, a practical instantiation of this framework. Theorem 3.1 implies that the NN-Steiner architecture has the capacity to approximately solve the RSMT problem using NN components of bounded size. In practice, one hopes that NN-Steiner can leverage data to encode portal configurations more efficiently.

The pipeline starts with a neural network , that acts on each leaf cell to produce an encoding of the configuration costs. Next, we apply a NN simulation of the dynamic programming step , for each non-leaf cell. We then use two NNs, and , to simulate the backtracking stage and return the likelihood that each portal is a Steiner point. By selecting high-likelihood portals, we construct a set of Steiner points and a corresponding Steiner tree. As the selected Steiner points must lie on cell boundaries, we finish with a local refinement scheme which introduces Steiner points that lie in the interior of leaf cells. See Fig. 4 for a high-level overview of our practical NN-Steiner pipeline.

Forward pass.

The forward processing involves two MLPs, and , and calls recursively in a bottom-up manner to compute an implicit encoding of the costs of possible portal configurations.

Base-case neural network . At the leaf level of Arora’s PTAS, each cell contains at most point. We instead terminate the quadtree decomposition when a cell contains no more than points, where is a hyperparameter. Given a leaf cell with the set of points contained in , takes relative coordinates of as well as the set of portals on the sides of as input, and outputs a -dimensional vector as an implicit encoding of the cost vector ; note that in practice. Coordinates are specified relative to the bottom left corner of the cell, and are normalized by the cell size. If a leaf cell has input points, we pad the remaining coordinates with . Each cell can have at most portals ( + 2 on each side) and these portals can appear only at distinct relative locations in the cell. Portals are then provided to as indicators in . Here, each corner contains two portals to simplify computation by distinguishing connections passing through from different sides. (Arora’s PTAS uses a single portal at each corner.)

Terminating with at most points in each leaf cell is advantageous as, for a quadtree cell with very few points, it is challenging to learn a meaningful encoding of portal configurations. If is small, the majority of cells have few points inside. (Note that a complete degree-4 tree has around 75% of its nodes at the leaf level.) Hence, training on such a collection of cells tends to provide bad supervision, harming the effectiveness of learning. On the other hand, as increases, larger Steiner-tree instances must be computed at leaves, which may negatively affect the performance of the framework. In our experiments, is a hyperparameter; see Sec. 2 for its effect and choice.

DP-inductive-step neural network . Next, we use another MLP, , to simulate the function which, as mentioned in Sec. 3.1, is equivalent to the DP in Arora’s PTAS. In detail, given a cell at level , let be its 4 child-cells at level in a fixed order. The neural network takes encodings of , and the portals of , and generates an encoding of the cost vector of the parent cell . Note that the encodings of are produced by applying either or at . Again, portals are provided via indicators in .

Backward pass.

As described above, the forward pass applies to all leaf cells, and then to all internal vertices, to simulate the bottom-up DP algorithm of Arora’s PTAS. Now, we use two more NNs to simulate the backtracking stage of the DP algorithm. Together, these NNs construct a portal-likelihood map , where is the set of all portals.

Root-level retrieval neural network . For a cell let denote the portals in that are one level higher than ’s level, i.e., the portals on the vertical and horizontal segments bisecting . Let be the root cell with children . The input to is the output of at , and the input of at . The output of is a vector of the likelihoods of , where is the root cell.

Backward retrieval step neural network . We compute the rest of the portal likelihoods in a top-down manner. This is achieved by using a neural network (at all non-root non-leaf cells ) which computes the likelihoods of . The input of applied at a cell with child-cells and , is comprised of two parts. First, takes the output of four instances of either or applied at , and (same as ). Second, receives likelihoods for every portal on the boundary of . At this step, these likelihoods will have been computed previously by an instance of either or applied at the level above ’s level.

Retrieval of Steiner points and postprocessing.

After the backward pass, we have a portal-likelihood map over all portals. We select all portals with likelihood greater than a threshold as the initial set of Steiner points . We then compute the minimum spanning tree over , which takes time . Next, we apply three local refinement steps:

-

1.

First, we iterate over all leaf cells and for each cell replace every connected component with the optimal Steiner tree connecting all of the component’s Steiner points (selected portals) and input points.

-

2.

Next, we remove Steiner points with degree less than 3 and round the locations of the remaining Steiner points to integer coordinates.

-

3.

Finally, we partition222We partition by iterating the following procedure: (i) select a leaf, (ii) use breadth-first search to select vertices until input points or all remaining input points are selected, then (iii) remove these vertices. The subtree induced by each removed set of vertices, which contains at most input points, forms an element of the partition. the tree into subtrees with or fewer input points. We replace each subtree with the optimal Steiner tree over both its input points and any Steiner point which is adjacent to a vertex in another partition.

Optimal Steiner trees are computed using GeoSteiner 5.1 (Juhl et al. 2018). The first step, cell refinement, introduces Steiner points into the interior of cells, since initially Steiner points can only be at portals. The second step removes unnecessary Steiner points and rounds the locations of Steiner points. Rounding simplifies the tree and can be done with minimal effect on the solution since an optimal Steiner tree always lies on the Hanan grid and thus has integer Steiner points. The final step, subtree refinement, allows Steiner points at portals to be moved.

Training.

For each training pointset, we compute the optimal Steiner tree using GeoSteiner 5.1 (Juhl et al. 2018). Each time this optimal tree crosses a side of a cell, we move the crossing to the nearest portal. The resultant set of portals with crossings is used as the target in computing the loss. For the loss, we use binary cross entropy with weights of for portals that are Steiner points in the target, and weights of 1 for other portals. As the classification is imbalanced, with most portals not being Steiner points, this weighting scheme prevents the classifier from achieving low loss with uniformly near-zero portal likelihoods.

We train all four networks in an end-to-end manner. In training, the models are connected in a tree structure similar to that used in RNNs (recursive neural networks) in that the output of is fed into the same instance repeatedly in our architecture. However, RNNs are often connected linearly while NN-Steiner connects NNs in a tree structure. To accelerate training, we utilize batch-mode training. Batch mode necessitates that the same tree structure is used for each training sample. Otherwise, the model shape would be different between training samples, and these samples could not be learned in parallel.

We use pointsets sampled from a distribution tailored for compatibility with batch-mode training. Pointsets are constructed as follows: (i) Sample points from a distribution on an integer grid of size . (ii) Construct a depth- quadtree. (iii) In each quadtree leaf cell with greater than points, remove points randomly until only points remain. With these pointsets, a depth- quadtree can always be used with no more than points in every leaf cell. Note that while we use a fixed tree structure throughout training, for testing, the tree structure is decided by the specific test pointset and can have any shape or depth.

4 Experimental performance

| Number of points | 50 | 100 | 200 | 500 | 800 | 1000 | 2000 | 5000 |

|---|---|---|---|---|---|---|---|---|

| NN-Steiner | 2.10 | 1.38 | 0.74 | -0.67 | -1.11 | -1.43 | -2.44 | -2.99 |

| REST (T=8) | -0.14 | 1.08 | 7.40 | 22.68 | 35.16 | 42.52 | 75.12 | 147.48 |

| FLUTE (A=18) | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 |

| GeoSteiner | -0.54 | -1.23 | -2.25 | -3.71 | -4.43 | -4.78 | – | – |

| Number of points | 50 | 100 | 200 | 500 | 800 | 1000 | 2000 | 5000 |

|---|---|---|---|---|---|---|---|---|

| NN tree construction + refinement (NN-Steiner) | 2.10 | 1.38 | 0.74 | -0.67 | -1.11 | -1.43 | -2.44 | -2.99 |

| MST of + refinement | 2.54 | 2.50 | 1.44 | 0.04 | -0.75 | -1.04 | -1.73 | -2.46 |

| NN tree construction + cell refinement | 3.01 | 2.54 | 1.84 | 0.39 | -0.10 | -0.45 | -1.39 | -2.08 |

| NN tree construction + subtree refinement | 6.76 | 5.55 | 4.90 | 3.39 | 3.20 | 3.07 | 1.64 | 1.44 |

| FLUTE + refinement | -0.03 | -0.08 | -0.15 | -0.22 | -0.27 | -0.27 | -0.31 | -0.33 |

We present experimental results of NN-Steiner on planar RSMTs. Our experiments show that NN-Steiner outperforms SOTA algorithms for large point sets and has approximately linear runtime. We demonstrate the effectiveness of NN-Steiner on differently distributed pointsets. We give ablations that elucidate the role of our portal-retrieval and refinement schemes and we show the dependence of NN-Steiner on critical hyperparameters. All of our experiments run on a 64-bit Linux server with a 2.25GHz AMD EPYC 7742 Processor (256 threads) and three Nvidia RTX A100-SXM4 GPUs allocating 80GB RAM.

Implementation. Each of is implemented using a 4-layer MLP, with ReLU activation and size-4096 hidden layers. The output of and is a vector of dimension . An additional sigmoid layer is appended to and to output the portal likelihoods in . For training, we use a dropout of and a batch size of 5000. Training pointsets are generated using a uniform distribution with initial points, grid lines, and using a quadtree of depth . ( In testing, the quadtree may have depth much greater than , depending on the number and distribution of points.) We train the models on 120,000 pointsets solved exactly by GeoSteiner. For the refinement step, we partition into subtrees of size . We train for 5000 epochs using Adam (Kingma and Ba 2014) as our optimizer, with a learning rate of . The default setting of NN-Steiner is and with the threshold set to .

Performance comparison. The results of the exact RSMT solver GeoSteiner are ground truth solutions. Since we could not compute GeoSteiner for large instances, we use FLUTE (Chu and Wong 2007), the SOTA heuristic solver, as the base for comparison of approaches. Table 1 shows the performance comparison. Smaller values indicate better performance and negative values indicate superior performance to FLUTE, i.e., length is smaller than the output of FLUTE. All the FLUTE instances are run with , the setting which produces the highest-quality solutions. We also make comparisons against REST (Liu, Chen, and Young 2021) with , the best-performing setting claimed by the work. Batch sizes are set to 1. Test pointsets are generated uniformly at random from a grid. Reported values are averages over 100 pointsets. Table 1 shows that NN-Steiner generalizes to large pointsets. (Recall that training pointsets have less than points.) Furthermore, NN-Steiner outperforms FLUTE and REST for pointsets of size 500 or greater, with margin of outperformance increasing with pointset size. NN-Steiner has advantage over FLUTE for large problems, however, it performs best on small point sets relative to the exact solution. Also, Fig. 5 shows that the runtime of NN-Steiner scales approximately linearly.

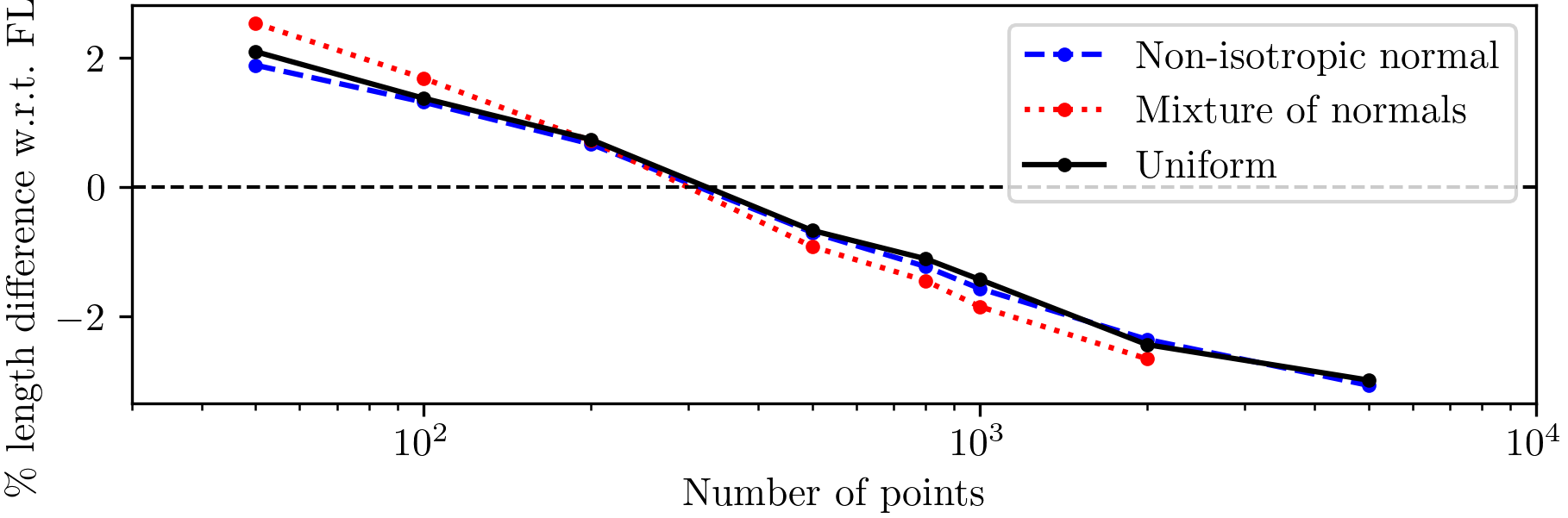

Generalization to Different Distributions. We test the generalization of NN-Steiner to different pointset distributions. In particular, NN-Steiner is trained on uniformly distributed pointsets, but tested on pointsets with mixed normal and non-isotropic normal distributions. Points are restricted to a grid. The standard deviation of the non-isotropic normal distribution is taken to be for both and and for mixed-normal distribution. Means are distributed uniformly. The covariance matrix of the non-isotropic normal distribution is given by uniformly picking a correlation in . For the mixed-normal distribution, we use a uniform mixture of 10 normal distributions.333We do not generate mixed-normal distributions of 5000 points because the granularity is too restrictive. Fig. 6 shows that NN-Steiner generalizes to different distributions, despite only being trained on uniformly distributed pointsets.

Ablations. We use an ablation study to show the importance of each component of our algorithm. To determine the impact of different components on performance as a whole, we evaluate the performance after removing each component. The components we consider are tree construction, cell refinement, and subtree refinement (Fig. 4). Tree construction produces an MST over where is found by portal retrieval. To evaluate performance without this component, we instead apply refinement to the MST over (here, edges between cells are preserved in cell refinement). Table 2 shows that each of these stages contributes to the overall performance, with refinement contributing the most. However, this observation does not undermine the importance of tree construction; if we apply refinement to the output of FLUTE (Chu and Wong 2007), also shown in Table 2, we see limited improvement. This suggests that the global topology produced by tree construction and cell refinement for large pointsets is superior to that produced by FLUTE.

Hyperparameter choice. We evaluate how and affect performance (Fig. 7). Results show poor performance for small values of , which we attribute to overfitting. In theory, -light trees are better approximations with greater values of . However, for smaller instances, yields similar performance as . This suggests that, for smaller instances, the fine granularity from larger is not needed to generate high-quality global tree topologies. Also, models with smaller may exhibit better performance as they are easier to train due to less classification imbalance. Experiments and discussion for threshold selection are given in the supplemental material (Kahng et al. 2023)

5 Conclusion

We present a mixed neural-algorithmic framework, NN-Steiner, for solving large-scale RSMT problems. This is the first neural architecture that has the capacity to solve RSMT approximately. In experiments, our framework shows generalization and scalability, and outperforms both heuristic algorithms and machine learning baselines on large instances.

Our ongoing research pursues the following directions. First, Arora’s PTAS can solve higher-dimensional RSMT problems; this motivates us to generalize our framework to 3D IC designs. Second, the methodology behind NN-Steiner can be extended to compute obstacle-avoiding RSMTs, which again have important applications in VLSI design.

Acknowledgments

This work is partially supported by NSF under grants CCF-2112665, CCF-2217033 and CCF-2310411, and by DARPA IDEA HR0011-18-2-0032. The authors thank Anastasios Sidiropoulos for helpful discussions during initial stages of this project. We also thank Qi Zhao for early explorations and inputs to the early writing of this paper.

References

- Ajwani, Chu, and Mak (2010) Ajwani, G.; Chu, C. C. N.; and Mak, W.-K. 2010. FOARS: FLUTE based obstacle-avoiding rectilinear Steiner tree construction. IEEE Transactions on Computer-Aided Design of Integrated Circuits and Systems, 30: 194–204.

- Arora (1998) Arora, S. 1998. Polynomial time approximation schemes for Euclidean traveling salesman and other geometric problems. Journal of the ACM (JACM), 45(5): 753–782.

- Bello et al. (2017) Bello, I.; Pham, H.; Le, Q. V.; Norouzi, M.; and Bengio, S. 2017. Neural combinatorial optimization with reinforcement learning. arXiv:1611.09940.

- Bengio, Lodi, and Prouvost (2021) Bengio, Y.; Lodi, A.; and Prouvost, A. 2021. Machine learning for combinatorial optimization: a methodological tour d’horizon. European Journal of Operational Research, 290(2): 405–421.

- Berman et al. (1994) Berman, P.; Fößmeier, U.; Karpinski, M.; Kaufmann, M.; and Zelikovsky, A. 1994. Approaching the 5/4—approximation for rectilinear Steiner trees. In Proceedings of the European Symposium on Algorithms, 60–71. Springer.

- Chen et al. (2022) Chen, P.-Y.; Ke, B.-T.; Lee, T.-C.; Tsai, I.-C.; Kung, T.-W.; Lin, L.-Y.; Liu, E.-C.; Chang, Y.-C.; Li, Y.-L.; and Chao, M. C.-T. 2022. A reinforcement learning agent for obstacle-avoiding rectilinear Steiner tree construction. In Proceedings of the International Symposium on Physical Design (ISPD), 107–115.

- Chu and Wong (2007) Chu, C.; and Wong, Y.-C. 2007. FLUTE: Fast lookup table based rectilinear Steiner minimal tree algorithm for VLSI design. IEEE Transactions on Computer-Aided Design of Integrated Circuits and Systems, 27(1): 70–83.

- Chu (2004) Chu, C. C. N. 2004. FLUTE: fast lookup table based wirelength estimation technique. In Proceedings of the IEEE/ACM International Conference on Computer Aided Design (ICCAD), 696–701.

- Chu and Wong (2005) Chu, C. C. N.; and Wong, Y.-C. 2005. Fast and accurate rectilinear Steiner minimal tree algorithm for VLSI design. In Proceedings of the ACM International Symposium on Physical Design (ISPD), 28–35.

- Cinel and Bazlamaçci (2008) Cinel, S.; and Bazlamaçci, C. F. 2008. A distributed heuristic algorithm for the rectilinear Steiner minimal tree problem. IEEE Transactions on Computer-Aided Design of Integrated Circuits and Systems, 27: 2083–2087.

- Cygan et al. (2015) Cygan, M.; Fomin, F.; Kowalik, L.; Lokshtanov, D.; Marx, D.; Pilipczuk, M.; Pilipczuk, M.; and Saurabh, S. 2015. Parameterized Algorithms. Springer.

- Deudon et al. (2018) Deudon, M.; Cournut, P.; Lacoste, A.; Adulyasak, Y.; and Rousseau, L.-M. 2018. Learning heuristics for the TSP by policy gradient. In Proceedings of the International Conference on the Integration of Constraint Programming, Artificial Intelligence, and Operations Research (CPAIOR).

- Fallin et al. (2022) Fallin, A.; Kothari, A.; He, J.; Yanez, C.; Pingali, K.; Manohar, R.; and Burtscher, M. 2022. A simple, fast, and GPU-friendly Steiner-tree heuristic. In Proceedings of the IEEE International Parallel and Distributed Processing Symposium Workshops (IPDPSW), 838–847.

- Garey and Johnson (1977) Garey, M. R.; and Johnson, D. S. 1977. The rectilinear Steiner tree problem is NP-complete. SIAM Journal on Applied Mathematics, 32(4): 826–834.

- Garmendia, Ceberio, and Mendiburu (2022) Garmendia, A. I.; Ceberio, J.; and Mendiburu, A. 2022. Neural Combinatorial Optimization: a New Player in the Field. ArXiv, abs/2205.01356.

- Gasse et al. (2019) Gasse, M.; Chételat, D.; Ferroni, N.; Charlin, L.; and Lodi, A. 2019. Exact combinatorial optimization with graph convolutional neural networks. Advances in Neural Information Processing Systems, 32: 15580–15592.

- Gupta et al. (2020) Gupta, P.; Gasse, M.; Khalil, E.; Mudigonda, P.; Lodi, A.; and Bengio, Y. 2020. Hybrid models for learning to branch. Advances in Neural Information Processing Systems, 33: 18087–18097.

- Hanan (1966) Hanan, M. 1966. On Steiner’s problem with rectilinear distance. SIAM Journal on Applied Mathematics, 14(2): 255–265.

- Hu et al. (2006) Hu, Y.; Jing, T.; Feng, Z.; Hong, X.; Hu, X.; and Yan, G. 2006. ACO-Steiner: ant colony optimization based rectilinear Steiner minimal tree algorithm. Journal of Computer Science and Technology, 21: 147–152.

- Hwang (1976) Hwang, F. K. 1976. On Steiner minimal trees with rectilinear distance. SIAM Journal on Applied Mathematics, 30(1): 104–114.

- Juhl et al. (2018) Juhl, D.; Warme, D. M.; Winter, P.; and Zachariasen, M. 2018. The GeoSteiner software package for computing Steiner trees in the plane: an updated computational study. Mathematical Programming Computation, 10(4): 487–532.

- Kahng et al. (2022) Kahng, A. B.; Lienig, J.; Markov, I. L.; and Hu, J. 2022. VLSI physical design: from graph partitioning to timing closure. Springer, second edition.

- Kahng, Măndoiu, and Zelikovsky (2003) Kahng, A. B.; Măndoiu, I. I.; and Zelikovsky, A. Z. 2003. Highly scalable algorithms for rectilinear and octilinear Steiner trees. In Proceedings of the Asia and South Pacific Design Automation Conference (ASPDAC), 827–833.

- Kahng et al. (2023) Kahng, A. B.; Nerem, R. R.; Wang, Y.; and Yang, C.-Y. 2023. NN-Steiner: A mixed neural-algorithmic approach for the rectilinear Steiner minimum tree problem. arXiv:2312.10589.

- Khalil et al. (2017) Khalil, E.; Dai, H.; Zhang, Y.; Dilkina, B.; and Song, L. 2017. Learning combinatorial optimization algorithms over graphs. Advances in Neural Information Processing Systems, 32: 6351–6361.

- Kingma and Ba (2014) Kingma, D. P.; and Ba, J. 2014. Adam: a method for stochastic optimization. arXiv:1412.6980.

- Li, Chen, and Koltun (2018) Li, Z.; Chen, Q.; and Koltun, V. 2018. Combinatorial optimization with graph convolutional networks and guided tree search. Advances in Neural Information Processing Systems, 31: 537–546.

- Liu, Chen, and Young (2021) Liu, J.; Chen, G.; and Young, E. F. 2021. REST: Constructing rectilinear Steiner minimum tree via reinforcement learning. In Proccedings of the 58th ACM/IEEE Design Automation Conference (DAC), 1135–1140.

- Liu et al. (2022) Liu, S.; Zhang, Y.; Tang, K.; and Yao, X. 2022. How good is neural combinatorial optimization? A systematic evaluation on the traveling salesman problem. IEEE Computational Intelligence Magazine, 18: 14–28.

- McCarty et al. (2021) McCarty, E.; Zhao, Q.; Sidiropoulos, A.; and Wang, Y. 2021. NN-Baker: A neural-network infused algorithmic framework for optimization problems on geometric intersection graphs. Advances in Neural Information Processing Systems, 34.

- Nair et al. (2020) Nair, V.; Bartunov, S.; Gimeno, F.; von Glehn, I.; Lichocki, P.; Lobov, I.; O’Donoghue, B.; Sonnerat, N.; Tjandraatmadja, C.; Wang, P.; et al. 2020. Solving mixed integer programs using neural networks. arXiv:1706.03762.

- Prates et al. (2019) Prates, M.; Avelar, P. H.; Lemos, H.; Lamb, L. C.; and Vardi, M. Y. 2019. Learning to solve NP-complete problems: A graph neural network for decision TSP. In Proceedings of the AAAI Conference on Artificial Intelligence, 4731–4738.

- Sato, Yamada, and Kashima (2019) Sato, R.; Yamada, M.; and Kashima, H. 2019. Approximation ratios of graph neural networks for combinatorial problems. Advances in Neural Information Processing Systems, 32: 4081–4090.

- Selsam et al. (2018) Selsam, D.; Lamm, M.; Benedikt, B.; Liang, P.; de Moura, L.; Dill, D. L.; et al. 2018. Learning a SAT solver from single-bit supervision. In Proceedings of the International Conference on Learning Representations (ICLR).

- Vinyals, Fortunato, and Jaitly (2015) Vinyals, O.; Fortunato, M.; and Jaitly, N. 2015. Pointer networks. Advances In Neural Information Processing Systems, 28: 2692–2700.

- Wang et al. (2005) Wang, Y.; Hong, X.; Jing, T.; Yang, Y.; Hu, X.; and Yan, G. 2005. The polygonal contraction heuristic for rectilinear Steiner tree construction. In Proceedings of the Asia and South Pacific Design Automation Conference (ASPDAC), 1–6.

- Wong and Chu (2008) Wong, Y.-C.; and Chu, C. C. N. 2008. A scalable and accurate rectilinear Steiner minimal tree algorithm. In Proceedings of the IEEE International Symposium on VLSI Design, Automation and Test (VLSI-DAT), 29–34.

- Yehudai et al. (2020) Yehudai, G.; Fetaya, E.; Meirom, E. A.; Chechik, G.; and Maron, H. 2020. From local structures to size generalization in graph neural networks. In Proceedings of the International Conference on Machine Learning.

Appendix A Proof of Theorem 2.1

Our proof of Theorem 2.1 follows a similar argument as the one in (Arora 1998) for geometric TSP. We first state the following lemma, which we use later to argue that any Steiner tree can be converted to an -light Steiner tree with bounded cost increase.

Lemma A.1 (Patching Lemma).

Let be any line segment of length and be a Steiner tree. The segment could be crossed by an arbitrary number of times. We can modify to a new Steiner tree that crosses the segment at most once while increasing the cost of the tree by at most .

Proof.

Without loss of generality, assume the segment in Lemma A.1 is vertical. Then, the present lemma is shown by removing crossings one by one in a top-down manner, as follows. Let be the set of intersection points between and the line segment , sorted by decreasing -coordinate. Consider ; imagine “cutting” the tree at , which breaks into two connected components; one containing a “left copy” of and the other containing a “right copy” of . One of these two components, say the one connecting , is disconnected to the components containing : we thus simply connect to the “left copy” by a vertical edge. The resulting tree is still a valid Steiner tree. We repeat this until we finish processing all crossing points other than the last one, . Overall, the total length of extra (vertical) edges we add is at most . In the end only one crossing point (i.e, ) remains. ∎

Lemma A.2.

Grid the bounding box by putting a sequence of vertical and horizontal lines at unit distance from one another. If is one of these lines and is a Steiner tree, denote the number of times that crosses as . Then we have that

| (2) |

Lemma A.2 follows immediately from the fact that an edge of with length can contribute at most to the left hand side.

Now let be a rectilinear Steiner minimum tree and suppose is picked randomly. We show how to convert to an -light Steiner tree without increasing cost too much, via the following tree-transformation procedure.

First, similar to (Arora 1998), given any grid line , we say has level in the shifted grid if it contains an edge of some level- square. Note that a level- line is touched by level- cells, which partition it into segments of length . In general, for each , line is also touched by level- cells. We refer to the portion of that lies in a level- cell as a level- segment. Our goal is to reduce the number of crossings in each level- segment to or less.

An overloaded segment of is one that the Steiner tree crosses more than times. For every segment at level that is overloaded, we apply the Patching Lemma and reduce the crossings to . Then we proceed to level and apply the Patching Lemma to all overloaded segments at this level. We continue this procedure until no segments are overloaded at level for all from down to . By construction, the resulting new Steiner tree can cross each quadtree cell boundary at most times.

Next, for each grid line , consider its highest-level , which is the smallest level that this line is at (intuitively, the smaller the level is, the higher it is up the quadtree). We then move all crossings of to their nearest portals along at this level. We denote the resulting Steiner tree by , which by construction is -light.

What remains is to bound the cost of w.r.t. the optimal cost . First, we bound the cost increase due to crossing-reduction step of the tree-transformation procedure. Without loss of generality, let us fix a vertical grid line . We refer to the boundary segment of any level- quadtree cell as a level- segment. The aforementioned crossing-reduction procedure processes from level to till no segment of any level from is overloaded. Let be a random variable denoting the number of overloaded level- segments encountered in this procedure. Note is determined by vertical shift (chosen randomly from ), which determines the location of crossings on . We claim that for every , we have with probability 1

| (3) |

This is because the optimal input Steiner tree crossed grid line only times, and each application of the Patching Lemma counted on the left hand side of Eqn. (3) replaces at least number of crossings by 1, thus eliminating at least crossings each time.

Since a level- segment has length , the total extra cost incurred during the transformation procedure (as we described above) is at most by the Patching Lemma.

Now we want to bound the total cost-increase produced by all segments across all levels that grid line can generate. The actual cost increase at during the crossing-reduction process depends on the level of , which is determined by horizontal shift (which was chosen randomly from during our random shift step). If the highest-level of is , then the cost increase can be upper-bounded by . As is chosen randomly in , the probability that is the highest-level of line is at most . Let denote the total cost-increase due to changes to when the horizontal shift is . We can now bound the expectation of ; in particular, for any vertical shift :

| (4) | ||||

The expectation of cost-increase for all vertical lines is therefore . A symmetric argument can be used to bound the expected cost-increase for all horizontal lines by . It then follows from Lemma 2 that the expected cost-increase incurred by all grid lines is bounded above by

| (5) |

Finally, we also need to bound the cost increase when moving crossings to their nearest portals. If a grid line has highest-level , the distance between each of the number of crossings and its nearest portal is at most . Instead of actually moving a crossing to a portal, we break each edge at the crossing and add two line segments (on each side of ) connecting the portal to the origional crossing. Thus the expected increase for moving every crossing in to nearest portals can be bounded by .

Using Lemma 2, the total expected cost increase for all crossings in all lines is bounded from above by

| (6) |

Denote by the Steiner tree obtained from by -shift, followed by the aforementioned transformation procedure (consisting of crossing-reduction step, and the moving of crossings to portals). Putting everything together, the expected cost can be bounded by: Using Markov’s inequality, with probability at least , the cost of the best light Steiner tree for the shifted dissection is at most OPT. This finishes the proof of Theorem 2.1.

Appendix B Additional experimental results

In this section we give tables containing the data displayed in the figures of the main text and the corresponding runtimes for these experiments. All the runtimes are totals over 100 pointsets in seconds, and all other values are averages over 100 pointsets.

| Number of points | 50 | 100 | 200 | 500 | 800 | 1000 | 2000 | 5000 |

|---|---|---|---|---|---|---|---|---|

| NN-Steiner | 9.23 | 19.82 | 36.68 | 95.97 | 147.53 | 186.65 | 383.45 | 1021.00 |

| REST (T=8) | 59.69 | 118.13 | 230.56 | 586.91 | 927.63 | 1174.98 | 2316.37 | 5707.48 |

| FLUTE (A=18) | 3.68 | 6.30 | 13.85 | 42.23 | 74.65 | 97.25 | 218.35 | 613.77 |

| Geosteiner | 0.83 | 2.74 | 10.27 | 86.32 | 534.99 | 5998.50 | – | – |

| Number of points | 50 | 100 | 200 | 500 | 800 | 1000 | 2000 | 5000 |

|---|---|---|---|---|---|---|---|---|

| NN tree construction + refinement (NN-Steiner) | 9.23 | 19.82 | 36.68 | 95.97 | 147.53 | 186.65 | 383.45 | 1021.00 |

| MST of + refinement | 2.96 | 6.30 | 11.88 | 30.77 | 48.58 | 62.04 | 125.55 | 332.46 |

| NN tree construction + cell refinement | 8.90 | 19.38 | 35.83 | 91.70 | 139.82 | 178.42 | 367.94 | 989.00 |

| NN tree construction + subtree refinement | 8.80 | 19.06 | 35.30 | 91.79 | 137.49 | 175.91 | 362.50 | 958.87 |

| Number of points | 50 | 100 | 200 | 500 | 800 | 1000 | 2000 | 5000 |

|---|---|---|---|---|---|---|---|---|

| Uniform | 2.10 | 1.38 | 0.74 | -0.67 | -1.11 | -1.43 | -2.44 | -2.99 |

| Mixture | 2.54 | 1.69 | 0.72 | -0.92 | -1.45 | -1.85 | -2.66 | – |

| Non-isotropic normal | 1.89 | 1.32 | 0.67 | -0.70 | -1.23 | -1.57 | -2.36 | -3.07 |

| Number of points | 50 | 100 | 200 | 500 | 800 | 1000 | 2000 | 5000 |

|---|---|---|---|---|---|---|---|---|

| Uniform | 9.23 | 19.82 | 36.68 | 95.97 | 147.53 | 186.65 | 383.45 | 1021.00 |

| Mixture | 10.45 | 20.95 | 40.64 | 97.96 | 153.89 | 191.43 | 379.80 | – |

| Non-isotropic normal | 9.45 | 19.74 | 38.95 | 95.31 | 151.64 | 187.16 | 378.56 | 993.56 |

| Number of points | 50 | 100 | 200 | 500 | 800 | 1000 | 2000 | 5000 |

|---|---|---|---|---|---|---|---|---|

| 2.10 | 1.38 | 0.74 | -0.67 | -1.11 | -1.43 | -2.44 | -2.99 | |

| 1.96 | 1.52 | 0.68 | -0.62 | -1.13 | -1.35 | -2.30 | -2.81 | |

| 2.36 | 2.05 | 0.99 | -0.46 | -1.09 | -1.31 | -2.26 | -2.76 |

| Number of points | 50 | 100 | 200 | 500 | 800 | 1000 | 2000 | 5000 |

|---|---|---|---|---|---|---|---|---|

| 9.23 | 19.82 | 36.68 | 95.97 | 147.53 | 186.65 | 383.45 | 1021.00 | |

| 7.15 | 14.90 | 27.61 | 72.07 | 110.55 | 139.72 | 285.94 | 763.76 | |

| 5.77 | 12.38 | 22.72 | 58.81 | 90.34 | 115.50 | 235.79 | 630.96 |

| Number of points | 50 | 100 | 200 | 500 | 800 | 1000 | 2000 | 5000 |

|---|---|---|---|---|---|---|---|---|

| 2.78 | 2.86 | 2.03 | 0.65 | -0.05 | -0.29 | -1.11 | -1.63 | |

| 2.10 | 1.38 | 0.74 | -0.67 | -1.11 | -1.43 | -2.44 | -2.99 | |

| 1.65 | 1.64 | 0.37 | -0.62 | -1.58 | -1.92 | -2.29 | -3.18 |

| Number of points | 50 | 100 | 200 | 500 | 800 | 1000 | 2000 | 5000 |

|---|---|---|---|---|---|---|---|---|

| 35.17 | 70.97 | 136.38 | 337.83 | 545.86 | 674.61 | 1386.92 | 3569.93 | |

| 9.23 | 19.82 | 36.68 | 95.97 | 147.53 | 186.65 | 383.45 | 1021.00 | |

| 5.89 | 11.52 | 24.33 | 62.90 | 97.79 | 114.05 | 251.53 | 577.57 |

Appendix C Threshold selection

We conducted experiments evaluating performance of NN-Steiner across a range of thresholds and found that a threshold of .95 yields superior results. The threshold results are shown in Fig. 8 and Table 11.

| Threshold \ Number of points | 50 | 100 | 200 | 500 | 800 | 1000 | 2000 | 5000 |

|---|---|---|---|---|---|---|---|---|

| 0.1 | 5.48 | 2.46 | 1.78 | -0.05 | -0.15 | -0.50 | -1.75 | -2.26 |

| 0.2 | 5.04 | 2.01 | 1.46 | -0.33 | -0.45 | -0.74 | -1.94 | -2.45 |

| 0.3 | 3.86 | 1.70 | 1.18 | -0.42 | -0.63 | -0.90 | -2.06 | -2.57 |

| 0.4 | 2.99 | 1.44 | 1.16 | -0.48 | -0.70 | -0.99 | -2.14 | -2.65 |

| 0.5 | 2.58 | 1.37 | 1.04 | -0.51 | -0.77 | -1.06 | -2.21 | -2.71 |

| 0.6 | 2.37 | 1.34 | 0.95 | -0.54 | -0.85 | -1.12 | -2.26 | -2.78 |

| 0.7 | 2.24 | 1.36 | 0.91 | -0.59 | -0.92 | -1.20 | -2.32 | -2.85 |

| 0.8 | 1.98 | 1.39 | 0.84 | -0.63 | -0.97 | -1.30 | -2.37 | -2.91 |

| 0.9 | 2.01 | 1.43 | 0.82 | -0.67 | -1.07 | -1.39 | -2.42 | -2.96 |

| 0.95 | 2.10 | 1.38 | 0.74 | -0.67 | -1.11 | -1.43 | -2.44 | -2.99 |

| 0.975 | 2.12 | 1.49 | 0.79 | -0.64 | -1.15 | -1.45 | -2.44 | -2.99 |

| 1 | 2.53 | 2.44 | 1.36 | -0.02 | -0.78 | -1.08 | -1.78 | -2.49 |