Newton-PnP:

Real-time Visual Navigation for Autonomous Toy-Drones

Abstract

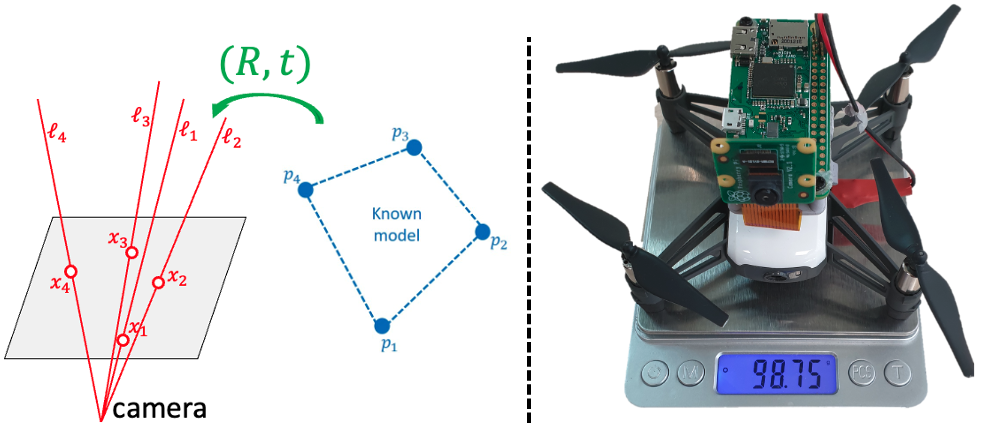

The Perspective-n-Point problem aims to estimate the relative pose between a calibrated monocular camera and a known 3D model, by aligning pairs of 2D captured image points to their corresponding 3D points in the model. We suggest an algorithm that runs on weak IoT devices in real-time but still provides provable theoretical guarantees for both running time and correctness. Existing solvers provide only one of these requirements. Our main motivation was to turn the popular DJI’s Tello Drone (<90gr, <$100) into an autonomous drone that navigates in an indoor environment with no external human/laptop/sensor, by simply attaching a Raspberry PI Zero (<9gr, <$25) to it. This tiny micro-processor takes as input a real-time video from a tiny RGB camera, and runs our PnP solver on-board. Extensive experimental results, open source code, and a demonstration video are included.

I Introduction

The term “Perspective-n-Point problem”, or PnP in short, was first introduced by Fischer and Bolles in [18]. The PnP problem aims to recover the position and orientation (6 degrees of freedom) of a calibrated monocular camera, by aligning its captured 2D image to a given 3D model (map) that describes the real-world. Solving this problem on each image in a real-time video from a moving camera mounted on a robot, such as an autonomous car [16], a humanoid [7], or a vacuum cleaner [42], provides us the location and orientation of the robot in the world. Using pre-recorded models of the streets, Google’s Liveview displays addresses and pointing arrows on top of the smartphone’s captured video stream in real-time, to enable navigation using augmented reality based on a Visual Positioning System.

The PnP is a fundamental problem in Computer Vision [5, 19] with many other applications in Robotics [12, 33, 39, 16] and Augmented Reality [13, 11, 38].

I-A Main challenge: Lightweight Optimal Solver

While there are dozens of papers that aims to solve the PnP problem, there is still a serious remaining challenge in the context of real-time robotics, especially for weak and lightweight IoT devices as in robotics and drone applications.

Existing provable solvers for the PnP problem typically use external packages such as GloptiPoly [23], SOSTools [36, 41] or SeDuMi [44, 41]. Other solutions, either heuristics, approximations, or methods which solve alternative cost functions are discussed in Section I-D.

The main disadvantages of the above optimal solvers in the context of our robotic application are: (i) Memory. The very generic libraries above require both RAM and external memory, both of which are very limited, especially when it comes to IoT micro-computers such as the Raspberry PI zero (RPI0) micro-computer which we use in our case, that has 1GHz single-core CPU 512MB RAM; see Section IV-B. (ii) Running time. Those libraries are built to tackle a very wide range of optimization problems; they are not tailored for our problem. Thus, their running time is far from being optimal. (iii) deployment dependencies. Compilation and integration is often hard or even impossible, especially for non-Windows operating systems or non-Intel/AMD CPUs, as in our RPI case. Recent results such as [41] and GPOSolver [22] were able to remove dependencies on commercial tools such as MATLAB. However they still depend on optimization libraries such as SeDuMi [44] Mosek [31].

I-B Our Contributions

(i) Theoretical guarantees. We propose the first algorithm that, in theory, returns optimal provable results for input data that satisfies some weak assumption which was satisfied in all our experiments. Our algorithm runs in time where is the accuracy and is the model precision; see Assumption 1, Theorem 2, and Algorithm 1.

(ii) Self-contained algorithm. Our proposed novel algorithm is self-contained and requires only few lines of code to implement. It does not depend on commercial tools or optimization libraries. It only requires standard and widely used libraries such as Eigen [20].

(iii) Experimental results. We provide extensive experimental results on our own collected real world datasets with ground truths. We empirically demonstrate that our algorithm consistently provides more precise results as compared to the competing methods; see Section IV-A.

(iv) Real-time system. As an application, we build our very own light-weight, low-cost, autonomous, and on-board toy-drone navigation system, which is based on a RPI0 with our novel PnP solver; see Section IV-B and the video.

(v) Open code. We provide full open source code for our algorithm, which can run in real-time on IoT devices [1].

I-C Novelty

To solve the above challenges we designed a simple algorithm, called Newton-PnP, or NPnP in short.

(i) Newton’s PnP. To provide a self contained algorithm with competitive performance, we follow [41] and formalize the PnP as an instance of a semidefinite programming (SDP) problem in (5), and utilize the simple Newton’s method for solving this objective, without external sophisticated solvers.

However, the classic Newton’s method alone does not support non-equality constraints as the ones that arise in our problem. Hence, we also combine the barrier method [8], as explained in Section III-B. The only external library required is Eigen for basic linear algebra that is common in robotics and real-time applications. In particular, it supports the LAPACK interface that is provided by CPU manufacturers for direct hardware implementations, simply by downloading their packages. In particular, our implementation was easily compiled on a RPI0 and a standard laptop.

(ii) Focus on the dual problem. The first issue when using the barrier method for tackling the SDP instance above, which is called the primal problem, is that the number of free variables is , as also explained in [41]. This would be much too slow for our real-time drone application that runs on an RPI0. To this end, we observe that the corresponding dual optimization problem requires only unknown variables; see Section III-A. An important observation is that the duality gap is zero; see proof of Theorem 2. That is, an optimal solution to the dual problem corresponds to the optimal solution of the primal version of the problem, with no approximation gap.

(iii) Suggesting a provably good initial solution. The barrier method uses Newton’s method with equality constraints, which is an iterative algorithm that requires an initial solution. This solution must: (i) be feasible, i.e., be in a set that satisfies the constraints of our dual problem, and (ii) the value of the objective function at this initial point must be close to the optimal objective value, since the running time depends linearly on this distance; see Theorem 2.

To this end, we first observe that many of the parameters in our optimization problem remain fixed between different PnP instances. We leverage this observation, along with careful tuning of the barrier method’s parameter, to compute a good initial solution for our problem. This solution is computed in advance and is independent of the input; see Section III-C.

I-D Related Work

The PnP problem has been intensely studied in literature, where the proposed methods are broadly categorized into either iterative or non-iterative methods.

The iterative methods usually aim to iteratively minimize complex cost functions, which makes them potentially slow and non-optimal due to local minima of the cost function. However, such method achieve high accuracy when converging properly, and can handle arbitrary number of input pairs. Examples include [14, 34, 29, 40].

In comparison, the non-iterative methods tend to be unstable in the presence of noise [27], and in many cases gain speed by alternating or approximating the target cost function. Examples include [18, 15, 37, 17, 6], whose running times are polynomial in the number of input pairs. To enhance the stability of such methods, one can leverage additional redundant points, as in, for example, the well known Direct Linear Transformation (DLT) algorithm [2].

Among the most notable non-iterative results is the popular work of Lepetit et al. [27], termed EPnP, which reduced the problem into recovering a set of virtual control points, where the resulting quadratic polynomials were solved with simple linearization techniques in time. Follow-up works have tried to enhance the stability of EPnP by replacing the linearization with polynomial solvers. Among those is the work of Li et al. [28], termed RPnP, which explicitly retrieves the roots of a seventh degree polynomial. However, the proposed improvements have still been shown to be unstable [47]. To resolve these drawbacks, Hesch and Roumeliotis [24] developed the direct least squares (DLS) method requiring time. Unfortunately, they parameterized rotation by using the Cayley representation, which is degenerate in many cases. The accuracy deteriorates seriously when the camera pose approaches these singularities.

Branch and Bound. In a different line of works, branch and bound (BnB) methods have been proposed. In [21], Hartley et al. proposed a BnB algorithm which solves an approximated version of the problem, taking advantage of the rotation matrix constraints. Olsson et al. [35] proposed a similar algorithm which leverages quaternion representation for the rotation matrix. Unfortunately, such BnB algorithms are unpractical due to their tremendous computational cost.

Globally optimal and efficient methods. The limitations mentioned above led to a search for algorithms that are both provably globally optimal and efficient. For example, [25] utilizes Grobner basis, while the great works [41, 47] reduce the problem to some instance of semidefinite programming (SDP). Common SDP solvers use primal-dual interior-point methods [44] [4]. The most commonly used solution approaches are Mehrotra’s predictor-corrector based algorithms [30][43]. Mehrotra’s approach tackles the Karush-Kuhn-Tucker conditions of the primal and dual optimization problem to reach a global optimum. As for the PnP case, these equations produce variables in the Mehrotra’s approach, which is far more than only in our modified approach; see Section III.

Our algorithm draws inspiration from those theoretically globally optimal methods, but aims to be faster in practice.

II Preliminaries

In this section we first give some notations, and then we formally define the PnP problem discussed in the previous sections, and reduce the problem to solving a set of polynomials of constant degree.

Notations. We define the set to be the set of all paired rotation matrices and translation vectors. For , and , we denote by and the slice of and row slicing of respectively, and by the row stacking of into a square matrix, when possible.

II-A Problem Formulation

Let be a set of 3D (model) points and let be a corresponding set of 2D (observed) points in a calibrated camera frame. Since the intrinsic camera parameters are given, each pixel corresponds to a 3D line passing through the camera center and the pixel in the frame, whose direction vector is ; see Fig. 1. The pair form the input for the PnP problem, where consists of all the 3D lines that correspond to points in .

The goal is to recover an alignment that minimizes the sum of squared Euclidean distances between every after applying the transformation, and its corresponding line . Formally, the PnP objective reads:

| (1) |

over every .

As detailed in [41], we can recover the optimal vector as a linear function of the optimal rotation matrix by setting the derivative of (1) with respect to to zero. Plugging back, (1) can be rewritten as

| (2) |

for some known matrix of coefficients , and where is the row stacking of .

Using quaternions. Due to the difficulty of handling the constraint above, a quaternion based representation for is used instead. Let , and define as

Now (2) can be rewritten using , where the constraint is implicitly enforced using the quaternion representation and the constraint . The PnP problem can thus be reduced into the following system of polynomials:

| (3) |

II-B Polynomial System Solvers

There are multiple different approaches for solving polynomial systems as in (3), which include: (i) classical solutions which utilize combinatorics, (ii) Grobner basis solvers [10], and (iii) Semidifinite programming (SDP) [46] using the Sum Of Squares (SOS) decomposition [36]. Assuming there are equations of constant rank, each containing unknowns, all the above methods require theoretically running time. However, the classical solutions tend to be very impractical due to the large constants hidden in the notation. Similarly, the Grobner basis methods are not only impractical in most cases, but are also numerically unstable. To this end, we adopt the SOS approach.

SOS hierarchy. The SOS approach is governed by some relaxation level , which controls the trade-off between accuracy and running time; larger relaxation level corresponds to more accurate but slower results. These levels are commonly referred to as The Lasserre hierarchy [26]. The computational time increases exponentially fast when raising the relaxation level. The difficulty lies in balancing the accuracy and the running time, as to provide a theoretically fast algorithm which is also always accurate in practice.

PnP via SOS relaxation. The above SOS relaxation approach was also used in [41], where the first Lasserre hierarchy level was chosen in order to solve (3). Using this first level already leads to relatively high computational times, but produces accurate results. In our work, we utilize the same hierarchy level, for which we empirically obtained accurate results in all our experiments. However, as explained in Section I and in the following sections, we provide an alternative and much faster implementation, which can run faster by up to x than the alternative algorithms which utilize the same SOS approach as ours, e.g., [41]. This is by exploiting the barrier method, a smart initial guess, along a range of enhancements and techniques. This enables us to develop an efficient algorithm which can run in real-time on a microcomputer; see more details in Section IV.

III Our Approach – PnP via SDP Dual

In this section we present our main provable algorithm for the PnP problem. We aim to cast the PnP problem as an instance of a semidefinite programming (SDP) problem, and then solve this instance via the dual formulation only. Considering the dual formulation reduces dramatically the number of variables, as well as the practical running time.

The quaternion-based formulation in (3) is relaxed to an SDP optimization problem, as follows.

| (4) |

where is a polynomial of degree with unknown coefficients.

SOS relaxation. Problem (3) is equivalent to Problem (4) if we replace “.. is sum of squares” (SOS) by “…is a positive polynomial”. Unfortunately, while an SOS polynomial is always a positive polynomial, the opposite is not true. Indeed, there exists a very specific and synthetic example of a PnP input that SOS fails to solve, at least in its first degree [3].

Nevertheless, recent studies showed that, in the case of computer vision applications, the SOS relaxation usually yields the optimal solution in practice. See possible explanations in [9] and many references therein. However, SOS method either provides a proof, called certificate, that the returned solution is -optimal, or states that it failed.

Indeed, in our experimental results we never encountered such a failure of the SOS relaxation, which explains why our algorithm performs so well in Fig. 2. The required condition on the input is a known open problem [3] so in Theorem 2 we simply assume that the relaxation holds as follows.

Assumption 1 (SOS is optimal)

Further relaxations. For , let be the vector of monomials of up to degree . To enforce that a polynomial is SOS, one must find a positive semidifinite matrix such that . Hence, (4) reduces to minimizing under the constraints that and .

By simply comparing coefficients between both sides of the above constraint, similar to the notation in the book [8] by Boyd et al., we obtain the following SDP primal problem

| (5) |

where , is a constant matrix that represents the coefficients comparison, is a vector that depends only on the matrix , and is a vector of unknowns such that: represents , contains the unknown coefficients of the polynomial , and contains the unknowns of the matrix . The above equation contains variables and equations (as the number of monomials of the four quaternions with degree at most ).

Input dependence. Observe that while the vector depends on the PnP problem’s input, the matrix and vector are constant. To this end, the PnP instance at hand is encapsulated in the vector only.

III-A SDP Dual Formulation

The primal problem (5) has a dual formulation of the form

| (6) |

For more details on the relation between the primal and dual problems see Section in [8].

Existing solvers. Software such as SeDuMi [44] and Gloptipoly [23] aim to solve such problems via the Centering-Predictor-Corrector method [43], where both the primal and the dual problems, (5) and (6) respectively, are solved simultaneously.

Why the dual formulation? The dual formulation in (6) has only variables, as compared to the primal problem (5) which has . Furthermore, the solution of the primal problem only provides an SOS decomposition for the SDP objective along with the minimal value of the objective function. However, we are interested in recovering the quaternion vector from (3) which minimizes the objective. This quaternion can be extracted from the vector of the dual problem, as detailed in [26]. To this end, we aim to solve the dual problem only, via the barrier method [8]. As of correctness, by Section 5.9.1 of [8], strong duality exists in our problem. In other words, the optimal solutions for the primal and dual problems are the same.

III-B Barrier Method for Solving the Dual SDP Problem

The barrier method replaces a constraint in an optimization problem with a self-concordant convex barrier to the objective function; see more details in [8]. A suggested barrier for a PSD constraint is the log-determinant function. The barrier method incorporates a weight that is multiplied by the original objective function, which increases over time, thus the summation of the two components slowly converges to the global minimum or maximum) of the original objective:

| (7) |

Observe that and are constants, as detailed in (5) above, and and are parameters of this function as they are not constant; will change during the optimization process, and depends on the input.

We aim to solve the above maximization problem using Newton steps with equality constraints, as explained in section 10.2 of [8]. Newton steps can be performed relatively fast. The most time consuming operation is formulating the hessian, whose dimensions are . Each Newton step with equality constraints requires solving a linear equation system, and then performing a line search over that direction; see Algorithm 1. The line search is an exact-line-search; see Section 9.2 of [8].

The convergence analysis given in Section 10.2 of [8] conclude that applying Newton’s method with equality constraints is exactly the same as applying Newton’s method to the reduced problem obtained by eliminating the equality constraints; the convergence guarantees and bounds of Newton’s method for unconstrained problems are also relevant for the linearly constrained instances. Thus, the constraint in (6) does not affect our algorithm’s theoretical convergence and guarantees in what follows.

III-C Initial Guess

As mentioned in Section I, the bound over the number of required Newton steps depends linearly on the distance between the initial objective function value and its optimal value , for the current weight .

To this end, we observe that the matrix in (5)–(7) depends on the PnP problem, but it is independent of a specific PnP instance (the input sets of paired points and lines), which is encoded in the vector . Also, if we define , the vector

| (8) |

is also independent of . Hence, above can be computed once (offline) for all future instances. Indeed, it is encoded in our open code [1]; see also Algorithm 1.

III-D Algorithm

In this section we provide our main algorithm for solving the PnP problem via the SDP Dual formulation and the barrier method, as explained in the previous section; see Algorithm 1. Our main claim is given in Theorem 2.

Overview of Algorithm 1. Algorithm 1 takes as input a paired set of 3D points and lines as described in Section II-A, as well as the desired accuracy . The algorithm aims to output an alignment that satisfies

| (9) |

where the minimum is over every pair in . This is by maximizing the objective function in (6).

We first compute the matrix and vectors and from Eq. (5)–(7). Then, at Line 1, we recover an initial guess for Eq. (7), as described in Section III-C. After that, we tune some parameters which are necessary for bounding the running time of the algorithm; see Theorem 2.

In the loop at Line 1 we apply the barrier method, where at each iteration we: (i) utilize Newton’s method (Lines 1–1) to minimize from (7), (ii) multiply by , and (iii) repeat. Each Newton step requires computing the Hessian and the gradient of this function in order to recover the optimization direction . The optimal point along the direction is recovered via exact line search at Line 1. The condition for the number of Newton iterations is derived in Section of [8]. Lastly, we extract the desired output from the recovered .

The following theorem gives our main claim of correctness and running time for Algorithm 1. While the running time depends on the model’s finite-precision , in practice the number of iterations is almost always in .

Theorem 2

Let and be a pair of ordered sets of points and corresponding lines, respectively, both in . Let , and let be the output of a call to . Then, the pair can be computed in time such that, under Assumption 1,

Proof:

Correctness. Fortunately, strong duality holds for our dual problem and the dual’s dual (i.e., the primal), as the conditions for strong duality in Section of [8] hold. Hence, solving (6) is equivalent to solving (5).

Let be the vector in hand at Line 1 of Algorithm 1. By Assumption 1, it suffices to prove that maximizes the dual problem (6) up to an additive error of .

It is known that the Log-Determinant function is the barrier of the semidefinite cone; see e.g., [8]. By this and the barrier method [8], an -approximation to (7) for minimizes (6) up to . Finally, the constraint in (7) can be removed as explained in Section III-B. The resulting function in (7) is the sum of a pair of functions, . The function is a linear function and thus a concave function, and it is easy to prove that is also a concave function. Hence, is a concave function that can be maximized via Newton’s method for every value .

Bounding the running time. The function is linear and hence self-concordant, and is self-concordant as proven in Section of [8]. Therefore, our objective function is also self-concordant (i,e., satisfies some condition on the ratio between its second and third derivatives). This is crucial for what follows.

We need to bound the number of Newton’s iterations for a specific value of , i.e, the number of iterations for the internal while loop in Algorithm 1. We do this separately for the initial value of , and for the other values of .

Initial value of . Let be the set of all vectors that satisfy the constraints in (7), be the initial value of , where is the constant such that is the number of bits used for storing variables in our finite-precision model. We can now compute , using the initial solution above which (i) is in and thus feasible, and (ii) satisfies:

| (10) | |||

| (11) | |||

| (12) |

where (10) and (11) hold by the optimality of in (8) and , respectively, and (12) hold since and the maximum absolute coordinate value is at most .

Other values of . The running time for Newton’s method within the barrier method for non-initial values of , over a self-concordant objective function is bounded by since the number of inequalities is and our weight multiplication constant is in our case. See formal proof in Section of [8]. The number of barrier method iterations is upper bounded by .

Therefore, the total Newton iterations is bounded by , where each iteration takes time. ∎

IV Experimental Results

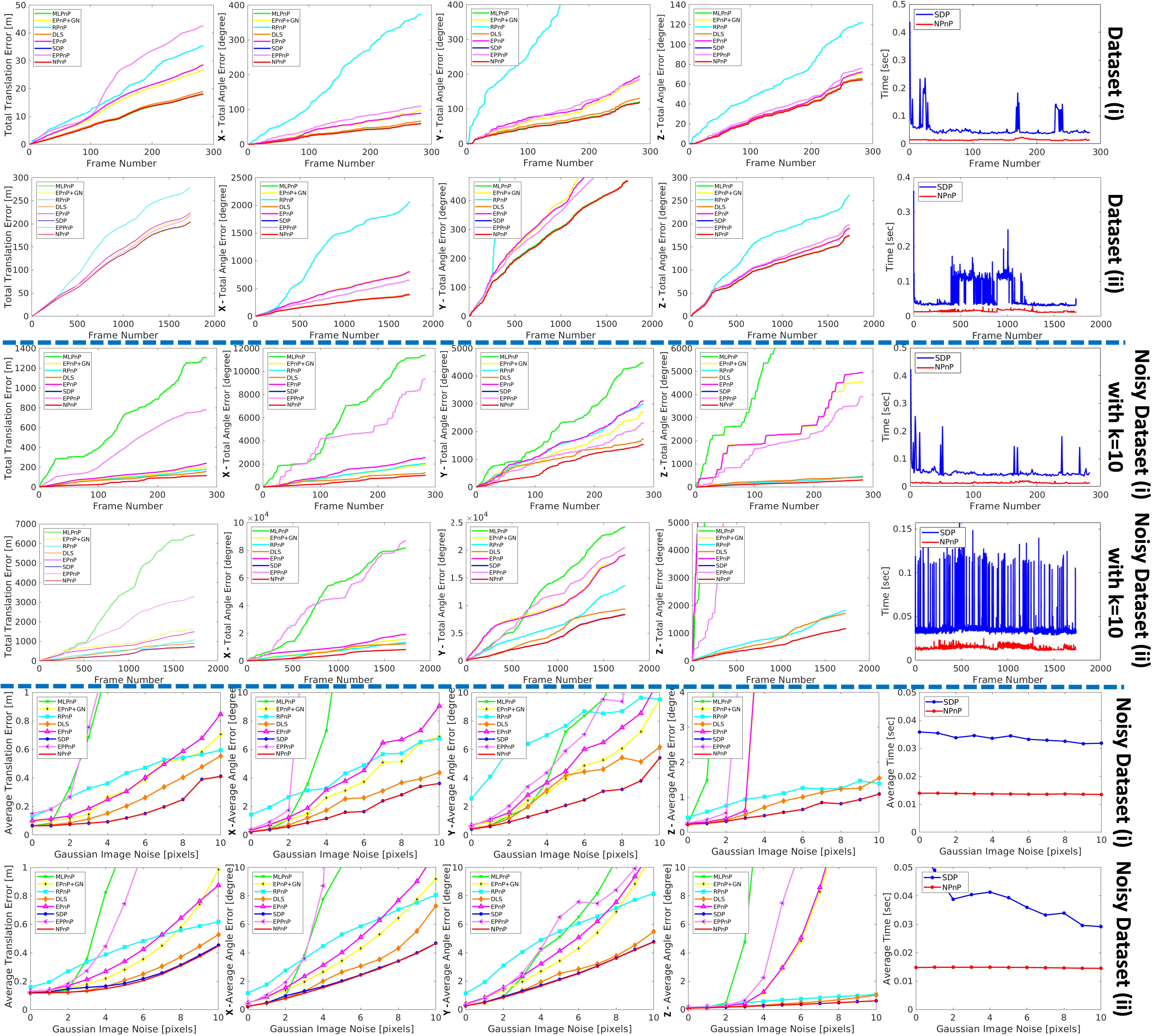

IV-A Pose Estimation using Real-World Data

We implemented our PnP Algorithm from Section III in C++, and in this section we evaluate its empirical results on real-world datasets for pose estimation and autonomous drone navigation. Open source code can be found in [1]. The hardware used was a standard HP ZBook laptop with an Intel Core i7-10750H CPU @2.60GHzx12 and 32GB of RAM. The results demonstrate that the proposed PnP Algorithm consistently achieves more accurate and stable results as compared to the state of the art and widely common PnP implementations.

Competing methods

Throughout the experiments, we consider the following PnP implementations:

-

(i)

ourPnP: A direct implementation of the proposed algorithm from Section III.

-

(ii)

SDP: An SDP relaxation of the PnP problem, which also utilizes SOS [41].

-

(iii)

EPnP: Expresses the points as a weighted sum of four virtual control points [27].

-

(iv)

EPnP+GN: Similar to EPnP with an additional Gauss-Newton refinement.

-

(v)

DLS: Computes all pose solutions, as a minima of a non-linear least-squares cost function [24].

-

(vi)

RPnP: An official implementation of [28].

-

(vii)

DLT: An official implementation of [2].

-

(viii)

LHM: An official implementation of [29].

Evaluation metric

Given a ground truth and some recovered , we utilize the following translation and angle errors: and , , , where and are the three Euler angles obtained from decomposing and respectively.

Real-World Datasets

For our experiments, we collected our own real-world datasets, as follows.

Dataset (i): Real-world data from a webcam To collect this data, an OptiTrack motion capture system consisting of cameras was deployed in a room along with a set of distinct ArUco markers. This setup is illustrated in the supplementary video. The 3D positions of the ArUco markers in the OptiTrack’s coordinates system were extracted using the OptiTrack system itself. A calibrated Logitech C920 RGB camera was placed on a moving rig, and a set of IR markers were placed on top of this camera so it can be easily tracked. The rig was moved around in a continuous movement to form a rectangle with side lengths of roughly x meters. For every frame captured from the Logitech camera using FPS we: (i) detect the ArUco marker positions which are visible in the image, and (ii) use the OptiTrack system to estimate the ground truth pose of the camera (via the IR markers).

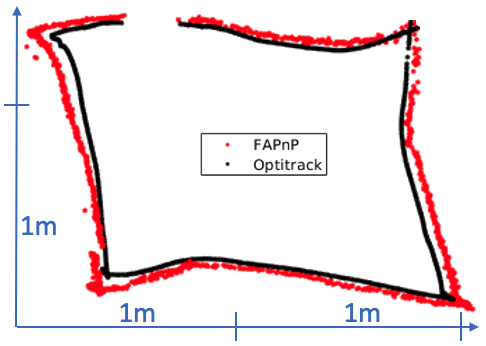

Dataset (ii): Real-world data from a toy drone This dataset was collected similarly to Dataset (i) above, but the Logitech camera and its moving rig were replaced with a toy micro drone, called DJI Tello, which is equipped with a small RGB camera, as in Fig. 1. The drone was manually controlled and flown around the room. The 3D positions of the ArUco markers were the same as in Dataset (i), and the detection of the 2D pixels in each frame was conducted in a similar manner as well. This dataset aims to reflect a real world autonomous drone navigation experiment. The goal is to recover the pose of the drone during its flight, by estimating the camera’s pose via an external laptop. This is crucial for autonomous drone navigation tasks; see Section IV-B for such usage of our algorithm. The drone’s trajectory as captured using the ground truth motion capture system is presented in Fig. 3. The drone’s position, as computed using our NPnP is also presented.

Noisy Datasets (i) and (ii). To test the resiliency of our algorithm in the presence of noise, we conducted experiments where synthetic noise was added to the datasets above. More formally, to create noisy datasets, we added noise drawn from a normal distribution with zero mean and an std of to the set of 2D input points in each dataset, prior to their conversion to a set of 3D lines ; see Section II. Each such test was repeated times and the results were averaged.

The experiment

The goal in this experiment was to estimate the camera’s alignment for every frame of the experiment, using the data collected in each of the datasets above (or their noisy version), via each of the PnP methods. The recovered pose was compared to the camera’s ground truth pose extracted using the OptiTrack. The results are presented in Fig. 2.

IV-B Autonomous Drone Navigation

To demonstrate a potential usages of our fast and accurate PnP solver, we placed a micro computer equipped with a small camera, namely, a Raspberry PI Zero chip [45] with its dedicated RGB camera, on top of the toy drone from the previous section. The goal was to perform autonomous navigation along a predefined route in an indoor environment, given a known 3D map of the space. Such a 3D map was constructed in a pre-processing step using a known SLAM system [32]. To our knowledge, this is the first such light-weight (<gr), autonomous, and on-board nano-drone navigation system. The system is also low-cost (<). The autonomous flight is presented in the supplementary video.

IV-C Overall Discussion.

Accuracy. As presented in Fig. 2, our NPnP consistently achieves results with an error smaller by x up to x, as compared to the completing methods. The only exception is the SDP, which, as expected, outputs the exact same results as NPnP. As the graphs demonstrate, the globally optimal algorithms achieve smaller errors compared to the non-provable alternatives.

Time comparison. Firstly, we observe that, in practice, the number of Newton iterations that were actually needed was always a small constant (), independent of . Secondly, as our main concern is the accuracy of the output results, our time comparison is focused only on the most accurate competing methods. As the graphs show, those methods are NPnP and SDP, which obtain roughly the same accuracy. As Fig. 2 shows, our naive implementation of NPnP is already faster by up to x as compared to SDP, and more consistent. We leave further code optimizations to our naive implementation for future work.

V Conclusions and Future Work

We presented an SOS-based PnP solver that, unlike previous works, has both (i) provable guarantees on its running time and approximation error, and (ii) efficient implementation for real-time systems on weak IoT devices. Experimental results show that our algorithm is consistently more accurate than existing solvers, and faster then the state-of-the-art SOS solvers. The main challange was to have a self-contained algorithm that does not use external heavy solvers. The companion video and open code show how to apply our NPnP algorithm for real-time indoor navigation by using DJI’s toy-drone and an on-board RPI0.

A main open problem is to remove Assumption 1. While our SOS solver always returned the optimal solution in practice as expected by [9], there is synthetic input where it should fail [3]. Since in practice the number of required Newton steps was roughly , we believe that the running time of our algorithm can be reduced to only .

References

- [1] Open source code for all the algorithms presented in this paper. will be published upon acceptance of the paper or reviewer’s request.

- [2] Y. I. Abdel-Aziz, H. Karara, and M. Hauck. Direct linear transformation from comparator coordinates into object space coordinates in close-range photogrammetry. Photo. Eng. & Remote Sensing, 81(2):103–107, 2015.

- [3] Y. Alfassi, D. Keren, and B. Reznick. The non-tightness of a convex relaxation to rotation recovery. Sensors, 21(21):7358, 2021.

- [4] E. D. Andersen and K. D. Andersen. The mosek interior point optimizer for linear programming. In High performance optimization, pages 197–232. 2000.

- [5] A. M. Andrew. Multiple view geometry in computer vision. Kybernetes, 2001.

- [6] A. Ansar and K. Daniilidis. Linear pose estimation from points or lines. IEEE TPAMI, 25(5):578–589, 2003.

- [7] T. Asfour, K. Welke, P. Azad, A. Ude, and R. Dillmann. The karlsruhe humanoid head. In Humanoids 2008-8th IEEE-RAS Int. Conf. Humanoid Robots, pages 447–453. IEEE, 2008.

- [8] S. Boyd, S. P. Boyd, and L. Vandenberghe. Convex optimization. Cambridge University Press, 2004.

- [9] L. Brynte, V. Larsson, J. P. Iglesias, C. Olsson, and F. Kahl. On the tightness of semidefinite relaxations for rotation estimation. Journal Math. Imaging and Vision, 64(1):57–67, 2022.

- [10] B. Buchberger and F. Winkler. Gröbner bases and applications, volume 17. Cambridge University Press, 1998.

- [11] S. M. Chacko and V. Kapila. An augmented reality interface for human-robot interaction in unconstrained environments. In IROS, pages 3222–3228. IEEE, 2019.

- [12] C. Choi and H. I. Christensen. Robust 3d visual tracking using particle filtering on the special euclidean group: A combined approach of keypoint and edge features. IJRR, 31(4):498–519, 2012.

- [13] F. Cutolo, P. D. Parchi, and V. Ferrari. Video see through ar head-mounted display for medical procedures. In 2014 IEEE ISMAR, pages 393–396. IEEE, 2014.

- [14] D. F. DeMenthon and L. S. Davis. Model-based object pose in 25 lines of code. IJCV, 15(1-2):123–141, 1995.

- [15] M. Dhome, M. Richetin, J.-T. Lapreste, and G. Rives. Determination of the attitude of 3d objects from a single perspective view. IEEE TPAMI, 11(12):1265–1278, 1989.

- [16] X. Du, M. H. Ang, and D. Rus. Car detection for autonomous vehicle: Lidar and vision fusion approach through deep learning framework. In IROS, pages 749–754. IEEE, 2017.

- [17] P. D. Fiore. Efficient linear solution of exterior orientation. IEEE TPAMI, 23(2):140–148, 2001.

- [18] M. A. Fischler and R. C. Bolles. Random sample consensus: a paradigm for model fitting with applications to image analysis and automated cartography. Comm. of the ACM, 24(6):381–395, 1981.

- [19] D. Forsyth and J. Ponce. Computer vision: A modern approach. Prentice hall, 2011.

- [20] G. Guennebaud, B. Jacob, et al. Eigen. URl: http://eigen. tuxfamily. org, 3, 2010.

- [21] R. I. Hartley and F. Kahl. Global optimization through searching rotation space and optimal estimation of the essential matrix. In ICCV, pages 1–8. IEEE, 2007.

- [22] J. Heller and T. Pajdla. GpoSolver. Optimization Methods and Software, 31(2):405–434, 2016.

- [23] D. Henrion, J.-B. Lasserre, and J. Löfberg. Gloptipoly 3: moments, optimization and semidefinite programming. Optimization Methods & Software, 24(4-5):761–779, 2009.

- [24] J. A. Hesch and S. I. Roumeliotis. A direct least-squares (dls) method for pnp. In ICCV, pages 383–390. IEEE, 2011.

- [25] L. Kneip, H. Li, and Y. Seo. Upnp: An optimal o (n) solution to the absolute pose problem with universal applicability. In European Conference on Computer Vision, pages 127–142. Springer, 2014.

- [26] J. B. Lasserre. Moments, positive polynomials and their applications, volume 1. World Scientific, 2009.

- [27] V. Lepetit, F. Moreno-Noguer, and P. Fua. Epnp: An accurate o (n) solution to the pnp problem. IJCV, 81(2):155, 2009.

- [28] S. Li, C. Xu, and M. Xie. A robust o (n) solution to the perspective-n-point problem. IEEE TPAMI, 34(7):1444–1450, 2012.

- [29] C. Lu, G. Hager, and E. Mjolsness. Fast and globally convergent pose estimation from video images. TPAMI, 22(6):610–622, 2000.

- [30] S. Mehrotra. On the implementation of a primal-dual interior point method. SIAM Journal on optimization, 2(4):575–601, 1992.

- [31] A. Mosek. The mosek optimization toolbox for matlab manual, 2015.

- [32] R. Mur-Artal and J. D. Tardós. Orb-slam2: An open-source slam system for monocular, stereo, and rgb-d cameras. IEEE transactions on robotics, 33(5):1255–1262, 2017.

- [33] S. Nasser, I. Jubran, and D. Feldman. Autonomous toy drone via coresets for pose estimation. Sensors, 20(11):3042, 2020.

- [34] D. Oberkampf, D. F. DeMenthon, and L. S. Davis. Iterative pose estimation using coplanar feature points. Computer Vision and Image Understanding, 63(3):495–511, 1996.

- [35] C. Olsson, F. Kahl, and M. Oskarsson. Optimal estimation of perspective camera pose. In ICPR’06, volume 2, pages 5–8. IEEE, 2006.

- [36] S. Prajna, A. Papachristodoulou, and P. A. Parrilo. Introducing sostools: A general purpose sum of squares programming solver. In Conf. on Decision and Control., volume 1, pages 741–746. IEEE, 2002.

- [37] L. Quan and Z. Lan. Linear n-point camera pose determination. IEEE TPAMI, 21(8):774–780, 1999.

- [38] C. P. Quintero, S. Li, M. K. Pan, W. P. Chan, H. M. Van der Loos, and E. Croft. Robot programming through augmented trajectories in augmented reality. In IROS, pages 1838–1844. IEEE, 2018.

- [39] R. Rabinovich, I. Jubran, A. Wetzler, and R. Kimmel. Cobe-coded beacons for localization, object tracking, and slam augmentation. In CASE, pages 1367–1374. IEEE, 2020.

- [40] G. Schweighofer and A. Pinz. Robust pose estimation from a planar target. IEEE TPAMI, 28(12):2024–2030, 2006.

- [41] G. Schweighofer and A. Pinz. Globally optimal O(n) solution to the pnp problem for general camera models. In BMVC, pages 1–10, 2008.

- [42] A. Smirnov, A. Kashevnik, and A. Ponomarev. Multi-level self-organization in cyber-physical-social systems: Smart home cleaning scenario. Procedia Cirp, 30:329–334, 2015.

- [43] J. F. Sturm. Primal-dual interior point approach to semidefinite programming. Master’s thesis, Rotterdam, Nederland, 1997.

- [44] J. F. Sturm. Using SeDuMi 1.02. Optimization methods and software, 11(1-4):625–653, 1999.

- [45] E. Upton and G. Halfacree. Raspberry Pi user guide. John Wiley & Sons, 2014.

- [46] L. Vandenberghe and S. Boyd. Semidefinite programming. SIAM review, 38(1):49–95, 1996.

- [47] Y. Zheng, Y. Kuang, S. Sugimoto, K. Astrom, and M. Okutomi. Revisiting the pnp problem: A fast, general and optimal solution. In ICCV, pages 2344–2351, 2013.