Newton Informed Neural Operator for Computing Multiple Solutions of Nonlinear Partial Differential Equations

Abstract

Solving nonlinear partial differential equations (PDEs) with multiple solutions using neural networks has found widespread applications in fields such as physics, biology, and engineering. However, classical neural network methods for solving nonlinear PDEs, such as Physics-Informed Neural Networks (PINNs), the Deep Ritz method, and DeepONet, often struggle when the nonlinear problem admits multiple solutions, because the learning problem becomes ill-posed. In this paper, we propose a novel approach called the Newton Informed Neural Operator, which builds upon existing neural network techniques to tackle nonlinearities. Our method incorporates the classical Newton method, so that the learning problem is well-posed, and efficiently learns multiple solutions in a single learning process while requiring fewer supervised data points than existing neural network methods.

1 Introduction

Neural networks have been extensively applied to solve partial differential equations (PDEs) in various fields, including biology, physics, and materials science [17, 9]. While much of the existing work focuses on PDEs with a unique solution, nonlinear PDEs with multiple solutions pose a significant challenge and are widely encountered in applications [1, 3, 27]. In this paper, we aim to solve the following nonlinear PDEs that may have multiple solutions:

| (1) |

Here, is the domain of equation, is a nonlinear function in , and is a second-order elliptic operator given by

for given coefficient functions with

Various neural network methods have been developed to tackle partial differential equations (PDEs), including PINN [29], the Deep Ritz method [37], DeepONet [24], FNO [20], MgNO [11], and OL-DeepONet [22]. These methods can be broadly categorized into two types: function learning and operator learning approaches. In function learning, the goal is to directly learn the solution. However, these methods often encounter the limitation of only being able to learn one solution in each learning process. Furthermore, the problem becomes ill-posed when there are multiple solutions. On the other hand, operator learning aims to approximate the map between parameter functions in PDEs and the unique solution. This approach cannot address the issue of multiple solutions or find them in a single training session. We will discuss this in more detail in the next section.

In this paper, we present a novel neural network approach for solving nonlinear PDEs with multiple solutions. Our method is grounded in operator learning, allowing us to capture multiple solutions within a single training process and thus overcoming the limitations of function learning methods. Moreover, we enhance our network architecture by incorporating traditional Newton methods [31, 1], as discussed in the next section. This integration ensures that the operator learning problem is well-defined, because each Newton step is well-defined locally, which in turn yields a robust operator. This approach addresses the challenges associated with directly applying operator networks to such problems. Additionally, we leverage Newton information during training, enabling our method to perform effectively even with limited supervised data points. We call our network the Newton Informed Neural Operator.

As mentioned earlier, our approach builds on the classical Newton method, which turns a nonlinear PDE into an iterative process that solves a linear equation at each iteration. One key advantage of our method is that, once the operator is effectively learned, there is no need to solve the linear equation in every iteration. This significantly reduces the time cost, especially in complex systems encountered in fields such as materials science, biology, and chemistry. In summary, our network, termed the Newton Informed Neural Operator, can efficiently handle nonlinear PDEs with multiple solutions. It tackles well-posed problems, learns multiple solutions in a single learning process, requires fewer supervised data points than existing neural network methods, and saves time by eliminating the need to repeatedly solve the linear problem as in traditional Newton methods.

The rest of the paper is organized as follows. In Section 2, we discuss nonlinear PDEs with multiple solutions and related work on solving PDEs using neural network methods. In Section 3, we review the classical Newton method for solving PDEs and introduce the Newton Informed Neural Operator, which combines neural operators with the Newton method to address nonlinear PDEs with multiple solutions. In that section, we also analyze the approximation and generalization errors of the Newton Informed Neural Operator. Finally, in Section 4, we present numerical results of our neural networks for solving nonlinear PDEs.

2 Background and Related Work

2.1 Nonlinear PDEs with multiple solutions

Many important mathematical models of natural phenomena in biology, physics, and materials science are rooted in nonlinear partial differential equations (PDEs) [5]. These models, characterized by their inherent nonlinearity, present complex multi-solution challenges. Illustrative examples include string theory in physics, reaction-diffusion systems in chemistry, and pattern formation in biology [16, 10]. However, experimental techniques such as synchrotron and laser methods can only observe a subset of these multiple solutions. Thus, there is an urgent need to develop computational methods to unravel these nonlinear models, offering deeper insights into the underlying physics and biology [12]. Consequently, efficient numerical techniques for identifying these solutions are pivotal in understanding these intricate systems. Despite recent advancements in numerical methods for solving nonlinear PDEs, significant computational challenges persist for large-scale systems. Specifically, the computational costs of employing Newton and Newton-like approaches [15] are often prohibitive for the large-scale systems encountered in real-world applications. In response to these challenges, we propose an operator learning approach based on Newton’s method to efficiently compute multiple solutions of nonlinear PDEs.

2.2 Related works

Indeed, there are numerous approaches to solving partial differential equations (PDEs) using neural networks. Broadly speaking, these methods can be categorized into two main types: function learning and operator learning.

In function learning, neural networks are used to directly approximate the solution function of the PDE. In operator learning, by contrast, the focus is on learning the operator that maps input parameters to the solution of the PDE: instead of approximating a single solution function, the neural network learns the underlying operator that governs the behavior of the system.

Function learning methods

In function learning, commonly employed methods are Physics-Informed Neural Networks (PINNs), introduced by Raissi et al. [29], and the Deep Ritz method [37]. For these methods, however, the task is particularly challenging because the problem is ill-posed when multiple solutions exist. Despite employing various initial data and training methods, attaining high accuracy remains difficult. Even when a highly accurate solution is obtained, each learning process typically discovers only one solution. Which solution the network learns is heavily influenced by the initial conditions and the training method, but the relationship between these factors and the learned solution is hard to discern. In [14], the authors introduce a method called HomPINNs for learning multiple solutions of PDEs. In their approach, the number of solutions that can be learned depends on the availability of supervised data, and for solutions with insufficient supervised data there is no guarantee that HomPINNs can learn them.

Operator learning methods

Various approaches have been developed for operator learning to solve PDEs, including DeepONet [24] and its variants that integrate physical information [7, 22], FNO [20], which is inspired by spectral methods, MgNO [11], HANO [23], and WNO [21], which are based on multilevel methods, and transformer-based neural operators [4, 8]. These methods focus on approximating the operator between the parameters and the solutions. This has two consequences for our setting. First, they require the solution of the PDE to be unique; otherwise, the operator is not well-defined. Second, they focus on the relationship between the parameter functions and the solution, rather than between the initial data and the multiple solutions.

3 Newton Informed Neural Operator

3.1 Review of Newton Methods to Solve Nonlinear Partial Differential Equations

To tackle this problem Eq. 1, we employ Newton’s method by linearizing the equation. Given any initial guess , for , in the -th iteration of Newton’s method, we have by solving

| (2) |

Solving Eq. (2) and repeating the step a prescribed number of times yields one of the solutions of the nonlinear equation (1). For different initial data, the iteration converges to different solutions of Eq. (1), which constitutes a well-posed problem. However, applying the solver to solve Eq. (1) multiple times can be time-consuming, especially in high-dimensional settings or when the number of discretization points in the solver is large. In this paper, we employ neural networks to address these challenges.
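As an illustration of how the initial guess selects the solution, the following minimal sketch applies Newton’s method to a one-dimensional model problem that is not one of the paper’s experiments: the Bratu problem, written in the form of Eq. (1) with the operator taken as the negative second derivative, f(u) = lam * exp(u), zero boundary values, and lam = 1. It assumes the standard Newton linearization, in which the update du solves (L - f'(u)) du = -(L u - f(u)). The grid size, the two initial guesses, and all function names are illustrative assumptions.

```python
import math

def solve_dense(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented copy
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - s) / m[i][i]
    return x

def residual(u, h, lam):
    """F(u) = L u - f(u) with L = -d^2/dx^2 (finite differences), f(u) = lam * exp(u)."""
    n = len(u)
    out = []
    for i in range(n):
        left = u[i - 1] if i > 0 else 0.0       # zero Dirichlet boundary value
        right = u[i + 1] if i < n - 1 else 0.0  # zero Dirichlet boundary value
        out.append((2.0 * u[i] - left - right) / h**2 - lam * math.exp(u[i]))
    return out

def newton(u0, h, lam, max_iter=30, tol=1e-9):
    """Repeat the linearized solve (L - f'(u)) du = -F(u), then u <- u + du."""
    u = list(u0)
    n = len(u)
    for _ in range(max_iter):
        r = residual(u, h, lam)
        if max(abs(v) for v in r) < tol:
            break
        # Jacobian L - f'(u); it is tridiagonal but stored densely for simplicity.
        jac = [[0.0] * n for _ in range(n)]
        for i in range(n):
            jac[i][i] = 2.0 / h**2 - lam * math.exp(u[i])
            if i > 0:
                jac[i][i - 1] = -1.0 / h**2
            if i < n - 1:
                jac[i][i + 1] = -1.0 / h**2
        du = solve_dense(jac, [-v for v in r])
        u = [ui + di for ui, di in zip(u, du)]
    return u

n_int = 49                      # interior grid points (illustrative)
h = 1.0 / (n_int + 1)
xs = [(i + 1) * h for i in range(n_int)]
lam = 1.0

lower = newton([0.0] * n_int, h, lam)                             # flat guess
upper = newton([4.0 * math.sin(math.pi * x) for x in xs], h, lam)  # tall guess
print(max(lower), max(upper))
```

Starting from the zero function, the iteration settles on the small solution; starting from the tall guess, it settles on the large one (for the continuous problem with lam = 1 the two maxima are about 0.14 and about 4.1). Every call repeats a linear solve at each step, which is the cost a learned operator is meant to avoid.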

3.2 Neural Operator Structures

In this section, we introduce the structure of the DeepONet [24, 18] to approximate the operator locally in the Newton methods from Eq.(2), i.e., , where is the solution of Eq.(2), which depends on . If we can learn the operator well using the neural operator , then for an initial function , assume the -th iteration will approximate one solution, i.e., . Thus,

For another initial data, we can evaluate our neural operator and find the solution directly.
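Reusing one learned step for any initial datum amounts to a plain loop. The snippet below is a minimal sketch, not the paper’s implementation: `rollout` and `exact_newton_update` are hypothetical names, and the stand-in for a trained operator is the exact Newton update for the scalar equation u^2 - 2 = 0, chosen only because its two solutions are known.

```python
def rollout(step_operator, u0, num_steps):
    """Apply u <- u + step_operator(u) num_steps times and return all iterates."""
    iterates = [u0]
    u = u0
    for _ in range(num_steps):
        u = u + step_operator(u)
        iterates.append(u)
    return iterates

def exact_newton_update(u):
    """Stand-in for a learned operator: Newton update for g(u) = u**2 - 2."""
    return -(u * u - 2.0) / (2.0 * u)

path_pos = rollout(exact_newton_update, 1.0, 6)   # converges to +sqrt(2)
path_neg = rollout(exact_newton_update, -1.0, 6)  # converges to -sqrt(2)
print(path_pos[-1], path_neg[-1])
```

The same callable is used for both starting points; only the initial datum decides which solution the loop reaches.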

Then we discuss how to train such an operator. To begin, we define the following shallow neural operators with neurons for operators from to as

| (3) |

where , , and denote all the parameters in . Here, denotes the set of all bounded (continuous) linear operators between and , and defines the nonlinear point-wise activation.
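To make the structure concrete, here is a minimal sketch of a shallow operator acting on a discretized input. It assumes the common form in which each neuron applies a linear map and a bias, then a pointwise activation, then a second linear map, and the neuron outputs are summed. Matrices stand in for the bounded linear operators, and the names `shallow_operator`, `matvec`, and the tuple layout `(W_i, b_i, A_i)` are hypothetical.

```python
import math

def matvec(mat, vec):
    """Dense matrix-vector product on plain lists."""
    return [sum(a * v for a, v in zip(row, vec)) for row in mat]

def shallow_operator(a, neurons, activation=math.tanh):
    """Evaluate sum_i A_i * activation(W_i a + b_i) for a discretized input a.

    neurons is a list of (W_i, b_i, A_i): W_i and A_i are matrices (lists of rows)
    standing in for bounded linear operators, b_i is a bias vector.
    """
    out = None
    for w, b, a_out in neurons:
        hidden = [activation(z + bi) for z, bi in zip(matvec(w, a), b)]
        term = matvec(a_out, hidden)
        out = term if out is None else [o + t for o, t in zip(out, term)]
    return out

# Two neurons on a 2-point discretization (all numbers are illustrative).
identity = [[1.0, 0.0], [0.0, 1.0]]
neurons = [
    (identity, [0.0, 0.0], identity),
    ([[0.5, 0.5], [0.5, 0.5]], [0.1, -0.1], [[1.0, -1.0], [0.0, 2.0]]),
]
print(shallow_operator([0.5, -1.0], neurons))
```

Increasing the number of entries in `neurons` plays the role of increasing the width of the shallow operator.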

In this paper, we will use shallow DeepONet to approximate the Newton operator. To provide a more precise description, in the shallow neural network, represents an interpolation of operators. With proper and reasonable assumptions, we can present the following theorem to ensure that DeepONet can effectively approximate the Newton method operator. The proof will be provided in the appendix. Furthermore, MgNO is replaced by as a multigrid operator [33], and FNO is some kind of kernel operator; our analysis can be generalized to such cases.

Before the proof, we need to establish some assumptions regarding the input space of the operator and in Eq. (1).

Assumption 1.

(i): For any , we have that

(ii): There exists a constant such that .

(iii): All coefficients in are and .

(iv): There exists a linear bounded operator on , a small constant , and a constant such that

Furthermore, is an -dimensional term, i.e., it can be denoted by the -dimensional vector .

Remark 1.

We want to emphasize the reasonableness of our assumptions. For condition (i), we are essentially restricting our approximation efforts to local regions. This limitation is necessary because attempting to approximate the neural operator across the entire domain could lead to issues, particularly in cases where multiple solutions exist. Consider a scenario where the input function lies between two distinct solutions. Even a small perturbation of could result in the system converging to a completely different solution. Condition (i) ensures that Equation (2) has a unique solution, allowing us to focus our approximation efforts within localized domains.

Conditions (ii) and (iii) serve to regularize the problem and ensure its tractability. These conditions are indeed straightforward to fulfill, contributing to the feasibility of the overall approach.

For the embedding operator in (iv), there are many choices in DeepONet, such as finite element methods like Argyris elements [2] or the embedding methods in [19, 13]. We will discuss more in the appendix. The approximation order would be , and a different method may achieve a different order. We omit the detailed discussion in the paper. Furthermore, for a different neural network, this embedding may be different; for example, we can use Fourier expansion [35] or multigrid methods [11] to achieve this task.

Theorem 1.

Suppose and Assumption 1 holds. Then, there exists a neural network defined as

| (4) |

such that

| (5) |

where is a smooth non-polynomial activation function, is a constant independent of , , and , is a constant depended on , is the scale of the in Assumption 1. And depends on . and are defined in Assumption 1.

3.3 Loss Functions of Newton Informed Neural Operator

3.3.1 Mean Square Error

The Mean Square Error loss function is defined as:

| (6) |

where are independently and identically distributed (i.i.d) samples in , and are uniformly i.i.d samples in .

However, using only the Mean Squared Error loss function is not sufficient for training to learn the Newton method, especially since in most cases, we do not have enough data . Furthermore, there are situations where we do not know how many solutions exist for the nonlinear equation (1). If the data is sparse around one of the solutions, it becomes impossible to effectively learn the Newton method around that solution.

Given that can be viewed as the finite data formula of , where

The smallness of can be inferred from Theorem 1. To understand the gap between and , we can rely on the following theorem. Before the proof, we need some assumptions about the data in :

Assumption 2.

(i) Boundedness: For any neural network with bounded parameters, characterized by a bound and dimension , there exists a function such that

for all , and there exist constants , such that

| (7) |

(ii) Lipschitz continuity: There exists a function , such that

| (8) |

for all , and

for the same constants as in Eq. (7).

(iii) Finite measure: There exists , such that

Theorem 2.

3.3.2 Newton Loss

As we have mentioned, relying solely on the MSE loss function can require a significant amount of data to achieve the task. However, obtaining enough data can be challenging, especially when the equation is complex and the dimension of the input space is large. Hence, we need another loss function to aid learning, namely a physics-informed loss function [29, 7, 14, 21], referred to here as the Newton loss function.

The Newton loss function is defined as:

| (9) |

where are independently and identically distributed (i.i.d) samples in , and are uniformly i.i.d samples in .

Given that can be viewed as the finite data formula of , where

To understand the gap between and , we can rely on the following theorem:

Corollary 1.

The proof of Corollary 1 is similar to that of Theorem 2; therefore, it will be omitted from the paper.

Remark 3.

If we only utilize as our loss function, as demonstrated in Theorem 2, we require both and to be large, posing a significant challenge when dealing with complex nonlinear equations. Obtaining sufficient data becomes a critical issue in such cases. In this paper, we integrate Newton information into the loss function, defining it as follows:

| (10) |

where represents the cost of the data involved in unsupervised learning. If we lack sufficient data for , we can adjust the parameters by selecting a small and increasing and . This strategy enables effective learning even when data for is limited.

In the following experiments, we will use the neural operator established in Eq. (3) and the loss function in Eq. (10) to learn one step of the Newton method locally, i.e., the map between the input and the solution in Eq. (2). If we have a large dataset, we can choose a large in (10); if we have a small dataset, we will use a small to ensure the generalization error of the operator is minimized. After learning one step of the Newton method using the operator neural network, we can quickly obtain the solution from the initial condition of the nonlinear PDE (1) and find new solutions not present in the datasets.
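The interplay of the two loss terms can be sketched on a one-dimensional grid. This is a minimal sketch under stated assumptions, not the paper’s implementation: the combined loss is taken to be a weighted sum with a weight `lam` on the supervised MSE term, the Newton residual is taken to be (L - f'(u)) du + L u - f(u) with L the negative second derivative, and the names `apply_L`, `mse_loss`, `newton_loss`, and `combined_loss` are hypothetical. The `model` argument is any callable that maps a grid function u to a predicted update du.

```python
def apply_L(u, h):
    """Discrete L = -d^2/dx^2 with zero Dirichlet values outside the grid."""
    n = len(u)
    out = []
    for i in range(n):
        left = u[i - 1] if i > 0 else 0.0
        right = u[i + 1] if i < n - 1 else 0.0
        out.append((2.0 * u[i] - left - right) / h**2)
    return out

def mse_loss(model, pairs):
    """Supervised term: mean squared error against known Newton updates."""
    total, count = 0.0, 0
    for u, du_true in pairs:
        for p, t in zip(model(u), du_true):
            total += (p - t) ** 2
            count += 1
    return total / count

def newton_loss(model, inputs, h, f, df):
    """Unsupervised term: mean squared residual of the linearized equation."""
    total, count = 0.0, 0
    for u in inputs:
        du = model(u)
        l_du, l_u = apply_L(du, h), apply_L(u, h)
        for i in range(len(u)):
            r = l_du[i] - df(u[i]) * du[i] + l_u[i] - f(u[i])
            total += r * r
            count += 1
    return total / count

def combined_loss(model, pairs, inputs, h, f, df, lam):
    """Weighted sum: lam * supervised MSE + Newton residual loss."""
    return lam * mse_loss(model, pairs) + newton_loss(model, inputs, h, f, df)

# Illustrative use with f(u) = u**2 and a model that always predicts a zero update.
h = 0.25
f = lambda s: s * s
df = lambda s: 2.0 * s
zero_model = lambda u: [0.0 for _ in u]
pairs = [([0.1, 0.2, 0.1], [0.01, 0.02, 0.01])]  # (input, known update), made up
inputs = [[0.1, 0.2, 0.1], [0.3, -0.1, 0.2]]      # inputs without ground truth
print(combined_loss(zero_model, pairs, inputs, h, f, df, lam=0.1))
```

The Newton term needs only input functions, so `inputs` can be much larger than `pairs`; with few labelled pairs, a small `lam` shifts the emphasis to the residual term.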

4 Experiments

4.1 Experimental Settings

We introduce two distinct training methodologies. The first approach employs exclusively supervised data, using the Mean Squared Error loss (6) as the optimization criterion. The second combines supervised and unsupervised learning, using the hybrid loss function (10), which integrates the Mean Squared Error loss (6) for a small proportion of data with ground truth (the supervised training dataset) and Newton’s loss (9) for a large proportion of data without ground truth (the unsupervised training dataset). We refer to these as method 1 and method 2. The two approaches are designed to simulate a practical scenario with limited data availability, allowing us to compare their efficacy in small supervised data regimes. We use the same neural operator configuration (DeepONet) for both, which aligns with our theoretical analysis. The detailed experimental settings and the datasets for each example can be found in Appendix A.

4.2 Case 1: Convex problem

We consider a 2D convex problem , where , and on . We investigate the training dynamics and testing performance of the neural operator (DeepONet) trained with different loss functions and dataset sizes, focusing on the Mean Squared Error (MSE) and Newton’s loss functions.

MSE Loss Training (Fig. 1(a)): In method 1, training is effective but generalization is poor, suggesting that the MSE loss alone is not enough.

Performance Comparison (Fig. 1(b)): The model trained with Newton’s loss (method 2) exhibits superior performance in both and error metrics, highlighting its better generalization. This study shows the advantage of using Newton’s loss for training DeepONet models: it requires fewer supervised data points than method 1.

4.3 Case 2: Non-convex problem with multiple solutions

We consider a 2D non-convex problem,

| (11) |

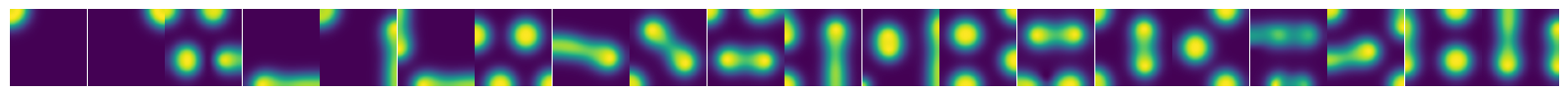

where [3]. In this case, and it is non-convex, with multiple solutions (see Figure 2 for its solutions).

In the experiment, one of the multiple ground-truth solutions is rarely covered in the supervised training dataset, so the neural operator trained via method 1 saturates in terms of test error, because it relies on the ground truth to recover all the patterns in the multiple-solution case (as shown by the curves in Figure 2). In contrast, the model trained via method 2 is less affected by the limited supervised data thanks to Newton’s loss. See Appendix A for the detailed experimental settings.

Efficiency

We use this problem as a case study to demonstrate the efficiency of our neural operator-based method as a surrogate for Newton’s method. The two approaches were benchmarked in terms of total execution time, which includes the setup of matrices and vectors, computation on the GPU, and synchronization of CUDA streams. Both methods leverage the parallel processing capabilities of the GPU. Specifically, the Newton solver explicitly uses 10 streams and CuPy with CUDA to parallelize the computation and fully utilize the GPU, aiming to optimize execution efficiency. In contrast, the neural operator method is inherently parallelized, taking full advantage of the GPU architecture without the explicit use of multiple streams, as indicated in the table. The computational times of both methods were evaluated under a common hardware configuration. A detailed description of the experiments can be found in Appendix A.5.

| Parameter | Newton’s Method | Neural Operator |
|---|---|---|
| Number of Streams | 10 | - |
| Data Type | float32 | float32 |
| Execution Time for 500 linear Newton systems (s) | 31.52 | 1.1E-4 |
| Execution Time for 5000 linear Newton systems (s) | 321.15 | 1.4E-4 |

The data in the table show that the neural operator is significantly more efficient than the classical Newton solver on the GPU: the measured execution times differ by roughly five orders of magnitude for 500 systems and six orders of magnitude for 5000 systems. This efficiency gain is likely due to the neural operator’s ability to leverage the parallel processing capabilities of GPUs more effectively than the Newton solver, even though the Newton solver is also parallelized. This speedup is crucial for computing the large number of unknown patterns in complex nonlinear systems such as the Gray-Scott model.

4.4 Gray-Scott Model

The Gray-Scott model [27] describes the reaction and diffusion of two chemical species, and , governed by the following equations:

where and are the diffusion coefficients, and and are rate constants.

Newton’s Method for Steady-State Solutions

Newton’s method is employed to find steady-state solutions ( and ) by solving the nonlinear system:

| (12) |

The Gray-Scott model is highly sensitive to initial conditions: even minor perturbations can lead to vastly different emergent patterns. See Figure 4 for some examples of the patterns. This sensitivity reflects the model’s complex nonlinear dynamics, which can evolve into a multitude of possible steady states depending on the initial setup. Consequently, training a neural operator to map initial conditions directly to their respective steady states presents significant challenges. Such a model must learn from a vast function space, capturing the underlying dynamics that dictate the transition from any given initial state to its final pattern. This complexity and diversity of potential outcomes is what makes it inherently difficult to train neural operators effectively for systems as complex as the Gray-Scott model. See Appendix A.1.2 for a detailed discussion of the Gray-Scott model. We employ a neural operator as a substitute for the Newton solver in the Gray-Scott model, applying it recurrently to map the initial state to the steady state.

In subfigure (a), we use a ring-like pattern as the initial state to test our learned neural operator. This particular pattern does not appear in the supervised training dataset and lacks corresponding ground truth data. Despite this, our neural operator, trained using Newton’s loss, is able to approximate the mapping of the initial solution to its correct steady state effectively. In subfigure (b), we further test our neural operator, using it as a surrogate for Newton’s method to address nonlinear problems with an initial state drawn from the test dataset. The curve shows the average convergence rate of across the test dataset, where represents the prediction at the -th step by the neural operator. In subfigure (c), both the training loss curves and the test error curves are displayed, illustrating their progression throughout the training period.

5 Conclusion

In this paper, we consider using neural operators to solve nonlinear PDEs (Eq. (1)) with multiple solutions. To make the problem well-posed and to learn the multiple solutions in one learning process, we combine neural operator learning with classical Newton methods, resulting in the Newton Informed Neural Operator. We provide a theoretical analysis of our neural operator, demonstrating that it can effectively learn the Newton operator, reduce the amount of supervised data required, and learn solutions not present in the supervised data thanks to the addition of the Newton loss (9) in the loss function. Our experiments are consistent with our theoretical analysis and showcase the advantages of our network: it requires less supervised data, learns solutions not present in the supervised data, and takes less time than classical Newton methods to solve the problem.

Reproducibility Statement

Code Availability: The code used in our experiments can be accessed via https://github.com/xll2024/newton/tree/main and in the supplementary material. Datasets can be downloaded via the URLs in the repository. This encompasses all scripts, functions, and auxiliary files necessary to reproduce our results. Configuration Transparency: All configurations, including hyperparameters, model architectures, and optimization settings, are explicitly provided in the Appendix.

Acknowledgments and Disclosure of Funding

Y.Y. and W.H. were supported by the National Institute of General Medical Sciences through grant 1R35GM146894. The work of X.L. was partially supported by the KAUST Baseline Research Fund.

References

- [1] H. Amann and P. Hess. A multiplicity result for a class of elliptic boundary value problems. Proceedings of the Royal Society of Edinburgh Section A: Mathematics, 84(1-2):145–151, 1979.

- [2] S. Brenner. The mathematical theory of finite element methods. Springer, 2008.

- [3] B. Breuer, P. McKenna, and M. Plum. Multiple solutions for a semilinear boundary value problem: a computational multiplicity proof. Journal of Differential Equations, 195(1):243–269, 2003.

- [4] Shuhao Cao. Choose a transformer: Fourier or galerkin. Advances in Neural Information Processing Systems, 34, 2021.

- [5] Mark C Cross and Pierre C Hohenberg. Pattern formation outside of equilibrium. Reviews of modern physics, 65(3):851, 1993.

- [6] L. Evans. Partial differential equations, volume 19. American Mathematical Society, 2022.

- [7] Somdatta Goswami, Aniruddha Bora, Yue Yu, and George Em Karniadakis. Physics-informed neural operators. arXiv preprint arXiv:2207.05748, 2022.

- [8] Ruchi Guo, Shuhao Cao, and Long Chen. Transformer meets boundary value inverse problems. In The Eleventh International Conference on Learning Representations, 2022.

- [9] J. Han, A. Jentzen, and W. E. Solving high-dimensional partial differential equations using deep learning. Proceedings of the National Academy of Sciences, 115(34):8505–8510, 2018.

- [10] W. Hao and C. Xue. Spatial pattern formation in reaction–diffusion models: a computational approach. Journal of mathematical biology, 80:521–543, 2020.

- [11] J. He, X. Liu, and J. Xu. Mgno: Efficient parameterization of linear operators via multigrid. In The Twelfth International Conference on Learning Representations, 2023.

- [12] Rebecca B Hoyle. Pattern formation: an introduction to methods. Cambridge University Press, 2006.

- [13] J. Hu and S. Zhang. The minimal conforming finite element spaces on rectangular grids. Mathematics of Computation, 84(292):563–579, 2015.

- [14] Y. Huang, W. Hao, and G. Lin. Hompinns: Homotopy physics-informed neural networks for learning multiple solutions of nonlinear elliptic differential equations. Computers & Mathematics with Applications, 121:62–73, 2022.

- [15] Carl T Kelley. Solving nonlinear equations with Newton’s method. SIAM, 2003.

- [16] Shigeru Kondo and Takashi Miura. Reaction-diffusion model as a framework for understanding biological pattern formation. science, 329(5999):1616–1620, 2010.

- [17] I. Lagaris, A. Likas, and D. Fotiadis. Artificial neural networks for solving ordinary and partial differential equations. IEEE transactions on neural networks, 9(5):987–1000, 1998.

- [18] Samuel Lanthaler, Siddhartha Mishra, and George E Karniadakis. Error estimates for deeponets: A deep learning framework in infinite dimensions. Transactions of Mathematics and Its Applications, 6(1):tnac001, 2022.

- [19] Z. Li and N. Yan. New error estimates of bi-cubic hermite finite element methods for biharmonic equations. Journal of computational and applied mathematics, 142(2):251–285, 2002.

- [20] Zongyi Li, Nikola Borislavov Kovachki, Kamyar Azizzadenesheli, Kaushik Bhattacharya, Andrew Stuart, and Anima Anandkumar. Fourier Neural Operator for Parametric Partial Differential Equations. In International Conference on Learning Representations, 2020.

- [21] Zongyi Li, Hongkai Zheng, Nikola B Kovachki, David Jin, Haoxuan Chen, Burigede Liu, Kamyar Azizzadenesheli, and Anima Anandkumar. Physics-informed neural operator for learning partial differential equations. CoRR, abs/2111.03794, 2021.

- [22] B. Lin, Z. Mao, Z. Wang, and G. Karniadakis. Operator learning enhanced physics-informed neural networks for solving partial differential equations characterized by sharp solutions. arXiv preprint arXiv:2310.19590, 2023.

- [23] Xinliang Liu, Bo Xu, Shuhao Cao, and Lei Zhang. Mitigating spectral bias for the multiscale operator learning. Journal of Computational Physics, 506:112944, 2024.

- [24] Lu Lu, Pengzhan Jin, and George Em Karniadakis. Deeponet: Learning nonlinear operators for identifying differential equations based on the universal approximation theorem of operators. arXiv preprint arXiv:1910.03193, 2019.

- [25] Lu Lu, Xuhui Meng, Shengze Cai, Zhiping Mao, Somdatta Goswami, Zhongqiang Zhang, and George Em Karniadakis. A comprehensive and fair comparison of two neural operators (with practical extensions) based on fair data. Computer Methods in Applied Mechanics and Engineering, 393:114778, 2022.

- [26] H. Mhaskar. Neural networks for optimal approximation of smooth and analytic functions. Neural computation, 8(1):164–177, 1996.

- [27] J. Pearson. Complex patterns in a simple system. Science, 261(5118):189–192, 1993.

- [28] T. Poggio, H. Mhaskar, L. Rosasco, B. Miranda, and Q. Liao. Why and when can deep-but not shallow-networks avoid the curse of dimensionality: a review. International Journal of Automation and Computing, 14(5):503–519, 2017.

- [29] Maziar Raissi, Paris Perdikaris, and George E Karniadakis. Physics-informed neural networks: A deep learning framework for solving forward and inverse problems involving nonlinear partial differential equations. Journal of Computational Physics, 378:686–707, 2019.

- [30] A. Schmidt-Hieber. Nonparametric regression using deep neural networks with relu activation function. Annals of statistics, 48(4):1875–1897, 2020.

- [31] M. Ulbrich. Semismooth newton methods for operator equations in function spaces. SIAM Journal on Optimization, 13(3):805–841, 2002.

- [32] T. Welti. High-dimensional stochastic approximation: algorithms and convergence rates. PhD thesis, ETH Zurich, 2020.

- [33] J. Xu. Two-grid discretization techniques for linear and nonlinear pdes. SIAM journal on numerical analysis, 33(5):1759–1777, 1996.

- [34] Y. Yang and J. He. Deeper or wider: A perspective from optimal generalization error with sobolev loss. International Conference on Machine Learning, 2024.

- [35] Y. Yang and Y. Xiang. Approximation of functionals by neural network without curse of dimensionality. arXiv preprint arXiv:2205.14421, 2022.

- [36] Y. Yang, H. Yang, and Y. Xiang. Nearly optimal VC-dimension and pseudo-dimension bounds for deep neural network derivatives. NeurIPS 2023, 2023.

- [37] B. Yu and W. E. The deep ritz method: a deep learning-based numerical algorithm for solving variational problems. Communications in Mathematics and Statistics, 6(1):1–12, 2018.

Appendix A Experimental settings

A.1 Background on the PDEs and generation of datasets

A.1.1 Case 1: convex problem

Function and Jacobian

The function might typically be defined as:

The Jacobian , for the given function , involves the derivative of with respect to , which includes the Laplace operator and the derivative of the nonlinear term:

The dataset is generated by sampling initial states and computing, for each one, the convergent sequence produced by Newton’s method. Each convergent sequence is one data entry in the dataset.

The analysis of the function and Jacobian for the non-convex problem (Case 2) is similar to that of the convex problem, except that its Jacobian is such that Newton’s system is not positive definite.
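The data-generation procedure described above can be sketched as follows. This is a minimal, dependency-free illustration and not the setup used in our experiments: the one-dimensional model problem -u'' + u^3 = 1 with zero boundary values, the grid size, the tolerance, and the helper names (`residual`, `solve_tridiagonal`, `newton_sequence`) are all assumptions chosen for brevity.

```python
import random


def residual(u, h):
    """F(u)_i = -(u_{i-1} - 2 u_i + u_{i+1}) / h^2 + u_i^3 - 1, with u = 0 on the boundary."""
    n = len(u)
    out = []
    for i in range(n):
        left = u[i - 1] if i > 0 else 0.0
        right = u[i + 1] if i < n - 1 else 0.0
        out.append(-(left - 2.0 * u[i] + right) / h**2 + u[i] ** 3 - 1.0)
    return out


def solve_tridiagonal(lower, diag, upper, rhs):
    """Thomas algorithm for a tridiagonal linear system (no pivoting)."""
    n = len(diag)
    c = [0.0] * n  # modified upper diagonal
    d = [0.0] * n  # modified right-hand side
    c[0] = upper[0] / diag[0]
    d[0] = rhs[0] / diag[0]
    for i in range(1, n):
        denom = diag[i] - lower[i] * c[i - 1]
        c[i] = upper[i] / denom
        d[i] = (rhs[i] - lower[i] * d[i - 1]) / denom
    x = [0.0] * n
    x[-1] = d[-1]
    for i in range(n - 2, -1, -1):
        x[i] = d[i] - c[i] * x[i + 1]
    return x


def newton_sequence(u0, h, tol=1e-8, max_iter=50):
    """Return the Newton iterates [u0, u1, ...], stopping once max|F(u)| < tol."""
    u = list(u0)
    sequence = [list(u)]
    n = len(u)
    for _ in range(max_iter):
        f = residual(u, h)
        if max(abs(v) for v in f) < tol:
            break
        # Jacobian of the model problem, J(u) = -D2 + diag(3 u^2), stored by diagonals.
        lower = [-1.0 / h**2] * n
        upper = [-1.0 / h**2] * n
        diag = [2.0 / h**2 + 3.0 * ui**2 for ui in u]
        # Newton step: solve J(u) delta = -F(u), then update u.
        delta = solve_tridiagonal(lower, diag, upper, [-v for v in f])
        u = [ui + di for ui, di in zip(u, delta)]
        sequence.append(list(u))
    return sequence


# One dataset entry: sample an initial state and store the whole sequence.
rng = random.Random(0)
n = 31
h = 1.0 / (n + 1)
u0 = [rng.uniform(-1.0, 1.0) for _ in range(n)]
entry = newton_sequence(u0, h)
```

Repeating the last four lines with different random seeds would give one sequence per sampled initial state, which mirrors the structure of the dataset described above.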

A.1.2 Gray Scott model

Jacobian Matrix

The Jacobian matrix of the system is crucial for applying Newton’s method:

with components:

The numerical simulation of the Gray-Scott model was configured with the following parameters:

- Grid Size: The simulation grid is square with points on each side, leading to a total of grid points. This resolution was chosen to balance computational efficiency with spatial resolution sufficient to capture detailed patterns. The spacing between each grid point, , is computed as . This ensures that the domain is normalized to a unit square, which simplifies the analysis and scaling of diffusion rates.

- Diffusion Coefficients: The diffusion coefficients for species and are set to and , respectively. These values determine the rate at which each species diffuses through the spatial domain.

- Reaction Rates: The reaction rate and feed rate are crucial parameters that govern the dynamics of the system. For this simulation, is set to 0.065 and to 0.04, influencing the production and removal rates of the chemical species.

Simulations

The simulation uses a finite difference method for spatial discretization and Newton’s method to solve for the steady state from a given initial state. The algorithm is detailed in Algorithm 1.

A.2 Implementations of loss functions

Discrete Newton’s Loss

In solving partial differential equations (PDEs) numerically on a regular grid, the Laplace operator and other differential terms can be efficiently computed using convolution. Here, we detail the method for calculating where is the Jacobian matrix, is the Newton step, and is the function defining the PDE.

Discretization

Consider a discrete representation of a function on a grid. The function and its perturbation are represented as matrices:

The function , which involves both linear and nonlinear terms, is similarly represented as .

Laplace Operator

Given the grid function that represents the continuous function, the Laplace operator discretized by a finite difference method can be expressed as a convolution:

This convolution computes the result of the Laplace operator applied to the grid function . The boundary conditions can be incorporated into the convolution through the padding mode: a homogeneous Dirichlet boundary condition corresponds to zero padding, while a homogeneous Neumann boundary condition corresponds to replicate padding.
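The convolution form of the Laplace operator and the role of the padding mode can be illustrated with a short sketch. It assumes the standard five-point stencil with grid spacing h; the function names `pad` and `laplacian` and the mode strings "zeros" and "replicate" are hypothetical and used only for illustration.

```python
# Five-point stencil for the Laplace operator (to be scaled by 1 / h^2).
STENCIL = [[0.0, 1.0, 0.0],
           [1.0, -4.0, 1.0],
           [0.0, 1.0, 0.0]]


def pad(grid, mode):
    """Add one layer of ghost values: 'zeros' or 'replicate' padding."""
    if mode not in ("zeros", "replicate"):
        raise ValueError("mode must be 'zeros' or 'replicate'")
    rows = []
    for row in grid:
        if mode == "zeros":
            rows.append([0.0] + list(row) + [0.0])
        else:
            rows.append([row[0]] + list(row) + [row[-1]])
    if mode == "zeros":
        top = [0.0] * len(rows[0])
        bottom = [0.0] * len(rows[0])
    else:
        top, bottom = list(rows[0]), list(rows[-1])
    return [top] + rows + [bottom]


def laplacian(grid, h, mode="zeros"):
    """Apply the stencil to every grid point of the padded grid."""
    padded = pad(grid, mode)
    out = []
    for i in range(len(grid)):
        out_row = []
        for j in range(len(grid[0])):
            acc = 0.0
            for di in range(3):
                for dj in range(3):
                    acc += STENCIL[di][dj] * padded[i + di][j + dj]
            out_row.append(acc / h**2)
        out.append(out_row)
    return out
```

For a constant grid function, replicate padding gives a zero discrete Laplacian everywhere, as expected for a zero normal derivative, while zero padding gives nonzero values along the boundary because the ghost values are forced to zero.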

A.3 Architecture of DeepONet

A variant of DeepONet is used in our Newton informed neural operator. In the DeepONet, we introduce a hybrid architecture that combines convolutional layers with a static trunk basis, optimized for grid-based data inputs common in computational applications like computational biology and materials science.

Branch Network

The branch network is designed to effectively downsample and process the spatial features through a series of convolutional layers:

- A Conv2D layer with 128 filters (7x7, stride 2) initiates the feature extraction, reducing the input dimensionality while capturing coarse spatial features.

- This is followed by additional Conv2D layers (128 filters, 5x5 kernel, stride 2 and subsequently 3x3 with padding, 1x1) which further refine and compact the feature representation.

- The convolutional output is flattened and processed through two fully connected layers (256 units then down to branch features), using GELU activation.

Trunk Network

The trunk utilizes a static basis represented by the tensor V, incorporated as a non-trainable component. The tensor V is precomputed using Proper Orthogonal Decomposition (POD), as in [25], and is dimensionally compatible with the output of the branch network.

Forward Pass

During the forward computation, the branch network outputs are projected onto the trunk’s static basis via matrix multiplication, resulting in a feature matrix that is reshaped into the grid dimensionality for output.
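The projection and reshape in the forward pass can be sketched as below. The branch network itself is not reproduced: its output is taken as given, and the function name `deeponet_forward`, the argument names, and the tiny 2 x 2 basis in the usage lines are assumptions made purely for illustration.

```python
def deeponet_forward(branch_features, basis, grid_size):
    """Project branch outputs onto a fixed trunk basis and reshape to grids.

    branch_features: batch of coefficient vectors, shape (batch, p)
    basis:           static, non-trainable basis V, shape (p, grid_size**2)
    returns:         batch of grids, shape (batch, grid_size, grid_size)
    """
    n_points = grid_size * grid_size
    if any(len(mode) != n_points for mode in basis):
        raise ValueError("each basis mode must have grid_size**2 entries")
    outputs = []
    for coeffs in branch_features:
        if len(coeffs) != len(basis):
            raise ValueError("branch feature size must equal the number of basis modes")
        # Matrix-vector product: flat[k] = sum_m coeffs[m] * basis[m][k].
        flat = [sum(c * mode[k] for c, mode in zip(coeffs, basis))
                for k in range(n_points)]
        # Reshape the flat vector into a grid_size x grid_size output.
        outputs.append([flat[r * grid_size:(r + 1) * grid_size]
                        for r in range(grid_size)])
    return outputs


# Usage with two hypothetical basis modes on a 2 x 2 grid.
V = [[1.0, 0.0, 0.0, 1.0],
     [0.0, 1.0, 1.0, 0.0]]
prediction = deeponet_forward([[2.0, 3.0]], V, grid_size=2)
```

Because V is fixed, only the branch parameters that produce the coefficient vectors would be trained in such a design.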

Hyperparameters

Table 2 summarizes the key hyperparameters used in the DeepONet architecture:

| Parameter | Value |
|---|---|
| Number of Conv2D layers | 5 |
| Filters in Conv2D layers | 128, 128, 128, 128, 256 |
| Kernel sizes in Conv2D layers | 7x7, 5x5, 3x3, 1x1, 5x5 |
| Strides in Conv2D layers | 2, 2, 1, 1, 2 |
| Fully Connected Layer Sizes | 256, branch features |
| Activation Function | GELU |

A.4 Training settings

Below we summarize the key configurations and parameters employed in the training for three cases:

Dataset

- Case 1: For method 1, we use 500 supervised data samples (with ground truth), while for method 2, we use 5000 unsupervised data samples (only with the initial state) and 500 supervised data samples.

- Case 2: For method 1, we use 5000 supervised data samples, while for method 2, we use 5000 unsupervised data samples and 5000 supervised data samples.

- Case 3 (Gray-Scott model): We only apply method 2, with 10000 supervised data samples and 50000 unsupervised data samples.

Optimization Technique

- Optimizer: Adam, with a learning rate of and a weight decay of .

- Training Epochs: The model was trained for 1000 epochs, with a batch size of 50, to ensure convergence.

These settings underscore our commitment to precision and detailed examination of neural operator efficiency in computational tasks. Our architecture and optimization choices are particularly tailored to explore and exploit the capabilities of neural networks in processing complex systems simulations.

A.5 Benchmarking Newton’s Method and neural operator based method

Experimental Setup

The benchmark study was conducted to evaluate the performance of a GPU-accelerated implementation of Newton’s method, designed to solve systems of linear equations derived from discretizing partial differential equations. The implementation utilized CuPy with CUDA to leverage the parallel processing capabilities of the NVIDIA A100 GPU. The hardware comprises an Intel Cascade Lake 2.5 GHz CPU and an NVIDIA A100 GPU.

Software Environment:

Ubuntu 20.04 LTS. Python Version: 3.8. CUDA Version: 11.4.

Newton’s method was implemented to solve the Laplacian equation over a discretized domain. Multiple system solutions were computed in parallel using CUDA streams. Key parameters of the experiment are as follows:

- Data Type (dtype): Single-precision floating point (float32).

- Number of Streams: 10 CUDA streams to process data in parallel.

- Number of Repeated Calculations: Newton’s method was executed multiple times for 500 and 5000 Newton linear systems, respectively, distributed evenly across the streams.

- Function to Solve Systems: CuPy’s spsolve was used to solve the sparse matrix systems.

Algorithm 1 summarizes the procedure used to benchmark the time needed to solve Newton’s system for 5000 different initial states.

Appendix B Supplemental material for proof

B.1 Preliminaries

Definition 1 (Sobolev Spaces [6]).

Let be and let be the operator of the weak derivative of a single variable function and be the partial derivative where and is the derivative in the -th variable. Let and . Then we define Sobolev spaces

with a norm

if , and .

Furthermore, for , if and only if for each and

When , denote as for .
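For reference, the standard textbook form of this definition can be written as follows. This is a sketch in the usual notation; the symbols $\Omega$, $f$, $n$, $p$, and $\alpha$ are assumed to match those of Definition 1.

```latex
W^{n,p}(\Omega) := \left\{ f \in L^p(\Omega) : D^{\alpha} f \in L^p(\Omega)
  \text{ for all } \alpha \in \mathbb{N}^d \text{ with } |\alpha| \le n \right\},

\|f\|_{W^{n,p}(\Omega)} :=
  \Bigg( \sum_{0 \le |\alpha| \le n} \|D^{\alpha} f\|_{L^p(\Omega)}^{p} \Bigg)^{1/p}
  \quad \text{if } 1 \le p < \infty,
\qquad
\|f\|_{W^{n,\infty}(\Omega)} :=
  \max_{0 \le |\alpha| \le n} \|D^{\alpha} f\|_{L^{\infty}(\Omega)},

H^{n}(\Omega) := W^{n,2}(\Omega).
```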

Proposition 1 ([26]).

Suppose is a continuous non-polynomial function and is a compact set in , then there are positive integers , constants for and bounded linear functionals such that for any ,

| (13) |

Proposition 2 ([28, 36]).

Suppose is a continuous non-polynomial function and is a compact subset of . For any Lipschitz-continuous function , there exists a shallow neural network such that

| (14) |

where depends on the Lipschitz constant but is independent of .

Lemma 1 ([18]).

The -covering number of , , satisfies

for some constant , independent of , , and .

B.2 Proof of Theorem 1

In this subsection, we present the proof of Theorem 1, which describes the approximation ability of DeepONet. The sketch of the proof is illustrated in Fig. 6.

Proof of Theorem 1.

Step 1: Firstly, we need to verify that the target operator is well-defined.

Due to Assumption 1 (i) and [6, Theorem 6 in Section 6.2], we know that for , Equation (2) has a unique solution. This means that is a well-defined operator for the input space .

Step 2: Secondly, we aim to verify that is a Lipschitz-continuous operator in for .

Consider the following:

| (15) |

where is the solution of Eq.(2) for the input , and is the solution of Eq.(2) for the input . Denote

Therefore, we have:

| (16) |

Since and are in and is in (Assumption 1 (iii)), according to [6, Theorem 4 in Section 6.3], there exist constants and such that (throughout this paper, we consistently employ the symbol as a constant, which may vary from line to line):

| (17) |

The last inequality is due to the boundedness of (Assumption 1 (ii)).

Step 3: In the approximation, we first reduce the operator learning to functional learning.

When the input function belongs to , the output function also belongs to , provided that is of class . By Proposition 1 [26] (given in Subsection B.1), the function can be approximated by a two-layer network with the activation function , which is not a polynomial, in the following form:

| (18) |

where , for , is a continuous functional, and is a constant independent of the parameters.

Denote , which is a Lipschitz-continuous functional from to , because is a Lipschitz-continuous operator and is a linear functional. The remaining task in the approximation is to approximate these functionals by neural networks.

Step 4: In this step, we reduce the functional learning to function learning by applying the operator as in Assumption 1 (iv).

Based on being a Lipschitz-continuous functional in , we have

where is the Lipschitz constant of for .

Furthermore, since is an -dimensional term, i.e., it can be denoted by the -dimensional vector , we can rewrite as , where for . Furthermore, is a Lipschitz-continuous function since is Lipschitz-continuous and is a continuous linear operator.

Step 5: In this step, we will approximate using shallow neural networks.

Due to Proposition 2, we have that there is a shallow neural network such that

| (19) |

where , , and . For notational simplicity, we can replace by an operator .

Combining the above, there is a neural network in such that

| (20) |

where is a constant independent of , , and , and is a constant depending on .

∎

We now discuss the embedding operator in more detail. One approach is to use the Argyris element [2]. This method involves considering the degrees of freedom shown in Fig. 7. In this figure, each denotes evaluation at a point, the inner circle represents evaluation of the gradient at the center, and the outer circle denotes evaluation of the three second derivatives at the center. The arrows indicate the evaluation of the normal derivatives at the three midpoints.

B.3 Proof of Theorem 2

Proof of Theorem 2.

Step 1: To begin with, we introduce a new term called the middle term, denoted as , defined as follows:

This term represents the limit case of as the number of samples in the domain of the output space tends to infinity ().

Then the error can be divided into two parts:

| (21) |

Step 2: For , this is the classical generalization error analysis, and the result can be obtained from [30, 34]. We omit the details of this part, which can be expressed as

| (22) |

where is independent of the number of parameters and the sample size . In the following steps, we are going to estimate , which is the error that comes from the sampling of the input space of the operator.

Step 3: Denote

We first estimate the gap between and for any bounded parameters . Due to Assumption 2 (i) and (ii), we have that

| (23) |

Step 4: Based on Step 3, we are going to estimate

using the covering number of the space.

Let be a -covering of , i.e., for any in , there exists with . Then we have

| (24) |

For , it can be approximated by

Step 5: Now we estimate .

Due to Assumption 2 and direct calculation, we have that

for constants , depending only on the measure and the constant appearing in the upper bound (7).

Next, let . Then,

and thus we conclude that

On the other hand, we have

Increasing the constant , if necessary, we can further estimate

where depends on and the constant appearing in (7), but is independent of and . We can express this dependence in the form , as the constants and depend on the Gaussian tail of and the upper bound on .

Therefore,

| (25) |

∎