New Variants of Frank-Wolfe Algorithm for Video Co-localization Problem

Abstract

The co-localization problem is a model that simultaneously localizes objects of the same class within a series of images or videos. In [3], authors present new variants of the Frank-Wolfe algorithm (aka conditional gradient) that increase the efficiency in solving the image and video co-localization problems. The authors show the efficiency of their methods with the rate of decrease in a value called the Wolfe gap in each iteration of the algorithm. In this project, inspired by the conditional gradient sliding algorithm (CGS) [1], We propose algorithms for solving such problems and demonstrate the efficiency of the proposed algorithms through numerical experiments. The efficiency of these methods with respect to the Wolfe gap is compared with implementing them on the YouTube-Objects dataset for videos.

keywords:

Frank-Wolfe, conditional gradient sliding, video co-localization1 Image and Video Co-Localization Problems

Problems in recognizing and localizing particular objects in images and videos have received much attention recently as internet photo and video sharing have become increasingly popular. Co-localization involves localizing with bounding boxes in a set of images or videos as a sequence of images (frames).

2 Model Setup for Images

Our ultimate goal is to localize the common object in a set of images or in a series of frames of a video. Here we first have a brief review of image and video models based on formulation in [3]. To this end we review the required back grounds in each step as much as the features and variables in the mathematical programming model become understandable. Note that this formulation is based on formulation introduced in [4] for image co-localization. Quadratic formulation that we review in this section localizes any set of images and videos, simultaneously. In [6, 7, 8] also, we can find similar discrete optimization approaches in various computer vision applications.

2.1 Objectness for Images

Suppose that we have a set of given images, and our goal is to localize the common object in each image. One approach is to find candidate boxes in each image that potentially contain an object using objectness [5].

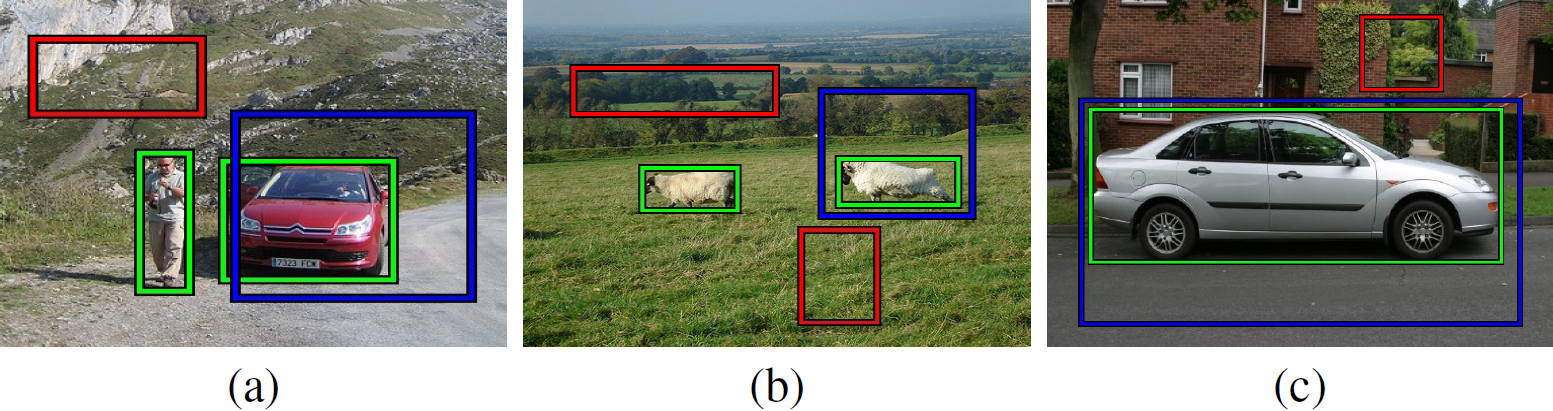

While object detectors for images are usually specialized for one object class such as cars, airplanes, cats, or dogs, objectness quantifies how likely it is for an image window to cover an object of any class. In an image, objects have a well-defined boundary and center, cats, dogs, and chairs, as opposed to indefinite background, such as walls, sky, grass, and road. Figure 1 illustrates the desired behavior of an objectness measure. Green windows must score highest windows fitting an object tight, blue windows should score lower windows covering partly an object and partly the background, and red windows should score lowest windows containing only partial background. This approach and the way we score the windows is designed in [5] and explicitly trained to distinguish windows containing an object from background windows.

Using objectness, we generate candidate boxes (e.g. green boxes in Figure 1) for each image that could potentially contain an object. In other words, if we define to be the set of all boxed in image . Then the goal is to select the box that contains the object, from each image, jointly. Also. for simplicity let and the total number of boxes in all images.

2.2 Feature representation

Assume that we have determined candidate boxes in each of two the different images and for any . A common object in and might be in different shape, scale, color, brightness, angle and many other features. Therefore, it is critical to extract distinctive invariant features from images that can be used to perform reliable matching between different views of an object. David G. Lowe in [9] introduces a method that finds features that are invariant to image scaling and rotation, and partially invariant to change in illumination and 3D camera view point. Using his method, large number of features can be extracted from typical images with efficient algorithms, as well as the cost of extracting these features is minimized. The major stages of computation used to generate the set of image features are as follows.

-

1.

Scale-space extrema detection: The first stage of computation searches over all scales and image locations. It is implemented efficiently by using a difference-of-Gaussian function to identify potential interest points that are invariant to scale and orientation.

-

2.

Keypoint localization: At each candidate location, a detailed model is fit to determine location and scale. Keypoints are selected based on measures of their stability.

-

3.

Orientation assignment: One or more orientations are assigned to each keypoint location based on local image gradient directions. All future operations are performed on image data that has been transformed relative to the assigned orientation, scale, and location for each feature, thereby providing invariance to these transformations.

-

4.

Keypoint descriptor: The local image gradients are measured at the selected scale in the region around each keypoint. These are transformed into a representation that allows for significant levels of local shape distortion and change in illumination.

This process is called Scale Invariant Feature Transform (SIFT). SIFT transforms image data into scale-invariant coordinates relative to local features. Using SIFT we can generate large numbers of features that densely cover the image over full range of scales and locations.

Let be a box in . Then we denote the SIFT feature representation of as where is the dimensional feature descriptor for each box in . Finally, we stack the feature vectors to form a feature matrix .

2.3 Prior, Similarity, and Discriminability of boxes

Let us denote the boxes that contain an instance of the common object as positive boxes, and the ones that don’t as negative boxes. Then a prior is introduced for each box that represents a score that the box is positive. This happens using a saliency map [10] for each box and the prior is in fact the average saliency within the box, weighted by the size of the box. Finally we stack these values into the dimensional vector as the prior vector.

In addition, boxes that have the similar appearance should be labeled the same. This happens through a matrix called similarity matrix denoted by . Similarity matrix of boxes in is based on the box feature matrix described above. Let and be any two boxes in where . Then similarity matrix is computed based on the -distance as

| (1) |

where . For and where boxes and belong to the same image we set . Then the normalized Laplacian matrix [11] is computed as

| (2) |

where is the diagonal matrix composed of row sums of .

2.4 Model Formulation

Associated with each box we define a binary variable where when is a positive box (contains an instance of the common object) and 0 otherwise. Then we define the integer vector variable

| (3) |

Making the assumption that in each image there exist at most 1 positive box, our set of constraints are define by

| (4) |

As we introduced a prior for each box and defined the dimensional vector of average saliency within the boxes, we obtain a linear term that penalizes less salient boxes as part of the objective function:

| (5) |

Similarly, our choice of normalized Laplacian matrix defined in (2) results in a quadratic term that handles the selection of similar boxes:

| (6) |

This is motivated by the work of Shi and Malik [11] in which they have taken advantage of eigenvalues of the Laplacian for clustering by the similarity matrix. In fact, they have shown that with the eigenvector corresponding to the second smallest eigenvalue of a normalized Laplacian matrix we can cluster along the graph defined by the similarity matrix, leading to normalized cuts when used for image segmentation. Also, Belkin and Niyogi [12] showed that this problem is equivalent to minimizing (6) under linear constraints. In fact, the similarity term works as a generative term which selects boxes that cluster well together [4].

Although discriminative learning techniques such as support vector machines and ridge regression has been widely used on many supervised problems in which there are know labels, they can be used in this unsupervised case where the labels of boxes are unknown [13, 14]. Motivated by [15], we consider the ridge regression objective function for boxes:

| (7) |

where is the dimensional weight vector of the classifier, and is the bias. This cost function is being used among discriminative cost functions because the ridge regression problem has a explicit (closed form) solution for weights and bias which implies the quadratic function in the box labels [13]:

| (8) |

where

| (9) |

is the discriminative clustering term and in (9) is the centering projection matrix. Note that this quadratic term allows us to utilize a discriminative objective function to penalize the selection of boxes whose features are not easily linearly separable from other boxes.

Summing up our results in (4), (5), (6), and (8), the optimization problem to select the best box in each image is given by

| (10) |

where parameter regularizes the trade-off between the quadratic terms (6) and (8), and parameter handles the trade-off between the linear term (5) and the quadratic terms (6) and (8). Recall that the linear constraints ensures that one box from each image is selected in the optimal solution. Note that Hastie, Tibshirani, and Friedman in [16] showed that is a positive semi-definite matrix. Also, since matrix is positive semi-definite as well, the objective function of (10) is convex.

3 Model Setup for Videos

Co-localization in a video is very similar to the image case, as a video is a sequence of images that are called frames. While an object might not have an extreme change in size, shape, color, etc in two frames in row, co-localization in a video could be a simpler task at some point. In this section we describe the localization of a common object in a set of videos. In fact, if is a set of given videos, we explore an approach to localize a common object in each frame of each video. More precisely, we consider to be the temporally ordered set of frames of video . Here is the -th frame of the -th video and is the total number of frames, or the length of for and . Similar to what we did in image case, we set to be the set of generated candidate boxes, using objectness [5], for -th of -th video. Then, considering frames in video and m boxes in each frame, we set to be the total number of boxes in , the set of all videos.

Note that, if we set to be the ordered set of all frames in , model (10) returns a single box in each frame (image) as an optimal solution. Although the objective function of this model capture the box prior, similarity, and discriminability within different videos, as we can define a more efficient similarity mapping withing boxes in the sequence of frames in a video.

3.1 Temporal Consistency In Frames of a Video

As discussed earlier in this section, objects in consecutive frames in video data are less likely to change drastically in appearance, position, and size. This is a motivation to use a separate prior for frames or images in video case. Temporal consistency [17, 18, 23, 22, 21, 20, 19] is a powerful prior that is often leveraged in video tasks such as tracking [3]. In this approach, in consecutive frames, boxes with great difference in size and position should be unlikely to be selected together. To this end, a simple temporal similarity measure is defined between two boxes and from consecutive frames with:

| (11) |

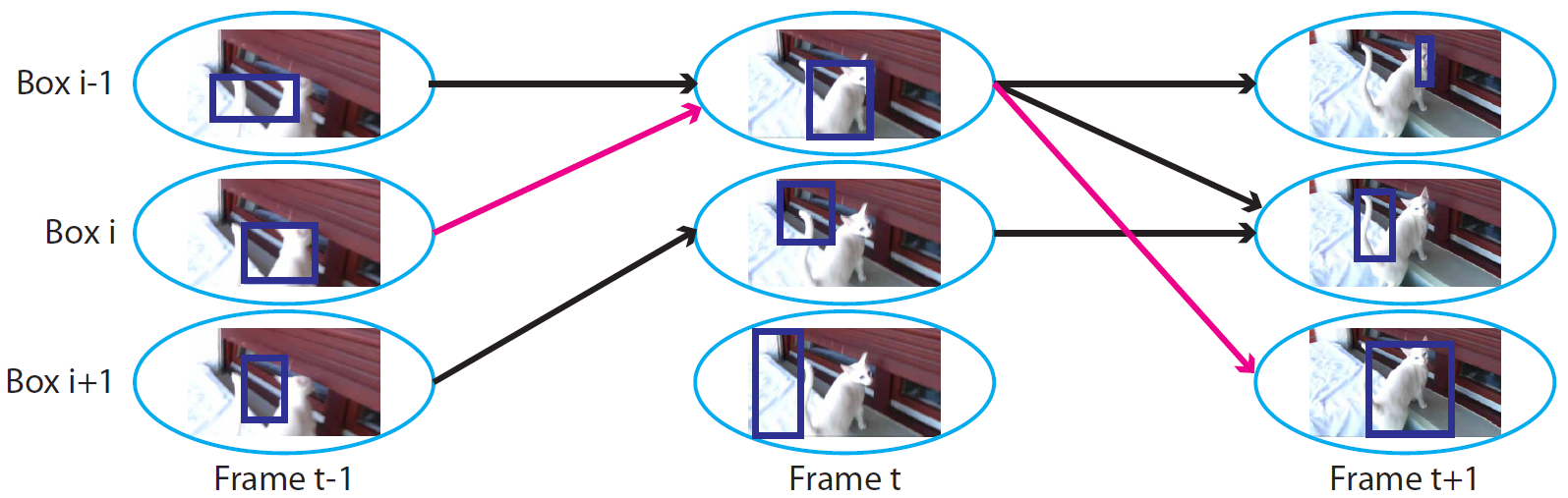

A few comments comes in place about the prior defines in (11). First, is the vector of the pixel area of box and are the vectors of the center coordinates of box , normalized by the width and height of the frame. Second, the metric defined in (11) is a similarity metric that is defined between all pairs of boxes in adjacent frames. From this metric we can define a weighted graph for video for with nodes being the boxes in each frame and edges connecting boxes in consecutive frames and weights of edges defined as temporal similarity in (11). Figure 3 is a graphical representation of graph . For small values of similarity measure with some threshold we disconnect the nodes and remove the edge. Finally, as long as we can create a weighted graph with boxes, any similarity measure other than the temporal consistency in (11) can be used to weight the edges between two boxes, which makes the temporal framework pretty flexible.

Let us define

| (12) |

to be the similarity matrix define by the temporal similarity measure, where and are any two boxes in the set of all boxes in . Similar to our approach to obtain (2), with we can compute the normalized Laplacian

| (13) |

where is the diagonal matrix composed of the row sums of . This matrix encourages us to select boxes that are similar based on the temporal similarity metric (11).

3.2 Video Model Formulation

As we discussed above, temporal similarity suggests a weighted graph for video for . In fact, a valid path in from the the first to the last frame in corresponds to feasible boxes chosen in each frame of . This motivates us to define a binary variable to be on when there is an edge between any two nodes in and off otherwise. In better words, we define the binary variable for video and boxes and in as

| (14) |

In fact, variable corresponds to the existence of edge between boxes and in . Also, we define the binary variable to be 1 if the box in frame of video contains the common object, and 0 otherwise. A type of constraint that we need to consider here is the fact that there might exist an edge between boxes and only if they are boxes in two consecutive frames. Then, for a typical box in frame of video , we define index sets and to be the set of indices of parents and children boxes in frames and , respectively, that are connected to in frame in the graph . Therefore, a required set of constraints for localization in video case are defines by:

| (15) |

The other set of constraints, which are quite similar to the image co-localization case, are the set of constraints restricting each frame of each video to has only one box that contains the common object. These constraints are defined by:

| (16) |

Finally, we define the vectors of variables

where . Then if we combine the temporal terms defined by (13) with the terms in the objective function of the original image model (10), then with constraint defines in (15) and (16), we obtain the following optimization formulation to select the box containing the common object in each frame of video:

| (17) |

where is the trade-off weight for the temporal Laplacian matrix. Note that with the new objective function in problem (17) the extra constraint (15) in video case is necessary and without that the temporal Laplacian matrix would lead the solution to an invalid path. This formulation allows us to incorporate temporal consistency into the image model.

4 Optimization

The formulation (10) obtained to find the best box in each image of the set of the given images is a standard binary constrained quadratic problem. The only issue that makes this problem a non-convex problem are the binary constraints. Relaxing these constraints to the continuous linear constraints lead the problem to the convex optimization problem and can be solved efficiently using standard methods. In fact, first order methods such as like Frank-Wolfe method that we discussed in previous chapters can handle the relaxed problem efficiently as they linearize the quadratic objective function and use a linear optimization oracle in each iteration.

Denoting the feasible region of the problem (17) by , we can follow a similar approach for this problem as we did for (10). We can relax the discrete non-convex set into the convex hull, or the integer hull for this specific case, conv(). Although standard algorithms such as interior point methods can be applied to solve this problem, but as the number of videos increases to hundreds and the dimension of the problem increases exponentially, such problems with complexity of with number of boxes, would perform very weakly. Similarly, for the relaxation of the video problem we will show in our implementations section that suggested first order methods perform efficiently. We will also propose a first order method later in this chapter and will show that it performs better than other first order methods that have been applied to this problem.

Note that, the constraints defining the set are separable in each video. In fact, for each video, these constraints are equivalent to the constraints of the shortest-path problem. This implies that the linear optimization step appears in each iteration of the first order methods are actually shortest-path problems that can be solved efficiently using dynamic programming.

Recall that Frank-Wolfe algorithm is a first order method that in each of its iteration updates the new point toward a direction by calling a linear optimization oracle. This objective function of this linear optimization is in fact a linear approximation of the objective function of (10), and (17). Frank-Wolfe algorithm specifically results in a simple linearizations with integer solution for the image and video co-localization optimization problems. For the image model, the linearlized cost function is separable for each image, and we can efficiently find the best integer solution with some threshold for this problem. For the video model also, the cost function and the constraints are separable for each video and optimizing the linearized function over the feasible region results in the shortest-path problem for each video.

In the following section we will propose an algorithm that can be applied on image and video co-localization optimization problems efficiently and we finally compare the performance of the proposed algorithm to the algorithms that are applied to these problems.

5 Proposed Algorithms

Conditional Gradient Sliding (CGS) algorithm [1], is a first order projection free method for solving convex optimization problems in which the feasible region is a convex and compact set. The major advantage of the CGS algorithm is that it skips gradient evaluation from time to time and uses the same information within some inner iterations. This property of the CGS algorithm becomes helpful when the dimension of the problem as size of the variable is relatively large and computations become more and more expensive.

As showed in previous chapters, CGS algorithm and its proposed variant, Conditional Gradient Sliding with Linesearch (CGS-ls) perform very well in many practical instances. Although the CGS and CGS-ls algorithms out-perform the Frank-Wolfe (FW) algorithm many cases, the variants of FW, such as Away-steps FW or Pairwise FW [3] converge faster to the optimal value than CGS for the image and video co-localization problem as we will show this in numerical experiments later in this chapter.

Motivated from the CGS algorithm and also Away-steps and pairwise FW methods, we propose an algorithms called Away-Steps Conditional Gradient Sliding (ACGS) and Pairwise Conditional Gradient Sliding (PCGS) that perform very well for image and video co-localization problems. ACGS and PCGS methods have iterations of the CGS method but the direction to update the new point in each iteration is motivated from the away steps and pairwise steps in the Away-steps and Pairwise FW. We will also show that the ACGS and PCGS out-perform all of the variants of the FW applied to the image and Video co-localization problem.

5.1 Away-Steps and Pairwise Conditional Gradient Sliding

The basic scheme of the ACGS and PCGS methods is obtained by performing a new search direction in CGS method, if the new direction leads the algorithm to smaller Wolfe gap. Also, similar to the CGS algorithm, the classical FW method (as procedure) is incorporated in this algorithm to solve the projection subproblems in the accelerated gradient (AG) with some approximations. The ACGS and PCGS algorithms are described as in 1 and 3.

| (18) | |||

| (19) | |||

| (20) | |||

| (21) | |||

| (22) | |||

| (23) | |||

| (24) | |||

| (25) | |||

| (26) | |||

| else | (27) | ||

| (28) | |||

| (29) | |||

| end if | (30) | ||

| (31) | |||

| (32) | |||

| (33) |

-

1.

Set and .

-

2.

Let be the optimal solution for the subproblem of

(34) -

3.

If , set and terminate the procedure.

-

4.

Set , with

(35) -

5.

Set and go to step 2.

Note that the purpose of the proposed algorithm is to be applied to the image and video co-localization problems (10) and (17). The objective function in both problems, as discussed before, are convex functions, and the feasible region is a set of finite binary vectors called atoms in for some . We denote this set by and its convex hull conv() by . As is finite, is a polytope.

The first difference between the AGCS(PCGS) and the CGS method is that we incorporate the set of active atoms in the ACGS(PCGS) algorithm. This set keeps record of atoms (integer points) in that are being used for the away direction at each iteration such that the point at current iteration is the sum of corners in reweighted by . This direction that is given in (22), is defined by finding the atom in that maximized the potential of descent given by . Note that obtaining in is fundamentally easier as the linear optimization is over the , the active set of possibly small finite set of points.

The second difference is in the way we update the step-size to update the new iteration point. As we observe in (31) we incorporate a line-search method to obtain a step-size with maximum reduction in the objective toward a prespecified direction from the point at current iteration. With defined in (26) and (29) as the maximum step-size for the line-search step the algorithm guarantees that the new iterates stays feasible in each iteration. Note that the parameter in CGS algorithm is required to be set up in appropriate way to maintain the feasibility in each iteration. Such set ups are represented in [1] as and and in fact, we can us these set ups for CGS steps in step (26) as the upper bound for instead of 1 in line-search step (31). Also, it is easy to check that for the special case of the image and video co-localization problem in which the objective is a convex quadratic function in step (31) has the closed form

| (36) |

if is the quadratic term in the objective. This value is projected to 0 or if is outside of the range for (31) case.

| (37) | |||

| (38) | |||

| (39) | |||

| (40) | |||

| (41) | |||

| (42) | |||

| (43) | |||

| (44) | |||

| (45) | |||

| (46) |

Finally, we incorporate the Wolfe gap as an stopping criterion in the ACGS and PCGS algorithms. In fact, at steps (23) and (41), the algorithms checks if they have reached the given threshold to stop before the preset max number of iterations . As in classical FW, the Wolfe gap is an upper bound on the unknown suboptimality and from the convexity of the objective we have

| (47) |

Note that for the image and video co-localization problem with binary decision variables in a CGS step we have

| (48) |

Also, for we have

| (49) |

On the other hand, for an away step we have

| (50) |

This step is called a drop step. Also, for we have

| (51) |

ACGS and PCGS algorithms are slightly different in the direction that they use to update the new point at each iteration. More precisely, steps (24) to (30) in Algorithm 1 are replaced with steps (42) and (43) in Algorithm 3. Similar to the Paiwise FW, the idea here is to only move weight from the away atom to the CGS atom and keep all other weight unchanged. In other words

| (52) |

for some .

An important property of the formulation (10) and (17) is that their constraints are separable for each image and video. This helps computation to be more efficient if we use parallel computing. This, however, is a property of any first-order method and practically it is very memory efficient. In addition, as a solution to the convex relaxation is not necessarily an integer solution optimal or feasible to the original problem, we need to come up with a solution as close as possible to the obtained relaxation optimum. In image and video co-localization case, the most natural way of finding such a solution is to solve

| (53) |

where is the feasible region of the original problem and is the solution to the relaxed problem. It is easy to check that the projection problem (53) is equivalent to

| (54) |

which for the video model is just a shortest path problem that can be solved efficiently using dynamic programming.

6 Experimental Results

In this section we experiment the proposed Algorithm 1 to the problems introduced in (10) and (17) for image and video co-localization task. Recall that these problems are quadratic problems over the convex hull of paths in a network, the linear minimization oracle in first order methods is equivalent to find a shortest path in the network. We compare the performance of the proposed algorithm with the works in [3] and [24] on FW algorithm and its variants for the similar problem. For this comparison we reuse the codes available and shared for [24, 3, 4] and the included dataset of airplanes consist of 660 variables.

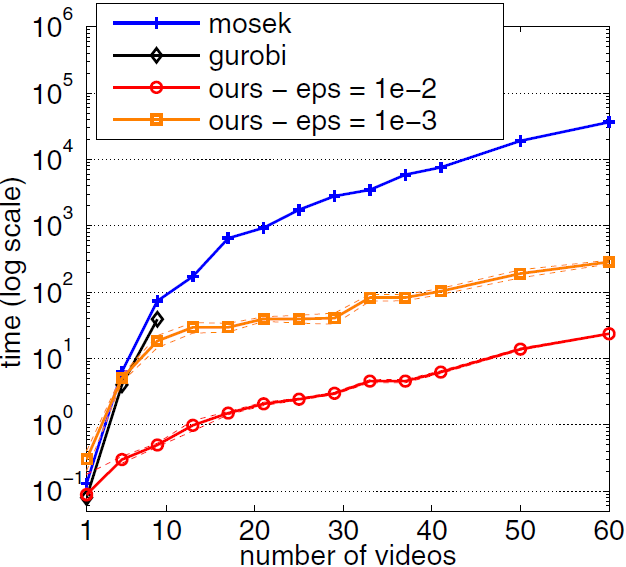

We begin this section by reviewing the performance of Away steps Frank-Wolfe (AFW) and its comparison to the solvers such as Gurobi and Mosek. These results are derived and shown in [3] and the goal in this section is to show how AFW outperforms other methods for our problem of interest. In [24], however, Joulin A., Tang K., and Fei-Fei L. showed that their proposed Pairwise Frank-Wolfe (PairFW) algorithm outperforms any other variants of FW in solving this problem. We will end this section by showing that our proposed ACGS algorithm performs better any first order methods that have been utilized to solve the video co-localization problem.

6.1 FW v.s. Mosek and Gurobi

Algorithm 4 is a variant of FW algorithm proposed in [3] in which the authors examined it on two datasets, the PASCAL VOC 2007 dataset [25] and the Youtube-Objects dataset [26]. This algorithm is in fact the AWF Algorithm introduced in [3] with some slight changes and some extra rounding steps. Also, the set in this algorithm is conv the convex hull of the feasible region of problems (10) or (17). Their implementation of Algorithm 4 was coded in MATLAB and they compare it to two standard Quadratic Programming (QP) solvers, Mosek and Gurobi on a single-core 2.66GHz Intel CPU with 6GB of RAM. In addition, they set for the image model and for the video model and and , for both image and video models. They extracted 20 objectness boxes from each image and sample each video every 10 frames as there is little change frames in short amount time.

| (55) | |||

| (56) | |||

| (57) | |||

| (58) | |||

| (59) | |||

| (60) | |||

| else | (61) | ||

| (62) | |||

| (63) | |||

| end | (64) | ||

| (65) | |||

| (66) | |||

| (67) | |||

| (68) | |||

| (69) | |||

| end | (70) |

The stopping criterion of Algorithm 4 is based on the relative duality gap. This criterion, that is given in function duality-gap() in the algorithm, is defined as , where is the objective function and is its dual. In the implementation of this algorithm, authors consider two values - 2 and - 3 for the stopping threshold .

Figures 4 presents some comparisons of the Algorithm 4 as a variant of FW algorithm with QP solvers Mosek and Gurobi in logarithmic scale. Indeed, this comparison is based on the CPU time performance of the algorithms depending on the number of images and videos, or in better words, the dimension of the decision variables. This time is the time that takes that algorithms reach a duality gap less than the threshold . As we can observe from these plots, the variant of FW algorithm with away steps outperforms the standard QP solvers Mosek and Gurobi.

The reason that we review and represent these comparisons directly from [3]local is that in our implementations in next section we will only compare our proposed algorithms to some other first order methods. These first order methods include the AWF algorithm that we already know from this section that it outperforms standard QP solvers.

The PASCAL Visual Object Classes 2007 dataset [25] provides standardized image data of 20 objects for object classes recognition along with annotations for images and bounding box and object class label for each object. Challenges and competitions have been used to recognize objects from a number of visual object classes in realistic scenes. The YouTube-Objects dataset [26] consists of YouTube videos collected for 10 classes from PASCAL [25]: ”aeroplane”, ”bird”, ”boat”, ”car”, ”cat”, ”cow”, ”dog”, ”horse”, ”motorbike”, and ”train”. Although authors in [3] did the study on multiple objects of this dataset, in our implementations our focus will be on the ”aeroplane” object class.

6.2 Implementations

Knowing that AFW Algorithm 4 outperforms the standard QP solvers Mosek and Gurobi from the works in [3], in this section we compare our proposed variants of the CGS algorithm, the ACGS Algorithm 1 and the PCGS Algorithm 3 to some other first order methods, including the AFW method. More precisely, we will compare the performance of our algorithms to all of the variants of the FW namely, the FW, the FW Algorithm with away steps (AFW), and the pairwise FW Algorithm as discussed in [3]. We also compare our algorithms to the original CGS Algorithm [1]. These comparisons include the duality gap, CPU time, and objective function value versus the iterations.

The implementations are over the YouTube Objects dataset [25] explained in previous section, and specifically its ”aeroplane” class. We obtain the dataset for this class and also the codes for AFW and Pairwise FW algorithms available in the repositories for [3, 24, 4]. We only consider the task of video co-localization with the problem formulation defined in (17) for this implementation. All algorithms are coded in MATLAB and run on a computer with Interl Core i5-6500 CPU 3.2 GHz processor with 16 GB of RAM.

In our implementations, we set all algorithms to stop either after the maximum number of iterations or after reaching the Wolfe duality gap threshold. We set the threshold to and the max number of iterations to 2000 iterations. All of the parameters exist in (17) are set the same as in [24] for consistency in the comparison.

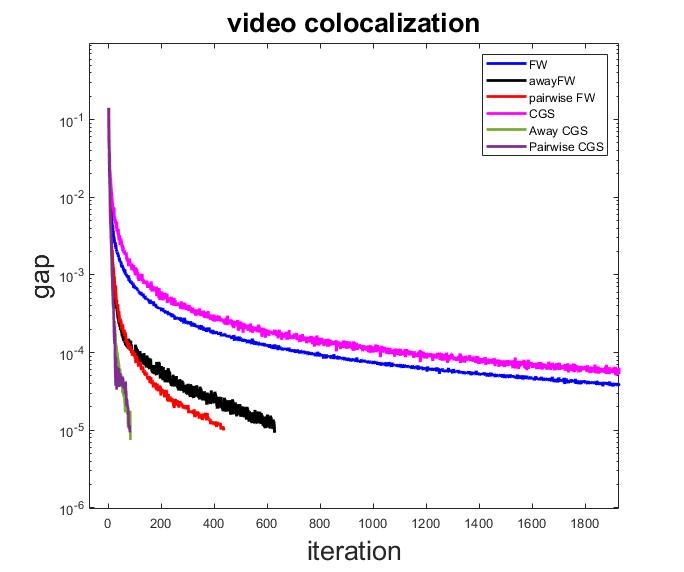

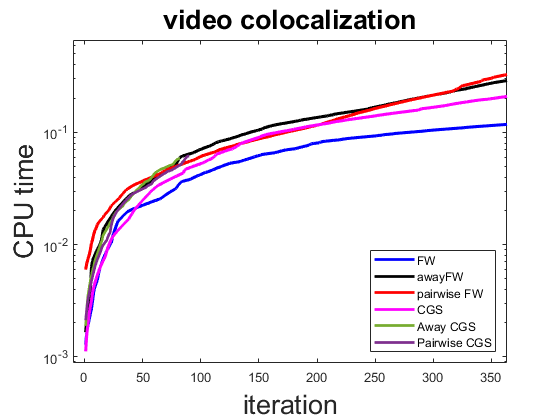

Note that both original versions of FW and CGS algorithms do not reach the desired duality gap before the preset 2000 max number of iterations. Also, the AFW algorithm takes 628 iterations, the Pairwise FW takes 436 iterations, the ACGS takes 84 iterations, and PCGS takes 82 iterations to reach the threshold for the duality gap.

As we observe in Figure 5 both proposed variants of CGS algorithm, the ACGS and PCGS algorithms outperform the FW algorithms and its variants as well as the original CGS algorithm. The performance of the algorithms in terms of the CPU time versus iterations increments also is represented in Figure 6. As we observe in this figure the CPU time per iteration of AFW and ACGS and PCGS are quite similar, although the ACGS and PCGS algorithms reach the gap much earlier than the AFW algorithm.

In addition, while FW algorithm requires one linear optimization oracle per iteration, its CPU time per iteration is not significantly better than the other algorithms. Also, note that out of 84 iteration of the ACGS algorithm, it chooses the away direction in 34 iteration which improves the performance of CGS (with more than 2000 iterations) for this problem significantly.

Finally, authors in [24] proved, for the first time, the global linear convergence of the variants of FW algorithms, AFW and Pairwise FW, under strong convexity of the objective. One potential research work related to the current chapter is figure out the convergence of the proposed algorithms 1 and 3.

References

- [1] Lan, Guanghui, and Yi Zhou. ”Conditional gradient sliding for convex optimization.” SIAM Journal on Optimization 26.2 (2016): 1379-1409

- [2] Nesterov, Y.: Introductory lectures on convex optimization: A basic course, vol. 87. Springer Science & Business Media (2013)

- [3] Joulin, A., Tang, K., Fei-Fei, L.: Efficient image and video co-localization with frank-wolfe algorithm. In: European Conference on Computer Vision, pp. 253–268. Springer (2014)

- [4] Tang, K., Joulin, A., Li, L.J., Fei-Fei, L.: Co-localization in real-world images. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp. 1464–1471 (2014)

- [5] Alexe, B., Deselaers, T., Ferrari, V.: Measuring the objectness of image windows. IEEE trans-actions on pattern analysis and machine intelligence 34(11), 2189–2202 (2012)

- [6] Boykov, Y., Veksler, O., Zabih, R.: Fast approximate energy minimization via graph cuts. IEEE Transactions on pattern analysis and machine intelligence 23(11), 1222–1239 (2001)

- [7] Delong, A., Gorelick, L., Veksler, O., Boykov, Y.: Minimizing energies with hierarchical costs. International journal of computer vision 100(1), 38–58 (2012)

- [8] Delong, A., Osokin, A., Isack, H.N., Boykov, Y.: Fast approximate energy minimization with label costs. International journal of computer vision 96(1), 1–27 (2012)

- [9] Lowe, D.G.: Distinctive image features from scale-invariant keypoints. International journal of computer vision 60(2), 91–110 (2004)

- [10] Perazzi, F., Krauhenbuhl., Pritch, Y., Hornung, A.: Saliency filters: Contrast based filtering for salient region detection. In: 2012 IEEE conference on computer vision and pattern recognition, pp. 733–740. IEEE (2012)

- [11] Shi, J., Malik, J.: Normalized cuts and image segmentation. IEEE Transactions on pattern analysis and machine intelligence 22(8), 888–905 (2000)

- [12] Belkin, M., Niyogi, P.: Laplacian eigenmaps for dimensionality reduction and data representation. Neural computation 15(6), 1373–1396 (2003)

- [13] Bach, F., Harchaoui, Z.: Diffrac: a discriminative and flexible framework for clustering. Advances in Neural Information Processing Systems 20 (2007)

- [14] Xu, L., Neufeld, J., Larson, B., Schuurmans, D.: Maximum margin clustering. Advances in neural information processing systems 17 (2004)

- [15] Joulin, A., Bach, F., Ponce, J.: Discriminative clustering for image co-segmentation. In: 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, pp. 1943–1950. IEEE (2010)

- [16] Hastie, T., Tibshirani, R., Friedman, J.: The Elements of Statistical Learning: Data Mining, Inference, and Prediction, Second Edition. Springer Series in Statistics. Springer (2009)

- [17] Babenko, B., Yang, M.H., Belongie, S.: Robust object tracking with online multiple instance learning. IEEE transactions on pattern analysis and machine intelligence 33(8), 1619–1632 (2010)

- [18] Berclaz, J., Fleuret, F., Turetken, E., Fua, P.: Multiple object tracking using k-shortest paths optimization. IEEE transactions on pattern analysis and machine intelligence 33(9), 1806–1819 (2011)

- [19] Yilmaz, A., Javed, O., Shah, M.: Object tracking: A survey. Acm computing surveys (CSUR) 38(4), 13–es (2006)

- [20] Tang, K., Ramanathan, V., Fei-Fei, L., Koller, D.: Shifting weights: Adapting object detectors from image to video. Advances in Neural Information Processing Systems 25 (2012)

- [21] Perez, P., Hue, C., Vermaak, J., Gangnet, M.: Color-based probabilistic tracking. In: European Conference on Computer Vision, pp. 661–675. Springer (2002)

- [22] Pang, Y., Ling, H.: Finding the best from the second bests-inhibiting subjective bias in evaluation of visual tracking algorithms. In: Proceedings of the IEEE International Conference on omputer Vision, pp. 2784–2791 (2013)

- [23] Hare, S., Saffari, A., Torr, P., Struck, S.: Structured output tracking with kernels. In: IEEE International Conference on Computer Vision. IEEE, pp. 263–27

- [24] Lacoste-Julien, S., Jaggi, M.: On the global linear convergence of frank-wolfe optimization variants. Advances in neural information processing systems 28 (2015)

- [25] Everingham, M., Van Gool, L., Williams, C.K., Winn, J., Zisserman, A.: The pascal visual object classes (voc) challenge. International journal of computer vision 88(2), 303–338 (2010)

- [26] Prest, A., Leistner, C., Civera, J., Schmid, C., Ferrari, V.: Learning object class detectors from weakly annotated video. In: 2012 IEEE Conference on computer vision and pattern recognition, pp. 3282–3289. IEEE (2012)