Neural Network Approximations of Compositional Functions With Applications to Dynamical Systems ††thanks: This work was supported in part by U.S. Naval Research Laboratory - Monterey, CA

Abstract

As demonstrated in many areas of real-life applications, neural networks have the capability of dealing with high dimensional data. In the fields of optimal control and dynamical systems, the same capability was studied and verified in many published results in recent years. Towards the goal of revealing the underlying reason why neural networks are capable of solving some high dimensional problems, we develop an algebraic framework and an approximation theory for compositional functions and their neural network approximations. The theoretical foundation is developed in a way so that it supports the error analysis for not only functions as input-output relations, but also numerical algorithms. This capability is critical because it enables the analysis of approximation errors for problems for which analytic solutions are not available, such as differential equations and optimal control. We identify a set of key features of compositional functions and the relationship between the features and the complexity of neural networks. In addition to function approximations, we prove several formulae of error upper bounds for neural networks that approximate the solutions to differential equations, optimization, and optimal control.

1 Introduction

In recent years, the research at the intersection of the fields of optimal control, dynamical systems and machine learning have attracted rapidly increasing attention. Progresses have been made at a fast pace, for instance [7, 8, 10, 11, 12, 14, 21, 22, 25]. In these papers, machine learning based on artificial neural networks are used as an effective tool for finding approximate solutions to high dimensional problems of optimal control and partial differential equations. Many of these problems are not solvable using other numerical methods due to the curse of dimensionality, i.e. the solution’s complexity grows exponentially when the dimension of the problem increases. Why do neural networks work well? Despite of years of research efforts, the fundamental reasons are far from clear. Reviews of some related topics and results can be found in [6, 13]. It is proved in [2] that the neural network approximation of a function whose gradient has an integrable Fourier transform can achieve an error upper bound , where is the number of neurons, i.e., the complexity of the neural network. This rate has a constant exponent that is independent of the problem’s dimension, . Therefore, the curse of dimensionality is mitigated. However, it is pointed out in [2] that a constant in can indirectly depend on . If is exponentially large in , then an exponentially large value of the neural network’s complexity would be required for the approximation to be bounded by a given error tolerance. Similar issues are also brought up in [13] in a review of various neural network approximation methods. If norm is used to measure errors, which is the norm adopted in this paper, the complexity of deep neural networks for the approximation of compositional functions is bounded above by for some constant , where is the error tolerance [19, 20, 23]. This theory is based on the assumption that complicated functions can be represented by the compositions of relatively simple functions. The upper bound of complexity, , has an unknown constant which can grow with the increase of the function’s input dimension. The growth rate depends on various factors or features associated with the function. The relationship is not fully understood. What makes the problem even more challenging is that some functions studied in this paper are associated with dynamical systems such as the trajectory of differential equations or the feedback law of optimal control. For these problems, most existing results including those mentioned above are not directly applicable to prove or guarantee error upper bounds.

In this paper, we aim to reveal the relationship between the complexity of neural networks and the internal structure of the function to be approximated. A theoretical foundation is developed that supports the error analysis of neural networks for not only functions as input-output relations, but also functions generated based on the trajectories of differential equations and the feedback law of optimal control. By the structure of a function, we mean compositional structure. In engineering applications, it is quite common that a complicated function with a high input dimension is the result of a sequence of compositions of simple functions that have low input dimensions. These simple functions as well as the network structure determine the neural network’s complexity that is required to accurately approximate the compositional function. The main contributions of the paper include: 1) We develop an algebraic framework and an approximation theory for compositional functions. These results form the theoretical foundation for the proofs of several theorems on the error upper bound and neural network complexity in the approximation of compositional functions. The theoretical foundation is developed in a way so that it supports the error analysis for numerical algorithms. This capability is critical because it enables the analysis of approximation errors for problems for which analytic solutions are not available, such as differential equations and optimal control. 2) We identify a set of key features of compositional functions. These key features are quantitative. Their value determines an upper bound of the complexity of neural networks when approximating compositional functions. These key features serve as sufficient conditions for overcoming the curse of dimensionality. 3) We prove several formulae of error upper bounds for neural networks that approximate the solutions to differential equations, optimization, and optimal control.

2 Composition vs. projection: what is the difference?

A widely used idea of representing functions is to project a function to a sequence of finite dimensional subspaces. For instance, a large family of functions can be represented by Fourier series

| (1) |

In this representation, the -th terms, , are considered to be the elementary units of the function. Because the projection operator is linear, this representation is closed under linear combination in the following sense: If both and are Fourier series, then so is for any constants and ; and the -th term of is determined by the -th term of and , i.e., the elementary units of and are the elementary units of as well. However, the Fourier series representation is not closed under some other important algebraic operations such as function composition. It is obvious that , or , is not in the form of Fourier series. Although one can find a Fourier series representation for , its -th term is not determined by the -terms of and , i.e., the -th terms of and are not elementary units in the Fourier series of .

Let’s consider functions generated through a network of multiple function compositions. A formal definition of compositional functions is given in the next section. Algebraic expressions of compositional functions can be cumbersome due to the complexity of the function. Instead, we use directed graphs. As an example, consider the following two vector valued functions

| (2) |

In this paper, we use bold letters, such as and , to represent a vector or a vector valued function and regular font to represent a scalar or a scalar valued function. The functions and can be represented by a directed graph in which each node is a function with a relatively low input dimension (Figure 1). These nodes are the elementary units of the function.

Different from representations based on projection, the family of compositional functions is closed under both linear combinations and compositions. For example, is a compositional function; and every elementary unit (or node) of and is an elementary unit of . The graph of and its nodes are shown in Figure 2.

If , then is a compositional function; the nodes of and are also nodes of (Figure 3). In addition to linear combinations and compositions, we will prove in the next section that the family of compositional functions is also closed under some other algebraic operations, including inner product, division, and substitution.

Why is this interesting? In real-world applications, mathematical models of complicated problems oftentimes have a compositional structure in which the model can be represented by the composition of multiple functions that are relatively simple. The compositional structure reflects the interactive relations among basic components and sub-systems determined by physical laws or engineering design and thus contains valuable insights of the problem. In addition to functions as input-output relations, many computational algorithms are compositional. For instance, an iterative algorithm is a process in which a function is repeatedly applied to the result from the previous step until the process converges to a small neighborhood of the target point. The iterative process is equivalent to a finite sequence of function compositions. For discrete dynamical systems, their trajectories are all compositional functions. Obviously, neural networks are also compositional functions. In fact, the family of neural networks is closed under the operation of composition and linear combination. From the wide spectrum of subjects covered by compositional functions, we believe that an algebraic framework for compositional functions can provide a unified theoretical foundation for the study of neural networks in the approximation of not only functions as input-output relations, but also other subjects that have effective iterative algorithms, such as differential equations and optimal control. Because the family of compositional functions are closed under linear combinations and compositions, it simplifies the problem of tracking error propagations inside complicated compositional functions.

Mathematical study on compositional functions has a rich history. In Hilbert’s 13th problem it was conjectured that the roots of some 7th order polynomials cannot be represented as compositions of functions of two variables. Hilbert’s conjecture was disproved by Arnold and Kolmogorov. In [15] Kolmogorov showed that any continuous function defined on a d-dimensional cube can be exactly represented by a composition of a set of continuous one-dimensional functions as

where and are continuous univariate functions. This astonishing result of Kolmogorov’s representation theorem reveals that compositional structure could be viewed as an inherent property of any high-dimensional continuous functions. By relaxing Kolmogorov’s exact representation to approximation and leveraging on techniques developed by Kolmogorov, Ref. [16] gives a direct proof of a universal approximation theorem of multilayer neural networks for any continuous functions.

In deep learning, compositional functions have been addressed by many authors [3, 5, 6, 19, 20, 23]. In [3], which is a study of statistic risk with a given sample size, the approach takes the advantage of the fact that deep networks with ramp activation functions can be represented as a tree rooted at the output node. In [5, 6], tree-like function spaces are introduced. They are considered as the natural function spaces for the purposes of approximation theory for neural networks. Compositional functions in [19, 20, 23] are defined using directed acyclic graphs. Our definition of compositional functions is also based on directed acyclic graphs. However, we give an emphasis on the graph’s layering, in addition to its nodes and edges.

Before we introduce the main theorems, it is important to develop a theoretical foundation for error estimation. In Section 3.1, some basic concepts about compositional functions are defined. In Sections 3.2 and 3.3, some algebraic properties of compositional functions are proved. These concepts and properties form a set of pillars that supports the approximation theory for compositional functions. In Section 4, neural networks are introduced as a family of special compositional functions. The family of neural networks is closed under several algebraic operations including linear combination, composition, and substitution. This property enables the capability for one to compose complicated deep neural networks based on simple networks and algebraic operations. Also in Section 4, a set of key features of compositional functions is identified. For neural network approximations, we prove an error upper bound that is determined by the key features. Because iterative computational algorithms can be considered as compositional functions, the algebraic framework and the approximation theory are applicable to the solutions to differential equations, optimization, and optimal control. The results are proved in Sections 5 and 6.

3 Algebraic properties of compositional functions

Compositional functions are functions consisting of multiple layers of relatively simple functions defined in low dimensional spaces. These simple functions are integrated together through algebraic operations such as function composition and linear combination. If compositional functions are estimated by neural networks, errors are propagated through layers of functions in a complicated way. Analyzing the estimation error requires a good understanding of the interplay between the error propagation and the internal structure of the compositional function. In this paper, some proofs of theorems on neural network solutions to differential equations and optimal control are impossible without first developing an algebraic foundation and approximation theory for the family of compositional functions. In the following, we first define a set of fundamental concepts and then prove some basic algebraic properties of compositional functions. In Table 1, we summarize a list of repeatedly used notations in the following sections.

|

|

|

|

||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|

|

|

|

|

|

||||||||

|

|

|

|

|

||||||||

|

|

|

|

|

||||||||

|

|

|

|

|

||||||||

|

|

|

|

|

||||||||

|

|

|

|

||||||||

|

|

|

|

||||||||

|

|

|

|

|

||||||||

|

|

||||||||||

3.1 Definitions and notations

A directed acyclic graph (DAG) is a directed graph without directed cycles. A DAG consists of a set of nodes and a set of directed edges. If each node is a function with input (inward edges) and output (outward edges), then the DAG represents a compositional function. Figure 4 is an example of DAG associated with a function . The nodes that have no inward edges are call input nodes; those that have no outward edges are called output nodes.

DAGs allow layerings that agree with the direction of edges. More specifically, suppose is a directed edge in a DAG in which is a node in the -th layer and is in the -th layer, then . For the compositional function in Figure 4, it has a total of five layers. In this paper, the set of input nodes is always the first layer, labeled as layer ; two layers next to each other are labeled using adjacent integers; the set of output nodes forms the terminal layer which has the highest layer number. We would like to point out that the layering of a DAG is not unique. Moreover, a function may have more than one associated DAGs. For example, consider the following function

| (3) |

In Figure 5, three DAGs are shown. As a function, they all represent . Figure 5(a) has three layers. Both (b) and (c) have four layers. However, the set of nodes in two of the layers are different. In Figure 5, some nodes are colored and some are white. The reason will be explained following Definition 1.

Definition 1

(Compositional function) A compositional function is a triplet, , consisting of a function, , a layered DAG, , and a mapping, . If is a node in , then equals an integer that is the layer number of . We also use subscripts to represent the layer number, i.e., is the -th node in the -th layer. Every node in that has at least one inward edge is a function, , where is the number of inward edges of the node. A node that has no inward edge is called an input node. It can take any value in the domain of . All input nodes in are labeled as layer , called the input layer. A node that has no outward edge is called an output node. All output nodes are located in the final layer, called the output layer. All layers between input and output layers are called hidden layers. We always assume that the ranges and domains of all nodes are compatible for composition, i.e. if is an edge in , then the range of is contained in the interior of the domain of .

For each node in a compositional function, the number of inward edges, , defines the number of free variables of the node. Each node takes its value in , i.e., all nodes are scalar valued functions. The set of nodes and edges in are denoted by and , respectively. Given a node, , if it is a 1st order polynomial, then it is called a linear node. Otherwise, it is called a general node. All input nodes are treated as linear nodes. In the figure of , linear nodes have white color. The set of linear nodes are denoted by and the set of general nodes are denoted by . The set of input nodes, i.e., the first layer of , is denoted by . Similarly, the layer formed by output nodes is . Therefore, is the largest layer number in the graph. It is denoted by . Given an edge . If , then we say that this edge skips layers.

Example 1

A complicated function defined in high dimensional spaces can be a compositional function consisting of simple nodes. In a model of power systems [1, 24], the electric air-gap torque, , is a vector valued function that can be approximated by

| (4) |

where is the number of generators, , , are rotor angles of the generators. Other notations represent constant parameters. This function is used to determine the equilibrium point of the power system. It is also a part of the torques applied to a rotor in a generator. If the system have generators, then the model is a function . This function can be represented as a compositional function with simple nodes. Shown in Figure 6 is in a DAG with four layers. Except for the layer of and , all nodes are linear. It will be shown later that linear nodes in a compositional function do not increase the complexity of the neural network that approximates the function. For all general nodes, their domains have a low dimension, . Theorems in this paper help to reveal that a low input dimension of individual nodes implies low complexity when the function is approximated by neural networks. The complexity is not directly dependent on the overall dimension, in this case .

Example 2

Many numerical algorithms are compositional functions. For instant, numerical algorithms of ODEs such as the Euler method and all explicit Runge-Kutta methods are compositional functions. Consider

| (5) |

For any initial state , the following is a numerical solution based on the forward Euler method:

| (6) |

where is the step size. This algorithm is, in fact, a compositional function. The structure of its DAG is shwon in Figure 7. Each box represents a layer that may have multiple nodes if . In fact, the box of is a DAG if is also a compositional function.

Example 3

Neural networks are compositional functions. Let be an activation function. A function

| (7) |

is a shallow neural network with a single hidden layer (Figure 8), where , and are parameters. Similarly, deep neural networks are also compositional functions.

In this paper, the algebraic foundation and approximation theory on compositional functions are developed as a unified framework for various applications of neural networks. Before we introduce these applications, we must define some basic concepts about algebraic operations between compositional functions, such as linear combination, multiplication, division, substitution and composition. Many complicated functions and numerical algorithms, such as the solutions of differential equations or optimal control, are the results of a sequence of these algebraic operations of compositional functions.

Definition 2

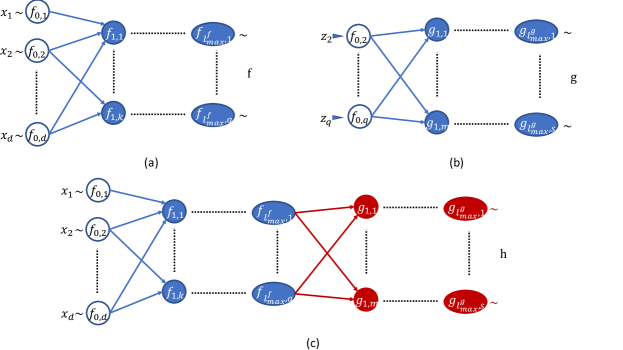

(Linear combintation, multiplication and division of compositional functions) Given compositional functions and in which both and are from into . Let be constant numbers. Define

| (8) |

Then is a compositional function associated with a layered DAG that is induced by and . The induced DAG and its layering are defined as follows (see Figure 9 for an illustration).

-

1.

Input layer: Let , i.e., the input layer of is formed by overlapping the input nodes of and . The outward edges of the input nodes in and are combined.

-

2.

Hidden layers: all nodes in are nodes of . The layer number of each node is kept the same as that in their original DAG. The edges pointing to or from these nodes are kept unchanged.

-

3.

Output layer: Create output nodes. Each one has two inward edges. For the -th node, where , one inward edge starts from and the other one from . Each output node is a function

(9) The layer number of output nodes in is

(10)

One can define the induced DAGs for the compositional functions and (if , and are scalar valued functions and ) by modifying the output layer accordingly.

This definition implies that the family of compositional functions is closed under inner product and division, in addition to linear combination and composition. Unless otherwise stated, in this paper we always use the induced DAG for functions that are generated through algebraic operations of compositional functions. In the following, we define the induced DAG for a few more types of algebraic operations involving compositional functions. In Figure 10(a)-(b), two compositional functions, and , are shown. We would like to substitute for the node , a function that has the same number of inputs as . Figure 10(c) shows a layered DAG of , the function resulting from the substitution. The blue nodes and edges are those from . The red nodes and edges are those from . In the substitution, all input nodes of are removed; their outward edges are attached to the corresponding nodes in ; is replaced by the output node of . The layering is changed depending on the depth of .

Definition 3

(Node substitution) Given a compositional function . Let be a node in the -th layer, where . Let , , be a compositional function. Substituting for results in a new compositional function, . The induced DAG and its layering are defined as follows.

-

1.

-

2.

If is the ordered set of inward edges of , then each is replaced by the set of outward edges of . For the outward edges of , their new starting node in is the output node of . All other edges in and are kept unchanged.

-

3.

Let be a positive integer so that be the highest layer number of layers in from which at least one node has an outward edge pointing to . The layering in is defined as follows.

(11)

Many numerical algorithms are iterative, i.e., the same function is repeatedly applied to the result from the previous iteration until the process converges to a small neighborhood of the target point. If each iteration is a compositional function, then the numerical process can be considered as a sequence of compositions of compositional functions.

Definition 4

(Composition of compositional functions) Given positive integers , and . Consider two compositional functions and , , . Let . Then is a compositional function. Stacking horizontally to the right of forms the induced DAG of as illustrated in Figure 11. More specifically,

-

1.

.

-

2.

The outward edges from the input nodes in are attached to the corresponding output nodes in as new starting nodes.

-

3.

The order of layers are kept unchanged, i.e.,

(12)

In this study, we find that the layering of a DAG plays an important role in the propagation of approximation errors through a network of nodes. For example, given a node in the -th layer of a compositional function. The variation of this node impacts the value of nodes in the layers , but not those in the layers . In the following, we introduce the concept of compositional truncation along a layer. The concept leads us to the definition of the Lipschitz constant associated with nodes. Consider a compositional function with five layers shown in Figure 12(a). Its truncation along the layer is a function, , that is shown in Figure 12(b). In the truncation process, we first remove all edges that end at nodes in layers . Subsequently, and become isolated nodes, i.e., they have neither outward nor inward edge. Remove them from the graph. Align all nodes in layers , , , and , to form the input layer of the truncated function. In the new input layer, the nodes from layer in (in this example, and ) are kept in the same order; the nodes moved up from layer (in this case and ) are located at the lower half of the layer. Their order is not important. A set of dummy variables, , is introduced as the input of , the truncated function. It is important to clarify that the domain of is the intersection of the domains of all nodes that are directly connected with in . For example, in Figure 12 can take any value that is in the domains of , and simultaneously. The value is not necessarily in the range of the original node in .

Definition 5

(Compositional truncation) Given a compositional function . Let be an integer, . The compositional truncation of along layer is another compositional function, . Its layered DAG is obtained by the following process: (i) removing all edges in whose ending nodes are located in layers ; (ii) removing all isolated nodes whose inward and outward edges are all removed in (i); (iii) forming the input layer of by aligning all nodes in layers in that are not removed in (ii). We find it convenient in discussions if the order of ’s in remains the same in the new input layer and they are located at the top portion of the layer. All other nodes in the new input layer are located at the bottom of the layer organized in any order one may choose. Given any , , its layer number in is . The variables associate with the input layer of are called dummy inputs. Each dummy input can take any value that is in the common domain of all nodes in that have inward edges starting from this input node.

The concept of the Lipschitz constant is essential in several theorems proved in this paper. In the following, we define the Lipschitz constant associated with nodes based on a given norm. For vectors in , its -norm is

| (13) |

Definition 6

(The Lipschitz constant associated with a node) Given a compositional function and a constant . Consider a node, , in the -th layer, where . Let be the truncation of along the -th layer. If is a constant satisfying

| (14) |

for all and within the domain of , is called a Lipschitz constant (under the -norm) associated with . In some discussions, we denote the constant by to differentiate Lipschitz constants associated with different functions.

We should point out that a Lipschitz constant associated with a node, , is different from the Lipschitz constant of . The later is about the rate of change of when is changed; the former is about the rate of change of , the compositional truncation, when the value of is changed, where the node itself is treated as a free variable. The role of the Lipschitz constant of individual nodes in a DAG is studied in [19, 20], where a good error propagation phenomenon is illustrated. Definition 6 provides a different way of tracking error propagation. It leads to a feature (to be introduced in Section 4) that has direct impact on an error upper bound of function approximations using neural networks.

3.2 Some properties of Lipschitz constants

Algebraic operations may change Lipschitz constants associate with nodes. The propositions in this section are about the rules that Lipschitz constants follow in some algebraic operations of compositional functions.

Proposition 1

Given a compositional function, , and a node, , in the -th layer. Suppose is another compositional function in which is a scalar valued function that has the same domain as . Let be the compositional function resulting from substituting for , assuming that the range of is compatible for the substitution. For all nodes in in layers , their associated Lipschitz constants can be carried over to . Or equivalently, one can assign

| (15) |

where is the highest layer number of layers in from which at least one edge points to .

Proof. From Definition 3, substituting for does not change the nodes and edges above the -th layer. The number of nodes in the -th and higher layers in is the same as that in the layers in . All edges in that end at nodes in the layers have a one-to-one correspondence with the edges in that end at nodes in the layers . Therefore, the compositional truncation of along the -th layer () is identical to the compositional truncation of along the -th layer. Because Lipschitz constants are defined based on compositional truncations, (15) holds true.

The following result is about the change of Lipschitz constants after the composition of two compositional functions.

Proposition 2

Given positive integers , and . Consider two compositional functions , , and , . Let . Let , and be some Lipschitz constants of , and under a -norm.

-

1.

Given any node in . As a node in , a Lipschitz constant associate with this node is .

-

2.

A Lipschitz constant of is .

Proof. Part (2) is well known. For part (1), let be the truncation of along the -th layer in which is located. For , the truncation along the layer of the same node is . For any ,

| (16) |

3.3 Some properties of error propagation

For compositional functions in which all nodes have low input dimensions, one can approximate each node using a shallow NN. Substituting these neural networks for the corresponding nodes that they approximate, the process results in a deep neural network. The error of using this deep neural network as an approximation of the function depends on all “local errors”, i.e., the estimation error of each node. These local errors propagate through the DAG network interactively. In this section, we study the relationship between the Lipschitz constants associated with nodes and the propagation of local errors. To measure errors, we need to introduce an appropriate norm. Given a function, , where is a compact set. Its -norm is the essential supremum

| (17) |

Suppose is an approximation of . We say that the approximation error is bounded by if

| (18) |

If both functions are continuous, this is equivalent to

| (19) |

In this paper, all approximations are measured using a uniformly bounded error like the one shown in (19). If is a vector valued function, a -norm is used to measure the estimation error

| (20) |

When deep neural networks are used to approximate compositional functions, two types of algebraic operations are frequently used. One is node substitution and the other is composition. The following propositions are about the relationship between the approximation errors before and after an algebraic operation.

Proposition 3

Let be a compositional function. Let be a set of nodes. Under a -norm, let be a Lipschitz constant associated with , . Suppose

| (21) |

is a set of compositional functions in which has the same domain as and a compatible range for substitution. Assume that

| (22) |

where , , are some positive numbers. Let be the function obtained by substituting for , . Then

| (23) |

Proof. We prove the case for and . The proof can be extended to arbitrary through mathematical induction. Let . Let and be the compositional truncation of and along the layer in which is located. From the proof of Proposition 1, we know that . The following fact follows the definition of compositional truncations,

| (24) |

where , the dummy input of the truncated function, takes the value of the corresponding nodes in before the truncation. Therefore, it depends on . The value of is similarly defined. Without loss of generality, assume that is the first node in its layer. Then, takes the value of in the evaluation of , and takes the value of . The value of is different from that of because is replaced by . All other components in and are equal because is the only node that is substituted. We have

| (25) |

where takes the value of nodes that have edges pointing to . If , suppose is ordered in consistent with the layer number of the nodes, i.e., . Let be the function obtained by substituting for in ; and be the function obtained by substituting both and for and , respectively. When the node is considered as a node in , its associated Lipschitz constant as a node in can be carried over to because (Proposition 1). Therefore, is a Lipschitz constant associated with as a node in . Applying the proved result for to both and , we have

| (26) |

for all in the domain of .

Given a function . A sequence of repeated self-compositions is denoted by

| (27) |

Proposition 4

Consider compositional functions , , , , and , . Suppose that the domains and ranges of them are compatible for compositions. Let and be Lipschitz constants of and under a -norm. Suppose , and are functions satisfying

| (28) |

for some , and . Given any integer , we have

| (29) |

and

| (30) |

Proof. The inequality (29) is true if because

| (31) |

Suppose the inequality holds if the power of is . Then,

| (32) |

The inequality (30) is implied by (29) and the following inequality

| (33) |

The following proposition is simply a version of the triangular inequality. It is useful when a sequence of approximations are applied to a function.

Proposition 5

Given a sequences of functions , . Suppose is a sequence of positive numbers satisfying

| (34) |

Then,

| (35) |

4 Neural networks as compositional functions

Let be the space of scalar valued functions whose derivatives up to -th order exist almost everywhere in and they are all essentially bounded. The Sobolev norm of is defined by

| (36) |

where represents a vector of integers and . Most theorems proved in this paper are based on similar assumptions. They are stated as follows.

Assumption A1 (Compositional functions) Given a compositional function . We assume that is a function from to for some and positive integers and . Assume that the nodes, , are functions in for some integers and . The domain of is a hypercube with edge length . The ranges and domains of all nodes are compatible for composition, i.e., if is an edge in , then the range of is contained in the interior of the domain of .

In the following, we introduce four key features of compositional functions. These features play a critical role in the estimation of the complexity of deep neural networks when approximating compositional functions.

Definition 7

(Features of compositional functions) Given a compositional function , , that satisfies A1. The notations , and represent positive numbers satisfying

| (37) |

for all nodes in , where is defined under a fixed -norm. These upper bounds, , and , together with are called features of the compositional function.

Example 4

The function in (44) is the vector field that defines the Loranz-96 model [17],

| (44) |

where , , and is a constant. Let’s treat as a compositional function, , with a DAG shown in Figure 13.

All general nodes in are located in the second layer. They are defined by

| (45) |

The formulae of linear nodes are shown in Figure 13. All general nodes have the dimension and the domain . To compute the Lipchitz constant associated with the general node, , we construct the truncation of along the second layer, which is given by

| (46) |

where the dummy inputs for all . Clearly , for all . Since is smooth, we can set for any integer . Thus,

Now it is straightforward to compute the features of the compositional function :

| (47) |

Shallow and deep neural networks are defined as follows. They are compositional functions in which all general nodes are based on a single function.

Definition 8

(Neural network) A Neural network is a compositional function of a special type. Given a function , which is called an activation function.

-

1.

A shallow NN is a function

(48) where and , , are parameters. Its DAG is shown in Figure 14(a), which has one hidden layer and a linear output node. Each node in the hidden layer, , is a function of the form . In this paper, unless otherwise stated, a shallow neural network is a scalar valued function.

-

2.

A deep neural network is a compositional function , , that has multiple hidden layers (Figure 14(b)). The output layer consists of linear nodes. Every node in hidden layers is a function of the form .

-

3.

A node in a hidden layer is called a neuron. The complexity of a neural network or deep neural network is the total number of neurons in it.

Remark 1

In many applications of neural networks, edges connecting neurons do not skip layers. This is different from the deep neural network defined in Definition 8 where edges can skip layers. It is addressed later in this section that one can construct neural networks without edges that skip layers by adding identity functions in the DAG. We are not the first ones in the study of DAG based neural networks. For instance, error analysis and potential computational advantages were addressed for DAG based neural networks in [4, 19, 20, 23] and references therein. In this paper, all neural networks are DAG based. For the reason of simplicity, we do not always specify the layered DAG, and , when introducing a neural network.

Remark 2

In Definition 8, there is no linear nodes in the hidden layers. This requirement is not essential because one can easily modify the DAG of a neural network to remove all linear nodes by merging them into neurons. Figure 15(a) shows a portion of a DAG in which is a linear node. It evaluates the summation of the three inputs. This linear node is directly connected with a neuron . We can remove the linear node; and then connect its inward edges directly to the neuron. Adjusting the neuron’s parameters accordingly, the result is a simplified DAG shown in Figure 15(b). Following the same process, one can remove all linear nodes in hidden layers without increasing the number of neurons. The compositional function resulting from this process is a deep neural network defined in Definition 8. In the rest of the paper, we call a function a deep neural network even if it has linear nodes in addition to neurons, with the understanding that the function is equivalent to a deep neural network as defined in Definition 8 by merging all linear nodes into neurons.

Based on Remark 2, it is obvious that the family of neural networks is closed under several algebraic operations, including linear combination, substitution, and composition.

Proposition 6

Given two neural networks, and . Suppose that their domains and ranges are compatible for the operations discussed below. Then the following statements hold true.

-

1.

The linear combination, for any constants and , is a neural network.

-

2.

The compositional function, , is a neural network.

-

3.

If a neuron in is substituted by , the resulting compositional function is still a neural network.

Based on this proposition, one can compose complicated deep neural networks using simple and shallow neural networks through sequences of algebraic operations. When this approach is applied to approximate functions, the approximation error of a complicated neural network depends on the accuracy of individual shallow neural networks that are used as building blocks in the composition. The following theorem on the approximation error of shallow neural networks, which is proved in [18], is fundamental in the proof of several theorems about deep neural network approximations of various subjects, including the solutions of differential equations, optimization problems and optimal control.

Theorem 1

(Shallow neural network approximation [18]) Let be integers. Let be an infinitely differentiable function in some open interval in . Assume that there is a point, , at which and all its derivatives do not equal zero. For any positive integer, , there exist vectors and a constant satisfying the following property. For any function, in , there exist coefficients such that

| (49) |

The constant is determined by and but is independent of and .

This theorem is based on the assumption that the domain of is a hypercube whose edge length is 2. However, the domain of each node in a compositional function do not necessarily satisfy this assumption. The following is an error upper bound for defined in a hypercube that has an arbitrary edge length. The result is proved for domains centered at the origin. Applying translation mappings, the result can be extended to hypercubes centered at any point.

Corollary 1

Making the same assumptions as in Theorem 1 except that the domain of is . Then, there exist vectors , , a constant and coefficients such that

| (50) |

The constant is determined by and but independent of and .

Proof. Define a mapping and a new function . The domain of the new function is . From Theorem 1, we have

| (51) |

for all in . It is obvious that

| (52) |

Therefore,

| (53) |

Consider a compositional function in which the nodes, , are functions in for some positive integers . From Theorem 1, there exists a set of constant and a set of shallow neural networks, , such that

| (54) |

where is the complexity, i.e. the number of neurons in . Substituting the shallow neural networks for all general nodes in results in a new compositional function consisting of neurons and linear nodes, i.e. a deep neural network. It is an approximation of . Its error upper bound is proved in the following theorem.

Theorem 2

Consider a compositional function , , and a set of its features (Definition 7). Suppose that the function satisfies A1.

-

1.

For any integer , there exists a set of shallow neural networks of width that approximates the nodes in . The deep neural network, , resulting from substituting the shallow neural networks for all general nodes in has the following error upper bound,

(55) where is a constant.

- 2.

-

3.

The complexity of is .

Remark 3

Theorem 2 implies that approximations using neural networks can avoid the curse of dimensionality, provided that the features of the compositional function do not grow exponentially with the dimension. More specifically, for a compositional function defined in a domain in , the complexity of its neural network approximation depends directly on the features of the function, but not directly on the dimension . Given a family of compositional functions for . If the value of their features do not increase exponentially as , then neural network approximations of these compositional functions do not suffer the curse of dimensionality. We would like to point out that the inequality (55) can be found in [23]. Different from [23], we rigorously prove the inequality as well as the relationship between and the features of compositional functions.

Proof of Theorem 2. For every node , there exists a shallow neural network, , that approximates the node (Theorem 1 and Corollary 1). If the number of neurons in is , we can find one that satisfies

| (57) |

for all in the domain of , where depends on and but not . Suppose is the largest . From Proposition 3, satisfies

| (58) |

Remark 4

The expression of in (56) is conservative. From the proof, an alternative error upper bound is in (58). More specifically,

| (59) |

Moreover, the proof suggests that the number of hidden layers in the neural network (after all linear nodes in hidden layers are merged into neurons) is . The output layer consists of all linear nodes. The width of layer in the neural network is bounded by , where is the number of general nodes in the -th layer in .

Remark 5

The edges in in Theorem 2 may skip layers, which is not in the traditional format of deep neural networks. Any compositional function, in fact, allows a DAG in which no edges skip layers. Adding identity functions as nodes is a way to achieve this. Shown in Figure 16(a) is the DAG of a compositional function in which two edges skip layers, and . In Figure 16(b), three identity functions are added as nodes to the DAG at each location where an edge skips a layer. Under the new DAG, no edges skip layers. In Theorem 2, if has no edges that skip layers and if we substitute shallow neural networks for all nodes in (including identity and linear nodes), then the DAG of in Theorem 2 has no edges that skip layers. After merging linear nodes in into neurons, one can achieve a traditional deep neural network in which edges do not skip layers.

Corollary 2

Consider a compositional function that satisfies the same assumptions made in Theorem 2. Then, there is an . For any , there always exists a deep neural network, , satisfying

| (60) |

The complexity of is

| (61) |

where is a constant determined by .

Proof. From Theorem 2, there exists a deep neural network, denoted by , that satisfy (55)-(56). Let be the smallest integer such that

| (62) |

Then (60) holds. As the smallest integer satisfying (62), satisfies

| (63) |

Because is a constant depending on and , (63) implies

| (64) |

If is small enough, then we can double the constant and remove the last term, , i.e. (61) holds.

Example 5

Consider the compositional function (44) in Example 4, whose features are given in (47). By Theorem 2, for any integer , there exists a deep neural network, , such that

for all and all integer , where is a constant determined by . The complexity of is . The approximation error and the complexity depend on the dimension, , linearly. If the value of and the domain size are set to be constants, the neural network approximation is curse-of-dimensionality free.

Given a function . The radius of its range is defined by

| (65) |

Theorem 3

Consider two compositional functions , , and , . Suppose they both satisfy A1. Let and be two neural networks with complexity and , respectively. Suppose

| (66) |

for some positive numbers , and for all in the domain.

-

1.

Consider , where are constants. Then is a neural network satisfying

(67) for all in the domain. The complexity of is .

-

2.

Consider . Let in (66). Then, for any integers and , there is a deep neural network, , satisfying

(68) where is a constant depending on and . The parameter, , is defined as follows based on and (the -th component of and , respectively)

(69) The complexity of is .

-

3.

If and are scalar valued compositional functions. Suppose . Consider . Suppose

(70) Then, for any integers and , there is a deep neural network, , satisfying

(71) where is a constant depending on ,

(72) The complexity of is .

Proof. The inequality (67) is obvious. From Definition 2, the last layer of the induced DAG for consists of all linear nodes. It does not change the complexity of the deep neural network.

To prove (2), we construct a compositional function (Figure 17(a)). Then

| (73) |

The Lipschitz constants associated with each general node in (all located in the layer ) is . It is straightforward to show that

| (74) |

for any . From Theorem 2, there exists a deep neural network, , so that

| (75) |

where C is a constant depending on (), is defined in (69). Define . It is a deep neural network. We have

| (76) |

The proof of (3) is similar to the idea shown above but for . We construct a compositional function . This is a compositional function with a single general node and two input nodes (Figure 17(b)). Then

| (77) |

A Lipschitz constant associated with the single general node in is . Skipping the proof that is based on typical algebraic derivations, we claim that the norm of satisfies

| (78) |

for any . From Theorem 2, there exists a deep neural network, , so that

| (79) |

where C is a constant depending on , is defined in (72). Define . We have

| (80) |

Because of (70), we know

| (81) |

Therefore,

| (82) |

5 Ordinary differential equations

In the following, we say that a function is if all derivatives of order less than or equal to are continuous in the domain. Consider a system of ordinary differential equations (ODEs)

| (83) |

Without loss of generality, in (83) does not explicitly depend on . If the right-hand side of the ODE is a function of , one can add a new state variable to convert the equation to a time-invariant one. Let represent the solution of (83) satisfying . In this section, we study the complexity of deep neural networks in the approximation of the function . We assume that , the right-hand side of (83), is a compositional function that satisfies A1. After integration, the compositional structure of becomes unknown.

The solution of an ODE can be approximated using the Euler method. Let represent the operator of the Euler method, i.e.,

| (84) |

Let be a positive integer and . Then

| (85) |

As a compositional function, has a DAG, whose framework is shown in Figure 18. The first box on the left represents the layer of input nodes. The blue box in the middle contains all nodes in and their edges. The box on the right consists of output nodes, which are all linear nodes. Applying Definition 4, is a compositional function with an induced DAG.

Theorem 4

Consider the ODE (83) in which is a compositional function satisfying A1. Its features are defined in Definition 7. Assume that all nodes in are . Suppose is a closed set such that whenever and . Let be a real number.

-

1.

There is a constant so that, for any integer , there exists a deep neural network, , satisfying

(86) -

2.

Let be the constant so that a Lipschitz constant of has the form . The constant, , in (86) satisfies

(87) where is a constant determined by .

-

3.

The complexity of is bounded by

(88)

Remark 6

The error upper bound in (86)-(87) increases at a polynomial rate with respect to the features of . It also depends on the terms and . If the Euler method is stable [9], then . The value of is always bounded. For a stable system, the error estimation using is conservative. In this case the error does not increase exponentially with if a stable Euler method is applied.

Proof of Theorem 4. For any integer , from Theorem 2, there exists a deep neural network approximation of satisfying

| (89) |

for some constant determined by and . The complexity of the neural network is bounded by . Let be the neural network obtained by substituting for . Then,

| (90) |

Define

| (91) |

Applying Proposition 4, we have

| (92) |

On the other hand, it is well known (see, for instance, [9]) that the global error of the Euler method satisfies

| (93) |

From Proposition 5 and the inequalities (92) and (93), we have

| (94) |

Substituting by , where the value of is the smallest integer that is greater than or equal to , we achieve the inequalities in (86)-(87) because

| (95) |

The calculation of the complexity is straightforward. There are a total of iterations in the Euler approximation. In each , there is one copy of , which is substituted by , a neural network whose complexity is . Thus, the total number of neurons in is , which implies (88) because .

Example 6

Consider Lorenz-96 system [17],

| (96) |

where the vector field is the compositional function (44) in Example 4. For a suitable choice of the constant , system (96) is chaotic; thus trajectories are bounded. Choose a sufficiently large such that for all . By Theorem 4 and the features of given in (47), for any integers and , there exists a deep neural network, , satisfying

| (97) |

where

| (98) |

Moreover, the complexity of is bounded by

| (99) |

6 Optimal control

Let be a bounded and closed set in . Let be a cost function, where is the state and is the control input. Suppose that is . Let represent the Hessian of with respect to , i.e.

| (100) |

If is positive definite, then is a convex function for every fixed . Consider the problem of optimization

| (101) |

Given any , (101) has a unique solution. The optimal control is denoted by . The minimum cost is called the value function,

| (102) |

Because of the convexity and the smoothness of , it can be proved that is continuous and the set of control inputs,

| (103) |

is bounded. Its diameter is denoted by

| (104) |

Let be any fixed point satisfying

| (105) |

In the following, we apply an iterative algorithm starting from in the searching for . It is a fact that will become clear later that the following set is invariant under the searching process:

| (106) |

In the following, we prove an error upper bound for the approximation of using neural network.

Theorem 5

Suppose is a function. Assume that is positive definite in . Let and be the lower and upper bounds of the eigenvalues of in . Suppose is a neural network satisfying

| (107) |

for some .

-

1.

Given any integer and real number , there exists a deep neural network, , that approximates with the following error upper bound

(108) where is the diameter of the set (103), , and

(109) -

2.

Let be the complexity of . The complexity of satisfies

(110)

Proof. Define a function

| (111) |

where is chosen so that the matrix norm satisfies

| (112) |

for some constant . This is always possible because is bounded and is positive definite and continuous. For instance, one can chose

| (113) |

In this case, we can prove that

| (114) |

satisfies (112). By the contraction mapping theorem, for every , there is a fixed point such that

| (115) |

By the definition of in (111), this implies that

| (116) |

i.e., the fixed point is the optimal control. From Proposition 2, for any integer , we have

| (117) |

This implies that . Let be a deep neural network approximation (to be constructed) of with an error bound

| (118) |

From Proposition 4,

| (119) |

The inequalities (117) and (119) and Proposition 5 imply

| (120) |

In the next, we construct and derive . Let be a step size for finite difference. Then

| (121) |

where

| (122) |

Define

| (123) |

Then

| (124) |

By assumption, is a deep neural network that approximates satisfying

| (125) |

We define by substituting for in . Then

| (126) |

From Proposition 5, (124) and (126) imply

| (127) |

Using the right side of the above inequality as , (120) implies

| (128) |

The parameter is removed from the inequality because . If we define

| (129) |

then (128) is equivalent to (108). From the structure of , we know that the complexity of is bounded by

| (130) |

Consider a control system

| (131) |

where is a bounded closed set. We assume a zero-order hold (ZOH) control input function. Specifically, given any positive integer . Let and for . Then the control input is a step function that takes a constant value in each time interval . Let be the trajectory of the control system (131) with the initial condition and a step function as the control input. For a fixed , we can treat as a long vector , where and is the value of , . Define

| (132) |

If is in , then is a function of . Let be a function. Define the cost function as follows:

| (133) |

The problem of ZOH optimal control is

| (134) |

Given any , let represent the optimal control. Let be the set defined in (106) where is replaced by . Suppose in (131) is defined for all where is large enough so that

| (135) |

for all and . For the simplicity of derivation in the following theorem, we assume .

Theorem 6

Suppose that in the control system (131) and in the cost function (133) are both compositional functions that satisfy A1. Assume that all nodes in and are . Assume that the Hession of with respect to is positive definite for all . Let and be the diameter of . Then, for any , there exists a deep neural network, that approximates . The estimation error is bounded by

| (136) |

The complexity of is bounded by

| (137) |

for some constant .

Remark 7

The inequality (137) is a simplified version that contains only three explicitly presented parameters, , and . For fixed , the constant in (137) depends on , , , , , , , , the Lipschitz constants of , and with respect to for , the norms of and its Jacobian, the eigenvalues and diagonal entries of the Hession matrix of , and the diameter of . We avoid showing an explicit expression of as a function of these parameters because such a formula would be too long. However, following the proof, an algebraic expression of the complexity upper bound in terms of all these parameters can be derived in detail if needed.

Proof. Suppose we can find a deep neural network, (to be found later), that approximates with an error upper bound , i.e.,

| (138) |

From Theorem 5, there is a deep neural network, , that approximates the optimal control with an error upper bound

| (139) |

where is a constant determined by the eigenvalues of the Hession of , is the diameter of the set and depends on the diagonal of the Hession of . Given any . For the first term to be bounded by , we need . This is obvious if , because . If , implies

| (140) |

We can choose

| (141) |

for some constant that is determined by and the eigenvalues of the Hessian of . For the second term in (139) to be bounded by , we have

| (142) |

We choose

| (143) |

for . For the third term in (139) to be less than or equal to , we have

| (144) |

or

| (145) |

where . To summarize, we proved that the existence of satisfying (138) and (145) implies

| (146) |

Now, we need to prove the existence of a whose estimation error, , satisfies (145). Suppose we have deep neural networks that approximates and . Let be the deep neural network obtained by substituting functions in (132)-(133) by and . From Proposition 4, the estimation error, , of is bounded by

| (147) |

where depends on , and the Lipschitz constants of and with respect to . From Theorem 2 and Theorem 4, we can find and so that

| (148) |

where depends on , , , , , , , and the norms of and its Jacobian. It also depends on (see (87)). However, for a fixed , this term has an upper bounded determined by the norm of the Jacobian of . To satisfy (145), (148) implies that we can choose

| (149) |

where . From Theorem 2, Theorem 4 and (149), the complexity of , denoted by , satisfies

| (150) |

where . From (110) in Theorem 5, the complexity of is bounded by

| (151) |

where .

7 Conclusions

In this paper, we introduce new concepts and develop an algebraic framework for compositional functions. In addition to functions defined in , iterative numerical algorithms and dynamical systems fall into the category of compositional functions as well. The algebraic framework forms a unified theoretical foundation that supports the study of neural networks with applications to a variety of problems including differential equations, optimization, and optimal control. It is proved that the error upper bound of approximations using neural networks is determined by a set of key features of compositional functions. In the approximation of functions, differential equations and optimal control, the complexity of neural networks is bounded by a polynomial function of the key features and error tolerance. The results could also shed light on the reason why using neural network approximations may help to avoid the curse of dimensionality. As shown in examples, increasing the dimension of a system may not increase the value of the key features.

The study in this paper raises more questions than answers. All neural networks used in the proofs of theorems have layered DAGs that are similar to the DAG of the function being approximated. What is the underlying connection between the shallow neural networks that approximate individual nodes and the neural network that approximates the overall function? How can this connection help to generate initial guess of parameters for neural network training? Instead of functions, to what extent one can apply the idea in this paper to a larger family of functions to include or even discontinuous functions? This is important because optimal control problems with constraints are likely to have or discontinuous feedbacks. The DAG associated with a given function is not unique. What types of DAGs are advantageous for more accurate approximation with less complexity? In general, many problems of nonlinear control such as finding Lyapunov functions, designing output regulators, control and differential game do not have tractable numerical solutions if the dimension of state space is high. Finding compositional function representations of these problems may lead to innovative and practical solutions based on deep learning.

References

- [1] P. M. Anderson and A. A. Fouad. Power Systems Control and Stability, IEEE Press, 1994.

- [2] A. R. Barron, Universal approximation bounds for superpositions of a sigmoidal function, IEEE Trans. on Information Theory, Vol. 39 (3), pp. 930-945, 1993.

- [3] A. R. Barron and J. M. Klusowski, Approximation and estimation for high-dimensional deep learning networks. arXiv:1809.03090, 2018.

- [4] C. Chiu and J. Zhan, An evolutionary approach to compact DAG neural network optimization, IEEE Access, Vol. 7, pp. 178331-178341, 2019.

- [5] W. E, C. Ma, S. Wojtowytsch and L. Wu, Towards a mathematical understanding of neural network-based machine learning: what we know and what we don’t, arXiv:2009.10713v3, 2020.

- [6] W. E and S. Wojtowytsch, On the Banach spaces associated with multi-layer ReLU networks: function representation, approximation theory and gradient descent dynamics, arXiv:2007.15623v1, 2020.

- [7] P. Giesl, B. Hamzi, M. Rasmussen and K. Webster. Approximation of Lyapunov functions from noisy data, Journal of Computational Dynamics, Vol. 7(1), pp 57-81, 2020.

- [8] L. Grüne, Overcoming the curse of dimensionality for approximating Lyapunov functions with deep neural networks uder a small-gain condition, arXiv:2001.08423v3, 2020.

- [9] E. Hairer, S. P. Nørsett, G. Wanner, Solving Ordinary Differential Equations I: Nonstiff Problems, second edition, Springer, 1993.

- [10] B. Hamzi and H. Owhadi. Learning dynamical systems from data: a simple cross-validation perspective, arXiv.org/abs/2007.05074, 2020.

- [11] J. Han, A. Jentzen and W. E. Solving high-dimensional partial differential equations using deep learning, Proceedings of the National Academy of Sciences, 115(34):8505–8510, 2018.

- [12] J. Han and W. E. Deep learning approximation for stochastic control problems, arXiv:1611.07422v1, 2016.

- [13] P. C. Kainen, V. Krková, M. Sanguineti, Approximating multivariable functions by feedforward neural nets. In: M. Bianchini, M. Maggini, L. Jain (eds) Hanbook on Neural Information Processing. Intelligent Systems Reference Library, Vol 49, pp143-181, Springer, 2013.

- [14] W. Kang, Q. Gong and T. Nakamura-Zimmerer, Algorithms of data development for deep learning and feedback design, arXiv:1912.00492v2, 2020.

- [15] A. N. Kolmogorov, On the representation of continuous functions of many variables by superposition of continuous functions of one variable and addition, In Doklady Akademii Nauk, Vol. 114, No. 5, pp. 953-956, Russian Academy of Sciences, 1957.

- [16] V. Kůrková, Kolmogorov’s theorem and multilayer neural networks, Neural networks, Vol. 5, No. 3, pp. 501-506, 1992.

- [17] E. Lorenz, Predictability – A problem partly solved, Seminar on Predictability, Vol. I, ECMWF, 1996.

- [18] H. N. Mhaskar, Neural networks for optimal approximation of smooth and analytic functions, Neural Computation, Vol. 8, No. 1, pp. 164-177, 1996.

- [19] H. N. Mhaskar and T. Poggio, Deep vs. shallow networks: an approximation theory perspective, arXiv: 1608.03287v1, 2016.

- [20] H. N. Mhaskar and T. Poggio, Function approximation by deep networks, arXiv:1905.12882v2, 2019.

- [21] T. Nakamura-Zimmerer, Q. Gong and W. Kang, Adaptive deep learning for high dimensional Hamilton-Jacobi-Bellman equations, arXiv:1907.05317, 2019.

- [22] T. Nakamura-Zimmerer, Q. Gong and W. Kang, QRnet: optimal regulator design with LQR-augmented neural networks, IEEE Control Systems Letters, Vol. 5, No. 4, pp.1303-1308, 2020.

- [23] T. Poggio, H. Mhaskar, L. Rosasco, B. Miranda, Q. Liao, Why and when can deep - but not shallow - networks avoid the curse of dimensionality: a review, arXiv:1611.00740v5, 2017.

- [24] J. Qi, K. Sun, and W. Kang, Optimal PMU placement for power system dynamic state estimation by using empirical observability Gramian, IEEE Trans. Power Systems, Vol. 30, No. 4, pp. 2041-2054, 2015.

- [25] M. Raissi, P. Perdikaris, and G. E. Karniadakis, Physics-informed neural networks: a deep learing framework for solving forward and inverse problems involving nonlinear partial differential equations. J. of Computational Physics, 378:686–707, 2019.