Neural Interactive Proofs

Abstract

We consider the problem of how a trusted, but computationally bounded agent (a ‘verifier’) can learn to interact with one or more powerful but untrusted agents (‘provers’) in order to solve a given task. More specifically, we study the case in which agents are represented using neural networks and refer to solutions of this problem as neural interactive proofs. First we introduce a unifying framework based on prover-verifier games (Anil et al., 2021), which generalises previously proposed interaction protocols. We then describe several new protocols for generating neural interactive proofs, and provide a theoretical comparison of both new and existing approaches. Finally, we support this theory with experiments in two domains: a toy graph isomorphism problem that illustrates the key ideas, and a code validation task using large language models. In so doing, we aim to create a foundation for future work on neural interactive proofs and their application in building safer AI systems.

1 Introduction

Recent years have witnessed the proliferation of large machine learning (ML) systems (Villalobos et al., 2022), useful for solving an increasingly wide range of tasks. Often, however, it can be difficult to trust the output of these systems, raising concerns about their safety and limiting their applicability in high-stakes situations (Amodei et al., 2016; Bengio et al., 2023; Hendrycks et al., 2023). At the same time, traditional approaches in verification do not scale to today’s most powerful systems (Seshia et al., 2022). There is thus a pressing need to identify new angles via which to gain such assurances.

In response to this need, we take inspiration from interactive proofs (IPs) (Goldwasser et al., 1985), one of the most important developments in computational complexity theory and cryptography. In an IP, a computationally bounded but trustworthy verifier interacts with a more powerful but untrustworthy prover in order to solve a given problem (Figure 1). Under reasonable assumptions, it can be shown that such interactions can allow the verifier to solve many more kinds of problem than it could alone, all while limiting the chance of being misled by the prover.

In this work, we investigate neural interactive proofs, in which the prover and verifier are represented by neural networks. Several different instances of this general idea have been proposed in recent years (Irving et al., 2018; Anil et al., 2021; Wäldchen et al., 2022), though there is still a gap between the theory inspiring these methods and their practical implementation. Our work aims to help bridge this gap.

1.1 Contributions

We seek to provide a comprehensive treatment of neural interactive proofs spanning both theory and practice. In particular, we provide the following contributions:

-

(i)

a unifying game-theoretic framework that generalises existing neural IP protocols;

-

(ii)

several new neural IP protocols, including those that allow for zero-knowledge proofs;

-

(iii)

a theoretical and empirical comparison of both new and existing protocols; and

-

(iv)

an extensible codebase for testing different protocols in different domains.222The codebase will be provided in a later version of the paper.

In so doing, we hope to create a foundation for future work on neural interactive proofs and their application in building safer ML systems.

1.2 Example

In order to aid the reader’s intuition for the concepts we introduce, we provide an example scenario to which all neural IP protocols can be applied, and refer back to this throughout the paper.

Example 1.

Consider a domain of challenging programming tasks, each of which is defined by some combination of natural language specification and implicit world knowledge.333Importantly, we assume that it is impractical or impossible to convert the task description into a specification amenable to standard formal verification tools. There is a powerful AI system (a “prover”) capable, in theory, of solving every task, but who does so in an inscrutable way that is difficult to trust. We would like to use a weaker but trusted AI system (a “verifier”) – which is incapable of solving the task by itself – to check that any code produced by the prover really does satisfy the corresponding specification. A pictorial representation is given in Figure 1.

Formally, this example is a decision problem where the input is a specification and a piece of code. In a neural IP, the agents learn to interact to decide whether the code satisfies the specification.

1.3 Related Work

The most closely related work to ours was – until recently – that of Anil et al. (2021), who introduce prover-verifier games played between neural networks, which we generalise and build on. While an important first step, this work is limited by the formal strength of the proof systems that result from their specific protocol (as we show), and by its application only to small models and problem instances. Similar to prover-verifier games are the works of Irving et al. (2018) and Wäldchen et al. (2022), whose proof systems make use of two provers in competition with one another and are stronger from a theoretical perspective, but are again only applied to very simple problems.

More recently, three papers (concurrent with our own and with each other) have sought to overcome some of the practical limitations of these earlier works by evaluating protocols using LM agents. Kenton et al. (2024) moves beyond earlier efforts (Michael et al., 2023; Khan et al., 2024) by considering several tasks aside from question answering, and also computational (instead of merely informational) asymmetries between the provers and verifiers. They find that multi-prover ‘debate’ protocols outperform single-prover ‘consultancy’ protocols but that there is a relatively limited benefit to debate compared to the verifier baseline performance. The authors hypothesise that one reason for this is that they do not train their models using the protocol (which is the focus of our work). Kirchner et al. (2024) do train their agents to play prover-verifier games using multiple rounds of expert iteration Anthony et al. (2017), but only on the protocol introduced by Anil et al. (2021), which we show has important theoretical limitations. They find that the helpful prover’s accuracy and the verifier’s robustness to adversarial attacks increase over the course of training, though their primary focus is on the legibility of solutions to humans. Finally, and most recently, Arnesen et al. (2024) combine several of the strengths of these two investigations by comparing multiple protocols and training the provers using a novel variant of Direct Preference Optimisation (Rafailov et al., 2023), though they restrict their attention to question-answering. Mirroring Kenton et al. (2024), they find that optimising the provers leads to higher verifier accuracy in debate but not consultancy, and that debate training introduces stronger argumentation (as measured by the use of quotations).

Unlike these recent works, our investigation is not only empirical but aims to further understand the theoretical implications of different protocols. In the same spirit, Brown-Cohen et al. (2023) study doubly efficient debate, where the provers run in polynomial time and the verifiers are more efficient still (Goldwasser et al., 2008). They prove that under appropriate assumptions, any polynomial-time computation can be verified using only a constant number of queries to the black-box representing human judgement (and in time linear in the size of a single query). Other closely related research includes work on interactive proofs for PAC verification (Goldwasser et al., 2020), where the verifier’s task is to assess whether the prover has produced a near-optimal hypothesis, and – concurrent with, and most similar to, our own work – on self-proving models (Amit et al., 2024), where the authors devise a method for training provers to demonstrate the correctness of their outputs to a fixed verifier. Both of these latter works, however, focus on hand-crafted rather than learnt proof systems. In contrast, we take inspiration from Gowal et al. (2019) and hypothesise that such ideas can best be scaled to real-world ML systems if the verifier can learn the protocol.

2 Preliminaries

This section provides a brief technical background on games and interactive proofs, which are the two main building blocks of neural interactive proofs. In general, we index agents using superscripts and time (or other variables) using subscripts. Vectors are written in bold, and elements of sets are written as lowercase and uppercase letters, respectively. denotes the set of distributions over and represents the indicator function for , i.e. if and only if . Given a vector , we write for where .

2.1 Proof Systems

Interactive proofs are standardly defined with respect to a decision problem , where is the set of problem instances and is the set of ‘positive’ instances. In Example 1, is the set of all specification-code pairs produced by the prover, and is the set of pairs where the code satisfies the specification. The prover and verifier exchange messages from their message spaces and respectively. In our example, these could be the space of all text strings under a certain length.

Definition 1 (Goldwasser et al., 1985; Goldreich, 2001).

An interactive proof system for comprises a prover and verifier which, given an input , interact to (stochastically) generate a sequence of messages (a ‘ proof’). The (finite) sequence length is determined by , whose eventual output is given by , corresponding to ‘accept’ and ‘reject’, respectively. We denote this (stochastic) proof produced by on input as . We say that is -valid (or simply ‘valid’) for if it satisfies:444Technically, we may generalise this to polynomial time functions such that for some polynomial .

-

•

Completeness: If , then ;

-

•

Soundness: If , then for any prover .

The classes of decision problem for which there exists a valid interactive proof system depend on the sets of provers and verifiers under consideration. For example, in the the original formulation due to Goldwasser et al. (1985), the prover is unbounded and the verifier is a probabilistic polynomial time Turing machine, which gives rise to the class (equal to ) (Shamir, 1992).

Definition 2 (Goldwasser et al., 1985; Goldreich, 2001).

We say that is (-statistically) zero-knowledge if for every verifier there is some verifier such that . We call a simulator.

While validity can be viewed as a property of the verifier, being zero-knowledge can be viewed as a property of the prover. Intuitively, is zero-knowledge if the verifier learns only whether and nothing else, i.e. does not gain any additional power through their interaction with (represented by the fact that ).

2.2 Games

In this work, we study -player games where are the agents, is a product strategy space and contains loss functions for . Each agent selects a strategy in an attempt to minimise their loss . More specifically, we focus our attention on what we term ‘messaging games’, which centre around rounds of communication between the different agents via multiple channels. In Example 1, for instance, the verifier might cross-reference portions of the code or the prover’s answers by sending them to a second, independent prover via a separate channel.

Definition 3.

In a messaging game , play proceeds by agents sending messages via a number of channels according to a mechanism , which determines the set of agents who can sent a message in channel at time . When is deterministic we write . Agents can only observe messages in channels they belong to, denoted , and cannot identify the sender of any message beyond the channel’s other members. When , agent sends a message based on their previously observed messages across . Whenever , a random message is sent. Finally, play terminates whenever a decision is sent in a special channel . We drop , , and from the notation for when unambiguous or unimportant.

We use to denote the -player game induced when agent plays strategy in , but where the remaining agents have not yet chosen their strategies. In practice, we assume that each agent’s strategy space is defined by some finite number of parameters , and will often refer to instead of . Within these games, we make use of two standard equilibrium concepts, which can be defined both locally and globally.

Definition 4.

A local Nash equilibrium (LNE) on is a strategy profile such that:

for all . A local Stackelberg equilibrium led by player () on is a strategy profile such that:

If then is a (global) Nash/Stackelberg equilibrium (NE/SE). We denote the local and global NEs/-led SEs of by / and /, respectively. We consider approximate versions of these concepts, where the for each agent has some tolerance .555Formal mathematical characterisations are provided in the proof of Section 4 – see Section B.1. Given , we denote the approximate equilibria as and .

3 Prover-Verifier Games

Prover-verifier games (PVGs) were introduced by Anil et al. (2021) as a game-theoretic framework to incentivise learning agents to solve decision problems in a verifiable manner. Concretely, we consider probabilistic decision problems where is a distribution over . In Example 1, for instance, there might be many kinds of programming task and solutions, jointly distributed according to , with the set then representing the specification-code pairs. Upon receiving an input , a verifier interacts with one or more provers according to a high-level protocol determined by the structure of the PVG to see if they can generate a ‘proof’ that . The agents in the game receive losses as a function of their strategies for interacting.

In the remainder of the section, we make the above setting and earlier assumptions more formal by introducing a generalisation of PVGs based on the messaging games defined earlier. This generalised definition is sufficiently broad so as to capture several other protocols (Irving et al., 2018; Wäldchen et al., 2022), as well as the new protocols that we introduce in this paper. A summary of the different protocols is shown in Table 1.

Definition 5.

A generalised prover-verifier game (PVG) for a probabilistic decision problem is a messaging game played between provers and verifiers, where . When there is just one prover or verifier, we denote their index as or , respectively. Play begins via the common observation of some , i.e. for every channel , and . We assume that that for some and that . Finally, let denote the strategy for agent that samples uniformly at random from their message space at every turn, and let . We additionally require that:

-

1.

if and only if (the deciding verifier’s objective is to output the true label);

-

2.

for any , where (the verifier(s) cannot solve the problem);

-

3.

If (counterfactually) for and then (any prover can solve the problem);

-

4.

There is and such that (the provers’ and verifiers’ objectives are misaligned).

Different PVGs represent different messaging specifications between the prover(s) and verifier(s), with the basic idea being that we wish to construct a game such that its equilibria correspond to valid proof systems. For example, Anil et al. (2021) introduce a model – which they refer to as an ‘Abstract Decision Problem’ (adp) – in which the prover (deterministically) sends a single message to the verifier, and the verifier must make its decision in response. They show that there is indeed a correspondence when is given by a set of deterministic distributions – i.e. functions – and contains the convex combinations of functions . Unfortunately (as we explain further in Section B.1), these restrictions limit the power of the protocol, and relaxing means that the correspondence no longer holds.

propositionpvgbroken There is a probabilistic decision problem and an adp game such that – even though there exists some valid interactive proof protocol with – the fact that is neither necessary nor sufficient for to be valid.

Motivated by this negative result, we introduce a new protocol in Section 4 that overcomes these issues. Other forms of protocol can be characterised, for example, as a competition between two provers, which is judged by the verifier. Formal definitions of adp and its limitations, the debate model (Irving et al., 2018) and the ‘Merlin-Arthur Classifier’ (mac) model (Wäldchen et al., 2022) can be found in Appendix A.

4 Neural Interactive Proofs

Unlike in the case of standard interactive proofs, we assume that the verifier has some access to a dataset of labelled examples and aims to learn a strategy for interacting with the prover that generalises to any input. More formally, we assume that the verifier has access to a dataset with each and . Letting , the verifier’s aim is to minimise across inputs .

In this work, we consider two ways of operationalising this aim: the expected loss (or risk), and the worst-case loss. We denote the empirical risk with respect to as , and the empirical worst-case loss by . Minimisers of these losses are denoted and respectively. We drop from our notation when considering the full space inputs , with expectations taken with respect to in the ER case.

While the expected loss is by far the most common objective in contemporary ML, from the perspective of safety-critical systems, we would ideally like to minimise the worst-case loss. In other words, we wish to find some such that is small. Moreover, this is despite the fact that is large, as per Definition 5.

Concretely, optimising in the code validation example aims to produce a verifier which on unseen specification-code pairs gives a decision that has a high probability of being correct, even if the prover is able to fool it with incorrect code. Conversely, optimising aims to produce a verifier that has low probability of being fooled on any code. Taking inspiration from the adp protocol (formally defined in Appendix A), let us consider a PVG with, , , and , and loss functions given by:

We refer to this protocol as nip, and it is straightforward to show that this corresponds closely to the notion of an interactive proof. {restatable}theoremnipstackelberg Let be a probabilistic decision problem that has a valid proof system and a nip game. Then is a valid IP system if and only if it is an approximate verifier-leading Stackelberg equilibrium of . While this proposition essentially reduces the problem of finding a valid proof system to the task of finding an equilibrium in a given game, this task is far from straightforward. In particular, there are two key difficulties. Firstly, there is the challenge of learning to minimise the worst-case (as opposed to the expected) loss. Secondly, there is the challenge of finding a Stackelberg equilibrium.

4.1 Worst-Case Loss

The simplest approach to minimising the worst-case loss using finitely many data generated from is to ignore the worst-case performance and simply return some . The question then becomes: when is minimising the empirical risk with respect to sufficient for minimising the worst-case risk with respect to ? The following result shows that we can break this down into two properties (defined formally in Section B.2): (a) the empirical worst-case loss being similar to the actual worst-case loss; and (b) for a given , the empirical worst-case loss of being similar to that of . These conditions do not always hold, but can do when the decision problem is sufficiently ‘regular’.

propositionworstcaseloss If has the worst-case uniform convergence property (a) and the worst-case robustness property (b) then there is some such that for every , if then with probability .

Alternatively, we can introduce an adversary, , whose strategy space is and whose loss function is . We then replace the terms in the original loss functions for the prover and verifier with and respectively. The verifier-leading Stackelberg equilibria of the original nip game are then identical to the verifier-prover-leading Stackelberg equilibria in this new three-player game, denoted . Unlike the classical learning-theoretic approach above in which we assumed we were given a fixed dataset of pairs, we are here assuming access to an adversary capable of outputting any . This stronger assumption may not always hold, but when it does, learning can be more efficient (Goldman & Kearns, 1995).

propositionadversarialstackelberg Let be a probabilistic decision problem and a nip game. Then is an approximate verifier-leading SE () of if and only if there is some such that is an approximate verifier-prover SE () of (the adversarial version of ).

4.2 Solving Stackelberg Games

Computing Stackelberg equilibria can be naturally modelled as a bi-level optimisation problem. A standard solution to such problems using gradient-based methods is to employ a timescale separation (Borkar, 2008). In particular, we take the sequential nature of the problem setting into account by explicitly modelling the dependence of on and updating more quickly as part of an ‘inner loop’. Fiez et al. (2020) show that if then with high probability the following dynamics will converge locally to the neighbourhood of a :

where we drop the dependence on from our notation and write and for and , respectively. These updates require computing an inverse Hessian vector product, which is intractable when is large. Replacing the term with leads to the LOLA (Learning with Opponent Learning Awareness) update (Foerster et al., 2018), which aims to actively influence the future policy updates of its opponents. While LOLA may fail to converge, interpolating between the LOLA update and LookAhead (Zhang & Lesser, 2010) leads to local convergence to stable fixed points in differentiable games under self-play (Letcher et al., 2019).

5 Extensions

Finally, we generalise the nip protocol along two natural dimensions in order to strengthen the properties of the resulting proof systems.

5.1 Multiple Provers

Multi-prover interactive proofs (MIPs) are a natural generalisation of classical IPs (Ben-Or et al., 1988), whose additional power results from the fact that while the two provers may correlate their strategies, they are prevented from communicating with one another during their interactions with the verifier (Babai et al., 1991). This allows the verifier to ‘cross-examine’ the provers.

We define the mnip protocol identically to the nip protocol, but now with two provers, and , each of which has the same loss. Valid MIP systems are defined as in Definition 1, with the soundness condition altered such that must be robust to any choice of . Using a similar proof to that of Section 4, it can be shown that the equilibria of the mnip PVG correspond to valid MIP systems. The only subtlety is that due to the provers’ ability to coordinate on a joint strategy and shared random signal beforehand, we must consider correlated equilibria (defined in Section B.1).

theoremmnipstackelberg Let be a probabilistic decision problem that has a valid proof system and a mnip game. Then is a valid MIP system if and only if it is an approximate verifier-leading correlated Stackelberg equilibrium of .

5.2 Zero Knowledge

We now consider whether the prover can prevent the verifier from learning any more than to solve the task at hand. We formalise this question as whether the resulting proof system is zero-knowledge (ZK). Far from a theoretical curiosity, this may have important practical implications, such as for the prevention of model cloning/stealing via black-box access (Orekondy et al., 2019).

Recall from Definition 2 that is ZK if for any and any such that , there is another weak agent capable of generating approximately the same distribution over message sequences as , without interacting with . While it is possible to introduce a precise analogue of this condition, we wish to investigate whether the verifier can gain knowledge regardless of the value of .

We therefore provide a slightly stronger condition that requires statistical closeness between and for any . In other words, short of the answer , there is nothing else that can learn by interacting with . We can implement this protocol using a PVG by introducing an additional verifier agent and a simulator , whose objectives and are defined via:

and by adding the term to the prover’s loss . We refer to the resulting protocol as zk-nip, and show that the equilibria of this game correspond to ZK proof systems. {restatable}theoremzknipstackelberg Let be a probabilistic decision problem that has a valid -statistically zero-knowledge system and a zk-nip game. Then is a valid -zero-knowledge IP system if it is an approximate verifier-prover-leading Stackelberg equilibrium () of . We note that in this protocol the equilibria contain a Stackelberg hierarchy with more than two levels. Namely, the prover commits to their strategy before agents and . Technically, should also be viewed as committing before , but is a zero-sum game and so reaching an NE is sufficient.

6 Experiments

Having characterised the theoretical power of various PVG protocols, it remains to be seen whether they are sufficient for solving real-world problems empirically. Indeed, until very recently (Kenton et al., 2024; Arnesen et al., 2024; Khan et al., 2024), no previous study has attempted to rigorously compare different protocols, and despite this recent progress, it remains unclear what their relative strengths and weaknesses are in practice. To provide further clarity, we conduct experiments in two domains: a toy graph isomorphism problem that illustrates the key ideas, and a code validation task using large language models. Further details about the setup of our experiments can be found in Appendix C, and we release a comprehensive codebase that supports the easy specification of new protocols and their evaluation in new domains. Throughout this section, error bars represent one standard deviation, taken across 10 seeds.

6.1 Graph Isomorphism

As a warm-up exercise, we consider the problem of testing for graph isomorphism using a synthetic dataset of 10,000 graphs (each with approximately ten vertices) generated via the Erdős-Rényi model. The verifiers and provers are implemented using a graph neural network (GNN) and transformer body, with standard feedforward heads used for sending messages and estimating the value of each state. Agents are trained using independent PPO (Schulman et al., 2017; Schroeder de Witt et al., 2020) and evaluated on a held-out test set. This simple setting has the benefit that by varying the number of GNN layers we can precisely calibrate the capability of the agents to distinguish between isomorphic and non-isomorphic graphs. By controlling the number of rounds of the Weisfeiler-Leman test (Weisfeiler & Leman, 1968) needed to distinguish non-isomorphic graph pairs in the dataset, we ensure a theoretical limit on the accuracy attainable by the verifier alone.

We begin by directly comparing the adp, debate, nip, and mnip protocols, shown in Figure 2(a) (plots of the accuracy and mean episode length over training are shown in Section D.1). While we see that nip performs favourably, with verifiers learning to near-perfectly classify valid instances when interacting with the prover, other protocols do not see an increase in performance. This, however, is mainly due to the problem setting, in which there is a relatively simple algorithm for testing for isomorphic graphs: the verifier selects a random node from one graph, and if the prover can return a similar node in the second graph, then they are likely isomorphic. This algorithm is not possible under adp as the verifier does not send any messages to the prover, and is harder to learn in the presence of multiple provers (as in debate and mnip).

Rather, the graph isomorphism task is useful because it allows us to assess how the verifier’s performance changes with its computational abilities. More precisely, we vary both the verifier’s network width and the its number of layers. We show results for the nip protocol in Figure 2(b). We observe that – as expected – larger models are able to solve the task whereas smaller models are not, and that by interacting with a (non-random) prover, verifiers gain capability in comparison with interacting with a random prover. Notably, this gap is most pronounced for verifiers that are incapable of solving the task by themselves, but are capable of doing so after learning to interact with the prover.

We also investigate the zk-nip protocol by varying the coefficient of the term in the prover’s loss, which controls the strength of the prover’s incentive to minimise the simulator’s loss. In Figure 2(c), we plot the mean difference between and against the coefficient. We observe a roughly negative correlation, suggesting that the training converges on an equilibrium with lower information leakage as the prover coefficient increases.

6.2 Code Validation

Our second experiment involves a much more complex problem: checking that a given Python program satisfies a natural language specification. In particular, we make use of the Automated Programming Progress Standard (APPS) dataset (Hendrycks et al., 2021), which consists of Python coding challenges with ground-truth solutions written by humans, as well as test cases for checking the solutions. We create a new dataset by drawing two solutions for each problem, one of which we insert a subtle bug into using GPT-4o-mini (OpenAI, 2024), checking that the result is still valid Python code but that it fails on at least one of the test cases. An example is given in Section C.2.1, and the median (Levenshtein) edit distance when inserting a bug is seven. We use GPT-4o as the provers and GPT-4o-mini as the verifiers, fine-tuning both using eight rounds of expert iteration (Anthony et al., 2017). In each iteration, we fine-tune the models from scratch using all previous rollouts, following Kirchner et al. (2024). The models are then evaluated on a held-out test set.

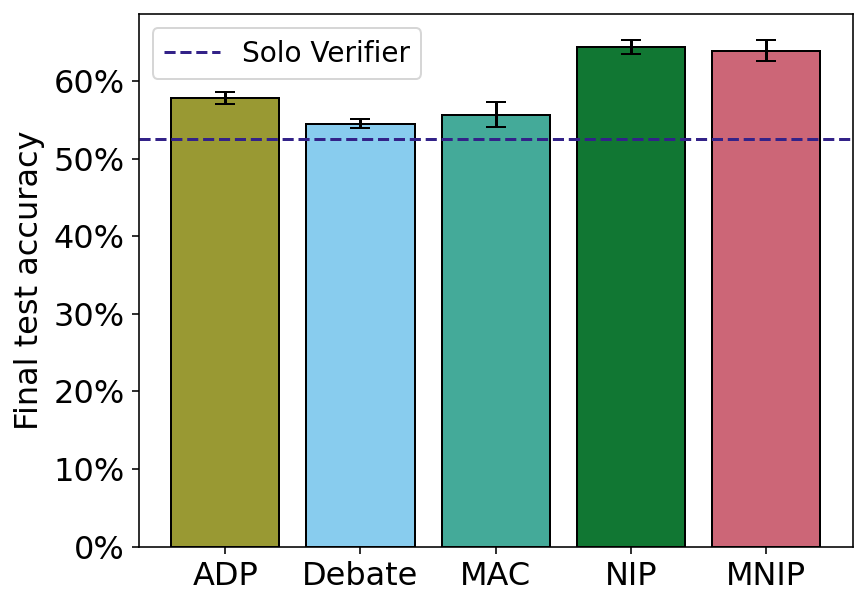

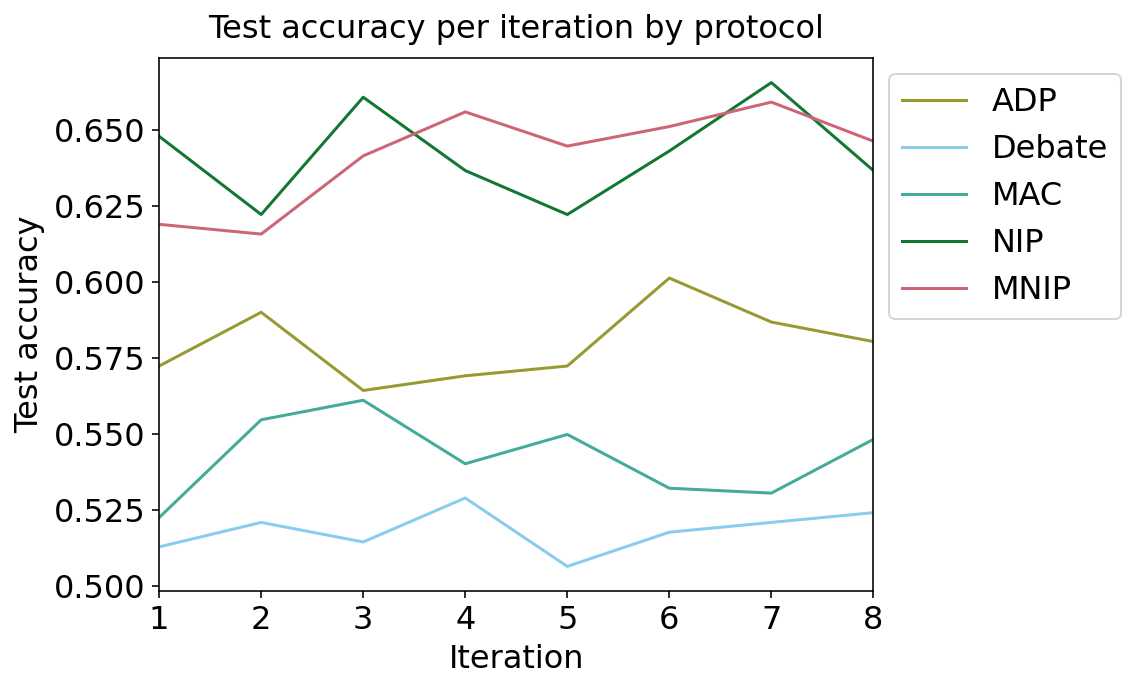

We test each of the protocols introduced above, and in all cases we observe a modest improvement in final test accuracy over the baseline of a verifier trained in isolation (Figure 3(a)). The nip and mnip protocols see the greatest improvements in accuracy (around 11%). A key differentiator between the protocols is presented in Figure 3(b). In line with Section 4, an IP system corresponds to minimising the worst-case loss. To measure the extent to which worst-case performance is optimised, we sample rollouts from the trained agents 10 times for each test data point, and record the percentage of these where the verifier always guesses incorrectly. As shown in Figure 3(b), most protocols perform significantly better than the solo verifier baseline, with mnip performing the best. Figure 3(c) refines the accuracy metric by plotting the final precision and recall of each protocol. We see that the nip and mnip protocols perform well by obtaining a lower false negative rate than the other protocols, while maintaining a high true positive rate.

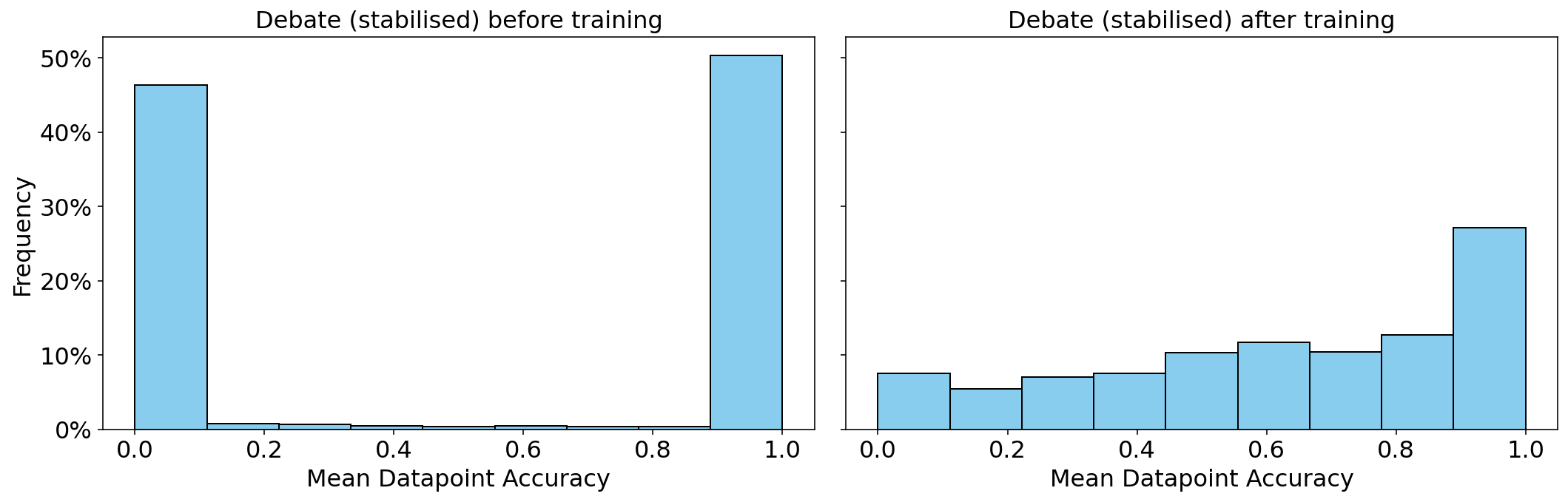

We observed that both debate and the solo verifier have a high precision-to-recall ratio. Further analysis reveals that expert iteration exacerbates an initial low acceptance rate in both cases, because only rollouts where the verifier guesses correctly are included in the fine-tuning data. To remedy this, we introduce a new training scheme (‘stabilised’ expert iteration) where in an annealed proportion of the rollouts, the verifier’s guess is replaced with the true label. Figure 4(a) shows that this greatly reduces the ‘mode collapse’ dynamics seen with vanilla expert iteration. In Figure 4(b) we see that replacing the verifier guess also allows the solo verifier to obtain much higher mean accuracy, which we hypothesise is due to the training scheme being closer to standard supervised fine-tuning. Using this scheme has a small effect on the mean accuracy of each interaction protocol, improving some and worsening others. However, Figure 4(c) shows that the worst-case performance of every protocol is improved by this training scheme, with the debate protocol performing particularly well.

7 Discussion

Motivated by the problem of developing scalable methods for gaining assurances about the trustworthiness of increasingly large models, we sought to provide a comprehensive treatment of neural interactive proofs spanning both theory and practice. Such assurances will become increasingly important as ML systems are used to complete tasks where it is inefficient or impossible for humans to check for correct behaviour – a problem known as scalable oversight (Amodei et al., 2016; Leike et al., 2018; Christiano et al., 2018). Our results contribute to growing body of work which tentatively suggests that such techniques may be increasingly viable, and moreover may be aided by continuing advances in AI capabilities Khan et al. (2024); Arnesen et al. (2024).

Our present work has a number of limitations, however. First, the algorithms we use for training in our experiments do not make use of some of the more advanced methods described in Section 4.1 and Section 4.2 (for the graph isomorphism task), or RL-based learning (for the code-validation task), which would bring our empirical results closer to their theoretical underpinnings. Second, we only evaluate the protocols on two domains which, while providing a suitable testbed for some of the primary questions we ask in this paper, are far from representative of the increasingly wide range of tasks that contemporary AI systems can be used to solve. Third, we do not evaluate all variations of all protocols, such as debate with simultaneous vs. sequential messaging or “open protocols in which the provers choose what outcome to argue for in training” (Kenton et al., 2024).

Aside from addressing the limitations described above, the game-theoretic framework and codebase we have introduced in this paper support the future development and evaluation of new protocols, which may provide better theoretical or empirical performance than the protocols we discuss here. Another important avenue for further work is in closing the gap between theory and practice by developing learning-theoretic results (as opposed to complexity-theoretic results based on abstract models of computation such as Turing machines) about the extent to which the computational abilities of learning agents and the amount of data available to them affects the ability for weaker agents to verify stronger agents. We hope that with such advances, it will eventually be possible to generate more rigorous arguments for the safety of models even more advanced than today’s state of the art.

Ethics Statement

Our contributions are squarely aimed at improving the safety and trustworthiness of advanced AI, both now and in the future. In our paper we also make use of synthetic data in two domains (graph isomorphism and code validation) that present few immediate ethical considerations. With that said, there are at least two remarks worth briefly making. The first is that the methods we present are far from being ready to enforce safety in complex real-world applications, and though inspired by the existence of strong theoretical results in complexity theory, do not enjoy the strength when applied to ML systems (unlike, say, applications in cryptography). Thus, while believe this line of research warrants further work, it should not – in its current form – be understood as guaranteeing safety. The second remark is that training in PVGs revolves fundamentally on training a powerful prover to at least partially deceive or mislead the weaker verifier. Needless to say, training powerful AI systems to be as persuasive as possible (even if only to other AI systems) need not always be societally beneficial, and could lead to the manipulation of humans as well. This concern is especially important to keep in mind as AI systems grow more capable and become more ingrained in peoples’ lives.

Reproducibility Statement

To make sure that our work is reproducible, we provide a full version of our codebase in the supplementary material, which will be made public upon release of the paper. This includes the datasets we used for our two experiments, as well as the code for generating them. Additional details about the data generation processes, agent architectures, and training processes can be found in Appendix C. Finally, we provide proofs for all of our results in Appendix B.

References

- Amit et al. (2024) Noga Amit, Shafi Goldwasser, Orr Paradise, and Guy Rothblum. Models that prove their own correctness. arXiv:2405.15722, May 2024. doi: 10.48550/ARXIV.2405.15722.

- Amodei et al. (2016) Dario Amodei, Chris Olah, Jacob Steinhardt, Paul Christiano, John Schulman, and Dan Mané. Concrete problems in AI safety. arXiv:2108.12099, 2016.

- Anil et al. (2021) Cem Anil, Guodong Zhang, Yuhuai Wu, and Roger Grosse. Learning to give checkable answers with prover-verifier games. arXiv:2108.12099, 2021.

- Anthony et al. (2017) Thomas Anthony, Zheng Tian, and David Barber. Thinking fast and slow with deep learning and tree search. In Proceedings of the 31st International Conference on Neural Information Processing Systems, NIPS’17, pp. 5366–5376, Red Hook, NY, USA, 2017. Curran Associates Inc. ISBN 9781510860964.

- Arnesen et al. (2024) Samuel Arnesen, David Rein, and Julian Michael. Training language models to win debates with self-play improves judge accuracy. arXiv:2409.16636, September 2024. doi: 10.48550/ARXIV.2409.16636.

- Babai et al. (1991) László Babai, Lance Fortnow, and Carsten Lund. Non-deterministic exponential time has two-prover interactive protocols. Computational Complexity, 1(1):3–40, 1991.

- Ben-Or et al. (1988) Michael Ben-Or, Shafi Goldwasser, Joe Kilian, and Avi Wigderson. Multi-prover interactive proofs: How to remove intractability assumptions. In Proceedings of the Twentieth Annual ACM Symposium on Theory of Computing, pp. 113–131, New York, NY, USA, 1988. Association for Computing Machinery.

- Bengio et al. (2023) Yoshua Bengio, Geoffrey Hinton, Andrew Yao, Dawn Song, Pieter Abbeel, Yuval Noah Harari, Ya-Qin Zhang, Lan Xue, Shai Shalev-Shwartz, Gillian Hadfield, Jeff Clune, Tegan Maharaj, Frank Hutter, Atılım Güneş Baydin, Sheila McIlraith, Qiqi Gao, Ashwin Acharya, David Krueger, Anca Dragan, Philip Torr, Stuart Russell, Daniel Kahneman, Jan Brauner, and Sören Mindermann. Managing AI risks in an era of rapid progress. arXiv:2310.17688, 2023.

- Borkar (2008) Vivek S. Borkar. Stochastic Approximation. Hindustan Book Agency, 2008.

- Brown-Cohen et al. (2023) Jonah Brown-Cohen, Geoffrey Irving, and Georgios Piliouras. Scalable AI safety via doubly-efficient debate. arXiv:2311.14125, 2023.

- Christiano et al. (2018) Paul Christiano, Buck Shlegeris, and Dario Amodei. Supervising strong learners by amplifying weak experts. arXiv:1810.08575, October 2018.

- Drexler (2019) K. Eric Drexler. Reframing superintelligence: Comprehensive ai services as general intelligence. Technical Report 2019-1, Future of Humanity Institute, University of Oxford, 2019.

- Fiez et al. (2020) Tanner Fiez, Benjamin Chasnov, and Lillian Ratliff. Implicit learning dynamics in stackelberg games: Equilibria characterization, convergence analysis, and empirical study. In Proceedings of the 37th International Conference on Machine Learning (ICML), pp. 3133–3144, 2020.

- Foerster et al. (2018) Jakob Foerster, Richard Y. Chen, Maruan Al-Shedivat, Shimon Whiteson, Pieter Abbeel, and Igor Mordatch. Learning with opponent-learning awareness. In Proceedings of the 17th International Conference on Autonomous Agents and MultiAgent Systems (AAMAS), pp. 122–130, 2018.

- Fürer et al. (1989) Martin Fürer, Oded Goldreich, Y. Mansour, Michael Sipser, and Stathis Zachos. On completeness and soundness in interactive proof systems. Advances in Compututing Research, 5:429–442, 1989.

- Goldman & Kearns (1995) S.A. Goldman and M.J. Kearns. On the complexity of teaching. Journal of Computer and System Sciences, 50(1):20–31, 1995.

- Goldreich (2001) Oded Goldreich. Foundations of Cryptography: Basic Tools. Cambridge University Press, 2001.

- Goldwasser et al. (1985) Shafi Goldwasser, Silvio Micali, and Charles Rackoff. The knowledge complexity of interactive proof-systems. In Proceedings of the Seventeenth Annual ACM Symposium on Theory of Computing, 1985.

- Goldwasser et al. (2008) Shafi Goldwasser, Yael Tauman Kalai, and Guy N. Rothblum. Delegating computation: interactive proofs for muggles. In Proceedings of the fortieth annual ACM symposium on Theory of computing, STOC ’08. ACM, May 2008. doi: 10.1145/1374376.1374396.

- Goldwasser et al. (2020) Shafi Goldwasser, Guy N. Rothblum, Jonathan Shafer, and Amir Yehudayoff. Interactive proofs for verifying machine learning. Technical Report 58, Electronic Colloquium Computational Complexity, 2020.

- Gowal et al. (2019) Sven Gowal, Krishnamurthy Dvijotham, Robert Stanforth, Timothy Mann, and Pushmeet Kohli. A dual approach to verify and train deep networks. In Proceedings of the 28th International Joint Conference on Artificial Intelligence (IJCAI), 2019.

- Hendrycks et al. (2021) Dan Hendrycks, Steven Basart, Saurav Kadavath, Mantas Mazeika, Akul Arora, Ethan Guo, Collin Burns, Samir Puranik, Horace He, Dawn Song, and Jacob Steinhardt. Measuring coding challenge competence with APPS. In Thirty-fifth Conference on Neural Information Processing Systems Datasets and Benchmarks Track (Round 2), 2021. URL https://openreview.net/forum?id=sD93GOzH3i5.

- Hendrycks et al. (2023) Dan Hendrycks, Mantas Mazeika, and Thomas Woodside. An overview of catastrophic AI risks. arXiv:2306.12001, 2023.

- Irving et al. (2018) Geoffrey Irving, Paul Christiano, and Dario Amodei. AI safety via debate. arXiv:1805.00899, 2018.

- Kenton et al. (2024) Zachary Kenton, Noah Y. Siegel, János Kramár, Jonah Brown-Cohen, Samuel Albanie, Jannis Bulian, Rishabh Agarwal, David Lindner, Yunhao Tang, Noah D. Goodman, and Rohin Shah. On scalable oversight with weak LLMs judging strong LLMs. arXiv:2407.04622, July 2024. doi: 10.48550/ARXIV.2407.04622.

- Khan et al. (2024) Akbir Khan, John Hughes, Dan Valentine, Laura Ruis, Kshitij Sachan, Ansh Radhakrishnan, Edward Grefenstette, Samuel R. Bowman, Tim Rocktäschel, and Ethan Perez. Debating with more persuasive llms leads to more truthful answers. In Proceedings of the Forty-First International Conference on Machine Learning, 2024. URL https://openreview.net/forum?id=iLCZtl7FTa.

- Kirchner et al. (2024) Jan Hendrik Kirchner, Yining Chen, Harri Edwards, Jan Leike, Nat McAleese, and Yuri Burda. Prover-verifier games improve legibility of llm outputs. arXiv:2407.13692, July 2024. doi: 10.48550/ARXIV.2407.13692.

- Leike et al. (2018) Jan Leike, David Krueger, Tom Everitt, Miljan Martic, Vishal Maini, and Shane Legg. Scalable agent alignment via reward modeling: A research direction. arXiv:1811.07871, November 2018.

- Letcher et al. (2019) Alistair Letcher, David Balduzzi, Sébastien Racanière, James Martens, Jakob N. Foerster, Karl Tuyls, and Thore Graepel. Differentiable game mechanics. Journal of Machine Learning Research, 20(84):1–40, 2019.

- Michael et al. (2023) Julian Michael, Salsabila Mahdi, David Rein, Jackson Petty, Julien Dirani, Vishakh Padmakumar, and Samuel R. Bowman. Debate helps supervise unreliable experts. arXiv:2311.08702, November 2023. doi: 10.48550/ARXIV.2311.08702.

- OpenAI (2024) OpenAI. Gpt-4o system card, 2024.

- Orekondy et al. (2019) Tribhuvanesh Orekondy, Bernt Schiele, and Mario Fritz. Knockoff nets: Stealing functionality of black-box models. In IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2019.

- Rafailov et al. (2023) Rafael Rafailov, Archit Sharma, Eric Mitchell, Christopher D Manning, Stefano Ermon, and Chelsea Finn. Direct preference optimization: Your language model is secretly a reward model. In Proceedings of the Thirty-Seventh Conference on Neural Information Processing Systems, 2023. URL https://openreview.net/forum?id=HPuSIXJaa9.

- Schroeder de Witt et al. (2020) Christian Schroeder de Witt, Tarun Gupta, Denys Makoviichuk, Viktor Makoviychuk, Philip H. S. Torr, Mingfei Sun, and Shimon Whiteson. Is independent learning all you need in the starcraft multi-agent challenge? arXiv:2011.09533, November 2020.

- Schulman et al. (2017) John Schulman, Filip Wolski, Prafulla Dhariwal, Alec Radford, and Oleg Klimov. Proximal policy optimization algorithms. arXiv:1707.06347, July 2017.

- Seshia et al. (2022) Sanjit A. Seshia, Dorsa Sadigh, and S. Shankar Sastry. Toward verified artificial intelligence. Communications of the ACM, 65(7):46–55, 2022.

- Shamir (1992) Adi Shamir. IP = PSPACE. Journal of the ACM, 39(4):869–877, 1992.

- Skalse et al. (2022) Joar Skalse, Lewis Hammond, Charlie Griffin, and Alessandro Abate. Lexicographic multi-objective reinforcement learning. In Proceedings of the Thirty-First International Joint Conference on Artificial Intelligence, pp. 3430–3436, 2022.

- Villalobos et al. (2022) Pablo Villalobos, Jaime Sevilla, Tamay Besiroglu, Lennart Heim, Anson Ho, and Marius Hobbhahn. Machine learning model sizes and the parameter gap. arXiv:2207.02852, 2022.

- Wäldchen et al. (2022) Stephan Wäldchen, Kartikey Sharma, Max Zimmer, and Sebastian Pokutta. Merlin-arthur classifiers: Formal interpretability with interactive black boxes. arXiv:2206.00759, 2022.

- Weisfeiler & Leman (1968) Boris Weisfeiler and Andrey Aleksandrovich Leman. A reduction of a graph to a canonical form and an algebra arising during this reduction. Nauchno-Technicheskaya Informatsia, 2(9):12–16, 1968.

- Xu et al. (2019) Keyulu Xu, Weihua Hu, Jure Leskovec, and Stefanie Jegelka. How powerful are graph neural networks? In International Conference on Learning Representations, 2019.

- Zhang & Lesser (2010) Chongjie Zhang and Victor Lesser. Multi-agent learning with policy prediction. In Proceedings of the 24th AAAI Conference on Artificial Intelligence, pp. 927–934, 2010.

Appendix A Additional Protocols

While a comprehensive comparison is beyond the scope of the present work, we include a brief description and high-level comparison of additional models for neural interactive proofs from the literature, with a summary given in Table 1.

Definition 6 (Anil et al., 2021).

The adp model is a PVG where and . is given by a set of deterministic distributions – i.e. functions – and contains the convex combinations of functions . The specification is such that and and the loss functions are given by:

where the expectation is taken with respect to and .

Under the debate protocol, two provers declare their answers as and respectively, and the verifier chooses to accept the answer given by prover after witnessing the ensuing debate .

Definition 7 (Irving et al., 2018).

The debate model is a PVG where and , with . The protocol is and we let . The loss functions are given by:

for the two provers and the verifier, respectively.

The Merlin-Arthur Classifier (mac) protocol is closely related to debate. In this setting, the verifier must output the correct class for the input (or output ‘not sure’) based on a feature provided by either a helpful (player ) or unhelpful (player ) prover, not knowing which provided the feature.

Definition 8 (Wäldchen et al., 2022).

The mac model is a PVG where and , with representing classes and a ‘not sure’ answer (), and are a set of features. Unlike debate and adp, the protocol is stochastic, with for and . The loss functions are given by:

where is a hyperparameter.

For a given PVG model model and solution concept , we denote the class of decision problems solved by , with provers and verifiers in classes of Turing machines and , respectively, as . Typically, we consider the case when and , where where is the set of all Turing machines and is the class of all probabilistic polynomial time machines. In this setting, we can draw analogies between the PVG models we discuss and the complexity classes they correspond to.

For example, by employing well-known results about the complexity class (Shamir, 1992), it follows immediately from Section 4 that nip corresponds to . Irving et al. (2018) similarly prove that debate corresponds to . On the other hand, while Anil et al. (2021) show that the s of adp correspond exactly to valid interactive proof protocols (when the verifier is deterministic), the theoretical strength of this result is severely limited due to its stipulation of zero soundness error, as we prove in Appendix B.

Appendix B Proofs

In this section, we provide proofs for the results presented in the main text , as well as further discussion and interpretation of these results.

B.1 Protocol Correspondences

We begin by restating a result from Anil et al. (2021), demonstrating a correspondence between the equilibria of adp PVGs and valid proof protocols.

Proposition 1 (Anil et al., 2021).

Let be a (probabilistic) decision problem and a adp game. Suppose that there exists some deterministic such that and . Then is a valid interactive proof protocol (with ) for :

-

•

If and only if ,

-

•

Only if .

Allowing for a soundness error is widely held to be critical to the power of interactive proofs. Indeed, if a set has a valid interactive proof protocol with , then .666On the other hand, having non-zero completeness error still results in (Fürer et al., 1989). Similarly, the restriction to deterministic verifiers is also theoretically significant: if a set has a valid interactive proof protocol where is deterministic, then we must also have . Unfortunately, if we consider a more realistic setting by relaxing these assumptions then the correspondence between the s of an adp PVG and valid proof protocols no longer holds.

*

Proof.

Let use consider the specific PVG with and , with the following deterministic strategies for the prover (who has message space ):

and with the verifier choosing a strategy that forms a convex combination over:

where are Iverson brackets (i.e. an indicator function), and thus the codomain of each is . We write explicitly as , where . Writing these strategies out explicitly we have:

| 0 | 1 | 2 | 3 | |

|---|---|---|---|---|

| 1 | 0 | 1 | 0 | |

| 0 | 1 | 2 | 1 | |

| 0 | 1 | 2 | 3 |

| 0 | 1 | 2 | 3 | |

|---|---|---|---|---|

| 0 | 1 | 1 | 0 | |

| 1 | 1 | 0 | 0 | |

| 1 | 1 | 1 | 1 |

Let . Then if (i.e., either or ) we have , and hence . Similarly, note that that for any , we have that for any such that (i.e., either or ). As , then is a valid proof protocol.

Suppose, for the remainder of the proof, that and . First, we show lack of necessity. By the reasoning above, we have that is a sound and complete interactive proof protocol for . But under the loss functions for adp while , and so the prover can beneficially deviate by switching to . Thus, is not an .

Second, we show lack of sufficiency. As we explain further below, the unique of the resulting PVG is given by , where and . Under this equilibrium, however, we have that (hence ) and (hence ). Therefore, we have , and so is valid if and only if . But because , this is false for any . In such cases, being an is insufficient for validity, completing the proof.

The intuition behind the equilibrium is that the larger the probability mass on the setting when (i.e. the smaller is) the more the verifier (and also the prover, as ) has an overriding incentive to make sure that it outputs the correct answer in this particular case. Because if or (for any ), the verifier is thus incentivised to encourage the prover to play . The only way the prover can lower its loss by playing is if the verifier plays with high probability.

Given that is being played with some probability, then the loss from extra probability mass placed on or is symmetric, hence we only need to find the probability of the verifier playing . The negative loss for the verifier is given by:

Given that we must have to avoid infinite loss, the verifier-optimal choice of can be found analytically by solving for the stationary points of the expression above with respect to , resulting in the solution . ∎

We next prove the correspondence result for nip games, which avoids the issues with Proposition 1 by considering approximate equilibria and by not blurring the distinction between soundness and completeness when considering worst-case outcomes. Alongside these theoretical results (and existing complexity-theoretic arguments), we note that our experimental results also suggest that using nip over adp leads to improved performance (see, e.g. Figure 3).

*

Proof.

Before beginning the proof notice that for any , the least upper bound of the resulting completeness and soundness errors are given by and respectively.

In an approximate equilibrium, the prover and verifier each select their strategies in a way that brings them within some or of the loss from their optimal strategy, respectively. We will show that is a valid IP system if and only if it is a (strict) verifier-leading -SE of . Concretely, we set:

where (because is a two-player game) contains the prover’s approximate (local) best-responses to , denoted . is the set of valid proof systems for , defined as:

where we assume that .

First, let us assume that is a valid IP system, with bounds and . Let us show that is a Stackelberg equilibrium. The optimal strategy for the prover in response to has loss . Suppose for a contradiction that the prover can deviate from to reduce their loss by at least :

Because is a valid IP system, then . Combining these inequalities yields a contradiction:

Thus is a strict of .

Conversely, let us assume that is a strict of with the aim of showing that a valid IP system. Define as the set of approximate best responses to that maximise the soundness error, i.e. maximise the loss when . Next, let us take some . We claim that is a valid IP system with soundness and completeness errors and respectively. Clearly, by construction, we have that for any prover strategy . Finally, for a contradiction, let us assume that:

It follows immediately that is not a strict of after all, as the verifier can deviate to lower their loss by at least , and thus we must have , meaning that is valid. ∎

Here we establish a correspondence between the Stackelberg equilibria of a nip game and its adversarial counterpart . We refer the reader to Section B.2 for further results and discussion on optimising for the worst-case loss.

*

Proof.

First consider some . By definition, the adversary best responds to . Considering their loss:

this is achieved by picking that maximises and that maximises . Furthermore, the prover -best responds to given that will be chosen in this way. This means that:

is within of the minimum. Now note that:

for . Therefore, we have that:

is within of the minimum. In other words, the prover -best responds to under the loss functions of . Using similar reasoning for the verifier, we see that .

Conversely, let be a verifier-leading -Stackelberg equilibrium. Let be the strategy for the adversary which selects such that and are maximised. Then by repeating the above argument in reverse we see that is a verifier-prover-leading -Stackelberg equilibrium, i.e. . ∎

We now prove the correspondence result for mnip games. The proof is very similar to that of the correspondence for nip games, so we limit ourselves to noting the differences.

*

Proof.

We follow the proof of Section 4. This time we define the approximation bound as follows.

In the mnip protocol, the provers are assumed to be able to agree on a joint strategy beforehand – including a commonly observed source of randomness – though their interactions with the verifier during the game are independent. The source of randomness then essentially forms a correlation device for the provers, allowing them to sample their actions using the agreed upon joint strategy . If neither prover has an incentive to deviate from this agreement given their action (provided by this ‘correlation device’), then we say that they are playing as in a correlated equilibrium.777We note that there is a slight discrepancy in our usage of this term from the classic definition, as we consider equilibria in which only the provers (not the verifier) correlate their strategies. In our formulation, the provers correlate their behavioural strategies and can randomise each time they send a message. However, because each prover is assumed to have perfect recall, then there is an equivalent joint mixed strategy in which the provers use their correlation device to each sample a deterministic strategy, which they then follow. Since and have the same loss, for a correlated Stackelberg equilibrium we can consider their combined strategy effectively as the strategy of a single player aiming to minimise the shared loss.

Now assume that is a valid MIP system, with bounds and . The optimal strategy pair for the provers in response to has (shared) loss:

Supposing for a contradiction that the provers can deviate from to reduce their loss by at least (), as before we obtain that:

and . Combining these inequalities again yields the contradiction:

The converse direction proceeds as before, again noting that in correlated Stackelberg equilibrium the combined strategy can reasoned about as the strategy of a single player. In particular, the fact that and can vary together when considering a best response to the verifier’s strategy means that the verifier’s strategy must be robust to both provers independently, which is what is required in the soundness condition. ∎

Finally, we prove the correspondence result for zk-nip games. As above, the proof is very similar to the previous cases, so we do not include the full proof. Regarding the motivation behind our discussion of zero-knowledge protocols, the idea is that while prover-verifier games may describe a training setup (in today’s current ML paradigm where there is a training-deployment dichotomy), in the future we will likely have large numbers of AI systems and services interacting with one another in order to solve tasks (see, e.g., Drexler (2019) for one vision of this scenario). While we may want such systems to be able to query one another we may not wish for agents to gain additional knowledge from doing so (perhaps because it represents private information, or could imbue the agent with new, potentially dangerous capabilities). While this risk is not novel, the concept of zero-knowledge interactions between such agents provides a firm theoretical foundation for addressing such problems. On the other hand (from the verifier’s perspective instead of the prover’s), it also suggests a fundamental limit to the amount that might be learnt from interacting with another, more powerful agent.

*

Proof.

Recall that in zk-nip we introduce two new verifiers, and , where is a ‘dishonest’ verifier that attempts to extract knowledge from the prover , in the sense of being able to generate a distribution over transcripts that cannot be generated (by the simulator ) simply by learning . If this is possible, then must be providing more knowledge than to . In this new game, are defined via:

In addition, we add the term to the prover’s standard loss. There is a slight subtlety here, however, in that we would like the prover to minimise conditional on the system being valid, as opposed to reducing while potentially violating the validity of the system. (This would be true, for example, if the prover adopted a strategy that always outputs a single message at every turn.) This leads to a lexicographic objective in which first minimises and, from the set of such minimisers, minimises . While this may seem somewhat convoluted, such objectives can be easily optimised in practice via a simple modification of any standard (MA)RL algorithm (Skalse et al., 2022).

In the remainder of the proof, we assume that the bound is tight – i.e. it is the minimal ZK bound achievable for any valid IP system for . We also note that if:

then:

where:

Conditioning on the value of does not introduce any new information for either , , or , because the prover is assumed to be able to compute , and the ZK error for the ‘dishonest’ verifier and simulator is based only on the case where , meaning that their behaviour when is irrelevant for the purposes of the ZK bound in Definition 2. As such, the bound introduced by our ZK variant is at least as strong as this definition, and so it suffices to consider the former.

Let us first suppose that is an of , where is defined as in the proof of Section 4 and the approximation errors for and are 0. First note that for any and then is zero-sum game between and . Hence, under – which is an NE in – we have a unique value .

In particular, because the prover seeks to minimise given that it is best-responding to , we must have that , where is the nip game underlying the zk-nip game in question. In other words, we end up with a valid proof system for (as per the reasoning in the proof of Section 4) that minimises the ZK error.888Here we assume a strong Stackelberg equilibrium in which is assumed to break any ties in favour of , hence our minimisation over . Thus, we have that is a valid -statistically zero-knowledge system for . ∎

B.2 Worst-Case Loss

The next result establishes that, under certain conditions, minimising the empirical risk is sufficient to minimise the worst-case loss. While optimising for the worst-case loss is inherently intractable for extremely complex, real-world scenarios. Our aim with Section 4.1 is to gesture at the high-level conditions of a problem that imply that despite this difficulty it can be enough to minimise the empirical risk. As more advanced techniques and theory become available for targeting worst-case optimisation, satisfying these conditions may become available by other means.

We also refer the reader to Section 4.1, which establishes a correspondence between optimising for the worst-case loss and the use of an additional adversarial agent in a given protocol. Our aim with Section 4.1 is merely to formalise the intuitive idea that the introduction of an adversary is a natural example of one such technique and mirrors, for instance, the use of an adversary in the debate protocol. To complement these theoretical results, we include empirical results regarding the worst-case performance of different protocols (see Figures 3(b) and 4(c)), which indicate that progress can indeed be made in this direction.

Definition 9.

has the worst-case uniform convergence property with respect to , , and if there is some function such that for every , if consists of samples then for all , with probability .

Definition 10.

has the worst-case robustness property with respect to , , and if there is some function such that for every , if consists of samples then with probability .

*

Proof.

Let us begin by defining . Next, we expand into four expressions, which we denote by to , respectively:

Fix some and let . Consider some drawn iid from such that . Then by worst-case uniform convergence we have that, with probability , for . Thus, we have directly that , and furthermore that:

The second two terms are non-positive as minimises , and for any . This second observation also implies that . Finally, by worst-case robustness, we have that, with probability , . Hence, with probability , as required. ∎

Appendix C Additional Experimental Details

A summary of the two experimental settings we consider in this work is show in Table 2.

| Task | Graph Isomorphism | Code Validation |

|---|---|---|

| X | Pairs of graphs | Natural language specifications and Python code |

| S | The graphs are isomorphic | The code satisfies the specification |

| M | Pairs of nodes | Natural language messages (max 150 words) |

| 10,000 pairs | 4,877 questions (each with two solutions) | |

| Agents | GNN + Transformer + MLP | GPT-4o and GPT-4o-mini |

| Training | Independent PPO | Expert Iteration |

C.1 Graph Non-Isomorphism

C.1.1 Data

Graphs generated using the Erdős-Rényi model using the following steps. In our experiments, we generated graphs ranging between 7 and 11 vertices with edge probabilities ranging between 0.2 and 0.8, and , , , and .

-

1.

Generate non-isomorphic graphs. The pairs are divided equally between the different graph sizes and edge probabilities. The number of graphs with a Weisfeiler-Leman score999I.e. the number of rounds of the Weisfeiler-Lehman algorithm required to determine that the graphs are not isomorphic. of 1, 2 and greater than 2 are divided according to the proportions and .

-

2.

Generate isomorphic graphs, by sampling from the non-isomorphic graph pairs and shuffling the nodes.

-

3.

Generate the remaining isomorphic graphs, by generating new graphs and shuffling the nodes.

The train-test split is 80:20.

C.1.2 Agents

Agents are comprised a shared body with three heads: a value head, a messaging head, and a decision-making head. The first component of the shared body is a Graph Isomorphism Network (GIN) (Xu et al., 2019) which is run in parallel on both graphs. The prover’s GIN consists of five layers, while the verifier’s consists of two. The outputs of the GIN are then passed to a one-layer transformer, where the attention layer is configured so that nodes in one graph may only attend to nodes in the other. The purpose of the transformer is to allow information for each graph to be combined into shared representations, one for each node and a global sum-pooled representation. These are the outputs of the shared body.

The value and decision heads are two-layer feedforward networks which take the global representations as input. The messaging head is a two-layer feedforward network which operated in parallel on the node representations and outputs a logit for each node.

C.1.3 Training

Agents are trained using independent PPO (Schulman et al., 2017; Schroeder de Witt et al., 2020). We use a clipped objective with value , with hyperparameters and . We additionally use advantage normalisation and entropy regularisation with coefficient . The learning rate is . For each protocol we train across seeds for steps.

C.2 Code Validation

C.2.1 Data

We used the Automated Programming Progress Standard (APPS) dataset (Hendrycks et al., 2021), which consists of 10,000 coding problems (specified in natural language), with 131,777 test cases for checking Python solutions and 232,421 ground-truth solutions written by humans. The average length of a problem is 293.2 words, and questions are categorised into three difficult levels (‘introductory’, ‘interview’, and ‘competition’). We generate our training data as follows:

-

•

For each problem , we iterate over the given ground-truth solutions until we are successful in modifying such that it both compiles as valid Python code, and it fails to pass at least one of the automatic checks for .

-

•

More concretely, we use GPT-4o-mini to produce , instructing it (using the prompt shown below) to subtly modify the original answer, not merely introduce a syntactic error, and to maintain stylistic consistency.

-

•

Finally, we clean the data by removing all comments and split the data into a train and test set, where each instance contains input , the problem description and the answer, and the (in)correctness label .

The train-test split of the eventual dataset is 90:10. An example of an edit made to generate the buggy code is as follows, where the deletion and addition are highlighted.

The system prompt given to GPT-4o-mini in order to generate the buggy solutions is as follows:

The prompt then given for each input, i.e. with the $question and $solution variables are drawn from the APPS dataset, was:

C.2.2 Agents

For the agents we use GPT-4o for the provers and GPT-4o-mini for the verifiers. Below we include the prompts given to the agents as part of the nip protocol as an example. Prompts for the other protocols can be found in our codebase.101010The codebase is provided in the supplementary material and will be made public upon release of the paper.

The prover’s system prompt is as follows:

The the verifier’s system prompt is:

C.2.3 Training

We train both provers and verifiers via the OpenAI fine-tuning API using expert iteration for eight rounds (Anthony et al., 2017). This works by fine-tuning the models in each round on the rollouts on which they received positive reward. We use 10% of the underlying dataset at a time, iteratively adding positive examples to the fine-tuning dataset. Following Kirchner et al. (2024), we fine-tune each model from scratch in each iteration.

In addition, we test a variation of expert iteration with verifier guess replacement that we refer to as ‘stabilised’ expert iteration. In this scheme, the prover agents are trained normally, while the verifier agent is trained with a modified method. In each iterations the rollouts are divided into two partitions. From the first partition we select those rollouts where the verifier receives positive reward. We then select all rollouts from the second partition, but in each case we replace the verifier’s guess with the true label. The proportional size of the second partition in annealed throughout training, starting at 80% and decreasing linearly to 0%.

Appendix D Additional Experimental Results

In further experiments, we seek to more deeply understand the nature of neural interactive proofs and the strengths and weaknesses of different protocols.

D.1 Graph Isomorphism

Figure 5 show the effect of scaling train dataset size on verifier accuracy in the NIP protocol. We observe a phase-transition effect at size 10,000. Figure 6 shows the (lack of) change in performance as a function of the verifier’s GNN depth for the other protocols. As noted in Section 6.1, however, the nature of this task means that other protocols should not be expected to fare as well as nip, which is able to relatively easily uncover a natural algorithm in this toy setting. Figure 7 shows how the verifier accuracy and the number of rounds of communication changes throughout training.

D.2 Code Validation

We begin with additional quantitative analysis of neural interactive proofs for the code validation task. We further supplement this with qualitative evidence in the form of transcripts for each of the protocols that we evaluate.

D.2.1 Additional Analysis

Figure 8 compares the test accuracy between the solo verifier baseline and the nip protocol across APPS difficulty levels, without training the agents. In all cases we see that the verifier is able to achieve higher accuracy when interacting with the prover. Moreover, the accuracy achieved decreases as the difficulty of the problem increases, which is expected.

In Figures 9 and 10, we present the distribution of per-data-point accuracies for the verifier, where for each data-point we sample 10 rollouts and compute the proportion of where the verifier guesses correctly. We do this across all protocols, before and after training, and using both variations of expert iterations presented in Section 6.2.

Under both training schemes, the accuracy distribution for the solo verifier collapses to a bi-modal distribution, with modes at both extremes. Strikingly, this does not occur when using the various interaction protocols, where the distribution remains relatively unchanged. The only exception is the debate protocol trained using stabilised expert iteration (where recall that this means that the verifier guess is replaced in an annealed proportion training samples during rounds of expert iteration). There the distribution starts out as bi-modal, but spreads out after training, reducing the proportion of data points where the verifier is always incorrect significantly.

These plots help to point out a key reason that nip and mnip outperform debate which is that using standard expert iteration leads to the verifier rejecting very often under the debate setting. Our leading hypothesis is that this stems from the presence of a prover arguing for rejection, not just acceptance, combined with the fact that for code correctness there is a certain kind of asymmetry where arguing for the presence of a single flaw that undermines the correctness of a program is intuitively easier than arguing for correctness of the entire program. Another way to phrase this is that the debater arguing for rejection need only make an existential claim (“there is a line with a bug”), whereas the debater arguing for acceptance must make a universal claim (“all lines are bug-free”). Combined with the fact that solo verifier is also reluctant to accept any potentially buggy code (even after extensive prompt tuning to prevent this behaviour), this helps to explain the biassed and thus lower performance of debate relative to nip and mnip. When this bias is removed using stabilised experience replay, debate’s performance improves (see Figure 4(b)), though our ongoing and future work that tests additional learning algorithms and problem domains is likely to add further clarity here.

Figures 11, 12 and 13 show how the verifier train and test accuracy and the number of rounds of communication change throughout training.

Finally we analysed the transcripts of the rollouts across training using GPT-4o-mini. We prompt the model to decide each of the following questions for each transcript, and plot the proportion of times it answered ‘yes’ in the corresponding figure.

-

•

Did the prover(s) conform to their assigned role, not conceding that they are wrong? (Figure 14)

-

•

Did each agent follow up on previous discussion points? (Figure 15)

-

•

Did each the prover(s) make use of quotations form the problem description? (Figure 16)

-

•

When the prover(s) made use of quotations, did they do so completely accurately? (Figure 17)

D.2.2 adp Transcripts

Before training:

After training:

D.2.3 debate Transcripts

Before training:

After training:

D.2.4 mac Transcripts

Before training:

After training:

D.2.5 nip Transcripts

Before training:

After training:

D.2.6 mnip Transcripts

Before training:

After training: