capbtabboxtable[][\FBwidth]

Zhilu Lai, Internet of Things Thrust, Information Hub, HKUST(GZ), Guangzhou 511458, China. Formerly at Singapore-ETH Centre.

Neural Extended Kalman Filters for Learning and Predicting Dynamics of Structural Systems

Abstract

Accurate structural response prediction forms a main driver for structural health monitoring and control applications. This often requires the proposed model to adequately capture the underlying dynamics of complex structural systems. In this work, we utilize a learnable Extended Kalman Filter (EKF), named the Neural Extended Kalman Filter (Neural EKF) throughout this paper, for learning the latent evolution dynamics of complex physical systems. The Neural EKF is a generalized version of the conventional EKF, where the modeling of process dynamics and sensory observations can be parameterized by neural networks, therefore learned by end-to-end training. The method is implemented under the variational inference framework with the EKF conducting inference from sensing measurements. Typically, conventional variational inference models are parameterized by neural networks independent of the latent dynamics models. This characteristic makes the inference and reconstruction accuracy weakly based on the dynamics models and renders the associated training inadequate. In this work, we show that the structure imposed by the Neural EKF is beneficial to the learning process. We demonstrate the efficacy of the framework on both simulated and real-world structural monitoring datasets, with the results indicating significant predictive capabilities of the proposed scheme.

keywords:

Neural Extended Kalman Filters, variational inference, learnable filters, deep learning, structural dynamics, structural health monitoring, digital twin1 Introduction

Digital twins have attracted interest in a wide range of applications, among which structural digital twins have shown promise in virtual health monitoring, decision-making and predictive maintenance tao2018digital . A digital twin is a virtual representation of a connected physical asset and encompasses its entire product lifecycle aiaa2020digital . They acquire and assimilate observational data from the physical system and adopt this information to update dynamics models, which represent the evolving physical system kapteyn2021probabilistic . The underlying dynamic model thus significantly underpins the establishment of a digital twin wagg2020digital . Unlike physics-based modeling, which relies on first principles kerschen2006past , data-driven modeling resorts to use of learning algorithms to infer the underlying mechanisms that drive a system’s response brunton2022data . The latter class of models plays an essential role in vibration-based structural health monitoring (SHM) farrar2012structural , as a main approach to understanding the actual properties of the assessed structural systems, and to performing accurate dynamical response prediction with the established models. Diverse data-driven techniques have been developed in the context of SHM, including vibration-based modeling based on modal parameters derived from measured structural response signals dohler2013uncertainty and conventional time series analysis techniques, including those of the AutoRegressive class bogoevska2017data ; avendano2021virtual . These methods are typically non-trivial to apply on complex structures with large inherent uncertainties, such as large-scale civil infrastructure systems.

Further to classical data-driven approaches, which draw from system identification, machine learning (ML) and deep learning (DL), in particular, offer powerful and promising tools for modeling complex systems or capturing latent phenomena. Important applications include image recognition he2016deep ; svensen2018deep ; krizhevsky2012imagenet , speech recognition hinton2012deep , autonomous driving bojarski2016end , and more. Their aptitude in recognizing patterns in data and doing so via use of reduced latent features renders such schemes potent tools for large complex structural dynamics modeling ljung2020deep ; farrar2012structural ; dervilis2013machine , which exhibit complex, oftentimes nonlinear, behaviour.

Many recent works have been focusing on bridging deep learning and structural dynamics chen2018neural ; jiang2017fuzzy . We classify these methods into two main categories, namely direct mappings and generative models. The first class essentially learns a function that maps the input (force/load) to the output (response) of the system. This class of methods exploits neural networks with customized architectures and physical constraints tailored to the problem at hand raissi2019physics ; zhang2019deep ; zhang2020physics ; xu2021phymdan , such as recurrent neural networks (RNN) and convolutional neural networks (CNN). The caveat of these methods lies in constructing a mere input-output mapping without explicitly modeling the governing dynamics, thus implying that interpretability and generalizability may not be guaranteed. Meanwhile, vibration data measured from operating engineered systems usually embody and reflect the underlying governing dynamics of that system. In this respect, the second class of methods, which fall in the generative model class, attempts to learn a dynamical model that best captures the underlying dynamic evolution. Deep learning-based state space modeling, either in continuous or in discretized format, has been employed to perform data-driven modeling with various deep learning methods liu2022physics ; lai2021structural ; fraccaro2017disentangled ; karl2016deep ; gedon2020deep ; girin2020dynamical ; bacsa2023symplectic . This class of methods aligns with the common approach to modeling dynamics, rendering such a representation interpretable, even though it is delivered via learned neural network functions. This paper will be focusing on the second class.

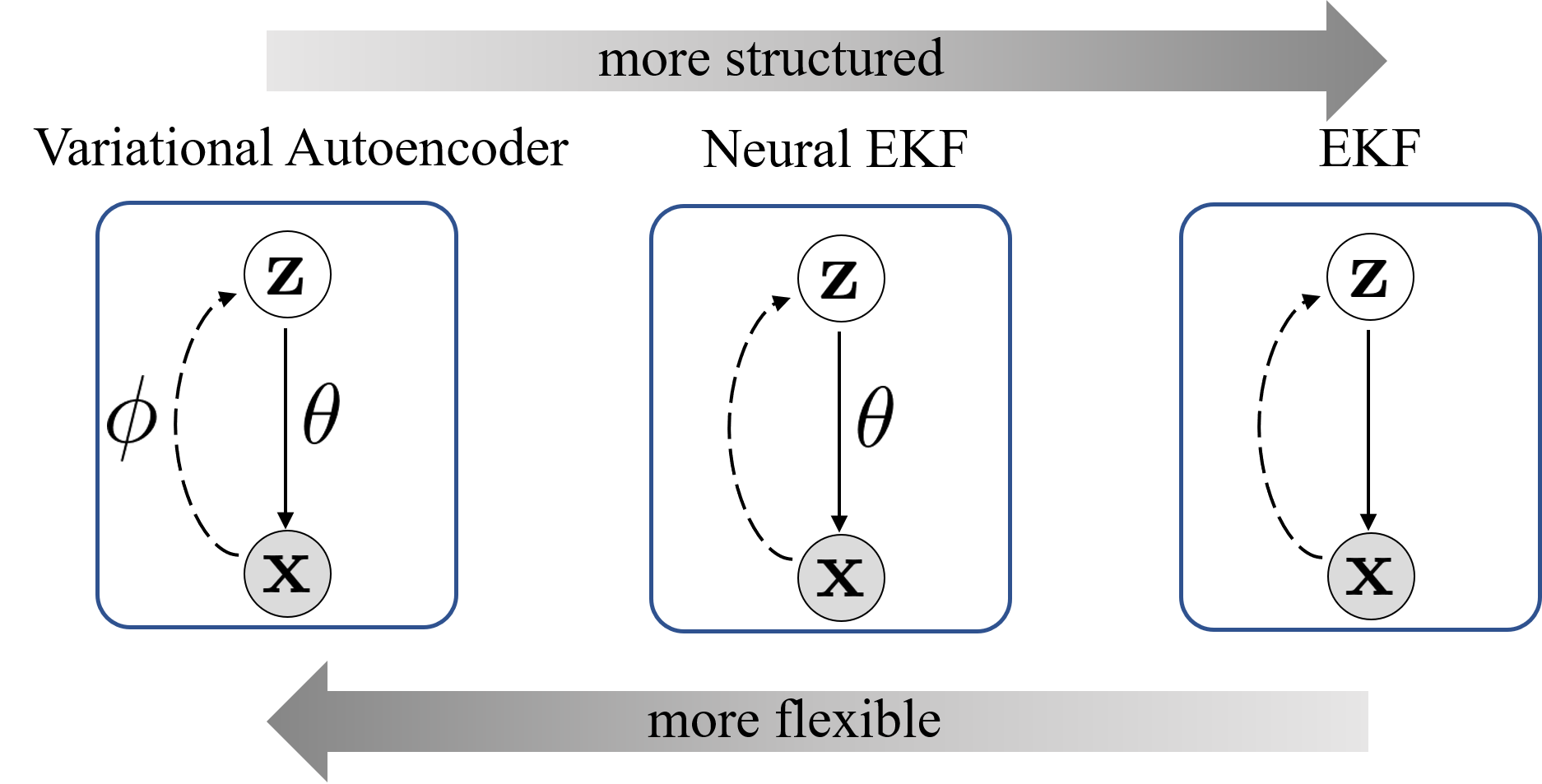

As shown in Figure 1, the key idea of this second class lies in learning three models: (i) the inference model: modeling how the latent variable vector z can be estimated from observation/data x; (ii) the transition model: modeling how the latent variable z evolves over time; and (iii) the observation model: modeling how the measured data x are generated from z. For convenience, the transition and observation models are collectively referred to as dynamics models throughout this paper.

Dynamical variational autoencoders (VAEs) kingma2013auto ; girin2020dynamical is a class of methods following the architecture described in Figure 1, for modeling a dynamical system from data (). In addition to the dynamics models, the inference models in VAE-based methods are typically parameterized by neural networks. As shown in krishnan2017structured ; karl2016deep and studied further in li2021replay , in this setting, the variational objective depends mainly on the inference model, while the dynamic models are relegated to a regularizer for the inference network. Often, this makes the learned model unsuitable for prediction due to the inaccuracy of the dynamics models employed to generate predictions.

On the other hand, while VAEs can be too flexible with both dynamics models and inference models parameterized by neural networks, the conventional Bayesian filtering methods chatzi2009unscented ; azam2015dual ; chatzis2017discontinuous go to the other extreme, requiring known dynamics models, usually derived from physics-based representations, and conduct the inference only using these dynamics models with no further inference models. Formulating suitable transition and observation models can be infeasible and challenging in many scenarios, especially when the dynamics of the system is not easily modeled or fully understood, or the sensory observations stem from vision-based data (images, videos). Over the past years, deep learning has become the method of choice for processing such data. Recent work haarnoja2016backprop ; li2021replay ; kloss2021train ; jonschkowski2018differentiable ; karkus2018particle showed that it is also possible to render the dynamics models of Bayesian filters learnable and train them end-to-end, leveraging deep learning techniques and filtering algorithms. For Kalman Filters haarnoja2016backprop , Extended Kalman Filters li2021replay , Unscented Kalman Filters kloss2021train and Particle Filters jonschkowski2018differentiable ; karkus2018particle , the respective works showed that such learnable filters systematically outperform unstructured neural networks like RNN, LSTM and CNN kloss2021train .

Combining the benefits of the VAE- and Kalman filtering-based strategies, and inspired by related work in the robotics community haarnoja2016backprop ; li2021replay ; kloss2021train ; jonschkowski2018differentiable ; karkus2018particle , we utilize the learnable EKF proposed in li2021replay and name it as Neural Extended Kalman Filter (Neural EKF) in this paper for learning and predicting complex structural dynamics in real scenarios, based on these main principles: (i) we use EKF formulas to conduct closed-form inference instead of implementing an additional neural network to overtake this task, thus allowing for the training to focus on the dynamics models rather than the inference task; and (ii) we eliminate the requirement of explicitly known transition and observation models imposed by conventional Bayesian filtering approaches. At this point, we wish to clarify that the idea we here present is originally adapted from li2021replay , where a Replay Overshooting framework is presented. The Neural EKF presented in this paper is a modified version of the previous scheme, adapted to structural dynamics problems. We further wish to clarify that an existing framework termed Neural EKF owen2003neural appears in existing literature, which is however different to what we here propose. In that work, the transition model comprises the Jacobian of estimated system dynamics as a prior, with an error correction term modeled by neural networks. That framework is termed Neural EKF due to the use of the Jacobian in the transition model, while the neural network simply learns a discrepancy term. There is no actual extended Kalman filtering process, while further the observation and inference models are not considered, as carried out in this work. Here we choose to term this framework the Neural EKF since our suggested scheme comprises a fully learnable version of the original EKF.

From the perspective of Kalman filtering, the Neural EKF is a learnable implementation of the EKF, where the transition and observation models can be learned by end-to-end training. From the perspective of VAE modeling, the Neural EKF draws from a variational learning methodology with the EKF serving as the inference model, which conducts inference using only dynamics models and does not impose additional hyper-parameters to be learned. We use Figure 2 to summarize the comparisons between VAEs, the Neural EKF and the EKF. We validate the framework on three different scenarios to demonstrate its value in learning dynamical systems. Further to verification on simulated data, two experimental case studies on seismic and wind turbine monitoring are presented to validate the capability of the framework to capture the underlying dynamics of complex physical systems. The scheme is shown to perform accurate predictions, which is essential for adoption in downward applications, such as structural health monitoring and decision making.

2 Preliminaries

2.1 Variational Autoencoders and Deep Markov Models

Variational autoencoders (VAEs) kingma2013auto are popularly used for capturing useful latent representations z from data x by reconstructing the VAE input to an output vector. The VAE can be extended to a dynamical version by considering the temporal evolution of the latent variables girin2020dynamical . Among various dynamical VAEs, Deep Markov Models (DMMs krishnan2017structured ) offer a primary example of unsupervised training of a deep state-space model. By chaining an approximate inference model with a generative model and using the variational lower bound maximization approach, DMMs deliver the most direct extension of static VAEs to account for temporal data girin2020dynamical . The DMM couples a transition model , describing temporal evolution of the latent states, with an observation model , serving as a decoder and describing the process from latent states to observations, and an inference model , serving as an encoder, which approximates the true posterior distribution .

The training of a VAE consists in maximizing a variational lower bound of the data log-likelihood . Upon application of the variational principle on the inference model , the evidence lower bound (ELBO) of the data log-likelihood is given as follows:

| (1) |

where the KL-divergence is defined as .

Apart from the parameters involved in transition and observation models, the VAE architecture typically employs an additional inference network, which is independently parameterized by the hyper-parameters of the corresponding neural networks. The key idea of VAE is to approximate the posterior distribution with the introduced inference network. However, the objective function ELBO largely depends on the quality of the inference model through the first reconstruction term, which does not involve a transition model, and the influence of the transition models is indirectly relegated to a regularizer for the inference model, reflected in the second KL-divergence term li2021replay . The KL-divergence itself is a regularization term and it attempts to only make the single-step dynamics transition similar to the posterior given by the inference network. The above arguments indicate that the quality of VAE reconstructions, as measured by the value of the objective ELBO, strongly depends on the inference model but rather weakly on the transition models. This characteristic of the training objective makes the learned model capable of delivering accurate inference results under availability of observation (monitoring) data, but unsuitable for prediction due to the insufficient training of the transition models.

As shown in Fig. 3, the result demonstrates the advantages of the Neural EKF over the DMM, which is, as mentioned, a dynamical VAE model that directly extends the static VAE to account for temporal data. The results are demonstrated on a 2-DOF Duffing system, defined by Equation (28) appearing in the Applications section. It is observed that the DMM fails to deliver sufficiently accurate predictions, while the predicted responses, furnished by the Neural EKF, very well fit the true (reference) responses.

2.2 Extended Kalman Filtering and Smoothing

For nonlinear state estimation and parameter identification in civil and mechanical engineering, the Extended Kalman Filter (EKF) has been a popular choice for weakly nonlinear systems, mainly due to its ease of implementation, robustness and suitability for real-time applications. It assumes a sequence of measurements from a monitored dynamical system, which are generated by some latent states that are not necessarily directly observed. The transition from a state to the next state is termed as transition, and the process from a state to its corresponding observation is termed as observation. Compared to dynamical VAEs, the EKF assumes that the transition and observation models are given, as described by the following two equations:

| (2) | ||||

| (3) |

where and are two known linear/nonlinear functions governing the transition and observation models, and , are Gaussian noise sources with mean zero and covariances and , respectively. EKF assumes that the inferred posterior distributions of latent states are based on past and current observations and driving force , and follow a Gaussian distribution:

| (4) |

Here and represent the mean and covariance of the posterior distribution. The Kalman Filter (KF) 10.1115/1.3662552 offers a closed-form of the posterior distributions of latent states and provides exact inference for linear systems. The EKF conducts approximate inference, employing a linearization of the nonlinear equations. For EKF, the inference of the posterior distribution is obtained by iteratively executing the following two steps. The first step predict is to compute the posterior distribution , which is simply based on previous observations :

| (5) | ||||

| (6) |

where is the Jacobian of at . The second step update pertains in updating the posterior distribution from the predict step using the current observation :

| (7) | ||||

| (8) | ||||

| (9) |

where is the Jacobian of at . The Jacobian matrices and are used as linear approximations of the original nonlinear functions and , respectively, for conveniently computing the involved covariances and .

The EKF is a recursive filtering method for conducting inference based on , i.e., the observations until the present time . Since we assume that a sequence of observations is available, it is possible and reasonable to further update the inference of posterior distributions with the whole sequence of observations, with respect to a time step within the sequence. This process is termed as smoothing. The Rauch-Tung-Striebel smoothing rauch1965maximum is a Kalman smoothing algorithm to infer such posterior distributions of latent states based on the whole sequence of available observations. The smoothing process can fully make use of available data when computing the posterior distributions and generate more accurate inference results with adequate information, instead of only utilizing information before each time step, which is the case for the Kalman filtering process. Note that, compared to Eq.(4), now the posterior depends on the whole sequence of and . Once the EKF process is completed, the smoothing algorithm operates in a backward manner, from time to , to update the posteriors:

| (10) | ||||

| (11) | ||||

| (12) |

The intermediate quantities , , and in this smoothing process are already available at Eqs.(5)-(9), in the EKF stage.

2.3 Prior and posterior distributions

Here we introduce the prior and posterior distributions that will be used for computing the loss function of Neural EKFs in the later section. The final posterior distribution after application of the EKF algorithm and the smoothing step is given as , where and are given in Eqs.(11) and (12). Furthermore, given , we can compute the distribution of each evolved :

| (13) |

where is the Jacobin of at . Subsequently, the distribution of observation , given , can be derived as

| (14) |

where .

3 Neural Extended Kalman Filters

A salient limitation of the EKF when applied to learning dynamical systems lies in the requirement for the transition model and the observation model to be explicitly defined or at least of known functional format. However, this is not practically feasible when applied to real-world complex systems. In tackling this limitation, the key idea of the proposed Neural EKF is to replace and by learnable functions, typically by neural networks. By doing this, the transition and observation models turn to be trainable and efficiently learned by minimizing the defined loss function, making the Neural EKF a flexible tool for learning the dynamics of complex systems. Also, another benefit compared to VAEs, where the inference model is parameterized by neural networks, is that the inference model of the Neural EKF follows a closed-form model. In this section, we will detail the modeling and training of the Neural EKF framework.

3.1 Extended Kalman Filters with Learnable Dynamics Models

In Eqs.(2) and (3), if we replace and by learnable transition and observation functions, the framework can be described as:

| (15) | ||||

| (16) |

where and are the learnable functions governing the transition and observation models, both parameterized by neural networks with parameters . The process noise sources and observation noise are assumed to follow Gaussian distributions, with respective time-invariant covariances, i.e., and for all time steps . The time-invariant covariances and are also set as learnable parameters during the training process. It is noted that here the latent dynamics is formulated as a discrete model. Alternatively, continuous models such as Neural Ordinary Differential Equations chen2018neural can also be implemented to model the latent dynamics if one wants to treat the latent dynamics as a continuous model, as explained in Appendix.

The inference model of the Neural EKF follows the format of the EKF. This is different from the inference model in VAEs, where is a separate inference network parameterized by independent of parameters within and . Since the objective ELBO largely depends on the goodness of reconstruction and inference, a separate inference network is thus weakening the training of the transition and observation models.

3.2 Evidence Lower Bound and Training

With Kalman filters conducting inference, the parameters to be learned are summarized in the vector , which includes neural network parameters of both the transition model and the observation model . In addition, the initial values and and covariances , for respective noises are also parameters to be learned. Similar to the VAE, the training of Neural EKFs is embedded in the variational inference methodology, with the EKF algorithm charged with conducting inference. Given any inference model and Markovian property implied by the dynamics, the ELBO in Eq.(1) can be expressed in a factorized form ( and are abbreviated as and in the following formulations for simplicity):

| (17) |

where, in the Neural EKF, is actually , which alleviates the requirement of additional parameters, further to , within the transition and observation models, and thus reduces to . Since the posterior distributions can be computed in closed form by EKF, the distributions in Eq.(17) can thus be computed explicitly given , as shown in Section Prior and posterior distributions. Thus, the ELBO Eq.(17) can be computed in a surrogate way as:

| (18) |

The above involved distributions are based on the prior . The log-probability and KL-divergence can be computed analytically when all the involved distributions are Gaussian. The log-probability of a Gaussian distribution has an explicit format as:

| (19) |

where is the dimension of . As stated in Eq.(14), since and replace by , we have . Using Eq.(19), the log-likelihood term in Eq.(18) can be computed approximately as:

| (20) |

Similarly, the KL-divergence term , when and are both Gaussian distributions, comprises an analytical form as:

| (21) |

where is the dimension of . As stated in Eq.(13), and replace by ,

| (22) |

since we have and . Substitute these mean and covariance into Eq.(21), the KL-divergence term in Eq.(18) can be computed as:

| (23) |

Thus the final objective ELBO enforced by EKF and smoothing formulas can be written as:

| (24) |

Typically, the variational objective function for the dynamical VAE framework focuses on the reconstruction loss, which largely depends on the inference model (encoder) and observation model (decoder), whereas the transition model plays a minor role in the variational objective and works as an intermediate process for training. This often results in an accurate inference model and a less meaningful transition model, which is not applicable for prediction because the transition model cannot reflect the true underlying dynamics. Therefore, it is necessary that the objective function also takes the accuracy of the transition model into consideration to ensure its closeness to the true latent dynamic process. To address this issue, we adopt the overshooting method proposed in li2021replay , which is termed as replay overshooting. The key point lies in simply introducing the prediction loss of the generative model into the objective function. After obtaining a sequence of means and covariances , the initial value will be further used for prediction process. Then similarly as the prediction step in EKF, the predicted values are obtained via the generative model as:

| (25) | ||||

| (26) |

and we receive another set of distributions from the generative model. The final reconstruction loss is composed of the both sets of posterior distributions, i.e., the posterior distributions obtained from the Kalman inference model in Eq.(20), as well as those obtained from the transition model, weighted by (in this paper, we set ). These form the objective function combined with the KL loss, expressed as:

| (27) |

where the first and third term are the same as in (24), while the second term is for overshooting.

The pipeline of the Neural EKF is summarized in Algorithm 1.

3.3 Neural EKF for Response Predictions

Once a Neural EKF model is trained, the learned function and is available and can be used for response prediction. The prediction is performed using and , given a new initial condition and corresponding driving forces . Note that a new initial condition can be either specified by the user, or inferred from actual data via use of the inference model of the Neural EKF.

The Neural EKF is capable of generating predictions in real-time to detect structural anomalies and changes as they occur for structural health monitoring. On the other hand, it requires offline training on a characteristic batch of operational data, prior to it being applied in prediction mode. However, in intervals where loads significantly increase with respect to the training set, possibly triggering more severe nonlinearity, or if the system experiences damage, the model must be updated offline using newly acquired data for training, in order to adapt to the changed dynamics. This is a trade-off that is often present in real-time monitoring systems, where the need for accurate predictions must be balanced with the need for timely updates.

4 Application Case Studies

We verify and validate the proposed Neural EKF framework on both simulated and real-world datasets. The numerical simulation aims to demonstrate the predictive capabilities of the learned model via Neural EKF, as compared against the commonly adopted option of variational autoencoders.

The experiments involving real-world monitoring datasets aim to further demonstrate the Neural EKF’s ability to learn the dynamics when the system is considerably complex. In this case, physics-based modeling can be challenging due to the unknown model structure, or computationally unaffordable. In the employed seismic monitoring case study, we validate the effectiveness of the Neural EKF in predicting seismic responses on the basis of available ground motion inputs (earthquakes). Furthermore, in the experimentally tested wind turbine case study, we perform a thorough analysis on dynamic response prediction, damage detection and structural health monitoring based on the learned Neural EKF model.

The transition function and observation functions are modeled as multilayer perceptrons consisting of three hidden layers. Each hidden layer comprises 64 nodes for the Duffing and seismic response examples, and 128 nodes for the wind turbine example. The dimension of the latent states is assumed to correspond to twice the DOFs of the modeled system, which is an assumption commonly adopted in conventional state-space modeling. The dimension of latent states is a predefined hyperparameter and can be fine-tuned if needed. This is different from conventional Kalman filters, where a fixed state equation, and thus a corresponding dimension, has to be assumed. In this case, issues may arise in terms of the observability of the system’s states, in case the observed variables are not carefully chosen, and particularly if they are lower than the number of predefined latent states. However, in our proposed method, the latent state mainly serves as an intermediate quantity to optimally reconstruct and predict the observed quantities. Similar to the width and depth of neural networks, a larger latent state dimension usually benefits the training result but may lead to overfitting. In our examples, we opted for a latent state dimension that equals twice the number of DOFs of the modeled system, as this is a natural choice. The data and codes used in this paper are publicly available on GitHub at https://github.com/liouvill/NeuralEKF.

4.1 Duffing Oscillator System

We first demonstrate the performance of the Neural EKF framework for predicting the dynamic response through a numerical example. In this example, we consider a 2 degrees-of-freedom (DOF) nonlinear duffing oscillator subjected to random excitations. The data used for this case study are simulated by the following differential equation:

| (28) |

where , the mass matrix , the stiffness matrix , the damping matrix and the coefficient of the cubic stiffness term . Here the displacements of both DOFs and are assumed available as measurements. It is noted that this parameter setting falls into the scenario where the Duffing oscillator presents a single stable equilibrium point at the origin. We consciously make this choice, as we here adopt this as a nonlinear example, in order to demonstrate the capability of the Neural EKF to deal with nonlinearity, but not rare instabilities or chaotic behavior, which would require a more complex treatment.

For this numerical case study, a number of 1000 random trajectories are generated with both transition and observation noise variances equal to 0.001 for training by the simulated 2-DOF nonlinear Duffing system shown in Eq.(28). Another 5 randomly generated trajectories are used as an unknown dataset for testing the prediction capability of the Neural EKF framework. The prediction results for the free vibration case are shown in Figure 4. As indicated by the results, the Neural EKF is capable of producing satisfactory predictions that reasonably match the reference well for this simulated nonlinear system. As comparison, which is already shown in Fig 3, it is observed that the DMM, which is a pure VAE-based method, fails to capture the dynamics and the predictions are much less accurate.

| Noise variance | ||

|---|---|---|

| 0.001 | 0.04865 | 0.01691 |

| 0.01 | 0.03770 | 0.01331 |

| 0.1 | 0.05192 | 0.01709 |

To further demonstrate the robustness of the proposed model, we have generated artificial observations (measured data) with different levels of noise contamination. The data is generated by Eq.(28) using the same initial values across three cases, but with different levels of additive Gaussian observation noise. More specifically, we generate three different simulated cases corresponding to an observation noise variance of 0.001, 0.01 and 0.1, respectively. The “process noise” is set to be zero, when generating artificial observation data, since integration of a larger transition process noise would cause the solution of the ODE to diverge due to nonlinearity of the Duffing system. The root mean squared error between the predicted and true (noise-free) system response is reported in Table 1. Our results indicate that the difference between the predicted and noise-free system response, for training and prediction under assumed contaminated measurements, are similar across different noise covariance levels. The proposed method thus proves particularly robust to noise contamination, as revealed by the results shown in Figure 5 for the case with different observation noise variances. As comparison, the DMM-predicted results are plotted in dark green, which deviate significantly from the ground-truth and fail to provide accurate and reliable predictions.

4.2 Seismic Response Data

The framework is further investigated using real-world seismic data obtained from the Center for Engineering Strong Motion Data (CESMD) haddadi2008center for a 6-story hotel building in San Bernardino, California. It is a mid-rise concrete building installed with a total of nine accelerometers on the first, third and roof floors in different directions. The sensor placement is shown in Figure 6, where three accelerometers are mounted on each of the ground floor, third floor, and roof. The sensors have recorded multiple seismic events from 1987 to 2021. 17 corresponding structural response and ground motion datasets are used to train the Neural EKF with one dataset reserved for evaluating prediction performance, which corresponds to the San Bernardino earthquake in 2009. The data we used are extracted from sensors 1, 4 and 7, which lie along the same direction. The data from sensor 1 are used as the input ground motion, while the data from sensors 4 and 7 are used as output responses. Due to the inconsistency of the sample frequencies, we first pre-process the data using resampling techniques. The signals are uniformly resampled at 50 Hz (the downloaded data was already pre-processed by the website with noise filtering, baseline and sensor offset corrections).

After training the Neural EKF model with a pre-processed training dataset, the predictions on another independent test dataset are obtained by simply feeding the new seismic inputs into the trained model. The predictions and ground truth values are compared in Figure 7. A strong agreement between prediction responses and ground truth responses can be observed. While the data are sampled at different frequencies and earthquakes vary widely in magnitudes, directions, and frequencies, the Neural EKF essentially learns dynamics of the building, thus generating highly consistent predictions.

4.3 Experimentally Tested Wind Turbine Blade

Among various application fields of Structural Health Monitoring (SHM), wind turbines are gaining increased attention due to their critical significance and competitiveness as a major source of renewable energy. To further demonstrate the value of the framework in SHM for assessing performance and assisting decision making, we validate use of the Neural EKF for vibration monitoring of operational wind turbines. The data used in this paper were obtained and illustrated in ou2021vibration by experimentally testing a small-scale wind turbine blade, as shown in Figure 8, in both healthy and damaged states under varying environmental temperature conditions. All the testing cases are listed in Table 13. Multiple accelerometers and strain gauges are implemented on different positions of the wind turbine. We only make use of accelerometer measurements as the system outputs and the external forces measured at the force transducer as the system input to conduct a vibration-based assessment in this case study. The sensor configuration is shown in Figure 9, where the red dots indicate the positions of accelerometers , with denoting the label of each sensor. The measured signals are low-pass filtered with a cut-off frequency of 380 Hz.

4.3.1 Structural response prediction

We train the model using the R(+25) dataset (the healthy state under 25∘C) and leverage the trained model to carry out response predictions given the corresponding forcing data for each case. Figure 10 shows an example of the prediction results for the case R(+25), plotted against the measured true responses. Note that the shown prediction results are based on an independent experiment different from the dataset used for training. Strong agreement between the predictions and true responses can be observed for all the eight channels, with an average root mean squared error (RMSE) of 1.08 . Also, the DMM is trained on the same training dataset and performs prediction on the same test dataset. The results are also plotted in gray in Figure 10, with an average RMSE of 3.82, and it is obvious that the conventional VAE type model fails to generate accurate predictions as Neural EKF.

4.3.2 Anomaly detection

| Case label | Description |

|---|---|

| R (+25) | Healthy state |

| R (+20) | Healthy state |

| R (-15) | Healthy state |

| R (+40) | Healthy state |

| A (+25) | Added mass |

| B (+25) | Added mass |

| C (+25) | Added mass |

| D (+25) | |

| E (+25) | |

| F (+25) | |

| G (+25) | |

| H (+25) | |

| I (+25) | |

| J (+25) | |

| K (+25) | |

| L (+25) |

As stated, the model we trained uses the data from the healthy state. It is expected that for cases that deviate from the healthy baseline, such as damage, added mass, and diversified temperature conditions, the measured responses should bear discrepancies to the response predicted from the model trained on healthy data. An example of predicting the response for the case L(+25) is shown in Figure 11, where apparent inconsistency in the response prediction is observed. We quantify the prediction errors of 8 channels for each case using RMSE, also presented in Figure 13 using a box plot.

For each case, the RMSE is 8-dimensional (as we have 8 channels of response measurement). We leverage principal component analysis (PCA) and K-means clustering, to reduce the dimension and cluster the RMSE values in terms of the first two principal components, as shown in Figure 14. Three distinct clusters are formed, and the data points inside each cluster suggest that the RMSEs have similar features:

-

•

the first cluster in green contains Case R (+25) (baseline model), Case R (+20), Case A and Case D to Case G, which are of minor discrepancy from the baseline model

-

•

the second cluster in yellow contains Case B, C, and Case H, I, all of which are of medium discrepancy from the baseline model;

-

•

the third cluster in purple contains Case R (-15), Case R (+40) and Case J, K, L, all of which are of the most discrepant situations.

Notably, the clustering of RMSEs is consistent with the different degrees of anomaly. This clustering result concludes that the RMSEs are reasonable and can be used as an appropriate indicator of the anomaly degree. Here we simply use clustering to visualize the implication of RMSEs, instead of making it more quantifiable. Advanced investigation can be employed to further perform damage quantification and localization in future work.

5 Conclusions

In this work, we utilize a Neural Extended Kalman Filter (Neural EKF) that blends the benefits from both VAE and EKF, for data-driven modeling and response prediction of complex dynamical systems. The structure of the Extended Kalman Filters is exploited for conducting inference under the variational inference approach, which guarantees a more accurate dynamics model compared to conventional variational autoencoders. We investigate the framework on different vibration-based datasets from real-world structural/mechanical systems. The results validate the capability of the proposed Neural EKF to adequately capture the underlying dynamics of complex systems and therefore to generate accurate prediction. This is essential for downward applications, such as structural health monitoring and predictive maintenance planning. The Neural EKF-learned model offers a potent surrogate for real-world operating systems and provides the potential to establish robust structural digital twins for complex monitored systems.

The authors declared no potential conflicts of interest with respect to the research, authorship, and publication of this article.

The research was conducted at the Future Resilient Systems at the Singapore-ETH Centre, which was established collaboratively between ETH Zurich and the National Research Foundation Singapore. This research is supported by the National Research Foundation Singapore (NRF) under its Campus for Research Excellence and Technological Enterprise (CREATE) programme. The project is also supported by the Stavros Niarchos Foundation through the ETH Zurich Foundation and the ETH Zurich Postdoctoral Fellowship scheme.

References

- (1) Tao F, Zhang H, Liu A et al. Digital twin in industry: State-of-the-art. IEEE Transactions on industrial informatics 2018; 15(4): 2405–2415.

- (2) Committee ADEI et al. Digital twin: Definition & value. AIAA and AIA Position Paper 2020; .

- (3) Kapteyn MG, Pretorius JV and Willcox KE. A probabilistic graphical model foundation for enabling predictive digital twins at scale. Nature Computational Science 2021; 1(5): 337–347.

- (4) Wagg D, Worden K, Barthorpe R et al. Digital twins: state-of-the-art and future directions for modeling and simulation in engineering dynamics applications. ASCE-ASME J Risk and Uncert in Engrg Sys Part B Mech Engrg 2020; 6(3).

- (5) Kerschen G, Worden K, Vakakis AF et al. Past, present and future of nonlinear system identification in structural dynamics. Mechanical systems and signal processing 2006; 20(3): 505–592.

- (6) Brunton SL and Kutz JN. Data-driven science and engineering: Machine learning, dynamical systems, and control. Cambridge University Press, 2022.

- (7) Farrar CR and Worden K. Structural health monitoring: a machine learning perspective. John Wiley & Sons, 2012.

- (8) Döhler M, Lam XB and Mevel L. Uncertainty quantification for modal parameters from stochastic subspace identification on multi-setup measurements. Mechanical Systems and Signal Processing 2013; 36(2): 562–581.

- (9) Bogoevska S, Spiridonakos M, Chatzi E et al. A data-driven diagnostic framework for wind turbine structures: A holistic approach. Sensors 2017; 17(4): 720.

- (10) Avendaño-Valencia LD, Abdallah I and Chatzi E. Virtual fatigue diagnostics of wake-affected wind turbine via gaussian process regression. Renewable Energy 2021; 170: 539–561.

- (11) He K, Zhang X, Ren S et al. Deep residual learning for image recognition. In Proceedings of the IEEE conference on computer vision and pattern recognition. pp. 770–778.

- (12) Svensen M, Powrie H and Hardwick D. Deep neural networks analysis of borescope images. In Proceedings of the European Conference of the PHM Society, volume 4.

- (13) Krizhevsky A, Sutskever I and Hinton GE. Imagenet classification with deep convolutional neural networks. Advances in neural information processing systems 2012; 25.

- (14) Hinton G, Deng L, Yu D et al. Deep neural networks for acoustic modeling in speech recognition: The shared views of four research groups. IEEE Signal processing magazine 2012; 29(6): 82–97.

- (15) Bojarski M, Del Testa D, Dworakowski D et al. End to end learning for self-driving cars. arXiv preprint arXiv:160407316 2016; .

- (16) Ljung L, Andersson C, Tiels K et al. Deep learning and system identification. IFAC-PapersOnLine 2020; 53(2): 1175–1181.

- (17) Dervilis N. A machine learning approach to structural health monitoring with a view towards wind turbines. PhD Thesis, University of Sheffield, 2013.

- (18) Chen RT, Rubanova Y, Bettencourt J et al. Neural ordinary differential equations. Advances in neural information processing systems 2018; 31.

- (19) Jiang X, Mahadevan S and Yuan Y. Fuzzy stochastic neural network model for structural system identification. Mechanical Systems and Signal Processing 2017; 82: 394–411.

- (20) Raissi M, Perdikaris P and Karniadakis GE. Physics-informed neural networks: A deep learning framework for solving forward and inverse problems involving nonlinear partial differential equations. Journal of Computational Physics 2019; 378: 686–707.

- (21) Zhang R, Chen Z, Chen S et al. Deep long short-term memory networks for nonlinear structural seismic response prediction. Computers & Structures 2019; 220: 55–68.

- (22) Zhang R, Liu Y and Sun H. Physics-guided convolutional neural network (phycnn) for data-driven seismic response modeling. Engineering Structures 2020; 215: 110704.

- (23) Xu S and Noh HY. Phymdan: Physics-informed knowledge transfer between buildings for seismic damage diagnosis through adversarial learning. Mechanical Systems and Signal Processing 2021; 151: 107374.

- (24) Liu W, Lai Z, Bacsa K et al. Physics-guided deep markov models for learning nonlinear dynamical systems with uncertainty. Mechanical Systems and Signal Processing 2022; 178: 109276.

- (25) Lai Z, Mylonas C, Nagarajaiah S et al. Structural identification with physics-informed neural ordinary differential equations. Journal of Sound and Vibration 2021; 508: 116196.

- (26) Fraccaro M, Kamronn S, Paquet U et al. A disentangled recognition and nonlinear dynamics model for unsupervised learning. arXiv preprint arXiv:171005741 2017; .

- (27) Karl M, Soelch M, Bayer J et al. Deep variational bayes filters: Unsupervised learning of state space models from raw data. arXiv preprint arXiv:160506432 2016; .

- (28) Gedon D, Wahlström N, Schön TB et al. Deep state space models for nonlinear system identification. arXiv preprint arXiv:200314162 2020; .

- (29) Girin L, Leglaive S, Bie X et al. Dynamical variational autoencoders: A comprehensive review. arXiv preprint arXiv:200812595 2020; .

- (30) Bacsa K, Lai Z, Liu W et al. Symplectic encoders for physics-constrained variational dynamics inference. Scientific Reports 2023; 13(1): 2643.

- (31) Kingma DP and Welling M. Auto-encoding variational bayes. arXiv preprint arXiv:13126114 2013; .

- (32) Krishnan R, Shalit U and Sontag D. Structured inference networks for nonlinear state space models. In Proceedings of the AAAI Conference on Artificial Intelligence, volume 31.

- (33) Li AH, Wu P and Kennedy M. Replay overshooting: Learning stochastic latent dynamics with the extended kalman filter. In 2021 IEEE International Conference on Robotics and Automation (ICRA). IEEE, pp. 852–858.

- (34) Chatzi EN and Smyth AW. The unscented kalman filter and particle filter methods for nonlinear structural system identification with non-collocated heterogeneous sensing. Structural Control and Health Monitoring: The Official Journal of the International Association for Structural Control and Monitoring and of the European Association for the Control of Structures 2009; 16(1): 99–123.

- (35) Azam SE, Chatzi E and Papadimitriou C. A dual kalman filter approach for state estimation via output-only acceleration measurements. Mechanical systems and signal processing 2015; 60: 866–886.

- (36) Chatzis MN, Chatzi EN and Triantafyllou SP. A discontinuous extended kalman filter for non-smooth dynamic problems. Mechanical systems and signal processing 2017; 92: 13–29.

- (37) Haarnoja T, Ajay A, Levine S et al. Backprop kf: Learning discriminative deterministic state estimators. Advances in neural information processing systems 2016; 29.

- (38) Kloss A, Martius G and Bohg J. How to train your differentiable filter. Autonomous Robots 2021; 45(4): 561–578.

- (39) Jonschkowski R, Rastogi D and Brock O. Differentiable particle filters: End-to-end learning with algorithmic priors. arXiv preprint arXiv:180511122 2018; .

- (40) Karkus P, Hsu D and Lee WS. Particle filter networks with application to visual localization. In Conference on robot learning. PMLR, pp. 169–178.

- (41) Owen MW and Stubberud AR. A neural extended kalman filter multiple model tracker. In Oceans 2003. Celebrating the Past… Teaming Toward the Future (IEEE Cat. No. 03CH37492), volume 4. IEEE, pp. 2111–2119.

- (42) Kalman RE. A New Approach to Linear Filtering and Prediction Problems. Journal of Basic Engineering 1960; 82(1): 35–45. 10.1115/1.3662552.

- (43) Rauch HE, Tung F and Striebel CT. Maximum likelihood estimates of linear dynamic systems. AIAA journal 1965; 3(8): 1445–1450.

- (44) Haddadi H, Shakal A, Stephens C et al. Center for engineering strong-motion data (cesmd). In 14th World Conference on Earthquake Engineering, Beijing, China.

- (45) Ou Y, Tatsis KE, Dertimanis VK et al. Vibration-based monitoring of a small-scale wind turbine blade under varying climate conditions. part i: An experimental benchmark. Structural Control and Health Monitoring 2021; 28(6): e2660.

6 Appendix

6.1 Latent Dynamics

Given that both the transition and observation models are constructed using neural networks, there exist non-unique combinations of these two models that can comparably produce reasonable system responses. It is possible to achieve a good output response with incorrect dynamics and an appropriate selection of parameters and , which is in fact the philosophy of Kalman filters. and represent the process and measurement noise covariance matrices, which are meant to account for modeling and measurement noise, but which nonetheless necessitate a fairly competent process and measurement model for successful operation. The difference in a Deep version of such a Bayesian filter (as the Neural EKF) is that the state-space equations (process and measurement equations), as well as the noise sources, are not prescribed, but learned. This is why the dynamics, as stated in the title, is actually learnable. This is a main difference with respect to standard Bayesian filters, where these parameters, including the noise covariances, need to be preset. As in conventional filtering, here as well, different combinations of transition and observation models with different and matrices exist that can accurately reconstruct the response; the algorithm is searching for a local optimum that represents one of these solutions. This implies that the transition model may not be aligned with the actual dynamics of the system, but rather a nonlinear transformation of it, as the observation model is not explicitly defined.

Figure 15 illustrates the rotated learned latent states by identifying an optimal linear relationship with the true system states. The rotated latent states exhibit a similar pattern to the true system states, albeit with presence of some stretching. This is because the relationship between learned and true states is not a simple linear relationship, but rather a nonlinear transformation. In contrast, the DMM-predicted latent states are plotted on the bottom and exhibit distinctly different patterns to the true system states, even after optimal rotation.

When inference of such true latent states is of interest, we suggest the use of a continuous model, such as Neural Ordinary Differential Equations (Neural ODEs18), which allows to directly obtain the derivative of the latent state variables. Neural ODEs parameterize the derivative of the latent state (vector field) using a neural network. The output of the network is computed using a black-box differential equation solver. Neural ODEs have proven to be particularly useful for continuous-time latent variable models and can be used to model continuous dynamics, thereby enabling the introduction of derivative relations in the latent space and structure our observation model. We do not illustrate more on this approach here, since this comprises an extension which is beyond the objective of this work, which aimed to illustrated the benefits of the EKF structure in learning dynamical systems. We note , where and represent the first and second half elements of the latent state, respectively. Similar to the formulations in Eq.(2) and Eq.(3), but now set in a continuous form with the Neural ODE modeling the transition function, the dynamics can be modeled as:

| (29) | ||||

| (30) | ||||

| (31) |

| Transition process | Observation process | |||||

|---|---|---|---|---|---|---|

| Noise variance | ||||||

| 0.001 | 0.000 | 0.7886e-26 | 4.9305e-34 | 6.7735e-25 | 3.3331e-9 | 6.8190e-8 |

| 0.01 | 9.4104e-35 | 2.8987e-14 | 5.4440e-15 | 1.1494e-27 | 2.4185e-5 | 2.7647e-5 |

| 0.1 | 6.0510e-31 | 6.3190e-09 | 2.6425e-11 | 1.6114e-19 | 0.0030 | 0.0029 |

The first set of equations serves for enforcing the second half of the latent state to assume the form of the derivatives of the first half, while the second equation models the dynamics. Again, this is only when it is of interest to specifically infer a physical latent space. Since the second set of equations is usually chosen to generate a system’s accelerations (as derived from a physics-based equation of motion), it is possible in this case to eliminate the requirement for an additional neural network, for example in Eq.(3), for modeling the observation process, and the observation function is merely in this case, as indicated in Eq.(31). However, in this case the purpose of the network is modified, since the idea here is to impose some sort of physics-based structure, as opposed to more freely learn the unknown underlying dynamics.

6.2 Discussion on Noises

As described in the theoretical section, the covariance matrices and are assumed diagonal, thus for the duffing oscillator example, these are represented by and , respectively. The estimated diagonal elements are listed in Table 2.

It is observed that 1) the estimated values of are close to zero and much smaller than the defined values of , as no process noise was in this example added to the simulated data. 2) Without structuring the observation model, both transition and observation models are parameterized by neural networks, jointly optimized to reconstruct system response. Thus, it is not surprising that the estimated and are not exactly matching the true value, but one can still observe that the estimated values of increase when larger observation variances are added to the simulated data, thereby accounting for increasing uncertainty in the measured data. It is important to underline that, unlike the conventional EKF, the proposed Neural EKF is a deep learning-based method, meant to learn (infer) the transition and emission models from data. The results in Figure 5 show that even with highly noisy data, Neural EKF is able to recover the true response with high confidence, thus the estimated is observed to be smaller. Using the estimated standard deviations, which are the square roots of the estimated variances, 99.7% confidence intervals for predicted responses can be calculated. These intervals should be highly precise around mean values, considering the extremely low values of estimated variances.