Neural Common Neighbor with Completion for Link Prediction

Abstract

In this work, we propose a novel link prediction model and further boost it by studying graph incompleteness. First, we introduce MPNN-then-SF, an innovative architecture leveraging structural feature (SF) to guide MPNN’s representation pooling, with its implementation, namely Neural Common Neighbor (NCN). NCN exhibits superior expressiveness and scalability compared with existing models, which can be classified into two categories: SF-then-MPNN, augmenting MPNN’s input with SF, and SF-and-MPNN, decoupling SF and MPNN. Second, we investigate the impact of graph incompleteness—the phenomenon that some links are unobserved in the input graph—on SF, like the common neighbor. Through dataset visualization, we observe that incompleteness reduces common neighbors and induces distribution shifts, significantly affecting model performance. To address this issue, we propose to use a link prediction model to complete the common neighbor structure. Combining this method with NCN, we propose Neural Common Neighbor with Completion (NCNC). NCN and NCNC outperform recent strong baselines by large margins, and NCNC further surpasses state-of-the-art models in standard link prediction benchmarks. Our code is available at https://github.com/GraphPKU/NeuralCommonNeighbor.

1 Introduction

Link prediction is a crucial task in graph machine learning, finding applications in various domains, such as recommender systems (Zhang & Chen, 2020), knowledge graph completion (Zhu et al., 2021), and drug interaction prediction (Souri et al., 2022). Graph Neural Networks (GNNs) have gained prominence in link prediction tasks, with Graph Autoencoder (GAE) (Kipf & Welling, 2016) being a notable representation. GAE utilizes Message Passing Neural Network (MPNN) (Gilmer et al., 2017) representations of two individual target nodes to predict link existence. However, Zhang et al. (2021) point out a limitation in GAE: it overlooks pairwise relations between target nodes. For example, in Figure 1, GAE always produces the same prediction for two links and despite their differing pairwise relationships, because MPNN generates identical representations for nodes due to graph symmetry. Nevertheless, the two links have different structural features. For example, and have a common neighbor , while and do not have any. Therefore, various methods combine structural feature (SF) and MPNN for better expressivity and have dominated the link prediction task (Zhang et al., 2021; Yun et al., 2021; Chamberlain et al., 2023).

However, models combining SF and MPNN still have much room for improvement. They can generally be concluded into two architectures: SF-then-MPNN and SF-and-MPNN, as shown in Figure 2. For example, SEAL (Zhang & Chen, 2018) adds target-link-specific hand-crafted features to the node features of the input graphs of MPNN, whose output node representations are pooled to represent links. SEAL belongs to the SF-then-MPNN architecture, using SF to augment the input graph of MPNN. Though SF-then-MPNN models achieve provably high expressivity (Zhang et al., 2021), they require running MPNN on a different graph for each target link, leading to significant computational overhead. In contrast, Neo-GNN (Yun et al., 2021) and BUDDY (Chamberlain et al., 2023) decouple the structural feature from MPNN, directly incorporating manually created pairwise features with individual node representations produced by MPNN as link representations, necessitating only a single run of MPNN on the original graph. These models fall under the SF-and-MPNN category, where structural features and MPNN are independent. Such methods have limited expressivity. For example, SEAL can capture the representations of common neighbor nodes, while BUDDY can only count the number of common neighbors.

To solve the drawback of two architectures above, we propose MPNN-then-SF architecture, which initially applies MPNN to the original graph and then uses structural features to guide the pooling of node representations. This approach offers strong expressivity and scalability: similar to SF-then-MPNN models, MPNN-then-SF can capture the node features of common neighbors, and similar to SF-and-MPNN models, it runs MPNN only once for all target links. We introduce the Neural Common Neighbor (NCN) as an instantiation of the MPNN-then-SF architecture. In experiments, NCN outperforms existing models in both scalability and performance.

Furthermore, since NCN heavily relies on common neighbor structure, which is significantly affected by graph incompleteness, we also investigate the impact of incompleteness. Graph incompleteness is ubiquitous in link prediction tasks because the goal is to predict unobserved edges not present in the input graph. We empirically observe that incompleteness reduces the number of common neighbor and leads to a shift in the distribution of common neighbor between the training and test sets. These phenomena collectively lead to performance degradation. To mitigate this issue, we first employ NCN to complete the common neighbor structure and then apply NCN to the completed graph. In experiments, this method significantly improves the performance of NCN.

In conclusion, our contributions are as follows:

-

•

We introduce Neural Common Neighbor (NCN) with MPNN-then-SF architecture for link prediction, demonstrating superior performance and scalability compared to existing models.

-

•

We analyze the impact of graph incompleteness and propose Neural Common Neighbor with Completion (NCNC), which completes the input common neighbor structure and applies NCN to the completed graph. NCNC outperforms state-of-the-art models.

2 Preliminary

We consider an undirected graph , where is a set of nodes, is the set of edges, is a node feature matrix whose -th row is the feature of node , and a symmetric adjacency matrix has element if and otherwise. The degree of node is . Node ’s neighbors are nodes connected to , . For simplicity, we use to denote when is fixed. Common neighbor means nodes connected to both and : .

High Order Neighbor.

We define as a high-order adjacency matrix, where represents the number of walks of length between nodes and . denotes the set of nodes connected to by a walk of length in graph . denotes the set of nodes whose shortest path distance to in graph is . Existing works define high-order neighbors as either or . More generally, the neighborhood of can be expressed as , returning all nodes with a shortest path distance of to in the high-order graph . We use to denote when is fixed. Given a target link , their general neighborhood overlap is given by , and their neighborhood difference is given by .

Message Passing Neural Network (MPNN).

Comprising message passing layers, MPNN (Gilmer et al., 2017) is a common GNN framework. The layer is as follows.

| (1) |

where is the representation of node at the layer, are functions like multi-layer perceptron (MLP), and AGG denotes an aggregation function like sum or max. The initial node representation is node feature . In each message passing layer, information is aggregated from neighbors to update the node representation. The final node representations produced by MPNN are the output of the last message passing layer, denoted as .

3 Related Work

Link Prediction Model

There are three primary categories of link prediction models. Node embeddings (Perozzi et al., 2014; Grover & Leskovec, 2016; Tang et al., 2015) map each node to an embedding vector and combine target nodes’ embeddings to predict link. Link prediction heuristics (Liben-Nowell & Kleinberg, 2003; Barabási & Albert, 1999; Zhou et al., 2009; Adamic & Adar, 2003) use hand-crafted structural features. GNNs utilize Graph Neural Networks. Among these GNNs, Graph Autoencoder (GAE) (Kipf & Welling, 2016) uses the inner product of two target nodes’ MPNN representations, , as link ’s representation. It uses MPNN only and fails to capture pairwise relations between nodes. In contrast, various GNNs combining MPNN and structural features (Zhang & Chen, 2018; Yun et al., 2021; Chamberlain et al., 2023) have achieved state-of-the-art performance. SEAL (Zhang & Chen, 2018) is representative. For a target link , it initially adds each node’s shortest path distance to to node feature and extracts a -hop subgraph, generating augmented node feature and adjacency . Then SEAL applies MPNN to the subgraph and pools node representations within it, , as the target link representation. Other models employ a distinct approach to incorporate structural features. Neo-GNN (Yun et al., 2021) and BUDDY (Chamberlain et al., 2023), for instance, directly apply MPNN to the original graph and concatenate structural features, such as the count of common neighbors, with the Hadamard product of target node MPNN representations, .

Structural Feature

Structural features for link prediction vary but are generally based on neighborhood overlap. Notable heuristics, such as Common Neighbor (CN), Resource Allocation (RA), and Adamic Adar (AA), use first-order common neighbors to compute scores for a target link :

| (2) |

| Model | Neighbor | ||||

|---|---|---|---|---|---|

| Heuristics | CN | FO | |||

| RA | FO | ||||

| AA | FO | ||||

| GNN | Neo-GNN | HO | MLP | ||

| BUDDY | HO |

Neo-GNN (Yun et al., 2021) and BUDDY (Chamberlain et al., 2023) extend these heuristics by utilizing higher-order neighbors. Neo-GNN computes features for high-order neighborhood overlap as follows,

| (3) |

where is a learnable function of node degree . BUDDY (Chamberlain et al., 2023) further utilize high-order neighborhood difference. It computes overlap features and difference features as follows:

| (4) |

All these pairwise features can be summarized into the following framework.

| (5) |

where and denote the general neighborhood of and , is a set operator like intersection or difference, and are node degree and high-order adjacency weight functions, respectively. Details on how this framework unify existing structure features are shown in Table 1.

Incompleteness of Graph

Link prediction task is to forecast unobserved edges, inherently making the input graph incomplete. Nevertheless, graph incompleteness can significantly impact structural features, such as common neighbors, and models based on them. This issue has drawn attention in some prior works. Yang et al. (2022) examined how unobserved links could distort evaluation scores, with a specific focus on metrics and benchmark design, while our research concentrates on model design. Dong et al. (2022) explored the consequences of the presence of target links, whereas our emphasis lies in understanding how incompleteness affects common neighbor-based features. Outside the domain of link prediction, Zhao et al. (2023) and Zhao et al. (2021) add edges predicted by GAE to the input graph of GNNs. However, their primary objective was node classification tasks, aiming to enhance edges between nodes of the same class while diminishing others. In contrast, our research addresses distribution shifts and information loss stemming from graph incompleteness, offering unique completion methods and insights tailored for link prediction.

4 Neural Common Neighbor

Structural features (SF), such as common neighbors, are commonly employed in link prediction models. Existing approaches combine SF with Message Passing Neural Networks (MPNN) in two manners (illustrated in Figure 2): SF-then-MPNN and SF-and-MPNN. However, these approaches exhibit limitations in terms of either scalability or expressivity. To address these issues comprehensively, we introduce a novel architecture, MPNN-then-SF, which offers a unique blend of high expressivity and scalability. Subsequently, we present a concrete instantiation of this architecture, Neural Common Neighbor (NCN). All proofs for theorems in this section are in Appendix A.

4.1 New Architecture Combining MPNN and SF

In Figure 2, we categorize existing methods into two architectures:

-

•

SF-then-MPNN: This category includes SEAL (Zhang & Chen, 2018) and NBFNet (Zhu et al., 2021). In this approach, the input graph is initially enriched with structural features and then fed into the MPNN, which allows MPNN to leverage SF and have provable expressivity (Zhang & Chen, 2018). However, the drawback is that structural features change with target link, necessitating MPNN to be re-run for each link, resulting in lower scalability.

-

•

SF-and-MPNN: This category encompasses models like NeoGNN (Yun et al., 2021) and BUDDY (Chamberlain et al., 2023). Here, MPNN takes the original graph as input and runs only once for all target links, leading to high scalability. However, SF are directly concatenated to the final representations and thus detached from MPNN, leading to reduced expressivity.

From these two architectural paradigms, it becomes apparent that feeding the original graph to MPNN is essential for achieving high scalability. Moreover, the coupling between SF and MPNN remains a crucial factor for expressivity. Thus, we introduce a new architecture: MPNN-then-SF. This approach initially runs MPNN on the original graph and then employs structural features to guide the pooling of MPNN features, requiring only one MPNN run and enhancing expressivity. The specific representation of the target link is as follows:

| (6) |

where Pool is a pooling function mapping a multiset of node representations to a single set representation, and is a node set related to the target link. Multiple node sets can be used in conjunction to produce concatenated representations. This flexible framework can express various models. When using target nodes and as and Hadamard product as Pool, it can express GAE:

| (7) |

Alternatively, we can choose as combinations of high-order neighbor sets of and , leading to the following form (see Appendix A.1 for the detailed derivation.):

| (8) |

where is a function transforming the edge weight in high-order adjacency matrix. This framework exhibits stronger expressivity than existing SF-and-MPNN models.

Theorem 1.

A key factor contributing to its higher expressivity is the coupling of MPNN and SF. While SF-and-MPNN typically only counts the number of common neighbors, MPNN-then-SF, similar to SF-then-MPNN, can capture node properties of these common neighbors. As shown in Figure 3, node tuples and have the same number of common neighbors. However, their common neighbors have different node features, allowing MPNN-then-SF and SF-then-MPNN to distinguish them, a capability that SF-and-MPNN lacks.

4.2 Neural Common Neighbor

We will now present an implementation for the MPNN-then-SF framework. Notably, the previous models NeoGNN (Yun et al., 2021) and BUDDY (Chamberlain et al., 2023) all incorporate higher-order neighbors into their architectures, resulting in significant performance improvements. Surprisingly, in our experiments, we observed that the gains achieved by explicitly considering higher-order neighbors were marginal once we introduced MPNN into the framework (as discussed in Section 6.3). We speculate that this marginal improvement arises because MPNN implicitly learns information related to higher-order neighbors. Therefore, considering scalability, we opt to utilize only the target nodes and their first-order common neighbors as the node set, leading to the development of our NCN model:

| (9) |

where and are constants and ignored, and denotes concatenation. It has high expressivity.

Theorem 2.

NCN is strictly more expressive than GAE, CN, RA, AA. Moreover, Neo-GNN and BUDDY are not more expressive than NCN.

To elaborate, in certain scenarios where the properties of common neighbors hold significant importance, NCN outperforms both BUDDY and Neo-GNN in expressiveness.

As our first major contribution, NCN represents a straightforward yet potent model for combining structural features and MPNNs. It operates as an implicit high-order model by aggregating first-order common neighbors, each of which implicitly learns higher-order information through MPNN. A comprehensive analysis of time complexity is in Appendix E.

5 Neural Common Neighbor with Completion

While NCN outperforms existing models, it relies heavily on the common neighbor structure, which the incompleteness of the graph can notably influence. For instance, in cases where node pairs lack common neighbors, NCN essentially degenerates to GAE, rendering it unable to leverage structural features. Although the absence of common neighbors can suggest that a link is unlikely to exist, certain node pairs may possess common neighbors in the ground truth that remain unobserved in the input graph due to graph incompleteness. Graph incompleteness is ubiquitous in link prediction tasks, given that the objective is to predict unobserved edges. However, few studies have delved into this issue. In this section, we initially demonstrate that incompleteness can result in the loss of common neighbor information, distribution shifts between the training and test sets, and the consequent deterioration of model performance. We propose a straightforward yet effective method to tackle these challenges: common neighbor completion (CNC). CNC completes unobserved common neighbors using a link prediction model. With the introduction of CNC, we enhance NCN and introduce Neural Common Neighbor with Completion (NCNC).

5.1 Incompleteness Visualization

To illustrate the challenges posed by incompleteness, we analyze two common datasets: ogbl-collab (Hu et al., 2020) and Cora (Yang et al., 2016). We refer to the graph containing only the edges from the training set as the incomplete graph, while the one encompassing edges from the training, validation, and test sets is termed the complete graph.

Given the pivotal role of common neighbor information in our NCN model and other link prediction models, we visualize the distribution of the number of common neighbors for training/test edges in both complete and incomplete graphs separately in Figure 4 (a)(c). To assess how incompleteness impacts model performance, we present the performance of CN model (as shown in Section 3) in four distinct scenarios in Figure 4 (b) (d). We observe the following effects of incompleteness:

Loss of Common Neighbors.

Figure 4(c) illustrates that in the incomplete graph, there are fewer common neighbors for both training and test sets, as indicated by the comparison between the blue (green) and red (orange) lines. When comparing the incomplete and complete graphs, it becomes evident that the incomplete graph suffers from a loss of common neighbor information due to incompleteness. Additionally, more links have no common neighbors at all.

Common Neighbor Distribution Shift.

A noticeable distribution shift between the training and test sets is evident in the incomplete graph of the ogbl-collab dataset, as seen in the comparison between the blue and green lines in Figure 4(a). This shift disappears when the graph is complete (the red and orange lines), indicating that incompleteness is the cause. Such a substantial distribution shift between training and test links could pose challenges in model generalization. This distribution shift is related to the dataset split method. Ogbl-collab is splitted based on the timestamp of edges, and the test edges all belong to the same year. Consequently, compared to the training edges, test edges exhibit stronger correlations with other test edges, resulting in a greater loss of common neighbor when these test edges are absent from the incomplete graph. Conversely, the Cora dataset is randomly splitted, so training and test edges lose a similar ratio of common neighbors and does not exhibit distribution shifts (Figure 4(c)).

Performance Degradation.

The performance of CN aligns with the common neighbor distribution. In the ogbl-collab dataset, the common neighbor distribution is nearly identical for the training set in both the complete and incomplete graphs, as is the performance (See Figure 4 (b)). However, test performance on the incomplete graph decreases significantly as the test distribution changes. Similar trends are observed in the Cora dataset, with test and training scores declining on incomplete graphs when the common neighbor distribution changes compared to the complete graph.

Remark.

Note that while common neighbor distribution changes may not fully account for the differences between complete and incomplete graphs, they offer valuable insights into how incompleteness alters the input graph structure for other learnable models. Despite CN is non-learnable and non-generalizable, its calculation for a target edge doesn’t involve the edge itself, thereby avoiding data leakage concerns. These findings suggest that having a more complete input graph could yield superior link prediction models. However, in practice, we can only work with the incomplete input graph, necessitating exploring other mitigation methods for these issues.

5.2 Common Neighbor Completion

Motivated by the analysis above, we address graph incompleteness issues with a two-step method:

Soft Completion of Common Neighbors.

We start by softly completing the input graph with a link prediction model, such as NCN. However, instead of completing all edges in the entire graph, which can be impractical for large graphs, we focus specifically on common neighbor links. We compute the probability that a node serves as a common neighbor for a node tuple as follows:

| (10) |

where represents the predicted existence probability of link by the model. The idea is that is a common neighbor of iff both edges and exist. If one of these edges is unobserved, we use NCN to predict its link existence probability, which we also use as the probability of being a common neighbor. In the rare case where both and are unobserved, we set the probability to . This technique is called ”Common Neighbor Completion” (CNC).

Reapplication of NCN on the Completed Graph.

Following CNC, we apply the NCN model again on the graph that has been completed using the soft common neighbor weights . This final model is named Neural Common Neighbor with Completion (NCNC) and is defined as:

| (11) |

Notably, the input graph of MPNN still takes the original graph as input, allowing it to run only once for all target links, thus maintaining high scalability. While can be predicted using any link prediction model, weak models may not accurately recover the unobserved common neighbor structure. Therefore, in practice, we employ NCN to complete it.

In addition to addressing distribution shifts and common neighbor loss, NCNC also solves the problem that NCN can degrade to GAE when node pairs lack common neighbors. With NCNC, common neighbors are always completed, and the model only degenerates to GAE when both target nodes are isolated nodes. In such cases, where no structural features can be utilized, relying solely on the target node representations is reasonable. For a visual demonstration of CNC’s effect, please refer to Appendix H, which illustrates how NCN can make more precise predictions by completing common neighbors for node pairs with no observed common neighbors.

6 Experiment

| Cora | Citeseer | Pubmed | Collab | PPA | Citation2 | DDI | |

| Metric | HR@100 | HR@100 | HR@100 | HR@50 | HR@100 | MRR | HR@20 |

| CN | |||||||

| AA | |||||||

| RA | |||||||

| GCN | |||||||

| SAGE | |||||||

| SEAL | |||||||

| NBFnet | OOM | OOM | OOM | ||||

| Neo-GNN | |||||||

| BUDDY | |||||||

| NCN | |||||||

| NCNC |

| Cora | Citeseer | Pubmed | Collab | PPA | Citation2 | DDI | |

|---|---|---|---|---|---|---|---|

| Metric | HR@100 | HR@100 | HR@100 | HR@50 | HR@100 | MRR | HR@20 |

| CN | |||||||

| GAE | |||||||

| GAE+CN | |||||||

| NCN2 | OOM | OOM | OOM | ||||

| NCN-diff | |||||||

| NCN | |||||||

| NCNC |

In this section, we extensively evaluate the performance of both NCN and NCNC. Detailed experimental settings are included in Appendix D.

We use seven popular real-world link prediction benchmarks. Among these, three are Planetoid citation networks: Cora, Citeseer, and Pubmed (Yang et al., 2016). Others are from Open Graph Benchmark (Hu et al., 2020): ogbl-collab, ogbl-ppa, ogbl-citation2, and ogbl-ddi. Their statistics and splits are shown in Appendix B.

6.1 Evaluation on Real-World Datasets

In our evaluation on real-world datasets, we employ a range of baseline methods, encompassing traditional heuristics like CN (Barabási & Albert, 1999), RA (Zhou et al., 2009), and AA (Adamic & Adar, 2003), as well as GAE models, such as GCN (Kipf & Welling, 2017) and SAGE (Hamilton et al., 2017). Additionally, we consider SF-then-MPNN models, including SEAL (Zhang & Chen, 2018) and NBFNet (Zhu et al., 2021), as well as SF-and-MPNN models like Neo-GNN (Yun et al., 2021) and BUDDY (Chamberlain et al., 2023). The baseline results are sourced from (Chamberlain et al., 2023). Our models consist of NCN and NCNC. Their architectures are detailed in Appendix C.

The experimental results are presented in Table 2. NCN surpasses all baselines on 5/7 datasets and exhibits an average score improvement of 5% compared to BUDDY, the most competitive baseline. Even on the remaining two datasets, NCN outperforms all baselines except BUDDY. These impressive results underscore the outstanding expressivity of our MPNN-then-SF architecture. Furthermore, NCNC enhances performance by an additional 2%, emerging as the top-performing method on all datasets. Notably, on ogbl-ppa, NCNC achieves an HR@100 score of 61.42%, surpassing the strongest baseline BUDDY by a substantial margin of over 10%. It’s worth mentioning that our models outperform node embedding techniques (Perozzi et al., 2014; Grover & Leskovec, 2016; Tang et al., 2015) and other GNNs lacking pairwise features (Wang et al., 2021; 2022) significantly (see Appendix G).

6.2 Scalability

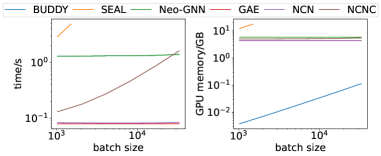

We compare the inference time and GPU memory on ogbl-collab in Figure 5. NCN and NCNC have a similar computation overhead to GAE, as they both need to run MPNN only once.

In contrast, SEAL, which reruns MPNN for each target link, takes times more time compared with NCN with a small batch size , and the disadvantage will be more significant with a larger batch size. Surprisingly, BUDDY and Neo-GNN are slower than NCN. The reason is that it uses pairwise features depending on high order neighbors that are much more time-consuming than common neighbor. NCN and NCNC also achieve low GPU memory consumption. We also conduct scalability comparisons on other datasets and observe the same results (see Appendix F).

6.3 Ablation Analysis

To assess the effectiveness of the NCNC design, we conducted a comprehensive ablation analysis, as presented in Table 3.

Starting with GAE, which relies solely on node representations, we introduced GAE+CN, which incorporates Common Neighbor (CN) as pairwise features. Remarkably, GAE+CN outperforms GAE by 70% on Open Graph Benchmark (OGB) datasets, illustrating the importance of structural features. Furthermore, NCN exhibits a 5.5% score increase over GAE+CN, highlighting the advantages of the MPNN-then-SF architecture over the MPNN-and-SF architecture.

We also explore variants of NCN, namely NCN-diff and NCN2. In NCN-diff, we include neighborhood difference information by summing the representations of nodes in and , while NCN2 incorporates high-order neighborhood overlap using and . Notably, NCN, NCN-diff, and NCN2 exhibit similar performances across most datasets, suggesting that first-order neighborhood overlap might be sufficient. However, NCN-diff achieves a lower score on the DDI dataset, possibly because the high node degree in DDI introduces noisy and uninformative neighborhood difference information.

7 Conclusion

In this work, we introduce Neural Common Neighbor (NCN), a scalable and robust model for link prediction that harnesses the power of learnable pairwise features. Additionally, we address the challenge of graph incompleteness by identifying and visualizing common neighbor loss and distribution shifts stemming from this issue. To mitigate these problems, we introduce the Common Neighbor Completion (CNC) technique. Combining CNC with NCN, our final model, Neural Common Neighbor with Completion (NCNC), outperforms state-of-the-art baselines across various datasets in terms of both speed and prediction performance.

8 Limitations

Though we propose MPNN-then-SF framework, we do not exhaust the design space and only propose one implementation, NCN, and its variants NCN2 and NCN-diff in ablation study. Moreover, while we only analyze the impact of incompleteness on common neighbor structures, graph incompleteness can also affect other structural features. Additionally, the proposed completion method has the potential to be generalized to address other structural features. Our future research will explore the design space of MPNN-then-SF and the broader implications of incompleteness on various structural features.

9 Reproducibility Statement

Our code is available at https://github.com/GraphPKU/NeuralCommonNeighbor. Proofs of all theorems in the maintext are in Appendix A.

Acknowledgement

This work is partially supported by the National Key R&D Program of China (2022ZD0160303), the National Natural Science Foundation of China (62276003), and Alibaba Innovative Research Program.

References

- Adamic & Adar (2003) Lada A Adamic and Eytan Adar. Friends and neighbors on the web. Social networks, 25(3):211–230, 2003.

- Akiba et al. (2019) Takuya Akiba, Shotaro Sano, Toshihiko Yanase, Takeru Ohta, and Masanori Koyama. Optuna: A next-generation hyperparameter optimization framework. In KDD, pp. 2623–2631, 2019.

- Barabási & Albert (1999) Albert-László Barabási and Réka Albert. Emergence of scaling in random networks. science, 286(5439):509–512, 1999.

- Chamberlain et al. (2023) Benjamin Paul Chamberlain, Sergey Shirobokov, Emanuele Rossi, Fabrizio Frasca, Thomas Markovich, Nils Hammerla, Michael M. Bronstein, and Max Hansmire. Graph neural networks for link prediction with subgraph sketching. ICLR, 2023.

- Dong et al. (2022) Kaiwen Dong, Yijun Tian, Zhichun Guo, Yang Yang, and Nitesh V. Chawla. Fakeedge: Alleviate dataset shift in link prediction. In LoG, volume 198, pp. 56. PMLR, 2022.

- Fey & Lenssen (2019) Matthias Fey and Jan Eric Lenssen. Fast graph representation learning with pytorch geometric. arXiv preprint arXiv:1903.02428, 2019.

- Gilmer et al. (2017) Justin Gilmer, Samuel S Schoenholz, Patrick F Riley, Oriol Vinyals, and George E Dahl. Neural message passing for quantum chemistry. In ICML, pp. 1263–1272, 2017.

- Grover & Leskovec (2016) Aditya Grover and Jure Leskovec. node2vec: Scalable feature learning for networks. In KDD, pp. 855–864. ACM, 2016.

- Hamilton et al. (2017) William L Hamilton, Rex Ying, and Jure Leskovec. Inductive representation learning on large graphs. NeurIPS, pp. 1025–1035, 2017.

- Hu et al. (2020) Weihua Hu, Matthias Fey, Marinka Zitnik, Yuxiao Dong, Hongyu Ren, Bowen Liu, Michele Catasta, and Jure Leskovec. Open graph benchmark: Datasets for machine learning on graphs. In NeurIPS, 2020.

- Kipf & Welling (2016) Thomas N. Kipf and Max Welling. Variational graph auto-encoders. CoRR, abs/1611.07308, 2016.

- Kipf & Welling (2017) Thomas N. Kipf and Max Welling. Semi-supervised classification with graph convolutional networks. In ICLR, 2017.

- Liben-Nowell & Kleinberg (2003) David Liben-Nowell and Jon Kleinberg. The link prediction problem for social networks. In International conference on Information and knowledge management, pp. 556–559, 2003.

- Paszke et al. (2019) Adam Paszke, Sam Gross, Francisco Massa, Adam Lerer, James Bradbury, Gregory Chanan, Trevor Killeen, Zeming Lin, Natalia Gimelshein, Luca Antiga, et al. Pytorch: An imperative style, high-performance deep learning library. NeurIPS, 32, 2019.

- Perozzi et al. (2014) Bryan Perozzi, Rami Al-Rfou, and Steven Skiena. Deepwalk: online learning of social representations. In KDD, pp. 701–710. ACM, 2014.

- Souri et al. (2022) E. Amiri Souri, Roman Laddach, S. N. Karagiannis, Lazaros G. Papageorgiou, and Sophia Tsoka. Novel drug-target interactions via link prediction and network embedding. BMC Bioinform., 23(1):121, 2022.

- Tang et al. (2015) Jian Tang, Meng Qu, Mingzhe Wang, Ming Zhang, Jun Yan, and Qiaozhu Mei. LINE: large-scale information network embedding. In Aldo Gangemi, Stefano Leonardi, and Alessandro Panconesi (eds.), WWW, pp. 1067–1077, 2015.

- Velickovic et al. (2018) Petar Velickovic, Guillem Cucurull, Arantxa Casanova, Adriana Romero, Pietro Liò, and Yoshua Bengio. Graph attention networks. In ICLR, 2018.

- Wang et al. (2021) Zhitao Wang, Yong Zhou, Litao Hong, Yuanhang Zou, Hanjing Su, and Shouzhi Chen. Pairwise learning for neural link prediction. CoRR, abs/2112.02936, 2021.

- Wang et al. (2022) Zixiao Wang, Yuluo Guo, Jin Zhao, Yu Zhang, Hui Yu, Xiaofei Liao, Hai Jin, Biao Wang, and Ting Yu. GIDN: A lightweight graph inception diffusion network for high-efficient link prediction. CoRR, abs/2210.01301, 2022.

- Xu et al. (2019) Keyulu Xu, Weihua Hu, Jure Leskovec, and Stefanie Jegelka. How powerful are graph neural networks? In ICLR, 2019.

- Yang et al. (2022) Haotong Yang, Zhouchen Lin, and Muhan Zhang. Rethinking knowledge graph evaluation under the open-world assumption. pp. 13683–13694, 2022.

- Yang et al. (2016) Zhilin Yang, William W. Cohen, and Ruslan Salakhutdinov. Revisiting semi-supervised learning with graph embeddings. In Proceedings of the 33nd International Conference on Machine Learning, volume 48, pp. 40–48, 2016.

- Yun et al. (2021) Seongjun Yun, Seoyoon Kim, Junhyun Lee, Jaewoo Kang, and Hyunwoo J. Kim. Neo-gnns: Neighborhood overlap-aware graph neural networks for link prediction. In NeurIPS, pp. 13683–13694, 2021.

- Zhang & Chen (2018) Muhan Zhang and Yixin Chen. Link prediction based on graph neural networks. NeurIPS, 31:5165–5175, 2018.

- Zhang & Chen (2020) Muhan Zhang and Yixin Chen. Inductive matrix completion based on graph neural networks. In ICLR, 2020.

- Zhang et al. (2021) Muhan Zhang, Pan Li, Yinglong Xia, Kai Wang, and Long Jin. Labeling trick: A theory of using graph neural networks for multi-node representation learning. NeurIPS, 34:9061–9073, 2021.

- Zhao et al. (2023) Jianan Zhao, Qianlong Wen, Mingxuan Ju, Chuxu Zhang, and Yanfang Ye. Self-supervised graph structure refinement for graph neural networks. In WSDM, pp. 159–167, 2023.

- Zhao et al. (2021) Tong Zhao, Yozen Liu, Leonardo Neves, Oliver J. Woodford, Meng Jiang, and Neil Shah. Data augmentation for graph neural networks. In AAAI, pp. 11015–11023, 2021.

- Zhou et al. (2009) Tao Zhou, Linyuan Lü, and Yi-Cheng Zhang. Predicting missing links via local information. The European Physical Journal B, 71(4):623–630, 2009.

- Zhu et al. (2021) Zhaocheng Zhu, Zuobai Zhang, Louis-Pascal A. C. Xhonneux, and Jian Tang. Neural bellman-ford networks: A general graph neural network framework for link prediction. In NeurIPS, pp. 29476–29490, 2021.

Appendix A Proof

A.1 Derivation of Equation 8

The MPNN-then-SF architecture is as follows,

| (12) |

Let be the following set,

| (13) |

Then, for , we set the pooling function to sum and multiplied with , where is a function with high-order adjacency edge weight as input. Then the MPNN-then-SF architecture can express,

| (14) |

Simply sums the feature of all leads to,

| (15) |

A.2 Proof of Theorem 1 and 2

Here, we present the theoretical proof of MPNN-then-SF’s higher expressivity. We say algorithm A is strictly more expressive than algorithm B when A can differentiate all pairs of links that B can differentiate, while there exists a pair of links that A can distinguish while B cannot. We first prove the more expressive results by simulating other models with SF-then-MPNN and NCN then prove the strictness by constructing an example.

Lemma 1.

Equation 7 and NCN are more expressive than Graph Autoencoder (GAE)

Proof.

Lemma 2.

Proof.

Furthermore, we construct an example in Figure 3. In that graph, and are symmetric and thus have the same MPNN representation, so GAE cannot distinguish and . Moreover, and are symmetric if the node feature is ignored, so CN, RA, AA, Neo-GNN, BUDDY cannot distinguish them. However, have a common neighbor with feature , and have a common neighbor with feature , so NCN can distinguish them.

Appendix B Dataset Statistics

The statistics of each dataset are shown in Table 4.

| Cora | Citeseer | Pubmed | Collab | PPA | DDI | Citation2 | |

|---|---|---|---|---|---|---|---|

| #Nodes | 2,708 | 3,327 | 18,717 | 235,868 | 576,289 | 4,267 | 2,927,963 |

| #Edges | 5,278 | 4,676 | 44,327 | 1,285,465 | 30,326,273 | 1,334,889 | 30,561,187 |

| splits | random | random | random | fixed | fixed | fixed | fixed |

| average degree | 3.9 | 2.74 | 4.5 | 5.45 | 52.62 | 312.84 | 10.44 |

Random splits use edges for training/validation/test set respectively. Different from others, the collab dataset allows using validation edges as input on test set.

Appendix C Model Architecture

Given an input graph , a node feature matrix , and target links , our models consist of three steps: target link removal, MPNN, and predictor. NCN and NCNC only differ in the last step. The model architecture is visualized in Figure 6

Target link removal.

We make no changes to the input graph in the validation and test set where the target links are unobserved. In the training set, we remove target links from . Let denote the processed graph. This method is detailed in Section 5.

MPNN.

We use MPNN to produce node representations . For each node ,

| (16) |

For all target links, MPNN needs to run only once.

Predictor.

Predictors use the node representations and graph structure to produce link prediction. Link representations of NCN are as follows,

| (17) |

where means concatenation, is the representation of link . composed of two components: two nodes’ presentation and representations of nodes within the common neighbor set. The former component is often used in link prediction models (Kipf & Welling, 2016; Yun et al., 2021; Chamberlain et al., 2023), while we propose the latter one for the first time. Link representations are then used to produce link existence probability.

| (18) |

where is the probability that link exists.

NCNC has a similar form. The only difference is that in Equation (17) is replaced with the follow form:

| (19) |

where is the link existence probability produced by NCNC.

Appendix D Experimental Settings

Computing infrastructure. We leverage Pytorch Geometric (Fey & Lenssen, 2019) and Pytorch (Paszke et al., 2019) for model development. All experiments are conducted on an Nvidia 4090 GPU on a Linux server.

Baselines. We directly use the results reported in (Chamberlain et al., 2023).

Model hyperparameter. We use optuna (Akiba et al., 2019) to perform random searches. Hyperparameters were selected to maximize validation score. The best hyperparameters selected for each model can be found in our code.

Training process. We utilize Adam optimizer to optimize models and set an epoch upper bound . All results of our models are provided from runs with 10 random seeds.

Computation cost The total time of each main experiment is shown in Table 5. Reproducing all main results takes 280 GPU hours.

| Cora | Citeseer | Pubmed | Collab | PPA | Citation2 | DDI | |

|---|---|---|---|---|---|---|---|

| NCN | |||||||

| NCNC |

Appendix E Time and Space Complexity

| Architecture | Method | B | C | D | E |

|---|---|---|---|---|---|

| MPNN only | GAE | ||||

| MPNN-and-SF | Neo-GNN | ||||

| BUDDY | |||||

| SF-then-MPNN | SEAL | ||||

| MPNN-then-SF | NCN | ||||

| NCNC |

Let denote the number of target links, denote the number of nodes in the graph, and denote the maximum node degree. Existing models’ time and space complexity can be expressed in and respectively, where are irrelevant to . of models are summarized in Table 6. The derivation of the complexity is as follows. As NCN, GAE, and GNN with separated structural features run MPNN on the original graph, they share similar in . Specifically, BUDDY (Chamberlain et al., 2023) uses a simplified MPNN with in . Moreover, Neo-GNN needs to precompute high order graph , which takes time and space. BUDDY needs to hash each node and takes time and space. In contrast, of SEAL is as it does not run MPNN on the original graph. For each target link, vanilla GNN only needs to feed the feature vector to MLP for each link, so . Besides GAE’s operation, BUDDY further needs to hash the structure for structural features, whose complexity is complex but higher than per edge, and Neo-GNN computes pairwise feature with complexity, where is the number of hop Neo-GNN consider. NCN needs to compute common neighbor: , pool node embeddings: , and feed to MLP: . NCNC- runs NCN for each potential common neighbor: . Similarly, NCNC- runs times NCNC-, so its time complexity is . For each target link, SEAL segregates a subgraph of size and runs MPNN on it, so , where is the number of hops of the subgraph.

Appendix F Scalability Comparison on datasets

The time and memory consumption of models on different datasets are shown in Figure 7. On these datasets, we observe results similar to those on the ogbl-collab dataset in Section 6.2: NCN achieves similar computation overhead to GAE; NCNC usually scales better than Neo-GNN; SEAL’s scalabilty is the worst. However, on the ogbl-citation2 dataset, SEAL has the lowest GPU memory consumption with small batch sizes, because the whole graph in ogbl-citation2 is large, on which MPNN is expensive, while SEAL only runs MPNN on small subgraphs sampled from the whole graph, leading to lower overhead.

| Collab | PPA | Citation2 | DDI | |

| Metric | Hits@50 | Hits@100 | MRR | Hits@20 |

| NCN | ||||

| NCNC | ||||

| Node2Vec | ||||

| DeepWalk | ||||

| LINE | ||||

| PLNLP | ||||

| GIDN | - | - | - | |

| NCN+tricks | - | - |

Appendix G Comparison with other link prediction models

Node embedding methods

The main advantage of GNN methods is that they keep permutation equivariance. In other words, these methods can give isomorphic links (links with the same structure) the same prediction. In contrast, node embedding methods, such as Node2Vec (Grover & Leskovec, 2016), LINE (Tang et al., 2015), and DeepWalk (Perozzi et al., 2014), will produce different results for isomorphic links, leading to potentially bad generalization.

We also compare our method with representative node embedding methods on ogb datasets in Table 7. NCN and NCNC outperform node embedding methods significantly on all datasets, indicating the advantages of MPNNs considering pairwise features for link prediction.

Other GNNs

Instead of representations of pairwise relations, PLNLP (Wang et al., 2021) and GIDN (Wang et al., 2022) boost GNNs on link prediction tasks by training tricks like loss function and data augmentation. These tricks are orthogonal to our model design. In experiments (Table 7), compared with PLNLP, NCN achieves performance gain on ogbl-ppa and gain on average. As GIDN only conducts experiment on one dataset ogbl-collab, the comparison is not complete. Moreover, tricks of PLNLP can also boost our models.

Appendix H CNC Example

Figure 8 provides an example from Cora dataset on how CNC works.

Appendix I Ablation of MPNN

Here we provide an ablation study on the MPNN used in NCN. The results are shown in Table 8. The MPNN model includes GIN (Xu et al., 2019), GraphSage (Hamilton et al., 2017), MPNN with max aggregation, GCN (Kipf & Welling, 2017), and GAT (Velickovic et al., 2018). Though the performance of NCN is sensitive to the MPNN model, NCN achieves performance gain with all GNNs compared with GraphAutoencoder (GAE).

| Dataset | Model | GIN | GraphSage | max | GCN | GAT |

|---|---|---|---|---|---|---|

| Cora | GAE | |||||

| NCN | ||||||

| Citeseer | GAE | |||||

| NCN | ||||||

| Pubmed | GAE | |||||

| NCN | ||||||

| collab | GAE | OOM | ||||

| NCN | OOM | |||||

| ppa | GAE | OOM | ||||

| NCN | OOM |

Appendix J Choice of Metrics

We test our model in different metrics. The results are shown in Table 9. In total, NCN achieves 11 best score (in bold), NCNC achieves 22 best score, and our strongest baseline achieves 9 best score. Therefore, our NCN and NCNC still outperforms baselines in different metrics.

| Cora | Citeseer | Pubmed | Collab | PPA | Citation2 | DDI | ||

|---|---|---|---|---|---|---|---|---|

| hit@1 | NCN | |||||||

| NCNC | ||||||||

| BUDDY | ||||||||

| hit@3 | NCN | |||||||

| NCNC | ||||||||

| BUDDY | ||||||||

| hit@10 | NCN | |||||||

| NCNC | ||||||||

| BUDDY | ||||||||

| hit@20 | NCN | |||||||

| NCNC | ||||||||

| BUDDY | ||||||||

| hit@50 | NCN | |||||||

| NCNC | ||||||||

| BUDDY | ||||||||

| hit@100 | NCN | |||||||

| NCNC | ||||||||

| BUDDY | ||||||||

| mrr | NCN | |||||||

| NCNC | ||||||||

| BUDDY |