Neural Augmentation Based Panoramic High Dynamic Range Stitching

Abstract

Due to saturated regions of inputting low dynamic range (LDR) images and large intensity changes among the LDR images caused by different exposures, it is challenging to produce an information enriched panoramic LDR image without visual artifacts for a high dynamic range (HDR) scene through stitching multiple geometrically synchronized LDR images with different exposures and pairwise overlapping fields of views (OFOVs). Fortunately, the stitching of such images is innately a perfect scenario for the fusion of a physics-driven approach and a data-driven approach due to their OFOVs. Based on this new insight, a novel neural augmentation based panoramic HDR stitching algorithm is proposed in this paper. The physics-driven approach is built up using the OFOVs. Different exposed images of each view are initially generated by using the physics-driven approach, are then refined by a data-driven approach, and are finally used to produce panoramic LDR images with different exposures. All the panoramic LDR images with different exposures are combined together via a multi-scale exposure fusion algorithm to produce the final panoramic LDR image. Experimental results demonstrate the proposed algorithm outperforms existing panoramic stitching algorithms.

Index Terms:

High dynamic range imaging, panoramic stitching, computational photography, weighted histogram averaging, neural augmentation, multi-scale exposure fusionI Introduction

Panoramic stitching can compose a group of images with pairwise overlapping fields of views (OFOVs) from a real-world scene to synthesize an image with a wider view for the scene [1]. All these images are usually 8-bit sRGB images and their exposure times are almost the same. Such a capturing method performs well for those real-word low dynamic range (LDR) scenes. However, a real-world high dynamic range (HDR) scene could have a dynamic range of 100,000,000:1 whereas an 8-bit sRGB image only has a dynamic range of 256:1 [2, 3]. Our human eyes are able to perceive dynamic ranges by up to 1,000,000:1. There are underexposed and/or overexposed regions in panoramic LDR images which are synthesized via the existing panoramic LDR stitching. Therefore, the inputting images of panoramic stitching are highly demanded to be captured by using different exposures for those real-world HDR scenes to give users a higher quality of experience [3, 4]. The resultant panoramic stitching is a new type, and can be called panoramic HDR stitching.

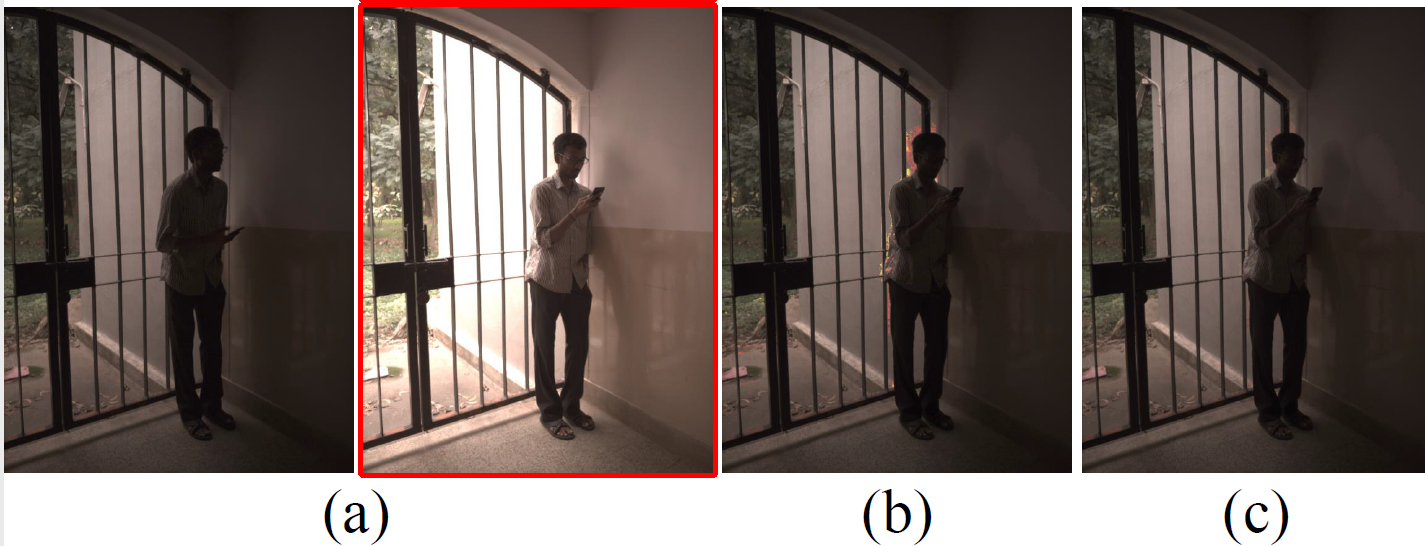

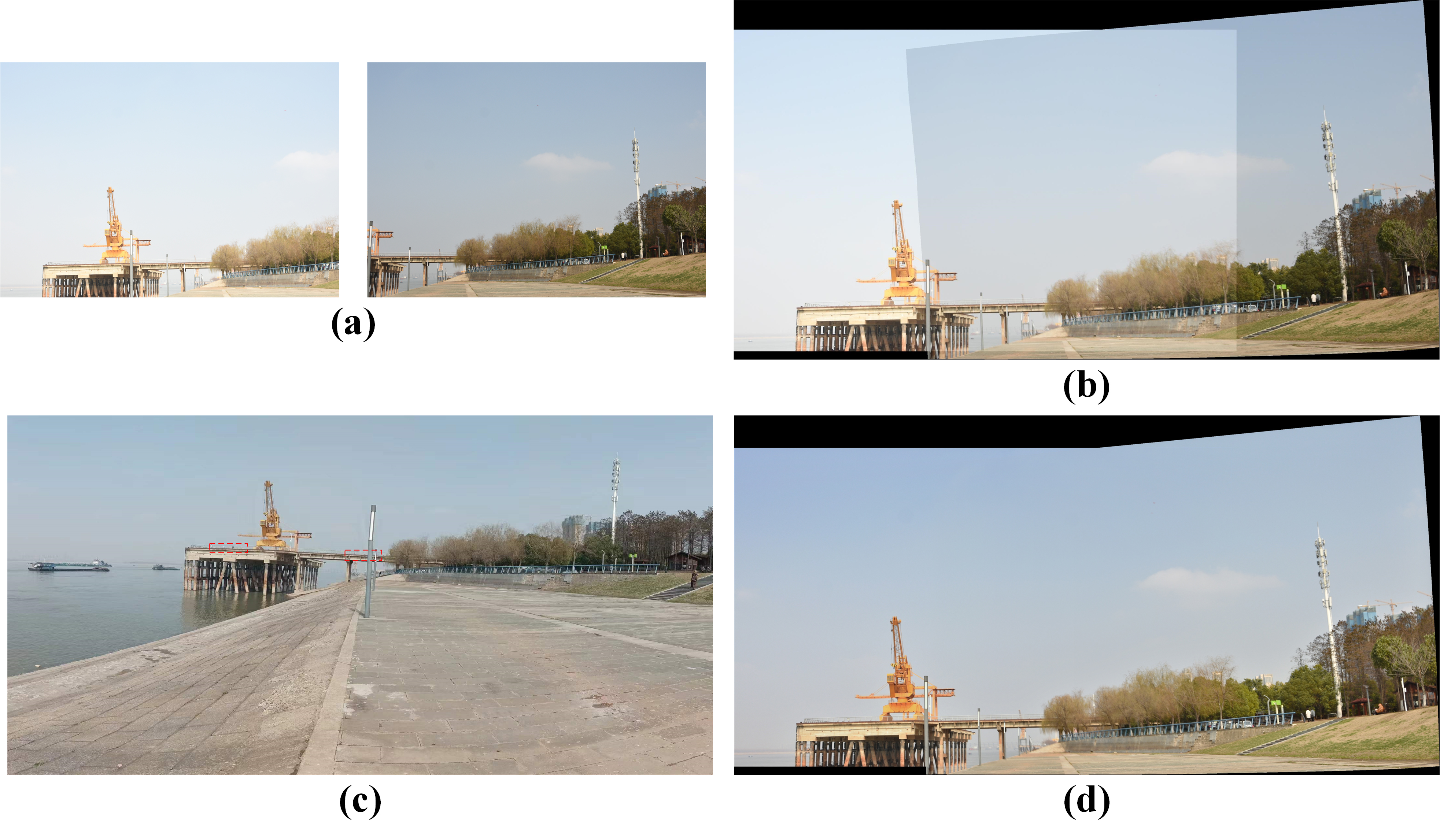

Two main steps of panoramic HDR stitching are: 1) to geometrically register all the inputting images by extending those algorithms in [5], and 2) to stitch all the aligned images together photometrically to removal all possible visual artifacts and recover underexposed and overexposed information. This paper primarily concentrates on the latter, which presents a new problem which has three main challenges: 1) eliminate brightness discrepancies among all the aligned images in the OFOVs caused by the varying exposures; 2) restore lost information in saturated regions of all the aligned images due to very limited number of different exposures, especially in those non-OFOV regions with only one exposure; and 3) preserve fine details of the darkest and brightest areas well in the final panoramic LDR image. These challenges cannot be adequately solved via existing panoramic LDR stitching algorithms [6, 7, 8, 9]. For instance, if all the synchronized images are tuned according to the brightness of the image with the largest (smallest) exposure, the brightest (darkest) areas of the HDR scene are then become overexposed (underexposed) in the panoramic LDR image, as illustrated in Figs. 1(c) and 1(d). Therefore, there is a need to introduce a different panoramic stitching algorithm tailored for real-world HDR scenes.

On top of our conference paper [10], a new type of panoramic stitching algorithm is proposed in this paper for a set of geometrically aligned LDR images with differing exposures and OFOVs, aimed at creating a panoramic LDR image with enriched information in the darkest and brightest regions for an HDR scene. The proposed panoramic HDR stitching algorithm is built upon a distinctive characteristic of the geometrically aligned LDR images with OFOVs and different exposures: all the pixels in the non-OFOV regions have only one exposure, whereas all the pixels in the OFOVs have two different exposures. Multiple panoramic LDR images with different exposures are generated from the inputs by using a novel physics-driven deep learning framework called neural augmentation. The framework is a seamless integration of a physics-driven approach and a data-driven approach . The former is on top of intensity mapping functions (IMFs) and the latter is on top of convolutional neural networks (CNNs). The proposed framework effectively combines both the generalization capability of the former and the learning capability of the latter.

The IMFs are estimated for adjacent images using the OFOVs among them, rather than being computed from camera response functions (CRFs) as in previous works [11, 12]. In other words, the proposed neural augmentation does not assume the availability of CRFs as in [11]. Therefore, the stitching of these images is a good case for the combination of the physics-driven approach and data-driven approach due to the OFOVs. The IMFs are estimated by using a new algorithm named as weighted histogram averaging (WHA) in [13] which is based on a novel correspondence between the two images at histogram-bin-level: each histogram bin from one image matches to one unique segment of the histogram bins from the other image. The mapped value is computed by averaging intensities in the matched segment with properly defined weights. The WHA outperforms those methods in [7, 12, 14, 15, 16, 17, 18, 19, 20]. The IMFs are adopted to initially produce the panoramic LDR images with different exposures. Such a method is a typical physics-driven approach and experimental results indicate that the accuracy of IMFs plays an important role in our neural augmentation. The OFOVs are thus required to be sufficiently large so as to estimate the accurate IMFs. The physics-driven approach is independent of training data, and it usually increases the robustness of our neural augmentation framework if it is accurate.

Even though the physics-driven approach is independent of training data, differently exposed images of each view generated by the physics-driven approach should be enhanced because of the limited representation capability of the physics-driven approach. A data-driven approach is thus introduced to refine these images by learning the remaining (or residual) information by proposing a new multi-scale exposedness aware network (MEAN). Our MEAN is designed by incorporating an exposedness aware guidance branch (EAGB) into short-skip connection based recursive residual groups (SRRGs). As pointed out in [21], deep CNNs generally have limited capability to represent high-frequency information. The function of both the multi-scale feature refinement structure and the short-skip connection structure is to preserve the desired high-frequency information. The exposedness of the input images is utilized to define a set of binary masks. The EAGB is designed on top of the binary masks, and it can assistant the proposed MEAN restore the overexposed and underexposed regions more efficiently.

All the differently exposed images of all the views are used to construct panoramic LDR images with different exposures. The generated panoramic LDR images with different exposures are ultimately combined together via the multi-scale exposure fusion (MEF) algorithm in [22], together with a physics-driven approach for the enhancement of high-frequency information. This process results in a panoramic LDR image with enriched information. As shown in Fig. 1(b), information in the darkest and brightest regions is preserved better in the stitched image by the proposed algorithm. The proposed algorithm is different from the algorithms in [3, 4] in the sense that a panoramic HDR image is first generated and then being tone mapped to a panoramic LDR image for displaying by the algorithms in [3, 4]. It is also different from the algorithms in [6, 7, 8] in the sense that the algorithms in [6, 7, 8] focus on real-world LDR scenes. Overall, the major contributions of this paper can be summarized as follows:

1) a new R&D problem is introduced for generating a set of panoramic LDR images with different exposures from the same number of geometrically synchronized LDR images with different exposures and OFOVs. The problem is addressed by a novel neural augmentation based framework which contains an IMF-based method and an CNN-based approach. Our neural augmentation framework converges faster than the data-driven approach. The framework can be applied to develop a new type of panoramic stitching systems;

2) an elegant histogram bins-based IMF estimation algorithm which is more accurate than existing IMF estimation algorithms and is more robust than the algorithms with respect to camera movements and moving objects;

3) a simple physics-driven approach to produce a panoramic LDR image with enriched information from a set of geometrically aligned and differently exposed LDR images with OFOVs. The genuine high-frequency information is effectively preserved in the final image.

II An Panoramic HDR Stitching Dataset

Same as other data driven research topics, data set plays an important role in the panoramic HDR stitching. Our panoramic HDR stitching data-set is built up from three well known HDR imaging data sets in [11, 25, 26]. All these differently exposed images in these datasets are captured in real-world. Each group of LDR images is constructed to resemble captures from a location with OFOVs, representing an HDR scene via a wide-angle perspective.

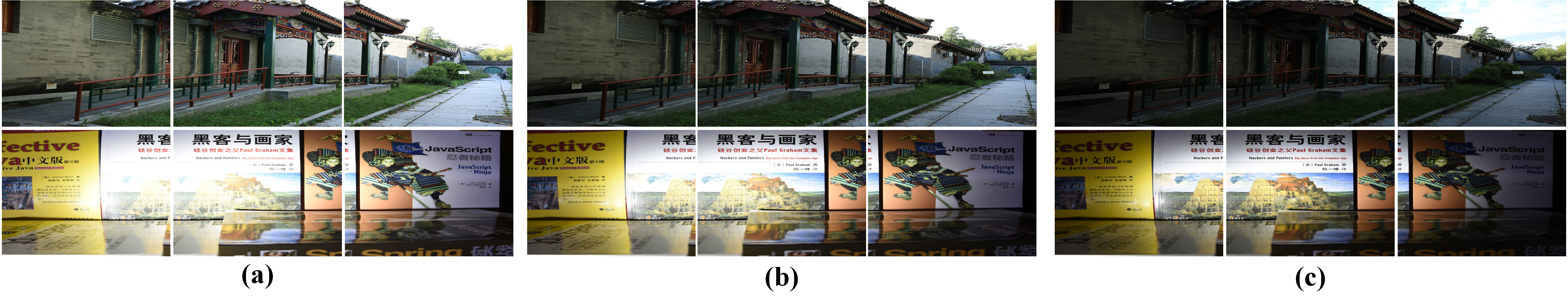

Nine images are captured for each HDR scene by using three different exposures and three different orientations. Three sets of LDR images with different exposures are captured for each orientation of the HDR scene. The nine images are denoted as (, , ), (, , ), and (, , ). Neither moving objects nor camera movements exist in the three LDR images with different exposure for each orientation. Our data set consists of 780 training sets, 80 validation sets, and 120 test sets. Two sets are illustrated in Fig. 2.

Inputs of the proposed panoramic HDR stitching algorithm are three differently exposed LDR images with OFOVs. They are , , and . is the non-OFOV region which is viewed only in the image . The corresponding sub-image is denoted as . The two pairwise OFOVs are denoted as and . The corresponding sub-images are , , and . It can be easily verified that the panoramic HDR image covers the spatial area of , and the images , and can be decomposed as

| (1) | ||||

| (2) | ||||

| (3) |

Each LDR image has a resolution of , and the overlapping area has a resolution of . It should be pointed out that the resolution of the overlapping area is important for the proposed neural augmentation framework. The exposure times are which satisfy . In other words, the EV gap between the image and is 1.

The inputs of the proposed panoramic HDR stitching possess a distinctive property: all the pixels in the non-OFOVs only have one exposure, while all the pixels in the OFOVs have two different exposures. The inputs are different from the input in singe image based HDR imaging [27, 28, 11] where all pixels only have one exposure. This is a new problem for the data-driven approach. It is difficult to restore three panoramic LDR images with different exposures from the three images. A new neural augmentation framework will be introduced to address this challenging problem in the next section.

III Neural Augmented Generation of Panoramic LDR Images with Different Exposures

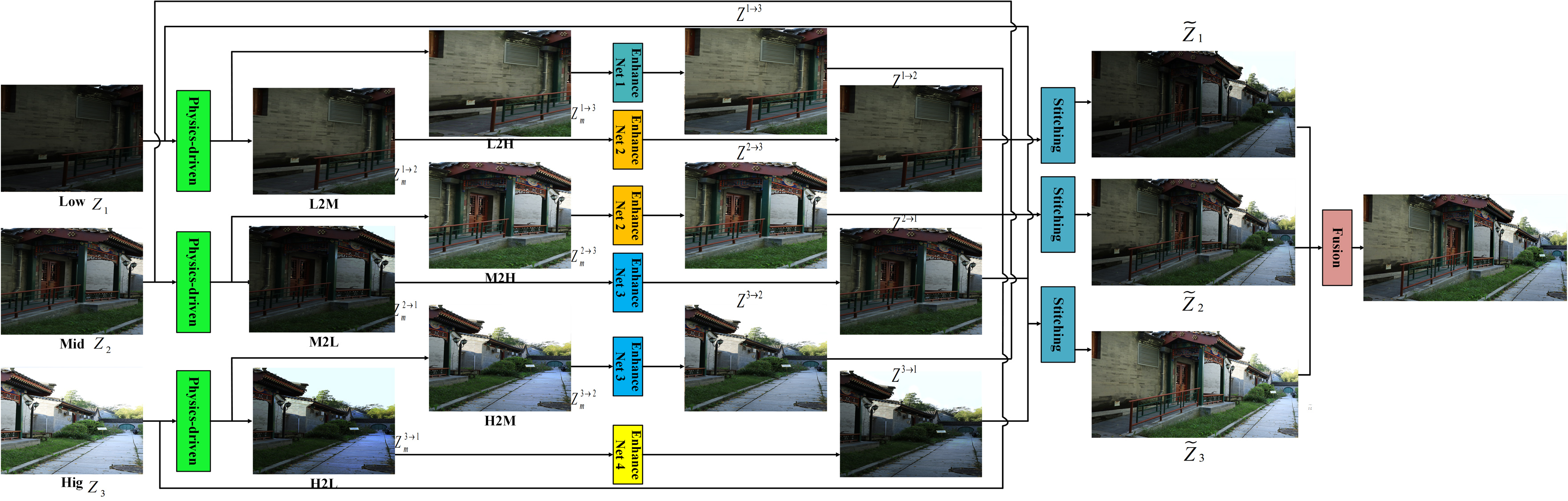

Diagram of our panoramic HDR imaging is shown in Fig. 3. Three differently exposed panoramic LDR images are synthesized via a novel neural augmentation framework which seamlessly integrates physics-driven and data-driven approaches. They will be combined together to generate an information enriched panoramic LDR image.

Two more differently exposed images are generated for image through

| (4) |

where is a physics-driven approach, and is a data-driven approach.

The three panoramic LDR images are then generated from the images , , and ’s . When the first panoramic LDR image is generated, the brightness of and is mapped to that of . The first image is produced as

| (10) |

where is defined according to the pixel in the set . It approaches 0 when the pixel moves to the region , and 1 when moves to the region . is defined according to the pixel in the set . It approaches 0 when moves to the region , and 1 when the pixel moves to the region .

Similarly, the second image is generated as

| (16) |

and the third one is synthesized as

| (22) |

Details on the and for all ’s and ’s are given in the following two subsections.

III-A Physics-driven Initialization

The accuracy of the physics-driven approach is crucial for the neural augmentation. Therefore, a novel histogram bins-based algorithm is proposed to estimate the IMFs [13]. The physics-driven generation of panoramic LDR images with different exposures builds upon the new IMF estimation algorithm.

The IMFs of the images and are computed by using their OFOV. The proposed method is different from the algorithms in [11] in the sense that the CRFs are required to be known in advance and the IMFs are computed from the CRFs in [11]. Therefore, the generation of panoramic LDR images with different exposures from the differently exposed LDR images with OFOVs is naturally a good case for the application of neural augmentation [11]. With some abuse of notation, their OFOVs are also denoted as and .

Let be a pixel position. is defined as , and is the number of pixels in the set . The cumulative histogram of the image is then computed as . A function can be defined from the non-decreasing property of the IMFs as [13]

| (23) |

where is 0. With the function , the correspondence between the histogram bins of the images and is first built up as follows: the first and last bins in the image corresponding to the bin are and , respectively. The cardinalities of sub-bins (or bins) in the image corresponding to the bin is denoted by . is defined as if is equal to . Otherwise, it is defined as

| (27) |

The new IMF is then computed by using the correspondance at the histogram-bin level as

| (28) |

Obviously, our new IMF is a weighted average of all the sub-bins (or bins) in the image corresponding to the bin . Therefore, our new IMF estimation algorithm is named as the WHA. Unlike the pixel-level correspondence in [19], the correspondence at the histogram-bin-level is robust to moving objects and camera movements. On the other hand, the high-frequency information will be lost when the IMFs are used to synthesize images.

The IMFs and are computed from the sub-images and . Similarly, the IMFs and are obtained from the sub-images and . Subsequently, the IMFs and can be computed. As shown in the section V-C, the accuracy of the IMFs plays an important role in the proposed neural augmentation framework. Thus, the resolutions of the sub-images , , and are required to be sufficiently large such that the IMFs , , and are highly accurate. Differently exposed images of each view are generated as

| (29) |

Even though the proposed physics-driven approach is independent of training data, its representation capability is usually a little limited. As a result, the quality of images is poor and all the images need to be refined via a data-driven approach as in the next subsection.

III-B Data-driven Refinement

The IMFs are physical models on the correlation among different exposed images. Since their representation capabilities are usually limited, there are unmodelled information. The unmodelled information can be further represented by the data-driven approach .

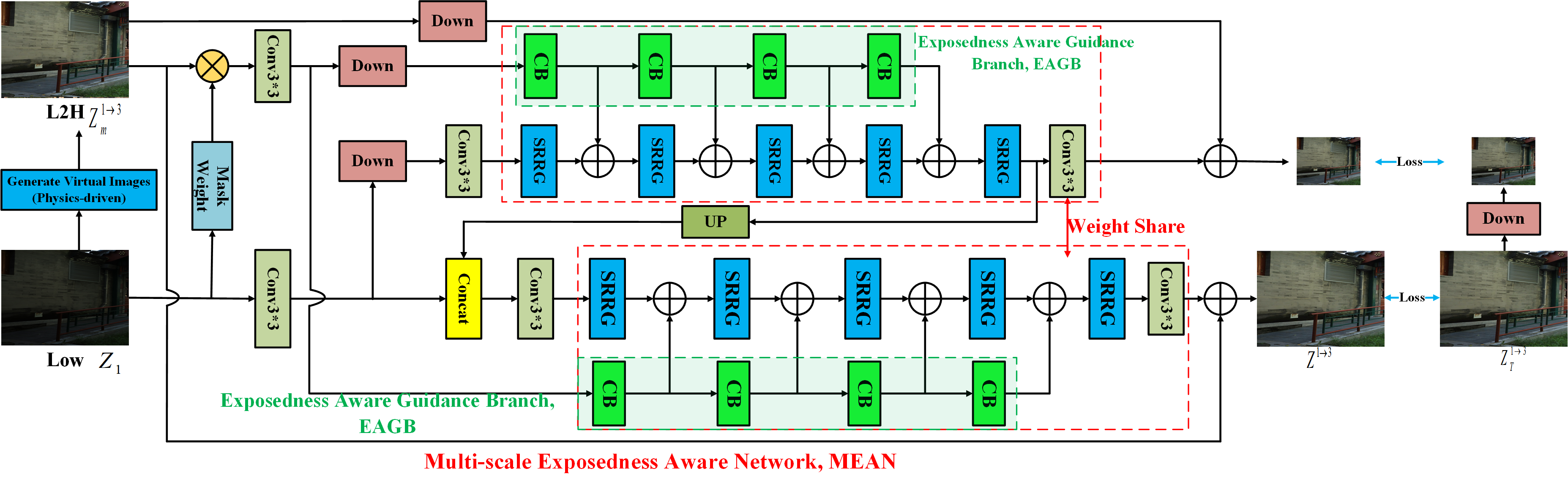

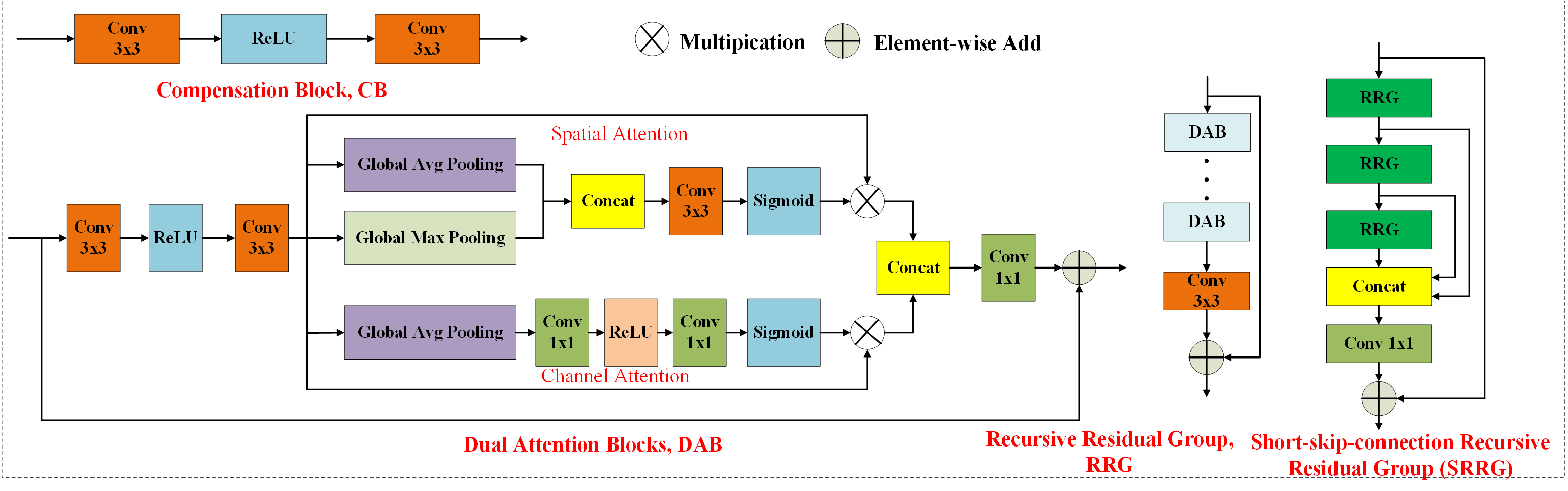

The unmodelled information can be easily compensated by the because it is sparser. Since the DNNs cannot learn the high-frequency information well [21], it is crucial for the data-driven approach to preserve the high-frequency information. A new MEAN is proposed in Figs. 4 and 5 to achieve these objectives. The key components of the proposed MEAN are EAGB, SRRGs, and multi-scale feature refinement. The SRRG is designed by adding short-skip connections to the RRG in [30] in order to pass shallow layers’ information into deep layers. As such, the high-frequency information has a potential to be well preserved. The proposed SRRG can propagate more informative features among different layers. The multi-scale feature refinement structure can further help preserve the high-frequency information.

The proposed EAGB consists of several compensations blocks (CBs) with each of them including two convolutional layers and one activation layer. The EAGB guides the SRRG to restore the saturated areas more effectively by properly binary masks . The masks are defined for the image as

| (32) |

It should be pointed out that when bright images are mapped to dark images, their binary masks are different. For example, the masks are

| (35) |

Obviously, the proposed masks are different from the soft mask. Besides the structure of the proposed MEAN, loss functions are also important for the data-driven approach. It is very important to guarantee the fidelity of the reconstructed image with to the ground truth image . A reconstruction loss function is

| (36) |

Color distortion is a possible issue for pixels in the underexposed and overexposed regions of [31]. To remove the color distortion, another loss function is given as

| (37) |

where is the angle between and [32].

One more feature-wise loss function is computed as

| (38) |

where and are the dimensions of the respective feature maps, denotes the feature map which is provided by the -th convolution before the -th pooling layer in the VGG network.

Our overall loss function is calculated as

| (39) |

where and are two hyper parameters. Since the reconstruction loss function is much more important than the other two items, and are empirically selected as ’s, respectively [11].

The numbers of SRRG and EAGB in the proposed MEAN are set as and , respectively. The MEAN is trained using an Adam optimizer. The batch size is 8. The learning rate is initially set to , then it is decreased through a cosine annealing method. Our MEAN is trained for 1000 epochs.

Our neural augmentation framework is convergent faster than the corresponding data-driven approach. Note than the data-driven approach only generates a refined term for the physics-driven approach. As such, the amount of training data is reduced by our new framework to achieve an accuracy [29]. Moreover, the physics-driven approach is independent of the training data, our new framework can be applied to to obtain a more general representation of the data than the corresponding data-driven approach.

IV Multi-scale Fusion of Panoramic LDR Images with Different Exposures

The three panoramic LDR images with different exposures are merged together as via the MEF algorithm in [22] which is on top of edge-preserving smoothing pyramids [23, 24]. Since the high frequency information could be lost by both the data-driven approach and the MEF, a log domain physics-driven approach is adopted to preserve the genuine high-frequency information in the fused image for each group of inputting images.

For simplicity, let be denoted as . Inputs of the proposed quadratic optimization based enhancement algorithm are the stitched image and a guidance vector field . Output is a high-frequency information enhanced image . The vector field is generated by extending the gradient domain alpha blending as

| (43) |

where the weight is calculated as

| (44) |

is is the center of the overlap area . is the width of .

The genuine high-frequency information can then be extracted via:

| (45) |

where and are empirically chosen as 0.125 and 1.125, respectively, and is

| (46) |

The high-frequency information enhanced image is finally calculated as with the extracted high-frequency information as .

V Experiment Results

Three main contributions of this paper: the proposed WHA, the new neural augmentation framework, and our panoramic HDR stitching algorithms are evaluated in this section.

V-A Evaluation of the Proposed WHA

The accuracy and computational cost of the physics-driven approach are important for our neural augmentation. In this subsection, the proposed WHA is compared with existing IMF estimation algorithms including HHM [7], PCRF [12], TG [14], AM [15], 3MS [16], GPS [17], MV [18], GC [19], and CHM [20] by using the VETHDR-Nikon dataset [11]. The PSNR, SSIM, FSIM, iCID and running time are chosen as the evaluation metrics. All the algorithms are tested by using the Matlab R2019a on a laptop with Intel Core i7-9750H CPU 2. 59GHz and 32. 0 GB memory.

In the VETHDR-Nikon dataset [11], each pair of differently exposed LDR images is well-aligned. In order to simulate cases with camera movements, those images in the data-set are cropped according to the following two equations:

| (47) | |||

| (48) |

where and are the weight and height of the images in the dataset. As demonstrated in Table I, the proposed WHA achieves the best performance from all the PSNR, SSIM, FSIM, and iCID points of view, and its speed is slightly slower than the CHM [20].

| Method | PSNR | SSIM | FSIM | iCID(%) | Time(s) |

| HHM [7] | 29.88 | 0.8977 | 0.9673 | 8.24 | 0.11 |

| PCRF [12] | 30.69 | 0.9056 | 0.9715 | 7.67 | 1.79 |

| TG [14] | 29.34 | 0.8897 | 0.9391 | 11.35 | 12.52 |

| AM [15] | 25.68 | 0.8413 | 0.8882 | 18.49 | 0.54 |

| 3MS [16] | 28.96 | 0.8857 | 0.9455 | 11.17 | 7.70 |

| GPS [17] | 30.96 | 0.8945 | 0.9643 | 9.36 | 151.09 |

| MV [18] | 28.14 | 0.8964 | 0.9470 | 10.28 | 0.66 |

| GC [19] | 28.17 | 0.8693 | 0.9385 | 11.98 | 1.77 |

| CHM [20] | 32.36 | 0.9029 | 0.9740 | 7.10 | 0.08 |

| WHA | 34.38 | 0.9153 | 0.9815 | 4.77 | 0.08 |

The proposed WHA can be applied to investigate ghost removal of differently exposed LDR images. There are many ghost removal algorithms for differently exposed images [31]. The proposed WHA is adopted to improve the ghost removal algorithm in [31]. One of the input images is selected as the reference image. Pixels in all other images are divided into inconsistent and consistent pixels. All the inconsistent pixels are corrected by using the correlation between the reference image and the corresponding image. The IMFs which are estimated by the CHM in [20] are used in [31] to detect all the inconsistent pixels and correct them. The CHM in [20] is replaced by the proposed WHA. As shown in Fig. 6(b), there is serious color distortion in the wall by using the CHM in [20]. Fortunately, the color distortion is significantly reduced by our WHA. It should be pointed out that the WHA was recently applied in [34] to design an optical flow estimation algorithm for differently exposed LDR images in LDR domain. It was shown in [34] that the WHA outperforms the gamma correction which was widely utilized by deep-learning based ghost removal algorithms.

V-B Ablation Study

| case | physics-driven approach | EAGB | short-skip connection | multi-scale | SSIM () | PSNR (dB: ) | FSIM () |

| 1 | Y | N | N | N | 0.9361 | 31.28 | 0.9800 |

| 2 | N | N | Y | Y | 0.9420 | 31.86 | 0.9804 |

| 3 | Y | N | Y | Y | 0.9434 | 32.30 | 0.9811 |

| 4 | Y | Y | N | Y | 0.9448 | 33.09 | 0.9816 |

| 5 | Y | Y | Y | N | 0.9461 | 33.27 | 0.9817 |

| 6 | Y | Y | Y | Y | 0.9464 | 33.39 | 0.9822 |

Four important components of the proposed neural augmentation frameworks are the physics-driven approach, the EAGB, the short-skip connection, and the multi-scale refinement. Their performances are evaluated in this subsection.

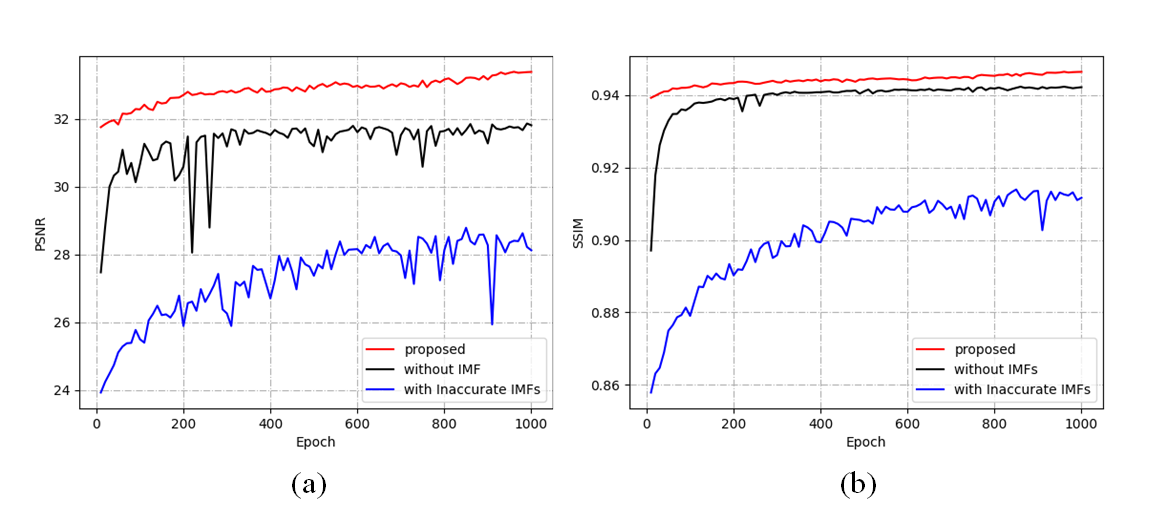

With vs without the physics-driven approach: Our neural augmentation framework and the data-driven approach are compared with each other. The data-driven approach is almost identical to the data-driven approach of our neural augmentation framework, except for disabling the EAGB. This is because that the initial synthetic images is required by the EAGB. Table II demonstrates that our framework can enhance the SSIM, PSNR, and FSIM. Additionally, Fig. 7 illustrates that our new framework converges faster than the data-driven approach. It is noteworthy that case 1 in Table II is the physics-driven approach. Clearly, the data-driven approach can significantly enhance the physics-driven approach.

With vs without the EAGB: As demonstrated in Fig. 7, the EAGB can be applied to improve the stability of the proposed framework, and it can also achieve higher PSNR and SSIM values at different epochs.

With vs without the short-skip-connection: As shown in Fig. 5, our proposed SRRG can transmit information from shallow layers to deep layers. The PSNR and SSIM at different epochs are depicted in Fig. 7, indicating that the mode with the short-skip connection can obtain superior results.

Multi-scale vs single-scale: The proposed multi-scale framework and another mode with a single-scale structure are compared with each other. The multi-scale structure achieves higher SSIM, PSNR, and FSIM values.

V-C Evaluation of Different Physics-driven Approaches

It was shown in [29] that the physics-driven approach can be improved by the data-driven approach in the neural augmentation. However, one important issue was ignore in [29], i.e., is the accuracy of the physics-driven approach important for the neural augmentation? Experimental results are given to address the issue in this subsection. More specifically, highly accurate IMFs are compared with lowly accurate IMFs.

Two different overlapping area, denoted as R1 () and R2 (), are compared to answer the question. The IMFs of the former are more accurate than those of the latter. As depicted in Fig. 7, the former converges faster than the latter. The PSNR and SSIM of R1 are also increased. Therefore, the accuracy of the physics-driven approach is important for the proposed neural augmentation framework. To reduce the inference complexity of the neural augmentation, the physics-driven approach is also required to be simple.

It is also demonstrated in Fig. 7 that data-driven approach is typically locally stable. Thus, a neural augmentation on top of an accurate physics-driven approach usually outperforms the corresponding data-driven approach.

V-D Comparison of Different Panoramic Stitching Algorithms

The proposed panoramic HDR stitching algorithm is compared with four state-of-the-art (SOTA) panoramic stitching algorithms in [6, 35, 7, 8].

| set | [6] | [35] | [7] | [8] | Ours | Ours with (45) |

| 1 | 0.4543 | 0.8036 | 0.9380 | 0.5436 | 0.9723 | 0.9726 |

| 2 | 0.4552 | 0.7048 | 0.8948 | 0.6850 | 0.9904 | 0.9905 |

| 3 | 0.5904 | 0.6918 | 0.9467 | 0.4614 | 0.9941 | 0.9943 |

| 4 | 0.7034 | 0.7386 | 0.9861 | 0.7124 | 0.9902 | 0.9904 |

| 5 | 0.1567 | 0.4391 | 0.8239 | 0.2877 | 0.8499 | 0.8532 |

| 6 | 0.5829 | 0.5843 | 0.9336 | 0.6058 | 0.9666 | 0.9678 |

| 7 | 0.2314 | 0.4086 | 0.9537 | 0.6549 | 0.9929 | 0.9936 |

| 8 | 0.3080 | 0.2690 | 0.9413 | 0.3029 | 0.9186 | 0.9215 |

| 9 | 0.4198 | 0.7182 | 0.9663 | 0.5743 | 0.9920 | 0.9923 |

| 10 | 0.1778 | 0.5477 | 0.9417 | 0.4098 | 0.9844 | 0.9849 |

| 11 | 0.4759 | 0.6925 | 0.9573 | 0.7375 | 0.9906 | 0.9908 |

| 12 | 0.3331 | 0.3390 | 0.8885 | 0.3083 | 0.9424 | 0.9425 |

| 13 | 0.4064 | 0.5268 | 0.9758 | 0.4483 | 0.9854 | 0.9857 |

| 14 | 0.2590 | 0.6723 | 0.9516 | 0.3132 | 0.9778 | 0.9783 |

| 15 | 0.3470 | 0.5467 | 0.9503 | 0.4174 | 0.9909 | 0.9914 |

| avg | 0.3829 | 0.5789 | 0.9366 | 0.4975 | 0.9692 | 0.9700 |

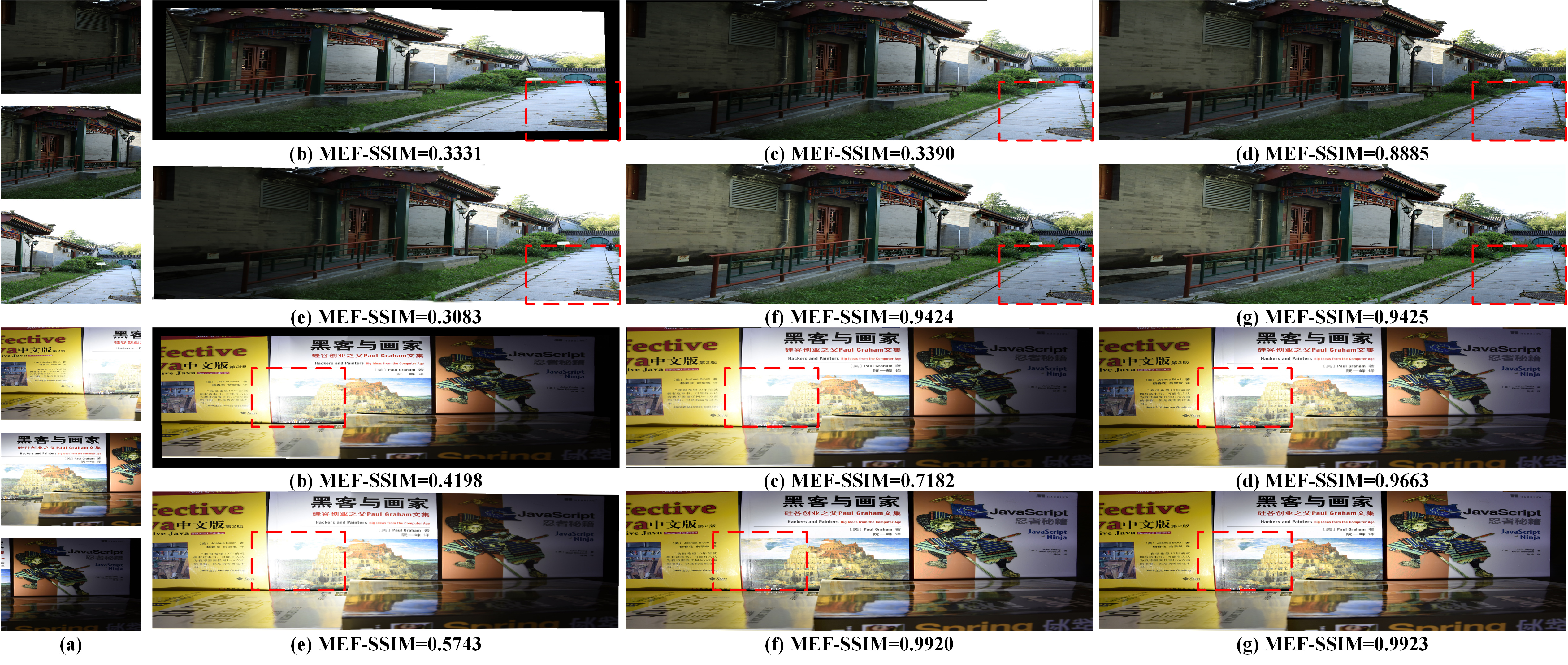

As illustrated in Fig. 8, serious brightness inconsistencies are evident in the stitched images produced by the algorithms in [6, 35, 8]. These inconsistencies are overcome by the algorithms in [7] and our algorithm. Information in the darkest regions of the first set and information in the brightest regions of the second set are preserved better using our algorithm compared to the algorithms in [6, 35, 8, 7]. Additionally, as highlighted by the red boxes, the algorithm in [7] produces color distortions. The MEF-SSIM metric in [36] is also employed to compare the all these. The MEF-SSIM is computed by using the ground-truth images of three panoramic LDR images with different exposures and a panoramic LDR image stitched by one of these algorithms. As shown in Table III, our algorithm noticeably outperforms those in [6, 35, 7, 8] from the MEF-SSIM perspective. Our algorithm (45) extract real high-frequency information from each set of images and can further enlarge the average MEF-SSIM. Clearly, the HDR stitching is highly demanded for real-world HDR scenes.

V-E Evaluation of the Local Stitching Algorithm (45)

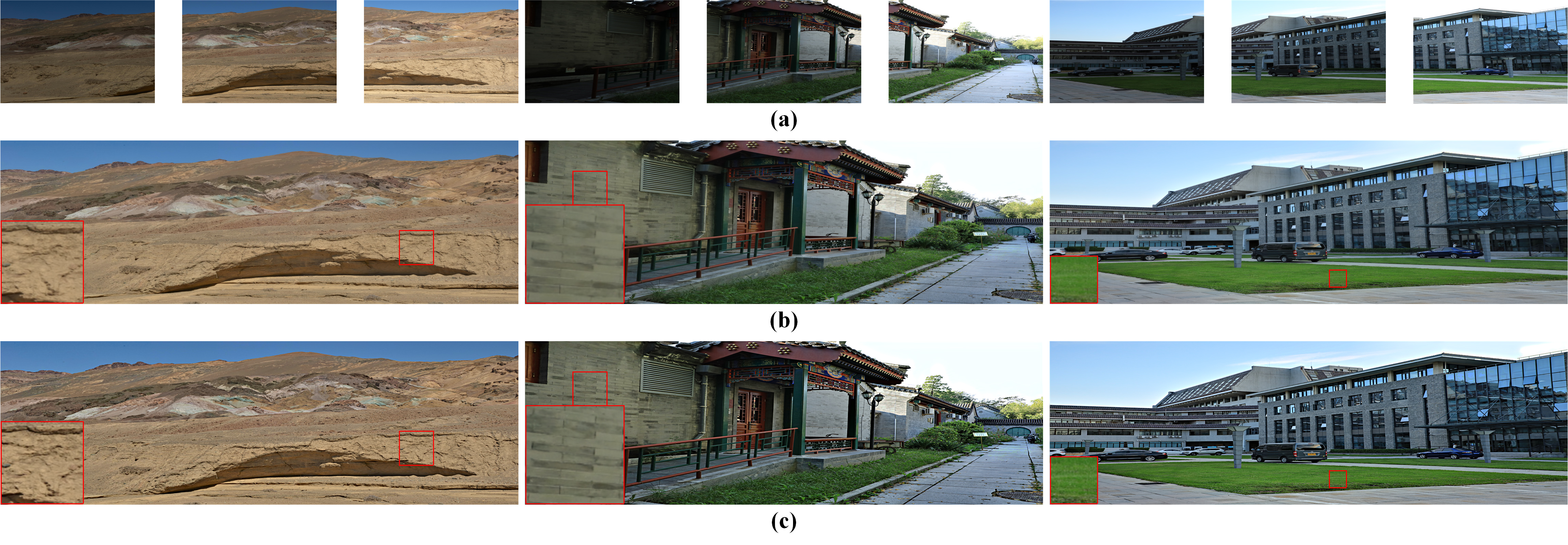

The proposed local stitching algorithm (45) is evaluated in this subsection. As shown by enlarged parts in Fig. 9, high-frequency components such as sharp edges, textures, fine details, etc are indeed preserved better by the local one. Therefore, the local algorithm can indeed be applied to achieve instance adaptation. It should be mentioned that different users have different preferences on the sharpness of the enhanced image . This can be achieved via a weighted combination with in the interval . An interactive mode can be provided by allowing tuning the on-line.

V-F A New Panoramic HDR Stitching System

A new panoramic stitching system is proposed in this subsection. Instead of capturing a series of images by existing panoramic stitching systems, multiple differently exposed images are captured with varying orientations. For simplicity, the case of two differently exposed images is studied in this subsection. The new system can be easily extended to the case of three different exposed images. The differences between the orientations of these two images are about 15 degrees as shown in Fig. 14. Since the exposures of the two images are different, the algorithm in [5] cannot be applied to align these images directly. The right images is first mapped to the brightness of the left one by using the IMFs between them. They are then aligned by simultaneously using homography transformations and thin-plate spline transformations. The homography transformation provides a global linear transformation, while the thin-plate spline transformation allows local nonlinear deformation. These two images are finally stitched by using the proposed neural augmentation based algorithm. Two panoramic LDR images with different exposures are produced by using the same set of homography transformation and thin-plate spline transformations. They are fused to generate the final image as shown in Fig. 10 (d).

Such a new panoramic HDR stitching system is compared with two conventional panoramic LDR stitching systems. One is from a smart phone and the other is the algorithm in [5]. The exposure times of those images are supposed to be almost the same for the conventional panoramic LDR stitching systems. As demonstrated in Fig. 10, the images produced by the new panoramic HDR stitching system is much better than those generated by the conventional panoramic LDR stitching systems. The panoramic LDR image captured with a mobile phone exhibits noticeable distortion in the bridge deck, as highlighted by these two red boxes. The panoramic LDR image generated using [5] includes visible seams, and has three different brightnesses in different regions. These artifacts are removed by using the proposed panoramic HDR stitching algorithm.

VI Conclusion Remarks and Discussions

High dynamic range imaging and panoramic stitching are two popular types of computational photography. They are studied jointly in this paper while they are usually investigated independently. There are many applications of high dynamic range (HDR) panoramic imaging. A novel algorithm is proposed in this paper to stitch multiple geometrically synchronized and differently exposed sRGB images with overlapping fields of views (OFOVs) for a real-world high dynamic range (HDR) scene. Different exposed images of each view are initially generated by using an accurate physics-driven approach, are then refined by a data-driven approach, and are finally adopted to generate panoramic LDR images with different exposures. All the panoramic LDR images with different exposures are merged using a multi-scale exposure fusion algorithm, incorporating a physics-driven approach for the enhancement of high-frequency information, resulting in an information-enriched panoramic LDR image.

It should be pointed out that the inputs are required to have sufficiently large overlapping fields of views large OFOVs by the proposed neural augmentation. It would be interesting to consider the case that the OFOVs are not large. This challenging case will be investigated by exploring new ways to derive the highly accurate physics-driven approach required by the neural augmentation.

References

- [1] Y. Chen, G. Jiang, M. Yu, C. Jin, H. Xu, Y.-S. Ho, “Hdr light field imaging of dynamic scenes: A learning-based method and a benchmark dataset,” Pattern Recognition,150: 110313, 2024.

- [2] P. E. Debevec and J. Malik, “Rendering high dynamic range radiance maps from photographs,” In Proceedings of SIGGRAPH, pp. 369-378, USA, May 1997.

- [3] M. Aggarwal and N. Ahuja, “High dynamic range panoramic imaging,” in Proceedings of the Eighth IEEE International Conference on Computer Vision, pp. 2-9, 2001.

- [4] A. Eden, M. Uyttendaele, and R. Szeliski, “Seamless image stitching of scenes with large motions and exposure differences,” in Proceedings of IEEE Conference on Computer Vision and Pattern Recognition, vol. 2, pp. 2498-2505, USA, Oct. 2006.

- [5] L. Nie, C. Lin, K. Liao, S. Liu, Y. Zhao, “Parallax-tolerant unsupervised deep image stitching,” in Proceedings of the IEEE/CVF International Conference on Computer Vision, pp. 7399–7408, 2023.

- [6] M. Brown, and D. G. Lowe, “Automatic panoramic image stitching using invariant features,” International Journal of Computer Vision, vol. 74, pp.59-73, 2007.

- [7] C. Ding and Z. Ma “Multi-camera color correction via hybrid histogram matching,” IEEE Trans. on Circuits and Systems for Video Technology,vol. 31, no. 9, pp. 3327-337, Sept. 2021.

- [8] Q. Jia, Z. Li, H. Zhao, S. Teng, X. Ye, L. Latecki, “Leveraging line-point consistence to preserve structures for wide parallax image stitching,” in IEEE Conference on Computer Vision and Pattern Recognition, pp. 12186-12195, Jun. 2021.

- [9] G. Wu, R. Song, M. Zhang, X. Li, P. L. Rosin, “Litmnet: A deep cnn for efficient hdr image reconstruction from a single ldr image,” Pattern Recognition, 127: 108620, 2022.

- [10] C. Zheng, Y. Xu, W. Chen, S. Wu, Z. Li, “Physics-driven deep panoramic imaging for high dynamic range scenes,” in IECON 2023- 49th Annual Conference of the IEEE Industrial Electronics Society, pp. 1–6, 2023.

- [11] C. Zheng, W. Ying, S. Wu, Z. Li, “Neural augmentation based saturation restoration for ldr images of hdr scenes,” IEEE Transactions on Instrumentation and Measurement, vol. 72, pp. 1-11, 2023.

- [12] Y. Yang, W. Cao, S. Wu, and Z. Li, “Multi-scale fusion of two large-exposure-ratio images,” IEEE Signal Processing Letters, vol. 25, no. 12, pp. 1885–1889, Dec. 2018.

- [13] Y. Xu, Z. Li, W. Chen, and C. Wen, “Novel intensity mapping functions: weighted histogram averaging,” in the 17th IEEE Conference on Industrial Electronics and Applications, pp. 1157-1161, China, Dec. 2022.

- [14] M. Oliveira, A. D. Sappa, and V. Santos, “A probabilistic approach for color correction in image mosaicking applications,” IEEE Trans. on Image Processing, vol. 24, no. 2, pp. 508–523, Feb. 2014.

- [15] Y. Hwang, J.-Y. Lee, I. So Kweon, and S. J. Kim, “Color transfer using probabilistic moving least squares,” in Proceedings of the IEEE conference on computer vision and pattern recognition, pp. 3342–3349, 2014.

- [16] H. Faridul, J. Stauder, J. Kervec, and A. Trémeau, “Approximate cross channel color mapping from sparse color correspondences,” in Proceedings of the IEEE international conference on computer vision workshops, 2013, pp. 860–867.

- [17] F. Bellavia and C. Colombo, “Dissecting and reassembling color correction algorithms for image stitching,” IEEE Trans. on Image Processing, vol. 27, no. 2, pp. 735–748, Feb. 2017.

- [18] E. Reinhard, M. Adhikhmin, B. Gooch, and P. Shirley, “Color transfer between images,” IEEE Computer graphics and applications, vol. 21, no. 5, pp. 34–41, May 2001.

- [19] Z. Zhu, Z. Li, S. Rahardja, and P. Franti, “Recovering real-world scene: High-quality image inpainting using multi-exposed references,” Electronics Letters, vol. 45, no. 25, pp.1310-1312, 2009.

- [20] M. D. Grossberg and S. K. Nayar, “Determining the camera response from images: what is knowable?” IEEE Trans. Pattern Analysis and Machine Intelligence, vol. 25, no. 11, pp. 1455-1467, Nov. 2003.

- [21] N. Rahaman, A. Baratin, D. Arpit, F. Draxler, M. Lin, F. Hamprecht, Y. Bengio, and A. Courville, “On the spectral bias of neural networks,” in Proceedings of the 36th International Conference on Machine Learning, pp. 5301-5310, 2019.

- [22] F. Kou, Z. Li, W. Chen, and C. Wen, “Edge-preserving smoothing pyramid based multi-scale exposure fusion,” Journal of Visual Communication and Image Representation, vol. 58, no. 4, pp. 235-244, Apr. 2018.

- [23] F. Kou, W. Chen, C. Wen, and Z. Li, “Gradient domain guided image filtering,” IEEE Trans. on Image Processing, vol. 24, no. 11, pp.4528-4539, Nov. 2015.

- [24] Z. Li, J. Zheng, Z. Zhu, W. Yao, and S. Wu, “Weighted guided image filtering,” IEEE Trans. on Image Processing, vol. 24, no. 1, pp. 120-129, Jan. 2015.

- [25] J. Cai, S. Gu, and L. Zhang, “Learning a deep single image contrast enhancer from multi-exposure images,” IEEE Trans. on Image Processing, vol. 27, no. 4, pp. 2049-2062, 2018.

- [26] Y. Xu, Z. Liu, X. Wu, W. Chen, C. Wen, and Z. Li, “Deep joint demosaicing and high dynamic range imaging within a single shot,” IEEE Trans. on Circuits and Systems for Video Technology, vol. 32, no. 8, pp.1-16, Aug. 2022.

- [27] F. Banterle, P. Ledda, K. Debattista, and A. Chalmers, “Inverse tone mapping,” in Proc. of GRAPHITE’06, pp. 349-356, 2006.

- [28] Y. Endo, Y. Kanamori, and J. Mitani, “Deep reverse tone mapping,” ACM Trans. on Graphics, vol. 36, no. 6, pp. 1-10, Nov. 2017.

- [29] N. Shlezinger, J. Whang, Y. C. Eldar, and A. G. Dimakis, “Model-based deep learning,” arXiv: 2012.08405v2 [eess.SP] 27 Jun 2021.

- [30] S. Wa. Zamir, A. Arora, S. Khan, M. Hayat, F. S. Khan, M. Yang, and L. Shao “CycleISP: real image restoration via improved data synthesis,” in IEEE Conference on Computer Vision and Pattern Recognition, Jun. 2020.

- [31] Z. Li, J. Zheng, Z. Zhu, and S. Wu, “Selectively detail-enhanced fusion of differently exposed images with moving objects,” IEEE Trans. on Image Processing, vol. 23, no. 10, pp. 4372-4382, Oct. 2014.

- [32] R. Wang, Q. Zhang, C. Fu, X. Shen, W. Zheng, and J. Jia, “Underexposed photo enhancement using deep illumination estimation,” in IEEE Conference on Computer Vision and Pattern Recognition, , pp. 6849–6857, 2019.

- [33] K. R. Prabhakar, R. Arora, A. Swaminathan, K. P. Singh, and R. V. Babu, “A fast, scalable, and reliable deghosting method for extreme exposure fusion,” in 2019 IEEE International Conference on Computational Photography (ICCP), pp. 1–8.

- [34] Z. Y. Liu, Z. G. Li, Z. Liu, X. M. Wu and W. H. Chen, “Unsupervised optical flow estimation for differently exposed images in LDR domain,” IEEE Trans. on Circuits and Systems for Video Technology, vol. 33, no. 10 , pp. 5332-5344, Oct. 2023.

- [35] T. Liao and N. Li, “Single-perspective warps in natural image stitching,” IEEE Trans. Image Processing, vol. 29, pp. 724-735, 2020.

- [36] K. Ma, K. Zeng, and Z. Wang, “Perceptual quality assessment for multiexposure image fusion,” IEEE Trans. on Image Processing, vol. 24, no. 11, pp. 3345-3356, Nov. 2015.