Network resampling for estimating uncertainty

Abstract

With network data becoming ubiquitous in many applications, many models and algorithms for network analysis have been proposed. Yet methods for providing uncertainty estimates in addition to point estimates of network parameters are much less common. While bootstrap and other resampling procedures have been an effective general tool for estimating uncertainty from i.i.d. samples, adapting them to networks is highly nontrivial. In this work, we study three different network resampling procedures for uncertainty estimation, and propose a general algorithm to construct confidence intervals for network parameters through network resampling. We also propose an algorithm for selecting the sampling fraction, which has a substantial effect on performance. We find that, unsurprisingly, no one procedure is empirically best for all tasks, but that selecting an appropriate sampling fraction substantially improves performance in many cases. We illustrate this on simulated networks and on Facebook data.

1 Introduction

With network data becoming common in many fields, from gene interactions to communications networks, much research has been done on fitting various models to networks and estimating parameters of interest. In contrast, few methods are available for evaluating uncertainty associated with these estimates, which is key to statistical inference. In classical settings, when asymptotic analysis for obtaining error estimates is intractable, various resampling schemes have been successfully developed for estimating uncertainty, such as the jackknife [34], the bootstrap [10], and subsampling or -out-of- bootstrap [6, 31]. Most of the classical resampling methods were developed for i.i.d. samples and are not easily transferred to the network setting, where nodes are connected by edges and have a complex dependence structure. Earlier work on bootstrap for dependent data focused on settings with a natural ordering, such as time series [30] or spatial random fields [7].

More recently, a few analogues of bootstrap have been proposed specifically for network data. One general approach is to extract some features of the network that can be reasonably treated as i.i.d., and resample those following classical bootstrap. For instance, under a latent space model assumptio latent node positions are assumed to be drawn i.i.d. from some unknown distribution, and the network model is based on these positions. Then as long as the latent positions can be estimated from a given data, they can be resampled in the usual bootstrap fashion to generate new bootstrap network samples, as proposed in [23],. Another network feature that easily lends itself to this kind of approach is subgraph counts, because they are calculated from small network “patches” that can be extracted and then treated as i.i.d. for resampling purposes. This approach has been applied to estimating uncertainty in the mean degree of the graph [35], and extended to bootstrapping the distribution of node degrees [14, 15]. Related work [3] proposed to estimate the variance of network count statistics by bootstrapping subgraphs isomorphic to the pattern of interest. This approach works well for network quantities that can be computed from small patches, and does not require the latent space model assumption, but it does not generalize easily to other more global inference tasks.

A more general approach analogous to the jackknife was proposed in [26], who analyzed a leave-one-node-out procedure for network data. This procedure can be applied to most network statistics, and the authors show that the variance estimates are conservative in expectation under some regularity conditions. However, for a size network, this procedure requires to compute the statistics of interest on networks with nodes each, which can be computationally expensive.

Another class of methods works by subsampling the network, which we can think of as analogous to -out-of- bootstrap, e.g., [5]. Inference based on a partially observed or subsampled network was first considered for computational reasons, when it is either not computationally feasible to conduct inference on the whole network, or only part of the network was observed in the first place. Most commonly, subsampling is implemented through node sampling, where we first select a fraction of all nodes at random and then observe the induced subgraph, but there are alternative subsampling strategies, to be discussed below. Examples in this line of work include subsampling under a sparse graphon model [27], estimating the number of connected components in a unknown parent graph from a subsampled subgraph [13, 19], and estimating the degree distribution of a network from a subgraph generated by node sampling or a random walk [38]. Similar methods have also been employed for cross-validation on networks, with subsampling either nodes [9] or edges [25] multiple times.

Our goal in this paper is to study general subsampling schemes that can be used to estimate uncertainty in most network statistics with minimal model assumptions. We will study three different subsampling procedures, discussed in detail in the next section: node sampling, row sampling, and node pair sampling. Our goal is to understand how the choice of subsampling procedure affects performance for different inference tasks, and how each subsampling method performs under different models. One of our main findings is that choosing the fraction of the network to sample has significant implications for performance. To this end, we propose a data-driven procedure to select the resampling fraction and show it performs close to optimal.

The paper is organized as follows: In Section 2, we present the proposed resampling procedures and the data-driven method to choose the subsampling fraction. In Section 3, we compare numerical performance of different subsampling methods for different inference tasks. Section 4 presents an application of our methods to triangle density estimation in Facebook networks, and Section 5 concludes with discussion.

2 Network subsampling methods

We start with fixing notation. Let be the observed network, with the node set and the edge set, and nodes. We represent using its adjacency matrix , where if there exists an edge from node to node , i.e., , and otherwise. For the purposes of this paper, we focus on binary undirected networks. Generalization to weighted undirected networks should be straightforward, as the resampling mechanisms would not change. Directed networks will, generally speaking, require a different approach, as for the undirected setting a single row of the adjacency matrix contains all the information about the corresponding node, whereas for directed matrices this would not be the case.

In classical statistics, we have a well-established standard bootstrap procedure [11]: given an i.i.d. sample from an underlying distribution , we can construct a confidence set for some statistics of interest by estimating the sample distribution of , and, typically, using its quantiles to construct a confidence interval for . By drawing new samples with replacement from the set , or in other words sampling i.i.d. from the empirical distribution , we can obtain the empirical distribution of , , to approximate the distribution of .

Thinking about applying this procedure to a network immediately reveals a number of questions: what are the units of resampling, nodes or edges? What does it mean to sample a node or an edge with replacement? Sampling without replacement seems more amendable to working with resulting induced graphs, and thus we turn to the -out-of- bootstrap, typically done without replacement, which provides asymptotically consistent estimation not only for i.i.d. but also for weakly dependent stationary samples [31]. Instead of drawing samples of size with replacement, we will now draw new samples of size without replacement.

For networks, we argue that the the best unit to resample and compare across procedures is node pairs (whether connected by an edge or not). Resampling algorithms can be constructed in different ways and operate either on nodes or on node pairs, but in the end it is the number of node pairs that determines the amount of useful information we have available. Resampling node pairs with replacement does not really work as it is not clear how to incorporate duplicated node pairs. Thus we will focus on network resampling without replacement, similar to the -out-of- bootstrap approach. The fraction of resampled node pairs, denoted by , turns out to be an important tuning parameter, and we will propose a data-driven way of choosing it. First, we describe three different resampling procedures we study.

2.1 Subsampling procedures

Next, we describe in detail the three subsampling procedures we study. While there are other subsampling strategies and some may have advantages in specific applications, we focus on these three because they are popular and general.

Subsampling by node sampling

This is perhaps the most common subsampling scheme, also known as -sampling or induced subgraph sampling. A subset of nodes is first sampled from the node set independently at random, through independent Bernoulli trials with success probability . We then observe the subgraph induced by these nodes, which consists of all edges between the nodes in , that is, . The expected fraction of node pairs sampled under this scheme is of the entries in the adjacency matrix.

Sampling nodes is arguably the most intuitive way to subsample a network, as it is analogous to first selecting the objects of interest and then observing the relationships among them, a common way of collecting network data in the real world. The intuition behind node sampling is that the induced network should inherit the global structure of its parent network, such as communities. A variation where inference is on node-level statistics, such as node degree, will work well if these statistics are subsampled directly with the nodes themselves, rather than recomputed from the induced subgraph [20]. At the same time, since each subsampled network will be on a different set of nodes, it is less clear how well this method can work for more general inferential tasks. Recently, node sampling was shown to consistently estimate the distribution of some network statistics such as triangle density [27], under a sparse graphon model satisfying some regularity conditions. But this result only holds asymptotically, and the question of how to choose for finite samples was not considered.

Subsampling by row sampling

This subsampling method was first proposed for the cross-validation on networks in [9]. In row sampling, one first chooses a subset of nodes by independent Bernoulli trials with success probability ; then edges from nodes in and all nodes in are observed. This is equivalent to sampling whole rows from the adjacency matrix. This way of sampling is related to star sampling, a common model for social network data collected through surveys, where first a set of nodes is sampled, and then each of the sampled nodes reports all of its connections, especially relevant in situations where we may not know all of the nodes in . We assume that both 1s and 0s are reported accurately, meaning that a 0 represents that an edge is absent rather than the status of that connection is unknown. For undirected graphs with symmetric adjacency matrices, row sampling is equivalent to masking a square submatrix from the original adjacency matrix, that is, proportion of all node pairs observed.

Subsampling by node pair sampling

Finally, a network can be subsampled by directly selecting a subset of node pairs to observe. This sampling method was used in [25] for cross-validation on networks, and shown to be superior to row sampling in most cross-validation tasks considered. A subset of node pairs are selected from through independent Bernoulli trials with success probability , resulting in proportion of entries in the adjacency matrix observed. Again, zeros are treated as truly absent edges, and for undirected networks the sampling is adjusted to draw only from entries with and fill in the symmetric matrix accordingly. In [25], noisy matrix completion was applied first to create a low rank approximation to a full matrix before proceeding with cross-validation, and in principle, we have a choice of whether to use the subsampled matrix or its completed version for downstream tasks.

2.2 Constructing confidence intervals by resampling

Before we proceed to the data-driven method of choosing the settings for resampling, we summarize the algorithm we employ for constructing confidence intervals for network statistics based on a given resampling method with a given sampling fraction . This is a general bootstrap-style algorithm that can be applied to any network statistic computable on a subgraph.

Algorithm 1: constructing a bootstrap confidence interval.

Input: a graph , a network statistic , a subsampling method, a fraction , and the number of bootstrap samples .

-

1.

For

-

(a)

Randomly subsample subgraph , using the chosen method and fraction .

-

(b)

Calculate the estimate .

-

(a)

-

2.

Sort the estimates, , and construct a confidence interval for as , where and .

We give this version of bootstrap as the most commonly used one, but any other version of bootstrap based on the empirical cumulative distribution function (symmetrized quantiles, etc) can be equally well applied.

2.3 Choosing the subsampling fraction

For any method of network subsampling, we have to choose the sampling fraction; for consistency across different methods, we focus on the choice of the node pair fraction , which can be converted to for node or row sampling. In the classical bootstrap analogue, the -out-of- bootstrap, consistency results have been obtained for and as . These do not offer us much practical guidance for how to choose for a given finite sample size . The situation is similar for network bootstrap, with no practical rules for choosing implied by consistency analysis.

The iterated bootstrap has been commonly used to calibrate bootstrap results since it was proposed and developed in the 1980s [16, 1, 17]. An especially relevant algorithm for our purposes is the double bootstrap approach, originally proposed to improve coverage of bootstrap intervals [28]. A similar double bootstrap approach was proposed by [22] for -out-of- bootstrap, to correct a discrepancy between the nominal and the actual coverage for bootstrap confidence intervals due to a different sample size .

A generic double bootstrap algorithm proceeds as follows. Given the original i.i.d. sample and an estimator based on , consider a size bootstrap sample , drawn from with replacement, and the corresponding estimator . If the distribution of can be approximated by the distribution of conditional on , a confidence interval of nominal level can be constructed as , with defined as . This confidence interval typically has correct coverage up to , and double bootstrap proposes to improve this bound by using instead, where is defined as the solution to and can be approximated by , the solution to . In practice, this is implemented by sampling multiple size draws from each with replacement, and estimating as the proportion of confidence intervals based on percentiles of that cover for a set of candidate ’s. We then choose such that is the closest to among all candidate ’s.

Since different choice of subsampling fraction will lead to different level to coverage, here we propose an analogous double bootstrap method for network resampling. Compared to the i.i.d. case, instead of using size resamples sampled with replacement, we will generate resamples using network subsampling methods described above and instead of choosing an appropriate , we aim to choose an appropriate . The algorithm is detailed as follows:

Algorithm 2: choosing the sampling fraction .

Input: a graph , a network statistic , a subsampling method, a set of candidate fractions , and the number of bootstrap samples .

For ,

-

1.

For ,

-

(a)

Split all nodes randomly into two sets , .

-

(b)

On the subgraph induced by , calculate the estimate .

-

(c)

On the subgraph induced by , run Algorithm 1 with to construct a confidence interval for

-

(a)

-

2.

Calculate empirical coverage rate .

Output: .

One key difference from the i.i.d. case is that we estimate empirical coverage by checking if the confidence interval constructed on each subsample covers , not , the estimate on the original observed graph . This is because with network subsampling, we cannot preserve the original sample size , and if we are going to compare network statistics on two subsamples, we need to match the graph sizes (in this case ).

3 Empirical results

We evaluate the three different subsampling techniques and our method for choosing the subsampling fraction on four distinct tasks. Three focus on estimating uncertainty, in (1) the normalized triangle density, a global network summary statistic; (2) the number of communities under the stochastic block model, a network model selection parameter; and (3) coefficients estimated in regression with network cohesion, regression parameters estimated with the use of network information. Task (4) is to choose a tuning parameter for regression with network cohesion with a lasso penalty, and evaluate subsampling as a tuning method in this setting.

The networks for all the tasks will be generated from the stochastic block models (SBM). To briefly review, the SBM assigns each node to one of communities; the node labels , for are sometimes viewed as randomly generated from a multinomial distribution with given probabilities for each community, but for the purposes of this evaluation we treat them as fixed, assigning a fixed number of nodes , , to each community . Edges are then generated as independent Bernoulli variables, with probabilities of success determined by the communities of their incident nodes, , where is a symmetric probability matrix. We will investigate the effects of varying the number of communities, the number of nodes in each community, the expected overall edge density , and the ratio of probabilities of within- and between-community edges , to be defined below.

3.1 Normalized Triangle Density

Count statistics are functions of counts of subgraphs in network, and are commonly viewed as key statistical summaries of a network [3, 4], analogous to moments for i.i.d. data. Here we will examine a simple and commonly studied statistic, the density of triangles. A triangle subgraph is three vertices all connected to each other. Triangle density is frequently studied because it is related to the concept of transitivity, a key property of social networks where two nodes that have a “friend” in common are more likely to be connected themselves. We will apply resampling to construct confidence intervals for the normalized triangle density for a given network.

Definition 1 (edge density).

Let be a graph on vertices with adjacency matrix . The edge density is defined as

Definition 2 (normalized triangle density).

Let be a graph on vertices with adjacency matrix . The normalized triangle density is defined as

We generate the networks from the SBM models with number of communities set to either 1 (no communities, the Erdös-Rényi graph) or 3, with equal community sizes. We consider two values of , 300 and 600. The matrix of probabilities for the SBM is defined by, for all ,

The difficulty of community detection in this setting is controlled by the edge density and the ratio . We set , and vary the expected edge density from 0.01 to 0.1, with higher corresponding to more information available for community detection.

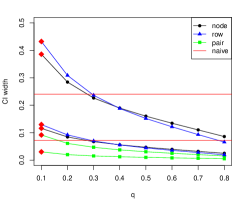

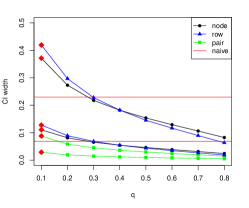

For a fair comparison, we match the proportion of adjacency matrix observed, , in all subsampling schemes, and vary from 0.2 to 0.8. Figures 2 and 3 show the widths and coverage rates for the confidence intervals constructed by the three subsampling procedures over the full range of , as well as the value of chosen by our proposed double bootstrap procedure.

(1) The confidence intervals generally get shorter as increases, since the subsampled graphs overlap more, and this dependence reduces variance. The coverage rate correspondingly goes down as goes up. Still, all resampling procedures achieve nominal or higher coverage for . The one exception to this is node pair sampling at the lowest value of with the lowest edge density, where most subsampled graphs are too sparse to have any triangles. In this case the procedure fails, producing estimated zero triangle density and confidence intervals with length and coverage both equal to zero.

(2) For a given value of , a larger effective sample size, either from higher density or from a larger network size , leads to shorter intervals, as one would expect.

(3) Node sampling produces shorter intervals than the other two schemes, while maintaining similar coverage rates for smaller values of . The coverage rate falls faster for node sampling for larger values of outside the optimal range.

(4) The double bootstrap procedure selects a value of that achieves a good balance of coverage rate and confidence interval width, maintaining close to nominal coverage without being overly conservative. It does get misled by the pathological sparse case with no triangles, highlighting the need of not deploying resampling algorithms without checking that there is enough data to preserve the feature of interest (triangles in this case) in the subsampled graphs.

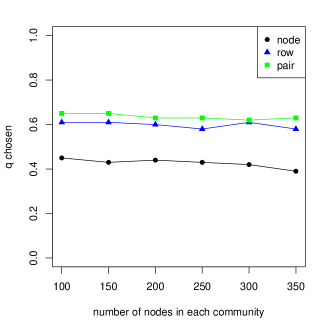

Figure 4 shows the value of chosen by the double bootstrap procedure as a function of graph size and edge density; both appear to not have much effect on the value of within the range considered. This suggests that as long as the structure of a network is preserved, a reasonable value of could be chosen on a smaller subsampled network, resulting in computational savings with little change in accuracy. And we can see that the chosen for node sampling is always smaller than that for the other two subsampling methods, so node sampling can be more computationally efficient, giving smaller subsampled graphs.

3.2 Number of Communities

Finding communities in a network often helps understand and interpret the network patterns, and the number of communities is required as input for most community detection algorithms [2, 12]. A number of methods have been proposed to estimate : as a function of the observed network’s spectrum [21], through sequential hypothesis testing [8], or subsampling [25, 9]. Subsampling has been shown to be one of the most reliable methods to estimate , but little attention has been paid to choosing the subsampling fraction and understanding its effects. Here we investigate the performance of the three subsampling schemes on this task and the choice of the subsampling fraction on the task of understanding uncertainty in , beyond the earlier focus on point estimation. Again, we match the proportion of adjacency matrix observed, , across all subsampling schemes.

For this task, we generate networks from an SBM with equal-sized communities. We vary the number of nodes , the expected edge density , and the ratio of within and between communities edge probabilities , which together control the problem difficulty.

For subgraphs generated by node sampling and row sampling, we estimate the number of communities using the spectral method based on the Bethe-Hessian matrix [21, 33]. For a discrete variable like , confidence intervals make little sense, and thus we look at their bootstrap distributions instead. These distributions are not symmetric, and rarely overestimate the number of communities. For subgraphs generated from node pair sampling, there is no complete adjacency matrix to compute the Bethe-Hessian for, and computing it with missing values replaced by zeros is clearly introducing additional noise. We instead estimate the number of communities from node-pair samples using the network cross-validation approach [25], first applying low-rank matrix completion with values of rank ranging from 1 to 6, and choosing the best by maximizing the AUC score for reconstructing the missing edges.

Figure 5 and 6 shows the results under different subsampling schemes. Since is discrete, instead of considering coverage rate, we compare methods by the proportion of correctly estimated . It is clear that whenever the community estimation problem itself is hard (the lowest values of , , and ), the number of communities is also hard to estimate. In these hardest cases, tends to be severely underestimated by node and row sampling, and varies highly for node pair sampling, and there is no good choice of . In all other scenarios, our proposed algorithm does choose a good value of balancing the bias and variability of by maintaining the empirical coverage rate above 90% and provide a set of candidate rank estimates.

3.3 Regression with node cohesion

In many network applications, we often observe covariates associated with nodes. For example, in a school friendship network survey, one may also have the students’ demographic information, grades, family background, and so on [18]. In such settings, the network itself is often used as an aid in answering the main question of interest, rather than the primary object of analysis. For instance, a network cohesion penalty was proposed as a tool to improve linear regression when observations are connected by a network [24]. They consider the predictive model

where , , and are the usual matrix of predictors, response vector, and regression coefficients, respectively, and is the individual effect for each node associated with the network. They proposed fitting this model by minimizing the objective function

| (1) |

where is a tuning parameter, and is the Laplacian of the network, with the degree matrix. The Laplacian-based network cohesion penalty has the effect of shrinking individual effects of connected nodes towards each other, ensuring more similar behavior for neighbors, and improving prediction [24].

This method provides a point estimate of the coefficients , but no measure of uncertainty, which makes interpretation difficult. Our subsampling methods can add a measure of uncertainty to point estimates . Since different subsampling methods will lead to different numbers of remaining nodes and thus different sample sizes for regression, we only replace the penalty term in (1) with , where is the corresponding Laplacian of the subsampled graph, and retain the full size sample of and for regression, to isolate the uncertainly associated with the network penalty. Alternatively, one could resample pairs together with the network to assess uncertainty overall. If we view the observed graph as a realization from a distribution , then the subsampled graphs under different subsampling methods can also be considered as a smaller sample from the same distribution and the estimation based on these smaller samples should still be consistent.

In addition to the three subsampling methods, we also include a naive bootstrap method. Let be the original adjacency matrix with nodes. Sample nodes with replacement, obtaining , and create a new adjacency matrix by setting if or , otherwise, . Note that with this method we cannot isolate the network as a source of uncertainty, and thus the uncertainty assessment will include the usual bootstrap sample variability of regression coefficients.

We generate the adjacency matrix as an SBM with with communities, with 200 nodes each. The individual effect is generated from the normal distribution , where are , , and for nodes from communities , , and , respectively. We vary the ratio of within and between edge probabilities and the standard deviation , to obtain different signal-to-noise ratios. Regression coefficients ’s are drawn from independently, and each response is drawn from . Figures 7, 8 and 9 show the results from this simulation.

Once again, as increases, the confidence intervals get narrower as subgraphs become more similar to the full network. However, for node and row sampling the coverage is also lower for the smallest value of , since the variance of bootstrap estimates of is larger for smaller , and the center of the constructed confidence interval gets further from the true . This may be caused by the fact that only a small fraction of nodes contribute to the penalty term. Node pair sampling is different: it gives better performance in terms of mean squared error for nearly all choices of , but since its confidence intervals are much narrower than those of node and row sampling, their coverage rate is very poor. All subsampling methods are anti-conservative and can only exceed the nominal coverage rate with small . One possible explanation is that in a regression setting, the impact of node covariates dominates any potential impact of the network. In all cases, Algorithm 2 chooses , which is either the only choice of that exceeds the nominal coverage rate or the choice of that gives a coverage rate close to the nominal coverage rate.

3.4 Regression with node cohesion and variable selection

We now consider the case when the dimension of node covariates is larger. The LASSO in [36] is a popular technique used for high dimensional i.i.d. data. By minimizing

the resulted estimator will have some entries shrunk to zero, and the set is known as the activated set. However, we need to balance the goodness-of-fit and complexity of the model properly by tuning , and model selection using cross validation is not always stable. In [29], the authors proposed stability selection, which uses subsampling to provide variable selection that is less sensitive to the choice of .

Here we propose a similar procedure for network data. With a graph observed, we first generate copies of using one of the resampling schemes. Then we minimize the objective function

over to construct activated set , and for each , calculate , the selection probability of each predictor.

The simulation setup is going as follows: A graph with 3 blocks with 200 nodes in each community is generated through SBM. Again we vary the ratio of within and between communities edge probability and the edge density of the graph. The individual effect is generated from , where are -1, 0, 1 for nodes from different communities respectively. 25 coefficients are drawn from independently, while the rest 75 are set to 0. Then is drawn from . The simulation result is shown in Figure 10, 11, 12.

The three resampling schemes give different performances in AUC when we vary the subsampling proportion : the performance of sampling is not sensitive to the choice of , while the performances of row sampling and node pair sampling improve or worsen respectively as increases.

Similar to what we observed in the last section, although the subsampling step does bring in uncertainty into the inference, in a regression setting, node covariates often play a more important role and the performance of resampling only the network structure is not as good as expected.

4 A data analysis: triangle density in Facebook networks

The data studied are Facebook networks of 95 American colleges and universities from a single-day snapshot taken in September 2005 [32, 37]. Size of these college networks ranges from around 800 to 30000 and their edge density ranges from 0.0025 to 0.06 as shown in Figure 13. Questions of interest include the distribution of normalized triangle density, understanding whether there are significant differences between colleges, and discovering relationships with other important network characteristics, such as size or edge density. Answering these and other similar questions requires uncertainty estimates in addition to point estimates. We construct these uncertainty estimates by applying our Algorithm 1 to obtain 90% confidence intervals, with subsampling fraction chosen by Algorithm 2. The results are shown in Figures 14 and 15.

There are several conclusions to draw from Figure 14. First, larger networks have higher normalized triangle density, despite normalization, and the confidence intervals are short enough to make these differences very significant. The same is true for normalized triangle density as a function of edge density: denser networks have lower normalized triangle density. This may be due to the fact that the edge density of these social networks goes down as the size of the network goes up, as shown in Figure 13, since the number of connections to each individual node grows much slower compared to the network size. Generally, the intervals from all three schemes have similar centers, and those obtained by pair sampling are somewhat shorter than those constructed with node and row sampling. Algorithm 2 generally chooses small values of subsampling proportion and Table 1 shows the percentage of different chosen out of the 95 graphs.

| node | 81.1% | 18.9% |

| row | 54.7% | 45.3% |

| pair | 96.8% | 3.2% |

It is evident from Figure 14 that there is a strong positive correlation between normalized triangle density and the number of nodes, and so it is instructive to look at triangle density while controlling for network size. We show residuals of normalized triangle density after regressing normalized triangle density on , in Figure 15. We observed that residuals mostly centered around zero, we larger variance as grows, suggesting there is no longer a dependence on . However, colleges such as UCF, USF and UC do have larger normalized triangle density compared to colleges of similar size, as seen in both Figure 14 and 15. Similar conclusion can be drawn for edge density.

While the example we gave is a simple exploratory analysis, it demonstrates the possibilities of much richer network data analysis when point estimates of network statistics are accompanied by uncertainty estimates.

5 Discussion

The three different subsampling methods we studied - node, row, and node pair sampling - are all reasonably good at achieving nominal coverage if the sampling proportion is selected appropriately, both for estimating uncertainty in network summary statistics and in estimated parameters of a model fitted to network data. While there is no universally best resampling scheme for all tasks, node sampling is a safe choice for most, especially compared to row sampling. The double bootstrap algorithm to choose we proposed works well empirically over a range of settings, especially for network statistics that are normalized with respect to the size or the edge density of the graph. Our algorithm is written explicitly for confidence intervals, and would thus need to modified to use with other losses, for instance, prediction intervals, but such a modification would be straightforward.

An obvious question arises about theoretical guarantees of coverage for bootstrap confidence intervals. We have intentionally made no assumptions on the underlying network model when deriving these bootstrap methods. Proving any such guarantee requires assuming an underlying probability model that generated the network. If such a model is assumed, then, for all common network models, there will be an alternative model-based bootstrap approach tailor-made for the model. For example, for latent variable models where network edge probabilities are functions of unobserved latent variables in , a bootstrap approach based on estimating latent variables in , bootstrapping those estimated variables, and generating networks from those has been shown to have good asymptotic properties [23]. Similarly, specialized methods for network moments have been proposed in [3, 27]. There is an inevitable tradeoff between keeping a method assumption-free and establishing theoretical guarantees. In this computational paper, we have focused on the algorithms themselves, and leave establishing guarantees for special cases for future work.

References

- [1] R. Beran. Prepivoting to reduce level error of confidence sets. Biometrika, 74(3):457–468, 1987.

- [2] S. Bhattacharyya and P. J. Bickel. Community detection in networks using graph distance. arXiv preprint arXiv:1401.3915, 2014.

- [3] S. Bhattacharyya and P. J. Bickel. Subsampling bootstrap of count features of networks. The Annals of Statistics, 43(6):2384 – 2411, 2015.

- [4] P. J. Bickel, A. Chen, and E. Levina. The method of moments and degree distributions for network models. The Annals of Statistics, 39(5):2280 – 2301, 2011.

- [5] P. J. Bickel and D. A. Freedman. Some Asymptotic Theory for the Bootstrap. The Annals of Statistics, 9(6):1196 – 1217, 1981.

- [6] P. J. Bickel, F. Götze, and W. R. van Zwet. Resampling fewer than n observations: Gains, losses, and remedies for losses. Statistica Sinica, 7(1):1–31, 1997.

- [7] P. J. Bickel and E. Levina. Covariance regularization by thresholding. The Annals of Statistics, 36(6):2577 – 2604, 2008.

- [8] P. J. Bickel and P. Sarkar. Hypothesis testing for automated community detection in networks. Journal of the Royal Statistical Society. Series B (Statistical Methodology), 78(1):253–273, 2016.

- [9] K. Chen and J. Lei. Network cross-validation for determining the number of communities in network data. Journal of the American Statistical Association, 113(521):241–251, 2018.

- [10] B. Efron and R. Tibshirani. Bootstrap Methods for Standard Errors, Confidence Intervals, and Other Measures of Statistical Accuracy. Statistical Science, 1(1):54 – 75, 1986.

- [11] B. Efron and R. J. Tibshirani. An introduction to the bootstrap. CRC press, 1994.

- [12] S. Fortunato. Community detection in graphs. Physics reports, 486(3-5):75–174, 2010.

- [13] O. Frank. Estimation of the Number of Connected Components in a Graph by Using a Sampled Subgraph. Scandinavian Journal of Statistics, 5:177–188, 1978.

- [14] Y. R. Gel, V. Lyubchich, and L. L. R. Ramirez. Bootstrap quantification of estimation uncertainties in network degree distributions. 7(1):1–12, 2017.

- [15] A. Green and C. R. Shalizi. Bootstrapping exchangeable random graphs. arXiv preprint arXiv:1711.00813, 2017.

- [16] P. Hall. On the Bootstrap and Confidence Intervals. The Annals of Statistics, 14(4):1431 – 1452, 1986.

- [17] P. Hall and M. A. Martin. On bootstrap resampling and iteration. Biometrika, 75(4):661–671, 1988.

- [18] K. M. Harris. The national longitudinal study of adolescent to adult health (add health), waves i & ii, 1994–1996; wave iii, 2001–2002; wave iv, 2007-2009; wave v, 2016-2018 [machine-readable data file and documentation]. 2018.

- [19] J. M. Klusowski and Y. Wu. Estimating the number of connected components in a graph via subgraph sampling. Bernoulli, 26(3):1635–1664, Aug. 2020.

- [20] E. D. Kolaczyk. Sampling and Estimation in Network Graphs, pages 1–30. Springer New York, New York, NY, 2009.

- [21] C. M. Le and E. Levina. Estimating the number of communities by spectral methods. Electronic Journal of Statistics, 16(1):3315 – 3342, 2022.

- [22] S. M. S. Lee. On a Class of m out of n Bootstrap Confidence Intervals. Journal of the Royal Statistical Society. Series B (Statistical Methodology), 61(4):901–911, 1999.

- [23] K. Levin and E. Levina. Bootstrapping networks with latent space structure, 2019.

- [24] T. Li, E. Levina, and J. Zhu. Prediction models for network-linked data. The Annals of Applied Statistics, 13(1):132 – 164, 2019.

- [25] T. Li, E. Levina, and J. Zhu. Network cross-validation by edge sampling. Biometrika, 107(2):257–276, 04 2020.

- [26] Q. Lin, R. Lunde, and P. Sarkar. On the theoretical properties of the network jackknife. In Proceedings of the 37th International Conference on Machine Learning, volume 119 of Proceedings of Machine Learning Research, pages 6105–6115. PMLR, 13–18 Jul 2020.

- [27] R. Lunde and P. Sarkar. Subsampling sparse graphons under minimal assumptions. arXiv preprint arXiv:1907.12528, 2019.

- [28] M. A. Martin. On Bootstrap Iteration for Coverage Correction in Confidence Intervals. Journal of the American Statistical Association, 85(412):1105–1118, 1990.

- [29] N. Meinshausen and P. Bühlmann. Stability selection. Journal of the Royal Statistical Society: Series B (Statistical Methodology), 72(4):417–473, 2010.

- [30] D. N. Politis and J. P. Romano. The stationary bootstrap. Journal of the American Statistical Association, 89(428):1303–1313, 1994.

- [31] D. N. Politis, J. P. Romano, and M. Wolf. Subsampling. Springer Series in Statistics. Springer New York, New York, NY, 1999.

- [32] R. A. Rossi and N. K. Ahmed. The network data repository with interactive graph analytics and visualization. 2015.

- [33] A. Saade, F. Krzakala, and L. Zdeborová. Matrix Completion from Fewer Entries: Spectral Detectability and Rank Estimation. arXiv:1506.03498 [cond-mat, stat], Jan. 2016.

- [34] J. Shao and C. F. J. Wu. A General Theory for Jackknife Variance Estimation. The Annals of Statistics, 17(3):1176 – 1197, 1989.

- [35] M. E. Thompson, L. L. Ramirez Ramirez, V. Lyubchich, and Y. R. Gel. Using the bootstrap for statistical inference on random graphs. Canadian Journal of Statistics, 44(1):3–24, 2016.

- [36] R. Tibshirani. Regression shrinkage and selection via the lasso. Journal of the Royal Statistical Society. Series B (Methodological), 58(1):267–288, 1996.

- [37] A. L. Traud, P. J. Mucha, and M. A. Porter. Social structure of Facebook networks. Physica A: Statistical Mechanics and its Applications, 391(16):4165–4180, Aug. 2012.

- [38] Y. Zhang, E. D. Kolaczyk, B. D. Spencer, et al. Estimating network degree distributions under sampling: An inverse problem, with applications to monitoring social media networks. The Annals of Applied Statistics, 9(1):166–199, 2015.