Negative-ResNet: Noisy Ambulatory Electrocardiogram Signal Classification Scheme

Abstract

With recently successful applications of deep learning in computer vision and general signal processing, deep learning has shown many unique advantages in medical signal processing. However, data labelling quality has become one of the most significant issues for AI applications, especially when it requires domain knowledge (e.g. medical image labelling). In addition, there might be noisy labels in practical datasets, which might impair the training process of neural networks. In this work, we propose a semi-supervised algorithm for training data samples with noisy labels by performing selected Positive Learning(PL) and Negative Learning(NL). To verify the effectiveness of the proposed scheme, we designed a portable ECG patch - iRealCare and applied the algorithm on a real-life dataset. Our experimental results show that we can achieve an accuracy of 91.0 %, which is 6.2 % higher than a normal training process with ResNet. There are 65 patients in our dataset and we randomly picked 2 patients to perform validation.

This is a post-peer-review, pre-copyedit version of an article published in Neural Computing and Applications. The final authenticated version is available online at: https://doi.org/10.1007/s00521-020-05635-7”.

I Introduction

Machine learning and deep learning have become the most popular data analysis techniques over these years due to its high accuracy, reliability and repeatable results. Especially in the field of biomedical image processing [1], where these cutting-edge techniques can be used to assist medical professionals with their diagnosis. In traditional medical imaging, diagnosis mainly relays on human eyes and experiences, which can sometime be inconsistent and problematic. Neural network is proved to possess an extraordinary ability to recognize patterns and make classifications. It is able to capture high-level features of signals and images, which are very subtle or even impossible for human eyes. As such, neural network is a powerful tool for solving biomedical image processing problems and is widely used over the past few years.

However, neural network, a data-driven method, relies heavily on the training dataset, which will lead to unexpected performance if it does not have a decent size or proper label [2]. In many medical research works, it is challenging to obtain a dataset with a huge size and labelling them correctly due to many factors(e.g. cost, domain knowledge).

Labelling such a large dataset tends to be erroneous due to human mistakes and medical misjudgment. It will therefore need more quality control (QC) procedures such as reliability cross-check [3]. And these will not always be possible as QC can double the number of data that needs to be labelled.

In this work, to overcome these issues and design a more robust training scheme that can be used for data classification when the biomedical signal is collected under an imperfect condition, we proposed a Negative-ResNet with 5 residual blocks (12-layer Convolutional Neural Network in total), which can be trained and showed good performance even when the dataset contains a significant number of noisy labels.

Unlike usual Positive Learning (PL), the Negative Learning (NL) training scheme we mention above provides the information that a given signal doesn’t belong to a certain label, which is more likely to be correct. For example, in Fig. 1, we plot 6 types of data in total in the dataset. If we assume a label might be noisy: For positive learning, information ”This is a Type 2” will be provided, As such, there’s only 1/6 chance that the label is correct. However, for negative learning, information ”This is not a Type 2” will be given. The probability of correctness increases to 5/6. Hence, NL will usually provide more precise information when the label is noisy.

To verify the effeteness of the training scheme, we designed a portable Electrocardiogram (ECG) patch - iRealCare to collect and construct our ambulatory ECG dataset. There are 5 types of signals in this dataset, which indicates 5 different status of the heartbeat signal. And the size of the dataset is more than 4 million of heartbeat signals.

The main contributions of this work are listed as below:

-

•

We propose a novel semi-supervised training scheme: a confidence-level-based training method, following by a negative training scheme using a deep residual block based neural network backbone.

-

•

We develop a novel portable IoT ECG Patch named iRealCare and constructed a large real-patient dataset using this IoT device with more than 4 million ECG signal waveforms.

-

•

We apply the proposed scheme on our dataset and achieve a satisfactory classification accuracy of 91.0 %, which is 6.2 % higher than a normal training process with ResNet.

The remainder of this manuscript is organized as below: Section II mainly talks about the previous relevant study related to our work, such as ECG signal and noisy label training. Section III describes how our dataset is constructed and issues that comes with it. It mainly introduces the ECG patch, the dataset and the data pre-processing procedure. Section IV demonstrates the methodology of the project, including detailed explanations of the concept of negative learning and confidence-level-based learning; the novel structure of the residual block. Section V introduces our proposed deep learning architecture and the experiments for performing and evaluating the proposed method. Section VI gives analytical results of the procedure. In the end, Section VII concludes the work.

II Related Work

II-A ECG Signal

Arrhythmia, also known as irregular heartbeat, is an important indicator for most of the heart diseases [4]. Many infrequent arrhythmias are harmless while some may cause serious damage or even death [4].

Electrocardiogram(ECG) is currently widely used for detecting arrhythmia by measuring the electrical activities generated by the heart as contracts [4, 5, 6, 7]. An ECG signal can be characterized by two features: peaks and intervals. Each peak (P, Q, R, S, T) has its normal amplitude, and each interval (P-R, R-R, Q-R-S, S-T) has its duration period. Fig. 2 illustrates an ECG signal, and Table I shows the ECG features and their typical values[8, 9].

| Feature | Value |

|---|---|

| PR interval | 0.12-0.20s |

| P-wave interval | 0.00-0.12s |

| QRS | 0.06-0.11S |

| RR interval | 0.60-1.00s |

| R-wave amplitude | 0.50mV |

| QT interval | 0.33-0.43s |

| T-wave amplitude | 0.50mV |

| P-wave amplitude | 0.25mV |

However, ECG signal data are usually of large size, and it would be a huge workload for cardiologists to review all of them manually. Hence, over the past few decades, many researchers and engineers have devoted themselves to design robust and accurate equipment and algorithms for ECG based arrhythmia detection and classification.

Conventionally, ECG decomposition and hand-crafted manual features are used for ECG classification. In [10], [1] and [11], the wavelet transform was used to extract features of each QRS complex. In [12] and [13], adaptive filtering was designed and used for arrhythmia detection.

Even though good results were reported, these model-based feature extraction methods are not generic and robust. Individual differences in ECG signals are significant and may lead to poor performance for different individuals.

Neural networks which are able to extract high-level features are proved to be a powerful tool to learn distributions of a dataset and generalize from training data [14, 15]. And the authors of [16] and [17] both presented a machine learning based method which consisted of a 1-D Convolutional Neural Network (CNN) for real-time ECG classification. [17] used a nine-layer CNN to classify five classes of arrhythmia and reached an accuracy of 94% on MIT-BIH dataset. Even though [17] reached a very high accuracy, it failed to consider performance on individual patients. [16] presented a better way of introducing patient-specific training using transfer learning. And it is also based on MIT-BIH database.

Besides CNN, other neural network backbone models are also commonly used in ECG classification. In [18], a 3-layer Recurrent Neural Network was used for 5-type classification and an 85% of accuracy was performed. In [19, 20, 21], long short-term memory(LSTM) showed very promising result in arrhythmia detection and classification tasks.

Even though [12], [16], [10], [1] and [14] showed very significant improvement in classification than using naked eyes, they suffer from either patient-generation ability or adaptability to the real-world data.

In [22] and [23] , patient-specific ECG classifiers were built using transfer learning of 2-D convolutional neural networks. A convolutional neural network was trained and used for feature extraction first. Then an individual classifier was trained for each patient transferring the whole pre-trained model from the training set domain to a specific patient domain.

However, these researches were all built on the MIT-BIH dataset, which is a very classic public and wholesome dataset [24]. The data is clean and collected from a 24-hour monitoring Holter [25, 26], which has a significant difference from the data collected by an ambulatory IoT patch. In many Smart Health applications involving ECG, the collected data will not be as clean and high-quality as the MIT-BIH dataset and therefore requires extra data analysis.

II-B Noisy Label Training

In the era of big data, obtaining large-scale dataset with high quality and precise labels is one of the most important procedures for most of data analysis related projects, especially when data-driven methods such as machine learning and deep learning are applied. However, annotating such a large dataset usually requires tremendous human resources and sometimes even requires domain knowledge. As such, human mistakes occur very often due to the reasons mentioned above, which will lead to a dataset with a certain number of noisy labels. To solve this problem, numerous studies are done in the field of noisy label training in the past several decades. And here, we refer to this survey [27] as a comprehensive review.

Many recent studies have illustrate directly training the noisy labels will impair the training scheme and therefore negatively impact the classification accuracy [28]. To avoid this issue, there are 2 major types of noisy label training schemes: Noisy Model Based Methods and Noisy Model Free Methods.

II-B1 Noisy Model Based Methods

Some approaches advocate to use noise model based methods which mainly aims to model the noise structure and extract the noise feature, so that the information of the noise can be estimated and applied on the training scheme. The performance of this method usually depends on the accuracy of noisy estimation. Hence, it’s more suitable when prior knowledge about noise structure is known.

In [29, 30, 31, 33, 34], noise channels were modelled at the training phase and the noise got removed at the evaluation phase. Even through good results were performed, the most significant problem in this method is the scale-ability. The calculation would become intractable when the number of class increases in classification task.

Some approaches attempted to identify and clean noisy labels by picking the suspicious samples and send them to human for further annotation [35, 36]. However, this could still be not feasible when the dataset is at a large size.

Other approaches including dataset pruning [37, 38, 39], sample choosing [40, 41, 42, 43], sample importance weighting [44, 45, 46, 47] also show significant improvement on noisy data classification. However, all of these methods are highly dependent on the calculation and estimation of the noisy structure. As such, prior information tends to be essential in this case.

II-B2 Noisy Model Free Methods

On the other hand, instead of building a noise structure model, noisy model free methods tends to achieve label noise robustness. These methods requires that the robustness of the classifier, which cannot be too sensitive to noise.

Some methods aimed to design a robust loss function so that the noisy would not decrease the accuracy [48, 49, 50, 51]. Many researchers proposed different type of loss function which showed good result, such as distance-based loss [52], information-theoretic loss [53], classification-calibrated loss functions [54].

With the development of deep neural networks, researchers tend to transfer from hand-designed features to autonomously learned machine learning techniques. So does in noisy label training, a machine learning algorithm called Meta Learning is widely used to learn the high level features on their own [55, 56, 57].

III ECG Data Acquisition, Processing and Accuracy considerations

III-A iRealCare ECG patch

Our dataset was collected by a device named iRealCare [63] as shown in Fig.3. It’s a portable ECG monitoring device with signals sampled at 250Hz. The patch is lightweight with only 13 grams. In addition, the patch has the characteristic of being able to perform long-term continuous monitoring, which can last 72 hours. As such, it has significant advantages over the traditional ECG Holter.

III-B Dataset

Our experiments were performed on an ECG signal dataset collected from 65 patients. 4,831,517 heartbeats were identified considering the position of QRS, among which heartbeats were divided into 6 categories,

Normal Heartbeat(N); Ventricular premature beat (V); Supraventricular premature beat(S); Atrial fibrillation(A); Electromagnetic Interference(E) and Motion Interference(Q). In order to construct a balanced dataset, each category contains the same number of data points. Therefore, no bias will be introduced to the neural network during training because of the different numbers of training samples for each category. In other words, each category is equally represented by data samples, which avoids the over-fitting problem for certain categories. Table II shows the number of six types of ECG signals.

| Type | Meaning | number |

|---|---|---|

| N | Normal Heartbeat | 3,501,003 |

| V | Ventricular premature beat | 194,469 |

| S | Supraventricular premature beat | 45,536 |

| A | Atrial fibrillation | 96,052 |

| E | Electromagnetic Interference | 46,437 |

| Q | Movement Interference | 148,020 |

As mentioned before, the dataset generated by the patch would be more noisier than the Holter due to the limitation of the IoT patch, which could generate a significant number of Electromagnetic Interference. In addition, a considerable amount of movement interference will be introduced when the patients wearing the patch every day. Besides, there might also be some errors in labelling as well.

A set of possible noisy labels are presented below:

In our dataset, there are a few signals that have very similar features as shown in Fig. 5. As such, we would consider it as a ”clean label”.

However, there are also a few ”noisy labels” in this dataset, such as the one shown in Fig. 6. It was labelled as type V, but it’s revised to be other type because it doesn’t show the significant features of type V (Ventricular premature beat).

III-C Data Segmentation

To identify the QRS position, both low pass filtering and high pass filtering are used to mitigate the noise and locate the QRS. Low pass filters can be used to cancel the high pass noise efficiently while the high pass filters can limit the interference of P and T wave. After filtering using low pass and high pass, we used hand-crafted features to identify the QRS wave respectively.

III-D Data Pre-processing

Data normalization is an essential step before feeding data into neural network for training. In deep learning, data are usually normalized to a mean of close to zero, which will usually speed up the learning process and result in a faster convergence. The reasons are as follows.

We define the binary cross-entropy loss as,

| (1) |

where is the training label vector, is the input vector and is the weight matrix. If we take the partial derivative with respect to each weight, we have,

| (2) |

where is corresponding activation output of this weight, is the network output probability and is the training label.

Weights update according to the following,

| (3) |

where is the learning rate.

Even though magnitudes are different, weights will be updated with some dependence on each other. And that usually indicates a slow convergence, because independent weight updates ensure all possible combinations of weights, which is crucial for approaching a local minimum.

Therefore, in this work, we normalized our data to the range of to according to Eq. (4), which also makes sure the scale of our input data is consistent, suppressing the outliers.

| (4) |

IV METHODOLOGY

In this section, we discuss the methodology of our work.

To implement this training scheme, there are four steps we need to do:

1. Find noisy signals by performing Confidence-level-based Learning;

2. Generate a complementary labels for noisy labels;

3. Perform negative learning by flipping the loss and gradient;

4. Change the outcome from ”NOT Type X” into ”Type X”.

IV-A Negative Learning and Confidence-level-based Learning

IV-A1 Positive Training

Positive training is the traditional way of training a neural network, where the neural network is trained to learn the distribution of a given dataset and corresponding labels [64]. For example, for a neural network to learn what is an image of a cat, we would “show” many pictures of cat and “tell” the neural network that “it’s a cat” multiple times until the neural network can recognize cats on its own.

IV-A2 Negative Training

Negative training is the opposite of positive training. Instead of telling the neural network “it is a cat”, we tell it “it is not a dog”. This idea was first proposed in [65] and used for increasing the accuracy of the label and decreasing the noisy labels. The labels used for negative training processes are called negative labels in this work.

IV-A3 Example

Usually, negative labels are more likely to be correctly labelled compared to positive labels under the same circumstances. This is because the accuracy of deciding whether an object belongs to a label is lower than deciding whether it doesn’t belong to the label. For example, there are totally 6 types of animals in a given dataset. A cat image is mislabelled as a dog image accidentally, which might impair the training process. However, negative labelling process might give it a ”not a monkey” label, which is still more likely to be correct(= 5/6).

IV-A4 Generate Complementary Labels

As such, we can generate correct negative labels from positive labels even if it is not labelled correctly.

‘

Therefore, we can use a combination of positive training and negative training to improve the network performance when the training dataset is noisy. The authors of [64] showed the performance of this training system on an ImageNet dataset with randomly added Gaussian noise.

To implement negative learning, a complementary label needs to be generated in the first place, which means the input doesn’t belong to the complementary label . For example, if the data was labelled as Type 1 and its confidence level is relatively low. Then we will find its complementary label by randomly select a type from Type 2 - Type 6. For instance, we select a Type 4 in this case. And the probability of ”this signal is NOT Type 4” is 5/6, hence the probability of ”this signal is Type 4” is 1/6.

The detailed algorithm of generating a complementary label and negative label is shown in Fig. 8.

IV-A5 Negative Training implementation

In the implementation, a negative version of the cross-entropy loss function is used, in which the output possibility is flipped. For example, for a specific type, if the output possibility is in a regular cross-entropy loss measurement, the flipped one will be . In implementation, the ”not monkey” training is realized by reverting the activation function at the output layer, such that when the gradient is passed through layers, the model will fit the opposite way as opposed to positive learning.

In more detail, if we want to train a ”not monkey” label, we first calculate the output activation function for a label of ”monkey”, which contains the information on how the weights affect the network output for a label of ”monkey”. Then, we take the negative of the output activation function, which holds the information on how the weights affect the network output for a label of ”not monkey”. Hence, passing gradients calculated in this way will result in fitting the opposite way of ”monkey”, i.e. ”not monkey”, yield a negative learning method. By doing this, we can ’trick’ the training process to negatively fit our negative labels. Hence, we can get our negative label here. In the implementation, we don’t need to expand the labelling by considering other negative cases.

The main difference between positive training and negative training is the loss function.

Generally, when training with positive learning, the expression of cross entropy loss function is given by:

| (5) |

where denotes the cross entropy loss, is the number of class, denotes current digit of the training label (which assumes to be one-hot encoding), and denotes output probability of current class. The equation measures the performance of a classification model whose output is a probability value between and . It calculates and accumulates the difference between predicted and actual labels.

Here, we sum up the loss of each type by calculating the product of and .

However, negative training has exactly opposite loss function, where the output probability is reverted.

The expression of the cross entropy loss function is

| (6) |

IV-B ResNet and Residual block

Residual block is the building block of our proposed model. It contains a normal convolutional layer, except for a residual connection from the input of the block to output of the block. It was first proposed in [66] as a solution for vanishing gradient when neural network goes deeper. Similar to [66], let’s consider as the underlying mapping which the residual block is supposed to learn and denotes input to the residual block. The idea of residual learning is that rather than learning the underlying mapping , we learn the residual function . And the underlying mapping can be reconstructed by an identity connection , which simply adds input to the output.

It has been proved that residual function F(x) is easier to learn and with extra information passed from input [66], the model can be trained deeper and deeper. If the output dimension is different from the input dimension, for example filter number changes, a linear projection needs to perform at the identity connection as (where is a matrix with a dimension of ab), ensuring the identity connection’s dimension matches the output dimension, such that we can perform an element-wise addition.

Fig. 9 shows the structure of our proposed residual block. It consists of two 1-D convolution layers followed by Batch Normalization, ReLu activation and Dropout which improve the convergence of the training process. As can be seen, an identity connection is established from the input to the output with a simple addition operation.

V Experimental Setup

V-A Neural Network Backbone Model

This backbone is a residual block based deep 1-D Convolution neural network which consists of 12 convolutional layers as shown in Fig. 10. Residual blocks which consist of a novel residual connection from the input to the output propagating information to deeper layers are basic building blocks of this backbone model [66]. The number of filters in the residual block is double every four residual block and a max-pooling operation is performed on the residual connection such that the input is sub-sampled by half before adding to its output for every two residual blocks.

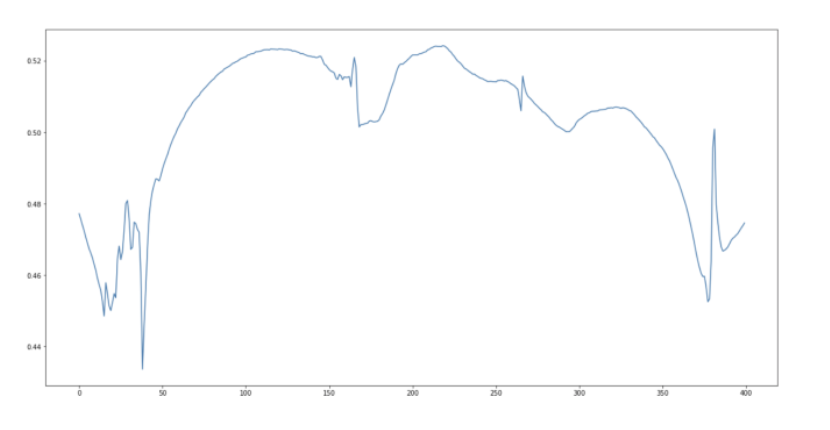

We have constructed a baseline model with the same structure but normal positive training scheme. The result of baseline model is shown in 11. The highest accuracy achieved at convergence is .

V-B Training Process

The whole training process of the system is shown in Fig. 12. The raw data is firstly feed into the CNN. Later on, the dataset is divided into clean data and noisy data based on the confidence level. Here, the confidence level refers to the output probability of the model. If the output confidence level is higher than a certain threshold, it is considered as clean data, vice versa.

The clean data (high confidence level) would be trained positively, and the noisy data (low confidence level) would go through negative learning process. Loss calculated from both positive and negative learning will be added together, and then passed back for gradient descent. Weights will be updated at the same time.

Different confidence levels are tested in our experiments. As can be seen from the results of Table III, the best result can be achieved when the confidence level is set to , which is around higher than the baseline model. The confidence level controls how many data samples should be trained positively and how many data should be trained negatively. Therefore, it reflects the level of noisy labels contained in the dataset. And the best confidence level varies depending on different datasets.

Note that originally there are six labels which consist of two interference labels: Type Q and Type E.

| Confidence Level | Accuracy |

|---|---|

| 99% | 83.0% |

| 90% | 87.3% |

| 80% | 90.7% |

| 70% | 88.4% |

| 60% | 87.2% |

| 50% | 85.0% |

| 40% | 83.1% |

| 30% | 83.1% |

As such, the accuracy reaches when training with confidence level. The accuracy and loss plot of training with of confidence level is shown .

Note: The confidence level for Type A is set to be 50% such that few Type A sample will go through the Negative Learning. It is because Type A in our dataset is confirmed by our medical expert to be of high labeling correctness.

VI ASSESSMENT CRITERION AND RESULT ANALYSIS

In this work, we use accuracy, precision, and recall to evaluate the performance of our classifier. These are the commonly used and standardized parameters for machine learning and deep learning. The performance of the model can be determined by these parameters since it can not only show how precise the model is, but also whether the model is biased.

In the confusion matrix below, Type Q and Type E are combined as Type Q.

The definitions of these parameters are listed below, where TP stands for True Positive, FP stands for False Positive, and FN stands for False Negative:

| (7) |

| (8) |

| (9) |

Here, we also used -Fold cross-validation to validate our results. is chosen to be two. In more detail, two patients will be selected randomly and the remaining patients will be used as training data.

VI-A Baseline Model

The confusion matrix for the baseline model is shown in Fig. 14. The precision and recall of the baseline model are shown in Table IV.

| N | V | S | Q | A | |

|---|---|---|---|---|---|

| mean Precision | 0.80 | 0.87 | 0.79 | 0.92 | 0.81 |

| mean Recall | 0.81 | 0.88 | 0.91 | 0.81 | 0.87 |

| mean F1 score | 0.80 | 0.88 | 0.85 | 0.86 | 0.84 |

VI-B Negative ResNet

The confusion matrix, precision and recall of optimal Negative ResNet are shown in Fig. 15 and Table V, respectively.

| N | V | S | Q | A | |

|---|---|---|---|---|---|

| mean Precision | 0.82 | 0.95 | 0.92 | 0.95 | 0.88 |

| mean Recall | 0.86 | 0.94 | 0.95 | 0.92 | 0.87 |

| mean F1 score | 0.84 | 0.94 | 0.93 | 0.94 | 0.87 |

Generally speaking, the result of negative training is better than the normal training process, especially for interference, where an increment of accuracy can be achieved.

However, for type A, the accuracy remains the same when going through negative training. This is mainly because for type A (Atrial fibrillation), the original labelling process is relatively accurate. As such, using negative learning in this case will not have a significant improvement in the accuracy of type A. It proves our idea that negative learning performs better in a noisy dataset.

VII CONCLUSION AND FUTURE DIRECTION

This work introduced a single-lead ambulatory ECG patch iRealCare, which was used to construct a large real-patient dataset. In addition, a residual block based neural network with a novel negative training scheme was proposed to train an ECG classifier from this dataset. Even with a considerable amount of erroneous labels in the dataset, our proposed training scheme showed a satisfactory and better result compared to the baseline model, a 6.2% of increase in accuracy was observed and the overall accuracy reached 91.0%.

Our proposed training scheme is useful in reducing the reliance on quality of the training dataset, such that the training performance can be improved and it can be extended to more applications.

Further down this research, we believe that neural network and our proposed training scheme can not only applied into the cardiology diagnosis process, it can improved other medical diagnosis processes in a similar manner as well. We will keep on exploring towards this direction and hopefully applying it on other biomedical signal processing field.

References

- [1] S. Yu and Y. Chen, ”Electrocardiogram beat classification based on wavelet transformation and probabilistic neural network”, Pattern Recognition Letters, vol. 28, no. 10, pp. 1142-1150, 2007. Available: 10.1016/j.patrec.2007.01.017.

- [2] Y. LeCun, Y. Bengio and G. Hinton, ”Deep learning”, Nature, vol. 521, no. 7553, pp. 436-444, 2015. Available: 10.1038/nature14539

- [3] B. C. Russell, A. Torralba, K. P. Murphy, and W. T. Freeman, ”LabelMe: a database and web-based tool for image annotation,” International journal of computer vision, vol. 77, no. 1-3, pp. 157-173, 2008.

- [4] H. Huikuri, A. Castellanos and R. Myerburg, ”Sudden Death Due to Cardiac Arrhythmias”, New England Journal of Medicine, vol. 345, no. 20, pp. 1473-1482, 2001.

- [5] N. El-Sherif et al., ”Definition of the best prediction criteria of the time domain signal-averaged electrocardiogram for serious arryhthmic events in the postinfarction period,” Journal of the American College of Cardiology, vol. 25, no. 4, pp. 908-914, 1995.

- [6] C. Ramanathan, R. N. Ghanem, P. Jia, K. Ryu, and Y. Rudy, ”Noninvasive electrocardiographic imaging for cardiac electrophysiology and arrhythmia,” Nature medicine, vol. 10, no. 4, pp. 422-428, 2004.

- [7] T. G. Farrell et al., ”Risk stratification for arrhythmic events in postinfarction patients based on heart rate variability, ambulatory electrocardiographic variables and the signal-averaged electrocardiogram,” Journal of the American College of Cardiology, vol. 18, no. 3, pp. 687-697, 1991.

- [8] Y. Yeh and W. Wang, ”QRS complexes detection for ECG signal: The Difference Operation Method”, Computer Methods and Programs in Biomedicine, vol. 91, no. 3, pp. 245-254, 2008.

- [9] A. L. Goldberger, Z. D. Goldberger, and A. Shvilkin, Clinical electrocardiography: a simplified approach e-book. Elsevier Health Sciences, 2017.

- [10] P. Addison, J. Watson, G. Clegg, M. Holzer, F. Sterz and C. Robertson, ”Evaluating arrhythmias in ECG signals using wavelet transforms”, IEEE Engineering in Medicine and Biology Magazine, vol. 19, no. 5, pp. 104-109, 2000. Available: 10.1109/51.870237.

- [11] M. Elgendi, A. Mohamed, and R. Ward, ”Efficient ECG compression and QRS detection for e-health applications,” Scientific reports, vol. 7, no. 1, pp. 1-16, 2017.

- [12] N. Thakor and Y. Zhu, ”Applications of adaptive filtering to ECG analysis: noise cancellation and arrhythmia detection”, IEEE Transactions on Biomedical Engineering, vol. 38, no. 8, pp. 785-794, 1991. Available: 10.1109/10.83591.

- [13] C. Venkatesan, P. Karthigaikumar, A. Paul, S. Satheeskumaran, and R. Kumar, ”ECG signal preprocessing and SVM classifier-based abnormality detection in remote healthcare applications,” IEEE Access, vol. 6, pp. 9767-9773, 2018.

- [14] J. Deng, W. Dong, R. Socher, L. Li, Kai Li and Li Fei-Fei, ”ImageNet: A large-scale hierarchical image database,” 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, 2009, pp. 248-255.

- [15] A. Majumdar and R. Ward, ”Robust greedy deep dictionary learning for ECG arrhythmia classification,” 2017 International Joint Conference on Neural Networks (IJCNN), Anchorage, AK, 2017, pp. 4400-4407.

- [16] S. Kiranyaz, T. Ince and M. Gabbouj, ”Real-Time Patient-Specific ECG Classification by 1-D Convolutional Neural Networks”, IEEE Transactions on Biomedical Engineering, vol. 63, no. 3, pp. 664-675, 2016. Available: 10.1109/tbme.2015.2468589.

- [17] Acharya, U. Rajendra, et al. ”A deep convolutional neural network model to classify heartbeats.” Computers in biology and medicine 89 (2017): 389-396.

- [18] S. Singh, S. K. Pandey, U. Pawar, and R. R. Janghel, ”Classification of ECG arrhythmia using recurrent neural networks,” Procedia computer science, vol. 132, pp. 1290-1297, 2018.

- [19] Ö. Yildirim, ”A novel wavelet sequence based on deep bidirectional LSTM network model for ECG signal classification,” Computers in biology and medicine, vol. 96, pp. 189-202, 2018.

- [20] S. L. Oh, E. Y. Ng, R. San Tan, and U. R. Acharya, ”Automated diagnosis of arrhythmia using combination of CNN and LSTM techniques with variable length heart beats,” Computers in biology and medicine, vol. 102, pp. 278-287, 2018.

- [21] O. Yildirim, U. B. Baloglu, R.-S. Tan, E. J. Ciaccio, and U. R. Acharya, ”A new approach for arrhythmia classification using deep coded features and LSTM networks,” Computer methods and programs in biomedicine, vol. 176, pp. 121-133, 2019.

- [22] M. Salem, S. Taheri and J. Yuan, ”ECG Arrhythmia Classification Using Transfer Learning from 2- Dimensional Deep CNN Features,” 2018 IEEE Biomedical Circuits and Systems Conference (BioCAS), Cleveland, OH, 2018, pp. 1-4.

- [23] S. Kiranyaz, T. Ince and M. Gabbouj, ”Real-Time Patient-Specific ECG Classification by 1-D Convolutional Neural Networks”, IEEE Transactions on Biomedical Engineering, vol. 63, no. 3, pp. 664-675, 2016. Available: 10.1109/tbme.2015.2468589.

- [24] G. Moody and R. Mark, ”The impact of the MIT-BIH Arrhythmia Database”, IEEE Engineering in Medicine and Biology Magazine, vol. 20, no. 3, pp. 45-50, 2001. Available: 10.1109/51.932724.

- [25] T. A. Nappholz, W. N. Hursta, A. K. Dawson, and B. M. Steinhaus, ”Implantable ambulatory electrocardiogram monitor,” ed: Google Patents, 1992.

- [26] V. K. Yeragani et al., ”Decreased heart-period variability in patients with panic disorder: a study of Holter ECG records,” Psychiatry research, vol. 78, no. 1-2, pp. 89-99, 1998.

- [27] . Algan and I. Ulusoy, “Image classification with deep learning in the presence of noisy labels: A survey,” arXiv preprint arXiv:1912.05170, 2019.

- [28] B. Frenay and M. Verleysen, “Classification in the presence of label ´ noise: a survey,” IEEE transactions on neural networks and learning systems, vol. 25, no. 5, pp. 845–869, 2014.

- [29] Patrini, A. Rozza, A. Krishna Menon, R. Nock, and L. Qu, “Making deep neural networks robust to label noise: A loss correction approach,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2017, pp. 1944–1952.

- [30] D. Hendrycks, M. Mazeika, D. Wilson, and K. Gimpel, “Using trusted data to train deep networks on labels corrupted by severe noise,” in Advances in neural information processing systems, 2018, pp. 10 456– 10 465.

- [31] X. Chen and A. Gupta, “Webly supervised learning of convolutional networks,” in Proceedings of the IEEE International Conference on Computer Vision, 2015, pp. 1431–1439.

- [32] A. J. Bekker and J. Goldberger, “Training deep neural-networks based on unreliable labels,” in 2016 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). IEEE, 2016, pp. 2682–2686.

- [33] J. Goldberger and E. Ben-Reuven, “Training deep neural-networks using a noise adaptation layer,” 2016.

- [34] S. Sukhbaatar and R. Fergus, “Learning from noisy labels with deep neural networks,” arXiv preprint arXiv:1406.2080, vol. 2, no. 3, p. 4, 2014.

- [35] B. Frenay and M. Verleysen, “Classification in the presence of label ´ noise: a survey,” IEEE transactions on neural networks and learning systems, vol. 25, no. 5, pp. 845–869, 2014.

- [36] J. Krause, B. Sapp, A. Howard, H. Zhou, A. Toshev, T. Duerig, J. Philbin, and L. Fei-Fei, “The unreasonable effectiveness of noisy data for fine-grained recognition,” in European Conference on Computer Vision. Springer, 2016, pp. 301–320.

- [37] C. G. Northcutt, T. Wu, and I. L. Chuang, “Learning with confident examples: Rank pruning for robust classification with noisy labels,” in Uncertainty in Artificial Intelligence - Proceedings of the 33rd Conference, UAI 2017, may 2017.

- [38] X. Wu, R. He, Z. Sun, and T. Tan, “A light CNN for deep face representation with noisy labels,” IEEE Transactions on Information Forensics and Security, vol. 13, no. 11, pp. 2884–2896, 2018.

- [39] J. Huang, L. Qu, R. Jia, and B. Zhao, “O2u-net: A simple noisy label detection approach for deep neural networks,” in Proceedings of the IEEE International Conference on Computer Vision, 2019, pp. 3326– 3334.

- [40] Y. Bengio, J. Louradour, R. Collobert, and J. Weston, “Curriculum learning,” in Proceedings of the 26th annual international conference on machine learning. ACM, 2009, pp. 41–48.

- [41] M. P. Kumar, B. Packer, and D. Koller, “Self-paced learning for latent variable models,” in Advances in Neural Information Processing Systems, 2010, pp. 1189–1197.

- [42] B. Han, I. W. Tsang, L. Chen, P. Y. Celina, and S.-F. Fung, “Progressive stochastic learning for noisy labels,” IEEE transactions on neural networks and learning systems, no. 99, pp. 1–13, 2018.

- [43] L. Jiang, Z. Zhou, T. Leung, L.-J. Li, and L. Fei-Fei, “Mentornet: Learning data-driven curriculum for very deep neural networks on corrupted labels,” arXiv preprint arXiv:1712.05055, 2017

- [44] M. Ren, W. Zeng, B. Yang, and R. Urtasun, “Learning to reweight examples for robust deep learning,” arXiv preprint arXiv:1803.09050, 2018.

- [45] S. Jenni and P. Favaro, “Deep bilevel learning,” in Proceedings of the European Conference on Computer Vision (ECCV), 2018, pp. 618–633.

- [46] J. Shu, Q. Xie, L. Yi, Q. Zhao, S. Zhou, Z. Xu, and D. Meng, “Metaweight-net: Learning an explicit mapping for sample weighting,” in Advances in Neural Information Processing Systems, 2019, pp. 1917– 1928.

- [47] Y. Wang, W. Liu, X. Ma, J. Bailey, H. Zha, L. Song, and S.-T. Xia, “Iterative learning with open-set noisy labels,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2018, pp. 8688–8696.

- [48] N. Manwani and P. Sastry, “Noise tolerance under risk minimization,” IEEE transactions on cybernetics, vol. 43, no. 3, pp. 1146–1151, 2013.

- [49] P. L. Bartlett, M. I. Jordan, and J. D. McAuliffe, “Convexity, classification, and risk bounds,” Journal of the American Statistical Association, vol. 101, no. 473, pp. 138–156, 2006.

- [50] A. Ghosh, H. Kumar, and P. Sastry, “Robust loss functions under label noise for deep neural networks,” in Thirty-First AAAI Conference on Artificial Intelligence, 2017.

- [51] X. Wang, E. Kodirov, Y. Hua, and N. M. Robertson, “Improved Mean Absolute Error for Learning Meaningful Patterns from Abnormal Training Data,” Tech. Rep., 2019.

- [52] V. Mnih and G. E. Hinton, “Learning to label aerial images from noisy data,” in Proceedings of the 29th International conference on machine learning (ICML-12), 2012, pp. 567–574.

- [53] Y. Xu, P. Cao, Y. Kong, and Y. Wang, “L dmi: A novel informationtheoretic loss function for training deep nets robust to label noise,” in Advances in Neural Information Processing Systems, 2019, pp. 6222– 6233.

- [54] B. Van Rooyen, A. Menon, and R. C. Williamson, “Learning with symmetric label noise: The importance of being unhinged,” in Advances in Neural Information Processing Systems, 2015, pp. 10–18.

- [55] L. P. Garcia, A. C. de Carvalho, and A. C. Lorena, “Noise detection in the meta-learning level,” Neurocomputing, vol. 176, pp. 14–25, 2016.

- [56] B. Han, G. Niu, J. Yao, X. Yu, M. Xu, I. Tsang, and M. Sugiyama, “Pumpout: A meta approach for robustly training deep neural networks with noisy labels,” arXiv preprint arXiv:1809.11008, 2018.

- [57] J. Li, Y. Wong, Q. Zhao, and M. Kankanhalli, “Learning to learn from noisy labeled data,” 2018.

- [58] N. Srivastava, G. Hinton, A. Krizhevsky, I. Sutskever, and R. Salakhutdinov, “Dropout: a simple way to prevent neural networks from overfitting,” The journal of machine learning research, vol. 15, no. 1, pp. 1929–1958, 2014.

- [59] I. J. Goodfellow, J. Shlens, and C. Szegedy, “Explaining and harnessing adversarial examples,” arXiv preprint arXiv:1412.6572, 2014.

- [60] H. Zhang, M. Cisse, Y. N. Dauphin, and D. Lopez-Paz, “mixup: Beyond Empirical Risk Minimization,” oct 2017.

- [61] X. Yu, T. Liu, M. Gong, and D. Tao, “Learning with biased complementary labels,” in Proceedings of the European Conference on Computer Vision (ECCV), 2018, pp. 68–83.

- [62] W. Zhang, Y. Wang, and Y. Qiao, “Metacleaner: Learning to hallucinate clean representations for noisy-labeled visual recognition,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2019, pp. 7373–7382

- [63] J. Leng, ”A Wearable ECG Monitor for Deep Learning Based Real-Time Cardiovascular Disease Detection”, Available: https://www.sydneyiot.com/ecg-monitoring-module.

- [64] Y. Kim, J. Yim, J. Yun and J. Kim, ”NLNL: Negative Learning for Noisy Labels”, The IEEE International Conference on Computer Vision (ICCV), 2019, pp. 101-110

- [65] S. Sukhbaatar, J. Bruna, M. Paluri, L. Bourdev and R. Fergus, ”Training Convolutional Networks with Noisy Labels”, arXiv:1406.2080v4 [cs.CV], 10 Apr 2015.

- [66] K. He, X. Zhang, S. Ren and J. Sun, ”Deep Residual Learning for Image Recognition”,arXiv:1512.03385v1 [cs.CV], 10 Dec 2015.