NEEDED: Introducing Hierarchical Transformer to Eye Diseases Diagnosis

Abstract

With the development of natural language processing techniques(NLP), automatic diagnosis of eye diseases using ophthalmology electronic medical records (OEMR) has become possible. It aims to evaluate the condition of both eyes of a patient respectively, and we formulate it as a particular multi-label classification task in this paper. Although there are a few related studies in other diseases, automatic diagnosis of eye diseases exhibits unique characteristics. First, descriptions of both eyes are mixed up in OEMR documents, with both free text and templated asymptomatic descriptions, resulting in sparsity and clutter of information. Second, OEMR documents contain multiple parts of descriptions and have long document lengths. Third, it is critical to provide explainability to the disease diagnosis model. To overcome those challenges, we present an effective automatic eye disease diagnosis framework, NEEDED. In this framework, a preprocessing module is integrated to improve the density and quality of information. Then, we design a hierarchical transformer structure for learning the contextualized representations of each sentence in the OEMR document. For the diagnosis part, we propose an attention-based predictor that enables traceable diagnosis by obtaining disease-specific information. Experiments on the real dataset and comparison with several baseline models show the advantage and explainability of our framework. ††footnotetext: the code can be found in https://github.com/coco11563/NEEDED

[email protected], [email protected], [email protected], [email protected], [email protected], [email protected]††footnotetext: 2 University of Chinese Academy of Sciences††footnotetext: 3 Changsha Aier Eye Hospital, Emails: [email protected]††footnotetext: These authors have contributed equally to this work.††footnotetext: ∗ Corresponding author

1 Introduction

With the rising incidence of eye diseases and the shortage of ophthalmic medical resources, eye health issues are becoming increasingly prominent [1]. To improve the efficiency of ophthalmologists and lower the rate of misdiagnosis, an automated eye disease diagnostic system that provides advice to ophthalmologists has become an urgent demand. There have been many attempts to automatically diagnose eye diseases based on image data (e.g., ocular fundus photographs) [2, 3, 4]. However, a kind of image usually contains information about only one part of the eye, limiting the scope of automatic disease diagnosis. Meanwhile, the widespread adoption of ophthalmology electronic medical record (OEMR) and the development of NLP techniques make it possible to diagnose eye diseases based on OEMR automatically.

As shown in the left part of Figure 1, the OEMR document consists of several parts, including Chief Complaint (CC), History of Present Illness (HPI), Examination Results (ER), etc. Each part can be considered a particular type of text. The goal of automatic diagnosis of eye diseases is to diagnose diseases suffered by each of the patient’s eyes respectively based on these texts. For this task, a typical solution that has been tried on similar tasks is to concatenate these texts and use neural networks like RNN [5, 6, 7] and CNN [8, 9] to obtain the embedding representation of the OEMR document. Then the document-level representation is used as input to classifiers to obtain results.

However, after analyzing the characteristics of OEMR and our task, we found three issues that make this solution unsuitable: Issues 1: sparsity and clutter of information in the OEMR. Descriptions of different eyes are mixed up in OEMR documents, causing clutter in the information relevant to different eyes. Besides, many of these descriptions are templated asymptomatic descriptions such as No congestion or edema in the conjunctiva of the left eye, resulting in a sparsity of information in the document. This sparsity and clutter of information may hinder the diagnosis model from giving the accurate diagnosis results of two eyes respectively. Issues 2: long document length and multiple text types. OEMR documents are long and have multiple text types. This solution is inappropriate when the input is a lengthy document because it is weak at learning long-range dependencies, making it challenging to extract information from long documents comprehensively [10, 11]. Besides, it ignores the differences between text types and does not preserve the type information of the text. Issues 3: explainability of automatic disease diagnosis. It is critical to provide explainability to the proposed model for medical scenarios, especially for automatic disease diagnosis [12]. However, This typical approach obtains only a coarse-grained document-level embedding representation to diagnose all diseases, making it difficult to trace the diagnosis and explain the results.

Another method used in related tasks is extracting structured information from clinical texts by entity recognition and other methods, then using them to enhance the downstream model or get the diagnosis results directly [13, 14]. This method requires annotated dataset to train the information extraction model. However, due to the complexity and length of OEMR documents, doctors need to spend too long time annotating comprehensively and accurately, making this method difficult to implement. Besides, it suffers from the problem of error propagation.

To overcome these issues, we formulate the automatic eye disease diagnosis as a particular multi-label classification task. Specifically, the input to this task is an OEMR document, and the output is two sets of labels corresponding to the diagnosis results for both eyes, respectively. Then, we iNtroduce hierarchical transformer to EyE DisEases Diagnosis and propose an automatic diagnosis framework named NEEDED. The main contributions of this paper are summarized as:

An efficient preprocessing method for improving information density and quality. We first filter out useless asymptomatic descriptions from the OEMR document and then distinguish the contents relevant to different eyes to form two documents, which will be used separately to get the diagnostic result. While retaining vital information, we improve the information density and quality of the document.

A hierarchical encoder to extract abundant semantic information from OEMR. Inspired by the long-text modeling methods [15, 16, 17], we design a hierarchical encoder architecture to embed both texts and their particular type within an OEMR document into a matrix. Specifically, we utilize a hierarchical transformer [18, 19] to obtain the contextualized representation of each sentence in the OEMR while preserving the type information of the sentence by adding type-token [11]. Then, we use the matrix formed by these representations as the document representation.

An attention-based predictor that enables traceable diagnosis. We utilize a dot-product attention layer as an extractor to capture disease-specific information from these sentence representations and perform the diagnosis. This method enables traceable diagnosis by observing the distribution of attention weight and providing explanations for diagnostic results.

We conduct experiments on the real dataset and comparison with several baseline models to validate the advantage of NEEDED and provide a case study to show the explainability.

2 Preliminaries

In this section, we first give the definition of the ophthalmology electronic medical record(OEMR), then provide a formal definition of the task of Automatic Diagnose of Eye Disease(ADED).

Definiton 1 (Ophthalmology Electronic Medical Record): As shown in the left part of Figure 1, an OEMR has several parts. Each part can be considered as a set of sentences. Therefore, the OEMR can be viewed as a document consisting of multiple sentences. Formally, we define as an OEMR document with N sentences. The -th sentence in the document is defined as , where denotes the -th token in -th sentence.

Definiton 2 (Automatic Diagnosis of Eye Disease): Given an OEMR , the task of automatic diagnosis of eye diseases aims to diagnose the diseases suffered by each of the patient’s eyes respectively based on it. By treating each disease as a label, we can view this problem as deriving two sets of labels for each OEMR corresponding to different eyes, which can be seen as a particular multi-label classification task. Formally, Let denotes the label set for all eye disease, where represent the presence or absence of the disease. As mentioned before, we consider that it is essential to preprocess the OEMR document, let denote the preprocessing method. Finally, the ADED task can be formulated as:

| (2.1) |

where is the parameters of the disease diagnosis model , and denote the set of diseases labels for two eyes respectively.

3 Proposed Framework

3.1 Preprocessing Component

As shown in the left part of Figure 1, the OEMR document is composed of several sections, including Chief Complaint (CC), History of Present Illness (HPI), Examination Results (ER), etc. Each part can be considered a particular type of text that consists of some sentences. The task of automatic eye disease diagnosis aims to evaluate the status of both eyes respectively based on an OEMR document. However, descriptions of different eyes are mixed up in OEMR documents, resulting in a clutter of information. Besides, many descriptions are templated asymptomatic descriptions that contain little information for disease diagnosis, their abundance results in a sparsity of information in the document.

To improve information density and quality, we propose a preprocessing method consisting of two steps: filtering useless descriptions and distinguishing content relevant to different eyes. Step-1: Inspired by TF-IDF, we consider that for those templated asymptomatic descriptions, the more they appear in the document set, the more they are common condition descriptions and the less useful they are for disease diagnosis. Thus, we calculate the frequency of occurrence of each description in all OEMR documents and filter out asymptomatic descriptions that occur more frequently than the threshold . Step-2: Given an OEMR document , the descriptions relevant to different eyes are distinguished from to form two documents, and . They have the same structure as the OEMR, except that the content is focused on a particular eye. Specifically, for the examination results section, each sentence describes an examination result for a specific eye, so we can easily distinguish them. For other parts, we developed some rules based on the characteristics of the descriptions to distinguish, and for those sentences that are difficult to distinguish or relevant to both eyes, both documents retain them.

3.2 Hierarchical Transformer Encoder

After the above stage, we obtain two documents, and . They will be used as input of the downstream model separately to get the diagnostic results for the two eyes. For simplicity of expression, they will be denoted as in the following. To extract the information in a given OEMR document , we design a hierarchical Transformer structure as an encoder. As shown in Figure 1(2), the encoder consists of a word-level Transformer and a sentence-level Transformer. The word-level transformer takes the OEMR document as input and encodes each sentence in the document to obtain the type-specific sentence embedding representations. Then, the sentence-level transformer takes the set of sentence embedding representations as input, learns the dependencies between sentences and generate the representation matrix of the input OEMR document.

3.2.1 Word-level Transformer

Given a OEMR document , in this stage we aims to use a Transformer to embed each sentence in into the sentence representation . To retain information about the text type of each sentence and thus help the Transformer model its semantics, we first add a type-token at the beginning of each sentence. Formally, given an sentence with token sequence , the sentence representation can be obtained by:

| (3.2) | ||||

where is a -layers Transformer. For each token in the sentence , its input embedding is obtained by summing its token embedding and positional encoding, and the token embedding is randomly initialized. is a list of output embedding of tokens in the sentence , where is a dimensional vector corresponding to . The denotes the average-pooling on the output embedding representations to obtain the sentence embedding representation .

3.2.2 Setence-level Transformer

In the above stage, we obtain the representation of each sentence in the OEMR document. Most of the sentences are descriptions of the patient’s symptoms or medical history. It is likely that there are many associations between them, which means that the meaning represented by one sentence may vary depending on its context. Thus, the embedding representation of each sentence should not only depend on its content but also consider its context.

At this stage, we aims to use the Transformer to obtain contextualized sentence representations by learning the dependencies between sentences. Given a OEMR document , we can obtain the sentences representations set from the above word-level Transformer. The setence-level transformer takes sentences representations set as the input and outputs a matrix , which can be formulated as:

| (3.3) | ||||

where is a one layer Transformer. is a set of contextualized sentence representations. With the multi-head self-attention mechanism, Transformer can model the dependencies between sentence representations in the input from multiple perspectives, enabling each sentence representation can collect global information of context. In a sense, the matrix can be seen as a multi-perspective representation of the input OEMR document.

The hierarchical transformer encoder has the following main advantages: First, Transformer has strengths in learning long-range dependencies [10, 11]. The hierarchical Transformer encoder can effectively and efficiently model the semantic information of OEMR by learning both word-level and sentence-level dependencies. Second, embedding documents into multiple sentence-level representations allows us to implement traceable disease prediction based on these representations in combination with our attention-based predictor proposed below. Third, by adding the type-token at the beginning of sentences, the differences between text types are taken into account, helping the encoder to model sentence semantics more accurately.

3.3 Attention-based Predictor

After the previous step, the OEMR document is embedded in a matrix , where denotes the number of sentences in the OEMR document. A typical subsequent operation is to perform a pooling operation on matrix to obtain a dimensional vector and use this vector as the input to classifiers to obtain the diagnostic result. However, this approach obtains only a coarse-grained document-level representation to diagnose all diseases, making it difficult to trace the diagnosis and provide explanations for the results.

To provide explainability for the automatic diagnosis, inspired by the label-wise attention[20], we adopt the dot-product attention to capture disease-specific information from for traceable diagnosing. Specially, given the disease to be predicted, we first obtain the attention weight of each component in for disease . this process can be formulated as:

| (3.4) |

where the is the label embedding of disease , which is randomly initialized. The is the attention weight vector for disease . Then, we use the attention weight vector and matrix to compute the specific representation for disease .

| (3.5) |

Be noted that each component in is an embedding representation of a sentence in the input. Thus, by observing the attention weight vector , we can know the importance of sentences in the input for diagnosing disease , thus enabling a traceable diagnosis.

Suppose we have a total of diseases to diagnose, after the above stage, we get a matrix where is the disease-specific information representation for disease . Then, we use a set of Feedforward Neural Networks(FNNs) and the sigmoid function to calculate the probability of the presence of each disease.

| (3.6) |

3.4 Training

The training procedure minimizes the binary cross-entropy loss, which can be formulated as:

| (3.7) |

where indicates whether disease exists in the diagnosis record of the input document. is the probability of the existence of disease output by the prediction model.

4 Experiments

This section presents our experiments’ dataset and the evaluation of experimental results with some analysis. We demonstrate the advantage of our proposed framework by comparing it to the baseline models. We explore the effectiveness of each component of our framework and provide a case study to illustrate the explainability.

4.1 Dataset Description

We conducted the experiments on the real-world OEMR dataset with 5134 records collected by Aier Eye Hospital. After discussion with ophthalmologists, we select six common eye diseases to test the diagnostic ability of our model, including cataract(CAT), glaucoma, diabetic retinopathy(DR), dry eye syndrome(DES), pterygium, and meibomian gland dysfunction(MGD). The data set is divided into a training set, validation set, and test set according to the proportion of 70%, 15%, 15%, respectively.

4.2 Baseline Methods

To comprehensively evaluate the performances of our proposed framework, we selected three baselines and five ablation variants of NEEDED. The baselines are listed as follows: 1) CAML [20]: The CAML is a CNN-based model which integrate label-wise attention to obtain the label-specific representations. 2) LSTM-Att [7]: LSTM-Att combines LSTM and attention mechanism to capture important semantic information in the input for prediction. 3) BERT [21]:BERT is the representative of the pre-trained models, we use BERT and an pooling layer to obtain the representation of input. We also proposed five variants of NEEDED which are listed as follows: 1) w/o p: ablated the pre-processing steps. 2) w/o c: ablated the hierarchical transformers and replaced it with vanilla transformer. 3) w/o s: ablated the sentence-level transformers. 4) w/o l: ablated the attention mechanism and label embedding in predictor. 5) w/o w: both ablated the hierarchical transformers and the attention based predictor.

4.3 Experiment Settings

In experiments, we set the transformer layer number to 5, the multi-head number to 8, and the dimension size to 256. For the detail of NEEDED training, we use AdamW [22] optimizer with learning rate of , and set the batch size as 32, AdamW weight decay as . The dropout rate is set to 0.1 to prevent overfitting. For all models, we train and test them multiple times with different random seeds under their optimal hyperparameters and report their performance and standard deviation. In the following experiments, all methods are implemented by PyTorch, and all experiments are conducted on a Linux server with an AMD EPYC 7742 CPU and one NVIDIA A100 GPU.

| Models | Macro-F1 | Micro-F1 | Macro-AUC | Micro-AUC |

| BERT | 81.21±0.40 | 84.28±0.78 | 92.55±0.43 | 95.61±0.22 |

| CAML | 83.71±0.57 | 81.22±0.80 | 94.32±0.61 | 96.09±0.20 |

| LSTM-Att | 81.55±0.74 | 80.29±0.70 | 93.50±0.39 | 95.68±0.23 |

| BERTρ | 87.39±0.72 | 89.73±0.34 | 95.73±0.33 | 97.33±0.19 |

| CAMLρ | 88.49±0.42 | 90.53±0.33 | 96.10±0.18 | 97.59±0.14 |

| LSTM-Attρ | 88.19±0.61 | 91.11±0.44 | 97.16±0.23 | 98.14±0.12 |

| w/o p | 82.59±0.76 | 82.37±0.78 | 94.85±0.10 | 96.46±0.09 |

| w/o c | 87.96±0.73 | 89.80±0.44 | 97.14±0.26 | 98.00±0.19 |

| w/o s | 88.19±0.50 | 91.31±0.34 | 96.85±0.15 | 97.89±0.08 |

| w/o l | 89.29±0.65 | 91.88±0.49 | 96.40±0.19 | 97.67±0.20 |

| w/o w | 87.29±0.59 | 90.58±0.36 | 96.13±0.22 | 97.51±0.17 |

| NEEDED | 90.25±0.48 | 92.61±0.27 | 97.92±0.15 | 98.59±0.10 |

4.4 Experiment Results

These experiment aims to answer the following questions: Q1:How is the performance of NEEDED in compared with other baseline methods? Q2: How is each component of NEEDED impact the model performance? Q3: What is the impact of distinguishing the descriptions of different eyes in preprocessing? Q4: What is the impact of filtering the asymptomatic templated descriptions? Q5: How is the explainablity of our proposed model? Beside this, we also conduct the hyperparameter senetivity study.

4.4.1 RQ1: Overall Comparison

The goal of the first experiment is to compare the performance of the NEEDED and baseline models on the ADED task. In this comparison, we used Macro-F1, Micro-F1, Macro-AUC, and Micro-AUC as evaluation metrics, which are widely used in multi-label classification problems [11, 20, 23, 24]. The results are shown in Table 1. From the overall results, we observed that: First, our framework achieves the best performance in overall evaluation metrics, showing it is better at extracting disease-related information from the OEMR document. Second, our model performs better than w/o c by learning both word-level and sentence-level dependencies and preserving text type information. Third, BERT as pre-trained model does not perform as well as other baseline models on most metrics, probably due to the large gap between the text in its pre-training corpus and the OEMR documents.

4.4.2 RQ2: Ablation Study of NEEDED

To explore the effectiveness of each part of our framework, we conduct ablation experiments. We observed that our framework outperforms all its variants, illustrating that each component in our framework is indispensable. In addition to this, there are the following points: First, compared to our model, both w/o c and w/o w show a significant performance drop due to the fact that they both remove the hierarchical Transformer. Meanwhile, w/o c is better than w/o w in most metrics because it retains the attention-based predictor. Second, w/o s outperforms w/o w in all metrics and w/o c is better than w/o w in most metrics, indicating the effectiveness of attention-based predictor which can capture disease-specific information. Third, although the performance of w/o l is degraded compared to our framework, it performs better than w/o w in all metrics. This is because it removes the attention-based predictor while retaining the hierarchical Transformer, indicating the importance of learning the dependency between sentences. Fourth, w/o p is the worst and has a very significant degradation compared to the other models because it removes the preprocessing component, resulting in clutter and sparsity of information in the model input.

4.4.3 RQ3: The Impact of Distinguishing Descriptions Relevant to Different Eyes

To explore the effect of distinguishing descriptions of different eyes, we conduct the following experiment. We first filter part of the asymptomatic descriptions in the OEMR document according to the previous section. Then, instead of distinguishing the remaining descriptions to form two documents, we use all filtered texts as model inputs to obtain the diagnosis results for both eyes. We perform this experiment on our framework and all baseline methods, then compare the results with the case where distinction were made. The results are shown in figure 2. We observe that the performance of all models degrades significantly if the descriptions are not distinguished. The reason is the information clutter caused by descriptions of different eyes mixed up. Besides, we observed that our model is the most affected, probably because it utilize the hierarchical Transformer to model the document’s information at sentence level, which exacerbate the information clutter. In addition to the above, we observed that BERT is the least affected, probably because it has a huge number of model parameters and is pre-trained on a large corpus, making its fitting ability more stable despite the information clutter.

4.4.4 RQ4: The Impact of Filtering Templated Asymptomatic Descriptions

In our framework, we filter out templated asymptomatic descriptions that occur more frequently than a certain threshold before the OEMR document is fed into the downstream model. In this experiment, we explore the impact of this way by setting different filtering thresholds and not filtering at all. Specifically, we set the filtering thresholds to , , , and , as well as no filtering, to observe the changes in the performance of our framework. The results are shown in figure 3, where represent the ablation of filter. We can find that after the threshold is bigger than , the model’s performance tends to decrease as increases. The performance is worst in the absence of filtering, which indicates the validity of filtering the templated asymptomatic description.

We also observe that the performance is worse when is than when is , indicating that the too-low filtering threshold is harmful. This may be due to some valuable asymptomatic descriptions being filtered out.

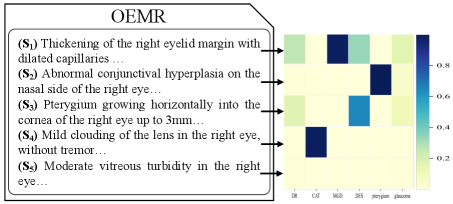

4.4.5 RQ5: Exploring the Explainability of NEEDED

Our predictor utilizes dot-product attention to extract disease-specific information, which improves the model’s effectiveness and can provide some explainability to the diagnostic results. Specifically, assume that our model diagnoses the disease based on the given input, we can obtain the attention weight vector from the predictor. The denotes the attention weight of the -th sentence’s representation for the disease , which can be viewed as the importance of the -th sentence for diagnosis of disease . Thus, for each disease, we can obtain the important sentences associated with it in the input based on the attention weights. We show a case to demonstrate the effect of providing explainability in this way. The left part of Figure 5 shows a portion of an OEMR document describing the patient’s right eye. Specifically, the patient’s right eye was diagnosed with cataract(CAT), meibomian gland dysfunction(MGD), dry eye syndrome(DES), and pterygium by doctor. Our model yields the same diagnosis. The right part of Figure 5 shows a heatmap in which each column is the distribution of attention weights for one disease. We can observe that: First, our model can focus on different information and make the correct diagnosis when diagnosing various diseases, demonstrating that our model can capture disease-specific information from the document by the attention mechanism. Second, based on the distribution of attention weights, we can track the sentences essential for diagnosing the disease, which provides explainability to our model. Third, we can learn each sentence’s role in diagnosing different diseases based on the distribution of attention weights, which can informing clinical research on the condition.

4.4.6 Hyperparameter Sensitivity and Model Converge Study

The number of Transformer layers in the word-level Transformer and the number of self-attention heads in the hierarchical Transformer are two critical parameters in our model. To assess the impact of these two hyperparameters, we investigate the sensitivity of them and show the results in Figure 4. We observe that the performance of our model shows a trend of increasing and then decreasing as the number of Transformer layers varies from one to eight. In addition, the performance of our model shows the same trend as the number of self-focused heads increases. This may be because the fitting ability of the model improves with more parameters introduced, but after introducing too many parameters, the model appears to be over-fitted. Besides, we collected the loss values and accuracy of each epoch during the training process and visualized them to study the convergence of our model. We observe that the model’s accuracy increases rapidly in the first ten training epochs, then tends to converge. The loss curve shows that the model’s training process is stable.

5 RELATED WORK

5.1 Clinical Text Classification

With the development of NLP techniques, various neural models have been proposed to address the problem of text-based automatic disease diagnosis and other clinical text classification tasks.

Many studies treat medical text as free text and propose methods based on neural networks[6, 7, 8, 9, 5, 25] to solve the medical text classification problem. For instance, Yang et al.[8] train a multi-layer CNN to predict several basic diseases based on electronic medical records. Girardi et al.[9] propose an attention-based CNN model to assess patient risk and detect warning symptoms. Yuwono et al.[25] combine CNN and RNN to diagnose appendicitis based on clinical notes. Hashir and Sawhney [5] established a hierarchical neural network to predict the mortality of inpatients using clinical records as input. Some work extracts structured knowledge from clinical text and then uses it to improve model performance or get results directly [13, 14]. For example, Chen et al.[13] propose a disease diagnosis framework based on CNN and Bayesian networks, which extracts symptom entities from medical records and then uses them to enhance model performance. Yuan et al.[14] first extract medical entities from medical records and construct a graph based on the relationships between them, and later extract structured information from the graph for disease diagnosis.

Nowadays, transformers [10] are widely adopted in various scenarios [11, 26, 27, 28]. For clinical text classification, Feng and Shaib [27] build a hierarchical CNN-transformer model for sepsis and mortality prediction. Si and Roberts [28] propose a clinical document classification model based only on transformers.

5.2 Automatic Diagnosis of Eye Diseases

Many scholars have explored how to automatically diagnose eye diseases based on artificial intelligence. Most of these studies are based on image data [2, 3, 4, 29]. For example, Christopher et al. [2]train several neural networks with ocular fundus photographs as input to diagnose glaucomatous optic nerve damage. Stuart Keel et al. [3] build two CNN neural networks based on fundus images to determine the presence of diabetic retinopathy and glaucoma, respectively. Besides, there are a few studies based on text data [30, 31]. Apostolova et al. [30] built an open eye injury identification model based on TF-IDF and SVM. Saleh et al. [31] extracted numerical type features from the electronic health records of diabetic patients and used machine learning models to predict the risk of diabetic retinopathy.

6 Conclusion

This paper presents a framework for the automatic diagnosis of eye diseases based on OEMR, which consists of three modules: a preprocessing component, a hierarchical transformer encoder, and an attention-based predictor. In the processing step, we filter out the useless descriptions and then distinguish the content relevant to different eyes in the OEMR document to form two documents. Then, we obtain the representation matrix for the input document based on the hierarchical transformer encoder. Finally, we utilize dot-product attention to capture the disease-specific information and make the diagnosis. Experiments on the real dataset show the superiority and explainability of our framework, and each component in our framework is effective.

7 Ackownledgement

This work was supported by the National Key Research and Development Plan of China under Grant No. 2022YFF0712200 ,the Natural Science Foundation of China under Grant No. 61836013 ,Science and Technology Service Network Initiative, Chinese Academy of Sciences under Grant No. KFJ-STS-QYZD-2021-11-001 ,Beijing Natural Science Foundation under Grant No. 4212030 ,Youth Innovation Promotion Association CAS

References

- [1] World Health Organization et al. World report on vision. 2019.

- [2] Mark Christopher, Akram Belghith, Christopher Bowd, James A Proudfoot, Michael H Goldbaum, Robert N Weinreb, Christopher A Girkin, Jeffrey M Liebmann, and Linda M Zangwill. Performance of deep learning architectures and transfer learning for detecting glaucomatous optic neuropathy in fundus photographs. Scientific reports, 8(1):1–13, 2018.

- [3] Stuart Keel, Jinrong Wu, Pei Ying Lee, Jane Scheetz, and Mingguang He. Visualizing deep learning models for the detection of referable diabetic retinopathy and glaucoma. JAMA ophthalmology, 137(3):288–292, 2019.

- [4] Dan Milea, Raymond P Najjar, Zhubo Jiang, Daniel Ting, Caroline Vasseneix, Xinxing Xu, Masoud Aghsaei Fard, Pedro Fonseca, Kavin Vanikieti, Wolf A Lagrèze, et al. Artificial intelligence to detect papilledema from ocular fundus photographs. New England Journal of Medicine, 382(18):1687–1695, 2020.

- [5] Mohammad Hashir and Rapinder Sawhney. Towards unstructured mortality prediction with free-text clinical notes. Journal of Biomedical Informatics, 108:103489, 2020.

- [6] Ying Sha and May D Wang. Interpretable predictions of clinical outcomes with an attention-based recurrent neural network. In Proceedings of the 8th ACM International Conference on Bioinformatics, Computational Biology, and Health Informatics, pages 233–240, 2017.

- [7] Annika M Schoene, George Lacey, Alexander P Turner, and Nina Dethlefs. Dilated lstm with attention for classification of suicide notes. In Proceedings of the tenth international workshop on health text mining and information analysis (LOUHI 2019), pages 136–145, 2019.

- [8] Zhongliang Yang, Yongfeng Huang, Yiran Jiang, Yuxi Sun, Yu-Jin Zhang, and Pengcheng Luo. Clinical assistant diagnosis for electronic medical record based on convolutional neural network. Scientific reports, 8(1):1–9, 2018.

- [9] Ivan Girardi, Pengfei Ji, An-phi Nguyen, Nora Hollenstein, Adam Ivankay, Lorenz Kuhn, Chiara Marchiori, and Ce Zhang. Patient risk assessment and warning symptom detection using deep attention-based neural networks. arXiv preprint arXiv:1809.10804, 2018.

- [10] Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N Gomez, Łukasz Kaiser, and Illia Polosukhin. Attention is all you need. Advances in neural information processing systems, 30, 2017.

- [11] Meng Xiao, Ziyue Qiao, Yanjie Fu, Yi Du, Pengyang Wang, and Yuanchun Zhou. Expert knowledge-guided length-variant hierarchical label generation for proposal classification. In 2021 IEEE International Conference on Data Mining (ICDM), pages 757–766. IEEE, 2021.

- [12] Yiming Zhang, Ying Weng, and Jonathan Lund. Applications of explainable artificial intelligence in diagnosis and surgery. Diagnostics, 12(2):237, 2022.

- [13] Jun Chen, Xiaoya Dai, Quan Yuan, Chao Lu, and Haifeng Huang. Towards interpretable clinical diagnosis with bayesian network ensembles stacked on entity-aware cnns. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, pages 3143–3153, 2020.

- [14] Quan Yuan, Jun Chen, Chao Lu, and Haifeng Huang. The graph-based mutual attentive network for automatic diagnosis. In Proceedings of the Twenty-Ninth International Conference on International Joint Conferences on Artificial Intelligence, pages 3393–3399, 2021.

- [15] Raghavendra Pappagari, Piotr Zelasko, Jesús Villalba, Yishay Carmiel, and Najim Dehak. Hierarchical transformers for long document classification. In 2019 IEEE Automatic Speech Recognition and Understanding Workshop (ASRU), pages 838–844. IEEE, 2019.

- [16] Xingxing Zhang, Furu Wei, and Ming Zhou. Hibert: Document level pre-training of hierarchical bidirectional transformers for document summarization. arXiv preprint arXiv:1905.06566, 2019.

- [17] Yang Liu and Mirella Lapata. Hierarchical transformers for multi-document summarization. arXiv preprint arXiv:1905.13164, 2019.

- [18] Meng Xiao, Ziyue Qiao, Yanjie Fu, Hao Dong, Yi Du, Pengyang Wang, Dong Li, and Yuanchun Zhou. Who should review your proposal? interdisciplinary topic path detection for research proposals. arXiv preprint arXiv:2203.10922, 2022.

- [19] Meng Xiao, Ziyue Qiao, Yanjie Fu, Hao Dong, Yi Du, Pengyang Wang, Hui Xiong, and Yuanchun Zhou. Hierarchical interdisciplinary topic detection model for research proposal classification. arXiv preprint arXiv:2209.13519, 2022.

- [20] James Mullenbach, Sarah Wiegreffe, Jon Duke, Jimeng Sun, and Jacob Eisenstein. Explainable prediction of medical codes from clinical text. arXiv preprint arXiv:1802.05695, 2018.

- [21] Jacob Devlin, Ming-Wei Chang, Kenton Lee, and Kristina Toutanova. Bert: Pre-training of deep bidirectional transformers for language understanding. arXiv preprint arXiv:1810.04805, 2018.

- [22] Ilya Loshchilov and Frank Hutter. Decoupled weight decay regularization. arXiv preprint arXiv:1711.05101, 2017.

- [23] Tong Zhou, Pengfei Cao, Yubo Chen, Kang Liu, Jun Zhao, Kun Niu, Weifeng Chong, and Shengping Liu. Automatic icd coding via interactive shared representation networks with self-distillation mechanism. In Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing (Volume 1: Long Papers), pages 5948–5957, 2021.

- [24] Jun Chen, Quan Yuan, Chao Lu, and Haifeng Huang. A novel sequence-to-subgraph framework for diagnosis classification. In IJCAI, pages 3606–3612, 2021.

- [25] Steven Kester Yuwono, Hwee Tou Ng, and Kee Yuan Ngiam. Learning from the experience of doctors: Automated diagnosis of appendicitis based on clinical notes. In Proceedings of the 18th BioNLP Workshop and Shared Task, pages 11–19, Florence, Italy, August 2019. Association for Computational Linguistics.

- [26] Meng Xiao, Min Wu, Ziyue Qiao, Zhiyuan Ning, Yi Du, Yanjie Fu, and Yuanchun Zhou. Hierarchical mixup multi-label classification with imbalanced interdisciplinary research proposals. arXiv preprint arXiv:2209.13912, 2022.

- [27] Jinyue Feng, Chantal Shaib, and Frank Rudzicz. Explainable clinical decision support from text. In Proceedings of the 2020 conference on empirical methods in natural language processing (EMNLP), pages 1478–1489, 2020.

- [28] Yuqi Si and Kirk Roberts. Hierarchical transformer networks for longitudinal clinical document classification. arXiv preprint arXiv:2104.08444, 2021.

- [29] Cecilia S Lee, Doug M Baughman, and Aaron Y Lee. Deep learning is effective for classifying normal versus age-related macular degeneration oct images. Ophthalmology Retina, 1(4):322–327, 2017.

- [30] Emilia Apostolova, Helen A White, Patty A Morris, David A Eliason, and Tom Velez. Open globe injury patient identification in warfare clinical notes. In AMIA annual symposium proceedings, volume 2017, page 403. American Medical Informatics Association, 2017.

- [31] Emran Saleh, Jerzy Błaszczyński, Antonio Moreno, Aida Valls, Pedro Romero-Aroca, Sofia de la Riva-Fernandez, and Roman Słowiński. Learning ensemble classifiers for diabetic retinopathy assessment. Artificial intelligence in medicine, 85:50–63, 2018.