Necessity Feature Correspondence Estimation for Large-scale Global Place Recognition and Relocalization

Abstract

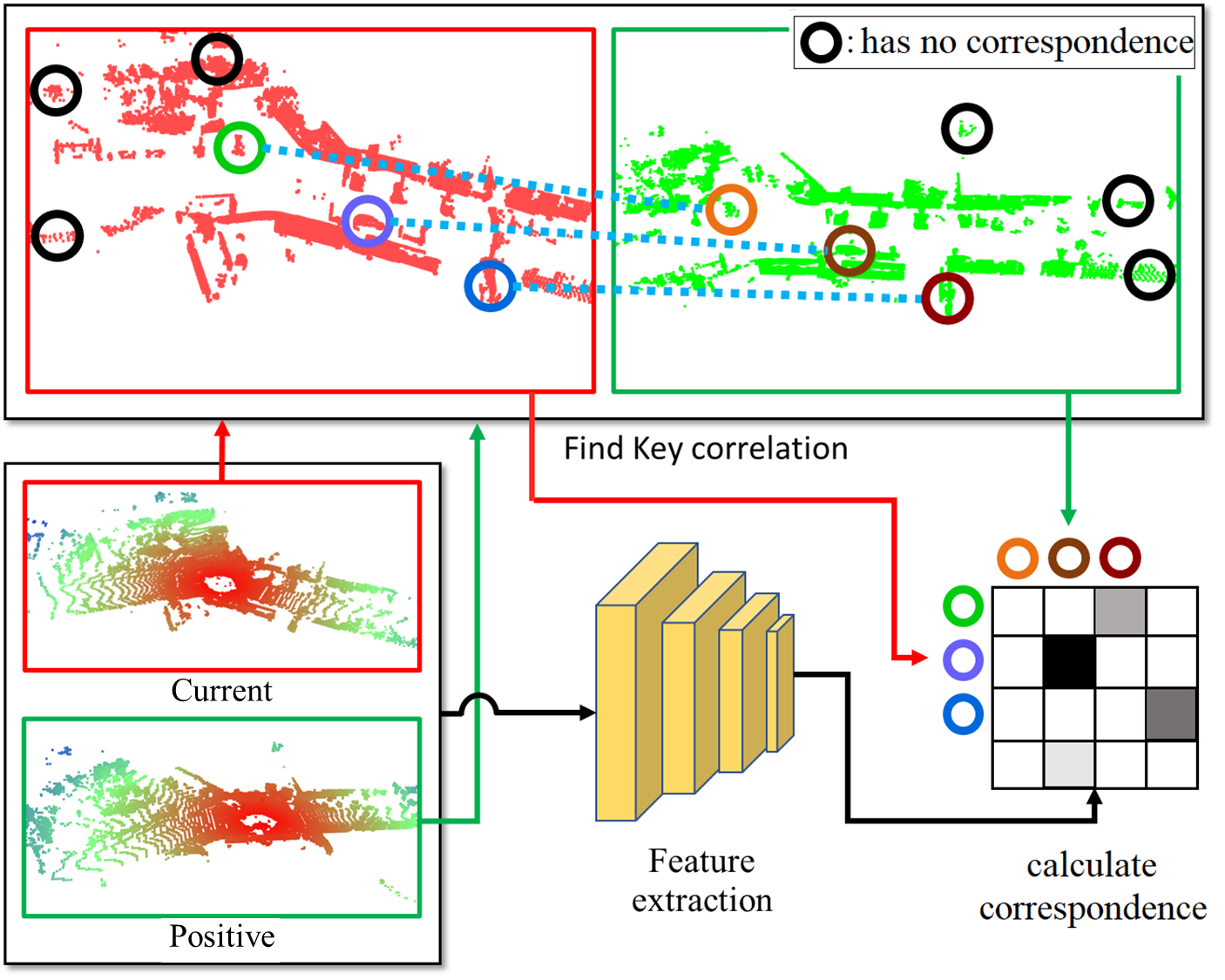

In order to find the accurate global 6-DoF transform by feature matching approach, various end-to-end architectures have been proposed. However, existing methods do not consider the false correspondence of the features, thereby unnecessary features are also involved in global place recognition and relocalization. In this paper, we introduce a robust correspondence estimation method by removing unnecessary features and highlighting necessary features simultaneously. To focus on the necessary features and ignore the unnecessary ones, we use the geometric correlation between two scenes represented in the 3D LiDAR point clouds. We introduce the correspondence auxiliary loss that finds key correlations based on the point align algorithm and enables end-to-end training of the proposed networks with robust correspondence estimation. Since the ground with many plane patches acts as an outlier during correspondence estimation, we also propose a preprocessing step to consider negative correspondence by Weakening the influence dominant plane patches. The evaluation results on the dynamic urban driving dataset, show that our proposed method can improve the performances of both global place recognition and relocalization tasks. We show that estimating the robust feature correspondence is one of the important factors in global place recognition and relocalization.

I Introduction

In the fields of the robotics and computer vision, wide-ranging researches have been conducted on Simultaneous Localization and Mapping (SLAM) [1]. SLAM is widely used not only for mobile robots but also for unmanned technologies such as autonomous vehicles and underwater marine robots [2, 3, 4]. In traditional approaches, the pipeline of SLAM can be broadly divided into three parts: 1) front-end, 2) back-end, and 3) loop-closing [5]. Loop closing can optimize the robot’s pose when the robot detects a loop, also known as a Rendezvous, that connects to a previously visited location. It is important for the robot navigation task to accurately detect loop closing point, because failure to detect loops can result in incorrect pose optimization of the map and the robot, and lead the distorted map representation. [6, 7]. By successfully detecting previously visited locations, the robot’s poses can be corrected through relocalization even if the robot fails to estimate its past trajectories correctly due to accumulation of errors.

Place recognition is of significant importance for the loop closing detection, thereby many researches have been conducted. However, there are still difficulties in performing place Recognition for 3D LiDAR point clouds, because 3D LiDAR point clouds are sparse compared to 2D images and have complex distributions of 3D geometric structures [8]. In this case, information that can distinguish geometric structures may not be incorporated into the features, which can cause the failure to detect course-level previously visited places. It is also hard to achieve the fine-level pose estimation as well, because the estimation of the 6-DOF pose needs features that contain detailed structural information in 3D LiDAR point clouds.

To resolve these issues, in previous works, various registration methods based on ICP [9] have been widely used to estimate a fine pose after coarsely recognizing the global pose [10, 11, 12, 13]. However, methods like ICP can be influenced by the initial pose and can fall into a local minimum, resulting in misaligned 3D scans. Recent researches tackle these problems by estimating global place recognition and learning local descriptors for 6-DOF transform simultaneously or estimating initial pose for fine pose estimation [12, 14]. To accomplish this, it is crucial to establish point correspondences, leading to the adoption of various methods, including data augmentation [14] and unbalanced optimal transport [5]. Nevertheless, these methods do not consider the necessity of feature points for estimating true-positive correspondence and simply utilize all possible points. Using all feature points equally can result in incorrect correspondence estimation such as false-positive or false-negative one, as it tries to find correlations even if some of the feature points have no correspondence. This leads to a lack of discrimination in local feature learning for structural information. As a result, when using coarse place recognition, unnecessary features are aggregated, leading to limited accuracy in global place recognition. Furthermore, the inability of estimating feature correspondence also results in poorer relocalization performance.

To overcome these limitation, we propose a method considering the necessity of each feature point for both global place recognition and relocalization. By considering the necessity of each feature point based on the geometric characteristics before training, the network can estimate the key correspondences for each feature. Robust estimation of the correspondence can reduce the number of the outliers, thus make it easier to find correspondences at each local point and leading to better results in 3D point cloud registration. With fewer outliers, the accuracy of 6-DOF calculations during RANSAC or SVD will increase, enabling robust point cloud registration [15]. In addition, the better “local consistency” of features can also lead to improvement in global place recognition [8].

To determine the necessity of each feature point for learning, this paper employs two techniques that consider geometrical feature correlation. First, we weaken the influence of ground structures that have unnecessary geometrical features. The features on the ground can be considered outliers from a local feature perspective because they have structurally duplicated forms, which can cause inaccurate correspondence information. By weakening the influence of dominant ground we can remove features that can generate inaccurate correspondence. Second, we exploit the weights from probabilistic model based on geometric correlation to determine the necessity of the features. Since the feature points we want to find correspondences in two scenes have a geometric correlation, we found that it is possible to find and learn the strong necessity of inlier features by considering this geometric correlation. After finding the weights, the points corresponding to those weights are used to estimate correspondence according to the weights.

To summarize, we propose a method that enhances global place recognition and point cloud registration, by considering the geometric characteristics of 3D point clouds. We weaken unnecessary feature points and strengthen necessary ones while training by feature correspondence estimation. Our contribution is as follows.

-

•

We preprocess the ground to weaken unnecessary features identified through geometrical character. The ground has repetitive structures due to its characteristics, and these characteristics can become irrelevant in feature extraction or clustering [11, 16, 17]. Therefore, ground can act as outliers when estimating point correspondence between two scenes, so they are weakened through the preprocessing process.

-

•

We enhance learning features with better matching, considering probabilistic model based on geometric correlation through 3D alignment algorithms. Each overlapping points have corresponding relationships. We estimate correspondence using weights computed by the probabilistic model based on geometric correlation.

Our method shows better performance compared to the previous methods. We confirmed the high performance of our method through testing on the KITTI and KITTI-360 datasets. This demonstrates the critical role of considering probability-based geometric correlation in global place recognition and relocalization.

II related works

Research in place recognition in 3D point clouds can be divided into two main categories: global place recognition and point cloud registration [18]. Global place recognition is a coarse-level place recognition method that utilizes feature points of a scene to search topological locations on a large-scale map. Point cloud registration, on the other hand, is a fine place recognition method that calculates the 6-DOF of a scene to describe transformation in a metrical location perspective. Although accurate transformations within a scene can be described with point cloud registration, it is often used as a supplementary method in large-scale place recognition due to limitations in computing feature correspondences.

Global place recognition uses a method that compares the global descriptor of the current point clouds to that of the map to determine their similarity. The traditional approach is handcrafted-based method using BEV, generating a global descriptor [19, 20]. Recently, an end-to-end learning-based global place recognition approach has been proposed, showing promising results [21]. This method combines point cloud feature extraction [22] with vision-based global place recognition [23], demonstrating its viability for use in 3D point clouds. More recently, architectures have been proposed to generate global descriptors that better represent the place, thereby improving the performance of global place recognition [8, 24, 25].

Recent research has emerged with an architecture that combines point cloud registration and global place recognition [12, 13]. They aim for more accurate place recognition by not only learning global descriptors but also local features that can describe 6-DOF transforms. To learn local features, correspondence must be known, and methods have been proposed to augment training data to achieve [14]. In the case of [5] used sparse convolution network [26] to extract features and proposed unbalanced optimal transport to find correspondence between features. Our work also effectively estimates correspondence through the use of unbalanced optimal transport.

III Approach

Suppose we have a set of points and the corresponding local feature set . We aim to have the optimal feature set which can be fully utilized both for global place recognition and relocalization. Here, let be the ground truth correspondence of and detected in scenes and respectively. In order to obtain , it is necessary to estimate . However, as remains unknown in usual, estimating is the foremost thing that we need. Assume we have , which is a function that finds correspondences between two point sets. Then that estimate of and is represented as the following:

| (1) |

where and is local feature extracted from and respectively. To solve this data association problem, unbalanced optimal transport can serve as one of the function for correspondence estimation [5, 27, 28]. The derived through unbalanced optimal transport is presented as the following:

| (2) |

where is the Kullback–Leibler divergence, is the discrete uniform distribution, is a parameter that mass is preserved and is a parameter that the sparseness of the mapping. is a dependent variable on and which represents the matching cost of the ’th point in and ’th point in .

Using Eqn. (2), existing methods obtain and estimate optimal local features subsequently. However, when estimating through unbalanced optimal transport, it is challenging to consider prior information such as geometric characteristics of , because the existing approach simply applies uniform weights to every possible correspondenses of and . This limitation makes it hard to consider true positive and false positive, which is crucial for relocalization, and furthur global place recognition as well.

Therefore, we propose a method that enhances both global place recognition and relocalization performance by strengthening the true-positive correspondences and weakening the False-positive ones by considering geometric correlation. By using robust correspondence estimation based on the necessity of feature points, it is easier to find the feature association for local points. Our approach can lead to fewer outliers and improved accuracy in 6-DOF when calculating RANSAC or SVD, reducing the error in metric pose and relocalization. Additionally, as the local feature correspondence was improved, “local consistency” can be maintained, leading to an improvement in the performance of global place recognition [8].

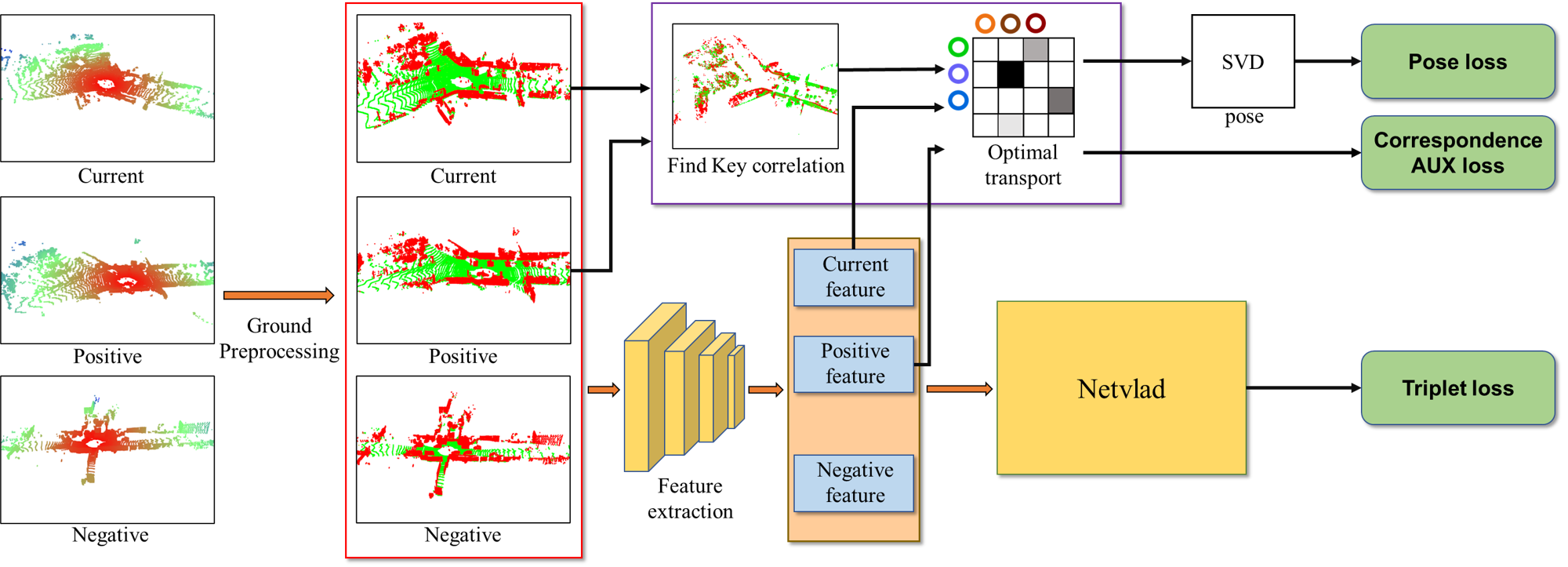

The overview of our proposed method is displayed in Fig. 2. First, when the current points are provided, we consider areas with repetitive and unnecessary geometrical features as the ground and preprocess those sections. Then, local features are extracted from the preprocessed data using the local feature extraction module. At the same time, overlapping points distance are found by performing ICP or a better align algorithm on the preprocessed data between the current point cloud and positive. The overlapping points found through the local features and the align algorithm are then estimated through weight unbalanced optimal transport for correspondence, and the pose is obtained through SVD. The obtained pose is then trained through correspondence auxiliary loss and pose loss. Additionally, the local features are used to generate a global descriptor through the aggregation module, NetVLAD [23]. The generated global descriptor can be trained through triplet loss.

III-A Weakening the Unnecessary Correspondences

Because of the structural characteristics of ground, preprocessing of ground has a significant impact on the performances. Previous researches on global place recognition showed that removing ground and training results in better performance, or preprocessing ground before training is necessary [24, 29, 30]. However, they hardly consider the effectiveness of ground preprocessing in the aspect of the feature correspondence for global place recognition and relocalization.

Local features in 3D LiDAR place recognition are created by condensing the geometric shape information inherent in the point cloud. In other words, local features depend on the geometric information contained within the point cloud [22, 31]. In 3D LiDAR data, the ground exhibits a continuous plane structure, resulting in the inclusion of numerous repetitive plane structure details in the local features during feature extraction [11]. These repetitive plane structure details can act as outliers that lead to incorrect geometric correlations between two scenes, causing confusion in feature correspondence. Therefore, data with a continuous plane structure, such as the ground, can hinder correspondence during the model training process and potentially degrade performance. By weakening the influence of structures with repetitive plane patterns from a geometric perspective, we find better correspondence between local features in place recognition. We propose a method to mitigate the impact of unnecessary correspondence from this perspective. Geometric characteristics that are indicative of repetitive flat plane structures include flatness, uprightness, elevation, and laneness [32]. We leverage these attributes to assess the ground using a probabilistic model. The ground can be determined by the following:

| (3) |

where is the ’th section in LiDAR point cloud, is ’th section point probability that the ’th section of LiDAR point from the perspective of planeness, uprightness, elevation, and flatness is ground. is the probability that the ’th section of LiDAR point is ground, expressed as the product of . If in Eqn. (3) is high enough, the ’th section is estimation ground. Detailed formulas are explained in [32]. By weakening the influence of the ground that may induce false-positive correspondece, outliers are eliminated by having lower probability when estimating correspondences, enabling more accurate feature association.

III-B Strengthening the Necessary Correspondences

We also consider the geometric correlation between points in two scenes to assess the importance of features for correspondence estimation. By considering the geometric correlation between points in two scenes, robust correspondence estimation can be made, leading to a reduction in outliers and improvement in performance of place recognition. This results in improved estimation of relocalization and global place recognition.

To enhance the correspondence accuracy based on feature importance assessment, we rewrite Eqn. 1 as the following:

| (4) |

where is the geometric correlation weight for and . This weight matrix is defined as:

where and are probabilistic models of the ground for and in Eqn. (3). is defined as:

| (5) |

Here, represents the probabilistic model of geometric relationship, where a stronger geometric relationship is assumed when the geometric distance between and is closer. Therefore, matrix determines whether two scenes in the LiDAR point cloud have correspondences with each other. In Eqn. (5), as the geometric distance between and decreases, signifying a tighter geometric connection, we allocate a greater weight to establish this correspondence.

Since we rewrite using as in Eqn. (4), our estimated correspondence can be given as the following:

| (6) |

However, we found that when we directly apply Eqn. (6), an issue arises where points with lower weights risk getting truncated, thereby missing the opportunity to be considered for the optimal correspondence. Therefore, we directly multiply to in order to obtain in practice: .

Using and , we finally have the optimal feature as the following [5]:

| (7) |

where is ground truth transformation from B to A. Since is the matching cost determined by and , we can have from Eqn. (7). Since and are correlated to each other in Eqn. (6) and Eqn. (7), we iteratively perform optimizations for both and , similar to Expectation-maximization manner.

The proposed method, which takes into account geometric correlations, enhances the learning process by placing greater emphasis on points that are likely to have a genuine correspondence .

GLOBAL PLACE RECOGNITION PERFORMANCE ACCORDING TO EACH METHOD

| Method | AP Metrics 1 | AP Metrics 2 | |||||||

| KITTI | KITTI-360 | KITTI | KITTI-360 | ||||||

| 00 | 08 | 02 | 09 | 00 | 08 | 02 | 09 | ||

| Scan Context [19] | 0.96 | 0.65 | 0.81 | 0.90 | 0.47 | 0.21 | 0.32 | 0.31 | |

| OverlapNet [12] | 0.95 | 0.32 | 0.14 | 0.70 | 0.60 | 0.20 | 0.05 | 0.33 | |

| LCDNet [5] | 0.97 | 0.88 | 0.87 | 0.91 | 0.95 | 0.73 | 0.76 | 0.83 | |

| Ours | 0.99 | 0.90 | 0.91 | 0.94 | 0.98 | 0.79 | 0.82 | 0.88 | |

POINT CLOUD REGISTRATION PERFORMANCE ACCORDING TO EACH METHOD

| Method | Success rate [%] | RME [DEG] | TME [m] | |||||||||||

| KITTI | KITTI-360 | KITTI | KITTI-360 | KITTI | KITTI-360 | |||||||||

| 00 | 08 | 02 | 09 | 00 | 08 | 02 | 09 | 00 | 08 | 02 | 09 | |||

| Scan Context [19] | - | - | - | - | 1.92 | 3.11 | 5.49 | 6.80 | - | - | - | - | ||

| OverlapNet [12] | - | - | - | - | 3.89 | 65.45 | 76.74 | 33.62 | - | - | - | - | ||

| LCDNet [5] | 93.14 | 61.75 | 82.23 | 90.61 | 0.88 | 2.73 | 1.61 | 1.08 | 0.77 | 1.65 | 1.16 | 0.91 | ||

| Ours | 96.39 | 59.59 | 89.84 | 93.99 | 0.70 | 2.62 | 1.38 | 0.92 | 0.59 | 2.13 | 0.89 | 0.73 | ||

COMPARISON OF PERFORMANCE AMONG DNN-BASED METHODS

| Dataset | Sequences | Global Place Recognition | Point Cloud Registration | |||||

| Max F1 | recall@1 [%] | recall@5 [%] | successful rate [%] | RME [DEG] | TME [m] | |||

| LCDNet | KITTI | 00 | 0.927 | 97.681 | 98.870 | 93.14 | 0.876 | 0.775 |

| Ours | 0.951 | 98.870 | 99.762 | 96.39 | 0.703 | 0.597 | ||

| LCDNet | 02 | 0.847 | 88.317 | 94.853 | 76.21 | 0.989 | 1.432 | |

| Ours | 0.899 | 93.045 | 99.304 | 82.32 | 0.956 | 1.198 | ||

| LCDNet | 08 | 0.812 | 84.250 | 96.941 | 61.75 | 2.730 | 1.645 | |

| Ours | 0.836 | 87.461 | 97.094 | 59.59 | 2.624 | 2.130 | ||

| LCDNet | KITTI-360 | 02 | 0.798 | 88.678 | 95.809 | 82.23 | 1.606 | 1.164 |

| Ours | 0.831 | 92.520 | 98.282 | 89.83 | 1.380 | 0.891 | ||

| LCDNet | 04 | 0.783 | 89.675 | 95.838 | 87.72 | 1.397 | 0.996 | |

| Ours | 0.793 | 93.257 | 97.952 | 92.59 | 1.178 | 0.789 | ||

| LCDNet | 05 | 0.811 | 85.647 | 93.442 | 86.03 | 1.626 | 1.034 | |

| Ours | 0.816 | 89.288 | 96.476 | 93.07 | 1.248 | 0.778 | ||

| LCDNet | 06 | 0.879 | 90.940 | 97.241 | 86.52 | 1.257 | 1.025 | |

| Ours | 0.880 | 93.054 | 98.147 | 89.52 | 1.175 | 0.876 | ||

| LCDNet | 09 | 0.828 | 91.047 | 96.654 | 90.61 | 1.084 | 0.905 | |

| Ours | 0.871 | 94.722 | 98.645 | 93.99 | 0.927 | 0.732 | ||

IV Experiments

IV-A Implementation and Setup

To evaluate the performance of place recognition, we check the results from both the global place recognition and point cloud registration perspectives. we use the KITTI odometry dataset [33] and KITTI-360 dataset [34] to evaluate our proposed method. The KITTI and KITTI-360 datasets are LiDAR datasets, making them suitable for evaluation due to their inclusion of moving vehicles in various dynamic urban environments. KITTI Sequence 00 have same driving direction of the loop, and KITTI sequence 08 has inverse driving direction of the loop. KITTI-360 sequences 02 and 09 both have driving loops in both same and inverse directions, and they contain the highest number of loop closures. Therefore, We used KITTI sequences 00, 08 and KITTI-360 sequences 02, 09 for testing. Hence, we employ these sequences to assess the performance of our method across loops originating from both forward and reverse driving directions. In addition, for a detailed performance comparison with the state-of-the-art DNN-based method, LCDNet, we conducted tests on KITTI sequences 00, 02, 08 (excluding sequences 05, 06, 07, 09 that used to train) and KITTI-360 sequences 02, 04, 05, 06, and 09.

Similar to [5], to train and evaluate our method we designate locations within 4 meters as positive (same place) pairs and locations more than 10 meters apart as negative (different place) pairs at the source point cloud and the target map. For preprocessed ground training, we remove the ground that uses the probability model [35] to estimate the ground and only use non-ground points for training. In training using in Eqn. (7), we find the overlap points on 4096 keypoints extracted from baseline in 3D coordinates. To emphasize the effect of geometric relationships, when the points of two scenes that have registered point clouds are within 0.5m of each other, it is considered a point of the overlapping location. In the case of overlapping location, we assign a value of 0 to in Eqn. (5). Conversely, for points that do not overlap, we assign with a value of . We train the DNN-based model [5] and Ours on KITTI sequences 05, 06, 07, and 09. All methods use a batch size of 6 and epoch of 150 and are trained with the same hyperparameters on NVIDIA 4090 RTX GPU.

IV-B Global Place Recognition

We evaluate our proposed method from the perspective of global place recognition using two metrics: AP (Average Precision) Metric 1 and AP Metric 2. AP Metric 1 evaluates loop-closing detection performance for the pair with the highest similarity between the current point cloud and the previous point cloud. In contrast, AP Metric 2 evaluates loop-closing detection performance for not only the pair with the highest similarity but also all previous point clouds. In real-world loop detection, the measurement approach of AP Metric 1 can be more accurate. However, to assess loop detection performance in challenging scenarios, AP Metric 2 may be a better evaluation metric [5]. We evaluate the global place recognition performance by comparing our method with state-of-the-art techniques, including Scan Context [19], OverlapNet [12], and LCDNet [5]. The results can be found in Table I. Additionally, the global place recognition section in Table III provides a detailed comparison between the DNN-based methods, LCDNet and our method, by summarizing Max F1, recall@1, and recall@5, which represent the percentage of correct answers within the top 1% and 5% of candidates. The metrics with the highest performance in Table I and Table III are highlighted in bold symbol.

In most cases, the AP Metric 1 approach shows similar results for forward loop detection, but significant differences are observed in reverse loop detection. Particularly, OverlapNet exhibits notably low performance in sequences with reverse loops, such as KITTI sequence 08 and KITTI-360 sequence 02, and Scan Context also drops to 0.65 in KITTI sequence 08. In the case of AP Metric 2, both OverlapNet and Scan Context demonstrate low performance in KITTI sequence 08, which includes reverse loops, with an AP of 0.20 and 0.21. On the other hand, Our method does not show a significant performance drop. At the same time, Our method consistently outperforms LCDNet across all sequences, achieving the highest performance. The performance comparison between LCDNet and Our method in Table III also shows higher performance for Our method across all sequences. This result demonstrates that considering the necessary information for correspondence estimation helps in rotation and translation invariant global place recognition.

-0cm-0cm

IV-C Relocalization

We also evaluate our proposed technique from the point cloud registration perspective using Success rate, RME (Rotation Mean Error), and TME (Translation Mean Error). Successful rate indicates the success ratio of the point cloud registration; where we consider a case to be successful if both the rotation error is less than 5 degrees and the translation error is less than 2 meters. Similar to the global place recognition evaluation, we assess point cloud registration performance by comparing it with state-of-the-art techniques, including Scan Context, OverlapNet, and LCDNet. Both LCDNet and ours can calculate the 6-DOF transform between the two point clouds using UOT-based relative position estimation, while Scan Context and OverlapNet can only estimate the yaw angle between point clouds. Therefore, Scan Context and OverlapNet measure metrics related to RME only. The results can be found in Table II. The point cloud registration section in Table III provides a detailed comparison between the DNN-based LCDNet and our method, summarizing the performance for success rate, RME, and TME across multiple sequences. The metrics with the highest performance in Table II and Table III are highlighted in bold symbol.

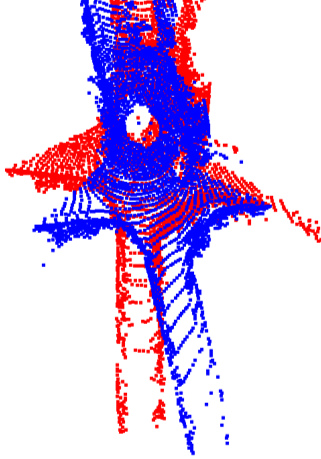

In the case of RME, all methods perform well in forward loops, but as with global place recognition, differences in performance are noticeable in reverse loops. OverlapNet, in particular, shows a significant error of 76.74 degrees in KITTI-360 sequence 02, indicating its inability to estimate rotation accurately in reverse loops. Scan Context also exhibits RME measurements exceeding 5 degrees in some sequences. However, DNN-based models consistently achieve RME measurements below 5 degrees for all sequences. Additionally, Our method outperforms most performance metrics, not only in terms of RME. LCDNet achieves higher performance in success rate and TME for KITTI sequence 08. However, according to the summarized point cloud registration in Table 3, our method outperforms in all other sequences. As shown in Fig. 3, it can be observed that our method consistently achieves higher point cloud registration performance in most cases due to its robust feature correspondence. This result highlights the importance of feature correspondence, as predicted by geometric correlation, in point cloud registration.

V Conclusion

In this paper, we presented a method of robust feature correspondence estimation for improving 3D place recognition and relocalization. We proposed a probabilistic technique to enhance the necessary features and suppress the unnecessary features simultaneously in the estimation of correspondence, by considering the geometric characteristics of the 3D scenes. To weakening the strong unnecessary correspondence, we first showed that features on the ground can act as outliers when estimating correspondence therefore should be preprocessed. We also found the key correlations through the geometric registration method to estimate robust correspondence by focusing on necessary features which can enhance both global place recognition and relocalization. We provided the evaluation results to show improvement performances in the place recognition problem on the KITTI odometry dataset and KITTI-360 dataset, an urban dynamic environment dataset. Our approach demonstrated significant improvement in both 3D point cloud registration and global place recognition performance.

References

- [1] S. Thrun, W. Burgard, and D. Fox, Probabilistic robotics, ser. Intelligent robotics and autonomous agents. MIT Press, 2005.

- [2] H. Yu, J. Moon, and B. Lee, “A variational observation model of 3d object for probabilistic semantic slam,” in 2019 International Conference on Robotics and Automation (ICRA). IEEE, 2019, pp. 5866–5872.

- [3] H. Jang, S. Yoon, and A. Kim, “Multi-session underwater pose-graph slam using inter-session opti-acoustic two-view factor,” in 2021 IEEE International Conference on Robotics and Automation (ICRA). IEEE, 2021, pp. 11 668–11 674.

- [4] M. Mittal, R. Mohan, W. Burgard, and A. Valada, “Vision-based autonomous uav navigation and landing for urban search and rescue,” in Robotics Research: The 19th International Symposium ISRR. Springer, 2022, pp. 575–592.

- [5] D. Cattaneo, M. Vaghi, and A. Valada, “Lcdnet: Deep loop closure detection and point cloud registration for lidar slam,” IEEE Transactions on Robotics, vol. 38, no. 4, pp. 2074–2093, 2022.

- [6] H. Durrant-Whyte and T. Bailey, “Simultaneous localization and mapping: part i,” IEEE robotics & automation magazine, vol. 13, no. 2, pp. 99–110, 2006.

- [7] T. Bailey and H. Durrant-Whyte, “Simultaneous localization and mapping (slam): Part ii,” IEEE robotics & automation magazine, vol. 13, no. 3, pp. 108–117, 2006.

- [8] K. Vidanapathirana, M. Ramezani, P. Moghadam, S. Sridharan, and C. Fookes, “Logg3d-net: Locally guided global descriptor learning for 3d place recognition,” in 2022 International Conference on Robotics and Automation (ICRA). IEEE, 2022, pp. 2215–2221.

- [9] A. Segal, D. Haehnel, and S. Thrun, “Generalized-icp.” in Robotics: science and systems, vol. 2, no. 4. Seattle, WA, 2009, p. 435.

- [10] P. Dellenbach, J.-E. Deschaud, B. Jacquet, and F. Goulette, “Ct-icp: Real-time elastic lidar odometry with loop closure,” in 2022 International Conference on Robotics and Automation (ICRA). IEEE, 2022, pp. 5580–5586.

- [11] T. Shan and B. Englot, “Lego-loam: Lightweight and ground-optimized lidar odometry and mapping on variable terrain,” in 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). IEEE, 2018, pp. 4758–4765.

- [12] X. Chen, T. Läbe, A. Milioto, T. Röhling, O. Vysotska, A. Haag, J. Behley, and C. Stachniss, “Overlapnet: Loop closing for lidar-based slam,” arXiv preprint arXiv:2105.11344, 2021.

- [13] J. Komorowski, M. Wysoczanska, and T. Trzcinski, “Egonn: Egocentric neural network for point cloud based 6dof relocalization at the city scale,” IEEE Robotics and Automation Letters, vol. 7, no. 2, pp. 722–729, 2021.

- [14] J. Du, R. Wang, and D. Cremers, “Dh3d: Deep hierarchical 3d descriptors for robust large-scale 6dof relocalization,” in Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, August 23–28, 2020, Proceedings, Part IV 16. Springer, 2020, pp. 744–762.

- [15] J. Li and G. H. Lee, “Usip: Unsupervised stable interest point detection from 3d point clouds,” in Proceedings of the IEEE/CVF international conference on computer vision, 2019, pp. 361–370.

- [16] J. Jiang, J. Wang, P. Wang, P. Bao, and Z. Chen, “Lipmatch: Lidar point cloud plane based loop-closure,” IEEE Robotics and Automation Letters, vol. 5, no. 4, pp. 6861–6868, 2020.

- [17] S. Yang and S. Scherer, “Monocular object and plane slam in structured environments,” IEEE Robotics and Automation Letters, vol. 4, no. 4, pp. 3145–3152, 2019.

- [18] G. Kim, B. Park, and A. Kim, “1-day learning, 1-year localization: Long-term lidar localization using scan context image,” IEEE Robotics and Automation Letters, vol. 4, no. 2, pp. 1948–1955, 2019.

- [19] G. Kim and A. Kim, “Scan context: Egocentric spatial descriptor for place recognition within 3d point cloud map,” in 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). IEEE, 2018, pp. 4802–4809.

- [20] G. Kim, S. Choi, and A. Kim, “Scan context++: Structural place recognition robust to rotation and lateral variations in urban environments,” IEEE Transactions on Robotics, vol. 38, no. 3, pp. 1856–1874, 2021.

- [21] M. A. Uy and G. H. Lee, “Pointnetvlad: Deep point cloud based retrieval for large-scale place recognition,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2018, pp. 4470–4479.

- [22] C. R. Qi, H. Su, K. Mo, and L. J. Guibas, “Pointnet: Deep learning on point sets for 3d classification and segmentation,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2017, pp. 652–660.

- [23] R. Arandjelovic, P. Gronat, A. Torii, T. Pajdla, and J. Sivic, “Netvlad: Cnn architecture for weakly supervised place recognition,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2016, pp. 5297–5307.

- [24] J. Komorowski, “Minkloc3d: Point cloud based large-scale place recognition,” in Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, 2021, pp. 1790–1799.

- [25] L. Hui, H. Yang, M. Cheng, J. Xie, and J. Yang, “Pyramid point cloud transformer for large-scale place recognition,” in Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), October 2021, pp. 6098–6107.

- [26] S. Shi, C. Guo, L. Jiang, Z. Wang, J. Shi, X. Wang, and H. Li, “Pv-rcnn: Point-voxel feature set abstraction for 3d object detection,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2020, pp. 10 529–10 538.

- [27] X. Xu, S. Lu, J. Wu, H. Lu, Q. Zhu, Y. Liao, R. Xiong, and Y. Wang, “Ring++: Roto-translation-invariant gram for global localization on a sparse scan map,” IEEE Transactions on Robotics, 2023.

- [28] T. Séjourné, G. Peyré, and F.-X. Vialard, “Unbalanced optimal transport, from theory to numerics,” arXiv preprint arXiv:2211.08775, 2022.

- [29] R. Dubé, A. Cramariuc, D. Dugas, J. Nieto, R. Siegwart, and C. Cadena, “Segmap: 3d segment mapping using data-driven descriptors,” arXiv preprint arXiv:1804.09557, 2018.

- [30] W. Zhang and C. Xiao, “Pcan: 3d attention map learning using contextual information for point cloud based retrieval,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2019, pp. 12 436–12 445.

- [31] C. R. Qi, L. Yi, H. Su, and L. J. Guibas, “Pointnet++: Deep hierarchical feature learning on point sets in a metric space,” in Advances in Neural Information Processing Systems, I. Guyon, U. V. Luxburg, S. Bengio, H. Wallach, R. Fergus, S. Vishwanathan, and R. Garnett, Eds., vol. 30. Curran Associates, Inc., 2017. [Online]. Available: https://proceedings.neurips.cc/paper˙files/paper/2017/file/d8bf84be3800d12f74d8b05e9b89836f-Paper.pdf

- [32] H. Lim, M. Oh, and H. Myung, “Patchwork: Concentric zone-based region-wise ground segmentation with ground likelihood estimation using a 3d lidar sensor,” IEEE Robotics and Automation Letters, vol. 6, no. 4, pp. 6458–6465, 2021.

- [33] A. Geiger, P. Lenz, and R. Urtasun, “Are we ready for autonomous driving? the kitti vision benchmark suite,” in 2012 IEEE conference on computer vision and pattern recognition. IEEE, 2012, pp. 3354–3361.

- [34] Y. Liao, J. Xie, and A. Geiger, “KITTI-360: A novel dataset and benchmarks for urban scene understanding in 2d and 3d,” Pattern Analysis and Machine Intelligence (PAMI), 2022.

- [35] S. Lee, H. Lim, and H. Myung, “Patchwork++: Fast and robust ground segmentation solving partial under-segmentation using 3d point cloud,” in 2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). IEEE, 2022, pp. 13 276–13 283.