NAS-ASDet: An Adaptive Design Method for Surface Defect Detection Network using Neural Architecture Search

Abstract

Deep convolutional neural networks (CNNs) have been widely used in surface defect detection. However, no CNN architecture is suitable for all detection tasks, and designing effective task-specific networks requires considerable effort. Neural architecture search (NAS) makes it possible to automatically generate adaptive, data-driven networks. Here, we propose a new method called NAS-ASDet to adaptively design networks for surface defect detection. First, a refined and industry-appropriate search space that can adaptively adjust the feature distribution is designed, which consists of repeatedly stacked novel basic cells with searchable attention operations. Then, a progressive search strategy with a deep supervision mechanism is used to explore the search space faster and better. This method can design high-performance and lightweight defect detection networks under the data scarcity of industrial scenarios. The experimental results on four datasets demonstrate that the proposed method achieves superior performance and a relatively lighter model size compared to other competitive methods, including both manual and NAS-based approaches.

keywords:

deep learning, neural architecture search (NAS), surface defect detection, defect segmentation

1 Introduction

In industrial production, defects inevitably occur on the end-product surface. Surface defect detection is an effective method to control the quality of industrial products. With the rapid development of intelligent manufacturing, automated and intelligent defect detection has gradually become critical [1].

Recently, deep convolutional neural networks (CNNs) have been proven effective in surface defect detection. By designing different neural networks, improved detection performance is obtained. For example, [2] and [3] enhance the ability to focus on critical defects in complex semantics by incorporating attention modules. [4] and [5] design deformable convolution and dilated convolution, respectively, to improve the detection of irregular and diverse defects by expanding the receptive fields. [6, 7, 8] use multi-scale feature fusion to integrate information from different levels, enhancing the awareness of defect scale variations. However, industrial scenarios encompass a wide range of surface defect types, each with significantly different characteristics [9]. There is no unified paradigm for designing an effective network that matches the data characteristics.

The design of traditional detection networks often relies on human expertise and repetitive experimentation, with certain difficulties and challenges [10]. Firstly, the design process lacks automation and optimization capabilities. Manual network design and adjustments require extensive trial and error, consuming a lot of manpower, time, and computational resources. Secondly, due to limitations in human cognition, traditional designs usually rely on predetermined network connectivity (VGGNet [11], ResNet [12], etc), which may limit the ability to exploit feature information, resulting in suboptimal performance. Moreover, surface defects are diverse and the features of different types of defects are quite different. It is difficult for a single network to show general good performance on different complex detection tasks, and it needs to rely on additional manual design and adjustment.

To overcome these shortcomings, we introduce neural architecture search (NAS) into the network design for surface defect detection. NAS can automatically design data-driven networks to adapt to diverse requirements, so it can improve the efficiency of network design and give the searched network excellent performance. At present, NAS has made many breakthroughs in the field of natural imaging. Early NAS research searched the entire network from scratch, and the resulting huge resource consumption limited the development of NAS. Therefore, researchers improved NAS with respect to search space and search strategy. Regarding the search space, NASNet [13] and NAS-Unet [14] use repeated cells to limit the search space size, which reduces the search difficulty. LiDNAS [15] builds the search space on predefined backbone networks to balance layer diversity and search space size. Regarding the search strategy, by adding a weight sharing mechanism, the gradient descent search strategy reduces the search cost.

Although NAS has been successfully applied in natural scenarios, there are few reports in the literature on the performance of NAS for surface defect detection network design in complex industrial scenarios. When applying NAS technology to surface defect detection network design, it is important to focus on unique industrial requirements, distinct from natural scenarios.

1. Limited available defect samples: The nature of industrial production lines leads to the generation of limited defective samples, which poses challenges to the search process. To overcome this issue, one solution is to use a reduced search space to decrease data requirements [16]. However, due to restricted layer diversity, using reduced search spaces designed for natural scenarios may restrict the expressive power and potentially overlook excellent architectures for defect detection tasks. Therefore, NAS methods for surface defect detection should use a small search space and give careful consideration to defect features, focusing on structures and parameters that are relevant to the defect characteristics, to strike a balance between search space size and expressive capability.

2. Stable detection accuracy and robustness: Unlike natural image segmentation, which focuses more on overall scene understanding, surface defect detection requires stable and precise localization and identification of boundary contours to ensure product quality. Additionally, the production line introduces environmental disturbances such as lighting variations, noise, and occlusion, making it necessary for the detection capability to be robust. Therefore, NAS methods need to fully consider the challenges in surface defect detection in order to adaptively design a network that meets the requirements.

3. Lightweight and low-computation: Detection equipment on industrial production lines typically faces constraints, including limited memory space and constrained computational resources. Therefore, designing a low-computation and lightweight network is a crucial aspect of industrial network design. To meet the requirements of detection accuracy within these resource limitations, the surface defect detection network designed by NAS should be lightweight while maintaining network performance.

To achieve the aforementioned requirements, we propose a NAS method specifically tailored for designing surface defect detection networks. Our approach considers both the search space and the search strategy. Regarding the search space, we design a refined and industry-appropriate search space that gives NAS good network design ability with limited defect samples. This search space enables data-driven design of lightweight detection networks that robustly adapt to various surface defect scenarios while balancing accuracy and computation. Additionally, we incorporate prior knowledge from manually designed detection networks into the proposed NAS framework, enhancing the accuracy and robustness of the searched networks for different detection tasks. This involves the use of large receptive field cells and searchable attention operations to improve adaptability in complex environmental conditions, as well as a multi-scale feature fusion structure that can adaptively adjust the feature distribution to handle diverse shapes and scales. As for the search strategy, we enhance the efficiency of the gradient optimization search strategy (DARTS) so that the search space can be explored more efficiently, ensuring a performance-driven and time-efficient network design process.

The contributions of this article are as follows:

1. We propose a method to adaptively design surface defect detection networks based on NAS, called NAS-ASDet. This scheme has a refined and industry-appropriate search space, which can adaptively search for a lightweight defect detection network in industrial scenarios with limited data (compared to natural scenarios), reducing the workload of manual detection network design.

2. A basic cell containing multiple receptive fields with searchable attention operations is provided to construct the search space, improving the detection of irregular and diverse defects and enhancing the ability to automatically focus on key defects in complex environments.

3. A multi-scale feature fusion that can adaptively adjust the feature distribution is designed in the proposed NAS framework, enhancing the network's adaptability to defects of various scales and further improving the detection accuracy.

4. We design a progressive search strategy with a deep supervision mechanism, based on the gradient optimization search strategy, to effectively explore the search space. This strategy enables the search process to adjust the architecture to the defects to be detected better and faster, further improving the efficiency of network design.

5. The proposed method is capable of searching for networks with state-of-the-art results on four different surface defect datasets. Compared to recent manually designed detection networks, NAS-ASDet uses only approximately 10% of the parameters and 5% of the FLOPs while achieving the best performance. This shows that the proposed NAS method has a certain generality and theoretical value.

The rest of this article is organized as follows. Section 2 briefly introduces the related research work, including CNN-based methods for surface defect detection and the development of NAS methods. The proposed method for adaptive surface defect detection is described in Section 3. Section 4 presents the experiments and discussions. Conclusions are given in Section 5.

2 Related work

2.1 CNN-based Surface Defect Detection

Since pixel-level defect detection can describe the defect contour boundary, which provides a more valuable reference for the evaluation of defect severity, segmentation maps are often used for defect localization. Inspired by FCN [17], many methods (U-Net [18], PSPNet [19], DeepLab[20], etc.) try to extract more informative features from image patches, leading to successful segmentation results. However, the performance of algorithms is often limited by the complex and diverse defect types in industrial scenes. Therefore, in recent years, defect segmentation methods have been adapted according to specific application scenarios to achieve improved detection performance.

Addressing the challenges of fuzzy boundaries (low contrast) and noise interference, DCAM-Net [4] used deformable convolution and attention mechanism to locate the contrast of irregular strip surface defects. TSERNet [21] employed a prediction and refinement strategy, using edge information twice to generate saliency maps with more accurate boundaries and precise defect positions for steel strip defects. Addressing the challenges of multi-scale variations of inspected defects, MRD-Net [7] captured short and long distance patterns through a multiscale feature enhancement fusion and reverse attention network. CSEPNet [22] designed a cross-scale edge purification network to highlight defects in steel images and maintain important edge information. Addressing the challenge of detecting small defect targets, [6] realized the ultrasmall bolt defect detection through feature fusion, attention mechanisms, and extraction of fused salient regions. [23] improved the detection effect of small targets in wire and arc additive manufacturing by attaching a multi-SPP structure to the FPN. In addition, some studies such as FHENet [24] and BV-YOLOv5S [25] focus on the design of lightweight detection networks to address the deployment challenges of large models in industrial scenarios.

Although these methods achieve good performance, they still require improvement: 1) They are usually based on a predetermined network connectivity. This means that features are extracted restrictively and fixedly, rather than being driven by data features, which limits performance. 2) Even though some methods try to design from scratch, they are usually customized for specific tasks. There is no single network that has shown competency for all detection tasks. Therefore, designing effective networks for specific tasks consumes considerable time and computation resources.

2.2 Neural Architecture Search (NAS)

NAS aims to design the neural architecture in an automatic way to maximize performance while using limited computing resources.

2.2.1 Gradient Optimization-Based NAS

The differentiable search method converts a discrete search space into a continuous differentiable form so that gradient descent can be applied to the search process, making it more efficient than black-box optimization methods such as reinforcement learning and evolutionary algorithms. The earliest gradient-based idea was proposed in DARTS [26]. However, two problems remain [27] in gradient-based DARTS: (a) the full set of candidate operations participates in the whole search process, which leads to longer search times and heavier computational overhead; (b) the transfer with rough decoupling may lead to performance variation. Many studies have improved DARTS. DATA [28] developed the ensemble Gumbel softmax estimator, which realized migration between the search and validation stages. PC-DARTS [29] proposed channel sampling and edge normalization technologies to reduce GPU resource consumption. P-DARTS [30] considered performance collapse and adopted a progressive search strategy. Even so, stable and efficient search strategies remain a popular research topic.

2.2.2 Neural Architecture Search for Image Segmentation

Auto-DeepLab [31] is generally recognized as the pioneering work demonstrating NAS application in image segmentation. It also extended the gradient optimization-based strategy to the segmentation task for the first time. NAS-Unet [14] improved on the baseline U-net using NAS and showed higher performance. FasterSeg [32] used a multibranch architecture to overcome model breakdown. DCNAS [33] designed a more complex supernet than Auto-DeepLab, which added cross-layer connections to the search space. DNAS [34] designed a three-level decoupled search strategy to reduce the training difficulty. In addition, in order to eliminate the deployment pressure of large networks, some methods [35, 36, 37, 38] also take into account the combined effect of NAS and hardware awareness to search for lighter architectures. However, most of the current NAS methods are designed based on natural scenes.

Although there have been some recent attempts of NAS in industrial scenarios, most of them are limited to defect classification or focused on specific tasks. For example, [39] and [40] realized defect classification of steel cracks using NAS. [41] explored an automated defect detection method for industry wood veneer, and [42] developed a NAS-based detection approach for analyzing defects in photovoltaic cells in electroluminescence images. Such methods do not meet the requirements of robustly designing precise defect localization in diverse industrial scenarios.

Therefore, NAS-based design of high-performance pixel-level defect detection networks for industrial scenarios remains largely unexplored.

3 Proposed method

3.1 System Overview

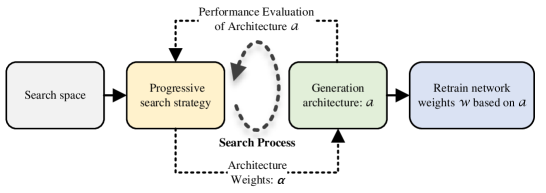

Considering the difficulty of manually designing detection networks and the challenges that the unique industrial requirements pose to NAS, we focus on surface defect detection and propose an adaptive network design method, called NAS-ASDet. In this method, surface defect detection is regarded as a pixel-level segmentation task. NAS-ASDet allows the network to adaptively adjust its connection mode according to the data characteristics, and finally obtains a definite detection network that is both high-performing and lightweight. The adaptive network design process of the proposed method is shown in Figure 1.

1. First, the refined and industry-appropriate lightweight search space is defined. It is composed of repeatedly stacked expressive basic cells, where the cells consist of a given set of candidate operations (e.g., convolution, pooling). Correspondingly, the search scope is limited to the structure of the cell rather than the whole network.

2. Next, the lightweight network architecture is searched. A progressive search strategy is used to explore the search space gradually. To make gradient optimization applicable, each candidate operation is assigned an updatable architectural weight $\alpha$, and the cell consists of candidate operations with weight assignments. The cell performance is fed back to update $\alpha$. According to the contribution of each candidate operation to performance, the weak operations are progressively removed so that basic cells with favorable performance are obtained.

3. Then, the original supernet is replaced by the searched cells to obtain a definite architecture. On this basis, the network weights of the determined architecture are retrained to ensure complete convergence.

4. Finally, the fully trained deterministic lightweight network is used to achieve end-to-end defect segmentation and then to evaluate the detection performance.

The above NAS process has two core components: (a) the design of the refined and industry-appropriate lightweight search space, including the basic cell containing multiple receptive fields with searchable attention operations and the network architecture with adaptively fused multiscale features; (b) the design of a progressive search strategy with a deep supervision mechanism, where the search process is divided into multiple stages to explore the search space better and faster.

3.2 Search Space Design

This section describes the refined and industry-appropriate lightweight search space designed for surface defect detection, which defines the set of architectures that can be represented in theory. Considering the data scarcity in industrial scenarios, a small search space should be used. Inspired by [43], we use a reduced search space based on repeatedly stacked cells. Specifically, this cell-based search space includes two levels, the cell level and the network level, as shown in Figure 2. At the cell level, we design a lightweight cell containing multiple receptive fields with searchable attention operations to enrich the candidate operations. At the network level, we propose a new network-level framework with a multi-scale feature fusion that can adaptively adjust the feature distribution.

3.2.1 Cell Level Search Space

Since a small search space should be used to reduce the search difficulty, and noting that many handcrafted architectures are based on repeated fixed blocks, we follow [13] and design the search space for the lightweight network based on two types of repeatedly stacked lightweight cells: normal cells and reduction cells. Both cell types follow the same construction pattern; the output feature map of a normal cell has the same dimensions as its input, whereas that of a reduction cell is half the size of its input.

Many manually designed detection networks enhance their ability to detect defects in complex, irregular, and diverse contexts by using stronger multiscale representation. Therefore, inspired by Res2Net [44] to expand the receptive field, we design the cell to generate multiple available receptive fields at a finer cell-level granularity. The cell-level search space is shown in Figure 2(a).

Because industrial scenes are more seriously affected by complex environments and lighting changes, manually designed detection networks usually add an attention mechanism to enhance the ability to extract important feature information. However, when and how to add attention operations still requires researchers to analyze each specific problem. Therefore, we propose to use attention operations, including channel attention [45] and spatial attention [46], to enrich the candidate operations so that cells can adaptively focus on key defects in complex environments.

Since industrial scenarios have lightweight and low-computation requirements, we first collect basic operations that are widely used in detection networks (such as convolution and pooling), and then use separable convolutions instead of ordinary convolutions to keep the cells lightweight. The final full candidate operation set is summarized in Table 1.

| ID | Operation | Note |
| --- | --- | --- |
| 1 | Zero | no operation |
| 2 | Identity | skip connection |
| 3 | Sep_conv_3x3 | 3x3 separable convolution |
| 4 | Sep_conv_5x5 | 5x5 separable convolution |
| 5 | Sep_conv_7x7 | 7x7 separable convolution |
| 6 | Dil_conv_3x3 | 3x3 dilated separable convolution |
| 7 | Dil_conv_5x5 | 5x5 dilated separable convolution |
| 8 | Max_pool_3x3 | 3x3 max pooling |
| 9 | Avg_pool_3x3 | 3x3 average pooling |
| 10 | Channel_att | channel attention |
| 11 | Spatial_att | spatial attention |
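To illustrate why separable convolutions keep the cells lightweight, the following sketch compares weight counts for an ordinary convolution and a depthwise-separable one. It assumes a single depthwise layer followed by a single pointwise (1x1) layer and ignores biases and normalization; the exact layer composition used in the paper's `Sep_conv` operations is not stated here, and the function names are illustrative.

```python
def conv_params(c_in, c_out, k):
    """Weights in an ordinary k x k convolution (bias ignored)."""
    return k * k * c_in * c_out


def separable_conv_params(c_in, c_out, k):
    """Weights in a depthwise k x k conv followed by a pointwise 1 x 1 conv."""
    depthwise = k * k * c_in      # one k x k filter per input channel
    pointwise = c_in * c_out      # 1 x 1 conv that mixes channels
    return depthwise + pointwise


# Example: 64 -> 64 channels with the three kernel sizes from Table 1.
for k in (3, 5, 7):
    full = conv_params(64, 64, k)
    sep = separable_conv_params(64, 64, k)
    print(f"{k}x{k}: ordinary={full}, separable={sep}, ratio={full / sep:.1f}")
```

Under these assumptions the saving grows with kernel size, which is one reason large-kernel candidates such as 7x7 remain affordable in a lightweight search space.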

Each cell is viewed as a directed acyclic graph consisting of multiple edges and nodes. Two of the nodes are inputs, one is the output, and the remaining are intermediate nodes. The transformation from the $i$-th node to the $j$-th node is connected by a mixed edge, which is a mixture of all candidate operations, denoted $\bar{o}^{(i,j)}$. To enable gradient optimization, the selection of discrete operations is relaxed into a continuous optimization problem. Specifically, we assign each candidate operation $o$ on each edge an architecture weight $\alpha_{o}^{(i,j)}$, and a softmax is applied to ensure that the normalized weights are non-negative and sum to one on every edge. The information flow between the $i$-th node and the $j$-th node can be calculated as:

$$\bar{o}^{(i,j)}(x_i) = \sum_{o \in \mathcal{O}} \frac{\exp\left(\alpha_{o}^{(i,j)}\right)}{\sum_{o' \in \mathcal{O}} \exp\left(\alpha_{o'}^{(i,j)}\right)} \, o(x_i) \tag{1}$$

where $i < j$ represents that node $i$ is a forward node of node $j$, and the data flow needs to be transmitted from $i$ to $j$. $x_i$ represents the $i$-th node feature map in the cell, and each candidate operation $o$ belongs to the candidate operation set $\mathcal{O}$ with a relative weight $\alpha_{o}^{(i,j)}$. $\bar{o}^{(i,j)}(x_i)$ represents the feature map corresponding to node $j$ after data is transmitted from node $i$ to node $j$.
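The continuous relaxation on a single edge can be sketched in a few lines. This is a toy illustration rather than the authors' implementation: it assumes the normalization is a softmax over the architecture weights (as in DARTS), and the three candidate operations are hypothetical stand-ins acting on a plain list of numbers instead of real convolution, pooling, or attention layers.

```python
import math


def softmax(values):
    """Numerically stable softmax over a list of floats."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical stand-ins for candidate operations on a 1-D feature vector.
CANDIDATE_OPS = {
    "zero": lambda x: [0.0 for _ in x],
    "identity": lambda x: list(x),
    "double": lambda x: [2.0 * v for v in x],  # placeholder for a learned op
}


def mixed_edge(x, alpha):
    """Softmax-weighted sum of every candidate operation applied to x."""
    names = list(CANDIDATE_OPS)
    probs = softmax([alpha[name] for name in names])
    out = [0.0] * len(x)
    for prob, name in zip(probs, names):
        y = CANDIDATE_OPS[name](x)
        out = [acc + prob * v for acc, v in zip(out, y)]
    return out, dict(zip(names, probs))


# With equal architecture weights every operation contributes one third.
output, probs = mixed_edge([3.0], {"zero": 0.0, "identity": 0.0, "double": 0.0})
print(output, probs)
```

Because the output is a smooth function of the architecture weights, gradients of a loss can flow back into them, which is what makes the operation choice searchable by gradient descent.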

Each intermediate node accepts all forward intermediate node feature maps and the output of the previous two cells:

| (2) |

where represents the feature map of the -th intermediate node, denotes the forward cell output, and indicate the previous and previous-previous cells, respectively.

Then, the cell concatenates all intermediate nodes and the residual feature map sum as its output:

| (3) |

where represents the output of the -th cell.

Finally, the architecture with candidate operation set can be formed by stacking multiple cells:

| (4) |

where and denote the normal cell and reduction cell. represents the direction of information transmission.

This lightweight cell-level search space provides several guarantees for detection performance: 1) Instead of using fixed connections and operations, the cell can be automatically searched and adaptively select an appropriate connection mode, such that more appropriate fine-grained features can be obtained and multiple available receptive fields can be provided, which improves the ability to detect defects. 2) Owing to the additional forward node, the network can choose to accept more forward information and expand the receptive field. 3) Attention operations enrich the candidate operation set, and their use is automatically determined by the search, which enhances the ability to automatically focus on key defects in complex environments.

3.2.2 Network Level Search Space

The segmentation NAS pioneered by Auto-DeepLab [31] usually uses single-level features for target prediction. However, defect scales vary, and single-layer features offer limited ability to recognize targets of different scales. Noting that the effectiveness of combining multiscale features for performance improvement has been demonstrated in handcrafted architectures, we are motivated to integrate this knowledge of multiscale feature fusion into the proposed NAS framework to enhance the ability of the network to deal with multi-scale challenges, as shown in Figure 2(b).

It should be noted that although handcrafted network performance proves that multiscale feature combination is effective, it does not indicate that in FPN-based detection architectures, balanced multiscale feature fusion is best. Different surface defects present different shapes and scales, which signifies that different level features have different levels of importance for detection, e.g., shallow features are more important for small-scale detection, and vice versa [7]. Therefore, we assign different weights at different feature levels, which are learned during the training process, toward adaptively adjusting the importance distribution of features and focusing on more useful information. The implementation details are as follows.

First, for given defect images, multilevel features are extracted by repeatedly stacking cells. The last layer features of each level are used for subsequent adaptive feature fusion. To integrate feature maps on different scales, these maps are resized to :

| (5) |

where indicates the upsampling operation by enlarging features by times, and . We concatenate in the channel dimension to obtain the original feature fusion map :

| (6) |

Next, learnable weights are added to , which are used to adjust the importance distribution of different features. Specifically, we use the average pooling operation to obtain the representation vectors of each layer in . The fully connected layer and nonlinear activation function are used to encode to obtain :

| (7) | ||||

where represents the parameter of .

Then, is normalized to through the function and used to represent feature importance:

| (8) |

Therefore, network can adaptively adjust the feature importance by learning . The enhanced features can be expressed:

| (9) |

Finally, we resize the feature map in using an upsampling operation to complete end-to-end segmentation prediction:

| (10) |

Thus, the lightweight network-level search space design is completed.
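The adaptive re-weighting of feature levels described above (average pooling, a fully connected layer with a nonlinearity, normalization, then scaling) can be sketched as follows. This is a simplified illustration under several assumptions not fixed by the text: each level has a single channel, the levels have already been resized to a common resolution, the nonlinearity is ReLU, and the normalization is a softmax. The weight matrix and all function names are hypothetical.

```python
import math


def softmax(values):
    """Numerically stable softmax over a list of floats."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def global_avg_pool(feature):
    """Mean of a 2-D feature map given as a list of rows."""
    values = [v for row in feature for v in row]
    return sum(values) / len(values)


def fc_relu(vector, weight, bias):
    """Fully connected layer followed by ReLU (assumed nonlinearity)."""
    return [max(0.0, sum(w * v for w, v in zip(row, vector)) + b)
            for row, b in zip(weight, bias)]


def adaptive_fusion(features, weight, bias):
    """Return per-level importance and the re-weighted feature maps."""
    pooled = [global_avg_pool(f) for f in features]   # one descriptor per level
    encoded = fc_relu(pooled, weight, bias)           # learnable encoding
    importance = softmax(encoded)                     # importance sums to one
    enhanced = [[[importance[k] * v for v in row] for row in f]
                for k, f in enumerate(features)]
    return importance, enhanced


levels = [[[1.0, 1.0], [1.0, 1.0]], [[3.0, 3.0], [3.0, 3.0]]]
identity_fc = [[1.0, 0.0], [0.0, 1.0]]
importance, enhanced = adaptive_fusion(levels, identity_fc, [0.0, 0.0])
print(importance)
```

In a trained network the fully connected parameters would be learned jointly with the rest of the model, so the importance assigned to each level adapts to the defect scales present in the data.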

The network-level search space considers multiscale features inspired by handcrafted architectures, which increases the expressiveness of the lightweight cell-based search space. The generated architecture can not only extract data-driven multiscale features but also adaptively adjust the importance distribution of the features to cope with scale challenges, which supports effective detection under broadly diverse and complex factors.

3.3 Search Strategy

In this section, we introduce a progressive search strategy with a deep supervision mechanism to explore the search space. As discussed in Section 2.2.1, the original DARTS suffers from extra search costs and rough decoupling. Therefore, we divide the search process into multiple stages, gradually removing operations at each stage, thereby enabling the direct generation of the architecture without additional decoupling. Additionally, inspired by deeply supervised networks, we employ deep supervision to facilitate rapid adaptation to target defects. This search strategy not only reduces optimization challenges in complex search processes but also contributes to improved detection performance and reduced network design costs. The entire search diagram is shown in Figure 3.

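First, because of the abovementioned continuous relaxation, the search process can be formulated as a nested optimization problem:

$$\min_{\alpha} \; \mathcal{L}_{val}\left(w^{*}(\alpha), \alpha\right) \quad \text{s.t.} \quad w^{*}(\alpha) = \arg\min_{w} \mathcal{L}_{train}(w, \alpha) \tag{11}$$

This indicates that the architectural weights $\alpha$ are found to minimize the validation loss $\mathcal{L}_{val}$, where $w^{*}$ represents the best network weight under the given architecture $\alpha$. The network weight $w$ is updated to $w^{*}$ on the architecture training dataset by fixing the architectural weight $\alpha$, and then $\alpha$ is updated on the weight training dataset by fixing $w^{*}$.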
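The alternating update of network weights and architecture weights can be illustrated with a toy scalar problem. This sketch is an assumption-laden simplification: it uses the common first-order approximation (one gradient step on the network weight, then one on the architecture weight, each with the other held fixed), a hypothetical model `alpha * w * x`, and two single-sample data splits. It is not the paper's training procedure.

```python
def grad_w(w, alpha, x, y):
    """Gradient of the squared error (alpha*w*x - y)^2 with respect to w."""
    return 2.0 * (alpha * w * x - y) * alpha * x


def grad_alpha(w, alpha, x, y):
    """Gradient of the squared error (alpha*w*x - y)^2 with respect to alpha."""
    return 2.0 * (alpha * w * x - y) * w * x


def alternating_search(train, val, steps=200, lr=0.05):
    """Alternate one step on w (alpha fixed) and one on alpha (w fixed)."""
    w, alpha = 1.0, 1.0
    for _ in range(steps):
        w -= lr * grad_w(w, alpha, *train)        # inner problem: fit weights
        alpha -= lr * grad_alpha(w, alpha, *val)  # outer problem: fit architecture
    return w, alpha


# Both splits are generated by y = 2x, so the product alpha * w should approach 2.
w, alpha = alternating_search(train=(1.0, 2.0), val=(2.0, 4.0))
print(w, alpha, alpha * w)
```

Using separate splits for the two updates mirrors the nested formulation: the network weight is fitted on one split while the architecture weight is judged on the other.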

Next, the search process is decomposed into multiple stages. After each stage of training, operation-level pruning is used to gradually remove operations with small contributions. Specifically, the architecture weight $\alpha$ is used to represent the importance of each operation. At the end of each training stage, candidate operations with low importance are removed and important candidate operations are retained.

| (12) |

where represents the shrink search space after -th pruning.

Therefore, the original space can be gradually narrowed:

| (13) |

where denotes the new candidate operation set after pruning according to the sorting of , and represents the new search space obtained in the -th stage.

This process is repeated in each stage until each edge retains a single definite operation.
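The stage-wise pruning can be sketched as a loop that trains, ranks the surviving operations by their architecture weights, and keeps only the strongest ones. The pruning schedule (how many operations survive each stage) and the `train_stage` callback are hypothetical; the text does not specify them here.

```python
def prune_stage(alpha, keep):
    """Keep the `keep` operations with the largest architecture weights."""
    ranked = sorted(alpha, key=alpha.get, reverse=True)
    return {name: alpha[name] for name in ranked[:keep]}


def progressive_search(alpha, schedule, train_stage):
    """Shrink one edge's candidate set stage by stage.

    alpha: dict mapping operation name -> architecture weight.
    schedule: number of operations to keep after each stage (assumed).
    train_stage: callable that returns updated weights for the survivors.
    """
    space = dict(alpha)
    for keep in schedule:
        space = train_stage(space)        # update weights of surviving ops
        space = prune_stage(space, keep)  # drop the weakest operations
    return space


# Toy run: training is replaced by a no-op so the ranking stays fixed.
edge = {"sep_conv_3x3": 0.9, "max_pool_3x3": 0.1, "identity": 0.4, "channel_att": 0.7}
final = progressive_search(edge, schedule=[3, 2, 1], train_stage=lambda s: s)
print(final)  # {'sep_conv_3x3': 0.9}
```

Because the last stage leaves exactly one operation per edge, the discrete architecture can be read off directly from the surviving set.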

Then, for each intermediate node in the cell, the two strongest operations from different nodes collected from all previous nodes are kept, while other transformations are masked. The strongest operations are defined as follows:

| (14) |

Finally, the determined architecture is searched as:

| (15) |
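The rule of keeping, for each intermediate node, the two strongest operations coming from different previous nodes can be sketched as below. The edge dictionary layout and the function name are illustrative assumptions; whether a particular operation (for example `Zero`) is excluded from the ranking is not specified in the text, so no operation is excluded here.

```python
def select_two_strongest(edges, node):
    """Return the two strongest (source, operation) pairs entering `node`.

    edges: dict mapping (source, target) -> {operation name: weight}.
    At most one operation is taken per source node, so the two kept
    operations always come from different predecessors.
    """
    candidates = []
    for (source, target), ops in edges.items():
        if target != node:
            continue
        best_op = max(ops, key=ops.get)            # strongest op on this edge
        candidates.append((ops[best_op], source, best_op))
    candidates.sort(reverse=True)                   # strongest edges first
    return [(source, op) for _, source, op in candidates[:2]]


edges = {
    (0, 3): {"sep_conv_3x3": 0.5},
    (1, 3): {"channel_att": 0.8},
    (2, 3): {"identity": 0.2, "sep_conv_5x5": 0.6},
}
print(select_two_strongest(edges, node=3))  # [(1, 'channel_att'), (2, 'sep_conv_5x5')]
```

All other incoming transformations for that node would be masked, leaving a fixed two-input structure per intermediate node.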

Additionally, deep supervision is applied to reduce the optimization difficulty of the complex search process. This mechanism can be implemented by adding the branch loss to the total training loss:

| (16) |

where denotes the branch loss of the -th feature map and . The total training loss is defined as the sum of the branch loss and the segmentation loss: , where denotes the segmentation loss between the defect segmentation results and the data labels. In this article, the same loss function as [47] is used as the branch loss, which consists of binary cross-entropy (BCE) and dice similarity coefficient (DICE).
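A combined BCE and Dice loss with deep supervision can be sketched as follows. Several choices here are assumptions rather than details from the text: the branch losses are summed with equal weights, the segmentation loss uses the same BCE plus Dice form as the branches, predictions are flattened probability lists already resized to the label resolution, and the Dice term uses a smoothing constant of 1.

```python
import math


def bce(pred, target, eps=1e-7):
    """Mean binary cross-entropy between probabilities and 0/1 labels."""
    total = 0.0
    for p, t in zip(pred, target):
        p = min(max(p, eps), 1.0 - eps)   # clamp to avoid log(0)
        total -= t * math.log(p) + (1.0 - t) * math.log(1.0 - p)
    return total / len(pred)


def dice_loss(pred, target, smooth=1.0):
    """One minus the (smoothed) Dice similarity coefficient."""
    intersection = sum(p * t for p, t in zip(pred, target))
    return 1.0 - (2.0 * intersection + smooth) / (sum(pred) + sum(target) + smooth)


def bce_dice(pred, target):
    return bce(pred, target) + dice_loss(pred, target)


def total_loss(final_pred, branch_preds, target):
    """Segmentation loss plus the sum of per-branch losses (equal weights assumed)."""
    segmentation = bce_dice(final_pred, target)
    branch = sum(bce_dice(b, target) for b in branch_preds)
    return segmentation + branch


label = [1.0, 1.0, 0.0, 0.0]
good = [0.9, 0.8, 0.1, 0.2]
poor = [0.4, 0.5, 0.6, 0.5]
print(total_loss(good, [good, poor], label), total_loss(poor, [poor, poor], label))
```

Supervising intermediate feature maps in this way gives each level a direct training signal, which is the usual motivation for deep supervision.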

4 Experiments and Results

In this section, we conduct experiments on industrial datasets to determine: RQ1: How do the networks designed by NAS-ASDet compare with manually designed detection networks and other NAS methods? RQ2: How does the design in NAS-ASDet affect its performance: (a) How advantageous is data-driven multiscale feature extraction compared with classical feature extraction networks? (b) How effective is the adaptive adjustment of the feature importance distribution? RQ3: How effective is the search strategy used in NAS-ASDet compared to other NAS search strategies?

4.1 Experimental Settings

4.1.1 Experimental Environment

We implement our network in PyTorch using PyCharm. In the experiments, the method is trained and tested on an NVIDIA Tesla A100 GPU (with 40 GB of memory) with CUDA version 11.4, PyTorch version 1.9.0, torchvision version 0.10.0, and CentOS Linux 8.0.

4.1.2 Datasets

To evaluate the performance of NAS-ASDet, we conduct experiments on four industrial datasets that encompass various challenges, including lighting conditions, scale variations, and limited sample sizes.

-

1.

MCSD-C dataset: This dataset comes from multiple batches of motor commutator cylinder surface defects. Figure. 4 shows representative surface defect samples that appear on the commutator cylinder surface. The background environment of MCSD-C is complex and dynamic due to changes in production batches and line parameters. These factors leads to diverse lighting conditions and low-contrast defects, combined with defects exhibiting multi-scale variations, increase the difficulty of defect detection. We selected 566 defect samples with 256256. We used 445 images for training and the remaining samples for testing. Figure. 5 (1)-(2) rows shows example defect images and corresponding ground truth in MCSD-C.

Figure 4: Commutator cylinder surface and its surface defect samples. -

2.

RSDDs dataset[48]: RSDDs is a rail tread defect dataset collected from general/heavy transportation tracks. The detection challenges include severe surrounding noise interference and limited defect samples with complex contour shapes. RSDDs contains 128 defect samples with 551250. We augment the original images with a 55-pixel sliding window, and finally obtain 191 training images and 82 testing images. Images are resized to 6464 during training. Figure. 5 (3)-(4) rows shows example defect images and corresponding ground truth in RSDDs.

3. KSDD dataset [49]: This dataset is captured in a controlled industrial environment, shows visible surface cracks, and contains only 54 defect samples. We augment the defect samples with a 500-pixel sliding window, resulting in 191 training images and 82 testing images. KSDD primarily evaluates the capability to detect low-contrast defects with a limited number of sample images. Images are resized to 512 × 512 during training. Rows (5)-(6) of Figure 5 show example defect images and the corresponding ground truth in KSDD.

4. DAGM dataset [50]: The artificially created DAGM dataset provides a faithful representation of defects against a textured background. Its main challenge lies in the need to detect multiple types of defects with blurred boundaries under complex backgrounds and textures. In the original DAGM dataset, the defect regions are only roughly covered by ellipses. In our experiment, we select four types of defects and redefine their labels at the pixel level. Finally, we obtain 523 training images and 255 test images with an original resolution of 512 × 512. Rows (7)-(8) of Figure 5 show example defect images and the corresponding ground truth in DAGM.
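The sliding-window augmentation mentioned for RSDDs and KSDD can be illustrated with a minimal sketch. The text does not state whether the quoted pixel value is the window size or the stride, nor how crops are selected afterwards, so the helper `window_starts` and its `window` and `stride` parameters are hypothetical and only show the general technique along the long image axis.

```python
def window_starts(length, window, stride):
    """Start offsets of sliding windows along one image axis.

    Assumption (not from the paper): windows of `window` pixels are taken
    every `stride` pixels, and one extra window is placed flush with the
    border if the regular grid leaves a remainder uncovered.
    """
    if window > length:
        raise ValueError("window must not exceed the axis length")
    starts = list(range(0, length - window + 1, stride))
    if starts[-1] + window < length:
        starts.append(length - window)  # cover the trailing remainder
    return starts


def crop_rows(image_rows, start, window):
    """Crop `window` consecutive rows from an image stored as a list of rows."""
    return image_rows[start:start + window]


# Example: a 1250-pixel axis scanned with a 55-pixel window and stride.
offsets = window_starts(1250, 55, 55)
print(len(offsets), offsets[-1])
```

In practice, crops without any defect pixels would probably be filtered out before building the training and test splits; that selection step is not described in the text and is therefore not sketched here.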

4.1.3 Baselines

We compare NAS-ASDet with state-of-the-art handcrafted networks and existing NAS methods.

1. Handcrafted networks: First, we select five classical handcrafted networks designed for natural scenes as benchmarks for detection performance: FCN [17], U-Net [18], PSPNet [19], DeepLabV3+ [20], and CSNet [51] (a salient object detection technique). Second, we compare NAS-ASDet with four state-of-the-art manually designed defect detection networks, including defect segmentation frameworks and detection methods based on salient object detection: PGA-Net [9], CSEPNet [22], TSERNet [21], and LSA-Net [52].

2. NAS-based methods: We compare the performance of NAS-ASDet with recent NAS segmentation methods: (a) Auto-DeepLab [31], a macro-search method for image segmentation and a classical baseline for NAS segmentation; (b) NAS-Unet [14], which searches for specific cells and achieves good segmentation performance with a cell-based search space; (c) iNAS [35], a hardware-aware, high-performance NAS method for salient object detection; (d) DNAS [34], which uses the same search space as Auto-DeepLab together with a decoupled search framework to mitigate combinatorial explosion.

The selected comparison methods cover image segmentation techniques, salient object detection techniques, and lightweight network design, which helps to comprehensively evaluate the performance of our method.

4.1.4 Performance Metrics

We use the same common metrics as [7] to evaluate model performance, including intersection over union (IoU), F1-measure (F1), and pixel accuracy (PA). In addition, the number of model parameters (Params) and floating-point operations (FLOPs) are reported because industrial scenarios are more sensitive to resource consumption. We use the total search time (Search_time) to measure the time consumption of NAS methods.
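As an illustration of the three accuracy metrics, the following minimal sketch computes them from binary defect masks using the standard confusion-matrix definitions. The function names are hypothetical, and PA is taken here as overall pixel accuracy, which is an assumption: the exact definitions follow [7] and may differ (for example, a per-class variant).

```python
def confusion_counts(pred, target):
    """Count TP, FP, FN, TN over two binary masks given as lists of rows."""
    tp = fp = fn = tn = 0
    for pred_row, target_row in zip(pred, target):
        for p, t in zip(pred_row, target_row):
            if p and t:
                tp += 1
            elif p and not t:
                fp += 1
            elif t:
                fn += 1
            else:
                tn += 1
    return tp, fp, fn, tn


def segmentation_metrics(pred, target):
    """Return IoU, F1 and PA (overall pixel accuracy, an assumption) in [0, 1]."""
    tp, fp, fn, tn = confusion_counts(pred, target)
    total = tp + fp + fn + tn
    if total == 0:
        raise ValueError("masks must contain at least one pixel")
    union = tp + fp + fn
    # Convention chosen here: two empty masks count as a perfect match.
    iou = tp / union if union else 1.0
    f1 = 2 * tp / (2 * tp + fp + fn) if union else 1.0
    pa = (tp + tn) / total
    return {"IoU": iou, "F1": f1, "PA": pa}


pred = [[1, 1, 0], [0, 1, 0]]
target = [[1, 0, 0], [0, 1, 1]]
print(segmentation_metrics(pred, target))
```

Averaging these per-image values over a test set, or accumulating the counts over all images first, gives slightly different numbers; which of the two is used is not specified in this section.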

4.1.5 Implementation Details

Given a defect detection dataset, the training process is divided into search and retraining steps.

1. Search Stage: This stage aims to generate deterministic detection architectures from a cell-based search space. The training set is divided 6:4 into an architecture training set and a weight training set, respectively. Each cell contains 4 intermediate nodes and 2 input nodes, and the network consists of 4 normal cells and 4 reduction cells. Following the search strategy described in Section 3.3, we establish 4 search stages, and the original candidate operations (Table 1) are gradually pruned stage by stage. The maximum number of epochs and the batch size of each stage are set to 70 and 4, respectively. To avoid poor search performance caused by instability at the beginning of a search stage, we use only the weight training set to update the network weights during the first 20 epochs of each stage. In the remaining epochs, the architecture weights and the network weights are updated alternately using the architecture training set and the weight training set, respectively. The Adam optimizer with a learning rate of 0.002, weight decay of 0.001 and momentum of 0.9 is used to update the architecture weights, and the SGD optimizer with a learning rate of 0.005, weight decay of 0.0001 and momentum of 0.9 is used to update the network weights.

2. Retraining Stage: After the search stage, a deterministic architecture is selected to replace the original supernet. The network weights are retrained for 500 epochs to mitigate training bias and ensure full network convergence. Multiscale features are fully considered according to the importance distribution. The other hyperparameters are set as in the search stage.
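The search-stage schedule described above can be summarized in a short sketch. It only encodes the numbers given in the text (6:4 split, 4 stages, 70 epochs per stage, 20 warm-up epochs); the function names, the random shuffling, and the epoch-level flag are hypothetical simplifications, and the actual alternation between architecture and weight updates presumably happens per batch inside each epoch.

```python
import random


def split_training_set(samples, arch_fraction=0.6, seed=0):
    """Split samples 6:4 into (architecture set, weight set).

    Shuffling with a fixed seed is an assumption made for reproducibility.
    """
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)
    cut = round(len(shuffled) * arch_fraction)
    return shuffled[:cut], shuffled[cut:]


def search_schedule(num_stages=4, epochs_per_stage=70, warmup_epochs=20):
    """Yield (stage, epoch, update_architecture) for the staged search.

    During the first `warmup_epochs` of every stage only the network
    weights are trained; afterwards architecture weights are updated too.
    """
    for stage in range(num_stages):
        for epoch in range(epochs_per_stage):
            yield stage, epoch, epoch >= warmup_epochs


arch_set, weight_set = split_training_set(range(445))  # 445 MCSD-C training images
plan = list(search_schedule())
print(len(arch_set), len(weight_set), len(plan))
```

With the default values, the plan contains 280 epochs in total, 80 of which are weight-only warm-up epochs.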

4.2 Performance Comparison (RQ1)

In Table 2 and Figure 5, we present the quantitative analysis and the visual defect prediction results of NAS-ASDet, respectively. Overall, our proposed method achieves the best IoU and PA on all four datasets while keeping computational complexity low, and it also produces the most accurate visual results.

Column groups in Table 2: manually designed architectures (FCN [17] through LSANet [52]), NAS-based architectures (Auto-DeepLab [31] through DNAS [34]), and ours (NAS-ASDet).

| Dataset | Metrics | FCN [17] | U-Net [18] | PSPNet [19] | DeepLabV3+ [20] | CSNet [51] | PGA-Net [9] | CSEPNet [22] | TSERNet [21] | LSANet [52] | Auto-DeepLab [31] | NAS-Unet [14] | iNAS [35] | DNAS [34] | NAS-ASDet |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| MCSD-C | IoU(%) | 68.53 | 66.13 | 68.10 | 69.17 | 68.66 | 63.61 | 71.79 | 71.04 | 70.28 | 65.70 | 68.13 | 67.13 | 66.54 | 72.98 |
| | F1(%) | 79.19 | 77.43 | 79.38 | 80.28 | 82.49 | 75.70 | 83.95 | 81.19 | 80.79 | 77.67 | 79.58 | 78.60 | 78.17 | 83.39 |
| | PA(%) | 82.12 | 80.99 | 81.53 | 82.15 | 82.67 | 78.98 | 82.61 | 83.72 | 83.43 | 80.30 | 81.43 | 81.06 | 80.91 | 84.60 |
| | Params(M) | 32.94 | 7.85 | 46.71 | 39.76 | 0.16 | 51.41 | 18.78 | 189.6 | 25.42 | 3.02 | 1.00 | 6.30 | 8.12 | 2.43 |
| | FLOPs(G) | 69.43 | 28.20 | 92.44 | 29.88 | 1.01 | 824.53 | 118.66 | 531.83 | 30.77 | 25.44 | 13.66 | 0.85 | 15.93 | 2.33 |
| | Search_time | / | / | / | / | / | / | / | / | / | 6:38’19” | 5:13’14” | 9:33’01” | 4:16’19” | 4:49’55” |
| RSDDs | IoU(%) | 58.11 | 63.62 | 56.61 | 61.43 | 52.85 | 60.85 | 65.42 | 65.63 | 64.07 | 52.02 | 61.09 | 58.29 | 18.33 | 66.79 |
| | F1(%) | 71.01 | 75.03 | 69.60 | 73.76 | 69.79 | 73.14 | 76.97 | 77.31 | 76.15 | 65.16 | 73.71 | 70.89 | 25.41 | 78.21 |
| | PA(%) | 73.91 | 79.59 | 74.31 | 76.21 | 70.60 | 76.79 | 80.25 | 79.96 | 78.23 | 71.38 | 77.10 | 75.21 | 51.30 | 80.78 |
| | Params(M) | 32.94 | 7.85 | 46.71 | 39.76 | 0.77 | 51.41 | 18.78 | 189.64 | 25.42 | 5.48 | 0.58 | 5.32 | 22.83 | 1.27 |
| | FLOPs(G) | 4.33 | 1.80 | 5.88 | 1.87 | 0.15 | 51.53 | 7.41 | 33.24 | 1.92 | 0.79 | 0.55 | 0.04 | 1.82 | 0.10 |
| | Search_time | / | / | / | / | / | / | / | / | / | 3:29’40” | 1:42’13” | 5:5’31” | 1:51’30” | 1:40’27” |
| KSDD | IoU(%) | 64.08 | 68.98 | 65.52 | 68.87 | 64.28 | 67.13 | 70.39 | 68.01 | 69.64 | 69.35 | 69.95 | 64.83 | 65.97 | 72.78 |
| | F1(%) | 77.26 | 81.13 | 78.78 | 80.90 | 79.79 | 79.82 | 81.83 | 78.86 | 81.52 | 81.62 | 82.01 | 78.23 | 79.04 | 83.52 |
| | PA(%) | 80.91 | 83.22 | 80.99 | 81.91 | 80.42 | 81.85 | 84.19 | 82.97 | 83.45 | 82.65 | 83.51 | 81.10 | 80.65 | 85.24 |
| | Params(M) | 32.94 | 7.85 | 46.71 | 39.76 | 0.69 | 51.41 | 18.78 | 189.64 | 25.42 | 3.10 | 0.71 | 5.09 | 7.60 | 1.63 |
| | FLOPs(G) | 277.72 | 112.81 | 369.46 | 119.54 | 8.40 | 3298.13 | 474.68 | 2127.32 | 123.09 | 104.51 | 46.67 | 2.34 | 98.04 | 6.94 |
| | Search_time | / | / | / | / | / | / | / | / | / | 6:23’10” | 5:35’50” | 7:25’48” | 4:01’59” | 2:35’28” |
| DAGM | IoU(%) | 68.44 | 75.30 | 77.85 | 79.36 | 80.09 | 77.81 | 79.79 | 79.85 | 79.66 | 78.27 | 79.97 | 76.07 | 76.13 | 80.66 |
| | F1(%) | 79.21 | 86.19 | 87.29 | 88.04 | 88.64 | 87.23 | 88.53 | 88.55 | 88.43 | 87.50 | 89.02 | 85.86 | 85.70 | 89.08 |
| | PA(%) | 81.51 | 86.65 | 87.80 | 88.71 | 89.13 | 87.86 | 89.02 | 89.10 | 88.93 | 88.16 | 89.14 | 86.98 | 86.71 | 89.51 |
| | Params(M) | 32.94 | 7.85 | 46.71 | 39.76 | 0.49 | 51.41 | 18.78 | 189.64 | 25.42 | 4.09 | 0.64 | 5.57 | 14.06 | 2.26 |
| | FLOPs(G) | 277.72 | 112.81 | 369.46 | 119.54 | 8.99 | 3298.13 | 474.68 | 2127.32 | 123.09 | 108.43 | 52.02 | 2.66 | 23.62 | 7.62 |
| | Search_time | / | / | / | / | / | / | / | / | / | 39:11’16” | 21:07’27” | 15:59’03” | 10:31’13” | 9:28’34” |

4.2.1 Quantitative comparison

First, we begin the analysis with the performance of the designed network.

1. Comparison with handcrafted networks: As shown in Table 2, no fixed handcrafted network consistently achieves the best performance across the four datasets (the best performance is underlined; the best performers include CSNet, CSEPNet, TSERNet, and LSANet), which demonstrates the necessity of NAS technology. In contrast, our proposed NAS-based adaptive design method (NAS-ASDet) outperforms the existing handcrafted networks on every dataset and achieves state-of-the-art IoU, compared with both the networks designed for natural images and the defect detection networks. Note that, unlike manually designed networks, which require empirical design, our approach adopts an automatic search-based design methodology that needs only a few hours to obtain a detection network for each dataset, significantly improving network design efficiency.

2. Comparison with NAS-based design methods: Our proposed NAS-ASDet consistently outperforms existing NAS-based methods. In particular, on the datasets with scarcer samples (RSDDs, KSDD, MCSD-C), our method shows more stable performance than NAS frameworks based on macro search (e.g., Auto-DeepLab and DNAS). We observe an average IoU improvement of over 4% compared with the best-performing existing NAS methods, which shows the effectiveness of our refined search space. Moreover, we achieve this result in relatively short search times. Except on MCSD-C, where DNAS completes the search faster, our proposed method designs the network with the best detection results in the shortest time. On MCSD-C, our method takes only about 33 minutes longer than DNAS but achieves a 6.44% higher IoU. This demonstrates that NAS-ASDet outperforms existing NAS methods in terms of performance.
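The MCSD-C comparison with DNAS can be checked directly from the Table 2 entries. The sketch below assumes that Search_time is written as hours:minutes’seconds” (the table does not state the unit explicitly); `parse_search_time` is a hypothetical helper.

```python
import re


def parse_search_time(text):
    """Convert a Search_time entry to seconds.

    Assumption: the three numbers are hours, minutes and seconds.
    """
    hours, minutes, seconds = (int(part) for part in re.findall(r"\d+", text))
    return hours * 3600 + minutes * 60 + seconds


# Values copied from the MCSD-C rows of Table 2.
ours_seconds = parse_search_time("4:49’55”")
dnas_seconds = parse_search_time("4:16’19”")
extra_minutes, extra_seconds = divmod(ours_seconds - dnas_seconds, 60)
iou_gain = round(72.98 - 66.54, 2)

print(extra_minutes, extra_seconds, iou_gain)
```

Under this reading of the time format, the extra search cost is 33 minutes and 36 seconds for an IoU gain of 6.44 percentage points.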

Second, we compare the computational complexity because a lightweight model is crucial for industrial deployment. Table 2 presents the indicators of computational complexity, including Params and FLOPs. Across the four datasets, the networks designed by NAS-ASDet have only about 1M-2M parameters and low FLOPs. Compared with the networks manually designed for defect detection (PGA-Net, CSEPNet, TSERNet, and LSA-Net), NAS-ASDet uses only approximately 10% of the parameters and 5% of the FLOPs while achieving state-of-the-art performance. This highlights the advantage of NAS-ASDet in industrial scenarios. Although NAS-ASDet is slightly larger than lightweight networks such as CSNet and iNAS, the increase in complexity is small (at most about 2M additional parameters and 5G additional FLOPs). This increase does not impose a significant computational burden in industrial applications, yet it yields an average IoU improvement of over 5%.

In conclusion, taking into account both the performance metrics and the computational complexity, NAS-ASDet surpasses other competitive methods and achieves state-of-the-art results.

4.2.2 Qualitative comparison

To visually compare the defect detection performance of the different methods, we present the results of our proposed method and the 13 comparison methods in Figure 5. When networks designed for natural images (Figure 5, columns (d)-(h)) are applied to defect detection, the defect area is only roughly delineated, with blurred details and some missing regions. Although the methods designed specifically for surface defect detection (Figure 5, columns (i)-(l)) delineate the defect contours more completely, they often produce false positives or false negatives in low-contrast regions, leading to incorrect defect judgments. When facing defects with scarce samples and low contrast, the existing NAS methods (Figure 5, columns (m)-(p)) do not overcome interference such as lighting and noise well and therefore show limited adaptability. In comparison, NAS-ASDet adapts more sensitively. As shown in rows (1)-(2) of Figure 5, NAS-ASDet captures low-contrast defect features of different scales under different lighting conditions without missing any defects. Even with a limited number of training samples and in the presence of environmental disturbances, our method accurately distinguishes the defect contours and obtains predictions closer to the ground truth, as shown in rows (3)-(4) of Figure 5. Rows (5)-(6) of Figure 5 demonstrate that NAS-ASDet accurately detects low-contrast defects, even subtle cracks, with limited training samples. It also shows strong adaptability by sensitively recognizing various types of defects with blurry boundaries in the presence of complex background textures, as shown in rows (7)-(8) of Figure 5.

Therefore, our method can flexibly adapt to the challenge of defect detection, locate defects more accurately, and obtain better visual effects.

To provide a more intuitive representation of the searched network architectures, Figure 6 illustrates the architectures searched on the different datasets. As expected, the optimal cells vary among these datasets, showing the ability of NAS-ASDet to automatically construct cells with diverse, data-driven architectures. In addition, spatial attention and channel attention are applied in different forms within the searched cells, allowing NAS-ASDet to adaptively adjust the focus of the feature maps and enhance the representational power of the cells.

4.2.3 Failure Case Analysis

Although NAS-ASDet outperforms other competitive methods, there are still failure cases in some challenging situations. In Figure 7, columns (a) and (b) illustrate typical defect regions that occupy a large proportion of the image. Here, NAS-ASDet may not cover the entire defect, resulting in partial loss when the defect area changes. For small defects with low contrast at the edge, as shown in columns (c) and (d) of Figure 7, our method may miss the defect. In columns (e) and (f) of Figure 7, our method may overly focus on the vicinity of the defect area, leading to false detections in nearby regions. The main reason for these issues is the limited availability and diversity of the datasets, and we will address these deficiencies in future work.

4.3 Ablation study (RQ2)

To study how designs in NAS-ASDet affect performance, we design a series of ablation experiments for evaluation.

4.3.1 Data-driven multiscale feature extraction

In NAS-ASDet, feature extraction is performed by repeatedly stacking searched cells. We compare this approach with widely used classical feature extraction networks (VGG16 [11], ResNet50 [12], GoogLeNet [53], MobileNetV3 [54] and DenseNet [55]). In this comparison, we replace only the feature extraction stage, while the other components of NAS-ASDet remain intact. As shown in Table 3, with a fixed connection mode, the extracted features are fixed and the performance is limited. In contrast, our search method automatically determines a more suitable connection pattern, which improves network performance. Moreover, the searched architecture is more lightweight than these classical fixed feature extraction networks.

4.3.2 Adaptive adjustment of feature distribution

To study the impact of fusing multiscale features, we evaluate the feature maps extracted at different levels by the automatically searched cells. We also compare the performance of balanced fusion and adaptive fusion. As shown in Figure 8: (1) Fusing multiscale features detects defects better than using single-level features (Figure 8(b) vs. Figure 8(a)), which shows that multiscale feature fusion improves detection performance. (2) Different single-level features perform differently (Figure 8(a)). For example, the feature level that performs best on RSDDs is not the one that performs best on MCSD-C. This shows that each feature level contributes differently to different tasks, so features at different scales need to be integrated according to their importance to enhance overall performance. (3) Adaptive fusion outperforms not only single-level features but also balanced fusion (Figure 8(b)), which shows that the adaptive adjustment of the feature importance distribution is effective.
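The difference between balanced and adaptive fusion can be illustrated with a minimal sketch. It assumes that adaptive fusion is a weighted sum whose weights come from a softmax over one importance score per feature level; this parameterization and all names below are assumptions for illustration, not the exact formulation defined in the method section.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of importance scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def fuse(features, weights):
    """Weighted element-wise sum of equally sized, flattened feature maps."""
    size = len(features[0])
    return [sum(w * f[i] for w, f in zip(weights, features)) for i in range(size)]


def balanced_fusion(features):
    """Every level contributes equally."""
    return fuse(features, [1.0 / len(features)] * len(features))


def adaptive_fusion(features, importance_scores):
    """Levels contribute according to (assumed learnable) importance scores."""
    return fuse(features, softmax(importance_scores))


levels = [[1.0, 2.0], [3.0, 4.0]]  # two toy feature levels, already resized
print(balanced_fusion(levels))
print(adaptive_fusion(levels, [2.0, 0.0]))  # first level weighted more heavily
```

When all importance scores are equal, the adaptive variant reduces to balanced fusion, so balanced fusion can be seen as a special case of the adaptive scheme under this assumption.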

4.4 Search Efficiency (RQ3)

We evaluate the search efficiency from the perspectives of search time and model performance. We compare the search strategy in NAS-ASDet with the original DARTS and improved DARTS variants, including DARTS [26], PC-DARTS [29], P-DARTS [30], and Att-DARTS [56]. To ensure a fair comparison, all strategies use the same search space as NAS-ASDet. The maximum number of epochs is the same as for NAS-ASDet, namely 280 (70 epochs × 4). For the non-staged search strategies, only the network weights are updated in the first 80 epochs (20 epochs × 4) to avoid initial instability.

The search efficiency comparison results are shown in Figure 9. The proposed method requires less search time than the other strategies while generating an architecture with better performance. Under limited time resources, our method can therefore generate well-performing architectures in a shorter time. In summary, the proposed method explores the NAS-ASDet search space better and faster.

5 Conclusion

In this article, we propose NAS-ASDet, a NAS-based method for adaptive architecture generation in surface defect detection. First, we design a refined, industry-appropriate, lightweight search space based on prior knowledge of manually designed architectures. Second, we introduce a cell architecture with data-driven capability and searchable attention operations. Additionally, we design a multi-scale feature fusion that can adaptively adjust the feature distribution. Furthermore, a progressive search strategy with deep supervision is designed to explore the search space effectively. Experimental results demonstrate that NAS-ASDet outperforms both manually designed architectures and NAS-based ones.

In future work, we plan to add more degrees of freedom for architecture search (e.g., scalable architecture) to further reduce the expressive limitation caused by search space. At the same time, we will try to improve the inference speed by using hardware-aware NAS. Additionally, implementing an effective data augmentation strategy combined with our method is another future direction.

References

- [1] X. Wen, J. Shan, Y. He, K. Song, Steel surface defect recognition: A survey, Coatings 13 (1) (2022) 17.

- [2] X. Ni, Z. Ma, J. Liu, B. Shi, H. Liu, Attention network for rail surface defect detection via consistency of intersection-over-union (iou)-guided center-point estimation, IEEE Transactions on Industrial Informatics 18 (3) (2021) 1694–1705.

- [3] M. Zhuxi, Y. Li, M. Huang, Q. Huang, J. Cheng, S. Tang, A lightweight detector based on attention mechanism for aluminum strip surface defect detection, Computers in Industry 136 (2022) 103585.

- [4] H. Chen, Y. Du, Y. Fu, J. Zhu, H. Zeng, Dcam-net: A rapid detection network for strip steel surface defects based on deformable convolution and attention mechanism, IEEE Transactions on Instrumentation and Measurement 72 (2023) 1–12.

- [5] J. Yao, J. Li, Ayolov3-tiny: An improved convolutional neural network architecture for real-time defect detection of pad light guide plates, Computers in Industry 136 (2022) 103588.

- [6] P. Luo, B. Wang, H. Wang, F. Ma, H. Ma, L. Wang, An ultrasmall bolt defect detection method for transmission line inspection, IEEE Transactions on Instrumentation and Measurement 72 (2023) 1–12.

- [7] C. You, N. Chen, Y. Zou, Mrd-net: Multi-modal residual knowledge distillation for spoken question answering., in: IJCAI, 2021, pp. 3985–3991.

- [8] H. Yang, Y. Chen, K. Song, Z. Yin, Multiscale feature-clustering-based fully convolutional autoencoder for fast accurate visual inspection of texture surface defects, IEEE Transactions on Automation Science and Engineering 16 (3) (2019) 1450–1467.

- [9] H. Dong, K. Song, Y. He, J. Xu, Y. Yan, Q. Meng, Pga-net: Pyramid feature fusion and global context attention network for automated surface defect detection, IEEE Transactions on Industrial Informatics 16 (12) (2019) 7448–7458.

- [10] T. Elsken, A. Zela, J. H. Metzen, B. Staffler, T. Brox, A. Valada, F. Hutter, Neural architecture search for dense prediction tasks in computer vision, arXiv preprint arXiv:2202.07242 (2022).

- [11] K. Simonyan, A. Zisserman, Very deep convolutional networks for large-scale image recognition, arXiv preprint arXiv:1409.1556 (2014).

- [12] K. He, X. Zhang, S. Ren, J. Sun, Deep residual learning for image recognition, in: Proceedings of the IEEE conference on computer vision and pattern recognition, 2016, pp. 770–778.

- [13] B. Zoph, V. Vasudevan, J. Shlens, Q. V. Le, Learning transferable architectures for scalable image recognition, in: Proceedings of the IEEE conference on computer vision and pattern recognition, 2018, pp. 8697–8710.

- [14] Y. Weng, T. Zhou, Y. Li, X. Qiu, Nas-unet: Neural architecture search for medical image segmentation, IEEE access 7 (2019) 44247–44257.

- [15] L. Huynh, P. Nguyen, J. Matas, E. Rahtu, J. Heikkilä, Lightweight monocular depth with a novel neural architecture search method, in: Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, 2022, pp. 3643–3653.

- [16] M. Verma, P. Lubal, S. K. Vipparthi, M. Abdel-Mottaleb, Rnas-mer: A refined neural architecture search with hybrid spatiotemporal operations for micro-expression recognition, in: Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, 2023, pp. 4770–4779.

- [17] J. Long, E. Shelhamer, T. Darrell, Fully convolutional networks for semantic segmentation, in: Proceedings of the IEEE conference on computer vision and pattern recognition, 2015, pp. 3431–3440.

- [18] O. Ronneberger, P. Fischer, T. Brox, U-net: Convolutional networks for biomedical image segmentation, in: Medical Image Computing and Computer-Assisted Intervention–MICCAI 2015: 18th International Conference, Munich, Germany, October 5-9, 2015, Proceedings, Part III 18, Springer, 2015, pp. 234–241.

- [19] H. Zhao, J. Shi, X. Qi, X. Wang, J. Jia, Pyramid scene parsing network, in: Proceedings of the IEEE conference on computer vision and pattern recognition, 2017, pp. 2881–2890.

- [20] L.-C. Chen, Y. Zhu, G. Papandreou, F. Schroff, H. Adam, Encoder-decoder with atrous separable convolution for semantic image segmentation, in: Proceedings of the European conference on computer vision (ECCV), 2018, pp. 801–818.

- [21] C. Han, G. Li, Z. Liu, Two-stage edge reuse network for salient object detection of strip steel surface defects, IEEE Transactions on Instrumentation and Measurement 71 (2022) 1–12.

- [22] T. Ding, G. Li, Z. Liu, Y. Wang, Cross-scale edge purification network for salient object detection of steel defect images, Measurement 199 (2022) 111429.

- [23] W. Li, H. Zhang, G. Wang, G. Xiong, M. Zhao, G. Li, R. Li, Deep learning based online metallic surface defect detection method for wire and arc additive manufacturing, Robotics and Computer-Integrated Manufacturing 80 (2023) 102470.

- [24] W. Zhou, J. Hong, Fhenet: Lightweight feature hierarchical exploration network for real-time rail surface defect inspection in rgb-d images, IEEE Transactions on Instrumentation and Measurement (2023).

- [25] F.-J. Du, S.-J. Jiao, Improvement of lightweight convolutional neural network model based on yolo algorithm and its research in pavement defect detection, Sensors 22 (9) (2022) 3537.

- [26] H. Liu, K. Simonyan, Y. Yang, Darts: Differentiable architecture search, arXiv preprint arXiv:1806.09055 (2018).

- [27] P. Ren, Y. Xiao, X. Chang, P.-Y. Huang, Z. Li, X. Chen, X. Wang, A comprehensive survey of neural architecture search: Challenges and solutions, ACM Computing Surveys (CSUR) 54 (4) (2021) 1–34.

- [28] J. Chang, Y. Guo, G. Meng, S. Xiang, C. Pan, et al., Data: Differentiable architecture approximation, Advances in Neural Information Processing Systems 32 (2019).

- [29] Y. Xu, L. Xie, X. Zhang, X. Chen, G.-J. Qi, Q. Tian, H. Xiong, Pc-darts: Partial channel connections for memory-efficient architecture search, arXiv preprint arXiv:1907.05737 (2019).

- [30] X. Chen, L. Xie, J. Wu, Q. Tian, Progressive differentiable architecture search: Bridging the depth gap between search and evaluation, in: Proceedings of the IEEE/CVF international conference on computer vision, 2019, pp. 1294–1303.

- [31] C. Liu, L.-C. Chen, F. Schroff, H. Adam, W. Hua, A. L. Yuille, L. Fei-Fei, Auto-deeplab: Hierarchical neural architecture search for semantic image segmentation, in: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 2019, pp. 82–92.

- [32] W. Chen, X. Gong, X. Liu, Q. Zhang, Y. Li, Z. Wang, Fasterseg: Searching for faster real-time semantic segmentation, arXiv preprint arXiv:1912.10917 (2019).

- [33] X. Zhang, H. Xu, H. Mo, J. Tan, C. Yang, L. Wang, W. Ren, Dcnas: Densely connected neural architecture search for semantic image segmentation, in: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 2021, pp. 13956–13967.

- [34] Y. Wang, Y. Li, W. Chen, Y. Li, B. Dang, Dnas: Decoupling neural architecture search for high-resolution remote sensing image semantic segmentation, Remote Sensing 14 (16) (2022) 3864.

- [35] Y.-C. Gu, S.-H. Gao, X.-S. Cao, P. Du, S.-P. Lu, M.-M. Cheng, Inas: integral nas for device-aware salient object detection, in: Proceedings of the IEEE/CVF International Conference on Computer Vision, 2021, pp. 4934–4944.

- [36] B. Yan, H. Peng, K. Wu, D. Wang, J. Fu, H. Lu, Lighttrack: Finding lightweight neural networks for object tracking via one-shot architecture search, in: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2021, pp. 15180–15189.

- [37] T. Vu, Y. Zhou, C. Wen, Y. Li, J.-M. Frahm, Toward edge-efficient dense predictions with synergistic multi-task neural architecture search, in: Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, 2023, pp. 1400–1410.

- [38] F. Yao, S. Wang, L. Ding, G. Zhong, L. B. Bullock, Z. Xu, J. Dong, Lightweight network learning with zero-shot neural architecture search for uav images, Knowledge-Based Systems 260 (2023) 110142.

- [39] H. Chen, Z. Zhang, C. Zhao, J. Liu, W. Yin, Y. Li, F. Wang, C. Li, Z. Lin, Depth classification of defects based on neural architecture search, IEEE Access 9 (2021) 73424–73432.

- [40] H. Chen, Z. Zhang, W. Yin, C. Zhao, F. Wang, Y. Li, A study on depth classification of defects by machine learning based on hyper-parameter search, Measurement 189 (2022) 110660.

- [41] J. Shi, Z. Li, T. Zhu, D. Wang, C. Ni, Defect detection of industry wood veneer based on nas and multi-channel mask r-cnn, Sensors 20 (16) (2020) 4398.

- [42] J. Zhang, X. Chen, H. Wei, K. Zhang, A lightweight network for photovoltaic cell defect detection in electroluminescence images based on neural architecture search and knowledge distillation, arXiv preprint arXiv:2302.07455 (2023).

- [43] G. Zhu, W. Wang, Z. Xu, F. Cheng, M. Qiu, C. Yuan, Y. Huang, Psp: Progressive space pruning for efficient graph neural architecture search, in: 2022 IEEE 38th International Conference on Data Engineering (ICDE), IEEE, 2022, pp. 2168–2181.

- [44] S.-H. Gao, M.-M. Cheng, K. Zhao, X.-Y. Zhang, M.-H. Yang, P. Torr, Res2net: A new multi-scale backbone architecture, IEEE transactions on pattern analysis and machine intelligence 43 (2) (2019) 652–662.

- [45] J. Hu, L. Shen, G. Sun, Squeeze-and-excitation networks, in: Proceedings of the IEEE conference on computer vision and pattern recognition, 2018, pp. 7132–7141.

- [46] S. Woo, J. Park, J.-Y. Lee, I. S. Kweon, Cbam: Convolutional block attention module, in: Proceedings of the European conference on computer vision (ECCV), 2018, pp. 3–19.

- [47] L. Yang, J. Fan, B. Huo, E. Li, Y. Liu, A nondestructive automatic defect detection method with pixelwise segmentation, Knowledge-Based Systems 242 (2022) 108338.

- [48] J. Gan, Q. Li, J. Wang, H. Yu, A hierarchical extractor-based visual rail surface inspection system, IEEE Sensors Journal 17 (23) (2017) 7935–7944.

- [49] D. Tabernik, S. Šela, J. Skvarč, D. Skočaj, Segmentation-Based Deep-Learning Approach for Surface-Defect Detection, Journal of Intelligent Manufacturing (May 2019). doi:10.1007/s10845-019-01476-x.

- [50] M. Wieler, T. Hahn, Weakly supervised learning for industrial optical inspection, in: DAGM Symposium, 2007.

- [51] M.-M. Cheng, S.-H. Gao, A. Borji, Y.-Q. Tan, Z. Lin, M. Wang, A highly efficient model to study the semantics of salient object detection, IEEE Transactions on Pattern Analysis and Machine Intelligence 44 (11) (2021) 8006–8021.

- [52] W. Li, B. Li, S. Niu, Z. Wang, M. Wang, T. Niu, Lsa-net: Location and shape attention network for automatic surface defect segmentation, Journal of Manufacturing Processes 99 (2023) 65–77.

- [53] C. Szegedy, W. Liu, Y. Jia, P. Sermanet, S. Reed, D. Anguelov, D. Erhan, V. Vanhoucke, A. Rabinovich, Going deeper with convolutions, in: Proceedings of the IEEE conference on computer vision and pattern recognition, 2015, pp. 1–9.

- [54] A. Howard, M. Sandler, G. Chu, L.-C. Chen, B. Chen, M. Tan, W. Wang, Y. Zhu, R. Pang, V. Vasudevan, et al., Searching for mobilenetv3, in: Proceedings of the IEEE/CVF international conference on computer vision, 2019, pp. 1314–1324.

- [55] G. Huang, Z. Liu, L. Van Der Maaten, K. Q. Weinberger, Densely connected convolutional networks, in: Proceedings of the IEEE conference on computer vision and pattern recognition, 2017, pp. 4700–4708.

- [56] K. Nakai, T. Matsubara, K. Uehara, Att-darts: Differentiable neural architecture search for attention, in: 2020 International Joint Conference on Neural Networks (IJCNN), IEEE, 2020, pp. 1–8.