Mutual Information-based Representations Disentanglement for Unaligned Multimodal Language Sequences

Abstract

The key challenge in unaligned multimodal language sequences lies in effectively integrating information from various modalities to obtain a refined multimodal joint representation. Recently, the disentangle and fuse methods have achieved the promising performance by explicitly learning modality-agnostic and modality-specific representations and then fusing them into a multimodal joint representation. However, these methods often independently learn modality-agnostic representations for each modality and utilize orthogonal constraints to reduce linear correlations between modality-agnostic and modality-specific representations, neglecting to eliminate their nonlinear correlations. As a result, the obtained multimodal joint representation usually suffers from information redundancy, leading to overfitting and poor generalization of the models. In this paper, we propose a Mutual Information-based Representations Disentanglement (MIRD) method for unaligned multimodal language sequences, in which a novel disentanglement framework is designed to jointly learn a single modality-agnostic representation. In addition, the mutual information minimization constraint is employed to ensure superior disentanglement of representations, thereby eliminating information redundancy within the multimodal joint representation. Furthermore, the challenge of estimating mutual information caused by the limited labeled data is mitigated by introducing unlabeled data. Meanwhile, the unlabeled data also help to characterize the underlying structure of multimodal data, consequently further preventing overfitting and enhancing the performance of the models. Experimental results on several widely used benchmark datasets validate the effectiveness of our proposed approach.

keywords:

Unaligned multimodal language sequences, multimodal joint representation, representations disentanglement, mutual information, unlabeled data.organization=Harbin Institute of Technology,city=Harbin, postcode=150001, country=China

1 Introduction

Human communication occurs through not only spoken language but also non-verbal behaviors such as facial expressions and vocal intonations [1]. This form of language is referred to as multimodal language in computational linguistics [2, 3]. With the increasing amount of user-generated multimedia content, Multimodal Sentiment Analysis (MSA) is emerging as a popular research field and one of the most important application directions in multimodal language [4]. MSA aims to understand the sentimental tendencies conveyed in opinion videos by leveraging information from multiple modalities, including visual, language and audio. It has broad applications in various fields, such as e-commerce, public opinion monitoring, human-computer interaction, etc.

Early works [5, 6, 7, 8, 9, 10, 11] in MSA primarily focused on aligned multimodal language sequences, which are formed by manually aligning visual and audio features to the word granularity based on the time interval of each word, typically by taking the average of visual and audio features. However, this manual alignment process requires domain-specific knowledge and consumes significant time and effort. Consequently, current research has gradually shifted its focus to unaligned multimodal language sequences, where the key challenge lies in effectively integrating information from various modalities (i.e., multimodal fusion) to obtain a refined multimodal joint representation [12, 13, 14].

Previous fusion methods can be regarded as a form of directly fuse, which refers to the fusion of information from different modalities without preprocessing. Based on different learning strategies, the directly fuse methods for unaligned multimodal language sequences can be roughly categorized into three types: tensor-based methods, graph-based methods, and cross-modal attention-based methods. Tensor-based methods [15, 16] utilize the tensor product of the representations encoded from each modality to capture cross-modal interactions, resulting in a high-dimensional multimodal joint representation Graph-based methods either construct a separate graph for each modality, modeling the interactions within each modality and then aggregating the graph representations of each modality to obtain a multimodal joint representation [17, 18], or construct a single graph for sequential elements of all modalities, aiming to model the interactions across modalities and learn a refined graph representation [19]. The core of cross-modal attention-based methods [20, 21, 22] is to model long-term dependencies across modalities. By repeatedly enhancing the features of one modality with the features of another modality, the enhanced sequential representations can be obtained for each modality, which are then aggregated into a multimodal joint representation. Although these directly fuse methods have achieved a good performance, they overlook the interference caused by modality heterogeneity and distribution gap. That is to say, they do not explicitly consider the shared factor between modalities and have poor interpretability.

Therefore, the disentangle and fuse methods have recently begun to emerge, with the aim of simultaneously exploring the commonality and complementarity of different modalities before multimodal fusion. For example, in terms of commonality, it includes the motivations and intentions of the speaker [23]. Regarding complementarity, it encompasses the aspects such as word choice and grammar in the language modality, tone and prosody in the audio modality, and facial expressions in the visual modality. The disentangle and fuse methods explicitly disentangle the representation of each modality into a modality-agnostic representation and modality-specific one, which are then aggregated into a multimodal joint representation. They aim towards bridging the differences of modality distribution within the modality-invariant subspace and explicitly learning shared information, thus achieving promising performance and possessing good interpretability.

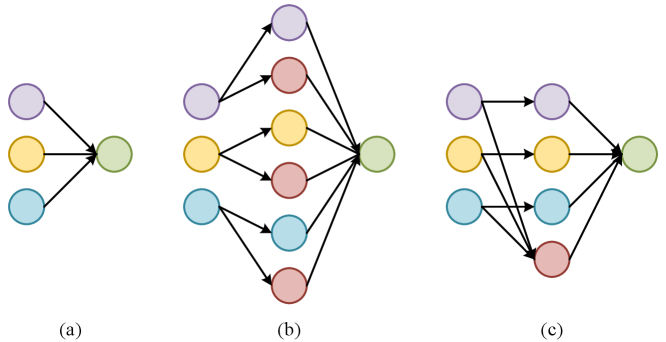

However, existing representations disentanglement-based methods suffer from the following issues. On the one hand, in terms of model architecture, these methods first independently learn the modality-agnostic representations for each modality, and then aggregate these modality-agnostic representations together with their respective modality-specific representations to form a multimodal joint representation. On the other hand, in terms of information constraints, they enforce orthogonality between the modality-invariant and modality-specific subspace to reduce the linear correlation between corresponding representations, but overlook their non-linear correlations, thus failing to ensure the effective disentanglement of the representations for each modality. Both of these issues lead to information redundancy in the multimodal joint representation, thus affecting the generalization capability of the model. In this paper, we explore a superior paradigm to tackle the above issues in model architecture design and information constraint levels, respectively.

To address the first issue, we propose a novel representations disentanglement framework, as illustrated in Figure 1. Instead of separately learning modality-agnostic representations for each modality, we only learn a unified modality-agnostic representation. Then, we employ mutual information to measure the non-linear correlations between the latent representations. By minimizing the mutual information between the modality-agnostic and modality-specific representations, as well as among the modality-specific representations themselves, we ensure information independence between corresponding representations, achieving the superior disentanglement of the representations for each modality and avoiding information redundancy within multimodal joint representation. We refer to our approach as Mutual Information-based Representations Disentanglement (MIRD). Last, we introduce unlabeled data to accurately estimate the mutual information. Meanwhile, the use of unlabeled data is also capable of helping to characterize the underlying structure of multimodal data and further mitigates overfitting.

To validate the effectiveness of the proposed MIRD, extensive experiments were conducted on several benchmark datasets for multimodal sentiment analysis. The experimental results demonstrate that MIRD not only outperforms the baseline models but also achieves the state-of-the-art performance. In addition, the visualization of the disentangled representations further confirms that the proposed method effectively disentangles the representation for each modality.

Our contributions are as follows:

-

1.

We propose a novel mutual information-based representations disentanglement method with the different framework from previous methods. It enables the superior disentanglement of modality-specific and modality-agnostic representations, avoiding information redundancy within multimodal joint representation.

-

2.

We introduce unlabeled data to accurately estimate the mutual information, which is also helpful to characterize the underlying structure of multimodal data to further improve the performance of the model.

-

3.

Comprehensive experiments on several benchmark datasets show that our model outperforms state-of-the-art methods by a large margin.

2 Related Works

2.1 Directly fuse

Tensor-based methods Although tensor-based methods were initially applied to aligned multimodal language sequences, they can be easily extended to the case of unaligned data. TFN [15] utilizes an outer product-based calculation of representations encoded from each modality to capture uni-modal, bi-modal, and tri-modal interactions. LMF [16] builds upon TFN by incorporating a low-rank multimodal tensor fusion technique, enhancing the efficiency of the model.

Graph-based methods Multimodal Graph [17] is structured hierarchically for two stages: intra- and inter-modal dynamics learning. In the first stage, a graph convolutional network is used for each modality to learn intra-modal dynamics. In the second stage, a graph pooling fusion network is used to automatically learn associations between nodes from different modalities and form a multimodal joint representation. GraphCAGE [18] leverages the dynamic routing of capsule network and self-attention to create nodes and edges for each modality, which effectively address long-range dependency issues. MTGAT [19] presents a procedure for transforming unaligned multimodal sequence data into a single graph comprising heterogeneous nodes and edges. This graph effectively captures the rich interactions among diverse modalities through time. Subsequently, a novel multimodal temporal graph attention is introduced to incorporate a dynamic pruning and read-out technique, enabling the refined joint representation of this multimodal temporal graph.

Cross-modal attention-based methods MulT [20] utilizes a cross-modal attention mechanism, which provides a latent cross-modal adaptation that fuses multimodal information by directly attending to low-level features in other modalities, regardless of the need for alignment. PMR [21] utilizes a message hub to facilitate information exchange among unaligned multimodal sequences. The message hub sends common messages to each modality and reinforces their features through cross-modal attention. In turn, it gathers the reinforced features from each modality to create a reinforced common message. Through iterative cycles, the common message and modality features progressively complement each other. LMR-CBT [22] introduces a novel cross-modal block transformer, enabling complementary learning across different modalities. It comprises local temporal learning, cross-modal feature fusion, and global self-attention representations.

2.2 Disentangle and fuse

MISA [23] projects each modality into two subspaces: one is modality-invariant subspace for capturing commonalities and reducing modality gaps, and another is modality-specific subspace for preserving unique characteristics. These representations are fused into a multimodal joint representation for sentiment prediction. FDMER [24] handles modality heterogeneity by learning two distinct representations for each modality on aligned multimodal sequences. The first representation is the common representation for projecting all modalities into a modality-invariant shared subspace with aligned distributions. The representation can capture commonality among modalities regarding suggested emotions and reduces the modality gap. The second representation is the private representation for providing a modality-specific subspace for each modality. In this subspace, FDMER learns the unique characteristics of different modalities and eliminates redundant information. MFSA [25] employs a predictive self-attention module to capture reliable contextual dependencies and enhance unique features within modality-specific spaces. In addition, a hierarchical cross-modal attention module is introduced to explore correlations between cross-modal elements across the modality-agnostic space. To ensure the acquisition of distinct representations, a double-discriminator strategy is employed in an adversarial manner. Finally, modality-specific and modality-agnostic representations are integrated into a multimodal joint representation for downstream tasks.

3 Methodology

In previous representations disentanglement-based frameworks, the feature sequence of each modality is separately processed through a modality-agnostic (a.k.a., shared/common) encoder to obtain the respective modality-agnostic representation. In contrast, we argue that learning modality-agnostic representations requires the cross-modal interactions, and obtaining modality-agnostic representations for each modality through a single modality-agnostic encoder is suboptimal and results in information redundancy in the aggregated multimodal joint representation. In this section, we describe our proposed Mutual Information-based Representations Disentanglement (MIRD) framework, as shown in Figure 2. The framework includes the encoders and decoders for each modality, the multimodal encoder, the Mutual Information Minimization (MIM) module, and the regressor. In the subsequent subsections, we will describe these modules in detail.

3.1 Formulation

Our task is defined as follows: Given a set of three modalities , an opinion video, i.e., multimodal language sequence, can be represented as where , , denotes the extracted sentiment features corresponding to modality at the -th moment, is the dimension of the feature, and is the length of sequence of modality . is the original sequence of words. Our goal is to predict the sentiment intensity of the whole video.

3.2 Modality-specific representations learning

To extract modality-specific (a.k.a., private) information, we design the unimodal encoders to map the feature sequences of each modality to their respective modality-specific subspaces, obtaining mutually exclusive modality-specific representations,

| (1) |

where , represents the encoder for modality , denotes the parameter of the encoder, is the acquired modality-specific representation, and is the dimension of the representation.

For all modalities, the Long Short-Term Memory (LSTM) [26] and subsequent fully connected networks are selected as the modality-specific encoders to model the intra-modal interactions within the sequences.

3.3 Modality-agnostic representation learning

The aim of the multimodal encoder is to obtain a modality-agnostic representation from the feature sequences of three modalities, and the learning of such a representation requires cross-modal interactions. Given the outstanding performance and popularity of text-centric cross-modal interaction models, we introduce our previous work Word-wise Sparse Attention BERT (WSA-BERT) [27], which takes into account long-range dependencies across modalities and sparse attention mechanisms between sequence elements. By injecting the non-verbal (audio and visual) information into the word representations at the intermediate stage of the text backbone model, more refined word representations are obtained.

More specifically, we first feed into a pretrained BERT [28] model and extract the middle word representations , where is the intermediate word representation at the -th moment, is the dimension of the representation, and represents the length of the sequence. Then, the non-verbal feature sequences and , along with the word representation sequence , are jointly mapped to a common semantic space, where the word is used as a semantic anchor to search for the non-verbal information most relevant to from holistic non-verbal sequences. The semantic similarity between each word representation and the non-verbal features is calculated and then followed by a Sparsemax [29] function to highlight the important information in non-verbal sequences,

| (2) |

where , is the similarity function (fully connected network and inner product similarity) with the parameter for modality , is the Sparsemax transformation which can map attention scores to a probability simplex, and denote the combined audio and visual feature sequences with the same length as the language sequence , respectively.

Furthermore, a Multimodal Adaptive Gating (MAG) [30] mechanism is leveraged to determine the amount of information injected from non-verbal modalities. Specifically, two adaptive gates are constructed to control audio and visual information, respectively,

| (3) |

where , represents the concatenation of and , represents a sigmoid activation function, is the single-layer fully connected network with the parameter , and is the acquired adaptive gate.

Then, the sequence of displacement vectors is acquired by fusing together audio and visual features multiplied by their respective gate vectors,

| (4) |

where represents the Hadamard product (element-wise product), and are the single-layer fully connected networks with the parameters and , respectively, is the acquired sequence of displacement vectors. Subsequently, the weighted summation is utilized between BERT word representations and its non-verbal displacement vectors to create a more refined word representations sequence containing non-verbal information,

| (5) |

| (6) |

where is a hyper-parameter, is the scaling factor which designed to remain the effect of non-verbal displacement vectors within a desirable range, is a function that transforms vectors into diagonal matrices, is the modified word representation incorporating global non-verbal information. We input into the subsequent BERT model to obtain the final word representations . Finally, the word representations are pooled and mapped to the modality-agnostic subspace,

| (7) |

where is the average pooling function, denotes the fully connected network with the parameter , is the acquired modality-agnostic representation, and is the dimension of the representation. For simplicity, we define the parameter of the multimodal encoder as .

3.4 Multimodal reconstruction

To ensure that the disentangled representations do not lose information and capture the underlying structure of multimodal data [31], the disentangled modality-specific representation and modality-agnostic representation are used to reconstruct the original representations ,

| (8) |

where , is the LSTM [26] decoder for modality with the parameter , is the reconstructed sequence of representations.

3.5 Mutual information minimization

The representations disentanglement aims to decompose the original representation into and , such that and contain completely different information. This requires removing any correlation between and , between and . Previous representations disentanglement-based methods [23, 25] have often utilized Orthogonal Constraints (OC), taking and as examples, as shown below:

| (9) |

where , and are sets of and from samples, respectively, represents the Frobenius norm.

The application of orthogonal constraints ensures linear independence among variables. Nonetheless, nonlinear relationships may still persist among these variables, leading to an inadequate level of representations disentanglement. To this end, we propose a constraint based on mutual information minimization, which effectively eliminates the dependency between variables. Specifically, we utilize Contrastive Log-ratio Upper Bound (CLUB) [32] to estimate the mutual information upper bound. CLUB bridges mutual information estimation with contrastive learning, where mutual information is estimated by the difference of conditional probabilities between the joint distribution (positive samples) and the marginal distribution (negative samples),

| (10) |

With sample pairs , has an unbiased estimation as:

| (11) |

Since the conditional distribution is unavailable, the above formula is difficult to calculate. Therefore, a variational distribution with parameter is used to approximate ,

| (12) |

In theory, as long as the variational distribution is good enough, (12) remains an upper bound on the mutual information [32]. We employ the neural networks parameterized by to characterize the variational distribution , and by enhancing the capacity of the neural network and updating its parameters, we can obtain a more accurate approximation of . Thus, the neural networks are referred to as the mutual information estimators.

To minimize the mutual information between and , at each training iteration, we first update the variational approximation by minimizing the negative log-likelihood,

| (13) |

After is updated, we calculate the estimator as described in (12). Finally, and are updated alternately during the training.

In addition to enforcing the independence of information between , , and , it is also necessary to impose constraints on the independence of information between and the original inputs , , to truly learn the shared information between modalities. As shown in Figure 3, taking visual and audio modalities as an example, without the constraint of information independence between and the original inputs, may contain excessive information beyond the genuine shared information. Thus we further use variational distribution to minimize the mutual information between and ,

| (14) | ||||

The corresponding negative log-likelihood is as follows,

| (15) |

For simplicity, we define the parameter of the variational distributions as .

3.6 Sentiment prediction

After obtaining the disentangled representations , a regressor is designed to predict the sentiment intensity of the video,

| (16) |

| (17) |

where is the concatenation of and , is a two-layer fully connected network with the parameter , is the predicted sentiment intensity. For simplicity, we define .

3.7 Optimization objective

The task-specifc loss estimates the quality of prediction during training. We use the Mean Squared Error (MSE) loss for the regression task,

| (18) |

where is the number of the samples in each batch, taking the logarithm of the MSE loss is done to avoid cumbersome weight hyper-parameter tuning and balance the gradients of each loss during the optimization process [33]. For reconstruction of original representations, the MSE loss is utilized for audio and visual modalities, and the Cross Entropy (CE) loss is utilized for language modality ( sentences are composed of discrete words in the lexicon),

|

|

(19) |

Similarly, taking the logarithm of the reconstruction loss for each modality is done to automatically balance the gradients of each loss during the optimization process and avoid manual adjustment of the loss weights. Furthermore, we define as , as , as , thus the Mutual Information Minimization (MIM) loss is as follows:

| (20) |

where , . The overall learning of the model is performed by minimizing:

| (21) |

where is the weight coefficient. Since the estimated mutual information values may become negative, we do not take their logarithm. Instead, we simply set the weights of the mutual information minimization losses to be the same. Meanwhile, as mentioned in Section 3.5, before optimizing , we need to minimize the negative log-likelihood for accurate mutual information estimation,

| (22) |

where , . and are optimized alternately during the training.

However, the estimation of mutual information may not be accurate due to limited labeled data availability [32], and introducing additional data can improve this. Thus, given the abundant supply of unlabeled opinion videos accessible on the Internet, we leverage them to assist in computing mutual information. On the other hand, these unlabeled data is also utilized to reconstruct original inputs, thereby aiding in characterizing the underlying structure of multimodal data, and further improving the performance of the model. The overall optimization algorithm of our model is shown in Algorithm 1,

4 Experiment Settings

4.1 Datasets

We conduct the evaluation of our methods on two extensively used datasets, namely CMU-MOSI [34] and CMU-MOSEI [2], which encompass three modalities: visual, language, and audio, to convey the sentiment of the speakers. In addition, a sentiment-unlabeled dataset EmoVoxCeleb [35] is introduced for accurately estimating mutual inforamtion and reconstructing original inputs.

CMU-MOSI dataset comprises 93 opinion videos extracted from YouTube movie reviews. Each video is composed of multiple opinion segments, with each segment annotated with sentiment scores ranging from -3 (strong negative) to +3 (strong positive). To align with previous studies [20, 12, 25], we employ 1,284 segments for training, 229 segments for validation, and 686 segments for testing.

CMU-MOSEI dataset, currently the largest multimodal sentiment analysis dataset, contains 22,846 movie review video clips sourced from YouTube. Each video clip is assigned a sentiment score between -3 (strong negative) and +3 (strong positive). To align with previous studies [20, 12, 25], we utilize 16,326 video clips for training, 1,861 video clips for validation, and 4,659 video clips for testing.

EmoVoxCeleb comprises a vast collection of over 100,000 video clips featuring over 1,200 speakers, also sourced from open-source media YouTube. The dataset exhibits a balanced distribution across genders and encompasses speakers with diverse ethnicities, accents, professions, and ages. Through a rough screening process, non-English video clips were excluded, resulting in the selection of 132,708 English video clips, representing 1,105 speakers. We select a total of 81,630 video clips, which is five times the size of the training set in CMU-MOSEI, as the unlabeled data.

4.2 Sentiment features

The sentiment-related features are extracted for non-verbal (visual and audio) modalities.

Visual: The MultiComp OpenFace2.0 toolkit [36] is employed to extract a comprehensive set of visual features, encompassing 340-dimensional facial landmarks, 35-dimensional facial action units, 6-dimensional head pose and orientation, 40-dimensional rigid and non-rigid shape parameters, and 288-dimensional eye gaze 111For more details, you can see https://github.com/TadasBaltrusaitis/OpenFace/wiki/Output-Format.

Audio: The librosa library [37] is utilized for extracting frame-level acoustic features which include 1-dimensional logarithmic fundamental frequency (log F0), 20-dimensional Mel-Frequency Cepstral Coefficients (MFCCs), and 12-dimensional Constant-Q chromatogram (CQT). These features are related to emotions and tone of speech according to [38].

4.3 Training details

All models are developed using the PyTorch toolbox [39] and trained on NVIDIA RTX 3090 GPUs. We employ the AdamW [40] optimizer with an initial learning rate of for the encoders, decoders, and the regressor. For the MIM module, we utilize the Adam [41] optimizer with an initial learning rate of . The batch size is set to 32, and the training process runs for 100 epochs. The mutual information estimator is a two-layer fully connected network with a hidden layer dimension of 32. The number of sample pairs for mutual information estimator is equal to batch size , and the number of iterations is set to 5. The hyper-parameter in MAG is set to 1, and the dimension of the latent representations is 64. All our experiments are conducted using the exact same random seed. In addition, we utilize the designated validation sets of CMU-MOSI and CMU-MOSEI datasets to identify the best hyper-parameters for our models.

| Datasets | CMU-MOSI | CMU-MOSEI | ||||||

|---|---|---|---|---|---|---|---|---|

| Metric | Acc | F1 | MAE | Corr | Acc | F1 | MAE | Corr |

| TFN (B)◇ [15] | 80.80 | 80.70 | 0.901 | 0.698 | 82.50 | 82.10 | 0.593 | 0.700 |

| LMF (B)◇ [16] | 82.50 | 82.40 | 0.917 | 0.695 | 82.00 | 82.10 | 0.623 | 0.677 |

| MulT∗ [20] | 80.45 | 80.47 | 0.892 | 0.667 | 81.02 | 80.98 | 0.605 | 0.670 |

| MISA (B)∗ [23] | 81.20 | 81.17 | 0.825 | 0.722 | 82.70 | 82.67 | 0.589 | 0.703 |

| GPFN§ [17] | 80.60 | 80.50 | 0.933 | 0.684 | 81.40 | 81.70 | 0.608 | 0.675 |

| GraphCAGE§ [18] | 82.10 | 82.10 | 0.933 | 0.684 | 81.70 | 81.80 | 0.609 | 0.670 |

| PMR∗ [21] | 81.33 | 81.30 | 0.875 | 0.669 | 82.12 | 82.07 | 0.614 | 0.675 |

| LMR-CBT∗ [22] | 80.42 | 80.38 | 0.901 | 0.657 | 80.75 | 80.79 | 0.634 | 0.653 |

| Self-MM∗ [12] | 85.21 | 85.18 | 0.773 | 0.774 | 84.07 | 84.12 | 0.556 | 0.750 |

| WSA-BERT∗ [27] | 83.99 | 83.83 | 0.766 | 0.779 | 84.36 | 84.39 | 0.556 | 0.763 |

| MFSA (B)∗ [25] | 83.32 | 83.30 | 0.804 | 0.746 | 83.92 | 83.87 | 0.566 | 0.743 |

| MIRD1 (ours) | 85.82 | 85.74 | 0.723 | 0.799 | 85.62 | 85.60 | 0.556 | 0.781 |

| MIRD2 (ours) | 86.23 | 86.16 | 0.721 | 0.804 | 86.34 | 86.29 | 0.553 | 0.823 |

4.4 Evaluation metrics

Consistent with previous studies [20, 12, 25], we record our experimental results in two forms: classification and regression. For classification, we report the weighted F1 score and binary accuracy. For regression, we report Mean Absolute Error (MAE) and Pearson correlation (Corr). Except for MAE, higher values indicate better performance for all metrics.

5 Results and Analysis

In this section, we make a detailed analysis and discussion about our experimental results. All codes are publicly available at here 222https://github.com/qianfan1996/MIRD.

5.1 Comparison with state-of-the-art models

The comparison results with state-of-the-art models on the CMU-MOSI and CMU-MOSEI datasets are shown in Table 1. means higher is better, and means lower is better. (B) means the language features are based on BERT [28]. ◇ means the results are from [12]; from original papers. ∗ denotes the reimplementation with non-verbal sentiment features mentioned in Section 4.2. denotes other representations disentanglement-based methods. MIRD1 and MIRD2 indicate the non-use and use of unlabeled data, respectively. % is omitted after Acc and F1 metrics. Best results are highlighted in bold.

From the results we can observe that, 1) our method MIRD (with or without unlabeled data) performs much better than all compared methods. The Accuracy, F1 score, MAE, and Correlation metrics of MIRD with unlabeled data are 86.23%, 86.16%, 0.721, and 0.804 on CMU-MOSI dataset, 86.34%, 86.29%, 0.553, and 0.823 on CMU-MOSEI dataset. The absolute improvements of the performance are 1.02%, 0.98%, 0.052, and 0.030 on CMU-MOSI dataset, 2.27%, 2.17%, 0.003, and 0.073 on CMU-MOSEI dataset compared to the second-best method Self-MM [12]. 2) MIRD outperforms previous representations disentanglement-based methods, MISA [23] and MFSA [25], on both datasets (we do not make comparisons with [42] and [24] since both of works are conducted on aligned multimodal language sequences, and official code implementations are not provided). These observations indicate that MIRD is more effective in modeling unaligned multimodal language sequences.

5.2 Efficiency analysis

To analyze the efficiency of MIRD, we list the number of parameters (#Params) and computation (Computational Budget) of each model, as shown in Table 2. Same as Table 1, (B) means the language features are based on BERT [28]; denotes other representations disentanglement-based methods. The M stands for millions; The GMACs denotes Giga Multiply–Accumulate Operations. From the table we can find that the model efficiency of MIRD is also remarkable under the premise of the best performance, especially compared with other representations disentanglement-based methods. This indicates that our method has relatively high applicability.

| Method | #Params | Computational Budget |

|---|---|---|

| MISA (B) | 123 M | 5.0 GMACs |

| Self-MM | 112 M | 4.6 GMACs |

| WSA-BERT | 115 M | 4.7 GMACs |

| MFSA (B) | 138 M | 5.6 GMACs |

| MIRD | 121 M | 4.9 GMACs |

5.3 Comparison with other representations disentanglement-based methods

To illustrate the differences between our method and previous representations disentanglement-based methods, we conduct a comparison in Table 3. In the table, ✓ denote presence, while ✗ indicate absence. The difference between the multimodal encoder and the common encoder is that the input of the multimodal encoder is multiple modalities together, while the input of the common encoder is one of each modality. Our method involves learning latent representations through cross-modal interactions, followed by a straightforward concatenation, whereas other representations disentanglement-based methods directly learn latent representations and then use the Transformer [43] for fusion.

| Module | MIRD | MISA | MFSA |

|---|---|---|---|

| Unimodal Encoder | ✓ | ✓ | ✓ |

| Multimodal Encoder | ✓ | ✗ | ✗ |

| Common Encoder | ✗ | ✓ | ✓ |

| Decoder | ✓ | ✓ | ✗ |

| Modality Discriminator | ✗ | ✗ | ✓ |

| Non-linear Constraint | ✓ | ✗ | ✗ |

| Fusion Mode | Concat | Transformer | Transformer |

| Interact before learning | ✓ | ✗ | ✗ |

5.4 Ablation study

To further explore the contributions of different components, we conduct an ablation study on CMU-MOSI dataset and the results are shown in Table 4. From this table, we can observe:

1) Experiment 1 removes the labeled data and uses only labeled data. The performance decreases significantly (accuracy drops from 86.23% to 85.82%, F1 score drops from 86.16% to 85.74%), highlighting the importance of utilizing more data to estimate the mutual information and helping to characterize the underlying structure of the multimodal data. However, despite the performance degradation observed in our method without unlabeled data, comparing it with other methods in Table 1, our method still significantly outperforms the alternatives. This shows the effectiveness of the proposed disentanglement architecture along with information constraints.

2) Experiment 2, which omits the mutual information minimization constraint between the shared representation and the input , does not result in a degradation of model performance. This is because after the representations disentanglement, there is an aggregation process on the disentangled representations, and whether or not the mutual information minimization constraint is applied does not affect the information integrity of the aggregated multimodal joint representation .

3) Experiment 3 removes the mutual information minimization constraints among the latent representations and . The performance further deteriorates (accuracy drops from 85.80% to 85.21%, F1 score drops from 85.77% to 85.09%), indicating that explicitly learning modality-specific and modality-agnostic representations is beneficial for sentiment prediction.

4) Experiment 4 indicates that if the original inputs are not reconstructed and only the WSA-BERT model [27] is used for sentiment prediction, the performance of the model decreases to an accuracy of 83.99% and an F1 score of 83.83%, further demonstrating the effectiveness of the proposed method.

| Method | Acc | F1 | MAE | Corr |

|---|---|---|---|---|

| MIRD2 | 86.23% | 86.16% | 0.721 | 0.804 |

| 1. MIRD1 | 85.82% | 85.74% | 0.723 | 0.799 |

| 2. | 85.80% | 85.77% | 0.722 | 0.796 |

| 3. | 85.21% | 85.09% | 0.750 | 0.787 |

| 4. | 83.99% | 83.83% | 0.766 | 0.779 |

5.5 Comparison between MIM and OC

The utilization of Orthogonal Constraint (OC) can enhance the linear independence between variables. However, it is possible that non-linear relationships still exist among them, leading to inadequate disentanglement of representations. The representations disentanglement method based on Mutual Information Minimization (MIM) can effectively eliminate the dependencies between variables, thereby achieving a more comprehensive disentanglement of representations across different modalities. This reduces information redundancy in the multimodal joint representation and consequently enhances the performance of the model. To validate this, we compare the performance of two constrained methods and visualize their disentangled representations, as shown in Table 5 and Figure 4.

| Dataset | Method | Acc | F1 | MAE | Corr |

|---|---|---|---|---|---|

| CMU-MOSI | OC | 85.35% | 85.32% | 0.744 | 0.790 |

| MIM | 85.80% | 85.77% | 0.722 | 0.796 | |

| CMU-MOSEI | OC | 85.46% | 85.39% | 0.577 | 0.794 |

| MIM | 85.87% | 85.82% | 0.570 | 0.798 |

From Table 5, we can observe that the MIM-based method outperforms the OC-based method in almost all evaluation metrics on the CMU-MOSI and CMU-MOSEI datasets. Specifically, on the CMU-MOSI dataset, the MIM-based method outperforms the OC-based method by 0.45% in terms of both accuracy and F1 score; on the CMU-MOSEI dataset, the MIM-based method exhibits superior accuracy and F1 score compared to the OC-based method, with differences of 0.41% and 0.43%, respectively. From Figure 4 (a), we can observe that in the method without relationship constraints, the disentangled visual representations (orange points) are separated from the language representations (blue points), and the major portion of the visual representations spontaneously form two distinct clusters. Furthermore, the disentangled audio representations (green points) also exhibit a rough formation of two clusters. From Figure 4 (b), it can be seen that in the OC-based method, the disentangled language representations (blue points) are separated from the visual representations (orange points). However, the MIM-based method tends to roughly cluster language, visual, audio, and shared representations separately, demonstrating greater distinctiveness (as depicted in Figure 4 (c)). It suggests that imposing relationship constraints on the latent representations yields more discriminative representations, with the MIM-based method showing superior distinctiveness compared to the OC-based method. Interestingly, in Figure 4 (a), we observe that despite the absence of any relationship constraint, the intermediate representations obtained by the model still exhibit some level of separability. This could be attributed to the fact that the individual modality encoders as well as the multimodal encoder have learned certain discriminative information.

5.6 Effect of unlabeled data

1) Mutual information estimation: The most direct reason for using unlabeled data is to estimate mutual information more accurately [32]. We plot the line chart of mutual information estimation values during the training process, as shown in Figure 5. The mutual information estimation values refer to the sum of mutual information between language, visual, audio, and shared representations pair-wise in each epoch, averaged over batches. From the figure, we can observe that without unlabeled data, the estimated mutual information converges rapidly (converging to approximately 0.4) but then exhibits intense oscillations. On the other hand, with unlabeled data, the mutual information estimation continuously decreases, approaching convergence at a smaller value 0.2, and the overall trend remains smooth. Therefore, the addition of unlabeled data can lead to more accurate mutual information estimation and more stable training process.

2) Model performance: The performance improvement resulting from the utilization of unlabeled data may be attributed to better estimating mutual information, thereby eliminating redundancy in multimodal joint representations. It may also be due to assisting in characterizing the structure of multimodal data by reconstruction, preventing model overfitting. To explore this, we conducted experiments on the CMU-MOSI dataset, and the results are shown in Table 6. In the table, \raisebox{-0.8pt}{1}⃝ refers to MIRD2, which uses unlabeled data to estimate mutual information and reconstruct the original input; \raisebox{-0.8pt}{2}⃝ indicates using unlabeled data to estimate mutual information without reconstructing the original input; \raisebox{-0.8pt}{3}⃝ represents using unlabeled data to reconstruct the original input without estimating mutual information; \raisebox{-0.8pt}{4}⃝ refers to MIRD1, which does not use any unlabeled data. Through the comparison of \raisebox{-0.8pt}{1}⃝ with \raisebox{-0.8pt}{2}⃝ and \raisebox{-0.8pt}{3}⃝, as well as \raisebox{-0.8pt}{4}⃝ with \raisebox{-0.8pt}{2}⃝ and \raisebox{-0.8pt}{3}⃝, we observe that the improvement in model performance is not only attributed to unlabeled data assisting in estimating mutual information but also to its contribution in reconstructing the original input and capturing the underlying structure of multimodal data.

| Method | Acc | F1 | MAE | Corr |

|---|---|---|---|---|

| \raisebox{-0.8pt}{1}⃝ | 86.23% | 86.16% | 0.721 | 0.804 |

| \raisebox{-0.8pt}{2}⃝ | 86.05% | 86.04% | 0.727 | 0.800 |

| \raisebox{-0.8pt}{3}⃝ | 85.98% | 85.92% | 0.723 | 0.801 |

| \raisebox{-0.8pt}{4}⃝ | 85.82% | 85.74% | 0.723 | 0.799 |

In addition, to investigate the impact of the amount of unlabeled data on model performance, we present the bar chart in Figure 6. From the figure, we observe that as the amount of unlabeled data increases, the model performance improves. The model achieves its best performance (86.23% on accuracy, 86.16% on F1 score) when the split rate is equal to 3. However, further increasing the amount of unlabeled data results in a gradual decline in performance. This is because (1) the model may have already learned sufficient information from the unlabeled data, and further increasing the data does not significantly improve the performance. (2) given the limited capacity of the model, extensive unlabeled data might hinder the model from effectively utilizing all the available data. Instead, it could introduce excessive noise, potentially leading to a decline in model performance.

5.7 Effect of loss weights

In order to select the optimal loss weight , we compare the performance of the model under different loss weights on CMU-MOSI and CMU-MOSEI dataset, as shown in Figure 7. From the figure, we can observe that on the CMU-MOSI dataset, the best performance is achieved when equals to 0.1, while on the CMU-MOSEI dataset, the best performance is achieved when equals to 1. This indicates that the weight of mutual information minimization should neither be too large nor too small. If it is too large, it can make the model difficult to learn, while if it is too small, it may not effectively serve as a regularization term. In addition, we find that when equals to 10, the performance of the model was significantly poor, with 58.23% accuary and 58.20% F1 score on CMU-MOSI dataset, 58.08% accuracy and 58.02% F1 score on CMU-MOSEI dataset (for aesthetic reasons, we did not depict the performance when equals to 10 in the figure). The reason is that the model prioritize disentangling correlations between latent representations, thereby neglecting the primary task of sentiment prediction.

5.8 Case study

To visually validate the reliability of our model, we present three examples shown in Figure 8. The examples are from the first three speaker videos of the test set of the CMU-MOSI dataset. From the figure, we can observe that the MIM-based approach exhibits the best sentiment prediction performance on the first two examples, and it is also comparable to the OC-based approach on the last example. This validates the generalizability of our proposed method in sentiment prediction tasks. Furthermore, we note that the method incorporating latent representation constraints (MIM, OC) outperforms unconstrained method (NC) in terms of sentiment prediction ability, indicating the significance of representations disentanglement.

6 Conclusion

In this paper, we highlighted the importance of learning modality-agnostic and modality-specific representations on modeling unaligned multimodal language sequences. A novel representations disentanglement architecture is proposed to learn a single modality-agnostic representation. The mutual information minimization constraints are further introduced along with our architecture to achieve superior disentanglement of representations, and avoiding information redundancy in multimodal joint representation. In addition, we emphasized the significance of employing unlabeled data. On one hand, it aids in estimating mutual information, and on the other hand, it helps to characterize the underlying structure of multimodal data, further mitigating the risk of model overfitting. Our method significantly improves the baseline and outperforms the state-of-the-art models in experiments.

References

- [1] R. G. Milo, Tools, language and cognition in human evolution, International Journal of Primatology 16 (1995) 1029–1031.

- [2] A. B. Zadeh, P. P. Liang, S. Poria, E. Cambria, L.-P. Morency, Multimodal language analysis in the wild: Cmu-mosei dataset and interpretable dynamic fusion graph, in: Proc. ACL, 2018, pp. 2236–2246.

- [3] P. P. Liang, Z. Liu, A. B. Zadeh, L.-P. Morency, Multimodal language analysis with recurrent multistage fusion, in: Proc. EMNLP, 2018, pp. 150–161.

- [4] L.-P. Morency, R. Mihalcea, P. Doshi, Towards multimodal sentiment analysis: Harvesting opinions from the web, in: Proc. Int. Conf. Multimodal Interfaces, 2011, pp. 169–176.

- [5] C. Minghai, W. Sen, L. Paul Pu, T. Baltrušaitis, Z. Amir, M. Louis-Philippe, Multimodal sentiment analysis with word-level fusion and reinforcement learning, Proc. ICMI (2017) 163–171.

- [6] A. Zadeh, P. P. Liang, N. Mazumder, S. Poria, E. Cambria, L.-P. Morency, Memory fusion network for multi-view sequential learning, in: Proc. AAAI, Vol. 32, 2018.

- [7] A. Zadeh, P. P. Liang, S. Poria, P. Vij, E. Cambria, L.-P. Morency, Multi-attention recurrent network for human communication comprehension, in: Proc. AAAI, Vol. 32, 2018.

- [8] H. Pham, P. P. Liang, T. Manzini, L.-P. Morency, B. Póczos, Found in translation: Learning robust joint representations by cyclic translations between modalities, in: Proc. AAAI, Vol. 33, 2019, pp. 6892–6899.

- [9] Y.-H. H. Tsai, P. P. Liang, A. Zadeh, L.-P. Morency, R. Salakhutdinov, Learning factorized multimodal representations, in: Proc. ICLR, 2019.

- [10] Y. Wang, Y. Shen, Z. Liu, P. P. Liang, A. Zadeh, L.-P. Morency, Words can shift: Dynamically adjusting word representations using nonverbal behaviors, in: Proc. AAAI, Vol. 33, 2019, pp. 7216–7223.

- [11] F. Qian, J. Han, Multimodal sentiment analysis with temporal modality attention, Proc. Interspeech (2021) 3385–3389.

- [12] W. Yu, H. Xu, Z. Yuan, J. Wu, Learning modality-specific representations with self-supervised multi-task learning for multimodal sentiment analysis, in: Proc. AAAI, Vol. 35, 2021, pp. 10790–10797.

- [13] W. Han, H. Chen, S. Poria, Improving multimodal fusion with hierarchical mutual information maximization for multimodal sentiment analysis, in: Proc. EMNLP, 2021, pp. 9180–9192.

- [14] B. Yang, B. Shao, L. Wu, X. Lin, Multimodal sentiment analysis with unidirectional modality translation, Neurocomputing 467 (2022) 130–137.

- [15] A. Zadeh, M. Chen, S. Poria, E. Cambria, L.-P. Morency, Tensor fusion network for multimodal sentiment analysis, in: Proc. EMNLP, 2017, pp. 1103–1114.

- [16] Z. Liu, Y. Shen, V. B. Lakshminarasimhan, P. P. Liang, A. B. Zadeh, L.-P. Morency, Efficient low-rank multimodal fusion with modality-specific factors, in: Proc. ACL, 2018, pp. 2247–2256.

- [17] S. Mai, S. Xing, J.-X. He, Y. Zeng, H. Hu, Analyzing unaligned multimodal sequence via graph convolution and graph pooling fusion, ArXiv abs/2011.13572 (2020).

- [18] J. Wu, S. Mai, H. Hu, Graph capsule aggregation for unaligned multimodal sequences, in: Proc. ICMI, 2021, pp. 521–529.

- [19] J. Yang, Y. Wang, R. Yi, Y. Zhu, A. Rehman, A. Zadeh, S. Poria, L.-P. Morency, Mtgat: Multimodal temporal graph attention networks for unaligned human multimodal language sequences, arXiv preprint arXiv:2010.11985 (2020).

- [20] Y.-H. H. Tsai, S. Bai, P. P. Liang, J. Z. Kolter, L.-P. Morency, R. Salakhutdinov, Multimodal transformer for unaligned multimodal language sequences, in: Proc. ACL, 2019, pp. 6558–6569.

- [21] F. Lv, X. Chen, Y. Huang, L. Duan, G. Lin, Progressive modality reinforcement for human multimodal emotion recognition from unaligned multimodal sequences, in: Proc. CVPR, 2021, pp. 2554–2562.

- [22] Z. Fu, F. Liu, H. Wang, S. Shen, J. Zhang, J. Qi, X. Fu, A. Zhou, Lmr-cbt: Learning modality-fused representations with cb-transformer for multimodal emotion recognition from unaligned multimodal sequences, arXiv preprint arXiv:2112.01697 (2021).

- [23] D. Hazarika, R. Zimmermann, S. Poria, Misa: Modality-invariant and-specific representations for multimodal sentiment analysis, in: Proc. ACM Multimedia, 2020, pp. 1122–1131.

- [24] D. Yang, S. Huang, H. Kuang, Y. Du, L. Zhang, Disentangled representation learning for multimodal emotion recognition, in: Proc. ACM Multimedia, 2022, pp. 1642–1651.

- [25] D. Yang, H. Kuang, S. Huang, L. Zhang, Learning modality-specific and-agnostic representations for asynchronous multimodal language sequences, in: Proc. ACM Multimedia, 2022, pp. 1708–1717.

- [26] S. Hochreiter, J. Schmidhuber, Long short-term memory, Neural Comput. 9 (1997) 1735–1780.

- [27] F. Qian, H. Song, J. Han, Word-wise sparse attention for multimodal sentiment analysis, Proc. Interspeech (2022) 1973–1977.

- [28] J. D. M.-W. C. Kenton, L. K. Toutanova, Bert: Pre-training of deep bidirectional transformers for language understanding, in: Proc. NAACL-HLT, 2019, pp. 4171–4186.

- [29] A. Martins, R. Astudillo, From softmax to sparsemax: A sparse model of attention and multi-label classification, in: Proc. ICML, 2016, pp. 1614–1623.

- [30] W. Rahman, M. K. Hasan, S. Lee, A. B. Zadeh, C. Mao, L.-P. Morency, E. Hoque, Integrating multimodal information in large pretrained transformers, in: Proc. ACL, 2020, pp. 2359–2369.

- [31] P. Vincent, H. Larochelle, Y. Bengio, P.-A. Manzagol, Extracting and composing robust features with denoising autoencoders, in: Proc. ICML, 2008, pp. 1096–1103.

- [32] P. Cheng, W. Hao, S. Dai, J. Liu, Z. Gan, L. Carin, Club: A contrastive log-ratio upper bound of mutual information, in: Proc. ICML, 2020, pp. 1779–1788.

- [33] S. Vandenhende, S. Georgoulis, W. Van Gansbeke, M. Proesmans, D. Dai, L. Van Gool, Multi-task learning for dense prediction tasks: A survey, IEEE Trans. on Pattern Anal. and Mach. Intell. 44 (7) (2021) 3614–3633.

- [34] A. Zadeh, R. Zellers, E. Pincus, L.-P. Morency, Mosi: multimodal corpus of sentiment intensity and subjectivity analysis in online opinion videos, arXiv preprint arXiv:1606.06259 (2016).

- [35] S. Albanie, A. Nagrani, A. Vedaldi, A. Zisserman, Emotion recognition in speech using cross-modal transfer in the wild, in: Proc. ACM Multimedia, 2018, pp. 292–301.

- [36] T. Baltrusaitis, A. Zadeh, Y. C. Lim, L.-P. Morency, Openface 2.0: Facial behavior analysis toolkit, in: IEEE Int. Conf. on Autom. Face & Gesture Recognit., 2018, pp. 59–66.

- [37] B. McFee, C. Raffel, D. Liang, D. P. Ellis, M. McVicar, E. Battenberg, O. Nieto, librosa: Audio and music signal analysis in python, in: Proc. of the 14th Python in Sci. Conf., Vol. 8, 2015, pp. 18–25.

- [38] W. Yu, H. Xu, F. Meng, Y. Zhu, Y. Ma, J. Wu, J. Zou, K. Yang, Ch-sims: A chinese multimodal sentiment analysis dataset with fine-grained annotation of modality, in: Proc. ACL, 2020, pp. 3718–3727.

- [39] A. Paszke, S. Gross, F. Massa, A. Lerer, J. Bradbury, G. Chanan, T. Killeen, Z. Lin, N. Gimelshein, L. Antiga, A. Desmaison, A. Köpf, E. Yang, Z. DeVito, M. Raison, A. Tejani, S. Chilamkurthy, B. Steiner, L. Fang, J. Bai, S. Chintala, Pytorch: An imperative style, high-performance deep learning library, ArXiv abs/1912.01703 (2019).

- [40] I. Loshchilov, F. Hutter, Decoupled weight decay regularization, in: Int. Conf. on Learn. Representations, 2018.

- [41] D. P. Kingma, J. Ba, Adam: A method for stochastic optimization, ArXiv abs/1412.6980 (2014).

- [42] J. He, H. Yanga, C. Zhang, H. Chen, Y. Xua, et al., Dynamic invariant-specific representation fusion network for multimodal sentiment analysis, Comput. Intell. and Neurosci. 2022 (2022).

- [43] A. Vaswani, N. Shazeer, N. Parmar, J. Uszkoreit, L. Jones, A. N. Gomez, Ł. Kaiser, I. Polosukhin, Attention is all you need, Adv. in Neural Inf. Processing Syst. 30 (2017).