Multivariate Relations Aggregation Learning in Social Networks

Abstract.

Multivariate relations are general in various types of networks, such as biological networks, social networks, transportation networks, and academic networks. Due to the principle of ternary closures and the trend of group formation, the multivariate relationships in social networks are complex and rich. Therefore, in graph learning tasks of social networks, the identification and utilization of multivariate relationship information are more important. Existing graph learning methods are based on the neighborhood information diffusion mechanism, which often leads to partial omission or even lack of multivariate relationship information, and ultimately affects the accuracy and execution efficiency of the task. To address these challenges, this paper proposes the multivariate relationship aggregation learning (MORE) method, which can effectively capture the multivariate relationship information in the network environment. By aggregating node attribute features and structural features, MORE achieves higher accuracy and faster convergence speed. We conducted experiments on one citation network and five social networks. The experimental results show that the MORE model has higher accuracy than the GCN (Graph Convolutional Network) model in node classification tasks, and can significantly reduce time cost.

1. Introduction

We are living in a world full of relations. It is of great significance to explore existing social relationships as well as predict potential relationships. These abundant relations generally exist among multiple entities; thus, these relations are also called multivariate relations. Multivariate relations are the most fundamental relations, containing interpersonal relations, public relations, logical relations, social relations, etc (White et al., 2016; Chang et al., 2019). Multivariate relations are of more complicated structures comparing with binary relations. Such higher-order structures contain more information to express inner relations among multiple entities. Since multivariate relations can maintain a larger volume of knowledge, multivariate relations are widely applied in a variety of application scenarios, such as anomaly detection, fraud detection, social and academic network analysis, interaction extraction, digital library system, etc (Xu et al., 2018; Nguyen et al., 2019; Yu et al., 2019a). Indeed, in social networks, multivariate relations are of more significance. Scholars have gradually realized the importance of multivariate relations in social networks. There are some previous works focus on closures in social networks and some studies focus on teams, groups, communities, etc (Yu et al., 2017; Zhang et al., 2018; Wang et al., 2019). All these related studies generally focus on mining some certain patterns, formulating corresponding models, or building some theoretical conclusions, etc.

In some specific scenarios, it seems that multivariate relations can be precisely divided into multiple binary relations. For example, Alice, Bob, Cindy, and David are in one same collaboration team (Figure 1). It can be depicted by a binary relation with two collaborated members. However, binary relations can only represent simple pairwise relationships, while in most cases, multivariate relations cannot be divided in this way. If Cindy is the team leader, Alice, Bob, as well as David never collaborate, then using binary relations will leading to over-expression or wrong expression of relations. Especially in social networks, multivariate relationships are more common such as friendships, kinships, peer relationships, etc. (Litwin and Stoeckel, 2016) Such complicated relationships cannot only be represented with binary relationships.

Graph neural network (GNN) has resoundingly taken neighborhood information of nodes into consideration, which is also proved to be more precise and effective (Qiao et al., 2018; Goyal and Ferrara, 2018). A certain node may have multiple neighbors, in which some of these neighbors are in closer relations because they are in multivariate relations. However, current GNN methods ignore the relations among neighbors. Based on the principle of ternary closure, there will be a large number of low-order fully-connected structures in interpersonal social networks. This causes complex relationships to appear frequently in social networks, such as network motifs (Yu et al., 2019b).

In this work, we propose a Multivariate relatiOnship aggRegation lEarning method called MORE. In our proposed method, we employ network motifs to model multivariate relationships in networks, because such the network structure has been proved to be effective in network embedding methods (Rossi et al., 2018, 2018).

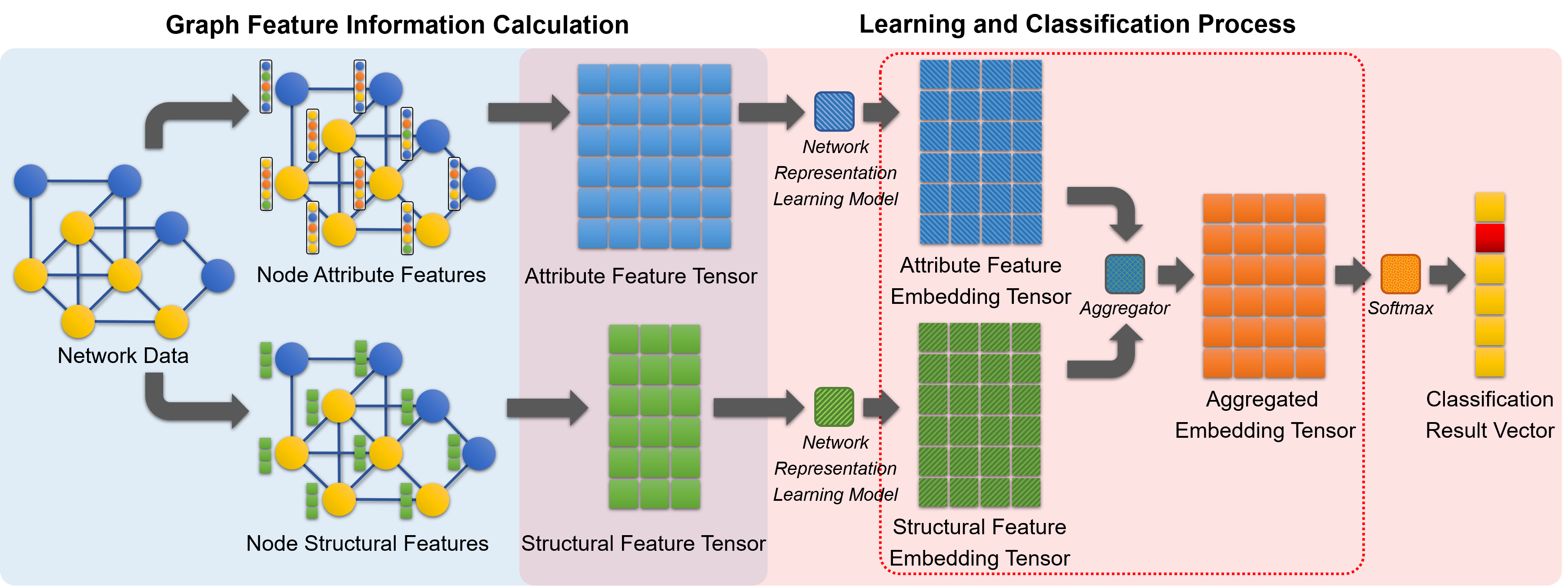

MORE considers both node attribute features and structural features to enhance the ability of multivariate relation aggregation. For both features, MORE first respectively generates attribute feature tensor and structural feature tensor. Then, by network representation learning, MORE can achieve two embedding tensors (i.e., attribute feature embedding tensor and structural feature tensor) with the same dimension. After then, MORE aggregates two embedding tensors into one aggregated embedding tensor with three different aggregators, including Hadamard aggregator, Summation aggregator, and connection aggregator. Finally, MORE employs softmax to achieve the target vector. Comparing with the baseline method, MORE outperforms with higher accuracy and efficiency in 6 different networks including Cora, Email-Eucore, Facebook, Ploblogs, Football, and TerrorAttack. We specifically analyze that in social networks, our proposed MORE achieves higher accuracy and consumes much less training time. Meanwhile, it is also found that MORE also outperforms the baseline method in the Cora dataset. Generally, we summarize our contributions as follows.

-

•

MORE aggregates both nodes’ attribute features and structural features, which is proved to have the ability to better representing multivariate relationships social networks.

-

•

MORE consumes much less training time compared with baseline methods. We find that MORE always iterates fewer times than the baseline method and the training time can be shortened to 19.5% times.

-

•

By implementing MORE on 5 different social networks and 1 general dataset, MORE achieves better performance with higher accuracy and efficiency.

In this paper, we first introduce the existing graph learning methods and their advantages and disadvantages in Section 2. Then, we introduce the design theory and framework of the MORE model in Section 3. Next, in Section 4, the MORE model will complete the node classification task in six datasets. And MORE will compare with GCN (Graph Convolutional Network) in terms of accuracy and efficiency. Finally, we will discuss the future improvement of the MORE model in Section 5. Section 6 concludes the paper.

2. Related Work

2.1. Digital Library

The emergence of the Internet and the development of related technologies have not only increased information but also changed the nature of traditional libraries and information services. The Digital Library (DL) has become an important part of the modern digital information system. In a narrow sense, the digital library refers to a library that uses digital technology to process and store various documents; in a broad sense, it is a large-scale, knowledge-free knowledge center that can implement multiple information search and processing functions without time and space restrictions. Academic data sets and online academic literature search platforms, such as IEEE / IEE Electronic Library, Wiley Online Library, Springer LINK, Google Academic Search, etc., can be regarded as representative modern digital libraries.

The knowledge information of digital libraries often need to sort and repair. Due to the large number of documents or the loss of knowledge information, manual repair is often inefficient and inaccurate. To this end, based on existing knowledge information, such as the author of the document, keywords, citation data and other information, efficient and automated realization of knowledge or document classification and missing information prediction has become one of the important topics in the field of digital library research. In the process of solving this research topic, machine learning technology play an important role. Wu et al. (Wu et al., 2015) have developed CiteSeerX, a digital library search engine, over 5-6 years. They combined traditional machine learning, metadata and other technologies to achieve document classification and deduplication, document and citation clustering, and automatic metadata extraction. Vorgia et al. (Vorgia et al., 2017) used many traditional machine learning methods, including 14 classifiers such as Naive Bayes, SVM, Random Forest, etc., to classify documents based on literature abstracts, and achieved good classification results on their datasets. However, the existing method requires a large number of training samples, to obtain a better model through a long iterative learning process. Meanwhile, because it is based on traditional scatter tables or binary relations, it does not take into account the multivariate relations between knowledge or literature, which limits the further optimization of its task accuracy.

2.2. Graph Learning & Classification

Classification task is one of the most classic application scenarios in the field of machine learning. Many researchers have studied “how to classify data” and have created a variety of classification algorithms. Among these algorithms, the logistic regression (LR) algorithm and the support vector machine (SVM) algorithm (Singh et al., 2016) are the most famous. These algorithms are usually based on mathematical knowledge and optimization theory, and they are simple to implement. By repeatedly performing gradient descent on a unit, the algorithm can obtain very effective classification results (Kurt et al., 2008; Caigny et al., 2018). However, these algorithms have huge flaws: They only consider the attributes of the nodes during the iteration process, and cannot consider the dependencies between the data. In the network data with rich relationships between data nodes, the classification accuracy of such algorithms is not high, and their training efficiency is usually low.

Over time, networks have become the focus of researchers’ attention. Machine learning in complex graphs or networks is becoming the focus of research by artificial intelligence scientists. Because traditional machine learning methods cannot effectively combine the related information in the network environment, to improve the efficiency and accuracy of tasks, graph learning algorithms have been proposed to solve graph-related problems. Meanwhile, the great success of the recurrent neural network (RNN) (Chung et al., 2014) and the convolutional neural network (CNN) (Krizhevsky et al., 2012) in the field of natural language (Vu et al., 2016; Kumar et al., 2016; Goldberg, 2017) and computer vision (Zhang et al., 2017; Hershey et al., 2017; Xiong et al., 2019) have provided new ideas and reference objects for graph learning methods. As a result, graph recurrent neural networks (GRNN) and graph convolutional neural networks (GCNN) have become mainstream methods.

Graph Recurrent Neural Network (GRNN). The graph neural network algorithm suitable for graph structure is first proposed by Gori et al. (Gori et al., 2005), aiming at improving the traditional neural network (NN) algorithm. Gori et al. use the recursive method to continuously propagate neighbor node information until the node state is stable. This neighborhood information propagation mechanism has become the basis for many subsequent graph learning algorithms. Later, Scarselli et al. (Scarselli et al., 2009) ameliorated this method. The new algorithm combines the ideas of RNN and applies to many types of graph structures, including directed and undirected graphs, cyclic graphs and acyclic graphs. Li et al. (Li et al., 2016) combined it with the Gated Recurrent Unit (GRU) theory (Cho et al., 2014). The resulting Gated Graph Neural Network (GGNN) reduced the number of iterations required, thereby improving the overall efficiency of the learning process. However, on the whole, the GRNN algorithm still costs a lot: From the space perspective, this overhead is reflected in the huge parameter and the intermediate state nodes that need to be stored. From the time perspective, these algorithms require a large number of iterations and long training time.

| Notations | Implications |

|---|---|

| Basic Operation: | |

| The number of elements in the set. | |

| Hadamard product operation. | |

| Graph Theory Related: | |

| G | A graph. |

| V | The node set of graph G. |

| E | The edge set of graph G. |

| A | The adjacency matrix of graph G. |

| D | The degree matrix of graph G. |

| Graph Learning Method Related: | |

| The identity matrix of . | |

| The Feature matrix. | |

| The renormalized adjacency matrix. | |

| The renormalized degree matrix. | |

| The activation function | |

| The softmax function. | |

| The weight parameter matrix to be trained. | |

Graph Convolutional Neural Network (GCNN). Different methods for defining convolution have created various graph convolutional neural network models. Bruna et al. (Bruna et al., 2014) combined spectral graph theory with graph learning methods. They used the graph Fourier transform and Convolution theorem in the field of graph signal processing (GSP) (Sandryhaila and Moura, 2013; Perraudin and Vandergheynst, 2017; Ortega et al., 2018), to obtain the graph convolution formula. Defferrard et al. (Defferrard et al., 2016) made further improvements on their basis. By cleverly designing convolution kernels and combining Chebyshev polynomials, this algorithm has fewer parameters and can effectively aggregate local features in the graph structure. The GCN model proposed by Kipf et al. (Kipf and Welling, 2017) is the master of this direction. By approximating the Chebyshev polynomial of the first order, their method is more stable while avoiding overfitting. Seo et al. (Seo et al., 2018) combined the long short-term memory (LSTM) mechanism (Sak et al., 2014) in RNN, and proposed the GCRN model that can be applied to dynamic networks. On the other hand, Micheli (Micheli, 2009) proposed to directly use the neighborhood information aggregation mechanism to implement the graph convolution operation. Gilmer et al. (Gilmer et al., 2017) integrated this theory and proposed a general framework for this kind of GCNN. Hamilton et al. (Hamilton et al., 2017) used the sampling method to unify the number of neighbor nodes and established the well-known GraphSage algorithm. Velickovic et al. (Velickovic et al., 2018) combined the attention mechanism (Chorowski et al., 2015) with GCNN and proposed the graph attention network (GAT) model. These two ideas have their advantages, but they are still limited to neighborhood information. This will lead to partial omission or even complete loss of the rich multivariate relationship information in the network.

3. The Design of MORE

In this section, we introduce the framework of the MORE. First, we illustrate the definition and characteristics of the network motif. Then, the correlation between the multivariate relationship and the network motif will be explained. Finally, we introduce the framework of the MORE model in detail.

3.1. Network Motif

The network motif firstly refers to a part that has a specific function or a specific structure in a biological macromolecule. For example, a combination of amino acids that characterize a particular function in a protein macromolecule can be called as a motif. Milo et al. (Milo et al., 2002) extended this concept to the field of network science in 2002. Their research team discovered a frequent subgraph structure while studying gene transcription networks. In subsequent research work, they found some special subgraphs with similar characteristics but different structures in many natural networks. Milo et al. summarized the relevant results and proposed a new network subgraph concept called the network motif.

Unlike network graphlets and communities, the network motif is a special type of subgraph structure in the network, which has a stronger practical scene and significance. By definition, the network motif is a kind of connected induced subgraphs, which have the following three characteristics:

(1) Network motifs have stronger practical meaning. Similar to the concept of motifs in biology, network motifs also have some specific practical significances. This practical meaning is determined by the certain structure of the network motif, the type and the characteristic of the network. For example, a typical three-order triangle motif can represent a three-person friendship in a general social network. Therefore, the number of such network motifs can be effectively used to study the correctness of the ternary closure principle in social networks.

(2) Network motifs appear more frequently in real world networks. This phenomenon is caused by the structural characteristics of the network motif and its practical significance. In a real network and a random network with the same number of nodes and edges, the network motif appears much more frequently in the former than the latter. In some real sparse networks, such as transportation networks, some complex network motifs may still occur frequently. But they cannot be found in the corresponding random networks.

(3) Network motifs are mostly low-order structures. Network motifs can be regarded as a special kind of low-order subgraph structure. In general, the number of nodes that make up a network motif will not exceed eight. Scholars refer to a network motif consisting of three or four nodes as a low-order network motif, and a network motif composed of five or more nodes is called a high-order network motif. High-order network motifs are numerous, complex, and are often used in the super-large-scale network. Applying it to the analysis of a general network requires a lot of time to perform preprocessing, and it is difficult to obtain better experimental results than using low-order motifs. That is, the input time cost and the output experimental effect often fail to balance. Therefore, in this paper, we mainly use low-order network motifs composed of three or four nodes for network analysis.

| Motif | Code | |||||

|---|---|---|---|---|---|---|

| M31 | 2.00 | 2 | 1 | 1.00 | 1 | |

| M32 | 1.33 | 2 | 2 | 0.67 | 0 | |

| M41 | 3.00 | 3 | 1 | 1.00 | 4 | |

| M42 | 2.50 | 3 | 2 | 0.83 | 2 | |

| M43 | 2.00 | 2 | 2 | 0.67 | 0 |

At present, the research scope of network motif researchers has expanded from the biochemical network, gene network, and biological neural network to social networks, academic collaboration networks, transportation networks, etc. In these networks, they found a large number of network motifs with different structural characteristics. In this sense, the network motif can reveal the basic structure of most network structures and play an important role in the specific application functions of the network. In Table 2, we list various network motifs used in this paper. Among them, denotes the average degree, denotes the maximum degree, denotes the diameter, denotes the density, and denotes the number of triangles contained in the network motif.

3.2. Multivariate Relationship & Network Motif

Relationships are the foundation of network building. In the network dataset, the edge between two nodes actually reflects a kind of binary relationship, that is, there is a relationship between the two data entities. This low-order relationship is simple, direct, and easy to characterize. However, because it only considers the association between two data, low-order relationships often lose high-order information within many datasets. To this end, researchers have extended the binary relationship into the multivariate relationship, and analyzed the multivariate relationship to mine patterns, features and implicit associations in the network, to obtain more accurate and valuable conclusions.

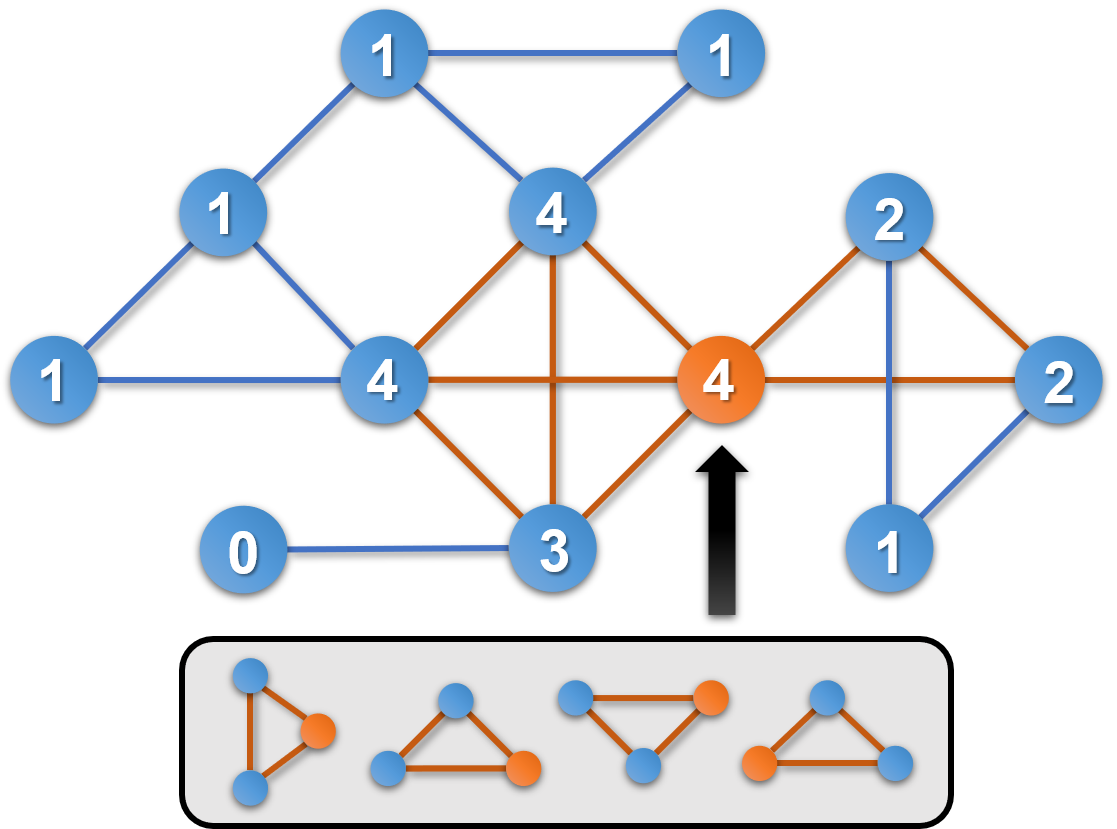

Multivariate relationships are common in actual networks, and the network motif can effectively characterize them because of their definitions and properties. As shown in Figure 2, in a social network, besides the direct relationship connect one user to another, the ternary closure relationship between three users is a common phenomenon existing in the social environment. This relationship can be regarded as a fully-connected small group, and fully-connected network motifs, such as M31 and M41 (in Table 2), can effectively characterize this multivariate relationship in the social network. This kind of network motifs can also be used to characterize the ubiquitous coauthor team consisting of three or four scholars in the academic network. In transportation networks, such as urban road networks, there are a large number of block-like structures in the network, due to the constraints of realistic conditions such as traffic planning and geographical factors. This multivariate relationship between intersections can be effectively characterized by M43.

In this paper, we utilize the concept of Node Motif Degree (NMD) to characterize the association of a particular multivariate relationship at a node, and its definition is as follows.

Definition 0.

(Node Motif Degree) In the graph , the node motif degree information of a node is defined as the number of the motif , whose constituent nodes includes the node .

As shown in the Figure 4, the number of the nodes represents the node motif degree information of the triangle motif (M31). Through these graph features, we can better connect the single node, the network motif and the multivariate relationship in the network structure.

3.3. The Framework of MORE

In this part, we will introduce the MORE model proposed in this article, which is simple and easy to implement. This model can effectively catch the multivariate relationship information in the network. While improving the accuracy of graph learning tasks, MORE greatly speeds up the convergence and reduces the time cost in the learning process.

The framework structure of the entire MORE model is shown in Figure 3. The overall model contains two parts, one is the graph feature information calculation, and the other is the learning and classification process. The attribute feature tensor and structure feature tensor output by the first part will be used as the input of the second part. It is worth noting that in the above figure, due to space constraints, we have not fully expanded the content of the aggregated part (the red dotted box in the figure). The content of the method in this part will be explained in Figure 5.

For the input network dataset, we first extract the characteristic information of its nodes. MORE algorithm considers the attribute features and structural features of the node. The algorithm directly extracts the attribute information of each node in the graph structure, and integrates it into the node Attribute Feature Tensor (AFT) of the total graph. Moreover, to better characterize the structural association and multivariate relationship information, the algorithm calculates five kinds of node motif degree information of each node in the network, including the node motif degree of M31, M32, M41, M42 and M43. This information will be integrated with the original degree of the node, and then the node Structural Feature Tensor (SFT) will be obtained.

Because the two feature tensors obtained in the above process often have large scale differences, and the direct connection two tensors will easily destroy the internal correlation of the feature itself, we consider using a graph representation learning model to reduce or expand the dimension of original tensors. By transform its node feature representation method, we can make the two have the same dimensional information. In this paper, this model uses the GCN model, which uses a graph convolutional network to implement the network embedding process. The GCN model is an effective model to deal with graph-related problems. The core of GCN uses a graph convolution operation based on spectral graph theory, and its formula is expressed as follows:

| (1) |

Wherein, represents the graph feature vector, represents the weight parameter vector, and represents the graph convolution operation. To improve the stability of the algorithm and avoid gradient explosion or disappearance during the learning process, GCN replaced in the above formula with . Wherein, and . Through this methodmodel, we transform the original AFT and SFT into their respective embedding tensors, namely the Attribute Feature Embedding Tensor (AFET) and the Structure Feature Embedding Tensor (SFET).

In order to aggregate the AFET and SFET, get the Aggregated Embedding Tensor (AET), and apply it to practical tasks, MORE proposed three multivariate relationship aggregation methods, as shown in Figure 5. The Hadamard Aggregation (HA) method, or dot multiplication aggregation method, gets AET by performing dot multiplication of two embedding tensors; The Summation Aggregation (SA) method gets AET by adding two embedding tensors. These two aggregation methods will not change the size of the aggregation tensor, consume less time for the iterative process, and will not greatly affect the internal correlation information of the features. The Connection Aggregation (CA) method gets AET by connecting two embedding tensors. Although this method will double the size of the aggregation tensor, and the iterative process requires a higher time cost, it can completely retain all the feature information in the total network dataset. In this paper, we use MORE-HA, MORE-SU, and MORE-CO, to represent the MORE algorithm using three aggregation methods (HA, SA and CA) in the aggregation processing of AFET and SFET, respectively.

Finally, the MORE model is used for node classification tasks to measure and express the performance of our algorithm in real network environments. We have considered inputting AET into a graph learning model again for iterative training. However, it was found through experiments that, when the GCN model was applied, the previous iterative process was sufficient to meet the needs of the processing task. If we add more graph learning models, it will cause severe overfitting. To this end, the algorithm directly adds a Softmax layer at the end to generate the one-hot vector required for node classification.

The above is an iterative process of the MORE model. This process can be expressed by the following formula.

| (2) |

Among them, represents the single GCN iteration process, and represents the aggregation process, which is selected from three methods. represents the Softmax function, which is defined as . Next, we use the cross-entropy error as the loss function of the model in the node classification task, and continuously adjust the values of the relevant weight parameters through the gradient descent algorithm. The specific error function of one data can be described by the following formula.

| (3) |

Wherein, represents the true node classification label of the network data set, and represents the node classification label predicted by the model.

4. Experiment

In this section, we explain the experimental design of the node classification task using the MORE model. We illustrate the data set used firstly. Then, the operating environment, hyperparameter settings, and baseline method will be introduced. Finally, the advantages of our method are explained in terms of the accuracy and efficiency of the node classification task.

4.1. Datasets

We selected 6 different datasets to conduct experiments to reflect the characteristics and advantages of our model. The basic information of these data sets is shown in Table 3. The information of each dataset is divided into three parts: the first part is the basic information, the second part is the structure information, and the third part is the network motif information. In this tabel, #Node represents the number of nodes in the dataset network, #Edge represents the number of edges, #Feature represents the feature number of each node, and #Label represents the type number of node labels in the total network. ‘-’ in #Feature means that the dataset is missing node feature data. In addition to the above basic network information, we have additionally listed the average degree , the maximum degree , the network density , and the overall clustering coefficient Clustering of the network. These statistical indicators will effectively characterize the density of associations between data and the connection status of the network.

Because our model needs to consider the number of motifs in the network, and use this to characterize the multivariate relationships and high-order structural features in the dataset, we need to count several representative network motifs and get the motif node degree information of each node in the network. The number of network motifs we used in each network dataset is also shown in Table 3. #M31 represents the number of triangle motif, and #M32 represents the number of three-order path motif in the network. #M41, #M42 and #M43 respectively represent the number of three kinds of four-order motif mentioned in this paper.

| Network Name | Cora1 | Email-Eucore3 | Facebook4 |

|---|---|---|---|

| #Node | 2708 | 1005 | 6637 |

| #Edge | 5278 | 16706 | 249967 |

| #Feature | 1433 | - | - |

| #Label | 7 | 41 | 3 |

| 3.9 | 33.25 | 75.32 | |

| 168 | 347 | 840 | |

| 0.00144 | 0.033113 | 0.011351 | |

| Clustering | 0.24 | 0.399 | 0.278 |

| #M31 | 1630 | 105461 | 2310053 |

| #M32 | 47411 | 866833 | 30478345 |

| #M41 | 220 | 423750 | 13743319 |

| #M42 | 2468 | 2470220 | 83926778 |

| #M43 | 1536 | 909289 | 45518420 |

| Network Name | Polblogs2 | Football2 | TerrorAttack1 |

| #Node | 1224 | 115 | 1293 |

| #Edge | 16718 | 613 | 3172 |

| #Feature | - | - | 106 |

| #Label | 2 | 12 | 6 |

| 27.32 | 10.66 | 4.91 | |

| 351 | 12 | 49 | |

| 0.022336 | 0.093516 | 0.003798 | |

| Clustering | 0.32 | 0.403 | 0.378 |

| #M31 | 101043 | 810 | 26171 |

| #M32 | 1038396 | 3537 | 232 |

| #M41 | 422327 | 732 | 241419 |

| #M42 | 2775480 | 1155 | 0 |

| #M43 | 1128796 | 564 | 0 |

- •

- •

- •

- •

4.2. Environmental Settings

Because the comparison of the running time is needed in this experiment, we provide information for the computer’s operating environment and hardware configuration. We use a Hewlett-Packard PC, ENVY-13: The operating system is Windows 10 64-bit, the CPU is Intel i5-8265U, and the memory is 8G. We use Python 3.7.3 and Tensorflow 1.14.0 for coding, and use Visual Studio Code for programming.

During the training process, we divide the original dataset into a training set, a validation set, and a test set. If the number of nodes in the dataset is between 1150 and 3000, we randomly select 150 data as the training set (TRS) and 500 data as the verification set (VAS), and finally test the model effect on a test set (TES) consisting of 500 data. For the Email-Eucore, Facebook, and Football, we construct three sets at similar proportions. Besides, since the attribute information of the nodes is used in the iteration, we use a method that assigns a one-hot vector to each node randomly for the network without features. This method is simple, easy to implement, and almost does not affect overall mission performance.

The choice of hyperparameters is also worth considering because they directly affect the final experimental results. In this experiment, if there is no special statement, we optimize the model using Adam with the learning rate of 0.01, and the maximum training iterations number max_epoch of 300. This experiment uses two regularization methods: random inactivation and L2 regularization. The probability of random inactivation drop_out is 0.5, and the L2 regularization factor is 0.0005. To avoid the occurrence of overfitting, the early stopping method is adopted, and the tolerance is 30 (If the loss of the validation set does not decrease during 30 consecutive iterations, the algorithm will automatically stop). Also, the embedding dimension ED is an important attribute, which directly determines the capacity of the model space. In this experiment, the graph representation learning model use one layer of GCN structure. AFT and SFT are embedded in 32, 64, 128, 256, and 512 dimensions, respectively, and the best performance is selected as the final experimental result.

| Network | ||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| GCN | MORE-HA | MORE-SU | MORE-CO | GCN | MORE-HA | MORE-SU | MORE-CO | GCN | MORE-HA | MORE-SU | MORE-CO | |

| Cora | 82.20% | 81.80% | 81.40% | 81.60% | 79.00% | 82.30% | 80.40% | 81.60% | 76.20% | 81.20% | 79.90% | 81.40% |

| Email-Eucore | 51.50% | 61.50% | 58.25% | 60.75% | 23.00% | 53.50% | 54.00% | 56.00% | 09.75% | 47.00% | 50.25% | 51.75% |

| 58.20% | 60.05% | 61.45% | 60.40% | 53.70% | 58.65% | 56.65% | 54.65% | 53.85% | 55.70% | 54.20% | 54.65% | |

| Polblogs | 95.80% | 96.20% | 95.80% | 95.90% | 96.00% | 96.20% | 95.80% | 95.80% | 95.80% | 96.20% | 95.80% | 95.60% |

| Football | 86.67% | 86.67% | 86.67% | 86.67% | 44.44% | 86.67% | 86.67% | 86.67% | 40.00% | 86.67% | 84.44% | 86.67% |

| TerrorAttack | 75.20% | 35.00% | 76.20% | 76.40% | 71.20% | 33.80% | 75.00% | 76.20% | 66.40% | 33.60% | 75.20% | 76.40% |

| Network | Model | Accuracy | #Iter | ASTT(s) | OIT(s) | TET(s) | Network | Model | Accuracy | #Iter | ASTT(s) | OIT(s) | TET(s) |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Cora | GCN | 82.10% | 791 | 0.0622 | 71.8276 | 0.0301 | Polblogs | GCN | 95.60% | 1008 | 0.0458 | 62.2642 | 0.0175 |

| MORE-HA | 81.30% | 338 | 0.0887 | 42.9283 | 0.0322 | MORE-HA | 96.20% | 315 | 0.0586 | 25.4139 | 0.0309 | ||

| MORE-SU | 79.90% | 251 | 0.0867 | 31.8249 | 0.0359 | MORE-SU | 95.80% | 124 | 0.0732 | 12.5456 | 0.0346 | ||

| MORE-CO | 81.10% | 257 | 0.1047 | 39.1086 | 0.0583 | MORE-CO | 95.80% | 157 | 0.0812 | 17.7403 | 0.0319 | ||

| Email-Eucore | GCN | 51.00% | 954 | 0.0434 | 56.0472 | 0.0170 | Football | GCN | 84.44% | 559 | 0.0142 | 9.9511 | 0.0140 |

| MORE-HA | 59.25% | 350 | 0.0417 | 20.1162 | 0.0160 | MORE-HA | 86.67% | 164 | 0.0149 | 3.1719 | 0.0123 | ||

| MORE-SU | 54.75% | 220 | 0.0376 | 11.8910 | 0.0160 | MORE-SU | 84.44% | 138 | 0.0145 | 2.5635 | 0.0044 | ||

| MORE-CO | 57.25% | 220 | 0.0507 | 17.0667 | 0.0259 | MORE-CO | 86.67% | 99 | 0.0171 | 2.2097 | 0.1577 | ||

| GCN | 57.60% | 495 | 0.5414 | 360.3334 | 0.1871 | TerrorAttack | GCN | 75.40% | 327 | 0.0236 | 10.9818 | 0.0110 | |

| MORE-HA | 59.05% | 167 | 0.5475 | 123.9314 | 0.2079 | MORE-HA | 33.80% | 146 | 0.0263 | 5.3676 | 0.0110 | ||

| MORE-SU | 59.55% | 102 | 0.5890 | 81.4163 | 0.1985 | MORE-SU | 76.20% | 86 | 0.0374 | 4.4990 | 0.0150 | ||

| MORE-CO | 59.80% | 82 | 0.6361 | 70.6593 | 0.2224 | MORE-CO | 76.20% | 87 | 0.0333 | 4.0502 | 0.0140 |

4.3. Baseline Method

We compare the MORE model with the GCN model proposed by Kipf et al (Kipf and Welling, 2017). As a well-known algorithm in the field of graph convolutional networks, GCN is based on spectral graph theory and has been recognized by many researchers in this field. We think that using it as a comparison algorithm can effectively illustrate the advantages of our algorithm. During the experimental phase, the GCN model used for comparison adopts the double-layer structure as the original paper. The specific iteration formula is as follows:

| (4) |

Wherein, ReLU represents the linear rectification function which used as the activation function, and and represent the training weight matrix.

4.4. Node Classification Accuracy

On the whole, we will show our experimental content in terms of the accuracy and overall efficiency of the node classification task. For experiments on the accuracy, we set three groups of hyperparameters that differ in the learning rate and the maximum number of iterations max_epoch (Their values are [0.01, 300], [0.001, 500], and [0.0003, 1000], respectively), to enhance the persuasiveness of our experimental results. Then, we iterated the MORE model and GCN model using three groups of hyperparameters in six network datasets, and the experimental results are shown in Table 4. It can be seen that in all networks, the highest accuracy of the MORE model is better than that of the GCN model. In the Email-Eucore dataset which is rich in the network motif, our model has improved by 10% based on the 51.50% accuracy of the GCN model.

(a) Change in Accuracy on TRS.

(b) Change in Accuracy on VAS.

(c) Change in Accuracy on TES.

(d) Change in LOSS on TRS.

(e) Change in LOSS on VAS.

(f) Change in LOSS on TES.

By comparing the three models proposed in this paper, namely MORE-HA, MORE-SU, and MORE-CO, we can obviously find that the MORE-HA model has the highest times of getting the best accuracy. This is because the MORE-HA model uses the Hadamard product process to make one type of feature information as a bias of another type, and preserves the implicit association as much as possible. The aggregation process of the MORE-SU model and the MORE-CO model leads to the cancellation or even destruction of some of this correlation information, resulting in a decrease in the overall accuracy. However, it is worth noting that in TerrorAttack dataset, we find that the accuracy of the MORE-HA is very low. The reason for this phenomenon is that the number of M42 and M43 motif in the network is zero, causing the Hadamard product to completely lose data. This phenomenon does not occur during the summation process and connection process, so it can be said that the MORE-SU and MORE-CO models have stronger stability than MORE-HA model.

4.5. Node Classification Effectiveness

We perform comparative experiments on the efficiency of the node classification task. We iterate the four models to convergence with a learning rate of 0.003. The experimental results are shown in Table 5. Wherein, we use the number of iterations #Iter, the Average Single Training Time (ASTT), the Overall Iteration Time (OIT), and the TEst running Time (TET) to measure the convergence speed and training efficiency of the model. It can be found that by combining the multivariate relationships in the network environment, the overall efficiency of the algorithm has been greatly improved. Compared with the GCN model, the MORE model can reduce the OIT required in the iterative process by up to 19.5% of GCN, and reduce #Iter by up to 15.5% of GCN. Moreover, the MORE model has not reduced the accuracy or even improved it in the node classification tasks of most datasets.

Figure 6 further shows the convergence speed comparison between MORE and GCN models. We run four models on the Email-Eucore dataset with = 0.001, and continue to iterate until one of the models converges. In this process, we calculate the accuracy and loss of the four models on the training set, validation set, and test set for node classification tasks after each iteration, and draw it into a line chart. It can be clearly seen that the convergence speed of the MORE model is significantly higher than that of the GCN model. And compared with MORE-HA and MORE-SU, because the node information is completely preserved during the connection process, MORE-CO using the connection aggregation has more information available during the iteration. This reason ultimately leads that MORE-CO has the highest training efficiency.

It is worth noting that we can clearly find from Figure 6 (a)-(c) that around the 25th iteration, the accuracy trends of all models have shown a large degree of smoothness. Even in the MORE-HA model, the accuracy of the node classification task experienced a local peak. This phenomenon is caused by the data set and the gradient descent algorithm. In the Email-Eucore dataset, comparing the four models, it can be found that MORE-SU and MORE-CO are less affected. They did not experience a sudden increase or decrease in the accuracy of the validation set and the test set. This matches the conclusions we have drawn above, that is, MORE-SU and MORE-CO are more stable than MORE-HA.

5. Discussion

In this section, we discuss the future direction of improvement about the current model. For our model, we propose three feasible directions to meet the needs of more data processing tasks.

-

•

Use other graph representation learning model. Our method has the potential as a framework. The MORE model proposed in this paper uses a single-layer GCN structure as an intermediate module for graph representation learning and only compares it with the GCN model. Next, we will try to improve MORE into a framework that can be applied to graph learning algorithms to improve the comprehensive performance of GCNN models.

-

•

Extend the application scope of the graph structure. Due to the limitation of the GCN algorithm, the currently proposed MORE model can only be applied to undirected graph structures. In the future, we hope to extend existing models to more graph structures, such as directed graphs. There are many types of directed network motif and they can more specifically represent some kinds of multivariate relationships in the network. We believe that it can further improve the accuracy and efficiency of the method.

-

•

Apply to large-scale networks. The existing graph learning methods all face the challenge that it is difficult to apply to large-scale networks. This is due to multiple reasons such as low iteration efficiency, high memory consumption, and poor parallelism in a large-scale network environment. With the continuous increase of data, graph learning of large-scale networks is an inevitable development trend in the future. We want to improve the applicability of the algorithm in large-scale networks to meet the needs of an ever-increasing network dataset in the future work.

6. Conclusion

Multivariate relationships appear frequently in various types of real networks. In this paper, we propose a new node classification method based on graph structure and multivariate relations. Our MORE model utilizes the network motif to characterize multivariate relations in the network environment. This relation information that is partially missed or even completely lost in other graph learning algorithms is effectively used in MORE, which makes our algorithm more accurate and efficient. MORE transforms the multivariate relation into the node structural characteristics, and aggregates with the node attribute characteristics, and completes the task of predicting the types of nodes. Experimental results on several network datasets show that the MORE model can effectively capture the potential multivariate relationship information. In this setting, our model is comprehensively superior to the GCN method in terms of computational efficiency and accuracy. In future work, we will try to expand MORE to a graph learning framework, and improve the adaptability of various graph structures and large-scale networks.

References

- (1)

- Bruna et al. (2014) Joan Bruna, Wojciech Zaremba, Arthur Szlam, and Yann Lecun. 2014. Spectral networks and locally connected networks on graphs. In International Conference on Learning Representations (ICLR2014), CBLS, April 2014.

- Caigny et al. (2018) Arno De Caigny, Kristof Coussement, and Koen W. De Bock. 2018. A new hybrid classification algorithm for customer churn prediction based on logistic regression and decision trees. European Journal of Operational Research 269, 2 (2018), 760 – 772.

- Chang et al. (2019) Soowon Chang, Nirvik Saha, Daniel Castro-Lacouture, and Perry Pei-Ju Yang. 2019. Multivariate relationships between campus design parameters and energy performance using reinforcement learning and parametric modeling. Applied Energy 249 (2019), 253 – 264.

- Cho et al. (2014) Kyunghyun Cho, Bart van Merrienboer, Çaglar Gülçehre, Dzmitry Bahdanau, Fethi Bougares, Holger Schwenk, and Yoshua Bengio. 2014. Learning Phrase Representations using RNN Encoder-Decoder for Statistical Machine Translation. In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing, EMNLP 2014, October 25-29, 2014, Doha, Qatar, A meeting of SIGDAT, a Special Interest Group of the ACL. 1724–1734.

- Chorowski et al. (2015) Jan K Chorowski, Dzmitry Bahdanau, Dmitriy Serdyuk, Kyunghyun Cho, and Yoshua Bengio. 2015. Attention-Based Models for Speech Recognition. In Advances in Neural Information Processing Systems 28, C. Cortes, N. D. Lawrence, D. D. Lee, M. Sugiyama, and R. Garnett (Eds.). Curran Associates, Inc., 577–585.

- Chung et al. (2014) Junyoung Chung, Caglar Gulcehre, Kyunghyun Cho, and Yoshua Bengio. 2014. Empirical evaluation of gated recurrent neural networks on sequence modeling. In NIPS 2014 Workshop on Deep Learning, December 2014.

- Defferrard et al. (2016) Michaël Defferrard, Xavier Bresson, and Pierre Vandergheynst. 2016. Convolutional Neural Networks on Graphs with Fast Localized Spectral Filtering. In Advances in Neural Information Processing Systems 29, D. D. Lee, M. Sugiyama, U. V. Luxburg, I. Guyon, and R. Garnett (Eds.). Curran Associates, Inc., 3844–3852.

- Gilmer et al. (2017) Justin Gilmer, Samuel S. Schoenholz, Patrick F. Riley, Oriol Vinyals, and George E. Dahl. 2017. Neural Message Passing for Quantum Chemistry (ICML’17). JMLR.org, 1263–1272.

- Goldberg (2017) Yoav Goldberg. 2017. Neural network methods for natural language processing. Synthesis Lectures on Human Language Technologies 10, 1 (2017), 1–309.

- Gori et al. (2005) M. Gori, G. Monfardini, and F. Scarselli. 2005. A new model for learning in graph domains. In Proceedings. 2005 IEEE International Joint Conference on Neural Networks, 2005., Vol. 2. 729–734 vol. 2.

- Goyal and Ferrara (2018) Palash Goyal and Emilio Ferrara. 2018. Graph embedding techniques, applications, and performance: A survey. nowledge-Based Systems 151 (2018), 78 – 94.

- Hamilton et al. (2017) Will Hamilton, Zhitao Ying, and Jure Leskovec. 2017. Inductive Representation Learning on Large Graphs. In Advances in Neural Information Processing Systems 30, I. Guyon, U. V. Luxburg, S. Bengio, H. Wallach, R. Fergus, S. Vishwanathan, and R. Garnett (Eds.). Curran Associates, Inc., 1024–1034.

- Hershey et al. (2017) S. Hershey, S. Chaudhuri, D. P. W. Ellis, J. F. Gemmeke, A. Jansen, R. C. Moore, M. Plakal, D. Platt, R. A. Saurous, B. Seybold, M. Slaney, R. J. Weiss, and K. Wilson. 2017. CNN architectures for large-scale audio classification. In 2017 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). 131–135.

- Kipf and Welling (2017) Thomas N. Kipf and Max Welling. 2017. Semi-Supervised Classification with Graph Convolutional Networks. (April 2017).

- Krizhevsky et al. (2012) Alex Krizhevsky, Ilya Sutskever, and Geoffrey E Hinton. 2012. ImageNet Classification with Deep Convolutional Neural Networks. In Advances in Neural Information Processing Systems 25, F. Pereira, C. J. C. Burges, L. Bottou, and K. Q. Weinberger (Eds.). Curran Associates, Inc., 1097–1105.

- Kumar et al. (2016) Ankit Kumar, Ozan Irsoy, Peter Ondruska, Mohit Iyyer, James Bradbury, Ishaan Gulrajani, Victor Zhong, Romain Paulus, and Richard Socher. 2016. Ask me anything: Dynamic memory networks for natural language processing. In International conference on machine learning. 1378–1387.

- Kurt et al. (2008) Imran Kurt, Mevlut Ture, and A. Turhan Kurum. 2008. Comparing performances of logistic regression, classification and regression tree, and neural networks for predicting coronary artery disease. Expert Systems with Applications 34, 1 (2008), 366 – 374.

- Li et al. (2016) Yujia Li, Daniel Tarlow, Marc Brockschmidt, and Richard S. Zemel. 2016. Gated Graph Sequence Neural Networks. In 4th International Conference on Learning Representations, ICLR 2016, San Juan, Puerto Rico, May 2-4, 2016, Conference Track Proceedings.

- Litwin and Stoeckel (2016) Howard Litwin and Kimberly J. Stoeckel. 2016. Social Network, Activity Participation, and Cognition: A Complex Relationship. Research on Aging 38, 1 (2016), 76–97. PMID: 25878191.

- Micheli (2009) A. Micheli. 2009. Neural Network for Graphs: A Contextual Constructive Approach. IEEE Transactions on Neural Networks 20, 3 (March 2009), 498–511.

- Milo et al. (2002) R. Milo, S. Shen-Orr, S. Itzkovitz, N. Kashtan, D. Chklovskii, and U. Alon. 2002. Network Motifs: Simple Building Blocks of Complex Networks. Science 298, 5594 (2002), 824–827.

- Nguyen et al. (2019) Duc Minh Nguyen, Tien Huu Do, Robert Calderbank, and Nikos Deligiannis. 2019. Fake news detection using deep markov random fields. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers). 1391–1400.

- Ortega et al. (2018) A. Ortega, P. Frossard, J. Kovačević, J. M. F. Moura, and P. Vandergheynst. 2018. Graph Signal Processing: Overview, Challenges, and Applications. Proc. IEEE 106, 5 (May 2018), 808–828.

- Perraudin and Vandergheynst (2017) N. Perraudin and P. Vandergheynst. 2017. Stationary Signal Processing on Graphs. IEEE Transactions on Signal Processing 65, 13 (July 2017), 3462–3477.

- Qiao et al. (2018) Lishan Qiao, Limei Zhang, Songcan Chen, and Dinggang Shen. 2018. Data-driven graph construction and graph learning: A review. Neurocomputing 312 (2018), 336 – 351.

- Rossi et al. (2018) Ryan A Rossi, Nesreen K Ahmed, and Eunyee Koh. 2018. Higher-order network representation learning. In Companion Proceedings of the The Web Conference 2018. International World Wide Web Conferences Steering Committee, 3–4.

- Rossi et al. (2018) R. A. Rossi, R. Zhou, and N. Ahmed. 2018. Deep Inductive Graph Representation Learning. IEEE Transactions on Knowledge and Data Engineering (2018), 1–1.

- Sak et al. (2014) Haşim Sak, Andrew Senior, and Françoise Beaufays. 2014. Long short-term memory recurrent neural network architectures for large scale acoustic modeling. In Fifteenth annual conference of the international speech communication association.

- Sandryhaila and Moura (2013) A. Sandryhaila and J. M. F. Moura. 2013. Discrete signal processing on graphs: Graph fourier transform. In 2013 IEEE International Conference on Acoustics, Speech and Signal Processing. 6167–6170.

- Scarselli et al. (2009) F. Scarselli, M. Gori, A. C. Tsoi, M. Hagenbuchner, and G. Monfardini. 2009. The Graph Neural Network Model. IEEE Transactions on Neural Networks 20, 1 (Jan 2009), 61–80.

- Seo et al. (2018) Youngjoo Seo, Michaël Defferrard, Pierre Vandergheynst, and Xavier Bresson. 2018. Structured Sequence Modeling with Graph Convolutional Recurrent Networks. In Neural Information Processing, Long Cheng, Andrew Chi Sing Leung, and Seiichi Ozawa (Eds.). Springer International Publishing, Cham, 362–373.

- Singh et al. (2016) A. Singh, N. Thakur, and A. Sharma. 2016. A review of supervised machine learning algorithms. In 2016 3rd International Conference on Computing for Sustainable Global Development (INDIACom). 1310–1315.

- Velickovic et al. (2018) Petar Velickovic, Guillem Cucurull, Arantxa Casanova, Adriana Romero, Pietro Liò, and Yoshua Bengio. 2018. Graph Attention Networks. In 6th International Conference on Learning Representations, ICLR 2018, Vancouver, BC, Canada, April 30 - May 3, 2018, Conference Track Proceedings.

- Vorgia et al. (2017) Frosso Vorgia, Ioannis Triantafyllou, and Alexandros Koulouris. 2017. Hypatia Digital Library: A Text Classification Approach Based on Abstracts. In Strategic Innovative Marketing, Androniki Kavoura, Damianos P. Sakas, and Petros Tomaras (Eds.). Springer International Publishing, Cham, 727–733.

- Vu et al. (2016) N. T. Vu, P. Gupta, H. Adel, and H. Schütze. 2016. Bi-directional recurrent neural network with ranking loss for spoken language understanding. In 2016 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). 6060–6064.

- Wang et al. (2019) W. Wang, J. Liu, Z. Yang, X. Kong, and F. Xia. 2019. Sustainable Collaborator Recommendation Based on Conference Closure. IEEE Transactions on Computational Social Systems 6, 2 (April 2019), 311–322.

- White et al. (2016) Leroy White, Graeme Currie, and Andy Lockett. 2016. Pluralized leadership in complex organizations: Exploring the cross network effects between formal and informal leadership relations. The Leadership Quarterly 27, 2 (2016), 280–297.

- Wu et al. (2015) Jian Wu, Kyle Mark Williams, Hung-Hsuan Chen, Madian Khabsa, Cornelia Caragea, Suppawong Tuarob, Alexander G Ororbia, Douglas Jordan, Prasenjit Mitra, and C Lee Giles. 2015. Citeseerx: Ai in a digital library search engine. AI Magazine 36, 3 (2015), 35–48.

- Xiong et al. (2019) Fu Xiong, Yang Xiao, Zhiguo Cao, Kaicheng Gong, Zhiwen Fang, and Joey Tianyi Zhou. 2019. Good practices on building effective CNN baseline model for person re-identification. In Tenth International Conference on Graphics and Image Processing (ICGIP 2018), Vol. 11069. International Society for Optics and Photonics, 110690I.

- Xu et al. (2018) Haiyun Xu, Kun Dong, Rui Luo, and Chao Wang. 2018. Research on Topic Recognition Based on Multivariate Relation Fusion. In 23rd International Conference on Science and Technology Indicators (STI 2018), September 12-14, 2018, Leiden, The Netherlands. Centre for Science and Technology Studies (CWTS).

- Yu et al. (2019a) S. Yu, F. Xia, and H. Liu. 2019a. Academic Team Formulation Based on Liebig’s Barrel: Discovery of Anticask Effect. IEEE Transactions on Computational Social Systems 6, 5 (Oct 2019), 1083–1094.

- Yu et al. (2017) S. Yu, F. Xia, K. Zhang, Z. Ning, J. Zhong, and C. Liu. 2017. Team Recognition in Big Scholarly Data: Exploring Collaboration Intensity. In 3rd Intl Conf on Big Data Intelligence and Computing(DataCom). 925–932.

- Yu et al. (2019b) S. Yu, J. Xu, C. Zhang, F. Xia, Z. Almakhadmeh, and A. Tolba. 2019b. Motifs in Big Networks: Methods and Applications. IEEE Access 7 (2019), 183322–183338.

- Zhang et al. (2017) Kai Zhang, Wangmeng Zuo, Shuhang Gu, and Lei Zhang. 2017. Learning Deep CNN Denoiser Prior for Image Restoration. In The IEEE Conference on Computer Vision and Pattern Recognition (CVPR).

- Zhang et al. (2018) Lihua Zhang, Jiachao Zhang, Guangming Zeng, Haoran Dong, Yaoning Chen, Chao Huang, Yuan Zhu, Rui Xu, Yujun Cheng, Kunjie Hou, Weicheng Cao, and Wei Fan. 2018. Multivariate relationships between microbial communities and environmental variables during co-composting of sewage sludge and agricultural waste in the presence of PVP-AgNPs. Bioresource Technology 261 (2018), 10 – 18.