Multivariate Normality Test with Copula Entropy

Abstract

In this paper, we propose a multivariate normality test based on copula entropy. The test statistic is defined as the difference between the copula entropy of the unknown distribution and that of the Gaussian distribution with the same covariances. An estimator of the test statistic is presented based on the nonparametric estimator of copula entropy. Two simulation experiments were conducted to compare the proposed test with five existing ones. The experimental results show that the proposed statistic reflects the degree of non-normality monotonically in both experiments, an advantage over the other tests.

Keywords: Copula Entropy; Multivariate Normality; Hypothesis Test

1 Introduction

The Gaussian distribution is one of the most fundamental probability distributions in probability and statistics. Normality is assumed in many statistical models and methods, and therefore the normality test is a basic tool in practice. There is abundant literature on such tests; see [1, 2] for reviews of this topic.

We focus on multivariate normality tests in this paper. Many existing tests of multivariate normality have been proposed based on different concepts, such as the characteristic function [3], moments [4], skewness and kurtosis [5], energy distance [6], entropy [7], and Wasserstein distance [8]. The statistics of these tests are defined as the difference between a property of the unknown distribution and the same property of the Gaussian distribution. For example, the entropy-based test statistic is the difference between the entropies of the unknown distribution and the Gaussian distribution, based on the fact that, among distributions with the same covariance, the Gaussian distribution has the maximum entropy.

Copula Entropy (CE) is a kind of Shannon entropy defined with the copula function [9]. It is a multivariate measure of statistical independence with several good properties: it is symmetric, non-positive (0 if and only if the variables are independent), invariant to monotonic transformations, and, in particular, equivalent to the correlation coefficient in Gaussian cases. It has been proved that CE is equivalent to the negative of mutual information in information theory.

In this paper, we propose a multivariate normality test with copula entropy. The test statistic is defined as the difference between the CE of the unknown distribution and that of the Gaussian distribution. It is well known that, given the covariance matrix, the CE of a Gaussian distribution can be easily derived analytically. Therefore, the test statistic can be obtained by simply estimating the CE of the unknown distribution, which can be done with the non-parametric estimator of CE proposed in [9].

2 Copula Entropy

Copula theory is a probabilistic theory on the representation of multivariate dependence [10, 11]. According to Sklar’s theorem [12], any multivariate density function can be represented as the product of its marginal densities and a copula density function, which represents the dependence structure among the random variables.

With copula theory, Ma and Sun [9] defined a new mathematical concept, named Copula Entropy, as follows:

Definition 1 (Copula Entropy).

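Let $\mathbf{X}$ be random variables with marginals $\mathbf{u}$ and copula density function $c(\mathbf{u})$. The CE of $\mathbf{X}$ is defined as

$$H_c(\mathbf{X}) = -\int_{\mathbf{u}} c(\mathbf{u}) \log c(\mathbf{u}) \, d\mathbf{u}. \tag{1}$$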
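The link between this definition and mutual information can be made explicit with a short derivation. The following is only a sketch, assuming that all densities exist. The symbols are notation chosen here for illustration: $p$ is the joint density, $p_i$ and $F_i$ are the marginal densities and distribution functions, $c$ is the copula density, $I$ is the mutual information, and $H_c$ is the CE.

$$
\begin{aligned}
p(\mathbf{x}) &= c(\mathbf{u}) \prod_{i} p_i(x_i), \qquad u_i = F_i(x_i), \\
I(\mathbf{X}) &= \int p(\mathbf{x}) \log \frac{p(\mathbf{x})}{\prod_{i} p_i(x_i)} \, d\mathbf{x}
= \int p(\mathbf{x}) \log c(\mathbf{u}) \, d\mathbf{x}
= \int c(\mathbf{u}) \log c(\mathbf{u}) \, d\mathbf{u}
= -H_c(\mathbf{X}).
\end{aligned}
$$

The third equality uses the change of variables $d\mathbf{u} = \prod_{i} p_i(x_i) \, d\mathbf{x}$, so that $p(\mathbf{x}) \, d\mathbf{x} = c(\mathbf{u}) \, d\mathbf{u}$. Since mutual information is non-negative, this also shows why CE is non-positive.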

A non-parametric estimator of CE was also proposed in [9]. It consists of two simple steps:

1. estimating the empirical copula density function;
2. estimating the entropy of the estimated empirical copula density.

The empirical copula density in the first step can be easily derived from rank statistics. Given the estimated empirical copula density, the second step is essentially an entropy estimation problem, which can be tackled with the KSG estimation method [13]. Together, the two steps give a non-parametric method for estimating CE [9].
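The two steps can be sketched in a few lines of pure Python. This is a minimal illustration, not the implementation used in the experiments: the function names are hypothetical, and the entropy step assumes the k-nearest-neighbour (Kozachenko-Leonenko type) entropy formula with the maximum norm described in [13], which may differ in detail from what the copent package does.

```python
import math
import random


def empirical_copula(samples):
    """Step 1: replace each column by its normalized ranks in (0, 1]."""
    n, d = len(samples), len(samples[0])
    u = [[0.0] * d for _ in range(n)]
    for j in range(d):
        order = sorted(range(n), key=lambda i: samples[i][j])
        for rank, i in enumerate(order, start=1):
            u[i][j] = rank / n
    return u


def digamma_int(m):
    """Digamma at a positive integer: psi(m) = -gamma + sum_{i=1}^{m-1} 1/i."""
    euler_gamma = 0.5772156649015329
    return -euler_gamma + sum(1.0 / i for i in range(1, m))


def knn_entropy(points, k=3):
    """Step 2: kNN entropy estimate in nats, using the maximum norm."""
    n, d = len(points), len(points[0])
    log_eps_sum = 0.0
    for i, p in enumerate(points):
        dists = sorted(
            max(abs(a - b) for a, b in zip(p, q))
            for j, q in enumerate(points) if j != i
        )
        # eps is twice the distance to the k-th nearest neighbour
        log_eps_sum += math.log(2.0 * dists[k - 1])
    return -digamma_int(k) + digamma_int(n) + d * log_eps_sum / n


def copula_entropy(samples, k=3):
    """Estimated CE: entropy of the rank-transformed (empirical copula) data."""
    return knn_entropy(empirical_copula(samples), k)


rng = random.Random(0)
independent = [(rng.random(), rng.random()) for _ in range(200)]
print(copula_entropy(independent))  # should be close to 0 for independent data
```

The neighbour search here is quadratic in the sample size, which is acceptable for a few hundred points; a tree-based search would be the natural choice for larger samples.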

3 Test on Multivariate Normality

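Given a random vector $\mathbf{X}$, the hypotheses for the multivariate normality test are

$$H_0: \mathbf{X} \sim N(\mu, \Sigma) \quad \text{vs.} \quad H_1: \mathbf{X} \not\sim N(\mu, \Sigma), \tag{2}$$

where $N$ denotes the normal distribution, and $\mu$ and $\Sigma$ are the mean and the covariance matrix of $\mathbf{X}$.

The test statistic for $H_0$ is defined as follows:

$$T_{ce} = H_c(\mathbf{X}) - H_c(\mathbf{X}_n), \tag{3}$$

where $H_c(\cdot)$ denotes the CE and $\mathbf{X}_n$ is the Gaussian random vector with the same covariances as $\mathbf{X}$. The first term in (3) can be estimated with the nonparametric CE estimator. The second term in (3) can be derived analytically as

$$H_c(\mathbf{X}_n) = \frac{1}{2} \log |\Sigma|. \tag{4}$$

It is easy to see that $T_{ce} = 0$ for normal distributions.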
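The analytic Gaussian term and the resulting statistic in (3) and (4) can be illustrated as follows. This is a sketch under stated assumptions rather than the paper's implementation: the function names are hypothetical, and the covariance matrix is normalised to a correlation matrix before the determinant is taken. That normalisation is an assumption of this sketch, motivated by CE being invariant to monotonic transformations of the marginals; the two forms coincide when the marginals have unit variance.

```python
import math


def determinant(matrix):
    """Determinant by Gaussian elimination with partial pivoting."""
    a = [row[:] for row in matrix]
    n = len(a)
    det = 1.0
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(a[r][col]))
        if a[pivot][col] == 0.0:
            return 0.0
        if pivot != col:
            a[col], a[pivot] = a[pivot], a[col]
            det = -det
        det *= a[col][col]
        for r in range(col + 1, n):
            factor = a[r][col] / a[col][col]
            for c in range(col, n):
                a[r][c] -= factor * a[col][c]
    return det


def gaussian_copula_entropy(cov):
    """CE of a Gaussian vector: 0.5 * log det(R), R being the correlation matrix."""
    n = len(cov)
    sd = [math.sqrt(cov[i][i]) for i in range(n)]
    corr = [[cov[i][j] / (sd[i] * sd[j]) for j in range(n)] for i in range(n)]
    return 0.5 * math.log(determinant(corr))


def normality_statistic(ce_estimate, cov):
    """Estimated CE minus the CE of the Gaussian with the same covariances."""
    return ce_estimate - gaussian_copula_entropy(cov)


# Bivariate example: standard deviations 2 and correlation 0.8
cov = [[4.0, 3.2], [3.2, 4.0]]
print(gaussian_copula_entropy(cov))  # equals 0.5 * log(1 - 0.8**2)
```

In practice `ce_estimate` would come from a non-parametric CE estimator applied to the sample, and `cov` from the sample covariance matrix.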

4 Simulations

We compared our test with several existing tests, including the tests proposed by Mardia [5], Royston [14], Henze and Zirkler [15], Doornik and Hansen [16], and the energy distance based test by Rizzo and Székely [6].

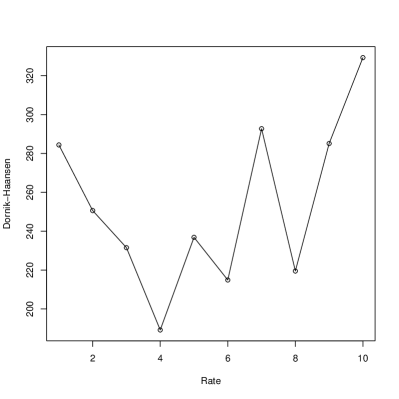

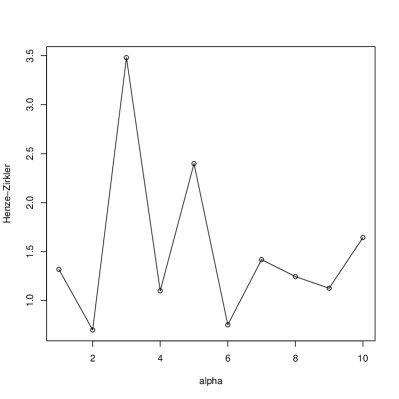

Two simulation experiments were conducted, each with data generated from a bivariate copula function combined with specified marginals. In the first simulation, the data were generated from a bivariate normal copula with two marginals: one is a normal distribution, and the other is an exponential distribution. The parameter of the bivariate normal copula is 0.8, and the mean and standard deviation of the normal marginal are 0 and 2, respectively. The rate of the exponential distribution is varied from 1 to 10. In the second simulation, the data were generated from a bivariate Gumbel copula with two normal marginals. The mean and standard deviation of the two normal marginals are 0 and 2, respectively. The parameter of the bivariate Gumbel copula is varied from 1 to 10. These two simulations generate different kinds of non-normality: one through a non-normal marginal and the other through a non-normal copula. The sample size is 800 for all the simulations. Each simulation was run 10 times, and the test statistics were averaged over the runs.
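The data-generating process of the first simulation can be sketched as follows. This is an illustrative pure-Python version, not the code used in the experiments (which relied on the R package copula); the function name and the `seed` argument are hypothetical. It draws from a bivariate normal copula by correlating two standard normal variables, then applies the normal and exponential quantile functions to the resulting uniforms.

```python
import math
import random
from statistics import NormalDist


def sample_normal_copula_mixed(n, rho=0.8, mean=0.0, sd=2.0, rate=1.0, seed=None):
    """Draw n pairs from a normal copula with normal and exponential marginals."""
    rng = random.Random(seed)
    std_normal = NormalDist()
    data = []
    for _ in range(n):
        # Correlated standard normals define the normal copula
        z1 = rng.gauss(0.0, 1.0)
        z2 = rho * z1 + math.sqrt(1.0 - rho * rho) * rng.gauss(0.0, 1.0)
        # Uniform coordinate of the second variable, kept strictly below 1
        u2 = min(std_normal.cdf(z2), 1.0 - 2.0 ** -53)
        # Normal marginal: the normal quantile of cdf(z1) is mean + sd * z1
        x1 = mean + sd * z1
        # Exponential marginal via its quantile function
        x2 = -math.log1p(-u2) / rate
        data.append((x1, x2))
    return data


sample = sample_normal_copula_mixed(800, rate=2.0, seed=1)
print(len(sample), sample[0])
```

Sampling from the Gumbel copula of the second simulation needs a different construction and is not covered by this sketch.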

The R package copula was used for bivariate normal and Gumbel copula. The R package copent was used for estimating CE in the experiments. The R package MVN was used for the implementations of the other tests.

The results of the two simulation experiments are shown in Figure 1 and Figure 2. They show that the estimated CE-based test statistic reflects the right monotonicity of normality in both experiments. The other five tests did not present the monotonicity of normality in the first experiment, and four of them (Mardia’s, Royston’s, DH, and energy distance) presented the right monotonicity of normality in the second experiment.

5 Conclusions

In this paper, we propose a multivariate normality test based on copula entropy. The test statistic is defined as the difference between the copula entropy of the unknown distribution and that of the Gaussian distribution with the same covariances. An estimator of the test statistic is presented based on the nonparametric estimator of copula entropy. Two simulation experiments were conducted to compare the proposed test with five existing ones. The experimental results show that the proposed statistic reflects the degree of non-normality monotonically in both experiments, an advantage over the other tests.

References

- [1] K. V. Mardia. Tests of univariate and multivariate normality. In Analysis of Variance, volume 1 of Handbook of Statistics, pages 279–320. Elsevier, 1980.

- [2] Norbert Henze. Invariant tests for multivariate normality: a critical review. Statistical Papers, 43(4):467–506, October 2002.

- [3] L. Baringhaus and N. Henze. A consistent test for multivariate normality based on the empirical characteristic function. Metrika, 35(1):339–348, December 1988.

- [4] Norbert Henze and María Dolores Jiménez-Gamero. A new class of tests for multinormality with i.i.d. and GARCH data based on the empirical moment generating function. TEST, 28(2):499–521, June 2019.

- [5] K. V. Mardia. Measures of multivariate skewness and kurtosis with applications. Biometrika, 57(3):519–530, December 1970.

- [6] Maria L. Rizzo and Gábor J. Székely. Energy distance. WIREs Computational Statistics, 8(1):27–38, 2016.

- [7] Oldrich Vasicek. A test for normality based on sample entropy. Journal of the Royal Statistical Society: Series B (Methodological), 38(1):54–59, 1976.

- [8] Marc Hallin, Gilles Mordant, and Johan Segers. Multivariate goodness-of-fit tests based on Wasserstein distance. arXiv preprint arXiv:2003.06684, 2021.

- [9] Jian Ma and Zengqi Sun. Mutual information is copula entropy. Tsinghua Science & Technology, 16(1):51–54, 2011.

- [10] Roger B Nelsen. An introduction to copulas. Springer Science & Business Media, 2007.

- [11] Harry Joe. Dependence modeling with copulas. CRC press, 2014.

- [12] Abe Sklar. Fonctions de répartition à n dimensions et leurs marges. Publications de l’Institut de statistique de l’Université de Paris, 8:229–231, 1959.

- [13] Alexander Kraskov, Harald Stögbauer, and Peter Grassberger. Estimating mutual information. Physical Review E, 69(6):066138, 2004.

- [14] J. Patrick Royston. Some techniques for assessing multivariate normality based on the Shapiro-Wilk W. Journal of the Royal Statistical Society: Series C (Applied Statistics), 32(2):121–133, 1983.

- [15] N. Henze and B. Zirkler. A class of invariant consistent tests for multivariate normality. Communications in Statistics - Theory and Methods, 19(10):3595–3617, 1990.

- [16] Jurgen A. Doornik and Henrik Hansen. An omnibus test for univariate and multivariate normality. Oxford Bulletin of Economics and Statistics, 70(s1):927–939, 2008.