* Equal Contributors.

Senior authors.

** Corresponding author. Email: [email protected]

Multiscale Encoder and Omni-Dimensional Dynamic Convolution Enrichment in nnU-Net for Brain Tumor Segmentation

Abstract

Brain tumor segmentation plays a crucial role in computer-aided diagnosis. This study introduces a segmentation algorithm based on a modified nnU-Net architecture. In the encoder of nnU-Net, we replace conventional convolution layers with omni-dimensional dynamic convolution (ODConv) layers to improve feature representation. In addition, we propose a multi-scale attention strategy that combines complementary information extracted from the input at different scales. We evaluate the model on several datasets from the BraTS-2023 challenge. Integrating ODConv layers and multi-scale features substantially improves the performance of nnU-Net across multiple tumor segmentation datasets, and in the validation phase the proposed model attains lesion-wise Dice scores of 0.835 (ET), 0.849 (TC), and 0.756 (WT) on the BraTS-Africa dataset. The ODConv source code (https://github.com/i-sahajmistry/nnUNet_BraTS2023/blob/master/nnunet/ODConv.py) and the full training code (https://github.com/i-sahajmistry/nnUNet_BraTS2023) are available on GitHub.

Keywords:

BraTS-2023, deep learning, brain, segmentation, Adult Glioma, BraTS-Africa, Meningioma, Brain Metastases, Pediatric Tumors, nnU-Net, ODConv3D, multiscale, lesion, medical imaging

1 Introduction

Glioblastomas (GBM) are the most prevalent and aggressive primary brain tumors in adults. The pronounced morphological and histological diversity of gliomas, which contain distinct zones such as active tumor, cystic and necrotic structures, and edema and invasion areas, complicates the precise identification of the tumor and its sub-regions. Manual segmentation of brain tumors demands substantial time and resources and is susceptible to errors arising from both inter- and intra-observer variability [16]. Consequently, automated methods that accurately locate gliomas and their sub-regions in MRI scans are of paramount importance for diagnosis and treatment [18].

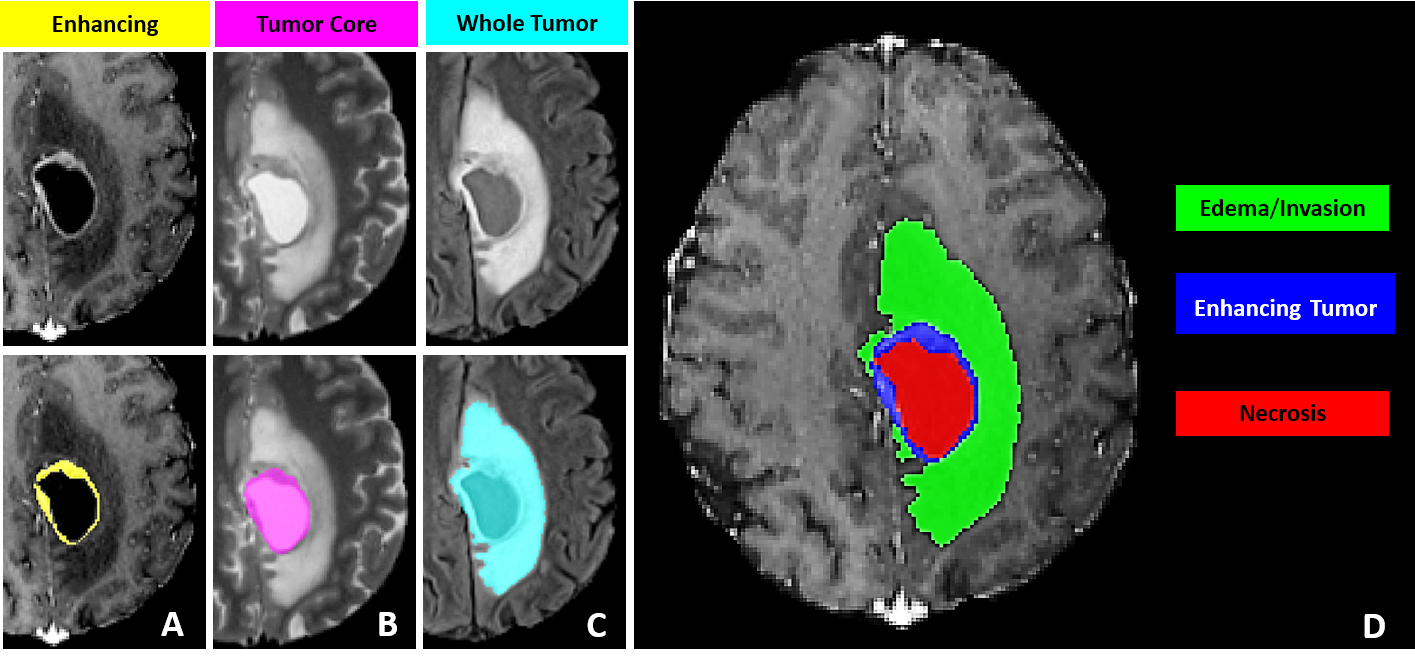

The BraTS datasets and challenges offer an extensive, publicly available repository of labeled brain MR images, facilitating the development of cutting-edge solutions in neuroradiology. The BraTS-2023 challenge provides four mpMRI scans for each patient: native T1-weighted (T1N), post-contrast T1-weighted (T1C), T2-weighted (T2W), and T2 Fluid Attenuated Inversion Recovery (T2F) volumes. The label mask comprises three categories: Edema (ED), Enhancing Tumor (ET), and Necrosis (NE). The Tumor Core (TC) is defined as the union of ET and NE, and the Whole Tumor (WT) as the union of ED, ET, and NE.
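The nested evaluation regions can be derived from a label map with simple set operations. The NumPy sketch below assumes the BraTS 2023 label convention 1 = necrosis (NE), 2 = edema (ED), 3 = enhancing tumor (ET); this mapping is an assumption of the example and should be checked against the label values of the specific dataset release.

```python
import numpy as np

# Assumed BraTS 2023 label convention (verify against the dataset release):
# 0 = background, 1 = necrosis (NE), 2 = edema (ED), 3 = enhancing tumor (ET)
NE, ED, ET = 1, 2, 3


def region_masks(label_map: np.ndarray) -> dict:
    """Derive the nested BraTS evaluation regions from an integer label map."""
    et = label_map == ET                   # enhancing tumor
    tc = np.isin(label_map, (NE, ET))      # tumor core = ET + NE
    wt = np.isin(label_map, (NE, ED, ET))  # whole tumor = ED + ET + NE
    return {"ET": et, "TC": tc, "WT": wt}


# Toy 1x1x5 volume: background, edema, necrosis, enhancing, edema
toy = np.array([[[0, 2, 1, 3, 2]]])
masks = region_masks(toy)
print({name: int(m.sum()) for name, m in masks.items()})  # {'ET': 1, 'TC': 2, 'WT': 4}
```

Because each region is a superset of the previous one, ET is contained in TC and TC in WT for any label map.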

Prior BraTS challenges focused on adult diffuse glioma [14, 4, 5, 2]. The current edition of BraTS comprises a series of challenges covering a range of tumor types, incomplete data, and technical factors. This paper focuses mainly on two of them, (a) the Brain Metastases dataset and (b) the BraTS-Africa dataset, for which our validation-phase results in the BraTS 2023 Challenge were competitive with other submissions.

Our proposed approach uses a modified nnU-Net [7] architecture comprising (a) two encoders, both inspired by the nnU-Net [7] framework (see Fig. 2), and (b) Omni-dimensional Dynamic Convolution (ODConv) [12] layers, originally designed for 2D images, which we adapt to 3D images and refer to as ODConv3D.

By integrating two encoders into the network, we process the same image simultaneously at two distinct scales, which lets us extract features spanning a wide range of complexities. ODConv uses a multi-dimensional attention mechanism to learn four complementary attentions for the convolutional kernels, covering all four dimensions of the kernel space (spatial kernel size, input channels, output channels, and number of kernels). This dynamic convolution design enables the model to capture richer spatial and channel-wise information. Our results show that ODConv3D outperforms the conventional convolutional layers of nnU-Net [7] on the Brain Metastases dataset. To submit our code for evaluation, we package it as an MLCube for the MedPerf platform [9].

2 Materials

2.1 Datasets

BraTS 2023 introduces five diverse challenges, each encompassing a distinct aspect of Brain Tumor Segmentation. Each challenge corresponds to datasets targeting specific types of brain tumors, namely: Adult Glioma, BraTS-Africa (Glioma from Sub-Saharan Africa), Meningioma, Brain Metastases, and Pediatric Tumors.

The BraTS dataset comprises retrospective multi-parametric magnetic resonance imaging (mpMRI) scans of brain tumors gathered from diverse medical institutions. These scans were acquired using varied equipment and imaging protocols, leading to a broad spectrum of image quality that reflects the diverse clinical approaches across different institutions. Annotations outlining each tumor sub-region were meticulously reviewed and validated by expert neuroradiologists.

As is customary when evaluating machine learning algorithms, the BraTS 2023 data are divided into training, validation, and testing sets. The training data include ground truth labels. Participants also receive the validation data, but without ground truth labels. The testing data are kept confidential during the challenge and serve as the evaluation benchmark.

2.1.1 Adult Glioma

The dataset comprises 5,880 MRI scans from a cohort of 1,470 patients diagnosed with brain diffuse glioma [3], i.e., four scans per patient. Of these cases, 1,251 are designated for training and 219 for validation. The data are the same as those compiled for the BraTS 2021 Challenge. The dataset focuses on glioblastoma (GBM), the most prevalent and aggressive cancer originating in the brain. GBMs are grade IV tumors and display an extraordinary degree of heterogeneity in appearance, morphology, and histology. They typically originate in the cerebral white matter, grow rapidly, and can reach considerable sizes before causing noticeable symptoms. Given the challenging nature of GBM and its dire prognosis, with a median survival of approximately 15 months, this dataset is a vital resource for advancing research, diagnosis, and treatment strategies.

2.1.2 BraTS-Africa

The Sub-Saharan Africa dataset [1], with a training cohort of 60 cases, is a specialized collection of glioma cases from patients in the Sub-Saharan Africa region. It is distinctive because of the lower-grade MRI technology used and the limited availability of MRI scanners in the region. Consequently, its scans have lower image contrast and resolution, which obscures distinguishing tumor features and complicates segmentation. In addition, patients in this dataset often present with comorbidities such as HIV/AIDS, malaria, and malnutrition. These underlying conditions can alter the appearance of brain tumors in MRI scans, further complicating the diagnostic process.

2.1.3 Meningioma

The dataset contains 1,000 cases designated for training and an additional 141 cases set aside for validation. These cases are meningiomas [11], tumors that originate from the meninges, the protective membranes that cover the brain and spinal cord. Meningiomas are the most frequently occurring primary intracranial tumors in adults, and the health complications and mortality they can cause underline their clinical importance. This dataset was introduced as part of the BraTS 2023 challenge.

2.1.4 Brain Metastases

The training portion of the Brain Metastases dataset [15] comprises 165 samples, and the validation set contains an additional 31 samples of multiparametric MRI (mpMRI) scans. Unlike gliomas, which tend to be readily detectable on initial scans because of their larger size, brain metastases are highly heterogeneous in size. They span a wide range of sizes, often appearing as small lesions, and can arise at various locations throughout the brain, which further diversifies their appearance.

A notable aspect of this dataset is the prevalence of brain metastases smaller than 10 mm in diameter: these small lesions occur more frequently than larger ones, underscoring their clinical significance.

2.1.5 Pediatric Tumors

Although pediatric tumors [10] share certain similarities with adult tumors, they differ notably in both their imaging appearance and their clinical presentation. Both high-grade gliomas, such as GBMs, and pediatric diffuse midline gliomas (DMGs) have poor survival rates. DMGs are roughly three times rarer than GBMs. While GBMs typically arise in the frontal or temporal lobes of patients in their 60s, DMGs are predominantly found in the pons and are most frequently diagnosed between the ages of 5 and 10.

GBMs are typically characterized by an enhancing tumor region on post-gadolinium T1-weighted MRI scans, accompanied by necrotic regions. In DMGs, these imaging characteristics are less pronounced or less distinct. Pediatric brain tumors are also more often low-grade gliomas, which generally grow more slowly than adult tumors. The dataset comprises 99 training samples and 45 validation samples.

2.2 Annotation Method

The dataset annotations were produced by a careful process that combines automated segmentation with manual refinement by expert neuroradiologists. Initial automated segmentations are generated with established methods [8, 13, 6], such as DeepMedic [8] and nnU-Net [6], and then fused with the STAPLE label fusion technique [17] to reduce the errors of individual methods. Expert annotators refine these segmentations using the multimodal MRI scans and the ITK-SNAP software [19], and senior neuroradiologists review the refined segmentations to ensure accuracy. This iterative process yields annotations that follow the BraTS-defined sub-regions: enhancing tumor (ET), tumor core (TC), and whole tumor (WT), as described in Fig. 1. The process acknowledges the difficulty of defining radiological tumor boundaries and provides a standardized, dependable ground truth for assessing segmentation algorithms.
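The STAPLE fusion step [17] estimates a consensus segmentation together with each rater's sensitivity and specificity by expectation-maximization. The NumPy sketch below shows the binary (single-label) case with a fixed global foreground prior. It is a simplified illustration, not the implementation used to build the BraTS ground truth; fusing the tumor sub-regions jointly would need the multi-class extension.

```python
import numpy as np


def staple_binary(decisions: np.ndarray, max_iter: int = 50, tol: float = 1e-6):
    """Binary STAPLE via expectation-maximization.

    decisions: (n_voxels, n_raters) array of 0/1 segmentations.
    Returns (posterior foreground probability per voxel, sensitivities, specificities).
    """
    d = decisions.astype(float)
    n_raters = d.shape[1]
    prior = d.mean()                  # global foreground prior, kept fixed
    p = np.full(n_raters, 0.99)       # initial sensitivities
    q = np.full(n_raters, 0.99)       # initial specificities
    w = d.mean(axis=1)
    for _ in range(max_iter):
        # E-step: posterior probability that each voxel is truly foreground
        a = prior * np.prod(np.where(d == 1, p, 1 - p), axis=1)
        b = (1 - prior) * np.prod(np.where(d == 0, q, 1 - q), axis=1)
        w_new = a / (a + b)
        # M-step: re-estimate each rater's sensitivity and specificity
        p = (w_new[:, None] * d).sum(axis=0) / w_new.sum()
        q = ((1 - w_new)[:, None] * (1 - d)).sum(axis=0) / (1 - w_new).sum()
        # Keep the estimates strictly inside (0, 1) to avoid 0/0 in the E-step
        p, q = np.clip(p, 1e-6, 1 - 1e-6), np.clip(q, 1e-6, 1 - 1e-6)
        converged = np.max(np.abs(w_new - w)) < tol
        w = w_new
        if converged:
            break
    return w, p, q


# Three raters that agree everywhere except for one voxel
rng = np.random.default_rng(0)
truth = (rng.random(200) > 0.7).astype(int)
raters = np.stack([truth, truth, truth], axis=1)
raters[0, 2] = 1 - raters[0, 2]
posterior, sens, spec = staple_binary(raters)
consensus = posterior > 0.5
```

Thresholding the posterior at 0.5 gives the fused mask; the dissenting rater is outvoted here because the other two are estimated to be more reliable.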

3 Proposed Algorithm

In this section, we describe our method for segmenting brain tumors with a multi-scale, attention-based model. The algorithm combines data preprocessing, a modified nnU-Net architecture, and attention mechanisms to improve segmentation accuracy on 3D brain scans.

3.1 Preprocessing

3.1.1 Data Augmentation

Before feeding the data into our model, we apply multiple data augmentation techniques to improve its robustness: cropping, zooming, flipping, noise, blurring, and brightness and contrast changes, each applied randomly with parameters drawn from a fixed range. These augmentations are intended to help the model generalize to spatial transformations and intensity variations in the input data.
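A subset of these augmentations can be sketched in NumPy as follows. The probabilities and ranges are illustrative assumptions, not the settings used in the paper, and cropping, zooming, and blurring are omitted for brevity. The key design point is that spatial transforms (here, flips) must be applied identically to the image and its label map, while intensity transforms touch only the image.

```python
import numpy as np


def augment(image, label, rng):
    """Randomly augment a (C, D, H, W) image and its (D, H, W) label map.

    Spatial flips are applied to both arrays; intensity changes only to the image.
    Probabilities and ranges are illustrative assumptions, not the paper's settings.
    """
    image = image.astype(np.float32, copy=True)
    for axis in range(3):                          # spatial axes of the label map
        if rng.random() < 0.5:
            image = np.flip(image, axis=axis + 1)  # image has a leading channel axis
            label = np.flip(label, axis=axis)
    if rng.random() < 0.15:                        # additive Gaussian noise
        image = image + rng.normal(0.0, 0.1, size=image.shape)
    if rng.random() < 0.15:                        # brightness: global multiplicative scaling
        image = image * rng.uniform(0.7, 1.3)
    if rng.random() < 0.15:                        # contrast: stretch around each channel's mean
        mean = image.mean(axis=(1, 2, 3), keepdims=True)
        image = (image - mean) * rng.uniform(0.65, 1.5) + mean
    return image.astype(np.float32), np.ascontiguousarray(label)


rng = np.random.default_rng(0)
img = rng.random((4, 8, 8, 8))
lbl = rng.integers(0, 4, size=(8, 8, 8))
img_aug, lbl_aug = augment(img, lbl, rng)
```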

3.1.2 Patch Extraction

We extract patches of size x x x from the original brain scan images. These patches capture localized features and let the model focus on specific regions of interest. Working on patches rather than whole volumes also reduces memory requirements and computational cost during training and inference.
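The patch extraction step can be sketched as a random crop applied identically to the image and its label. The patch size is not given in this section, so `patch_size` is left as a parameter and the toy usage below uses an arbitrary (8, 8, 8). This uniform sampler is an assumption of the sketch: nnU-Net additionally oversamples patches that contain foreground, which is omitted here.

```python
import numpy as np


def random_patch(image, label, patch_size, rng):
    """Crop the same randomly placed patch from a (C, D, H, W) image and its (D, H, W) label.

    Axes shorter than the patch are zero-padded symmetrically first.
    """
    pad = [max(p - s, 0) for p, s in zip(patch_size, label.shape)]
    pad_width = [(q // 2, q - q // 2) for q in pad]
    image = np.pad(image, [(0, 0)] + pad_width)   # no padding on the channel axis
    label = np.pad(label, pad_width)
    starts = [int(rng.integers(0, s - p + 1)) for s, p in zip(label.shape, patch_size)]
    window = tuple(slice(st, st + p) for st, p in zip(starts, patch_size))
    return image[(slice(None),) + window], label[window]


rng = np.random.default_rng(1)
lbl = rng.integers(0, 4, size=(10, 12, 6))      # last axis is shorter than the patch
img = np.stack([lbl.astype(float)] * 4)         # channel 0 mirrors the label
patch_img, patch_lbl = random_patch(img, lbl, (8, 8, 8), rng)
print(patch_img.shape, patch_lbl.shape)  # (4, 8, 8, 8) (8, 8, 8)
```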

3.2 Model Architecture

3.2.1 Encoder

Our proposed model architecture consists of two encoders, each inspired by the nnU-Net [7] framework. Instead of conventional Conv3D layers, we employ Omni-dimensional Dynamic Convolution (ODConv) layers [12], originally proposed in 2D (ODConv2D), which we extend to 3D images and refer to as ODConv3D. ODConv3D uses a multi-dimensional attention mechanism that computes four attentions in parallel, one along each dimension of the kernel space: the spatial kernel positions, the input channels, the output channels (filters), and the number of candidate kernels. These complementary attentions make the convolution kernel input-dependent, allowing the model to capture richer spatial and channel-wise information.
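The kernel aggregation performed by an ODConv-style 3D layer can be sketched in NumPy as follows. Following the ODConv formulation [12], n candidate kernels are scaled by a spatial attention (k×k×k), an input-channel attention, an output-channel attention, and a kernel attention, and then summed; here the first three attentions are shared across the n kernels, as in the public ODConv code. The parameter names, the omission of BatchNorm and biases, and the single-sample layout are assumptions of this sketch, not the authors' ODConv3D implementation linked in the abstract.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def softmax(z):
    e = np.exp(z - z.max())
    return e / e.sum()


def odconv3d_kernel(x, weights, params, temperature=1.0):
    """Build the input-dependent kernel of an ODConv-style 3D layer for one sample.

    x:       input features, shape (C_in, D, H, W)
    weights: n candidate kernels, shape (n, C_out, C_in, k, k, k)
    params:  hypothetical attention-branch matrices "fc", "channel", "filter",
             "spatial" and "kernel" (plain matrices; BatchNorm and biases omitted)
    """
    k = weights.shape[-1]
    pooled = x.mean(axis=(1, 2, 3))                   # global average pooling -> (C_in,)
    hidden = np.maximum(params["fc"] @ pooled, 0.0)   # shared squeeze layer + ReLU
    a_in = sigmoid(params["channel"] @ hidden / temperature)                   # (C_in,)
    a_out = sigmoid(params["filter"] @ hidden / temperature)                   # (C_out,)
    a_sp = sigmoid(params["spatial"] @ hidden / temperature).reshape(k, k, k)  # (k, k, k)
    a_k = softmax(params["kernel"] @ hidden / temperature)                     # (n,), sums to 1
    # Scale every candidate kernel along all four dimensions, then sum over the n kernels
    scaled = (a_k[:, None, None, None, None, None]
              * a_out[None, :, None, None, None, None]
              * a_in[None, None, :, None, None, None]
              * a_sp[None, None, None, :, :, :]
              * weights)
    return scaled.sum(axis=0), (a_in, a_out, a_sp, a_k)


# Toy usage with random (untrained) parameters
rng = np.random.default_rng(0)
c_in, c_out, k, n, hid = 4, 6, 3, 4, 8
x = rng.normal(size=(c_in, 5, 5, 5))
weights = rng.normal(size=(n, c_out, c_in, k, k, k))
params = {
    "fc": rng.normal(size=(hid, c_in)),
    "channel": rng.normal(size=(c_in, hid)),
    "filter": rng.normal(size=(c_out, hid)),
    "spatial": rng.normal(size=(k ** 3, hid)),
    "kernel": rng.normal(size=(n, hid)),
}
kernel, (a_in, a_out, a_sp, a_k) = odconv3d_kernel(x, weights, params)
print(kernel.shape)  # (6, 4, 3, 3, 3)
```

The aggregated kernel would then be applied with an ordinary 3D convolution, for example `torch.nn.functional.conv3d` in PyTorch; because the attentions depend on the input, every sample effectively gets its own kernel.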

3.2.2 Multi-Scale Attention

Our multi-scale attention strategy improves the model's ability to exploit complementary information from different scales. We feed the model both the original input image and a downsampled version of it, which enables it to capture fine-grained details and broader contextual information simultaneously. A separate encoder processes each input, and an attention mechanism then fuses the encoded features. This encourages the model to focus on informative regions across scales and improves the feature representation.
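The section does not specify the downsampling factor, the resampling method, or the exact form of the attention fusion. The sketch below therefore assumes factor-2 average pooling to build the coarse input, nearest-neighbour upsampling to bring coarse features back to full resolution, and a voxel-wise sigmoid gate as a stand-in for the fusion; `gated_fusion` is a hypothetical helper, not the authors' attention module.

```python
import numpy as np


def downsample2(volume):
    """Average-pool a (C, D, H, W) volume by a factor of 2 along each spatial axis."""
    c, d, h, w = volume.shape
    v = volume[:, : d - d % 2, : h - h % 2, : w - w % 2]   # drop odd trailing voxels
    return v.reshape(c, d // 2, 2, h // 2, 2, w // 2, 2).mean(axis=(2, 4, 6))


def upsample2(volume):
    """Nearest-neighbour upsampling of a (C, D, H, W) volume by a factor of 2."""
    return volume.repeat(2, axis=1).repeat(2, axis=2).repeat(2, axis=3)


def gated_fusion(f_full, f_coarse_up, gate_logits):
    """Hypothetical fusion: voxel-wise convex combination weighted by a sigmoid gate."""
    g = 1.0 / (1.0 + np.exp(-gate_logits))
    return g * f_full + (1.0 - g) * f_coarse_up


x = np.arange(2 * 4 * 4 * 4, dtype=float).reshape(2, 4, 4, 4)
coarse = downsample2(x)                  # input for the second encoder
fused = gated_fusion(x, upsample2(coarse), np.zeros_like(x))
```

In the real model, the gate logits would be predicted from the encoder features rather than fixed; with zero logits, as in the toy usage, the gate is 0.5 everywhere and the fusion is a plain average.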

3.2.3 Decoder

The decoder of our model follows the nnU-Net decoder architecture. It reconstructs the fused encoded features into a detailed feature map that preserves spatial information; this map is then processed to produce the final predicted segmentation.

3.3 Segmentation Process

3.3.1 Post-Processing

Post-processing is applied to the output feature map to refine the predicted segmentation. This includes steps such as thresholding, morphological operations, and connected component analysis to remove noise and ensure coherent tumor regions.
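Thresholding followed by connected-component filtering can be sketched with NumPy alone, as below. The threshold of 0.5, the 6-connectivity, and the `min_size` value are hypothetical choices, and the morphological operations mentioned above are omitted. In practice, `scipy.ndimage.label` performs the labeling step far more efficiently than this breadth-first search.

```python
from collections import deque

import numpy as np


def connected_components(mask):
    """Label 6-connected foreground components of a 3D boolean mask (BFS)."""
    labels = np.zeros(mask.shape, dtype=np.int32)
    offsets = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]
    current = 0
    for start in zip(*np.nonzero(mask)):
        if labels[start]:
            continue                      # already part of a component
        current += 1
        labels[start] = current
        queue = deque([start])
        while queue:
            z, y, x = queue.popleft()
            for dz, dy, dx in offsets:
                nb = (z + dz, y + dy, x + dx)
                inside = all(0 <= c < s for c, s in zip(nb, mask.shape))
                if inside and mask[nb] and not labels[nb]:
                    labels[nb] = current
                    queue.append(nb)
    return labels, current


def postprocess(prob, threshold=0.5, min_size=10):
    """Threshold a foreground probability map and drop components smaller than min_size voxels."""
    mask = prob > threshold
    labels, n = connected_components(mask)
    sizes = np.bincount(labels.ravel(), minlength=n + 1)
    keep = sizes >= min_size
    keep[0] = False                       # label 0 is background
    return keep[labels]


prob = np.zeros((6, 6, 6))
prob[0:3, 0:3, 0:3] = 0.9                 # 27-voxel blob
prob[5, 5, 5] = 0.8                       # isolated false-positive voxel
cleaned = postprocess(prob)
```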

3.3.2 Performance Metrics

To evaluate the accuracy of our proposed model, we use two performance metrics: the Dice coefficient and the Hausdorff distance. The Dice coefficient measures the volumetric overlap between the prediction and the ground truth, while the Hausdorff distance measures how accurately the model delineates tumor boundaries.
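Both metrics can be written in a few lines of NumPy. The Dice coefficient is 2|P∩G| / (|P| + |G|) for prediction P and ground truth G. The Hausdorff distance below is the plain symmetric maximum between voxel sets, computed by brute force, so it is only practical for small masks. BraTS reports the 95th-percentile variant (HD95) instead, which replaces the final maximum with a 95th percentile over boundary distances.

```python
import numpy as np


def dice(pred, gt):
    """Dice coefficient 2|P & G| / (|P| + |G|); defined as 1.0 when both masks are empty."""
    pred, gt = pred.astype(bool), gt.astype(bool)
    denom = pred.sum() + gt.sum()
    return 1.0 if denom == 0 else 2.0 * np.logical_and(pred, gt).sum() / denom


def hausdorff(pred, gt, spacing=(1.0, 1.0, 1.0)):
    """Symmetric Hausdorff distance between two non-empty masks (brute force, in mm)."""
    p = np.argwhere(pred) * np.asarray(spacing)
    g = np.argwhere(gt) * np.asarray(spacing)
    dists = np.linalg.norm(p[:, None, :] - g[None, :, :], axis=-1)   # (|P|, |G|)
    return max(dists.min(axis=1).max(), dists.min(axis=0).max())


a = np.zeros((5, 5, 5), dtype=bool)
a[1:3, 1:3, 1:3] = True                   # 8-voxel cube
b = np.zeros_like(a)
b[2:4, 1:3, 1:3] = True                   # same cube shifted by one voxel
print(dice(a, b), hausdorff(a, b))  # 0.5 1.0
```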

3.4 Experimental Setup

We validate the performance of our proposed algorithm on the Brain Metastases dataset. Each dataset instance consists of four 3D images of size x x , along with corresponding ground truth segmentations. We divide the provided data into training and validation sets and evaluate on the official validation set through the challenge's Synapse page, ensuring an unbiased evaluation of the model's performance.

4 Results

In this section, we present the results of our proposed attention-based tumor segmentation model on two datasets: the Brain Metastases dataset and the BraTS-Africa dataset. We compare our model against several baselines, including the original nnU-Net architecture and variants incorporating ODConv3D and the multiscale strategy, as well as a label-combination post-processing approach.

4.1 Evaluation Metrics

We evaluate segmentation accuracy with standard metrics: the overall Dice coefficient obtained during training, and lesion-wise Dice scores for the three tumor sub-regions, Lesion ET (Enhancing Tumor), Lesion TC (Tumor Core), and Lesion WT (Whole Tumor).

4.2 BraTS-Africa Dataset

4.2.1 Baseline Models

We first evaluate the baseline nnU-Net architecture on the BraTS-Africa dataset. As shown in Table 1, nnU-Net achieves an overall Dice coefficient of 0.8980, with Lesion ET, TC, and WT scores of 0.8354, 0.8485, and 0.6578, respectively.

4.2.2 Multiscale and ODConv3D Integration

We next integrate the multiscale strategy and ODConv3D into the nnU-Net architecture. The nnU-Net + Multiscale + ODConv3D model raises the overall Dice coefficient to 0.9092. However, the lesion-wise scores are mixed: Lesion ET drops slightly from 0.8354 to 0.8082 and Lesion TC from 0.8485 to 0.7634, whereas Lesion WT rises from 0.6578 to 0.7872.

4.2.3 Additional Post Processing

To combine the strengths of both models, we replace the Label 2 predictions of the nnU-Net model with Label 2 from the nnU-Net + Multiscale + ODConv3D model. On the BraTS-Africa dataset, this raises Lesion WT from 0.6578 to 0.7564 while keeping Lesion ET and TC at 0.8354 and 0.8485 (Table 1). Table 2 compares this result with other submissions: our model achieves the highest Lesion ET (0.8354) and Lesion TC (0.8485) scores among the listed teams and the third-highest Lesion WT score (0.7564).
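The text does not spell out the exact substitution rule. One reading consistent with Table 1, where the ET and TC scores of a + b equal those of model (a) while WT changes, is sketched below: voxels that model (a) labels as necrosis or enhancing tumor are left untouched, and only the edema/background voxels take their Label 2 assignment from model (b). The label values and the helper name `combine_edema` are assumptions of this sketch.

```python
import numpy as np

NE, ED, ET = 1, 2, 3  # assumed BraTS 2023 label values


def combine_edema(labels_a, labels_b):
    """Keep model a's NE/ET labels and take the edema (label 2) region from model b.

    Voxels that model a labels NE or ET are never changed, so ET and TC stay identical
    to model a; only edema/background voxels are re-assigned from model b.
    """
    out = labels_a.copy()
    editable = ~np.isin(labels_a, (NE, ET))        # voxels a labels background or edema
    out[editable & (labels_a == ED)] = 0           # drop a's own edema ...
    out[editable & (labels_b == ED)] = ED          # ... and use b's edema instead
    return out


a = np.array([0, 2, 2, 1, 3, 0])
b = np.array([2, 0, 2, 2, 2, 0])
print(combine_edema(a, b).tolist())  # [2, 0, 2, 1, 3, 0]
```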

Table 1. Ablation results on the BraTS-Africa dataset.

| Algorithm | Training Dice* | Lesion ET | Lesion TC | Lesion WT |
|---|---|---|---|---|
| nnU-Net (a) | 0.8980 | 0.8354 | 0.8485 | 0.6578 |
| nnU-Net + Multiscale + ODConv3D (b) | 0.9092 | 0.8082 | 0.7634 | 0.7872 |
| a + b | – | 0.8354 | 0.8485 | 0.7564 |

* The models are trained on the Adult Glioma dataset.

Table 2. Comparison with other submissions on the BraTS-Africa dataset.

| Algorithm | Lesion ET | Lesion TC | Lesion WT |
|---|---|---|---|
| SPARC | 0.7478 | 0.7649 | 0.7515 |
| @harshi | 0.7537 | 0.7051 | 0.5991 |
| @ntnu40940111s | 0.7603 | 0.7695 | 0.8403 |
| blackbean | 0.8264 | 0.8464 | 0.5690 |
| @nic-vicorob | 0.8029 | 0.7985 | 0.7487 |
| BraTS2023_SPARK_UNN | 0.7577 | 0.7907 | 0.8647 |
| Ours | 0.8354 | 0.8485 | 0.7564 |

4.3 Brain Metastases Dataset

4.3.1 Baseline Models

We begin by evaluating the baseline performance of the nnU-Net architecture on the Brain Metastases Dataset. As depicted in Table 3, the nnU-Net achieves a Dice coefficient of 0.7675 for overall segmentation. The performance varies across lesion subregions, with Lesion ET, Lesion TC, and Lesion WT achieving Dice coefficients of 0.5157, 0.5105, and 0.4656, respectively.

4.3.2 Impact of ODConv3D

We assess the effectiveness of Omni-dimensional Dynamic Convolution (ODConv3D) by introducing the nnU-Net + ODConv3D model. This model replaces traditional Conv layers with ODConv3D layers for enhanced feature extraction. The results in Table 3 demonstrate that ODConv3D leads to an improved Dice coefficient of 0.7953 for overall segmentation. This improvement extends to lesion subregions, with Lesion ET, Lesion TC, and Lesion WT achieving Dice coefficients of 0.5364, 0.5451, and 0.5141, respectively.

4.3.3 Multiscale Strategy

We next evaluate the multiscale strategy alone with the nnU-Net + Multiscale model, which processes the input at multiple scales to improve feature representation. As indicated in Table 3, it yields an overall Dice coefficient of 0.7771. The largest gain is in Lesion TC, which rises from 0.5105 to 0.5737, while Lesion ET and WT improve more modestly, to 0.5354 and 0.5008.

4.3.4 Combined Approach

Finally, the nnU-Net + Multiscale + ODConv3D configuration combines the multiscale strategy with ODConv3D layers and gives the best results in Table 3, with an overall Dice coefficient of 0.8188 and lesion-wise Dice scores of 0.5896, 0.6406, and 0.5555 for Lesion ET, Lesion TC, and Lesion WT, respectively. Table 4 compares these scores with other submissions: our model ranks second among the listed teams for Lesion ET and Lesion TC, behind CNMC_PMI2023, and third for Lesion WT.

Table 3. Ablation results on the Brain Metastases dataset.

| Algorithm | Training Dice | Lesion ET | Lesion TC | Lesion WT |
|---|---|---|---|---|
| nnU-Net | 0.7675 | 0.5157 | 0.5105 | 0.4656 |
| nnU-Net + ODConv3D | 0.7953 | 0.5364 | 0.5451 | 0.5141 |
| nnU-Net + Multiscale | 0.7771 | 0.5354 | 0.5737 | 0.5008 |
| nnU-Net + Multiscale + ODConv3D | 0.8188 | 0.5896 | 0.6406 | 0.5555 |

Table 4. Comparison with other submissions on the Brain Metastases dataset.

| Algorithm | Lesion ET | Lesion TC | Lesion WT |
|---|---|---|---|
| SPARC | 0.4133 | 0.4378 | 0.4698 |
| MIA_SINTEF | 0.4433 | 0.4774 | 0.4832 |
| DeepRadOnc | 0.4575 | 0.4913 | 0.4665 |
| @jeffrudie | 0.4119 | 0.5171 | 0.5168 |
| @parida12 | 0.5592 | 0.6039 | 0.5650 |
| CNMC_PMI2023 | 0.608 | 0.649 | 0.587 |
| Ours | 0.5896 | 0.6406 | 0.5555 |

4.4 Comparison across Diverse Datasets

To assess our model more broadly, we evaluated it on all five BraTS 2023 segmentation challenges: Adult Glioma, BraTS-Africa, Meningioma, Brain Metastases, and Pediatric Tumors. Tables 5 and 6 summarize the Dice scores achieved on each dataset in the validation and testing phases of the BraTS 2023 challenge, respectively.

To illustrate the model's behavior visually, Table 7 shows segmentation results on representative examples from each challenge, indicating how the model's predictions compare with the ground truth in various clinical scenarios.

Table 5. Results across the BraTS 2023 challenges in the validation phase.

| Challenge | Training Dice | Lesion ET | Lesion TC | Lesion WT |
|---|---|---|---|---|
| Adult Glioma Segmentation | 0.909 | 0.798 | 0.826 | 0.789 |
| BraTS-Africa Segmentation | 0.909 | 0.835 | 0.849 | 0.756 |
| Meningioma Segmentation | 0.889 | 0.783 | 0.780 | 0.756 |
| Brain Metastases Segmentation | 0.819 | 0.590 | 0.641 | 0.556 |
| Pediatric Tumors Segmentation | 0.805 | 0.565 | 0.764 | 0.813 |

Table 6. Results across the BraTS 2023 challenges in the testing phase.

| Challenge | Lesion ET | Lesion TC | Lesion WT |
|---|---|---|---|
| Adult Glioma Segmentation | 0.798 | 0.826 | 0.789 |
| BraTS-Africa Segmentation | 0.818 | 0.775 | 0.845 |
| Meningioma Segmentation | 0.799 | 0.773 | 0.763 |
| Brain Metastases Segmentation | 0.491 | 0.534 | 0.483 |
| Pediatric Tumors Segmentation | 0.480 | 0.320 | 0.347 |

Table 7. Example segmentations from the BraTS 2023 challenges: the four input sequences, the ground truth, and our model's prediction.

|  | t2w | t2f | t1n | t1c | Ground Truth | Predictions |
|---|---|---|---|---|---|---|
| (a) | ![t2w](https://cdn.awesomepapers.org/papers/e6dd0558-a54a-45be-9a71-1d053e54e077/t2w.png) | ![t2f](https://cdn.awesomepapers.org/papers/e6dd0558-a54a-45be-9a71-1d053e54e077/t2f.png) | ![t1n](https://cdn.awesomepapers.org/papers/e6dd0558-a54a-45be-9a71-1d053e54e077/t1n.png) | ![t1c](https://cdn.awesomepapers.org/papers/e6dd0558-a54a-45be-9a71-1d053e54e077/t1c.png) | ![Ground Truth](https://cdn.awesomepapers.org/papers/e6dd0558-a54a-45be-9a71-1d053e54e077/Ground_Truth.png) | ![Prediction](https://cdn.awesomepapers.org/papers/e6dd0558-a54a-45be-9a71-1d053e54e077/Segmented_Files.png) |
| (b) | ![t2w](https://cdn.awesomepapers.org/papers/e6dd0558-a54a-45be-9a71-1d053e54e077/t2w.png) | ![t2f](https://cdn.awesomepapers.org/papers/e6dd0558-a54a-45be-9a71-1d053e54e077/t2f.png) | ![t1n](https://cdn.awesomepapers.org/papers/e6dd0558-a54a-45be-9a71-1d053e54e077/t1n.png) | ![t1c](https://cdn.awesomepapers.org/papers/e6dd0558-a54a-45be-9a71-1d053e54e077/t1c.png) | ![Ground Truth](https://cdn.awesomepapers.org/papers/e6dd0558-a54a-45be-9a71-1d053e54e077/Ground_Truth.png) | ![Prediction](https://cdn.awesomepapers.org/papers/e6dd0558-a54a-45be-9a71-1d053e54e077/Segmented_File.png) |
| (c) | ![t2w](https://cdn.awesomepapers.org/papers/e6dd0558-a54a-45be-9a71-1d053e54e077/t2w.png) | ![t2f](https://cdn.awesomepapers.org/papers/e6dd0558-a54a-45be-9a71-1d053e54e077/t2f.png) | ![t1n](https://cdn.awesomepapers.org/papers/e6dd0558-a54a-45be-9a71-1d053e54e077/t1n.png) | ![t1c](https://cdn.awesomepapers.org/papers/e6dd0558-a54a-45be-9a71-1d053e54e077/t1c.png) | ![Ground Truth](https://cdn.awesomepapers.org/papers/e6dd0558-a54a-45be-9a71-1d053e54e077/GT_on_t2w.png) | ![Prediction](https://cdn.awesomepapers.org/papers/e6dd0558-a54a-45be-9a71-1d053e54e077/Model_output_on_t2w.png) |
| (d) | ![t2w](https://cdn.awesomepapers.org/papers/e6dd0558-a54a-45be-9a71-1d053e54e077/t2w.png) | ![t2f](https://cdn.awesomepapers.org/papers/e6dd0558-a54a-45be-9a71-1d053e54e077/t2f.png) | ![t1n](https://cdn.awesomepapers.org/papers/e6dd0558-a54a-45be-9a71-1d053e54e077/t1n.png) | ![t1c](https://cdn.awesomepapers.org/papers/e6dd0558-a54a-45be-9a71-1d053e54e077/t1c.png) | ![Ground Truth](https://cdn.awesomepapers.org/papers/e6dd0558-a54a-45be-9a71-1d053e54e077/GT_on_t2w.png) | ![Prediction](https://cdn.awesomepapers.org/papers/e6dd0558-a54a-45be-9a71-1d053e54e077/Model_output_on_t2w.png) |
| (e) | ![t2w](https://cdn.awesomepapers.org/papers/e6dd0558-a54a-45be-9a71-1d053e54e077/Screenshot_2023-08-18_122209.png) | ![t2f](https://cdn.awesomepapers.org/papers/e6dd0558-a54a-45be-9a71-1d053e54e077/Screenshot_2023-08-18_122214.png) | ![t1n](https://cdn.awesomepapers.org/papers/e6dd0558-a54a-45be-9a71-1d053e54e077/Screenshot_2023-08-18_122218.png) | ![t1c](https://cdn.awesomepapers.org/papers/e6dd0558-a54a-45be-9a71-1d053e54e077/Screenshot_2023-08-18_122222.png) | ![Ground Truth](https://cdn.awesomepapers.org/papers/e6dd0558-a54a-45be-9a71-1d053e54e077/Ground_truth.png) | ![Prediction](https://cdn.awesomepapers.org/papers/e6dd0558-a54a-45be-9a71-1d053e54e077/Predicted_seg.png) |
| (f) | ![t2w](https://cdn.awesomepapers.org/papers/e6dd0558-a54a-45be-9a71-1d053e54e077/Screenshot_2023-08-18_125532.png) | ![t2f](https://cdn.awesomepapers.org/papers/e6dd0558-a54a-45be-9a71-1d053e54e077/Screenshot_2023-08-18_125527.png) | ![t1n](https://cdn.awesomepapers.org/papers/e6dd0558-a54a-45be-9a71-1d053e54e077/Screenshot_2023-08-18_125523.png) | ![t1c](https://cdn.awesomepapers.org/papers/e6dd0558-a54a-45be-9a71-1d053e54e077/Screenshot_2023-08-18_125517.png) | ![Ground Truth](https://cdn.awesomepapers.org/papers/e6dd0558-a54a-45be-9a71-1d053e54e077/Ground_Truth.png) | ![Prediction](https://cdn.awesomepapers.org/papers/e6dd0558-a54a-45be-9a71-1d053e54e077/Segmentation.png) |
| (g) | ![t2w](https://cdn.awesomepapers.org/papers/e6dd0558-a54a-45be-9a71-1d053e54e077/t2w.png) | ![t2f](https://cdn.awesomepapers.org/papers/e6dd0558-a54a-45be-9a71-1d053e54e077/t2f.png) | ![t1n](https://cdn.awesomepapers.org/papers/e6dd0558-a54a-45be-9a71-1d053e54e077/t1n.png) | ![t1c](https://cdn.awesomepapers.org/papers/e6dd0558-a54a-45be-9a71-1d053e54e077/t1c.png) | ![Ground Truth](https://cdn.awesomepapers.org/papers/e6dd0558-a54a-45be-9a71-1d053e54e077/Ground_Truth.png) | ![Prediction](https://cdn.awesomepapers.org/papers/e6dd0558-a54a-45be-9a71-1d053e54e077/Swgmentation_Prediction.png) |
| (h) | ![t2w](https://cdn.awesomepapers.org/papers/e6dd0558-a54a-45be-9a71-1d053e54e077/t2w.png) | ![t2f](https://cdn.awesomepapers.org/papers/e6dd0558-a54a-45be-9a71-1d053e54e077/t2f.png) | ![t1n](https://cdn.awesomepapers.org/papers/e6dd0558-a54a-45be-9a71-1d053e54e077/t1n.png) | ![t1c](https://cdn.awesomepapers.org/papers/e6dd0558-a54a-45be-9a71-1d053e54e077/t1c.png) | ![Ground Truth](https://cdn.awesomepapers.org/papers/e6dd0558-a54a-45be-9a71-1d053e54e077/Ground_Trutu.png) | ![Prediction](https://cdn.awesomepapers.org/papers/e6dd0558-a54a-45be-9a71-1d053e54e077/Segmented_Prediction.png) |
| (i) | ![t2w](https://cdn.awesomepapers.org/papers/e6dd0558-a54a-45be-9a71-1d053e54e077/Screenshot_2023-08-18_162238.png) | ![t2f](https://cdn.awesomepapers.org/papers/e6dd0558-a54a-45be-9a71-1d053e54e077/Screenshot_2023-08-18_162232.png) | ![t1n](https://cdn.awesomepapers.org/papers/e6dd0558-a54a-45be-9a71-1d053e54e077/Screenshot_2023-08-18_162222.png) | ![t1c](https://cdn.awesomepapers.org/papers/e6dd0558-a54a-45be-9a71-1d053e54e077/Screenshot_2023-08-18_162216.png) | ![Ground Truth](https://cdn.awesomepapers.org/papers/e6dd0558-a54a-45be-9a71-1d053e54e077/Original_Segmentation.png) | ![Prediction](https://cdn.awesomepapers.org/papers/e6dd0558-a54a-45be-9a71-1d053e54e077/Segmented_Output.png) |
| (j) | ![t2w](https://cdn.awesomepapers.org/papers/e6dd0558-a54a-45be-9a71-1d053e54e077/Screenshot_2023-08-18_170225.png) | ![t2f](https://cdn.awesomepapers.org/papers/e6dd0558-a54a-45be-9a71-1d053e54e077/Screenshot_2023-08-18_170218.png) | ![t1n](https://cdn.awesomepapers.org/papers/e6dd0558-a54a-45be-9a71-1d053e54e077/Screenshot_2023-08-18_170213.png) | ![t1c](https://cdn.awesomepapers.org/papers/e6dd0558-a54a-45be-9a71-1d053e54e077/Screenshot_2023-08-18_170208.png) | ![Ground Truth](https://cdn.awesomepapers.org/papers/e6dd0558-a54a-45be-9a71-1d053e54e077/Ground_Truth.png) | ![Prediction](https://cdn.awesomepapers.org/papers/e6dd0558-a54a-45be-9a71-1d053e54e077/Segmented_Output.png) |

5 Discussion

Across the Brain Metastases and BraTS-Africa datasets, the results highlight the effectiveness of the proposed multi-scale, attention-based tumor segmentation model, particularly when it combines multiscale inputs with Omni-dimensional Dynamic Convolution. On the Brain Metastases dataset, this combination improves all lesion-wise scores over the nnU-Net baseline; on the BraTS-Africa dataset, it improves Lesion WT but lowers Lesion ET and TC, and the label-combination step recovers the ET and TC scores while retaining most of the WT gain. These findings contribute to more accurate and reliable tumor segmentation.

6 Conclusion

This paper has examined the performance of our proposed model across several datasets. Combining multiscale inputs, ODConv layers, and label-combination post-processing improves segmentation accuracy, showing potential for more accurate and reliable tumor segmentation across various medical imaging scenarios.

References

- [1] Maruf Adewole, Jeffrey D Rudie, Anu Gbadamosi, Oluyemisi Toyobo, Confidence Raymond, Dong Zhang, Olubukola Omidiji, Rachel Akinola, Mohammad Abba Suwaid, Adaobi Emegoakor, et al. The brain tumor segmentation (brats) challenge 2023: Glioma segmentation in sub-saharan africa patient population (brats-africa). arXiv preprint arXiv:2305.19369, 2023.

- [2] Ujjwal Baid, Satyam Ghodasara, Suyash Mohan, Michel Bilello, Evan Calabrese, Errol Colak, Keyvan Farahani, Jayashree Kalpathy-Cramer, Felipe C Kitamura, Sarthak Pati, et al. The rsna-asnr-miccai brats 2021 benchmark on brain tumor segmentation and radiogenomic classification. arXiv preprint arXiv:2107.02314, 2021.

- [3] Ujjwal Baid, Satyam Ghodasara, Suyash Mohan, Michel Bilello, Evan Calabrese, Errol Colak, Keyvan Farahani, Jayashree Kalpathy-Cramer, Felipe C Kitamura, Sarthak Pati, et al. The rsna-asnr-miccai brats 2021 benchmark on brain tumor segmentation and radiogenomic classification. arXiv preprint arXiv:2107.02314, 2021.

- [4] Spyridon Bakas, Hamed Akbari, Aristeidis Sotiras, Michel Bilello, Martin Rozycki, Justin S Kirby, John B Freymann, Keyvan Farahani, and Christos Davatzikos. Advancing the cancer genome atlas glioma mri collections with expert segmentation labels and radiomic features. Scientific data, 4(1):1–13, 2017.

- [5] Spyridon Bakas, Mauricio Reyes, Andras Jakab, Stefan Bauer, Markus Rempfler, Alessandro Crimi, Russell Takeshi Shinohara, Christoph Berger, Sung Min Ha, Martin Rozycki, et al. Identifying the best machine learning algorithms for brain tumor segmentation, progression assessment, and overall survival prediction in the brats challenge. arXiv preprint arXiv:1811.02629, 2018.

- [6] Fabian Isensee, Paul F Jaeger, Simon AA Kohl, Jens Petersen, and Klaus H Maier-Hein. nnU-Net: a self-configuring method for deep learning-based biomedical image segmentation. Nature Methods, pages 1–9, 2020.

- [7] Fabian Isensee, Jens Petersen, Andre Klein, David Zimmerer, Paul F. Jaeger, Simon Kohl, Jakob Wasserthal, Gregor Koehler, Tobias Norajitra, Sebastian Wirkert, and Klaus H. Maier-Hein. Abstract: nnU-Net: Self-adapting framework for U-Net-based medical image segmentation. In Heinz Handels, Thomas M. Deserno, Andreas Maier, Klaus Hermann Maier-Hein, Christoph Palm, and Thomas Tolxdorff, editors, Bildverarbeitung für die Medizin 2019, pages 22–22, Wiesbaden, 2019. Springer Fachmedien Wiesbaden.

- [8] Konstantinos Kamnitsas, Christian Ledig, Virginia FJ Newcombe, Joanna P Simpson, Andrew D Kane, David K Menon, Daniel Rueckert, and Ben Glocker. Efficient multi-scale 3D CNN with fully connected CRF for accurate brain lesion segmentation. Medical Image Analysis, 36:61–78, 2017.

- [9] Alexandros Karargyris, Renato Umeton, Micah J Sheller, Alejandro Aristizabal, Johnu George, Srini Bala, Daniel J Beutel, Victor Bittorf, Akshay Chaudhari, Alexander Chowdhury, et al. MedPerf: open benchmarking platform for medical artificial intelligence using federated evaluation. arXiv preprint arXiv:2110.01406, 2021.

- [10] Anahita Fathi Kazerooni, Nastaran Khalili, Xinyang Liu, Debanjan Haldar, Zhifan Jiang, Syed Muhammed Anwar, Jake Albrecht, Maruf Adewole, Udunna Anazodo, Hannah Anderson, et al. The Brain Tumor Segmentation (BraTS) challenge 2023: Focus on pediatrics (CBTN-CONNECT-DIPGR-ASNR-MICCAI BraTS-PEDs). arXiv preprint arXiv:2305.17033, 2023.

- [11] Dominic LaBella, Maruf Adewole, Michelle Alonso-Basanta, Talissa Altes, Syed Muhammad Anwar, Ujjwal Baid, Timothy Bergquist, Radhika Bhalerao, Sully Chen, Verena Chung, et al. The ASNR-MICCAI Brain Tumor Segmentation (BraTS) challenge 2023: Intracranial meningioma. arXiv preprint arXiv:2305.07642, 2023.

- [12] Chao Li, Aojun Zhou, and Anbang Yao. Omni-dimensional dynamic convolution. arXiv preprint arXiv:2209.07947, 2022.

- [13] Richard McKinley, Raphael Meier, and Roland Wiest. Ensembles of densely-connected CNNs with label-uncertainty for brain tumor segmentation. In International MICCAI Brainlesion Workshop, pages 456–465. Springer, 2018.

- [14] Bjoern H Menze, Andras Jakab, Stefan Bauer, Jayashree Kalpathy-Cramer, Keyvan Farahani, Justin Kirby, Yuliya Burren, Nicole Porz, Johannes Slotboom, Roland Wiest, et al. The multimodal brain tumor image segmentation benchmark (BraTS). IEEE Transactions on Medical Imaging, 34(10):1993–2024, 2014.

- [15] Ahmed W Moawad, Anastasia Janas, Ujjwal Baid, Divya Ramakrishnan, Leon Jekel, Kiril Krantchev, Harrison Moy, Rachit Saluja, Klara Osenberg, Klara Wilms, et al. The Brain Tumor Segmentation (BraTS-METS) challenge 2023: Brain metastasis segmentation on pre-treatment MRI. arXiv preprint arXiv:2306.00838, 2023.

- [16] Sérgio Pereira, Adriano Pinto, Victor Alves, and Carlos A Silva. Brain tumor segmentation using convolutional neural networks in MRI images. IEEE Transactions on Medical Imaging, 35(5):1240–1251, 2016.

- [17] Simon K Warfield, Kelly H Zou, and William M Wells. Simultaneous truth and performance level estimation (STAPLE): an algorithm for the validation of image segmentation. IEEE Transactions on Medical Imaging, 23(7):903–921, 2004.

- [18] Michael Weller, Martin van den Bent, Matthias Preusser, Emilie Le Rhun, Jörg C Tonn, Giuseppe Minniti, Martin Bendszus, Carmen Balana, Olivier Chinot, Linda Dirven, et al. EANO guidelines on the diagnosis and treatment of diffuse gliomas of adulthood. Nature Reviews Clinical Oncology, 18(3):170–186, 2021.

- [19] Paul A. Yushkevich, Joseph Piven, Heather Cody Hazlett, Rachel Gimpel Smith, Sean Ho, James C. Gee, and Guido Gerig. User-guided 3D active contour segmentation of anatomical structures: Significantly improved efficiency and reliability. Neuroimage, 31(3):1116–1128, 2006.