Multi-View Graph Representation Learning Beyond Homophily

Abstract.

Unsupervised graph representation learning(GRL) aims to distill diverse graph information into task-agnostic embeddings without label supervision. Due to a lack of support from labels, recent representation learning methods usually adopt self-supervised learning, and embeddings are learned by solving a handcrafted auxiliary task(so-called pretext task). However, partially due to the irregular non-Euclidean data in graphs, the pretext tasks are generally designed under homophily assumptions and cornered in the low-frequency signals, which results in significant loss of other signals, especially high-frequency signals widespread in graphs with heterophily. Motivated by this limitation, we propose a multi-view perspective and the usage of diverse pretext tasks to capture different signals in graphs into embeddings. A novel framework, denoted as Multi-view Graph Encoder(MVGE), is proposed, and a set of key designs are identified. More specifically, a set of new pretext tasks are designed to encode different types of signals, and a straightforward operation is propxwosed to maintain both the commodity and personalization in both the attribute and the structural levels. Extensive experiments on synthetic and real-world network datasets show that the node representations learned with MVGE achieve significant performance improvements in three different downstream tasks, especially on graphs with heterophily. Source code is available at https://github.com/G-AILab/MVGE.

1. Introduction

Graphs provide a natural and efficient representation for non-Euclidean data, such as brain networks, social networks, traffic maps in logistics(Lu et al., 2021), and financial networks(Bronstein et al., 2017). To simplify the learning process, the current graph learning community generally divides graphs into two significant types from the node label’s perspective: homophily and heterophily. In graphs with homophily, linked nodes often belong to the same class or have similar features, which is a crucial principle of many real-world networks, e.g., in the citation network, papers tend to cite papers from the same research area(Newman, 2018). Partially due to its simplicity, this type of network has been under intensive study. In heterophily settings, trends in connections are reversed with the so-called ”opposites attract” phenomenon. Linked nodes are likely from different classes or have different features, e.g., the chemical interactions in proteins often occur between different types of amino acids(Bo et al., 2021). Learning on graphs with heterophily is more difficult (Jia and Benson, 2020).

However, either homophily or heterophily is classified based on the global assortativity (Newman, 2003), a metric defined only concerning node labels across the whole graph. In reality, real-world graphs can not be readily classified into either homophily or heterophily while they essentially reveal a complex and typically mixture of patterns from both node and attribute levels (Peel et al., 2018; Li et al., 2022). Fig. 1(a) shows that real-world graphs have different degrees of homophily: Cora, Citeseer, and Pubmed are with high homophily, while Cornell, Texas, and Wisconsin are with high heterophily. Furthermore, even within a graph, the actual distribution patterns are highly diverse, Fig. 1(b) shows that Cora with strong homophily still exist nodes with local heterophily, and vice versa also holds for Wisconsin with strong global heterophily. This fact clearly shows abundant signals, including low-frequency signals for commonality and high-frequency signals for disparity and the mixtures of those signals.

The goal of unsupervised Graph Representation Learning(GRL) is to condense different types of information existing in both the graph attributes and the topology into task-agnostic low-dimension embeddings (Hamilton et al., 2017b). Such complex patterns in high-dimensional and intractable non-Euclidean graph data bring enormous challenges for unsupervised GRL research without direct access to ground truth labels during embeddings. Due to the lack of label guidelines and the popularity of homophily, most GRL solutions are designed under strong homophily assumption(Li et al., 2022). For example, random walk-based methods(e.g., DeepWalk(Perozzi et al., 2014), Node2Vec(Grover and Leskovec, 2016)) match nodes’ co-occurrence rate on short random walks over the graph to force neighboring nodes to have similar representations. GAE, VGAE(Kipf and Welling, 2016b) and later variants ARVGE(Pan et al., 2018) assumes the connected nodes have more similar embeddings. GNN-based solutions are optimized for the network with homophily by propagating features and aggregating them within various graph neighborhoods via different mechanisms (e.g., averaging, LSTM, self-attention)(Zhu et al., 2020), e.g., GCN(Kipf and Welling, 2016a), SGC(Wu et al., 2019), GAT(Veličković et al., 2017), can be regarded as a particular form of the low-pass filter (Nt and Maehara, 2019; Xu et al., 2020), which can extract the low-frequency information in the graph data and make the characterization information of a node and its surrounding context closer(Li et al., 2019). Another major stream of GRL is contrastive learning in which graph augmentations preserve the low-frequency components and perturb the middle and high-frequency components of the graph. This design hinders its application on graphs with heterophily(Wang et al., 2022).

However, as shown in Fig. 1(b), there are abundant signals in the network, merely retaining the low-frequency information, which denotes the commonality in node features, is insufficient to support patterns differing from commonality. The high-frequency information, capturing the difference between node features, may be more suitable for heterophily. However, very limited GRL works have been proposed to support the embeddings for heterophily. Our previous work, PairE(Li et al., 2022) tries to encode both low-frequency and high-frequency signals among connected nodes by employing node pairs as basic embedding units. However, systematic encoding of different signals in GRL remains under-explored.

One major limitation faced by most GRL solutions lies in that one pretext task may only retain information from one perspective and can not cover the rich patterns existing in graphs(Jin et al., 2021a). To support this conclusion, we assess the quality of the embeddings generated from two different pretext tasks: the ego-task that keeps the difference between node attributes and the agg-task that keeps the commonality signals between a node and its surrounding neighbors, which explore high-frequency and low-frequency signals respectively. Fig. 1(c) and 1(d) clearly illustrate an important fact: embeddings from the two tasks have the exact opposite performance in Pubmed and Wisconsin. Specifically, Fig. 1(d) shows that when a network exhibits heterophily(Wisconsin), embeddings from high-frequency signals (ego-task) perform much better than low-frequency signals (agg-task), while under homophily settings(Cora) are opposite, as shown in Fig. 1(c). As already discussed, real-world graphs usually display a complex and typically mixture of patterns. Thus, it is essential to extract different frequency signals simultaneously.

Motivated by the above discussed limitations with one pretext task, this paper proposes a novel Multi-view Graph Encoder(MVGE) framework that can effectively condense different types of signals into the embeddings with multiple novel pretext tasks. In order to tackle the problems of the semantic difference among embeddings learned from different tasks, we also propose a novel design to transform those embeddings into one uniform semantic space. Our contribution is summarized as follows:

-

•

GRL beyond the homophily assumption. We analyze the efficacy and limitations of different pretext tasks in terms of their capabilities in capturing different types of signals existing in graphs. We show that different pretext tasks can be only effective for graphs with either homophily or heterophily and innovatively point out the necessity of learning from multiple perspectives.

-

•

Capturing different types of information in the graph. This paper designs two pretext tasks that capture the graph’s high and low-frequency signals within node features. More specifically, the paper proposes two pretext tasks: the ego-task learns the distributions of node features with rich high-frequency signals, and the agg-task learns the distributions of the smoothed aggregated neighbor attributes with low-frequency signals. Two separated autoencoders are proposed to support those two tasks.

-

•

Effective integration of embeddings from multiple perspectives. The direct use of multi-task learning might not improve final embedding quality due to their diverse semantic meanings. This paper designs and implements MVGE that can merge different embeddings with an adjacent reconstruction task which can translate embeddings in different semantic spaces into one coherent space.

In order to evaluate the quality of generated embeddings, we compare MVGE with nine state-of-the-art baselines on eight real-world networks covering the full spectrum of low-to-high global homophily. Extensive comparative experiments, including node classification, link prediction, and pairwise node classification, validate that MVGE has advantages over state-of-the-arts and gains significant performance improvement in heterophily while being competitive in homophily.

2. Related Work

In this section, we introduce the graph representation learning under the homophily or heterophily assumptions.

2.1. GRL under Homophily Assumption.

Matrix Factorization & DeepWalk. Early unsupervised methods for learning node representation are traditionally based on matrix factorization and random walks. Matrix factorization methods calculate losses with handcrafted similarity metrics to build vector representations for each node with latent features(Yang et al., 2015; Zhang et al., 2019). The inspiration for random walk-based unsupervised methods for learning node representation comes from the effectiveness of the NLP method. DeepWalk(Perozzi et al., 2014) and Node2Vec(Grover and Leskovec, 2016) optimize node embeddings by matching nodes’ co-occurrence rate on short random walks over graphs. They are shown to over-emphasize proximity information at the expense of structural information(Ribeiro et al., 2017; Velickovic et al., 2019). Also, they are limited to preserving the similarity of adjacent nodes and cannot extend to heterophily settings.

AutoEncoder-based. Graph autoencoders, e.g., GAE and VGAE(Kipf and Welling, 2016b) and their follow-up work ARVGE(Pan et al., 2018) with an adversarial regularization framework use two-layer GCN as their encoder and consider that impose the topological closeness of nodes in the graph structure on the latent space by predicting the first-order neighbors. GAEs over-emphasize proximity information and are also based on the assumption that connected nodes should be more similar.

Unsupervised GNNs. Unsupervised GNN-based methods(Hamilton et al., 2017a; You et al., 2019), on the other hand, propagate features and aggregate them within various graph neighborhoods via different mechanisms (e.g., averaging, LSTM), where the node representations evolve over multiple rounds of propagation with becoming prohibitively similar. Uniform aggregation and update in GNNs ignore the difference in information between similar and dissimilar neighbors. On heterophilic graphs, discriminative node representation learning yearns for distinguishable information with diverse message passing(Zhu et al., 2020)(Zheng et al., 2022).

Contrastive methods. Contrastive methods aim to learn representations by maximizing feature consistency under different augmentation views(Herzig et al., 2020). For example, DGI(Velickovic et al., 2019) employs the idea of Deep InfoMax(Hjelm et al., 2018) and considers both local and global information during discrimination. MVGRL(Hassani and Khasahmadi, 2020) proposed to train graph encoder by maximizing MI between representations encoded from first-order neighbors and a graph diffusion. MERIT(Jin et al., 2021b) utilized Siamese networks(Chen and He, 2021) and proposed cross-views and cross-network contrastive objectives to maximize the similarity between node representations from local and global perspectives. These methods can, to some extent, mitigate the impacts of heterophily. However, most of those solutions only augment the low-frequency signals between nodes and perturb the middle and high-frequency components of the graph. It contributes to the success of GCL algorithms in homophily but hinders its application in heterophily(Wang et al., 2022).

2.2. Learning beyond Homophily

Due to the lack of node labels, graph representation learning beyond homophily remains largely unexplored. Existing works are mainly limited to the semi-supervised GNNs for heterophily. The common philosophy behind these works is weakening the smoothing effect. For example, MixHop(Abu-El-Haija et al., 2019) and H2GCN(Zhu et al., 2020) combine multi-scale information by concatenation to modify the smoothed node representations based on local information, while FAGCN(Bo et al., 2021) and GPRGNN(Chien et al., 2020) relax the edges with positive values, which tends to induce the smoothing effect, to possess real values (which can be either positive or negative). GNN-LF/HF (Zhu et al., 2021) designs filter weights from the perspective of graph optimization functions, which can simulate high- and low-pass filters and LINKX (Lim et al., 2021) separately embeds node features and graph topology. After that, the two embeddings are combined with MLPs to generate node embeddings. However, these methods are semi-supervised and rely on the node labels to define the homophily level and guide the learning processes. Actually, different attributes possess diverse characteristics in unsupervised settings.

GRL adopting an unsupervised/self-supervised paradigm still remains challenging due to the uncertainty in defining a learning objective. Selene (Zhong et al., 2022) proposes a dual-channel feature embedding mechanism to fuse node attributes and network structure information and leverage a sampling and anonymization strategy to break the implicit homophily assumption of existing embedding mechanisms. However, Selene needs to extract the high-order subnetwork (-Ego) corresponding to each node, which will significantly increase the computational and space burden. Our previous work, PairE(Li et al., 2022) preserves both information for homophily and heterophily by going beyond the localized node view and utilizing paired node entities as the basic embedding unit. It can, to some extent, maintain high-frequency signals. But it demands hand-craft design to translate pair-based embeddings to node embeddings for typical downstream tasks. AF-GCL(Wang et al., 2022) leverages the features aggregated by GNN to construct the self-supervision signal, which prevents perturbing middle and high-frequency information due to the heavily relying on graph augmentation and is less sensitive to the homophily degree. However, AF-GCL still aims to learn similarities between nodes rather than keep high-frequency signals in node features. In the settings of unsupervised GRLs, how to effectively encode different frequency signals into embeddings and make the graph representation can effectively support a wealth of unknown downstream tasks remains largely unexplored.

3. Notation and Preliminaries

Let be an undirected, unweighted graph with node set and edge set . For each node , there contains a unique class label . We denote a general random walk rooted at vertex as and the hops/steps random walk as . For example, is one of the immediate neighbors of . denotes the 1-hop neighbors of node . We represent the graph by its adjacency matrix and its node feature matrix , where the vector corresponds to the ego-feature of node and to features of the node in its random walk path.

3.1. Global and Local Homophily

Here, we define the metrics to describe the essential characteristics of a graph. There exist multiple metrics, e.g., the label smoothness defined in (Hou et al., 2019) or the network assortativity in (Newman, 2003). Here, we adopt the edge homophily ratio , the fraction of edges which connect nodes that have the same class label(i.e., intra-class edges), which gives an overall trend for all the edges in the graph. This metric is firstly defined in H2GCN(Zhu et al., 2020).

Definition 1 (Global Homophily). The edge homophily ratio

| (1) |

When the global edge homophily ratio , it shows that the graph has strong homophily. In contrast, graph with strong heterophily(i.e., low/weak homophily) have small global edge homophily ratio .

However, as discussed in the introduction, this metric only represents the global trend of a graph. In different parts of real-world graphs, different homophily degrees can be observed. Here, we explicitly introduce a new metric that measures the homophily degree from a node’s local view.

Definition 2 (Local Homophily). The local homophily is the fraction of nodes in node ’s immediate neighbors that have the same class label as node :

| (2) |

Rather than that represent global trend with one scalar, the local edge homophily ratio is node-specific. When , it indicates that node connects to surrounding nodes with the same label, while node with strong heterophily .

3.2. Node Features in Ego and Aggregation Views

As pointed out by previous works(Zhu et al., 2020; Bo et al., 2021), in graphs with strong homophily, the attribute aggregation operation in GNNs can usually intensify the low-frequency signals. While in graphs with strong heterophily, the node attributes themselves and MLP can often achieve SOTA performance as those attributes contain a distinctive node pattern for classification. As we do not have any labels and downstream tasks might rely on different types of signals, we go beyond the traditional homophily assumption and innovatively propose two views to capture the low- and high-frequency information existing in the node features: specifically, ego features and aggregated features.

Definition 3 (Node-specific Ego features). We use the nodes’ original features as ego features:

| (3) |

It contains the unique information of the node itself, which can preserve the information difference between nodes to the greatest extent, which is the high-frequency signals.

Definition 4 (Walk-based Aggregated features). Merely using the ego features, the noise in them will cause the learned representations to deviate from reality. To reduce the impacts of the noise, similar to the GNN-based solutions, we define an aggregation function to aggregate the surrounding context, denoted as . One important requirement is the aggregated feature should be able to intensify the low-frequency signals and/or the mean-smoothed high-frequency signals around a node . Furthermore, to some extent, the aggregated features should represent the local structure of node . Here, the aggregated neighbor feature for each node is given as:

| (4) |

Specifically, for each node , we generate several paths through unbiased random walks with unequal steps, e.g., ,. Here, i and j represent the length of the path. The aggregated features from each path are calculated with the features-wise average for all the nodes along the path. Figure 2 shows the three paths starting from node 8 with lengths 3, 5, and 10. Then, we mixed the aggregated features of each random walk path by concatenation. This design is based on the following observation. (1) multiple random walks help to capture mixing latent information from neighbors at various distances. (2) As verified in H2GCN, in heterophily settings, although the labels of immediate neighbors tend to be different from the ego node, the higher-order neighborhoods may have the same class of the ego node and can provide more relevant context. Thus, by higher-order random walk and aggregating information beyond the immediate neighbors, the similarity between nodes with structural and semantic similarity can be preserved.

3.3. Feature-wise Low and High-frequency Signals

Here we hope to explain low-frequency and high-frequency signals and their relationship to ego and aggregated features.

Definition 5 (Low/High-frequency signals) According to the definition in (Bo et al., 2021; Zhu et al., 2021), the low-frequency signal represents the similarity information between node features, and the high-frequency signal represents the difference information between node features. The greater the similarity of node feature information, the stronger the low-frequency signal transmitted, otherwise, the high-frequency signal dominates.

Actually, different features possess diverse characteristics and can not be directly compared. To explicitly model the diversity, we define low and high-frequency signals from a single feature dimension. As shown in Fig. 3, taking a central node and any node as an example, the 1st/2nd-dim features with significant differences can be seen as existing high-frequency signals, while the 3rd-dim features exist as low-frequency signals. The agg features, which are the aggregated features from neighbor node and central node , smooth high-frequency signals and identify low-frequency signals.

4. Multi-view Graph Encoder

MVGE is inspired by the approaches that explore the combination of multiple pretext tasks to learn different signals(Jin et al., 2021b; Hassani and Khasahmadi, 2020; Li et al., 2022; Jin et al., 2021a). This framework includes two novel pretext tasks supported with multi-view parallel AutoEncoders(Section 4.1), a novel solution to integrate embeddings from two views into one coherent semantic space(Section 4.3).

overall framework of MVGE

4.1. Separated Encoders for Different Views

Traditional self-supervised learning feeds different data augmentations into a single embedding space or two embedding spaces with shared parameters, which interferes with learning different features. Motivated by this limitation, our model learns to construct separated embedding sub-spaces for different views, which avoids mixing low frequency and high-frequency signals and allows for more expressiveness for different frequency signals. Furthermore, we devise two similar pretext tasks to learn signals from the two views for each encoder.

4.1.1. Linear Encoder for Ego-features.

To preserve high-frequency information in ego features, we propose to replace the generic GCN encoder with a linear encoding scheme. As illustrated in Figure 4 in blue block, the ego features are encoded by two single layer MLP and . Different from the standard GNN-based encoder, a single linear transformation of the features does not smooth the feature signal of the surrounding neighbors during propagation, thus maximizing the preservation of the high-frequency information of the nodes themselves and keeping node representations from becoming indistinguishable. Besides, similar to the ”add skip connection” operation introduced in ResNet(He et al., 2016), which is later adopted in jumping knowledge networks for graph representation learning(Xu et al., 2018), we (2) concatenate the original inputs and the intermediate representations at the final layer, to preserve the integrity and personalization of the input information as much as possible.

4.1.2. GNN Encoder for Agg-features.

For the aggregated features from neighbors, we want to learn the commonality among neighbors. Thus, we adopt a GCN encoder for the aggregated features. In general, GCN updates node representations by aggregating information from neighbors, which can be seen as a special form of low-pass filter(Wu et al., 2019; Li et al., 2019) and can retain the commonality in the neighbors. As shown in Figure 4 in red block, the agg features are encoded by a two-layer GCN and a single layer MLP .

4.1.3. Learning in Different Views

Generally, the self-supervision task are normally use the reconstruction loss(Veličković et al., 2017) or the contrast loss(Velickovic et al., 2019). To capture both the low-frequency and high-frequency signals in the node attribute, we utilize Kullback-Leibler divergence (Kullback, 1997) and propose two feature reconstruction pretext tasks to learn signals from two views.

Ego Feature Reconstruction. To capture low-frequency signals in the embedding, MVGE adopts the ego feature reconstruction pretext task from the ego feature view. This task is designed to recover the ego features . As each node usually has its own specific feature vector, it usually has distinctive information that differs itself from other nodes. As pointed out by many previous GNNs(Zhu et al., 2020; Bo et al., 2021; Li et al., 2022), the integration of ego-features can help the embeddings keep high-frequency signals and prevent the node representation from tending to be similar during the model training process.

Specifically, we use to denote the output embedding of decoder . Aiming to pull closer to the representations from the original features, we use the ego feature reconstruction loss with the Kullback-Leibler divergence . calculate the distribution difference between and , where Q is the distribution of the reconstructed features and is the original distribution of :

| (5) |

where represents the -th node in , represents the -th feature. and are the distribution possibility and the target distribution of the -th features of the -th node, respectively:

| (6) |

Aggregated Feature Reconstruction. Similar to the ego feature reconstruction task, this task reconstructs the aggregated features and uses a loss function based on KL divergence:

| (7) |

where and can be calculated as the same way like and .

By reconstructing the smoothed node neighborhood features, the model can capture low-frequency information, which helps avoid node representations deviating from reality in multiple rounds of learning guided by self-feature reconstruction tasks.

4.2. Learning different signals.

Fig. 5 shows the embedding distributions in the first dimension generated by respective feature reconstruction tasks on the syn-cora with low (0.1) and high (0.9) homophily ratios. As we can see from this figure, as the NN optimizer algorithms normally generate weights according to Gaussian distribution, all those embeddings basically follow a Gaussian distribution, however, with different standard deviations . Here, we can observe two important facts. 1) Under different global homophily, is bigger than . This result demonstrates that the ego feature reconstruction task preserves more high-frequency signals. In comparison, in the aggregated feature reconstruction task, due to its smoothing operations in both deepwalk generation and GCN, the embeddings generated by the agg-task mainly contain low-frequency signals with small . (2) Embeddings from the two tasks become similar in strong homophily settings while dissimilar in heterophily settings. In datasets with low homophily, nodes with different labels tend to be connected, and the nodes are very different from their surrounding neighbors. Thus, the ego embeddings are quite different from agg embeddings. In datasets with strong homophily(), the similarity between linked nodes is very high, and the feature difference between the connected node(high-frequency signals) is relatively less. Thus, as shown in Fig. 5(b), these two tasks generate almost identical distributions. Fig. 5(a) and 5(b) clearly show that the two tasks can capture different types of signals.

4.3. Integration of multiple semantic embeddings

Our model learns to capture low-frequency and high-frequency signals for node representations by constructing separate embedding spaces and introducing two pretext tasks. Although, as pointed in (Zhang and Yang, 2021), learning with multiple tasks can generally improve performance compared to learning from a single task in CV, NLP, etc. However, simply using multiple pretext tasks in graph learning normally leads to unstable and poor performance in graphs(Standley et al., 2020). The key to this factor, we guess, is due to the fact that the learned embeddings from different pretext tasks are actually from different semantic spaces. It is hard for the ML classifier to use embeddings from different semantic spaces effectively.

In MVGE, although the ego-task and agg-task are similar as they are both based on the feature distribution reconstruction, their semantic meanings are still quite different. To bridge the gap between two semantic spaces of different pretext tasks, we propose one additional pretext task, the adjacency matrix reconstruction task, to seamlessly integrate the embeddings from two views. Another reason to introduce this pretext task is that we need to keep a certain graph structure while the two views are generally from the feature view and lack topology information.

Adjacency Matrix Reconstruction. Specifically, as shown in Fig. 4 in purple blocks, we use a traditional inner product decoder to reconstruct the graph structure for link prediction, which is defined as:

| (8) |

where is obtained by concatenating ’Ego Embeddings’ and ’Agg Embeddings’, denotes the reconstructed adjacency matrix, and is a non-linear activation function - we use the sigmoid activation function in MVGE. This function determines the likelihood of pairwise nodes connected in . During the training phase, our goal is to iteratively minimize a reconstruction loss by capturing the similarity between and . Thus, the objective function can be formulated as a cross-entropy loss:

| (9) |

Learning with three pretext tasks. To train our model end-to-end and learn node representations for downstream tasks, we jointly leverage both the ego and aggregated feature restoration loss and the adjacency matrix reconstruction loss. Thus, the overall object function is defined as:

| (10) |

where we aim to minimize during the optimization, and represents the weights that balance the and , is a balance factor that balances the averaged feature reconstruction loss and the adjacency matrix reconstruction loss.

5. Experiments

We choose three graph analysis tasks, i.e., node classification, link prediction, and pairwise node classification, to evaluate the effectiveness of representations learned from graphs with different global homophily. And we demonstrate the superiority of MVGE by comparison with 9 state-of-the-art techniques. Furthermore, we show the significance of designs in MVGE through rich ablation studies. The experiments are performed based on 8 popular benchmark datasets with global homophily ratio ranging from strong heterophily to strong homophily and two synthetic datasets where we can control the homophily/heterophily level.

5.1. Experiment Settings:

Datasets. Statistic details of those datasets are listed in Table 1.

-

•

Cora, Citeseer, Pubmed, Corafull(Namata et al., 2012): standard citation networks where nodes represent documents, edges are citation links, and features are the bag-of-words representation of the document.

-

•

Chameleon(Rozemberczki et al., 2021): page-page networks in Wikipedia, where nodes represent articles from the English Wikipedia, edges reflect mutual links between them. Node features indicate the presence of particular nouns in the articles and the average monthly traffic.

-

•

Cornell, Texas, Wisconsin(Pei et al., 2020): school department Web Page networks, where nodes represent web pages, and edges are hyperlinks between them. Node features are the bag-of-words representation of web pages. The web pages are manually classified into the five categories, student, project, course, staff, and faculty.

-

•

Synthetic datasets. syn-cora and syn-products are two synthetic datasets where we can control the homophily/heterophily level(Zhu et al., 2020). Node features are generated by sampling nodes with the same class label from real-world datasets Cora and ogb-products.

| Dataset | Nodes | Edges | Features | Classes | |

| Cora | 2,708 | 5,278 | 1,433 | 7 | 0.81 |

| Citeseer | 3,327 | 4,676 | 3,703 | 6 | 0.74 |

| Pubmed | 19,717 | 44,327 | 500 | 3 | 0.8 |

| Corafull | 19.793 | 63,421 | 8710 | 70 | 0.57 |

| Chameleon | 2,277 | 31,371 | 2325 | 5 | 0.23 |

| Cornell | 183 | 277 | 1,703 | 5 | 0.3 |

| Texas | 183 | 279 | 1,703 | 5 | 0.11 |

| Wisconsin | 251 | 450 | 1,703 | 5 | 0.21 |

| syn-cora | 1,490 | 2,968 | 1,433 | 5 | [0.1,0.3,0.5,0.7,0.9] |

| syn-products | 10,000 | 59,648 | 101 | 10 | [0.1,0.3,0.5,0.7,0.9] |

Baselines. In comparative experiments, we compare MVGE with 9 self-supervised models with open source code, including two contrastive models learning from multi-views with several training objectives, MERIT and MVGRL, and one beyond homophily assumption, PairE. We aim to provide a rigorous and fair comparison between different models on each dataset by using the same dataset splits and training procedure. The introduction of the comparison method is as follows:

-

•

DeepWalk(Perozzi et al., 2014): A GRL algorithm based on random walk, utilizing Word2Vec and optimizing node embeddings by matching the co-occurrence rates of nodes on short random walk paths over graphs.

-

•

SAGE-U(Hamilton et al., 2017a): An unsupervised variant of GraphSAGE generates node embeddings by sampling a fixed number of neighbors for each node and aggregating their features.

-

•

DGI(Velickovic et al., 2019): A contrastive learning method via maximizing mutual information between local and global representations for embedding learning.

-

•

GAE(Kipf and Welling, 2016b): The first algorithm to process graph-structured data with autoencoder, which captures the structural information of the graph by reconstructing the adjacency matrix.

-

•

ARVGE(Pan et al., 2018): An autoencoder-based solution that augments GAE by adding an adversarial module on the obtained embeddings to learn more robust graph representations, thereby improving the model’s performance on sparse and noisy graph data.

-

•

P-GNN(You et al., 2019): A new class of GNNs for computing position-aware node embeddings, which capture positions/locations of nodes with respect to the anchor nodes by computing the distance of a given target node to each anchor-set.

-

•

MERIT(Jin et al., 2021b): A multi-view self-supervised model that learns node representations by enhancing Siamese self-distillation with multi-scale contrastive learning.

-

•

MVGRL(Hassani and Khasahmadi, 2020): A multi-view self-supervised model that learns node representations and graph level representations by contrasting structural views of graphs.

-

•

PairE(Li et al., 2022): A multi-task unsupervised model to preserve both information for homophily and heterophily by going beyond the localized node view and utilizing paired nodes entities with richer expression powers.

Experiment Settings. For MVGE and all state-of-the-art baselines, we use the Adam optimizer with a learning rate of 0.01, and the embedding dimension of both embeddings in MVGE and node embeddings for other benchmarks is fixed to 128. All models are run 10 times, and the experiment results are the mean values with standard deviation. Other parameters are set as the default settings in the corresponding papers. For the walk-based aggregated features in MVGE, we generate 3 random walk paths for each node and set them with deep walk lengths as 3, 5, and 10 respectively. We run 200 epochs for each trainning.

Downstream Tasks. The experimental settings for different downstream tasks are as follows:

Node classification. For this task, all the nodes are used in the embedding generation process. To balance the effect of three reconstruction tasks, we perform Optuna(Akiba et al., 2019) for efficient hyper-parameter search and tune and between 0 and 1 with a step size of 0.1. Then, the graph encoder is trained with the obtained loss weights. To evaluate the trained graph encoder, we adopt a linear evaluation protocol by training a separate logistic regression classifier on top of the learned node representations with the one-vs-rest strategy. For all of the datasets, we use 30% of nodes to construct the training set, while using the remaining nodes as the testing set. We repeat the experiments ten times and report the average Micro-F1-score with the standard deviation.

Link prediction. The models are trained on an incomplete version of these datasets where parts of the citation links(edges) have been removed, while all node features are kept. Specifically, we use 15% existing links and an equal number of nonexistent links as test sets. The remaining 85% existing links and an equal number of randomly generated nonexistent links were used as the training set.

Pairwise node classification. For the pairwise node classification task, we predict whether a pair of nodes belong to the same class or not. In this case, a pair of nodes that belong to the same class is a positive example and the label will be marked as 1 otherwise as 0(You et al., 2019). Specifically, we randomly generate the same number of positive and negative samples and follow the same dataset split ratio as link prediction.

For both the link prediction task and pairwise node classification task, the paired node embeddings are calculated from the source and target node embedding with the L2 operator. We report the averaged ROC_AUC score with the standard deviation on the test set over 10 runs with different random seeds and train/test split.

5.2. Results on Synthetic Datasets

Using synthetic graphs syn-cora and syn-products with various global homophily ratio , we show the effectiveness of MVGE and the significance of diverse pretext tasks through node classification comparison task and ablation studies of variants of MVGE.

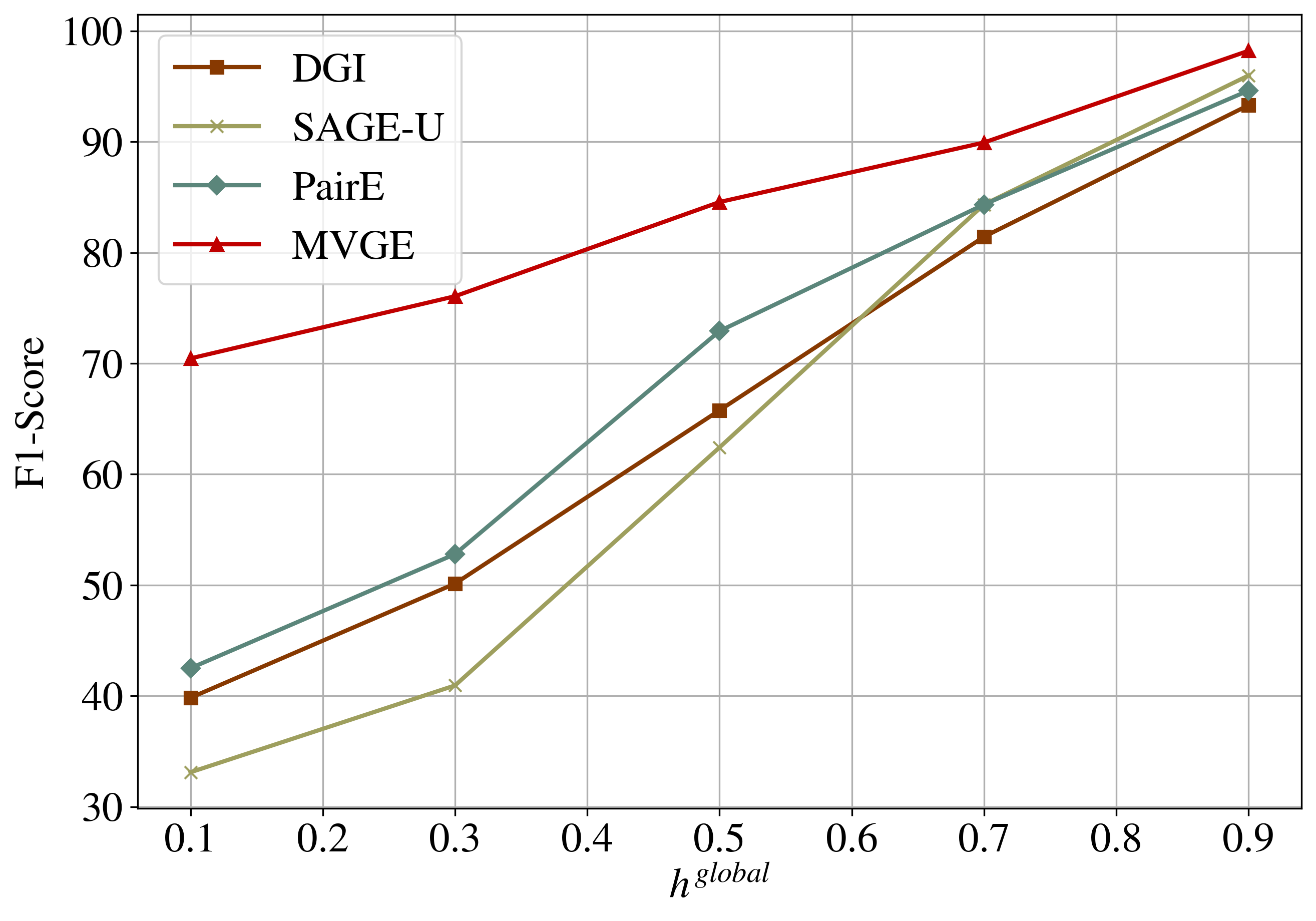

Performance comparison under different global homophily. Fig. 6(a) shows the Micro-F1 score of node classification on syn-cora. While all models achieve near-perfect performance under strong homophily, the performance of baselines based on homophily assumptions, e.g., DGI and SAGE-U, significantly deteriorates under heterophily settings. In comparison, MVGE has the best overall performance, outperforming other baselines, especially in heterophily settings, while tying with other models in homophily. PairE, which encodes different signals in the embeddings, has more advantages than other baselines under heterophily settings but still has weak performance.

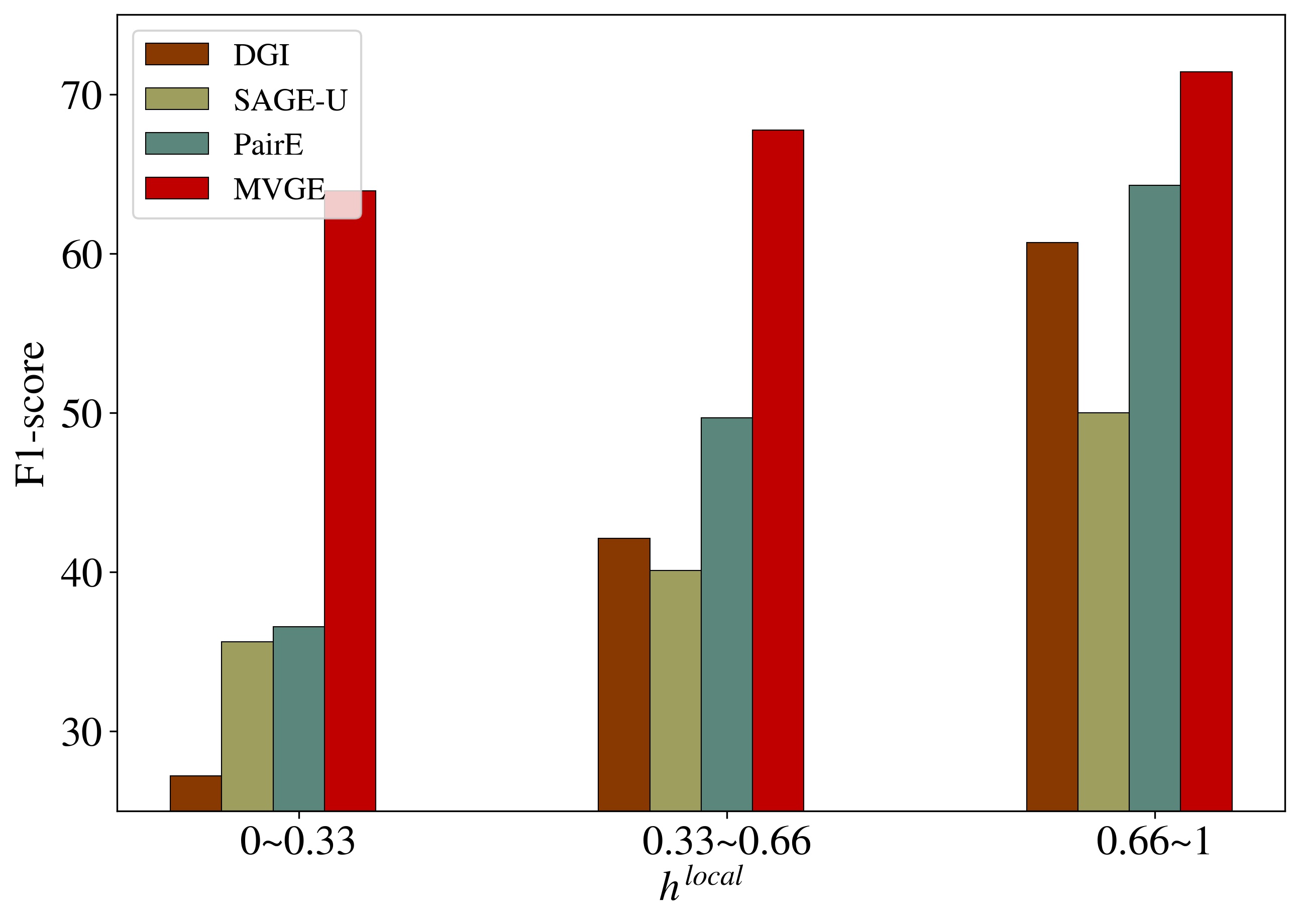

Performance comparison under different local homophily. To better analyze why MVGE can achieve good performance in graphs with different homophily settings, we conduct an analytical experiment by evaluating the node classification performance on nodes with different local homophily defined in Eq. 1 on syn-cora with global homophily 0.1 and 0.7. According to local homophily, we divide nodes into three different ranges, [0,0.33), [0.33,0.66) and [0.66, 1]. Fig. 6(b) and 6(c) show node classification results over different local homophily intervals. We can clearly see that MVGE keeps a rather good performance on the nodes with low local homophily, which are difficult to identify their classes under homophily assumption. In comparison, DGI and SAGE-U suffer poor performance on those difficult nodes, i.e., 25.42% in and 36.62% in , for nodes with strong heterophily (00.33). They have comparable good performance for nodes with high local homophily (0.661), i.e., 97.05% and 91.63%. PairE, which leverages low-frequency and high-frequency signals, learns more effectively under heterophily settings. However, it uses paired node as a basic embedding unit and needs the translation between pair embeddings to node embeddings. It suffers information loss during this translation. MVGE achieves consistent performance on both local heterophily and homophily and outperforms the other three baselines.

Impact of ego-task/agg-task under different global homophily. To discuss the performance impact of the two feature reconstruction tasks on downstream tasks under different global homophily, we conduct node classification on two synthetic benchmark syn-cora and syn-products. Fig. 6 shows the classification results at 30 training ratio. We can observe that the performance under the two feature reconstruction tasks is generally better than the single pretext task. It verifies the importance of integrating different information into node embeddings. On the other hand, comparing the effects of ego-task and agg-task, we can observe that for networks with strong heterophily, embeddings from ego-task generally achieve much better performance, even better than all-task in syn-cora with global homophily 0.1. In this graph, a node significantly differs from its neighbors. In this case, the agg-task might collect much noise that can perturb the learning process and deviate node representations from the ground truth. In comparison, the ego-task guarantees the basic facts by preserving the high-frequency signals of the nodes themselves. For networks with strong homophily, only the agg-task can achieve good performance. The reason is that the agg-task essentially enhances the low-frequency signals (similar parts) in the neighborhood information. Of course, due to the lack of labels during GRL, we actually do not know the actual trend of connectivity during embedding. Thus, it is important to use two pretext tasks.

5.3. Results on real-world Datasets

In this section, we evaluate MVGE with three different downstream tasks. Each of them normally demands different types of information for classification.

5.3.1. Results on Node Classification Task

We report the overall node classification results in Table 2. We can observe from the table that MVGE outperforms all unsupervised baselines on all eight datasets with different global homophily. For example, in Cornell, MVGE achieves the average Micro-F1 score of 78.89%, and up to 12% relative performance improvements over the compared baselines. Experiment results clearly show that MVGE can effectively retain rich signals during embeddings with its multi-view self-supervision design and the distribution-based reconstruction tasks that can keep both low-frequency and high-frequency signals in node features. Other baselines generally achieve good performance in the graphs with homophily while comparably poor performance in heterophily settings. There still exists a large performance gap compared with the models beyond homophily assumption. For example, the contrastive method MVGRL achieves 82.09% Micro-F1 score in Cora with strong homophily, while only 58.85% in Cornell with strong heterophily.

| Name | Cora | Citeseer | Pubmed | Corafull | Chameleon | Cornell | Texas | Wisconsin |

|---|---|---|---|---|---|---|---|---|

| Hom.ratio | 0.81 | 0.74 | 0.8 | 0.57 | 0.23 | 0.3 | 0.11 | 0.21 |

| DeepWalk | 80.055.74 | 56.0210.14 | 79.803.66 | 37.443.73 | 24.160.17 | 48.6727.84 | 47.0029.28 | 48.6727.84 |

| SAGE-U | 80.189.85 | 71.344.32 | 83.823.01 | 55.771.54 | 42.909.27 | 48.1813.61 | 61.2711.93 | 60.008.43 |

| DGI-gcn | 81.287.58 | 68.949.10 | 83.111.50 | 43.461.66 | 42.907.33 | 38.7311.93 | 77.8218.31 | 60.0022.31 |

| GAE | 78.204.61 | 56.327.89 | 81.891.87 | 54.961.68 | 39.455.56 | 63.099.87 | 59.0916.26 | 54.6721.33 |

| ARVGE | 79.067.94 | 60.4210.01 | 71.503.18 | 45.112.21 | 41.616.11 | 54.3330.27 | 50.3328.20 | 54.4221.84 |

| P-GNN | 63.6010.28 | 46.926.04 | 69.082.42 | 46.122.16 | 33.856.67 | 53.8911.17 | 57.806.78 | 49.339.49 |

| MERIT | 41.801.46 | 68.130.23 | 85.050.10 | 26.500.54 | 30.464.79 | 66.9013.09 | 54.665.33 | |

| MVGRL | 82.095.25 | 62.644.41 | 80.121.71 | 52.992.04 | 41.196.64 | 58.8516.79 | 62.2218.33 | 60.1318.75 |

| PairE | 86.518.52 | 72.142.53 | 85.732.34 | 61.020.95 | 45.523.37 | 66.737.77 | 61.514.86 | 68.009.98 |

| MVGE | 86.952.40 | 75.350.95 | 86.820.85 | 66.901.98 | 45.681.79 | 78.899.19 | 81.2710.05 | 79.339.10 |

| Name | Cora | Citeseer | Pubmed | Corafull | Chameleon | Cornell | Texas | Wisconsin |

|---|---|---|---|---|---|---|---|---|

| DeepWalk | 74.350.83 | 77.790.53 | 72.840.71 | 86.690.27 | 74.230.59 | 55.974.28 | 55.395.41 | 66.692.94 |

| SAGE-U | 89.970.39 | 92.440.22 | 87.970.23 | 95.730.04 | 82.640.64 | 75.984.60 | 63.912.53 | 77.212.86 |

| DGI-gcn | 83.701.00 | 85.742.24 | 84.570.33 | 79.200.56 | 82.220.44 | 82.983.52 | 83.812.90 | 84.341.99 |

| P-GNN | 82.170.04 | 77.911.44 | 82.760.17 | 90.050.15 | 87.600.80 | 48.157.08 | 48.2112.37 | 64.071.11 |

| GAE | 91.180.58 | 88.000.93 | 96.410.11 | 96.920.10 | 98.480.09 | 47.163.58 | 43.562.63 | 54.113.39 |

| ARVGAE | 92.140.24 | 89.080.47 | 92.041.21 | 95.820.61 | 97.820.16 | 56.703.12 | 48.962.56 | 75.381.62 |

| MERIT | 85.840.10 | 61.950.32 | 63.900.23 | 52.441.38 | 70.111.36 | 56.255.86 | 53.643.30 | |

| MVGRL | 84.521.47 | 60.382.23 | 80.911.35 | 78.590.96 | 80.720.55 | 75.055.84 | 81.176.50 | 76.086.95 |

| PairE | 92.920.41 | 95.100.20 | 94.980.23 | 96.910.09 | 93.630.27 | 80.572.47 | 83.891.56 | 86.472.64 |

| MVGE | 94.860.31 | 95.360.16 | 96.200.06 | 97.720.04 | 94.760.08 | 85.151.46 | 84.581.38 | 90.331.50 |

| Name | Cora | Citeseer | Pubmed | Corafull | Chameleon | Cornell | Texas | Wisconsin |

|---|---|---|---|---|---|---|---|---|

| DeepWalk | 60.371.24 | 53.051.47 | 53.050.27 | 61.910.51 | 58.200.61 | 52.583.19 | 60.573.95 | 52.501.78 |

| SAGE-U | 57.171.53 | 50.851.65 | 50.470.18 | 53.340.59 | 50.180.61 | 70.494.07 | 71.734.30 | 71.231.69 |

| DGI-gcn | 62.811.33 | 51.991.42 | 58.431.82 | 53.711.58 | 49.720.40 | 65.453.88 | 71.444.39 | 59.881.52 |

| GAE | 75.842.87 | 65.091.14 | 76.281.00 | 86.910.06 | 57.780.86 | 53.512.30 | 56.234.24 | 52.495.16 |

| ARVGAE | 77.381.43 | 64.751.77 | 73.281.11 | 85.910.56 | 60.900.30 | 53.624.98 | 57.998.63 | 57.634.53 |

| P-GNN | 66.400.83 | 58.070.98 | 61.690.20 | 74.180.32 | 58.470.55 | 51.974.49 | 52.733.53 | 49.321.88 |

| MERIT | 67.081.12 | 53.670.81 | 60.230.31 | 51.040.25 | 72.741.28 | 69.71.46 | 63.253.87 | |

| MVGRL | 81.490.58 | 64.030.64 | 81.621.00 | 90.100.20 | 57.621.02 | 62.476.58 | 71.171.13 | 59.955.58 |

| PairE | 66.541.46 | 60.950.96 | 69.670.63 | 73.640.72 | 58.640.67 | 72.623.51 | 71.894.40 | 73.562.95 |

| MVGE | 83.250.49 | 79.610.56 | 84.130.03 | 90.210.11 | 60.970.29 | 87.890.46 | 85.610.53 | 87.851.54 |

5.3.2. Results on Link Prediction task

Table 3 shows the ROC_AUC scores of the link prediction task on all eight datasets. We can find that our model achieves state-of-the-art results with respect to unsupervised models in six out of eight datasets. For example, in Wisconsin, we achieve a 90.33% ROC_AUC score which is almost 4% relative improvement over the previous state-of-the-art. It attributes to the fact that we design an adjacency matrix reconstruction task to predict whether there is a link between two nodes directly. In addition, the random walk operation in data augmentation also captures the structural information of different local neighborhoods in the network to a certain extent.

GAE and ARVGE, which adopt link reconstruction in the decoder, also achieve competitive performance under homophily settings. However, their homophily assumption making connected nodes with similar embeddings, these models fail to predict links accurately in heterophily settings, e.g., Cornell, Texas, and Wisconsin.

5.3.3. Results on Pair-wise Prediction task

Table 4 summarizes the performance of MVGE and other baselines on pairwise node classification tasks, where MVGE gains the best classification accuracy on all eight datasets. Specifically, MVGE achieves 89.89% ROC_AUC score while the previous state-of-the-art method MERIT can only achieve 72.74% ROC_AUC, which means that MVGE gives about 15% relative improvement on this task.

In the pairwise node classification task, we believe that the implicit relationship of the pair is related to the low- and high-frequency information of the features of the paired nodes. In other words, two nodes with similar embeddings are more likely to fall into the same class. For paired nodes belonging to different classes, the embeddings of paired nodes should contain enough high-frequency information for classification. Results show that MVGE has a superior classification performance and can effectively differentiate nodes from different classes. While models based on homophily assumption are concerned with capturing the commonality of node features and inevitably ignore the difference. Their designs make rich relationship patterns become vague and indistinguishable in learned representation. Thus, their performance in graphs with heterophily is rather poor, e.g., DGI 59.88% and MGRL 59.95% v.s. MVGE 87.85%.

5.4. Ablation Study

In this section, we analyze and verify the effectiveness of different designs.

5.4.1. Design choices for walk-based aggregated features.

This subsection analyzes and evaluates the contribution of multiple random walk paths and impacts of different aggregators in Definition 4 based on node classification on synthetic datasets. (1) Fig. 7(a) and 7(b) shows the results for MVGE and three variants, where , , and denotes there is only single walk path in Eq. (4) with different length respectively. We observe that MVGE consistently performs better than all the variants, indicating that the agg features obtained by combining multiple walk paths contain the richest information. (2) Furthermore, we consider compare the performance of MVGE with different function in Eq. (4), i.e., , , and . Fig. 7(c) and 7(d) show that the generally achieves that best performance in all global homophily settings and significantly outperforms the others () on syn-cora () by up to 13%. These results support the effectiveness of the concatenation operation.

5.4.2. Effectiveness of Linear Encoder For Ego Features

To verify the effectiveness of the linear encoder for ego features, we compare it with a variant of MVGE with two separated GCN encoders. Fig. 8 shows the node classification results in Micro-F1 score on syn-cora and syn-products with different global homophily. We observe that MVGE with linear encoder consistently performs better than the variant, existing a significantly bigger performance gap in strong heterophily settings. As a popular encoding scheme, the essence of GCN is a low-pass filter: each node repeatedly averages its own features and those of its neighbors to update its own feature representation(Nt and Maehara, 2019; Xu et al., 2020). This mechanism results in final embeddings that are similar across neighboring nodes for any set of original features (Rossi et al., 2020). While this may work well in the case of homophily, where neighbors likely belong to the same class or have similar features, it poses severe challenges in the case of heterophily: it is not possible to distinguish neighbors from different labels based on the similar learned representations. In contrast, a simple linear encoding scheme for ego features can avoid smoothing high-frequency information in features and allow learning distinguishable representations.

5.4.3. Effectiveness of Adjacency Matrix Reconstruction Task.

Fig. 9 gives the node classification and link prediction results of MVGE and a variant without the adjacency reconstruction task on two synthetic datasets. We observe similar trends on both two benchmarks and two downstream tasks: MVGE with adj-task almost has the best overall performance outperforming the variants without adj-task. Results clearly show the importance of merging embeddings with different semantic meanings. In addition, when the global homophily is rather high (0.9), ego embeddings and agg embeddings have very close semantics, which results in a comparably small performance gap between MVGE and its variant. In comparison, when with low global homophily, their performance gaps are generally large. Particularly, as shown in Fig.9(a), since the purpose of adj-ask is to learn neighboring patterns between connected nodes, e.g., close representations, MVGE with adj-task is less effective than MVGE without adj-task in node classification under strong heterophily (0.1). Furthermore, as the adj-task is able to capture some important characteristics of the graph structure by reconstructing an adjacency matrix close to the true one starting from the node embeddings, the performance of link prediction is greatly improved.

An interesting observation worth noting is that the performance gaps to be opposite in syn-cora and syn-products under strong heterophily (=0.1). The three core designs involved in this article all have their unique roles: t1, Linear Encoder for Ego-features, to preserve high-frequency information in ego features; t2, GNN Encoder for Agg-features, to learn the commonality among neighbors; t3, Reconstruct the Adjacency Matrix, to learn the proximity of topological distances. t1 and t2 directly preserve the difference and commonality between node features, and their role is to make the distance between the node and its neighbors as consistent as possible in the original feature space and embedding space. While t3 only considers structural information, it is expected that structurally adjacent nodes will also maintain a relatively close distance in the embedding space.

Therefore, in some cases, the goals of the two will conflict, especially when there are sparse networks with high heterogeneity and low degree (such as syn-cora with heterophily=0.1 and degree = 3.9), at this time, the labels of the central node and its neighbors are all different with a high probability. In such a case, the t1/t2 task will maintain the characteristics of the node and the commonality with the neighborhood , which usually keeps the embeddings of and highly distinguishable, while t3 will make the embeddings of and are as similar as possible. At this time, there is a serious conflict between the learning goals of the two, which may cause confusion in learning. For syn-product, its degree is 11, so even if homophily=0.1, there may still be some similar nodes around the central node . Therefore, t1/t2 and t3 can better find consistent signals, and their collaborative learning can also effectively improve performance. We think this is what causes the performance gaps to be opposite in syn-cora and syn-products under strong heterophily (=0.1). All in all, the impact of homophily level is closely related to the sparsity of the network (node degree), in heterophily, low-degree nodes pose stronger challenges in learning (Zhu et al., 2020).

5.4.4. Functions in merging ego and agg embeddings

To validate the effectiveness of the concatenation operation for learned representations, we conduct experiments on six datasets for three MVGE variants with different functions to merge the ego embeddings and agg embeddings, i.e., , and . Fig. 10 shows the node classification results under 30% training ratio. We can observe that function achieves the best performance in all six datasets under different global homophily, as function can keep the integrity and personalization of the input information as much as possible, and avoid losses caused by information mixing. The performance of the other two aggregator functions is relatively unstable. Specifically, the function generally achieves better performance than the function. The embedding accumulation operation of can, to a certain extent, preserve the degree information of nodes.

6. Conclusion

Due to a lack of support from labels, graph representation learning methods normally make the homophily assumptions on the graph, which result in their poor performance in heterophily settings. Furthermore, one pretext task can hardly capture diverse graph information into task-agnostic embeddings without label supervision. In this paper, we present a novel multi-view graph representation framework MVGE with the usage of diverse pretext tasks to capture different signals in graphs into embeddings. More specifically, a set of new pretext tasks are designed to encode different types of signals, and a straightforward operation is proposed to maintain both the commodity and personalization in both the attribute and the structural levels. Extensive experiments on both synthetic and real-world network datasets show that the node representations learned with MVGE achieve significant performance improvements in different downstream tasks, especially on graphs with heterophily.

7. Acknowledgements

This work was supported by the Science and Technology Research and Development Program Project of China railway group limited (Project Nos. 2020-Special-02 and 2021-Special-08). The computing resources supporting this work were partially provided by High-Flyer AI. (Hangzhou High-Flyer AI Fundamental Research Co., Ltd.)

References

- (1)

- Abu-El-Haija et al. (2019) Sami Abu-El-Haija, Bryan Perozzi, Amol Kapoor, Nazanin Alipourfard, Kristina Lerman, Hrayr Harutyunyan, Greg Ver Steeg, and Aram Galstyan. 2019. Mixhop: Higher-order graph convolutional architectures via sparsified neighborhood mixing. In international conference on machine learning. PMLR, 21–29.

- Akiba et al. (2019) Takuya Akiba, Shotaro Sano, Toshihiko Yanase, Takeru Ohta, and Masanori Koyama. 2019. Optuna: A next-generation hyperparameter optimization framework. In Proceedings of the 25th ACM SIGKDD international conference on knowledge discovery & data mining. 2623–2631.

- Bo et al. (2021) Deyu Bo, Xiao Wang, Chuan Shi, and Huawei Shen. 2021. Beyond low-frequency information in graph convolutional networks. arXiv preprint arXiv:2101.00797 (2021).

- Bronstein et al. (2017) Michael M Bronstein, Joan Bruna, Yann LeCun, Arthur Szlam, and Pierre Vandergheynst. 2017. Geometric deep learning: going beyond euclidean data. IEEE Signal Processing Magazine 34, 4 (2017), 18–42.

- Chen and He (2021) Xinlei Chen and Kaiming He. 2021. Exploring simple siamese representation learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 15750–15758.

- Chien et al. (2020) Eli Chien, Jianhao Peng, Pan Li, and Olgica Milenkovic. 2020. Adaptive universal generalized pagerank graph neural network. arXiv preprint arXiv:2006.07988 (2020).

- Grover and Leskovec (2016) Aditya Grover and Jure Leskovec. 2016. node2vec: Scalable feature learning for networks. In Proceedings of the 22nd ACM SIGKDD international conference on Knowledge discovery and data mining. 855–864.

- Hamilton et al. (2017a) Will Hamilton, Zhitao Ying, and Jure Leskovec. 2017a. Inductive representation learning on large graphs. Advances in neural information processing systems 30 (2017).

- Hamilton et al. (2017b) William L Hamilton, Rex Ying, and Jure Leskovec. 2017b. Representation learning on graphs: Methods and applications. arXiv preprint arXiv:1709.05584 (2017).

- Hassani and Khasahmadi (2020) Kaveh Hassani and Amir Hosein Khasahmadi. 2020. Contrastive multi-view representation learning on graphs. In International Conference on Machine Learning. PMLR, 4116–4126.

- He et al. (2016) Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. 2016. Deep residual learning for image recognition. In Proceedings of the IEEE conference on computer vision and pattern recognition. 770–778.

- Herzig et al. (2020) Roei Herzig, Amir Bar, Huijuan Xu, Gal Chechik, Trevor Darrell, and Amir Globerson. 2020. Learning canonical representations for scene graph to image generation. In European Conference on Computer Vision. Springer, 210–227.

- Hjelm et al. (2018) R Devon Hjelm, Alex Fedorov, Samuel Lavoie-Marchildon, Karan Grewal, Phil Bachman, Adam Trischler, and Yoshua Bengio. 2018. Learning deep representations by mutual information estimation and maximization. arXiv preprint arXiv:1808.06670 (2018).

- Hou et al. (2019) Yifan Hou, Jian Zhang, James Cheng, Kaili Ma, Richard TB Ma, Hongzhi Chen, and Ming-Chang Yang. 2019. Measuring and improving the use of graph information in graph neural networks. In International Conference on Learning Representations.

- Jia and Benson (2020) Junteng Jia and Austin Benson. 2020. Outcome correlation in graph neural network regression. arXiv preprint arXiv:2002.08274 (2020).

- Jin et al. (2021b) Ming Jin, Yizhen Zheng, Yuan-Fang Li, Chen Gong, Chuan Zhou, and Shirui Pan. 2021b. Multi-scale contrastive siamese networks for self-supervised graph representation learning. arXiv preprint arXiv:2105.05682 (2021).

- Jin et al. (2021a) Wei Jin, Xiaorui Liu, Xiangyu Zhao, Yao Ma, Neil Shah, and Jiliang Tang. 2021a. Automated self-supervised learning for graphs. arXiv preprint arXiv:2106.05470 (2021).

- Kipf and Welling (2016a) Thomas N Kipf and Max Welling. 2016a. Semi-supervised classification with graph convolutional networks. arXiv preprint arXiv:1609.02907 (2016).

- Kipf and Welling (2016b) Thomas N Kipf and Max Welling. 2016b. Variational graph auto-encoders. arXiv preprint arXiv:1611.07308 (2016).

- Kullback (1997) Solomon Kullback. 1997. Information theory and statistics. Courier Corporation.

- Li et al. (2019) Qimai Li, Xiao-Ming Wu, Han Liu, Xiaotong Zhang, and Zhichao Guan. 2019. Label efficient semi-supervised learning via graph filtering. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 9582–9591.

- Li et al. (2022) You Li, Bei Lin, Binli Luo, and Ning Gui. 2022. Graph Representation Learning Beyond Node and Homophily. IEEE Transactions on Knowledge and Data Engineering (2022).

- Lim et al. (2021) Derek Lim, Felix Hohne, Xiuyu Li, Sijia Linda Huang, Vaishnavi Gupta, Omkar Bhalerao, and Ser Nam Lim. 2021. Large scale learning on non-homophilous graphs: New benchmarks and strong simple methods. Advances in Neural Information Processing Systems 34 (2021), 20887–20902.

- Lu et al. (2021) Yuhuan Lu, Hongliang Ding, Shiqian Ji, NN Sze, and Zhaocheng He. 2021. Dual attentive graph neural network for metro passenger flow prediction. Neural Computing and Applications 33, 20 (2021), 13417–13431.

- Namata et al. (2012) Galileo Namata, Ben London, Lise Getoor, Bert Huang, and U Edu. 2012. Query-driven active surveying for collective classification. In 10th International Workshop on Mining and Learning with Graphs, Vol. 8. 1.

- Newman (2018) Mark Newman. 2018. Networks. Oxford university press.

- Newman (2003) Mark EJ Newman. 2003. Mixing patterns in networks. Physical review E 67, 2 (2003), 026126.

- Nt and Maehara (2019) Hoang Nt and Takanori Maehara. 2019. Revisiting graph neural networks: All we have is low-pass filters. arXiv preprint arXiv:1905.09550 (2019).

- Pan et al. (2018) Shirui Pan, Ruiqi Hu, Guodong Long, Jing Jiang, Lina Yao, and Chengqi Zhang. 2018. Adversarially regularized graph autoencoder for graph embedding. arXiv preprint arXiv:1802.04407 (2018).

- Peel et al. (2018) Leto Peel, Jean-Charles Delvenne, and Renaud Lambiotte. 2018. Multiscale mixing patterns in networks. Proceedings of the National Academy of Sciences 115, 16 (2018), 4057–4062.

- Pei et al. (2020) Hongbin Pei, Bingzhe Wei, Kevin Chen-Chuan Chang, Yu Lei, and Bo Yang. 2020. Geom-gcn: Geometric graph convolutional networks. arXiv preprint arXiv:2002.05287 (2020).

- Perozzi et al. (2014) Bryan Perozzi, Rami Al-Rfou, and Steven Skiena. 2014. Deepwalk: Online learning of social representations. In Proceedings of the 20th ACM SIGKDD international conference on Knowledge discovery and data mining. 701–710.

- Ribeiro et al. (2017) Leonardo FR Ribeiro, Pedro HP Saverese, and Daniel R Figueiredo. 2017. struc2vec: Learning node representations from structural identity. In Proceedings of the 23rd ACM SIGKDD international conference on knowledge discovery and data mining. 385–394.

- Rossi et al. (2020) Ryan A Rossi, Di Jin, Sungchul Kim, Nesreen K Ahmed, Danai Koutra, and John Boaz Lee. 2020. On proximity and structural role-based embeddings in networks: Misconceptions, techniques, and applications. ACM Transactions on Knowledge Discovery from Data (TKDD) 14, 5 (2020), 1–37.

- Rozemberczki et al. (2021) Benedek Rozemberczki, Carl Allen, and Rik Sarkar. 2021. Multi-scale attributed node embedding. Journal of Complex Networks 9, 2 (2021), cnab014.

- Standley et al. (2020) Trevor Standley, Amir Zamir, Dawn Chen, Leonidas Guibas, Jitendra Malik, and Silvio Savarese. 2020. Which tasks should be learned together in multi-task learning?. In International Conference on Machine Learning. PMLR, 9120–9132.

- Veličković et al. (2017) Petar Veličković, Guillem Cucurull, Arantxa Casanova, Adriana Romero, Pietro Lio, and Yoshua Bengio. 2017. Graph attention networks. arXiv preprint arXiv:1710.10903 (2017).

- Velickovic et al. (2019) Petar Velickovic, William Fedus, William L Hamilton, Pietro Liò, Yoshua Bengio, and R Devon Hjelm. 2019. Deep Graph Infomax. ICLR (Poster) 2, 3 (2019), 4.

- Wang et al. (2022) Haonan Wang, Jieyu Zhang, Qi Zhu, and Wei Huang. 2022. Augmentation-Free Graph Contrastive Learning. arXiv preprint arXiv:2204.04874 (2022).

- Wu et al. (2019) Felix Wu, Amauri Souza, Tianyi Zhang, Christopher Fifty, Tao Yu, and Kilian Weinberger. 2019. Simplifying graph convolutional networks. In International conference on machine learning. PMLR, 6861–6871.

- Xu et al. (2020) Bingbing Xu, Huawei Shen, Qi Cao, Keting Cen, and Xueqi Cheng. 2020. Graph convolutional networks using heat kernel for semi-supervised learning. arXiv preprint arXiv:2007.16002 (2020).

- Xu et al. (2018) Keyulu Xu, Chengtao Li, Yonglong Tian, Tomohiro Sonobe, Ken-ichi Kawarabayashi, and Stefanie Jegelka. 2018. Representation learning on graphs with jumping knowledge networks. In International Conference on Machine Learning. PMLR, 5453–5462.

- Yang et al. (2015) Cheng Yang, Zhiyuan Liu, Deli Zhao, Maosong Sun, and Edward Chang. 2015. Network representation learning with rich text information. In Twenty-fourth international joint conference on artificial intelligence.

- You et al. (2019) Jiaxuan You, Rex Ying, and Jure Leskovec. 2019. Position-aware graph neural networks. In International Conference on Machine Learning. PMLR, 7134–7143.

- Zhang et al. (2019) Jie Zhang, Yuxiao Dong, Yan Wang, Jie Tang, and Ming Ding. 2019. ProNE: Fast and Scalable Network Representation Learning.. In IJCAI, Vol. 19. 4278–4284.

- Zhang and Yang (2021) Yu Zhang and Qiang Yang. 2021. A survey on multi-task learning. IEEE Transactions on Knowledge and Data Engineering (2021).

- Zheng et al. (2022) Xin Zheng, Yixin Liu, Shirui Pan, Miao Zhang, Di Jin, and Philip S Yu. 2022. Graph Neural Networks for Graphs with Heterophily: A Survey. arXiv preprint arXiv:2202.07082 (2022).

- Zhong et al. (2022) Zhiqiang Zhong, Guadalupe Gonzalez, Daniele Grattarola, and Jun Pang. 2022. Unsupervised Heterophilous Network Embedding via -Ego Network Discrimination. arXiv preprint arXiv:2203.10866 (2022).

- Zhu et al. (2020) Jiong Zhu, Yujun Yan, Lingxiao Zhao, Mark Heimann, Leman Akoglu, and Danai Koutra. 2020. Beyond homophily in graph neural networks: Current limitations and effective designs. Advances in Neural Information Processing Systems 33 (2020), 7793–7804.

- Zhu et al. (2021) Meiqi Zhu, Xiao Wang, Chuan Shi, Houye Ji, and Peng Cui. 2021. Interpreting and unifying graph neural networks with an optimization framework. In Proceedings of the Web Conference 2021. 1215–1226.