Multi-scale tests of independence powerful for detecting explicit or implicit functional relationship

Abstract

In this article, we consider the problem of testing the independence between two random variables. Our primary objective is to develop tests that are highly effective at detecting associations arising from explicit or implicit functional relationship between two variables. We adopt a multi-scale approach by analyzing neighborhoods of varying sizes within the dataset and aggregating the results. We introduce a general testing framework designed to enhance the power of existing independence tests to achieve our objective. Additionally, we propose a novel test method that is powerful as well as computationally efficient. The performance of these tests is compared with existing methods using various simulated datasets.

1 Introduction

Tests of independence play a vital role in statistical analysis. They are used to determine relationships between variables, validate models, select relevant features from a large pool of features, and establish causal directions, among other applications. These tests are particularly important in fields such as economics, biology, social sciences, and clinical trials.

The mathematical formulation for testing independence is as follows. Consider two random variables and with distribution functions and , respectively, and let represent their joint distribution function. The objective is to test the null hypothesis against the alternative hypothesis based on independent and identically distributed (i.i.d.) observations drawn from , where

| (1) |

A substantial amount of research has been conducted on this topic, and it remains an active area of investigation. Pearson’s correlation is perhaps the most well-known classical measure that quantifies linear dependence. Spearman’s rank correlation (Spearman, 1904) and Kendall’s concordance-discordance statistic (Kendall, 1938) are used to measure monotonic associations. Hoeffding (1948) proposed a measure using the distance between the joint distribution function and the product of marginals. Székely et al. (2007) introduced distance correlation (dCor), based on energy distance, while Gretton et al. (2007) leveraged kernel methods to develop the Hilbert-Schmidt independence criterion (HSIC). Reshef et al. (2011) proposed the maximal information coefficient (MIC), an information-theoretic measure that reaches its maximum when one variable is a function of the other. (Heller et al., 2013) introduced a powerful test that breaks down the problem into multiple contingency table independence tests and aggregates the results. Zhang (2019) proposed a test that is uniformly consistent with respect to total variation distance. Roy et al. (2020), and Roy (2020) used copula and checkerboard copula approaches to propose monotonic transformation-invariant tests of dependence for two or more variables. Chatterjee (2021) introduced an asymmetric measure of dependence with a simple form and an asymptotic normal distribution under independence, which attains its highest value only when a functional relationship exists. These are just a few of the many contributions in this field.

Different tests of independence have unique strengths and limitations. As demonstrated in Theorem 2.2 of Zhang (2019), no test of independence can achieve uniform consistency. This implies that for any fixed sample size, the power of a test cannot always exceed the nominal level across all alternatives. Therefore, selecting the most appropriate test for a given scenario is crucial. For instance, the correlation coefficient is particularly powerful for detecting dependence between two jointly normally distributed random variables. It is highly effective at identifying linear relationships. Similarly, Spearman’s rank correlation is particularly suited for detecting monotonic relationships.

In this article, we aim to develop tests of independence (as described in Equation 1) that will be particularly powerful for detecting association between two random variables, and , that adhere to the following parametric equation:

| (2) |

where and are continuous functions not constant on any interval, is a continuous random variable defined on an interval, and and are independent noise components that are each independent of . As a result, our tests will also be powerful for detecting association between random variables and that are functionally related to each other, i.e., when or . In contrast to the method proposed by Chatterjee (2021), which is powerful for detecting dependence when is a function of , in our approach, and are treated symmetrically.

The article is organized as follows. In Section 2, we introduce a general testing framework aimed at enhancing the power of existing tests of independence in scenarios described by Equation (2) and examine its computational complexity. In Section 3, we apply this framework to develop a novel test of independence and analyze its computational complexity. Section 4 presents a performance comparison of our methods on various simulated datasets. Finally, we conclude with a discussion and summary of our findings.

2 A General Testing Framework

Let us begin this section with a few examples of continuous dependent random variables and that satisfy the parametric equation described in Equation 2. We consider three examples, referred to as Example A, B, and C.

-

•

Example A: and , where , , and are mutually independent, with and .

-

•

Example B: and .

-

•

Example C: and , where and is independent of and .

The scatter plots for these examples are shown in Figure 1. A common feature of all these examples is that within certain neighborhoods around support points, the conditional correlation between and is non-zero. Specifically, there exist points and in the support of the distribution such that is non-zero, where represents the -neighborhood of , defined as . In Figure 1, red rectangles highlight instances of such neighborhoods with non-zero conditional correlation.

As the next example, we consider the distribution, originally introduced in (Zhang, 2019) where it is defined as the uniform distribution over a set of parallel and intersecting lines given by , where . Figure 2 illustrates the distribution for , and . If follows the distribution, the marginals and are dependent but both follow a continuous uniform distribution over . Moreover, and can be shown to satisfy Equation 2. Although for the distribution, there exist neighborhoods around support points where the conditional correlation is extremely high.

Red rectangles highlight some such neighborhoods with high conditional correlation values in , , and distributions in Figure 2.

These examples suggest that by calculating the test statistics for an existing test of independence across different neighborhoods and aggregating these values meaningfully, we can enhance its power, especially in scenarios described by Equation (2). Building on this idea, we introduce a multi-scale approach for existing tests of independence in this section.

Let denote the i.i.d. observations . We analyze the dataset using neighborhoods of varying sizes centered at each observation point, with the distances from the center point to other observations serving as our guide for selecting neighborhood sizes. For a given sample from a bivariate distribution , we consider all neighborhoods of the form for . Thus, we consider a total of neighborhoods. For notational convenience, we denote by .

Let be a test statistic for testing independence between two univariate random variables. Let us use the notation to denote the value of the test statistic computed on the sample . Here, we consider only those test statistics such that , in probability as under independence, and the rejection region is of the form for some constant . For example, Person’s correlation coefficient cannot be considered as , but its absolute value can be used. We define , that is, is the value of the test statistic when evaluated on the observations that fall within the neighborhood . If is undefined on (it may happen when or ), we set . One naive way to aggregate all the findings from different neighborhoods is to sum ’s, that is, to consider as our test statistics. The problem with this summation is that if the dependency information is limited to relatively small neighborhoods, summing all the values might introduce noise into this information. To address this issue, we separate ’s in distinct groups according to the proximity between and . A detailed description is as follows.

For each observation , we order remaining observations according to their Euclidean distance from . Random tie-breaking is to be used in case of ties while ordering. Let be the observations ordered by their Euclidean distance from in ascending order. Thus, is the neighborhood of that has -th nearest neighbor of at one of its vertices. It is easy to see that for a fixed , the length of the diagonal of increases as increases. We average the value of the test statistic based on the sample keeping fixed and varying over over . We denote this average as , that is, . To determine how extreme the value of is, we need to know its distribution under the independence of the marginals, which can be estimated by resampling technique. We discuss this in details next.

Given a sample , a randomly permuted sample can be generated, where is a random permutation of and . We calculate in the same way on the sample and denote their values with . For independent random permutations , we can compute for . According to the permutation test principle, the empirical distribution of is an estimator of the distribution of under independence. We can estimate the mean of under independence by and the standard deviation of under independence by . Next we compute Z-score of with respect to its distribution under independence by . If , we define . The Z-scores suggest how extreme the values of are. Analyzing them for a sample can give us valuable insight into the dependence structure. We demonstrate this point below using various bivariate distributions.

In this illustration, we consider two popular test statistics as : absolute value of Pearson’s correlation and distance correlation. We denote these two test statistics by and respectively. We consider eight bivariate distributions, description of each is provided in the Table 1.

| Distribution | Description |

|---|---|

| (a) Square | |

| (b) Straight line | |

| (c) Noisy straight line | |

| (d) Sine | |

| (e) Circle | |

| (f) Noisy parabola | |

| (g) | As described in Section 1 |

| (h) BVN, rho=0.5 | follows bivariate normal distribution |

| with zero means, unit variances and correlation | |

| coefficient 0.5 |

The scatter plots of these distributions are presented in Figure 3. It is easy to see that except for the ‘Square’ distribution, and are dependent in all the other distributions.

From each distribution, we generated 50 i.i.d. observations and calculated . By repeating this step independently 1000 times, we then calculated the average values . We plotted these average values for each distribution in Figure 4. Under independence, it is evident that the expected value of is 0. Therefore, if deviates from 0, it will be indicative of dependence.

From Figure 4, we observe that the average Z-score values are close to 0 for ‘Square’ distribution as and are independent. For the ‘Straight line’ and ‘Noisy straight line’ distributions, the average Z-scores are higher in larger neighborhoods for both and . However, in the ‘Noisy straight line’, the dependence information is less apparent in smaller neighborhoods compared to the ‘Straight line’. For the ‘Sine’ distribution, the dependence information is clearly noticeable in smaller neighborhoods. An interesting phenomenon occurs with the ‘Circle’ distribution, where most neighborhoods, including the largest ones, contain arcs, allowing both and to detect dependence effectively in most neighborhoods. In the ‘Noisy parabola’, the dependence is most prominent in mid-sized neighborhoods. For the more complex distribution, these statistics detect the dependence patterns in smaller and mid-sized neighborhoods, but less so in larger ones. Finally, in ‘BVN, rho=0.5’, the dependence information is only clearly evident in larger neighborhoods.

It follows clearly from the above illustration that test statistics such as absolute value of correlation and distance correlation sometimes detect dependence information better in smaller neighborhoods, sometimes in mid-sized, and sometimes in larger neighborhoods. Therefore, it makes sense to aggregate information from all the Z-scores . We propose an aggregation method in the following paragraph.

First, we observe that under independence, the expected value of is for . Under dependence, if the ’s are stochastically larger, will also be stochastically larger. In that case, the expected values of will also be positive. Let be the expected value of , that is, . Thus testing against can be done by testing vs. . To test this, we suggest the following test statistic . Clearly, a high value of presents evidence against null hypothesis. To determine the distribution of under , we again use resampling approach by utilizing randomly permuted samples which are already in our disposal. Similar how we computed for using permuted samples , we compute for based on permuted samples for . Next we calculate for . Finally we determine the p-value of by . We reject if the p-value turns out to be less than the level of significance.

Assume that computing requires operations. In that case, computing collectively for requires operations. For a fixed , the merge sort algorithm allows us to compute in operations. Therefore, determining collectively takes operations (recall that ). As a result, the computational complexity of computing together also is assuming that number of randomly permuted samples is constant with respect to . Thus we conclude that operations are required for computing the test statistics .

Since the correlation coefficient can be calculated in linear time, when . Thus, the complexity of calculating when is . On the other hand, the distance correlation between two univariate random variables can be computed in operations (see, Chaudhuri and Hu, 2019). Therefore, the complexity of calculating when is .

3 A Special Test Method

In this section, we introduce a testing method that closely follows the framework proposed in the previous section, with a few modifications. and are assumed to be continuous random variables in this setup. The key distinction in this approach lies in the manner in which the values are computed.

Given a sample , we begin by fixing two observations and , where . Similar to the previous method, we define the neighborhood , centered at with positioned at a corner of the rectangle defined by the neighborhood. Within this neighborhood, we partition the space into four quadrants, classifying observations according to whether the -coordinate is less than or greater than and whether the -coordinate is less than or greater than . Subsequently, a contingency table is constructed to count the number of observations in each of these quadrants (see Table 2).

Let represent the frequencies in this contingency table, where , , , and .

is defined as the absolute value of the phi coefficient calculated from this contingency table:

If , we set .

Next, we employ the same framework described in the previous section to determine a p-value. For notational convenience, the test statistic used here will be referred to as .

Following the setup in Figure 4, we plotted the average Z-scores for the statistic across various neighborhood sizes, as shown in Figure 5 to gain a deeper understanding of its underlying mechanism. These values are represented by red dots. For comparison, the average Z-scores for the statistic are plotted alongside and represented by the blue dots.

As shown in Figure 5(a), the Z-scores for exhibit higher variance compared to under independence. Figures 5(b) through 5(g) demonstrate that consistently yields higher Z-scores than for the ‘Straight line,’ ‘Noisy straight line,’ ‘Sine,’ ‘Circle,’ ‘noisy parabola,’ and ‘’ distributions. Additionally, the average Z-score plot for appears to be more wiggly compared to . In the ‘BVN, rho=0.5’ distribution, the average Z-scores of are comparable to those of .

For a fixed , calculating requires counting the values and . A straightforward approach to obtain these counts is to check each observation in individually. This process involves operations to compute . As established in the previous section, this naive approach requires operations to compute the test statistics. However, by applying the algorithm described in Lemma 1, which computes for all simultaneously in operations for a fixed , the computational complexity can be significantly reduced. When this algorithm is implemented, calculating collectively will require operations. As a result, the complexity of computing the test statistics is reduced to .

4 Performance on simulated datasets

In this section, we compare the performance of our proposed test methods on various simulated datasets with that of existing tests in the literature. From the proposed general testing framework, we select two well-known test statistics - the absolute value of Pearson’s correlation and distance correlation - as . We denote the resulting test statistic in these two cases as and , respectively. We denote the test statistic for our proposed special test method as . As pointed out in previous sections, the computational complexities of , and are , and , respectively.

From existing tests of independence, we selected the following popular tests: dCor (Székely et al., 2007), HSIC (Gretton et al., 2007), HHG (Heller et al., 2013), MIC (Reshef et al., 2011), Chatterjee’s correlation (xicor) (Chatterjee, 2021) and BET (Zhang, 2019). For performing dCor, HSIC, HHG, and xicor tests, we used R packages energy (Rizzo and Szekely, 2022), dHSIC (Pfister and Peters, 2019), HHG (Kaufman et al., 2019), and XICOR (Chatterjee and Holmes, 2023), respectively. We calculated the MIC statistic by using ‘mine_stat’ function from the R package minerva (Albanese et al., 2012) and subsequently performed a permutation test. For executing BET test, we used the R code provided in the supplemental material of (Zhang, 2019). We fixed the nominal level at . Except for BET, all other tests were performed using the permutation principle, with the number of permutations set to 1000. The empirical power of a test is determined by calculating the proportion of times it rejects the null hypothesis over 1000 independently generated samples of a specific size from a given distribution.

First, we considered eighty jointly continuous distributions, namely ‘Doppler’, ‘’, ‘Lissajous A’, ‘Lissajous B’, ‘Rose curve’, ‘Spiral’, ‘Tilted square’, and ‘Five clouds’. Their descriptions are provided in Table 3. Scatter plots for each of these distributions are presented in Figure 8 in Appendix B.

| Distribution | Description |

|---|---|

| (a) Doppler | |

| (b) | As described in Section 1 |

| (c) Lissajous A | |

| (d) Lissajous B | |

| (e) Rose curve | |

| (f) Spiral | |

| (g) Tilted Square | V |

| (h) Five Clouds | uniform on , |

It can be observed from the table that in the ‘Doppler’ distribution, is directly a function of . In ‘Lissajous curve A’, ‘Lissajous curve B’, ‘Rose curve’, and ‘Spiral’, and are related to each other in form of an implicit function. ‘Tilted square’ and ‘five clouds’ are two distributions where and are dependent, but their correlation is 0.

The empirical powers of all test methods on these 8 distributions are presented in Figure 6.

It can be seen from this figure that performed best in both ‘Doppler’ and ‘’ and performed well in all other distributions. performed fairly well in all distributions except for ‘Rose curve’ and ‘Tilted square’. performed well in all cases, and in ‘Lissajous curve A’, ‘Lissajous curve B’, ‘Rose curve’, ‘Spiral’, ‘Five clouds’, it performed the best. HHG yielded satisfactory power overall except for ‘Lissajous curve B’. The power of HSIC is satisfactory only in the ‘Doppler’ and ‘Tilted square’ distributions, not otherwise. dCor, MIC, xicor did not perform well. BET performed somewhat well for larger sample sizes in ‘Lissajous curve B’, ‘Rose curve’ and in ‘Spiral’.

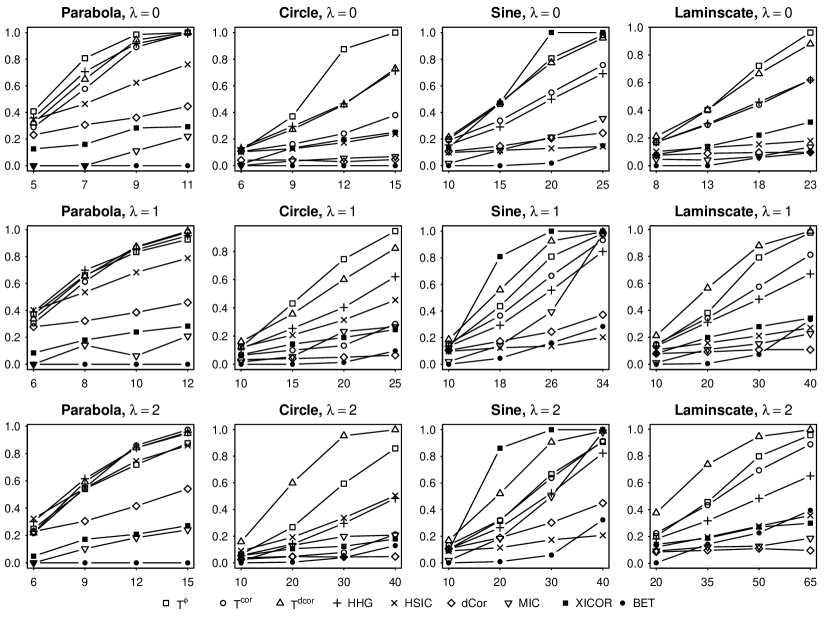

Next, we considered four datasets to assess the performance of our methods under different levels of noise. In these examples, and , where and are real functions, is a random variable, is a non-negative constant, and and are i.i.d. normal random variables that are also independent of . As increases, the noise level increases. In particular, we considered 4 distribution named - ‘Parabola ’, ‘Circle ’, ‘Sine ’ and ‘Laminscate ’, descriptions of each of these can be found in Table 4.

| Distribution | Description |

|---|---|

| (a) Parabola | |

| (b) Circle | |

| (c) Sine | |

| (d) Laminscate |

We considered three noise levels by taking . Scatter plots of the twelve () distributions are presented in Figure 9 in the Appendix B.

The empirical powers of each of these tests are presented in Figure 7.

It can be observed from this figure that performs best or next to best in the absence of noise. Even in the presence of noise, power of is quite good. As the noise level increases, takes the lead. The overall performance of is competitive except for the ‘Circle ’ case. HHG performed well but it lagged behind our proposed tests most of the time. HSIC performed well in ‘Parabola ’ and ‘Circle ’ distributions, but didn’t achieve satisfactory power in other distributions. dCor had a somewhat competitive power in ‘Parabola ’, but not in other distributions. MIC had low power overall, except for higher sample sizes in the ‘Sine ’ distributions. Xicor also performed poorly except for ‘Sine ’, where it performed the best. This is not unexpected from xicor, as it is mainly designed to detect whether is a function of . BET had the lowest power among all tests in most distributions.

5 Discussion and conclusion

We proposed a general framework for testing independence, which can utilize existing independence tests in different neighborhoods of the dataset and combine the results in a meaningful way. This approach has been shown to enhance performance when the variables are explicitly or implicitly functionally dependent. Additionally, we introduced a novel test of independence that leverages a similar framework. It is important to note that multiple approaches can be taken towards selecting neighborhoods, and both the power and the complexity of the resulting method can vary based on it. Therefore, an optimal selection of neighborhoods could be a potential direction for future research. Our proposed method assigns equal weight to each neighborhood, from nearest to farthest. The potential benefits of using varying weights for different neighborhoods could be explored in future research.

Appendix A

Lemma 1.

For the proposed special test method, for a fixed , the computation of for all can be done collectively in operations.

Proof.

Let us fix such that . At first, we will prove that the sequence can be computed in operations.

For , and can be computed in operations. As are i.i.d. observations from a continuous distribution, there are no ties in -coordinate or in -coordinate, and hence and are positive for all without any ties.

Using the merge sort algorithm, a permutation of the sequence can be computed in operations, such that .

Next, by iterating through in ascending order, we can store in an array those values that satisfy and . As a result, for all , we have whenever , with and . The remaining integers in that do not satisfy the conditions for can be stored in another array in ascending order. Thus, for all , we have whenever , with or . This iterative checking and storing process requires only operations. Note that and have no common elements, and together they contain all elements from the set .

Given an array of real numbers , the ‘surpasser count’ is defined as an array of integers of the same length, where for . Using the merge sort technique, this can be computed in operations (see Bird, 2010, Chapter 2). Instead of using the surpasser count algorithm directly, we’ll use the ‘trail count’. For a given array of real numbers , the trail count is defined as an array of integers of the same length, where for . Essentially, the trail count is the surpasser count applied to the reversed array, thus maintaining the same complexity.

We apply the trail count to the array , resulting in the array, say, . It’s easy to verify that represents the number of observations, excluding , that are within the neighborhood for . Next, we apply the trail count to the array , resulting in the array, say, . It can be verified that is the number of observations that fall within the set for . Thus, for . Similarly, applying the trail count to the array results in the array, say, . It can be verified that represents the number of points in the set for . Therefore, for . Hence, all for can be computed together in operations.

Similar steps can be taken to show that for a fixed , for can be computed collectively in operations. Since is a function of , and , it directly follows that computing collectively for also requires operations. ∎

Appendix B

References

- Albanese et al. [2012] Davide Albanese, Michele Filosi, Roberto Visintainer, Samantha Riccadonna, Giuseppe Jurman, and Cesare Furlanello. Minerva and minepy: a c engine for the mine suite and its r, python and matlab wrappers. Bioinformatics, page bts707, 2012.

- Bird [2010] Richard Bird. Pearls of functional algorithm design. Cambridge University Press, 2010.

- Chatterjee [2021] Sourav Chatterjee. A new coefficient of correlation. Journal of the American Statistical Association, 116(536):2009–2022, 2021.

- Chatterjee and Holmes [2023] Sourav Chatterjee and Susan Holmes. XICOR: Robust and generalized correlation coefficients, 2023. URL https://CRAN.R-project.org/package=XICOR. https://github.com/spholmes/XICOR.

- Chaudhuri and Hu [2019] Arin Chaudhuri and Wenhao Hu. A fast algorithm for computing distance correlation. Computational statistics & data analysis, 135:15–24, 2019.

- Gretton et al. [2007] Arthur Gretton, Kenji Fukumizu, Choon Teo, Le Song, Bernhard Schölkopf, and Alex Smola. A kernel statistical test of independence. Advances in neural information processing systems, 20, 2007.

- Heller et al. [2013] Ruth Heller, Yair Heller, and Malka Gorfine. A consistent multivariate test of association based on ranks of distances. Biometrika, 100(2):503–510, 2013.

- Hoeffding [1948] Wassily Hoeffding. A non-parametric test of independence. The Annals of Mathematical Statistics, 19(4):546–557, 1948.

- Kaufman et al. [2019] Barak Brill & Shachar Kaufman, based in part on an earlier implementation by Ruth Heller, and Yair Heller. HHG: Heller-Heller-Gorfine Tests of Independence and Equality of Distributions, 2019. URL https://CRAN.R-project.org/package=HHG. R package version 2.3.

- Kendall [1938] Maurice G. Kendall. A new measure of rank correlation. Biometrika, 30(1/2):81–93, 1938.

- Pfister and Peters [2019] Niklas Pfister and Jonas Peters. dHSIC: Independence Testing via Hilbert Schmidt Independence Criterion, 2019. URL https://CRAN.R-project.org/package=dHSIC. R package version 2.1.

- Reshef et al. [2011] David N Reshef, Yakir A Reshef, Hilary K Finucane, Sharon R Grossman, Gilean McVean, Peter J Turnbaugh, Eric S Lander, Michael Mitzenmacher, and Pardis C Sabeti. Detecting novel associations in large data sets. science, 334(6062):1518–1524, 2011.

- Rizzo and Szekely [2022] Maria Rizzo and Gabor Szekely. energy: E-Statistics: Multivariate Inference via the Energy of Data, 2022. URL https://CRAN.R-project.org/package=energy. R package version 1.7-11.

- Roy [2020] Angshuman Roy. Some copula-based tests of independence among several random variables having arbitrary probability distributions. Stat, 9(1):e263, 2020.

- Roy et al. [2020] Angshuman Roy, Anil K Ghosh, Alok Goswami, and CA Murthy. Some new copula based distribution-free tests of independence among several random variables. Sankhya A, pages 1–41, 2020.

- Spearman [1904] Charles Spearman. The proof and measurement of association between two things. The American Journal of Psychology, 15(1):72–101, 1904.

- Székely et al. [2007] Gábor J Székely, Maria L Rizzo, and Nail K Bakirov. Measuring and testing dependence by correlation of distances. The Annals of Statistics, 35(6):2769–2794, 2007.

- Zhang [2019] Kai Zhang. Bet on independence. Journal of the American Statistical Association, 2019.