Multi-scale Information Sharing and Selection Network with Boundary Attention for Polyp Segmentation

Abstract

Polyp segmentation for colonoscopy images is of vital importance in clinical practice. It can provide valuable information for colorectal cancer diagnosis and surgery. While existing methods have achieved relatively good performance, polyp segmentation still faces the following challenges: (1) Varying lighting conditions in colonoscopy and differences in polyp locations, sizes, and morphologies. (2) The indistinct boundary between polyps and surrounding tissue. To address these challenges, we propose a Multi-scale information sharing and selection network (MISNet) for polyp segmentation task. We design a Selectively Shared Fusion Module (SSFM) to enforce information sharing and active selection between low-level and high-level features, thereby enhancing model’s ability to capture comprehensive information. We then design a Parallel Attention Module (PAM) to enhance model’s attention to boundaries, and a Balancing Weight Module (BWM) to facilitate the continuous refinement of boundary segmentation in the bottom-up process. Experiments on five polyp segmentation datasets demonstrate that MISNet successfully improved the accuracy and clarity of segmentation result, outperforming state-of-the-art methods.

Index Terms:

Polyp segmentation, feature fusion, boundary attentionI Introduction

Colorectal cancer (CRC) stands as one of the most prevalent cancers in the world. The disease is mainly attributed to the malignant growth of bulky tissue known as polyps in the colon or rectum. The standard approach for CRC diagnosis involves colonoscopy examinations, allowing for the visualization of the location and appearance of polyps. Early detection and treatment of rectal polyps can effectively prevent the occurrence of rectal cancer. However, with increasingly growing medical pressure, manual diagnosis is time-consuming, labor-intensive, and less stable. Hence, accurate and efficient polyps segmentation is of crucial significance in clinical practice.

Many research have been conducted in polyp segmentation, demonstrating notable progress. Some methods introduce fully convolutional neural networks(FCN) [1, 2, 3] for pixel-level prediction of polyps in colonoscopy. Although FCN-based methods are highly efficient, the direct up-sampling operation could lead to blurred results due to the loss of details. Addressing this problem, UNet [4] proposed a U-shaped encoder-decoder structure for medical image segmentation. UNet fuses different level of features through skip connections, enabling more reasonable restoration of details. Its variants [5, 6] also exhibited remarkable performance for polyp segmentation. Still, these methods overlooked the valuable boundary information.

Some research have explored solutions to this issue via introducing boundary information into polyp segmentation. Psi-Net [7] employs a parallel decoder for jointly training in three tasks: mask, contour, and distance map. Meanwhile, a new joint loss function is proposed, achieving better results by retaining more boundary information. However, the integration of too many tasks has led to shortcomings in this model when it comes to extracting the boundary relationships between polyps and surrounding tissues. SFANet [8] proposes a area and boundary constraints with additional edge supervision. However, the limited expressive capability of the model encoder for polyp image features results in poor internal coherence in image segmentation and unclear segmentation edges. PraNet [9] utilizes reverse attention [10] to acquire additional boundary information. However, reverse attention tends to focus more on the background area, introducing noticeable noise in the predicted results.

Unfortunately, in aforementioned methods, low-level features are often overlooked as they are considered to contribute less to the network compared to high-level features but with high computation cost. However, these features which capturing details like edges and textures, can offer valuable local fine-grained information for the identification of boundaries and subtle structures, thereby complementing and assisting in segmentation tasks. Besides, the exploitation of boundary information still needs refinement to enhance segmentation precision and clarity. Therefore, we aim to tackle two challenges in polyp segmentation: (1) Exploring effective and efficient ways to incorporate low-level features for mining boundary cues. (2) Refining boundary information exploitation strategy.

In this paper, we propose a novel neural network, called Multi-scale Information Sharing and Selection Network (MISNet) for the polyp segmentation task. First, MISNet adopt a Selectively Shared Fusion Module (SSFM) to improve model’s ability to capture multi-scale contextual information, thus the generated initial guidance map can address the scale variation challenges of polyps. Second, a set of Parallel Attention Modules (PAM) are introduced to further mine the polyp boundary information. Third, we use Balancing Weight Module (BWM) to adaptively incorporate the low-level feature, boundary attention and guidance map, enabling our model to continuously refine boundary details in the bottom-up flow of the network. With BWM, the low-level feature would serve as explicit guide to further improve the boundary segmentation. Benefiting from these well-designed modules, the proposed network demonstrates enhanced accuracy and clarity for polyp segmentation.

In conclusion, our main contributions are summarized as follows:

(1) We propose a Selectively Shared Fusion Module (SSFM) to enforce information sharing and active selection between features at different scales, enabling the model to capture boundary details as well as global context information.

(2) We present a new method with Parallel Attention Module (PAM) and Balancing Weight Module (BWM) to effectively extract and exploit boundary information for enhancing polyp segmentation accuracy and clarity.

(3) Extensive experiments on five polyp segmentation datasets demonstrate that the proposed network outperforms state-of-the-art methods. Meanwhile, a comprehensive ablation studies validate the effectiveness of key components in our proposed model.

II Related Work

II-A Medical Image Segmentation

Recently, deep learning has demonstrated remarkable performance for precise medical image segmentation. UNet [4] achieves the segmentation process through a symmetric encoder-decoder structure. Following this approach, various types of improvement structures have been primarily designed for segmentation research.

The first improvement focuses on skip connections. UNet++ [5] enhances the fusion of multi-scale features by using a series of nested dense skip connections on both the encoder and the decoder. U2-Net [11] defines a nested U-shaped structure and introduces Residual U-blocks, achieving the capture of context information at different scales. The second enhancement involves utilizing different types of backbone. ResUNet [6] introduces ResNet [12] and enhances learning performance by fitting residuals by adding skip connections. ResUNet++ [6] improves ResUNet by integrating efficient components into the UNet structure. R2U-Net [13] combines the advantages of UNet, residual networks, and Recurrent CNNs(RCNNs) to design a recurrent residual convolutional neural network, which can perform the rich feature representation and segment the target successfully. Next is the incorporation of various mechanisms into UNet, such as the attention mechanism in Attention UNet [14]. It incorporates attention gates (AG) into skip connections, allowing the network to emphasize the salient features of the specific target for effective segmentation. Attention UNet++ [15] further enhances UNet++ by adding attention gates (AG) between nested convolution blocks, allowing features at different levels to selectively focus on their respective tasks. ERDUnet [16] addresses the challenge of extracting global contextual features by enhancing UNet, aiming to improve segmentation accuracy while saving parameters.

After that, transformer-based [17] methods have introduced new ideas for medical image segmentation. Medical Transformer (MedT) [18] based on Transformer introduces a gated axial self-attention mechanism and local global training strategy (LoGo) to learn image features automatically. SwinUNet [19] employs a hierarchical Swin Transformer [20] for feature extraction and designs a decoder based on the symmetric Swin Transformer with patch expansion layers to perform upsampling operations. TransUNet [21] combines the strengths of UNet and Transformer, exhibiting robust performance in medical image segmentation. MSCAF-Net [22] adopts the improved Pyramid Vision Transformer (PVTv2) model as its backbone, refining features at each scale and achieving a comprehensive interaction of multi-scale information for accurate segmentation.

II-B Polyp Segmentation

Deep learning has been widely applied in polyp segmentation. Brandao et al. [1] are pioneers in utilizing a Fully Convolutional Neural Network [23] (FCN) to segment polyps in colonoscopy. Wicakam et al. [3] propose a fully compressed convolutional network by improving the FCN-8s network, enabling real-time polyp segmentation and improving segmentation performance. Wickstrm et al. [24] propose an advanced architecture that combines FCN-8s and SegNet [25], introducing batch normalization and dropout to improve model generalization and estimate model uncertainty.

However, the up-sampling process of FCN-based methods often results in the loss of detailed information. To solve this problem, UNet++ and ResUNet++ based on UNet serve for polyp segmentation successfully. PolypMixer [26] is a model based on MLP that flexibly handles input scales of polyps and models long-term dependencies for precise and efficient polyp segmentation. However, these approaches often pay insufficient attention to valuable boundary details.

Several approaches have actively explored solutions to this problem. SFANet [8] introduces a boundary-sensitive loss and employs a shared encoder with two mutually constrained decoders to select and aggregate polyp features at different scales. PsiNet [7] employs parallel decoders for joint training of three tasks and proposes a new joint loss function that achieves improved results after retaining more boundary information. However, it still exhibits limitations in handling boundaries due to the integration of multiple tasks. In addition, PraNet [9] utilizes reverse attention [10] to acquire additional boundary cues. CaraNet [27] proposes a contextual axial reverse attention network by adding axial attention to the reverse attention module and using the channel feature pyramid (CFP) module to improve the segmentation performance of small targets. ACSNet [28] leverages local and global contextual features to achieve layer-wise feature complementarity and refine predictions for uncertain regions. However, it extracts limited information from the last feature generated by the encoder for guidance and focusing attention entirely on boundaries might make it challenging for the model to segment the polyp region. CCBANet [29] introduces attention to the foreground, background, and boundary regions to ensure that the model covers the segmentation area with attention as much as possible. MSNet [30] introduces a multi-scale subtraction network and comprehensively supervises features to capture more details and structural cues for accurate polyp localization and edge refinement. BDG-Net [31] proposes a boundary-distribution-guided segmentation network to aggregate high-level features and generate a boundary distribution map, which successfully segments polyps at different scales. DCNet [32] explores candidate objects and additional object-related boundaries by constraining object regions and boundaries. It segments polyps in a coarse-to-fine manner.

III Methodology

III-A Overview

The overall architecture of MISNet is illustrated in Figure 1. For the input colonoscopy image , we first use Res2Net [33] to extract features at five different scales. To improve computation efficiency, we use Low-level Fusion Module (LFM) to integrate shallow features extracted from the first two blocks of backbone, and use High-level Fusion Module (HFM) to integrate deep features extracted from the last three blocks of backbone.

MISNet first use Selectively Shared Fusion Module (SSFM) to adaptively aggregate low-level and high-level features and generate an initial map for the following process. Then, we leverage Parallel Attention Modules (PAM) to emphasize local boundary information. Subsequently, the features of the current layer, the boundary attention from PAM, and the explicit boundary cues mined from low-level features are adaptively combined with Balancing Weight Module (BWM) to refine polyp segmentation in the bottom-up flow of the network. We elaborate each component in below.

III-B Low-level Feature Fusion and High-level Feature Fusion

With the significance of preserving valuable local fine-grained information for polyp boundary identification, we introduce a Low-level Fusion Module (LFM). This module facilitates the exploitation of low-level features, enhancing the model’s ability to extract precise boundary information.

The low-level fusion module is shown in Figure 2. Specifically, shallow features and generated by the first two blocks of the backbone are firstly processed by Receptive Field Block (RFB) [34] to expand the receptive field. Then, the channel number of and is adjusted by convolutional layers respectively, after which will be upsampled to the same resolution as that of . Then, these two features are passed through convolutional layers then concatenated to generate a aggregated feature. The aggregated feature is then processed with two convolution layers and downsampled to match with the the selective shared fusion module.

The fused low-level features serve two purposes within the network. Firstly, they contribute as inputs to SSFM, aiding in the sharing and adaptive selection of shallow features, thereby enhancing the accuracy of the initial guidance map. Secondly, these features explicitly guide the boundary refinement in the decoding process, facilitating clarity enhancement in the generation of segmentation results.

For the fusion of deep features, we adopt the Cascaded Partial Decoder [35]. Specifically, the high-level features produced by the last three blocks of the backbone are first expanded in receptive field through RFB. Then, the high-level features are aggregated using the Cascaded Partial Decoder , .

III-C Selectively Shared Fusion Module

As demonstrated by most image recognition research, high-level features capture abstract and high-level semantic information, providing a more global context, whereas low-level features contribute to capturing details and local information. For segmentation tasks, high-level features enable the model to better understand the complex structures and relationships within the image, enhancing the model’s ability to recognize and locate objects, while low-level features provide finer details, assisting the model in more accurately locating and segmenting the boundaries of objects.

Thus, appropriate integration of features at different scales is crucial for providing comprehensive information in image segmentation tasks. In conventional ”U-shaped” network structures, features of the same scale are typically fused through skip connections in a single stream, which is not conducive for information interaction. Motivated by these observations, we propose a novel Selectively Shared Fusion Module (SSFM) to enforce information sharing and active selection between features at different levels.

The structure of SSFM is illustrated in Figure 3. Firstly, the channels of fused low-level feature and high-level feature are reduced with convolution to obtain the squeezed feature and . Then, we employ cross-fusion to share information between and . Specifically, and are first cross-concatenated, and then passed through two separate convolutional layers with different kernels to obtain more comprehensive feature representation:

| (1) |

where and denote a convolutional layer and a convolutional layer followed by a BN layer and a ReLU activation function. Then we obtain and by repeating cross-fusion:

| (2) |

With two rounds of cross-fusion, the low-level and high-level features complete the circulation of information.

Subsequently, we allow the model to adaptively select the essential information from low-level features and high-level features. We first apply an element-wise summation operation to the features from these two branches:

| (3) |

Global average pooling is then employed to embed the global information. In the fused feature , the channel-wise mean value is computed, . For each channel, we have:

| (4) |

Then, we use a fully connected layer to obtain a relatively dense feature by reducing the dimension of :

| (5) |

where denotes the sigmoid function and denotes the BN.

| (6) |

where denotes the reduction ratio, denotes the minimal value of ( is 16 in our experiments).

Following that, we compute the attention for low-level feature and high-level feature:

| (7) |

where are learnable weights, and , denote the soft attention for and . , is the c-th row of and . , is the c-th element of and .

Finally, the information from low-level feature and high-level feature are adaptively selected by multiplying and with attention weight and obtain the initial guidance map :

| (8) |

where , .

With information sharing and adaptive selection between low-level and high-level feature, SSFM can attend to both local details and global contextual information, thus generate more accurate initial guidance map.

III-D Parallel Attention Module

The initial guidance map generated through SSFM can provide a coarse area and location of the polyp. Still, it lacks accurate identification and localization of object boundaries. To address this issue, we introduce a parallel attention module to progressively refine the boundary cues of three parallel high-level features during the decoding stage.

The structure of Parallel Attention Module is illustrated in Figure 4. PAM consist of two parallel attention branches: Parallel Axial Reverse Attention (PA-RA) and Parallel Axial Boundary Attention (PA-BA). PA-RA learns the relationship between background and target areas, and PA-BA further address the attention to boundary information. The structure of PA-RA and PA-BA is demonstrated in Figure 5 and 6.

III-D1 PA-RA

As our backbone is pre-trained on ImageNet, high-level features may prioritize polyp regions with higher response values, potentially ignoring boundary details. Therefore, we introduce Parallel Axial Reverse Attention to capture the object boundary features more accurately. The erasure strategy within Parallel Axial Reverse Attention refines imprecise and coarse estimates into accurate and complete prediction maps.

Specifically, we incorporate parallel axial attention [36] into the original reverse attention. To address the computational resource demand when calculating attention on high-dimensional features, we first obtain the feature map from high-level features through parallel computation aggregation along the horizontal and vertical axis to extract salient feature information:

| (9) | ||||

| (10) |

where Q, K, V and denote query, key, value and the dimensions of key, respectively.

Then we inverse the feature map from previous layer to obtain the reverse attention weight :

| (11) |

where denotes the Sigmoid function and denotes the upsampling operation.

Finally, we obtain the reverse attention features by multiplying axial aggregation feature with reverse attention weight and then added back to the high-level feature :

| (12) |

This operation efficiently directs the network to focus on delineation the predicted polyp area of deeper layer and surrounding tissues.

III-D2 PA-BA

As parallel axial reverse attention tends to focus more on the background regions of the image, there are still limitations in localizing the boundaries of polyps. To address this issue, we introduce Parallel Axial Boundary Attention to further improve the model’s ability to precisely identify polyp edges.

Similar with PA-RA, PA-BA first use axial attention [36] to generate the feature map from high-level features . Subsequently, we use the feature map from the previous layer and obtain the boundary attention weight according to ACSNet[28]:

| (13) |

where denotes the Sigmoid function and denotes the upsampling operation.

The axial aggregated feature is multiplied with boundary attention and added to the output feature map of the current layer to obtain the boundary attention features :

| (14) |

Then the obtained attention features and from PA-RA and PA-BA are compressed with a convolution layer and then concatenated to obtain the parallel attention features with enhanced boundary information.

Parallel Attention Module adaptively attend to the boundary information in three parallel high-level features. With axial attention, we achieved higher training efficiency. By integrating boundary attention, we addressed the deficiency of reverse attention to focus on boundary regions. PAM helps to improve the model’s ability to identify boundaries and discriminate polyp region from background tissue, thus improving segmentation accuracy.

III-E Balancing Weight Module

In the bottom-up process of generating segmentation map, the upsampling operation can lead to blurriness. To address this problem, we introduce Balancing Weight Module to adaptively integrate low-level features , boundary attention and high-level features , as shown in Figure 7. This allows the network to concentrate on both local details and global context.

To preserve local details in the decoding flow, it is crucial to incorporate low-level features. In contrast to ”U-shaped” network that directly decoding low-level features, We employ the BWM module to incorporate low-level features as guidance for recognizing uncertain regions during the generation of segmentation maps for each deep layer. Specifically, the fused low-level features will first be filtered by CBAM [37] (denoted as ) to reduce noise and reinforce the boundary information from the low-level features. The parallel attention features and the high-level features from the current layer are resized to match the resolution of the . Then the three parts are concatenated to form the cascaded features.

The cascaded features are first processed by a convolutional layer to reduce dimensionality. Then the compressed features are passed through a global average pooling layer followed by element-wise multiplication to highlight contextual information for improving polyp segmentation accuracy. The segmentation map generated from the previous layer is added to the output of BWM to generate segmentation map of current layer.

The layer-by-layer generation of the segmentation map with BWM progressively refines and incorporates features from different levels, contributing to the accurate delineation of object boundaries in the final segmentation result.

III-F Loss Function

We define the loss function as:

| (15) |

where [38] and [38] denote the weighted binary cross entropy (BCE) loss and the weighted IoU loss, respectively.

We found that in the training set, the sizes of polyps exhibit an obvious imbalance. Commonly used BCE loss can address the imbalance in positive sample segmentation. However, it primarily complements pixel-wise aspects at a microscopic level, which neglects the global structure within the image. This leads to certain deficiencies in learning challenging samples. Therefore, we supplement the IoU loss to constrain from the global aspect. Compared with standard BCE loss and IoU loss, and can emphasize the significance of small objects and boundary information by assigning larger weights to them. The effectiveness of these two methods has been confirmed in various studies [10] [39].

We employ deep supervision for the three high-level feature maps and the initial guidance map . These maps are upsampled (denoted as , , , ) to match the same size of the ground truth .

Finally, the whole segmentation framework can be trained in an end-to-end manner with total loss function:

| (16) | ||||

IV Experiments

IV-A Datasets

We utilize the same dataset, and training and testing splits with PraNet for polyp segmentation. Specifically, the dataset consists of 900 images from Kvasir-SEG and 550 images from CVC-ClinicDB, totaling 1450 samples. They are randomly divided into 80% for training, 10% for validation, and 10% for testing. We evaluate the performance of the proposed method on five benchmark datasets. Detailed information of benchmarks is described below.

(1) CVC-T (CVC-300) [40]: this dataset contains 60 samples from 44 colonoscopy sequences from 36 patients, where all images are in size. All of them are used for testing.

(2) CVC-ClinicDB (CVC-612) [41]: this dataset contains 612 images extracted from 29 different endoscopic video clips with very similar polyp targets. The size of all the images is . 62 images of this dataset are used for test and the rest of the images are used for training.

(3) CVC-ColonDB [42]: this dataset contains 380 images from 15 different colonoscopy sequences with an image size of , all of which are used for testing.

(4) ETIS-LaribPolypDB [43]: this dataset contains 196 images collected from 34 colonoscopy videos. The size of all the images is . This dataset is currently the most difficult in the field of polyp segmentation. Due to the smaller size and concealed locations of the polyps in this dataset, detection becomes challenging.

(5) Kvasir [44]: this dataset contains 1000 images of polyps with sizes ranging from to . The images exhibit variations in the size and shape of the polyps. For training purposes, 900 images from this dataset are utilized, while 100 images are reserved for testing.

IV-B Evaluation Metrics

To comprehensively assess our model, we employ the following metrics:

(1) MeanDice and MeanIoU are used to measure the similarity between predicted segmentation results and ground truth. Larger values denote higher similarity between the predicted segmentation results and the ground truth.

(2) () [45] intuitively generalized the F-measure [46] by calculating the accuracy and recall alternately, assigning different weights to various errors in different locations of neighborhood information. This approach is used to correct the ”equal-importance defect” in Dice [47]:

| (17) |

where denotes precision and denotes recall. and are used to adjust the relative importance of precision and recall, respectively.

(3) S-measure () [48] is used to evaluate the structural similarity between the prediction segmentation results and the ground truth:

| (18) |

where and denote the object-aware and region-aware structural similarity, respectively. is set to 0.5 in our experiments.

(4) E-measure () [49] is an enhanced alignment method measured from the perspective of global average and local pixel matching with the ground truth:

| (19) |

where denotes the enhanced alignment matrix, which reflects the correlation between the prediction segmentation results and the ground truth.

(5) MAE [50] is the average pixel-wise absolute error, which is used to evaluate the pixel-level accuracy between the ground truth and the predicted segmentation results:

| (20) |

where denotes the ground truth and denotes the predicted segmentation results.

| Dataset | Methods | mDice | mIoU | MAE | |||

|---|---|---|---|---|---|---|---|

| Kvasir | UNet [4] | 0.870 | 0.806 | 0.855 | 0.885 | 0.924 | 0.039 |

| UNet++ [5] | 0.866 | 0.804 | 0.851 | 0.882 | 0.918 | 0.038 | |

| SegNet [24] | 0.880 | 0.812 | 0.856 | 0.889 | 0.934 | 0.035 | |

| ResUNet [51] | 0.774 | 0.675 | 0.744 | 0.809 | 0.873 | 0.062 | |

| ResUNet++ [6] | 0.867 | 0.798 | 0.848 | 0.882 | 0.923 | 0.041 | |

| U2Net [11] | 0.896 | 0.843 | 0.889 | 0.909 | 0.937 | 0.031 | |

| PraNet [9] | 0.899 | 0.850 | 0.891 | 0.912 | 0.944 | 0.029 | |

| C2FNet [52] | 0.897 | 0.847 | 0.891 | 0.910 | 0.939 | 0.029 | |

| MSNet [30] | 0.891 | 0.841 | 0.880 | 0.907 | 0.942 | 0.027 | |

| CaraNet [27] | 0.876 | 0.820 | 0.869 | 0.894 | 0.925 | 0.037 | |

| DoubleU-Net [16] | 0.894 | 0.833 | 0.883 | 0.898 | 0.940 | 0.038 | |

| FCBFormer [53] | 0.893 | 0.835 | 0.884 | 0.901 | 0.942 | 0.029 | |

| GMSRF-Net [54] | 0.858 | 0.787 | 0.847 | 0.878 | 0.917 | 0.044 | |

| HarDNet-MSEG [55] | 0.882 | 0.821 | 0.870 | 0.895 | 0.927 | 0.036 | |

| Polyp-PVT [56] | 0.854 | 0.783 | 0.842 | 0.875 | 0.921 | 0.037 | |

| TransFuse-S [57] | 0.859 | 0.783 | 0.841 | 0.877 | 0.926 | 0.038 | |

| TransFuse-L [57] | 0.863 | 0.787 | 0.846 | 0.880 | 0.929 | 0.036 | |

| Ours | 0.903 | 0.846 | 0.902 | 0.915 | 0.947 | 0.025 | |

| CVC-ClinicDB | UNet [4] | 0.889 | 0.831 | 0.889 | 0.920 | 0.950 | 0.014 |

| UNet++ [5] | 0.900 | 0.849 | 0.901 | 0.926 | 0.960 | 0.012 | |

| SegNet [24] | 0.852 | 0.790 | 0.844 | 0.894 | 0.924 | 0.017 | |

| ResUNet [51] | 0.801 | 0.721 | 0.798 | 0.859 | 0.913 | 0.027 | |

| ResUNet++ [6] | 0.910 | 0.857 | 0.912 | 0.932 | 0.968 | 0.012 | |

| U2Net [11] | 0.906 | 0.859 | 0.903 | 0.929 | 0.962 | 0.015 | |

| PraNet [9] | 0.903 | 0.859 | 0.902 | 0.935 | 0.961 | 0.008 | |

| C2FNet [52] | 0.902 | 0.857 | 0.899 | 0.932 | 0.967 | 0.009 | |

| MSNet [30] | 0.900 | 0.857 | 0.899 | 0.933 | 0.965 | 0.008 | |

| CaraNet [27] | 0.884 | 0.829 | 0.884 | 0.914 | 0.951 | 0.015 | |

| DoubleU-Net [58] | 0.878 | 0.822 | 0.871 | 0.910 | 0.947 | 0.017 | |

| FCBFormer [53] | 0.901 | 0.855 | 0.901 | 0.927 | 0.953 | 0.016 | |

| GMSRF-Net [54] | 0.847 | 0.786 | 0.849 | 0.892 | 0.935 | 0.019 | |

| HarDNet-MSEG [55] | 0.895 | 0.845 | 0.891 | 0.921 | 0.961 | 0.009 | |

| Polyp-PVT [56] | 0.855 | 0.787 | 0.854 | 0.891 | 0.953 | 0.019 | |

| TransFuse-S [57] | 0.848 | 0.770 | 0.840 | 0.886 | 0.942 | 0.018 | |

| TransFuse-L [57] | 0.853 | 0.780 | 0.849 | 0.894 | 0.947 | 0.018 | |

| Ours | 0.918 | 0.869 | 0.927 | 0.935 | 0.971 | 0.008 |

IV-C Implementation details

We use Res2Net-50 pretrained on ImageNet as the backbone for feature extraction. We use Adam optimizer with the initial learning rate of 1e-5, and weight decay of 1e-5 to optimize our model. The batch size is set to 16.

In training, all images are resized to 352352. To ensure better model convergence, the total number of training epochs is set to 300. To enhance model stability in later training stages, a Poly learning rate decay strategy is employed, represented as , where the power is set to 0.9. Our proposed network is implemented using PyTorch and trained on a Tesla V100 with 32GB of memory. During training, extensive data augmentation is applied on-the-fly to improve the generalization, including random scaling and cropping, flipping, Gaussian noise, contrast, brightness and sharpness variations. For the ground truth, dilation and erosion operations are applied with kernels vary from 2 to 5.

IV-D Comparison with State-of-the-art Methods

In order to validate the effectiveness of the proposed model, we compare our method to state-of-the-art segmentation methods: UNet [4], UNet++ [5], SegNet [24], ResUNet [51], ResUNet++ [6], U2Net [11], PraNet [9], C2FNet [52], MSNet [30], CaraNet [27], DoubleU-Net[58], FCBFormer[53], GMSRF-Net[54], HarDNet-MSEG[55], Polyp-PVT[56], TransFuse[57]. To ensure fair comparison, we use official implementations of these comparison models and apply the same data augmentation strategy and dataset splits. All experiments are conducted in the same environment. We report quantitative comparisons on test sets of Kvasir and CVC-ClinicDB in Table I to validate our model’s learning ability, and comparisons on unseen datasets CVC-300, CVC-ColonDB and ETIS in Table II to verify the model’s generalizability.

IV-D1 Quantitative Comparison

As shown in Table I, our model outperforms all classic baselines on CVC-ClinicDB dataset. On Kvasir dataset, our proposed method achieves the best performance across five metrics and comparable results on meanIOU. This indicates that our method have learned accurate local detail and global context to correctly segment polyps.

| Dataset | Methods | mDice | mIoU | MAE | |||

|---|---|---|---|---|---|---|---|

| CVC-300 | UNet [4] | 0.823 | 0.742 | 0.803 | 0.883 | 0.928 | 0.013 |

| UNet++ [5] | 0.839 | 0.754 | 0.812 | 0.891 | 0.942 | 0.013 | |

| SegNet [24] | 0.760 | 0.670 | 0.710 | 0.839 | 0.889 | 0.018 | |

| ResUNet [51] | 0.517 | 0.399 | 0.505 | 0.697 | 0.756 | 0.026 | |

| ResUNet++ [6] | 0.815 | 0.719 | 0.776 | 0.877 | 0.930 | 0.017 | |

| U2Net [11] | 0.809 | 0.722 | 0.767 | 0.879 | 0.902 | 0.019 | |

| PraNet [9] | 0.864 | 0.785 | 0.837 | 0.902 | 0.948 | 0.010 | |

| C2FNet [52] | 0.845 | 0.772 | 0.821 | 0.902 | 0.937 | 0.013 | |

| MSNet [30] | 0.838 | 0.771 | 0.818 | 0.895 | 0.940 | 0.011 | |

| CaraNet [27] | 0.854 | 0.787 | 0.837 | 0.907 | 0.942 | 0.010 | |

| DoubleU-Net [58] | 0.793 | 0.712 | 0.751 | 0.868 | 0.891 | 0.021 | |

| FCBFormer [53] | 0.889 | 0.817 | 0.865 | 0.925 | 0.964 | 0.008 | |

| GMSRF-Net [54] | 0.769 | 0.675 | 0.727 | 0.850 | 0.891 | 0.022 | |

| HarDNet-MSEG [54] | 0.833 | 0.766 | 0.811 | 0.900 | 0.937 | 0.016 | |

| Polyp-PVT [26] | 0.841 | 0.748 | 0.820 | 0.892 | 0.949 | 0.011 | |

| TransFuse-S [57] | 0.809 | 0.717 | 0.766 | 0.872 | 0.922 | 0.014 | |

| TransFuse-L [57] | 0.834 | 0.741 | 0.798 | 0.890 | 0.940 | 0.011 | |

| Ours | 0.907 | 0.842 | 0.893 | 0.935 | 0.980 | 0.005 | |

| CVC-ColonDB | UNet [4] | 0.657 | 0.566 | 0.633 | 0.768 | 0.825 | 0.050 |

| UNet++ [5] | 0.652 | 0.562 | 0.629 | 0.769 | 0.814 | 0.052 | |

| SegNet [24] | 0.677 | 0.589 | 0.643 | 0.777 | 0.840 | 0.050 | |

| ResUNet [51] | 0.506 | 0.397 | 0.471 | 0.676 | 0.766 | 0.061 | |

| ResUNet++ [6] | 0.695 | 0.604 | 0.662 | 0.796 | 0.852 | 0.050 | |

| U2Net [11] | 0.734 | 0.655 | 0.709 | 0.817 | 0.868 | 0.045 | |

| PraNet [9] | 0.748 | 0.676 | 0.736 | 0.828 | 0.870 | 0.043 | |

| C2FNet [52] | 0.740 | 0.664 | 0.726 | 0.827 | 0.859 | 0.039 | |

| MSNet [30] | 0.746 | 0.665 | 0.732 | 0.825 | 0.866 | 0.039 | |

| CaraNet [27] | 0.744 | 0.665 | 0.740 | 0.827 | 0.867 | 0.038 | |

| DoubleU-Net [58] | 0.709 | 0.630 | 0.691 | 0.805 | 0.848 | 0.044 | |

| FCBFormer [53] | 0.754 | 0.671 | 0.738 | 0.830 | 0.889 | 0.037 | |

| GMSRF-Net [54] | 0.675 | 0.585 | 0.646 | 0.781 | 0.828 | 0.052 | |

| HarDNet-MSEG [55] | 0.722 | 0.650 | 0.711 | 0.814 | 0.858 | 0.042 | |

| Polyp-PVT [56] | 0.666 | 0.564 | 0.656 | 0.773 | 0.829 | 0.044 | |

| TransFuse-S [57] | 0.689 | 0.597 | 0.660 | 0.788 | 0.862 | 0.045 | |

| TransFuse-L [57] | 0.711 | 0.617 | 0.683 | 0.798 | 0.874 | 0.044 | |

| Ours | 0.762 | 0.690 | 0.754 | 0.838 | 0.892 | 0.036 | |

| ETIS | UNet [4] | 0.542 | 0.476 | 0.514 | 0.735 | 0.757 | 0.030 |

| UNet++ [5] | 0.533 | 0.470 | 0.504 | 0.731 | 0.749 | 0.029 | |

| SegNet [24] | 0.550 | 0.476 | 0.509 | 0.733 | 0.768 | 0.034 | |

| ResUNet [51] | 0.356 | 0.275 | 0.328 | 0.633 | 0.644 | 0.042 | |

| ResUNet++ [6] | 0.470 | 0.393 | 0.420 | 0.677 | 0.734 | 0.054 | |

| U2Net [11] | 0.655 | 0.577 | 0.606 | 0.793 | 0.840 | 0.026 | |

| PraNet [9] | 0.557 | 0.491 | 0.524 | 0.739 | 0.767 | 0.054 | |

| C2FNet [52] | 0.624 | 0.560 | 0.596 | 0.780 | 0.813 | 0.029 | |

| MSNet [30] | 0.582 | 0.513 | 0.550 | 0.747 | 0.791 | 0.036 | |

| CaraNet [27] | 0.694 | 0.618 | 0.668 | 0.813 | 0.868 | 0.019 | |

| DoubleU-Net [58] | 0.656 | 0.579 | 0.618 | 0.790 | 0.854 | 0.024 | |

| FCBFormer [53] | 0.708 | 0.633 | 0.673 | 0.829 | 0.873 | 0.020 | |

| GMSRF-Net [54] | 0.528 | 0.444 | 0.499 | 0.725 | 0.783 | 0.031 | |

| HarDNet-MSEG [55] | 0.590 | 0.519 | 0.553 | 0.754 | 0.807 | 0.045 | |

| Polyp-PVT [56] | 0.654 | 0.566 | 0.631 | 0.791 | 0.863 | 0.017 | |

| TransFuse-S [57] | 0.604 | 0.502 | 0.547 | 0.743 | 0.821 | 0.028 | |

| TransFuse-L [57] | 0.551 | 0.462 | 0.507 | 0.724 | 0.816 | 0.026 | |

| Ours | 0.764 | 0.686 | 0.739 | 0.855 | 0.900 | 0.012 |

Generalization Capability. From Table II we can see that on three unseen datasets, our model still surpasses other approaches across all metrics, demonstrating strong generalizability of our method. On the most challenging ETIS dataset, one notable findings is that all SOTA methods experienced a significant decline in performance (PraNet dropped 38.3% on meanDice), while our exhibited a much smaller decrease (16.8% on meanDice). By properly integrating multi-scale features, our model can well handle the scale variations of polyps. Besides, the boundary details is effectively enhanced and refined to delineate the polyps and surrounding tissues, thereby well generalized to unseen colonoscopy.

IV-D2 Qualitative Comparison

In Figure 8, we provide visual comparison of the segmentation results. As illustrated, our model significantly improved the accuracy and clarity of polyp segmentation in handling different situations. In the second and third rows, the polyp is located in a concealed position. Almost all other comparative methods fail to predict accurate segmentation, while our model can segment the polyps almost completely accurately. As shown in the fourth row, with low lighting condition and different sizes of polyps, our method managed to segment all the polyps. The visual results further proves that our model can better cope with challenges posed by varying lighting conditions, sizes, shapes, and other factors.

V Ablation Study

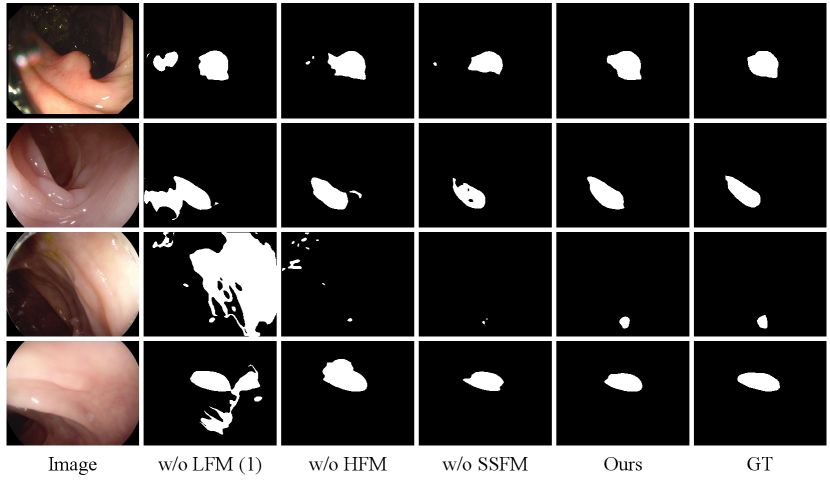

We conduct a series of ablation studies to verify the effectiveness of the key components in our framework. We report the ablation study results in Table IV, Table V and Table VI on Kvasir, CVC-ClinicDB, CVC-300, CVC-ColonDB and ETIS datasets. Figure 10 and 9 illustrate visual comparisons of the ablation studies across these five datasets.

| Settings | LFM | HFM | SSFM |

|---|---|---|---|

| w/o LFM (1) | ✗ | ✓ | ✗ |

| w/o HFM | ✓ | ✗ | ✗ |

| w/o SSFM | ✓ | ✓ | ✗ |

| Ours | ✓ | ✓ | ✓ |

Effectiveness of Selectively Shared Fusion Module. To verify the effects of SSFM, we compare our model with three variants, as shown in Table III: (1) w/o LFM(1): we remove the low-level feature input of SSIM. The initial guidance map is generated with only integrated high-level features. (2) w/o HFM: we remove the high-level feature input of SSIM. The initial guidance map is generated with only integrated low-level features. (3) w/o SSFM: we directly fuse the low-level feature and high-level feature using addition operation.

As shown in Table IV and Figure 10, when initial guidance map is generated with only low-level features (w/o HFM) or only high-level features (w/o LFM(1)), the segmentation accuracy drops. Among them, high-level features contribute more to performance. Also, the feature fusion by directly adding low-level and high-level features(w/o SSFM) failed to improve model performance. Instead, it resulted in a decrease in model performance, indicating the importance of an appropriate feature fusion method.

In contrast, SSFM noticeably improved the segmentation accuracy. By enforcing information sharing and adaptive selection between different level of features, SSFM enhances the model’s ability to capture diverse information. Specifically, on the challenging ETIS dataset, SSFM can effectively adapt to the scale variations of polyps, greatly improved the generalizability of our method.

In Figure 9, we visualize the attention and in adaptive selection of SSFM. We can see that adaptive selection would concentrate on discriminating the boundary between a polyp and its surrounding tissues when attend to low-level features. Conversely, when attend to high-level features, accurate location of polyp regions are emphasized. Figure 9 explicitly indicated that features of different scales contribute diverse information to the model, and SSFM effectively integrates these information, therefore enhancing the model’s performance.

| Dataset | Settings | meanDice | meanIoU | MAE | |||

|---|---|---|---|---|---|---|---|

| Kvasir | w/o LFM (1) | 0.887 | 0.830 | 0.878 | 0.898 | 0.935 | 0.035 |

| w/o HFM | 0.839 | 0.768 | 0.811 | 0.862 | 0.899 | 0.052 | |

| w/o SSFM | 0.891 | 0.834 | 0.888 | 0.907 | 0.938 | 0.028 | |

| Ours | 0.903 | 0.846 | 0.902 | 0.915 | 0.947 | 0.025 | |

| CVC-ClinicDB | w/o LFM (1) | 0.888 | 0.835 | 0.885 | 0.919 | 0.950 | 0.015 |

| w/o HFM | 0.845 | 0.776 | 0.833 | 0.889 | 0.925 | 0.018 | |

| w/o SSFM | 0.880 | 0.823 | 0.894 | 0.910 | 0.945 | 0.009 | |

| Ours | 0.918 | 0.869 | 0.927 | 0.935 | 0.971 | 0.008 | |

| CVC-300 | w/o LFM (1) | 0.860 | 0.786 | 0.837 | 0.906 | 0.939 | 0.010 |

| w/o HFM | 0.874 | 0.805 | 0.855 | 0.913 | 0.952 | 0.009 | |

| w/o SSFM | 0.876 | 0.798 | 0.882 | 0.908 | 0.961 | 0.007 | |

| Ours | 0.907 | 0.842 | 0.893 | 0.935 | 0.980 | 0.005 | |

| CVC-ColonDB | w/o LFM (1) | 0.734 | 0.656 | 0.714 | 0.814 | 0.839 | 0.056 |

| w/o HFM | 0.708 | 0.625 | 0.689 | 0.804 | 0.841 | 0.044 | |

| w/o SSFM | 0.700 | 0.616 | 0.706 | 0.799 | 0.829 | 0.040 | |

| Ours | 0.762 | 0.690 | 0.754 | 0.838 | 0.892 | 0.036 | |

| ETIS | w/o LFM (1) | 0.582 | 0.509 | 0.532 | 0.725 | 0.714 | 0.108 |

| w/o HFM | 0.701 | 0.620 | 0.665 | 0.813 | 0.871 | 0.027 | |

| w/o SSFM | 0.600 | 0.534 | 0.592 | 0.764 | 0.798 | 0.014 | |

| Ours | 0.764 | 0.686 | 0.739 | 0.855 | 0.900 | 0.012 |

Effectiveness of integrating low-level features. In our method, low-level features serve two primary purposes. Firstly, the fused low-level features are used in SSFM module to generate the initial guidance map. Secondly, the boundary information contained in the low-level features contributes to continuously refinement of segmentation maps with BWM module. To validate the effectiveness of integrating low-level features, we compare our model with two variants: w/o LFM (1) and w/o LFM(2), where remove the integrated low-level feature from Balancing Weight Module.

As shown in Table IV, V and VI, low-level features have been proved worthy for improving segmentation accuracy. The visual comparison results in Figure 10 and Figure 9 further indicate that the integrated low-level features is crucial for initial guidance map generation and the enhancement of boundary information, significantly improving the clarity of segmentation results.

| Dataset | Settings | meanDice | meanIoU | MAE | |||

|---|---|---|---|---|---|---|---|

| Kvasir | w/o LFM (2) | 0.878 | 0.820 | 0.863 | 0.893 | 0.924 | 0.037 |

| w/o PAM | 0.856 | 0.789 | 0.862 | 0.885 | 0.917 | 0.039 | |

| PAM (PA-RA) | 0.898 | 0.847 | 0.892 | 0.911 | 0.941 | 0.029 | |

| PAM (PA-BA) | 0.887 | 0.830 | 0.880 | 0.899 | 0.930 | 0.036 | |

| w/o BWM | 0.893 | 0.839 | 0.892 | 0.909 | 0.944 | 0.029 | |

| Ours | 0.903 | 0.846 | 0.902 | 0.915 | 0.947 | 0.025 | |

| CVC-ClinicDB | w/o LFM (2) | 0.887 | 0.827 | 0.887 | 0.915 | 0.951 | 0.013 |

| w/o PAM | 0.877 | 0.812 | 0.890 | 0.912 | 0.952 | 0.013 | |

| PAM (PA-RA) | 0.909 | 0.862 | 0.910 | 0.931 | 0.963 | 0.008 | |

| PAM (PA-BA) | 0.904 | 0.846 | 0.904 | 0.923 | 0.962 | 0.012 | |

| w/o BWM | 0.898 | 0.850 | 0.905 | 0.925 | 0.955 | 0.008 | |

| Ours | 0.918 | 0.869 | 0.927 | 0.935 | 0.971 | 0.008 |

| Dataset | Settings | meanDice | meanIoU | MAE | |||

|---|---|---|---|---|---|---|---|

| CVC-300 | w/o LFM (2) | 0.872 | 0.804 | 0.858 | 0.915 | 0.957 | 0.011 |

| w/o PAM | 0.861 | 0.793 | 0.852 | 0.910 | 0.939 | 0.008 | |

| PAM (PA-RA) | 0.848 | 0.769 | 0.821 | 0.898 | 0.943 | 0.012 | |

| PAM (PA-BA) | 0.879 | 0.810 | 0.880 | 0.924 | 0.958 | 0.007 | |

| w/o BWM | 0.851 | 0.784 | 0.840 | 0.906 | 0.938 | 0.009 | |

| Ours | 0.907 | 0.842 | 0.893 | 0.935 | 0.980 | 0.005 | |

| CVC-ColonDB | w/o LFM (2) | 0.743 | 0.663 | 0.734 | 0.826 | 0.872 | 0.036 |

| w/o PAM | 0.698 | 0.617 | 0.699 | 0.799 | 0.829 | 0.043 | |

| PAM (PA-RA) | 0.738 | 0.663 | 0.728 | 0.824 | 0.867 | 0.039 | |

| PAM (PA-BA) | 0.710 | 0.628 | 0.710 | 0.806 | 0.843 | 0.041 | |

| w/o BWM | 0.745 | 0.674 | 0.737 | 0.829 | 0.876 | 0.037 | |

| Ours | 0.762 | 0.690 | 0.754 | 0.838 | 0.892 | 0.036 | |

| ETIS | w/o LFM (2) | 0.590 | 0.512 | 0.537 | 0.753 | 0.743 | 0.063 |

| w/o PAM | 0.614 | 0.539 | 0.596 | 0.768 | 0.834 | 0.019 | |

| PAM (PA-RA) | 0.584 | 0.508 | 0.540 | 0.744 | 0.754 | 0.057 | |

| PAM (PA-BA) | 0.704 | 0.623 | 0.673 | 0.819 | 0.864 | 0.016 | |

| w/o BWM | 0.597 | 0.522 | 0.560 | 0.758 | 0.784 | 0.029 | |

| Ours | 0.764 | 0.686 | 0.739 | 0.855 | 0.900 | 0.012 |

Effectiveness of Parallel Attention Module. To evaluate the effectiveness of Parallel Attention Module, we investigate the following settings: (1) w/o PAM: we remove PAM from the network, so that each side feature map is generated only by fusing deep features with previous layer features through the balancing weight module. (2) PAM (PA-RA): we only apply reverse attention. (3) PAM (PA-BA): we only apply boundary attention.

The results in Table V and Table VI proved the effectiveness of PAM module. Meanwhile, we can see that with only PA-RA or PA-BA, the model fail to reach the best performance, demonstrating that these two modules provide attention to the boundary from different perspectives and their combination is the most effective setting. Figure 11 also indicates that adequate attention to boundaries can improve the segmentation performance.

Effectiveness of Balancing Weight Module. To validate the effectiveness of the balancing weight module, we conduct ablation experiments by replace BWM with direct adding operation(denoted as w/o BWM). The results in Table V, Table VI and the visualization result in Figure 11 demonstrates that the approach of merging features by balancing the weights of multiple sources enhances the segmentation performance. With BWM, the network forms a layer-by-layer architecture to progressively refines the details and incorporates semantic information from deep levels, and finally obtain the output segmentation results.

VI Conclusion

In this paper, we propose a novel network architecture MISNet for polyp segmentation Notably, a Selective Shared Fusion Module is proposed to promote information sharing and active selection across various feature levels to enhance the model’s ability to capture multi-scale contextual information, improving the accuracy of the initial guidance map used in the decoding stage. A Parallel Attention Module is proposed to emphasize the model’s attention to boundary information. Meanwhile, a Balancing Weight Module embedded in the bottom-up flow allows for adaptive integration of features from different layers. Experimental results on five polyp segmentation datasets show that the proposed method outperforms state-of-the-arts under different metrics.

In future work, we will focus on semi-supervised and self-supervised learning approaches to address issues such as insufficient data volume and class imbalance. We plan to integrate multi-modal information, such as CT and MRI, to provide more comprehensive and accurate medical image information. Additionally, we will explore how to adopt lightweight model structures without compromising segmentation accuracy.

References

- [1] P. Brandao, E. Mazomenos, G. Ciuti, R. Caliò, F. Bianchi, A. Menciassi, P. Dario, A. Koulaouzidis, A. Arezzo, and D. Stoyanov, “Fully convolutional neural networks for polyp segmentation in colonoscopy,” in Medical Imaging 2017: Computer-Aided Diagnosis, vol. 10134, pp. 101–107, Spie, 2017.

- [2] M. Akbari, M. Mohrekesh, E. Nasr-Esfahani, S. R. Soroushmehr, N. Karimi, S. Samavi, and K. Najarian, “Polyp Segmentation in Colonoscopy Images Using Fully Convolutional Network,” in 2018 40th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), pp. 69–72, July 2018. ISSN: 1558-4615.

- [3] I. Wichakam, T. Panboonyuen, C. Udomcharoenchaikit, and P. Vateekul, “Real-Time Polyps Segmentation for Colonoscopy Video Frames Using Compressed Fully Convolutional Network,” in MultiMedia Modeling (K. Schoeffmann, T. H. Chalidabhongse, C. W. Ngo, S. Aramvith, N. E. O’Connor, Y.-S. Ho, M. Gabbouj, and A. Elgammal, eds.), Lecture Notes in Computer Science, (Cham), pp. 393–404, Springer International Publishing, 2018.

- [4] O. Ronneberger, P. Fischer, and T. Brox, “U-Net: Convolutional Networks for Biomedical Image Segmentation,” in Medical Image Computing and Computer-Assisted Intervention – MICCAI 2015 (N. Navab, J. Hornegger, W. M. Wells, and A. F. Frangi, eds.), Lecture Notes in Computer Science, (Cham), pp. 234–241, Springer International Publishing, 2015.

- [5] Z. Zhou, M. M. Rahman Siddiquee, N. Tajbakhsh, and J. Liang, “Unet++: A nested u-net architecture for medical image segmentation,” in Deep Learning in Medical Image Analysis and Multimodal Learning for Clinical Decision Support: 4th International Workshop, DLMIA 2018, and 8th International Workshop, ML-CDS 2018, Held in Conjunction with MICCAI 2018, Granada, Spain, September 20, 2018, Proceedings 4, pp. 3–11, Springer, 2018.

- [6] D. Jha, P. H. Smedsrud, M. A. Riegler, D. Johansen, T. D. Lange, P. Halvorsen, and H. D. Johansen, “ResUNet++: An Advanced Architecture for Medical Image Segmentation,” in 2019 IEEE International Symposium on Multimedia (ISM), pp. 225–2255, Dec. 2019.

- [7] B. Murugesan, K. Sarveswaran, S. M. Shankaranarayana, K. Ram, J. Joseph, and M. Sivaprakasam, “Psi-net: Shape and boundary aware joint multi-task deep network for medical image segmentation,” in 2019 41st Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), pp. 7223–7226, IEEE, 2019.

- [8] Y. Fang, C. Chen, Y. Yuan, and K.-y. Tong, “Selective Feature Aggregation Network with Area-Boundary Constraints for Polyp Segmentation,” in Medical Image Computing and Computer Assisted Intervention – MICCAI 2019 (D. Shen, T. Liu, T. M. Peters, L. H. Staib, C. Essert, S. Zhou, P.-T. Yap, and A. Khan, eds.), Lecture Notes in Computer Science, (Cham), pp. 302–310, Springer International Publishing, 2019.

- [9] D.-P. Fan, G.-P. Ji, T. Zhou, G. Chen, H. Fu, J. Shen, and L. Shao, “PraNet: Parallel Reverse Attention Network for Polyp Segmentation,” in Medical Image Computing and Computer Assisted Intervention – MICCAI 2020 (A. L. Martel, P. Abolmaesumi, D. Stoyanov, D. Mateus, M. A. Zuluaga, S. K. Zhou, D. Racoceanu, and L. Joskowicz, eds.), Lecture Notes in Computer Science, (Cham), pp. 263–273, Springer International Publishing, 2020.

- [10] S. Chen, X. Tan, B. Wang, and X. Hu, “Reverse Attention for Salient Object Detection,” in Computer Vision – ECCV 2018 (V. Ferrari, M. Hebert, C. Sminchisescu, and Y. Weiss, eds.), vol. 11213, pp. 236–252, Cham: Springer International Publishing, 2018. Series Title: Lecture Notes in Computer Science.

- [11] X. Qin, Z. Zhang, C. Huang, M. Dehghan, O. R. Zaiane, and M. Jagersand, “U2-net: Going deeper with nested u-structure for salient object detection,” Pattern recognition, vol. 106, p. 107404, 2020.

- [12] K. He, X. Zhang, S. Ren, and J. Sun, “Deep residual learning for image recognition,” in 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp. 770–778, 2016.

- [13] M. Z. Alom, M. Hasan, C. Yakopcic, T. M. Taha, and V. K. Asari, “Recurrent residual convolutional neural network based on u-net (r2u-net) for medical image segmentation,” arXiv preprint arXiv:1802.06955, 2018.

- [14] O. Oktay, J. Schlemper, L. L. Folgoc, M. Lee, M. Heinrich, K. Misawa, K. Mori, S. McDonagh, N. Y. Hammerla, B. Kainz, B. Glocker, and D. Rueckert, “Attention U-Net: Learning Where to Look for the Pancreas,” May 2018. arXiv:1804.03999 [cs].

- [15] C. Li, Y. Tan, W. Chen, X. Luo, Y. Gao, X. Jia, and Z. Wang, “Attention Unet++: A Nested Attention-Aware U-Net for Liver CT Image Segmentation,” in 2020 IEEE International Conference on Image Processing (ICIP), pp. 345–349, Oct. 2020. ISSN: 2381-8549.

- [16] H. Li, D.-H. Zhai, and Y. Xia, “Erdunet: An efficient residual double-coding unet for medical image segmentation,” IEEE Transactions on Circuits and Systems for Video Technology, 2023.

- [17] A. Vaswani, N. Shazeer, N. Parmar, J. Uszkoreit, L. Jones, A. N. Gomez, Ł. Kaiser, and I. Polosukhin, “Attention is all you need,” Advances in neural information processing systems, vol. 30, 2017.

- [18] J. M. J. Valanarasu, P. Oza, I. Hacihaliloglu, and V. M. Patel, “Medical transformer: Gated axial-attention for medical image segmentation,” in Medical Image Computing and Computer Assisted Intervention–MICCAI 2021: 24th International Conference, Strasbourg, France, September 27–October 1, 2021, Proceedings, Part I 24, pp. 36–46, Springer, 2021.

- [19] H. Cao, Y. Wang, J. Chen, D. Jiang, X. Zhang, Q. Tian, and M. Wang, “Swin-unet: Unet-like pure transformer for medical image segmentation,” in European conference on computer vision, pp. 205–218, Springer, 2022.

- [20] Z. Liu, Y. Lin, Y. Cao, H. Hu, Y. Wei, Z. Zhang, S. Lin, and B. Guo, “Swin transformer: Hierarchical vision transformer using shifted windows,” in Proceedings of the IEEE/CVF international conference on computer vision, pp. 10012–10022, 2021.

- [21] J. Chen, Y. Lu, Q. Yu, X. Luo, E. Adeli, Y. Wang, L. Lu, A. L. Yuille, and Y. Zhou, “TransUNet: Transformers Make Strong Encoders for Medical Image Segmentation,” Feb. 2021. arXiv:2102.04306 [cs].

- [22] Y. Liu, H. Li, J. Cheng, and X. Chen, “Mscaf-net: a general framework for camouflaged object detection via learning multi-scale context-aware features,” IEEE Transactions on Circuits and Systems for Video Technology, 2023.

- [23] E. Shelhamer, J. Long, and T. Darrell, “Fully Convolutional Networks for Semantic Segmentation,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 39, pp. 640–651, Apr. 2017. Conference Name: IEEE Transactions on Pattern Analysis and Machine Intelligence.

- [24] K. Wickstrøm, M. Kampffmeyer, and R. Jenssen, “Uncertainty and interpretability in convolutional neural networks for semantic segmentation of colorectal polyps,” Medical image analysis, vol. 60, p. 101619, 2020.

- [25] V. Badrinarayanan, A. Kendall, and R. Cipolla, “Segnet: A deep convolutional encoder-decoder architecture for image segmentation,” IEEE transactions on pattern analysis and machine intelligence, vol. 39, no. 12, pp. 2481–2495, 2017.

- [26] J.-H. Shi, Q. Zhang, Y.-H. Tang, and Z.-Q. Zhang, “Polyp-mixer: An efficient context-aware mlp-based paradigm for polyp segmentation,” IEEE Transactions on Circuits and Systems for Video Technology, vol. 33, no. 1, pp. 30–42, 2022.

- [27] A. Lou, S. Guan, and M. H. Loew, “CaraNet: context axial reverse attention network for segmentation of small medical objects,” Journal of Medical Imaging, vol. 10, p. 014005, Feb. 2023. Publisher: SPIE.

- [28] R. Zhang, G. Li, Z. Li, S. Cui, D. Qian, and Y. Yu, “Adaptive context selection for polyp segmentation,” in Medical Image Computing and Computer Assisted Intervention–MICCAI 2020: 23rd International Conference, Lima, Peru, October 4–8, 2020, Proceedings, Part VI 23, pp. 253–262, Springer, 2020.

- [29] T.-C. Nguyen, T.-P. Nguyen, G.-H. Diep, A.-H. Tran-Dinh, T. V. Nguyen, and M.-T. Tran, “CCBANet: Cascading Context and Balancing Attention for Polyp Segmentation,” in Medical Image Computing and Computer Assisted Intervention – MICCAI 2021, pp. 633–643, Springer, Cham, 2021.

- [30] X. Zhao, L. Zhang, and H. Lu, “Automatic Polyp Segmentation via Multi-scale Subtraction Network,” in Medical Image Computing and Computer Assisted Intervention – MICCAI 2021 (M. de Bruijne, P. C. Cattin, S. Cotin, N. Padoy, S. Speidel, Y. Zheng, and C. Essert, eds.), Lecture Notes in Computer Science, (Cham), pp. 120–130, Springer International Publishing, 2021.

- [31] Z. Qiu, Z. Wang, M. Zhang, Z. Xu, J. Fan, and L. Xu, “Bdg-net: boundary distribution guided network for accurate polyp segmentation,” in Medical Imaging 2022: Image Processing, vol. 12032, pp. 792–799, SPIE, 2022.

- [32] G. Yue, H. Xiao, H. Xie, T. Zhou, W. Zhou, W. Yan, B. Zhao, T. Wang, and Q. Jiang, “Dual-constraint coarse-to-fine network for camouflaged object detection,” IEEE Transactions on Circuits and Systems for Video Technology, 2023.

- [33] S.-H. Gao, M.-M. Cheng, K. Zhao, X.-Y. Zhang, M.-H. Yang, and P. Torr, “Res2net: A new multi-scale backbone architecture,” IEEE transactions on pattern analysis and machine intelligence, vol. 43, no. 2, pp. 652–662, 2019.

- [34] S. Liu, D. Huang, et al., “Receptive field block net for accurate and fast object detection,” in Proceedings of the European conference on computer vision (ECCV), pp. 385–400, 2018.

- [35] Z. Wu, L. Su, and Q. Huang, “Cascaded partial decoder for fast and accurate salient object detection,” in Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp. 3907–3916, 2019.

- [36] J. Ho, N. Kalchbrenner, D. Weissenborn, and T. Salimans, “Axial attention in multidimensional transformers,” arXiv preprint arXiv:1912.12180, 2019.

- [37] S. Woo, J. Park, J.-Y. Lee, and I. S. Kweon, “Cbam: Convolutional block attention module,” in Proceedings of the European conference on computer vision (ECCV), pp. 3–19, 2018.

- [38] J. Wei, S. Wang, and Q. Huang, “F3net: fusion, feedback and focus for salient object detection,” in Proceedings of the AAAI conference on artificial intelligence, vol. 34, pp. 12321–12328, 2020.

- [39] Z. Bai, J. Wang, X.-L. Zhang, and J. Chen, “End-to-end speaker verification via curriculum bipartite ranking weighted binary cross-entropy,” IEEE/ACM Transactions on Audio, Speech, and Language Processing, vol. 30, pp. 1330–1344, 2022.

- [40] D. Vázquez, J. Bernal, F. J. Sánchez, G. Fernández-Esparrach, A. M. López, A. Romero, M. Drozdzal, and A. Courville, “A Benchmark for Endoluminal Scene Segmentation of Colonoscopy Images,” Journal of Healthcare Engineering, vol. 2017, p. e4037190, July 2017. Publisher: Hindawi.

- [41] J. Bernal, F. J. Sánchez, G. Fernández-Esparrach, D. Gil, C. Rodríguez, and F. Vilariño, “WM-DOVA maps for accurate polyp highlighting in colonoscopy: Validation vs. saliency maps from physicians,” Computerized Medical Imaging and Graphics, vol. 43, pp. 99–111, July 2015.

- [42] N. Tajbakhsh, S. R. Gurudu, and J. Liang, “Automated Polyp Detection in Colonoscopy Videos Using Shape and Context Information,” IEEE Transactions on Medical Imaging, vol. 35, pp. 630–644, Feb. 2016.

- [43] J. Silva, A. Histace, O. Romain, X. Dray, and B. Granado, “Toward embedded detection of polyps in WCE images for early diagnosis of colorectal cancer,” International Journal of Computer Assisted Radiology and Surgery, vol. 9, pp. 283–293, Mar. 2014.

- [44] D. Jha, P. H. Smedsrud, M. A. Riegler, P. Halvorsen, T. de Lange, D. Johansen, and H. D. Johansen, “Kvasir-SEG: A Segmented Polyp Dataset,” in MultiMedia Modeling (Y. M. Ro, W.-H. Cheng, J. Kim, W.-T. Chu, P. Cui, J.-W. Choi, M.-C. Hu, and W. De Neve, eds.), Lecture Notes in Computer Science, (Cham), pp. 451–462, Springer International Publishing, 2020.

- [45] R. Margolin, L. Zelnik-Manor, and A. Tal, “How to Evaluate Foreground Maps,” in 2014 IEEE Conference on Computer Vision and Pattern Recognition, pp. 248–255, June 2014. ISSN: 1063-6919.

- [46] R. Achanta, S. Hemami, F. Estrada, and S. Susstrunk, “Frequency-tuned salient region detection,” in 2009 IEEE Conference on Computer Vision and Pattern Recognition, (Miami, FL), pp. 1597–1604, IEEE, June 2009.

- [47] A. Borji, M.-M. Cheng, H. Jiang, and J. Li, “Salient object detection: A benchmark,” IEEE transactions on image processing, vol. 24, no. 12, pp. 5706–5722, 2015.

- [48] D.-P. Fan, M.-M. Cheng, Y. Liu, T. Li, and A. Borji, “Structure-Measure: A New Way to Evaluate Foreground Maps,” in 2017 IEEE International Conference on Computer Vision (ICCV), pp. 4558–4567, Oct. 2017. ISSN: 2380-7504.

- [49] D. Fan, C. Gong, Y. Cao, B. Ren, M. Cheng, and A. Borji, “Enhanced-alignment measure for binary foreground map evaluation,” in Proceedings of the Twenty-Seventh International Joint Conference on Artificial Intelligence, IJCAI 2018, July 13-19, 2018, Stockholm, Sweden (J. Lang, ed.), pp. 698–704, ijcai.org, 2018.

- [50] F. Perazzi, P. Krähenbühl, Y. Pritch, and A. Hornung, “Saliency filters: Contrast based filtering for salient region detection,” in 2012 IEEE Conference on Computer Vision and Pattern Recognition, pp. 733–740, June 2012. ISSN: 1063-6919.

- [51] F. I. Diakogiannis, F. Waldner, P. Caccetta, and C. Wu, “Resunet-a: A deep learning framework for semantic segmentation of remotely sensed data,” ISPRS Journal of Photogrammetry and Remote Sensing, vol. 162, pp. 94–114, 2020.

- [52] Y. Sun, G. Chen, T. Zhou, Y. Zhang, and N. Liu, “Context-aware cross-level fusion network for camouflaged object detection,” arXiv preprint arXiv:2105.12555, 2021.

- [53] E. Sanderson and B. J. Matuszewski, “Fcn-transformer feature fusion for polyp segmentation,” in Annual conference on medical image understanding and analysis, pp. 892–907, Springer, 2022.

- [54] A. Srivastava, S. Chanda, D. Jha, U. Pal, and S. Ali, “Gmsrf-net: An improved generalizability with global multi-scale residual fusion network for polyp segmentation,” in 2022 26th International Conference on Pattern Recognition (ICPR), pp. 4321–4327, IEEE, 2022.

- [55] C.-H. Huang, H.-Y. Wu, and Y.-L. Lin, “Hardnet-mseg: A simple encoder-decoder polyp segmentation neural network that achieves over 0.9 mean dice and 86 fps,” arXiv preprint arXiv:2101.07172, 2021.

- [56] B. Dong, W. Wang, D.-P. Fan, J. Li, H. Fu, and L. Shao, “Polyp-pvt: Polyp segmentation with pyramid vision transformers,” arXiv preprint arXiv:2108.06932, 2021.

- [57] Y. Zhang, H. Liu, and Q. Hu, “Transfuse: Fusing transformers and cnns for medical image segmentation,” in Medical Image Computing and Computer Assisted Intervention–MICCAI 2021: 24th International Conference, Strasbourg, France, September 27–October 1, 2021, Proceedings, Part I 24, pp. 14–24, Springer, 2021.

- [58] D. Jha, M. A. Riegler, D. Johansen, P. Halvorsen, and H. D. Johansen, “Doubleu-net: A deep convolutional neural network for medical image segmentation,” in 2020 IEEE 33rd International symposium on computer-based medical systems (CBMS), pp. 558–564, IEEE, 2020.