[2]\fnmJingya \surChang [1]\orgdivSchool of Mathematical Sciences, \orgnameSouth China Normal University, \orgaddress\cityGuangzhou, \postcode510631, \countryChina 2]\orgdivSchool of Mathematics and Statistics, \orgnameGuangdong University of Technology, \orgaddress\cityGuangzhou, \postcode510520, \countryChina

Multi-Linear Pseudo-PageRank for Hypergraph Partitioning

Abstract

Motivated by the PageRank model for graph partitioning, we develop an extension of PageRank for partitioning uniform hypergraphs. Starting from adjacency tensors of uniform hypergraphs, we establish the multi-linear pseudo-PageRank (MLPPR) model, which is formulated as a multi-linear system with nonnegative constraints. The coefficient tensor of MLPPR is a kind of Laplacian tensors of uniform hypergraphs, which are almost as sparse as adjacency tensors since no dangling corrections are incorporated. Furthermore, all frontal slices of the coefficient tensor of MLPPR are M-matrices. Theoretically, MLPPR has a solution, which is unique under mild conditions. An error bound of the MLPPR solution is analyzed when the Laplacian tensor is slightly perturbed. Computationally, by exploiting the structural Laplacian tensor, we propose a tensor splitting algorithm, which converges linearly to a solution of MLPPR. Finally, numerical experiments illustrate that MLPPR is powerful and effective for hypergraph partitioning problems.

keywords:

PageRank, multi-linear system, existence, uniqueness, perturbation analysis, splitting algorithm, convergence, hypergraph, Laplacian tensor, hypergraph partitioning, network analysispacs:

[MSC Classification]05C90, 15A69, 65C40, 65H10

1 Introduction

PageRank is the first and best-known method developed by Google to evaluate the importance of web-pages via their inherent graph and network structures BP'98 ; BL'06 . There are three advantages of PageRank: existence and uniqueness of the PageRank solution, fast algorithms for finding the PageRank solution, and a built-in regularization Gl'15 . Thus, PageRank and its generalizations have been extensively used in network analysis, image sciences, sports, biology, chemistry, mathematical systems, and so on Gl'15 ; LM'06 . Hypergraphs have the capability of modeling connections among objects according to their inherent multiwise similarity and affinity. Recently, higher-order organization of complex networks at the level of small network subgraphs becomes an active research area BGL16 ; BGL'17 ; KW06 ; RELWL14 . The set of small network subgraphs with common connectivity patterns in a complex network could be modeled by a uniform hypergraph. A natural question is how can we generalize the PageRank idea from graphs to uniform hypergraphs.

PageRank was originally interpreted as a special Markov chain LM'06 , where the transition probability matrix is a columnwise-stochastic matrix. Many algorithms, e.g., power methods, were employed to find the dominant eigenvector of the PageRank eigensystem IS08 . Alternatively, PageRank could be represented as a linear system with an M-matrix, which has favorable theoretical character F15 and good numerical performance LM06 . In the context of PageRank for partitioning a graph, although a Laplacian matrix of the graph is an M-matrix, an adjacency matrix of the graph is not necessarily columnwise-stochastic, when the graph has dangling vertices Ch'05 . Some traditional approaches ACL'07 ; ACL'08 ; Gl'15 ; LM06 incorporated a rank-one correction into a graph adjacency matrix and thus produced a columnwise-stochastic matrix. Using this columnwise-stochastic matrix, PageRank generates the stochastic solution. However, the rank-one correction of the graph adjacency matrix has no graphic meaning. Alternatively, following the framework of M-linear systems, Gleich Gl'15 used the graph Laplacian matrix, which is only columnwise-substochastic, to construct a pseudo-PageRank model. The pseudo-PageRank model outputs the nonnegative solution, which is normalized to obtain the stochastic solution if necessary. Pseudo-PageRank comes from a graph Laplacian matrix directly and is more suitable for practical applications LM06 ; Gl'15 . Moreover, the pseudo-PageRank model is potentially true of the multi-linear cases. This is the primary motivation of this paper.

To explore higher-order extensions of classical Markov chain and PageRank for large-scale practical problems, on one hand, Li and Ng LN'14 used a rank-one array to approximate the limiting probability distribution array of a higher-order Markov chain. The vector for generating the rank-one array is called the limiting probability distribution vector of the higher-order Markov chain. On the other hand, Benson et al. BGL'17 studied the spacey random surfer, which remembers bits and pieces of history and is influenced by this information. Motivated by the spacey random surfer, Gleich et al. GLY'15 developed the multi-linear PageRank (MPR) model, of which the stochastic solution is called a multi-linear PageRank vector. Indeed, the multi-linear PageRank vector could be viewed as a limiting probability distribution vector of a special higher-order Markov chain.

According to the Brouwer fixed-point theorem, a stochastic solution of MPR always exists GLY'15 . The uniqueness of MPR solutions and related problems are more complicated than that of the classical PageRank. Many researchers gave various sufficient conditions CZ'13 ; FT'20 ; GLY'15 ; LLNV'17 ; LLVX'20 ; LN'14 . Dynamical systems of MPR were studied in BGL'17 . The perturbation analysis and error bounds of MPR solutions were presented in LCN'13 ; LLVX'20 . The ergodicity coefficient to a stochastic tensor was addressed in FT'20 . Interestingly, Benson B'19 studied three eigenvector centralities corresponding to tensors arising from hypergraphs. Tensor eigenvectors determine MPR vectors of these stochastic processes and capture some important higher-order network substructures BGL'15 ; BGL'17 .

For solving MPR and associated higher-order Markon chains, Li and Ng LN'14 proposed a fixed-point iteration, which converges linearly when a sufficient condition for uniqueness holds. Gleich et al. GLY'15 studied five numerical methods: a fixed-point iteration, a shifted fixed-point iteration, a nonlinear inner-outer iteration, an inverse iteration, and a Newton iteration. Meini and Poloni MP'18 solved a sequence of PageRank subproblems for Perron vectors to approximate the MPR solution. Furthermore, Huang and Wu HW'21 analyzed this Perron-based algorithm. Liu et al. LLV'19 designed and analyzed several relaxation algorithms for solving MPR. Cipolla et al. CRT'20 introduced extrapolation-based accelerations for a shifted fixed-point method and an inner-outer iteration. Hence, it is an active research area to compute MPR vectors arising from practical problems.

1.1 Contributions

In the process of extending the pseudo-PageRank method from graphs to uniform hypergraphs, the contributions are given as follows.

-

•

Using a Laplacian tensor of a uniform hypergraph directly without any dangling corrections, we propose the multi-linear pseudo-PageRank (MLPPR) model for a uniform hypergraph, which is a multi-linear system with nonnegative constraints. Every frontal slice of the coefficient tensor of MLPPR is an M-matrix. Comparing MLPPR with MPR, MLPPR admits a columuwise-substochastic tensor with dangling column fibers, while MPR rejects any dangling column fibers.

-

•

The theoretical framework of the proposed model is established. The existence and uniqueness of the solution of MLPPR are discussed. The perturbation bound of the MLPPR solution is also presented.

-

•

According to the structure of the tensor in MLPPR, we design a tensor splitting algorithm for finding a solution of MLPPR. Under suitable conditions, the propose algorithm converges linearly to a solution of MLPPR.

-

•

As applications, we employ MLPPR to conduct hypergraph partitioning problems. For the problem of subspace clustering, we illustrate that MLPPR finds vertices of the target cluster more accurately when compared with some existing methods. For network analysis, directed 3-cycles in real-world networks are carefully selected for constructing edges of -uniform hypergraphs. For such hypergraphs, numerical experiments illustrate that the new method is effective and efficient.

1.2 Organization

The outline of this paper is drawn as follows. Section 2 gives preliminary on tensors and graph-based PageRank. Hypergraphs and MLPPR are introduced in Section 3 and theoretical results on MLPPR are presented here. In Section 4, a tensor splitting algorithm for solving MLPPR is customized and analyzed. Applications in hypergraph partitioning problems are reported in Sections 5. Finally, we conclude this paper in Section 6.

2 Preliminary

Before starting, we introduce our notations and definitions. We denote scalars, vectors, matrices, and tensors by lower case (), bold lower case (), capital case (), and calligraphic script (), respectively. Let be the space of th order dimensional real tensors and let be the set of nonnegative tensors in . A tensor is called semi-symmetric if all of its elements are invariant for any permutations of the second to the last indices .

For , the -mode product of and produces a th order dimensional tensor of which elements are defined by

For convenience, we define . Particularly, and are a vector and a matrix, respectively. For a th order tensor and an th order tensor , the tensor outer product is a th order tensor, of which elements are

Particularly, when , the outer product of two vectors and is a rank-one matrix .

Let be an all-one vector. For convenience, we denote . If satisfies , is called a stochastic vector. The -mode unfolding of a tensor is an -by- matrix of which elements are

Each column of is called a mode-1 (column) fiber of the tensor . If all of column fibers of are stochastic, i.e., and , is called a columnwise-stochastic tensor. is said to be a columnwise-substochastic tensor if and . Obviously, a columnwise-stochastic tensor is columnwise-substochastic and not conversely. In the remainder of this paper, a fiber refers to a mode-1 (column) fiber. For given indices , an matrix is called a frontal slice of a tensor .

Next, we briefly review PageRank for directed graphs. A directed graph contains a vertex set , an arc set , and a weight vector . A component of stands for the weight of an arc for . An arc means an directed edge from vertex to vertex , where has an out-neighbor and has an in-neighbor . The out-degree of is defined by the sum of weights of arcs that depart from :

If , vertex is called a dangling vertex. Let the out-degree vector be . The adjacency matrix of is defined with elements

Clearly, it holds that . Vertex is dangling if and only if the th column of the adjacency matrix is a dangling (zero) vector.

Let us consider the random walk (stochastic process) on the vertex set of a directed graph . The random walk takes a step according to the transition probability (columnwise-stochastic) matrix with probability and jumps randomly to a fixed distribution with probability , i.e.,

| (1) |

where denotes the probability of a walk from vertex to vertex . Here, if and only if is an arc of . If the out-degree vector is positive, the normalized adjacency matrix of is columnwise-stochastic, where and stands for the Moore-Penrose pseudo-inverse. However, when the graph contains dangling vertices, the normalized adjacency matrix is columnwise-substochastic but not columnwise-stochastic. Researchers Gl'15 ; LM06 incorporated a rank-one correction (named a dangling correction) into the columnwise-substochastic matrix to produce a columnwise-stochastic matrix:

where is a stochastic vector, is an indicator vector of dangling columns of and thus is an indicator vector of dangling vertices in . Here, if the th column of is a zero vector and is a dangling vertex, otherwise.

Let a stochastic vector be a limiting probability distribution of the random walk . The random walk (1) could be represented as a classical PageRank:

| (2) |

It is well-known that the solution of PageRank (2) could be interpreted as the Perron vector (the eigenvector corresponding to the dominant eigenvalue) of a nonnegative matrix, , or the solution of a linear system with its coefficient matrix being an M-matrix, , where is the identity matrix. The linear system with and for the graph PageRank could be simplified by finding the solution of another structural linear system

| (3) |

and then normalizing solution to obtain a stochastic vector Gl'15 . In fact, the system (3) is called a pseudo-PageRank problem. The coefficient matrix is both an M-matrix and a Laplacian matrix of the graph YCO'21 , enjoying good properties from linear algebra and graph theory. It is interesting to note that the pseudo-PageRank model doesn’t need to do any dangling corrections. Indeed, dangling cases are inevitable for hypergraphs.

3 Hypergraph and Multi-linear pseudo-PageRank

Spectral hypergraph theory made great progress in the past decade BP'13 ; CD'12 ; QL17book ; GCH22 . Let be a weighted undirected -uniform hypergraph, where is the vertex set, is the edge set, and is a positive vector whose component denotes the weight of an edge . Here, means the cardinality of a set. Cooper and Dutle CD'12 defined adjacency tensors of uniform hypergraphs.

Definition 3.1 (CD'12 ).

Let be a weighted undirected -uniform hypergraph with vertices. An adjacency tensor of is a symmetric and nonnegative tensor of which elements are

| (4) |

Our MLPPR model could be applied in a weighted directed -uniform hypergraph with a vertex set , an arc set , and weights of arcs . An arc is an ordered subset of for . Here, vertices are called tails of the arc and the order among them are irrelevant. The last vertex is coined the head of the arc . Each arc has a positive weight for . Similarly, we define an adjacency tensor for the directed -uniform hypergraph as follows.

Definition 3.2.

Let be a weighted directed -uniform hypergraph with vertices. An adjacency tensor of is a nonnegative tensor of which elements are

| (5) |

where denotes any permutation of indices .

Clearly, an adjacency tensor of is semi-symmetric. We note that an edge of an undirected hypergraph could be viewed as varieties of arcs: of a directed hypergraph. In this sense, Definitions 3.2 and 3.1 are consistent.

Next, we consider dangling fibers of an adjacency tensor arising from a uniform hypergraph. There are two kinds of dangling cases. (i) The structural dangling fibers are due to the definition of adjacency tensors of uniform hypergraphs. For example, since the 3-uniform hypergraph does not have edges/arcs with repeating vertices, elements of the associated adjacency tensor are zeros for all and . Hence, there are structural dangling fibers in adjacency tensors of any -uniform hypergraphs with . We note that a graph has no structural dangling fibers. (ii) The sparse dangling fibers are generated by the sparsity of a certain uniform hypergraph. For a set of tail vertices , if there is no head vertex such that , there exist sparse dangling fibers. Particularly, there are amount of dangling fibers in adjacency tensors of large-scale sparse hypergraphs. A complete hypergraph does not have any sparse dangling fibers but has structural dangling fibers.

Example 3.3.

Let be a -uniform undirected hypergraph with , , and . By Definition 3.1, the unfolding of the adjacency tensor of is written as follows:

Obviously, columns , , , and are structural dangling fibers. Columns and are sparse dangling fibers.

To get rid of these dangling fibers that lead to a gap between Laplacian tensors of uniform hypergraphs and columnwise-stochastic tensors for higher-order extensions of PageRank, we are going to develop a novel MLPPR model. Following the spirit of the pseudo-PageRank model for graphs, we normalize all nonzero fibers of the adjacency tensor of a uniform hypergraph to produce a columnwise-substochastic tensor , of which elements are defined by

| (6) |

That is to say, we have , where . Both an adjacency tensor and its normalization are nonnegative tensors, which have interesting theoretical results LN15 .

As an analogue of the Laplacian matrix of a graph YCO'21 , we call

a Laplacian tensor of a -uniform hypergraph with vertices, where is a probability and is the outer produce of copies of . Obviously, each frontal slice of this Laplacian tensor is an M-matrix. Now, we are ready to present the multi-linear pseudo-PageRank model.

Definition 3.4 (MLPPR).

Let be a columnwise-substochastic tensor, i.e., and . Let be a stochastic vector and let be a probability. Then MLPPR is to find a nonnegative solution of the following multi-linear system

| (7) |

The normalized , i.e., , is called an MLPPR vector.

Next, we show some properties of the multi-linear system of MLPPR.

Lemma 3.5.

If the MLPPR system (7) has a nonnegative solution , then belongs to a bounded, closed and convex set:

| (8) |

where .

Proof: Since is columnwise-substochastic, by taking inner products between and both sides of (7), we have

| (9) |

which means that satisfies . Hence, . It is straightforward to see that is a bounded, closed and convex set.

Lemma 3.6.

Let . Then solves the MLPPR system (7) if and only if is a fixed point of the continuous map

| (10) |

Proof: On one hand, assume that solves MLPPR. From (9), we say

Then the MLPPR system (7) could be represented as

That is to say, is a fixed point of .

On the other hand, From we can derive

Then the equality (10) reduces to

which is equivalent to MLPPR (7).

The following theorem reveals the existence of nonnegative solutions of MLPPR.

Theorem 3.7.

Proof: At the beginning, we prove that maps into itself, where and are defined as in Lemmas 3.5 and 3.6, respectively. Let . Obviously, . By taking an inner product between and , we have

where the first inequality holds because is columnwise-substochastic. Hence, the continuous function maps the compact and convex set into itself.

According to the Brouwer fixed point theorem, there exists such that . By Lemma 3.6, is also a nonnegative solution of MLPPR.

Corollary 3.8.

If is a positive (stochastic) vector, then a nonnegative solution of MLPPR is also positive.

On the uniqueness of MLPPR solution, we have theoretical results under some conditions. To move on, the following two lemmas are helpful.

Lemma 3.9.

Let denote the Kronecker product. Define

For any , we have

Lemma 3.10.

Define for . For any , we have

Proof: Let for . Obviously, is a convex and monotonically decreasing function on . When , we have

| (11) |

and

| (12) |

For any , without loss of generality, we suppose . Then, we have

where the second and the third inequalities hold owing to (11) and (12), respectively.

Theorem 3.11.

Let , , and be defined as in Definition 3.4. Define

| (13) |

If , then the map is a contraction map, i.e., for all .

Proof: For any , we have and

where the first and the last inequalities are owing to Lemmas 3.10 and 3.9, respectively. The second inequality holds because is columnwise-substochastic. Hence, is a contraction map when .

For the special case of the pseudo-PageRank model for graphs, we know . Then the condition reduces to a trivial condition . When , the condition reduces to . Furthermore, the uniqueness of MLPPR solution follows this contraction theorem.

Corollary 3.12.

Whereafter, we will consider how perturbations in the columnwise-substochastic tensor and the stochastic vector affect the MLPPR solution . Numerical verifications of this theorem will be illustrated in Subsection 5.1.

Theorem 3.13.

Let and be columnwise-substochastic tensors. Let and be stochastic vectors. The probability is chosen such that . Nonnegative vectors and solve MLPPR systems

respectively. Then, we have

Proof: According to definition of in Lemma 3.10, we have

where the first inequality is valid by Lemma 3.10 and the last inequality follows from and Lemma 3.9. Hence, we obtain

The proof is completed.

3.1 Relationships between MPR and MLPPR

In the framework of MPR BGL'15 ; GLY'15 , a columnwise-substochastic tensor used in MLPPR was adjusted to a columnwise-stochastic tensor by performing the following dangling correction:

| (14) |

We note that the tensor is dense if is sparse. Gleich et al. GLY'15 proposed the MPR model:

| (15) |

where is a columnwise-stochastic tensor and is a stochastic vector.

We remark that MPR (15) has a homogeneous form

| (16) |

If the homogeneous form (16) has a nonzero solution , there are an infinite number of solutions for all . Furthermore, a nonzero vector solves the homogeneous form (16) if and only if solves MPR (15).

Next, we discuss relationships between MLPPR, MPR, and the homogeneous MPR when the dangling correction (14) holds.

Lemma 3.14.

Lemma 3.15.

Proof: By taking inner products between and both sides of (7), we get

Then, it follows from (7) and (14) that

which implies (16) straightforwardly.

Theorem 3.16.

Proof: Suppose that and are nonnegative solutions of MLPPR (7). By Lemma 3.15, and are solutions of MPR (15). Because MPR has a unique solution, we have

which means for a positive number .

On the other hand, because and solve MLPPR (7), we have

Hence, the positive number is subject to . We say which means .

The converse statement could be verified by using Lemma 3.14 and a similar discussion.

Based on Theorem 3.16 and Theorem 4.3 in GLY'15 , we get a new sufficient condition for preserving the uniqueness of an MLPPR solution.

Corollary 3.17.

MLPPR has a unique nonnegative solution when .

Clearly, Corollary 3.17 is better than Corollary 3.12, since we can infer from and not conversely when . In the case of , is equivalent to . Additionally, other sufficient conditions for the uniqueness of the MPR solution elaborated in LN'14 ; LLNV'17 ; LLVX'20 are also applied to MLPPR.

Comparing with MLPPR, MPR adjusts all fibers of the columnwise-substochastic tensor to stochastic vectors in advance and then MPR returns a stochastic solution. When applied into uniform hypergraphs, the adjustment in MPR lacks significance of graph theory. In addition, it is a time-consuming process to produce the adjustment for large scale hypergraphs. Our MLPPR utilizes the columnwise-substochastic tensor directly to produce a nonnegative solution, which could easily be normalized to a stochastic vector if necessary. By reversing the order of adjustment/normalization and root-finding of constrained multi-linear systems, we proposed a novel MLPPR model. Moreover, MLPPR gets rid of dangling cases by directly dealing with columnwise-substochastic tensors, which are usually sparser than columnwise-stochastic tensors used in MPR. This sparsity reveals a valuable potential of MLPPR in computation.

4 Tensor splitting algorithm

Inspired by the structure of the coefficient tensor of MLPPR, of which all frontal slices are M-matrices, we propose a tensor splitting iterative algorithm. Starting from , we repeatedly find a new iterate by solving the following subproblems

| (17) |

for . This structural tensor equation has a closed-form solution.

Lemma 4.1.

Proof: It follows from a subproblem (17) that

| (18) |

which means is parallel to the right-hand-side vector . Hence, we set

| (19) |

with a positive undetermined parameter .

To determine , we take inner products between and both sides of (18) and thus get

which means

| (20) |

The proof is completed.

Now, we present formally the tensor splitting algorithm in Algorithm 1, which is a fixed-point iteration LGL17 . On the global convergence of the tensor splitting algorithm, we have the following theorem.

Theorem 4.2.

Let be defined as in Theorem 3.11. If , then the tensor splitting algorithm generates a sequence of iterates which converges globally to a nonnegative solution of MLPPR with a linear rate, i.e.,

Proof: Since is a contractive map, we have

The proof is completed.

5 Hypergraph partitioning

To evaluate the performance of our MLPPR model and the associated tensor splitting algorithm, we do some numerical experiments on hypergraph partitioning. The tensor splitting algorithm terminates if

Theorem 4.2 ensures that the tensor splitting algorithm for solving MLPPR converges if . Not only that, for MLPPR with , the tensor splitting algorithm always converges in our experiments.

5.1 A toy example

Recalling Theorem 3.13, there is a theoretical bound of the variation in the MLPPR solution with respect to perturbations in both the columnwise-substochastic tensor and the stochastic vector . Now, we aim to identify whether this theoretical bound is tight by a numerical way.

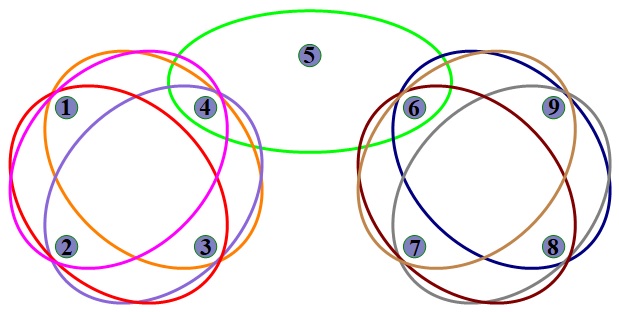

We consider an undirected -uniform hypergraph illustrated in Fig. 1, where , , and . The adjacency tensor and the columnwise-substochastic tensor of this hypergraph are produced by (4) and (6), respectively. Readers may refer to Fig. 2 for the sparsity of . It is valuable to see that 51 out of 81 fibers of are dangling. To find a largest complete sub-hypergraph in , we take a stochastic vector and a probability . By solving MLPPR with these , , and , we obtain the nonnegative solution .

Next, to perturb a stochastic vector ( and ) in a quantity , we utilize an optimization approach for producing a random perturbation such that , , and . First of all, a random vector is generated by using the standard normal distribution . Then vector takes the value from the projection of this random vector onto a compact set:

By introducing variables and , the above projection problem could be represented as a convex quadratic programming

which could be solved efficiently. Once the optimal solution of this convex quadratic programming is available, it is straightforward to calculate .

Owing to the difference in coefficients of and in Theorem 3.13, we set and to balance impacts from and , where is a perturbation quantity. For the columnwise-substochastic tensor , only non-dangling fibers are perturbed. When the perturbation quantity varies from to , we perform one hundred tests for each and evaluate the mean of resulting changes in the MLPPR solution. For comparison, we examine three cases of perturbations: (i) perturb both and , (ii) only perturb , and (iii) only perturb . Numerical results on versus are illustrated in Figure 3. The theoretical bound in Theorem 3.13 is also printed here. It is easy to see from Figure 3 that the theoretical bound for are almost tight up to some constant multiples. In addition, perturbation has less impact on the MLPPR solution than perturbation .

5.2 Subspace clustering

Subspace clustering BP'13 ; CQZ'17 is a crucial problem in artificial intelligence. A typical problem is to extract subsets of collinear points from a set of points in , where . In this setting, classical pairwise approaches cannot work. Higher-order similarity of collinear points as well as tensor representions should be exploited.

At the beginning, to identify collinear points, there exists an approach GeoT'99 for computing a least-squares error of fitting a straight line with distinct points , where . Assume that the straight line goes through with a unit direction . The cost of least-squares fitting is

To find an optimal , we consider the partial derivative of the cost with respect to :

of which solutions satisfy for . For simplicity, we set and get . Furthermore, let . The cost could be rewritten as

To minimize the cost , an optimal (unit) direction has a closed-form solution: the eigenvector corresponding to the largest eigenvalue of .

|

|

| (a) | (b) |

Next, we consider clustering multiple subspaces. Fig. 4(a) illustrates one hundred points, which constitute roughly four subsets of collinear points with blue, cyan, magenta, and red colors located in lines (subspaces) with slopes , , , and , respectively. All points are corrupted by additive Gaussian noise . Each subset has twenty percent of points and the remainder are outliers that are marked as green diamonds. A larger example with two thousand points is displayed in Fig. 4(b). When only positions of these points are known, how can we cluster these points such that points in each subset are nearly collinear?

As a first step, we construct an undirected -uniform hypergraph . Each point is regarded as a vertex in . Hence, . Basically, every three vertices may be connected as an edge to produce a complete -uniform hypergraph. But it is too expensive to handle complete hypergraphs in practice. We prefer to use a random hypergraph which is generated by two steps economically. (i) To ensure connectedness, a complete graph is produced in advance. Then, for each edge of the complete graph, we add a different vertex which is randomly chosen. So there are edges as candidates. (ii) For every candidate edge, we compute the least-squares error of fitting a straight line with positions of three points in a candidate edge. Then five percent candidate edges with smaller fitting errors are picked up to form edges of a random -uniform hypergraph. All weights of edges of this hypergraph are ones.

Once a random hypergraph is ready, our approach, i.e., Algorithm 2 using MLPPR, follows the framework of Algorithm 1 in BGL'15 . Roughly speaking, there are two stages. (i) MLPPR-based Hypergraph analysis. Above all, we generate an adjacency tensor and a columnwise-substochastic tensor of a random -uniform hypergraph. By setting a probability and a stochastic vector , an MLPPR problem is solved by the tensor splitting algorithm to obtain a nonnegative solution . (ii) Unsupervised clustering of a directed graph BGL'15 ; Ch'05 . Let be an adjacency matrix of a latent directed graph. It is straightforward to calculate a degree matrix and a columnwise-stochastic matrix . By a symmetrized approach in Ch'05 , we set , where is the stationary distribution of such that . Then, we define , , and . Finally, the second left eigenvector of is calculated for partitioning vertices of this hypergraph.

To produce a bipartition of the vertex set of a -uniform hypergraph , i.e., Step 7 of Algorithm 2, we utilize the normalized cut of hypergraphs. For a vertex subset , we define the volume of :

where ’s are elements of an adjacency tensor of . Let . The cut of is defined as

Then, the normalized cut of is defined as follows

Indeed, it is NP-hard to evaluate normalized cuts of hypergraphs exactly. A heuristic and economic approach is widely used. We sort vertices in of according to the corresponding elements of the second left eigenvector of to obtain an ordered arrangement of vertices in . To find an efficient cut, we only consider subsets that contains the leading vertices of this ordered arrangement. That is to say, we compute

| (21) |

for and then pick up the optimal index such that

| (22) |

The minimization (22) is a heuristic approximation of the normalized cut of . Then, a solution of (22) produces a bipartition of points corresponding to vertex subsets and of . Whereafter, since there are four subspaces in our experiments, we recursively run Algorithm 2 to repartition a sub-hypergraph with a larger vertex subset of until the set of points are clustered in four parts.

A significant difference between our method and Algorithm 1 in BGL'15 (named TSC-MPR) is that the former do not use any dangling information but the latter do. On one hand, for large-scale (sparse) random hypergraphs, there are a mount of dangling fibers contained in adjacency tensors of hypergraphs. By discarding dangling information, MLPPR is more efficient than MPR. On the other hand, although the stochastic vector , where is generated by MLPPR, is the same as the solution of MPR, the matrix in Step 4 of Algorithm 2 is different from used in TSC-MPR. Since the tensor is a columnwise-stochastic tensor with dangling corrections, the matrix is also columnwise-stochastic. When we give up dangling corrections, the matrix is only nonnegative but not necessarily columnwise-stochastic. In this sense, the matrix is clean while the matrix may be corrupted by dangling corrections.

For solving subspace clustering problems, we run three methods: (i) The new method, i.e., Algorithm 2 using MLPPR. (ii) TSC-MPR: tensor spectral clustering by MPR in BGL'15 . (iii) GPR: PageRank for a graph approximation of a hypergraph. For subspace clustering problems with total numbers of points ranging from to , we count the success ratios of points being in correct clusters, where outliers could be in any clusters. For each case, we run 100 tests with randomly generated points. Numerical results illustrated in Fig. 5 means that the new method outperforms TSC-MPR and GPR. Owing to the skilful avoiding of dangling corrections, the new method is effective and efficient.

5.3 Network analysis

Higher-order graph information is important for analyzing network. For example, triangles (network structures involving three nodes) are fundamental to understand social network and community structure KW06 ; RELWL14 ; BGL16 ; BGL'17 . Particularly, we are interested in the directed 3-cycle (D3C), which captures a feedback loop in network.

| Network | #nodes | #D3Cs | GPR | TSC-MPR | New method |

|---|---|---|---|---|---|

| email-EuAll | 11,315 | 183,836 | 1,492 | 1,700 | 1,634 |

| wiki-Talk | 52,411 | 5,138,613 | 21,893 | 21,240 | 21,238 |

We consider two practical networks snapnets in Table 1 from https://snap.stanford.edu/data/. Originally, each network is a directed graph. For instance, each node in the “email-EuAll” network represents an email address. There exists a directed edge from nodes to if sent at least one message to . Before higher-order network analysis, we filter the network as follows: (i) remove all vertices and all edges that do not participate in any D3Cs; (ii) take the largest strongly connected component of the remaining network. As a result, the filtered “email-EuAll” network contains nodes and D3Cs. A larger network named “wiki-Talk” contains nodes and D3Cs.

|

|

| (a) “email-EuAll” network | (b) “wiki-Talk” network |

|

|

|

| (a) | (b) | (c) |

|

|

|

| (d) | (e) | (f) |

Then it is straightforward to construct a -uniform hypergraph , where nodes and D3Cs of a network are vertices and edges of a -uniform hypergaph, respectively. All weights are set to ones. To produce heuristic and efficient cuts, we test four algorithms for generating ordered arrangements of vertices of a hypergraph: (i) Baseline: a random order, (ii) GPR: PageRank for a graph approximation of a hypergraph, (iii) TSC-MPR: tensor spectral clustering by MPR in BGL'15 , and (iv) the new method, i.e., Algorithm 2 using MLPPR. By experiments, four algorithms generate four orders, of which associated -curves defined by (21) are illustrated in Fig. 6. By picking up a minimum of by (22), each algorithm obtains a partition. The new method returns bipartitions with nodes for the “email-EuAll” network and nodes for the “wiki-Talk” network, as illustrated respectively in Fig. 7 (c) and (f). Partitions produced by TSC-MPR and GPR are also reported in Fig. 7 and associated partition sizes are listed in Table 1. When compared with these existing methods, the new method is competitive.

| Network | MPR-1 | MPR-2 | MLPPR |

|---|---|---|---|

| email-EuAll | 1.882 | 0.073 | 0.064 |

| wiki-Talk | 58.530 | 2.257 | 0.913 |

Finally, we examine CPU times costed by solving MPR and MLPPR problems. MLPPR problems are solved by the tensor splitting algorithm and MPR problems are solved by the shifted fixed-point iteration BGL'15 ; GLY'15 . We should remind readers that CPU times costed by any existing algorithms for solving MPR and MLPPR are just a little part of the time for handling the whole process of network analysis. We recall the dangling correction (14) used by MPR:

where is a th order indicator tensor of dangling fibers of . On one hand, a straightforward approach (MPR-1) is to save and to manipulate explicitly. On the other hand, if is dense but is sparse, an alternative approach (MPR-2) utilizes explicitly. For solving MPR/MLPPR problems raising from “email-EuAll” and “wiki-Talk” networks, CPU times (in seconds) of MPR-1, MPR-2, and MLPPR are reported in Table 2. Obviously, MPR-2 is faster than MPR-1. This verifies that indicator tensors are dense. Particularly, MLPPR is about 60 times faster than MPR-1 when dealing with “wiki-Talk” network. According to these numerical experiments, the proposed MLPPR is powerful and efficient.

6 Conclusions

As an interesting extension of the classical PageRank model for graph partitioning, we proposed the MLPPR model for partitioning uniform hypergraphs. By using Laplacian tensors of uniform hypergraphs without any dangling corrections, MLPPR is more suitable for processing large-scale hypergraphs. The existence and uniqueness of nonnegative solutions of MLPPR were analyzed. An error bound of the MLPPR solution was analyzed when the Laplacian tensor and a stochastic vector are slightly perturbed. By exploiting the structural Laplacian tensors, we designed a tensor splitting algorithm (a fixed-point iterations) for MLPPR. Numerical experiments on subspace clustering and network analysis verified the powerfulness and effectiveness of our method.

Funding This work was funded by the National Natural Science Foundation of China grant 12171168, 12071159, 11901118, 11771405, 62073087 and U1811464.

Data Availability The data that support the findings of this study are available from the corresponding author upon reasonable request.

Declarations

Conflict of interest The authors have no relevant financial or non-financial interests to disclose.

References

- \bibcommenthead

- (1) Brin, S., Page, L.: The anatomy of a large-scale hypertextual Web search engine. Computer Networks and ISDN Systems 30(1), 107–117 (1998). https://doi.org/10.1016/S0169-7552(98)00110-X

- (2) Bryan, K., Leise, T.: The eigenvector: The linear algebra behind Google. SIAM Review 48(3), 569–581 (2006). https://doi.org/10.1137/050623280

- (3) Gleich, D.F.: PageRank beyond the Web. SIAM Review 57(3), 321–363 (2015). https://doi.org/10.1137/140976649

- (4) Langville, A.N., Meyer, C.D.: Google’s PageRank and Beyond: The Science of Search Engine Rankings. Princeton university press, Princeton (2006)

- (5) Benson, A.R., Gleich, D.F., Leskovec, J.: Higher-order organization of complex networks. Science 353(6295), 163–166 (2016). https://doi.org/10.1126/science.aad9029

- (6) Benson, A.R., Gleich, D.F., Lim, L.-H.: The spacey random walk: A stochastic process for higher-order data. SIAM Review 59(2), 321–345 (2017). https://doi.org/10.1137/16M1074023

- (7) Kossinets, G., Watts, D.J.: Empirical analysis of an evolving social network. Science 311(5757), 88–90 (2006). https://doi.org/10.1126/science.1116869

- (8) Rosvall, M., Esquivel, A.V., Lancichinetti, A., West, J.D., Lambiotte, R.: Memory in network flows and its effects on spreading dynamics and community detection. Nature Communications 5, 4630 (2014). https://doi.org/10.1038/ncomms5630

- (9) Ipsen, I.C.F., Selee, T.M.: PageRank computation, with special attention to dangling nodes. SIAM Journal on Matrix Analysis and Applications 29(4), 1281–1296 (2008). https://doi.org/10.1137/060664331

- (10) Francesco, T.: A note on certain ergodicity coefficients. Special Matrices 3(1), 175–185 (2015). https://doi.org/10.1515/spma-2015-0016

- (11) Langville, A.N., Meyer, C.D.: A reordering for the pagerank problem. SIAM Journal on Scientific Computing 27(6), 2112–2120 (2006). https://doi.org/10.1137/040607551

- (12) Chung, F.: Laplacians and the Cheeger inequality for directed graphs. Annals of Combinatorics 9(1), 1–19 (2005). https://doi.org/10.1007/s00026-005-0237-z

- (13) Andersen, R., Chung, F., Lang, K.: Using PageRank to locally partition a graph. Internet Mathematics 4(1), 35–64 (2007)

- (14) Andersen, R., Chung, F., Lang, K.: Local partitioning for directed graphs using PageRank. Internet Mathematics 5(1-2), 3–22 (2008). https://doi.org/im/1259158595

- (15) Li, W., Ng, M.K.: On the limiting probability distribution of a transition probability tensor. Linear and Multilinear Algebra 62(3), 362–385 (2014). https://doi.org/10.1080/03081087.2013.777436

- (16) Gleich, D.F., Lim, L.-H., Yu, Y.: Multilinear PageRank. SIAM Journal on Matrix Analysis and Applications 36(4), 1507–1541 (2015). https://doi.org/10.1137/140985160

- (17) Chang, K.C., Zhang, T.: On the uniqueness and non-uniqueness of the positive Z-eigenvector for transition probability tensors. Journal of Mathematical Analysis and Applications 408(2), 525–540 (2013). https://doi.org/10.1016/j.jmaa.2013.04.019

- (18) Fasino, D., Tudisco, F.: Ergodicity coefficients for higher-order stochastic processes. SIAM Journal on Mathematics of Data Science 2(3), 740–769 (2020). https://doi.org/10.1137/19M1285214

- (19) Li, W., Liu, D., Ng, M.K., Vong, S.-W.: The uniqueness of multilinear PageRank vectors. Numerical Linear Algebra with Applications 24(6), 2107–112 (2017). https://doi.org/10.1002/nla.2107

- (20) Li, W., Liu, D., Vong, S.-W., Xiao, M.: Multilinear PageRank: Uniqueness, error bound and perturbation analysis. Applied Numerical Mathematics 156, 584–607 (2020). https://doi.org/10.1016/j.apnum.2020.05.022

- (21) Li, W., Cui, L.-B., Ng, M.K.: The perturbation bound for the Perron vector of a transition probability tensor. Numerical Linear Algebra with Applications 20(6), 985–1000 (2013). https://doi.org/10.1002/nla.1886

- (22) Benson, A.R.: Three hypergraph eigenvector centralities. SIAM Journal on Mathematics of Data Science 1(2), 293–312 (2019). https://doi.org/10.1137/18M1203031

- (23) Benson, A.R., Gleich, D.F., Leskovec, J.: Tensor Spectral Clustering for Partitioning Higher-order Network Structures. In: Proc SIAM Int Conf Data Min., pp. 118–126 (2015)

- (24) Meini, B., Poloni, F.: Perron-based algorithms for the multilinear PageRank. Numerical Linear Algebra with Applications 25(6), 2177–115 (2018). https://doi.org/10.1002/nla.2177

- (25) Huang, J., Wu, G.: Convergence of the fixed-point iteration for multilinear PageRank. Numerical Linear Algebra with Applications 28(5), 2379 (2021). https://doi.org/10.1002/nla.2379

- (26) Liu, D., Li, W., Vong, S.-W.: Relaxation methods for solving the tensor equation arising from the higher-order Markov chains. Numerical Linear Algebra with Applications 26(5), 2260 (2019). https://doi.org/10.1002/nla.2260

- (27) Cipolla, S., Redivo-Zaglia, M., Tudisco, F.: Extrapolation methods for fixed-point multilinear PageRank computations. Numerical Linear Algebra with Applications 27(2), 2280 (2020). https://doi.org/10.1002/nla.2280

- (28) Yuan, A., Calder, J., Osting, B.: A continuum limit for the PageRank algorithm. European Journal of Applied Mathematics 33(3), 472–504 (2022). https://doi.org/10.1017/S0956792521000097

- (29) Bulò, S.R., Pelillo, M.: A game-theoretic approach to hypergraph clustering. IEEE Transactions on Pattern Analysis and Machine Intelligence 35(6), 1312–1327 (2013). https://doi.org/10.1109/TPAMI.2012.226

- (30) Cooper, J., Dutle, A.: Spectra of uniform hypergraphs. Linear Algebra and its Applications 436(9), 3268–3292 (2012). https://doi.org/10.1016/j.laa.2011.11.018

- (31) Qi, L., Luo, Z.: Tensor Analysis: Spectral Theory and Special Tensors. SIAM, Philadelphia (2017)

- (32) Gao, G., Chang, A., Hou, Y.: Spectral radius on linear -graphs without expanded . SIAM Journal on Discrete Mathematics 36(2), 1000–1011 (2022). https://doi.org/10.1137/21M1404740

- (33) Li, W., Ng, M.K.: Some bounds for the spectral radius of nonnegative tensors. Numerische Mathematik 130, 315–335 (2015). https://doi.org/10.1007/s00211-014-0666-5

- (34) Liu, C.-S., Guo, C.-H., Lin, W.-W.: Newton–Noda iteration for finding the Perron pair of a weakly irreducible nonnegative tensor. Numerische Mathematik 137, 63–90 (2017). https://doi.org/10.1007/s00211-017-0869-7

- (35) Chen, Y., Qi, L., Zhang, X.: The Fiedler vector of a Laplacian tensor for hypergraph partitioning. SIAM Journal on Scientific Computing 39(6), 2508–2537 (2017). https://doi.org/%****␣MLPPR20221201.tex␣Line␣1950␣****10.1137/16M1094828

- (36) Eberly, D.: Least Squares Fitting of Data by Linear or Quadratic Structures, Redmond WA 98052 (Created: July 15, 1999; Last Modified: September 7, 2021)

- (37) Leskovec, J., Krevl, A.: SNAP datasets: Stanford large network dataset collection (2014)