Multi-Label Graph Convolutional Network Representation Learning

Abstract

Knowledge representation of graph-based systems is fundamental across many disciplines. To date, most existing methods for representation learning primarily focus on networks with simplex labels, yet real-world objects (nodes) are inherently complex in nature and often contain rich semantics or labels, , a user may belong to diverse interest groups of a social network, resulting in multi-label networks for many applications. The multi-label network nodes not only have multiple labels for each node, such labels are often highly correlated making existing methods ineffective or fail to handle such correlation for node representation learning. In this paper, we propose a novel multi-label graph convolutional network (ML-GCN) for learning node representation for multi-label networks. To fully explore label-label correlation and network topology structures, we propose to model a multi-label network as two Siamese GCNs: a node-node-label graph and a label-label-node graph. The two GCNs each handle one aspect of representation learning for nodes and labels, respectively, and they are seamlessly integrated under one objective function. The learned label representations can effectively preserve the inner-label interaction and node label properties, and are then aggregated to enhance the node representation learning under a unified training framework. Experiments and comparisons on multi-label node classification validate the effectiveness of our proposed approach.

Index Terms:

Multi-label learning, network embedding, deep neural networks, label correlationI Introduction

Graphs have become increasingly common structures for organizing data in many complex systems such as sensor networks, citation networks, social networks and many more [1]. Such a development raised new requirement of efficient network representation or embedding learning algorithms for various real-world applications, which seeks to learn low-dimensional vector representations of all nodes with preserved graph topology structures, such as edge links, degrees, and communities etc. The graph edges inherently reflect semantic relevance between nodes, where nodes with similar neighborhood structures usually tend to share identical labeling information, i.e., clustering together characterized by a single grouping label. For examples, in a scientific collaboration network, two connected authors often belong to a common area of science [2, 3], and in a protein-protein interaction network, proteins co-appeared in many identical protein complexes are likely to serve with the similar biological functions.

To date, a large body of work has been focused on the representation learning of graphs with simplex labels [4, 5], where each node only has a single label which is used to model node relationships, i.e., two nodes in a neighborhood are forced to have an identical unique label in the learning process. However, graph nodes associated with multiple labels are ubiquitous in many real-world applications. For example, in an image network, a photograph can belong to more than one semantic class, such as sunsets and beaches. In a patient social network, a patient may be suffering from diabetes and kidney cancer at the same time. Similarly, in many social networks, such as BlogCatalog and Flickr, users are allowed to join various groups that respectively represent their multiple interests. For all these networks, each node not only has content (or features), it is also associated with multiple class labels.

In general, multi-label graphs primarily differ from simplex-label graphs in twofold. First, every node in a multi-label graph could be associated with a set of labels, thus graph structures usually encode much more complicated relationships between nodes with shared labels, i.e., an edge could either reflect a simple relationship of some single label or interpret a very complex relationship of multiple combined labels. Second, it has been widely accepted that label correlations and dependencies are widespread between multiple labels [6, 7], i.e., the sunsets are frequently correlated with the beaches, and diabetes could finally lead to kidney cancer. Therefore, the correlation and interaction between labels could provide implicit and supplemental factors to enhance and differentiate node relationships that cannot be explicitly captured by the discrete and independent labels in a simplex-label graph.

Indeed, multi-label learning is a fundamental problem in the machine learning community [8], with significant attentions in many research domains such as computer vision [9], text classification [10] and tag recommendation [11]. However, research on multi-label graph learning is still in its infancy. Existing methods either consider graphs with simplex labels [5, 12] or treat multiple labels as plain attribute information to enhance the graph learning process [13, 14]. Such learning paradigms, however, neglect the fact that the information of one label may be helpful for the learning of another related label [6]—the label correlations may provide helpful extra information especially when some labels have insufficient training examples. To address this constrain and meanwhile move forward the graph learning theory a litter, we propose multi-label graph representation learning in this paper, where each node has a collection of features as well as a set of labels. Figure 1 illustrates the difference between our studied problem and the traditional simplex-label graph learning. We argue the key for multi-label graph learning is to efficiently combine network structures, node features and label correlations for enriched node relationships modeling in a mutually reinforced manner.

Incorporating labels and their correlations with graph structures for graph representation learning is a nontrivial task. First, in a multi-label graph, two linked nodes may share one or multiple identical labels, thus their affinity cannot be simply determined by one observed edge that is indistinguishable from others. Second, while each label can be seen as an abstraction of nodes sharing similar network structures and features, the label-label correlations would bring about dramatic impact on the node-node interactions, thus it is hard to constrain and balance the two aspects of relations modeling for an optimal graph representation learning as a whole. Recently, a general class of neural network called Graph Convolutional Networks (GCN) [15] shows good performance for learning node representations from graph structures and features by performing the supervised single-label node classification training. GCN operates directly on a graph and induces embedding vectors of nodes based on the spectral convolutional filter that enforces each node to aggregate features from all neighbors to form its representation.

In this paper, we advance this emerging tool to multi-label node classification and propose a novel model called Multi-Label GCN (ML-GCN) to specifically handle the multi-label graph learning problem. ML-GCN contains two Siamese GCNs to learn label and node representations from a high-layer label-label-node graph and a low-layer node-node-label graph, respectively. The high-layer graph learning serves to model label correlations, which only updates the label representations with preserved labels, label correlations and node community information by performing a single-label classification. The derived label representations are subsequently aggregated to enhance the low-layer graph learning, which carries out node representation learning from graph structures and features by performing a multi-label classification. Learning in these two layers can enhance each other in an alternative training manner to optimize a collective classification objective.

Our main contributions are summarized as follows:

-

1.

We advance the traditional simplex-label graph learning to a multi-label graph learning setting, which is more general and common in many real-world graph-based systems.

-

2.

Instead of treating multiple labels as flat attributes, like many existing methods do, We propose to leverage label correlations to strengthen and differentiate edge relationships between nodes.

-

3.

We propose a novel model ML-GCN to handle multi-label graphs. It can simultaneously integrate graph structures, features, and label correlations for enhanced node representation learning and classification.

The rest of this paper is organized as follows. Section II surveys the related work. Section III reviews some preliminaries, including definition of the multi-label graph learning problem and the graph convolutional networks used in our approach. The proposed model for multi-label graph embedding is introduced in Section IV. Section V reports experiments and comparisons to validate the proposed approach. Finally, Section VI concludes the paper.

II Related Works

This section presents existing works related to our studied problem in this paper, including multi-label learning and graph representation learning.

II-A Multi-label Learning

Multi-label learning is a classical research problem in the machine learning community with applications ranging from document classification and gene function prediction to automatic image annotation [16]. In a multi-label learning task, each instance is associated with multiple labels represented by a sparse label vector. The objective is to learn a classifier that can automatically assign an instance with the most relevant subset of labels [8]. Techniques for multi-label classification learning can be broadly divided into two categories [17]: the problem transformation-based and the algorithm adaption-based. The former class of methods generally transforms the multi-label classification task into a series of binary classification problems [6, 18] while adaption-based methods try to generalize some popular learning algorithms to enable a multi-label learning setting [19, 20].

Multi-label learning methods for graph-based data did not attract much attention in the past. DeepWalk [21] was proposed to learn graph representations that are then used for training a multi-label classifier. However, DeepWalk only exploits graph structures, with valuable label and label correlation information not preserved in learned node embeddings. Wang et al. [4] and Huang et al. [14] proposed to leverage labeling information along with graph structures for enriched representation learning. However, these methods either consider simplex-label graphs or treat multiple labels as plain attribute genes to support graph structure modeling. Such paradigms still neglect frequent label correlations and dependencies which are demonstrably helpful properties in multi-label learning problems [9, 10].

II-B Graph Representation Learning

Graph representation learning [1, 22] seeks to learn low-dimensional vector representations of a given network, such that various downstream analytic tasks like link prediction and node classification can be benefited. Traditional methods in this area are generally developed based on shallow neural models, such as DeepWalk[21], Node2vec[23] and LINE[24]. To preserve the node neighborhood relationships, they typically perform truncated random walk over the whole graph to generate a collection of fixed-length node sequences, where nodes within the same sequences are assumed to have semantic connections and will be mapped to be close in the learned embedding space. However, above methods only consider modeling the edge links to constrain node relations, which may be insufficient especially when the network structures are very sparse. To mitigate this issue, many methods [3, 25] are proposed to additionally embed the rich network contents or features associated such as the user profiles in a social network and the publication descriptions in a citation network. For example, TriDNR [26] was proposed to learn simultaneously from the network structures and textual contents, where structures and texts are mutually boosted to collectively constrain the similarities between learned node representations. In general, most real-word graphs are sparse in connectivity (e.g., each node only connects several others in the huge node space), while node contents or features can be leveraged to either enhance node relevance or repair the missing links in the original network structures [27].

However, above representation learning methods belong to the class of shallow neural models, which may have limitations in learning complex relational patterns between graph nodes. Recently, there is growing interest in adapting deep neural networks to handle the non-Euclidean graph data [12, 28]. Several works seek to apply the concepts of convolutional neural networks to process arbitrary graph structures [15, 24], with GCN [15] achieving state-of-the-art representation learning and node classification performance on a number of benchmark graph datasets. Following this success, Yao el al. [29] proposed a Text GCN for document embedding and text classification based on a constructed heterogeneous word-document graph. Graph Attention Networks (GAN) [12] are another recently proposed end-to-end neural network structure similar to GCNs, which introduce attention mechanisms that assign larger weights to the more important nodes, walks, or models. Inspired by these deep neural models targeted at mostly the simplex-label graphs, we generalize GCN and propose a novel training framework, ML-GCN, to address the multi-label graph learning problem in this paper.

III Problem Definition & Preliminaries

III-A Problem Definition

A multi-label graph is represented as , where is a set of unique nodes, is a set of edges and is a set of unique labels, respectively. is the total number of nodes in the graph and is the total number of labels in the labeling space. is a adjacency matrix with if and if . is a affiliation matrix of labels with if has label or otherwise . Finally, is a matrix containing all nodes with their features, , represents the feature vector of node , where is the feature vector’s dimension.

In this paper, the multi-label graph learning aims to represent nodes of graph in a new -dimensional feature space , embedding information of graph structures, features, labels and label correlations preserved, i.e., learning a mapping such that can be used to accurately infer labels associated with node .

III-B Graph Convolutional Networks

GCN [22] is a general class of convolutional neural networks that operate directly on graphs for node representation learning and classification by encoding both the graph structures and node features. In this paper, we focus on the spectral-based GCN [16], which assumes that neighborhood nodes tend to have identical labels guaranteed by each node gathers features from all neighbors to form its representation. Given a network , which has nodes and each node has a set of -dimensional features ( denotes the feature vector matrix of all nodes), GCN takes this graph as input and obtains the new low-dimensional vector representations of all nodes though a convolutional learning process. More specifically, with one convolutional layer, GCN is able to preserve the 1-hop neighborhood relationships between nodes, where each node will be represented as a dimension vector. The output feature matrix for all nodes can be computed by:

| (1) |

where is the normalized symmetric adjacency matrix. is the degree matrix of and is an identity matrix with corresponding shape. is the input feature matrix (e.g., ) for GCN and is a weight matrix for the first convolutional layer. is an activation function such as the ReLU represented by . If it is necessary to encode -hop neighborhood relationships, one can easily stack multiple GCN layers, where the output node features of the th (1 ) layer is calculated by:

| (2) |

where is the weight matrix for the th layer and is the feature vector dimension output in the hidden convolutional layer.

IV The Proposed Approach

In this section, we first present the proposed Multi-Label Graph Convolutional Networks (ML-GCN) model, where the node representations are learned and trained through the supervised classification. Then, we provide the training and optimization details which incorporate node and label representation learning by a collective objective, followed by the computation complexity analysis of the ML-GCN.

IV-A Multi-Label Graph Convolutional Networks

As discussed in previous sections, the key and challenge for multi-label graph learning are to simultaneously learn from the graph structures, features, labels and label correlations, where different aspects of learning could enhance each other to achieve a global good network representation. To support the incorporation of labels, we can simply build a heterogeneous node-label graph similar to the text GCN [29], where common nodes and label nodes are directly connected by their labeling relationships. However, such a diagram makes it hard to model the higher-order label correlations since labels must reach each other through common nodes, i.e., one cannot directly encode -hop neighborhood node relations and label correlations by a GCN with convolutional layers. To enable immediate and flexible label interactions, we consider a stratified graph structure shown in Fig. 2(a), which is defined as follows:

Label-label-node graph: In this graph, labels connect each other by their co-occurrence relations, i.e., two labels have an edge if they appear at the same time in the label set of some common node. Meanwhile, common nodes are seen as attributes that link with label nodes based on their corresponding labeling relationships.

Node-node-label graph: In this graph, common nodes link together to form the original graph structure. Meanwhile, associated label nodes are seen as the attributes of the common node-node graph.

Such a construction of the layered multi-label graph in Fig. 2(a) could bring three main favorable properties. First, the label-label connectivity allows direct and efficient higher-order label interactions by simply adjusting the number of convolutional layers in GCN. In addition, common nodes as attributes of the label nodes enable to encode graph community information in learned label representations, as nodes with identical labels tend to form a cluster or community. Lastly, the learned node representations can naturally preserve labels, label correlations and graph community information by taking label nodes as attributes of the node-node graph.

Fig. 2(b) shows the proposed ML-GCN model that contains two Siamese GCNs to simultaneously learn the label and node representations from the given multi-label graph, where input feature vectors of both the attributed label and common nodes will be regularly updated during the training. First, the high-layer GCN learns label representations from the label-label-node graph through supervised single-label classification. Let be the input feature matrix of all label nodes, be the adjacency matrix recording the co-occurrence relations between label nodes, and be the adjacency matrix of the input label-label-node graph. The first convolutional layer aggregates information from both the neighborhood label nodes and the associated common nodes (e.g., label node L1 in Fig. 2(a)), where the new -dimensional label node feature matrix is computed by:

| (3) |

where is an activation function, such as the ReLU represented by , is a weight matrix for the first label-label-node GCN layer and is a vertically stacked feature matrix. is a truncated normalized symmetric adjacency matrix obtained by:

| (4) |

where is the identity matrix, is the degree matrix with . One layer GCN only incorporates immediate label node neighbors. When higher order label correlations need to be preserved, we can easily stack multiple GCN layers (e.g., the layer number ) by:

| (5) |

where is the normalized symmetric adjacency matrix and . The last layer output label embeddings have the same size as the total number of labels, , and are through a softmax classifier to perform the single-label classification (e.g., assume we consider a two-layer GCN) by:

| (6) |

| (7) |

where and are the weight matrices for the first and second label-label-node GCN layers, respectively. Let be the one-hot label indicator matrix of all label nodes, the classification loss can be defined as the cross-entropy error computed by:

| (8) |

Then, the low-layer GCN learns node representations from the node-node-label graph. Similarly, in the first layer each convolution node aggregates information from both the neighborhood common nodes and the associated attributed label nodes (e.g., take node V2 in Fig. 2(a) as an example). Let be the adjacency matrix of the input node-node-label graph, the -dimensional node embeddings output by the first GCN layer are computed as:

| (9) |

where is a weight matrix for the first node-node-label GCN layer, and is a truncated normalized symmetric adjacency matrix obtained by:

| (10) |

where is the degree matrix with . As in the label-label-node graph, we can also incorporate -hop neighborhood information by stacking multiple GCN layers:

| (11) |

where and . The node embeddings output by the last layer have size and are passed through a sigmoid transformation to perform supervised multi-label classification with the collective cross-entropy loss (e.g., the two-layer GCN are used in this paper) over all labeled nodes computed by:

| (12) |

| (13) |

where and are the weight matrices for the first and second node-node-label GCN layers, respectively, is the set of node indices that have labels. Let be the one-hot label indicator matrix of all common nodes, then is calculated:

| (14) | ||||

The above two aspects of representation learning for labels and nodes are trained together and impact one another by sharing the common classification labeling space of from the target graph , and in the meantime a subset of input features, i.e., through the attributed label nodes in the low-level node-node graph and the attributed common nodes in the high-level label-label graph. Let the total training epoch for ML-GCN be , after -epoch training of common node representations, the input feature matrix for the label-label-node graph will be updated:

| (15) |

and in the meantime, after -epoch training of label representations, the input feature matrix for the node-node-label graph will be updated:

| (16) |

where and are weight matrices. The collective training procedure for the ML-GCN model has been summarized in Algorithm 1.

IV-B Algorithm Optimization and Complexity Analysis

As can be seen from Algorithm 1, the node representations and label representations are not learned independently, but depend on each other through shared embedding features learned from two reciprocally enhanced GCNs. In addition, the two level GCNs conduct two supervised classification tasks within the same labelling space: the top label-label-node GCN is doing a single-label classification and the bottom node-node-label GCN is doing a multi-label node classification. Finally, The global learning objective is to minimize the following collective classification loss:

| (17) |

In this paper, all weight parameters are optimized using gradient descent as in [15] and [29].

The training of ML-GCN is efficient in terms of the computational complexity. In this paper, we adopt a two-layer GCN and one-layer GCN for learning the node and feature representations, respectively. Since multiplication of the adjacency matrix (e.g., for the node-node graph and for the label-label graph) and feature matrix (e.g., and in Eqs. (6) and (12), respectively) can be implemented as a product of a sparse matrix with a dense matrix, the algorithm complexity of ML-GCN can be represented as , where and are the number of nodes and labels, and are the number of edges in node-node-label graph and label-label-node graph, respectively. is the dimension (for both nodes and labels) of input feature vectors. is dimension of the hidden feature vectors produced in the first node-node-label GCN layer of all common nodes. In addition, because for most networks , and are generally far more less than (e.g., as we will see in section V, for the Filckr dataset, is 4,332,620, compared with , and are merely 194, 3,716 and 8,052, respectively), therefore the complexity of ML-GCN is approximately equivalent to , which is the same as GCN. Meanwhile, since Eqs. (15) and (16) are not computed in each epoch (e.g., every 50 epoch), the complexity for our model is still , the same theoretical asymptotic complexity as the GCN.

V Experiments & Results

In this section, we compare the proposed approach against a set of strong baselines on three real-world datasets by conducting supervised node classification.

V-A Benchmark Datasets

We collect three multi-label networks [13, 21], BlogCatalog, Flickr, and YouTube, as the benchmark. They are described as follows.

BlogCatalog is a network of social relationships among 10,312 blogger authors (nodes), where the node labels represent bloggers’ interests such as Education, Food and Health. There are 39 unique labels in total and each node may be associated with one or multiple labels. It is easy to find that users’ labels of interest often interact and correlate with each other to enhance the affinities between blogger authors. For example, food is highly related with Health in real life, where two users have both labels food and life should be much closer compared with those whom only share either label food or label life. There are 615 co-occurrence relationships (e.g., correlations) among all 39 labels in this dataset.

Flickr is a photo-sharing network between users, where node labels represent user interests, such as Landscapes and Travel. There are 8,052 users and 4,332,620 interactions (e.g., edges) among them. Each user could have one or multiple labels of interest from the same labeling space of 194 labels in total.

YouTube is a social network formed by video-sharing behaviors, where labels represent the interest groups of users who enjoy common video genres such as anime and wrestling. Table I summaries their detailed statistic information. There are 22,693 users and 192,722 links between them. Each pair of linked users may share multiple identical labels out of the total 47 labels. The number of correlations between these labels is 1,079.

The detailed statistic information of the above three multi-label networks are summarized in Table 1.

V-B Comparative Methods

We compare the performance of the proposed method with the following state-of-the-art methods for multi-label node classification:

-

•

DeepWalk [21] is a shallow network embedding model that only preserves the topology structures. It captures the node neighborhood relations based on random walks and then derives node representations based on SkipGram model.

-

•

LINE [30] is also a structure preserving method. It optimizes a carefully designed objective function that preserves both the local and global network structures, compared with the DeepWalk that encodes only the local structures.

-

•

Node2vec [23] adopts a more flexible neighborhood sampling process than DeepWalk to capture the node relations. The biased random walk of Node2vec can capture second-order and high-order node proximity for representation learning.

-

•

GENE [13] is a network embedding method that simultaneously preserves the topology structures and label information. Different from the proposed approach in this paper, GENE simply models labels as plain attributes to enhance structure-based representation learning process, whereas our model considers multi-label correlation and network structure for representation learning.

-

•

GCN [15] is a state-of-the-art method that can naturally learn node relations from network structures and features, where each node forms its representation by adopting a spectral-based convolutional filter to recursively aggregate features from all its neighborhood nodes.

-

•

Text GCN [29] is built on GCN that aims to embed heterogeneous information network. In this paper, we construct heterogeneous node-label graph, where common nodes and label nodes are directly connected by their labeling relationships.

-

•

ML-GCNnode is a variant of the proposed ML-GCN model that removes attributes of common nodes from the label-label-node graph. Therefore, the community information is not preserved in this method.

-

•

ML-GCN1n is a variant of the proposed ML-GCN model. The only difference with ML-GCN is that ML-GCN1n takes only one convolutional layer while learning from the node-node-label graph.

-

•

ML-GCN2l is a variant of the proposed ML-GCN model, which adopts a two consecutive convolutional layers to learn from the label-label-node graph, compared with one layer in GCN.

-

•

ML-GCN is our proposed multi-label learning approach in this paper. It considers a two-layer graph structure–a high-level label-label-node graph which allows the preservation of label correlations and meanwhile a low-level node-node-label graph that enables the label correlation-enhanced node representation learning.

| Items | BlogCatalog | Flickr | YouTube |

|---|---|---|---|

| # Nodes | |||

| # Edges | |||

| # Labels | |||

| # Co-occur. |

| Metrics | Micro-F1 (%) | Macro-F1 (%) | |||||

|---|---|---|---|---|---|---|---|

| Datasets | BlogCatalog | Flickr | YouTube | BlogCatalog | Flickr | YouTube | |

| Methods | DeepWalk | ||||||

| LINE | |||||||

| Node2vec | |||||||

| GENE | |||||||

| GCN | 44.44 | ||||||

| Text GCN | 41.82 | 39.07 | |||||

| ML-GCNnode | |||||||

| ML-GCN1n | |||||||

| ML-GCN2l | 43.86 | 38.63 | 26.28 | ||||

| ML-GCN | |||||||

The above baselines can be roughly separated into three categories based on the types of information (e.g., network structures and labels) and how it is incorporated in the graph embedding models. The first class belongs to methods that only preserve graph structures, including DeepWalk, Node2vec, LINE, GCN (e.g., we use the structure-based identity matrix as the original features of all node). The second class includes GENE and Text GCN that preserve both the graph structures and label information, where the labels are modeled as plain attribute information to enhance structure-based representation learning. The proposed method MG-GCN and its variants (ML-GCNnode, ML-GCN1n, and ML-GCN2l) represent the thrid class, which not only preserve structural and label information, but also the correlations between labels.

It is worth noting that we designed three variants of MG-GCN (including ML-GCNnode, ML-GCN1n, and ML-GCN2l) to validate its performance under different settings. This allows us to fully observe ML-GCN’s performance and conclude which part is playing major roles for multiple-label GCN learning.

V-C Experiment Setup

There are many hyper-parameters involved. Some are empirically set [29] while others are selected through sensitivity experiments. For ML-GCN, we use two-layer and one-layer GCNs to learn from the node-node-label graph and the label-label-node graph respectively. We test hidden embedding size, , between 50 to 500, training ratios, , of supervised labeled instances between 0.025 and 0.2, and updating frequencies and from 10 to 100, respectively. We also compare the performance of ML-GCN through differing numbers of GCN convolutional layers (e.g., ML-GCN1n and ML-GCN2l). For comparison, we set the learning rate for gradient decent as 0.02, training epoch as 300, dropout probability as 0.5, norm regularization weight decay as 0, and the default values of , , and as 400, 0.2, 50 and 50, respectively. After selecting the labeled training instances, the rest is split into two parts: 10% as validation set and 90% for testing set.

It is necessary to mention that all baselines are set to conduct the multi-label node classification (e.g., each node can belong to multiple labels) within the same environmental settings. As metrics used in [21] and [30], we adopt Micro-F1 and Macro-F1 to evaluate the node classification performance, which are defined as follows:

| (18) |

| (19) |

where is the set of labels from the target graph G. , and denote the number of true positives, false negatives and false positives w.r.t the th label category, respectively. All experiments are repeated 10 times with the average results and their standard deviations reported.

V-D Experimental Results

Table II presents the comparative results of all methods with respect to the multi-label classification performance within the same environment settings, where the top three best results have been highlighted. From the table, we have the following four main observations.

-

•

Among all methods that encode only the graph topology structures, the shallow neural networks-based methods (e.g., DeepWalk, LINE and Node2vec) perform poorly with a wide gap compared with deep model GCN over all three datasets, i.e., on BlogCatalog network, the classification performance of GCN improved 30.6% and 70.9% over Node2vec w.r.t Micro-F1 and Macro-F1, respectively. This is because shallow models have limitations in learning complex relational patterns among nodes [1]. For example, although Node2vec relies on a carefully designed random walk process to capture the node neighborhood relationships, it cannot differentiate the affinities between a node and others within the same walk sequence. In comparison, GCN uses a more efficient way to constrain the neighborhood relations between nodes, where each node only interact with its neighbors in each convolution layer. Such a learning paradigm is more accurate to maintain the actual node relevance reflected by the edge links without introducing noise neighborhood relationships as in Node2vec.

-

•

In terms of the performance of methods (GENE and Text GCN) that have incorporated the labels to enhance the structures modeling. GENE is built on DeepWalk to additionally preserve the label information. We can observe that GENE performs slightly better than DeepWalk on both Flickr and YouTube datasets. The reason is that each label is considered as the higher-level summation of a group of similar nodes, thus can be used to supervise and distinguish the neighborhood affinities between nodes within the same random walk sequence. Nevertheless, LINE and Node2vec can perform better than GENE in most cases over three datasets, i.e., the Micro-F1 performance of LINE and Node2vec on BlogCatalog increased 2.0% and 4.6%, respectively. The reason is probably that they have adopted more efficient random walk process to capture node neighborhood relationships. In addition, as we can see from Table II, the label-preserved model, Text GCN, has no advantages compared with the basic GCN model. The reason is probably that the labels are considered as attributes that have not been leveraged in a meaningful manner. In other words, only the labeled nodes have the attribute of labels in the supervised node representation leaning and training, the scattered labels could have become the noisy information to confuse the neighborhood relationships modeling between nodes.

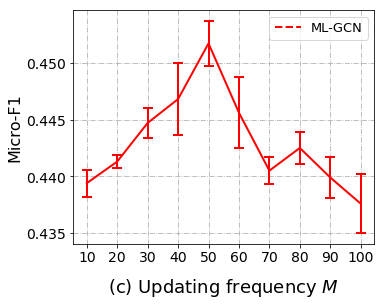

Figure 4: Impact of the information updating frequencies.

Figure 5: Impact of the hidden node embedding size. -

•

For deep models that both preserved graph structures, labels and label correlations, we can observe that ML-GCN is consistently superior to the Text GCN in leaning multi-label graphs over all three datasets. The reasons are mainly in three-fold. First, ML-GCN allows immediate and efficient label correlation modeling without depending on common nodes, i.e., labels directly interact with each other over the label-label graph in the proposed model. Second, it is common to use different numbers of convolutional layer (e.g., based on the results observed by comparing ML-GCN and ML-GCN2l, where the former performs far more better) to learn from the label and node graphs respectively, since the node-node graph is much more complicated than the label-label graph that involves simple label interaction patterns. To obtain a model that best fits the given label and node graphs of different scales, one can easily change the number of layers used by the two-layer graph modelings in ML-GCN independently. But this is hard for Text GCN to coordinate the layer settings that are most suitable to model node relations and label relations simultaneously in the node-label graph. Finally, in the structure design of ML-GCN, each label has preserved the community information by taking all related common nodes as attributes, which make the node relations modeling and the label correlations modeling more dependent on each other to optimize the global network representation learning, i.e., the node representations of one label could refine the node representation learning of another correlated label, as we will demonstrate in the case study later.

-

•

In terms of the performance of different variants for the proposed ML-GCN, we can condlude that Ml-GCN is superior to ML-GCNnode, ML-GCN1n and ML-GCN2l, where the possible reasons are given as follows: 1) The comparison between ML-GCN and ML-GCNnode demonstrates that taking the common nodes as attributes of the label-label network is beneficial, where labels could learn more enriched representations (with encoding label correlations and the node communities) to refine the neighborhood feature aggregation for node representation learning in the low-level node-node graph; 2) ML-GCN performs better than ML-GCN2l, which illustrates a single-layer GCN is appropriate to model the label correlations, since compared with the node-node interactions, the label-label interactions are generally simple and explicit; 3) ML-GCN2l is poorly performed than ML-GCN, which demonstrates that exploring the high-order neighborhood relationships between nodes is important.

V-E Parameter Sensitivity

We designed extensive experiments to test the sensitivities of various parameters between a wider range of values, such as the training ratio of labeled nodes, the feature updating frequencies and , and the embedding size of the first hidden convolution layer while modeling the node-node-label graph. Fig. 3 shows the impacts of different portions of labeled training instances. In general, for all test models, we can observe that both the Micro-F1 (e.g., Fig. 3(a)) and Macro-F1 (e.g., Fig. 3(b)) performances increase with more labeled training nodes. This is reasonable since all these models adopt a supervised node representation learning and training manner, where the model parameters can be fully trained with larger labeled data [29]. Fig. 4 shows the influence of the input feature-updating frequencies controlled by and (e.g., used in Eqs. (15) and (16)). We can see from Fig. 4(a) where the performance changes with but no clear patterns can be observed in Micro-F1, while Fig. 4(b) shows an deceasing trend with larger values of in the Macro-F1 scores. In comparison, from Fig. 4(c) and Fig. 4(d) the accuracy first increases then decreases with larger values of w.r.t. both Micro-F1 and Macro-F1 scores. We also test the impact of node embedding size generated by the first convolutional layer with the trend shown in Fig. 5(a). The accuracy fluctuates before peaking at 400 and 450 w.r.t. Micro-F1 and Macro-F1 results, followed by a sharp decline.

V-F Case Study

To illustrate how label correlations affect the multi-label graph learning performance, we present the classification results through four related label categories shown in Fig. 6. Fig. 7 presents their correlation matrix, where deeper colors imply higher correlation between two corresponding labels, i.e., L1 and L2 are highly correlated. We can see in Fig. 6 that ML-GCN and GCN perform similarly with respect to the node classification of category L1. However, the accuracy of MC-GCN improved over GCN with respect to categories L2, L3 and L4. It is interesting to note how much these categories improved (e.g., L2 L3 L4) is related to how frequently they correlate with label L1 respectively. This phenomenon might be caused by the fact that the L1 has an impact on its correlated labels during training. This also verifies that label interaction is critical for multi-label graph learning, and our proposed ML-GCN model can effectively capture and utilize this property.

VI Conclusions

In this paper, we formulated a new multi-label network representation learning problem, where each node of the network may have multiple labels. To simultaneously explore label-label correlation and the network topology, we proposed a multi-label graph convolution network (ML-GCN) to build two siamese GCNs, a node-node-label graph and a label-label-node graph from the multi-label network, and carry out learning of node representation and label representation from the two GCNs simultaneously. Because the two GCNs are unified to achieve one optimization goal, the learning of node representation and label representation can mutually benefit each other for maximum performance gain. Experiments on three real-word datasets verified the effectiveness of ML-GCN in combining labels, label correlations, and graph structures for enhanced node representation learning and classification.

References

- [1] D. Zhang, J. Yin, X. Zhu, and C. Zhang, “Network representation learning: A survey,” IEEE Trans. on Big Data, 2018.

- [2] J. Yang and J. Leskovec, “Defining and evaluating network communities based on ground-truth,” Knowledge and Information Systems, vol. 42, no. 1, pp. 181–213, 2015.

- [3] L. Liao, X. He, H. Zhang, and T.-S. Chua, “Attributed social network embedding,” IEEE Transactions on Knowledge and Data Engineering, vol. 30, no. 12, pp. 2257–2270, 2018.

- [4] X. Wang, P. Cui, J. Wang, J. Pei, W. Zhu, and S. Yang, “Community preserving network embedding,” in Thirty-First AAAI Conference on Artificial Intelligence, 2017.

- [5] T. N. Kipf and M. Welling, “Variational graph auto-encoders,” arXiv preprint arXiv:1611.07308, 2016.

- [6] S.-J. Huang and Z.-H. Zhou, “Multi-label learning by exploiting label correlations locally,” in Twenty-sixth AAAI conference on artificial intelligence, 2012.

- [7] W. Bi and J. T. Kwok, “Multilabel classification with label correlations and missing labels,” in Twenty-Eighth AAAI Conference on Artificial Intelligence, 2014.

- [8] V. Kumar, A. K. Pujari, V. Padmanabhan, S. K. Sahu, and V. R. Kagita, “Multi-label classification using hierarchical embedding,” Expert Systems with Applications, vol. 91, pp. 263–269, 2018.

- [9] J. Zhang, Q. Wu, C. Shen, J. Zhang, and J. Lu, “Multilabel image classification with regional latent semantic dependencies,” IEEE Transactions on Multimedia, vol. 20, no. 10, pp. 2801–2813, 2018.

- [10] S. Burkhardt and S. Kramer, “Online multi-label dependency topic models for text classification,” Machine Learning, vol. 107, no. 5, pp. 859–886, 2018.

- [11] M. Shi, J. Liu, D. Zhou, and Y. Tang, “A topic-sensitive method for mashup tag recommendation utilizing multi-relational service data,” IEEE Transactions on Services Computing, 2018.

- [12] P. Veličković, G. Cucurull, A. Casanova, A. Romero, P. Lio, and Y. Bengio, “Graph attention networks,” arXiv preprint arXiv:1710.10903, 2017.

- [13] J. Chen, Q. Zhang, and X. Huang, “Incorporate group information to enhance network embedding,” in Proceedings of the 25th ACM International on Conference on Information and Knowledge Management. ACM, 2016, pp. 1901–1904.

- [14] X. Huang, J. Li, and X. Hu, “Label informed attributed network embedding,” in Proceedings of the Tenth ACM International Conference on Web Search and Data Mining. ACM, 2017, pp. 731–739.

- [15] T. N. Kipf and M. Welling, “Semi-supervised classification with graph convolutional networks,” arXiv preprint arXiv:1609.02907, 2016.

- [16] W. Liu and I. W. Tsang, “Large margin metric learning for multi-label prediction,” in Twenty-Ninth AAAI Conf. on Artificial Intelligence, 2015.

- [17] M.-L. Zhang and Z.-H. Zhou, “A review on multi-label learning algorithms,” IEEE transactions on knowledge and data engineering, vol. 26, no. 8, pp. 1819–1837, 2014.

- [18] M.-L. Zhang, Y.-K. Li, X.-Y. Liu, and X. Geng, “Binary relevance for multi-label learning: an overview,” Frontiers of Computer Science, vol. 12, no. 2, pp. 191–202, 2018.

- [19] M.-L. Zhang and Z.-H. Zhou, “Ml-knn: A lazy learning approach to multi-label learning,” Pattern recognition, vol. 40, no. 7, pp. 2038–2048, 2007.

- [20] J. Nam, J. Kim, E. L. Mencía, I. Gurevych, and J. Fürnkranz, “Large-scale multi-label text classification—-revisiting neural networks,” in Joint european conference on machine learning and knowledge discovery in databases. Springer, 2014, pp. 437–452.

- [21] B. Perozzi, R. Al-Rfou, and S. Skiena, “Deepwalk: Online learning of social representations,” in Proceedings of the 20th ACM SIGKDD international conference on Knowledge discovery and data mining. ACM, 2014, pp. 701–710.

- [22] Z. Wu, S. Pan, F. Chen, G. Long, C. Zhang, and P. S. Yu, “A comprehensive survey on graph neural networks,” arXiv preprint arXiv:1901.00596, 2019.

- [23] A. Grover and J. Leskovec, “node2vec: Scalable feature learning for networks,” in Proc. of the 22nd ACM SIGKDD international conference on Knowledge discovery and data mining. ACM, 2016, pp. 855–864.

- [24] M. Defferrard, X. Bresson, and P. Vandergheynst, “Convolutional neural networks on graphs with fast localized spectral filtering,” in Advances in neural information processing systems, 2016, pp. 3844–3852.

- [25] C. Yang, Z. Liu, D. Zhao, M. Sun, and E. Chang, “Network representation learning with rich text information,” in Twenty-Fourth International Joint Conference on Artificial Intelligence, 2015.

- [26] S. Pan, J. Wu, X. Zhu, C. Zhang, and Y. Wang, “Tri-party deep network representation,” Network, vol. 11, no. 9, p. 12, 2016.

- [27] T. M. Le and H. W. Lauw, “Probabilistic latent document network embedding,” in 2014 IEEE International Conference on Data Mining. IEEE, 2014, pp. 270–279.

- [28] F. Scarselli, M. Gori, A. C. Tsoi, M. Hagenbuchner, and G. Monfardini, “The graph neural network model,” IEEE Transactions on Neural Networks, vol. 20, no. 1, pp. 61–80, 2009.

- [29] L. Yao, C. Mao, and Y. Luo, “Graph convolutional networks for text classification,” arXiv preprint arXiv:1809.05679, 2018.

- [30] J. Tang, M. Qu, M. Wang, M. Zhang, J. Yan, and Q. Mei, “Line: Large-scale information network embedding,” in Proceedings of the 24th international conference on world wide web. International World Wide Web Conferences Steering Committee, 2015, pp. 1067–1077.