Chongqing University of Posts and Telecommunications, China

11email: [email protected], {binliu, wangjin}@cqupt.edu.cn 22institutetext: School of Informatics, Aristotle University of Thessaloniki, Greece

22email: [email protected]

Multi-Label Adaptive Batch Selection by Highlighting Hard and Imbalanced Samples

Abstract

Deep neural network models have demonstrated their effectiveness in classifying multi-label data from various domains. Typically, they employ a training mode that combines mini-batches with optimizers, where each sample is randomly selected with equal probability when constructing mini-batches. However, the intrinsic class imbalance in multi-label data may bias the model towards majority labels, since samples relevant to minority labels may be underrepresented in each mini-batch. Meanwhile, during the training process, we observe that instances associated with minority labels tend to induce greater losses. Existing heuristic batch selection methods, such as priority selection of samples with high contribution to the objective function, i.e., samples with high loss, have been proven to accelerate convergence while reducing the loss and test error in single-label data. However, batch selection methods have not yet been applied and validated in multi-label data. In this study, we introduce a simple yet effective adaptive batch selection algorithm tailored to multi-label deep learning models. It adaptively selects each batch by prioritizing hard samples related to minority labels. A variant of our method also takes informative label correlations into consideration. Comprehensive experiments combining five multi-label deep learning models on thirteen benchmark datasets show that our method converges faster and performs better than random batch selection.

Keywords:

Multi-label classification batch selection class imbalance.1 Introduction

Multi-label classification (MLC), which is concerned with assigning multiple labels to each instance at once, arises frequently as a task across various domains, including text categorization [13], bioinformatics [35], and automatic image labeling [4]. For instance, a news article could span categories like Technology, Economy, and Health.

Deep learning has recently proven successful in addressing multi-label classification challenges [34, 1, 16, 40, 15, 14]. By forming appropriate latent embedding spaces, deep neural networks manage to unravel the complex dependencies between features and labels in multi-label data [34, 1]. Moreover, deep learning models can successfully dissect and analyze label correlations [14, 40]. In addition, their inherent strength in representation learning allows them to naturally model label-specific features [16].

Recently, the issue of class imbalance in multi-label data has garnered growing interest [29]. Due to the inherent nature of multi-label data, label frequencies (the number of instances relevant to each label) are significantly diverse. The high-frequent majority labels typically dominate the training process of multi-label classifiers, leading to an undue ignorance of less-frequent minority labels. Class imbalance impacts the training of deep learning models too. Conventional training of multi-label deep neural networks relies on an optimizer with randomly sampled mini-batches, i.e., every sample has an equal chance to be selected in the batch [39]. Due to label rarity and batch size, instances associated with minority labels seldom appear in mini-batches over epochs. In each epoch, the features of instances associated with minority labels are exposed within fewer batches, leading to deep learning models inadequately learning more difficult minority labels.

Figure 1 shows the loss density distributions of the DELA [15] model on the bibtex dataset during the 30-th and 70-th epoch across samples associated with different numbers of minority labels. Obviously, instances associated with more minority labels tend to suffer higher losses throughout the entire training process. The connection between the higher loss and minority labels offers valuable insight: similar to higher loss samples, instances associated with minority labels are more crucial for training models. Samples with higher loss, also called hard samples, pose a challenge for the deep learning models. It has been demonstrated that emphasis on hard samples in batch selection can significantly improve the generalization ability [26] and speed up the convergence of deep learning models in single-label data scenario [22, 6, 18]. However, choosing proper multi-label samples for each batch has not been investigated yet.

In this paper, we introduce an adaptive batch selection algorithm tailored for multi-label scenarios, designed to boost the training efficiency and performance of deep learning models. Our approach utilizes the widely adopted multi-label binary cross entropy loss to assess the difficulty of each sample, and imposes more attention on samples suffering global and local imbalance. The goal is to derive an adaptive loss that calculates the selection probability of each sample without adding to the time overhead. In addition, by adopting quantization index-based probability assignment, batch selection becomes insensitive to the small loss change and results in a smoother overall probability distribution. In addition, a variant of our method leverages a chain-based selection strategy to exploit label correlations explicitly. Integrating the adaptive batch selection method with five multi-label deep learning models achieves significantly improved performance and faster convergence than the default batch selection strategy.

2 Related Work

2.1 Multi-Label Classification

Multi-label classification, leverages techniques that harness label correlations to streamline the learning process and navigate complexity through effective use of label relationships [31, 38]. Broadly, these methodologies can be categorized based on the complexity of label correlations they consider: first-order (e.g., BR, MLkNN [3, 37]), second-order (e.g., CLR [12]), and high-order correlations (e.g., CC, RAkEL [24, 33]).

Recently, deep learning has become a successful technique to solve the multi-label classification problem BP-MLL [36] is the first neural network architecture for multi-label learning, which develops a pairwise loss function to explore label dependencies. Deep embedding-based methods have demonstrated effectiveness by using deep neural networks to harmonize the latent spaces of features and labels. For instance, C2AE [34] embeds both feature and label data into deep latent space, employing a label-correlation sensitive loss function for end-to-end label output prediction. MPVAE [1] aligns probabilistic embedding spaces for labels and features, utilizing a decoder to model the joint distribution of output targets based on a multivariate probit model, emphasizing label correlation capture. Several works focus on exploiting deep neural networks to capture label correlations. For instance, PACA [16] creates a latent metric space regulated by label correlation, learning prototypes and metrics for each label, and employs normalizing flows for capturing class label characteristics. HOT-VAE [40] is an attention-based framework for multi-label classification, which innovatively learns high-order label correlations adaptive to feature changes. Additionally, due to the powerful representation learning capability of deep neural networks, it is quite natural to consider the problem of label-specific features in the deep learning scenario. For instance, CLIF [14] merges label semantics learning with the extraction of label-specific features, incorporating a graph autoencoder for semantic relations and a module for disentangling label-specific features. DELA [15] implements a perturbation-based technique for label-specific feature stability within a probabilistically relaxed expected risk minimization framework.

The imbalanced approaches proposed for MLC can be divided into three categories: sampling methods, classifier adaptation, and ensemble approaches [29]. Sampling methods aim to balance the label distribution by either oversampling [7, 19, 30] minority labels or undersampling [8, 23] majority ones, thus aiming to produce the more balanced versions of the training set before training. Classifier adaptation [11] techniques modify existing algorithms to make them more sensitive to label imbalance, often by adjusting decision thresholds or incorporating imbalance-aware loss functions. Ensemble approaches [20] combine multiple models or algorithms to leverage their collective strength, often incorporating mechanisms to specifically address label imbalance, such as weighted voting or selective ensemble training focused on underrepresented labels.

In the field of multi-label learning, the existing batch selection algorithms are only applicable to active multi-label learning tasks [5, 10], which are different from the scope of our multi-label classification. Active multi-label learning aims to progressively select unlabeled samples with the maximum information for manual annotation.

2.2 Hard Sample and Batch Selection in Single Label Data

Hard samples are crucial for deep learning as they drive the model to refine its decision boundaries and improve generalization, making the learning process more effective and robust [26, 21, 17]. For example, OHEM (Online Hard Example Mining) [26] focuses on training with hard samples, identified by their high losses, and exclusively using these instances to update the model’s gradients.

Recent studies have pointed out that the performance of deep neural networks depends heavily on how well the mini-batch samples are selected. Meanwhile, it has been verified that neural networks converge faster with the help of intelligent batch selection strategies [6, 18, 27]. Techniques that select mini-batches based on sample difficulty have enhanced network precision and hastened training convergence. For instance, Online Batch Selection [22] boosts training efficiency by ranking samples according to their recent loss values and adjusting their selection probability with an exponential decay based on rank. This approach strategically prioritizes higher-loss samples for upcoming mini-batches. Further, Recency Bias [27] targets samples with fluctuating predictions, using recent history to gauge uncertainty and increase their sampling probability for upcoming batches. Ada-Boundary [28] prioritizes samples near the decision boundary where the model is uncertain, accelerating convergence by focusing on these critical samples. In multi-label settings, the complexity of measuring uncertainty for each label and aggregating these measures significantly increases computational demands. Additionally, the inherent sparsity and inter-label correlations in multi-label datasets can render traditional uncertainty-based selection methods less effective, resulting in suboptimal batch choices.

3 Proposed Method

3.1 Preliminaries

Let denote the input space and denote the label space with q labels. A multi-label instance is denoted as , with as the feature vector and indicating the relevant labels set. A binary vector of dimension , with elements in , represents the set of labels . Here, implies that , and indicates that . The objective of multi-label classification is to learn a prediction function based on a dataset . For any new example , the function predicts a subset of labels as relevant.

In multi-label data, the metric [7] is commonly used to evaluate the level of imbalance for a particular label. Let be the count of samples for which the value of the -th label is . Then the metric is defined as:

| (1) |

where denotes the greatest number of instances for any label that is assigned a value of 1. measures the average imbalance across all labels. Let represent the set of minority labels, defined by those labels whose exceeds the . A label is classified as a minority if and as a majority otherwise.

Let denote a local imbalance matrix, where signifies the local imbalance for instance regarding label , defined by the proportion of neighboring instances that belong to a different class.

| (2) |

where represents the indicator function, which yields 1 if is true and 0 otherwise, and represents the -nearest neighbors (NNs) of based on the Euclidean distance.

3.2 Rank-Based Batch Selection

The rank-based batch selection [22] sorts samples by loss, assigns sample selection probabilities based on rank, and selects mini-batches accordingly. Given a dataset of samples, the probability of selecting the -th sample is:

| (3) |

where denotes the rank of the elements in ascending order within . Here is defined as the vector with its -th element representing the model loss of the -th sample. Furthermore, represents the selection pressure, influencing the disparity in probabilities between the most and least significant samples.

In multi-label classification neural networks, the loss function usually involves several components. For example, the KL divergence is used by many models [1, 15, 40], to quantify the difference between the distribution of input and latent space. However, this loss function component is not directly related to the classification target. We instead utilize multi-label binary cross-entropy as the loss for rank-based batch selection. The rationale behind this choice and its effectiveness are thoroughly discussed and validated in Section 4.5.

3.3 Class Imbalance Aware Weighting

To increase the likelihood of minority label instances being included in batches. we introduce a weight for each instance and incorporate it into . Let represent the matrix that captures local imbalance, defined by:

| (4) |

where normalization is applied to the local class imbalance of label for all instances to emphasize labels with fewer samples. Meanwhile, is used to exclude the influence of outliers. Further, let be the imbalance vector, with for each instance, accumulating local imbalance from non-noisy minority labels. Given a vector , the instance weight vector is defined as: . Then, upon combining with , the loss vector can be rewritten as:

| (5) |

where represents the Adam product. It’s important to highlight that is solely utilized for determining sample probability in the batch selection and does not contribute to the model’s gradient update process. All data has a chance to be selected during the training process to avoid introducing system bias

3.4 Incorporate Quantization Index

In Eq.3, the ranking-based method for sample selection probability tends to magnify small differences, resulting in significant (disproportionate) shifts in rankings and selection probabilities.

A feasible way to circumvent this is to exploit the quantization index [9] to smooth the rank value and limit it within the range of . The quantization function 111 is upward rounding function is given by:

| (6) |

where is defined as the adaptive quantization step size, equivalent to . Here, represents the maximum element of . Combining , the probability of sample selection in multi-label adaptive batch can be rewritten as:

| (7) |

Figure 2 shows the workflow of the model training with adaptive batch selection, the red line indicates adaptability on a batch basis.

Before the training, the set is initialized to track each sample’s . Meanwhile, the model requires a continuous warm-up phase of epochs to alleviate the instability caused by initial random initialization. In adaptive batch selection, each sample’s selection probability is linked to its quantization index , calculated by using an adaptive step size . Here, denotes the highest loss among all samples after the previous batch of training ends. After each batch, we adjust the quantization index based on and then update by recalculating each sample’s .

3.5 Variant of Adaptive Batch Selection Exploiting Label Correlations

It is well-known that label correlations are significant in multi-label learning. Label correlations can provide additional information, especially when some labels have insufficient training samples.

By considering label correlation, we propose a chain-based adaptive batch selection method.

First, we select the seed sample based on the selection probability set .

Given that is the seed sample’s associated label with the maximum , we employ the adjacency matrix to identify the ( represents the label cardinality) labels most closely related to 222 is the symmetric conditional probability matrix, which measures the co-occurrence relationship between labels, and the definition can be found in the appendix of supplementary materials., constituting the set . We then define as the collection of instances linked to the labels within .

From the second sample onward, selection relies on a new probability set , defined as .

The selected sample then becomes the new seed sample for the next round of selection. This process is repeated until it reaches the batch size.

3.6 Convergence Guarantee

Adaptive batch selection methods typically meet Adam’s convergence standards, assuming their sampling distributions are strictly positive and gradient estimates unbiased. We theoretically demonstrate that our approach meets these key convergence conditions and the Proof can be found in the appendix of supplementary materials.

4 Experiments and Analysis

4.1 Experiment Setup

4.1.1 Datasets

We analyze thirteen multi-label datasets spanning text, image, and bioinformatics domains, sourced from the MULAN repository [32].

| name | Card | Dens | MeanIR | domain | name | Card | Dens | MeanIR | domain | ||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Corel5ka | 5000 | 499 | 374 | 3.52 | 0.01 | 189.57 | images | tmc2007 | 28596 | 490 | 22 | 2.15 | 0.10 | 15.16 | text |

| rcv1subset3 | 6000 | 944 | 101 | 2.61 | 0.03 | 68.33 | text | bibtex | 7395 | 1836 | 159 | 2.40 | 0.02 | 12.50 | text |

| rcv1subset1 | 6000 | 944 | 101 | 2.88 | 0.03 | 54.49 | text | enron | 1702 | 1001 | 53 | 3.38 | 0.06 | 9.93 | text |

| rcv1subset2 | 6000 | 944 | 101 | 2.63 | 0.03 | 45.51 | text | yeast | 2417 | 103 | 14 | 4.24 | 0.30 | 7.20 | biology |

| yahoo-Arts | 7484 | 2314 | 25 | 1.67 | 0.07 | 26.00 | text | LLOG-F | 1460 | 1004 | 75 | 15.93 | 0.21 | 5.39 | text |

| cal500 | 502 | 68 | 174 | 26.04 | 0.15 | 20.58 | music | scene | 2407 | 294 | 6 | 1.07 | 0.18 | 1.25 | images |

| yahoo-Business | 11214 | 2192 | 28 | 1.47 | 0.06 | 16.90 | text |

Characteristics and imbalance levels of these datasets are detailed in Table 1, including Card, the mean labels per instance associated, and Dens, the ratio of Card to the overall label count.

4.1.2 Evaluation Metrics

4.1.3 Base Classifier and Implementation Details

We used five multi-label deep models, namely C2AE [34], MPVAE [1], PACA [16], CLIF [14], and DELA [15]. We configure each model according to the parameter settings provided in the original paper and its source code, including layer dimensions, activation functions, and other specifics. For any hyperparameters not detailed, we standardize configurations across batch selection methods to maintain consistency. For optimization, Adam, with a batch size of 128, weight decay of 1e-4, and momentums of 0.999 and 0.9, is employed. As for the adaptive batch selection parameters, we used the best selection pressure , obtained from , and set the warm-up threshold to 3. Technically, a small is enough to warm up, but to reduce the performance variance caused by randomly initialized, we use the first three epochs as the warm-up period and share the model parameters for all strategies during the warm-up period [28]. We employ five-fold cross-validation to evaluate the above approaches on the 13 data sets. In each fold, we record the results on the test set at the best epoch of the validation set. Our code can be found in Anonymous GitHub 1.

4.2 Experimental Results

Table 2 shows the comparative average performance metrics of two different batch selection techniques, adaptive batch selection, and random batch selection, in different models. "Random" denotes the use of random batch selection with "shuffle=True", which refers to the batch selection used by these models. Adaptive batch selection consistently outperformed random selection, achieving the highest rankings across various evaluation metrics in most datasets. This advantage was particularly pronounced in datasets with high imbalance, such as Corel5k, rcv1subset1, rcv1subset2, rcv1subset3, as well as in those with a large number of labels, such as Corel5k, bibtex, and cal500. Adaptive batch selection significantly enhances the performance of embedding-based models like C2AE and models employing sophisticated neural networks, such as the CLIF. These results highlight our method’s broad effectiveness in deep learning models.

| C2AE | Macro-F | Micro-F | Macro-AUC | Ranking Loss | Hamming Loss | One Error | |||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Dataset | Random | Adaptive | Random | Adaptive | Random | Adaptive | Random | Adaptive | Random | Adaptive | Random | Adaptive | |

| Corel5k | 0.0702 | 0.0726 | 0.1943 | 0.1985 | 0.6165 | 0.6361 | 0.2922 | 0.2832 | 0.0533 | 0.0393 | 0.8202 | 0.8002 | |

| rcv1subset3 | 0.2659 | 0.2768 | 0.4387 | 0.4481 | 0.8136 | 0.8298 | 0.1287 | 0.1187 | 0.0362 | 0.0344 | 0.5423 | 0.5321 | |

| rcv1subset1 | 0.2611 | 0.2723 | 0.4565 | 0.4664 | 0.8396 | 0.8589 | 0.0930 | 0.0917 | 0.0399 | 0.0380 | 0.5081 | 0.4918 | |

| rcv1subset2 | 0.2505 | 0.2609 | 0.4463 | 0.4544 | 0.8157 | 0.8277 | 0.1114 | 0.1067 | 0.0369 | 0.0346 | 0.5505 | 0.5355 | |

| yahoo-Arts1 | 0.2007 | 0.2146 | 0.3973 | 0.4064 | 0.7108 | 0.7287 | 0.1188 | 0.1129 | 0.0528 | 0.0528 | 0.5192 | 0.5147 | |

| cal500 | 0.2473 | 0.2634 | 0.4641 | 0.4880 | 0.5781 | 0.5972 | 0.2610 | 0.2413 | 0.2616 | 0.2417 | 0.1615 | 0.1391 | |

| yahoo-Business1 | 0.2224 | 0.2243 | 0.4152 | 0.4176 | 0.7478 | 0.7455 | 0.0373 | 0.0379 | 0.0452 | 0.0446 | 0.8239 | 0.8199 | |

| tmc2007 | 0.4332 | 0.4405 | 0.5643 | 0.5895 | 0.8697 | 0.8711 | 0.0785 | 0.0764 | 0.0700 | 0.0685 | 0.2710 | 0.2691 | |

| bibtex | 0.2683 | 0.2778 | 0.3736 | 0.3788 | 0.8594 | 0.8674 | 0.1318 | 0.1216 | 0.0194 | 0.0147 | 0.4878 | 0.4443 | |

| enron | 0.2587 | 0.2692 | 0.5510 | 0.5622 | 0.6690 | 0.6698 | 0.1610 | 0.1496 | 0.0901 | 0.0840 | 0.3771 | 0.3354 | |

| yeast | 0.4040 | 0.4275 | 0.6536 | 0.6567 | 0.7045 | 0.7100 | 0.1719 | 0.1723 | 0.2011 | 0.1981 | 0.2527 | 0.2362 | |

| LLOG-F | 0.3021 | 0.3066 | 0.5556 | 0.5694 | 0.6375 | 0.6419 | 0.2011 | 0.1961 | 0.2075 | 0.1976 | 0.2340 | 0.2066 | |

| scene | 0.7249 | 0.7368 | 0.7163 | 0.7277 | 0.9303 | 0.9361 | 0.0920 | 0.0892 | 0.0968 | 0.0942 | 0.2605 | 0.2508 | |

| MPVAE | Macro-F | Micro-F | Macro-AUC | Ranking Loss | Hamming Loss | One Error | |||||||

| Corel5k | 0.1131 | 0.1155 | 0.2196 | 0.2311 | 0.7025 | 0.7054 | 0.2316 | 0.2322 | 0.0254 | 0.0245 | 0.6903 | 0.7009 | |

| rcv1subset3 | 0.3190 | 0.3262 | 0.4733 | 0.4751 | 0.8971 | 0.9051 | 0.0702 | 0.0697 | 0.0301 | 0.0296 | 0.4207 | 0.4182 | |

| rcv1subset1 | 0.3514 | 0.3605 | 0.4664 | 0.4780 | 0.8997 | 0.9071 | 0.0693 | 0.0681 | 0.0358 | 0.0349 | 0.4275 | 0.4148 | |

| rcv1subset2 | 0.3532 | 0.3595 | 0.4820 | 0.4854 | 0.9032 | 0.9082 | 0.0659 | 0.0628 | 0.0302 | 0.0300 | 0.4173 | 0.4112 | |

| yahoo-Arts1 | 0.3364 | 0.3528 | 0.4684 | 0.4780 | 0.7456 | 0.7492 | 0.1384 | 0.1341 | 0.0572 | 0.0565 | 0.5095 | 0.5039 | |

| cal500 | 0.2026 | 0.2084 | 0.3664 | 0.3783 | 0.5365 | 0.5379 | 0.3183 | 0.3205 | 0.2394 | 0.2381 | 0.3662 | 0.3648 | |

| yahoo-Business1 | 0.3409 | 0.3580 | 0.4670 | 0.4774 | 0.7865 | 0.7923 | 0.0452 | 0.0421 | 0.0179 | 0.0161 | 0.8235 | 0.8202 | |

| tmc2007 | 0.5154 | 0.5242 | 0.6275 | 0.6326 | 0.8918 | 0.8947 | 0.0612 | 0.0606 | 0.0700 | 0.0696 | 0.2583 | 0.2528 | |

| bibtex | 0.3395 | 0.3385 | 0.4522 | 0.4543 | 0.8724 | 0.8740 | 0.1045 | 0.1026 | 0.0145 | 0.0144 | 0.4101 | 0.4041 | |

| enron | 0.3007 | 0.2964 | 0.5531 | 0.5548 | 0.7251 | 0.7241 | 0.1418 | 0.1390 | 0.0838 | 0.0832 | 0.3041 | 0.2967 | |

| yeast | 0.4434 | 0.4486 | 0.6235 | 0.6261 | 0.6949 | 0.7013 | 0.1933 | 0.1907 | 0.2178 | 0.2182 | 0.2708 | 0.2710 | |

| LLOG-F | 0.4179 | 0.4297 | 0.5531 | 0.5635 | 0.7667 | 0.7692 | 0.2387 | 0.2360 | 0.1915 | 0.1908 | 0.3070 | 0.2796 | |

| scene | 0.7702 | 0.7833 | 0.7608 | 0.7762 | 0.9462 | 0.9484 | 0.0693 | 0.0655 | 0.0835 | 0.0782 | 0.2106 | 0.1965 | |

| PACA | Macro-F | Micro-F | Macro-AUC | Ranking Loss | Hamming Loss | One Error | |||||||

| Corel5k | 0.1447 | 0.1431 | 0.2372 | 0.2359 | 0.7468 | 0.7543 | 0.1618 | 0.1587 | 0.0282 | 0.0277 | 0.6982 | 0.7047 | |

| rcv1subset3 | 0.3500 | 0.3576 | 0.4870 | 0.4899 | 0.9091 | 0.9165 | 0.0526 | 0.0507 | 0.0314 | 0.0306 | 0.4125 | 0.4077 | |

| rcv1subset1 | 0.3540 | 0.3586 | 0.4587 | 0.4618 | 0.9066 | 0.9099 | 0.0528 | 0.0515 | 0.0367 | 0.0364 | 0.4301 | 0.4243 | |

| rcv1subset2 | 0.3512 | 0.3547 | 0.4893 | 0.4856 | 0.9067 | 0.9158 | 0.0520 | 0.0512 | 0.0310 | 0.0307 | 0.4138 | 0.4098 | |

| yahoo-Arts1 | 0.2273 | 0.2331 | 0.3981 | 0.4032 | 0.7523 | 0.7612 | 0.1365 | 0.1314 | 0.0524 | 0.0515 | 0.5473 | 0.5408 | |

| cal500 | 0.1215 | 0.1286 | 0.3705 | 0.3721 | 0.5821 | 0.5881 | 0.2392 | 0.2345 | 0.1957 | 0.1930 | 0.1179 | 0.1157 | |

| yahoo-Business1 | 0.3219 | 0.3207 | 0.4940 | 0.5041 | 0.8236 | 0.8279 | 0.0349 | 0.0368 | 0.0155 | 0.0134 | 0.8119 | 0.8091 | |

| tmc2007 | 0.4890 | 0.4902 | 0.6040 | 0.6132 | 0.8837 | 0.8849 | 0.0645 | 0.0642 | 0.0749 | 0.0743 | 0.2778 | 0.2726 | |

| bibtex | 0.2995 | 0.3008 | 0.4101 | 0.4128 | 0.8476 | 0.8528 | 0.0923 | 0.0926 | 0.0157 | 0.0128 | 0.4524 | 0.4497 | |

| enron | 0.3051 | 0.3071 | 0.5762 | 0.5781 | 0.7351 | 0.7391 | 0.1135 | 0.1095 | 0.0845 | 0.0845 | 0.2801 | 0.2761 | |

| yeast | 0.4180 | 0.4232 | 0.6435 | 0.6476 | 0.7096 | 0.7097 | 0.1737 | 0.1721 | 0.2051 | 0.2010 | 0.2391 | 0.2382 | |

| LLOG-F | 0.3837 | 0.3996 | 0.5612 | 0.5643 | 0.7104 | 0.7131 | 0.2022 | 0.1968 | 0.1791 | 0.1783 | 0.2365 | 0.2318 | |

| scene | 0.7712 | 0.7727 | 0.7640 | 0.7697 | 0.9442 | 0.9464 | 0.0663 | 0.0667 | 0.0843 | 0.0827 | 0.2015 | 0.2011 | |

| CLIF | Macro-F | Micro-F | Macro-AUC | Ranking Loss | Hamming Loss | One Error | |||||||

| Corel5k | 0.1246 | 0.1269 | 0.2375 | 0.2379 | 0.7534 | 0.7683 | 0.1677 | 0.1762 | 0.0241 | 0.0240 | 0.6628 | 0.6660 | |

| rcv1subset3 | 0.3057 | 0.3079 | 0.4729 | 0.4738 | 0.9268 | 0.9279 | 0.0606 | 0.0602 | 0.0341 | 0.0294 | 0.4129 | 0.4113 | |

| rcv1subset1 | 0.3498 | 0.3506 | 0.4711 | 0.4746 | 0.9221 | 0.9307 | 0.0617 | 0.0576 | 0.0355 | 0.0338 | 0.4192 | 0.4072 | |

| rcv1subset2 | 0.3265 | 0.3284 | 0.4756 | 0.4824 | 0.9279 | 0.9329 | 0.0611 | 0.0610 | 0.0302 | 0.0299 | 0.4272 | 0.4166 | |

| yahoo-Arts1 | 0.3384 | 0.3412 | 0.4772 | 0.4797 | 0.7580 | 0.7535 | 0.1293 | 0.1207 | 0.0556 | 0.0561 | 0.4936 | 0.4928 | |

| cal500 | 0.1007 | 0.1066 | 0.3547 | 0.3620 | 0.5801 | 0.5982 | 0.2300 | 0.2259 | 0.1910 | 0.1895 | 0.1215 | 0.1196 | |

| yahoo-Business1 | 0.3765 | 0.3761 | 0.5097 | 0.5082 | 0.7801 | 0.7940 | 0.0430 | 0.0374 | 0.0163 | 0.0165 | 0.8099 | 0.8087 | |

| tmc2007 | 0.5337 | 0.5306 | 0.6451 | 0.6355 | 0.9048 | 0.9059 | 0.0552 | 0.0550 | 0.0700 | 0.0697 | 0.2540 | 0.2521 | |

| bibtex | 0.3423 | 0.3467 | 0.4687 | 0.4696 | 0.8983 | 0.9011 | 0.0884 | 0.0841 | 0.0133 | 0.0132 | 0.3767 | 0.3846 | |

| enron | 0.2903 | 0.2909 | 0.5728 | 0.5770 | 0.7700 | 0.7763 | 0.1232 | 0.1198 | 0.0758 | 0.0745 | 0.2504 | 0.2492 | |

| yeast | 0.4141 | 0.4157 | 0.6482 | 0.6561 | 0.7107 | 0.7191 | 0.1679 | 0.1607 | 0.1995 | 0.1930 | 0.2429 | 0.2221 | |

| LLOG-F | 0.3962 | 0.3958 | 0.5671 | 0.5661 | 0.7659 | 0.7703 | 0.2169 | 0.2138 | 0.2000 | 0.1691 | 0.2340 | 0.2326 | |

| scene | 0.7606 | 0.7709 | 0.7473 | 0.7589 | 0.9418 | 0.9454 | 0.0725 | 0.0676 | 0.0891 | 0.0850 | 0.2284 | 0.2093 | |

| DELA | Macro-F | Micro-F | Macro-AUC | Ranking Loss | Hamming Loss | One Error | |||||||

| Corel5k | 0.0755 | 0.0963 | 0.1812 | 0.2081 | 0.7556 | 0.7621 | 0.1601 | 0.1532 | 0.0216 | 0.0223 | 0.6526 | 0.6520 | |

| rcv1subset3 | 0.2673 | 0.2995 | 0.4640 | 0.4704 | 0.9175 | 0.9183 | 0.0569 | 0.0581 | 0.0305 | 0.0300 | 0.4307 | 0.4080 | |

| rcv1subset1 | 0.2842 | 0.3184 | 0.4424 | 0.4544 | 0.9179 | 0.9194 | 0.0576 | 0.0571 | 0.0362 | 0.0356 | 0.4355 | 0.4305 | |

| rcv1subset2 | 0.2868 | 0.3125 | 0.4680 | 0.4748 | 0.9203 | 0.9220 | 0.0540 | 0.0538 | 0.0312 | 0.0303 | 0.4358 | 0.4118 | |

| yahoo-Arts1 | 0.2735 | 0.2688 | 0.4617 | 0.4628 | 0.7387 | 0.7444 | 0.1227 | 0.1202 | 0.0547 | 0.0537 | 0.5131 | 0.5136 | |

| cal500 | 0.0666 | 0.0833 | 0.3283 | 0.3473 | 0.5633 | 0.5927 | 0.2322 | 0.2273 | 0.1904 | 0.1894 | 0.1215 | 0.1156 | |

| yahoo-Business1 | 0.3295 | 0.3196 | 0.4942 | 0.4913 | 0.7979 | 0.8062 | 0.0358 | 0.0343 | 0.0173 | 0.0167 | 0.8119 | 0.8153 | |

| tmc2007 | 0.5417 | 0.5495 | 0.6546 | 0.6550 | 0.9121 | 0.9171 | 0.0524 | 0.0517 | 0.0673 | 0.0667 | 0.2544 | 0.2504 | |

| bibtex | 0.2956 | 0.3151 | 0.4399 | 0.4517 | 0.9042 | 0.9053 | 0.0767 | 0.0746 | 0.0133 | 0.0137 | 0.4024 | 0.4012 | |

| enron | 0.2743 | 0.2688 | 0.5800 | 0.5931 | 0.7727 | 0.7748 | 0.1154 | 0.1108 | 0.0735 | 0.0722 | 0.2421 | 0.2426 | |

| yeast | 0.3813 | 0.3859 | 0.6482 | 0.6551 | 0.7006 | 0.7121 | 0.1677 | 0.1631 | 0.1975 | 0.1933 | 0.2328 | 0.2160 | |

| LLOG-F | 0.3749 | 0.3798 | 0.5208 | 0.5859 | 0.7909 | 0.7930 | 0.1865 | 0.1849 | 0.1603 | 0.1601 | 0.1752 | 0.1806 | |

| scene | 0.7496 | 0.7684 | 0.7396 | 0.7585 | 0.9405 | 0.9449 | 0.0722 | 0.0726 | 0.0898 | 0.0834 | 0.2309 | 0.2183 | |

The results presented in Table 3, derived from the Wilcoxon signed-ranks test [2] at a 0.05 significance level, conclusively indicate that our adaptive batch selection method outperforms random batch selection with statistical significance.

| C2AE | MPVAE | PACA | CLIF | DELA | |

|---|---|---|---|---|---|

| Macro-F | win (0.0002) | win (0.0017) | win (0.0046) | win (0.0215) | win (0.0171) |

| Micro-F | win (0.0002) | win (0.0002) | win (0.0081) | tie (0.0942) | win (0.0012) |

| Macro-AUC | win (0.0012) | win (0.0005) | win (0.0002) | win (0.0034) | win (0.0002) |

| Ranking Loss | win (0.0012) | win (0.0061) | win (0.0171) | win (0.0171) | win (0.0046) |

| Hamming Loss | win (0.0022) | win (0.0012) | win (0.0022) | win (0.0081) | win (0.0105) |

| One Error | win (0.0022) | win (0.0134) | win (0.0171) | win (0.0479) | tie (0.1465) |

4.3 Convergence Analysis

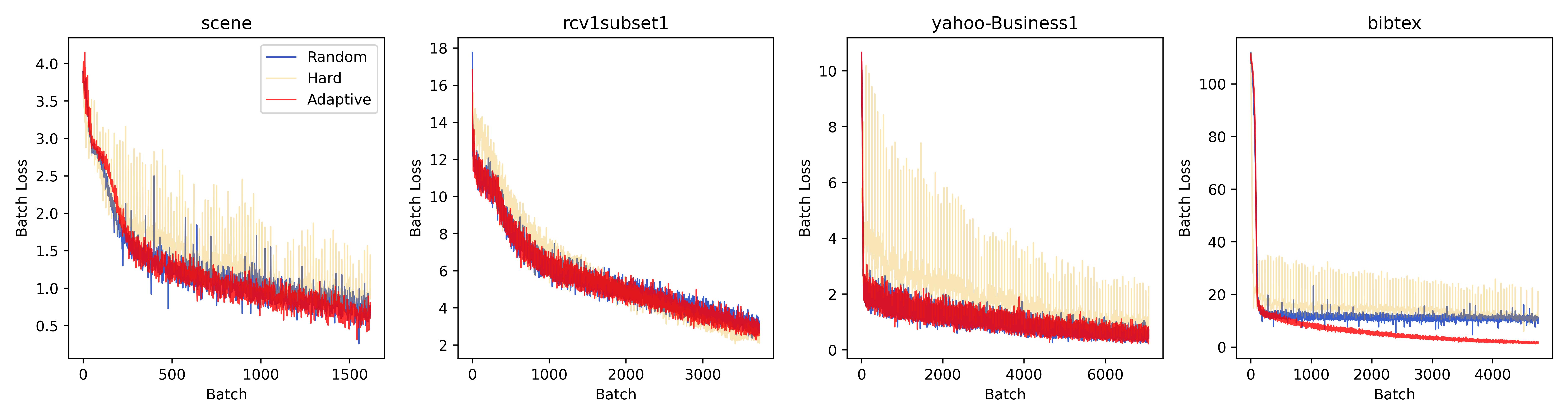

In this section, we add a ranking batch selection based on BCE loss (named "Hard") as a comparison from the ablation perspective. Compared with the Adaptive method, Hard ignores imbalance weight and does not employ the quantization index. Figure 3 illustrates the convergence curves of three distinct batch selection strategies, viewed from the perspectives of epochs, batches, and time, along with the validation set performance curves evaluated using Macro-AUC.

Epoch Perspective: As shown in Figure 3(a), the adaptive batch selection outperforms the other strategies in the scene and bibtex dataset, converging to lower losses with fewer epochs. Hard batch selection method shows improved convergence over random batch methods on the first three datasets but underperforms on the bibtex datatset. The latter may be due to the small difference in training sample loss, failing the loss ranking strategy between samples. The convergence curve of the adaptive batch selection proves the effectiveness of the quantization index. Specifically, we observe that training with random batch, the loss on the bibtex dataset plateaued after only a few epochs. In contrast, adopting adaptive batching allowed the model to break through this "bottleneck", leading to a continued decline in the loss convergence curve.

Batch Perspective: As shown in Figure 3(b), the adaptive batch shows less volatility and a steadier loss reduction during training. In contrast, the hard batch method is more volatile. The random batch method displays moderate volatility. The stability offered by adaptive batch selection is advantageous since large fluctuations, particularly upward ones in the last batch of an epoch, could disrupt the entire training epoch and adversely affect validation performance.

Time Perspective: As shown in Figure 3(c), to evaluate performance gains in training time, we measure the time required to achieve the same training loss levels (based on the minimum training loss achieved by a random batch). For example, on the scene dataset, random batch selection requires approximately 7.8 seconds to reach the minimum loss, while the adaptive batch selection only needs 5.7 seconds. On the scene, rcv1subset1, and bibtex datasets, the adaptive batch reduces training time by 14.1, 10.8, and 84.6, respectively. Although the adaptive batch increases training time by 37.2 on the yahoo-Business dataset, the overall convergence curve and evaluation metric results suggest that the adaptive batch method offers notable time performance benefits. Despite the extra time needed to compute and adjust the selection probability set during batch selection, this benefit persists.

Validation Perspective: As shown in Figure 4, adaptive batch selection notably enhances model performance. For the scene and rcv1subset1 datasets, all approaches achieve similar Macro-AUC by training’s end. Yet, the adaptive batch method attains peak validation epochs more swiftly than random selection strategies. More importantly, the adaptive batch significantly improved the validation set’s Macro-AUC on both the yahoo-Business1 and bibtex datasets.

4.4 Adaptive Batch Selection with Label Correlations

Table 4 shows a comparative analysis of adaptive batch selection combining label correlation across different datasets.

| Macro-F | Micro-F | Macro-AUC | Ranking Loss | Hamming Loss | One Error | |||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Dataset | Adaptive | AdaC | Adaptive | AdaC | Adaptive | AdaC | Adaptive | AdaC | Adaptive | AdaC | Adaptive | AdaC |

| Corel5k | 0.0963 | 0.0945 | 0.2081 | 0.2042 | 0.7621 | 0.7614 | 0.1532 | 0.1521 | 0.0223 | 0.0235 | 0.6520 | 0.6544 |

| rcv1subset1 | 0.3184 | 0.3157 | 0.4544 | 0.4523 | 0.9194 | 0.9175 | 0.0571 | 0.0594 | 0.0356 | 0.0349 | 0.4305 | 0.4315 |

| cal500 | 0.0833 | 0.0940 | 0.3473 | 0.3491 | 0.5927 | 0.5962 | 0.2273 | 0.2234 | 0.1894 | 0.1852 | 0.1156 | 0.1135 |

| yahoo-Business1 | 0.3196 | 0.3243 | 0.4913 | 0.4952 | 0.8062 | 0.8053 | 0.0343 | 0.0362 | 0.0167 | 0.0182 | 0.8153 | 0.8144 |

The adaptive batch selection considering label correlations, denoted as "AdaC" performs better in datasets with a high cardinality of labels (cal500), but is ineffective in extremely sparse label spaces (Corel5k). This phenomenon arises from richer mutual information and label associations in high-cardinality settings, boosting the application of combing label correlation.

4.5 Investigation of Loss

Binary cross-entropy (BCE) loss is a preferred choice for modeling due to its direct measurement of prediction accuracy and has been integrated into the model’s loss functions. Considering imbalanced losses like Asymmetric Loss [25], introduces significant computational overhead. In contrast, BCE loss offers a more streamlined approach, reducing the need for extra loss calculations and thereby conserving computational resources. In section 4.3, while verifying the effectiveness of adaptive batch selection from a global perspective, Figure 5 demonstrates from a more fine-grained perspective that the loss of minority label samples under adaptive batch selection can converge better, which provides evidence support for our approach of focusing on hard minority samples.

Initially, we explore the relationship between the model’s loss and BCE loss by analyzing the Pearson correlation coefficient. Figure 6(a) demonstrates a strong correlation between these two loss metrics. Furthermore, Figure 6(b) the convergence curve of adaptive batch selection with the model’s loss or BCE loss involved in computing . Both CLIF and DELA models incorporate BCE loss prominently in their loss functions, and giving it more weight than other loss components. This results in similar convergence patterns of the training loss to those seen with adaptive sample selection based on BCE loss. Conversely, C2AE, which does not include BCE loss in its loss function and prioritizes reconstruction loss, shows slower convergence in adaptive batch selection. This slower convergence may be due to the lack of correlation between samples with larger model losses and hard samples under classification tasks.

4.6 Parameter Analysis

This section assesses key parameter impacts on our method, particularly selection pressure () and batch size variation. Refer to the supplementary appendix for detailed visuals. We conclude that: 1) may not expedite the convergence of training losses but improves performance metrics by batch setups. 2) the adaptive selection surpasses random selection for batch sizes 256 and 512, proving its effectiveness.

5 Conclusion

In this paper, we explore the issue of class imbalance in multi-label classification and its impact on the training of deep models. We propose a novel multi-label batch selection strategy that focuses on class imbalance and hard samples, marking a pioneering effort in this area. Our initial investigations revealed a nuanced relationship between challenging samples and minority labels: samples associated with minority labels tend to incur higher losses during training. By adaptively selecting the Binary Cross Entropy loss with a combination of global and local imbalance weights, we assign greater importance to these challenging samples. Additionally, we refine the ranking strategy through quantization factors to address the issue of smoothness. We also introduce the variant of the adaptive batch selection strategy, such as the chain adaptive batch processing that considers label correlations, which require further and richer experimental validation. Comprehensive experiments demonstrate that our approach leads to better convergence and performance compared to the random batch selection methods used in existing models. Future exploration of batch selection in multi-label learning is intriguing, given the potential "inexplicable" relationships between the model and its mini-batches.

Ethical Statement

There are no ethical issues.

References

- [1] Bai, J., Kong, S., Gomes, C.: Disentangled variational autoencoder based multi-label classification with covariance-aware multivariate probit model. In: Proceedings of the Twenty-Ninth International Conference on International Joint Conferences on Artificial Intelligence. pp. 4313–4321 (2021)

- [2] Benavoli, A., Corani, G., Mangili, F.: Should we really use post-hoc tests based on mean-ranks? The Journal of Machine Learning Research 17(1), 152–161 (2016)

- [3] Boutell, M.R., Luo, J., Shen, X., Brown, C.M.: Learning multi-label scene classification. Pattern recognition 37(9), 1757–1771 (2004)

- [4] Cabral, R., Torre, F., Costeira, J.P., Bernardino, A.: Matrix completion for multi-label image classification. Advances in neural information processing systems 24 (2011)

- [5] Chakraborty, S., Balasubramanian, V., Panchanathan, S.: Optimal batch selection for active learning in multi-label classification. In: Proceedings of the 19th ACM international conference on multimedia. pp. 1413–1416 (2011)

- [6] Chang, H.S., Learned-Miller, E., McCallum, A.: Active bias: Training more accurate neural networks by emphasizing high variance samples. Advances in Neural Information Processing Systems 30 (2017)

- [7] Charte, F., Rivera, A.J., del Jesus, M.J., Herrera, F.: Mlsmote: Approaching imbalanced multilabel learning through synthetic instance generation. Knowledge-Based Systems 89, 385–397 (2015)

- [8] Charte, F., Rivera, A.J., Jesus, M.J.d., Herrera, F.: Mlenn: a first approach to heuristic multilabel undersampling. In: International Conference on Intelligent Data Engineering and Automated Learning. pp. 1–9. Springer (2014)

- [9] Chen, B., Wornell, G.W.: Quantization index modulation: A class of provably good methods for digital watermarking and information embedding. IEEE Transactions on Information theory 47(4), 1423–1443 (2001)

- [10] Chen, S., Wang, R., Lu, J., Wang, X.: Stable matching-based two-way selection in multi-label active learning with imbalanced data. Information Sciences 610, 281–299 (2022)

- [11] Daniels, Z., Metaxas, D.: Addressing imbalance in multi-label classification using structured hellinger forests. In: Proceedings of the AAAI Conference on Artificial Intelligence. vol. 31 (2017)

- [12] Fürnkranz, J., Hüllermeier, E., Loza Mencía, E., Brinker, K.: Multilabel classification via calibrated label ranking. Machine learning 73, 133–153 (2008)

- [13] Gao, S., Wu, W., Lee, C.H., Chua, T.S.: A mfom learning approach to robust multiclass multi-label text categorization. In: Proceedings of the twenty-first international conference on Machine learning. p. 42 (2004)

- [14] Hang, J.Y., Zhang, M.L.: Collaborative learning of label semantics and deep label-specific features for multi-label classification. IEEE Transactions on Pattern Analysis and Machine Intelligence 44(12), 9860–9871 (2021)

- [15] Hang, J.Y., Zhang, M.L.: Dual perspective of label-specific feature learning for multi-label classification. In: International Conference on Machine Learning. pp. 8375–8386. PMLR (2022)

- [16] Hang, J.Y., Zhang, M.L., Feng, Y., Song, X.: End-to-end probabilistic label-specific feature learning for multi-label classification. In: Proceedings of the AAAI Conference on Artificial Intelligence. vol. 36, pp. 6847–6855 (2022)

- [17] Huang, Y., Shen, P., Tai, Y., Li, S., Liu, X., Li, J., Huang, F., Ji, R.: Improving face recognition from hard samples via distribution distillation loss. In: Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, August 23–28, 2020, Proceedings, Part XXX 16. pp. 138–154. Springer (2020)

- [18] Katharopoulos, A., Fleuret, F.: Not all samples are created equal: Deep learning with importance sampling. In: International conference on machine learning. pp. 2525–2534. PMLR (2018)

- [19] Liu, B., Blekas, K., Tsoumakas, G.: Multi-label sampling based on local label imbalance. Pergamon (2022)

- [20] Liu, B., Tsoumakas, G.: Making classifier chains resilient to class imbalance. In: Asian Conference on Machine Learning. pp. 280–295. PMLR (2018)

- [21] Liu, Y., Yang, X., Zhou, S., Liu, X., Wang, Z., Liang, K., Tu, W., Li, L., Duan, J., Chen, C.: Hard sample aware network for contrastive deep graph clustering. In: Proceedings of the AAAI conference on artificial intelligence. vol. 37, pp. 8914–8922 (2023)

- [22] Loshchilov, I., Hutter, F.: Online batch selection for faster training of neural networks. In International Conference on Learning Representation (ICLR),workshop (2016)

- [23] Pereira, R.M., Costa, Y.M., Silla Jr, C.N.: Mltl: A multi-label approach for the tomek link undersampling algorithm. Neurocomputing 383, 95–105 (2020)

- [24] Read, J., Pfahringer, B., Holmes, G., Frank, E.: Classifier chains for multi-label classification. Machine learning 85, 333–359 (2011)

- [25] Ridnik, T., Ben-Baruch, E., Zamir, N., Noy, A., Friedman, I., Protter, M., Zelnik-Manor, L.: Asymmetric loss for multi-label classification. In: Proceedings of the IEEE/CVF international conference on computer vision. pp. 82–91 (2021)

- [26] Shrivastava, A., Gupta, A., Girshick, R.: Training region-based object detectors with online hard example mining. In: Proceedings of the IEEE conference on computer vision and pattern recognition. pp. 761–769 (2016)

- [27] Song, H., Kim, M., Kim, S., Lee, J.G.: Carpe diem, seize the samples uncertain" at the moment" for adaptive batch selection. In: Proceedings of the 29th ACM International Conference on Information & Knowledge Management. pp. 1385–1394 (2020)

- [28] Song, H., Kim, S., Kim, M., Lee, J.G.: Ada-boundary: accelerating dnn training via adaptive boundary batch selection. Machine Learning 109, 1837–1853 (2020)

- [29] Tarekegn, A.N., Giacobini, M., Michalak, K.: A review of methods for imbalanced multi-label classification. Pattern Recognition 118, 107965 (2021)

- [30] Teng, Z., Cao, P., Huang, M., Gao, Z., Wang, X.: Multi-label borderline oversampling technique. Pattern Recognition 145, 109953 (2024)

- [31] Tsoumakas, G., Katakis, I.: Multi-label classification: An overview. International Journal of Data Warehousing and Mining (IJDWM) 3(3), 1–13 (2007)

- [32] Tsoumakas, G., Spyromitros-Xioufis, E., Vilcek, J., Vlahavas, I.: Mulan: A java library for multi-label learning. The Journal of Machine Learning Research 12, 2411–2414 (2011)

- [33] Tsoumakas, G., Vlahavas, I.: Random k-labelsets: An ensemble method for multilabel classification. In: Machine Learning: ECML 2007: 18th European Conference on Machine Learning, Warsaw, Poland, September 17-21, 2007. Proceedings 18. pp. 406–417. Springer (2007)

- [34] Yeh, C.K., Wu, W.C., Ko, W.J., Wang, Y.C.F.: Learning deep latent space for multi-label classification. In: Proceedings of the AAAI conference on artificial intelligence. vol. 31 (2017)

- [35] Zhang, M.L., Zhou, Z.H.: Multilabel neural networks with applications to functional genomics and text categorization. IEEE transactions on Knowledge and Data Engineering 18(10), 1338–1351 (2006)

- [36] Zhang, M.L., Zhou, Z.H.: Multilabel neural networks with applications to functional genomics and text categorization. IEEE transactions on Knowledge and Data Engineering 18(10), 1338–1351 (2006)

- [37] Zhang, M.L., Zhou, Z.H.: Ml-knn: A lazy learning approach to multi-label learning. Pattern recognition 40(7), 2038–2048 (2007)

- [38] Zhang, M.L., Zhou, Z.H.: A review on multi-label learning algorithms. IEEE transactions on knowledge and data engineering 26(8), 1819–1837 (2013)

- [39] Zhang, Y., Kang, B., Hooi, B., Yan, S., Feng, J.: Deep long-tailed learning: A survey. IEEE Transactions on Pattern Analysis and Machine Intelligence (2023)

- [40] Zhao, W., Kong, S., Bai, J., Fink, D., Gomes, C.: Hot-vae: Learning high-order label correlation for multi-label classification via attention-based variational autoencoders. In: Proceedings of the AAAI Conference on Artificial Intelligence. vol. 35, pp. 15016–15024 (2021)

6 Symmetric conditional probability matrix A

To construct the label relation graph, we denote the graph as , where represents the set of nodes corresponding to the set of class labels, and represents the set of edges indicating the co-occurrence relationships between pairs of labels. The adjacency matrix stores each edge’s weights, representing the strengths of the co-occurrence relationships. The matrix is defined as the symmetric conditional probability matrix: , where is the probability that label appears given that label appears. The diagonal elements of the conditional probability matrix are set to 0. We calculate the conditional probability matrix on the training set. Based on the specified parameter , we identify the most relevant labels for by finding the indices of the top highest values in the -th row or column of matrix .

7 Appendix

7.1 Specific experimental results

7.1.1 Selection Pressure

Selection pressure, denoted as , influences the intensity of selection for hard minority samples. When the value of is high, it increases the likelihood of choosing minority samples for the next mini-batch, emphasizing their selection. Conversely, a lower value of reduces the distinction from random batch selection, i.e. making the batch selection more random by diminishing the focus on hard samples.

Figure 7 illustrates the impact of varying values on adaptive batch selection across two benchmark datasets in terms of training loss and other evaluation metrics 333Here, we record the result on the first fold. As increases, we observe no significant change in the convergence speed of the training loss. This suggests that while altering the might not quicken the decrease of training losses, it can improve evaluation metrics across various batch compositions. For the bibtex dataset, a rise in is directly linked to better evaluation metrics, indicating that focusing on hard minority samples improves model fit. Similarly, for the yahoo-Arts dataset, this positive trend continues as increases from 2 to 8, but further escalation to 64 diminishes the metrics, hinting at potential overfitting on hard samples.

7.1.2 Batch size of Model

To investigate the universality of our adaptive batch selection method, we explore the experimental results of using the DELA model with random batch selection and adaptive batch selection under the condition of batch size=256 and 512.

The experimental outcomes demonstrate that our adaptive batch selection method consistently outperforms random batch selection across both batch sizes of 256 and 512, showcasing its superior universality and effectiveness in optimizing model’s performance.

7.2 Proof

the index of each instance is bounded by:

| (8) |

Then, the lower bound of is formulated by

| (9) |

Thus, the sampling distribution of multi-label adaptive selection is strictly positive. To ensure a gradient estimate is unbiased, the expected value of the gradient estimate under the sampling distribution must equal the true gradient calculated across the entire dataset. Mathematically, this condition can be expressed as:

| (10) |

where is the gradient of the loss with respect to the model parameters, is the true loss computed over the entire dataset, and denotes the expectation over the sampling distribution. Further, we can assume that if every sample in the training set has a probability of being selected, then the gradient is unbiased. Let us consider the gradient estimate used in combination with the Adam optimizer. Adam, an algorithm for first-order gradient-based optimization of stochastic objective functions, computes adaptive learning rates for each parameter. In its essence, Adam maintains two moving averages for each parameter; one for gradients () and one for the square of gradients () respectively. These moving averages are estimates of the first moment (the mean) and the second moment (the uncentered variance) of the gradients. The unbiasedness of the gradient estimate , when combined with Adam, can be articulated by analyzing the correction step applied to and . Specifically, the bias-corrected first and second moment estimates are given by:

| (11) | ||||

| (12) |

where and are the exponential decay rates for the moment estimates, and denotes the timestep. The correction factors and counteract the bias towards zero in the initial time steps, ensuring that and are unbiased estimates of the first and second moments. Therefore, given an unbiased gradient estimate , the application of Adam’s bias correction guarantees that the adjusted gradients remain unbiased.