Multi-Grid Schemes for Multi-Scale Coordination of Energy Systems

Abstract

We discuss how multi-grid computing schemes can be used to design hierarchical coordination architectures for energy systems. These hierarchical architectures can be used to manage multiple temporal and spatial scales and mitigate fundamental limitations of centralized and decentralized architectures. We present the basic elements of a multi-grid scheme, which includes a smoothing operator (a high-resolution decentralized coordination layer that targets phenomena at high frequencies) and a coarsening operator (a low-resolution centralized coordination layer that targets phenomena at low frequencies). For smoothing, we extend existing convergence results for Gauss-Seidel schemes by applying them to systems that cover unstructured domains. This allows us to target problems with multiple timescales and arbitrary networks. The proposed coordination schemes can be used to guide transactions in decentralized electricity markets. We present a storage control example and a power flow diffusion example to illustrate the developments.

1 Motivation and Setting

We consider the following optimization problem:

| (1a) | ||||

| s.t. | (1b) | |||

| (1c) | ||||

Here, are decision or primal variables (including states and controls) and is the data (including disturbances and system parameters). These variable vectors contain elements that are distributed over a mesh with points that covers a certain temporal or spatio-temporal domain of interest . We define the set of points in the mesh as with . The matrix is positive definite and is a cost vector. The constraint (1b) (with associated dual variables ) is defined by the matrices and and the matrix is assumed to have full row rank. The constraints may include discretized dynamic equations (in space and time) and other physical constraints. The constraints (1c) (with associated dual variables ) are defined by the matrix . This constraint models coupling (connectivity) between the primal variables at different mesh points and can also be used to model boundary conditions. Problem (1) captures formulations used in optimization-based control strategies such as model predictive control (MPC) rawlingsbook .

We assume that the dimension of the mesh describing problem (1) is so large that the problem cannot be solved in a centralized manner. This is often the case in systems that cover large temporal and spatial domains and/or multiple scales. In an electrical network, for instance, a large number of nodes and harmonics might need to be captured, rendering centralized control impractical. An alternative to address this complexity is to partition the problem into subdomains to create decentralized control architectures. We begin by defining a partitioned version of problem (1):

| (2a) | ||||

| s.t. | (2b) | |||

| (2c) | ||||

We denote this problem as . Here, is a set for partitions (subdomains) of the set and we define the number of partitions as . Each partition contains mesh elements satisfying and for all and . The number of elements in a partition is denoted as . The variables and data are defined over the partition . We represent the cost function as a sum of the partition cost functions with associated positive definite matrices and cost vectors . The constraints are also split into individual partition constraints with associated matrices and we link the partition variables by using the coupling constraints (2c) and associated matrices . As we will discuss later, we can always obtain such a representation by introducing duplicate decision variables in each partition and by adding suitable coupling constraints. This procedure is known as lifting diehllift .

To avoid centralized coordination, a wide range of decomposition schemes (we also refer to them as decentralized coordination schemes) can be used. A popular approach used in the solution of partial differential equations (PDEs) and decomposition methods such as the alternating direction method of multipliers (ADMM) is the Gauss-Seidel (GS) coordination scheme borzi ; boydadmm . Here, the problem in each partition (often called a control agent) is solved independently from the rest and exchanges information with its neighbors to coordinate. For a lifted problem of the form (2), we will derive a decentralized GS scheme that solves problems over individual partitions of the form:

| (3a) | ||||

| s.t. | (3b) | |||

| (3c) | ||||

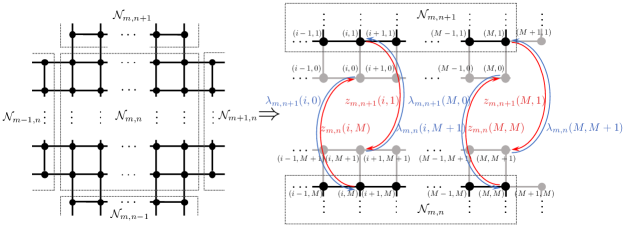

We denote this partition subproblem as that is solved at the update step (that we call here the coordination step). From the solution of this problem we obtain the updated primal variables and dual variables (corresponding to the coupling constraints (3c)). Here, and are primal and dual variables for neighboring partitions connected to partition and that have not been updated while and are primal and dual variables for neighboring partitions that have already been updated. We refer to the variables communicated between partitions as the coordination variables. We note that partition cannot update its primal and dual variables until the variables of a subset of the partitions connected to it have been updated. Consequently, the GS scheme is sequential and synchronous in nature. We also note that the connectivity topology (induced by the coupling matrices ) determines the communication structure. We highlight, however, that the order of the updates presented in (3) is lexicographic (in the order of the partition number) but this choice of update order is arbitrary and can be modified. We will see that the update order can be designed to derive parallel schemes (i.e., in which certain partitions can proceed independently of others) but that the order can affect performance. In Figure 1 we illustrate the configuration of a GS scheme over a 1-D mesh while in Figure 2 we present a configuration for a 2-D mesh. For the 2-D mesh, we note that the nodes spanning the domain are grouped into sets of the form and we note that the information is exchanged using the state and dual variables in the boundary of the partitions. In Section 3 we discuss this approach in more detail.

In the next sections we derive and analyze a decentralized GS scheme to solve problem . The analysis seeks to illustrate how the structure of the partition subproblem arises and to highlight how information of the coordination variables propagates throughout the partitions. We then discuss how to create coarse representations of the full resolution problem to obtain approximations for the coordination variables and with this accelerate the decentralized GS scheme. This gives rise to the concept of multi-grid schemes, that can be used to design hierarchical coordination architectures. Our analysis is performed on convex quadratic programs (QPs), which will reveal important features of multi-grid schemes.

The concepts discussed in this paper seek to extend existing literature on decentralized and hierarchical MPC. Many strategies have been proposed to address the complexity of centralized MPC such as spatial and temporal decomposition methods rawlingscooperative ; kroghdecentralized ; scattoreview ; frasch ; deschutter , fast inexact schemes zavalaasnmpc ; zavalage ; diehlrealtime , and reduced order modeling techniques daoutidis . Decentralized control manages the entire system by coordinating multiple controllers, each operating in a different node or subnetwork. Decentralization also enables resiliency and asynchronicity, which are key practical advantages over centralized MPC. Different schemes for coordinating MPC controllers have been devised scattoreview . Lagrangian dual decomposition is a technique where Lagrange multipliers are used for coordination. This technique is popular in electricity markets because the dual variables can be interpreted as prices that are used as a coordination mechanism illic ; deschutter2 ; arnold . Techniques based on coordinate minimization schemes and distributed gradient methods have also been proposed to coordinate MPC controllers in general settings rawlingsjpc ; mpcadmm ; liuadmm . An important limitation of decentralized schemes is that coordination of subsystems tends to be slow (e.g., convergence rates of existing schemes are at best linear) boydadmm ; luoadmm ; fisher . This slow convergence has been reported in the context of energy networks in arnold . Moreover, spatial decentralization by itself does not address the complexity induced by multiple time scales. In particular, time scales and prediction horizons of different decentralized controllers might be different. To the best of our knowledge, no coordination schemes currently exist to handle such settings.

Hierarchical control seeks to overcome fundamental limitations of decentralized and centralized control schemes. Fundamental concepts of hierarchical control date as far back as the origins of automatic control itself hierarbook . Complex industrial control systems such as the power grid are structured hierarchically in one way or another to deal with multiple time and spatial scales. Existing hierarchies, however, are often constructed in ad-hoc manners by using objectives, physical models, and control formulations at different levels that are often incompatible. For instance, an independent system operator (ISO) solves a hierarchy of optimization problems (unit commitment, economic dispatch, optimal power flow) that use different physical representations of the system. This can lead to lost economic performance, unreachable/infeasible command signals, and instabilities rawlingsunreachable ; zavalaipopt . Hierarchical MPC provides a general framework to tackle dynamics and disturbances occurring at multiple time scales scattohierar ; scattoreview ; hierarbook ; zavalamultigrid and spatial scales farina2017hierarchical . In a traditional hierarchical MPC scheme, one uses a high-level controller to compute coarse control actions that are used as targets (commands) by low level controllers. This approach has been used recently in microgrids and multi-energy systems hierarresid ; hug . More sophisticated MPC controllers use robustness margins of the high level controller that are used by the lower level controller to maintain stability scattohierar . Significant advances in the analysis of multi-scale dynamical systems have also been made, most notably by the use of singular perturbation theory to derive reduced-order representations of complex networks kokotovicpower ; chowpower ; peponides ; simon . The application of such concepts in hierarchical MPC, however, has been rather limited. In particular, the recent review on hierarchical MPC by Scattolini notices that systematic design methods for hierarchical MPC are still lacking scattoreview . More specifically, no hierarchical MPC schemes have been proposed that aggregate and refine trajectories at multiple scales. In addition, existing schemes have been tailored to achieve feasibility and stability but do not have optimality guarantees. This is important in systems where both economic efficiency and stability must be taken into account. To the best of our knowledge, no attempt has been made to combine hierarchical and decentralized MPC schemes to manage spatial and temporal scales simultaneously. The multi-grid computing concepts presented in this work seek to take a first step towards creating more general hierarchical control architectures. The proposed multi-grid schemes provide a framework to coordinate decentralized electricity markets. This is done by exchanging state and price (dual information) at the interfaces of the agents domain. The ability to do this hierarchically enables coordination over multiple spatial schemes, in particular, provides a framework to cover large geographical regions that might involve many market players.

2 Analysis of Gauss-Seidel Schemes

This section presents basic concepts and convergence results for a GS scheme under a general convex QP setting. The results seek to highlight how the structure of the coupling between partition variables as well as the coordination sequence affect the performance of GS schemes.

2.1 Illustrative Setting

To introduce notation, we begin by considering a convex QP with two variable partitions (i.e., ). To simplify the presentation, we do not include internal partition constraints and focus on coupling constraints across partitions. Under these assumptions, we have the following lifted optimization problem:

| (4a) | ||||

| s.t. | (4d) | |||

Here, the matrices capture coupling between the variables and . We highlight that, in general, (the coupling between partitions is not symmetric). The matrices and are positive definite and we assume that and have full row rank and that the entire coupling matrix has full row rank. By positive definiteness of and and the full rank assumption of we have that the feasible set is non-empty and the primal and dual solution of (4) is unique. The coupling between partition variables is two-directional (given by the structure of the coupling matrix ). This structure is found in 1-D linear networks. When the coupling is only one-directional, as is the case of temporal coupling, we have that and thus is block lower triangular, indicating that the primal variables only propagate forward in time. We will see, however, that backward propagation of information also exists, but in the space of the dual variables.

The first-order KKT conditions of (4) are given by the linear system:

| (5) |

To avoid solving this system in a centralized manner we use a decentralized GS scheme with coordination update index . The scheme has the form:

| (6a) | ||||

| (6b) | ||||

This scheme requires an initial guess for the variables of the second partition that is used to update and we then proceed to update the variables of the second partition. Note, however, that we have picked this coordination order arbitrarily (lexicographic order). In particular, one can start with an initial guess of the first partition to update the second partition and then update the first partition (reverse lexicographic order). This scheme has the form:

| (7a) | ||||

| (7b) | ||||

We will see that the coordination order affects the convergence properties of the GS scheme. In problems with many partitions, we will see that a large number of coordination orders are possible. Moreover, we will see that coordination orders can be designed to enable sophisticated parallel and asynchronous implementations. A key observation is that the linear systems (6) in the GS scheme are the first-order KKT conditions of the following partition problems and , respectively:

| (8a) | ||||

| s.t. | (8b) | |||

| (8c) | ||||

| s.t. | (8d) | |||

The relevance of this observation is that one can implement the GS scheme by directly solving optimization problems, as opposed to performing intrusive linear algebra calculations zavalamultigrid . This has practical benefits, as one can use algebraic modeling languages and handle sophisticated problem formulations. This also reveals that both primal and dual variables are communicated between partitions. Primal information enters in the coupling constraints. The dual variables enter as cost terms in the objective and highlights the fact that dual variables can be interpreted as prices of the primal variable information exchanged between partitions.

We now seek to establish conditions guaranteeing convergence of this simplified GS scheme. To do so, we define the following matrices and vectors:

| (9a) | ||||

| (9b) | ||||

The partition matrices , are non-singular because the matrices , are positive definite and , have full row rank. Nonsingularity of and implies that the partition optimization subproblems and have a unique solution for any values of the primal and dual variables of the neighboring partition. We can now express in terms of to obtain a recursion of the form:

| (10) |

We can write this system in compact form by defining:

| (11) |

The solution of the -th update step of (6) can be represented as:

| (12a) | ||||

| (12b) | ||||

The solution of the QP (4) (i.e., the solution of the KKT system (5)) solves the implicit system , which can also be expressed as or . Consequently, we note that the eigenvalues of matrix play a key role in the convergence of the GS scheme (8). We discuss this in more detail in the following section.

2.2 General Setting

We now extend the previous analysis to a more general QP setting with an arbitrary number of partitions. Here, we seek to illustrate how to perform lifting in a general case where coupling is implicit in the model and how to derive a GS scheme to solve such a problem. Our discussion is based on the following convex QP:

| (13) |

Here are the optimization variables, is a positive definite matrix, and is the cost vector. For simplicity (and without loss of generality) we assume that (there is only one variable per node). We let represent the -th component of matrix and we let be the variable indices (in this case also the node indices). Problem (13) has a unique solution because the matrix is positive definite.

We focus our analysis on the QP (13) because we note that equality constraints in (1) (with and ) can be eliminated by using a null-space projection procedure. To see how this can be achieved we note that, if has full row rank, we can always construct a matrix whose columns span the null-space of (i.e., for any ) and with . Similarly, we can construct a matrix whose columns span the range-space of (i.e., for any ). We can express any as . We thus have that for all and thus and satisfies for any . With this, we can express the quadratic objective as as , where is a constant. We thus obtain a QP of the same form as (13) but with matrix , reduced cost , and variable vector . We highlight that this reduction procedure does not need to be applied in a practical implementation but we only use it to justify that the formulation (13) is general.

We thus have that the variables in (13) are coupled implicitly via the matrix and we seek to express this problem in the lifted form. We proceed to partition the set into a set of partitions to give the partition sets satisfying and . Coupling between variables arises when for , , and . We perform lifting by defining index sets:

| (14) |

The set includes all the coupled variables in the partition set that are not in partition . The set includes all variables in partition and its coupled variables. These definitions allow us to express problem (13) as:

| (15) |

To induce lifting, we introduce a new set of variables defined as:

| (16) |

where , , is the number of variables in partition , and is the number of variables coupled to partition . With this, we can express problem (15) in the following lifted form:

| (17a) | ||||

| s.t. | (17b) | |||

Here, and are given by:

| (18a) | |||

The coefficient matrices are given by:

| (19) |

where are elementary column vectors. Note that for , , , and , can be included in the objective function of either partition , partition , or both. In the lifting scheme shown in (18a), we assume that these terms are equally divided and included in each partition. However, this approach is arbitrary, and other lifting schemes are possible. In other words, we can manipulate the lifting scheme to set the partition sets to satisfy either or . Interestingly, one can show that the solution of the lifted problem is unique and is the same as that of problem (13). The proof of this assertion is intricate and will not be discussed here due to the lack of space. To simplify the notation we express the lifted variables , matrices , and cost vectors in (17) simply as .

The primal-dual solution of (17) can be obtained by solving the KKT conditions:

| (20) |

By exploiting the structure of this system, we can derive a GS scheme of the form:

| (21) |

Here, we have used a lexicographic coordination order. We note that the solution of the linear system (21) solves the optimization problem:

| (22a) | ||||

| s.t. | (22b) | |||

From this structure, we can see how the primal and dual variables propagate forward and backward relative to the partition , due to the inherent block triangular nature of the GS scheme. We now seek to establish a condition that guarantees convergence of the GS scheme in this more general setting. To do so, we define:

| (23) |

By using (23), we can express (21) as:

| (24) |

This can be expressed in matrix form as:

| (25) |

We can see that the partition matrices are nonsingular by inspecting the block structure of . In particular, by the definition of in (18a) (we denoted as here) and the definition of in (19), we can express as:

| (26) |

where each components of and are defined in (18a). Since is positive definite, is also positive definite. Noting that is nonsingular, we can see that the columns of are linearly independent and thus is nonsingular as well. This implies that the block lower triangular matrix on the left-hand side of (25) is also nonsingular. To simplify notation we define:

| (27a) | ||||

| (27b) | ||||

We express (25) by using the compact form or . The solution of (20) satisfies . This implies that the solution of the lifted problem also solves the original problem (13). We now formally establish the following convergence result for the GS scheme.

Proposition 1

Proof

Matrix is a permutation of matrix . The permutation is a similarity transformation and thus has the same set of eigenvalues as . Consequently, all the non-zero eigenvalues of have a magnitude of less than one. This implies that all the eigenvalues of decay exponentially as . Furthermore, this indicates that the series converges to . Note that is invertible since all the eigenvalue of have magnitude less than one. Accordingly, the solution of the scheme (22) satisfies . This convergence value satisfies equation . Note that this is a unique solution to equation and thus solves (17). Problem (17) has same solution of problem (13), and both problems have unique solutions. Thus, the solution of the GS scheme (22) converges to the solution of (13).

Convergence is achieved without any assumptions on the initial guess . The error between the solution of problem (13) and the solution of the -th coordination update of the GS scheme (21) (i.e., ) is given by . We will call the error of the -th coordination step. If we express the initial error term as , we can write . Moreover, if we define then, for any , there exists such that the bound holds for all and where is a matrix norm of . Using this inequality we can establish that . Since we can choose to be arbitrarily small, we can see that the decaying rate of the error is . Although the error decays exponentially we note that, if is close to one, convergence can be slow and thus having a good initial guess is essential. We also note that is tightly related to the topology of the coupling between partitions, indicating that the partition structure contributes to the convergence rate.

We highlight that the GS concepts discussed here focus on problems with no inequality constraints. In practice, however, GS schemes can also be applied to such problems by using projected GS schemes zavalamultigrid ; borzi2005multigrid ; zavala2010real .

2.3 Coordination Orders

In the lexicographic coordination order proposed in (21), we use the update sequence . We note that the order affects the structure of the matrix and thus convergence can be affected as well. To see this, consider a new order given by the sequence where is a bijective mapping. We can use this mapping to rearrange the partition variables as:

| (28) |

This gives the reordered matrix:

| (29) |

Importantly, the change in update order is not necessarily a similarity transformation of matrix and thus the eigenvalues will be altered. The GS scheme converges as long as eigenvalues of the reordered matrix have magnitude less than one but the convergence rate will be affected. Interestingly, GS schemes are highly flexible and allow for a large number of update orders. For instance, in some cases one can derive ordering sequences that enable parallelization. As an example, the 1-D spatial problem has a special structure with =0 for any such that . The GS scheme becomes:

| (30) |

Instead, we consider the following ordering for and for . Here we assume that the number of partitions is even. This is a called a red-black ordering and is widely popular in the solution of PDEs. By changing the index using , we can express (30) as:

| (31a) | ||||

| (31b) | ||||

and

| (32a) | ||||

| (32b) | ||||

We can thus see that the solution of (31) can proceed independently for any . This is because these partitions only depend on the solutions of (32) but not on the solutions of (31). Likewise, solving (32) can be done independently for any . Red-black ordering thus enables parallelism. Different coordination orders for 1-D and 2-D meshes are presented in Figures 4 and 4.

2.4 Coarsening

Low-complexity coarse versions of the full-resolution problem (1) can be solved to obtain an initial guess for the GS scheme and with this accelerate coordination. To illustrate how this can be done, we use the following representation of (1):

| (33a) | ||||

| s.t. | (33b) | |||

Our goal is to obtain a substantial reduction in the dimension of this problem by introducing a mapping from a coarse variable space to the original space. This is represented by the linear mapping where is the coarse variable and we assume that the mapping (called a restriction operator) has full column rank and . We can thus pose the low-dimensional coarse problem:

| (34a) | ||||

| s.t. | (34b) | |||

A key issue that arises in coarsening is that the columns of matrix do not necessarily span the entire range space of (i.e., we might not be able to find a coarse variable that satisfies ). Consequently, we introduce a constraint aggregation matrix that has full column rank to ensure that spans the range space of . With this, we can ensure that the feasible set of (34) is non-empty.

After solving the coarse problem (34), we can project the primal-dual solution from the coarse space to the original space. We note that the dimension of the dual space is also reduced because we performed constraint aggregation. The projection for the primal solution can be done by using while the projection for the dual solution can be obtained as . The derivation of coarsened representations is application-dependent and often requires domain-specific knowledge. In particular, coarsening can also be performed by using reduced order modeling techniques such as proper orthogonal decompositions antoulas or coherency-based network aggregation schemes kokotovic1982coherency . In the following sections we demonstrate how to derive coarse representations in certain settings.

2.5 Multi-Grid Schemes and Hierarchical Coordination

Multi-grid serves as a bridge between a fully centralized and a fully decentralized coordination schemes. In particular, a fully centralized scheme would aim to find a solution of the full-resolution problem (2) by gathering all the information in a single processing unit. A fully decentralized scheme such as GS, on the other hand, would proceed by finding solutions to subproblems (3) over each partition and information would only be shared between the connected partitions through the coordination variables. A drawback of a decentralized scheme is that a potentially large number of coordination steps might be needed to reach a solution for the full-resolution problem, particularly when many partitions are present.

In a multi-grid scheme, we seek to aid the decentralized scheme by using information from a low-resolution central problem that oversees the entire domain. In our context, the information is in the form of states and dual variables defined over the partition interfaces (i.e., the coupling variables and constraints). The key idea of this hierarchical arrangement is that the coarse central scheme can capture effects occurring at low global frequencies while the agents in the decentralized schemes can handle effects occurring at high local frequencies. As can be seen, multi-grid provides a framework to design hierarchical control architectures by leveraging existing and powerful reduced order modeling techniques such as coherency-based aggregation and decentralized control schemes.

Multi-grid is a widely studied computational paradigm. The framework proposed here presents basic elements of this paradigm but diverse extensions are possible borzi ; brandtalgebraic . For instance, the scheme proposed here involves only a coarse and a fine resolution level but one can create a multi-level schemes that transfer information between multiple scales recursively by using meshes of diverse resolution. This can allow us to cover a wider range of frequencies present in the system. In the following section we illustrate how sequential coarsening can be beneficial.

From an electricity markets perspective, we highlight that multi-grid schemes provide a framework to coordinate transactions at multiple spatial and temporal scales. To see this, consider the case of spatial coordination of electricity markets. Under such setting, we can interpret each partition of the spatial domain as a market player (e.g., a microgrid). The market players have internal resources (e.g., distributed energy resources) that they manage to satisfy their internal load. The players, however, can also transact energy with other players in order to improve their economic performance. The proposed GS scheme provides a mechanism to handle intra-partition decision-making (by solving the partition subproblems) and inter-partition transactions by exchanging state (voltages) and dual information (nodal prices). If transaction information is exchanged multiple times (corresponding to multiple GS iterates), the GS scheme will converge to an equilibrium point corresponding to the solution of the centralized economic maximization problem (e.g., the social welfare problem). This is a useful property of decentralized coordination because centralization of information and decision-making is often impractical. If the players only exchange information once (or a handful of times) they might not reach an optimal equilibrium and an inefficiency will be introduced. Moreover, when a disturbance affects the system, many GS iterations might be needed to reach the new optimal equilibrium. This is where hierarchical optimization becomes beneficial, because one can solve and aggregated spatial representation of the system (in which each partition is treated as a node) to compute approximate dual variables and states at the interfaces of the partitions. This approximation can be used to aid the convergence of the decentralized GS scheme (by conveying global spatial information to local market players). One can think of the coarse high-level problem as a system operator (supervisor) problem (e.g., at the distribution level). The operator might, at the same time, need to coordinate with other system operators (each of which oversees its own set of market players). These system operators can at the same time be aggregated into a higher level which would represent, for instance, a transmission or regional operator. We can thus see that hierarchical multi-grid schemes enable scalable coordination of potentially large number of market players over large geographical regions. The hierarchical multi-grid scheme can also be applied to handle multiple timescales of a single market player that might need to manage, for instance, assets with different dynamic characteristics.

3 Case Studies

We now present numerical case studies to demonstrate the concepts in the context of temporal and spatial management of energy systems. We use a multi-scale (in time) optimization problem with features of an storage management problem and a multi-scale (in space) optimization problem that considers power flow dispatch over a network.

3.1 Multi-Scale Temporal Control

We use a multi-grid scheme to solve the following temporal planning problem :

| (35a) | ||||

| s.t. | (35b) | |||

| (35c) | ||||

This problem has a state, a control, and a disturbance defined over time points contained in the set . The state and control are grouped into the decision variable . The distance between mesh points is given by . The structure of this problem resembles that of an inventory (storage) problem in which the disturbance is a load and is a charge/discharge flow from the storage. In these types of problems, the load might have frequencies covering multiple timescales (e.g., seasonal, daily, and down to seconds). Consequently, the time mesh has to be rather fine to capture all the frequencies. Moreover, the planning horizon (the time domain ) might need to be long so as to capture the low frequency components in the load. We partition the problem into partitions, each containing points. The set of inner points in the partition is defined as . The optimization problem over a partition solved in the GS scheme is given by:

| (36a) | ||||

| s.t. | (36b) | |||

| (36c) | ||||

In our numerical experiments, we set and to give points. The time mesh points were set with . We use a disturbance signal composed of a low and a high frequency . The disturbance signal and its frequency components are shown in Figure 5.

We solve a coarse problem to create a hierarchical structure that aids the GS scheme (which operates on the partitions at high resolution). To perform coarsening, we aggregate internal grid points (i.e., collapse 25 points into one coarse point). The set of inner coarse points is . The projection is and with:

| (37a) | |||

The projection matrices and have full column rank. The fine to coarse restriction can also be expressed as:

| (38) |

where is a round-down operator. We denote . The coarse problem can then be stated in terms of the coarse variables as follows:

| (39a) | ||||

| s.t. | (39b) | |||

| (39c) | ||||

where is the coarsened disturbance signal. The dynamic equations are defined over a smaller dimensional space (defined over ) which results from aggregating the dynamic equations in the full resolution problem (defined over ). We solve the full resolution problem (35) and compare its solution against that of the pure GS scheme, the one of the coarse low resolution problem, and the one of the hierarchical scheme that solves the coarse problem to coordinate the GS scheme. We also solve the coarse problem by using a GS scheme. Figure 6 shows the results. We note that the solution to the coarse problem (36) captures the general long-term trend of the solution but misses the high frequencies. The GS scheme refines this solution and converges in around 30 coordination steps. By comparing Figure 6 (top-right) and (bottom-right), we can see that initializing GS scheme with the coarse solution significantly reduces the initial error, and demonstrates the benefit of the hierarchical scheme.

3.2 Multi-Scale Spatial Control

Useful insights on how to use multi-grid schemes to create hierarchical network control structures result from interpreting network flows as diffusive processes. To illustrate this, we consider a network with nodes defined on a rectangular mesh. A node in the network exchanges flows with its four neighboring nodes (this is called a stencil). The flows are a function of the node potentials and given by:

| (40a) | ||||

| (40b) | ||||

| (40c) | ||||

| (40d) | ||||

Here, is the diffusion constant (i.e., the flow resistance) of the link connecting the nodes. At each node we have a load and a source that is used to counteract (balance) the load. This gives the flow balance conservation equation:

This can also be written in terms of the potentials as:

We assume that we have fixed 2-D spatial domain that is discretized using nodes in each direction. The sets are the sets of points in each direction. The set of total mesh points is and thus . As the number of nodes increases, the node potentials form a continuum described by the 2-D diffusion equation:

| (41) |

Using this analogy, we consider the following full-space problem:

| (42a) | ||||

| s.t. | ||||

| (42b) | ||||

| (42c) | ||||

| (42d) | ||||

| (42e) | ||||

| (42f) | ||||

The goal of the optimization problem is, given the loads , to control the potentials in the network nodes by using the sources . The decision variables at every node are . The presence of multiple frequencies in the 2-D disturbance load field might require us to consider fine meshes, making the optimization problem intractable. One can think of the disturbance field as spatial variations of electrical loads observed over a geographical region. If the loads have high frequency spatial variations, it would imply that we need high control resolution (i.e., we need sources at every node in the network to achieve tight control). This can be achieved, for instance, by installing distributed energy resources (DERs). Moreover, if the load has low-frequency variations, it would imply that the DERs would have to cooperate to counteract global variations.

In our experiments, the size of mesh was set and , which results in mesh points. To address this complexity, we partition the 2-D domain into partitions each with points and we label each element in the partition as . We can think of each partition as a region of the network. We define inner index sets by: and . The GS scheme for partition is given by:

| (43a) | ||||

| s.t. | ||||

| (43b) | ||||

| (43c) | ||||

| (43d) | ||||

| (43e) | ||||

| (43f) | ||||

The constraint indices for the constraints (43c)-(43f) run over and .

To perform coarsening, a mesh of points is collapsed into a single coarse point and the mapping from the coarse space to the original space is:

| (44) |

As with the temporal case, we also perform aggregation of the constraints in the partition to obtain a coarse representations. In our experiments, we used as default. The disturbance field is given by a linear combination of a 2-D sinusoidal and of a Gaussian function. The shape of the load field illustrated in Figure 7. Figure 8 shows the optimal potential field obtained with the coarse problem and that obtained with the GS scheme at the first and tenth steps (initialized with the coarse field). Note that the coarse field error captures the global structure of the load field but misses the high frequencies, while the GS scheme corrects the high-frequency load imbalances. In Figure 9 we again illustrate that the hierarchical scheme outperforms the decentralized GS scheme.

3.3 Effect of Coarsening Strategy

We now compare the efficiency of coarsening with different resolutions on the performance of GS. The results are given in Figure 10 (top left and right) and reveal that using a higher resolution for the coarse problem does not necessarily result in better performance of the GS scheme. This is particularly evident in the temporal case while for the spatial case increasing the mesh resolution does help. We attribute this difference to the asymmetric nature of the temporal problem compared to the symmetric mesh of the spatial case. For the temporal problem, we have found that the most effective coarsening strategy is to solve a sequence of coarse problems with increasing resolution. At each coarsening level, however, we only perform a single GS coordination step and the resulting coordination variables are used to initialize the GS coordination at the next level. The error evolution of this sequential coarsening scheme is shown in Figure 10 (top left). We sequentially solved the coarse problems by using (this gives a total of eight GS steps). We note that, at the tenth GS step, the solution from this sequential coarsening scheme is about seventy times smaller than that obtained with no coarsening. This can be attributed to the ability of the single step GS schemes to cover a wider range of frequencies.

3.4 Effect of Coordination Order

We solved the multi-scale temporal control problem (36) and the spatial control problem (43) with four different ordering methods for each problem. For temporal control problem, ordering method 1 was a lexicographic ordering, ordering method 2 was a reverse lexicographic ordering, ordering method 3 was a forward-backward ordering, and ordering method 4 was the red-black scheme. For the spatial control problem, ordering method 1 was a lexicographic ordering, ordering method 2 was a spiral-like ordering, ordering method 3 was the red-black ordering, and ordering method 4 was set by ordering the partitions based on the magnitude of the disturbance. The results are presented in Figure 10 (top left and right). As can be seen, in temporal problem, the performance of reverse lexicographic ordering is significantly better than that achieved by other methods. This can be attributed to the asymmetry of the coupling topology. In particular, in the temporal problem, the primal variable information is propagated in forward direction while the dual information is propagated in reverse direction. It can be seen that dual information plays an important role in the convergence of the temporal problem. In the spatial problem, the performance of the different orderings is virtually the same. The red-black ordering (which enables parallelism) achieves the same performance as the rest. We attribute this to the symmetry of the spatial domain.

4 Conclusions and Directions of Future Work

We have presented basic elements of multi-grid computing schemes and illustrated how to use these to create hierarchical coordination architectures for complex systems. In particular, we discuss how Gauss-Seidel schemes can be seen as decentralized coordination schemes that handle high-frequency effects while coarse solution operators can be seen as low-resolution centralized coordination schemes that handle low-frequency effects. We believe that multi-grid provides a powerful framework to systematically construct hierarchical coordination architectures but diverse challenges need to be addressed. In particular, it is important to understand convergence properties of GS schemes in more complex settings with nonlinear effects and inequality constraints. Moreover, it is necessary to develop effective coarsening (aggregation) schemes that can retain useful information while reducing complexity. Moreover, it is desirable to combine hierarchical coordination schemes and existing control theory to analyze stability and robustness properties.

5 Acknowledgements

We acknowledge funding from the National Science Foundation under award NSF-EECS-1609183.

References

- (1) Albersmeyer, J., Diehl, M.: The lifted newton method and its application in optimization. SIAM Journal on Optimization 20(3), 1655–1684 (2010)

- (2) Antoulas, A.C., Sorensen, D.C., Gugercin, S.: A survey of model reduction methods for large-scale systems. Contemporary mathematics 280, 193–220 (2001)

- (3) Arnold, M., Negenborn, R., Andersson, G., De Schutter, B.: Distributed predictive control for energy hub coordination in coupled electricity and gas networks. In: Intelligent Infrastructures, pp. 235–273. Springer (2010)

- (4) Baldea, M., Daoutidis, P.: Control of integrated process networks a multi-time scale perspective. Computers & chemical engineering 31(5), 426–444 (2007)

- (5) Biegler, L.T., Zavala, V.M.: Large-scale nonlinear programming using ipopt: An integrating framework for enterprise-wide dynamic optimization. Computers & Chemical Engineering 33(3), 575–582 (2009)

- (6) Borzì, A., Kunisch, K.: A multigrid scheme for elliptic constrained optimal control problems. Computational Optimization and Applications 31(3), 309–333 (2005)

- (7) Borzì, A., Schulz, V.: Multigrid methods for pde optimization. SIAM review 51(2), 361–395 (2009)

- (8) Boyd, S., Parikh, N., Chu, E., Peleato, B., Eckstein, J.: Distributed optimization and statistical learning via the alternating direction method of multipliers. Foundations and Trends® in Machine Learning 3(1), 1–122 (2011)

- (9) Brandt, A.: Algebraic multigrid theory: The symmetric case. Applied mathematics and computation 19(1), 23–56 (1986)

- (10) Camponogara, E., Jia, D., Krogh, B.H., Talukdar, S.: Distributed model predictive control. Control Systems, IEEE 22(1), 44–52 (2002)

- (11) Chow, J.H., Kokotovic, P.V.: Time scale modeling of sparse dynamic networks. Automatic Control, IEEE Transactions on 30(8), 714–722 (1985)

- (12) Diehl, M., Bock, H.G., Schlöder, J.P., Findeisen, R., Nagy, Z., Allgöwer, F.: Real-time optimization and nonlinear model predictive control of processes governed by differential-algebraic equations. Journal of Process Control 12(4), 577–585 (2002)

- (13) Farina, M., Zhang, X., Scattolini, R.: A hierarchical mpc scheme for interconnected systems. arXiv preprint arXiv:1703.02739 (2017)

- (14) Fisher, M.L.: The lagrangian relaxation method for solving integer programming problems. Management science 50(12_supplement), 1861–1871 (2004)

- (15) Giselsson, P., Doan, M.D., Keviczky, T., De Schutter, B., Rantzer, A.: Accelerated gradient methods and dual decomposition in distributed model predictive control. Automatica 49(3), 829–833 (2013)

- (16) Hong, M., Luo, Z.Q.: On the linear convergence of the alternating direction method of multipliers. arXiv preprint arXiv:1208.3922 (2012)

- (17) Jamshidi, M., Tarokh, M., Shafai, B.: Computer-aided analysis and design of linear control systems. Prentice-Hall, Inc. (1992)

- (18) Joo, J.Y., Ilic, M.D.: Multi-layered optimization of demand resources using lagrange dual decomposition. Smart Grid, IEEE Transactions on 4(4), 2081–2088 (2013)

- (19) Kokotovic, P.: Subsystems, time scales and multimodeling. Automatica 17(6), 789–795 (1981)

- (20) Kokotovic, P., Avramovic, B., Chow, J., Winkelman, J.: Coherency based decomposition and aggregation. Automatica 18(1), 47–56 (1982)

- (21) Kozma, A., Frasch, J.V., Diehl, M.: A distributed method for convex quadratic programming problems arising in optimal control of distributed systems. In: Decision and Control (CDC), 2013 IEEE 52nd Annual Conference on, pp. 1526–1531. IEEE (2013)

- (22) Lefort, A., Bourdais, R., Ansanay-Alex, G., Guéguen, H.: Hierarchical control method applied to energy management of a residential house. Energy and Buildings 64, 53–61 (2013)

- (23) Liu, C., Shahidehpour, M., Wang, J.: Application of augmented lagrangian relaxation to coordinated scheduling of interdependent hydrothermal power and natural gas systems. Generation, Transmission & Distribution, IET 4(12), 1314–1325 (2010)

- (24) Negenborn, R.R., De Schutter, B., Hellendoorn, J.: Efficient implementation of serial multi-agent model predictive control by parallelization. In: Networking, Sensing and Control, 2007 IEEE International Conference on, pp. 175–180. IEEE (2007)

- (25) Peponides, G.M., Kokotovic, P.V.: Weak connections, time scales, and aggregation of nonlinear systems. Automatic Control, IEEE Transactions on 28(6), 729–735 (1983)

- (26) Rawlings, J.B., Bonné, D., Jørgensen, J.B., Venkat, A.N., Jørgensen, S.B.: Unreachable setpoints in model predictive control. Automatic Control, IEEE Transactions on 53(9), 2209–2215 (2008)

- (27) Rawlings, J.B., Mayne, D.: Model predictive control. Noble Hill Publishing (2008)

- (28) Scattolini, R.: Architectures for distributed and hierarchical model predictive control–a review. Journal of Process Control 19(5), 723–731 (2009)

- (29) Scattolini, R., Colaneri, P.: Hierarchical model predictive control. In: Decision and Control, 2007 46th IEEE Conference on, pp. 4803–4808. IEEE (2007)

- (30) Simon, H.A., Ando, A.: Aggregation of variables in dynamic systems. Econometrica: journal of the Econometric Society pp. 111–138 (1961)

- (31) Stewart, B.T., Venkat, A.N., Rawlings, J.B., Wright, S.J., Pannocchia, G.: Cooperative distributed model predictive control. Systems & Control Letters 59(8), 460–469 (2010)

- (32) Stewart, B.T., Wright, S.J., Rawlings, J.B.: Cooperative distributed model predictive control for nonlinear systems. Journal of Process Control 21(5), 698–704 (2011)

- (33) Summers, T.H., Lygeros, J.: Distributed model predictive consensus via the alternating direction method of multipliers. In: Communication, Control, and Computing (Allerton), 2012 50th Annual Allerton Conference on, pp. 79–84. IEEE (2012)

- (34) Zavala, V.M.: New architectures for hierarchical predictive control. IFAC-PapersOnLine 49(7), 43–48 (2016)

- (35) Zavala, V.M., Anitescu, M.: Real-time nonlinear optimization as a generalized equation. SIAM Journal on Control and Optimization 48(8), 5444–5467 (2010)

- (36) Zavala, V.M., Anitescu, M.: Real-time nonlinear optimization as a generalized equation. SIAM Journal on Control and Optimization 48(8), 5444–5467 (2010)

- (37) Zavala, V.M., Biegler, L.T.: The advanced-step nmpc controller: Optimality, stability and robustness. Automatica 45(1), 86–93 (2009)

- (38) Zhu, D., Yang, R., Hug-Glanzmann, G.: Managing microgrids with intermittent resources: A two-layer multi-step optimal control approach. In: North American Power Symposium (NAPS), 2010, pp. 1–8. IEEE (2010)