Multi-feature Reconstruction Network using Crossed-mask Restoration for Unsupervised Industrial Anomaly Detection

Abstract

Unsupervised anomaly detection using only normal samples is of great significance for quality inspection in industrial manufacturing. Although existing reconstruction-based methods have achieved promising results, they still face two problems: poor distinguishable information in image reconstruction and well abnormal regeneration caused by model under-regularization. To overcome the above issues, we convert the image reconstruction into a combination of parallel feature restorations and propose a multi-feature reconstruction network, MFRNet, using crossed-mask restoration in this paper. Specifically, a multi-scale feature aggregator is first developed to generate more discriminative hierarchical representations of the input images from a pre-trained model. Subsequently, a crossed-mask generator is adopted to randomly cover the extracted feature map, followed by a restoration network based on the transformer structure for high-quality repair of the missing regions. Finally, a hybrid loss is equipped to guide model training and anomaly estimation, which gives consideration to both the pixel and structural similarity. Extensive experiments show that our method is highly competitive with or significantly outperforms other state-of-the-arts on four public available datasets and one self-made dataset.

Index Terms:

Unsupervised anomaly detection, multi-feature reconstruction, crossed-mask restoration, transformer structure.I Introduction

Anomaly detection aims to identify and locate anomalous regions in images [1], which is a fundamental yet challenging task in visual understanding that has a wide range of real-world applications, such as product quality monitoring [2, 3, 4], medical health diagnosis [5, 6], video surveillance [7, 8], and so on. In the past decades, machine vision-based methods [9, 10] have gained significant attention, and are increasingly replacing the traditional manual inspection, which is time-consuming, subjective and inaccurate. However, these previous works generally rely on the extraction of handcrafted features based on the characteristics of a specific situation, limiting the generalization ability of the algorithms. Since the unprecedented breakthrough of deep learning in computer vision, great efforts have been made to apply it to high-quality anomaly detection. Nevertheless, in most real application scenarios, it is easy to acquire normal examples, but unrealistic to collect and label all types of abnormality, which makes it impossible to apply well-established full-supervised learning methods [11, 12]. Therefore, unsupervised learning methods that only require normal data for training are attracting more and more attention. The two classical paradigms for unsupervised anomaly detection methods are feature-based and reconstruction-based.

The former firstly extracts discriminative features for normal images using deep neural networks, including pre-trained models and self-supervised learning. Then, relevant statistical approaches, such as clustering algorithms or Gaussian models, are utilized to model the distribution of these normal features. During inference, examples that deviate from the learned distribution will be designated as anomalies. Since working on the feature space with a semantically meaningful representation, feature-based methods typically produce promising results. However, they usually lack interpretability, because it is unable to directly determine which part of the image causes a high abnormal score. Some works [13, 14] attempt to obtain anomaly localization by performing evaluations on a large number of image patches respectively through the sliding window strategy, which brings high complexity and limits the practical applications of these methods. Meanwhile, the artificially selected distribution assumption is hard to hold in all abnormal cases, ranging from subtle texture changes (e.g., weak scratches) to larger structural changes (e.g., missing components). This hinders the universal application of feature-based methods.

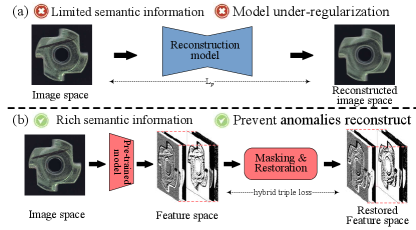

Another typical group of reconstruction-based methods commonly uses deep reconstruction models, such as auto-encoder (AE) and generative adversarial network (GAN), to model the latent representation of the normal data and then reconstruct itself. Since the trained models only have the knowledge specific to the normal samples, when the anomalous sample is fed through this pipeline, non-defective regions in it could be reconstructed reliably, while defective regions will most likely be poorly reconstructed. As a result, the difference between the input and its reconstruction is calculated by some distance metrics to generate an anomaly map, where the high reconstruction errors are designated as anomalies. Although reconstruction-based methods are intuitive and interpretable, their performance is relatively limited due to the several problems demonstrated in Fig.1 (a).

Firstly, most reconstruction-based models often fail to effectively utilize or learn semantic representation, which leads to imperfect anomaly detection in complex datasets. One main reason for this problem is that, unlike feature-based methods that inspect anomalies in feature space, most reconstruction-based models detect anomalies in image space with limited distinguishable information. Meanwhile, a recent study [15] has shown that reconstruction-based models, especially vanilla AE, are likely to capture only low-level features rather than high-level semantic information, which is attributed to the information equivalence among the input and the reestablish target.

Secondly, the under-regularization ability of the reconstruction model makes it challenging to ensure a poor reconstruction of abnormalities. More specifically, reconstruction-based methods assume that reconstruction only succeeds when samples are normal. But due to the strong generalization ability of neural networks, anomalies could be regenerated well unexpectedly in practice, thus resulting in false detection. Several empirical methodologies [7, 16] have been used to address this problem, but the effectiveness remains limited. Consequently, exploring an effective regularization method to prevent the reconstruction model from regenerating anomalies remains an open question.

Thirdly, commonly used loss is a simple per-pixel distance metric, which ignores the inter-dependencies between the local image regions. As a consequence, these pixel-level measures will get high reconstruction errors even with slight localization imprecisions of edges, resulting in many false anomaly detection.

To address the above problems, we propose a universal anomaly detection architecture that combines the advantages of feature-based methods with reconstruction-based methods, named MFRNet. As shown in Fig.1 (b), to better distinguish the normal samples and abnormal samples, we perform reconstruction at the feature space extracted by a frozen pre-trained backbone. The generated multi-scale features have inherently multi-scale context-aware representations, which is beneficial for detecting anomalies with various scales. In addition, we break the information equivalence in traditional auto-encoders and convert anomaly detection into a restoration problem through crossed-masking followed by restoring. The masked regions are regenerated by conditional only on their surrounding context, which can effectively restrict the model’s capability to reconstruct anomalies. Finally, model training and anomaly estimation are performed using a hybrid loss to take into consideration both pixel and structural similarity.

Compared to the previous work, especially the preliminary reconstruction-based models, the contributions of this paper are summarized as follows.

1. We evaluate the difference between normal and abnormal images from the perspective of feature domain and propose an anomaly detection framework based on multi-scale feature reconstruction.

2. We consider the reconstruction task as a restoration problem to break information equivalence, which can learn more distinguishable semantic information and regularize the reconstruction model to prevent abnormal reconstruction.

3. A self-collected Fabric-US dataset containing 180 normal images for training and 400 abnormal images for testing is produced and publicly available. Extensive experiments carried out on Fabric-US and four other public datasets demonstrate the effectiveness and generalization of our method.

The rest of the paper is structured as follows. Section II reviews the related works on anomaly detection briefly, and Section III describes the framework of our proposed method in detail. Next, the experiment results on five datasets and an ablation study are reported in section IV. Then, section V provides some discussions about the proposed method. Finally, we summarize the work and present the future work in Section VI.

II Related work

In this section, we restrict ourselves to an overview of current unsupervised anomaly detection methods for image data, focusing on those based on deep learning. They can be broadly divided into two categories: feature-based and reconstruction-based methods.

II-A Feature-based approaches

One promising solution for unsupervised anomaly detection is to leverage relevant machine learning models to model the distribution of normal image features extracted by either handcrafted feature descriptors or deep neural networks. The images will be designated as anomalous if their corresponding features deviate from the learned distribution. Since the distribution modeling of normal samples and anomaly inference are both in the feature space, such methods are often referred as feature-based approaches. Obviously, the performance of this methodology is primarily influenced by two components: the feature extraction and distribution assumption modules.

For the feature extraction modules, previous studies [9, 10] utilized a number of simple handcrafted feature descriptors, such as texture features or edge features, to represent the images. However, they generally fail to yield impressive detection performance, due to the lack of semantically discriminative features. With the rapid development of deep learning, in recent years, the trend has been shifted to use deep learning methods as feature extractors. SPADE [17] could be the first work that directly used a deep neural network pre-trained on the large-scale image database ImageNet [18] to obtain better feature representation. Subsequently, more and more researchers [13, 19, 20, 21, 22] began to experiment with a variety of popular pre-trained networks, e.g., ResNet, Wide-ResNet or EfficientNet, and also produced decent anomaly detection results. Most of these studies discussed the effect of using different layer features on the experimental results, and declared that the ensemble of multiple feature representations of the networks can offer some benefits. However, there is still no general consensus on how to choose the appropriate feature layers for aggregation to cope with different scenarios. Besides using pre-trained deep networks, significant efforts had also been invested in designing proper self-supervised learning tasks to learn meaningful representations from scratch. The popular proxy tasks include predicting the relative position of a pair of random image patches [23, 24], solving jigsaw puzzles of the randomly disrupted image patches [25], restoring the erased attributes of raw image (color and orientation information) [15], binary classification between the normal samples and the artificial pseudo-anomaly samples [14, 26], obtaining the learned latent features from deep auto-encoders via CNN [27, 28] or vision transformer [29], and so on. Although these elaborate auxiliary tasks have shown great potential in extracting semantic features, the learned representation capacity will be greatly limited by the limited normal training data. Furthermore, such methods require an additional training phase, which is not off-the-shelf like pre-trained features, and they also don’t exhibit significant advantages over the ones using pre-trained features. Very recent works [31, 32] starting with [30] are able to further transform the pre-trained features of normal data into a more simple well-defined distribution, typically Gaussian distribution, by using normalizing flow-based models. Due to being projected to a more tractable distribution, they generally showed favorable detection performance.

After obtaining meaningful feature representation describing normal data, various traditional statistical models are used to attempt to fit it into different hypothetical distributions. Then, the anomaly score can be calculated by the deviation distance between the features of test sample and the established distribution of normal features. Different feature-based methods mainly differ by the distribution estimation module that they adopt. The common distribution assumptions can be the k-nearest neighbours [17, 21], support vector data description (SVDD) [23, 24], Gaussian mixture model [28, 29], multivariate Gaussian model [13, 14, 19], multiple independent multivariate Gaussian clustering [3], etc. Moreover, the seminal work [33] and its successors [34, 35] used a student-teacher distillation scheme for anomaly detection and reached satisfactory results. Since the student networks are trained to regress the output of a teacher network pre-trained on ImageNet during the training, they only learn the normal data manifold and thus can be seen as an estimated distribution. Afterwards, the anomaly scores are derived from the discrepancy between the student network and teacher network. Although feature-based methods are extremely competitive, there are some crucial factors that affect their practical application in anomaly detection: (1) A heuristic distributional assumption is hard to characterize the distribution adequately as the data complexity increases. (2) Most of these methods lack interpretability that they simply predict the anomalies at the image-level without spatial localization. While performing anomaly evaluation for each image patch step-by-step can yield coarse detection results, it is computationally expensive. Remarkably, all of the above approaches indicate that normal and abnormal images are more likely to be distinguishable in feature space. This finding motivates our work to detect anomalies through the reconstruction of pre-trained deep features rather than raw images.

II-B Reconstruction-based approaches

Another mainstream for anomaly detection is the reconstruction-based approach, which typically uses a reconstruction model (AE or GAN, for instance) to encode the latent manifold of training data and then reconstruct itself from there. At the inference stage, anomalous regions cannot be reconstructed faithfully from the learned latent representation of anomaly-free data. Since it is intuitive and explainable, i.e., the anomaly map is directly generated by the difference between the test image and its reconstructed image, this kind of method has received substantial attention.

The most fundamental architecture of reconstruction-based methods, auto-encoder, is essentially an encoder-decoder network. The encoder manages to learn meaningful representations of normal inputs into a latent space, and the decoder attempts to regenerate the inputs from this low-dimensional space. The abnormal data unseen during the training are expected to not be reconstructed as accurately as normal ones, hence making higher reconstruction errors. Over the past few years, various variants of auto-encoder networks had been extensively explored for anomaly detection task. Chow et al. [36] proposed to adopt convolutional AE for anomaly recognition, in which the hidden layers are implement by convolution. While Chen et al. [37] implemented auto-encoder by the emerging Transformer structure to capture more non-local feature information. Further study [38] attempted to inspect anomaly by the variational AE, but did not show significant performance gains over the convolutional AE. Additionally, the training of variational AE is relatively unstable. To improve the robustness of convolutional AE, Mei et al. [39] introduced noise interference into the input images for model training and obtained a better detection performance. Although these models are supposed to only reconstruct normal images accurately, the under-regularization of neural networks sometimes result in anomalies being reproduced well, thus causing misdetection. Lots of efforts have been devoted to tackling this excessive generalization issue. [7] believed that skip connections in most auto-encoders may simply copy the information of abnormal images, and thus proposed a U2-net-like backbone network without skip connections. Some works [16, 40] introduced a memory module into auto-encoder to record the normal patterns of the training samples. Given a defective input, the proposed memory-augmented AE will try to reproduce images using normal features stored in the memory module, reducing the possibility of recreating anomalies. To restrict the representation learning in the latent space explicitly, Liu et al. [4] and Niu et al. [41] proposed the novel auto-encoder with dual prototype loss, which were able to encourage the latent representation of encoder to keep closer to their own prototype, i.e., the center of the latent representation of training images. Very recently, Fei et al. [15] held a view that the cause of anomaly reconstruction may be the failure to extract semantic features in the vanilla AE models. They suggested that the AE models tend to simply compress images instead of learning the abstract semantic representation, due to the information equivalence, namely the same information between the input and output. Later on, many following researchers [42, 43, 44] adopted image inpainting for anomaly localization to break the information equivalence and thus obtained more semantic understanding of the image. In these methods, parts of the sample images are covered by pre-specified masks, and then these hide regions are restored conditioned on their surroundings by the inpainting models. During the testing, anomaly scores are determined by the discrepancy between the masked regions and the corresponding repairs. This effective paradigm also inspires our work to formulate the anomaly detection task as a restoration problem through cross-masking and then recovering. The vast majority of reconstruction-based approaches just perform reconstruction in raw image space, which is insufficient for complex scenarios. To introduce more image information into the reconstruction process, some attempts have been made to execute reconstruction in the space of pre-trained features [37, 45], image pyramids [39], multi-frequency [46, 47] or texture–structure features [48]. Meanwhile, there are some works trying to leverage different distance metrics to assess the difference between inputs and reconstructed outputs. A simple or loss was used in [36, 37, 45] for model training, however, this ignored the inter-dependencies between the neighboring regions. [42, 44] and [49] introduced structural similarity losses to give a better measure for image similarity.

Another typical architecture is the generative adversarial network, which basically comprises a generator and a discriminator. Generator is to establish a mapping from random vectors drawn from a prior distribution to the input image space, while discriminator attempts to differentiate the created fake images by the generator from the real input images. Through a two-player zero-sum game [50], the trained network can learn the data distribution of input training data effectively, thereby producing samples as similar as possible to the normal samples. Initial effort in [5] first applied GAN for anomaly detection. During the testing, the proposed AnoGAN is able to generate a normal image that is visually closest to the input image. And the anomaly map is obtained by comparing the discrepancy between the input image and the corresponding generated image. However, this model requires iterative backpropagation through the generator network to find the closest encoding to the defective image in prior distribution space, which is computationally expensive. To circumvent such problem, later improvement [6] adopted an auto-encoder as the generator network to map the test image to a latent space and then output the normal version of the image. Moreover, the difference between each feature layer in the discriminator network can be regarded as a reliable criterion for identifying anomalies. Following this paradigm, most contemporary research [7, 41, 46] added a discriminant network to the above-mentioned auto-encoders to improve the quality of the generated images by conducting adversarial training. Although some improvements have been made, generative adversarial networks tend to suffer from erratic training and therefore require constantly adjust the training period manually to obtain acceptable results.

III Methodology

The problem definition of unsupervised anomaly detection is presented as follows: given a training dataset consisting only of normal images, the goal is to design a model that can determine whether an unseen test image is defective or not and further locate anomalous regions in the defective images. To solve this problem effectively, we develop a multi-feature reconstruction network using crossed-mask restoration. As shown in Fig.2, it is mainly composed of three parts: (1) a multi-scale feature aggregator to extract discriminative hierarchical features of the raw image; (2) a crossed-mask restoration network trained over the generated multi-scale representation to partially cover and recover it again; (3) a hybrid loss to measure the discrepancy between the input and regeneration more comprehensively. Since our model is trained only with normal images, the feature restoration of abnormal regions will fail, and the restoration degree can be used for anomaly inference. In the following subsections, our anomaly detection framework will be described elaborately.

III-A Multi-scale feature aggregator

It has been certified that feature representation from a pre-trained model could provide more distinguishable information for identifying normal and abnormal images [33]. Therefore, a frozen pre-trained model is firstly adopted to extract multi-scale features in this paper. Take VGG16 [51] as an example, we can obtain a set of feature maps with different sizes for a raw image , where means the output of -th convolutional layer. To achieve effective alignment, these feature maps are resized to the same size, and concatenated along the channel axis to produce a multi-scale feature map:

| (1) |

where and denote the operations of concatenation and resize, respectively. , and are height, width and channel dimensions of the produced feature map.

Obviously, feature maps from different convolutional layers contain different information and thus are sensitive to the different abnormalities. Earlier layers output higher-resolution maps encoding more low-level information (e.g., texture and edge), while latter layers output lower-resolution maps encoding richer semantic information. As a result, the aggregation of these complementary multi-scale features plays an important role in anomaly detection.

III-B Crossed-mask restoration network

After the multi-scale features of the image are ready, we leverage a crossed-mask restoration network to extend the reconstruction-based approach for anomaly localization. Specifically, a set of complementary crossed masks are generated to formulate a restoration task, followed by a restoration network trained to predict the covered regions. In this way, the difference between the masking region and the corresponding restoration is going to be significant for anomalous regions. By covering and restoring the partial regions of the input, our method can gain a much deeper semantic understanding of the scene, and regularizes the reconstruction model to prevent anomalies from being reconstructed well.

III-B1 Crossed-mask generator

An all-zero mask with the same size as the multi-scale feature map is uniformly partitioned into a grid of square regions of the size . And then these grids are randomly divided into disjoint sub-masks . Therefore, we have . These complementary sub-masks, after being replicated along the channel dimension, are respectively multiplied by the multi-scale feature map to obtain , which serves as the input for the later restoration network. Since these complementary sub-masks can jointly cover all the pixels of the feature map, any possible anomalous regions will not be lost. For convenience, we illustrate the masking operation with parameters and on a single-channel feature map in Fig.3.

Since the sizes of anomalies are diverse, multiple restorations using different values sampled from a set should be considered to obtain more reliable detection results. Meanwhile, the parameter could influence the representation capacity and repairing quality of restoration network, thus determining the performance of anomaly detection. Therefore, the proper match of parameters and is of great importance for our method, which will be discussed in Section ‘Discussion’.

III-B2 Restoration network

Given a masked feature map , we employ a concise yet powerful restoration network to imagine visually reasonable and semantically consistent content for the missing regions. As shown in Fig.4 (a), it overall follows the principle of the conventional auto-encoder with skip connections, and is mainly composed of a head convolution, a tail convolution and a hybrid transformer body, combining the merits of CNN and recently emerging transformer structures [52].

The head convolution is used to reduce the input feature dimension to using a convolutional layer with the kernel size of 33. The benefits are twofold: On the one hand, this dimensionality reduction operation can greatly reduce computational complexity and memory overhead. On the other hand, it can facilitate the information interaction across channels, resulting in a richer representation capability. The tail convolution is structurally identical to the head convolution, also a 33 convolution, and is located behind the hybrid transformer body. It is designed to modulate the feature dimension to match the original input for anomaly estimation. The hybrid transformer body is comprised of five stages, each of which involves a stack of hybrid transformer blocks. Furthermore, a down-sampling layer is used to halve the size and double the channel of the input map in the first two stages, while an up-sampling layer is used to do the opposite in the last two stages. Both of these are implemented by convolutions. Fig.4 (a) shows the corresponding feature sizes and the number of hybrid transformer blocks in each stage. The details of the hybrid transformer block are presented below.

The hybrid transformer block is a light-weight transformer variant, which is illustrated in Fig.4(b). We abandon the complex absolute or relative positional encodings used in previous transformer architectures and adopt a more convenient and efficient locally position-aware (LPA) module. Structurally, LPA is a 33 convolution with zero padding 1, which can implicitly provide local spatial relationships for our hybrid transformer block. Subsequently, Layer normalization (LN) and residual connection are applied at both ends of the multi-pooling self-attention (MPSA) and convolutional feed-forward network (CFFN) respectively. CFFN is made up of two 11 convolutions and one 33 convolution with activation functions in between for non-linear feature transformation. To lower the high computational cost of previous self-attention module, we apply a multi-pooling operation to the computation of key and value matrices in our MPSA. Specifically, suppose the input of our MPSA is , then the output is formulated as:

| (2) |

where , and are the linear projections used to calculate the query, key, and value matrices. The notation is the dimension of for scale balance. is a concatenation of after passing through multiple average pooling layers with a set of ratios , i.e., . For sufficiently large pooling ratios, can be a smaller substitute for and contain all of its contextual information.

The transformer structure in our restoration network can explicitly learn long-range dependencies to speculate the semantically plausible appearance, while the intrinsic locality of CNN makes it more adept at extracting local features to recover the texture details. Consequently, the high-quality reconstruction for each masked map can be generated independently. And the final feature reconstruction result is a union of the newly restored regions in each partial reconstruction, i.e., .

III-C Hybrid loss

To enable our crossed-mask restoration network to reconstruct the input well, a hybrid loss is adopted in this paper. It combines three loss functions to take into consideration both the pixel and structural similarity.

III-C1 Contextual loss

To explicitly force the network to generate contextually similar feature map, we apply a -loss between the multi-scale feature map of an image and it’s corresponding regenerated feature map . It is expressed as:

| (3) |

However, such per-pixel measure could ignore the interdependency between the neighboring regions, which is often unreasonable. Therefore, we additionally introduce two similarity metrics, including structural similarity index (SSIM) [53] and gradient magnitude similarity (GMS) [54] to perceive different structural properties.

III-C2 SSIM loss

The SSIM loss is denoted as:

| (4) |

where 1 means a matrix of ones with the same size as . is the structural similarity map between and . The value of each coordinate in the map is the value between two patches and cropped from and centered at , which can be denoted as:

| (5) |

where , , and are the mean and variance of and respectively, and is the covariance of them. and mean two small positive constants to avoid dividing by zero.

III-C3 GMS loss

The GMS loss is represented as:

| (6) |

where denotes the gradient magnitude similarity map between and , which is shown below:

| (7) |

where is small positive constant to avoid instability. is the gradient magnitude map for that can be computed by , where and indicate two 33 Prewitt filters along the horizontal and vertical directions and symbol denotes the convolution operation.

Finally, the hybrid loss is a sum of the above losses:

| (8) |

IV Experiment results

The experimental settings, including evaluation metrics, datasets description, implementation details and competing methods, are first introduced. Then, the generalization ability and superiority of our method are demonstrated by analyzing the comparison results against state-of-the-art methods on four publicly-available datasets and our newly-built dataset. Finally, we also report the performance of our method with different components to illustrate the contribution of the individual designs.

IV-A Experimental setups

IV-A1 Evaluation metrics

Besides the visual comparison, we introduce several evaluation metrics for a more comprehensive and objective assessment. Following the recent work [2] on anomaly detection, Area Under the Receiver Operating Characteristics (AUROC) is adopted as the primary indicator for performance evaluation. Meanwhile, to increase the diversity of evaluation standards, we also introduce three widely-used criterions in segmentation task, including MAE, ACC and F1-score. As the general rule, higher AUROC, ACC, F1 score, and lower MAE mean better anomaly detection performance.

MAE represents the mean absolute pixel-wise difference between the predicted detection result and its ground truth , formulated as:

| (9) |

where and denote the width and height of image, respectively, and represents the image pixel coordinates.

ACC refers to the accuracy rate, which is computed by the ratio of the properly predicted pixels and the total pixels. As expressed in the following formula:

| (10) |

where TP, TN, FP and FN indicate the number of true positive (defective pixels correctly detected), false positive (normal pixels misclassified as defective), true negative (defect-free pixels successfully detected) and false negative (defective pixels misclassified as normal), respectively.

F1 score denotes an overall metric for performance evaluation, calculated by the harmonic mean of and , as follows:

| (11) |

where denoted as is the fraction of defective pixels among the detected pixels. expressed as is the fraction of anomalous pixels that are correctly identified.

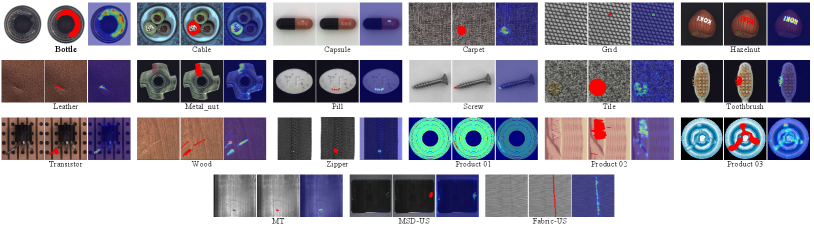

IV-A2 Datasets description

a) MVTec AD dataset [2]: It is the first comprehensive real-world industrial image dataset for anomaly detection, which is composed of 5,354 high-resolution images with a range from 700700 to 10241024 pixels. There are five texture and ten object categories from different application scenarios. Each category consists of around 60 to 391 normal images for training, and the remaining normal images as well as anomaly images with various types of defect for testing. The relatively small number of training images poses a challenge for learning effective deep representations.

b) BTAD dataset [29]: The dataset is made up of three different types of industrial products, called product 01, product 02 and product 03. The training sets for the three categories include 400 (16001600 pixels), 399 (600600 pixels) and 1000 (800600 pixels) defect-free images, respectively, while the testing set is a mixture of normal images and abnormal images. For each anomalous image, there is a detailed pixel-wise ground truth annotation. The high complexity of texture makes it challenging for anomaly detection.

c) MT dataset [55]: This dataset is a magnetic tile surface defect dataset, which involves 392 defective and 925 defect-free images with varied illuminations and image sizes. There are five defect types including blowhole, crack, fray, break and uneven occurring in the testing set. In our implementation, the 925 normal samples are served as the training set and 392 abnormal samples as the testing set. The existence of pseudo-defects and uneven lighting causes great difficulty in the detection of defects.

d) MSD-US dataset [56]: Such dataset is a recently released mobile phone screen surface defect dataset collected by an industrial camera. It contains of three types of man-made defects: oil, stain and scratch. Each class consists 400 images with 19201080 pixels for testing, and the corresponding ground truth masks are given. To follow the unsupervised setting, 20 extra non-defective images are provided for training. Uneven illumination at the edge of screen brings great challenges to anomaly detection.

e) Fabric-US dataset: This is a novel dataset of fabric images collected by our proposed acquisition equipment shown in Fig.5. The acquisition system primarily consists of line-scan digital cameras, strip lighting, and transmission roller. The line-scan cameras are equipped with high-precision CCD sensors, offering a lateral resolution of 4K and capable of achieving a maximum scanning speed of 16 meters per second. The strip light is strategically positioned perpendicular to the fabric surface, ensuring sufficient brightness to enhance the visibility of defects and make them more discernible. Due to the inherent softness and flexibility of fabric, the transmission roller facilitates the controlled movement and rolling of the material during the scanning process, ensuring stability and consistent image capture. This integrated system provides a reliable and efficient platform for high-quality fabric image acquisition, supporting detailed analysis and defect detection. Finally, 180 normal images are captured to serve as a training set, and 400 typical defect images are adopted as abnormal samples for the testing. The resolution of each raw image is 512512 pixels. Since the weak defect features, this dataset becomes challenging. This dataset is the unsupervised version of previous Fabric dataset [57], thus named Fabric-US (UnSupervised), and is available to the public111https://pan.baidu.com/s/19gZoBxI9AUCQUTTBxyq8mA?pwd=0927.

| Methods | Bottle | Cable | Capsule | Carpet | Grid | Hazelnut | Leather | Metal_nut | Pill | Screw | Tile | Toothbrush | Transistor | Wood | Zipper | Mean |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Patch-SVDD [23] | 49.97 | 25.86 | 6.62 | 22.77 | 5.86 | 22.69 | 14.05 | 41.58 | 19.91 | 1.66 | 21.48 | 29.78 | 20.46 | 17.92 | 18.54 | 21.28 |

| SPADE [17] | 48.79 | 35.20 | 28.44 | 46.83 | 22.23 | 38.22 | 32.64 | 41.15 | 28.45 | 17.65 | 47.41 | 23.30 | 43.31 | 39.99 | 40.15 | 35.58 |

| GCPF [3] | 59.47 | 49.55 | 23.52 | 49.10 | 27.01 | 52.2 | 34.7 | 42.37 | 25.55 | 11.83 | 55.27 | 16.63 | 41.38 | 42.27 | 41.44 | 38.15 |

| AE-ssim [49] | 46.43 | 18.46 | 15.75 | 11.71 | 8.90 | 49.54 | 39.59 | 40.1 | 22.71 | 26.79 | 14.64 | 39.09 | 36.65 | 16.31 | 30.82 | 27.83 |

| GANomaly [8] | 13.08 | 14.73 | 15.66 | 16.37 | 16.52 | 29.44 | 28.47 | 18.48 | 19.93 | 20.83 | 20.83 | 28.74 | 11.20 | 19.54 | 5.42 | 18.62 |

| STPM [58] | 62.41 | 49.41 | 19.64 | 49.31 | 26.55 | 41.07 | 16.11 | 44.98 | 38.25 | 16.34 | 46.91 | 26.76 | 49.18 | 38.39 | 40.46 | 37.72 |

| MKDAD [34] | 59.38 | 27.27 | 30.93 | 53.21 | 29.89 | 39.29 | 44.94 | 50.37 | 35.10 | 12.95 | 43.73 | 34.42 | 42.76 | 46.02 | 37.38 | 39.18 |

| DifferNet [30] | 10.26 | 9.69 | 10.50 | 15.92 | 13.50 | 15.84 | 25.93 | 11.32 | 14.82 | 10.01 | 9.97 | 16.74 | 9.93 | 10.66 | 15.42 | 13.37 |

| CS-Flow [32] | 44.30 | 35.48 | 21.79 | 43.96 | 24.89 | 30.53 | 34.98 | 41.10 | 25.13 | 18.40 | 46.68 | 24.78 | 38.35 | 31.81 | 43.23 | 33.69 |

| DFR [45] | 49.73 | 40.64 | 54.03 | 53.61 | 51.45 | 59.31 | 60.87 | 54.52 | 47.85 | 40.61 | 41.82 | 45.97 | 39.93 | 44.90 | 51.26 | 49.10 |

| OCR-GAN [46] | 8.94 | 3.05 | 11.02 | 1.21 | 1.81 | 16.05 | 8.56 | 3.37 | 4.72 | 7.12 | 4.51 | 4.79 | 2.32 | 7.63 | 5.17 | 6.02 |

| UTRAD [37] | 31.32 | 4.45 | 14.94 | 16.13 | 10.82 | 23.41 | 37.54 | 11.04 | 13.58 | 0.72 | 21.99 | 19.35 | 6.35 | 26.20 | 18.56 | 17.09 |

| RIAD [42] | 52.52 | 33.64 | 44.91 | 37.37 | 39.98 | 52.24 | 51.26 | 44.14 | 27.47 | 31.48 | 30.91 | 40.10 | 40.62 | 36.36 | 52.96 | 41.06 |

| SSM [44] | 37.75 | 25.15 | 34.03 | 17.35 | 35.50 | 54.23 | 52.87 | 30.73 | 25.31 | 31.8 | 23.31 | 33.34 | 28.73 | 34.83 | 33.70 | 33.24 |

| Ours | 64.01 | 49.50 | 55.30 | 57.21 | 52.65 | 57.21 | 61.49 | 50.75 | 45.59 | 43.36 | 48.32 | 47.09 | 47.65 | 47.50 | 53.97 | 52.11 |

IV-A3 Implementation details

For the input image, its resolution is resized to 256256 uniformly, and its channel is triplicated when encountering a grayscale image. For the multi-scale feature aggregator, we choose a frozen pre-trained model, VGG16, as the feature extractor by default. Considering the computational cost, the outputs of its first three blocks are selected for the experiments, and the size of the obtained feature map is resized to 6464. The parameters and in crossed-mask generator are set to 3 and , respectively. The set of pooling ratios and in restoration network are empirically set to and 64.

During the training phase, our model is optimized by Adam optimizer with a learning rate of , a weight decay of and a batch size of 6 for 400 epochs. The model is implemented by PyTorch and executed on a PC with an Intel i7-12700F CPU and an NVIDIA GeForce RTX 3090 GPU.

During the testing phase, the final anomaly map is an average of the anomaly maps generated by all values in the set respectively. And the individual anomaly map for each is computed by measuring the difference between the normalized input feature map and the normalized reconstructed feature map using

IV-A4 Competing methods

We compare the proposed method against the following state-of-the-art unsupervised anomaly detection methods: 1) feature-based methods SPADE [17], Patch SVDD [23], GCPF [3], DifferNet [30], CS-Flow [32], MKDAD [34] and STPM [58]; as well as 2) reconstruction-based methods AE-ssim [49], GANomaly [8], OCR-GAN [46], DFR [45], UTRAD [37], RIAD [42] and SSM [44]. To ensure fair comparisons, we re-execute the released codes with recommended parameters of the above methods on our computation platform.

IV-B Comparisons with State-of-the-arts

IV-B1 Detection Results on MVTec AD

In Table I, the proposed MFRNet is compared with recent anomaly detection methods on each category of the MVTec AD dataset. As we can see, our method outperforms all other methods in terms of F1 in nine out of fifteen categories. Moreover, in the remaining six categories, our method also achieves the second-best performance, which is very close to the best. Among these six categories, DFR performs the best on the hazelnut, metalnut and pill, but is prone to significant performance degradation on the transistor and cable. Similarly, although GCPF yields the top one F1 on the cable, it is inferior to ours on the capsule and screw by a great gap. On the transistor category, the same situation occurred with the STPM. On the contrary, our approach produces more consistently excellent performance across all categories and achieves the highest mean F1 score 52.11. Therefore, it can be concluded that the proposed MFRNet is not limited to specific defects and is highly adaptable to different objects, shapes and texture variations.

IV-B2 Detection Results on BTAD

| Methods | Product 01 | Product 02 | Product 03 | |||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| AUROC | MAE | ACC | F1 | AUROC | MAE | ACC | F1 | AUROC | MAE | ACC | F1 | |

| Patch-SVDD | 76.13 | 13.71 | 85.14 | 17.85 | 43.98 | 36.11 | 60.97 | 15.43 | 77.53 | 12.28 | 86.89 | 16.35 |

| SPADE | 82.84 | 39.81 | 60.03 | 15.10 | 81.17 | 46.66 | 54.04 | 23.52 | 81.46 | 57.24 | 43.56 | 13.62 |

| GCPF | 77.87 | 26.85 | 72.76 | 14.35 | 84.91 | 25.55 | 72.60 | 28.16 | 82.76 | 27.10 | 71.60 | 20.41 |

| AE-ssim | 84.37 | 14.57 | 84.21 | 21.77 | 54.22 | 19.92 | 76.20 | 14.89 | 84.48 | 11.38 | 87.06 | 18.56 |

| GANomaly | 85.74 | 6.75 | 90.60 | 15.58 | 56.16 | 9.37 | 86.61 | 7.68 | 76.09 | 4.87 | 94.35 | 8.60 |

| STPM | 87.78 | 23.13 | 75.85 | 28.12 | 80.78 | 34.08 | 65.11 | 28.69 | 86.38 | 34.11 | 65.53 | 21.74 |

| MKDAD | 91.81 | 20.02 | 77.46 | 42.06 | 75.82 | 26.02 | 71.70 | 25.49 | 92.15 | 28.10 | 71.14 | 34.21 |

| DifferNet | 67.77 | 5.61 | 89.25 | 7.33 | 65.92 | 7.24 | 87.87 | 6.89 | 68.79 | 3.34 | 93.05 | 5.58 |

| CS-Flow | 78.14 | 14.70 | 81.22 | 28.38 | 63.26 | 26.54 | 73.13 | 19.61 | 73.16 | 13.10 | 85.47 | 13.75 |

| DFR | 92.66 | 9.68 | 89.76 | 45.01 | 90.44 | 16.94 | 81.01 | 30.50 | 93.62 | 15.21 | 79.93 | 41.93 |

| OCR-GAN | 72.48 | 6.88 | 95.62 | 3.56 | 56.19 | 7.06 | 94.88 | 2.56 | 62.78 | 4.25 | 97.57 | 1.22 |

| UTRAD | 72.43 | 5.50 | 92.50 | 21.50 | 57.16 | 7.42 | 93.07 | 6.48 | 58.24 | 2.96 | 97.94 | 4.73 |

| RIAD | 87.66 | 21.66 | 76.82 | 26.30 | 73.10 | 23.30 | 72.42 | 27.15 | 82.35 | 18.54 | 80.49 | 13.54 |

| SSM | 85.34 | 15.28 | 83.93 | 19.76 | 66.29 | 8.20 | 89.37 | 13.62 | 82.34 | 10.22 | 89.36 | 12.70 |

| Ours | 91.52 | 5.27 | 94.12 | 43.58 | 92.45 | 6.39 | 95.41 | 35.30 | 94.34 | 2.67 | 98.88 | 42.08 |

The quantitative comparison of different methods for each product of the BTAD dataset is listed in Table II. Since it is not very convincing to perform well on only one indicator, four metrics are used for a more comprehensive evaluation. It can be seen from Table II that for almost all four evaluation metrics, our method holds the best performance on each type of product. Specifically, for the product 01, our MFRNet ranks first in terms of MAE and can be in the top three in the other three metrics. Although DFR achieves slightly better AUROC and F1 scores, our approach outperforms it with respect to MAE and ACC scores by a large margin. And OCR-GAN has a higher ACC compared to ours, but its AUROC and F1 scores are extremely poor. Concerning the product 02 and product 03, our MFRNet consistently surpasses the other methods in terms of all four metrics. Remarkably, our approach far exceeds the second-best on the F1 score, obtaining a significant improvement of 15.7 and 0.36, respectively. The above results suggest that the proposed MFRNet has a more comprehensive performance compared to other existing methods.

IV-B3 Detection Results on MT, MSD-US and Fabric-US

| Methods | MT | MSD-US | Fabric-US | |||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| AUROC | MAE | ACC | F1 | AUROC | MAE | ACC | F1 | AUROC | MAE | ACC | F1 | |

| Patch-SVDD | 73.33 | 28.61 | 70.56 | 25.23 | 83.32 | 12.97 | 85.98 | 13.16 | 69.19 | 34.30 | 64.88 | 11.52 |

| SPADE | 69.82 | 30.90 | 68.13 | 23.91 | 64.78 | 21.64 | 77.29 | 6.82 | 85.07 | 17.75 | 80.67 | 16.23 |

| GCPF | 71.65 | 24.81 | 73.79 | 23.19 | 62.69 | 16.04 | 82.64 | 7.47 | 84.71 | 12.68 | 85.56 | 16.90 |

| AE-ssim | 79.49 | 21.25 | 80.63 | 21.59 | 90.01 | 13.06 | 86.35 | 12.44 | 87.78 | 8.09 | 89.27 | 29.22 |

| GANomaly | 61.69 | 11.24 | 87.83 | 10.56 | 83.41 | 2.63 | 89.94 | 19.84 | 84.48 | 1.15 | 94.73 | 26.10 |

| STPM | 64.31 | 35.55 | 64.21 | 21.63 | 77.56 | 28.88 | 70.54 | 7.14 | 82.18 | 20.91 | 77.82 | 15.82 |

| MKDAD | 84.28 | 23.42 | 77.77 | 35.74 | 97.51 | 13.01 | 86.06 | 32.90 | 88.22 | 12.45 | 85.67 | 30.56 |

| DifferNet | 78.98 | 9.56 | 90.56 | 17.09 | 89.99 | 2.16 | 94.76 | 24.84 | 77.65 | 1.88 | 90.28 | 20.14 |

| CS-Flow | 56.83 | 28.97 | 68.53 | 21.63 | 90.21 | 11.89 | 86.63 | 12.33 | 83.71 | 8.18 | 89.75 | 17.67 |

| DFR | 89.14 | 12.49 | 87.31 | 40.32 | 98.05 | 7.53 | 90.64 | 43.64 | 88.67 | 6.51 | 90.36 | 39.34 |

| OCR-GAN | 58.21 | 9.72 | 91.25 | 3.74 | 80.75 | 1.13 | 90.84 | 19.69 | 71.10 | 1.87 | 91.68 | 7.96 |

| UTRAD | 51.94 | 8.69 | 98.19 | 1.37 | 74.94 | 4.80 | 94.35 | 5.84 | 78.35 | 14.38 | 83.60 | 22.59 |

| RIAD | 73.40 | 29.64 | 69.74 | 27.50 | 97.96 | 10.74 | 87.91 | 39.72 | 85.79 | 20.22 | 76.68 | 28.60 |

| SSM | 76.82 | 13.69 | 86.50 | 27.50 | 97.26 | 3.46 | 95.83 | 38.30 | 84.23 | 6.43 | 88.48 | 37.54 |

| Ours | 88.86 | 7.07 | 98.75 | 42.11 | 98.32 | 2.17 | 95.75 | 43.11 | 88.78 | 1.80 | 96.89 | 39.66 |

Table III shows quantitative comparisons of different methods on the MT, MSD-US and Fabric-US datasets. On the MT dataset, it can be found that our detection method performs the best on the MAE, ACC and F1 scores and is only slightly inferior to the top-performing method DFR in terms of AUROC. Although DFR yields a marginally higher AUROC, the proposed MFRNet obtains remarkable improvements of 18.6 and 13.1 in terms of MAE and ACC respectively. Concerning the MSD-US dataset, our method surpasses other compared methods in terms of AUROC, with the highest score of 98.32. In addition, the MAE, ACC and F1 scores of our method are also of great impression, reaching 2.17, 95.75 and 43.11, respectively. For the Fabric-US dataset, our method markedly outperforms the other methods on most of the metrics, except MAE. Despite having the best MAE of GANomaly, it fails in the F1 metric. In general, as reported in Table III, our MFRNet is one of the top contenders in all evaluation metrics across the three industrial datasets, indicating that MFRNet can be an efficient solution for a variety of anomaly detection tasks.

IV-B4 Inference speed

| Methods | MVTec AD | BTAD | MT | MSD-US | Fabric-US | Mean |

|---|---|---|---|---|---|---|

| Patch-SVDD | 0.36 | 0.45 | 1.66 | 0.15 | 0.14 | 0.55 |

| SPADE | 3.83 | 5.04 | 7.33 | 3.14 | 3.59 | 4.59 |

| GCPF | 1.15 | 1.73 | 2.65 | 1.15 | 1.34 | 1.60 |

| AE-ssim | 0.14 | 0.08 | 0.02 | 0.13 | 0.04 | 0.08 |

| GANomaly | 0.16 | 0.10 | 0.04 | 0.15 | 0.06 | 0.10 |

| STPM | 0.13 | 0.10 | 0.03 | 0.17 | 0.06 | 0.10 |

| MKDAD | 0.81 | 0.69 | 0.61 | 0.35 | 0.74 | 0.64 |

| DifferNet | 0.40 | 0.34 | 0.29 | 0.42 | 0.31 | 0.35 |

| CS-Flow | 0.17 | 0.12 | 0.07 | 0.17 | 0.09 | 0.12 |

| DFR | 0.16 | 0.12 | 0.03 | 0.41 | 0.06 | 0.16 |

| OCR-GAN | 0.23 | 0.23 | 0.08 | 0.26 | 0.14 | 0.19 |

| UTRAD | 0.10 | 0.10 | 0.04 | 0.12 | 0.06 | 0.08 |

| RIAD | 0.43 | 0.37 | 0.31 | 0.47 | 0.35 | 0.39 |

| SSM | 0.33 | 0.28 | 0.20 | 0.34 | 0.24 | 0.28 |

| Ours | 0.35 | 0.32 | 0.28 | 0.39 | 0.27 | 0.32 |

We report the running time of different methods on five datasets for efficiency comparison. During the testing phase of MFRNet, multiple feature restorations are required to synthesize the detection results, which should be time-consuming. However, as listed in Table IV, our method achieves an average inference time of 0.32s per image, which is in the middle of the existing methods, and acceptable for practical application requirements. We argue that our method can attain this considerably competitive speed, thanks to the light-weight structure of our restoration network. Meanwhile, parallel computation of multiple feature restorations or accelerated implementation with TensorRT can significantly reduce inference time. Furthermore, by utilizing smaller and in the crossed-mask generator, we can further obtain a more efficient detection method with only a small degradation in performance. The details can be seen in Section ‘Discussion’. In summary, the proposed method exhibits relatively high efficiency, while maintaining superior performance and strong applicability, making it a practical choice for real-world scenarios.

IV-C Ablation Study

To validate the effectiveness of each core component in our MFRNet, we conduct a series of ablation experiments on the bottle category of MVTec AD dataset. The first row in Table V indicates the baseline, which replaces the hybrid transformer block of restoration network with convolutional block and is trained directly on the normal image space using contextual loss . Based on this, we incrementally add the following two types of components: a) architecture: multi-scale feature aggregator (MFA), crossed-mask generator (CG) and hybrid transformer-based restoration network (HTR); b) loss: SSIM loss () and GMS loss (). The detailed results for each configuration are given below.

IV-C1 Architecture ablation

As listed in Table V, the baseline holds an unsatisfactory result and leaves room for improvement, due to the limited distinguishable information in image space and under-regularization of the model. Then, instead of reconstructing the raw image as in baseline, we attempt to reconstruct the corresponding feature map, which can provide more distinguishable representation. From the second row of Table V, we can observe that this modification is extremely beneficial, achieving a reduction of 10.7 in MAE, and contributing gains of 9.3, 7.6 and 37.5 in AUROC, ACC and F1 metrics respectively, compared to the baseline. We can also see that the reconstruction of the feature map can significantly suppress erroneously reported anomalies in Fig. 6(d). Next, the reconstruction problem is converted into a restoration problem by introducing CG module, and the convolutional network in baseline is still utilized to repair the masked feature map. We observe a substantial improvement in AUROC (86.68 v.s. 91.79), MAE (7.93 v.s. 7.65), ACC (85.73 v.s. 88.56) and F1 (42.85 v.s. 50.79). It can also be noted from Fig. 6(e) that the problem of anomalies being well reconstructed has been effectively addressed. Besides, when using the proposed restoration network with hybrid transformer blocks, the overall performance is further increased, showing it enjoys the benefits of both long-range contextual information and low-level details. A similar observation can be found in Fig. 6(f).

| Architecture | Loss | AUROC | MAE | ACC | F1 | ||||

| MFA | CG | HTR | |||||||

| 79.31 | 8.88 | 79.70 | 31.16 | ||||||

| 86.68 | 7.93 | 85.73 | 42.85 | ||||||

| 91.79 | 7.65 | 88.56 | 50.79 | ||||||

| 95.54 | 7.22 | 90.07 | 57.01 | ||||||

| 96.86 | 6.96 | 90.80 | 61.33 | ||||||

| 98.43 | 6.88 | 91.29 | 64.01 | ||||||

IV-C2 Loss ablation

When a structural loss is added to the training and testing of our entire architecture, the AUROC, ACC and F1 are respectively increased by 1.4, 0.8 and 7.6, while the MAE is reduced by 3.6. Finally, as can be seen in the last row of Table V, our MFRNet, equipped with hybrid loss, sets a new state-of-the-art and achieves 98.43 AUROC, 6.88 MAE, 91.29 ACC and 64.01 F1. Moreover, the visual comparison of our MFRNet under different losses is also presented in Fig. 6 to signify the importance of the hybrid loss in a more intuitive way. It is clear that can detect anomalous areas, but the defective areas are relatively discrete due to the per-pixel error measure. In contrast, the combination of and captures interdependencies between the local regions and produces more visually consistent detection results. In Fig. 6(h), the hybrid loss can highlight the complete defects with higher anomaly scores. The above results prove that all loss functions are essential for the proposed method to achieve the best anomaly detection results.

V Discussions

In this section, we carry out a series of experiments to gain deeper insights into our MFRNet for unsupervised anomaly detection, all of which are conducted on the bottle category of MVTec AD dataset, unless otherwise stated.

V-A Influence of pre-trained model

| Backbone | AUROC | MAE | ACC | F1 |

|---|---|---|---|---|

| AlexNet [59] | 93.40 | 9.44 | 91.41 | 59.86 |

| ResNet18 [60] | 84.93 | 10.37 | 85.96 | 43.63 |

| DenseNet201 [61] | 85.79 | 9.12 | 87.96 | 38.79 |

| EfficientNet-B0 [62] | 88.02 | 9.77 | 82.79 | 47.17 |

| VGG16 [51] | 98.43 | 6.88 | 91.29 | 64.01 |

For the multi-scale feature aggregator in MFRNet, besides using VGG16 to obtain discriminant features, we also test our method with different backbone networks in Table VI. These backbone networks are initialized with ImageNet pre-trained weights, and similarly, the first three feature blocks are chosen as the output. The size of input image and output feature is identical to that of the previous setting. It can be observed that the results are basically stable when using different backbones, suggesting that our method has a relatively strong robustness. However, we surprisingly find that models such as ResNet18, DenseNet201, and EfficientNet-B0 perform better on classification tasks but produce worse anomaly detection results. We speculate the underlying reason is that the features extracted by these models are so abstract that information about small anomalies is lost. Therefore, only choosing the earlier feature layers in these models may be a good solution. In summary, our MFRNet can be used as a plug-in model with arbitrary backbone networks, and we recommend using VGG16 in the multi-scale feature aggregator.

V-B Influence of multi-scale feature

In the multi-scale feature aggregator, we extract hierarchical discriminative representations from different pre-trained feature layers. To quantify the effect of these multi-scale features, we show the results obtained when varying the feature layers extracted in VGG16. As illustrated in Fig. 7, the AUROC and F1 values of three configurations are reported, including using the first layer (Layer 1), the first two layers (Layer 1+2), and the first three layers (Layer 1+2+3). It is clear that features from the first layer can already yield encouraging results, and there is a consistent growth trend as the feature layers increase. This suggests that each feature layer can convey some different types of information that contribute to the final detection performance, and the performance may be further improved with more layers involved. Meanwhile, unlike previous work [45] that simply concatenates features from different layers, our method can establish information interactions between different feature layers via a head convolution. This also keeps the computational cost of the method constant, regardless of the number of feature layers used. As a result, we extract the last feature layer in the first three blocks by default for the VGG16 in MFRNet. And ones can also set it up with the task complexity of the actual industrial scenario to make the most of its performance.

V-C Choice of masking size

Since anomalies in the real world vary in size, the crossed-mask generator should consider multiple masks with different sizes, and then the generated anomaly maps for each masking size should also be merged to compute the final anomaly map. To determine the choice of masking size in our method, we compare the performance when uniting different values of . From Table VII, going from a single masking size to multiple masking sizes , we observe an improvement of 1, 7.8, 1.7 and 35.7 in terms of AUROC, MAE, ACC and F1, showing that combining different masking sizes is beneficial. To provide an intuitive understanding of the effect of masking size in our method, we also illustrate the anomaly maps under different masking sizes in Fig. 8. As expected, the small-size mask helps to find the tiny anomalies, but struggles with the large ones. And when combined with more large-size masks, we can cover the anomalies with various sizes, thus generating more reliable anomaly maps. Therefore, in the latter experiments, a set of masking sizes is utilized in the proposed method.

| Masking size | AUROC | MAE | ACC | F1 | |||

| 2 | 4 | 8 | 16 | ||||

| 97.44 | 7.46 | 89.76 | 47.18 | ||||

| 97.99 | 7.31 | 90.74 | 52.99 | ||||

| 98.52 | 7.05 | 90.86 | 57.13 | ||||

| 98.43 | 6.88 | 91.29 | 64.01 | ||||

V-D Choice of masking ratio

Besides the masking size discussed above, masking ratio is also an important parameter that affects the performance. The masking ratio refers to the ratio of patches removed in the feature map to be restored, which is equal to . For example, in Fig. 3, means that the masking ratio is , i.e., one-third of the patches are covered. To quantify its effect in our method, we compare the performance when varying the masking ratio. The results are shown in Fig. 9, where the symbol 0 on the x-axis indicates feature reconstruction without masking. We can see that the results regarding AUROC, ACC and F1 show a parabolic trend, rising first and then falling. More specifically, they increase steadily with the value of , peaking at , then gradually decline with the continuous increase of . The MAE value shows the opposite trend and also reaches the optimal value at . Accordingly, two conclusions can be drawn: 1) Converting the reconstruction problem to a restoration problems indeed improve detection performance. 2) A much high value of can adversely affect performance. We believe the reason for this is that a much high value of (i.e., a much low masking ratio) merely creates a simple task that can be easily solved by extrapolation from surrounding pixels, and thus fails to learn meaningful representations. Therefore, we set the masking ratio to , i.e., in the crossed-mask generator for the best performance.

V-E Low-shot anomaly detection

A better data efficiency is essential for real-world industrial inspections, where there may be a limited number of normal examples available. Therefore, we compare the performance of our method and AE-ssim [49] with a smaller number of training samples, ranging from 1, 10, 20, 50, 100 and 209 (the full bottle category). As reported in Fig. 10, AE-ssim yields an apparent decrease of 6.7 and 26.9 in AUROC and F1 when reducing the training samples from 209 to 100, while our method yields only 1 and 0.98. And we also observe that our MFRNet with only 20 training samples can significantly outperform AE-ssim with a full 209 samples in terms of F1. Furthermore, it is worth noting that with only one training sample, our MFRNet still achieves noticeable performance and even is comparable to AE-ssim with full samples. We believe that the better data efficiency of our method is due to anomaly detection in feature space. Compared to the image space, our method can capture more distinguishable information even in the few-shot cases. Therefore, we argue that our method can be well applied in real-world industrial scenarios.

V-F Comparison with supervised methods

In the above sections, we have verified that our MFRNet is better than the current unsupervised methods in terms of most evaluation metrics. And to further demonstrate its superiority, we also compare this unsupervised method with some supervised methods. Since supervised methods require anomaly labels during training, they are trained on the Fabric dataset [57], which contains 1200 abnormal fabric images with pixel-level annotations. As can be seen from Table VIII, it is surprising that our MFRNet achieves AUROC and ACC values close to the supervised methods, and even outperforms them in terms of F1 value. Regarding the MAE indicator, our method can perform better than most unsupervised methods, but worse than the supervised methods. We speculate the underlying reason is that there could be many plausible reconstructions for the same masked region, especially as the image pattern becomes more random and complex. In such cases, the plausible reconstruction of the masked area may differ significantly from the pixels or patches in the original image, thus resulting in increasing anomaly scores in these anomaly-free regions and a high MAE value of our method. In spite of this, considering that our MFRNet is trained in the unsupervised mode, we think that its performance is unparalleled and quite remarkable.

| Methods | AUROC | MAE | ACC | F1 |

|---|---|---|---|---|

| UNet [63] | 85.95 | 0.51 | 97.15 | 38.87 |

| MedT [64] | 57.74 | 0.83 | 95.55 | 24.97 |

| SETR [65] | 89.32 | 1.60 | 96.80 | 33.17 |

| MCnet [66] | 76.33 | 0.59 | 97.75 | 29.73 |

| Ours | 88.78 | 1.80 | 96.89 | 39.66 |

V-G Visual presentation

Some qualitative results of our anomaly detection method are presented in Fig. 11. As we can observe, the proposed method can accurately locate defects on different industrial material surfaces, such as fabric, wood, leather, metal, etc. Although the anomalies differ in size, shape, color and brightness, our method works well and thus is highly applicable to production lines. For example, our method can inspect the abnormal colors on the cable and metalnut categories with high accuracy and even recognize the misprinted words on the hazelnut category. Meanwhile, a tiny missing tip in the screw category is also precisely detected. In the tile category and MSD-US dataset, the abnormal oils appear transparent and have low visibility against the background, yet our method still detects them successfully, showing a strong semantic capability. Even on some material surfaces with complex texture variations, such as carpet, wood categories and Fabric-US dataset, our method can assign high anomaly scores to the anomalous regions.

VI Conclusion and future work

This paper proposes a multi-feature reconstruction network, MFRNet, based on crossed-mask restoration for unsupervised anomaly detection, which can detect anomalies accurately only using anomaly-free images. A multi-scale feature aggregator is adopted to extract discriminative hierarchical features of the raw image from a pre-trained model. A crossed-mask restoration network is also proposed to partially cover the generated multi-scale representation, and then restore the missing regions. Combined with the hybrid loss, MFRNet is able to ensure feature reconstruction and anomaly detection from both the pixel and structural similarity. Experimental results on four public datasets and our newly-proposed dataset show that our method is at par with or outperforms other state-of-the-art methods. In addition, our method has strong robustness and generalization ability and can even deliver detection performance close to the supervised methods.

In the future, we plan to design a more effective and reasonable masking strategy to formulate the restoration task. Moreover, developing a stronger restoration network could be considered to further improve the detection accuracy and inference speed.

References

- [1] Tao X, Gong X, Zhang X, et al. Deep Learning for Unsupervised Anomaly Localization in Industrial Images: A Survey[J]. IEEE Transactions on Instrumentation and Measurement, 2022.

- [2] Bergmann P, Fauser M, Sattlegger D, et al. MVTec AD–A comprehensive real-world dataset for unsupervised anomaly detection[C]//Proceedings of the IEEE/CVF conference on computer vision and pattern recognition. 2019: 9592-9600.

- [3] Wan Q, Gao L, Li X, et al. Industrial Image Anomaly Localization Based on Gaussian Clustering of Pretrained Feature[J]. IEEE Transactions on Industrial Electronics, 2021, 69(6): 6182-6192.

- [4] Wu Q, Li H, Tian C, et al. AEKD: Unsupervised auto-encoder knowledge distillation for industrial anomaly detection[J]. Journal of Manufacturing Systems, 2024, 73: 159-169.

- [5] Schlegl T, Seeböck P, Waldstein S M, et al. Unsupervised anomaly detection with generative adversarial networks to guide marker discovery[C]//International conference on information processing in medical imaging. Springer, Cham, 2017: 146-157.

- [6] Schlegl T, Seeböck P, Waldstein S M, et al. f-AnoGAN: Fast unsupervised anomaly detection with generative adversarial networks[J]. Medical image analysis, 2019, 54: 30-44.

- [7] Liu R, Liu W, Li H, et al. Metro Anomaly Detection Based on Light Strip Inductive Key Frame Extraction and MAGAN Network[J]. IEEE Transactions on Instrumentation and Measurement, 2021, 71: 1-14.

- [8] [8] Wu P, Liu J, He X, et al. Toward video anomaly retrieval from video anomaly detection: New benchmarks and model[J]. IEEE Transactions on Image Processing, 2024, 33: 2213-2225.

- [9] Wang J, Xu G, Li C, et al. Surface defects detection using non-convex total variation regularized RPCA with kernelization[J]. IEEE Transactions on Instrumentation and Measurement, 2021, 70: 1-13.

- [10] Song G, Song K, Yan Y. Saliency detection for strip steel surface defects using multiple constraints and improved texture features[J]. Optics and Lasers in Engineering, 2020, 128: 106000.

- [11] Wang J, Xu G, Yan F, et al. Defect transformer: An efficient hybrid transformer architecture for surface defect detection[J]. Measurement, 2023, 211: 112614.

- [12] Zhang G, Lu Y, Jiang X, et al. Context-Aware Adaptive Weighted Attention Network for Real-Time Surface Defect Segmentation[J]. IEEE Transactions on Instrumentation and Measurement, 2024.

- [13] Rippel O, Mertens P, Merhof D. Modeling the distribution of normal data in pre-trained deep features for anomaly detection[C]//2020 25th International Conference on Pattern Recognition (ICPR). IEEE, 2021: 6726-6733.

- [14] Li C L, Sohn K, Yoon J, et al. Cutpaste: Self-supervised learning for anomaly detection and localization[C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 2021: 9664-9674.

- [15] Fei Y, Huang C, Jinkun C, et al. Attribute restoration framework for anomaly detection[J]. IEEE Transactions on Multimedia, 2020.

- [16] Gong D, Liu L, Le V, et al. Memorizing normality to detect anomaly: Memory-augmented deep autoencoder for unsupervised anomaly detection[C]//Proceedings of the IEEE/CVF International Conference on Computer Vision. 2019: 1705-1714.

- [17] Cohen N, Hoshen Y. Sub-image anomaly detection with deep pyramid correspondences[J]. arXiv preprint arXiv:2005.02357, 2020.

- [18] Deng J, Dong W, Socher R, et al. Imagenet: A large-scale hierarchical image database[C]//2009 IEEE conference on computer vision and pattern recognition. Ieee, 2009: 248-255.

- [19] Rippel O, Mertens P, König E, et al. Gaussian anomaly detection by modeling the distribution of normal data in pretrained deep features[J]. IEEE Transactions on Instrumentation and Measurement, 2021, 70: 1-13.

- [20] Sun Z, Wang J, Li Y. RAMFAE: a novel unsupervised visual anomaly detection method based on autoencoder[J]. International Journal of Machine Learning and Cybernetics, 2024, 15(2): 355-369.

- [21] Roth K, Pemula L, Zepeda J, et al. Towards total recall in industrial anomaly detection[C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 2022: 14318-14328.

- [22] Heckler L, König R, Bergmann P. Exploring the importance of pretrained feature extractors for unsupervised anomaly detection and localization[C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 2023: 2917-2926.

- [23] Yi J, Yoon S. Patch svdd: Patch-level svdd for anomaly detection and segmentation[C]//Proceedings of the Asian Conference on Computer Vision. 2020.

- [24] Liu B, Li X, Xiao Y, et al. Adaboost-based SVDD for anomaly detection with dictionary learning[J]. Expert Systems with Applications, 2023: 121770.

- [25] Salehi M, Eftekhar A, Sadjadi N, et al. Puzzle-ae: Novelty detection in images through solving puzzles[J]. arXiv preprint arXiv:2008.12959, 2020.

- [26] Tian Y, Liu F, Pang G, et al. Self-supervised multi-class pre-training for unsupervised anomaly detection and segmentation in medical images[J]. arXiv preprint arXiv:2109.01303, 2021.

- [27] Yu Q, Li C, Zhu Y, et al. Convolutional autoencoder based on latent subspace projection for anomaly detection[J]. Methods, 2023, 214: 48-59.

- [28] Zong B, Song Q, Min M R, et al. Deep autoencoding gaussian mixture model for unsupervised anomaly detection[C]//International conference on learning representations. 2018.

- [29] Mishra P, Verk R, Fornasier D, et al. VT-ADL: A vision transformer network for image anomaly detection and localization[C]//2021 IEEE 30th International Symposium on Industrial Electronics (ISIE). IEEE, 2021: 01-06.

- [30] Rudolph M, Wandt B, Rosenhahn B. Same same but differnet: Semi-supervised defect detection with normalizing flows[C]//Proceedings of the IEEE/CVF winter conference on applications of computer vision. 2021: 1907-1916.

- [31] Zhou Y, Xu X, Song J, et al. Msflow: Multiscale flow-based framework for unsupervised anomaly detection[J]. IEEE Transactions on Neural Networks and Learning Systems, 2024.

- [32] Rudolph M, Wehrbein T, Rosenhahn B, et al. Fully convolutional cross-scale-flows for image-based defect detection[C]//Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision. 2022: 1088-1097.

- [33] Bergmann P, Fauser M, Sattlegger D, et al. Uninformed students: Student-teacher anomaly detection with discriminative latent embeddings[C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 2020: 4183-4192.

- [34] Salehi M, Sadjadi N, Baselizadeh S, et al. Multiresolution knowledge distillation for anomaly detection[C]//Proceedings of the IEEE/CVF conference on computer vision and pattern recognition. 2021: 14902-14912.

- [35] Liu M, Jiao Y, Lu J, et al. Anomaly Detection for Medical Images Using Teacher-Student Model with Skip Connections and Multi-scale Anomaly Consistency[J]. IEEE Transactions on Instrumentation and Measurement, 2024.

- [36] Chow J K, Su Z, Wu J, et al. Anomaly detection of defects on concrete structures with the convolutional autoencoder[J]. Advanced Engineering Informatics, 2020, 45: 101105.

- [37] Chen L, You Z, Zhang N, et al. UTRAD: Anomaly detection and localization with U-Transformer[J]. Neural Networks, 2022, 147: 53-62.

- [38] Zhang X, Shi S, Sun H C, et al. ACVAE: A novel self-adversarial variational auto-encoder combined with contrast learning for time series anomaly detection[J]. Neural Networks, 2024, 171: 383-395.

- [39] Mei S, Yang H, Yin Z. An unsupervised-learning-based approach for automated defect inspection on textured surfaces[J]. IEEE Transactions on Instrumentation and Measurement, 2018, 67(6): 1266-1277.

- [40] Liu R, Liu W, Duan M, et al. MemFormer: A memory based unified model for anomaly detection on metro railway tracks[J]. Expert Systems with Applications, 2024, 237: 121509.

- [41] Niu M, Wang Y, Song K, et al. An Adaptive Pyramid Graph and Variation Residual-Based Anomaly Detection Network for Rail Surface Defects[J]. IEEE Transactions on Instrumentation and Measurement, 2021, 70: 1-13.

- [42] Zavrtanik V, Kristan M, Skočaj D. Reconstruction by inpainting for visual anomaly detection[J]. Pattern Recognition, 2021, 112: 107706.

- [43] Yan X, Zhang H, Xu X, et al. Learning semantic context from normal samples for unsupervised anomaly detection[C]//Proceedings of the AAAI Conference on Artificial Intelligence. 2021, 35(4): 3110-3118.

- [44] Huang C, Xu Q, Wang Y, et al. Self-Supervised Masking for Unsupervised Anomaly Detection and Localization[J]. IEEE Transactions on Multimedia, 2022.

- [45] Shi Y, Yang J, Qi Z. Unsupervised anomaly segmentation via deep feature reconstruction[J]. Neurocomputing, 2021, 424: 9-22.

- [46] Liang Y, Zhang J, Zhao S, et al. Omni-frequency channel-selection representations for unsupervised anomaly detection[J]. IEEE Transactions on Image Processing, 2023.

- [47] Yang Z, Zhang M, Chen Y, et al. Surface defect detection method for air rudder based on positive samples[J]. Journal of Intelligent Manufacturing, 2022: 1-19.

- [48] Luo J, Lin J, Yang Z, et al. SMD Anomaly Detection: A Self-Supervised Texture–Structure Anomaly Detection Framework[J]. IEEE Transactions on Instrumentation and Measurement, 2022, 71: 1-11.

- [49] Bergmann P, Löwe S, Fauser M, et al. Improving unsupervised defect segmentation by applying structural similarity to autoencoders[J]. arXiv preprint arXiv:1807.02011, 2018.

- [50] Goodfellow I, Pouget-Abadie J, Mirza M, et al. Generative adversarial networks[J]. Communications of the ACM, 2020, 63(11): 139-144.

- [51] Simonyan K, Zisserman A. Very Deep Convolutional Networks for Large-Scale Image Recognition[C]// International conference on learning representations. 2015.

- [52] Dosovitskiy A, Beyer L, Kolesnikov A, et al. An image is worth 16x16 words: Transformers for image recognition at scale [J]. International Conference on Learning Representations, 2021.

- [53] Wang Z, Simoncelli E P, Bovik A C. Multiscale structural similarity for image quality assessment[C]//The Thrity-Seventh Asilomar Conference on Signals, Systems and Computers, 2003, 2: 1398-1402.

- [54] Xue W, Zhang L, Mou X, et al. Gradient magnitude similarity deviation: A highly efficient perceptual image quality index[J]. IEEE transactions on image processing, 2013, 23(2): 684-695.

- [55] Huang Y, Qiu C, Yuan K. Surface defect saliency of magnetic tile[J]. The Visual Computer, 2020, 36(1): 85-96.

- [56] Zhang J, Ding R, Ban M, et al. FDSNeT: An Accurate Real-Time Surface Defect Segmentation Network[C]//ICASSP 2022-2022 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). IEEE, 2022: 3803-3807.

- [57] Wang J, Xu G, Li C, et al. SDDet: An Enhanced Encoder-Decoder Network with Hierarchical Supervision for Surface Defect Detection[J]. IEEE Sensors Journal, 2022.

- [58] G. Wang, S. Han, E. Ding, D. Huang, Student-teacher feature pyramid matching for anomaly detection[C]//British Machine Vision Conference. 2021: 1-13.

- [59] Krizhevsky A, Sutskever I, Hinton G E. Imagenet classification with deep convolutional neural networks[J]. Communications of the ACM, 2017, 60(6): 84-90.

- [60] He K, Zhang X, Ren S, et al. Deep residual learning for image recognition[C]//Proceedings of the IEEE conference on computer vision and pattern recognition. 2016: 770-778.

- [61] Huang G, Liu Z, Pleiss G, et al. Convolutional networks with dense connectivity[J]. IEEE transactions on pattern analysis and machine intelligence, 2019, 44(12): 8704-8716.

- [62] Tan M, Le Q. Efficientnet: Rethinking model scaling for convolutional neural networks[C]//International conference on machine learning. PMLR, 2019: 6105-6114.

- [63] Ronneberger O, Fischer P, Brox T. U-net: Convolutional networks for biomedical image segmentation[C]//International Conference on Medical Image Computing and Computer-Assisted Intervention. Springer, 2015: 234-241.

- [64] Valanarasu J M J, Oza P, Hacihaliloglu I, et al. Medical transformer: Gated axial-attention for medical image segmentation[C]// International Conference on Medical Image Computing and Computer Assisted Intervention. Springer, 2021: 36-46.

- [65] Zheng S, Lu J, Zhao H, et al. Rethinking semantic segmentation from a sequence-to-sequence perspective with transformers[C]//Proceedings of the IEEE/CVF conference on computer vision and pattern recognition. 2021: 6881-6890.

- [66] Zhang D, Song K, Xu J, et al. MCnet: Multiple context information segmentation network of no-service rail surface defects[J]. IEEE Transactions on Instrumentation and Measurement, 2020, 70: 1-9.