Multi-answer Constrained Optimal Querying: Maximum Information Gain Coding

Abstract

As the rapidly developments of artificial intelligence and machine Learning, behavior tree design in multiagent system or AI game become more important. The behavior tree design problem is highly related to the source coding in information theory. “Twenty Questions” problem is a typical example for the behavior tree design, usually used to explain the source coding application in information theory and can be solved by Huffman coding. In some realistic scenarios, there are some constraints on the asked questions. However, for general question set, finding the minimum expected querying length is an open problem, belongs to NP-hard. Recently, a new coding scheme has been proposed to provide a near optimal solution for binary cases with some constraints, named greedy binary separation coding (GBSC). In this work, we shall generalize it to D-ary cases and propose maximum information gain coding (MIGC) approach to solve the multi-answer decision constrained querying problem. The optimality of the proposed MIGC is discussed in theory. Later on, we also apply MIGC to discuss three practical scenarios and showcase that MIGC has better performance than GBSC and Shannon Coding in terms of bits persymbol.

Index Terms:

source coding, decision tree, greedy algorithm, information theory.I Introduction

Constucting a decision tree for identification is a fundamental problem in information theory and discrete mathematics [1]. As the rapidly developments of artificial intelligence and machine Learning, behavior tree design in multiagent system or AI game become more important. The behavior tree design problem is highly related to the source coding in information theory. “Twenty Questions” problem is a typical example for the behavior tree design, usually used to explain the source coding application in information theory and can be solved by Huffman coding. For the multiple agent systems, reinforcement learning[2], Q-learning[3, 4], and federated learning [5, 6, 7] may be the most important tools or frameworks to enhance the decision tree or behavior tree design.

Let us review the well-known game “Twenty Questions”, where a player is required to determine the identity of an item in a certain set by asking a minimum number of “yes/no” questions [8]. It is equivalent to the binary source coding in information theory. Its optimal solution can be given by Huffman coding for the scenario without any constraint [9, 10].

In general, numerous variants of the game could be designed by adding constraints. One example is to restrict the times of the question query [11, 12, 13], which can be solved by bounded-length Huffman coding. In the presence of large sets or multiple sources, the limited memory becomes the key constraint factor [14], which may cause conventional source coding methods can not give a reasonable solution. In particular, if each question is endowed with a cost, the problem can be turned from minimum length into minimum cost [1].

In this paper, we focus on the decision constrained case, where the question or decision set is limited. That is, one cannot divide the subtrees arbitrarily when building the decision tree. Generally, finding the optimal solution to it is an NP-hard problem[15]. Hence, it is common to utilize greedy algorithms to find some near optimal solutions rather than the exact solution [8]. In the literature, there exist heuristic algorithms that admit log-approximation [16, 17]. Furthermore, if the decision constraints can be described by a graph, an approximation with loglog from the optimal one was given[1]. Some algorithms based on active learning were also presented in[18, 19] .

Recently, the authors in [8] proposed a Greedy Binary Separation Coding (GBSC) algorithm to the problem based on information theory. However, it is only suitable to the ”yes/no” binary cases. Inspired by that, we generalize it to D-ary cases and propose a new algorithm MIGC referred to as Maximum Information Gain Coding. More specifically, the contribution can be mainly expressed as follows,

(i) We prove that for general distribution, the information quantity of the query is the entropy of the ”answer”.

(ii) We propose a Maximum Information Gain Coding algorithm to solve multi-answer decision constrained querying problem.

(iii) The optimality of MIGC is discussed in theory and some applications are given.

The rest of this paper is organized as follows. In Section II, we give a brief review of the GBSC algorithm. In Section III, we first build a generalized model for the multi-answer constrained problem and present the algorithm, Maximum Information Gain Coding (MIGC), to it. Then, we prove MIGC is better than Shannon Coding in terms of the average bits per symbol. In Section IV, three specific tasks are discussed to showcase the advantage of our proposed MIGC algorithm. Finally, the conclusion is given in Section V.

II Related Works

II-A Binary Identification Problem

First, we consider the binary case. Given a ramdom variable with distribution , and a set of questions , our objective is to find the minimum average query times by asking questions and present the selection of the in the sequel. Here, it is assumed that is the subset of X, and the query can be designed as ”Is in the set ?”, where . If the query set is , then the optimal sulotion can be given by Huffman coding. However, for some complex structures, Huffman coding algorithm may not be workable. Let us see some examples.

Example 1.

Suppose , distribution of is , and the query set is .

The solution of Example 1 is shown as Huffman decision tree mode in Fig.1. From the Huffman decision tree, one can find that the query set should contain and , but the query set in Example 1 did not contain them. That means the solution given by the Huffman decision tree is not valid to the problem in Example 1.

II-B Greedy Binary Separation Coding

To solve such a binary identification problem, recently, authors in [8] proposed an approximation algorithm based on a coding sheme, referred to as Greedy Binary Separation Coding (GBSC). The method constructs the decision tree from top to bottom and at each node, it chooses the query making the total probabilities of the left and right subtrees as close as possible. In this way, one can build the decision tree of Example 1 as in Fig.2

The intuition of GBSC is to greedily maximize the information gain at each node. It is proved that the mutual information between the target and the query is equal to the binary entropy of the answer to the query. This indicates that it obeys the rule of the probability of answer ”yes” to be close to . However, in [8], it is only proved GBSC is valid when is uniformly distributed. Now we will extend it and prove that for general discrete distributions, GBSC is still valid by using the near equal probability partition rule.

Note that GBSC is only suitable to the query problem in binary cases. Inspired by maximizing the information gain of GBSC, we generalize it and propose the Maximum Information Gain Coding (MIGC) method to multi-answer cases .

III Maximum Information Gain Coding

In this Section, we first introduce multi-answer constrained query problem and then present a new solution referred to as Maximum Information Gain Coding. Meanwhile we also analyze its optimality.

III-A Multi-answer Constrained Query Problem

Different from the constrained query problem in binary cases, the query now has possible answers. Each answer can be uniquely mapped to one of disjoint subsets of . For simplicity, we first introduce some definitions.

Definition 1.

The i-th query is mapped to , where , and represents the empty set.

That is, the i-th query can be described as ”Which subset is in”, where .

We now formally desciribe MIGC.

Definition 2.

is a -ary partition of , if and

Definition 3.

For a subset , its total probability

| (1) |

Definition 4.

The entropy of the partition is defined as

| (2) |

Definition 5.

A partition of is optimal, if for any partition ,

Similar to GBSC, the rule from top to bottom is still adopted for constructing the MIGC decision tree. The process can be described as follows. At the root, we choose the query that makes the partition of optimal as defined in Definition 5, and split the tree according to the partition. We repeat it recursively at each node, and choose the query corresponding to the optimal partition. In this way, it is easily to know that GBSC is the special case of MIGC for .

Example 2.

Suppose , distribution of is , and the query set is unconstrained.

Based on the rule of MIGC, the corresponding decision tree of Example 2 is shown in Fig.3. It gives clearly solution to the query problem in Example 2.

III-B Intuition

In this subsection, we illustrate why the MIGC can approach maximum entropy at each query. For a query on the node , each gives a -ary answer. The probability of getting answer is given by

| (3) |

The information gain of query with respect to node is

| (4) |

Lemma 1.

| (5) |

In [8], it proved is true when is discrete uniformly distributed. Now we will show that for any discrete distribution of , Lemma 1 always holds.

Proof.

| (6) |

Notice that

| (7) |

| (8) |

Then we have

| (9) |

∎

Lemma 1 indicates the information we gain from the query about is equal to the entropy of the answer. In fact, the uniform distribution of the answer maximizes the information gain, which means each answer should appear with the same probability.

Since different answers divide the into non-overlap partitions, can also be seen as the partition entropy as defined in section IIIA.

III-C Near-optimality

In this subsection, we discuss the optimality of MIGC. For simplicity, we only consider unconstrained condition. It is well known Huffman coding gives the optimal solution to the unconstrained query problem. However, both GBSC and MIGC may not reach the optimal solution for the unconstrained problem. That means there exists a gap between Huffman coding approach and GBSC or MIGC in terms of bits persymbol. In fact, GBSC for unconstrained query problem looks like the Fano’s source coding, but the main difference from Fano’s source coding is that Fano requires the order ranking of the answer probability from the least to the largest. GBSC does not require such preprocessing. In [8], it proves that GBSC is at least as good as Shannon coding[10] and better than Fano’s approach in terms of the upper bound of the average coding length. It is remarkable that the coding length for each symbol in GBSC is no more than the corresponding coding length in Shannon coding. In the sequel, we will prove it for MIGC.

Theorem 1.

Assume that the expected coding length is for MIGC, and for Shannon coding, then

Corollary 1.

Assume that the expected code length is for MIGC, then

Since , using Theorem 1, one can easily get the upper bound of in Corollary 1.

Now we shall prove Theorem 1, and we first prove the Lemma 2.

Lemma 2.

Assume the code length for symbol with probability is for MIGC, then

Note that this is a much stronger result in source coding theory. For the binary case, it has been proven in [8] by contradiction. However, such method cannot be directly generalized to D-ary cases. This is because each node in a binary tree only has two children, which can be easy to discuss separately. But for D-ary scenario, it is necessary to sort the children nodes at each step. Now we give the detail of the proof as follows.

Proof.

On the children nodes sorting process, it is illustrated in Fig.4, where a circle represents a single node while a rectangle represents a leaf or a subtree. The content in the nodes are indices of the nodes. is the sum of probabilities of all the children nodes of node .

Without lose of generality, we assume for any and , .

Similar to [8], we will prove it by contradiction. Without lose of generality, assume where is the maximum among its sister nodes. For , represents the nodes on the path from to the root.

Then we want to prove the following two propositions hold for any integer by mathematical induction.

(i)

(ii)

Remark 1.

if (i) and (ii) holds, then , causing a contradiction.

Basic step.

First, we prove the case when . Since

| (10) |

then

| (11) |

Proposition (i) is true.

If , then moving node from to will make smaller. According to the convex property of entropy, this will increase the partition entropy of node , which contradicts to the optimal partition defined in Definition 5. Hence,

| (12) |

so we have

| (13) |

And

| (14) |

Proposition (ii) is true.

Inductive step.

Assume the propositions are true for . Then

| (15) |

Proposition (i) is true.

If , then moving node from to will make smaller. According to the convex property of entropy, this will increase the partition entropy of node , which contradicts to the optimal partition defined in Definition 5. Hence,

| (16) |

so we have

| (17) |

And

| (18) |

Proposition (ii) is true. This demonstrates when , the two propositions also hold.

By using Remark 1 and the discussion above, Lemma 2 is proved.

∎

IV Some Practical Scenario Discussion

In this section, we use three practical scenarios to compare the performance of MIGC and other coding algorithms.

IV-A Average Coding Length of General Discrete Distributed X for D=3 Without Constraints

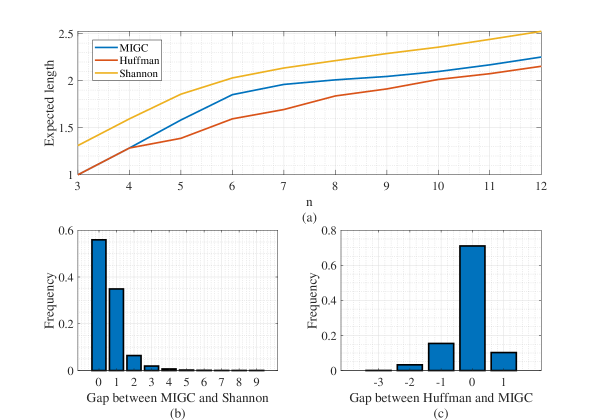

Set and , and we generate samples of discrete distribution randomly for each where each sample represents a discrete probability distribution. Fig.5(a) shows the average coding length of three different algorithms changes with . It is observed that when , the average coding length of MIGC is between the Huffman coding and Shannon coding. Note that the Huffman coding is the optimal.

By setting , Fig.5(b) shows the gap between the coding length of MIGC and that of Shannon coding for each symbol, it is easily seen that all the results are non-negative, which validates Lemma 2. Fig.5(c) shows the gap between the coding length of Huffman coding and that of MIGC for each symbol. Interestingly, although Huffman coding has less average coding length than MIGC, MIGC has more symbols that have less coding length than that of Huffman coding. And for a few symbols, their coding length of MIGC can be shorter than that of Huffman coding by 3.

IV-B Application to DNA Detection

The identification of genes is important in molecular biology, and is usually used to determine genomic sequences of different organisms[20]. An exon, which is an interval of the DNA and does not overlap with each other. A gene is a set of exons[21]. For simplicity, assume the target gene only contains one exon. Here, different from [8], we set two target genes A and B for the detection work. Each time, one can detect a continuous interval of the DNA, and acquire four kinds of results, “detect A”, “detect B”, “detect A and B”, “detect neither”. Due to the position of the target genes being fixed, the DNA detection is to find the optimal detection querying with minimum expected detection times. The procedure is shown in Fig.6.

DNA detection can be viewed as decision constrained coding problem. Set the number of exons and generate samples of discrete distributions for testing. Fig.7 shows the gap between the MIGC and the optimal solution by using brute force. In Fig.7(a), the red line represents the minimum average detection times, while the blue dots represent the results provided by MIGC. One can see that most blue dots are very close to the red line, which illustrates the effectiveness of MIGC. Fig.7(b) shows the distribution of the gap between MIGC and brute force. It demonstrates that the gap is less than 0.16 detection times with 95 probability while the optimal average detection times is 2.16. Fig.8 compares the performance of MIGC and GBSC when changes, illustrating that MIGC has less average detection times than GBSC.

IV-C Application to Battleship Games with 2 players

Battleship is a popular strategy game[22]. In conventional battleship game, it requires two players to place ”ships” on 10 by 10 boards, which are hidden to the opponent. The rules are expressed as follows, (i) the two players will play in turn to choose a grid (ii) if all the grids of one ship have been chosen by its owner’s opponent, then the ship is ”bombed” (iii) when one player’s ships are all ”bombed”, the game ends and another player will win the game.

Now we modify the battleship games as follows.

Assume two players only place their ships on the same 10 by 10 board randomly. They do not participate in the following procedure of the game. A third player tries to bomb the ships placed by the former two players. Each time, the third player can get the result from “hit player1’s ship”, “hit player2’s ship”, “hit nothing”. The target for the third player is to sink all the ships with fewest tries (bombs).

(i) If we do not distinguish the owner of the ships as in [8], the problem reduces to the binary case, i.e., the bombing result for the third player is either ”hit” or not. This can be well handled by GBSC.

(ii) If we take the owner of the ships into account, it corresponds to the multi-answer constrained cases ( each time, the third player can get the result from “hit player1’s ship”, “hit player2’s ship”, “hit nothing”). In this case, GBSC does not work and we ultilize MIGC instead. The query set is the coordinates of the board. For each try of the third player, one needs to maximize the entropy of the hitting result.

Assume each of the former two players place a 1 by 5 and a 1 by 3 ships on the board, i.e., there are total 4 ships. We generate possible board layouts randomly, and the game starts by choosing one of them as target board (every legal board is equally possible). Since each query (bombing) acquires in average at most bits of information, the theoritical minimal number of average tries is no less than 12.

Fig.9 shows the distribution of the average try times of the third player. Fig. 10 illustrates an example showcase of the game where color of each grid represents the probability of whether there exist ships of player 1. As the game progresses, the probability of the ships being bombed will become gradually larger. From Fig.10(d), after trying bombs of times, one ship of size 1 by 5 can be bombed definitely next time and the location of the other ship of size 1 by 3 has been narrowed to four possibilities.

Fig. 11 shows how MIGC plays the game from the perspective of information theory. Since every target board has the same probability of being chosen, we can calculate the entropy of the board by , where represents the set of boards meeting the hitting results after tries, and represents counting the number of elements in the set. We choose 10 boards randomly and show how the entropy or uncertainty of each board changes with the number of tries. The red dashed line represents the theoretical best result in average, which means each query (bombing) acquires bits of information. We can observe two types of the declination of entropy silimlar to the results in [8]. Most of times, the first few tries will not hit ships and the algorithm needs to explore the board, e.g., for board 6. At that stage, the declination of entropy is relatively slow. When a ”hit” happens, the entropy drops rapidly until the information provided by this ”hit” is fully ultilized. The process ends when the entropy reduces to the zero.

The modified battleship problem for the third player illustrates that MIGC can give an effective solution to the problem than that of GBSC.

V Conclusion

In this paper, we discussed an open problem in information theory, the multi-answer constrained optimal query problem and proposed an Maximum Information Gain Coding, MIGC, solution to it. We also discussed its optimality of MIGC in theory. It is proved that MIGC has better performance than that of Shannon coding in terms of the average coding length. Furthermore, an interesting result is obtained that the coding length persymbol in MIGC is less than that by Shannon coding for . Finally, we discuss three different scenarios and show that MIGC provides better results than GBSC and Shannon coding. It is expected the MIGC method can be combined with or embedded in some machine learning frameworks, i.e., federated learning mode in [23, 24] and got more applications in the future.

Acknowledgment

The authors would like to appreciate the support of the National Key R & D Program of China No. 2021YFA1000504. The authors thank the members of Wistlab of Tsinghua University for their good suggestions and discussions.

References

- [1] A. Karbasi and M. Zadimoghaddam, “Constrained binary identification problem,” in Proceedings of the 30th Symposium on Theoretical Aspects of Computer Science, 2013.

- [2] Y. Fu, L. Qin, and Q. Yin, “A reinforcement learning behavior tree framework for game ai,” in 2016 International Conference on Economics, Social Science, Arts, Education and Management Engineering. Atlantis Press, 2016, pp. 573–579.

- [3] Q. Zhang, L. Sun, P. Jiao, and Q. Yin, “Combining behavior trees with maxq learning to facilitate cgfs behavior modeling,” in 2017 4th International Conference on Systems and Informatics (ICSAI). IEEE, 2017, pp. 525–531.

- [4] S. S. O. Venkata, R. Parasuraman, and R. Pidaparti, “Kt-bt: A framework for knowledge transfer through behavior trees in multirobot systems,” IEEE Transactions on Robotics, 2023.

- [5] Y. Shi, P. Fan, Z. Zhu, C. Peng, F. Wang, and K. B. Letaief, “Sam: An efficient approach with selective aggregation of models in federated learning,” IEEE Internet of Things Journal, 2024.

- [6] S. Wan, J. Lu, P. Fan, Y. Shao, C. Peng, and K. B. Letaief, “Convergence analysis and system design for federated learning over wireless networks,” IEEE Journal on Selected Areas in Communications, vol. 39, no. 12, pp. 3622–3639, 2021.

- [7] Z. Zhu, S. Wan, P. Fan, and K. B. Letaief, “Federated multiagent actor–critic learning for age sensitive mobile-edge computing,” IEEE Internet of Things Journal, vol. 9, no. 2, pp. 1053–1067, 2021.

- [8] S. Zhang, J. Sun, and S. Chen, “Constrained optimal querying: Huffman coding and beyond,” arXiv preprint arXiv:2210.04013, 2022.

- [9] Mamta and Sharma, “Compression using huffman coding,” International Journal of Computer Science and Network Security Ijcsns, 2010.

- [10] T. M. Cover, Elements of information theory. John Wiley & Sons, 1999.

- [11] M. B. Baer, “Twenty (or so) questions: bounded-length huffman coding,” IEEE, 2006.

- [12] L. L. Larmore and D. S. Hirschberg, “A fast algorithm for optimal length-limited huffman codes,” Journal of the ACM (JACM), vol. 37, no. 3, pp. 464–473, 1990.

- [13] M. B. Baer, “D-ary bounded-length huffman coding,” in 2007 IEEE International Symposium on Information Theory. IEEE, 2007, pp. 896–900.

- [14] S. J. Lee and C. W. Lee, “Determination of huffman parameters for memory-constrained entropy coding of multiple sources,” IEEE Signal Processing Letters, vol. 4, no. 3, pp. 65–67, 1997.

- [15] H. Laurent and R. L. Rivest, “Constructing optimal binary decision trees is np-complete,” Information processing letters, vol. 5, no. 1, pp. 15–17, 1976.

- [16] V. T. Chakaravarthy, V. Pandit, S. Roy, and Y. Sabharwal, “Approximating decision trees with multiway branches,” in International Colloquium on Automata, Languages, and Programming. Springer, 2009, pp. 210–221.

- [17] S. R. Kosaraju, T. M. Przytycka, and R. Borgstrom, “On an optimal split tree problem,” in Workshop on Algorithms and Data Structures. Springer, 1999, pp. 157–168.

- [18] S. Dasgupta, “Analysis of a greedy active learning strategy,” Advances in neural information processing systems, vol. 17, 2004.

- [19] R. D. Nowak, “The geometry of generalized binary search,” IEEE Transactions on Information Theory, vol. 57, no. 12, pp. 7893–7906, 2011.

- [20] T. Biedl, B. Brejová, E. D. Demaine, A. M. Hamel, A. López-Ortiz, and T. Vinař, “Finding hidden independent sets in interval graphs,” Theoretical Computer Science, vol. 310, no. 1-3, pp. 287–307, 2004.

- [21] A. C. Hoffmann, D. Vallböhmer, K. Prenzel, R. Metzger, M. Heitmann, S. Neiss, F. Ling, A. H. Hölscher, and S. J. Brabender, “Methylated dapk and apc promoter dna detection in peripheral blood is significantly associated with apparent residual tumor and outcome,” Journal of Cancer ResearchClinical Oncology, 2009.

- [22] A. Fiat and A. Shamir, “How to find a battleship,” Networks, vol. 19, no. 3, pp. 361–371, 1989.

- [23] Z. Zhu, Y. Shi, J. Luo, F. Wang, C. Peng, P. Fan, and K. B. Letaief, “Fedlp: Layer-wise pruning mechanism for communication-computation efficient federated learning,” in ICC 2023-IEEE International Conference on Communications. IEEE, 2023, pp. 1250–1255.

- [24] Q. Wu, Y. Zhao, Q. Fan, P. Fan, J. Wang, and C. Zhang, “Mobility-aware cooperative caching in vehicular edge computing based on asynchronous federated and deep reinforcement learning,” IEEE Journal of Selected Topics in Signal Processing, vol. 17, no. 1, pp. 66–81, 2022.