Multi-Agent Path Planning based on MPC and DDPG

Abstract

The problem of mixed static and dynamic obstacle avoidance is essential for path planning in highly dynamic environment. However, the paths formed by grid edges can be longer than the true shortest paths in the terrain since their headings are artificially constrained. Existing methods can hardly deal with dynamic obstacles. To address this problem, we propose a new algorithm combining Model Predictive Control (MPC) with Deep Deterministic Policy Gradient (DDPG). Firstly, we apply the MPC algorithm to predict the trajectory of dynamic obstacles. Secondly, the DDPG with continuous action space is designed to provide learning and autonomous decision-making capability for robots. Finally, we introduce the idea of the Artificial Potential Field to set the reward function to improve convergence speed and accuracy. We employ Unity 3D to perform simulation experiments in highly uncertain environment such as aircraft carrier decks and squares. The results show that our method has made great improvement on accuracy by 7%-30% compared with the other methods, and on the length of the path and turning angle by reducing 100 units and 400-450 degrees compared with DQN (Deep Q Network), respectively.

Index Terms:

path planning, obstacle avoidance, MPC, Artificial Potential Field, Unity 3DI Introduction

In many dangerous and complex environments, path planning and obstacle avoidance still rely on manual decision-making. This method consumes a lot of human and material resources, and has the characteristics of low efficiency in path planning. Besides, in such environments like path planning of carrier aircraft on carrier deck and path planning for post-disaster rescue, once humans make a mistake, it is likely to bring immeasurable losses and injuries. Hence, this requires agents to have the abilities of autonomous path planning and obstacle avoidance in complex environments.

The core idea of path planning is to explore an optimal or sub-optimal barrier-free path according to certain evaluation criteria in obstacle environments [1, 2]. Early path planning is mostly graph-based path planning [3, 4, 5, 6]: such as V-graph, C-space [3], etc. However, graph-based methods cannot deal with the problem of dynamic obstacles. The Artificial Potential Field [7], Rapid-exploration Random Tree [8] and D* [9] can be used in dynamic environments. However, the Artificial Potential Field has the problem of local minimum. The Rapid-exploration Random Tree can’t deal with the continuous highly dynamic environments and the planned path is usually sub-optimal and not smooth. The D* algorithm is effective in finding a path in a dynamic environment. However, when moving to the target, it only checks the changes of the next node or neighboring nodes on the shortest path. So D* can’t deal with the changes of these nodes far away from the agent.

Reinforcement Learning (RL) comes out as a new research tendency that can grant the agent sufficient intelligence to make local decisions and accomplish necessary tasks. In [10] and [11], the authors presented a Q-learning algorithm [12] to solve the autonomous navigation problem of UAVs, afterwards, the Q-learning was also employed to establish paths while avoiding static or dynamic obstacles in [13] and [14]. Q-learning had good performance in these environments. However, in complex and highly dynamic environments, Q-learning hardly converges due to the curse of the dimensionality.

In recent years, artificial intelligence technology has been rapidly developed and applied. DRL [15] was obtained by combining the advantages of Deep Learning (DL) [16] and Reinforcement Learning (RL) [17], which provides a solution to the perceptual decision-making problem of complex systems. Liu et al. [18] utilized the Deep Q Network (DQN) [19] algorithm combined with greedy strategy to support agent trajectory planning and optimization in a large-scale complex environment. By using the value-based Dueling Double DQN (DDQN) [20], Zeng [21] introduced a high-precision navigation technique for UAV, which has improved the efficiency of target tracking. However, these authors used discrete actions (i.e. the environment is modeled as a grid world with limited agent action space, degree of freedom), which may reduce the efficiency while dealing with real-world environment, where the agent operated according to a continuous action space.

To address these problems, in this paper, a new algorithm combining MPC [22] (Model Predictive Control) with DDPG [23] based on policy gradient [24] is proposed. The main contributions of this research are as follows:

-

•

A DDPG-based obstacle avoidance method is proposed, which enables agent to keep trial and error during the training process to learn a strategy to reach the destination without collision. In order to improve the efficiency of learning, the idea of the Artificial Potential Field (the obstacles and the target impose repulsion and attraction on agent respectively) is used to design the reward function.

-

•

Considering the motion constraints of the agent in reality, the agent is modeled according to the Kinematic model. Then the MPC algorithm was used to predict the trajectory u(t) of the agent.

-

•

The experimental results showed that our algorithm has made great improvement on accuracy by 7%-30% compared with the other algorithms, and on the length of the path and turning angle by reducing 100 units and 400-450 degrees compared with DQN (Deep Q Network), respectively.

The structure of this paper is as follows: Section 2, the path planning algorithm based on DDPG and MPC is introduced. Including Knowledge of background, Algorithm update, Reward function, State space and Action space. The simulation is presented in Section 3. We use unity3D to simulate the aircraft carrier deck and square. Section 4 gives the Experimental results that compared the model with DDPG, DQN, and A2C on the accuracy, length of path, reward and smoothness of the path. The summary is given in Section 5.

II Path planning algorithm based on DDPG and MPC

In this section, we present a new algorithm based on DDPG and MPC. Firstly, we introduce the knowledge of background including MPC, DRL and DDPG. Then we analyze algorithmic principle according to the algorithmic framework that is divided into three parts: trajectory prediction, action selection and update. Finally, the state space, action space and reward function under this framework are defined and explained.

II-A Knowledge of background

II-A1 MPC

Model predictive control (Model Predictive Control, MPC) is a type of control algorithm developed in the 1970s. The algorithm adopts control strategies such as multi-step prediction, rolling optimization and feedback correction. Its purpose is to minimize the cost function to make the prediction more accurate. More precisely, in discrete time, the method of the model predictive control can be formulated as follows:

Where is control matrix, is control error matrix caused by noise or disturbance, is the initial state, are the information of prediction calculated by the predictive model.

To make each prediction module and the predicted result closer to the true value, we need to perform rolling optimization on the model. The cost/objective function is defined as . The optimal control input is generated by minimizing the cost function, that is:

The cost/objective function involve the future state trajectory , output trajectory and control effort .

II-A2 Deep Reinforcement Learning

DRL[15] combines the perceptual ability of DL [16] and the decision-making ability of RL[17], which can solve the high-dimensional decision-making problems. It is currently an extremely popular research direction in the field of machine learning. As shown in Fig.1, the framework of the DRL, the agent interacts with the environment to obtain a high-dimensional observation information, and the deep learning evaluates the value function of each action based on the expected return and completes the state-to-action mapping through a certain strategy. The environment reacts to this action and gets the next observation information.

II-A3 Deep Deterministic Policy Gradient

DDPG is an extension of DQN based on the framework of Actor-Critic , which uses the experience replay sample collection method. It solves this problem that DQN can’t deal with continuous actions and Actor-Critic algorithm is difficult to converge. It adopts the four-network structure combining strategy gradient and value function. The strategy gradient is used to select a certain action from the continuous action space, and the value function is used to evaluate the selected action.

DDPG adopts the delayed update method, however, unlike the hard update of DQN, it uses the method of soft update to update the target network. More precisely, the online policy Critic networks are updated by minimizing the mean square error. The online policy Critic networks are updated by maximizing the Q value. At last, the current networks parameter are updated by soft update strategies.

II-B Algorithmic Framework

II-B1 Trajectory prediction

Trajectory prediction refers to predict the short-term future coordinates according to history coordinates. In our algorithm, the MPC (Model Predictive Control) is used to predict the future coordinates, which has the advantages of good control effect and strong robustness.

As shown in Fig.1 the framework of our algorithm, the prediction model of MPC takes the current state s and the historical trajectory of the dynamic obstacle as input and the output are calculated by prediction model of MPC. In every time, the output by prediction model and historical trajectory are used to optimal the algorithm. The final predicted trajectory vector and the current state of the environment vector are concatenated as the final output.

II-B2 Action selection

Action selection is the decision-making essence of reinforcement learning. As shown in Fig.1 The framework of our algorithm, the DDPG takes the predicted environmental state as input, and the output—action is calculated by online policy network of Actor. After the agent performs the action, the reward is given by our reward function. Afterwards, the current predicted environment, the reward, the action and the next predicted environment state are stored in the experience replay memory.

II-B3 Update

DDPG is an extension of DQN [18] based on the AC [22] framework. It retains the experience replay memory of DQN and dual network structure. However, unlike the hard update of DQN, it uses the soft update method to update the target network.

When updating the Actor and Critic networks, a mini-batch of N transitions are sampled from the experience replay memory. Then the loss function of Critic networks is calculated by the current target Q value . Meanwhile, the Actor network is updated by the method of policy gradient.

At last, the two target network parameters and are updated by soft update strategies:

Where is a configurable constant coefficient, used to the regulate the soft update factor.

II-C State space

The state space of agent represents valuable information agent can attain before decision-making, which is used to help agent assesses the situation. For the agent to better understand the changing environment, we divided the state into the current environmental state and the predicted environmental state. The status value can be illustrated as .

II-C1 The current environmental state

Where represents the distance between the coordinates of the agent point and the target, and the represent the distance between the coordinates of the agent and the static obstacles.

II-C2 The predicted environmental state

Where represent the distance between the predicted coordinates of the dynamic obstacles and the coordinates of the agent.

II-D Agent action space

The action space represents the set of actions that the agent can perform. We set the action space as:

The distances that the agent moves in the x and y directions are expressed as: , respectively.

II-E Reward function

The reward in DRL, which is the feedback signal available for the agent’s learning, is used to evaluate the action taken by the agent. A simple approach is to set sparse reward, in which the agent can get a positive return only if the task is accomplished. However, this method is inefficient to collect useful experience data so as to help agent learning. Accordingly, the convergent speed of network updating is slow and the agent may not learn optimal strategies. In this paper, we introduce the idea of the Artificial Potential Field (the obstacles and the target impose repulsion and attraction on agent respectively) into the design of reward function. Four types of excitation are considered in the reward. (1) The reward of target attraction. (2) The reward of obstacle repulsion. (3) Collision reward. (4) Reward for reaching the target point. More precisely, these rewards are described as follows:

II-E1 The reward of target attraction

The magnitude of attraction is proportional to the distance between the agent and the target. As shown in Eq. (10) and (11), we simplified the attraction of the Artificial Potential Field.

The reward of target attraction is usually proportional to the difference between (the distance between the agent and the target at time t) and (he distance between the agent and the target at time t+1). However, when the difference is too large or too small, or replace the difference as the reward. Among them, the formulas (10) and (11) represent agent is close or far away from the target respectively.

II-E2 The reward of obstacle repulsion

Similar to the reward of target attraction , we also simplified the repulsion value of the Artificial Potential Field. The formulas as shown in Eq. (12) and (13).

Where and represent the distance between the agent and the obstacle at time and respectively. or represent the upper and lower limits of the reward value respectively when agent is close or far away from obstacles.

II-E3 Collision reward

As shown in Eq. (14), the reward is given by the environment when the agent collides with the obstacles.

II-E4 Reward for reaching the target point

As shown in Eq. (15), the reward is given by the environment when the agent reach to the target .

At last, the total reward value can be defined in Eq. (16).

Where represents the weight of the four kinds of reward values respectively.

III Simulation

The path planning of the agent and dynamic obstacle avoidance are important problems in computer simulation research and they are widely used in crowd animation, computer game and deduction of scheme. Among them, the pedestrian square has the characteristics of large area, high density poor regularity in crowd movement and high mobility. While, the deck space of the aircraft carrier is narrow and tasks are numerous and the uninterrupted operation of carrier-based aircraft increases the uncertainty of space usage. It is a challenge to find a collision-free path from the starting point to the target in such highly uncertain environments. Therefore, in this section, we chose the aircraft carrier deck and square as the objects of simulation to verify the efficiency of our algorithm, and the Unity3D is used to model this simulation. Meantime, we sampled randomly an episode date to described the trajectories of the carrier-based aircraft and dynamic obstacles in every scenes.

III-A Aircraft carrier deck simulation

As shown in Fig.2 and Fig.3, in the aircraft carrier deck, the blue carrier-based aircraft needs to avoid dynamic and static obstacles (the green and yellow carrier-based aircrafts represented dynamic and static obstacles, respectively) in real-time to find a collision-free path from the start point to the target (the position of the white board). In scene 1, we set 5 dynamic obstacles and 15 static obstacles. As shown in Fig.3, in order to verify the effective of our algorithm in the highly dynamic environment, 4 dynamic obstacles were added randomly to the scene 1.

To simulate the movement of dynamic obstacles the real scene. Three assumptions were made as follows:

Assumption 1: The movement of the dynamic obstacle have the purpose;

Assumption 2: Special locations are set as the target of dynamic obstacles in the scene (for example gas station, command room, etc.);

Assumption 3: The initial speeds are set to dynamic obstacles. Then when it moves to a special position, it will stay there for several time to perform operations, the time of operation is given randomly. After that, a new speed of dynamic obstacles is given randomly.

For two scenes, we described the trajectories of the carrier-based aircraft and dynamic obstacles in an episode. As shown in in Fig.4 and Fig.5, the trajectories of the carrier-based aircraft and dynamic obstacles were represented by the blue and green dashed lines, respectively. The final location of carrier-based aircraft and dynamic obstacles were marked with the red rectangle and numbers to identify them. In scene 1, when the carrier-based aircraft predicted that it would collide with the obstacle 3, an action——upward was taken to avoid the obstacle successfully in real-time. After that, the carrier-based aircraft predicted that the collision would be occurred with the obstacle 4 and then the direction was adjusted to avoid. In scene 2, when obstacles 2 and 5 appeared around the carrier-based aircraft, the carrier-based aircraft would move upward to avoid two dynamic obstacles, and ensured that the carrier-based aircraft moves closer to the target.

III-B Crowd square simulation

As shown in Fig.6, in the pedestrian square, the people on the blue square was moving toward the target to withdraw money in the position of green square board. In this scene, we set 28 static obstacles and 13 dynamic obstacles. It was worth noting that 9 dynamic obstacles and a large number of static obstacles were set around the agent to improve the difficulty of obstacle avoidance.

The trajectories of pedestrians and agent were depicted on the square in an episode by the blue and yellow dashed lines, respectively in Fig. 5. It is obvious that the agent moved following trajectory 1 to avoid the static crowd and pedestrian 1. Then the agent predicted that if continue to move in the original direction, it would collide with pedestrian 2 and deviate from the target, so the agent adjusted its direction and moved along with trajectory 2 to avoid collision and move towards the target. When the agent arrived near the target, pedestrian 5 finished the task of money withdrawal and moved outward. After the agent predicted that it would collide with pedestrian 5, he adjusted constantly the direction of movement to avoid obstacles and reached the target without collision in finally.

IV Results

For avoiding repetition and verifying the efficiency of the model, in this section we just adopted the method of comparative experiment on the aircraft carrier deck scene, and systematically compared the model with DDPG, DQN, and A2C on the accuracy rate, length of path, reward and smoothness of the path. In particular, the smoothness of the path was expressed by the total turning angle of the aircraft reaching the target point without collision.

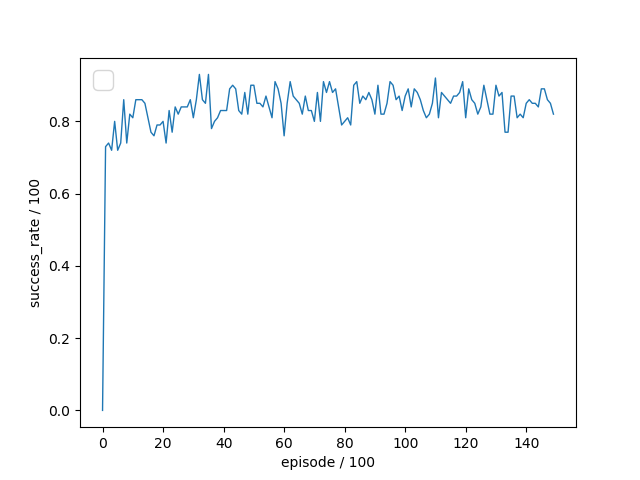

First of all, we compared the accuracy rate for the different algorithms in every scene. In scene 1, as shown in Fig.8, the accuracy rate of our algorithm was easiest to reach 100% in four algorithms. Due to the difficulty of convergence of the A2C, the accuracy rate of A2C was the most unstable. Secondly, although the accuracy rate of DDPG could reach 100%, it still fluctuated greatly. Although DQN had a good performance in accuracy rate, it still has disadvantages, which would be introduced in the later. To compare the results more clearly, we calculated the average accuracy rate and showed it in the table. As shown in Tables 1, 100 sets of accuracy rate were sampled randomly to calculate the average, our algorithm made great improvement on accuracy rate by 8%, 6%, and 31% compared with DQN, A2C and DDPG, respectively. In scene 2, with the complexity of the environment increases, the accuracy rate of the four algorithms had decreased, but our algorithm could still reach 90%. Our algorithm still had the highest accuracy rate in four algorithms. In summary, in terms of the accuracy rate of the algorithm, although both DDPG and A2C can solve the problems in the continuous action space, DDPG has a better learning effect. Our algorithm combines the prediction module on the basis of DDPG, so that the algorithm can achieve a higher accuracy rate in complex environments.

| Scene 1 | Scene 2 | |

|---|---|---|

| DQN | 90% | 84% |

| A2C | 67% | 64% |

| DDPG | 92% | 82% |

| MDDPG | 98% | 91% |

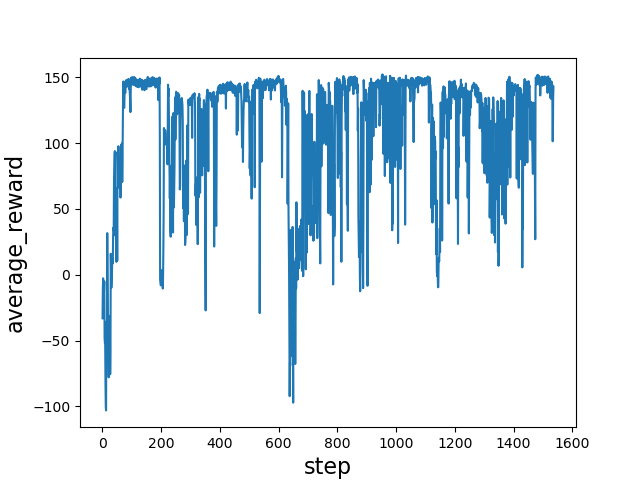

To verify the learning effect of the algorithm, we used our algorithm to compare the average reward with other algorithm. The average reward of the four algorithms in two scenes were presented in Fig.10 and Fig.11. The average reward indicated the efficiency of the learning. As shown in Fig. 9, we compared the average reward of the four algorithms in scene 1. Due to the difficulty of convergence of the A2C algorithm, the maximum average reward would only stabilize at about 100. However, DQN, DDPG, and our algorithm would reach 150. It was obvious that the average reward of DDPG was unstable. That showed that it was difficult for DDPG to deal with dynamic obstacles without prediction. Although the curve of the average reward is similar between DQN and our algorithm, our algorithm had higher stability and was more in line with the kinetic model in the real scene. It could be seen from the average reward that our algorithm had the best learning effect in four algorithms.

| Length of path | Turning angle | |

|---|---|---|

| DQN | 438.22 | 586.73 |

| A2C | 352.60 | 142.03 |

| DDPG | 328.97 | 139.96 |

| MDDPG | 328.95 | 139.80 |

| Length of path | Turning angle | |

|---|---|---|

| DQN | 465.45 | 522.62 |

| A2C | 324.16 | 158.00 |

| DDPG | 320.61 | 134.28 |

| MDDPG | 315.68 | 128.41 |

To take into account as much as possible the planning effect of the algorithm in the real scene, we compared the average length of the path and average turning angle of the algorithm. In a real scene, finding a smooth and optimal or sub-optimal path can not only reduce the consumption of resource, but also complete the task in the shortest time, increasing the real-time nature of the task. As shown in Table 2 and Table 3, which indicated the average length of the path and average turning angle of the four algorithms respectively in two different scenes. Although DQN had a good performance in accuracy rate, the average length of the path and average turning angle were longest in four algorithms, which would consume more time and resources in the real environment. Meanwhile, we compared two different scenes. Though the accuracy rate of the four algorithms had reduced after increasing the complexity of the environment. The average length of the path and average turning angle had small fluctuations.

In summary, our algorithm could effectively solve the difficult convergence of A2C and the difficulty of DDPG to deal with dynamic obstacles. Compared with the other three algorithms, our algorithm has obvious improvements in terms of average path length, path smoothness, accuracy and average reward.

V Conclusion

In this paper, a new algorithm of path planning based on DDPG and MPC is designed which combines the perception and decision-making capabilities of deep reinforcement learning and the predictive capabilities of the MPC, and can apply to the highly uncertain scene. The trajectory prediction of dynamic obstacles is achieved by the MPC, which greatly reduces the uncertainty of the environment, and effectively solves the problems faced by traditional algorithms in dynamic environments, such as slow convergence speed and poor generalization. The DDPG is suitable for solving the problems of continuous state space. which is more in line with real scenes. In order to verify the efficiency of the algorithm, we chose Unity3D to simulate the complex aircraft carrier deck and square, and conducted a detailed analysis of the agent’s trajectory. The final results show that our algorithm has made great improvement on accuracy by 7%-30% compared with the other algorithms, and on the length of the path and turning angle by reducing 100 units and 400-450 degrees compared with DQN (Deep Q Network), respectively.

References

- [1] D. Wang, “Indoor mobile-robot path planning based on an improved a* algorithm,” Journal of Tsinghua University Science and Technology, vol. 52, no. 8, pp. 1085–1089, 2012.

- [2] L. Li, T. Ye, M. Tan, and X.-j. Chen, “Present state and future development of mobile robot technology research,” Robot, vol. 24, no. 5, pp. 475–480, 2002.

- [3] T. Lozano-Pérez and M. A. Wesley, “An algorithm for planning collision-free paths among polyhedral obstacles,” Communications of the ACM, vol. 22, no. 10, pp. 560–570, 1979.

- [4] J. Barraquand and J.-C. Latombe, “Robot motion planning: A distributed representation approach,” The International Journal of Robotics Research, vol. 10, no. 6, pp. 628–649, 1991.

- [5] G. Hoffmann, S. Waslander, and C. Tomlin, “Quadrotor helicopter trajectory tracking control,” in AIAA guidance, navigation and control conference and exhibit, 2008, p. 7410.

- [6] R. Bohlin and L. E. Kavraki, “Path planning using lazy prm,” in Proceedings 2000 ICRA. Millennium Conference. IEEE International Conference on Robotics and Automation. Symposia Proceedings (Cat. No. 00CH37065), vol. 1. IEEE, 2000, pp. 521–528.

- [7] Y. K. Hwang, N. Ahuja et al., “A potential field approach to path planning.” IEEE Transactions on Robotics and Automation, vol. 8, no. 1, pp. 23–32, 1992.

- [8] J. Bruce and M. M. Veloso, “Real-time randomized path planning for robot navigation,” in Robot Soccer World Cup. Springer, 2002, pp. 288–295.

- [9] A. Stentz, “Optimal and efficient path planning for partially known environments,” in Intelligent unmanned ground vehicles. Springer, 1997, pp. 203–220.

- [10] C. Yan and X. Xiang, “A path planning algorithm for uav based on improved q-learning,” in 2018 2nd International Conference on Robotics and Automation Sciences (ICRAS). IEEE, 2018, pp. 1–5.

- [11] O. Bouhamed, H. Ghazzai, H. Besbes, and Y. Massoud, “Q-learning based routing scheduling for a multi-task autonomous agent,” in 2019 IEEE 62nd International Midwest Symposium on Circuits and Systems (MWSCAS). IEEE, 2019, pp. 634–637.

- [12] C. J. Watkins and P. Dayan, “Q-learning,” Machine learning, vol. 8, no. 3-4, pp. 279–292, 1992.

- [13] V. N. Sichkar, “Reinforcement learning algorithms in global path planning for mobile robot,” in 2019 International Conference on Industrial Engineering, Applications and Manufacturing (ICIEAM). IEEE, 2019, pp. 1–5.

- [14] M. A. K. Jaradat, M. Al-Rousan, and L. Quadan, “Reinforcement based mobile robot navigation in dynamic environment,” Robotics and Computer-Integrated Manufacturing, vol. 27, no. 1, pp. 135–149, 2011.

- [15] V. Mnih, K. Kavukcuoglu, D. Silver, A. A. Rusu, J. Veness, M. G. Bellemare, A. Graves, M. Riedmiller, A. K. Fidjeland, G. Ostrovski et al., “Human-level control through deep reinforcement learning,” nature, vol. 518, no. 7540, pp. 529–533, 2015.

- [16] Y. LeCun, Y. Bengio, and G. Hinton, “Deep learning,” nature, vol. 521, no. 7553, pp. 436–444, 2015.

- [17] R. S. Sutton, A. G. Barto et al., Introduction to reinforcement learning. MIT press Cambridge, 1998, vol. 135.

- [18] Q. Liu, L. Shi, L. Sun, J. Li, M. Ding, and F. Shu, “Path planning for uav-mounted mobile edge computing with deep reinforcement learning,” IEEE Transactions on Vehicular Technology, vol. 69, no. 5, pp. 5723–5728, 2020.

- [19] V. Mnih, K. Kavukcuoglu, D. Silver, A. Graves, I. Antonoglou, D. Wierstra, and M. Riedmiller, “Playing atari with deep reinforcement learning,” arXiv preprint arXiv:1312.5602, 2013.

- [20] M. Sewak, “Deep q network (dqn), double dqn, and dueling dqn,” in Deep Reinforcement Learning. Springer, 2019, pp. 95–108.

- [21] Y. Zeng, X. Xu, S. Jin, and R. Zhang, “Simultaneous navigation and radio mapping for cellular-connected uav with deep reinforcement learning,” arXiv preprint arXiv:2003.07574, 2020.

- [22] E. F. Camacho and C. B. Alba, Model predictive control. Springer science & business media, 2013.

- [23] T. P. Lillicrap, J. J. Hunt, A. Pritzel, N. Heess, T. Erez, Y. Tassa, D. Silver, and D. Wierstra, “Continuous control with deep reinforcement learning,” arXiv preprint arXiv:1509.02971, 2015.

- [24] J. Peters and S. Schaal, “Policy gradient methods for robotics,” in 2006 IEEE/RSJ International Conference on Intelligent Robots and Systems. IEEE, 2006, pp. 2219–2225.