MSR-DARTS: Minimum Stable Rank of Differentiable Architecture Search

Abstract

In neural architecture search (NAS), differentiable architecture search (DARTS) has recently attracted much attention due to its high efficiency. It defines an over-parameterized network with mixed edges, each of which represents all operator candidates, and jointly optimizes the weights of the network and its architecture in an alternating manner. However, this method finds a model with the weights converging faster than the others, and such a model with fastest convergence often leads to overfitting. Accordingly, the resulting model cannot always be well-generalized. To overcome this problem, we propose a method called minimum stable rank DARTS (MSR-DARTS), for finding a model with the best generalization error by replacing architecture optimization with the selection process using the minimum stable rank criterion. Specifically, a convolution operator is represented by a matrix, and MSR-DARTS selects the one with the smallest stable rank. We evaluated MSR-DARTS on CIFAR-10 and ImageNet datasets. It achieves an error rate of with M parameters within GPU-days on CIFAR-10, and a top-1 error rate of on ImageNet. The official code is available at https://github.com/mtaecchhi/msrdarts.git.

1 Introduction

Neural architecture search (NAS) seeks to design neural network structures automatically and has been successful in many tasks [1, 20, 27]. In NAS, all possible architectures are defined by a search space, which consists of network topologies and operator sets, and a search strategy is used to efficiently obtain a better architecture on the defined search space. A recent trend in the search space is a small component in a network called a cell, which is defined as an optimization target to reduce search cost. Reinforcement learning (RL) [41, 42, 27] and evolutionary algorithms (EAs) [22, 28, 35] are widely used for the search strategy.

Recently, DARTS [23] and DARTS-based methods [37, 7, 38, 19] have been proposed, which are differentiable methods that relax the search spaces to be continuous, enabling direct application of gradient-based optimization. These methods are effective regarding search cost since they skip the evaluation of each sampled architecture, which is required in RL and EAs. The cell defined in the above studies is a direct acyclic graph (DAG) with multiple nodes, each of which is a latent representation (e.g., a feature map in convolutional networks), and each directed edge is associated with an operator. While these studies explicitly introduce architecture parameters as learnable parameters in addition to the weight parameters of over-parameterized networks in the architecture search, each edge in a DAG is a mixed edge that includes all candidate operators in the operator set, and each operator is weighted by an architecture parameter. An architecture parameter indicates how suitable its operator is in a mixed edge. Architecture parameters are jointly trained with the weight parameters in an alternating manner. However, this optimization process tends to produce a fast-converging architecture, which is not always the optimal solution in terms of accuracy [31].

We propose a new method called minimum stable rank differentiable architecture search (MSR-DARTS) to solve this problem. With this method, the optimization of the learnable architecture parameters is replaced with the selection process using a stable rank criterion; thus, only weight parameters of neural networks are trained during the architecture search, which enables us to avoid selecting a fast-converging architecture but appropriately find one with a better generalization error. Our operation set includes only limited convolutional operators (e.g., separable convolution and dilated convolution with different kernel sizes), in which each convolutional operator is regarded as a matrix. We use the stable rank (numerical rank) of each convolution to derive a discrete architecture. Specifically, in each mixed edge, the operator that has the lowest stable rank is selected. This architecture search based on stable rank is reasonable since the low-rankness of a matrix indicates the high generalization ability of neural networks. Several studies [2, 32] reported that a neural network with lower stable rank operators has higher generalization ability, where a stable rank is often used instead of a rank because the former properly captures the statistical degrees of freedom by ignoring negligibly tiny singular values. More precisely, when an input is noisy, a low stable rank convolution conveys the input information only and attenuates noise. Thus, a network with low stable rank has better generalization ability and is robust against noisy input. In summary, we train an over-parameterized network with fixed uniform architecture parameters; thus, only the weights of the network are optimized. The discrete architecture is then derived using the stable rank of each operator by treating a convolution as a matrix with MSR-DARTS to yield a well generalized architecture.

We conducted experiments to evaluate MSR-DARTS that involved the CIFAR-10 and ImageNet datasets. MSR-DARTS achieved an error rate of with M parameters, which is a lower test error rate than with another DARTS-based method, e.g., PC-DARTS [38], and is competitive with Fair DARTS [8]. MSR-DARTS achieved a top-1 error rate of on ImageNet. To the best of our knowledge, it is a state-of-the-art DARTS-based method that uses CIFAR-10 dataset for architecture search. The search process takes only GPU-days. The code is available at https://github.com/mtaecchhi/msrdarts.git.

2 Related Work

2.1 Neural Architecture Search

There has been growing interest in NAS since Zoph and Quoc [41] proposed its algorithm. In the early years, EAs and RL were used to optimize network architectures. The algorithm using RL trains a recurrent neural network meta-controller to guide the search process and gradually sample a better architecture. Zoph et al. and Pham et al. [42, 27] first optimized the structure of a small component in an entire network, namely a cell, instead of the entire network structure, then constructed the entire network by stacking the optimized cells. This two-step process reduces search cost. Liu et al., Tang et al. and Real et al. [22, 35, 28] used EAs, which mutate the architecture topologies and evolve towards better performances. DARTS introduces a differentiable NAS pipeline, which relaxes the search space to be continuous and directly uses gradient-based optimization. Many studies [37, 7, 38, 19] followed this approach and achieved remarkable performance with improved efficiency.

2.2 DARTS

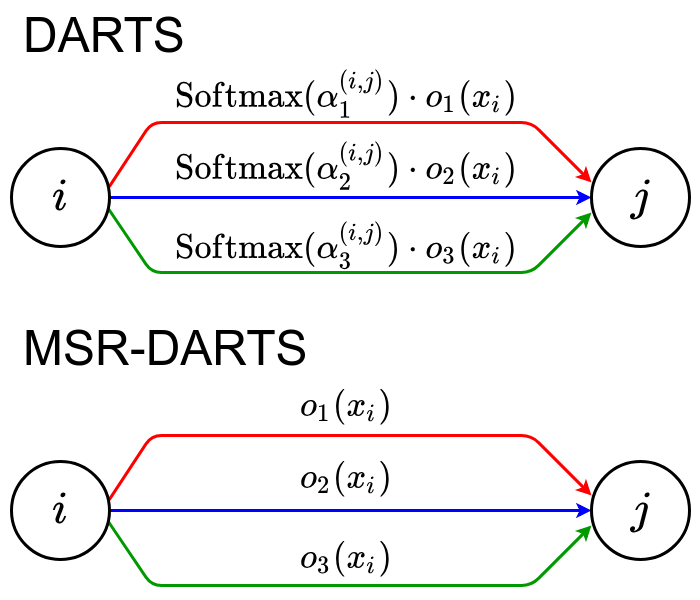

In this study, similar to previous ones [37, 7, 38], we used DARTS as a baseline framework. DARTS stacks cells, each of which is represented as a DAG of an ordered sequence of nodes, , where each node is a feature map and each edge denotes an information flow from node to node . A set of candidate operators is denoted as , in which an element that includes a learnable parameter is the -th candidate operator defined in advance (e.g., separable convolution and dilated convolution). Figure 1 left illustrates an example of a DAG with nodes. An information flow between nodes is a mixed edge, which includes all candidate operators. An architecture parameter , which indicates how suitable is for mixed edge , is introduced as a weight for . The goal is to optimize . For each , the information flow from node to node , illustrated in the upper middle of Figure 1, is computed as

| (1) |

where is the result of applying the input to . An output of node is a sum over all information flows from its predecessors, that is, . The first two nodes, and , are input nodes to a cell. The output of all the cells equals that of the final node , which is defined as the concatenation of all the intermediate cells, i.e., .

There are two types of cells introduced in DARTS. One is a normal cell, which maintains spatial resolution, and the other is a reduction cell, which reduces the spatial resolution of feature maps. Note that while DARTS shares the architecture topology among all normal cells, the same is true for reduction cells because it optimizes architecture parameters and , where is shared by all the normal cells and is shared by all the reduction cells.

DARTS has two stages. The first is the search stage, which trains the over-parameterized network consisting of mixed edges in each of which all possible operators are included, and derives a promising discrete architecture in accordance with the architecture parameter (see Subsec. 3.4 for more details). The second stage is an evaluation stage in which the derived architecture from full-scratch is trained.

|

|

|

2.3 Generalization Error Analysis

A deep network generalizes well even when it has a larger amount of parameters than the sample size. Generalization bounds represent the upper bound of the generalization error. We can guarantee that a model with small training error can have a high generalization performance if the generalization error (difference between training error and expected error) can be evaluated properly and has a small upper bound. There are several metrics to represent generalization error bounds such as VC-dimension [36, 14], PAC-Bayes theory [25, 11], norm based analysis [3, 26, 12], and the compression based approach [2, 4, 32]. The compression based approach has recently attracted much attention because it gives tighter generalization error bounds for deep neural networks. For example, Arora et al. [2] used the low rank property of weight matrices to compress neural networks based on the fact that a matrix with a lower stable rank is more robust to noise thus generalizes well. Suzuki et al. [32] experimentally confirmed that most networks have near low rank weight matrices after training, where a near low rank matrix is defined as a matrix in which a small number of singular values are significantly large while the other singular values are close to zero. This near low rank property is used to derive generalization bounds.

3 Method

3.1 MSR-DARTS

While DARTS and many related methods [37, 7, 38] search for architectures by jointly training the architecture parameters and weights of neural networks (e.g., the parameters of the convolutional operator) in an alternating manner ( is denoted as and is denoted as for simplicity), Shu et al. [31] reported that these methods tend to lead to a fast converged architecture during the search stage rather than well generalized models. This is because is updated on the basis of , which is not fully converged rather than well trained , which is harmful especially in the early epochs. This means that there is room to search for a model with lower error rate in the search space. MSR-DARTS addresses this issue. It fixes to , hence optimizes only . In the search stage, instead of Eq. (1), the information flow from node to node with MSR-DARTS illustrated in Figure 1 middle bottom, is defined as

| (2) |

The other process is the same as those defined in Subsec. 2.2.

With MSR-DARTS, we assume that each candidate operator consists of several convolutional layers. Let and be the -th and last convolutional layers in , respectively. Note that the notation of node is omitted for simplicity in and . Regarding convolutional computation as matrix calculation [30], the stable rank of convolution is then denoted as , where denotes the Frobenius norm and denotes the spectral norm. A stable rank is often used as a surrogate for a rank [2].

Then we select the best operator from for each edge after training of an over-parameterized network in the search stage. We use the relation between network generalization ability and singular values proposed by Arora et al. [2]. They reported that a well-generalized network consists of noise-robust convolutions that have low stable ranks. Noise sensitivity is defined as

| (3) |

where is a mapping from real-valued vectors to real-valued vectors (e.g., convolutional computation represented by a matrix), is a vector to be multiplied (i.e., input for a convolutional layer), is a noise distribution, is an expectation value, and is the Euclidean norm. Low noise sensitivity indicates that the convolution matrix has a near low rank property (i.e., a low stable rank) because the signal is correctly carried whereas noise is attenuated. Note that is at least its stable rank when noise is generated from standard normal distribution, i.e., . For more details, refer to the paper by Arora et al. [2].

We assume that an operator that generalizes better has lower noise sensitivity, i.e., a lower stable rank (experimentally shown in Appendix B). We further assume that the last convolution is the most relevant to the output of , thus use the stable rank of (i.e., only. The operator that has the lowest is selected to yield the discrete architecture.

3.2 Search Space

DARTS defines an operator set with eight operators. That is, 33 and 55 separable convolutions, 33 and 55 dilated separable convolutions, 33 max pooling, 33 average pooling, identity, and zero. Our operator set is the subset of the search space of DARTS, i.e., 33 and 55 separable convolutions and 33 and 55 dilated separable convolutions. As described in Subsec. 3.1, we assume that all candidate operators consist of limited convolutional layers, where 33 max pooling, 33 average pooling, identity, and zero, which are not convolutional calculations, are excluded. As with DARTS, we use the ReLU-Conv-BN order for convolutional operators, in which each separable convolution is always applied twice.

3.3 Setting Spectral Norm of Convolution in Search Stage

We assume that with MSR-DARTS, all operators in a search space are trained correctly in the search stage. However, previous studies [32, 13, 17] reported that deep learning tends to produce a simpler model than its full expression ability when we use regularization such as regularization. More precisely, it has been experimentally shown that a trained network tends to have near low rank weight matrices, in which only a few singular values are large and others are close to zero. Therefore, in each mixed edge of an over-parameterized network with MSR-DARTS, some operators can be redundant because their singular values can be all close to zero. To train each operator stably, we apply the spectral norm adjustment technique introduced by Behrmann et al. [5] to all convolutional operators in candidate operators, that is,

| (4) |

where is a constant value to be set (Figure 1 right). We estimate the spectral norm of by carrying out power-iteration (see Appendix A for more details), which yields an under-estimate , where denotes the -th largest singular value of . Similar to the above study, using this estimate, each convolution in candidate operator is normalized as

| (5) |

Note that spectral norm adjustment of each convolution is conducted before forward propagation. We used in our experiments.

3.4 Deriving Discrete Architecture

After training of the over-parameterized network, a discrete architecture is derived by selecting the topology and operator for each intermediate node. With DARTS and its derivations, the topology is selected by retaining two of the strongest precedent edges for each intermediate node, where the strength of an edge from node to node , denoted as , is defined as

| (6) |

In each edge, the operator with the largest is selected. However, as described in Subsec. 3.1, MSR-DARTS uses a fixed value for . We use the stable rank of the last convolution in each to determine the topology. First, for normal and reduction cells, the mixed edge between node and node is replaced with the best operator , defined by

| (7) |

We explicitly notate the edge indices in convolution , denotes the type of cells and denotes the average of all the cells belonging to cell type . Similar to Eq. (7), the strength of an edge from node to node of cell type with MSR-DARTS is defined by

| (8) |

We use Eq. (8) instead of Eq. (6) to derive topology. Note that the operator , which has lower is considered a better operator in terms of generalization ability (see Subsec. 3.1).

4 Experiments

4.1 Datasets

Similar to several previous studies [23, 7, 38], we conducted experiments on the well-known image classification datasets CIFAR-10 [18] and ImageNet [9]. CIFAR-10 consists of 50K training images and 10K testing images. All images have a fixed size of 3232 and equally distributed over ten classes. ImageNet was obtained from the Imagenet Large Scale Visual Recognition Challenge 2012 [29] and contains 1K object classes, 1.28M training images, and 50K validation images. We follow the general setting in which the input image size is .

4.2 CIFAR-10 Toy Experiment

First, we confirmed the effectiveness of the proposed method using MSR through a simple toy experiment using CIFAR-10. In this experiment, MSR-DARTS and DARTS [23] were compared, both under the same experimental conditions. The results indicate that MSR-DARTS is superior to DARTS.

In the search stage, following DARTS, the architecture was conducted on a network with cells. Each convolutional cell consists of nodes. The input nodes and were equal to the output of the last two preceding cells, respectively. The output node was , which is the concatenation of all the intermediate nodes. Reduction cells were inserted at 1/3 and 2/3 the total depth of the network to reduce the spatial resolution of feature maps. All other cells were normal cells that maintain spatial resolution. Note that while DARTS and related methods [37, 38] share the architecture topology among all normal cells, the same is true for reduction cells, because they optimize architecture parameters , where is shared by all the normal cells and is shared by all the reduction cells (see Subsec. 2.2). MSR-DARTS also optimizes two types of cell structures, i.e., normal and reduction cell, but each cell is optimized using MSR (see Subsec. 3.1) because learnable architecture parameters are not optimized with MSR-DARTS.

4.2.1 Experimental Settings

We split the CIFAR-10 training data in a ratio of four to one. The former was used to train the over-parameterized network weights while the latter was used to calculate the loss. The over-parameterized network was trained for 50 epochs, where the batch size was determined to fit into a single GPU. The initial number of channels was set to 16. We used momentum stochastic gradient descent (SGD) to optimize the weights, with an initial learning rate of 0.025 (annealed down following a cosine schedule), momentum of 0.9, and weight decay of . We used search space {“3x3 separable conv”, “5x5 separable conv”, “3x3 dilated conv”, “5x5 dilated conv”}. Note that we used search space because with MSR-DARTS assumed that each candidate operator in the search space is composed of several convolutional layers (see Subsec 3.1); therefore, we omitted the operators that do not meet this assumption, i.e., “zero”, “identity”, “3x3 max pooling”, “3x3 average pooling” from the search space. We used single the TITAN RTX GPU, and the architecture search took less than days.

In the evaluation stage of the toy experiment, the network was composed of cells (6 normal cells and 2 reduction cells) instead of cells (18 normal cells and 2 reduction cells), the number of which is widely used to compare the performance of DARTS-based methods. The other settings follow those of DARTS. The network was trained for 600 epochs, with a batch size 96. The initial number of channels was 36, the SGD optimizer with an initial learning rate of (annealed down to zero following a cosine schedule), a momentum of , a weight decay of and gradient clipping at . Cutout [10], path dropout of probability , and auxiliary towers with weight were used to enhance accuracy.

4.2.2 Results

The validation loss of the over-parameterized network in the search stage is illustrated in Figure 2. In the search stage, the validation loss decreased from the beginning of training and took a minimum value around 35 epochs. However, it began to increase little by little after 35 epochs. Since MSR-DARTS aims to determine a model structure with high generalization performance, the architecture generated before the increase in the validation loss was used for architecture evaluation. Specifically, we used the architecture generated in epoch 38.

| Architecture |

|

S.C. |

|

||||

|---|---|---|---|---|---|---|---|

| DARTS | 1.65 | ||||||

| MSR-DARTS | |||||||

Figure 3 left and right indicate the test accuracy and test loss, respectively. Each value is plotted after 300 epochs where the difference can be observed. The architecture generated with MSR-DARTS exhibited lower test error and higher test accuracy than those with DARTS, which indicates MSR-DARTS outperforms DARTS when the layer depth in the search stage is the same as that in the evaluation stage. Table 1 summarizes the results of the toy experiment. MSR-DARTS achieved a test error rate of , which is higher than that of DARTS. MSR-DARTS only requires days with a GPU to search for the optimal architecture, which is 3 times faster than with DARTS. In terms of comparing the number of parameters of each architecture, there was almost no difference between the models since the same search space was used. Strictly speaking, the number of parameters of MSR-DARTS is M, which is smaller than that of DARTS.

To conclude the toy experiment, we set the same experimental settings such as search space and hyper-parameters for both methods and confirmed that MSR-DARTS yields better results than DARTS.

4.3 Results on CIFAR-10

| Architecture |

|

|

|

Search Method | ||||||

| DenseNet-BC [16] | - | manual | ||||||||

| NASNet-A + c/o [42] | RL | |||||||||

| AmoebaNet-B + c/o [28] | evolution | |||||||||

| Hierarchical Evo [22] | evolution | |||||||||

| PNAS [21] | 3.2 | SMBO | ||||||||

| ENAS + c/o [27] | RL | |||||||||

| DARTS (1st order) + c/o [23] | gradient-based | |||||||||

| DARTS (2nd order) + c/o [23] | gradient-based | |||||||||

| SNAS (moderate) + c/o [37] | gradient-based | |||||||||

| ProxylessNAS + c/o [6] | - | gradient-based | ||||||||

| DARTS+ + c/o [19] | gradient-based | |||||||||

| P-DARTS + c/o [7] | gradient-based | |||||||||

| PC-DARTS + c/o [38] | gradient-based | |||||||||

| Fair DARTS + c/o [8] | gradient-based | |||||||||

| PR-DARTS + c/o [40] | gradient-based | |||||||||

| MSR-DARTS + c/o (ours) | Stable Rank | |||||||||

We evaluated the MSR-DARTS with cells (18 normal cells and 2 reduction cells) in the evaluation stage to compare other state-of-the-art methods. We used the same cell structure optimized in the toy experiment. The rest of the experimental settings were exactly the same as with DARTS. Table 2 compares the image-classification performance of MSR-DARTS with those of the other methods. MSR-DARTS achieved test error, which is better than 2nd order DARTS. It outperformed PC-DARTS [38] and was competitive with Fair DARTS [8] in terms of test-error rate. Our architecture search finished in GPU-days, which is faster than 2nd order DARTS ( GPU-day) and Fair DARTS ( GPU-days). Note that MSR-DARTS generated the optimal model with M parameters, which is a slightly larger number of parameters compared with the models of the other methods. This is because of search space , which includes the convolutional operator only. This means that the operator with few parameters, such as pooling or identity (skip connection), is not selected.

4.4 Results on ImageNet

| Architecture | Test Err.(%) | Params | Search Cost | Search Method | ||

| top-1 | top-5 | (M) | (M) | GPU-days | ||

| Inception-v1 [33] | - | manual | ||||

| MobileNet [15] | - | manual | ||||

| ShuffleNet (v1) [39] | - | manual | ||||

| ShuffleNet (v2) [24] | - | - | manual | |||

| NASNet-A [42] | RL | |||||

| AmoebaNet-C [28] | evolution | |||||

| PNAS [21] | SMBO | |||||

| MnasNet-92 [34] | - | RL | ||||

| DARTS (2nd order) [23] | gradient-based | |||||

| SNAS (mild) [37] | gradient-based | |||||

| ProxylessNAS(GPU) 222This architecture was searched for on ImageNet directly. [6] | gradient-based | |||||

| DARTS+(CIFAR-100)[19] | gradient-based | |||||

| DARTS+(ImageNet) 222This architecture was searched for on ImageNet directly. [19] | gradient-based | |||||

| P-DARTS(CIFAR-10) [7] | gradient-based | |||||

| P-DARTS(CIFAR-100) [7] | gradient-based | |||||

| PC-DARTS(CIFAR-10) [38] | gradient-based | |||||

| PC-DARTS(ImageNet) 222This architecture was searched for on ImageNet directly. [38] | gradient-based | |||||

| Fair DARTS [8] | gradient-based | |||||

| PR-DARTS [40] | gradient-based | |||||

| MSR-DARTS(CIFAR-10) (ours) | Stable Rank | |||||

Following DARTS and its derivations, we evaluated the architecture with cells in the architecture evaluation stage. We used the normal and reduction cells, which were optimized with CIFAR-10 (see Subsec. 4.2). The experimental settings mostly followed those of DARTS. The network was trained epochs with a batch size . The initial number of channels was set to be . We used momentum SGD to optimize the weights, with an initial learning rate (annealed down following a cosine schedule), momentum of , and weight decay of . For additional enhancements, label smoothing and an auxiliary loss tower were used during the training. We used TITAN V100 GPUs, applied learning warm-up for the first epochs (increase linearly with each batch), and used Horovod for distributed training.

The results are summarized in Table 3. The architecture found with MSR-DARTS using CIFAR-10 reported a top-1 error rate of and top-5 error rate of , which outperforms the top-1 error rate of and top-5 error rate of reported with DARTS. In the comparison with other DARTS-based methods using CIFAR-10 for architecture search, MSR-DARTS had higher top-1 accuracy than P-DARTS, higher than PC-DARTS, higher than Fair DARTS, and higher than PR-DARTS. It was also competitive with of DARTS+, which searches for an optimal architecture with CIFAR-100. Regarding search cost, MSR-DARTS required only GPU-days, which is 3 times faster than the 2nd order DARTS and slightly faster or competitive with Fair DARTS and P-DARTS. Due to the fact that the search space includes only limited types of convolutional operators (see Subsec. 3.2), MSR-DARTS had a relatively large number of parameters and multiply-add operations.

4.5 Qualitative Evaluation

In this subsection, we compare the optimized architectures between DARTS and MSR-DARTS qualitatively. Figure 4 illustrates the cell structures found with DARTS((a) normal cell, (b) reduction cell) and with MSR-DARTS ((c) normal cell, (d) reduction cell).

As described in a previous paper [31], the depth of a cell structure is defined as the number of connections along the longest path from input node to output node. The width of a cell structure is defined as the total width of intermediate nodes that are connected to the input nodes. In particular, the normal cell found with DARTS (Figure 4 (a)) had a depth of and width of .

The normal cell found by MSR-DARTS, however, had a depth of and width of , a deeper and narrower structure. As discussed in the above paper [31], DARTS tends to select shallow and wide cells because is optimizes two parameter sets (weight and architecture parameter ) in an alternating manner. Each candidate architecture is not evaluated based on the generalization performance at convergence during architecture search; thus architectures with faster convergence rates tend to be selected. In contrast, the architecture parameters are fixed and only are optimized with MSR-DARTS. Thus, MSR-DARTS can evaluate each architecture more correctly at convergence and yield deeper and narrower cells, which is a well generalized architecture. Note that the scheme that penalizes shallower cells is used with PR-DARTS [40]. However, PR-DARTS has many more hyper-parameters than the original DARTS, which are difficult to adjust. On the other hand, MSR-DARTS does not require any hyper-parameters to deepen a cell structure, making it easy to optimize. All the intermediate nodes, except the first intermediate node (illustrated as in Figure 4) in the normal cell found with MSR-DARTS had both a connection from an input node and a connection from another intermediate node. Each intermediate node can handle both the input features of the cell and well-processed features inside the cell. This structure may improve of the accuracy.

The reduction cell found with MSR-DARTS had a shallow and wide cell structure in contrast to the normal cell. The reason MSR-DARTS finds these structures may be that the reduction cell should summarize the information to reduce the spatial dimension, thus requiring more information from the input features than the normal cell. In addition, it seems that the reduction cell found with MSR-DARTS has small receptive fields (e.g., “3x3 separable convolution” and “5x5 separable convolution”) for the output of the before last cell and relatively large receptive fields (e.g., “5x5 separable convolution” and “3x3 dilated convolution”) for the output of the before cell. This enables the efficient aggregation of information by applying a large receptive field to the latest information.

5 Conclusion

We proposed MSR-DARTS, which optimizes only the weight parameters of an over-parameterized network. The discrete-architecture-selection process using the optimized architecture parameters with DARTS is replaced with the process using a stable rank of each convolution. By using the relation between the generalization ability of a neural network and low-rankness of an operator, MSR-DARTS searches for an architecture that is expected to have low test error by selecting the lowest stable rank operator. In our evaluation, MSR-DARTS achieved test error on CIFAR-10 and test error on ImageNet. The architecture-search process can be done within GPU-days.

Acknowledgements

This work was supported by JST CREST JPMJCR1687 and NEDO JPNP18002. TS was partially supported by MEXT Kakenhi (15H05707, 18K19793 and 18H03201), and Japan Digital Design.

References

- [1] Namhyuk Ahn, Byungkon Kang, and Kyung-Ah Sohn. Fast, accurate, and lightweight super-resolution with cascading residual network. In Proceedings of the European Conference on Computer Vision (ECCV), pages 252–268, 2018.

- [2] Sanjeev Arora, Rong Ge, Behnam Neyshabur, and Yi Zhang. Stronger generalization bounds for deep nets via a compression approach. In Jennifer G. Dy and Andreas Krause, editors, Proceedings of the 35th International Conference on Machine Learning, ICML 2018, Stockholmsmässan, Stockholm, Sweden, July 10-15, 2018, volume 80 of Proceedings of Machine Learning Research, pages 254–263. PMLR, 2018.

- [3] Peter L Bartlett and Shahar Mendelson. Rademacher and gaussian complexities: Risk bounds and structural results. Journal of Machine Learning Research, 3(Nov):463–482, 2002.

- [4] Cenk Baykal, Lucas Liebenwein, Igor Gilitschenski, Dan Feldman, and Daniela Rus. Data-dependent coresets for compressing neural networks with applications to generalization bounds. In 7th International Conference on Learning Representations, ICLR 2019, New Orleans, LA, USA, May 6-9, 2019. OpenReview.net, 2019.

- [5] Jens Behrmann, Will Grathwohl, Ricky T. Q. Chen, David Duvenaud, and Jörn-Henrik Jacobsen. Invertible residual networks. In Kamalika Chaudhuri and Ruslan Salakhutdinov, editors, Proceedings of the 36th International Conference on Machine Learning, ICML 2019, 9-15 June 2019, Long Beach, California, USA, volume 97 of Proceedings of Machine Learning Research, pages 573–582. PMLR, 2019.

- [6] Han Cai, Ligeng Zhu, and Song Han. Proxylessnas: Direct neural architecture search on target task and hardware. In 7th International Conference on Learning Representations, ICLR 2019, New Orleans, LA, USA, May 6-9, 2019. OpenReview.net, 2019.

- [7] Xin Chen, Lingxi Xie, Jun Wu, and Qi Tian. Progressive differentiable architecture search: Bridging the depth gap between search and evaluation. In Proceedings of the IEEE International Conference on Computer Vision, pages 1294–1303, 2019.

- [8] Xiangxiang Chu, Tianbao Zhou, Bo Zhang, and Jixiang Li. Fair DARTS: eliminating unfair advantages in differentiable architecture search. CoRR, abs/1911.12126, 2019.

- [9] J. Deng, W. Dong, R. Socher, L.-J. Li, K. Li, and L. Fei-Fei. ImageNet: A Large-Scale Hierarchical Image Database. In CVPR09, 2009.

- [10] Terrance DeVries and Graham W Taylor. Improved regularization of convolutional neural networks with cutout. arXiv preprint arXiv:1708.04552, 2017.

- [11] Gintare Karolina Dziugaite and Daniel M Roy. Computing nonvacuous generalization bounds for deep (stochastic) neural networks with many more parameters than training data. arXiv preprint arXiv:1703.11008, 2017.

- [12] Noah Golowich, Alexander Rakhlin, and Ohad Shamir. Size-independent sample complexity of neural networks. In Conference On Learning Theory, pages 297–299, 2018.

- [13] Suriya Gunasekar, Jason D. Lee, Daniel Soudry, and Nati Srebro. Implicit bias of gradient descent on linear convolutional networks. In Samy Bengio, Hanna M. Wallach, Hugo Larochelle, Kristen Grauman, Nicolò Cesa-Bianchi, and Roman Garnett, editors, Advances in Neural Information Processing Systems 31: Annual Conference on Neural Information Processing Systems 2018, NeurIPS 2018, 3-8 December 2018, Montréal, Canada, pages 9482–9491, 2018.

- [14] Nick Harvey, Christopher Liaw, and Abbas Mehrabian. Nearly-tight VC-dimension bounds for piecewise linear neural networks. In Satyen Kale and Ohad Shamir, editors, Proceedings of the 2017 Conference on Learning Theory, volume 65 of Proceedings of Machine Learning Research, pages 1064–1068, Amsterdam, Netherlands, 07–10 Jul 2017. PMLR.

- [15] Andrew G. Howard, Menglong Zhu, Bo Chen, Dmitry Kalenichenko, Weijun Wang, Tobias Weyand, Marco Andreetto, and Hartwig Adam. Mobilenets: Efficient convolutional neural networks for mobile vision applications. CoRR, abs/1704.04861, 2017.

- [16] Gao Huang, Zhuang Liu, Laurens van der Maaten, and Kilian Q. Weinberger. Densely connected convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), July 2017.

- [17] Ziwei Ji and Matus Telgarsky. Gradient descent aligns the layers of deep linear networks. In 7th International Conference on Learning Representations, ICLR 2019, New Orleans, LA, USA, May 6-9, 2019. OpenReview.net, 2019.

- [18] Alex Krizhevsky. Learning multiple layers of features from tiny images. Technical report, 2009.

- [19] Hanwen Liang, Shifeng Zhang, Jiacheng Sun, Xingqiu He, Weiran Huang, Kechen Zhuang, and Zhenguo Li. Darts+: Improved differentiable architecture search with early stopping. arXiv preprint arXiv:1909.06035, 2019.

- [20] Chenxi Liu, Liang-Chieh Chen, Florian Schroff, Hartwig Adam, Wei Hua, Alan L Yuille, and Li Fei-Fei. Auto-deeplab: Hierarchical neural architecture search for semantic image segmentation. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 82–92, 2019.

- [21] Chenxi Liu, Barret Zoph, Maxim Neumann, Jonathon Shlens, Wei Hua, Li-Jia Li, Li Fei-Fei, Alan Yuille, Jonathan Huang, and Kevin Murphy. Progressive neural architecture search. In Proceedings of the European Conference on Computer Vision (ECCV), September 2018.

- [22] Hanxiao Liu, Karen Simonyan, Oriol Vinyals, Chrisantha Fernando, and Koray Kavukcuoglu. Hierarchical representations for efficient architecture search. In 6th International Conference on Learning Representations, ICLR 2018, Vancouver, BC, Canada, April 30 - May 3, 2018, Conference Track Proceedings. OpenReview.net, 2018.

- [23] Hanxiao Liu, Karen Simonyan, and Yiming Yang. DARTS: Differentiable architecture search. In International Conference on Learning Representations, 2019.

- [24] Ningning Ma, Xiangyu Zhang, Hai-Tao Zheng, and Jian Sun. Shufflenet v2: Practical guidelines for efficient cnn architecture design. In Proceedings of the European Conference on Computer Vision (ECCV), September 2018.

- [25] Behnam Neyshabur, Srinadh Bhojanapalli, and Nathan Srebro. A pac-bayesian approach to spectrally-normalized margin bounds for neural networks. arXiv preprint arXiv:1707.09564, 2017.

- [26] Behnam Neyshabur, Russ R Salakhutdinov, and Nati Srebro. Path-sgd: Path-normalized optimization in deep neural networks. In C. Cortes, N. D. Lawrence, D. D. Lee, M. Sugiyama, and R. Garnett, editors, Advances in Neural Information Processing Systems 28, pages 2422–2430. Curran Associates, Inc., 2015.

- [27] Hieu Pham, Melody Y. Guan, Barret Zoph, Quoc V. Le, and Jeff Dean. Efficient neural architecture search via parameter sharing. In Jennifer G. Dy and Andreas Krause, editors, Proceedings of the 35th International Conference on Machine Learning, ICML 2018, Stockholmsmässan, Stockholm, Sweden, July 10-15, 2018, volume 80 of Proceedings of Machine Learning Research, pages 4092–4101. PMLR, 2018.

- [28] Esteban Real, Alok Aggarwal, Yanping Huang, and Quoc V. Le. Regularized evolution for image classifier architecture search. In The Thirty-Third AAAI Conference on Artificial Intelligence, AAAI 2019, The Thirty-First Innovative Applications of Artificial Intelligence Conference, IAAI 2019, The Ninth AAAI Symposium on Educational Advances in Artificial Intelligence, EAAI 2019, Honolulu, Hawaii, USA, January 27 - February 1, 2019, pages 4780–4789. AAAI Press, 2019.

- [29] Olga Russakovsky, Jia Deng, Hao Su, Jonathan Krause, Sanjeev Satheesh, Sean Ma, Zhiheng Huang, Andrej Karpathy, Aditya Khosla, Michael Bernstein, Alexander C. Berg, and Li Fei-Fei. ImageNet Large Scale Visual Recognition Challenge. International Journal of Computer Vision (IJCV), 115(3):211–252, 2015.

- [30] Hanie Sedghi, Vineet Gupta, and Philip M. Long. The singular values of convolutional layers. In 7th International Conference on Learning Representations, ICLR 2019, New Orleans, LA, USA, May 6-9, 2019. OpenReview.net, 2019.

- [31] Yao Shu, Wei Wang, and Shaofeng Cai. Understanding architectures learnt by cell-based neural architecture search. In 8th International Conference on Learning Representations, ICLR 2020, Addis Ababa, Ethiopia, April 26-30, 2020. OpenReview.net, 2020.

- [32] Taiji Suzuki, Hiroshi Abe, and Tomoaki Nishimura. Compression based bound for non-compressed network: unified generalization error analysis of large compressible deep neural network. In 8th International Conference on Learning Representations, ICLR 2020, Addis Ababa, Ethiopia, April 26-30, 2020. OpenReview.net, 2020.

- [33] Christian Szegedy, Wei Liu, Yangqing Jia, Pierre Sermanet, Scott Reed, Dragomir Anguelov, Dumitru Erhan, Vincent Vanhoucke, and Andrew Rabinovich. Going deeper with convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), June 2015.

- [34] Mingxing Tan, Bo Chen, Ruoming Pang, Vijay Vasudevan, Mark Sandler, Andrew Howard, and Quoc V. Le. Mnasnet: Platform-aware neural architecture search for mobile. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), June 2019.

- [35] Junqi Tang, Mohammad Golbabaee, and Mike E. Davies. Gradient projection iterative sketch for large-scale constrained least-squares. In Doina Precup and Yee Whye Teh, editors, Proceedings of the 34th International Conference on Machine Learning, ICML 2017, Sydney, NSW, Australia, 6-11 August 2017, volume 70 of Proceedings of Machine Learning Research, pages 3377–3386. PMLR, 2017.

- [36] Vladimir N. Vapnik. Statistical Learning Theory. Wiley-Interscience, 1998.

- [37] Sirui Xie, Hehui Zheng, Chunxiao Liu, and Liang Lin. SNAS: stochastic neural architecture search. In 7th International Conference on Learning Representations, ICLR 2019, New Orleans, LA, USA, May 6-9, 2019. OpenReview.net, 2019.

- [38] Yuhui Xu, Lingxi Xie, Xiaopeng Zhang, Xin Chen, Guo-Jun Qi, Qi Tian, and Hongkai Xiong. Pc-darts: Partial channel connections for memory-efficient differentiable architecture search. arXiv preprint arXiv:1907.05737, 2019.

- [39] Xiangyu Zhang, Xinyu Zhou, Mengxiao Lin, and Jian Sun. Shufflenet: An extremely efficient convolutional neural network for mobile devices. In 2018 IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2018, Salt Lake City, UT, USA, June 18-22, 2018, pages 6848–6856. IEEE Computer Society, 2018.

- [40] Pan Zhou, Caiming Xiong, Richard Socher, and Steven CH Hoi. Theory-inspired path-regularized differential network architecture search. arXiv preprint arXiv:2006.16537, 2020.

- [41] Barret Zoph and Quoc V. Le. Neural architecture search with reinforcement learning. In 5th International Conference on Learning Representations, ICLR 2017, Toulon, France, April 24-26, 2017, Conference Track Proceedings. OpenReview.net, 2017.

- [42] Barret Zoph, Vijay Vasudevan, Jonathon Shlens, and Quoc V Le. Learning transferable architectures for scalable image recognition. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 8697–8710, 2018.

A Estimation of spectral norm of a convolution

As described in Subsec. 3.3, we constrain the spectral norms of all the convolutions contained in the over-parameterized network. We use a power-iteration algorithm [10] described in Algorithm 1 to estimate the spectral norm of each convolution.

Note that the index and of are omitted for simplicity. The spectral norm of convolution is denoted by . is a convolutional calculation that takes input . is transposed convolution of . The Algorithm 1 yields an under-estimate, i.e., . In the experiment, we confirmed all the spectral norms of the convolutions in the over-parameterized network are estimated correctly. We set the number of iteration to .

B Comparison with the maximum stable rank architecture

We hypothesize the network with lower rank convolutions has higher generalization ability (see Subsec. 3.1). In our pipeline, we select the operators that have lowest stable rank in the mixed edges after training of the over-parameterized network. We examined the low rank convolution brings better generalization performance by comparing MSR-DARTS with the architecture constructed by selecting the operators that have the maximum stable rank in the mixed edges.

Figure 5 shows the results of the training of the both architectures. We observed the proposed method, i.e., the architecture with the lowest stable rank operators yields better performance than the architecture with the highest stable rank operators.