MPLAPACK version 2.0.1 user manual

Abstract

MPLAPACK (formerly MPACK) is a multiple-precision version of LAPACK (https://www.netlib.org/lapack/).

MPLAPACK version 2.0.1 is based on LAPACK version 3.9.1 and translated from Fortran 90 to C++ using FABLE, a Fortran to C++ source-to-source conversion tool (https://github.com/cctbx/cctbx_project/tree/master/fable/).

MPLAPACK version 2.0.1 provides real and complex versions of MPBLAS, and the real and complex versions of MPLAPACK support all LAPACK features except mixed-precision routines: solvers for systems of simultaneous linear equations, least-squares solutions of linear systems of equations, eigenvalue problems, singular value problems, and the related matrix factorizations. MPLAPACK defines an API for numerical linear algebra similar to that of LAPACK, so legacy C/C++ numerical codes are easy to port to it. MPLAPACK supports binary64, binary128, FP80 (extended double), MPFR, GMP, and the QD library (double-double and quad-double). Users can choose MPFR or GMP for arbitrary-precision calculations, or double-double or quad-double for fast calculations with 32 or 64 decimal digits. The binary64 version can be regarded as a C++ version of LAPACK.

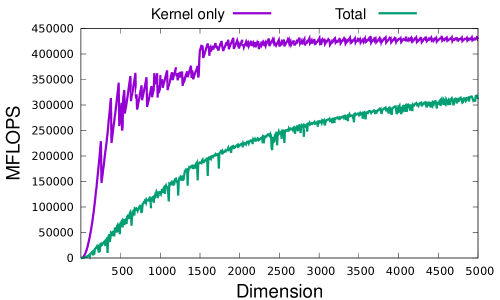

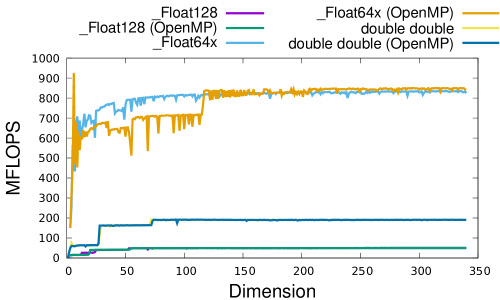

Moreover, MPLAPACK comes with an OpenMP-accelerated version of some MPBLAS routines and CUDA-accelerated double-double versions of Rgemm and Rsyrk (A100 and V100 are supported). The peak performance of the OpenMP version is almost proportional to the number of cores, and the CUDA version reaches approximately 400-600 GFlops. MPLAPACK is available on GitHub (https://github.com/nakatamaho/mplapack/) under the 2-clause BSD license.

1 Release note for version 2.0.1

- Version 2.0.1 supports all the complex LAPACK functions.
- Version 2.0.1 supports Rectangular Full Packed (RFP) Format.
- Version 2.0.1 comes with an acceleration of double-double Rgemm and Rsyrk routines on NVIDIA Tesla V100 and A100.
- Many small bug fixes and improvements in usability.
- The quality assurance results and benchmark results on Intel CPU and Arm CPU are available.
- Mixed precision version is not supported yet.
- Version 2.0.1 was released on 2022-09-12.

2 Introduction

Numerical linear algebra aims to solve mathematical problems, such as simultaneous linear equations, eigenvalue problems, and least-squares methods, using arithmetic with finite precision on a computer [1]. Many problems in various fields, such as natural science, social science, and engineering, can be formulated as numerical linear algebra problems. Therefore, it is of great importance.

The numerical linear algebra package standards are BLAS [2] and LAPACK [3]. The BLAS library defines how computers should perform vector, matrix-vector, and matrix-matrix operations in FORTRAN77. Almost all other vector and matrix arithmetic libraries are compatible with it or have very similar interfaces. Furthermore, LAPACK solves linear problems such as solving linear equations, singular value problems, eigenvalue problems, and least-square fitting problems using BLAS as a building block.

The main focus in numerical linear algebra has been on the speed and the size of solving the problem at approximately 8 or 16 decimal digits (binary32 or 64) using highly optimized BLAS and LAPACK [4, 5, 6, 7, 8, 9]. The use of numbers with higher precision than binary64 is not common.

However, there are some problems in numerical linear algebra that require higher precision operations. In particular, when we solve ill-conditioned problems, large-scale simulations, and compute inversions of matrices, we usually need functions of higher precision numbers [10, 11, 12].

Solving positive semidefinite programming (SDP) is another example requiring multi-precision computation. Solving this problem using binary64 usually yields values up to eight decimal digits. The accumulation of numerical error occurs because the matrices’ condition number at the optimal solution usually becomes infinite. As a result, Cholesky factorization fails near the optimal solution; the approximate solution diverges toward the optimal solution using the primal and dual interior point method [13]. Therefore, we require multiple precision calculations if we need more than eight decimal digits for optimal solutions. For this reason, we have developed SDPA-GMP [14, 15, 16], the GNU MP version of semidefinite programming solver based on SDPA [16], which is one of the fastest SDP solvers.

We have been developing MPLAPACK as a drop-in replacement for BLAS and LAPACK in SDPA since SDPA performs Cholesky decomposition and solves symmetric eigenvalue problems via 50 BLAS and LAPACK routines [17].

The features of MPLAPACK are:

- Provides an Application Programming Interface (API) for numerical linear algebra, similar to LAPACK and BLAS.
- Like BLAS and LAPACK, we can implement an optimized version of MPBLAS and MPLAPACK; we provide a simple OpenMP version of some MPBLAS routines and two CUDA routines (Rgemm, Rsyrk dd version) as proof of concept.
- Completely rewrote LAPACK and BLAS in C++ using FABLE and f2c.
- C style programming. We do not introduce new matrix and vector classes.
- Supports seven floating-point formats by precision independent programming: binary64 (16 decimal digits), binary128 (32 decimal digits), FP80 (extended double; 19 decimal digits), double-double (32 decimal digits), quad-double (64 decimal digits), GMP, and MPFR (arbitrary precision, and the default is 153 decimal digits).
- MPLAPACK 2.0.1 is based on LAPACK 3.9.1.
- Version 2.0.1 supports all real and complex versions of BLAS and LAPACK functions: simultaneous linear equations, least-squares solutions of linear systems of equations, eigenvalue problems, singular value problems, the related matrix factorizations, and the full packed matrix form, except for the mixed-precision versions.
- Reliability: We extended the original test programs to handle multiple-precision numbers, and most MPLAPACK routines have passed the tests.
- Runs on Linux/Windows/Mac.
- Released at https://github.com/nakatamaho/mplapack/ under the 2-clause BSD license.

Unless otherwise noted, this paper gives examples with Ubuntu 20.04 amd64 inside Docker as the reference environment.

The rest of the sections are organized as follows: Section 3 describes supported CPUs, OSes, and compilers. Section 4 describes how to install MPLAPACK. Section 5 describes the supported floating-point format. Section 6 illustrates LAPACK and BLAS standard naming conventions and available MPBLAS and MPLAPACK routines; section 7 describes how to use MPBLAS and MPLAPACK. We use the Docker environment to try these examples to avoid over-complicating the notation with different environments. Section 8 describes how we tested MPBLAS and MPLAPACK. Section 9 describes how we rewrote Fortran90 codes to C++ and extended them to multiple precision versions. Next, section 10 describes benchmarking MPBLAS routines. Next, section 11 describes the history, and section 12 describes related works. Finally, section 13 describes future plans for MPLAPACK.

3 Supported CPUs, OSes, and compilers

Only 64-bit CPUs are supported. The following OSes are supported:

- CentOS 7 (amd64, aarch64)
- CentOS 8 (amd64, aarch64)
- Ubuntu 22.04 (amd64, aarch64)
- Ubuntu 20.04 (amd64, aarch64)
- Ubuntu 18.04 (amd64)
- Windows 10 (amd64)
- MacOS (Intel)

We support the following compilers:

- GCC (GNU Compiler Collection) 9 and later
- Intel oneAPI (the -fp-model precise option is required to compile the dd and qd versions)

Note that on macOS we use GCC from MacPorts, which is NOT the default compiler. However, GMP must still be compiled with the system GCC, which is Apple Clang [18].

We support the following GPUs to accelerate matrix-matrix multiplication. See section 6 for CUDA-enabled routines.

- NVIDIA A100
- NVIDIA V100

We use the mingw64 and Wine64 environments on Linux to build and test the Windows version. On different configurations, MPLAPACK may build and work without problems. We welcome reports or patches from the community.

4 Installation

4.1 Using Docker (recommended for Linux environment), a simple demo

The easiest way to install MPLAPACK is to build and use it inside Docker [19]. The following commands build MPLAPACK inside Docker on Ubuntu for amd64 (also known as x86_64) or aarch64 (also known as arm64). We use the Docker Ubuntu 20.04 amd64 environment as the reference for the examples in this paper.

$ git clone https://github.com/nakatamaho/mplapack/

$ cd mplapack

$ /usr/bin/time docker build -t mplapack:ubuntu2004 -f Dockerfile_ubuntu20.04 . 2>&1 | tee log.ubuntu2004

The build takes a while, depending on the number of CPU cores and the OS. For example, a Docker build on Ubuntu 20.04 took 35 minutes on a Ryzen 3970X (3.7GHz, 32 cores), 1 hour 50 minutes on a Xeon E5-2623 v3 (3.0GHz, 2 CPUs, 8 cores), and 14 hours 40 minutes on a Raspberry Pi 4 (Cortex A72 1.5GHz, four cores). Furthermore, the build of MPLAPACK on a Mac mini (2018, Core i5-8500B, 3.0GHz, six cores) took about 3.5 hours.

A simple demo (matrix-matrix multiplication in binary128) can be run as follows:

$ docker run -it mplapack:ubuntu2004 /bin/bash

docker@2cb33bf4c36f:~$ ls

MPLAPACK mplapack-2.0.1 mplapack-2.0.1.tar.xz

docker@2cb33bf4c36f:~$ cd MPLAPACK/share/examples/mpblas/

docker@2cb33bf4c36f:~/MPLAPACK/share/examples/mpblas$ make -f Makefile.linux

c++ -c -O2 -fopenmp -I/home/docker/MPLAPACK/include -I/home/docker/MPLAPACK/include/mplapack -I/home/docker/MPLAPACK/include/qd Rgemm_mpfr.cpp

...

docker@2cb33bf4c36f:~/MPLAPACK/share/examples/mpblas$ ./Rgemm__Float128

# Rgemm demo...

a =[ [ +1.00000000000000000000000000000000000e+00, +8.00000000000000000000000000000000000e+00, +3.00000000000000000000000000000000000e+00]; [ +2.00000000000000000000000

...

ans =[ [ +2.10000000000000000000000000000000000e+01, -1.92000000000000000000000000000000000e+02, +2.28000000000000000000000000000000000e+02]; [ -6.40000000000000000000000000000000000e+01, -1.46000000000000000000000000000000000e+02, +2.66000000000000000000000000000000000e+02]; [ +2.10000000000000000000000000000000000e+02, +3.61000000000000000000000000000000000e+02, -3.80000000000000000000000000000000000e+01] ]

#please check by Matlab or Octave following and ans above

alpha * a * b + beta * c

If it fails, it is a bug. Please report the problem via a GitHub issue.

Table 1 lists the Dockerfiles for each combination of CPU, GPU, OS, and compiler. When the build finishes, all files are installed under /home/docker/MPLAPACK_CUDA for the CUDA version, /home/docker/MPLAPACK_MINGW for the Windows version, /home/docker/MPLAPACK_INTELONEAPI for the Intel oneAPI version, and /home/docker/MPLAPACK otherwise. To build for another environment (other OSes, GPUs, or CPUs), choose the appropriate Dockerfile from Table 1.

Table 1: Dockerfiles for each CPU, GPU, OS, and compiler.

| Docker filename | CPU | OS | Compiler |
|---|---|---|---|
| Dockerfile_CentOS7 | amd64 | CentOS 7 | GCC |
| Dockerfile_CentOS7_AArch64 | aarch64 only | CentOS 7 | GCC |
| Dockerfile_CentOS8 | all CPUs | CentOS 8 | GCC |
| Dockerfile_ubuntu18.04 | amd64 only | Ubuntu 18.04 | GCC |
| Dockerfile_ubuntu20.04 | all CPUs | Ubuntu 20.04 | GCC |
| Dockerfile_ubuntu20.04_inteloneapi | amd64 only | Ubuntu 20.04 | Intel oneAPI |
| Dockerfile_ubuntu20.04_mingw64 | amd64 only | Ubuntu 20.04 | GCC |
| Dockerfile_ubuntu20.04_cuda | NVIDIA A100, V100 | Ubuntu 20.04 | GCC |
| Dockerfile_ubuntu22.04 | all CPUs | Ubuntu 22.04 | GCC |
| Dockerfile_debian_bullseye | all CPUs | Debian (bullseye) | GCC |

4.2 Compiling from the source

We list prerequisites for compiling from the source in Table 2; users can satisfy these prerequisites using MacPorts on macOS. Homebrew may be used as an alternative.

$ sudo port install gcc10 coreutils git ccache

$ wget https://github.com/nakatamaho/mplapack/releases/download/v2.0.1/mplapack-2.0.1.tar.xz

$ tar xvf mplapack-2.0.1.tar.xz

$ cd mplapack-2.0.1

$ CXX="g++-mp-10" ; export CXX

$ CC="gcc-mp-10" ; export CC

$ FC="gfortran-mp-10"; export FC

$ ./configure --prefix=/usr/local --enable-gmp=yes --enable-mpfr=yes --enable-_Float128=yes --enable-qd=yes --enable-dd=yes --enable-double=yes --enable-_Float64x=yes --enable-test=yes

...

$ make -j6

...

$ sudo make install

Table 2: Prerequisites for compiling from the source.

| Package | Version |
|---|---|
| GCC | 9 or later |
| gmake | 4.3 or later |
| git | 2.33.0 or later |
| autotools | 2.71 |
| automake | 1.16 |
| GNU sed | 4.1 or later |

5 Supported Floating point formats

We support binary64, FP80 (extended double), binary128, double-double, quad-double (QD library), GMP, and MPFR floating-point arithmetic in MPLAPACK; the corresponding floating-point types in C++ are summarized in Table 3.

Binary64 [4] is the so-called double precision: the number of significant digits in decimal is about 16, and CPUs perform arithmetic processing in hardware. As a result, the CPU can perform binary64 operations very fast.

FP80 [20] is the so-called extended double precision: the number of significant decimal digits is about 19, and Intel and AMD CPUs perform the arithmetic in hardware. ISO defines the corresponding C real floating type as _Float64x [21]; thus, we always use _Float64x as the type for FP80 numbers in MPLAPACK. However, there is no SIMD support, and due to the architecture, FP80 operations are more than ten times slower than binary64 operations. Besides, FP80 has not been part of the standard since IEEE 754-2008 [4].

Binary128, sometimes called quadruple precision, has been defined since IEEE 754-2008 [4] and has approximately 33 significant decimal digits. Binary128 arithmetic is usually done in software, so operations are slow. To our knowledge, IBM z processors have been the only commercial platform supporting quadruple precision [22]. Some architectures, such as aarch64, Sparc, RISCV64, and MIPS64, define instructions for quadruple-precision arithmetic, but these processors emulate the binary128 instructions in software. ISO defines the C real floating type for binary128 as _Float128 [21], but it is not yet part of the C++ standard. GCC has provided __float128 as a type for binary128 since GCC 4.6, and Intel oneAPI provides _Quad. We always use _Float128 as the type for binary128 numbers in MPLAPACK. Depending on the environment, this type may be the same as long double or __float128; we typedef the appropriate type to _Float128.

Double-double represents one number as the sum of two binary64 numbers and has approximately 32 significant decimal digits; quad-double uses four binary64 numbers and has approximately 64 significant decimal digits. We use the QD library [23] to support both. Double-double and quad-double arithmetic use Knuth's and Dekker's algorithms [24, 25], which evaluate the addition and multiplication of two binary64 numbers rigorously; the addition and multiplication of double-double numbers are then defined on top of them. The advantage of these formats is speed: since all arithmetic is done in binary64 and accelerated by hardware, calculations are approximately ten times faster than software-implemented binary128. The disadvantage is that programs written to rely on IEEE 754 features might not work with these precisions. Historically, “long double” has been equivalent to double-double with the IBM XL compilers for PowerPC and with GCC targeting PowerPC and Power Macs [26]. However, other environments do not always support “long double” in this form. Therefore, we use the QD library for MPLAPACK, and we guess that this is why Hida et al. developed the library.

GMP [27] is a C library for arbitrary precision arithmetic, operating on signed integers, rational numbers, and floating-point numbers. We can perform calculations to any accuracy, as long as the machine's resources allow. GMP comes with a C++ binding, and we use its mpf_class as an arbitrary-precision floating-point number. In addition, we support complex numbers by preparing mpc_class.

MPFR [28] is a C library for multiple-precision floating-point computations with correct rounding based on GMP. Unlike GMP, MPFR does not come with a C++ binding, and we use mpreal [29] as an arbitrary-precision floating-point number. We support complex numbers using MPC, a library for multiple-precision complex arithmetic with correct rounding [30], via the mpcomplex class (more precisely, the final LGPL version with our customization). Both libraries provide almost the same functionalities, but MPFR and MPC are smooth extensions to IEEE 754 and further support the trigonometric functions necessary for cosine-sine decomposition, as well as elementary and special functions. Therefore, we will drop GMP support in the future.

long double has not been supported in MPLAPACK since version 1.0 because its meaning differs confusingly across CPUs and OSes. For example, on Intel CPUs, long double is equivalent to _Float64x on Linux but to double on Windows. Similar confusion arises in the AArch64 environment: the official ARM ABI defines long double to be binary128 [31], yet the Apple ABI redefines long double as double on the OS side. On IBM PowerPC and Power Macs, long double has been double-double, as described above. When long double transitions to __float128 on PowerPC in the future, double-double will still be supported as __ibm128 [26], a GNU extension.

Table 3: Floating-point formats and their C++ types in MPLAPACK.

| Library or format | type name | accuracy in decimal digits |
|---|---|---|
| GMP | mpf_class, mpc_class | 154 (default) and arbitrary |
| MPFR | mpreal, mpcomplex | 154 (default) and arbitrary |
| double-double | dd_real, dd_complex | 32 |
| quad-double | qd_real, qd_complex | 64 |
| binary64 | double, std::complex<double> | 16 |
| extended double | _Float64x, std::complex<_Float64x> | 19 |
| binary128 | _Float128, std::complex<_Float128> | 33 |
| integer | mplapackint | (32bit on Win, 64bit on Linux and macOS) |

6 LAPACK and BLAS Routine Naming Conventions and available routines

BLAS and LAPACK prefix routine names with “s” and “d” for single and double precision real numbers, and “c” and “z” for single and double precision complex numbers. FORTRAN77 and Fortran90 are not case-sensitive in function and subroutine names, while C++ is.

The prefix for routine names in MPLAPACK is an uppercase “R” for real numbers and “C” for complex numbers. All other letters in the routine names are lowercase. We do not distinguish function names by floating-point class (e.g., mpf_class, _Float128); instead, we use the same function name for different floating-point classes to take advantage of function overloading (e.g., no matter which floating-point class is adopted, the program calls the appropriate Raxpy). For routines that take no arguments or only integer arguments, the floating-point class name is appended to the routine name. For routines whose original names have no precision prefix, we add the prefix “M” or insert “M” after the leading “i.”

For example,

- daxpy, zaxpy → Raxpy, Caxpy
- dgemm, zgemm → Rgemm, Cgemm
- dsterf, dsyev → Rsterf, Rsyev
- dzabs1, dzasum → RCabs1, RCasum
- lsame → Mlsame_mpfr, Mlsame_gmp, Mlsame__Float128, etc.
- dlamch → Rlamch_mpfr, Rlamch_gmp, Rlamch__Float128, etc.
- ilaenv → iMlaenv_mpfr, iMlaenv_gmp, iMlaenv__Float128, etc.

In table 4, we show all supported MPBLAS routines. The prototype definitions of these routines can be found in the following headers.

- /home/docker/MPLAPACK/include/mplapack/mpblas__Float128.h
- /home/docker/MPLAPACK/include/mplapack/mpblas__Float64x.h
- /home/docker/MPLAPACK/include/mplapack/mpblas_dd.h
- /home/docker/MPLAPACK/include/mplapack/mpblas_double.h
- /home/docker/MPLAPACK/include/mplapack/mpblas_gmp.h
- /home/docker/MPLAPACK/include/mplapack/mpblas_mpfr.h
- /home/docker/MPLAPACK/include/mplapack/mpblas_qd.h

A simple OpenMP version of MPBLAS is available. In table 5, we show all OpenMP accelerated MPBLAS routines. In table 6, we show all CUDA accelerated MPBLAS (double-double) routines.

In table 7, we show all supported MPLAPACK real driver routines. In table 9, we show all supported MPLAPACK real computational routines. In table 8, we show all supported MPLAPACK complex driver routines. In table 10, we show all supported MPLAPACK complex computational routines.

We do not list them here, but there are also many MPLAPACK auxiliary routines. We usually use MPLAPACK driver routines and MPBLAS routines directly. However, the driver routines also implicitly use computational routines and auxiliary routines.

The prototype definitions of the MPLAPACK routines can be found in the following headers.

- /home/docker/MPLAPACK/include/mplapack/mplapack__Float128.h
- /home/docker/MPLAPACK/include/mplapack/mplapack__Float64x.h
- /home/docker/MPLAPACK/include/mplapack/mplapack_dd.h
- /home/docker/MPLAPACK/include/mplapack/mplapack_double.h
- /home/docker/MPLAPACK/include/mplapack/mplapack_gmp.h
- /home/docker/MPLAPACK/include/mplapack/mplapack_mpfr.h
- /home/docker/MPLAPACK/include/mplapack/mplapack_qd.h

Table 4: Supported MPBLAS routines.

| Crotg | Cscal | Rrotg | Rrot | Rrotm | CRrot | Cswap | Rswap | CRscal | Rscal |

| Ccopy | Rcopy | Caxpy | Raxpy | Rdot | Cdotc | Cdotu | RCnrm2 | Rnrm2 | Rasum |

| iCasum | iRamax | RCabs1 | Mlsame | Mxerbla | |||||

| Cgemv | Rgemv | Cgbmv | Rgbmv | Chemv | Chbmv | Chpmv | Rsymv | Rsbmv | Rspmv |

| Ctrmv | Rtrmv | Ctbmv | Ctpmv | Rtpmv | Ctrsv | Rtrsv | Ctbsv | Rtbsv | Ctpsv |

| Rger | Cgeru | Cgerc | Cher | Chpr | Cher2 | Chpr2 | Rsyr | Rspr | Rsyr2 |

| Rspr2 | |||||||||

| Cgemm | Rgemm | Csymm | Rsymm | Chemm | Csyrk | Rsyrk | Cherk | Csyr2k | Rsyr2k |

| Cher2k | Ctrmm | Rtrmm | Ctrsm | Rtrsm |

Table 5: OpenMP-accelerated MPBLAS routines.

| Raxpy | Rcopy | Rdot | Rgemm |

Table 6: CUDA-accelerated MPBLAS (double-double) routines.

| Rgemm | Rsyrk |

Table 7: Supported MPLAPACK real driver routines.

| Rgesv | Rgesvx | Rgbsv | Rgbsvx | Rgtsv | Rgtsvx | Rposv | Rposvx | Rppsv | Rppsvx |

| Rpbsv | Rpbsvx | Rptsv | Rptsvx | Rsysv | Rsysvx | Rspsv | Rspsvx | ||

| Rgels | Rgelsy | Rgelss | Rgelsd | ||||||

| Rgglse | Rggglm | ||||||||

| Rsyev | Rsyevd | Rsyevx | Rsyevr | Rspev | Rspevd | Rspevx | Rsbev | Rsbevd | Rsbevx |

| Rstev | Rstevd | Rstevx | Rstevr | Rgees | Rgeesx | Rgeev | Rgeevx | Rgesvd | Rgesdd |

| Rsygv | Rsygvd | Rsygvx | Rspgv | Rspgvd | Rspgvx | Rsbgv | Rsbgvd | Rsbgvx | Rgges |

| Rggesx | Rggev | Rggevx | Rggsvd |

Table 8: Supported MPLAPACK complex driver routines.

| Cgesv | Cgesvx | Cgbsv | Cgbsvx | Cgtsv | Cgtsvx | Cposv | Cposvx | Cppsv | Cppsvx |

| Cpbsv | Cpbsvx | Cptsv | Cptsvx | Chesv | Chesvx | Csysv | Csysvx | Chpsv | Chpsvx |

| Cspsv | Cspsvx | ||||||||

| Cgels | Cgelsy | Cgelss | Cgelsd | ||||||

| Cgglse | Cggglm | ||||||||

| Cheev | Cheevd | Cheevx | Cheevr | Chpev | Chpevd | Chpevx | Chbev | Chbevd | Chbevx |

| Cgees | Cgeesx | Cgeev | Cgeevx | Cgesvd | Cgesdd | ||||

| Chegv | Chegvd | Chegvx | Chpgv | Chpgvd | Chpgvx | Chbgv | Chbgvd | Chbgvx | Cgges |

| Cggesx | Cggev | Cggevx | Cggsvd |

Table 9: Supported MPLAPACK real computational routines.

| Rgetrf | Rgetrs | Rgecon | Rgerfs | Rgetri | Rgeequ | Rgbtrf | Rgbtrs | Rgbcon | Rgbrfs |

| Rgbequ | Rgttrf | Rgttrs | Rgtcon | Rgtrfs | Rpotrf | Rpotrs | Rpocon | Rporfs | Rpotri |

| Rpoequ | Rpptrf | Rpptrs | Rppcon | Rpprfs | Rpptri | Rppequ | Rpbtrf | Rpbtrs | Rpbcon |

| Rpbrfs | Rpbequ | Rpttrf | Rpttrs | Rptcon | Rptrfs | Rsytrf | Rsytrs | Rsycon | Rsyrfs |

| Rsytri | Rsptrf | Rsptrs | Rspcon | Rsprfs | Rsptri | Rtrtrs | Rtrcon | Rtrrfs | Rtrtri |

| Rtptrs | Rtpcon | Rtprfs | Rtptri | Rtbtrs | Rtbcon | Rtbrfs | |||

| Rgeqp3 | Rgeqrf | Rorgqr | Rormqr | Rgelqf | Rorglq | Rormlq | Rgeqlf | Rorgql | Rormql |

| Rgerqf | Rorgrq | Rormrq | Rtzrzf | Rormrz | |||||

| Rsytrd | Rsptrd | Rsbtrd | Rorgtr | Rormtr | Ropgtr | Ropmtr | Rsteqr | Rsterf | Rstedc |

| Rstegr | Rstebz | Rstein | Rpteqr | ||||||

| Rgehrd | Rgebal | Rgebak | Rorghr | Rormhr | Rhseqr | Rhsein | Rtrevc | Rtrexc | Rtrsyl |

| Rtrsna | Rtrsen | ||||||||

| Rgebrd | Rgbbrd | Rorgbr | Rormbr | Rbdsqr | Rbdsdc | ||||

| Rsygst | Rspgst | Rpbstf | Rsbgst | ||||||

| Rgghrd | Rggbal | Rggbak | Rhgeqz | Rtgevc | Rtgexc | Rtgsyl | Rtgsna | Rtgsen | |

| Rggsvp | Rtgsja |

Table 10: Supported MPLAPACK complex computational routines.

| Cgetrf | Cgetrs | Cgecon | Cgerfs | Cgetri | Cgeequ | Cgbtrf | Cgbtrs | Cgbcon | Cgbrfs |

| Cgbequ | Cgttrf | Cgttrs | Cgtcon | Cgtrfs | Cpotrf | Cpotrs | Cpocon | Cporfs | Cpotri |

| Cpoequ | Cpptrf | Cpptrs | Cppcon | Cpprfs | Cpptri | Cppequ | Cpbtrf | Cpbtrs | Cpbcon |

| Cpbrfs | Cpbequ | Cpttrf | Cpttrs | Cptcon | Cptrfs | Chetrf | Chetrs | Checon | Cherfs |

| Chetri | Csytrf | Csytrs | Csycon | Csyrfs | Csytri | Chptrf | Chptrs | Chpcon | Chprfs |

| Chptri | Csptrf | Csptrs | Cspcon | Csprfs | Csptri | Ctrtrs | Ctrcon | Ctrrfs | Ctrtri |

| Ctptrs | Ctpcon | Ctprfs | Ctptri | Ctbtrs | Ctbcon | Ctbrfs | |||

| Cgeqp3 | Cgeqrf | Cungqr | Cunmqr | Cgelqf | Cunglq | Cunmlq | Cgeqlf | Cungql | Cunmql |

| Cgerqf | Cungrq | Cunmrq | Ctzrzf | Cunmrz | |||||

| Chetrd | Chptrd | Chbtrd | Cungtr | Cunmtr | Cupgtr | Cupmtr | Csteqr | Cstedc | Cstegr |

| Cstein | Cpteqr | ||||||||

| Cgehrd | Cgebal | Cgebak | Cunghr | Cunmhr | Chseqr | Chsein | Ctrevc | Ctrexc | Ctrsyl |

| Ctrsna | Ctrsen | ||||||||

| Cgebrd | Cgbbrd | Cungbr | Cunmbr | Cbdsqr | |||||

| Chegst | Chpgst | Cpbstf | Chbgst | ||||||

| Cgghrd | Cggbal | Cggbak | Chgeqz | Ctgevc | Ctgexc | Ctgsyl | Ctgsna | Ctgsen | |

| Cggsvp | Ctgsja |

7 How to use MPBLAS and MPLAPACK

7.1 Multiprecision Types

We use seven kinds of floating-point formats. The multiple-precision types are listed in Table 3. For GMP, we use the built-in mpf_class for the real type and our own mpc_class for the complex type. For MPFR, we use a modified version of mpfrc++ (the final LGPL version), which can be treated like the double or float type, for the real type, and mpcomplex.h, which we developed using MPFR and MPC, for the complex type. For double-double and quad-double, we use dd_real and qd_real, respectively, for the real types, and dd_complex and qd_complex, which we developed, for the complex types.

For extended double, we use _Float64x for all Intel and AMD environments. For binary128, we use _Float128 for all OSes and CPUs. For complex types for double, _Float64x and _Float128, we use standard complex implementation of C++. Using __float80, __float128, or long double is strongly discouraged as they are not tested and not portable.

Also, we define mplapackint as the integer type of MPLAPACK: a 64-bit signed integer that can address all the memory space regardless of which data model the environment employs (LLP64 or LP64). On Windows, however, mplapackint is still a 32-bit signed integer.

7.2 General pitfall substituting floating point numbers to multiple precision numbers

Suppose we assign a value to a multiple-precision variable alpha as follows:

alpha = 1.2;

Such code is problematic. GCC first converts “1.2” to double precision and only then assigns it to alpha, so the exact value is rounded to double precision at compile time. Thus, when we print this number to 64 decimal digits using the default precision of GMP in MPBLAS (512 bits = 153 decimal digits), alpha becomes

1.1999999999999999555910790149937383830547332763671875000000000000e+00

This may be an undesired result. To avoid this behavior, we should code as follows:

alpha = "1.2";

Alternatively, use the constructor explicitly as follows:

alpha = mpf_class("1.2");

Then, the output becomes as desired.

1.2000000000000000000000000000000000000000000000000000000000000000e+00

For GMP, to assign a complex number to beta, we should code as follows:

beta = mpc_class(mpf_class("1.2"), mpf_class("1.2"));

For real numbers, we verified that all precisions except _Float128 and _Float64x have a constructor from a string, as follows:

alpha = "1.2";

For complex numbers, we have to use the real constructors explicitly, except for _Float128 and _Float64x, as follows:

beta = dd_complex(dd_real("1.2"), dd_real("1.2"));

7.3 How to use MPBLAS

This subsection describes the basic usage of MPBLAS, OpenMP accelerated MPBLAS and CUDA accelerated MPBLAS.

7.3.1 How to use reference MPBLAS

The API of MPBLAS is very similar to the original BLAS and CBLAS. However, unlike CBLAS, we always use a one-dimensional array as a column-major type matrix in MPBLAS.

Here, we show how to use MPBLAS with two examples: Rgemm and Cgemm. Other routines can be used similarly. First, we show how to use Rgemm, which corresponds to DGEMM of BLAS.

Following is the prototype definition of the multiple-precision version of matrix-matrix multiplication (Rgemm) of the MPFR version.

void Rgemm(const char *transa, const char *transb, mplapackint const m, mplapackint const n, mplapackint const k, mpreal const alpha, mpreal *a, mplapackint const lda, mpreal *b, mplapackint const ldb, mpreal const beta, mpreal*c, mplapackint const ldc);

Moreover, the following is the definition part of the original DGEMM:

SUBROUTINE DGEMM(TRANSA,TRANSB,M,N,K,ALPHA,A,LDA,B,LDB,BETA,C,LDC)

* .. Scalar Arguments ..

DOUBLE PRECISION ALPHA,BETA

INTEGER K,LDA,LDB,LDC,M,N

CHARACTER TRANSA,TRANSB

* ..

* .. Array Arguments ..

DOUBLE PRECISION A(LDA,*),B(LDB,*),C(LDC,*)

There is a clear correspondence between variables in the C++ prototype of Rgemm and variables in the header of DGEMM.

- CHARACTER → const char *
- INTEGER → mplapackint
- DOUBLE PRECISION A(LDA, *) → mpreal *a

We can see such correspondences for other MPBLAS and MPLAPACK routines.

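Then, let us see how we multiply matrices using the MPFR version of Rgemm,

$$C \leftarrow \alpha A B + \beta C,$$

where $A$, $B$, and $C$ are matrices, and $\alpha$ and $\beta$ are scalars. Let us choose

$$A = \begin{pmatrix} 1 & 8 & 3 \\ 0 & 10 & 8 \\ 9 & -5 & -1 \end{pmatrix}, \quad B = \begin{pmatrix} 9 & 8 & 3 \\ 3 & -11 & 0 \\ -8 & 6 & 1 \end{pmatrix}, \quad C = \begin{pmatrix} 3 & 3 & 0 \\ 8 & 4 & 8 \\ 6 & 1 & -2 \end{pmatrix},$$

and $\alpha = 3$ and $\beta = -2$.

The answer is:

$$\alpha A B + \beta C = \begin{pmatrix} 21 & -192 & 18 \\ -118 & -194 & 8 \\ 210 & 361 & 82 \end{pmatrix}.$$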

The list is the following.

First, we must include mpblas_mpfr.h to use MPFR. The printmat function prints a matrix in Octave/Matlab format (lines 4 to 22). We set the fraction to 256 bits for mpfrc++ in lines 28 to 29, so the accuracy of MPFR reals is set to 77 decimal digits. We allocate each matrix as a one-dimensional array (lines 31 to 33) and then set the matrices $A$, $B$, and $C$. We always input the matrix into the array in row-major format (lines 36 to 47). The matrix-matrix multiplication Rgemm is called in line 58. One can type in the list and save it as Rgemm_mpfr.cpp, or find it in the /home/docker/MPLAPACK/share/examples/mpblas/ directory. One can then compile it in the Docker environment as follows:

$ g++ -O2 -I/home/docker/MPLAPACK/include -I/home/docker/MPLAPACK/include/mplapack Rgemm_mpfr.cpp -Wl,--rpath=/home/docker/MPLAPACK/lib -L/home/docker/MPLAPACK/lib -lmpblas_mpfr -lgmp -lmpfr -lmpc

If the compilation succeeds, one can run the program as follows:

$ ./a.out

# Rgemm demo...

A =[ [ 1.00e+00, 8.00e+00, 3.00e+00]; [ 0.00e+00, 1.00e+01, 8.00e+00]; [ 9.00e+00, -5.00e+00, -1.00e+00] ]

B =[ [ 9.00e+00, 8.00e+00, 3.00e+00]; [ 3.00e+00, -1.10e+01, 0.00e+00]; [ -8.00e+00, 6.00e+00, 1.00e+00] ]

C =[ [ 3.00e+00, 3.00e+00, 0.00e+00]; [ 8.00e+00, 4.00e+00, 8.00e+00]; [ 6.00e+00, 1.00e+00, -2.00e+00] ]

alpha = 3.000e+00

beta = -2.000e+00

ans =[ [ 2.10e+01, -1.92e+02, 1.80e+01]; [ -1.18e+02, -1.94e+02, 8.00e+00]; [ 2.10e+02, 3.61e+02, 8.20e+01] ]

#please check by Matlab or Octave following and ans above

alpha * A * B + beta * C

One can check the result by comparing it with the result from Octave.

$ ./a.out | octave

octave: X11 DISPLAY environment variable not set

octave: disabling GUI features

A =

1 8 3

0 10 8

9 -5 -1

B =

9 8 3

3 -11 0

-8 6 1

C =

3 3 0

8 4 8

6 1 -2

alpha = 3

beta = -2

ans =

21 -192 18

-118 -194 8

210 361 82

ans =

21 -192 18

-118 -194 8

210 361 82

In this case, we see that the results below the two “ans =” lines are the same. The Rgemm result is thus confirmed to be correct up to 16 decimal digits.

Let us see how we can multiply matrices using _Float128 (binary128). The list is the following.

We do not show the output since the output is almost the same as the MPFR version.

The _Float128 version of the program is almost the same as the MPFR version. However, the printnum part is a bit complicated because there are at least three kinds of binary128 support depending on CPUs and OSes: (i) GCC only supports __float128 using libquadmath (Windows, macOS); (ii) GCC supports _Float128 directly, and there is libc support as well (Linux amd64); (iii) long double is already binary128, and no special support is necessary (AArch64). For (i), we internally define ___MPLAPACK_WANT_LIBQUADMATH___; for (ii), ___MPLAPACK__FLOAT128_ONLY___; and for (iii), ___MPLAPACK_LONGDOUBLE_IS_BINARY128___. Users can use these internal definitions to write a portable program.

As a summary, we show how we compile and run binary64, FP80, binary128, double-double, quad-double, GMP and MPFR versions of Rgemm demo programs in /home/docker/mplapack/examples/mpblas as follows:

-

•

binary64 (double) version

$ g++ -O2 -I/home/docker/MPLAPACK/include -I/home/docker/MPLAPACK/include/mplapack \ Rgemm_double.cpp -Wl,--rpath=/home/docker/MPLAPACK/lib \ -L/home/docker/MPLAPACK/lib -lmpblas_double $ ./a.out

-

•

FP80 (extended double) version

$ g++ -O2 -I/home/docker/MPLAPACK/include -I/home/docker/MPLAPACK/include/mplapack \ Rgemm__Float64x.cpp -Wl,--rpath=/home/docker/MPLAPACK/lib \ -L/home/docker/MPLAPACK/lib -lmpblas__Float64x $ ./a.out

-

•

binary128 version

$ g++ -O2 -I/home/docker/MPLAPACK/include -I/home/docker/MPLAPACK/include/mplapack \ Rgemm__Float128.cpp -Wl,--rpath=/home/docker/MPLAPACK/lib \ -L/home/docker/MPLAPACK/lib -lmpblas__Float128 $ ./a.out

or (on macOS, mingw64 and CentOS7 amd64)

$ g++ -O2 -I/home/docker/MPLAPACK/include -I/home/docker/MPLAPACK/include/mplapack \ Rgemm__Float128.cpp -Wl,--rpath=/home/docker/MPLAPACK/lib \ -L/home/docker/MPLAPACK/lib -lmpblas__Float128 -lquadmath $ ./a.out

-

•

double-double version

$ g++ -O2 -I/home/docker/MPLAPACK/include -I/home/docker/MPLAPACK/include/mplapack \ Rgemm_dd.cpp -Wl,--rpath=/home/docker/MPLAPACK/lib \ -L/home/docker/MPLAPACK/lib -lmpblas_dd -lqd $ ./a.out

-

•

quad-double version

$ g++ -O2 -I/home/docker/MPLAPACK/include -I/home/docker/MPLAPACK/include/mplapack \ Rgemm_qd.cpp -Wl,--rpath=/home/docker/MPLAPACK/lib \ -L/home/docker/MPLAPACK/lib -lmpblas_qd -lqd $ ./a.out

-

•

GMP version

$ g++ -O2 -I/home/docker/MPLAPACK/include -I/home/docker/MPLAPACK/include/mplapack \ Rgemm_gmp.cpp -Wl,--rpath=/home/docker/MPLAPACK/lib \ -L/home/docker/MPLAPACK/lib -lmpblas_gmp -lgmpxx -lgmp $ ./a.out

-

•

MPFR version

$ g++ -O2 -I/home/docker/MPLAPACK/include -I/home/docker/MPLAPACK/include/mplapack \ Rgemm_mpfr.cpp -Wl,--rpath=/home/docker/MPLAPACK/lib \ -L/home/docker/MPLAPACK/lib -lmpblas_mpfr -lmpfr -lmpc -lgmp $ ./a.out

Next, we show how to use Cgemm, the complex version of gemm. This is not just an example of how to use Cgemm, but also an example of how to input floating point numbers.

The following is the prototype definition of Cgemm, the multiple-precision complex matrix-matrix multiplication, for the GMP version.

void Cgemm(const char *transa, const char *transb, mplapackint const m, mplapackint const n, mplapackint const k, mpc_class const alpha, mpc_class *a, mplapackint const lda, mpc_class *b, mplapackint const ldb, mpc_class const beta, mpc_class *c, mplapackint const ldc);

The following is the definition part of the original ZGEMM:

* SUBROUTINE ZGEMM(TRANSA,TRANSB,M,N,K,ALPHA,A,LDA,B,LDB,BETA,C,LDC)

*

* .. Scalar Arguments ..

* COMPLEX*16 ALPHA,BETA

* INTEGER K,LDA,LDB,LDC,M,N

* CHARACTER TRANSA,TRANSB

* ..

* .. Array Arguments ..

* COMPLEX*16 A(LDA,*),B(LDB,*),C(LDC,*)

As in the case of Rgemm, there is a clear correspondence between the variables in the C++ prototype of Cgemm and the variables in the header of ZGEMM.

-

•

CHARACTER → const char *

-

•

INTEGER → mplapackint

-

•

COMPLEX*16 A(LDA, *) → mpc_class *a

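Let us see how we can multiply matrices using GMP. We compute

$$C \leftarrow \alpha A B + \beta C,$$

where

$$A = \begin{pmatrix} 1-i & 8+2.2i & -10i \\ 2 & 10 & 8.1+2.2i \\ -9+3i & -5+3i & -1 \end{pmatrix}, \quad B = \begin{pmatrix} 9 & 8-0.01i & 3+1.001i \\ 3-8i & -11+0.1i & 8+0.00001i \\ -8+i & 6 & 1.1+i \end{pmatrix},$$

$$C = \begin{pmatrix} 3+i & -3+9.99i & -9-11i \\ 8-i & 4+4.44i & 8+9i \\ 6 & -1 & -2+i \end{pmatrix},$$

and $\alpha = 3-1.2i$, and $\beta = -2-2i$.

The answer is:

$$\alpha A B + \beta C = \begin{pmatrix} 194.12-39.92i & -324.402-191.934i & 235.52423-39.7979336i \\ -182.4-259.7i & -118.304+80.14i & 295.15652-107.6857i \\ -114+289.8i & -79.102+1.694i & -179.71995+156.296486i \end{pmatrix} \tag{1}$$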

Following is the program list for multiplying complex matrices using GMP.

First, we must include mpblas_gmp.h to use GMP. The printmat function prints a matrix in Octave/Matlab format (lines 7 to 46). We use the default GMP complex precision of 153 decimal digits. We allocate the matrices as one-dimensional arrays (lines 51 to 54). We use mpc_class for complex values. Then we set the matrices A, B, and C. We always input a matrix into an array in column-major format (lines 36 to 47). The matrix-matrix multiplication Cgemm is called in line 58. One can type in the list and save it as Cgemm_gmp.cpp, or find the file in the /home/docker/mplapack/examples/mpblas/ directory. Then, one can compile it in the Docker environment as follows:

$ g++ -O2 -I/home/docker/MPLAPACK/include -I/home/docker/MPLAPACK/include/mplapack \ Cgemm_gmp.cpp -Wl,--rpath=/home/docker/MPLAPACK/lib -L/home/docker/MPLAPACK/lib \ -lmpblas_gmp -lgmpxx -lgmp

Then, you can run as follows:

$ ./a.out # Cgemm demo... a =[ [ +1.0000000000000000000000000000000000000000000000000000000000000000e+00-1.0000000000000000000000000000000000000000000000000000000000000000e+00i, +8.0000000000000000000000000000000000000000000000000000000000000000e+00+2.2000000000000001776356839400250464677810668945312500000000000000e+00i, +0.0000000000000000000000000000000000000000000000000000000000000000e+00-1.0000000000000000000000000000000000000000000000000000000000000000e+01i]; [ +2.0000000000000000000000000000000000000000000000000000000000000000e+00+0.0000000000000000000000000000000000000000000000000000000000000000e+00i, +1.0000000000000000000000000000000000000000000000000000000000000000e+01+0.0000000000000000000000000000000000000000000000000000000000000000e+00i, +8.0999999999999996447286321199499070644378662109375000000000000000e+00+2.2000000000000001776356839400250464677810668945312500000000000000e+00i]; [ -9.0000000000000000000000000000000000000000000000000000000000000000e+00+3.0000000000000000000000000000000000000000000000000000000000000000e+00i, -5.0000000000000000000000000000000000000000000000000000000000000000e+00+3.0000000000000000000000000000000000000000000000000000000000000000e+00i, -1.0000000000000000000000000000000000000000000000000000000000000000e+00+0.0000000000000000000000000000000000000000000000000000000000000000e+00i] ] b =[ [ +9.0000000000000000000000000000000000000000000000000000000000000000e+00+0.0000000000000000000000000000000000000000000000000000000000000000e+00i, +8.0000000000000000000000000000000000000000000000000000000000000000e+00-1.0000000000000000208166817117216851329430937767028808593750000000e-02i, +3.0000000000000000000000000000000000000000000000000000000000000000e+00+1.0009999999999998898658759571844711899757385253906250000000000000e+00i]; [ +3.0000000000000000000000000000000000000000000000000000000000000000e+00-8.0000000000000000000000000000000000000000000000000000000000000000e+00i, 
-1.1000000000000000000000000000000000000000000000000000000000000000e+01+1.0000000000000000555111512312578270211815834045410156250000000000e-01i, +8.0000000000000000000000000000000000000000000000000000000000000000e+00+1.0000000000000000818030539140313095458623138256371021270751953125e-05i]; [ -8.0000000000000000000000000000000000000000000000000000000000000000e+00+1.0000000000000000000000000000000000000000000000000000000000000000e+00i, +6.0000000000000000000000000000000000000000000000000000000000000000e+00+0.0000000000000000000000000000000000000000000000000000000000000000e+00i, +1.1000000000000000888178419700125232338905334472656250000000000000e+00+1.0000000000000000000000000000000000000000000000000000000000000000e+00i] ] c =[ [ +3.0000000000000000000000000000000000000000000000000000000000000000e+00+1.0000000000000000000000000000000000000000000000000000000000000000e+00i, -3.0000000000000000000000000000000000000000000000000000000000000000e+00+9.9900000000000002131628207280300557613372802734375000000000000000e+00i, -9.0000000000000000000000000000000000000000000000000000000000000000e+00-1.1000000000000000000000000000000000000000000000000000000000000000e+01i]; [ +8.0000000000000000000000000000000000000000000000000000000000000000e+00-1.0000000000000000000000000000000000000000000000000000000000000000e+00i, +4.0000000000000000000000000000000000000000000000000000000000000000e+00+4.4400000000000003907985046680551022291183471679687500000000000000e+00i, +8.0000000000000000000000000000000000000000000000000000000000000000e+00+9.0000000000000000000000000000000000000000000000000000000000000000e+00i]; [ +6.0000000000000000000000000000000000000000000000000000000000000000e+00+0.0000000000000000000000000000000000000000000000000000000000000000e+00i, -1.0000000000000000000000000000000000000000000000000000000000000000e+00+0.0000000000000000000000000000000000000000000000000000000000000000e+00i, 
-2.0000000000000000000000000000000000000000000000000000000000000000e+00+1.0000000000000000000000000000000000000000000000000000000000000000e+00i] ] alpha = +3.0000000000000000000000000000000000000000000000000000000000000000e+00-1.1999999999999999555910790149937383830547332763671875000000000000e+00i beta = -2.0000000000000000000000000000000000000000000000000000000000000000e+00-2.0000000000000000000000000000000000000000000000000000000000000000e+00i ans =[ [ +1.9412000000000000429878355134860610085447466221730208764814304152e+02-3.9919999999999997415400798672635510784913059003625879561714777377e+01i, -3.2440199999999999789655757975737052220306876582624715712208640779e+02-1.9193400000000000968240765342187614821595591023640492250541356027e+02i, +2.3552422999999999997259326805967153749369165591598754371335859085e+02-3.9797933599999995135557075099860818051923306637592336735756250096e+01i]; [ -1.8239999999999999016342400182111297296053289861415149007714347172e+02-2.5970000000000000937028232783632108284631549553475262574071520758e+02i, -1.1830400000000000489791540658757190133827036314312018406665329523e+02+8.0140000000000003122918590392487108980814301859549537116009666066e+01i, +2.9515651999999999967519852739003531813778936267996503629002521457e+02-1.0768569999999999661823612607559887657445872205333970782644834681e+02i]; [ -1.1400000000000000333066907387546962127089500427246093750000000000e+02+2.8979999999999999715782905695959925651550292968750000000000000000e+02i, -7.9101999999999999661257077399056923910599691859337230298536113865e+01+1.6939999999999989081927997958132429788216147581137981441043024206e+00i, -1.7971994999999999910681342224060695849998775453588962690525666214e+02+1.5629648599999999952610174635322244325683964957536896708915619612e+02i] ] #please check by Matlab or Octave following and ans above alpha * a * b + beta * c

This output is hard to read; passing it to Octave gives more readable results.

$ ./a.out | octave

a =

1.00000 - 1.00000i 8.00000 + 2.20000i 0.00000 - 10.00000i

2.00000 + 0.00000i 10.00000 + 0.00000i 8.10000 + 2.20000i

-9.00000 + 3.00000i -5.00000 + 3.00000i -1.00000 + 0.00000i

b =

9.0000 + 0.0000i 8.0000 - 0.0100i 3.0000 + 1.0010i

3.0000 - 8.0000i -11.0000 + 0.1000i 8.0000 + 0.0000i

-8.0000 + 1.0000i 6.0000 + 0.0000i 1.1000 + 1.0000i

c =

3.0000 + 1.0000i -3.0000 + 9.9900i -9.0000 - 11.0000i

8.0000 - 1.0000i 4.0000 + 4.4400i 8.0000 + 9.0000i

6.0000 + 0.0000i -1.0000 + 0.0000i -2.0000 + 1.0000i

alpha = 3.0000 - 1.2000i

beta = -2 - 2i

ans =

194.120 - 39.920i -324.402 - 191.934i 235.524 - 39.798i

-182.400 - 259.700i -118.304 + 80.140i 295.157 - 107.686i

-114.000 + 289.800i -79.102 + 1.694i -179.720 + 156.296i

ans =

194.120 - 39.920i -324.402 - 191.934i 235.524 - 39.798i

-182.400 - 259.700i -118.304 + 80.140i 295.157 - 107.686i

-114.000 + 289.800i -79.102 + 1.694i -179.720 + 156.296i

One can see that the input and output look wrong in the raw output. Nevertheless, the output is correct up to 153 decimal digits.

Let us explain the reason. We input many values, but let us look at alpha. The MPBLAS GMP output is the following:

alpha = +3.0000000000000000000000000000000000000000000000000000000000000000e+00-1.1999999999999999555910790149937383830547332763671875000000000000e+00i

The imaginary part is correct only up to 16 decimal digits. Again, this is correct behavior. First, the compiler reads alpha = mpc_class(3.0,-1.2); and converts the literal “-1.2” to a double-precision number. We cannot represent “1.2” exactly in double precision, since its binary representation is an infinitely repeating fraction, i.e., $1.2 = 1.0011\,0011\,0011\ldots_2$. Then alpha is initialized by mpc_class with this rounded value. Thus, the input and output look wrong. We can work around this behavior by enclosing the numbers in mpf_class("") so that they are read exactly up to the specified precision, as follows:

alpha = mpc_class(mpf_class("3.0"), mpf_class("-1.2"));

Then, the alpha becomes as follows:

alpha = +3.0000000000000000000000000000000000000000000000000000000000000000e+00-1.2000000000000000000000000000000000000000000000000000000000000000e+00i

Even though “-1.2000...0e+00” looks exact, there is still a tiny difference from the mathematically rigorous “-1.2”, because mpf_class also stores only a finite number of binary digits.

Finally, we show a program and its result with this truncation problem fixed as follows:
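The rounding of the literal can be observed without MPLAPACK. The following small sketch prints a binary64 value with many decimal digits; the helper name to_decimal is hypothetical, and the exact trailing digits assume a C library that prints the full decimal expansion of a double (glibc does).

```cpp
#include <cassert>
#include <cstdio>
#include <string>

// Formats x in scientific notation with `digits` digits after the point.
// NOTE: to_decimal is a hypothetical helper for illustration only.
std::string to_decimal(double x, int digits) {
    assert(digits >= 0 && digits <= 100);
    char buf[160]; // large enough for sign, 101 digits and the exponent
    std::snprintf(buf, sizeof buf, "%.*e", digits, x);
    return std::string(buf);
}
```

Calling to_decimal(-1.2, 64) shows the same digits as the imaginary part of alpha printed by the GMP version above, which confirms that the error comes from the double-precision literal and not from MPBLAS.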

$ ./a.out # Cgemm demo... a =[ [ +1.0000000000000000000000000000000000000000000000000000000000000000e+00-1.0000000000000000000000000000000000000000000000000000000000000000e+00i, +8.0000000000000000000000000000000000000000000000000000000000000000e+00+2.2000000000000000000000000000000000000000000000000000000000000000e+00i, +0.0000000000000000000000000000000000000000000000000000000000000000e+00-1.0000000000000000000000000000000000000000000000000000000000000000e+01i]; [ +2.0000000000000000000000000000000000000000000000000000000000000000e+00+0.0000000000000000000000000000000000000000000000000000000000000000e+00i, +1.0000000000000000000000000000000000000000000000000000000000000000e+01+0.0000000000000000000000000000000000000000000000000000000000000000e+00i, +8.1000000000000000000000000000000000000000000000000000000000000000e+00+2.2000000000000000000000000000000000000000000000000000000000000000e+00i]; [ -9.0000000000000000000000000000000000000000000000000000000000000000e+00+3.0000000000000000000000000000000000000000000000000000000000000000e+00i, -5.0000000000000000000000000000000000000000000000000000000000000000e+00+3.0000000000000000000000000000000000000000000000000000000000000000e+00i, -1.0000000000000000000000000000000000000000000000000000000000000000e+00+0.0000000000000000000000000000000000000000000000000000000000000000e+00i] ] b =[ [ +9.0000000000000000000000000000000000000000000000000000000000000000e+00+0.0000000000000000000000000000000000000000000000000000000000000000e+00i, +8.0000000000000000000000000000000000000000000000000000000000000000e+00-1.0000000000000000000000000000000000000000000000000000000000000000e-02i, +3.0000000000000000000000000000000000000000000000000000000000000000e+00+1.0010000000000000000000000000000000000000000000000000000000000000e+00i]; [ +3.0000000000000000000000000000000000000000000000000000000000000000e+00-8.0000000000000000000000000000000000000000000000000000000000000000e+00i, 
-1.1000000000000000000000000000000000000000000000000000000000000000e+01+1.0000000000000000000000000000000000000000000000000000000000000000e-01i, +8.0000000000000000000000000000000000000000000000000000000000000000e+00+1.0000000000000000000000000000000000000000000000000000000000000000e-05i]; [ -8.0000000000000000000000000000000000000000000000000000000000000000e+00+1.0000000000000000000000000000000000000000000000000000000000000000e+00i, +6.0000000000000000000000000000000000000000000000000000000000000000e+00+0.0000000000000000000000000000000000000000000000000000000000000000e+00i, +1.1000000000000000000000000000000000000000000000000000000000000000e+00+1.0000000000000000000000000000000000000000000000000000000000000000e+00i] ] c =[ [ +3.0000000000000000000000000000000000000000000000000000000000000000e+00+1.0000000000000000000000000000000000000000000000000000000000000000e+00i, -3.0000000000000000000000000000000000000000000000000000000000000000e+00+9.9900000000000000000000000000000000000000000000000000000000000000e+00i, -9.0000000000000000000000000000000000000000000000000000000000000000e+00-1.1000000000000000000000000000000000000000000000000000000000000000e+01i]; [ +8.0000000000000000000000000000000000000000000000000000000000000000e+00-1.0000000000000000000000000000000000000000000000000000000000000000e+00i, +4.0000000000000000000000000000000000000000000000000000000000000000e+00+4.4400000000000000000000000000000000000000000000000000000000000000e+00i +8.0000000000000000000000000000000000000000000000000000000000000000e+00+9.0000000000000000000000000000000000000000000000000000000000000000e+00i]; [ +6.0000000000000000000000000000000000000000000000000000000000000000e+00+0.0000000000000000000000000000000000000000000000000000000000000000e+00i, -1.0000000000000000000000000000000000000000000000000000000000000000e+00+0.0000000000000000000000000000000000000000000000000000000000000000e+00i 
-2.0000000000000000000000000000000000000000000000000000000000000000e+00+1.0000000000000000000000000000000000000000000000000000000000000000e+00i] ] alpha = +3.0000000000000000000000000000000000000000000000000000000000000000e+00-1.2000000000000000000000000000000000000000000000000000000000000000e+00i beta = -2.0000000000000000000000000000000000000000000000000000000000000000e+00-2.0000000000000000000000000000000000000000000000000000000000000000e+00i beta = -2.0000000000000000000000000000000000000000000000000000000000000000e+00-2.0000000000000000000000000000000000000000000000000000000000000000e+00i ans =[ [ +1.9412000000000000000000000000000000000000000000000000000000000000e+02-3.9920000000000000000000000000000000000000000000000000000000000000e+01i, -3.2440200000000000000000000000000000000000000000000000000000000000e+02-1.9193400000000000000000000000000000000000000000000000000000000000e+02i, +2.3552423000000000000000000000000000000000000000000000000000000000e+02-3.9797933600000000000000000000000000000000000000000000000000000000e+01i]; [ -1.8240000000000000000000000000000000000000000000000000000000000000e+02-2.5970000000000000000000000000000000000000000000000000000000000000e+02i, -1.1830400000000000000000000000000000000000000000000000000000000000e+02+8.0140000000000000000000000000000000000000000000000000000000000000e+01i, +2.9515652000000000000000000000000000000000000000000000000000000000e+02-1.0768570000000000000000000000000000000000000000000000000000000000e+02i]; [ -1.1400000000000000000000000000000000000000000000000000000000000000e+02+2.8980000000000000000000000000000000000000000000000000000000000000e+02i, -7.9102000000000000000000000000000000000000000000000000000000000000e+01+1.6940000000000000000000000000000000000000000000000000000000000000e+00i, -1.7971995000000000000000000000000000000000000000000000000000000000e+02+1.5629648600000000000000000000000000000000000000000000000000000000e+02i] ] #please check by Matlab or Octave following and ans above alpha * a * b + 
beta * c

Unfortunately, such a workaround does not always exist. For example, _Float128 and _Float64x do not provide such a conversion from a string to a floating point number. Therefore, we should write a program to handle high-precision inputs in such a case.
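One possible way to handle such inputs is to parse the decimal string using arithmetic in the target type itself. The following is only a sketch under stated assumptions: parse_decimal is a hypothetical helper, T is assumed to be a built-in floating point type, exponent parts such as “e-05” are not handled, and the result is rounded only once as long as the digit string and the power of ten are both exactly representable in T.

```cpp
#include <cassert>
#include <cctype>

// Converts a plain decimal string such as "-1.2" or "8.1" to type T,
// using only arithmetic in T.
// NOTE: parse_decimal is a hypothetical helper, not part of MPLAPACK.
template <typename T> T parse_decimal(const char *s) {
    assert(s != nullptr);
    bool negative = false;
    if (*s == '+' || *s == '-') {
        negative = (*s == '-');
        s++;
    }
    T mantissa = 0; // all digits read as one integer
    T scale = 1;    // 10^(number of digits after the point)
    bool after_point = false;
    for (; *s != '\0'; s++) {
        if (*s == '.' && !after_point) {
            after_point = true;
        } else if (std::isdigit(static_cast<unsigned char>(*s))) {
            mantissa = mantissa * 10 + (*s - '0');
            if (after_point)
                scale = scale * 10;
        } else {
            break; // stop at the first unexpected character
        }
    }
    T value = mantissa / scale; // the only rounding step if both are exact
    return negative ? -value : value;
}
```

For example, parse_decimal<long double>("8.1") yields the long double nearest to 8.1 instead of a value first rounded to double; the same template could be instantiated with _Float128 where the compiler supports it.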

In any case, the calculations are correct up to specified precisions.

7.3.2 OpenMP accelerated MPBLAS

We provide a reference implementation and a simple OpenMP accelerated version of MPBLAS; the accelerated routines are shown in Table 5. Even though the number of optimized routines is small, the acceleration of Rgemm is significant as it impacts the overall performance. To link against the optimized version, we must add “_opt” to the MPBLAS library name as follows:

-

•

-lmpblas_mpfr → -lmpblas_mpfr_opt

-

•

-lmpblas_gmp → -lmpblas_gmp_opt

-

•

-lmpblas_double → -lmpblas_double_opt

-

•

-lmpblas__Float128 → -lmpblas__Float128_opt

-

•

-lmpblas_dd → -lmpblas_dd_opt

-

•

-lmpblas_qd → -lmpblas_qd_opt

-

•

-lmpblas__Float64x → -lmpblas__Float64x_opt

Besides, we must add the “-fopenmp” flag when compiling and linking.
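To illustrate why the “-fopenmp” flag matters, the following sketch parallelizes a straightforward gemm loop over the columns of C. It is not the implementation used in MPBLAS; gemm_columns_parallel is a hypothetical name, and only the non-transposed, column-major case is covered. Without “-fopenmp”, the pragma is ignored and the loop runs on a single thread.

```cpp
#include <cassert>

// C <- alpha*A*B + beta*C, column-major, no transposes. The columns of C
// are independent, so the outer loop can be shared among OpenMP threads.
// NOTE: hypothetical sketch, not the MPBLAS implementation.
template <typename T>
void gemm_columns_parallel(int m, int n, int k, T alpha, const T *a, int lda,
                           const T *b, int ldb, T beta, T *c, int ldc) {
    assert(lda >= m && ldb >= k && ldc >= m);
#pragma omp parallel for
    for (int j = 0; j < n; j++) {
        for (int i = 0; i < m; i++) {
            T sum = 0;
            for (int l = 0; l < k; l++)
                sum += a[i + l * lda] * b[l + j * ldb];
            c[i + j * ldc] = alpha * sum + beta * c[i + j * ldc];
        }
    }
}
```

Because each thread writes to different columns of C, no synchronization is needed inside the loop.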

We can compile the demo program for the MPFR version and link it against the optimized version of MPBLAS.

$ g++ -fopenmp -O2 -I/home/docker/MPLAPACK/include \ -I/home/docker/MPLAPACK/include/mplapack \ Rgemm_mpfr.cpp -Wl,--rpath=/home/docker/MPLAPACK/lib -L/home/docker/MPLAPACK/lib \ -lmpblas_mpfr_opt -lmpfr -lmpc -lgmp $ ./a.out ...

Performance comparison is presented in Section 10.

7.3.3 CUDA accelerated MPBLAS

We provide double-double versions of Rgemm and Rsyrk in CUDA [32]. These routines are usable on the Ampere and Volta generations of NVIDIA Tesla GPUs. First, we build the CUDA version on Docker as follows:

$ docker build -f Dockerfile_ubuntu20.04_cuda -t mplapack:ubuntu2004_cuda .

In this Dockerfile, we install MPLAPACK at /home/docker/MPLAPACK_CUDA. To run a shell in the Docker container with GPUs enabled, use:

$ docker run --gpus all -it mplapack:ubuntu2004_cuda /bin/bash

Finally, to link against the CUDA version, we compile the program as follows:

$ g++ -O2 -I/home/docker/MPLAPACK_CUDA/include \ -I/home/docker/MPLAPACK_CUDA/include/mplapack Rgemm_dd.cpp \ -Wl,--rpath=/home/docker/MPLAPACK_CUDA/lib -L/home/docker/MPLAPACK_CUDA/lib \ -lmpblas_dd_cuda -lmpblas_dd -lqd \ -L/usr/local/cuda/lib64/ -lcudart $ ./a.out ...

As described in [32], using GPUs significantly improves the performance of the double-double versions of Rgemm and Rsyrk. We provide Rsyrk for the first time. A detailed benchmark is given in Section 10; for large matrices, we obtained 450-600 GFlops using A100 or V100.

7.4 How to use MPLAPACK

In this subsection, we describe the basic usage of MPLAPACK with examples. The API of MPLAPACK is very similar to the original LAPACK. We always use a one-dimensional array as a column-major type matrix in MPLAPACK. Unfortunately, we do not have an interface like LAPACKE at the moment, and we cannot specify row- or column-major orders in MPLAPACK.
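Since only column-major storage is available, a matrix that is naturally written row by row has to be rearranged before it is passed to a routine. The following is a minimal sketch of such a conversion in binary64; to_column_major is a hypothetical helper and not part of MPLAPACK.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Copies an m-by-n matrix given row by row into column-major storage with
// leading dimension m, i.e. element (i, j) ends up at index i + j * m.
// NOTE: to_column_major is a hypothetical helper for illustration only.
std::vector<double> to_column_major(const std::vector<double> &rows,
                                    int m, int n) {
    assert(m > 0 && n > 0);
    assert(rows.size() == static_cast<std::size_t>(m) * n);
    std::vector<double> a(rows.size());
    for (int i = 0; i < m; i++)
        for (int j = 0; j < n; j++)
            a[i + j * m] = rows[i * n + j];
    return a;
}
```

The resulting array can be passed as the matrix argument with the leading dimension set to m.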

7.4.1 Eigenvalues and eigenvectors of a real symmetric matrix (Rsyev)

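Let us show how we diagonalize a real symmetric matrix

$$A = \begin{pmatrix} 5 & 4 & 1 & 1 \\ 4 & 5 & 1 & 1 \\ 1 & 1 & 4 & 2 \\ 1 & 1 & 2 & 4 \end{pmatrix}$$

using GMP. Eigenvalues are $\lambda_1 = 1$, $\lambda_2 = 2$, $\lambda_3 = 5$, $\lambda_4 = 10$ and eigenvectors are

$$x_1 = (1, -1, 0, 0)^T, \quad x_2 = (0, 0, -1, 1)^T, \quad x_3 = (-1, -1, 2, 2)^T, \quad x_4 = (2, 2, 1, 1)^T,$$

and $A x_i = \lambda_i x_i$ for $i = 1, 2, 3, 4$ [33].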

Following is the prototype definition of GMP’s diagonalization of a symmetric matrix (Rsyev).

void Rsyev(const char *jobz, const char *uplo, mplapackint const n, mpf_class *a, mplapackint const lda, mpf_class *w, mpf_class *work, mplapackint const lwork, mplapackint &info);

And the following is the definition of the original DSYEV:

SUBROUTINE DSYEV( JOBZ, UPLO, N, A, LDA, W, WORK, LWORK, INFO )

*

* -- LAPACK driver routine --

* -- LAPACK is a software package provided by Univ. of Tennessee, --

* -- Univ. of California Berkeley, Univ. of Colorado Denver and NAG Ltd..--

*

* .. Scalar Arguments ..

CHARACTER JOBZ, UPLO

INTEGER INFO, LDA, LWORK, N

* ..

* .. Array Arguments ..

DOUBLE PRECISION A( LDA, * ), W( * ), WORK( * )

* ..

*

There are clear correspondences between variables in the C++ prototype of Rsyev and the header of DSYEV.

-

•

CHARACTER → const char *

-

•

INTEGER → mplapackint

-

•

DOUBLE PRECISION A(LDA, *) → mpf_class *a

-

•

DOUBLE PRECISION W(*) → mpf_class *w

-

•

DOUBLE PRECISION WORK(*) → mpf_class *work

The list is the following.

One can type in the list and save it as Rsyev_test_gmp.cpp, or find the file in the /home/docker/mplapack/examples/mplapack/03_SymmetricEigenproblems directory. Then, one can compile it in the Docker environment as follows:

$ g++ -O2 -I/home/docker/MPLAPACK/include -I/home/docker/MPLAPACK/include/mplapack \ Rsyev_test_gmp.cpp -Wl,--rpath=/home/docker/MPLAPACK/lib -L/home/docker/MPLAPACK/lib \ -lmplapack_gmp -lmpblas_gmp -lgmpxx -lgmp

Finally, you can run as follows:

$ ./a.out A =[ [ +5.0000000000000000e+00, +4.0000000000000000e+00, +1.0000000000000000e+00, +1.0000000000000000e+00]; [ +4.0000000000000000e+00, +5.0000000000000000e+00, +1.0000000000000000e+00, +1.0000000000000000e+00];] #eigenvalues w =[ [ +1.0000000000000000e+00]; [ +2.0000000000000000e+00]; [ +5.0000000000000000e+00]; [ +1.0000000000000000e+01] ] #eigenvecs U =[ [ +7.0710678118654752e-01, -1.3882760712710340e-155, -3.1622776601683793e-01, +6.3245553203367587e-01]; [ -7.0710678118654752e-01, -1.0249769098010974e-155, -3.1622776601683793e-01, +6.3245553203367587e-01] #you can check eigenvalues using octave/Matlab by: eig(A) #you can check eigenvectors using octave/Matlab by: U’*A*U

One can check the result by comparing it with the result computed by Octave.

$ ./a.out | octave

octave: X11 DISPLAY environment variable not set

octave: disabling GUI features

A =

5 4 1 1

4 5 1 1

1 1 4 2

1 1 2 4

w =

1

2

5

10

U =

0.70711 -0.00000 -0.31623 0.63246

-0.70711 -0.00000 -0.31623 0.63246

0.00000 -0.70711 0.63246 0.31623

0.00000 0.70711 0.63246 0.31623

ans =

1.00000

2.00000

5.00000

10.00000

ans =

1.0000e+00 -2.8639e-155 2.6715e-17 -5.3429e-17

-2.6070e-155 2.0000e+00 -4.1633e-18 -2.0817e-18

-6.7450e-17 1.7912e-16 5.0000e+00 8.5557e-17

-3.5824e-16 -1.3490e-16 1.1229e-16 1.0000e+01
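Besides forming U’*A*U in Octave, an eigenpair can be checked directly through the residual of A x = λ x. The following is a minimal binary64 sketch; eigen_residual is a hypothetical helper, and A is assumed to be stored column-major with leading dimension n.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Returns max_i |(A x - lambda x)_i| for a column-major n-by-n matrix A.
// NOTE: eigen_residual is a hypothetical helper for illustration only.
double eigen_residual(const std::vector<double> &a, int n,
                      const std::vector<double> &x, double lambda) {
    assert(n > 0 && a.size() == static_cast<std::size_t>(n) * n);
    assert(x.size() == static_cast<std::size_t>(n));
    double worst = 0.0;
    for (int i = 0; i < n; i++) {
        double ax_i = 0.0;
        for (int j = 0; j < n; j++)
            ax_i += a[i + j * n] * x[j];
        worst = std::max(worst, std::fabs(ax_i - lambda * x[i]));
    }
    return worst;
}
```

For the matrix A printed above, the vector (2, 2, 1, 1) with eigenvalue 10 gives a residual of exactly zero, because all intermediate values are small integers.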

As a summary, we show how we compile and run binary64, FP80, binary128, double-double, quad-double, GMP, and MPFR versions of Rsyev demo programs in

/home/docker/mplapack/examples/mplapack/03_SymmetricEigenproblems as follows:

-

•

binary64 (double) version

$ g++ -O2 -I/home/docker/MPLAPACK/include -I/home/docker/MPLAPACK/include/mplapack \ Rsyev_test_double.cpp -Wl,--rpath=/home/docker/MPLAPACK/lib \ -L/home/docker/MPLAPACK/lib -lmplapack_double -lmpblas_double $ ./a.out

-

•

FP80 (extended double) version

$ g++ -O2 -I/home/docker/MPLAPACK/include -I/home/docker/MPLAPACK/include/mplapack \ Rsyev_test__Float64x.cpp -Wl,--rpath=/home/docker/MPLAPACK/lib \ -L/home/docker/MPLAPACK/lib -lmplapack__Float64x -lmpblas__Float64x $ ./a.out

-

•

binary128 version

$ g++ -O2 -I/home/docker/MPLAPACK/include -I/home/docker/MPLAPACK/include/mplapack \ Rsyev_test__Float128.cpp -Wl,--rpath=/home/docker/MPLAPACK/lib \ -L/home/docker/MPLAPACK/lib \ -lmplapack__Float128 -lmpblas__Float128 $ ./a.out

or (on macOS, mingw64 and CentOS7 amd64)

$ g++ -O2 -I/home/docker/MPLAPACK/include -I/home/docker/MPLAPACK/include/mplapack \ Rsyev_test__Float128.cpp -Wl,--rpath=/home/docker/MPLAPACK/lib \ -L/home/docker/MPLAPACK/lib \ -lmplapack__Float128 -lmpblas__Float128 -lquadmath $ ./a.out

-

•

double-double version

$ g++ -O2 -I/home/docker/MPLAPACK/include -I/home/docker/MPLAPACK/include/mplapack \ Rsyev_test_dd.cpp -Wl,--rpath=/home/docker/MPLAPACK/lib -L/home/docker/MPLAPACK/lib \ -lmplapack_dd -lmpblas_dd -lqd $ ./a.out

-

•

quad-double version

$ g++ -O2 -I/home/docker/MPLAPACK/include -I/home/docker/MPLAPACK/include/mplapack \ Rsyev_test_qd.cpp -Wl,--rpath=/home/docker/MPLAPACK/lib -L/home/docker/MPLAPACK/lib \ -lmplapack_qd -lmpblas_qd -lqd $ ./a.out

-

•

GMP version

$ g++ -O2 -I/home/docker/MPLAPACK/include -I/home/docker/MPLAPACK/include/mplapack \ Rsyev_test_gmp.cpp -Wl,--rpath=/home/docker/MPLAPACK/lib -L/home/docker/MPLAPACK/lib \ -lmplapack_gmp -lmpblas_gmp -lgmpxx -lgmp $ ./a.out

-

•

MPFR version

$ g++ -O2 -I/home/docker/MPLAPACK/include -I/home/docker/MPLAPACK/include/mplapack \ Rsyev_test_mpfr.cpp -Wl,--rpath=/home/docker/MPLAPACK/lib -L/home/docker/MPLAPACK/lib \ -lmplapack_mpfr -lmpblas_mpfr -lmpfr -lmpc -lgmp $ ./a.out

7.4.2 Eigenvalues and eigenvectors of a complex Hermitian matrix (Cheev)

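Let us show how we diagonalize a complex Hermitian matrix

$$A = \begin{pmatrix} 2 & -i & 0 \\ i & 2 & 0 \\ 0 & 0 & 3 \end{pmatrix}$$

using GMP. Eigenvalues are $\lambda_1 = 1$, $\lambda_2 = 3$, $\lambda_3 = 3$ and eigenvectors are

$$x_1 = (-i, -1, 0)^T, \quad x_2 = (-i, 1, 0)^T, \quad x_3 = (0, 0, 1)^T,$$

and $A x_i = \lambda_i x_i$ for $i = 1, 2, 3$.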

The following is the prototype definition of GMP’s diagonalization of a Hermitian matrix (Cheev).

void Cheev(const char *jobz, const char *uplo, mplapackint const n, mpc_class *a, mplapackint const lda, mpf_class *w, mpc_class *work, mplapackint const lwork, mpf_class *rwork, mplapackint &info);

And the following is the definition of the original ZHEEV:

* SUBROUTINE ZHEEV( JOBZ, UPLO, N, A, LDA, W, WORK, LWORK, RWORK, * INFO ) * * .. Scalar Arguments .. * CHARACTER JOBZ, UPLO * INTEGER INFO, LDA, LWORK, N * .. * .. Array Arguments .. * DOUBLE PRECISION RWORK( * ), W( * ) * COMPLEX*16 A( LDA, * ), WORK( * )

There are clear correspondences between variables in the C++ prototype of Cheev and the header of ZHEEV.

-

•

CHARACTER → const char *

-

•

INTEGER → mplapackint

-

•

COMPLEX*16 A(LDA, *) → mpc_class *a

-

•

COMPLEX*16 WORK(*) → mpc_class *work

-

•

DOUBLE PRECISION W(*) → mpf_class *w

-

•

DOUBLE PRECISION RWORK(*) → mpf_class *rwork

The list is the following.

One can type in the list and save it as Cheev_test_gmp.cpp, or find the file in the /home/docker/mplapack/examples/mplapack/03_SymmetricEigenproblems directory. Then, one can compile it in the Docker environment as follows:

$ g++ -O2 -I/home/docker/MPLAPACK/include -I/home/docker/MPLAPACK/include/mplapack \ Cheev_test_gmp.cpp -Wl,--rpath=/home/docker/MPLAPACK/lib -L/home/docker/MPLAPACK/lib \ -lmplapack_gmp -lmpblas_gmp -lgmpxx -lgmp

Finally, you can run as follows:

A =[ [ +2.0000000000000000000000000000000000000000000000000000000000000000e+00+0.0000000000000000000000000000000000000000000000000000000000000000e+00i, +0.0000000000000000000000000000000000000000000000000000000000000000e+00-1.0000000000000000000000000000000000000000000000000000000000000000e+00i, +0.0000000000000000000000000000000000000000000000000000000000000000e+00+0.0000000000000000000000000000000000000000000000000000000000000000e+00i]; [ +0.0000000000000000000000000000000000000000000000000000000000000000e+00+1.0000000000000000000000000000000000000000000000000000000000000000e+00i, +2.0000000000000000000000000000000000000000000000000000000000000000e+00+0.0000000000000000000000000000000000000000000000000000000000000000e+00i, +0.0000000000000000000000000000000000000000000000000000000000000000e+00+0.0000000000000000000000000000000000000000000000000000000000000000e+00i]; [ +0.0000000000000000000000000000000000000000000000000000000000000000e+00+0.0000000000000000000000000000000000000000000000000000000000000000e+00i, +0.0000000000000000000000000000000000000000000000000000000000000000e+00+0.0000000000000000000000000000000000000000000000000000000000000000e+00i, +3.0000000000000000000000000000000000000000000000000000000000000000e+00+0.0000000000000000000000000000000000000000000000000000000000000000e+00i] ] #eigenvalues w =[ [ +1.0000000000000000000000000000000000000000000000000000000000000000e+00]; [ +3.0000000000000000000000000000000000000000000000000000000000000000e+00]; [ +3.0000000000000000000000000000000000000000000000000000000000000000e+00] ] #eigenvecs U =[ [ +0.0000000000000000000000000000000000000000000000000000000000000000e+00-7.0710678118654752440084436210484903928483593768847403658833986900e-01i, +0.0000000000000000000000000000000000000000000000000000000000000000e+00-7.0710678118654752440084436210484903928483593768847403658833986900e-01i, 
+0.0000000000000000000000000000000000000000000000000000000000000000e+00+0.0000000000000000000000000000000000000000000000000000000000000000e+00i]; [ -7.0710678118654752440084436210484903928483593768847403658833986900e-01+0.0000000000000000000000000000000000000000000000000000000000000000e+00i, +7.0710678118654752440084436210484903928483593768847403658833986900e-01+0.0000000000000000000000000000000000000000000000000000000000000000e+00i, +0.0000000000000000000000000000000000000000000000000000000000000000e+00+0.0000000000000000000000000000000000000000000000000000000000000000e+00i]; [ +0.0000000000000000000000000000000000000000000000000000000000000000e+00+0.0000000000000000000000000000000000000000000000000000000000000000e+00i, +0.0000000000000000000000000000000000000000000000000000000000000000e+00+0.0000000000000000000000000000000000000000000000000000000000000000e+00i, +1.0000000000000000000000000000000000000000000000000000000000000000e+00+0.0000000000000000000000000000000000000000000000000000000000000000e+00i] ] #you can check eigenvalues using octave/Matlab by: eig(A) #you can check eigenvectors using octave/Matlab by: U’*A*U

One can check the result by comparing it with the result computed by Octave.

$ ./a.out | octave

octave: X11 DISPLAY environment variable not set

octave: disabling GUI features

A =

2 + 0i 0 - 1i 0 + 0i

0 + 1i 2 + 0i 0 + 0i

0 + 0i 0 + 0i 3 + 0i

w =

1

3

3

U =

0.00000 - 0.70711i 0.00000 - 0.70711i 0.00000 + 0.00000i

-0.70711 + 0.00000i 0.70711 + 0.00000i 0.00000 + 0.00000i

0.00000 + 0.00000i 0.00000 + 0.00000i 1.00000 + 0.00000i

ans =

1

3

3

ans =

1.00000 0.00000 0.00000

0.00000 3.00000 0.00000

0.00000 0.00000 3.00000
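The last “ans” above is the product U’*A*U, where the prime denotes the conjugate transpose. The same product can be sketched in C++ with std::complex in place of mpc_class; similarity_transform and the typedef cplx are hypothetical names, and both matrices are assumed to be column-major with leading dimension n.

```cpp
#include <cassert>
#include <complex>
#include <cstddef>
#include <vector>

typedef std::complex<double> cplx;

// Returns U^H * A * U for column-major n-by-n matrices A and U.
// NOTE: similarity_transform is a hypothetical helper, not part of MPLAPACK.
std::vector<cplx> similarity_transform(const std::vector<cplx> &a,
                                       const std::vector<cplx> &u, int n) {
    assert(n > 0 && a.size() == static_cast<std::size_t>(n) * n);
    assert(u.size() == a.size());
    std::vector<cplx> au(a.size()), d(a.size());
    for (int j = 0; j < n; j++)       // au = A * U
        for (int i = 0; i < n; i++) {
            cplx sum = 0.0;
            for (int l = 0; l < n; l++)
                sum += a[i + l * n] * u[l + j * n];
            au[i + j * n] = sum;
        }
    for (int j = 0; j < n; j++)       // d = U^H * au
        for (int i = 0; i < n; i++) {
            cplx sum = 0.0;
            for (int l = 0; l < n; l++)
                sum += std::conj(u[l + i * n]) * au[l + j * n];
            d[i + j * n] = sum;
        }
    return d;
}
```

If the columns of U are orthonormal eigenvectors of A, the result is diagonal up to rounding, with the eigenvalues on the diagonal.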

As a summary, we show how we compile and run binary64, FP80, binary128, double-double, quad-double, GMP, and MPFR versions of Cheev demo programs in

/home/docker/mplapack/examples/mplapack/03_SymmetricEigenproblems as follows:

-

•

binary64 (double) version

$ g++ -O2 -I/home/docker/MPLAPACK/include -I/home/docker/MPLAPACK/include/mplapack \ Cheev_test_double.cpp -Wl,--rpath=/home/docker/MPLAPACK/lib \ -L/home/docker/MPLAPACK/lib -lmplapack_double -lmpblas_double $ ./a.out

• FP80 (extended double) version

$ g++ -O2 -I/home/docker/MPLAPACK/include -I/home/docker/MPLAPACK/include/mplapack \
      Cheev_test__Float64x.cpp -Wl,--rpath=/home/docker/MPLAPACK/lib \
      -L/home/docker/MPLAPACK/lib -lmplapack__Float64x -lmpblas__Float64x
$ ./a.out

• binary128 version

$ g++ -O2 -I/home/docker/MPLAPACK/include -I/home/docker/MPLAPACK/include/mplapack \
      Cheev_test__Float128.cpp -Wl,--rpath=/home/docker/MPLAPACK/lib \
      -L/home/docker/MPLAPACK/lib \
      -lmplapack__Float128 -lmpblas__Float128
$ ./a.out

or (on macOS, mingw64 and CentOS7 amd64)

$ g++ -O2 -I/home/docker/MPLAPACK/include -I/home/docker/MPLAPACK/include/mplapack \
      Cheev_test__Float128.cpp -Wl,--rpath=/home/docker/MPLAPACK/lib \
      -L/home/docker/MPLAPACK/lib \
      -lmplapack__Float128 -lmpblas__Float128 -lquadmath
$ ./a.out

• double-double version

$ g++ -O2 -I/home/docker/MPLAPACK/include -I/home/docker/MPLAPACK/include/mplapack \
      Cheev_test_dd.cpp -Wl,--rpath=/home/docker/MPLAPACK/lib -L/home/docker/MPLAPACK/lib \
      -lmplapack_dd -lmpblas_dd -lqd
$ ./a.out

• quad-double version

$ g++ -O2 -I/home/docker/MPLAPACK/include -I/home/docker/MPLAPACK/include/mplapack \
      Cheev_test_qd.cpp -Wl,--rpath=/home/docker/MPLAPACK/lib -L/home/docker/MPLAPACK/lib \
      -lmplapack_qd -lmpblas_qd -lqd
$ ./a.out

• GMP version

$ g++ -O2 -I/home/docker/MPLAPACK/include -I/home/docker/MPLAPACK/include/mplapack \
      Cheev_test_gmp.cpp -Wl,--rpath=/home/docker/MPLAPACK/lib -L/home/docker/MPLAPACK/lib \
      -lmplapack_gmp -lmpblas_gmp -lgmpxx -lgmp
$ ./a.out

• MPFR version

$ g++ -O2 -I/home/docker/MPLAPACK/include -I/home/docker/MPLAPACK/include/mplapack \
      Cheev_test_mpfr.cpp -Wl,--rpath=/home/docker/MPLAPACK/lib -L/home/docker/MPLAPACK/lib \
      -lmplapack_mpfr -lmpblas_mpfr -lmpfr -lmpc -lgmp
$ ./a.out

7.4.3 Eigenvalue problem of a real non-symmetric matrix (Rgees)

The following example shows how to solve a real non-symmetric eigenvalue problem. For example, let $A$ be the $4 \times 4$ matrix

$$A = \begin{pmatrix} -2 & 2 & 2 & 2 \\ -3 & 3 & 2 & 2 \\ -2 & 0 & 4 & 2 \\ -1 & 0 & 0 & 5 \end{pmatrix}$$

with eigenvalues $1$, $2$, $3$, $4$ [33]. First, we solve it with the dd_real class using Rgees. For an $n$-by-$n$ real nonsymmetric matrix $A$, this routine computes the eigenvalues, the real Schur form $T$, and, optionally, the matrix of Schur vectors $Z$. This gives the Schur factorization $A = Z T Z^{T}$. On exit, $T$ overwrites the matrix $A$. The prototype definition of Rgees is the following:

void Rgees(const char *jobvs, const char *sort, bool (*select)(dd_real, dd_real), mplapackint const n, dd_real *a, mplapackint const lda, mplapackint &sdim, dd_real *wr, dd_real *wi, dd_real *vs, mplapackint const ldvs, dd_real *work, mplapackint const lwork, bool *bwork, mplapackint &info);
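The array arguments a and vs in this prototype are plain pointers accompanied by a leading dimension (lda, ldvs), as in LAPACK. The following is a minimal sketch of how such a buffer could be filled, assuming the LAPACK column-major convention in which element (i, j) is stored at a[i + j * lda]. The helper names fill_column_major and example_matrix are hypothetical and not part of MPLAPACK; the element type is a template parameter so that double can stand in for dd_real when the QD headers are not available.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical helper (not part of MPLAPACK): copies an n-by-n table given
// row by row into a column-major buffer with leading dimension lda >= n.
// Assumption: element (i, j) lives at a[i + j * lda], as in LAPACK.
template <typename Real>
std::vector<Real> fill_column_major(const std::vector<std::vector<double>> &rows,
                                    std::size_t lda) {
    const std::size_t n = rows.size();
    assert(lda >= n);
    std::vector<Real> a(lda * n, Real(0.0));  // padding rows stay zero
    for (std::size_t i = 0; i < n; ++i) {
        assert(rows[i].size() == n);
        for (std::size_t j = 0; j < n; ++j) {
            a[i + j * lda] = Real(rows[i][j]);
        }
    }
    return a;
}

// The 4-by-4 matrix used in this example, written row by row.
inline std::vector<std::vector<double>> example_matrix() {
    return {{-2.0, 2.0, 2.0, 2.0},
            {-3.0, 3.0, 2.0, 2.0},
            {-2.0, 0.0, 4.0, 2.0},
            {-1.0, 0.0, 0.0, 5.0}};
}
```

With lda = n = 4, the pointer returned by data() on the resulting vector would play the role of the a argument, and 4 the role of lda.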

The corresponding LAPACK routine is DGEES. The leading lines of its interface documentation are as follows.

*       SUBROUTINE DGEES( JOBVS, SORT, SELECT, N, A, LDA, SDIM, WR, WI,
*                         VS, LDVS, WORK, LWORK, BWORK, INFO )
*
*       .. Scalar Arguments ..
*       CHARACTER          JOBVS, SORT
*       INTEGER            INFO, LDA, LDVS, LWORK, N, SDIM
*       ..
*       .. Array Arguments ..
*       LOGICAL            BWORK( * )
*       DOUBLE PRECISION   A( LDA, * ), VS( LDVS, * ), WI( * ), WORK( * ),
*      $                   WR( * )
*       ..
*       .. Function Arguments ..
*       LOGICAL            SELECT
*       EXTERNAL           SELECT

A sample program is as follows:

One can compile this source code by:

$ g++ -O2 -I/home/docker/MPLAPACK/include -I/home/docker/MPLAPACK/include/mplapack \
      Rgees_test_dd.cpp -L/home/docker/MPLAPACK/lib -lmplapack_dd -lmpblas_dd -lqd

The output of the executable is the following:

$ LD_LIBRARY_PATH=/home/docker/MPLAPACK/lib ./a.out
# octave check
a =[ [ -2.0000000000000000e+00, +2.0000000000000000e+00, +2.0000000000000000e+00, +2.0000000000000000e+00]; [ -3.0000000000000000e+00, +3.0000000000000000e+00, +2.0000000000000000e+00, +2.0000000000000000e+00]; [ -2.0000000000000000e+00, +0.0000000000000000e+00, +4.0000000000000000e+00, +2.0000000000000000e+00]; [ -1.0000000000000000e+00, +0.0000000000000000e+00, +0.0000000000000000e+00, +5.0000000000000000e+00] ]
vs =[ [ -7.3029674334022148e-01, -6.8313005106397323e-01, +0.0000000000000000e+00, +0.0000000000000000e+00]; [ -5.4772255750516611e-01, +5.8554004376911991e-01, +5.9761430466719682e-01, -2.7760873845656105e-33]; [ -3.6514837167011074e-01, +3.9036002917941327e-01, -7.1713716560063618e-01, -4.4721359549995794e-01]; [ -1.8257418583505537e-01, +1.9518001458970664e-01, -3.5856858280031809e-01, +8.9442719099991588e-01] ]
t =[ [ +1.0000000000000000e+00, -6.9487922897230340e+00, +2.5313275267375116e+00, -1.9595917942265425e+00]; [ +0.0000000000000000e+00, +2.0000000000000000e+00, -1.3063945294843617e+00, +7.8558440484957257e-01]; [ +0.0000000000000000e+00, +0.0000000000000000e+00, +3.0000000000000000e+00, -1.0690449676496975e+00]; [ +0.0000000000000000e+00, +0.0000000000000000e+00, +0.0000000000000000e+00, +4.0000000000000000e+00] ]
vs*t*vs'
eig(a)
w_1 = +1.0000000000000000e+00 +0.0000000000000000e+00i
w_2 = +2.0000000000000000e+00 +0.0000000000000000e+00i
w_3 = +3.0000000000000000e+00 +0.0000000000000000e+00i
w_4 = +4.0000000000000000e+00 +0.0000000000000000e+00i

All the eigenvalues are computed correctly. Moreover, one can check the Schur form and the Schur vectors by piping the output to Octave.

$ LD_LIBRARY_PATH=/home/docker/MPLAPACK/lib ./a.out | octave
octave: X11 DISPLAY environment variable not set
octave: disabling GUI features
a =
  -2   2   2   2
  -3   3   2   2
  -2   0   4   2
  -1   0   0   5
vs =
  -0.73030  -0.68313   0.00000   0.00000
  -0.54772   0.58554   0.59761  -0.00000
  -0.36515   0.39036  -0.71714  -0.44721
  -0.18257   0.19518  -0.35857   0.89443
t =
   1.00000  -6.94879   2.53133  -1.95959
   0.00000   2.00000  -1.30639   0.78558
   0.00000   0.00000   3.00000  -1.06904
   0.00000   0.00000   0.00000   4.00000
ans =
  -2.0000e+00   2.0000e+00   2.0000e+00   2.0000e+00
  -3.0000e+00   3.0000e+00   2.0000e+00   2.0000e+00
  -2.0000e+00   5.1394e-16   4.0000e+00   2.0000e+00
  -1.0000e+00   2.5697e-16  -4.5393e-17   5.0000e+00
ans =
   1.00000
   2.00000
   3.00000
   4.00000
w_1 = 1
w_2 = 2
w_3 = 3
w_4 = 4

We can see that we correctly calculated all the eigenvalues, the Schur form, and the Schur vectors.
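The same check can be made without Octave. The sketch below is a plain binary64 illustration, not part of the MPLAPACK examples: it copies the printed values of a, vs, and t into arrays and measures how far vs*t*vs' is from a, and how far vs is from being orthogonal. The function names are hypothetical, and the accuracy is limited to that of double, since the 17 printed digits do not carry the full double-double precision.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Values copied row by row from the program output above.
static const double A[4][4] = {
    {-2.0, 2.0, 2.0, 2.0}, {-3.0, 3.0, 2.0, 2.0}, {-2.0, 0.0, 4.0, 2.0}, {-1.0, 0.0, 0.0, 5.0}};
static const double VS[4][4] = {
    {-7.3029674334022148e-01, -6.8313005106397323e-01, 0.0, 0.0},
    {-5.4772255750516611e-01, 5.8554004376911991e-01, 5.9761430466719682e-01, -2.7760873845656105e-33},
    {-3.6514837167011074e-01, 3.9036002917941327e-01, -7.1713716560063618e-01, -4.4721359549995794e-01},
    {-1.8257418583505537e-01, 1.9518001458970664e-01, -3.5856858280031809e-01, 8.9442719099991588e-01}};
static const double T[4][4] = {
    {1.0, -6.9487922897230340e+00, 2.5313275267375116e+00, -1.9595917942265425e+00},
    {0.0, 2.0, -1.3063945294843617e+00, 7.8558440484957257e-01},
    {0.0, 0.0, 3.0, -1.0690449676496975e+00},
    {0.0, 0.0, 0.0, 4.0}};

// Entry (i, j) of VS * T * VS^T.
double reconstructed(int i, int j) {
    assert(0 <= i && i < 4 && 0 <= j && j < 4);
    double sum = 0.0;
    for (int k = 0; k < 4; ++k)
        for (int l = 0; l < 4; ++l) sum += VS[i][k] * T[k][l] * VS[j][l];
    return sum;
}

// Largest absolute entry of VS * T * VS^T - A.
double schur_residual() {
    double worst = 0.0;
    for (int i = 0; i < 4; ++i)
        for (int j = 0; j < 4; ++j)
            worst = std::max(worst, std::fabs(reconstructed(i, j) - A[i][j]));
    return worst;
}

// Largest absolute entry of VS^T * VS - I.
double orthogonality_defect() {
    double worst = 0.0;
    for (int i = 0; i < 4; ++i)
        for (int j = 0; j < 4; ++j) {
            double dot = 0.0;
            for (int k = 0; k < 4; ++k) dot += VS[k][i] * VS[k][j];
            worst = std::max(worst, std::fabs(dot - (i == j ? 1.0 : 0.0)));
        }
    return worst;
}
```

Both quantities should be at the level of binary64 rounding error, in line with the entries of order 1e-16 that Octave reports for vs*t*vs'.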

7.4.4 Eigenvalue problem of a complex non-symmetric matrix (Cgees)

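The following example shows how to solve a complex non-symmetric eigenvalue problem. For example, let $A$ be the $4 \times 4$ matrix

$$A = \begin{pmatrix} 5+9i & 5+5i & -6-6i & -7-7i \\ 3+3i & 6+10i & -5-5i & -6-6i \\ 2+2i & 3+3i & -1+3i & -5-5i \\ 1+i & 2+2i & -3-3i & 4i \end{pmatrix}$$

with eigenvalues $1+5i$, $2+6i$, $3+7i$, $4+8i$ [33]. First, we solve it with the dd_complex class using Cgees. For an $n$-by-$n$ complex nonsymmetric matrix $A$, this routine computes the eigenvalues, the Schur form $T$, and, optionally, the matrix of Schur vectors $Z$. This gives the Schur factorization $A = Z T Z^{H}$. On exit, $T$ overwrites the matrix $A$. The prototype definition of Cgees is the following: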

void Cgees(const char *jobvs, const char *sort, bool (*select)(dd_complex), mplapackint const n, dd_complex *a, mplapackint const lda, mplapackint &sdim, dd_complex *w, dd_complex *vs, mplapackint const ldvs, dd_complex *work, mplapackint const lwork, dd_real *rwork, bool *bwork, mplapackint &info);
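The select argument in this prototype is a callback taking one complex eigenvalue. In the corresponding LAPACK routine, when sort is "S", an eigenvalue w(j) is selected if select(w(j)) is true, the selected eigenvalues are moved to the top left of the Schur form, and sdim returns their number. The following sketch assumes Cgees follows the same semantics; std::complex<double> stands in for dd_complex so that it builds without MPLAPACK, and both function names are hypothetical.

```cpp
#include <cassert>
#include <complex>
#include <vector>

// Stand-in for dd_complex so the sketch builds without MPLAPACK or QD.
using Complex = std::complex<double>;

// Hypothetical selection rule with the same shape as the select callback:
// pick eigenvalues whose real part is below 2.5.
bool select_small_real_part(Complex w) {
    return w.real() < 2.5;
}

// Counts how many eigenvalues a rule selects, i.e. the value that sdim
// would take under the LAPACK semantics assumed above.
int count_selected(bool (*select)(Complex), const std::vector<Complex> &w) {
    assert(select != nullptr);
    int count = 0;
    for (const Complex &value : w) {
        if (select(value)) ++count;
    }
    return count;
}
```

For the eigenvalues of this example, the rule above would select 1+5i and 2+6i. A callback that always returns false is a reasonable placeholder when sort is "N" and no ordering is requested.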

The corresponding LAPACK routine is ZGEES. The leading lines of its interface documentation are as follows.

*       SUBROUTINE ZGEES( JOBVS, SORT, SELECT, N, A, LDA, SDIM, W, VS,
*                         LDVS, WORK, LWORK, RWORK, BWORK, INFO )
*
*       .. Scalar Arguments ..
*       CHARACTER          JOBVS, SORT
*       INTEGER            INFO, LDA, LDVS, LWORK, N, SDIM
*       ..
*       .. Array Arguments ..
*       LOGICAL            BWORK( * )
*       DOUBLE PRECISION   RWORK( * )
*       COMPLEX*16         A( LDA, * ), VS( LDVS, * ), W( * ), WORK( * )
*       ..
*       .. Function Arguments ..
*       LOGICAL            SELECT
*       EXTERNAL           SELECT

A sample program is as follows:

One can compile this source code by:

$ g++ -O2 -I/home/docker/MPLAPACK/include -I/home/docker/MPLAPACK/include/mplapack \
      Cgees_test_dd.cpp -Wl,--rpath=/home/docker/MPLAPACK/lib -L/home/docker/MPLAPACK/lib \
      -lmplapack_dd -lmpblas_dd -lqd

The output of the executable is the following:

# Ex. 6.5 p. 116, Collection of Matrices for Testing Computational Algorithms, Robert T. Gregory, David L. Karney
# octave check
split_long_rows(0)
a =[ [ +5.0000000000000000e+00+9.0000000000000000e+00i, +5.0000000000000000e+00+5.0000000000000000e+00i, -6.0000000000000000e+00-6.0000000000000000e+00i, -7.0000000000000000e+00-7.0000000000000000e+00i]; [ +3.0000000000000000e+00+3.0000000000000000e+00i, +6.0000000000000000e+00+1.0000000000000000e+01i, -5.0000000000000000e+00-5.0000000000000000e+00i, -6.0000000000000000e+00-6.0000000000000000e+00i]; [ +2.0000000000000000e+00+2.0000000000000000e+00i, +3.0000000000000000e+00+3.0000000000000000e+00i, -1.0000000000000000e+00+3.0000000000000000e+00i, -5.0000000000000000e+00-5.0000000000000000e+00i]; [ +1.0000000000000000e+00+1.0000000000000000e+00i, +2.0000000000000000e+00+2.0000000000000000e+00i, -3.0000000000000000e+00-3.0000000000000000e+00i, +0.0000000000000000e+00+4.0000000000000000e+00i] ]
w =[ +2.0000000000000000e+00+6.0000000000000000e+00i, +4.0000000000000000e+00+8.0000000000000000e+00i, +3.0000000000000000e+00+7.0000000000000000e+00i, +1.0000000000000000e+00+5.0000000000000000e+00i]
vs =[ [ +3.7428970594742688e-01-5.2577170701090183e-02i, -1.1134324587883832e-01+4.9471763536387269e-01i, +3.8355427599552469e-01-6.7296813993349557e-01i, +0.0000000000000000e+00+0.0000000000000000e+00i]; [ +7.4857941189485376e-01-1.0515434140218037e-01i, +3.7114415292946107e-02-1.6490587845462423e-01i, -1.2785142533184156e-01+2.2432271331116519e-01i, +4.2849243249895099e-01-3.8694646738853330e-01i]; [ +3.7428970594742688e-01-5.2577170701090183e-02i, -1.1134324587883832e-01+4.9471763536387269e-01i, -2.5570285066368313e-01+4.4864542662233038e-01i, -4.2849243249895099e-01+3.8694646738853330e-01i]; [ +3.7428970594742688e-01-5.2577170701090183e-02i, +1.4845766117178443e-01-6.5962351381849692e-01i, +1.2785142533184156e-01-2.2432271331116519e-01i, -4.2849243249895099e-01+3.8694646738853330e-01i] ]
t =[ [ +2.0000000000000000e+00+6.0000000000000000e+00i, -4.6106496752391337e+00+2.0837249271958309e+00i, +4.9279443181496018e+00-6.3330804742348119e-01i, +1.9999717105990234e+01+3.8559640218114672e+00i]; [ +0.0000000000000000e+00+0.0000000000000000e+00i, +4.0000000000000000e+00+8.0000000000000000e+00i, -2.8979419726911526e-01-5.4495110256015637e-01i, -1.0301880205614183e+00-5.9845553818543799e+00i]; [ +0.0000000000000000e+00+0.0000000000000000e+00i, +0.0000000000000000e+00+0.0000000000000000e+00i, +3.0000000000000000e+00+7.0000000000000000e+00i, +2.2784477280226518e+00+4.5175962580412624e+00i]; [ +0.0000000000000000e+00+0.0000000000000000e+00i, +0.0000000000000000e+00+0.0000000000000000e+00i, +0.0000000000000000e+00+0.0000000000000000e+00i, +1.0000000000000000e+00+5.0000000000000000e+00i] ]
vs*t*vs'
eig(a)

All the eigenvalues are computed correctly. Moreover, one can check the Schur form and the Schur vectors by piping the output to Octave.

octave: X11 DISPLAY environment variable not set
octave: disabling GUI features
a =
   5 + 9i    5 + 5i   -6 - 6i   -7 - 7i
   3 + 3i    6 + 10i  -5 - 5i   -6 - 6i
   2 + 2i    3 + 3i   -1 + 3i   -5 - 5i
   1 + 1i    2 + 2i   -3 - 3i    0 + 4i
w =
   2 + 6i   4 + 8i   3 + 7i   1 + 5i
vs =
   0.37429 - 0.05258i  -0.11134 + 0.49472i   0.38355 - 0.67297i   0.00000 + 0.00000i
   0.74858 - 0.10515i   0.03711 - 0.16491i  -0.12785 + 0.22432i   0.42849 - 0.38695i
   0.37429 - 0.05258i  -0.11134 + 0.49472i  -0.25570 + 0.44865i  -0.42849 + 0.38695i
   0.37429 - 0.05258i   0.14846 - 0.65962i   0.12785 - 0.22432i  -0.42849 + 0.38695i
t =
   2.00000 + 6.00000i  -4.61065 + 2.08372i   4.92794 - 0.63331i  19.99972 + 3.85596i
   0.00000 + 0.00000i   4.00000 + 8.00000i  -0.28979 - 0.54495i  -1.03019 - 5.98456i
   0.00000 + 0.00000i   0.00000 + 0.00000i   3.00000 + 7.00000i   2.27845 + 4.51760i
   0.00000 + 0.00000i   0.00000 + 0.00000i   0.00000 + 0.00000i   1.00000 + 5.00000i
ans =
   5.00000 + 9.00000i   5.00000 + 5.00000i  -6.00000 - 6.00000i  -7.00000 - 7.00000i
   3.00000 + 3.00000i   6.00000 + 10.00000i  -5.00000 - 5.00000i  -6.00000 - 6.00000i
   2.00000 + 2.00000i   3.00000 + 3.00000i  -1.00000 + 3.00000i  -5.00000 - 5.00000i
   1.00000 + 1.00000i   2.00000 + 2.00000i  -3.00000 - 3.00000i  -0.00000 + 4.00000i
ans =
   2.0000 + 6.0000i
   4.0000 + 8.0000i
   3.0000 + 7.0000i
   1.0000 + 5.0000i
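As an independent check that relies on neither Octave nor MPLAPACK, one can evaluate the characteristic polynomial det(a - λI) at each reported eigenvalue. The sketch below is an illustration with hypothetical function names, using std::complex<double> and a cofactor expansion along the first row. Because every entry of the matrix and every eigenvalue has small integer real and imaginary parts, the arithmetic is expected to be exact in binary64.

```cpp
#include <array>
#include <cassert>
#include <complex>

using Complex = std::complex<double>;
using Mat4 = std::array<std::array<Complex, 4>, 4>;

// The 4-by-4 example matrix, built from its real and imaginary parts
// as printed in the program output above.
Mat4 example_matrix() {
    const double re[4][4] = {{5, 5, -6, -7}, {3, 6, -5, -6}, {2, 3, -1, -5}, {1, 2, -3, 0}};
    const double im[4][4] = {{9, 5, -6, -7}, {3, 10, -5, -6}, {2, 3, 3, -5}, {1, 2, -3, 4}};
    Mat4 a;
    for (int i = 0; i < 4; ++i)
        for (int j = 0; j < 4; ++j) a[i][j] = Complex(re[i][j], im[i][j]);
    return a;
}

// Determinant of the 3-by-3 minor obtained by deleting row 0 and column skip.
Complex minor_det(const Mat4 &m, int skip) {
    assert(0 <= skip && skip < 4);
    int c[3];
    int k = 0;
    for (int j = 0; j < 4; ++j)
        if (j != skip) c[k++] = j;
    return m[1][c[0]] * (m[2][c[1]] * m[3][c[2]] - m[2][c[2]] * m[3][c[1]])
         - m[1][c[1]] * (m[2][c[0]] * m[3][c[2]] - m[2][c[2]] * m[3][c[0]])
         + m[1][c[2]] * (m[2][c[0]] * m[3][c[1]] - m[2][c[1]] * m[3][c[0]]);
}

// det(a - lambda * I) by cofactor expansion along the first row.
Complex char_poly(Complex lambda) {
    Mat4 m = example_matrix();
    for (int i = 0; i < 4; ++i) m[i][i] -= lambda;
    Complex det(0.0, 0.0);
    double sign = 1.0;
    for (int j = 0; j < 4; ++j) {
        det += sign * m[0][j] * minor_det(m, j);
        sign = -sign;
    }
    return det;
}
```

Calling char_poly with each of 1+5i, 2+6i, 3+7i, and 4+8i should give a value of zero magnitude, whereas a value that is not an eigenvalue, such as 0, should not.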