MP-MVS: Multi-Scale Windows PatchMatch and Planar Prior Multi-View Stereo

Abstract

Significant strides have been made in enhancing the accuracy of Multi-View Stereo (MVS)-based 3D reconstruction. However, untextured areas with unstable photometric consistency often remain incompletely reconstructed. In this paper, we propose a resilient and effective multi-view stereo approach (MP-MVS). We design a multi-scale windows PatchMatch (mPM) to obtain reliable depth of untextured areas. In contrast with other multi-scale approaches, mPM is faster and can be easily extended to PatchMatch-based MVS approaches. Subsequently, we improve the existing checkerboard sampling schemes by limiting our sampling to distant regions, which improves the efficiency of spatial propagation while mitigating outlier generation. Finally, we introduce and improve the planar prior assisted PatchMatch of ACMP. Instead of relying on photometric consistency, we use geometric consistency information between multiple views to select reliable triangulated vertices. This strategy yields a more accurate planar prior model to rectify photometric consistency measurements. We evaluate our approach on the ETH3D High-res multi-view benchmark against several state-of-the-art approaches. The results demonstrate that our approach reaches state-of-the-art performance. The code will be accessible at https://github.com/RongxuanTan/MP-MVS.

Index Terms:

Multi-View Stereo, multi-scale PatchMatch, planar prior model, checkerboard sampling schemes, untextured areas.

I Introduction

Multi-View Stereo (MVS) has been an active research problem in computer vision. Thanks to the success of Structure-from-Motion (SfM) algorithms [1, 2, 3] and the availability of public benchmarks [4, 5, 6, 7], many excellent MVS approaches have emerged in the last decade and achieved impressive results. Presently, PatchMatch-based approaches are still the best way to solve the dense matching problem efficiently and reliably. PatchMatch estimates the plane of the object surface corresponding to each pixel, thereby transforming depth estimation into plane estimation. The plane hypotheses of all pixels are first initialized randomly. Then, the confidence of these plane hypotheses is computed based on photometric consistency. Finally, reliable plane hypotheses are propagated to neighboring pixels, so that the depth of each pixel is estimated efficiently.

Currently, some of the most advanced PatchMatch-based MVS approaches can acquire accurate point clouds. However, PatchMatch [8] is profoundly reliant on the outcomes of the photometric consistency metric. When the patch is located in untextured areas, there is a lack of sufficient texture information in the receptive field, leading to erroneous depth estimates, which eventually makes it difficult to reconstruct these scenes completely. High-resolution images tend to contain more untextured areas, which further exacerbates this issue. Therefore, it is still a challenging problem to accurately estimate the depth of untextured areas.

The texture information within a patch becomes more abundant when an image is downsampled. Therefore ACMM[9] employs image downsampling and obtains more reliable depth estimation. Several of the most advanced PatchMatch-based MVS approaches[10, 11, 12] use ACMM as a fundamental module. Similarly, MARMVS[13] ensures that there is enough texture information within the receptive field by adjusting the patch size of each pixel. These strategies exhibit enhanced robustness in handling scenes with varying scales and texture richness.

In this paper, we also present a multi-scale depth estimation approach to improve the accuracy and completeness of depth estimation in untextured areas. Our contributions are as follows:

- We propose an efficient and straightforward multi-scale windows PatchMatch (mPM). It conducts photometric consistency using multi-scale patches in sequence within a single PatchMatch process. Notably, it outperforms ACMM in terms of speed and can be simply integrated into any existing PatchMatch-based MVS approaches.

- We have refined the existing checkerboard sampling schemes by limiting our sampling to eight distant regions from the pixels. This improvement effectively curbs the generation of outliers, enhancing both the efficiency and reliability of correct hypothesis propagation.

- We have adopted and improved the planar prior assisted PatchMatch of ACMP [27]. Reliable triangulated vertices are selected by geometric consistency information between multiple views instead of photometric consistency, which improves the accuracy of the planar prior model.

Finally, we combine the above modules to implement the PatchMatch-based MVS approach (MP-MVS). We tested our approach on the ETH3D high-resolution multi-view benchmark. The results show that our approach reaches the state-of-the-art.

II RELATED WORK

MVS approaches can be divided into four classes according to the scene representation: voxel-based [14, 15, 16], surface evolution-based [17, 18, 19], feature growing-based [20, 21, 22], and depth map merging-based. Our approach belongs to the last class. In this section, we only introduce PatchMatch-based related approaches.

Gipuma [23] designed a diffusion-like propagation scheme that enables the implementation of PatchMatch on GPUs. COLMAP [24] improves the robustness of view selection through photometric and geometric priors, and it incorporates geometric consistency optimization into the PatchMatch inference process to refine the depth map. Although these approaches achieve impressive accuracy, they have difficulty producing reliable depth estimates for untextured areas and non-Lambertian surfaces. To deal with this problem, some approaches estimate the depth of untextured areas by expanding the receptive field of the patch. ACMM and PLC [25] estimate a reliable depth map at a coarse scale by downsampling the image and then use it to guide depth estimation at the fine scale. Instead of adjusting the image resolution, MARMVS adjusts the scale of the patch by measuring the matching ambiguity, thus ensuring the validity of the photometric consistency metric.

Another class of approaches uses prior information to obtain reliable depth estimates. TAPA-MVS [26] assumes that untextured areas are piecewise planar: it divides the image into superpixels and then uses an initial depth map to fit a plane to each superpixel for estimating the depth of untextured areas. ACMP [27] samples some reliable pixels and triangulates them to obtain a planar prior model, and it designs a matching cost that considers both photometric consistency and planar compatibility, which makes it applicable to depth estimation in non-planar and planar areas.

Untextured areas and non-Lambertian surfaces are difficult to estimate robustly with traditional approaches. Therefore, some approaches have combined PatchMatch with deep learning and achieved good results. The sky areas of outdoor scenes often produce strong artifacts that cannot be filtered by geometric consistency. PCF-MVS [28] introduces semantic segmentation-based sky area detection to reject these artifacts. Depth map confidence estimation is a key component in 3D reconstruction, as it is used to optimize the depth map and filter outliers. DeepC-MVS [11] designed a DNN-based confidence prediction network, which can reliably perform depth map optimization and outlier filtering in high-resolution images. Similarly, [10] uses point clouds to generate a surface mesh model that guides the depth estimation of untextured areas, and it designs a neural network module to estimate the confidence of the depth map and reject points with low confidence.

In summary, there are two main types of approaches to depth estimation in untextured areas. One is to increase the receptive field of the patch, and the other is to use prior information or confidence predictions to optimize the results of the photometric consistency metric. Using a single approach has limitations, so some approaches [10, 11, 12] use ACMM as the base module and then optimize the depth map with their own method. However, these approaches run their own optimization at every image scale, which increases the running cost and redundancy, and upsampling causes a loss of accuracy. As with MARMVS, we note that expanding the patch size can increase the receptive field. Based on this fact, our idea is to use multi-scale windows in one PatchMatch process to achieve concise and fast multi-scale depth estimation. At the same time, we introduce planar prior assisted PatchMatch and refine it by geometric consistency.

III PROPOSED APPROACH

Given a set of calibrated images and their corresponding camera parameters, MVS needs to estimate the depth maps of all images. The pipeline of our approach is shown in Fig. 1.

During one PatchMatch process, we first estimate the depth using the largest scale window. Then, we progressively decrease the window size and refine the depth estimation results for each pixel through iterative propagation processes. Moreover, we improve the checkerboard sampling scheme of ACMH. By eliminating closer sampling points and only sampling optimal planar hypotheses from the eight more distant neighborhoods, we effectively suppress outlier generation, thereby improving the efficiency and reliability of spatial propagation. Finally, we introduce planar prior assisted PatchMatch of ACMP. Instead of using photometric consistency as in [27], we employ geometric consistency to generate the planar prior model to improve the completeness of untextured scene reconstruction.

III-A Multi-scale windows PatchMatch

We start by introducing the basic PatchMatch framework (ACMH). PatchMatch converts depth estimation into a plane estimation problem, where the plane hypothesis of each pixel in the image is initialized randomly. Assume that the plane hypothesis of pixel $p$ in the reference image $I_i$ is $f_p$. For the reference image patch $B_i$ centered on pixel $p$, we can obtain the corresponding source image patch $B_j$ in source image $I_j$ by homography. If the plane hypothesis of pixel $p$ is correct, the texture of the corresponding patches should be similar. The photometric consistency cost between corresponding patches can then be calculated as

$$m_{ij}(p, f_p) = 1 - \frac{\mathrm{cov}(B_i, B_j)}{\sqrt{\mathrm{cov}(B_i, B_i)\,\mathrm{cov}(B_j, B_j)}} \tag{1}$$

where $\mathrm{cov}(X, Y) = E\big[(X - E(X))(Y - E(Y))\big]$, and $E(\cdot)$ is the expected value of pixel intensities within a patch.

Define the weight of each source image $I_j$ as $w_j$. The multi-view aggregated photometric consistency cost for each pixel $p$ with plane hypothesis $f_p$ is defined as

$$m_{photo}(p, f_p) = \frac{\sum_{j} w_j \, m_{ij}(p, f_p)}{\sum_{j} w_j} \tag{2}$$
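The two costs described above can be illustrated with a minimal Python sketch. It assumes the pairwise cost is one minus the normalized cross-correlation of the two patches and that the multi-view cost is a weighted average over source images. The function names, the flat list representation of a patch, and the choice to give a textureless (zero-variance) patch the worst cost of 2.0 are assumptions made for illustration, not details taken from the paper.

```python
import math


def ncc_cost(ref_patch, src_patch):
    """Return 1 - NCC for two equally sized lists of pixel intensities.

    The result lies in [0, 2]: 0 for perfectly correlated patches,
    2 for perfectly anti-correlated ones.
    """
    n = len(ref_patch)
    if n == 0 or n != len(src_patch):
        raise ValueError("patches must be non-empty and of equal size")
    mean_r = sum(ref_patch) / n
    mean_s = sum(src_patch) / n
    cov_rs = sum((r - mean_r) * (s - mean_s)
                 for r, s in zip(ref_patch, src_patch)) / n
    var_r = sum((r - mean_r) ** 2 for r in ref_patch) / n
    var_s = sum((s - mean_s) ** 2 for s in src_patch) / n
    denom = math.sqrt(var_r * var_s)
    if denom < 1e-12:
        # Assumption: a flat patch carries no texture, so treat it as the worst match.
        return 2.0
    return 1.0 - cov_rs / denom


def aggregate_cost(costs, weights):
    """Weighted average of per-source-image costs (one weight per source image)."""
    total = sum(weights)
    if total <= 0:
        return 2.0  # Assumption: no usable view, return the worst cost.
    return sum(w * c for w, c in zip(weights, costs)) / total


if __name__ == "__main__":
    ref = [10, 20, 30, 40]
    print(ncc_cost(ref, [12, 22, 32, 42]))   # same texture, brightness offset
    print(ncc_cost(ref, [40, 30, 20, 10]))   # inverted texture
    print(aggregate_cost([0.0, 2.0], [3.0, 1.0]))
```

The flat-patch branch shows why untextured areas are problematic: when the variance inside the window vanishes, the correlation is undefined and the cost stops being informative.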

PatchMatch samples the plane hypotheses of neighboring pixels and computes their aggregated costs. If a sampled hypothesis yields a smaller aggregated cost than the current one, it is propagated to the current pixel. PatchMatch typically performs three iterations of propagation and optimization with a fixed window size. Unlike the basic PatchMatch, our proposed mPM performs propagation and optimization with multi-scale windows. To ensure that this process can be carried out consistently and reliably, the following principles should be observed:

- A larger scale window implies more computational cost, and to reduce the computation time, the pixels within the window should be sparsely sampled with equal spacing (Fig. 2), and the photometric consistency metric is computed by sampling points only.

- To ensure that the spatial propagation process is efficient, window size should be the same for all pixels in the same iteration. Similarly, to ensure that all planar hypotheses can be refined at finer scales, the number of sampling points should be the same at different scales.

- Larger-scale windows have a larger receptive field and are therefore able to estimate planar hypotheses for untextured areas more reliably. However, due to the effect of sparse sampling, the depth estimation accuracy obtained from larger-scale windows is poor. Therefore, iterative propagation should be performed starting from a larger scale window and gradually decreasing the window size.

Based on the above criteria, we sample 36 points at equal intervals in a window (Fig. 2). We define the window edge size at each scale as

| (3) |

The maximum scale of windows is determined according to the resolution of images. Starting from the maximum scale window, three iterations of propagation optimization are executed at each scale, and then the scale is reduced step by step for a total of iterations.
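The sampling and scheduling rules above can be sketched in Python as follows. The sketch places 36 sample points on a 6×6 grid with equal spacing inside a square window and iterates from the largest window to the smallest with three iterations per scale. The concrete window edge sizes (31, 21, 11) and the restriction to edges of the form 10k+1, which keeps the offsets integer and symmetric, are hypothetical choices for illustration and are not the sizes defined by Eq. (3).

```python
def sparse_offsets(window_edge, samples_per_side=6):
    """Return (dx, dy) offsets of an equally spaced sample grid in a square window.

    With samples_per_side=6 this gives the 36 sampling points per window.
    Assumption: window_edge - 1 must be divisible by 2 * (samples_per_side - 1)
    so that all offsets are integers and symmetric around the centre pixel.
    """
    span = window_edge - 1
    if span <= 0 or span % (2 * (samples_per_side - 1)) != 0:
        raise ValueError("unsupported window edge for this simplified sketch")
    step = span // (samples_per_side - 1)
    half = span // 2
    coords = [-half + k * step for k in range(samples_per_side)]
    return [(dx, dy) for dy in coords for dx in coords]


def mpm_schedule(window_edges, iters_per_scale=3):
    """Yield (window_edge, t): largest window first, t restarting at every scale."""
    for edge in sorted(window_edges, reverse=True):
        for t in range(iters_per_scale):
            yield edge, t


if __name__ == "__main__":
    for edge, t in mpm_schedule([11, 21, 31]):  # hypothetical window sizes
        pts = sparse_offsets(edge)
        print(edge, t, len(pts), pts[0], pts[-1])
```

Because every scale uses the same number of sample points, the cost of one iteration stays roughly constant regardless of the window size, which is the property the second principle asks for.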

Note that at each scale we reset the cost threshold for a good match, so that points that did not obtain reliable planar hypotheses at the previous scale get a robust view selection at the current scale. For the t-th iteration under each scale window, the good match cost threshold can be calculated as

| (4) |

where is the initialized matching cost threshold and is a constant.

III-B Checkerboard sampling

ACMH [9] divides neighborhood pixels into eight regions and adaptively selects the optimal hypothesis of each region to propagate (Fig. 4a). Compared to propagation using only neighboring pixels in [23], this adaptive sampling scheme makes it more likely to sample good hypotheses. Since photometric consistency does not enable reliable depth estimation in untextured areas, only a few reliable planar hypotheses exist for untextured areas (Fig. 3c), and most of the reliable planar hypotheses correspond to texture-rich areas (Fig. 3b). As a result, sampling close to the pixel in untextured areas often fails to obtain reliable planar hypotheses, which hinders effective spatial propagation. This reduces the accuracy of depth estimation and increases the number of outliers. The problem becomes more severe in high-resolution images, which contain more untextured areas.

Therefore, we do not sample planar hypotheses in the region near the estimated pixel, but place the sampling regions far from it (Fig. 4b). In each of the four regions at the top, bottom, left, and right, we set 10 samples. In each of the four diagonal regions, we set 12 samples. Our proposed strategy effectively improves the reliability of depth estimation in untextured areas while suppressing the generation of outliers. Experiments show that our sampling strategy does not affect the depth estimation of thin objects.
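The following Python sketch illustrates the idea of sampling only distant regions and keeping the best hypothesis per region. The sample counts (10 per axis-aligned region, 12 per diagonal region) follow the text, but the geometry is a deliberate simplification: samples lie on straight rays that start `near_gap` pixels from the pixel and advance by `stride`, both hypothetical parameters. The actual region shapes are those of Fig. 4b and are not reproduced here.

```python
AXIS_DIRECTIONS = {"top": (0, -1), "bottom": (0, 1),
                   "left": (-1, 0), "right": (1, 0)}
DIAGONAL_DIRECTIONS = {"top_left": (-1, -1), "top_right": (1, -1),
                       "bottom_left": (-1, 1), "bottom_right": (1, 1)}


def distant_region_offsets(near_gap=5, stride=2):
    """Build sample offsets for eight regions, skipping the area near the pixel.

    near_gap and stride are hypothetical values chosen for illustration.
    """
    regions = {}
    for name, (ux, uy) in AXIS_DIRECTIONS.items():
        regions[name] = [(ux * (near_gap + k * stride), uy * (near_gap + k * stride))
                         for k in range(10)]
    for name, (ux, uy) in DIAGONAL_DIRECTIONS.items():
        regions[name] = [(ux * (near_gap + k * stride), uy * (near_gap + k * stride))
                         for k in range(12)]
    return regions


def best_hypothesis_per_region(x, y, cost, regions):
    """Return {region: (px, py)} with the lowest-cost sample of each region.

    cost is a 2D list indexed as cost[row][col]; samples outside the image are
    ignored, and a region with no valid sample is omitted from the result.
    """
    height, width = len(cost), len(cost[0])
    best = {}
    for name, offsets in regions.items():
        candidates = [(x + dx, y + dy) for dx, dy in offsets
                      if 0 <= x + dx < width and 0 <= y + dy < height]
        if candidates:
            best[name] = min(candidates, key=lambda q: cost[q[1]][q[0]])
    return best


if __name__ == "__main__":
    cost = [[1.0] * 61 for _ in range(61)]
    cost[30][39] = 0.1  # one reliable hypothesis, 9 pixels to the right
    print(best_hypothesis_per_region(30, 30, cost, distant_region_offsets()))
```

The plane hypotheses at the returned positions would then be evaluated at the current pixel and adopted if they lower its aggregated cost.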

III-C Planar prior model

Architectural scenes contain many planar structures that can provide effective planar prior information to assist depth estimation, and many approaches [27, 10, 26, 30, 31] have studied structural priors.

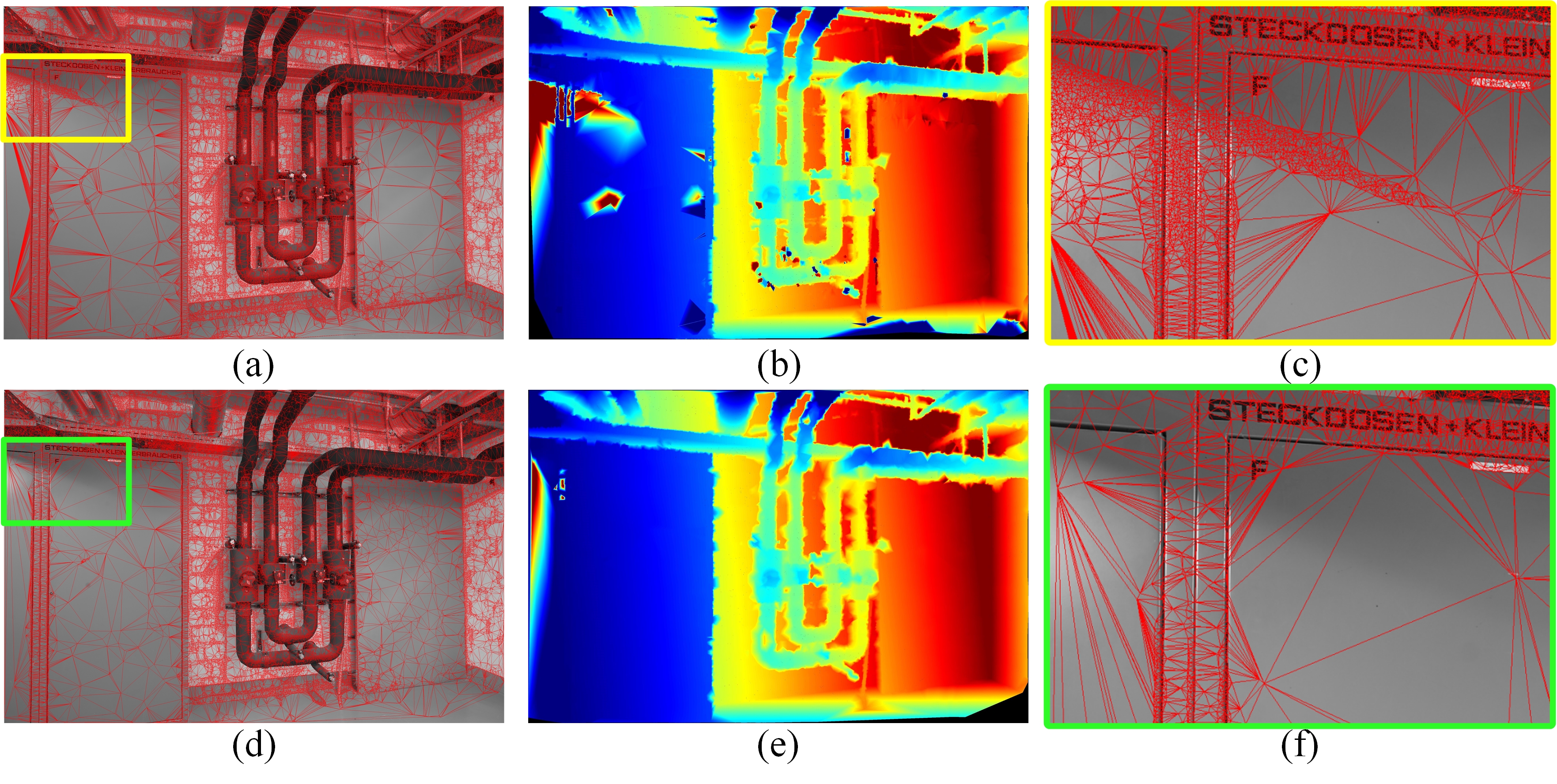

We introduce the planar prior assisted PatchMatch of [27] to improve the completeness of the reconstruction of untextured areas. ACMP divides the whole image into multiple rectangular regions and selects, in each region, the point with the smallest photometric consistency cost for triangulation, so the accuracy of the planar prior relies on the reliability of the selected points. Due to the ambiguity of photometric consistency in untextured areas, the depths of some of the selected points are wrong (Fig. 5c), which results in a wrong planar prior model. Before COLMAP integrated geometric consistency into PatchMatch inference, geometric consistency was typically used only to filter outliers in the post-processing stage. Multi-view geometric consistency can effectively refine depth estimation results, and we note that it can find accurate sparse points in textureless regions. A robust strategy for generating planar prior models is therefore to choose the triangulated points by geometric consistency. Instead of directly running planar prior assisted PatchMatch after computing an initial depth map, we first perform geometric consistency optimization, and the geometric consistency cost is calculated as

| (5) |

where is the forward-backward reprojection error between the reference image and the source image .

We select points that satisfy and within each region (Fig. 5d). Fig. 5e shows that geometric consistency can eliminate most of the outliers. Compared to ACMP [27], the planar prior model we generate is more accurate.
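To make the geometric consistency idea concrete, the sketch below computes a forward-backward reprojection error for one reference/source pair and then keeps, in each rectangular cell, the pixel with the smallest error if it passes a threshold. It is a simplified illustration under stated assumptions: a pinhole camera with world-to-camera pose `X_c = R X_w + t`, a hypothetical `src_depth_at` callback for looking up the source depth, a single source view rather than a multi-view aggregate, and a single hypothetical threshold `max_error` in place of the selection conditions used in the paper.

```python
import math


def backproject(cam, u, v, depth):
    """Lift pixel (u, v) with depth to a world point, given X_c = R X_w + t."""
    xc = [(u - cam["cx"]) / cam["fx"] * depth,
          (v - cam["cy"]) / cam["fy"] * depth,
          depth]
    d = [xc[i] - cam["t"][i] for i in range(3)]
    rot = cam["R"]
    # X_w = R^T (X_c - t)
    return [sum(rot[r][c] * d[r] for r in range(3)) for c in range(3)]


def project(cam, point):
    """Project a world point to pixel coordinates."""
    rot, t = cam["R"], cam["t"]
    xc = [sum(rot[r][c] * point[c] for c in range(3)) + t[r] for r in range(3)]
    return (cam["fx"] * xc[0] / xc[2] + cam["cx"],
            cam["fy"] * xc[1] / xc[2] + cam["cy"])


def reprojection_error(ref_cam, src_cam, u, v, ref_depth, src_depth_at):
    """Reference -> source -> reference round trip, returned as a pixel distance."""
    us, vs = project(src_cam, backproject(ref_cam, u, v, ref_depth))
    back = backproject(src_cam, us, vs, src_depth_at(us, vs))
    ub, vb = project(ref_cam, back)
    return math.hypot(ub - u, vb - v)


def select_vertices(errors, cell=2, max_error=1.0):
    """Pick the lowest-error pixel of each cell x cell block if it is reliable."""
    height, width = len(errors), len(errors[0])
    picked = []
    for y0 in range(0, height, cell):
        for x0 in range(0, width, cell):
            block = [(errors[y][x], x, y)
                     for y in range(y0, min(y0 + cell, height))
                     for x in range(x0, min(x0 + cell, width))]
            err, x, y = min(block)
            if err <= max_error:  # hypothetical reliability threshold
                picked.append((x, y))
    return picked


if __name__ == "__main__":
    eye = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    ref = {"fx": 100.0, "fy": 100.0, "cx": 50.0, "cy": 50.0, "R": eye, "t": [0.0, 0.0, 0.0]}
    src = dict(ref, t=[-1.0, 0.0, 0.0])
    print(reprojection_error(ref, src, 60.0, 50.0, 10.0, lambda u, v: 10.0))
    print(reprojection_error(ref, src, 60.0, 50.0, 10.0, lambda u, v: 20.0))
```

The selected pixels would serve as the vertices to triangulate when building the planar prior. A consistent depth pair returns an error near zero, while an inconsistent source depth produces an error of several pixels and is rejected.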

| Approach | Train 2cm F1 | Train 2cm Acc | Train 2cm Comp | Train 5cm F1 | Train 5cm Acc | Train 5cm Comp |
|---|---|---|---|---|---|---|
| Baseline(ACMH) | 79.55 | 92.04 | 70.74 | 89.87 | 96.80 | 84.33 |
| mPM+ACMH | 82.25 | 91.28 | 75.24 | 91.58 | 96.36 | 87.43 |
| mPM+rs | 83.63 | 91.26 | 77.52 | 92.29 | 96.46 | 88.62 |
| mPM+ACMH+pp | 83.81 | 91.01 | 77.93 | 92.68 | 96.25 | 89.48 |
| mPM+rs+pp | 85.01 | 90.83 | 80.08 | 93.10 | 96.21 | 90.29 |
| mPM+rs+gp | 85.50 | 90.84 | 80.94 | 93.35 | 96.13 | 90.81 |

IV Experiment

To validate the effectiveness of our approach, we evaluated it on the ETH3D High-res multi-view benchmark using an Intel Core i7-11700 CPU and an RTX 3060 GPU. The dataset is divided into a training set and a test set, which together include 9 outdoor scenes and 16 indoor scenes. The original resolution of the images is 6048×4032; in the experiments, we uniformly resized the images to 3200×2130.

| Scene | Approach | Train 2cm F1 | Train 2cm Acc | Train 2cm Comp | Train 5cm F1 | Train 5cm Acc | Train 5cm Comp | Test 2cm F1 | Test 2cm Acc | Test 2cm Comp | Test 5cm F1 | Test 5cm Acc | Test 5cm Comp |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Indoor | ACMM | 78.13 | 92.46 | 68.49 | 86.09 | 96.42 | 78.39 | 79.84 | 90.99 | 72.73 | 88.48 | 96.07 | 82.85 |
| Indoor | MAR-MVS | 80.32 | 82.92 | 78.37 | 88.44 | 91.14 | 86.30 | 80.70 | 78.74 | 83.43 | 89.37 | 88.87 | 90.22 |
| Indoor | COLMAP | 66.76 | 95.01 | 52.90 | 78.49 | 97.98 | 66.61 | 70.41 | 91.95 | 59.65 | 82.04 | 96.62 | 73.00 |
| Indoor | CLD-MVS | 81.23 | 87.22 | 77.29 | 88.52 | 94.32 | 84.24 | 81.65 | 82.64 | 82.35 | 88.90 | 91.41 | 87.48 |
| Indoor | ACMMP | 85.27 | 92.40 | 79.59 | 92.16 | 96.27 | 88.71 | 85.39 | 91.87 | 80.67 | 92.88 | 96.34 | 89.97 |
| Indoor | DeepC-MVS | 86.03 | 92.56 | 81.05 | 91.86 | 96.75 | 88.03 | 86.88 | 89.08 | 85.24 | 92.99 | 95.33 | 91.06 |
| Indoor | Ours | 87.40 | 91.95 | 83.49 | 93.59 | 95.93 | 91.45 | 87.64 | 90.91 | 84.97 | 94.07 | 95.68 | 92.67 |
| Outdoor | ACMM | 79.71 | 88.57 | 72.67 | 89.54 | 96.19 | 83.86 | 83.58 | 89.63 | 79.17 | 91.12 | 96.97 | 86.31 |
| Outdoor | MAR-MVS | 77.92 | 80.88 | 75.81 | 88.33 | 92.71 | 84.88 | 85.27 | 84.73 | 86.44 | 93.07 | 94.66 | 91.86 |
| Outdoor | COLMAP | 68.70 | 88.16 | 57.73 | 82.85 | 96.05 | 73.76 | 80.81 | 92.04 | 72.98 | 89.74 | 97.13 | 83.94 |
| Outdoor | CLD-MVS | 77.16 | 77.54 | 77.45 | 88.82 | 91.05 | 86.83 | 84.29 | 84.79 | 83.86 | 91.18 | 93.04 | 89.44 |
| Outdoor | ACMMP | 81.26 | 88.56 | 75.30 | 91.87 | 95.93 | 88.22 | 87.38 | 92.03 | 83.93 | 94.30 | 97.43 | 91.64 |
| Outdoor | DeepC-MVS | 83.37 | 87.82 | 79.42 | 92.73 | 96.08 | 89.71 | 87.69 | 89.37 | 86.37 | 94.26 | 95.71 | 92.94 |
| Outdoor | Ours | 83.28 | 89.55 | 77.96 | 93.08 | 96.37 | 90.06 | 89.93 | 91.83 | 85.17 | 94.77 | 97.51 | 92.42 |
| All | ACMM | 78.86 | 90.67 | 70.42 | 87.68 | 96.31 | 80.91 | 80.78 | 90.65 | 74.34 | 89.14 | 96.30 | 83.72 |
| All | MAR-MVS | 79.21 | 81.98 | 77.19 | 88.39 | 91.86 | 85.65 | 81.84 | 80.24 | 84.18 | 90.30 | 90.32 | 90.63 |
| All | COLMAP | 67.66 | 91.85 | 55.13 | 80.50 | 97.09 | 69.91 | 73.01 | 91.97 | 62.98 | 83.96 | 96.75 | 75.74 |
| All | CLD-MVS | 79.35 | 82.75 | 77.36 | 88.66 | 92.81 | 85.43 | 82.31 | 83.18 | 82.73 | 89.47 | 91.82 | 87.97 |
| All | ACMMP | 83.42 | 90.63 | 77.61 | 92.03 | 96.12 | 88.48 | 85.89 | 91.91 | 81.49 | 93.24 | 96.61 | 90.39 |
| All | DeepC-MVS | 84.81 | 90.37 | 80.30 | 92.26 | 96.44 | 88.80 | 87.07 | 89.15 | 85.52 | 93.31 | 95.43 | 91.53 |
| All | Ours | 85.50 | 90.84 | 80.94 | 93.35 | 96.13 | 90.81 | 87.71 | 91.14 | 85.02 | 94.25 | 96.14 | 92.61 |

| Approach | Ave. | court. | deli. | elec. | faca. | kick. | mead. | offi. | pipes | playgr. | relief | relief2 | terra. | terrain. |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| COLMAP | 76.67 | 74.09 | 75.25 | 79.38 | 81.65 | 67.32 | 61.65 | 85.57 | 69.98 | 71.26 | 83.49 | 63.91 | 75.01 | 87.71 |
| ACMMP | 35.49 | 35.06 | 30.69 | 33.12 | 42.03 | 39.37 | 31.53 | 29.69 | 23.39 | 36.37 | 38.86 | 35.48 | 30.04 | 37.53 |
| ACMM | 28.70 | 28.17 | 24.78 | 26.74 | 35.94 | 31.89 | 25.08 | 24.21 | 18.82 | 28.14 | 30.14 | 28.07 | 24.39 | 29.68 |
| Ours | 30.32 | 27.76 | 24.36 | 27.16 | 36.96 | 37.98 | 28.70 | 25.17 | 18.29 | 27.68 | 34.94 | 30.28 | 24.82 | 34.29 |

IV-A Ablation study

We performed ablation experiments on the ETH3D training set to validate the effects of our mPM, our checkerboard sampling scheme, and the planar prior model generated from geometric consistency. We use ACMH as the baseline and add our modules incrementally. For depth map fusion, we used the latest fusion scheme of ACMMP to ensure that the best results are obtained for each module. The results are shown in Table 1.

Compared to the baseline, mPM significantly improves the completeness of scene reconstruction through multi-scale windows, while this coarse-to-fine approach costs only a small loss of accuracy. Similarly, our proposed checkerboard sampling scheme propagates correct hypotheses more efficiently in untextured regions, which is why the completeness of scene reconstruction improves significantly. Generating the planar prior model from geometric consistency information yields more accurate results than using photometric consistency directly. These experiments show that our proposed approach balances accuracy and completeness well and significantly improves depth estimation in untextured regions with a small loss of accuracy.

IV-B Evaluation

We select several state-of-the-art approaches for comparison with ours. Table 2 lists the training and test set results of these approaches on the ETH3D high-resolution multi-view benchmark, which are publicly available on the ETH3D benchmark server. Indoor scenes often contain a large number of artificial objects, which results in more untextured areas. Since we use several methods to improve the completeness of untextured areas, our approach overall obtains the best completeness and F1-score in indoor scenes. In more complex outdoor scenes, our proposed approach also shows strong performance in terms of accuracy. Overall, our approach is highly adaptable to both indoor and outdoor scenes.
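For readers less familiar with the benchmark metrics, the F1-score combines accuracy and completeness as their harmonic mean. The short Python sketch below shows the computation; the function name is an assumption for illustration. Note that applying it to the averaged accuracy and completeness of a table row does not exactly reproduce the reported F1 value, presumably because the benchmark averages per-scene scores.

```python
def f1_score(accuracy, completeness):
    """Harmonic mean of accuracy and completeness (both in percent)."""
    if accuracy + completeness == 0:
        return 0.0
    return 2.0 * accuracy * completeness / (accuracy + completeness)


if __name__ == "__main__":
    # ACMH baseline on the training set at 2cm: Acc 92.04, Comp 70.74.
    print(round(f1_score(92.04, 70.74), 2))
```

The harmonic mean penalizes imbalance, so a method with very high accuracy but low completeness, such as COLMAP in the comparison, scores lower than a method that balances the two.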

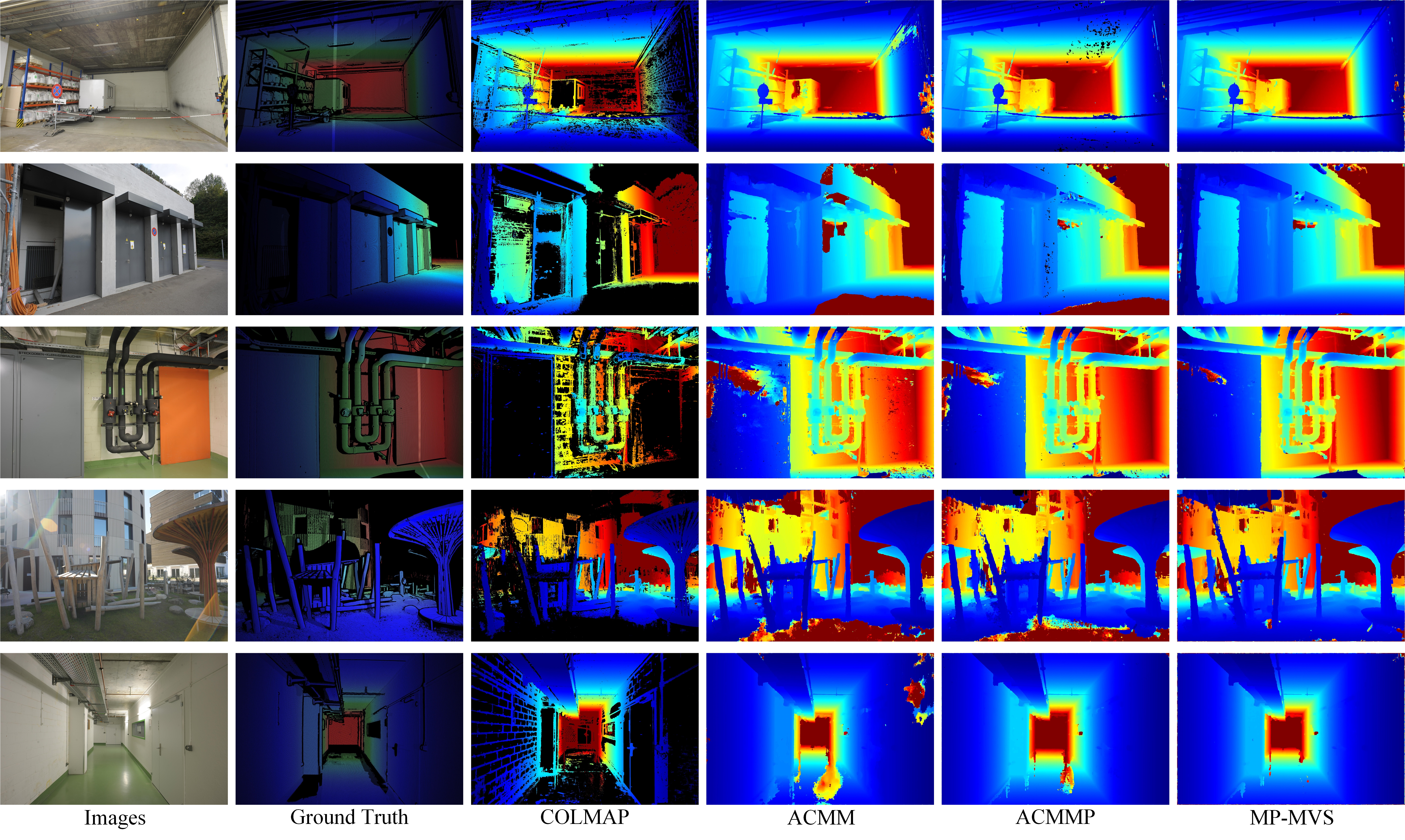

In addition to quantitative analysis, we have also selected some open-source approaches for visual comparison to show the performance of our approach more intuitively. We selected five scenes for depth map estimation: delivery_area, electro, pipes, playground, and terrains. The results of the depth map visualization obtained by several approaches are presented in Fig. 6. COLMAP eliminates unreliable depth estimates by a strict strategy but at the expense of depth map completeness. ACMM and ACMMP obtain good results in untextured areas, but in some challenging areas that are only observed by a few images, these approaches have difficulty in estimating correct depth. It can be seen that the ground in part of the scenes (electro, playground, terrains) only has the correct depth calculated by ours. The playground scene has many thin foregrounds, and our depth estimation results are significantly better than other approaches for thin foregrounds, which confirms that our proposed checkerboard sampling scheme has no significant effect on thin objects.

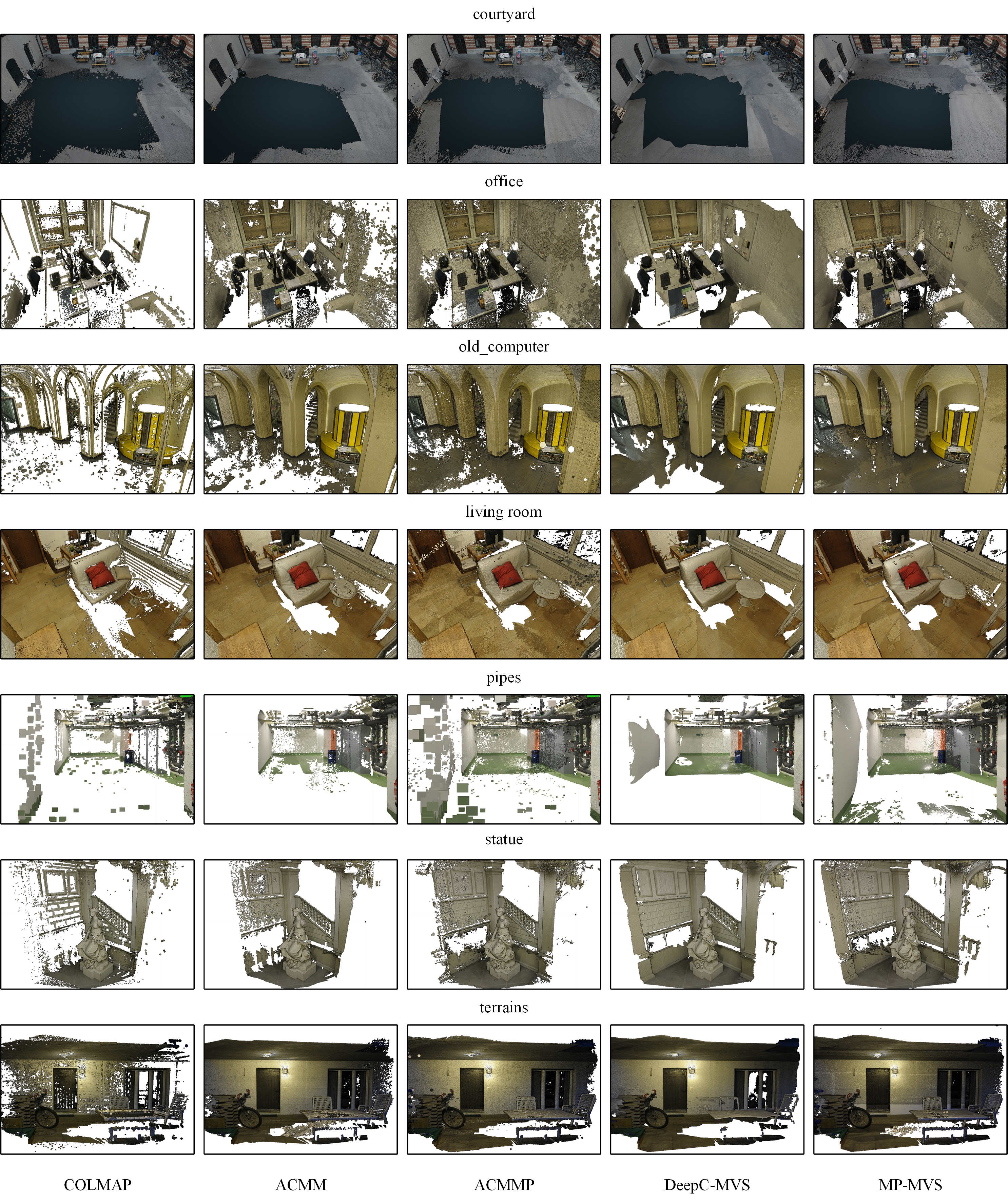

We also compare our point cloud reconstruction results with those publicly available on the ETH3D benchmark server (Fig. 7). Our approach obtains the most complete point clouds and is more robust, especially in untextured areas.

IV-C Runtime performance

Table 3 lists the average running time (in seconds) per depth map for several approaches and ours. Considering the variability of data sizes and scenarios, we detail the runtimes for all scenes in the ETH3D training dataset, with all approaches running on our platform on a single GPU. ACMM and ACMMP downsample all images twice; ACMM performs 7 iterations and ACMMP performs 10 iterations at each image scale. COLMAP performs 5 iterations at a single image scale, and our approach performs 16 iterations at a fixed image scale.

Since our mPM simplifies the multi-scale depth estimation, our approach is close to ACMM in terms of running time in most scenes, and even faster in some scenes. Our approach is 17% faster than ACMMP which also uses a multi-scale approach and planar prior assisted PatchMatch, while the performance of our approach is stronger than the above approaches.
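The speed comparison can be checked with a few lines of Python using the average runtimes from Table 3 (35.49 s for ACMMP, 30.32 s for ours). The helper names are assumptions for illustration. The sketch shows two common conventions: the ratio of runtimes, which gives about 17%, and the fraction of time saved, which gives a smaller figure.

```python
def percent_faster(baseline_seconds, ours_seconds):
    """Speed gain expressed as how much longer the baseline takes, in percent."""
    return (baseline_seconds / ours_seconds - 1.0) * 100.0


def percent_time_saved(baseline_seconds, ours_seconds):
    """Reduction in runtime relative to the baseline, in percent."""
    return (1.0 - ours_seconds / baseline_seconds) * 100.0


if __name__ == "__main__":
    acmmp_avg, ours_avg = 35.49, 30.32  # average seconds per depth map, Table 3
    print(round(percent_faster(acmmp_avg, ours_avg), 2))
    print(round(percent_time_saved(acmmp_avg, ours_avg), 2))
```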

V Conclusion

In this paper, we propose a multi-scale windows PatchMatch (mPM) that can be easily extended to PatchMatch-based MVS approaches. In addition, we optimize the existing checkerboard sampling scheme for more effective spatial propagation, and we generate a more accurate planar prior model by geometric consistency. These strategies effectively improve the completeness of scene reconstruction. We evaluated our approach, which integrates the above strategies, on the ETH3D dataset. The results show that our method achieves state-of-the-art 3D reconstruction on high-resolution datasets.

VI References

- [1] J. L. Schonberger and J. M. Frahm, “Structure-from-motion revisited,” in Proc. IEEE Conf. Computer Vision Pattern Recognition, pp. 4104-4113, 2016.

- [2] N. Snavely, S. M. Seitz, and R. Szeliski, “Photo tourism: exploring photo collections in 3D,” in ACM SIGGRAPH 2006 Papers, pp. 835-846, 2006.

- [3] C. Wu, “Towards linear-time incremental structure from motion,” in Proc. Int. Conf. 3D Vision-3DV, pp. 127-134, IEEE, 2013.

- [4] T. Schops et al., “A multi-view stereo benchmark with high-resolution images and multi-camera videos,” in Proc. IEEE Conf. Computer Vision Pattern Recognition, pp. 3260-3269, 2017.

- [5] H. Aanæs, R. R. Jensen, G. Vogiatzis, E. Tola, and A. B. Dahl, “Large-scale data for multiple-view stereopsis,” Int. J. Computer Vision, vol. 120, pp. 153-168, 2016.

- [6] A. Knapitsch, J. Park, Q. Y. Zhou, and V. Koltun, “Tanks and temples: Benchmarking large-scale scene reconstruction,” ACM Trans. Graphics (TOG), vol. 36, no. 4, pp. 1-13, 2017.

- [7] C. Strecha, W. Von Hansen, L. Van Gool, P. Fua, and U. Thoennessen, “On benchmarking camera calibration and multi-view stereo for high resolution imagery,” in Proc. IEEE Conf. Computer Vision Pattern Recognition, pp. 1-8, IEEE, 2008.

- [8] C. Barnes, E. Shechtman, A. Finkelstein, and D. B. Goldman, “PatchMatch: A randomized correspondence algorithm for structural image editing,” ACM Trans. Graph., vol. 28, no. 3, p. 24, 2009.

- [9] Q. Xu and W. Tao, “Multi-scale geometric consistency guided multi-view stereo,” in Proc. IEEE/CVF Conf. on Computer Vision and Pattern Recognition, pp. 5483-5492, 2019.

- [10] Y. Wang, T. Guan, Z. Chen, Y. Luo, K. Luo, and L. Ju, “Mesh-guided multi-view stereo with pyramid architecture,” in Proc. IEEE/CVF Conf. on Computer Vision and Pattern Recognition, pp. 2039-2048, 2020.

- [11] A. Kuhn, C. Sormann, M. Rossi, O. Erdler, and F. Fraundorfer, “Deepc-mvs: Deep confidence prediction for multi-view stereo reconstruction,” in Proc. 2020 Int. Conf. on 3D Vision (3DV), pp. 404-413, IEEE, 2020.

- [12] Q. Xu, W. Kong, W. Tao, and M. Pollefeys, “Multi-scale geometric consistency guided and planar prior assisted multi-view stereo,” IEEE Trans. on Pattern Analysis and Machine Intelligence, vol. 45, no. 4, pp. 4945-4963, 2022.

- [13] Z. Xu, Y. Liu, X. Shi, Y. Wang, and Y. Zheng, “Marmvs: Matching ambiguity reduced multiple view stereo for efficient large scale scene reconstruction,” in Proc. IEEE/CVF Conf. on Computer Vision and Pattern Recognition, pp. 5981-5990, 2020.

- [14] S. M. Seitz and C. R. Dyer, “Photorealistic scene reconstruction by voxel coloring,” Int. J. Computer Vision, vol. 35, pp. 151-173, 1999.

- [15] G. Vogiatzis, C. H. Esteban, P. H. Torr, and R. Cipolla, “Multiview stereo via volumetric graph-cuts and occlusion robust photo-consistency,” IEEE Trans. on Pattern Analysis and Machine Intelligence, vol. 29, no. 12, pp. 2241-2246, 2007.

- [16] S. N. Sinha, P. Mordohai, and M. Pollefeys, “Multi-view stereo via graph cuts on the dual of an adaptive tetrahedral mesh,” in Proc. 2007 IEEE 11th Int. Conf. on Computer Vision, pp. 1-8, IEEE, 2007.

- [17] O. Faugeras and R. Keriven, “Variational principles, surface evolution, PDE’s, level set methods and the stereo problem,” IEEE, pp. 83, 2002.

- [18] V. H. Hiep, R. Keriven, P. Labatut, and J. P. Pons, “Towards high-resolution large-scale multi-view stereo,” in Proc. 2009 IEEE Conf. on Computer Vision and Pattern Recognition, pp. 1430-1437, IEEE, 2009.

- [19] S. Li, S. Y. Siu, T. Fang, and L. Quan, “Efficient multi-view surface refinement with adaptive resolution control,” in Computer Vision–ECCV 2016: 14th European Conference, pp. 349-364, Springer International Publishing, 2016.

- [20] M. Lhuillier and L. Quan, “A quasi-dense approach to surface reconstruction from uncalibrated images,” IEEE Trans. on Pattern Analysis and Machine Intelligence, vol. 27, no. 3, pp. 418-433, 2005.

- [21] M. Goesele, N. Snavely, B. Curless, H. Hoppe, and S. M. Seitz, “Multi-view stereo for community photo collections,” in Proc. 2007 IEEE 11th Int. Conf. on Computer Vision, pp. 1-8, IEEE, 2007.

- [22] D. G. Lowe, “Distinctive image features from scale-invariant keypoints,” Int. J. Computer Vision, vol. 60, pp. 91-110, 2004.

- [23] S. Galliani, K. Lasinger, and K. Schindler, “Massively parallel multiview stereopsis by surface normal diffusion,” in Proc. IEEE Int. Conf. on Computer Vision, pp. 873-881, 2015.

- [24] J. L. Schönberger, E. Zheng, J. M. Frahm, and M. Pollefeys, “Pixelwise view selection for unstructured multi-view stereo,” in Computer Vision–ECCV 2016: 14th European Conference, pp. 501-518, Springer International Publishing, 2016.

- [25] J. Liao, Y. Fu, Q. Yan, and C. Xiao, “Pyramid multi‐view stereo with local consistency,” Computer Graphics Forum, vol. 38, no. 7, pp. 335-346, 2019.

- [26] A. Romanoni and M. Matteucci, “Tapa-mvs: Textureless-aware patchmatch multi-view stereo,” in Proc. IEEE/CVF Int. Conf. on Computer Vision, pp. 10413-10422, 2019.

- [27] Q. Xu and W. Tao, “Planar prior assisted patchmatch multi-view stereo,” in Proc. AAAI Conf. on Artificial Intelligence, vol. 34, no. 07, pp. 12516-12523, 2020.

- [28] A. Kuhn, S. Lin, and O. Erdler, “Plane completion and filtering for multi-view stereo reconstruction,” in Pattern Recognition: 41st DAGM German Conference, DAGM GCPR, pp. 18-32, Springer International Publishing, 2019.

- [29] Z. Li, W. Zuo, Z. Wang, and L. Zhang, “Confidence-based large-scale dense multi-view stereo,” IEEE Trans. on Image Processing, vol. 29, pp. 7176-7191, 2020.

- [30] D. Gallup, J. M. Frahm, and M. Pollefeys, “Piecewise planar and non-planar stereo for urban scene reconstruction,” in Proc. 2010 IEEE Computer Society Conf. on Computer Vision and Pattern Recognition, pp. 1418-1425, IEEE, 2010.

- [31] H. Guo, S. Peng, H. Lin, Q. Wang, G. Zhang, H. Bao, and X. Zhou, “Neural 3d scene reconstruction with the manhattan-world assumption,” in Proc. IEEE/CVF Conf. on Computer Vision and Pattern Recognition, pp. 5511-5520, 2022.