MoVL:Exploring Fusion Strategies for the Domain-Adaptive Application of Pretrained Models in Medical Imaging Tasks

Abstract

Medical images are often more difficult to acquire than natural images due to the specialism of the equipment and technology, which leads to less medical image datasets. So it is hard to train a strong pretrained medical vision model. How to make the best of natural pretrained vision model and adapt in medical domain still pends. For image classification, a popular method is linear probe (LP). However, LP only considers the output after feature extraction. Yet, there exists a gap between input medical images and natural pretrained vision model. We introduce visual prompting (VP) to fill in the gap, and analyze the strategies of coupling between LP and VP. We design a joint learning loss function containing categorisation loss and discrepancy loss, which describe the variance of prompted and plain images, naming this joint training strategy MoVL (Mixture of Visual Prompting and Linear Probe). We experiment on 4 medical image classification datasets, with two mainstream architectures, ResNet and CLIP. Results shows that without changing the parameters and architecture of backbone model and with less parameters, there is potential for MoVL to achieve full finetune (FF) accuracy (on four medical datasets, average 90.91% for MoVL and 91.13% for FF). On out of distribution medical dataset, our method(90.33%) can outperform FF (85.15%) with absolute 5.18 % lead.

1 Introduction

Recently, the evolution of foundation models in deep learning represent the paradigm of pre-training followed by finetuning. These models are extensively trained on tremendous data, establishing a broad base of knowledge, and then adapted to specific downstream tasks through finetuning. However, it is challenging for specific domains such as medical images, where the collection of substantial medical data is limited by privacy concerns or other constraints. Unlike the ease of capturing natural data, acquiring medical images such as X-ray is not as straight forward, leading to a scarcity of medical data compared to natural data. Despite effortes in Wang et al. (2022); Zhou et al. (2022b) developing visual pretrained models in specific medical domains, their performance often cannot match that of natural models. A usual and reasonable way is to make best use of natural pretrained model, which contains immense knowledge for feature extraction, to adapt in medical downstream tasks. While medical images and natural images differ significantly, they share certain features such as edge, corner and point. However, how to efficiently adapting the natural pretrained models for medical downstream tasks remains an unresolved challenges.

In addressing the challenge of adapting pre-trained models for downstream tasks, a commonly considered approach is full finetuning the pretrained model. However, this method needs to update numerous parameters, leading to considerable computation cost. In addition, there are some parameter efficient finetuning methods, such as Adapter Chen et al. (2022) and LoRA Hu et al. (2021). However, most of them require model modifications. Another popular adaptation method is linear probe, which adds a fully connected layer after the image encoder, does not change the original architecture with a minimal set of trainable parameters. Yet, as illustrated in Figure 2, a significant gap exists between the input distribution of the medical images and natural pretrained model. This discrepancy raises a crucial question of input for LP.

In natural language processing (NLP), prompt is an emerging method to adapt downstream tasks Petroni et al. (2019); Brown et al. (2020); Wei et al. (2022); Hambardzumyan et al. (2021). For example, give the prompt ”Suppose you play a role of a programmer” to guide the large language model (LLM) to solve the problems in coding. Prompt is considered to change the input distribution so that being closer to the distribution of downstream tasks Liu et al. (2023). Inspired by this idea, visual prompt (VP) is introduced to computer vision tasks. Prompting a point or a box to stress on the object for segmentation may be intuitive Kirillov et al. (2023). By adding prior knowledge, we can lead the model to focus on specific location. Another method tries to add perturbation to the input images, which changes the distribution of original images Bahng et al. (2022). This process may fill the gap between medical images and natural pretrained model.

While this method’s potential lies in its orthogonal to other finetuning methods that shift the input distribution, it still faces the issue of label matching(LM). In scenarios where classifiers like ResNet are not specifically trained, we may establish rules to matching the labels. For instance, one may select the first ten categories from the 1000 Imagenet Deng et al. (2009) categories for CIFAR10 Krizhevsky et al. (2009) classification task. Although there are existing work on LM Chen et al. (2023), it still challenging to fill the gap of output without training LP. For CLIP Radford et al. (2021), it seems getting a relatively better results because of text prompt, but when it switched to medical downstream tasks, complicated terms can also be difficult to extract text information. On account of output label matching limitation, how to fill the output gap for VP?

To tackle the previous challenges, we propose a method, named Mixture of Visual Prompting and Linear Probe (MoVL), which combines the adaptability of VP with the strength of LP for transfer learning, effectively addressing both input and output gaps without modifying the architecture or parameters of the pretrained model. We take different training strategies into consideration and choose an efficient mixed strategy, which trains VP and LP together from the initial stage. As shown in Figure 1, our method also considers the inherent information in the original image without VP . The original output is used as a reference in the loss function, enhancing the prompted output. Our contributions are listed below:

1. We propose an innovative mixed training strategy that effectively combines the adaptability of Visual Prompting (VP) with the strength of Linear Probe (LP) in transfer learning. This strategy commences from the initial training phase, simultaneously training both VP and LP components, ensuring a harmonized integration of their benefits.

2. Based on the mixed strategy, we design a novel joint learning loss comprising categorization loss and discrepancy loss, to utilize information from the original image. It updates model parameters by contrasting original images with their prompted counterparts, thus improving classification accuracy.

3. We perform extended experiments on different architectures and sizes of backbone models. According to the results, our strategy and loss can improve the accuracy on different medical downstream classification datasets.

2 Related Work

2.1 Prompt in NLP

In Natural Language Processing (NLP), the technique of prompting has been extensively utilized to stimulate and guide Pretrained Language Models (PLMs) in downstream tasks, which can be categorized into two types: hard prompts and soft prompts.

Hard Prompts, also known as templates, typically refer to predefined and fixed input patterns that are directly inserted into the model’s inputs during model training or inference. Petroni et al. (2019) effectively demonstrated the potential of hard prompts in extracting implicit knowledge from pre-trained models. Brown et al. (2020) revealed the important role of hard prompts in quickly adapting models to new tasks. Wei et al. (2022) utilised ”chain of thought” to enhance the model’s capability in logical reasoning and problem solving.

Soft Prompts, on the other hand, are those prompts that are dynamically, which do not change the parameters of the model, but rather influence the model’s behaviour by adjusting the distribution of inputs. Hambardzumyan et al. (2021) discussed that soft prompts can improve the flexiblity and effectiveness of pre-trained language models. Chen et al. (2024) demonstrated the effectiveness of continuous soft prompts in enhancing the model’s ability to perform knowledge-intensive tasks, whilst also highlighting the potential of automatically generated prompts in reducing manual intervention and increasing efficiency.

From the above research, it is evident that prompting techniques show immense potential in enhancing language model performance, knowledge extraction, and understanding model behavior.

2.2 Prompt in CV

As computer vision (CV) advancing, prompting techniques have expanded into visual tasks. While not yet widely applied, recent studies Zhou et al. (2022a); Gu et al. (2023) have demonstrated the unique potential of visual prompting techniques in enhancing model performance, interpreting model behavior, and facilitating cross-modal understanding.

A study Jia et al. (2022) demonstrated the effectiveness of visual prompt tuning (VPT) on a variety of image classification tasks. The approach achieves prompting of input visual features by adding a small number of learnable parameters before the transformer block. This approach adds trainable prompts only at the input of the model, avoiding the need to completely retrain the model and effectively reducing the training cost while maintaining the performance of the model.

Visual prompting Bahng et al. (2022), by adding a ring of learnable perturbation pixels around the input images, guides the model to better perform in specific visual downstream tasks. Activation Prompt Anonymous (2023) involves adding perturbations to the feature maps generated during the feature extraction process. This method enhances the training efficiency of visual prompts and offers a new perspective for understanding and improving the internal workings of visual models. Another research Chen et al. (2023), from the perspective of label mapping, explored and optimized the correspondence between visual prompts and labels to improve model classification accuracy, providing a deeper understanding of how models comprehend and process visual prompts.

A different approach does not superimpose visual prompts directly onto images but treats visual prompts as an additional and independent learnable component Wu et al. (2022). It enhances model accuracy and adaptability in complex visual tasks by appropriately scaling images to coordinate their pixel ratio with visual prompts. The paper also indicates that proper normalization can improve the performance of visual prompting.

2.3 Finetuneing Methods

Efficiently finetuning pretrained models for specific tasks is an attractive area of research in CV. Currently, despite full finetune, mainstream efficient finetuning techniques can be categorized into two main types: methods that change the model structure and those that do not. Methods that alter the model structure include Adapter Chen et al. (2022) and LoRA Hu et al. (2021). Full finetune is a traditional finetuning method that involves updating all parameters of the model. Adapter and LoRA are lightweight finetuning techniques that introduce a minimal number of additional parameters into the model. Without changing the original pretrained parameters of the model, only the newly introduced parameters are trained for finetuning. The distinction is that Adapter works in series with the backbone model, while LoRA operates in parallel.

As for techniques that do not change the model structure, the popular Linear Probe approach does not alter the model’s architecture but adds a linear classification head after the pretrained model. It adapts to new tasks by swiftly learning the weights of the linear layer. Prompt engineering refers to changing the distribution of input data to enhance model performance in downstream tasks.

The choice of an appropriate finetuning strategy depends on specific task requirements, resource availability, and the similarity between the model and the task. From the comprehensive learning of full finetune to the parameter economy of LoRA and Adapter, and the structural preservation of LP and VPT Jia et al. (2022), each method offers different trade-offs in practical applications.

3 Method

Finding how to effectively couple the visual prompting and linear probe is the key idea of our method. We will elaborate our method and how to design the loss function to promote the collaboration. In section 3.1, we define the optimization problem and mathematical symbols. Then in section 3.2, we conclude the properties of VP and LP, and their limitations. For section 3.3, details of MoVL (Mixture of Visual Prompting and Linear Probe) will be presented.

3.1 Problem Definition

In this paper, the primary concern on determining the most effective approach for leveraging natural pretrained models to achieve accurate classification of medical images. Input images are randomly selected from the target medical domain dataset . Subsequently, we add VP to the input image. This process can be denoted as:

where denotes the prompted images, denotes VP and denotes different ways of adding VP, which can create a pixel patch at random or fix location, paddling around the images Bahng et al. (2022) or resize the input images first and then paddling around the images Wu et al. (2022). Next, we input into the pretrained backbone model , and used the classifier to get final result. In this process, we formulate the optimization object:

where denotes loss function, denotes training dataset of target medical domain, and denotes pretrained backbone model parameters.

3.2 Comparison with LP and VP

For transfer learning with only LP, the input can be rewrited as

There is no visual prompt added to the input image and vanilla medical images will be sent to natural image encoder. We notice from to existing a gap from target to source domain. For example, ResNet He et al. (2016) is pretrained on imagenet Deng et al. (2009), which is a dataset of natural images. of ResNet is a set of particular parameters to extract the natural features. Despite medical images may contains some of the common features, but still maintains unique medical features.

For transfer learning with only VP, the formula can be rewrited as:

denotes the classifier for label matching (LM). Random label matching (RLM) and frequency label matching (FLM) are usually used in VP label matching process. RLM appoint the output label randomly, without considering the relationship between the labels. FLM select labels according to the probability of the labels, but without considering the change of VP. Although there is already work Chen et al. (2023) researching on LM, the accuracy of VP is still hard to reach LP’s.

3.3 MoVL

MoVL (Mixture of Visual Prompting and Linear Probe) is an designed algorithm containing training strategy and joint learning loss. The algorithm process is following:

Input: Target domain images

Parameter: Frozen backbone image encoder parameters , unfrozen VP parameters , unfrozen LP parameters , visual prompting , linear probe , image encoder , denotes for correct label confidence selecting

return

3.3.1 Training strategy

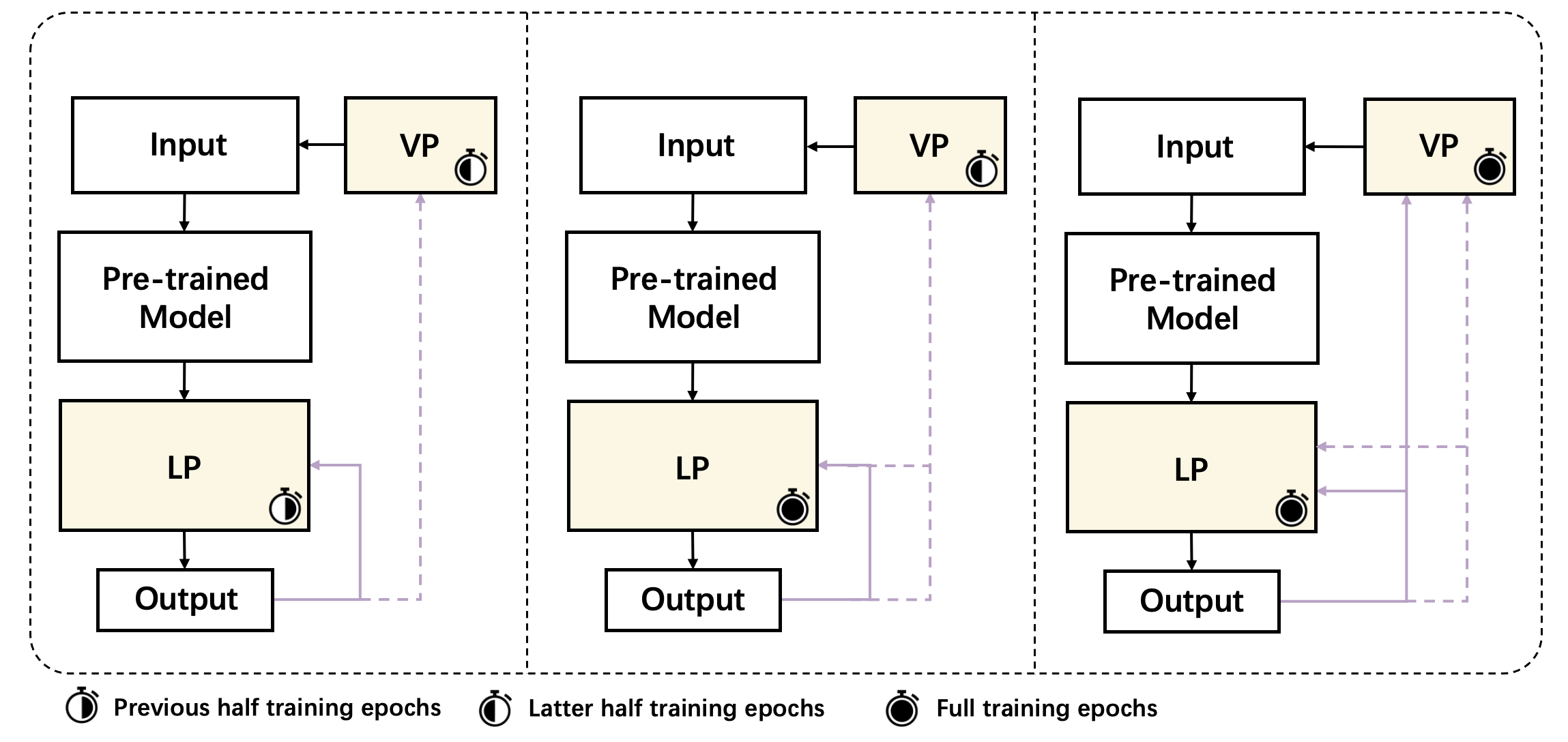

Taking the properties of LP and VP into account, it is complementary and compatible for two parts, but how to couple the two parts still pends. We design three different training strategies, LPVP, LPmix, and mix, as shown in Figure 3. The three training strategies are as follows:

LPVP: First train LP and then VP.

LPmix: First train LP and then together LP and VP.

mix: Train LP and VP from the beginning.

Noting that LP is always trained first or together with VP, for the label matching deficiency of VP. If VP is trained first, the learned visual prompt corresponding to wrong label, VP may not contribute to the correctly matched label. So we trained LP for correct label matching first, and then VP to fill the gap between source natural images to target medical images. Additionally, mix strategy may help with each module, because VP changes the distribution of input space and LP classify the feature map to corresponding labels, which is the output space. When input space and output space are both changeable, it is an intuitive way to train two parts together with frozen parameters .

Further considering LPVP strategy, if LP is frozen when VP trained, although LP can match labels to some extent, VP is constrained to locked LP. Essentially, VP still confront LM problem in section 3.2. However, mix strategy can alleviate the problem, because both VP and LP are trained in a conjunct space and finally converge to global optimization together, which may avoid falling into a local optimum. So we select mix as a base strategy.

3.3.2 Joint learning loss

To enhance the training of LP and VP by utilizing the information from the original input images, we specially designed the loss function. Many previous study, such as Wu et al. (2022), Chen et al. (2023), Chen et al. (2022) may only use images with VP for training, ignoring the information containing in original input images. Thus we redesign the loss function to make use of neglected information from original input. Specifically, the loss function can be represented as:

denotes the correct classification probability of input image with VP and denotes the correct classification probability of input image without VP. is the vanilla cross entropy loss, which is also called categorisation loss. The difference of and depict how far prompted image exceed reference, the original image without prompt, which is named as discrepancy loss. Joint learning loss function combines categorisation loss and discrepancy loss and denotes a superparameter to balance two parts weights.

From intuitive understanding, we encourage the model improve the confidence of correct classification with VP contrast to without VP, we name this ’confidence improvement’. We take the results of images without VP as a baseline, to instruct how further the results of images with VP can be better. The more beyonds , the less of the joint learning loss value.

There need to be highlighted that with only such a loss can not achieve the goal of improving confidence of VP and get a better accuracy, for can be widen by suppressing , which may just decrease the confidence of original results. Hence, we detach the original output results to avoid such suppression, with only update the parameters of with VP process.

4 Experiments

In this section, we will demonstrate the experiment details and the results among different datasets and baselines, which followed by analysis and explanation.

4.1 Experiment setups

We conducted the experiments with four datasets, inclulding HAM10000Tschandl et al. (2018), PathMNIST, BloodMNISTYang et al. (2023, 2021), and Camelyon17 Bandi et al. (2018). HAM10000 Tschandl et al. (2018) is a dataset of skin lesion images, which contains a total of 10,015 images of seven types of skin lesions from clinical settings: actinic keratoses, basal cell carcinoma, bening keratosis-like lesions, dermatofibroma, melanocytic nevi, vascular lesions, and melanoma.

PathMNIST and BloodMNIST are two tasks in MedMNIST for the classification of biomedical images.Yang et al. (2023, 2021). PathMNIST is a dataset collected from colorectal cancer histology slides, which contains 107,180. There are nine types of tissues in PathMNIST: adipose, background, debris, lymphocytes, mucus, smooth muscle, normal colon mucosa, cancer-associated stroma, colorectal adenocarscinoma epithelium. BloodMNIST contains images of normal cells without infection, hematologic or oncologic disease, and free of any pharmacologic treatment at the time of blood collection. It consists of a total of 17,092 images categorized into 8 classes, which are basophil, eosinophil, erythroblast, immature granulocytes, lymphocyte, monocyte, neutrophil, and platelet.

Camelyon17 Bandi et al. (2018) is a dataset designed to probe model’s capability on the condition of out-of-distribution (OOD). We use the version from WILDS benchmark Koh et al. (2021); Sagawa et al. (2022). The tissue patches are collected from different hospitals, then was divided into training, validation and test set, which is a classic circumstance of distribution shift. It contains 45K images of data with two categories, benign or tumor.

The official split is adopted for all datasets.

| Baseline | ResNet18 | ResNet50 | ViT-B/32 | ViT-L/14 |

|---|---|---|---|---|

| LP | 82.028 | 92.362 | 83.712 | 88.303 |

| VP | 73.921 | 77.224 | 84.351 | 95.100 |

| MoVL | 91.648 | 94.460 | 94.066 | 91.339 |

| FF | 99.084 | 98.913 | 99.265 | 99.425 |

| Baseline | ResNet18 | ResNet50 | ViT-B/32 | ViT-L/14 |

|---|---|---|---|---|

| LP | 86.532 | 88.872 | 89.123 | 89.457 |

| VP | 74.624 | 77.145 | 81.518 | 87.173 |

| MoVL | 87.130 | 89.540 | 92.033 | 90.585 |

| FF | 91.365 | 86.797 | 93.370 | 94.875 |

| Baseline | ResNet18 | ResNet50 | ViT-B/32 | ViT-L/14 |

|---|---|---|---|---|

| LP | 87.284 | 89.652 | 88.395 | 90.119 |

| VP | 73.020 | 73.517 | 77.580 | 85.443 |

| MoVL | 90.909 | 91.611 | 90.792 | 92.692 |

| FF | 96.200 | 96.083 | 96.638 | 98.100 |

| Baseline | ResNet18 | ResNet50 | ViT-B/32 | ViT-L/14 |

|---|---|---|---|---|

| LP | 87.657 | 89.373 | 90.331 | 89.666 |

| VP | 86.271 | 85.411 | 86.059 | 89.772 |

| MoVL | 89.091 | 89.675 | 92.075 | 90.462 |

| FF | 80.985 | 79.446 | 86.861 | 93.299 |

We conducted the experiments with ResNet He et al. (2016) and CLIP Radford et al. (2021) as backbone models. For each of the architecture, we select two different sizes to show the robustness of our method. For ResNet, we choose ResNet18 and ResNet50, which are pretrained on Imagenet-1K Deng et al. (2009). For CLIP, we choose ViT-B/32 and ViT-L/14 visual encoder.

We train the model with AdamW optimizer Loshchilov and Hutter (2017). Except for mix strategy of ViT-L/14, we use the learning rate of 0.01. Because we find that it is hard for mix strategy convergence with such big backbone model as the same epochs with other model settings, we modify the learning rate to 0.1 for all mix strategy experiments with ViT-L/14. We use cosine scheduler Loshchilov and Hutter (2016) to decrease the learning rate as epochs increasing and weight decay is set to 0. Other parameters are default for AdamW optimizer. Warmup steps is set to 10. For HAM10000, PathMNIST and BloodMNIST datasets, we use the batch size of 128. For Camelyon17 dataset, we use the batchsize of 256. For all experiments, we use the prompt method of paddle and the prompt size is set to 30 pixels. The input images are first resize to (224-2*30)*(224-2*30) and then adding VP, so the prompted input images are 224*224 size Wu et al. (2022). VP is initialized as all zeroes pixels with minimum = 0.001.

For only LP or mix training period, namely one stage training, we use 20 epochs totally. For two stage training, we train 10 epochs for each stage.

4.2 Experiment Results

For model validation, we first choose full finetune as the upper benchmark on ID datasets, given its capability to update a larger number of parameters. We also choose LP and VP as two baseline finetuning methods. These methods do not change the original parameters or architecture of the backbone image encoder. For LP and VP, we adhered to the same settings mentioned in section 4.1. For full finetune, we still use learning rate 0.01 for ResNet but 0.0001 for CLIP. The text prompt for CLIP follows the work byRadford et al. (2021),specifically ’This is a photo of a { }’. For dataset Camelyon17, we use text labels ’benign tissue region’ and ’tissue tumor region’. For other datasets, we use the labels as described in section 4.1.

From Table 1, VP performs better using CLIP architecture. This may be caused by output label matching gap of frozen linear probe in ResNet. For text prompt of CLIP, calculating the similarity between text and image can remit the gap to some extent, but professional medical terms can still be awkward. LP performs relatively stable than VP. However, there is a great distance from full finetune, which may caused by medical input and natural image encoder gap.

Our proposed MoVL (average of 90.917%) can outperform than single LP (average of 88.304%) or VP (average of 81.758%) for most experiments, indicating that only one module involved can be restricted by its proverties. However, a mixture strategy can be complementary and improve the accuracy. When contrast with full finetune (average of 91.132%), there is potential for MoVL to reach accuracy of full finetune.

We notice that on OOD dataset Camelyon17, full finetune can get worse performance than other methods. This is consistent with prior work Kumar et al. (2022). There is a gap between ID and OOD data, and full finetune only fit with ID, but distort OOD features. However, MoVL can be less influenced by this distribution shift and take 5.178% lead.

We observe that for ViT-L/14, some resluts of MoVL are lower than ViT-B/32, which may be caused by more training epochs to get convergence for larger backbone model, and we use the same consistent epochs for different models.

We observe MoVL (average of 0.029M) use far less parameters compared with full finetune (average of 107.3M), with the sum parameters of LP and VP. Parameters of VP is the same because of the same prompt size and input image size, but LP will change with feature map channel and number of categories.

4.3 Strategy Validation Experiments

In this section, we do experiments with different training strategies: LP only, LPVP, LPmix and mix all time. We choose LP only as a baseline to analyze, for LP is the base of other strategies. We also use various backbone models to show the efficiency of our proposed method. Both ID and OOD datasets are used to show the validation experiment results.

| Strategy | ResNet18 | ResNet50 | ViT-B/32 | ViT-L/14 |

|---|---|---|---|---|

| LP | 82.028 | 92.362 | 83.712 | 88.303 |

| LPVP | 80.121 | 84.510 | 85.821 | 74.241 |

| LPmix | 87.834 | 92.298 | 90.796 | 90.210 |

| mix | 91.467 | 94.439 | 93.704 | 91.787 |

| Strategy | ResNet18 | ResNet50 | ViT-B/32 | ViT-L/14 |

|---|---|---|---|---|

| LP | 86.532 | 88.872 | 89.123 | 89.457 |

| LPVP | 85.404 | 87.535 | 90.348 | 87.103 |

| LPmix | 86.769 | 89.095 | 90.738 | 90.139 |

| mix | 86.963 | 89.428 | 91.629 | 90.446 |

| Strategy | ResNet18 | ResNet50 | ViT-B/32 | ViT-L/14 |

|---|---|---|---|---|

| LP | 87.284 | 89.652 | 88.395 | 90.119 |

| LPVP | 86.524 | 87.518 | 87.138 | 86.027 |

| LPmix | 89.594 | 91.055 | 89.068 | 90.880 |

| mix | 90.558 | 91.464 | 90.500 | 91.815 |

| Strategy | ResNet18 | ResNet50 | ViT-B/32 | ViT-L/14 |

|---|---|---|---|---|

| LP | 87.657 | 89.373 | 90.331 | 89.666 |

| LPVP | 87.844 | 88.671 | 91.133 | 88.133 |

| LPmix | 88.583 | 89.459 | 91.938 | 91.849 |

| mix | 89.044 | 89.445 | 92.050 | 91.849 |

From Table 2, we observe adding VP may not be outperform than train LP for the full time, which indicates that adding modules in arbitrary combination doesn’t guarantee enhanced accuracy.

Under most circumstances, use mix strategy can improve the accuracy of categorizing tasks, no matter after LP training or mix training from the beginning, which indicates validity of mix strategy. There is also a trend that with more epochs of LP and VP training together, the better performance of the result, which indicates that both input and output space trainable is helpful with the improvement of the accuracy.

We also do the strategy analysis on OOD dataset, Camelyon17. The conclusion of ID dataset can still work on OOD dataset, but with a discount on improvement. This can be explained by the distribution shift from training and testing dataset. Nonetheless, mix strategy can help with combining the benefits of LP and VP.

| Strategy | ResNet18 | ResNet50 | ViT-B/32 | ViT-L/14 |

|---|---|---|---|---|

| LP | 85.281 | 90.295 | 87.077 | 89.293 |

| LPVP | 86.166 | 89.107 | 88.797 | 81.514 |

| LPmix | 89.072 | 91.918 | 90.535 | 89.837 |

| mix | 90.063 | 92.188 | 91.783 | 91.050 |

To prove the training strategy is efficient for different situations, we change the visual prompt method. Following the workflow of Bahng et al. (2022), we directly add VP onto the original input images, which may cover the border of the images. The results still shows that mix strategy can be more competitive than other training strategies.

4.4 Loss Ablation Experiments

From the experiments above, we have shown that mix strategy can be quite a competitive strategy combining LP and VP. In this section, we will interpret our designed joint learning loss function can make use of information from original output without prompt to improve the accuracy of the model.

| ResNet18 | ResNet50 | ViT-B/32 | ViT-L/14 | |

|---|---|---|---|---|

| HAM10000 | +0.181 | +0.021 | +0.362 | -0.448 |

| PathMNIST | +0.167 | +0.112 | +0.404 | +0.139 |

| BloodMNIST | +0.351 | +0.147 | +0.292 | +0.887 |

| Camelyon17* | +0.047 | +0.230 | +0.025 | -1.387 |

We counted data from different models on different datasets and found that using the joint learning loss function can improve the accuracy of the models. This may because cross entropy loss can only get the information after adding VP, with only one forward propagation. However, as original images getting through image encoder and LP, then gaining the original output, which can still contains information. For example, an original dog image can be wrongly categorized as cat, but this information can be referred by prompted one, which is not cat. As the model classify the image correctly, it can still be a reference for prompted one to exceed. On considering such information, VP can reach and beyond reference output.

4.5 Exploration of VP Initialization

| ResNet18 | ResNet50 | ||

|---|---|---|---|

| HAM10000 | 89.634 (-2.014) | 92.543 (-1.917) | |

| PathMNIST | 85.598 (-1.532) | 88.510 (-1.030) | |

| BloodMNIST | 90.558 (-0.351) | 91.318 (-0.293) |

We found initialization of VP can greatly influence the accuracy. With a simple ’blank’ VP, which is all zeroes plus a minimum = 0.001 initialization, can be better than random initialization.

5 Conclusion and Future Work

This paper explore the proper strategy for LP and VP combining, which is inspired by the properties of LP and VP. Training LP and VP together from the beginning outperforms than other strategies. Besides, we propose a joint learning loss function, which contains categorisation loss and discrepancy loss. Discrepancy loss takes information of the original imageinto consideration. We do experiments on different backbone models, both ID and OOD distribution datasets. The results shows the efficiency of our method.

We regard the MoVL as a promising strategy, which can adapt to different downstream tasks with a huge gap from natural. The idea of referring to original image may guide more delicate loss design about VP training. Initialization of VP is also very important for training process, and we will further explore it in the future work.

References

- Anonymous [2023] Anonymous. Visual prompting reimagined: The power of activation prompts. In Submitted to The Twelfth International Conference on Learning Representations, 2023. under review.

- Bahng et al. [2022] Hyojin Bahng, Ali Jahanian, Swami Sankaranarayanan, and Phillip Isola. Exploring visual prompts for adapting large-scale models. arXiv preprint arXiv:2203.17274, 2022.

- Bandi et al. [2018] Peter Bandi, Oscar Geessink, Quirine Manson, Marcory Van Dijk, Maschenka Balkenhol, Meyke Hermsen, Babak Ehteshami Bejnordi, Byungjae Lee, Kyunghyun Paeng, Aoxiao Zhong, et al. From detection of individual metastases to classification of lymph node status at the patient level: the camelyon17 challenge. IEEE transactions on medical imaging, 38(2):550–560, 2018.

- Brown et al. [2020] Tom Brown, Benjamin Mann, Nick Ryder, Melanie Subbiah, Jared D Kaplan, Prafulla Dhariwal, Arvind Neelakantan, Pranav Shyam, Girish Sastry, Amanda Askell, et al. Language models are few-shot learners. Advances in neural information processing systems, 33:1877–1901, 2020.

- Chen et al. [2022] Zhe Chen, Yuchen Duan, Wenhai Wang, Junjun He, Tong Lu, Jifeng Dai, and Yu Qiao. Vision transformer adapter for dense predictions. arXiv preprint arXiv:2205.08534, 2022.

- Chen et al. [2023] Aochuan Chen, Yuguang Yao, Pin-Yu Chen, Yihua Zhang, and Sijia Liu. Understanding and improving visual prompting: A label-mapping perspective. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 19133–19143, 2023.

- Chen et al. [2024] Yadang Chen, Gang Yang, Duolin Wang, and Dichao Li. Eliciting knowledge from language models with automatically generated continuous prompts. Expert Systems with Applications, 239:122327, 2024.

- Deng et al. [2009] Jia Deng, Wei Dong, Richard Socher, Li-Jia Li, Kai Li, and Li Fei-Fei. Imagenet: A large-scale hierarchical image database. In 2009 IEEE conference on computer vision and pattern recognition, pages 248–255. Ieee, 2009.

- Gu et al. [2023] Jindong Gu, Zhen Han, Shuo Chen, Ahmad Beirami, Bailan He, Gengyuan Zhang, Ruotong Liao, Yao Qin, Volker Tresp, and Philip Torr. A systematic survey of prompt engineering on vision-language foundation models. arXiv preprint arXiv:2307.12980, 2023.

- Hambardzumyan et al. [2021] Karen Hambardzumyan, Hrant Khachatrian, and Jonathan May. Warp: Word-level adversarial reprogramming. arXiv preprint arXiv:2101.00121, 2021.

- He et al. [2016] Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. Deep residual learning for image recognition. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 770–778, 2016.

- Hu et al. [2021] Edward J Hu, Yelong Shen, Phillip Wallis, Zeyuan Allen-Zhu, Yuanzhi Li, Shean Wang, Lu Wang, and Weizhu Chen. Lora: Low-rank adaptation of large language models. arXiv preprint arXiv:2106.09685, 2021.

- Jia et al. [2022] Menglin Jia, Luming Tang, Bor-Chun Chen, Claire Cardie, Serge Belongie, Bharath Hariharan, and Ser-Nam Lim. Visual prompt tuning. In European Conference on Computer Vision, pages 709–727. Springer, 2022.

- Kirillov et al. [2023] Alexander Kirillov, Eric Mintun, Nikhila Ravi, Hanzi Mao, Chloe Rolland, Laura Gustafson, Tete Xiao, Spencer Whitehead, Alexander C Berg, Wan-Yen Lo, et al. Segment anything. arXiv preprint arXiv:2304.02643, 2023.

- Koh et al. [2021] Pang Wei Koh, Shiori Sagawa, Henrik Marklund, Sang Michael Xie, Marvin Zhang, Akshay Balsubramani, Weihua Hu, Michihiro Yasunaga, Richard Lanas Phillips, Irena Gao, Tony Lee, Etienne David, Ian Stavness, Wei Guo, Berton A. Earnshaw, Imran S. Haque, Sara Beery, Jure Leskovec, Anshul Kundaje, Emma Pierson, Sergey Levine, Chelsea Finn, and Percy Liang. WILDS: A benchmark of in-the-wild distribution shifts. In International Conference on Machine Learning (ICML), 2021.

- Krizhevsky et al. [2009] Alex Krizhevsky, Geoffrey Hinton, et al. Learning multiple layers of features from tiny images. 2009.

- Kumar et al. [2022] Ananya Kumar, Aditi Raghunathan, Robbie Jones, Tengyu Ma, and Percy Liang. Fine-tuning can distort pretrained features and underperform out-of-distribution. arXiv preprint arXiv:2202.10054, 2022.

- Liu et al. [2023] Pengfei Liu, Weizhe Yuan, Jinlan Fu, Zhengbao Jiang, Hiroaki Hayashi, and Graham Neubig. Pre-train, prompt, and predict: A systematic survey of prompting methods in natural language processing. ACM Computing Surveys, 55(9):1–35, 2023.

- Loshchilov and Hutter [2016] Ilya Loshchilov and Frank Hutter. Sgdr: Stochastic gradient descent with warm restarts. arXiv preprint arXiv:1608.03983, 2016.

- Loshchilov and Hutter [2017] Ilya Loshchilov and Frank Hutter. Decoupled weight decay regularization. arXiv preprint arXiv:1711.05101, 2017.

- Petroni et al. [2019] Fabio Petroni, Tim Rocktäschel, Patrick Lewis, Anton Bakhtin, Yuxiang Wu, Alexander H Miller, and Sebastian Riedel. Language models as knowledge bases? arXiv preprint arXiv:1909.01066, 2019.

- Radford et al. [2021] Alec Radford, Jong Wook Kim, Chris Hallacy, Aditya Ramesh, Gabriel Goh, Sandhini Agarwal, Girish Sastry, Amanda Askell, Pamela Mishkin, Jack Clark, et al. Learning transferable visual models from natural language supervision. In International conference on machine learning, pages 8748–8763. PMLR, 2021.

- Sagawa et al. [2022] Shiori Sagawa, Pang Wei Koh, Tony Lee, Irena Gao, Sang Michael Xie, Kendrick Shen, Ananya Kumar, Weihua Hu, Michihiro Yasunaga, Henrik Marklund, Sara Beery, Etienne David, Ian Stavness, Wei Guo, Jure Leskovec, Kate Saenko, Tatsunori Hashimoto, Sergey Levine, Chelsea Finn, and Percy Liang. Extending the wilds benchmark for unsupervised adaptation. In International Conference on Learning Representations (ICLR), 2022.

- Tschandl et al. [2018] Philipp Tschandl, Cliff Rosendahl, and Harald Kittler. The ham10000 dataset, a large collection of multi-source dermatoscopic images of common pigmented skin lesions. Scientific data, 5(1):1–9, 2018.

- Wang et al. [2022] Zifeng Wang, Zhenbang Wu, Dinesh Agarwal, and Jimeng Sun. Medclip: Contrastive learning from unpaired medical images and text. arXiv preprint arXiv:2210.10163, 2022.

- Wei et al. [2022] Jason Wei, Xuezhi Wang, Dale Schuurmans, Maarten Bosma, Fei Xia, Ed Chi, Quoc V Le, Denny Zhou, et al. Chain-of-thought prompting elicits reasoning in large language models. Advances in Neural Information Processing Systems, 35:24824–24837, 2022.

- Wu et al. [2022] Junyang Wu, Xianhang Li, Chen Wei, Huiyu Wang, Alan Yuille, Yuyin Zhou, and Cihang Xie. Unleashing the power of visual prompting at the pixel level. arXiv preprint arXiv:2212.10556, 2022.

- Yang et al. [2021] Jiancheng Yang, Rui Shi, and Bingbing Ni. Medmnist classification decathlon: A lightweight automl benchmark for medical image analysis. In IEEE 18th International Symposium on Biomedical Imaging (ISBI), pages 191–195, 2021.

- Yang et al. [2023] Jiancheng Yang, Rui Shi, Donglai Wei, Zequan Liu, Lin Zhao, Bilian Ke, Hanspeter Pfister, and Bingbing Ni. Medmnist v2-a large-scale lightweight benchmark for 2d and 3d biomedical image classification. Scientific Data, 10(1):41, 2023.

- Zhou et al. [2022a] Kaiyang Zhou, Jingkang Yang, Chen Change Loy, and Ziwei Liu. Learning to prompt for vision-language models. International Journal of Computer Vision, 130(9):2337–2348, 2022.

- Zhou et al. [2022b] Lei Zhou, Huidong Liu, Joseph Bae, Junjun He, Dimitris Samaras, and Prateek Prasanna. Self pre-training with masked autoencoders for medical image classification and segmentation. arXiv preprint arXiv:2203.05573, 2022.