(eccv) Package eccv Warning: Package ‘hyperref’ is loaded with option ‘pagebackref’, which is *not* recommended for camera-ready version

11email: clmo6615@uni.sydney.edu.au, {kun.hu, zhiyong.wang, dong.yuan}@sydney.edu.au 22institutetext: Meta Reality Labs, Burlingame, CA, USA 22email: clong1@meta.com

Motion Keyframe Interpolation for Any Human Skeleton via Temporally Consistent Point Cloud Sampling and Reconstruction

Abstract

In the character animation field, modern supervised keyframe interpolation models have demonstrated exceptional performance in constructing natural human motions from sparse pose definitions. As supervised models, large motion datasets are necessary to facilitate the learning process; however, since motion is represented with fixed hierarchical skeletons, such datasets are incompatible for skeletons outside the datasets’ native configurations. Consequently, the expected availability of a motion dataset for desired skeletons severely hinders the feasibility of learned interpolation in practice. To combat this limitation, we propose Point Cloud-based Motion Representation Learning (PC-MRL), an unsupervised approach to enabling cross-compatibility between skeletons for motion interpolation learning. PC-MRL consists of a skeleton obfuscation strategy using temporal point cloud sampling, and an unsupervised skeleton reconstruction method from point clouds. We devise a temporal point-wise K-nearest neighbors loss for unsupervised learning. Moreover, we propose First-frame Offset Quaternion (FOQ) and Rest Pose Augmentation (RPA) strategies to overcome necessary limitations of our unsupervised point cloud-to-skeletal motion process. Comprehensive experiments demonstrate the effectiveness of PC-MRL in motion interpolation for desired skeletons without supervision from native datasets.

Keywords:

3D point clouds Human body motion Dataset creation1 Introduction

3D character animation workflows largely rely on the concepts of keyframing with interpolation, often referred to as the pose-to-pose principle [13]. By defining key poses at correct timings, algorithms can be employed to generate intermediate poses, and thereby eliminating the need for defining each frame individually [40, 5]. Although this keyframe-based workflow is less costly than frame-by-frame production, human motion is often complex, and natural depictions demand a significant number of keyframes, especially for realistic motions that adhere to physical properties and constraints. Although motion capture (MoCap) is frequently employed as an alternative for achieving realistic human motions, MoCap often nonetheless necessitates subsequent keyframing to correct unwanted motion elements and address other imperfections.

In recent years, the interpolation process has been widely studied, particularly with the advent of machine learning methods for sequential data. Compared to conventional methods such as linear interpolation (LERP), machine learning methods have demonstrated the capability to derive visually natural motions from sparser keyframe sets [18, 38, 35, 33, 12], which we can observe in Fig. 1. Data-driven interpolation approaches require large motion capture datasets to learn the necessary motion features for effective synthesis of transitions between keyframes. In the field of 3D motions, this aspect significantly restricts the feasibility of learned interpolation methods in animation practice, as motion/pose data are tied to specific skeletal configurations and are not cross-compatible. That is, the dataset’s native skeleton, i.e. source skeleton, is structurally different and incompatible with the desired skeleton, i.e. target skeleton.

Therefore, we propose a novel point cloud-based motion representation learning (PC-MRL) approach, in which skeletal hierarchies are obfuscated by sampling into the point cloud medium, and reconstructed into skeletal motion data using an unsupervised neural network. Unlike raw pose and motion data, the point cloud format is a non-hierarchic representation, and effectively obscures any skeletal configuration as displayed in Fig. 2. PC-MRL samples a set of points around the rest position of skeletons using a combination of uniform and normal distributions, and geometrically parents each point to an associated bone in order to effectively represent the associated skeletons’ motion data in a temporally consistent manner. Subsequently, for the reconstruction of motion data into a given desired skeleton, we propose an unsupervised learning scheme, featuring a K-nearest neighbors (KNN) loss between cross-skeleton point clouds to optimize a transformer neural network. The network processes the point cloud-based motion representations and generates the corresponding target skeletal motion representations. By obscuring both source and predicted target motion data into the point cloud space, our strategy allows for a direct comparison, utilizing KNN to minimize the visual difference between the two point clouds.

The unsupervised nature of our target skeleton reconstruction scheme creates two main disparities between the expected and predicted motion features. Firstly, the exact roll rotation axis of bones cannot be represented in the point cloud format. To this end, we introduce First-frame Offset Quaternion (FOQ) representations to incorporate relative roll values, which are obtainable from temporally consistent point clouds. This approach is agnostic to absolute roll rotations, providing a standardisation mechanism for the resulting motion data within the context of motion interpolation learning. Second, KNN-based objectives learn skeletal representations in a geometrically optimal manner, which does not always reflect the desired skeletal behaviour. This disparity is commonly observed with smaller skeletal features, such as shoulder and hip bones. As such, we augment training motions using Rest Pose Augmentation (RPA) to increase the range of skeletal behaviours that our interpolation model learns. Extensive experiments demonstrate the effectiveness of our proposed method, achieving performance targets near the level of direct dataset supervision.

In summary, this paper presents the following key contributions:

-

1.

We propose a novel method for achieving unsupervised human motion reconstruction from point cloud data, enabling skeleton-agnostic motion interpolation learning for the first time.

-

2.

To train motion interpolation models, we formulate first-frame offset quaternions to represent bone rotations with relative roll data, as well as a rest position augmentation strategy to address skeletal configuration variability.

-

3.

We perform comprehensive experiments to demonstrate our method’s effectiveness towards learning motion interpolation, despite the absence of directly compatible datasets in the training process.

2 Related works

We explore existing research on motion interpolation approaches, and motion data modelling methods in general. In addition, our proposed approach bears resemblance to unsupervised approaches for the motion re-targeting research problem, and as such, we will also analyse existing methods in this field.

2.1 Data-Driven Motion Modelling Methods

Contemporary research in motion data modelling predominantly explore learned data-driven methods, using neural networks to capture, model, and establish correlations between the motion features of various skeletal configurations [49, 53, 39]. Motion features alone have demonstrated considerable efficacy in cross-skeleton imitation using a variety of strategies, including task-driven objectives [43, 19], diffusion-based generation [47, 25, 50], and latent feature consistency [2, 1, 44, 3, 7]. Latent consistency in particular aims to learn inter-skeleton correlations without the need for paired datasets, which reduces the barrier of entry for practical applications.

Likewise, motion interpolation strategies have evolved through the use of learning based approaches. The inherent numerical precision of motion data has traditionally posed a challenge for the adoption of the learning approaches, due to their approximate nature [27, 21]. This challenge is further amplified by the temporally sparse distribution of keyframes. Recently, a number of recurrent neural network based methods [17, 18, 51, 41] and transformer-based networks [12, 33, 35] have demonstrated encouraging interpolation performance for the transition between keyframes. Additionally, various other motion modelling methods are capable for keyframe based interpolation, albeit with limited intermediate frame representation capabilities [43, 19, 25].

A major constraint inherent in all data-driven motion modelling methods is their dependency on datasets featuring specific skeletal configurations. To address this, skeleton-free training strategies have been explored for elementary tasks involving 3D characters. These include vertex-based pose transfer [30, 45, 8, 7], and point cloud-based human shape reconstruction [23, 46]. Notably, in these methods, 3D mesh and point cloud coordinates serve as general 3D data representations. Concurrently, other research has significantly minimized the required volume of training data, bringing it down to single example sequences. This reduction has been achieved through the use of patch matching techniques [28] and imitation learning within physics-based simulations [37, 29]. Reinforcement learning has facilitated the generation of goal-driven motion without pre-existing datasets [36, 48, 31]. However, these simulation-based methods have typically been either exceedingly complex to implement in practice, or yield results that are too inflexible and/or prone to errors for animation workflows.

2.2 Motion Re-Targeting without Supervision

Conventional approaches in motion re-targeting have been a cornerstone of 3D animation for decades, primarily dedicated to converting motion capture data into 3D skeletal motions [4, 15]. Typically, raw motion capture data is optically recorded, yielding primarily spatial information. In contrast, motion data is largely rotational in nature [5], often represented in SO(3) space. Therefore, a principal objective of motion re-targeting solutions has been to effectively bridge these two distinct types of representations. In [14], extensive manually adjustable constraints were introduced for flexible re-targeting between skeletons of identical topology (i.e., same hierarchy, different bone lengths and rest poses). Key practices in this approach, including end-effector matching, joint contacts, and inter-skeleton point correlations, are still relevant to current animation pipelines. Point correlations using Inverse Kinematics (IK) have also been proposed as an intermediate representation to facilitate the adaptation between topologically distinct skeletons [34, 9], albeit with hierarchical pairing limitations.

In response, morphologically-independent motion control systems were proposed using IK joint chains and joint-wise constraints [26, 20]. Such systems often expect highly standardised motion skeleton features, and are generally impractical for re-targeting motions produced for different control schemes. Additionally, the reliance on Euler angles for extensive classical re-targeting constraints poses a variety of issues regarding rotational freedom [11], as well as compatibility with animation workflows [32], which predominantly use quaternion-based systems.

Despite these advancements, the challenge of arbitrary skeleton re-targeting from motion datasets still remains largely unresolved, limiting any widespread application of data-driven pipelines. Our paper aims to effectively address this pervasive limitation.

3 Methodology

As illustrated in Fig. 3, our proposed method consists of two main components: point cloud-to-motion reconstruction and motion keyframe interpolation.

3.1 Quaternion-based Motion Representations

We first declare a set of notations for representing skeletons. A skeleton is defined by a tree of bones . A bone is defined by its bone parent , and a head position which denotes the bone’s base offset from its parent head . In addition, a tail position helps to define the end point of the bone’s line segment representation, starting from its head position, of . The root bone has no parent, and thus no head position . The set of and for each bone make up the rest position of the skeleton.

Next, we define the properties of motion data. At any given frame , a skeleton’s pose position can be represented as global 3D positions and global quaternion rotations for each bone . For a given motion sequence , only the root bone is given a 3D position in pose position, i.e. , as all other pose positions are derived using Forwards Kinematics (FK [11, 24]):

| (1) |

where is the quaternion rotation function, and is the parent joint. In summary, a motion sequence of frames is defined by:

| (2) |

3.2 Point Cloud Obfuscation and Skeleton Reconstruction

Our approach is based on the hypothesis that 3D motion sequences can be visually represented by a more universal format: 3D point cloud sequences. By geometrically sampling the volume around a skeleton, point cloud data becomes a non-hierarchical representation of its pose(s), obfuscating the original skeletal configuration. Due to this decoupling between the skeleton configuration and motion representation, a successful motion data reconstruction from point cloud data would be the first step to enabling learnable cross-skeleton motion features. Formally, for a given source skeleton with an associated motion sequence , and any humanoid skeletal configuration , the point cloud obfuscation and reconstruction module is to learn a function that can adequately perform , where is a visually similar motion adaptation of on skeleton , which is an estimation of .

3.2.1 Motion Data Obfuscation with Point Clouds

To sample the point cloud , we first generate a set of points along the line segments defined by the global head and tail positions for each bone . In the bone-local space to , this would mean sampling by a uniformly distributed factor . Specifically, aligns with the head position of the bone, and indicates the tail position. By further sampling via a normal distribution, our sampling strategy can capture the surrounding volume around the skeleton. Formally, to sample from its associated bone within a standard deviation of , we have:

| (3) | ||||

Notably, this sampling process as in Eq. 3 is associated with the skeletal configuration only, by which the sampled points are temporally consistent for further processing with the motion sequence. Specifically, each point maintains a constant position from its associated bone in the bone-local space. The lateral distance of each point from their parent bone segment allows point clouds to react to the bone’s rotational roll axis.

In a pure point cloud representation, it is possible to remove the relationships between bones. However, to clearly distinguish symmetrical body features in different skeletons, we introduce and embed body group associations for the points during our reconstruction process. Specifically, every bone is categorised by one of these body groups: [spine, left arm, right arm, left leg, right leg]. Each sampled point is assigned q group attribute in line with the associated bone. The attribute is represented by a one-hot feature vector . To this end, we characterize .

3.2.2 Skeleton Reconstruction

Given a sampled point cloud representation from and as input, we aim to train a motion adaptation function , which is tasked with producing a visually similar skeletal motion for , using a temporal and set-based neural network. As shown in Fig. 3, to construct , we employ a Point Transformer [52] to learn embeddings for the unordered point cloud sets frame-wisely, and a standard temporal transformer to process the sequence and decode into .

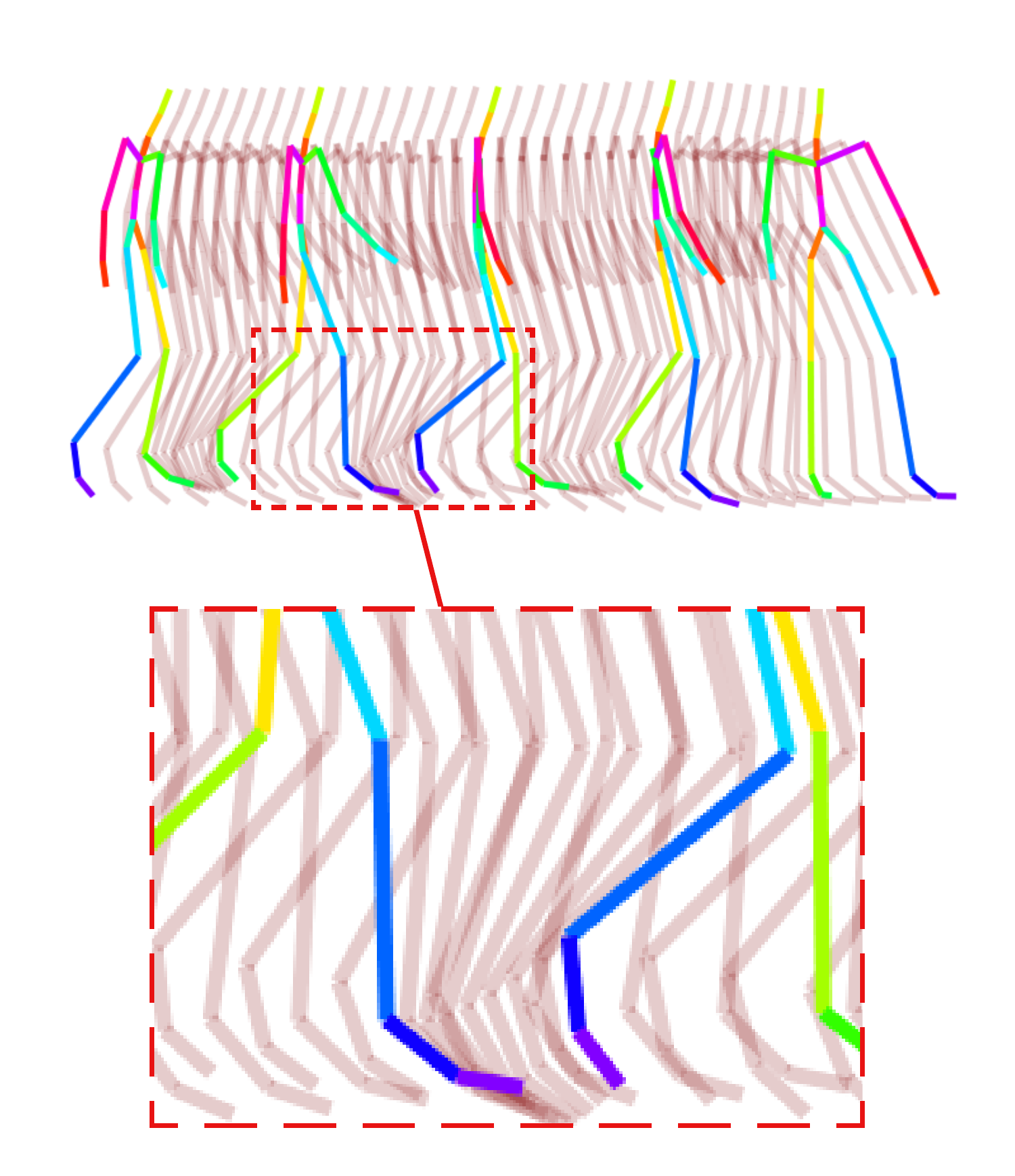

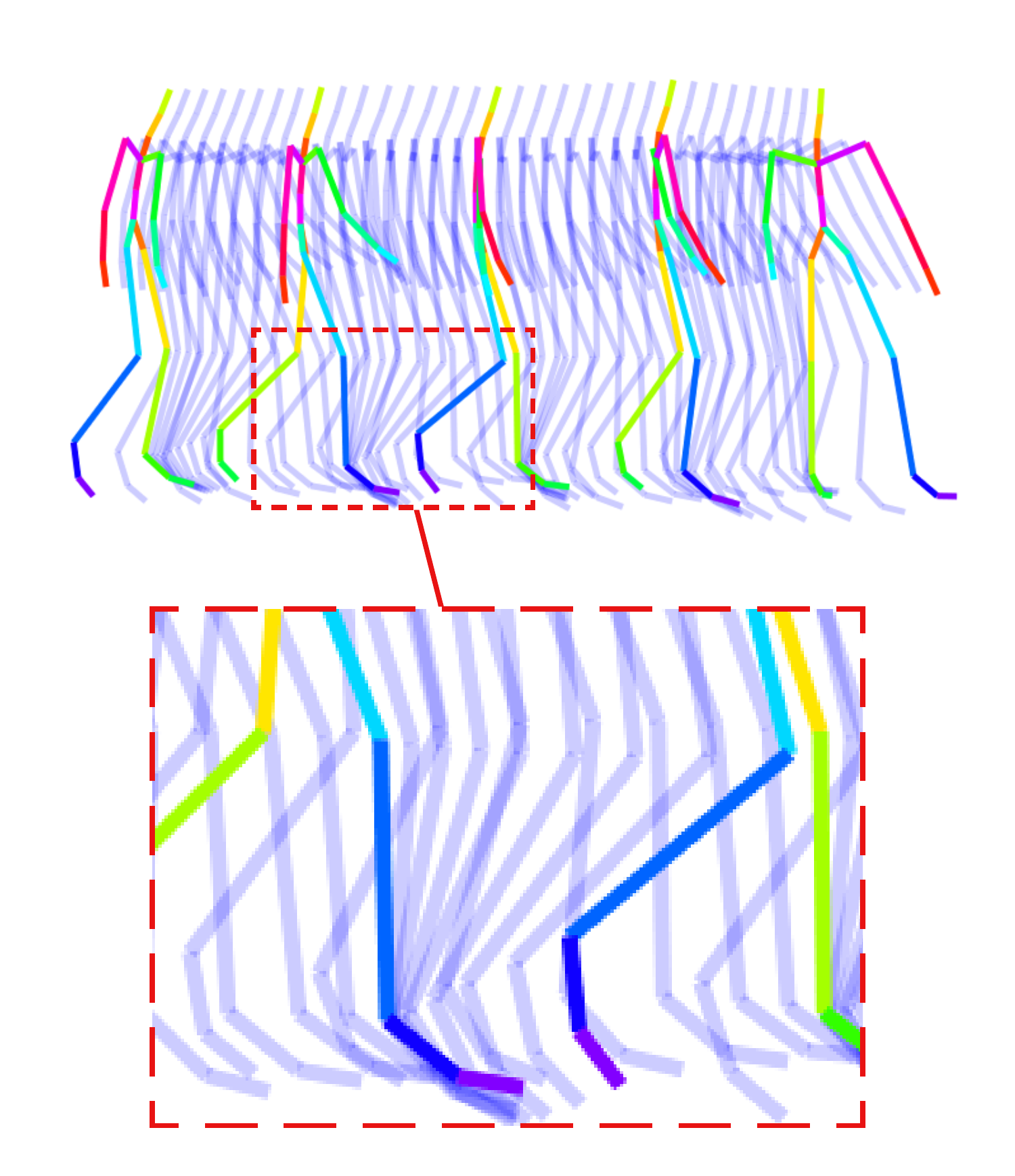

To optimize , our objective function maximises the similarity between the sampled point clouds of both and . can be viewed as the ground truth to , which enables an unsupervised strategy for . In detail, we first sample a point cloud from based on derived by . Next, a temporally consistent KNN-Loss objective is devised to minimise the distance between any given point of from its nearest neighbouring points in . The nearest neighbours are determined based on inter-point distance throughout the entire motion sequence, instead of a frame-wise setting, as shown in Fig. 4. The points with differing body groups are excluded from the neighbour search. Mathematically, we have:

| (4) | ||||

| (5) |

where is the set containing points that are the k-nearest neighbors of in , based on distance.

In addition to the KNN-Loss, we introduce an optional end-effector loss in the skeleton space for the hand, foot, and head bones, which provides direct positional guidance for matched end-effector pairs :

| (6) |

3.3 First-Frame Offset Quaternions

Point clouds lack absolute roll axis information for each bone. As such, we introduce a First-frame Offset Quaternion (FOQ) transform to incorporate the available relative roll data for motion modelling. We formulate all quaternions of a motion sequence relative to their values from the initial frame. In detail, for any quaternion sequence , we can simply obtain its FOQ transform as , where is the quaternion conjugate of . An FOQ sequence can easily be converted back to a quaternion sequence as long as any is known.

Crucially, FOQ is a roll-invariant rotation representation, that is, FOQ remains identical regardless of a bone’s absolute roll position in the original data. Trivially, to adjust the rotational roll of a bone (e.g., ), we perform the quaternion multiplication for each frame (including the initial frame): , where is a roll quaternion. As a quaternion on the roll axis of , is constrained by , where ,, are the 3D XYZ values of , and are scalars that control the roll magnitude. Note that is constant throughout the sequence. We can then deduce that for any constant ,

Therefore, FOQ is indeed roll-invariant.

3.4 Motion Interpolation Transformer and Rest Pose Augmentation

To leverage our point cloud-based representation learning for cross-skeleton interpolation, a transformer model - CITL [33] is adapted, allowing for an efficient interpolation pipeline with existing datasets to alternative skeletons. We introduce two key modifications to CITL and its training strategy, focusing on enhancing motion quality. First, quaternion predictions are substituted with FOQ predictions. This is inspired by the RNN-based method [18], which predicts quaternion offsets from the preceding frame rather than direct quaternion values, thereby achieving greater generalizability and superior quality in interpolation.

For our model’s CITL-based architecture, we substitute the original sinusoidal positional encoding scheme with learned relative positions [42]. While the original implementation relies on the continual nature of sinusoidal functions, we observe that relative positional embeddings with zero initialisation converges upon similarly continuous behaviour, and significantly lowers the complexity of positional attention. The lessened focus on positional relations allows the model to synthesise deeper behaviours within the pose space, improving overall generalisability. As a side effect, the model also accepts arbitrary length inputs beyond the training data length.

Notably, the intended rest pose of a skeletal configuration is often geometrically sub-optimal for point cloud matching, and can manifest in multiple forms. To address this, we devise a rest position augmentation strategy, leveraging a per-bone Inverse Kinematics (IK) system. RPA broadens the range of intended rest poses that the learned interpolation model can accommodate. In detail, during each phase of the Forward Kinematics (FK) process, we augment the bone tails with a random additional offset. We re-align the augmented global tail position as closely as possible to its original location using a quaternion rotation with the maximum possible real component. Algorithm 1 describes the method by which this quaternion can be derived. Since the real component describes, in cosine, the amount of rotation around an axis, this minimizes numerical disturbance from RPA while altering the visual representation to the desired extent.

4 Experimental Results

4.1 Datasets

To thoroughly evaluate the effectiveness of the proposed method, we conducted extensive experiments on the following widely used motion capture datasets:

-

•

LaFAN1 [18] contains long, high quality motions in controllable video game character styles. The dataset was recorded on a large motion capture stage, and manually cleaned to production standards. The native skeleton of LaFAN1 contains 6 spinal bones, and 4 bones for each limb.

- •

-

•

CMU Mocap [10] is a diverse motion dataset encompassing 113 overlapping categories. It features a mix of static and dynamic motions, captured on a spacious 3x8m stage. The CMU skeleton is identical to that of Human3.6M, with the exception of the pelvis, which is divided into two symmetrical hip bones and a lower back bone.

For PC-MRL, we designated the CMU MoCap skeleton as . Its native dataset is exclusively utilized for testing purposes; while LaFAN1 and Human3.6M are used simultaneously for training.

4.2 Implementation Details

In our experiments, point cloud obfuscation adopted a 256-point setting for the sampling process with and each point was characterized by a 64-dimensional vector. To maintain consistency, we ensure that all bone offsets and motion positions are defined in metre units. For skeletal reconstruction, 4 Point Transformer [52] layers was utilized to construct the neural network. Within each layer, the feature size was doubled, and the size of the point set was downsampled by a factor of 4 through a farthest-first traversal approach. This processing across layers resulted in a singular feature vector. In the final step, two linear layers with ReLU activations were utilized to transform the resulted feature vector in a frame-wise manner, producing the output skeletal representation. During training, we set for the KNN loss to optimize the network. Additionally, the use of unit quaternion values in practice relies on modulus division for constraint, a process that can potentially lead to exploding gradients. To address this, we introduced an objective function that measures the norms’ difference between the raw quaternion output and the expected unit quaternion. All experiments were trained and evaluated on a single NVIDIA GeForce RTX 3090 GPU.

4.3 Motion Interpolation Comparison against Supervised Methods

| Motion | Method | L2P | L2Q | NPSS | ||||||

|---|---|---|---|---|---|---|---|---|---|---|

| category | 5 | 15 | 30 | 5 | 15 | 30 | 5 | 15 | 30 | |

| Basketball | LERP | 0.0787 | 0.4009 | 0.8033 | 0.1588 | 0.5834 | 0.9611 | 0.1315 | 0.6120 | 1.8202 |

| [18] | 0.1050 | 0.4419 | 0.7104 | 0.1859 | 0.6439 | 0.9303 | 0.1600 | 0.6579 | 1.2136 | |

| BERT [12] | 0.0889 | 0.3737 | 0.6865 | 0.1585 | 0.5560 | 0.8992 | 0.1689 | 0.6177 | 1.4587 | |

| CITL [33] | 0.0625 | 0.2973 | 0.5708 | 0.1410 | 0.4932 | 0.8011 | 0.1047 | 0.4098 | 1.0959 | |

| PC-MRL w/o RPA | 0.0767 | 0.3534 | 0.6715 | 0.1505 | 0.5431 | 0.9049 | 0.1364 | 0.5596 | 1.3471 | |

| PC-MRL (Ours) | 0.0752 | 0.3443 | 0.6662 | 0.1561 | 0.5495 | 0.9088 | 0.1397 | 0.5572 | 1.3015 | |

| Golf | LERP | 0.0189 | 0.1229 | 0.3187 | 0.0455 | 0.1870 | 0.3987 | 0.0587 | 0.3979 | 1.0312 |

| [18] | 0.0473 | 0.2616 | 0.4546 | 0.0693 | 0.3191 | 0.5297 | 0.0954 | 0.5062 | 1.1534 | |

| BERT [12] | 0.0276 | 0.1159 | 0.2967 | 0.0612 | 0.2082 | 0.4258 | 0.1099 | 0.3906 | 0.9255 | |

| CITL [33] | 0.0201 | 0.1043 | 0.2589 | 0.0476 | 0.1766 | 0.3552 | 0.0636 | 0.2581 | 0.7799 | |

| PC-MRL w/out RPA | 0.0274 | 0.1304 | 0.3622 | 0.0555 | 0.2065 | 0.4923 | 0.0911 | 0.3759 | 1.2083 | |

| PC-MRL (Ours) | 0.0273 | 0.1130 | 0.2706 | 0.0598 | 0.2012 | 0.3844 | 0.1049 | 0.3716 | 0.8150 | |

| Swimming | LERP | 0.0731 | 0.3680 | 0.7374 | 0.1326 | 0.5327 | 0.9476 | 0.2147 | 0.9222 | 1.6972 |

| [18] | 0.1292 | 0.5796 | 0.9141 | 0.1845 | 0.7420 | 1.1199 | 0.2660 | 0.9716 | 1.7698 | |

| BERT [12] | 0.1088 | 0.3896 | 0.7516 | 0.1819 | 0.5518 | 0.9622 | 0.2877 | 0.9074 | 1.6682 | |

| CITL [33] | 0.0905 | 0.3895 | 0.7475 | 0.1484 | 0.5535 | 0.9533 | 0.2084 | 0.8317 | 1.6122 | |

| PC-MRL w/o RPA | 0.0722 | 0.3754 | 0.7459 | 0.1345 | 0.5442 | 0.9594 | 0.1944 | 0.8731 | 1.6608 | |

| PC-MRL (Ours) | 0.0734 | 0.3595 | 0.7139 | 0.1355 | 0.5331 | 0.9248 | 0.1956 | 0.8591 | 1.6370 | |

| Walking & Running Locomotion | LERP | 0.0455 | 0.2505 | 0.5527 | 0.1120 | 0.3997 | 0.6233 | 0.0655 | 0.2682 | 0.9071 |

| [18] | 0.0540 | 0.1786 | 0.3103 | 0.1147 | 0.2866 | 0.3866 | 0.0895 | 0.2616 | 0.4265 | |

| BERT [12] | 0.0552 | 0.1998 | 0.3544 | 0.1111 | 0.3101 | 0.4762 | 0.0901 | 0.2760 | 0.6458 | |

| CITL [33] | 0.0328 | 0.1034 | 0.2427 | 0.0942 | 0.2102 | 0.3384 | 0.0526 | 0.1568 | 0.4080 | |

| PC-MRL w/o RPA | 0.0397 | 0.1657 | 0.3417 | 0.1058 | 0.2879 | 0.4722 | 0.0640 | 0.2288 | 0.6021 | |

| PC-MRL (Ours) | 0.0377 | 0.1392 | 0.2848 | 0.1064 | 0.2778 | 0.4281 | 0.0658 | 0.2129 | 0.5098 | |

To demonstrate the efficacy of our method, we produce and compare our method against the conventional LERP method, as well as three state-of-the-art interpolation models trained on the original CMU MoCap dataset,. Specifically, we measure the performance of the RNN-based model [18], the BERT-based motion interpolation adaptation [12], and a transformer-based encoder-decoder approach [33]. We additionally provide results on a variant of our method trained without our RPA strategy. The experimental configuration for each state-of-the-art model is identical to their original implementations. To measure interpolation accuracy, we employ the standard positional distance (L2P), quaternion difference (L2Q), and NPSS [16] for visual similarity evaluation [18].

The results listed in Table 1 clearly demonstrate our method’s ability to approach the accuracy levels exhibited by directly supervised state-of-the-art methods. PC-MRL consistently outperforms both the LERP standard and RNN-based model, particularly in longer keyframe interval scenarios where the quality and depth of learned motion features is most critical and impactful. Likewise, the inclusion of RPA also tends to improve the long interval performance of PC-MRL, particularly when precise movements (i.e. golf) or deeper motion features (i.e. locomotion) are expected. Given this, we can observe that RPA definitively improves the overall consistency of the PC-MRL method in scenarios that may be unseen or less effectively represented by our point cloud-to-motion system.

Native supervision, i.e. with CITL, unsurprisingly produces the most accurate interpolation model, with a notable exception of swimming motions, where our PC-MRL supervision produces even stronger results. We believe the abundance of low crawl motions, a similar motion to swimming in LaFAN1, provides more meaningful supervision over the original CMU dataset, which conversely has few similar motions once swimming motions are filtered out. On the other end of the spectrum, i.e. for high data scenarios such as walking and running locomotion from Fig. 5, all models including our PC-MRL approach observe the strongest improvements for learned methods over the naive LERP method. Both cases strongly support our method’s capability to supervise highly intricate motion patterns, despite the incompleteness of the point cloud representation and reliance on relative rotations and geometric optimisation assumptions.

4.4 Cross-Skeleton Motion Re-Targeting Experiments

| Model | ||||||

| Original motion | 1.0099 | 2.0234 | 5.7399 | 9.6636 | 16.9223 | 0 |

| Primal skeleton [1] | 8.1109 | 8.0353 | 9.4206 | 12.8291 | 20.6332 | 9.6990 |

| PC-MRL (Ours) | 3.8714 | 4.1231 | 6.7277 | 10.3078 | 17.5261 | 5.6258 |

We further conduct an experiment on motion re-targeting to directly benchmark our method against the sole existing state-of-the-art method for unpaired motion re-targeting with unrestricted skeletons, i.e. primal skeletons (PS) [1]. For direct motion re-targeting evaluation, we utilized the KNN-Loss and end-effector loss as metrics, owing to their effective measurement of visual similarity and non-sensitivity towards absolute roll axis correctness. Though absolute rolls are generally necessary for a complete motion re-targeting solution, this aspect is out of scope for our project as our main goal is motion interpolation.

Table 2 indicates the superior performance of our method over the state-of-the-art PS method in terms of visual similarity. At low values, the higher relative of the primal skeleton method underscores its geometric deviations from the original motion. In contrast, our method demonstrates significantly better visual adherence to the original geometry. As increases, our method approaches near-perfect alignment with the original motion. Figure 6 visually indicates this, showing our method follows the original motion closely, while the latent consistency-based primal skeleton method struggles to generalise and decode the skeleton’s internal structures without directly supervised objectives, such as the end-effector positions provided during training. In addition, Fig 7 demonstrates that, like existing re-targeting approaches, our method is able to produce adequate results on disproportionate skeletons.

| Method | L2P | L2Q | NPSS | ||||||

|---|---|---|---|---|---|---|---|---|---|

| 5 | 15 | 30 | 5 | 15 | 30 | 5 | 15 | 30 | |

| CITL - PS | 0.2043 | 0.6332 | 1.1524 | 0.2318 | 0.7428 | 1.1442 | 0.1122 | 0.4276 | 1.4622 |

| CITL - Native | 0.0495 | 0.2306 | 0.4898 | 0.0979 | 0.3604 | 0.6609 | 0.1149 | 0.4684 | 1.1591 |

| PC-MRL | 0.0494 | 0.2337 | 0.4960 | 0.1030 | 0.3771 | 0.6813 | 0.1370 | 0.5433 | 1.1760 |

Since our approach is largely focused on enabling non-native motion interpolation supervision, we additionally compare our PC-MRL method against a CITL model supervised by PS. Due to the suboptimal re-targeting performance of PS, models supervised by PS exhibit expectedly poor accuracy, as shown in Table 3.

5 Limitations

Due to the inherent limitations of the point cloud representation, certain constraints on the applicability of our point cloud-to-motion method are inevitable. Primarily, the reliance of our point cloud re-targeting method on the relative nature of First-frame Offset Quaternions (FOQ) for most motion learning tasks necessitates the presence of at least one known pose. In the absence of a known pose, relative quaternions are incapable of reconstructing absolute rotational values, which are essential for motion applications. Secondly, due to our method of sampling point clouds with a consistent deviation from each bone segment, the resultant representation inevitably obscures finer details. This includes elements like fingers and facial controls, to an extent that is beyond rectification.

Our proposed objective function relies entirely on geometric segments, necessitating that all bones possess a non-zero length. This stipulation is deemed a reasonable prerequisite for generic human skeletons, as each bone is typically responsible for manipulating a discernible segment of the human body. Due to technical considerations, the skeletal configurations in each of our datasets included at least one bone of zero length, which we ignored in our experiments in favour of global quaternion rotations of all bones with non-zero length.

As a result of these limitations, we refrained from claiming state-of-the-art performance for general motion re-targeting tasks.

6 Conclusion

This paper introduces a novel learning-based motion interpolation method designed to enable cross-skeleton compatibility with existing motion datasets. Our proposed method PC-MRL employs a process of point cloud obfuscation and skeleton reconstruction. The point cloud space represents human motions in a non-hierarchic and skeleton-agnostic manner. It enables a KNN-based objective to be optimised without dataset supervision, guiding a neural network to generate high-quality motion features with any target skeleton. We address the rotational information loss of our point cloud format by presenting an offset quaternion strategy compatible with concurrent transformer-based models. Through extensive experiments, we have demonstrated the efficacy of PC-MRL in performing motion interpolation without relying on native motion data. Moreover, PC-MRL has achieved superior visual similarity metrics in the domain of motion re-targeting. We concluded our work by discussing the limitations of PC-MRL.

References

- [1] Aberman, K., Li, P., Lischinski, D., Sorkine-Hornung, O., Cohen-Or, D., Chen, B.: Skeleton-aware networks for deep motion retargeting 39(4), 62–1 (2020)

- [2] Aberman, K., Wu, R., Lischinski, D., Chen, B., Cohen-Or, D.: Learning character-agnostic motion for motion retargeting in 2d 38(4), 1–14 (jul 2019)

- [3] Annabi, L., Ma, Z., et al.: Unsupervised motion retargeting for human-robot imitation. In: Companion of the 2024 ACM/IEEE International Conference on Human-Robot Interactio. ACM (2024)

- [4] Bodenheimer, B., Rose, C., Rosenthal, S., Pella, J.: The process of motion capture: Dealing with the data. In: Computer Animation and Simulation. pp. 3–18. Springer (1997)

- [5] Burtnyk, N., Wein, M.: Computer-generated key-frame animation. Journal of the SMPTE 80(3), 149–153 (1971)

- [6] Catalin Ionescu, Fuxin Li, C.S.: Latent structured models for human pose estimation. In: International Conference on Computer Vision (2011)

- [7] Chen, H., Tang, H., Timofte, R., Gool, L.V., Zhao, G.: Lart: Neural correspondence learning with latent regularization transformer for 3d motion transfer. Advances in Neural Information Processing Systems 36 (2024)

- [8] Chen, J., Li, C., Lee, G.H.: Weakly-supervised 3D pose transfer with keypoints. In: ICCV. pp. 15156–15165 (2023)

- [9] Choi, K.J., Ko, H.S.: Online motion retargetting. The Journal of Visualization and Computer Animation 11(5), 223–235 (2000)

- [10] CMU: Carnegie-mellon university motion capture database (2003), http://mocap.cs.cmu.edu/

- [11] Dam, E.B., Koch, M., Lillholm, M.: Quaternions, interpolation and animation, vol. 2. Citeseer (1998)

- [12] Duan, Y., Lin, Y., Zou, Z., Yuan, Y., Qian, Z., Zhang, B.: A unified framework for real time motion completion. In: AAAI. vol. 36, pp. 4459–4467 (2022)

- [13] Frank, T., Johnston, O.: Disney Animation: The Illusion of Life. Abbeville Publishing Group, New York (1981)

- [14] Gleicher, M.: Retargetting motion to new characters. In: Computer Graphics and Interactive Techniques. pp. 33–42 (1998)

- [15] Gleicher, M.: Animation from observation: Motion capture and motion editing 33(4), 51–54 (1999)

- [16] Gopalakrishnan, A., Mali, A., Kifer, D., Giles, L., Ororbia, A.G.: A neural temporal model for human motion prediction. In: CVPR. pp. 12116–12125 (2019)

- [17] Harvey, F.G., Pal, C.: Recurrent transition networks for character locomotion. In: SIGGRAPH Asia 2018 Technical Briefs, pp. 1–4 (2018)

- [18] Harvey, F.G., Yurick, M., Nowrouzezahrai, D., Pal, C.: Robust motion in-betweening 39(4), 60–1 (2020)

- [19] He, C., Saito, J., Zachary, J., Rushmeier, H., Zhou, Y.: Nemf: Neural motion fields for kinematic animation 35, 4244–4256 (2022)

- [20] Hecker, C., Raabe, B., Enslow, R.W., DeWeese, J., Maynard, J., van Prooijen, K.: Real-time motion retargeting to highly varied user-created morphologies 27(3), 1–11 (2008)

- [21] Hernandez, A., Gall, J., Moreno-Noguer, F.: Human motion prediction via spatio-temporal inpainting. In: ICCV. pp. 7134–7143 (2019)

- [22] Ionescu, C., Papava, D., Olaru, V., Sminchisescu, C.: Human3.6m: Large scale datasets and predictive methods for 3D human sensing in natural environments. IEEE Transactions on Pattern Analysis and Machine Intelligence 36(7), 1325–1339 (jul 2014)

- [23] Jiang, H., Cai, J., Zheng, J.: Skeleton-aware 3D human shape reconstruction from point clouds. In: ICCV. pp. 5431–5441 (2019)

- [24] Kantor, I.L., Solodovnikov, A.S., Shenitzer, A.: Hypercomplex numbers: an elementary introduction to algebras, vol. 302. Springer (1989)

- [25] Karunratanakul, K., Preechakul, K., Suwajanakorn, S., Tang, S.: Guided motion diffusion for controllable human motion synthesis. In: ICCV. pp. 2151–2162 (2023)

- [26] Kulpa, R., Multon, F., Arnaldi, B.: Morphology-independent representation of motions for interactive human-like animation. In: Eurographics (2005)

- [27] Lehrmann, A.M., Gehler, P.V., Nowozin, S.: Efficient nonlinear markov models for human motion. In: CVPR. pp. 1314–1321 (2014)

- [28] Li, P., Aberman, K., Zhang, Z., Hanocka, R., Sorkine-Hornung, O.: Ganimator: Neural motion synthesis from a single sequence 41(4), 1–12 (2022)

- [29] Li, Z., Peng, X.B., Abbeel, P., Levine, S., Berseth, G., Sreenath, K.: Reinforcement learning for versatile, dynamic, and robust bipedal locomotion control. arXiv preprint arXiv:2401.16889 (2024)

- [30] Liao, Z., Yang, J., Saito, J., Pons-Moll, G., Zhou, Y.: Skeleton-free pose transfer for stylized 3D characters. In: ECCV. pp. 640–656. Springer (2022)

- [31] Makoviychuk, V., Wawrzyniak, L., Guo, Y., Lu, M., Storey, K., Macklin, M., Hoeller, D., Rudin, N., Allshire, A., Handa, A., et al.: Isaac gym: High performance gpu-based physics simulation for robot learning. arXiv preprint arXiv:2108.10470 (2021)

- [32] Merry, B., Marais, P., Gain, J.: Animation space: A truly linear framework for character animation. ACM Transactions on Graphics (TOG) 25(4), 1400–1423 (2006)

- [33] Mo, C.A., Hu, K., Long, C., Wang, Z.: Continuous intermediate token learning with implicit motion manifold for keyframe based motion interpolation. In: CVPR. pp. 13894–13903 (2023)

- [34] Monzani, J.S., Baerlocher, P., Boulic, R., Thalmann, D.: Using an intermediate skeleton and inverse kinematics for motion retargeting. In: Computer Graphics Forum. vol. 19, pp. 11–19. Wiley Online Library (2000)

- [35] Oreshkin, B.N., Valkanas, A., Harvey, F.G., Ménard, L.S., Bocquelet, F., Coates, M.J.: Motion inbetweening via deep -interpolator (2022)

- [36] Peng, X.B., Abbeel, P., Levine, S., van de Panne, M.: Deepmimic: Example-guided deep reinforcement learning of physics-based character skills 37(4), 143:1–143:14 (Jul 2018)

- [37] Peng, X.B., Ma, Z., Abbeel, P., Levine, S., Kanazawa, A.: Amp: Adversarial motion priors for stylized physics-based character control 40(4) (Jul 2021)

- [38] Qin, J., Zheng, Y., Zhou, K.: Motion in-betweening via two-stage transformers 41(6), 1–16 (2022)

- [39] Reda, D., Won, J., Ye, Y., van de Panne, M., Winkler, A.: Physics-based motion retargeting from sparse inputs. ACM Computer Graphics and Interactive Techniques 6(3), 1–19 (2023)

- [40] Reeves, W.T.: Inbetweening for computer animation utilizing moving point constraints 15(3), 263–269 (1981)

- [41] Ren, T., Yu, J., Guo, S., Ma, Y., Ouyang, Y., Zeng, Z., Zhang, Y., Qin, Y.: Diverse motion in-betweening from sparse keyframes with dual posture stitching. IEEE Transactions on Visualization and Computer Graphics (2024)

- [42] Shaw, P., Uszkoreit, J., Vaswani, A.: Self-attention with relative position representations. In: NAACL. Association for Computational Linguistics (2018)

- [43] Tiwari, G., Antić, D., Lenssen, J.E., Sarafianos, N., Tung, T., Pons-Moll, G.: Pose-ndf: Modeling human pose manifolds with neural distance fields. In: ECCV. pp. 572–589. Springer (2022)

- [44] Villegas, R., Yang, J., Ceylan, D., Lee, H.: Neural kinematic networks for unsupervised motion retargetting. In: CVPR. pp. 8639–8648 (2018)

- [45] Wang, J., Li, X., Liu, S., De Mello, S., Gallo, O., Wang, X., Kautz, J.: Zero-shot pose transfer for unrigged stylized 3D characters. In: CVPR. pp. 8704–8714 (2023)

- [46] Wang, K., Xie, J., Zhang, G., Liu, L., Yang, J.: Sequential 3D human pose and shape estimation from point clouds. In: CVPR. pp. 7275–7284 (2020)

- [47] Yuan, Y., Song, J., Iqbal, U., Vahdat, A., Kautz, J.: Physdiff: Physics-guided human motion diffusion model. In: ICCV. pp. 16010–16021 (2023)

- [48] Yuan, Y., Wei, S.E., Simon, T., Kitani, K., Saragih, J.: Simpoe: Simulated character control for 3D human pose estimation. In: CVPR. pp. 7159–7169 (2021)

- [49] Zhang, J., Weng, J., Kang, D., Zhao, F., Huang, S., Zhe, X., Bao, L., Shan, Y., Wang, J., Tu, Z.: Skinned motion retargeting with residual perception of motion semantics & geometry. In: CVPR. pp. 13864–13872 (2023)

- [50] Zhang, M., Li, H., Cai, Z., Ren, J., Yang, L., Liu, Z.: Finemogen: Fine-grained spatio-temporal motion generation and editing. Advances in Neural Information Processing Systems 36 (2024)

- [51] Zhang, X., van de Panne, M.: Data-driven autocompletion for keyframe animation. In: ACM SIGGRAPH Conference on Motion, Interaction and Games. pp. 1–11 (2018)

- [52] Zhao, H., Jiang, L., Jia, J., Torr, P.H., Koltun, V.: Point transformer. In: ICCV. pp. 16259–16268 (2021)

- [53] Zhu, W., Yang, Z., Di, Z., Wu, W., Wang, Y., Loy, C.C.: Mocanet: Motion retargeting in-the-wild via canonicalization networks. In: AAAI. vol. 36, pp. 3617–3625 (2022)