Motif Identification using CNN-based Pairwise Subsequence Alignment Score Prediction

††thanks: Identify applicable funding agency here. If none, delete this.

Abstract

A common problem in bioinformatics is related to identifying gene regulatory regions marked by relatively high frequencies of motifs, or deoxyribonucleic acid sequences that often code for transcription and enhancer proteins. Predicting alignment scores between subsequence k-mers and a given motif enables the identification of candidate regulatory regions in a gene, which correspond to the transcription of these proteins. We propose a one-dimensional (1-D) Convolution Neural Network trained on k-mer formatted sequences interspaced with the given motif pattern to predict pairwise alignment scores between the consensus motif and subsequence k-mers. Our model consists of fifteen layers with three rounds of a one-dimensional convolution layer, a batch normalization layer, a dense layer, and a 1-D maximum pooling layer. We train the model using mean squared error loss on four different data sets each with a different motif pattern randomly inserted in DNA sequences: the first three data sets have zero, one, and two mutations applied on each inserted motif, and the fourth data set represents the inserted motif as a position-specific probability matrix. We use a novel proposed metric in order to evaluate the model’s performance, , which is based on the Jaccard Index. We use 10-fold cross validation to evaluate out model. Using , we measure the accuracy of the model by identifying the 15 highest-scoring 15-mer indices of the predicted scores that agree with that of the actual scores within a selected region. For the best performing data set, our results indicate on average 99.3% of the top 15 motifs were identified correctly within a one base pair stride () in the out of sample data. To the best of our knowledge, this is a novel approach that illustrates how data formatted in an intelligent way can be extrapolated using machine learning.

Index Terms:

Motif Finding, Convolution Neural Network, Pairwise Sequence AlignmentI Introduction

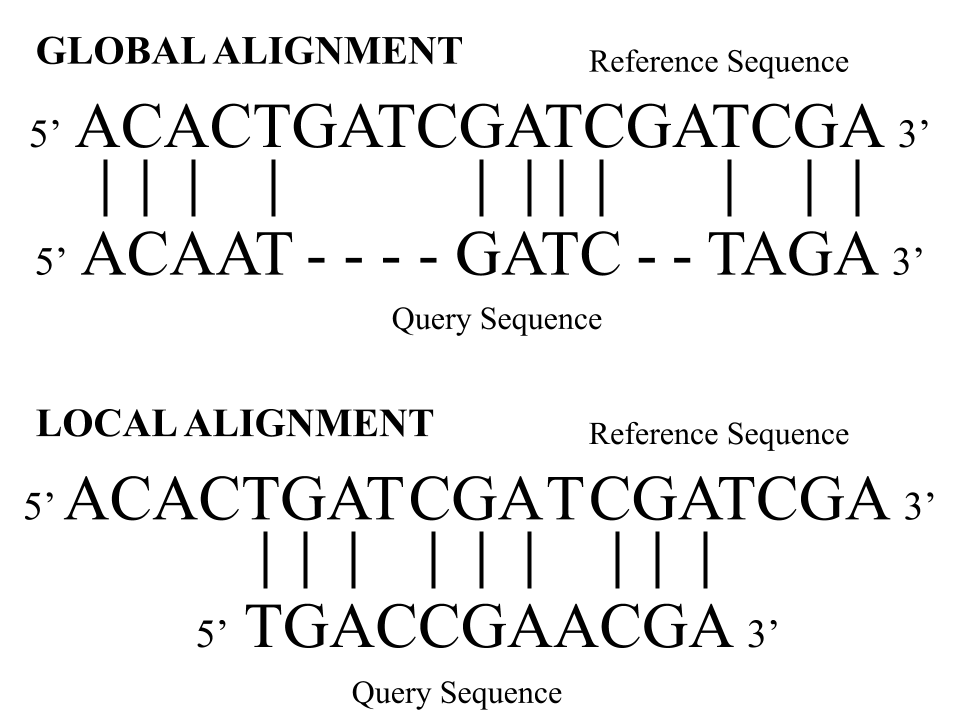

Measuring the similarity of two sequences is a well known problem called sequence alignment. This topic includes a vast category of methods for identifying regions of high similarity in biological sequences, such as those in deoxyribonucleic Acid (DNA), ribonucleic acid (RNA), and protein [7]. Specifically, DNA pairwise sequence alignment (PSA) methods are concerned with finding the best arrangement of two DNA sequences. Some historically notable dynamic programming PSA methods are the Needleman-Wunsch (NW) algorithm for global alignment [1] and Smith-Waterman (SW) algorithm for local alignment [2]. The main difference between global and local alignment is related to the difference in length of the two sequences: global alignment attempts to find the highest-scoring end-to-end alignment between two sequences of approximately the same length, and local alignment searches for local regions of high similarity between two sequences with different lengths [8]. Figure 1 shows this difference between local and global DNA alignment with two sequences aligned in a 5’ (i.e. five prime) to 3’ direction. In molecular biology, this orientation refers to the directionality of the carbon backbone in DNA. The top subfigure displays global alignment where a query sequence is aligned end-to-end with a reference. The bottom subfigure displays local alignment where a short query sequence is most optimally aligned with a longer reference sequence. This latter alignment displays how the query sequence is approximately equal to a subsequence of the reference sequence.

In this way, local alignment methods recognize approximate subsequence matches of a query sequence with respect to a given reference sequence. One common paradigm utilizing local alignment is to examine similarities between a query sequence and specific k-long subsequences in a given gene, known as k-mers, found within the reference sequence. Traditional local alignment algorithms calculate these scores between the query sequence and each k-mer in the reference sequence. The aim of this research is to identify where the most likely subsequence matches of the query sequence occur in each reference sequence using machine learning methods. One such type of query sequence that is of high biological significance is a sequence motif, which are short reoccurring subsequences of DNA [5]. Therefore, this research follows the ability of machine learning methods to gauge the relative enrichment of various representations of motifs (or motif patterns) in independent reference sequences. More specifically, the efficacy of identifying motif enrichment in sequences is explored using a one-dimensional (1-D) convolution neural network (CNN).

Four different data sets are generated, each with a different motif pattern randomly inserted in approximately 10,000 reference sequences: the first three data sets have zero, one, and two mutations applied on each inserted motif, and the fourth data set represents the inserted motif as a position-specific probability matrix (PPM). In this data structure, each nucleotide position corresponds to a frequency of nucleotides [22]. These distinct motif patterns help display how the CNN model can recognize both subsequence matches with exact, inexact, and probabilistic motifs. Each sample in a given data set consists of artificial sequences enriched with a given motif pattern at a frequency between five and fifteen occurrences per 1,000 base pairs (bp). These samples are split into 986 overlapping 15-mers with a corresponding calculated local alignment score from the BioPython Aligner [20]. These sores are then predicted using a CNN with 10-fold cross validation. In order to measure the performance of the model, the average out of sample mean squared error (MSE), R2, and accuracy scores are reported.

While the MSE of the model trained on each data set is not representative of the model’s effectiveness, the Jaccard Index and , a novel modified version of the Jaccard Index, are better suited to capture accuracy of the model. The standard MSE is not suitable for this problem because it inherently only displays differences between predicted and actual values. Since our aim is to locate those highest-scoring 15-mers, we need a metric that determines at which positions they occur and with what accuracy (see subsection V-A). This new metric, , measures the degree of similarity between two sets where each pair of elements can be different by at most . Because of the plateauing nature of this metric as seen in each data set and the risks involved in increasing alpha, only to are reported.

In implementing this new metric, the accuracy of the model increases dramatically across all four data sets compared to the Jaccard Index. This indicates that while the model is not able to precisely identify the highest-scoring k-mers exactly, it is able to accurately identify their local region. As expected, the model’s accuracy is far higher for the data sets with relatively simple inserted motif patterns–non-probabilistic consensus motifs–compared to that of the data set with more complex inserted motif patterns, such as consensus PPM.

II Background

Clusters of motifs across a genome strongly correlate to a gene regulatory regions [18]. These regions are especially important for motif enrichment analysis, where known motifs are identified in the regulatory sequence of a gene in order to determine which proteins (transcription factors and enhancers) control its transcription [6] [19]. Motif enrichment analysis is only relevant given that the regulatory region of a gene is known, otherwise the sequence under study may be from a non-coding region of an organism’s genome or an untranslated region of a gene [9]. Given that the regulatory region of a gene is unknown, one frequently used approach to identifying it is to first locate sequences enriched with highly conserved motifs. Fortunately, many motifs that have been discovered are common amongst genes serving a similar role across organisms, such as a negative regulatory region for eukaryotes [10]. Finding these conserved motifs may facilitate the identification of the regulatory regions in a gene. For that reason, identifying the exact or relative positions of a given motif in a gene or sequence is a relevant inquiry in the process for classifying candidate regulatory regions of a gene.

A software toolkit known as MEME Suit includes three different methods for motif-sequence searching [23]: FIMO (Find Individual Motif Occurrences) [21], GLAM2SCAN (Gapped Local Alignment of Motifs SCAN) [24], and MAST (Motif Alignment and Search Tool) [25].

FIMO focuses on scanning both DNA and protein sequences for a given motif represented as PPM. This software tool calculates the log-likelihood ratio score, p-value, and q-value (false discovery rate) for each subsequence position in a sequence database [21].

Typically, GLAM2SCAN performs a Waterman-Eggert local alignment between motifs found by GLAM2, its companion motif-finding algorithm, and a sequence database. These local alignment scores are generated from an aligner programmed with position specific residue scores, deletion scores, and insertion scores returned from the GLAM2 algorithm. The highest alignments are returned to the user [24].

MAST locates the highest-scoring subsequences with respect to a motif described as a position-specific score matrix. Using the QFAST algorithm, MAST calculates the p-value of a group of motif matches. This is accomplished by first finding the p-value of each match (position p-value’) and normalizing it for the length of the motif (’sequence p-value’). Then each of these normalized p-values are multiplied together to find the statistical significance across all located motifs in the database (’combined p-value’) [25].

III Data Analysis & Curation

A single data set contains approximately 10,000 randomly generated DNA sequences, each 1,000 bp long. The number of samples vary slightly from one to another due to some inconsistencies that are removed in prepossessing. A 15-mer motif is inserted into each sample anywhere from five to fifteen times. Four separate data sets of this structure are created where a different motif pattern is inserted randomly into each sequence. The first three data sets have zero, one, and two mutations applied on each inserted motif. These mutations are applied in order to determine whether the proposed model has the potential to identify consensus motifs and non-exact consensus motifs across many sequences. Since motifs mostly exist as profiles where each base pair position corresponds to a frequency table of nucleotides, the fourth data set is created where the inserted motifs are based off of a PPM [11].

Equation 1 is used to calculate the PPM indicated by matrix given a set of candidate motifs, or sequences that are thought to be from the same motif PPM. This equation counts the number of occurrences of each nucleotide in set for each nucleotide position across all motifs, where ; represents an indicator function, where is 1 if and 0 otherwise; (1, …, L), where L is the length of each motif; and , where N is the number of motifs.

| (1) |

In order to apply Equation 1 on candidate motifs, the DNA sequence data must be formatted as nucleotide position counts shown in Figure 2. This figure illustrates the conversion of a list of candidate motifs to matrix and then to using Equation 1. While Figure 2 displays this process for five 10-mers, the fourth data sets in this work relies on profiles built from ten 15-mers.

-

TACAGAGTTG

-

CCATAGGCGT

-

TGAACGCTAC

-

ACGGACGATA

-

CGAATTTACG

=

A

1

1

3

3

2

1

0

2

1

1

T

2

0

0

1

1

1

1

2

2

1

C

2

2

1

0

1

1

1

1

1

1

G

0

2

1

1

1

2

3

0

1

2

= A 0.2 0.2 0.6 0.6 0.4 0.2 0.0 0.4 0.2 0.2 T 0.4 0.0 0.0 0.2 0.2 0.2 0.2 0.4 0.4 0.2 C 0.4 0.4 0.2 0.0 0.2 0.2 0.2 0.2 0.2 0.2 G 0.0 0.4 0.2 0.2 0.2 0.4 0.6 0.0 0.2 0.4

IV Feature & Output Selection

In order to format the sequence data into a structure that is both recognizable and meaningful to a CNN, we first split each sequence into a list of overlapping 15-mers. Next, we generate a one-hot encoding for each nucleotide in the 15-mers. The resulting feature set is composed of 60 values. Figure 3 displays this process using a small subsequence example formatted as 4-mers.

To obtain the target values, each of these 15-mers are pairwise aligned with the consensus motif for the given data set motif pattern using the SW algorithm. Given two sequences, of length and of length , this algorithm begins by defining an by matrix . The first column and first row are assigned , and the following recurrence relation is applied to assign the rest of the values in .

where W is a gap score and is a score matrix such that

In the case when , returns a match score of , and in the case when , returns a mismatch score of . The gap score, , is assigned . The match, mismatch, and gap score can be configured for different alignments. These parameters are used because they are the most optimal for this type of local alignment [4]. Once is assigned its values, the best alignment is obtained by finding the maximum value in and tracing back the matrix elements that led up to this maximum. In this way, the maximum value in defines the optimal path in for the best alignment between sequences and [2]. The calculated alignment scores are normalized based on the maximum alignment score in each sample.

V Methods

V-A CNN Model Evaluation

Although the MSE loss function is effective at penalizing large differences between predicted and target values, such as outliers in the data, it does not successfully represent the predictive power of the model given the scope of the problem [14]. In the data, the target value from each sample ranges from zero to one. This range already generates an inherently small MSE. Even when the MSE for each sample is normalized, the metric is overshadowed by the overwhelming majority of the predicted values that were approximately equal to the global mean of each sample. In other words, the MSE as a metric does not capture the correct information pertaining to the five to fifteen inserted motif patterns in each sample due to a large unequal distribution of such scores that deviate from the global mean. This problem is analogous to that of an unequal class distribution in a classification problem.

The goal of the model is to score the CNN based on its ability to locate the 15 highest-scoring 15-mers, because we inserted a motif pattern at most 15 times into a single sample. Since this network deals with continuous values instead of discrete classes, initially we cannot be certain of the 15-mer to which a 15-mer score at any index corresponds. However, a higher scoring 15-mer has a greater probability of corresponding to that of a motif, whereas the lower scoring 15-mers carry little information. This is due to the fact that each score in the data is generated from a local alignment between 15-mer and the given consensus motif. In this way, only the highest 15-scoring 15-mers are of interest. As previously mentioned, we indicate that there is an unequal distribution between the number of scores corresponding to that of each inserted motif and the global mean of each sample. Using these observations, we rationalize that we only have to examine the 15 highest-scoring indices. This generality that the 15 highest-scoring idicies correspond to the inserted motif patterns is further supported by the notion that probability of observing a random 15-mer exactly equal or similar to the inserted motifs is relatively low.

Thus, the indices of the predicted 15 highest-scoring 15-mer inherently hold information about the position of possible inserted motif patterns because it is at these indices at which the local alignment is conducted. Due to the low likelihood of observing a false positive (when a 15-mer is identified as a motif but in all actuality is not one), we create a one-to-one correspondence between the indices of the actual motif indices and that of the predicted motifs using high local alignment scores. The accuracy of this one-to-one correspondence can be measured using the Jaccard Index given in Equation 2.

| (2) |

We propose a more generalized index, , in Equation 3 which measures the similarity of two sets with an allowed margin of error of . Because of the high locality of local alignment score predictions and due to the fact that the highest-scoring 15-mers can still be found from examining the immediate region of a prediction, this margin of error serves as a heuristic for motif identification. In this metric, two items are considered identical if they are no more than away from each other. In the scope of this work, sets and contain the indices of the 15 highest-scoring 15-mers of the actual data and predicted data, respectively. When , in Equation 2 is identical to in Equation 3. Conversely, as increases, the allowed distance between indices in sets and increases. For example, when , a predicted 15-mer index and actual 15-mer index are considered the same.

| (3) |

The following process is an algorithm to calculate a modified version of the Jaccard Index. Using the function in NumPy, we examine the indices that order both the actual outputs and the predicted outputs. In looping through the each of the top indices of the predicted outputs, we count the number of them which are contained in the list of indices of the actual outputs. The process returns the score as count over the maximum possible value, which in this case is . This is implemented in Algorithm 1

VI Results

Each of the four data sets is characterized by 10,000 samples where each sample contains a sequence that is 1,000 bp in length. In each sample, a motif pattern is inserted randomly anywhere from five to fifteen times. The first three data sets include inserted motif patterns with zero, one, and two mutations. The fourth data set includes an inserted motif pattern represented based on a PPM. Each data set is evaluated using out of sample data generated from 10-fold cross validation based on eight metrics: MSE, R2, and -.

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/4b8fd2ab-4f05-43f3-80c0-a39680ebf66f/cnnresults.png)

A fifth analysis is conducted with another data set using a motif representation similar to that of the fourth data set with the MafK transcription factor from the BATCH1 regulatory gene [26]. This motif is a 15-mer with a less conserved consensus sequence compared to that of the former four data sets. While this data set did not perform as well as the other four data sets with a of 45.3%, this analysis brought to light the consideration of the aligner scoring matrix as another hyperparameter to this work.

As it turns out, the performance of the model varies greatly with the chosen match score, mismatch score penalty, and gap score penalty for the currently implemented alignment method. For instance, the varies from 33.7% to 52.6% with different scoring hyperparameters. The former result is derived from an aligner with a match score of +2.0, mismatch score penalty of -3.0, and gap score penalty of -3.5, whereas the latter result is derived from an aligner with a match score of +2.0, mismatch score penalty of -4.0, and gap score penalty of -4.5. It is currently unclear what aligner hyperparameters are most optimal for this more complex data set and the original four data sets explored in the work. Although there is evidence to suggest that aligner scoring matrices vary with the type of inserted motif pattern, it is unclear whether the most optimal hyperparameters change from motif to motif.

One possible interpretation of the dependence of the model’s chosen evaluation metric, , on the aligner hyperparameters is related to the fact that the CNN predicts alignment scores that are normalized within each sample. Therefore, the farther these highest-scoring scores are from the global mean, the more likely that the proposed metric will be able to recognize inserted motifs. Conversely, when analyzing a data set with a less conserved motif consensus sequence, such as that of the MafK transcription factor, the alignment scores are closer to the global mean of each sample. This in turn makes recognizing the indices of the highest-scoring segments more challenging. It follows that the aligner hyperparameters which capitalize on increasing this difference are most favorable for all motifs, regardless of pattern.

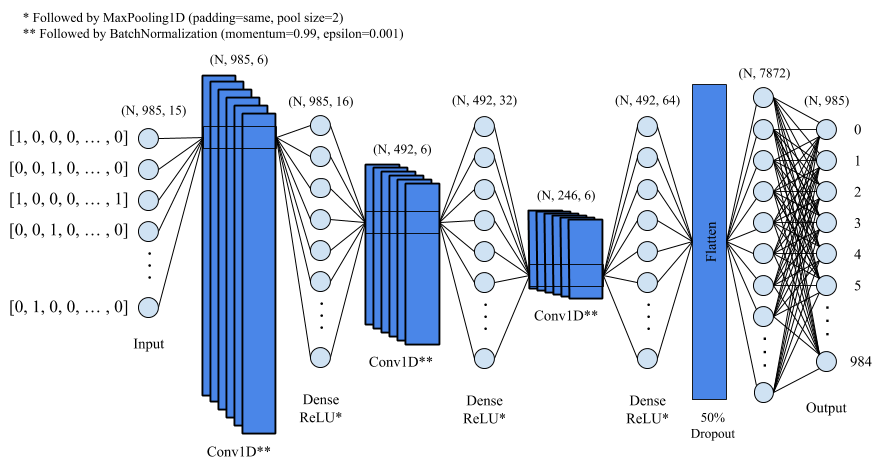

VI-A Convolution Neural Network (CNN) Architecture

CNN is a class of deep learning models which can infer patterns based on data formatted as a grid structure, such as a set of prices over time for stock or a grid representation of pixels in an image (add reference for these architectures). These Artificial Neural Netowrk (ANNs) use a linear mathematical operation called convolution in at least one of their layers [3]. The convolution operation is commonly identified by the following two equations:

| (4) |

| (5) |

Equation 4 explicitly denotes the equation for convolution, whereas Equation 5 displays how an asterisk can be used to for the linear operation. In both equations, is referred to as the input. Typically, this is formatted as a multidimensional array, or a tensor, that matches the size and dimensions of the data. The second argument is , representing a kernel, which stores parameters for the model also formatted as a tensor. This argument is adapted throughout the training process of the model. The output of both functions, , is called the feature map of the convolution layer. This is what is fed into the next layer of the network [3]. Hidden layers are generated from applying a kernel, or filter, of weights over the receptive field of the inputs. More specifically, the hidden layer is computed based off of the filter weights and the input layer as it strides across the feature space [28]. This operation can either compress or expand input space depending on the applied kernel [29]. This paradigm is followed by rounds of activations, normalizations, and pooling [29]. The model typically ends with a fully connected layer to compute its outputs [28]. The proposed model is represented in Figure 4 [cite my paper].

The model is marked by three rounds of a 1-D convolution layer, a batch normalization layer, a dense layer, and a 1-D maximum pooling layer. After these 12 layers, the model finishes off with a 50% dropout layer, a flattened layer, and finally a fully connected layer corresponding to the 986 alignment scores for each sample [13] [12].

The model described above is ran on all four data sets for 100 epochs with a batch size of 80 and compiled with the Adam optimizer (learning rate=0.001, beta 1=0.9, beta 2=0.999, epsilon=1e-07). Of the 10,000 samples in each dataset, 80% is reserved for training the network and the remaining 20% is used for validation after each epoch. For its loss function, the model relies on Mean Squared Error (MSE), which is calculated between predicted values () and target values () with the following formula in Equation 6:

| (6) |

VII Discussion

As displayed in this work, deep learning models, such as a CNN, have the capacity to recognize and predict the positions of an inserted motif with great accuracy. Furthermore, data structures can be devised to take advantage of unequal class distributions in regression problems as highlighted by the design of k-mer data representation in this work and the incorporation of as a novel evaluation metric.

In analyzing the results in Table I, there is a characteristic pattern between the accuracy metrics across each data set. For instance, in comparing - for the first data set with zero mutations applied on each inserted motif, the score monotonically increases with an increasing . This is evident for the three other data sets as well. With respect to this particular trend, it is expected that as increases, the score will also increase since relates directly to the allowed margin of error, making less conservative.

Additionally, the model’s accuracy is far higher for the data sets with relatively simple inserted motif patterns, such as nonmutated and mutated consensus motifs, compared to that of the fourth data set with a PPM motif pattern. This relationship can be explained by the process by which the scores for each 15-mer are calculated. For a given 15-mer, a score is computed based on its local alignment with a given consensus motif. For the first data set, these local alignment scores generated are derived from each inserted motif, whereas in the latter three data sets, the scores are not necessarily derived from each data set’s consensus motif since the motif patterns support variable inserted motif.

In all data sets, the largest increase in appears to be between the and . After this point, change in plateaus after a given . With the consideration that the likelihood of observing a false positive is relatively low, this indicates that the addition of stride is well-advised. This is the case because the increase in only influences up to a certain point. It is expected that as , where is the maximum on either side of a given motif index, because every single indices will be covered by the stride . In the case that , the certainty for each identified motif decreases with increasing regardless; however, the absence of this limit in the data indicates that the certainty of the identified motifs does not decreases dramatically from to . Furthermore, the presence of a plateauing supports the thought that a decrease in the certainty of an identified motif is negligible. This analysis can be drawn further in noticing that the point at which plateaus increases as the complexity of the motif pattern increases. In the case of a more complex motif pattern, such as either of the PPMs, a greater is required to fully encapsulate accuracy of the model’s predictions. Even then, the certainty of such motif identification with increasing decreases.

In subsection V-A, we draw a one to one correspondence between the actual motif indices and that of the predicted motifs by only examining the indices of the 15 highest-scoring 15-mers in both the actual scores and predicted scores. This is not a strong one-to-one correspondence because the number of inserted motifs actually varies randomly from five to fifteen times sample to sample. By design, this is a confounding variable When is applied on a sample with five inserted motifs, the returned score is predicted to be an underestimate of the model’s prediction. This is due to the fact that this function only examines the highest 15-scoring indices for each sample. In the case of five inserted motifs, there would be ten 15-mers identified as high-scoring motifs, when in reality these are random 15-mers in the sequence. Because those scores are more likely to be present throughout a sequence, there will be less similarity between the indices of the predicted 15 highest-scoring 15-mers and that of the actual 15 highest-scoring 15-mers. This will most likely lead to a decrease in .

References

- [1] Cold Spr. A general method applicable to the search for similarities in the amino acid sequence of two proteins. Mol. Biol, 48:443–153, 1970.

- [2] Temple F Smith, Michael S Waterman, et al. Identification of common molecular subsequences. Journal of molecular biology, 147(1):195–197, 1981.

- [3] Yoshua Bengio, Ian Goodfellow, and Aaron Courville. Deep learning, volume 1. MIT press Massachusetts, USA:, 2017.

- [4] Ahmad Al Kawam, Sunil Khatri, and Aniruddha Datta. A survey of software and hardware approaches to performing read alignment in next generation sequencing. IEEE/ACM transactions on computational biology and bioinformatics, 14(6):1202–1213, 2016.

- [5] Patrik D’haeseleer. What are dna sequence motifs? Nature biotechnology, 24(4):423–425, 2006.

- [6] Robert C McLeay and Timothy L Bailey. Motif enrichment analysis: a unified framework and an evaluation on chip data. BMC bioinformatics, 11(1):165, 2010.

- [7] Waqar Haque, Alex Aravind, and Bharath Reddy. Pairwise sequence alignment algorithms: A survey. In Proceedings of the 2009 Conference on Information Science, Technology and Applications, page 96–103, 2009.

- [8] EMBL-EBI. Pairwise Sequence Alignment, 2020.

- [9] Xiaole Liu, Douglas L Brutlag, and Jun S Liu. Bioprospector: discovering conserved dna motifs in upstream regulatory regions of co-expressed genes. In Biocomputing 2001, pages 127–138. World Scientific, 2000.

- [10] Jorge A Iñiguez-Lluhí and David Pearce. A common motif within the negative regulatory regions of multiple factors inhibits their transcriptional synergy. Molecular and Cellular Biology, 20(16):6040–6050, 2000.

- [11] Modan K Das and Ho-Kwok Dai. A survey of dna motif finding algorithms. In BMC bioinformatics, volume 8, page S21. Springer, 2007.

- [12] Yann LeCun, Yoshua Bengio, and Geoffrey Hinton. Deep learning. Nature, 521(7553):436–444, 2015.

- [13] Jürgen Schmidhuber. Deep learning in neural networks: An overview. Neural Networks, 61:85–117, 2015.

- [14] Yu Qi, Yueming Wang, Xiaoxiang Zheng, and Zhaohui Wu. Robust feature learning by stacked autoencoder with maximum correntropy criterion. In 2014 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pages 6716–6720. IEEE, 2014.

- [15] Luping Ji, Xiaorong Pu, Hong Qu, and Guisong Liu. One-dimensional pairwise cnn for the global alignment of two dna sequences. Neurocomputing, 149:505–514, 2015.

- [16] Q. Zhang, L. Zhu, W. Bao, and D. Huang. Weakly-supervised convolutional neural network architecture for predicting protein-dna binding. IEEE/ACM Transactions on Computational Biology and Bioinformatics, 17(2):679–689, 2020.

- [17] Gary D Stormo and George W Hartzell. Identifying protein-binding sites from unaligned dna fragments. Proceedings of the National Academy of Sciences, 86(4):1183–1187, 1989.

- [18] Martin C. Frith, Michael C. Li, and Zhiping Weng. Cluster-Buster: finding dense clusters of motifs in DNA sequences. Nucleic Acids Research, 31(13):3666–3668, 07 2003.

- [19] Tom Lesluyes, James Johnson, Philip Machanick, and Timothy L. Bailey. Differential motif enrichment analysis of paired chip-seq experiments. BMC Genomics, 15(1):752, 2014.

- [20] Peter J. A. Cock, Tiago Antao, Jeffrey T. Chang, Brad A. Chapman, Cymon J. Cox, Andrew Dalke, Iddo Friedberg, Thomas Hamelryck, Frank Kauff, Bartek Wilczynski, and Michiel J. L. de Hoon. Biopython: freely available python tools for computational molecular biology and bioinformatics. Bioinformatics, 25(11):1422–1423, 8/5/2020 2009.

- [21] Charles E. Grant, Timothy L. Bailey, and William Stafford Noble. Fimo: scanning for occurrences of a given motif. Bioinformatics, 27(7):1017–1018, 9/8/2020 2011.

- [22] Mengchi Wang, David Wang, Kai Zhang, Vu Ngo, Shicai Fan, and Wei Wang. Motto: Representing motifs in consensus sequences with minimum information loss. Genetics, page genetics.303597.2020, 08 2020.

- [23] Timothy L. Bailey, Mikael Boden, Fabian A. Buske, Martin Frith, Charles E. Grant, Luca Clementi, Jingyuan Ren, Wilfred W. Li, and William S. Noble. Meme suite: tools for motif discovery and searching. Nucleic Acids Research, 37(suppl_2):W202–W208, 9/9/2020 2009.

- [24] Martin C. Frith, Neil F. W. Saunders, Bostjan Kobe, and Timothy L. Bailey. Discovering sequence motifs with arbitrary insertions and deletions. PLOS Computational Biology, 4(5):e1000071–, 05 2008.

- [25] T L Bailey and M Gribskov. Combining evidence using p-values: application to sequence homology searches. Bioinformatics, 14(1):48–54, 9/9/2020 1998.

- [26] Oriol Fornes, Jaime A Castro-Mondragon, Aziz Khan, Robin van der Lee, Xi Zhang, Phillip A Richmond, Bhavi P Modi, Solenne Correard, Marius Gheorghe, Damir Baranašić, Walter Santana-Garcia, Ge Tan, Jeanne Chèneby, Benoit Ballester, François Parcy, Albin Sandelin, Boris Lenhard, Wyeth W Wasserman, and Anthony Mathelier. JASPAR 2020: update of the open-access database of transcription factor binding profiles. Nucleic Acids Research, 48(D1):D87–D92, 11 2019.

- [27] Ian Goodfellow, Yoshua Bengio, and Aaron Courville. Deep Learning. MIT Press, 2016.

- [28] Jürgen Schmidhuber. Deep learning in neural networks: An overview. Neural Networks, 61:85–117, 2015.

- [29] Yann LeCun, Yoshua Bengio, and Geoffrey Hinton. Deep learning. Nature, 521(7553):436–444, 2015.

- [30] Ethan J Moyer and Anup Das. Machine learning applications to dna subsequence and restriction site analysis. arXiv preprint arXiv:2011.03544, 2020.