Monocular Event-Inertial Odometry with Adaptive decay-based Time Surface and Polarity-aware Tracking

Abstract

Event cameras have garnered considerable attention due to their advantages over traditional cameras: low power consumption, high dynamic range, and freedom from motion blur. This paper proposes a monocular event-inertial odometry that combines an adaptive decay kernel-based time surface with polarity-aware tracking. We use an adaptive decay-based Time Surface to extract texture information from asynchronous events; it adapts to the dynamic characteristics of the event stream and enhances the representation of environmental textures. However, polarity-weighted time surfaces suffer from event polarity shifts when the motion direction changes. To mitigate the adverse effect of these shifts on feature tracking, we additionally track features on a polarity-inverted time surface, which improves robustness. Comparative analysis with visual-inertial and event-inertial odometry methods shows that our approach outperforms state-of-the-art techniques on several sequences and delivers competitive results across various datasets.

I Introduction

Accurate environmental perception is essential in robotics. Traditional vision sensors, like conventional cameras, often suffer from motion blur during rapid movement and can lose image details due to their limited dynamic range, thereby undermining perception accuracy and robustness. Event cameras, equipped with Dynamic Vision Sensors (DVS), offer a promising solution to these challenges. They generate events asynchronously whenever a pixel’s brightness change surpasses a preset threshold. Consequently, event cameras boast a wide dynamic range, high temporal resolution, low energy consumption, and immunity to motion blur.

It is commonly recognized that high-textured regions trigger events more frequently than low-textured ones, making it possible to extract texture details from the event stream. However, processing these asynchronous events is a challenging task. For this reason, various event representation methods have been proposed, and Gallego et al. [1] classify these event representations into Individual Events, Event Packet, Event Frame/Image, Time Surface, etc. Time Surface [2], a 2D map representation in which each pixel stores the timestamp of the corresponding event, is widely utilized in event-based SLAM or odometry methods [3, 4].

To highlight recent events over past events, time surfaces often employ an exponential decay kernel [5], but this method has some inherent limitations. First, the exponential decay kernel requires a preset parameter, the time constant $\tau$, which must be manually tuned for different sequences. It does not adapt to the dynamics of the event stream, and it is prone to bold edges and noticeable trailing when the event frequency is high. Second, it does not filter out events that contribute little to the texture, leaving superfluous events in the time surface. Third, the motion direction of the event camera influences event polarities. When the direction of motion changes suddenly, the most recent events may exhibit opposite polarities. This causes fluctuations in the grayscale values of the pixels associated with these events, potentially disrupting feature tracking.

To tackle the aforementioned challenges, and inspired by [6], we propose a monocular event-based visual-inertial odometry with an adaptive decay-based time surface representation and polarity-aware tracking. The main contributions of the paper are summarized as follows:

- We propose a real-time monocular event-inertial odometry based on an adaptive decay-based time surface and polarity-aware tracking within the MSCKF framework for accurate pose estimation.

- We present an adaptive decay-based time surface that accommodates the dynamic characteristics of the event stream, and we propose a polarity-aware tracking method that improves the stability of feature tracking by using an additional polarity-inverted time surface.

- We evaluate the proposed method on different datasets, comparing its accuracy with visual-inertial odometry and event-inertial odometry methods, and assessing the effectiveness of the adaptive decay and the proposed tracking approach. Experimental results show that our method produces competitive results.

The remainder of this paper is organized as follows: Section II provides a brief summary of recent works. Our system is described in Section III. Section IV presents detailed experimental settings and results in multiple datasets. Finally, Section V offers a succinct overview of our system and outlines future directions.

II Related Works

II-A Event-based Visual Odometry

Kueng et al. [7] developed a pioneering method for event-based visual odometry that relies on feature extraction from grayscale maps and asynchronous event-based tracking, facilitating 6DoF pose estimation and mapping. However, this method is confined to feature points detected in image frames. Another stride in event-based visual odometry by Kim et al. [8] achieved real-time event-based SLAM by integrating triplet probabilistic filters for pose estimation, scene mapping, and intensity estimation, although this approach demands GPU acceleration due to its computational intensity. EVO [9] advanced the field by presenting an image-to-model alignment-based tracking approach to capture rapid camera movements, recovering semi-dense maps in parallel processing, although a lengthy start-up phase and poor accuracy hinder it. EDS [10] improved upon this with an event generation model to track camera motion, enhancing the accuracy of visual odometry but at the expense of increased computational demand and slower optimization speeds. Lastly, an innovative 6DoF motion compensation mechanism by Huang et al. [11] allowed deblurred event frame generation synchronized to RGB images using an event generation model, addressing the modality disparities between images and event data.

Geometric approaches do not fully exploit event data, whereas deep learning-based event odometry introduces a different processing paradigm. RAMP-VO [12] is the first end-to-end framework for event- and image-based visual odometry, merging asynchronous event streams with image data through a parallel encoding scheme. DEVO [13] is the first monocular event-only odometry system based on deep learning; it is trained on event voxel grids and inverse depth maps under the supervision of ground-truth poses, and achieves markedly higher accuracy than conventional techniques. Although deep learning odometry improves precision, computation-constrained platforms such as drones may still benefit from the cost-effective accuracy gains obtained by integrating event data with IMU measurements.

II-B Event-based Visual Inertial Odometry

Zhu et al. [14] introduced the pioneering event-based odometry by integrating an event-driven tracker with IMU data. However, its real-time application is hindered by computationally demanding feature tracking. Vidal et al. [15] advanced the field by establishing a tightly integrated framework combining events, frames, and inertial readings for enhanced state estimation. Complementarily, some research integrates events and IMU within a continuous-time schema. Mueggler et al. [16] devised a continuous-time approach for merging high-frequency event and IMU data for visual inertial odometry. Embracing a sophisticated feature tracker [17], EKLT-VIO [18] demonstrated accurate performance in Mars-like and high-dynamic-range sequences and showed good potential in vision-based exploration on Mars. Dai et al. [19] presented a comprehensive model marrying continuous-time inertial data with events, innovating an exponential decay correlation for events. Guan et al. [20] developed a uniformly distributed event corner detection algorithm for raw events, and designed two event representations to perform feature tracking and loop closure matching for a keyframe-based visual inertial system. Based on this, Guan et al. [21] utilized motion compensation for the event stream based on IMU measurements and introduced line and point feature constraints, improving odometric precision.

Although time surfaces based on exponential decay can be additionally motion compensated to enhance performance, as employed by [11, 21], they still rely on accurate sensor measurements (e.g. IMU) or accurate odometer estimates. To make the exponential decay kernel adaptable to the dynamics of the event stream, we adopted the event activity model proposed by Nunes et al. [6], and introduced an adaptive decay kernel for different event cameras and practical operating conditions. Leveraging such advancements, we propose a monocular event-inertial odometry with the adaptive decay kernel and optimize feature tracking for the problems faced by the polarity-weighted time surface-based approach, resulting in a more robust and accurate odometry.

III Methodology

III-A System Overview

The system overview is depicted in Fig. 2. Raw events are transformed into adaptive decay-based time surfaces (Sec. III-C) using the proposed Time-Priority strategy. Data from a monocular event camera and an IMU are fused in the Multi-State Constraint Kalman Filter (MSCKF) [22], enabling precise and low-latency pose estimation. Given the sparse event outputs from the event camera when the system is in static or slow motion, we utilize a dynamic initialization method [23] to incorporate feature observations across time surfaces and IMU measurements for system initialization. Once initialized, upon receiving a new event packet, the system leverages IMU measurements to propagate the mean and covariance of the state to the timestamp of the new event packet, and augments a cloned IMU pose to the state vector (Sec. III-B). Afterward, sparse feature observations from the proposed tracking method (Sec. III-D) are utilized to update the state (Sec. III-E). Landmarks and old cloned poses are marginalized out of the state vector for computational efficiency.

III-B State Vector of Event-Inertial Odometry

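The state vector $\mathbf{x}_k$ of the system at timestamp $t_k$ is composed of the inertial state $\mathbf{x}_I$, the inertial pose clones $\mathbf{x}_C$, and the inverse depths of landmarks $\mathbf{x}_\lambda$, given by

$$
\begin{aligned}
\mathbf{x}_k &= \begin{bmatrix} \mathbf{x}_I^\top & \mathbf{x}_C^\top & \mathbf{x}_\lambda^\top \end{bmatrix}^\top, \\
\mathbf{x}_I &= \begin{bmatrix} \bar{q}_k^\top & {}^{G}\mathbf{p}_k^\top & {}^{G}\mathbf{v}_k^\top & \mathbf{b}_a^\top & \mathbf{b}_g^\top \end{bmatrix}^\top, \\
\mathbf{x}_C &= \begin{bmatrix} \bar{q}_{k-1}^\top & {}^{G}\mathbf{p}_{k-1}^\top & \cdots & \bar{q}_{k-c}^\top & {}^{G}\mathbf{p}_{k-c}^\top \end{bmatrix}^\top, \\
\mathbf{x}_\lambda &= \begin{bmatrix} \lambda_1 & \cdots & \lambda_m \end{bmatrix}^\top
\end{aligned}
\tag{1}
$$

where $\bar{q}_k$ denotes the unit quaternion, and the corresponding rotation matrix is denoted as $\mathbf{R}(\bar{q}_k)$. Furthermore, ${}^{G}\mathbf{p}_k$ and ${}^{G}\mathbf{v}_k$ denote the position and velocity of the IMU in the global frame at time $t_k$, while $\mathbf{b}_a$ and $\mathbf{b}_g$ are the biases of the accelerometer and the gyroscope, respectively.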

The sliding window maintains a set of $c$ cloned IMU poses at the timestamps of the event packets for feature triangulation and state update, as in an RGB-camera-based VIO. Stable features tracked across the entire sliding window are treated as SLAM features and are augmented to the state vector to extend the time span of active constraints. In this paper, we use the inverse depth parameterization and include at most $m$ landmarks in the state to limit computational complexity. The extrinsic parameters between the IMU and the camera are precalibrated and assumed to be known. In our experiments, $c$ and $m$ are set to 10 and 50, respectively.

III-C Time Surface and Adaptive decay

Event Definition: Events primarily occur in regions with rich environmental textures, such as edges. When the brightness of a pixel changes beyond a certain threshold, an event is triggered. An event at timestamp $t_k$ with pixel coordinate $\mathbf{u}_k$ is defined as:

$$
e_k = (t_k, \mathbf{u}_k, p_k) \tag{2}
$$

where the polarity $p_k \in \{+1, -1\}$ indicates whether the brightness increases or decreases.

Time Surface: As illustrated in Fig. 1, event frames (in the second column), generated from spatio-temporal neighborhoods of raw events, are the most basic way to represent event positions within an image. This representation is highly sensitive to event counts and often fails to capture environmental texture accurately because it ignores event timestamps. To address this issue, the Time Surface [2], which retains event timestamps, was proposed. To prioritize recent event information, the time surface is usually combined with an exponential decay kernel [5]:

$$
\mathcal{T}(t, e_i) = \exp\left(-\frac{t - t_i}{\tau}\right) \tag{3}
$$

where $\tau$ represents a predefined time constant, $e_i$ is any previous event, and $t_i$ is its timestamp. The time constant $\tau$ needs to be fine-tuned to mitigate interference from past events. However, a fixed time constant cannot accommodate all camera motions and should be adjusted for different motion conditions. A constant $\tau$ results in bold edges in the time surfaces when the motion is aggressive.
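As a rough illustration of the exponential-decay time surface in Eq. (3), the following Python sketch keeps the latest event per pixel and applies the kernel at a reference time. The function name `exp_time_surface`, the `(t, x, y, p)` event tuple layout, and the NumPy array layout are illustrative assumptions, not the authors' implementation.

```python
import numpy as np

def exp_time_surface(events, t_ref, tau, shape, polarity_weighted=False):
    """Exponential-decay time surface at time t_ref.

    events: iterable of (t, x, y, p) sorted by time, with p in {+1, -1}.
    shape:  (height, width) of the sensor.
    """
    last_t = np.full(shape, -np.inf)   # timestamp of the latest event per pixel
    last_p = np.zeros(shape)           # polarity of the latest event per pixel
    for t, x, y, p in events:
        if t > t_ref:                  # ignore events after the reference time
            break
        last_t[y, x] = t
        last_p[y, x] = p
    # Pixels without events have last_t = -inf, so exp(-inf) = 0.
    surface = np.exp(-(t_ref - last_t) / tau)
    if polarity_weighted:
        surface = surface * last_p
    return surface
```

With a fixed `tau`, a burst of events during fast motion all receive values close to 1, which is one way to see why edges become bold when the motion is aggressive.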

Adaptive Decay Kernel: To reflect the dynamic characteristics of the event stream, [6] proposes the Event Activity as follows:

$$
A(t) = \beta(t, t')\,A(t') + n \tag{4}
$$

where $t'$ is the timestamp of any previous event, $\beta(t, t')$ is the decay applied between $t'$ and $t$, and $n$ is the count of events within the time interval $(t', t]$. From this we obtain the adaptive decay kernel for the time surface, which is derived from the exponential decay. Taking the time derivative of Eq. (3) gives:

$$
\frac{\mathrm{d}\beta}{\mathrm{d}t} = -\alpha(t)\,\beta \tag{5}
$$

where $\alpha(t)$ represents the decay rate (equal to $1/\tau$ for the exponential kernel), which we expect to be time-varying and influenced by the event activity. Letting the decay rate be proportional to the event activity $A(t)$ and solving Eq. (5) yields the adaptive decay:

$$
\beta(t, t') = \frac{1}{1 + \gamma\,A(t')\,(t - t')} \tag{6}
$$

where $A(t')$ is the event activity at the previous timestamp $t'$ and $\gamma$ is the coefficient. Eq. (6) introduces the coefficient $\gamma$, whereas in [6] the coefficient is fixed to 1. That fixed setting ignores the influence of the camera resolution as well as the threshold for triggering events, resulting in too little decay for redundant events or too much decay for active events.

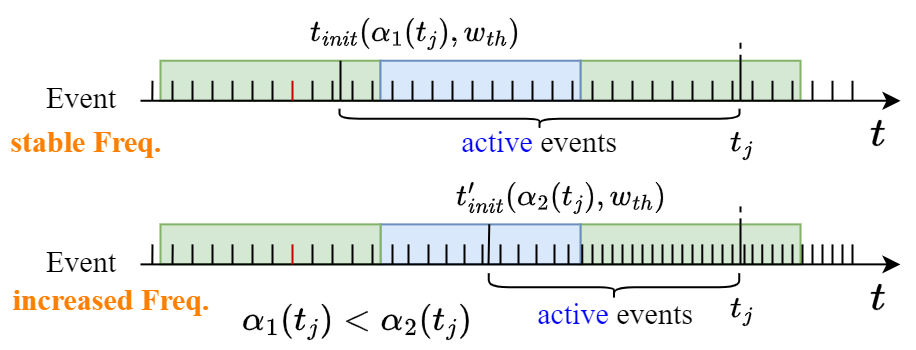

The event activity increases with the event frequency. As inferred from Eq. (6), when the event frequency increases, the decay becomes faster, so only events with later timestamps undergo little decay. In other words, for a fixed pixel value, a higher event frequency implies that the corresponding event timestamps are closer to the current time. Conversely, event information is retained for a longer period of time when the event frequency is low. Therefore, modeling the dynamics of the event stream through the event activity makes it possible to adjust the decay adaptively and thus provides high-quality imaging.
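The step from the decay-rate equation to the adaptive decay can be sketched as follows. This is a hedged reconstruction: the symbols $\beta$ (decay), $A$ (event activity), $\gamma$ (coefficient), and $t'$ (previous event timestamp) are notation chosen here, and the derivation assumes, in the spirit of [6], that no new event arrives in $(t', t)$, so that the activity only decays, $A(t) = \beta(t, t')\,A(t')$.

```latex
% Decay rate proportional to the (decaying) event activity:
%   alpha(t) = gamma * A(t) = gamma * beta(t, t') * A(t')
\frac{\mathrm{d}\beta}{\mathrm{d}t}
  = -\alpha(t)\,\beta
  = -\gamma\,A(t')\,\beta^{2},
  \qquad \beta(t', t') = 1 .

% Separate variables and integrate from t' to t:
\int_{1}^{\beta} \frac{\mathrm{d}b}{b^{2}}
  = -\gamma\,A(t') \int_{t'}^{t} \mathrm{d}s
\;\Longrightarrow\;
\frac{1}{\beta} - 1 = \gamma\,A(t')\,(t - t')
\;\Longrightarrow\;
\beta(t, t') = \frac{1}{1 + \gamma\,A(t')\,(t - t')} .
```

For a fixed value of $\beta$, the elapsed time $t - t'$ scales as $1/A(t')$, which is the sense in which a busier event stream decays faster.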

Adaptive decay-based Time Surface (ATS): We now discuss how to use the adaptive decay to generate an adaptive time surface with timestamp $t_s$. The adaptive decay kernel includes the event activity, which reflects the dynamic characteristics of the event stream. Thus, the adaptive time surface based on this kernel is more robust to varying event frequencies and naturally provides an effective way to filter out inactive events and reduce noise. Specifically, any previous event $e_i$ with $\beta(t_s, t_i) < \epsilon$ is considered inactive ($t_s$ is the timestamp of the adaptive time surface), where $\beta(t_s, t_i)$ is defined as:

$$
\beta(t_s, t_i) = \frac{1}{1 + \gamma\,A(t_s)\,(t_s - t_i)} \tag{7}
$$

Here $\epsilon$ is a flexible threshold, and Eq. (7) is modified from Eq. (6) for convenience of calculation. In practical computations, $A(t_s)$ can be approximated by the event activity associated with the event closest to time $t_s$.

When the system receives an event message, as shown in Fig. 3, the event activity is calculated recursively: the decay is first computed from Eq. (6), and Eq. (4) is then used to compute the event activity at the event timestamp. The event count $n$ in Eq. (4) is always 1, since exactly one event arrives between adjacent event timestamps. In this recursive manner, the event activity at every event timestamp is computed and stored with the corresponding event.
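The recursion described above can be sketched in a few lines of Python. This is a minimal sketch, assuming the adaptive decay has the form $1 / (1 + \gamma A \,\Delta t)$ and that the activity of the very first event is initialised to 1; the function names and the initialisation are illustrative assumptions.

```python
def update_activity(activity_prev, t_prev, t, gamma=1.0, n=1):
    """One recursive step: decay the previous activity, then add n new events."""
    dt = t - t_prev
    beta = 1.0 / (1.0 + gamma * activity_prev * dt)  # adaptive decay over dt
    return beta * activity_prev + n

def event_activities(timestamps, gamma=1.0):
    """Event activity at every event timestamp (timestamps sorted ascending)."""
    activities = []
    activity, t_prev = 0.0, None
    for t in timestamps:
        if t_prev is None:
            activity = 1.0  # assumed initial value for the first event
        else:
            activity = update_activity(activity, t_prev, t, gamma)
        activities.append(activity)  # stored alongside the corresponding event
        t_prev = t
    return activities
```

Because each step only needs the previous activity and timestamp, the per-event cost is constant, which fits processing events as they arrive.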

There are two strategies for determining the initial event timestamp $t_0$ and the timestamp $t_s$ of the adaptive decay-based time surface:

- Data-Priority [6]: $t_0$ starts at the first event timestamp and is typically reset once a set of active events has been obtained. This strategy tends to use all events without duplication, but the timestamp of the time surface cannot be predicted in advance. In addition, the frequency of the time surfaces generated in this way is sensitive to the threshold $\epsilon$.

- Time-Priority: we first estimate $t_0$ from the timestamp $t_s$ of the time surface, the event activity $A(t_s)$, and the predefined threshold $\epsilon$, as follows:

$$
t_0 = t_s - \frac{1 - \epsilon}{\epsilon\,\gamma\,A(t_s)} \tag{8}
$$

  This approach allows arbitrary timestamps for time surfaces and decouples the event activity computation from the generation of the adaptive time surfaces, enabling parallel processing.

Events that are considered active have timestamps between $t_0$ and $t_s$. For each pixel, we find the latest active event, denoted as $e_i$; the pixel value is $\beta(t_s, t_i)$, or $p_i\,\beta(t_s, t_i)$ if the time surface is polarity-weighted. If a pixel has no active events, its value is set to zero. Finally, all pixel values are mapped to the image intensity range.
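A minimal sketch of the Time-Priority generation step is given below. It assumes that an event is active when its decay $1 / (1 + \gamma A (t_s - t_i))$ is at least the threshold $\epsilon$, which gives the window start by solving that expression for the time offset. The function names, the `(t, x, y, p)` tuple layout, and the use of a single activity value for the whole surface are illustrative assumptions; the final mapping to image intensities is omitted.

```python
import numpy as np

def active_window_start(t_s, activity, gamma, eps):
    """Earliest timestamp whose decay at t_s is still >= eps."""
    return t_s - (1.0 / eps - 1.0) / (gamma * activity)

def adaptive_time_surface(events, t_s, activity, gamma, eps, shape,
                          polarity_weighted=True):
    """events: iterable of (t, x, y, p) sorted by time, p in {+1, -1}."""
    t0 = active_window_start(t_s, activity, gamma, eps)
    surface = np.zeros(shape)              # pixels without active events stay 0
    for t, x, y, p in events:
        if t < t0 or t > t_s:              # inactive or future event
            continue
        beta = 1.0 / (1.0 + gamma * activity * (t_s - t))
        # Later events overwrite earlier ones, so the latest active event wins.
        surface[y, x] = beta * p if polarity_weighted else beta
    return surface
```

Since the window start depends only on the surface timestamp, the activity, and the threshold, surfaces can be requested at arbitrary times without waiting for a data-driven reset.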

III-D Polarity-aware Feature Tracking

To verify the performance of adaptive decay-based time surfaces in the odometry, we extract FAST corners [24] and track them with the LK optical flow [25] method. This approach works properly on event-camera datasets. However, we observe that abrupt changes in motion direction may lead to tracking failures because they violate the brightness constancy assumption. Different motion directions induce events with opposite polarities at identical scene positions, leading to fluctuations in pixel values, as illustrated in Fig. 1 and Fig. 4. Such fluctuations notably degrade the accuracy of odometry estimation.

Actively inverting event polarities generates a polarity-inverted time surface map. This map can be regarded as an approximation of the time surface that would be obtained had the event polarities been maintained, which partly mitigates the violation of the brightness constancy assumption. By tracking both the original and the polarity-inverted time surfaces simultaneously and adaptively merging the tracking results, we ensure continuous and stable feature tracking. Deciding when to invert based on a velocity estimate from IMU measurements may suffer from misjudgments or delays. Instead, we track both images separately and compare the number of successfully tracked features. When the number of successfully tracked features on the polarity-weighted time surface is smaller than that on the polarity-inverted map, we merge both tracking results. Finally, we apply RANSAC to eliminate outliers and obtain the final matches by estimating the fundamental matrix from the matched features.
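The decision rule above can be sketched as follows. The text does not specify how the two result sets are combined, so this sketch assumes one plausible policy: keep every track from the original surface and fill in features that only the polarity-inverted surface tracked. The function name and the dictionary representation of tracks are hypothetical.

```python
def merge_tracks(tracks_orig, tracks_inv):
    """Merge tracking results from the original and polarity-inverted surfaces.

    Each argument maps feature_id -> (x, y) for successfully tracked features.
    """
    if len(tracks_orig) >= len(tracks_inv):
        # The original surface tracked at least as many features: use it as is.
        return dict(tracks_orig)
    # Otherwise merge; on conflict the original-surface track is kept
    # (an assumption made for this sketch).
    merged = dict(tracks_inv)
    merged.update(tracks_orig)
    return merged
```

The merged set would then go through the RANSAC-based outlier rejection described in the text.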

III-E Update with Tracked Features

When tracking results are available, we select features within the sliding window for triangulation and system update. The system update follows two principles. Firstly, we aim to triangulate using as much data as possible to ensure accuracy, thereby ensuring positive gains from system updates. Secondly, stable tracked points are selectively included in the state vector for continuous estimation, aiming to improve system accuracy while maintaining control over increased computation time. Based on these principles, features are categorized into SLAM and MSCKF types.

Specifically, during feature tracking on the current time surface, if tracking of a feature fails or its track reaches the maximum length (equal to the sliding window size), suggesting that the landmark observations are maximized, we attempt to triangulate it. For a landmark associated with a successfully triangulated feature, we have

| (9) |

where represents the back projection function that transforms a pixel to the normalized image plane. The inverse depth is defined in the anchor frame. For instance, the SLAM feature always selects the oldest frame in the sliding window as the anchor frame. Consequently, the position could be computed using the observation in the anchor frame. It’s worth noting that for the MSCKF feature, the anchor frame is determined as the first observed frame. Given the new observation (e.g. ) in the latest frame , the nonlinear measurement model is given by:

| (10) | ||||

| (11) |

where the measurement noise is associated with the event pixel noise and follows a white Gaussian distribution . Features tracked throughout the entire sliding window are augmented into the state vector, continuously updating the system with subsequent landmark observations—referred to as SLAM features. Other successfully triangulated points, termed MSCKF features, are updated using the efficient MSCKF nullspace projection [22], avoiding the inclusion of landmarks in the state vector and thus reducing system complexity.
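The nullspace projection used for MSCKF features can be illustrated with a short NumPy sketch. It assumes a linearised residual of the form r ≈ H_x δx + H_f δf + n for one landmark, with H_f of full column rank; the function name and argument layout are illustrative, not taken from [22] or from the authors' code.

```python
import numpy as np

def nullspace_project(H_f, H_x, r):
    """Project a landmark's linearised measurements onto the left nullspace of H_f.

    H_f: (m, k) Jacobian w.r.t. the landmark parameters (full column rank assumed).
    H_x: (m, n) Jacobian w.r.t. the state.
    r:   (m,)   stacked residual.
    Returns the projected (m - k, n) Jacobian and (m - k,) residual.
    """
    # Full QR: the last m - k columns of Q span the left nullspace of H_f.
    Q, _ = np.linalg.qr(H_f, mode="complete")
    N = Q[:, H_f.shape[1]:]
    return N.T @ H_x, N.T @ r
```

Because the columns of `N` are orthonormal, isotropic white Gaussian measurement noise keeps the same variance after projection, so the projected system can be used directly in the filter update without carrying the landmark in the state.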

IV Experiments

In this section, we perform extensive experiments to evaluate the efficacy of the proposed method. First, we compare against classical visual-inertial odometry approaches, specifically two optimization-based methods, ORB-SLAM3 [26] and VINS-Mono [27], and a filtering-based method, OpenVINS [28], on the HKU dataset [29]. Next, we compare our method with event-inertial odometry methods [14, 30, 15, 31, 20, 19] on the DAVIS 240C dataset [32], which was collected with an event camera (with a resolution of $240 \times 180$) and its internal IMU. We also conduct ablation experiments on different decay kernels and feature tracking methods on the dataset of [20]. Both datasets [29, 20] were recorded with the DAVIS 346 event camera, which has a resolution of $346 \times 260$. Finally, we assess the time consumption of the system to evaluate its real-time performance. When a stereo event camera is available, the experiments use the left camera.

The experiments are carried out on a desktop computer equipped with an Intel Core i7-8700 CPU running Ubuntu 20.04 and ROS Noetic. Accuracy metrics for odometry evaluation consist of Mean Position Error (MPE, %, per 100 meters), Mean Yaw Error (MYE, deg/m), and Absolute Trajectory Error (ATE, m); the trajectories are aligned with the ground truth using SE(3) Umeyama alignment [33] before evaluation. To account for the differences between event cameras and to obtain better environmental textures, the coefficient $\gamma$ in Eq. (6) is set to 0.2 for the DAVIS 240C dataset and 0.1 for the other two datasets.
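For readers who want to reproduce the ATE metric, the following NumPy sketch performs a rigid (rotation plus translation, no scale) Umeyama-style alignment and then computes the error. Reporting ATE as a root-mean-square error over positions is an assumption made here, as are the function names; the text only states that SE(3) Umeyama alignment [33] is applied before evaluation.

```python
import numpy as np

def umeyama_se3(src, dst):
    """Rigid alignment: R, t minimising sum ||R @ src_i + t - dst_i||^2.

    src, dst: (N, 3) arrays of time-associated positions.
    """
    mu_s, mu_d = src.mean(axis=0), dst.mean(axis=0)
    cov = (dst - mu_d).T @ (src - mu_s) / len(src)   # cross-covariance
    U, _, Vt = np.linalg.svd(cov)
    S = np.eye(3)
    if np.linalg.det(U) * np.linalg.det(Vt) < 0:     # avoid a reflection
        S[2, 2] = -1.0
    R = U @ S @ Vt
    t = mu_d - R @ mu_s
    return R, t

def ate_rmse(est, gt):
    """ATE (RMSE over positions, an assumed convention) after SE(3) alignment."""
    R, t = umeyama_se3(est, gt)
    aligned = est @ R.T + t
    return float(np.sqrt(np.mean(np.sum((aligned - gt) ** 2, axis=1))))
```

An estimate that differs from the ground truth only by a rigid transform yields an ATE of zero under this definition, so the metric measures trajectory shape error rather than the choice of reference frame.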

Table I: MPE (%) of ORB-SLAM3 and the proposed method on the HKU dataset [29].

| Sequence | hku_agg_rota | hku_agg_small_flip | hku_agg_tran | hku_agg_walk | hku_dark_normal | hku_hdr_agg | hku_hdr_circle | hku_hdr_slow | hku_hdr_tran_rota |
|---|---|---|---|---|---|---|---|---|---|
| ORB-SLAM3 [26] | 0.711 | 1.895 | 0.841 | 1.389 | 0.695 | 0.623 | 1.451 | 0.635 | 0.971 |
| Ours | 0.276 | 0.807 | 0.211 | 0.350 | 0.524 | 0.271 | 0.714 | 0.430 | 0.496 |

Table II: MPE (%) comparison with event-inertial odometry methods on the DAVIS 240C dataset [32].

| Sequence | Length (m) | Zhu’s [14] | Rebecq’s [30] | Vidal’s [15] | Alzugaray’s [31] | Guan’s [20] | Dai’s [19] | Ours |
|---|---|---|---|---|---|---|---|---|
| boxes_6dof | 69.852 | 3.61 | 0.69 | 0.44 | 2.03 | 0.61 | 1.5 | 0.32 (0.02) |
| boxes_translation | 65.237 | 2.69 | 0.57 | 0.76 | 2.55 | 0.34 | 1.0 | 0.36 (0.01) |
| dynamic_6dof | 39.615 | 4.07 | 0.54 | 0.38 | 0.52 | 0.43 | 1.5 | 0.49 (0.05) |
| dynamic_translation | 30.068 | 1.90 | 0.47 | 0.59 | 1.32 | 0.26 | 0.9 | 0.59 (0.05) |
| hdr_boxes | 50.088 | 1.23 | 0.92 | 0.67 | 1.75 | 0.40 | 1.8 | 0.31 (0.02) |
| hdr_poster | 55.437 | 2.63 | 0.59 | 0.49 | 0.57 | 0.40 | 2.8 | 0.18 (0.02) |
| poster_6dof | 61.143 | 3.56 | 0.82 | 0.30 | 1.50 | 0.26 | 1.2 | 0.31 (0.03) |
| poster_translation | 49.265 | 0.94 | 0.89 | 0.15 | 1.34 | 0.40 | 1.9 | 0.23 (0.04) |
| Average | 52.588 | 2.58 | 0.69 | 0.47 | 1.45 | 0.39 | 1.58 | 0.35 (0.03) |

IV-A Odometry Accuracy Evaluation and Comparison

Comparison with VIO: We first compare the accuracy of three visual-inertial odometry methods with that of the proposed odometry to validate the advantage of event cameras in challenging environments. The results are presented in Tab. I. VINS-Mono [27] and OpenVINS [28] failed on all sequences of this dataset, while ORB-SLAM3 [26] (in monocular visual-inertial mode) shows a larger MPE than our method on all sequences, with an average MPE of 1.023. Our method performs consistently across all sequences, with an average MPE of 0.453. These results indicate that our event-based odometry is more accurate than conventional camera-based methods in environments with high dynamic range and aggressive motion.

Comparison with EIO: Next, we compare the accuracy of our method with other event-based approaches. The estimated and ground-truth trajectories are aligned using the [5-10] s subset. The results are presented in Tab. II. The proposed method achieves an average MPE of 0.35, corresponding to an error of 0.35 m per 100 m of motion. In Tab. II, our method attains the lowest MPE on three sequences (boxes_6dof, hdr_boxes, and hdr_poster) and the lowest average MPE, and shows comparable performance on the remaining sequences. The estimated trajectories of sequences hdr_boxes and hdr_poster are shown in Fig. 5. The figure shows that our odometry provides good pose estimates, although some deviation between the estimated trajectory and the ground truth remains. When the event camera moves slowly, the output events are not sufficient to reflect the texture of the environment, which inevitably leads to inadequate features and inaccurate tracking. On average, our approach estimates poses more accurately than the other event-inertial odometry methods. The adaptive decay-based time surface provides high-quality texture for feature extraction and tracking and, together with the proposed tracking method, improves odometry accuracy.

IV-B Decay Comparison and Parameter Setting

In this section, we analyze how different decay functions (exponential and adaptive decay) for time surface representations affect odometry accuracy. Specifically, we evaluate various time constants $\tau$ for the exponential decay kernel and compare them with the adaptive decay kernel (as shown in Tab. III). The experiments use the publicly available dataset of [20], recorded with a DAVIS 346 event camera.

Comparison of different decays: Tab. III shows that the choice of time constant affects the accuracy of the odometry. In general, a larger time constant keeps more information from older events but also introduces unwanted disturbances. Setting $\tau$ to 90 ms may result in coarser and overlapping edges, leading to lower precision. However, in sequences such as vicon_hdr1 and vicon_hdr2, a slightly larger time constant retains more information, and $\tau$ = 60 ms yields slightly higher accuracy than $\tau$ = 30 ms. The odometry with the exponential decay-based time surface achieves its best average accuracy when the time constant is 30 ms, but it is still prone to bold edges and trailing when the event camera motion suddenly becomes faster. The experimental results show that the odometry with the adaptive decay-based time surface achieves the lowest average ATE of 0.182 m. The adaptive decay, which adjusts the decay rate according to the dynamics of the event stream, facilitates the creation of high-quality time surface maps and provides better odometry accuracy than exponential decay.

Comparison of Different Thresholds $\epsilon$: The threshold $\epsilon$ provides a way to filter out events that contribute little to the texture and to reduce the time surface generation time. Following Eq. (8), $t_0$ is first estimated from the event activity to bound the time range of active events. If the event activity remains constant, a larger threshold uses events within a smaller time interval, which occasionally hinders the formation of the desired environmental textures. Conversely, a smaller threshold uses events within a larger time interval, enriching image information but introducing interference from old events. Fig. 6 depicts adaptive time surface maps with different threshold settings. A large threshold of 0.1 results in insufficient texture, while a small threshold of 0.01 incorporates older events that cause trailing effects. The threshold must therefore be set according to practical requirements to achieve good texture quality. A good threshold setting usually works for all sequences in a dataset and does not need to be set individually for each sequence. Note that the values of pixels containing active events are determined by the decay kernel and the active event, not by the threshold $\epsilon$.

Table III: ATE (m) on the dataset of [20] for exponential decay-based time surfaces (TS) with different time constants and adaptive decay-based time surfaces (ATS).

| Sequence | TS ($\tau$ = 30 ms) | TS ($\tau$ = 60 ms) | TS ($\tau$ = 90 ms) | ATS | ATS (T) |
|---|---|---|---|---|---|
| vicon_aggressive_hdr | 0.377 | 0.397 | 0.450 | 0.155 | 0.163 |
| vicon_dark1 | 0.248 | 0.294 | 0.251 | 0.124 | 0.111 |
| vicon_dark2 | 0.120 | 0.163 | 0.191 | 0.253 | 0.187 |
| vicon_darktolight1 | 0.273 | 0.309 | 0.359 | 0.177 | 0.219 |
| vicon_darktolight2 | 0.235 | 0.353 | 0.249 | 0.209 | 0.187 |
| vicon_hdr1 | 0.277 | 0.263 | 0.426 | 0.267 | 0.180 |
| vicon_hdr2 | 0.365 | 0.282 | 0.866 | 0.232 | 0.220 |
| vicon_hdr3 | 0.187 | 0.280 | 0.269 | 0.123 | 0.122 |
| vicon_hdr4 | 0.229 | 0.272 | 0.520 | 0.192 | 0.171 |
| vicon_lighttodark1 | 0.393 | 0.362 | 0.405 | 0.228 | 0.226 |
| vicon_lighttodark2 | 0.306 | 0.501 | 0.596 | 0.227 | 0.211 |
| Average | 0.274 | 0.316 | 0.417 | 0.198 | 0.182 |

IV-C Polarity-aware Feature Tracking Evaluation

Next, we investigate the effectiveness of polarity weighting and of the polarity-aware feature tracking process, as presented in Tab. III. Experimental results indicate some improvement when using polarity weighting, which achieves an average ATE of 0.210 m. Polarity weighting enhances the distinction between brighter and darker pixels in the time surface maps, particularly accentuating nearby edges. Despite these benefits, it also introduces challenges for feature tracking, mainly when the event camera’s motion direction changes abruptly, which causes shifts in event polarity and undermines the intensity constancy assumption. Our method incorporates the tracking results from time surfaces with inverted polarity, which partly mitigates tracking failures caused by abrupt changes in the direction of motion and thus improves accuracy.

IV-D Computational Efficiency

Finally, we analyze the time consumption of each operation in the proposed method, as outlined in Tab. IV. The analysis is conducted on a typical sequence, vicon_aggressive_hdr, which features aggressive motion and high dynamic range scenes. The reported results are averages over multiple tests. Generating an adaptive time surface map takes 5.4 ms on average, and the adaptive tracking module takes 6.7 ms. The total time required for the system to complete one update is 16 ms, indicating that it is capable of real-time performance.

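Table IV: Average time consumption (ms) of each operation on vicon_aggressive_hdr.

| ATS generation | Adaptive tracking | (label lost) | (label lost) | (label lost) | Total |
|---|---|---|---|---|---|
| 5.4 | 6.7 | 1.3 | 0.2 | 2.4 | 16.0 |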

V Conclusions and Future Work

Conventional cameras frequently experience motion blur during rapid movement and are constrained by a limited dynamic range. Event cameras, with novel dynamic vision sensors, offer potential solutions to these problems by tracking changes in pixel brightness. However, the asynchronous output of event cameras makes it challenging to exploit event information. We propose a monocular event-inertial odometry with an adaptive decay kernel-based time surface and an MSCKF back-end for state propagation and update. The adaptive decay highlights recent events according to the dynamic characteristics of the event stream and can also filter out inactive events. Polarity-weighted time surfaces suffer from polarity shifts when the motion direction changes abruptly, so we refine feature tracking by incorporating an additional polarity-inverted time surface map to improve robustness. Extensive experiments demonstrate the competitive accuracy of the proposed method. The number of events may decrease during slow motion, resulting in inadequate textures that make stable feature tracking difficult. In future work, we will continue to improve the robustness and accuracy of the system under such conditions.

References

- [1] Guillermo Gallego, Tobi Delbrück, Garrick Orchard, Chiara Bartolozzi, Brian Taba, Andrea Censi, Stefan Leutenegger, Andrew J. Davison, Jörg Conradt, Kostas Daniilidis and Davide Scaramuzza “Event-Based Vision: A Survey” In IEEE Transactions on Pattern Analysis and Machine Intelligence 44.1, 2022, pp. 154–180 DOI: 10.1109/TPAMI.2020.3008413

- [2] Tobi Delbruck “Frame-free dynamic digital vision” In Proceedings of Intl. Symp. on Secure-Life Electronics, Advanced Electronics for Quality Life and Society 1, 2008, pp. 21–26 Citeseer

- [3] Yi Zhou, Guillermo Gallego and Shaojie Shen “Event-Based Stereo Visual Odometry” In IEEE Transactions on Robotics 37.5, 2021, pp. 1433–1450 DOI: 10.1109/TRO.2021.3062252

- [4] Yi-Fan Zuo, Jiaqi Yang, Jiaben Chen, Xia Wang, Yifu Wang and Laurent Kneip “DEVO: Depth-Event Camera Visual Odometry in Challenging Conditions” In 2022 International Conference on Robotics and Automation (ICRA), 2022, pp. 2179–2185 DOI: 10.1109/ICRA46639.2022.9811805

- [5] Xavier Lagorce, Garrick Orchard, Francesco Galluppi, Bertram E. Shi and Ryad B. Benosman “HOTS: A Hierarchy of Event-Based Time-Surfaces for Pattern Recognition” In IEEE Transactions on Pattern Analysis and Machine Intelligence 39.7, 2017, pp. 1346–1359 DOI: 10.1109/TPAMI.2016.2574707

- [6] Urbano Miguel Nunes, Ryad Benosman and Sio-Hoi Ieng “Adaptive Global Decay Process for Event Cameras” In 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2023, pp. 9771–9780 DOI: 10.1109/CVPR52729.2023.00942

- [7] Beat Kueng, Elias Mueggler, Guillermo Gallego and Davide Scaramuzza “Low-latency visual odometry using event-based feature tracks” In 2016 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2016, pp. 16–23 DOI: 10.1109/IROS.2016.7758089

- [8] Hanme Kim, Stefan Leutenegger and Andrew J Davison “Real-time 3D reconstruction and 6-DoF tracking with an event camera” In Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, October 11-14, 2016, Proceedings, Part VI 14, 2016, pp. 349–364 Springer

- [9] Henri Rebecq, Timo Horstschaefer, Guillermo Gallego and Davide Scaramuzza “EVO: A Geometric Approach to Event-Based 6-DOF Parallel Tracking and Mapping in Real Time” In IEEE Robotics and Automation Letters 2.2, 2017, pp. 593–600 DOI: 10.1109/LRA.2016.2645143

- [10] Javier Hidalgo-Carrió, Guillermo Gallego and Davide Scaramuzza “Event-aided Direct Sparse Odometry” In 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2022, pp. 5771–5780 DOI: 10.1109/CVPR52688.2022.00569

- [11] Jiafeng Huang, Shengjie Zhao, Tianjun Zhang and Lin Zhang “MC-VEO: A Visual-Event Odometry With Accurate 6-DoF Motion Compensation” In IEEE Transactions on Intelligent Vehicles 9.1, 2024, pp. 1756–1767 DOI: 10.1109/TIV.2023.3323378

- [12] Roberto Pellerito, Marco Cannici, Daniel Gehrig, Joris Belhadj, Olivier Dubois-Matra, Massimo Casasco and Davide Scaramuzza “End-to-end Learned Visual Odometry with Events and Frames”, 2024 arXiv: https://arxiv.org/abs/2309.09947

- [13] Simon Klenk, Marvin Motzet, Lukas Koestler and Daniel Cremers “Deep Event Visual Odometry” In 2024 International Conference on 3D Vision (3DV), 2024, pp. 739–749 DOI: 10.1109/3DV62453.2024.00036

- [14] Alex Zihao Zhu, Nikolay Atanasov and Kostas Daniilidis “Event-Based Visual Inertial Odometry” In 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2017, pp. 5816–5824 DOI: 10.1109/CVPR.2017.616

- [15] Antoni Rosinol Vidal, Henri Rebecq, Timo Horstschaefer and Davide Scaramuzza “Ultimate SLAM? Combining Events, Images, and IMU for Robust Visual SLAM in HDR and High-Speed Scenarios” In IEEE Robotics and Automation Letters 3.2, 2018, pp. 994–1001 DOI: 10.1109/LRA.2018.2793357

- [16] Elias Mueggler, Guillermo Gallego, Henri Rebecq and Davide Scaramuzza “Continuous-Time Visual-Inertial Odometry for Event Cameras” In IEEE Transactions on Robotics 34.6, 2018, pp. 1425–1440 DOI: 10.1109/TRO.2018.2858287

- [17] Daniel Gehrig, Henri Rebecq, Guillermo Gallego and Davide Scaramuzza “EKLT: Asynchronous photometric feature tracking using events and frames” In International Journal of Computer Vision 128.3 Springer, 2020, pp. 601–618

- [18] Florian Mahlknecht, Daniel Gehrig, Jeremy Nash, Friedrich M. Rockenbauer, Benjamin Morrell, Jeff Delaune and Davide Scaramuzza “Exploring Event Camera-Based Odometry for Planetary Robots” In IEEE Robotics and Automation Letters 7.4, 2022, pp. 8651–8658 DOI: 10.1109/LRA.2022.3187826

- [19] Benny Dai, Cedric Le Gentil and Teresa Vidal-Calleja “A Tightly-Coupled Event-Inertial Odometry using Exponential Decay and Linear Preintegrated Measurements” In 2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2022, pp. 9475–9482 DOI: 10.1109/IROS47612.2022.9981249

- [20] Weipeng Guan and Peng Lu “Monocular Event Visual Inertial Odometry based on Event-corner using Sliding Windows Graph-based Optimization” In 2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2022, pp. 2438–2445 DOI: 10.1109/IROS47612.2022.9981970

- [21] Weipeng Guan, Peiyu Chen, Yuhan Xie and Peng Lu “PL-EVIO: Robust Monocular Event-Based Visual Inertial Odometry With Point and Line Features” In IEEE Transactions on Automation Science and Engineering, 2023, pp. 1–17 DOI: 10.1109/TASE.2023.3324365

- [22] Anastasios I. Mourikis and Stergios I. Roumeliotis “A Multi-State Constraint Kalman Filter for Vision-aided Inertial Navigation” In Proceedings 2007 IEEE International Conference on Robotics and Automation, 2007, pp. 3565–3572 DOI: 10.1109/ROBOT.2007.364024

- [23] Patrick Geneva, Kevin Eckenhoff, Woosik Lee, Yulin Yang and Guoquan Huang “OpenVINS: A Research Platform for Visual-Inertial Estimation” In 2020 IEEE International Conference on Robotics and Automation (ICRA), 2020, pp. 4666–4672 DOI: 10.1109/ICRA40945.2020.9196524

- [24] Edward Rosten and Tom Drummond “Machine learning for high-speed corner detection” In Computer Vision–ECCV 2006: 9th European Conference on Computer Vision, Graz, Austria, May 7-13, 2006. Proceedings, Part I 9, 2006, pp. 430–443 Springer

- [25] Bruce D Lucas and Takeo Kanade “An iterative image registration technique with an application to stereo vision” In IJCAI’81: 7th international joint conference on Artificial intelligence 2, 1981, pp. 674–679

- [26] Carlos Campos, Richard Elvira, Juan J. Gómez Rodríguez, José M. M. Montiel and Juan D. Tardós “ORB-SLAM3: An Accurate Open-Source Library for Visual, Visual–Inertial, and Multimap SLAM” In IEEE Transactions on Robotics 37.6, 2021, pp. 1874–1890 DOI: 10.1109/TRO.2021.3075644

- [27] Tong Qin, Peiliang Li and Shaojie Shen “VINS-Mono: A Robust and Versatile Monocular Visual-Inertial State Estimator” In IEEE Transactions on Robotics 34.4, 2018, pp. 1004–1020 DOI: 10.1109/TRO.2018.2853729

- [28] Patrick Geneva and Guoquan Huang “OpenVINS State Initialization: Details and Derivations”

- [29] Peiyu Chen, Weipeng Guan and Peng Lu “ESVIO: Event-Based Stereo Visual Inertial Odometry” In IEEE Robotics and Automation Letters 8.6, 2023, pp. 3661–3668 DOI: 10.1109/LRA.2023.3269950

- [30] Henri Rebecq, Timo Horstschaefer and Davide Scaramuzza “Real-time Visual-Inertial Odometry for Event Cameras using Keyframe-based Nonlinear Optimization” In British Machine Vision Conference (BMVC), 2017

- [31] Ignacio Alzugaray and Margarita Chli “Asynchronous Multi-Hypothesis Tracking of Features with Event Cameras” In 2019 International Conference on 3D Vision (3DV), 2019, pp. 269–278 DOI: 10.1109/3DV.2019.00038

- [32] Elias Mueggler, Henri Rebecq, Guillermo Gallego, Tobi Delbruck and Davide Scaramuzza “The event-camera dataset and simulator: Event-based data for pose estimation, visual odometry, and SLAM” In The International Journal of Robotics Research 36.2 SAGE Publications Sage UK: London, England, 2017, pp. 142–149

- [33] S. Umeyama “Least-squares estimation of transformation parameters between two point patterns” In IEEE Transactions on Pattern Analysis and Machine Intelligence 13.4, 1991, pp. 376–380 DOI: 10.1109/34.88573

- [34] Weipeng Guan, Peiyu Chen, Huibin Zhao, Yu Wang and Peng Lu “EVI-SAM: Robust, Real-Time, Tightly-Coupled Event–Visual–Inertial State Estimation and 3D Dense Mapping” In Advanced Intelligent Systems Wiley Online Library, 2024, pp. 2400243