Modularizing Deep Learning via Pairwise Learning With Kernels

Abstract

By redefining the conventional notions of layers, we present an alternative view on finitely wide, fully trainable deep neural networks as stacked linear models in feature spaces, leading to a kernel machine interpretation. Based on this construction, we then propose a provably optimal modular learning framework for classification that does not require between-module backpropagation. This modular approach brings new insights into the label requirement of deep learning: It leverages only implicit pairwise labels (weak supervision) when learning the hidden modules. When training the output module, on the other hand, it requires full supervision but achieves high label efficiency, needing as few as 10 randomly selected labeled examples (one from each class) to achieve 94.88% accuracy on CIFAR-10 using a ResNet-18 backbone. Moreover, modular training enables fully modularized deep learning workflows, which then simplify the design and implementation of pipelines and improve the maintainability and reusability of models. To showcase the advantages of such a modularized workflow, we describe a simple yet reliable method for estimating reusability of pre-trained modules as well as task transferability in a transfer learning setting. At practically no computation overhead, it precisely described the task space structure of 15 binary classification tasks from CIFAR-10.

Index Terms:

kernel methods, deep learning, neural networks, modular training, task transferabilityI Introduction

Understanding the connections between neural networks (NNs) and kernel methods has been a long-standing goal in machine learning research [1]. Recently, there has been a resurgence of interest in this direction, leading to important insights and powerful algorithms [2, 3, 4, 5, 6, 7, 8]. The established connections require highly nontrivial assumptions: The equivalence between a particular kernel method and a family of NNs only exists in infinitely wide NNs and/or in expectation of random NNs (only weakly-trained [7]). Moreover, existing algorithms using kernels inspired by NNs typically have prohibitively high computational complexity (super-quadratic in sample size, to be exact [6]).

In this paper, we propose a simple method to link fully trainable, finitely wide NNs to kernel machines (KMs), i.e., linear models in feature spaces (reproducing kernel Hilbert spaces (RKHSs)) (Fig. 1). Specifically, as opposed to the existing literature, where the nonlinearity is considered the last component of an NN layer or module (composition of layers), we consider it to be the first component of the next layer. Then layers in deep NN can be identified as linear models in feature spaces connected by potentially nonlinear feature maps. These linear models can be proven to be KMs with kernels induced by the connecting feature maps. The benefits of our construction are three-fold: First, the connections are established for fully trainable and finitely wide NNs, and are exact in the sense that the trainable parameters of the NN layers are exactly those of the corresponding KMs. Second, our method works with any NN layer, fully-connected or convolutional, with minimal adjustments. Last but not least, these NN-equivalent KMs run in linear time, contrasting the super-quadratic runtime of existing algorithms.

We then turn to another important yet understudied problem: How can we modularize deep learning (DL)? In engineering and software engineering in particular, modularization is the core of any scalable workflow. Dividing the design into modules, optimizing them individually, and then wiring them together simplifies implementation via enabling unit tests, and enhances maintainability and reusability. There is currently no reliable way to completely modularize a deep NN, however, since there is no modular learning approach that provably matches the performance of end-to-end training. As a result, one is forced to design and optimize the entire model as a whole, configuring hundreds of hyperparameters simultaneously. When performance is unsatisfying, it is practically impossible to trace the source of the problem to a particular design choice. Even if one succeeded in training a good model, reusing that model for a new task is highly nontrivial: Which part of the model is more reusable for what task?

To enable fully modularized DL, we develop a provably optimal modular training framework for deep NNs in classification that trains each module separately, yet still finds the overall loss function minimizer as an end-to-end approach would. Focusing on the two-module case, we prove that the training of input and output modules can be decoupled by leveraging pairwise kernel evaluations on training examples from distinct classes. This kernel is defined by the output module’s nonlinearity. It suffices to have the input module optimize a proxy objective function that does not involve the trainable parameters of the output one. This removes the need for error backpropagation between modules. On MNIST and CIFAR-10, our modular approach compares favorably against end-to-end training in terms of accuracy.

Our modular learning utilizes labels more efficiently than the existing end-to-end training paradigm. Specifically, training of the input module involves only pairs of examples from distinct classes with no need for knowing the actual classes. This is a weaker form of supervision than knowing exactly which class each example belongs to as required by backpropagation. And we empirically show that the output module, which requires full supervision in training, is highly label-efficient, achieving accuracy on CIFAR-10 with randomly selected labeled examples (one from each class) using a ResNet-18 [9] backbone ( when using all labels). Overall, our modular training can leverage a weaker form of supervision yet still produce models as performant as those obtained from end-to-end backpropagation, indicating that the existing form of supervision and backpropagation is not efficient enough. This can potentially enable less costly procedures for acquiring labeled data and more powerful un/semi-supervised learning algorithms.

To showcase one of the main benefits of modularization — module reuse with confidence — we demonstrate that one can easily and reliably quantify the reusability of a pre-trained module on a new target task with our proxy objective function. This provides a fast yet effective solution to an important practical issue in transfer learning. Moreover, this method can be extended to measure task transferability, a central problem in transfer learning, continual/lifelong learning, and multi-task learning [10]. Unlike many existing methods, our approach requires no training, is task agnostic, flexible, and completely data-driven. Nevertheless, it accurately described the task space structure on binary classification tasks derived from CIFAR-10 using only a small amount of labeled data.

To summarize, our main contributions are as follows.

-

•

We present a simple method that establishes connections between fully trainable, finitely wide NNs and KMs, contrasting earlier efforts that always assume random networks or require infinite widths. (Sec. III)

-

•

We present a modular training framework, opening up new possibilities for modularized DL. Focusing on the two-module case, we provide an optimality proof for our learning approach, guaranteeing that it finds the loss function minimizer without between-module backpropagation. As empirical validation, we demonstrate its strong performance on MNIST and CIFAR-10. (Sec. IV)

-

•

Our modular learning approach sheds new light on the label requirement of DL: It relies almost only on implicitly-labeled example pairs, achieving state-of-the-art accuracy on CIFAR-10 with a single randomly chosen fully-labeled example per class. (Sec. VII-D)

-

•

We propose a simple but effective method to quantify module reusability and task transferability using components from our modular training framework, demonstrating that modularized DL enables solutions to important issues in transfer learning, lifelong learning, etc. (Sec. V)

II Notations and Background

II-A Notations

Throughout, we use bold capital letters for matrices and tensors, bold lower-case letters for vectors, and unbold letters for scalars. denotes the component of vector . And denotes the column of matrix . For a 3D tensor , denotes the matrix indexing along the third dimension from the left (or the channel). We use to denote the inner product in an inner product space . And the subscript shall be omitted if doing so causes no confusion. For functions, we use capital letters to denote vector/matrix/tensor-valued functions, and lower-case letters are reserved specifically for scalar-valued ones. In a network, we call a composition of an arbitrary number of layers as a module for convenience.

Since we shall propose an alternative view on NNs that redefine the network layers and also modules, it is helpful to introduce notations to distinguish our view and the conventional one. We use letter (or , depending on if this layer or module is a scalar-valued function) with a numeric subscript to refer to the network layer or module under the conventional view and letter (or ) the layer or module (of the same model) under our view. Example usages can be found in Fig. 1 and Sec. III.

II-B Kernels and Kernel Machines

Kernel machines can be considered as linear models in feature spaces, the mappings into which are potentially nonlinear [11]. Consider a feature map , where is a (real) Hilbert space, i.e., an inner product space over the real numbers that is complete in the metric induced by the inner product, a kernel machine is a linear model in this feature space :

| (1) |

where are its trainable weights and bias, respectively. For certain feature spaces (called RKHSs), one can define a bivariate “kernel” function that is symmetric and positive semidefinite via

| (2) |

This definition is often used as an identity and is referred to as the “kernel trick” in some more modern texts [11].111The correspondence between and holds true in both directions and is sometimes stated the other way around. Namely, per Moore-Aronszajn Theorem, for every symmetric, continuous, positive semidefinite bivariate function that maps into , one can find an RKHS such that , where the feature map is defined through as [12].

An RKHS, different from a general Hilbert space, possesses a “canonical coordinate system” induced by the kernel [13] (in addition to the regular coordinate system induced by its basis) that enables one to concisely express distance between feature vectors using kernel values, thanks to the reproducing property [14]. Concretely, we have

| (3) |

One can use the “kernel trick” to implement highly nontrivial feature maps (thus highly complicated functions in the input space). For feature maps that are not implementable, e.g., when is an infinite series, one can approximate the KM with a set of “centers” as follows:

| (4) |

Assuming a satisfying Eq. 2 can be found and using the “kernel trick”, the right hand side is equal to

| (5) |

Now, the learnable parameters become the ’s anb .

This approximation changes the computational complexity of evaluating the kernel machine on a single example from , where is the dimension of the feature space and can be infinite for certain kernels, to , where is the dimension of the input space. In practice, the runtime for a sample is quadratic in sample size since is typically on the same order as the sample size. There exists acceleration methods that reduce the complexity via further approximation in this case (e.g., [15]), yet the compromise in performance can be nonnegligible in practice especially when the input space dimension is large.

III Revealing the Hidden Kernel Machines in Neural Networks

In this section, we present a method revealing the hidden KMs in NNs. The idea is simple: Instead of considering the nonlinearity to be the last component of a layer or a module (call it the layer or module here for convenience), we consider it to be the first component of the next layer, i.e., layer . After potentially repeating this process for layer to get rid of its trailing nonlinearity, we turned layer into a layer of linear models in feature spaces. These linear models can be shown to be KMs (Fig. 1). We first present the method for fully-connected networks and then extend to CNNs [16]. Contrasting existing works (e.g., [7]), the extension requires minimal adjustments only.

III-A The Methodology

III-A1 Fully-Connected Neural Networks

We use a one-hidden-layer NN as an illustrative example. The idea scales easily to deeper models. Note that we assume the output layer has a single neuron. When the output layer has multiple neurons, one can apply the same analysis to each of these output neurons. Finally, we assume the output layer to be linear without loss of generality since if there is a trailing nonlinearity at the output, it can be viewed as a part of the loss function instead of a part of the network.

Considering an input vector , the model is given as

| (6) | |||

| (7) |

where is an elementwise nonlinearity such as ReLU [17] with for any and some , the input layer, the output layer, the final model output, and and the learnable weights of the NN model.

There are different ways of dividing this particular model into “layers”. Indeed, without changing the input-output mapping, we can redefine the layers as follows.

| (8) |

where and is the canonical inner product of for , i.e., the dot product. In other words, we treat as the new input layer and absorb the nonlinearity into the old output layer, forming the new output layer such that each layer is now one or multiple parallel linear models (models linear in their weights, not necessarily in their inputs) (Fig. 1).

Then one-hidden layer NN can be interpreted as a composition of KMs with finite-dimensional RKHSs. Using Eq. 2, the linear models on the input layer are simply KMs with feature maps being the identity map on , inducing the identity kernel, i.e., . On the other hand, the linear model on the output layer, i.e., , can be easily identified as a KM with feature map being , (and together with the input layer) inducing kernel .

III-A2 Convolutional Neural Networks

The above method for fully-connected networks can be extended to CNNs as follows. We discuss here simple 2D convolutional networks and the same idea generalizes to more complicated models easily.

Similar to the fully-connected case, we first absorb the trailing elementwise nonlinearity of each layer or module of interest into the next layer. Suppose the activation tensor of a given layer or module is , then each channel of the next layer is a matrix of KMs sharing the same weights with the KM having feature map: , where , denotes the operator that returns a vectorized receptive field centered at a specific location (depending on ) upon receiving a matrix, and denotes vector concatenation. This is illustrated in Fig. 2.

There are two main differences compared to the fully-connected case. First, in the convolutional case, the KMs on each channel in a given layer share the same weights whereas in the fully-connected case, there is no such restriction. Second, these KMs have distinct kernel functions, unlike in the fully-connected case where they share kernels. These observations allow for a new perspective in interpreting CNNs. Indeed, the fact that each convolutional layer is essentially KMs using distinct kernels but sharing weights suggests that it can be viewed as an instantiation of the multiple kernel learning framework that has been studied in the kernel method literature for decades [18]. However here the composition is different because it is an embedding of functions.

III-B Implementable Feature Maps and Linear Runtime

Note that, for common NNs, the kernels involved in the above methods have implementable feature maps with feature space dimensions being equal to the widths of the corresponding NN layers (so they are always finite). Therefore, one no longer needs to rely on the kernel trick and approximate the kernel machines through pairwise evaluations on a set of centers — one can simply use the explicit linear model representation ! The major advantage is that the computational complexity of running the model on a single data example becomes , where is the size of the corresponding NN layer (or equivalently, the size of ). This reduces the runtime over a set of data from the usual super-quadratic to linear in sample size, making the model much more practical.

IV Provably Optimal Modular Learning

Constructing a unified model class that encompasses both NNs and KMs yields immediately practical implications. Namely, existing algorithms and results for KMs can now be directly applied to NNs and vice versa. Specifically, one may expect the theory behind KMs (see, e.g., [19, 14, 20]) to significantly enrich our understanding of NNs and enable novel algorithms.

In this section, we propose a provably optimal modular training framework for NNs in classification, which enables fully modularized DL workflows. We focus on the two-module case. The idea can be easily generalized to enable modular training with more than two modules by analyzing one pair of modules at a time. We leave the corresponding optimality proofs as a future work. Applying this framework to NNs essentially relies on viewing the output module as a KM and utilizing its linearity in an RKHS and properties of the RKHS. This demonstrates how the interplay of NN and KM theories can produce useful results.

IV-A Set-Up, Goal, and an Idea

Suppose we have a deep model consisting of two modules and an objective function , where is a training set . can be compositions of arbitrary layers.

A modular learning algorithm trains , freezes it afterwards, then trains . And the goal is that after wiring together the two trained modules, the overall model minimizes .

For a given , define

| (9) |

Clearly, to obtain an that minimizes , the goal when training can be to find an that is in . Then one can simply train to minimize and the resulting minimizer will satisfy . And if we can characterize independently of the trainable parameters of , the training of can be decoupled from that of .

IV-B Main Result

Earlier we have shown how to redefine NN layers such that they become KMs, but we have not yet demonstrated any practical advantage of such a representation. In this section, we show that under this KM view on the output module, the optimal input module can be described independently of the output module, which, as stated above, is the core idea motivating our modular learning algorithm. For simplicity, we discuss only binary classification. The result easily extend to classification with more classes.

Concretely, we present a result stating that if is a single KM with kernel , can be characterized independently of the trainable parameters of via pairwise evaluations of the kernel on training data from distinct classes. The proof of the following theorem is given in the Appendix.

Theorem IV.1.

Let , be given and consider , where are free parameters and is a given mapping into a real inner product space with for all and some fixed .222Throughout, we consider the natural norm induced by the inner product, i.e., . Let be the set of ’s in such that . And let be the set of ’s in such that . Suppose the objective function admits the following form:

| (10) |

where , are all real-valued with nondecreasing and nonincreasing.

For an , let be in . If , satisfies

| (11) |

then

| (12) |

Further, if the infimum of is attained in and equals , then Eq. 11 is equivalent to

| (15) |

Interpreting a two-module classifier as learning a new representation of the given data on which will carry out the classification, the intuition behind our result can be explained as follows. Given some data, its “optimal” representation for a linear model to classify should be the one where examples from distinct classes are located as distant from each other as possible. Thus, the optimal should be the one that produces this optimal representation. And since our is a linear model in an RKHS feature space and because distance in an RKHS can be expressed via evaluations of its reproducing kernel, the optimal can then be fully described with only pairwise kernel evaluations over the training data, i.e., for being training examples from different classes. In other words, an RKHS provides a useful “canonical coordinate system” [13] that enables a concise definition of the optimal input module for any linear classifier in that RKHS using only kernel evaluations.

IV-C Applicability of the Main Result

The earlier Theorem imposes assumptions on the network architecture and the classification objective function. We now show that these assumptions are satisfied in many standard classification set-ups.

IV-C1 A Two-Module View on Neural Networks

Most popular networks, including the ResNet family [9], has the following representation:

| (16) |

where is a output linear layer: and is a composition of arbitrary previous layers ending with a nonlinearity . When the model also uses a nonlinearity on top of the output linear layer, one may absorb the nonlinearity into the loss function such that the model would still assume the aforementioned representation.

Considering the ending nonlinearity of as the beginning component of the output layer, we can view the output layer as the KM output module and apply Theorem IV.1. To be specific, write for some , we can rewrite the model such that it satisfies the condition of Theorem IV.1:

| (17) | |||

| (18) |

Note that the assumption that is fixed for all may require that one normalizes the activation vector/matrix/tensor in practice, which is the only modification one has to make for a standard NN to satisfy the conditions of the theorem.

IV-C2 Loss Functions

In terms of loss functions, many common ones including softmax cross-entropy (two-class version), any monotonic nonlinearity mean squared error, and hinge loss, admit the required representation. We provide details in the Appendix.

IV-D From Theory to Algorithm

To convert the theoretical result into an implementable learning algorithm for classification (with potentially more than two classes), one may proceed as follows. Let an NN be given, where is a linear layer (absorb the trailing nonlinearity into the loss function if necessary) and is a composition of arbitrary layers followed by nonlinearity . Suppose the activation vector of is normalized such that it is a unit vector by (elementwise) dividing the activation vector by its norm. Also let a loss function be given and suppose it (or its two-class analog) satisfies the requirements of Theorem IV.1. Define kernel . Determine based on . Some examples include: for ReLU and sigmoid; for tanh. Denote as . The training consists of two stages: Training and training (without touching ).

Training . Given a batch of training data and let (for negative) denote all pairs of indices such that and (for positive) denote all pairs of indices with . Train to maximize one of the following proxy objective functions.

-

•

Alignment (negative only) (AL-NEO):

(19) -

•

Contrastive (negative only) (CTS-NEO):

(20) -

•

Negative Mean Squared Error (negative only) (NMSE-NEO):

(21)

All of the above proxy objectives can be shown to learn an that satisfies the optimality condition required by Theorem IV.1.

Note that in cases where some of these proxies are undefined (for example, when ), we may train to maximize the following alternative proxy objectives instead assuming is known, where we define to be if or if and if otherwise. Compared to the previous proxies, these are applicable to all ’s and impose a stronger constraint on learning. Specifically, in addition to controlling the inter-class pairs, these proxies force intra-class pairs to share representations.

-

•

Alignment (AL): The kernel alignment [21] between the kernel matrix formed by and on the given data.

-

•

Upper Triangle Alignment (UTAL): Same as AL, except only the upper triangles minus the main diagonals of the two matrices are considered. This can be considered a refined version of the raw alignment.

-

•

Contrastive (CTS):

(22) -

•

Negative Mean Squared Error (NMSE):

(23)

Empirically, we found that alignment and squared error typically produced better results than the contrastive ones, which is potentially due to how the exponential term involved in the contrastive objectives made the range of ideal learning rates to be smaller.

Training . Now suppose has been trained and frozen at , we simply train to minimize the overall loss function . This process is illustrated in Fig. 3.

V A Method for Task Transferability Estimation

In this section, we demonstrate the improved module reusability of modularized DL. Let a source task be defined as one that we have already solved and a target task be one that we are interested in solving. Specifically, we consider the following practical issue in transfer learning: Assume one is given a set of input modules pre-trained on some source tasks and a target task to be solved by training an output module on top of a frozen input module. Which input module will result in the most performant network on the target task?

Underlying this practical problem is a theoretical issue that is central to many important research domains including transfer learning, continual/lifelong learning, meta learning, and multi-task learning: Given a set of tasks, how to effectively model the task space structure in terms of the transferability between task pairs, i.e., how helpful knowledge about a source task is for solving a target task [22, 23, 10, 24].

We propose the following solution to the module reusability (and also task transferability) problem. Let a target task be characterized by the loss function , where is a loss function assuming the representation in Theorem IV.1 and is some training data [10]. Our modular learning framework suggests that we can measure the goodness of these pre-trained ’s for this target task by measuring , where is a proxy objective. This is based on the fact that the that maximizes the proxy objective constitutes the input module of a minimizer for . Therefore, one can simply rank the pre-trained ’s in terms of how well they maximize and select the maximizer. Since a well-trained input module must encode useful information for the source task, this procedure can also fully describe the task space structure of the given tasks. The benefit is that this procedure requires no training. Indeed, one only needs to run the given modules on potentially a subset of . Moreover, this method is task agnostic, flexible, and completely data-dependent.

VI Related Work

VI-A Connecting Neural Networks With Kernel Methods

While some works establish connections via exactly matching one architecture to the other, others do so from a probabilistic perspective by studying large-sample behavior in the infinite layer widths limit and/or taking the expectation over the network parameters. The former line of research, to which this work belongs, often yields more direct and practical results since the theories operate under much milder assumptions.

VI-A1 Exact Equivalences

In [19], a family of kernels were defined to mimic single-hidden-layer MLPs. The resulting KMs bear the same mathematical formulations as the corresponding NNs with the constraint that the input layer weights of the NNs are fixed. The authors of [26] modified these kernels to allow the KMs to correspond to fully-trainable single-hidden-layer MLPs. Their construction can be viewed as a special case of ours. Nevertheless, they presented their work as another way to train MLPs without pointing out the connections with KMs, i.e., their MLPs are in fact also KMs. The input and output layers are trained alternately, with the former learning to minimize the VC dimension [27] of the latter while the latter learning to classify. An optimality guarantee of the training was hinted. In contrast, we extended their theoretical framework, explicitly established the connections between NNs and KMs, and proposed a fully modular training approach with proved strong optimality guarantee.

VI-A2 Equivalences in Infinite Widths and/or in Expectation

That single-hidden-layer MLPs are Gaussian processes in the infinite width limit and in expectation of random input layer has been known at least since [1]. [2] generalized the classic result to deeper MLPs. [5] defined a family of “arc-cosine” kernels to imitate the computations performed by infinitely wide networks in expectation. [4] proposed kernels that are equivalent to expectations of finite-widths random networks. [7] presented exact computations of some kernels, using which the kernel regression models can be shown to be the limit (in widths and training time) of fully-trainable, infinitely wide fully-connected networks trained with gradient descent. In comparison, our work established that NNs can be directly viewed as KMs, requiring neither infinite widths nor taking expectation over random parameters.

VI-B Modularized Deep Learning

NNs were developed with inspiration from human brain structures and functions [28]. The human brain itself is modular in a hierarchical manner, as the learning process always occurs in a very localized subset of highly inter-connected nodes which are relatively sparsely connected to nodes in other modules [29, 30]. That is to say that the human brain is organized as functional, sparsely connected subunits [31].

Many existing works in machine learning can be analyzed from the perspective of modularization. An old example is the mixture of experts [32, 33] which uses a gating function to enforce each expert in a committee of networks to solve a distinct group of training cases. Another recent example is the generative adversarial networks (GANs) [34]. Typical GANs have two competing neural networks that are essentially decoupled in functionality and can be viewed as two modules. In [35], the authors proposed an a posteriori method that analyzes a trained network as modules in order to extract useful information. Most works in this direction, however, achieved only partial modularization due to their dependence of end-to-end optimization.

The authors of [36] pioneered the idea of greedily learn the architecture of an NN, achieving full modularization. In their work, each new node is added to maximize the correlation between its output and the residual error. Several works attempted to remove the need for end-to-end backpropagation via approximating gradient signals locally at each layer or each node [37, 38, 39, 40, 41]. In [42], a backpropagation-free deep architecture based on decision trees was proposed. In [43], the authors proposed to learn the hidden layers with unsupervised contrastive learning, decoupling their training from that of the output layer. Compared to these existing methods that enable full modularization, our method is simple to implement and provide strong optimality guarantee. A similar provably optimal modular training framework was proposed in [44]. However, their optimality result was less general than ours and their framework required that the NN be modified by substituting certain neurons with KMs using classical kernels that have quadratic runtime.

VI-C Task Transferability

Describing the task space structure via measuring the transferability between tasks, i.e., estimating to what extent representations learned from one task can help learning another, is a central problem in transfer learning, multi-task learning, meta learning, continual/lifelong learning, etc [22, 23, 10, 24].

Information-theoretic measures have been proven useful in quantifying task transferability. Early task-relatedness measures include the -relatedness [45] and the -distance [46]. -divergence [47] and Wasserstein distance [48] have received increasing attention in recent years. Specifically, [49] applied -divergence in natural language processing for text classification, whereas [50] used Wasserstein distance to estimate the similarity of linear parameters instead of the data generation distributions. Along this line of research, some more recent methods include H-score [51] and the Bregman-correntropy conditional divergence [52], the latter of which used the correntropy functional [53] and the Bregman matrix divergence [54] to quantify divergence between mappings.

Model-based methods, i.e., methods that leverage trained models to extract knowledge about tasks, are more closely related to our proposed method. [10] estimated task transferability via the negative conditional entropy between their training label sequences, assuming the two tasks share the same input data examples. The proposed method assumed the existence of an optimal trained source model but did not actually use a trained model in the estimation. [24] removed the input-sharing assumption. Both works assumed that the source and the target tasks use the cross-entropy loss. Our method works, on the other hand, asserts mild assumptions on the choice of loss function only. Taskonomy [22] estimated the transferability of a pair of tasks via the transfer performance from the tasks to a third reference task. Task2Vec [23] tuned a single “probe” network on all target tasks and extracted task-characterizing information via the parameters of this network. Both Taskonomy and Task2Vec required training models on target tasks to be able to extract task information. In contrast, our method does not require any training on target tasks.

All of the mentioned model-based methods have their limiting assumptions. The main assumption of our method, required by our optimality guarantee, is that the output module (classifier) that will be tuned on top of the transferred component admits a particular form as described in Theorem IV.1. Essentially, it is required to be a single-layer NN. It is worth noting that this set-up is common in practice. We summarize the properties of these related methods in Table I.

| Method | Training | Test Runtime | Main Assumption |

|---|---|---|---|

| TASK2VEC [23] | ✓ | a reference model | |

| Taskonomy [22] | ✓ | a third reference task | |

| NCE [10] | ✗ | tasks share input | |

| LEEP [24] | ✗ | cross-entropy loss | |

| Ours | ✗ | form of classifier |

VII Experiments

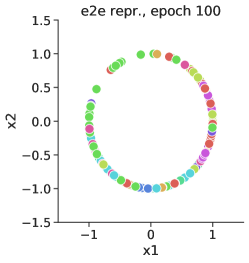

VII-A Sanity Check: Modular Training Results in Identical Learning Dynamics As End-to-End

In this section, we attempt to answer the important question: Do end-to-end and the proposed modular training, when restricted to the architecturally identical hidden modules, drive the underlying modules to functions that are the same in terms of minimizing the overall loss function? Clearly, a positive answer would verify empirically the optimality of the modular approach.

We now test this hypothesis with toy data. The set up is as follows. We generate 1000 32-dimensional random input and assign them random labels from 10 classes. The underlying network consists two modules. The input module is a fully-connected (fc) layer followed by ReLU nonlinearity and another fc layer. The output module is a fc layer. The two modules are linked by a tanh nonlinearity (output normalized to unit vector). The overall loss is the cross-entropy loss. And for modular training, the proxy objective is CTS-NEO. We visualize the activations from the tanh nonlinearity as an indicator of the behavior of the hidden layers under training.

From Fig. 4, we see that the underlying input modules are indeed driven to the same functions (when restricted to the training data and in terms of minimizing the overall loss) by both modular and end-to-end in the limit of training time going to infinity. This confirms the optimality of our proposed method (albeit in a simplified set-up) and allows us to modularize learning with confidence.

VII-B Sanity Check: Proxy Objectives Align Well With Accuracy

We now extend the verification of optimality from the simplified set-up in the previous section to a more practical setting. Recall that we have characterized our proxy objective as function of the input module whose maximizers constitute parts of the overall loss minimizers and we have proposed to train the input module to maximize the proxy objective.

Ideally, however, a proxy objective for input module should satisfy: The overall accuracy is a function of solely the proxy value and the output module and as a function of the proxy value, the overall accuracy is strictly increasing.333This type of results is reminiscent of the “ideal bounds” for representation learning sought for in, e.g., [55]. We now demonstrate empirically that the proxies we proposed enjoy this property.

The set-up is as follows. We train a LeNet-5 as two modules on MNIST with as the input module, and as the output one. The input module is trained with a number of different epochs so as to achieve different proxy values. And for each epoch, we freeze the input module and train output module to minimize the overall cross-entropy until it converges. Mathematically, suppose we froze input module at and we denote the proxy as and overall accuracy as , we are visualizing vs. , where is the output module.

Fig. 5 shows that the overall accuracy, as a function of the proxy value, is indeed approximately increasing. Further, this positive correlation becomes near perfect in high-accuracy regime, which agrees with our theoretical results. To summarize, we have extended our theoretical guarantees and empirically verified that maximizing the proposed proxy objectives effectively learns input modules that are optimal in terms of maximizing the overall accuracy, rendering end-to-end training unnecessary.

VII-C Accuracy on MNIST and CIFAR-10

We now present the main results on MNIST and CIFAR-10 demonstrating the effectiveness of our modular learning method. To facilitate fair comparisons, end-to-end and modular training operate on the same backbone network. For all results, we used stochastic gradient descent as the optimizer with batch size . For each module in the modular method as well as the end-to-end baseline, we trained with annealing learning rates (, each for epochs). The momentum was set to throughout. For data preprocessing, we used simple mean subtraction followed by division by standard deviation. On CIFAR-10, we used the standard data augmentation pipeline from [9], i.e., random flipping and clipping with the exact parameters specified therein. In modular training, the models were trained as two modules, with the output layer alone as the output module and everything else as the input module as specified in Sec. IV-C1. We normalized the activation vector right before the output layer of each model to a unit vector such that the equal-norm condition required by Theorem IV.1 is satisfied. We did not observe a significant performance difference after this normalization.

From Table II and III, we see that on three different backbone networks and both MNIST and CIFAR-10, our modular learning compares favorably against end-to-end. Since under two-module modular training, the ResNets can be viewed as KMs with adaptive kernels, we also compared performance with other NN-inspired kernel methods in the literature. In Table III, the ResNet KMs clearly outperformed other kernel methods by a considerable margin.

| Model | Training | Obj. Fn. | Acc. (%) |

| LeNet-5 (ReLU) | e2e | XE | 99.33 |

| LeNet-5 (tanh) | e2e | XE | 99.32 |

| LeNet-5 (ReLU) | mdlr | AL/XE | 99.35 |

| LeNet-5 (ReLU) | mdlr | UTAL/XE | 99.42 |

| LeNet-5 (ReLU) | mdlr | MSE/XE | 99.36 |

| LeNet-5 (tanh) | mdlr | AL(-NEO)/XE | 99.11 (99.19) |

| LeNet-5 (tanh) | mdlr | UTAL(-NEO)/XE | 99.21 (99.11) |

| LeNet-5 (tanh) | mdlr | MSE(-NEO)/XE | 99.27 (99.23) |

| LeNet-5 (tanh) | mdlr | CTS(-NEO)/XE | 99.16 (99.16) |

| Model | Data Aug. | Training | Obj. Fn. | Acc. (%) |

| ResNet-18 | flip & clip | e2e | XE | 94.91 |

| ResNet-152 | flip & clip | e2e | XE | 95.87 |

| ResNet-18 | flip & clip | mdlr | AL/XE | 94.93 |

| ResNet-152 | flip & clip | mdlr | AL/XE | 95.73 |

| CKN [56] | none | 82.18 | ||

| CNTK [8] | flip | 81.40 | ||

| CNTK∗ [8] | flip | 88.36 | ||

| CNN-GP [8] | flip | 82.20 | ||

| CNN-GP∗ [8] | flip | 88.92 | ||

| NKWT∗ [4] | flip | 89.80 |

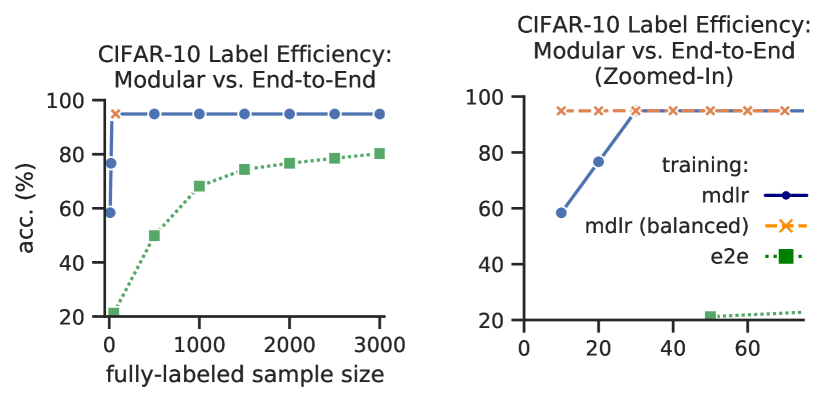

VII-D Label Efficiency of Modular Deep Learning

Our modular training needs only implicit labels (weak supervision) for training the latent modules. Full supervision is only needed for the output module. And we argue that in fact, the modular training of output module requires a smaller number of fully-labeled data than end-to-end training.

Intuitively, the input module in a deep architecture can be understood as learning a new representation of the given data with which the output module’s classification task is simplified. A simple way to quantify the difficulty of a learning task is through its sample complexity, which, when put in simple terms, refers to the number of labeled examples needed for a given model and a training algorithm to achieve a certain level of test accuracy [11].

In a modular training setting, one can decouple the training of input and output modules and then it would make sense to discuss the sample complexity of the two modules individually. The output modules should require fewer labeled data examples to train since its task has been simplified when the input one has been well-trained. We now observe that this is indeed the case.

We trained ResNet-18 on CIFAR-10 with end-to-end and our modular approach. The input module was trained with the full training set in the modular method, but again, this only requires implicit pairwise labels. We now compare the need for fully-labeled data between the two training methods.

With the full training set of labeled examples, the modular and end-to-end model achieved and test accuracy, respectively. From Fig. 6, we see that while the end-to-end model struggled in the label-scarce regime, achieving barely over accuracy with fully-labeled examples, the modular model consistently achieved strong performance, achieving accuracy with randomly chosen fully-labeled examples for training. In fact, if we ensure that there is at least one example from each class in the training data, the modular approach needed as few as randomly chosen examples to achieve accuracy, that is, a single randomly chosen example per class.444The fact that the modular model underperformed at and labels is likely caused by that there were some classes missing in the training data that we randomly selected. Specifically, classes were missing in the -example training data, and in the -example data.

These observations suggest that our modular training method for classification can almost completely rely on implicit pairwise labels and still produce highly performant models, which suggests new paradigms for obtaining labeled data that can potentially be less costly than the existing ones. This also indicates that the current form of strong supervision, i.e., full labels on individual data examples, relied on by existing end-to-end training, is not efficient enough. In particular, we have shown that by leveraging pairwise information in the RKHS, implicit labels in the form of whether pairs of examples are from the same class are just as informative. This may have further implications for un/semi-supervised learning.

VII-D1 Connections With Un/Semi-Supervised Learning

Whenever the training batch size is less than the size of the entire training set, we are using strictly less supervision than using full labels.555This is because if one does not cache information over batches, it is impossible to recover the labels for the entire training set if one is only given pairwise relationships on each batch. The fact that our learning method still produces equally performant models suggests that we are using supervision more efficiently.

Existing semi-supervised methods also aim at utilizing supervision more efficiently. They still rely on end-to-end training and use full labels, but achieve enhanced efficiency mostly through data augmentation. While our results are not directly comparable to those from existing methods (these methods do not work in the same setting as ours: They use fewer full labels while we use mostly implicit labels), as a reference point, the state-of-the-art semi-supervised algorithm achieves accuracy on CIFAR-10 with labels with a stronger backbone network (Wide ResNet-28-2) and a significantly more complicated training pipeline [57].

Therefore, we think the insights gained from our modular learning suggest that (1) the current form of supervision, i.e., full labels on individual data examples, although pervasively used by supervised and semi-supervised learning methods, is far from efficient and (2) by leveraging novel forms of labels and learning methods, simpler yet stronger un/semi-supervised learning algorithms exist. We leave this as a future work.

VII-E Transferability Estimation With Proxy Objective

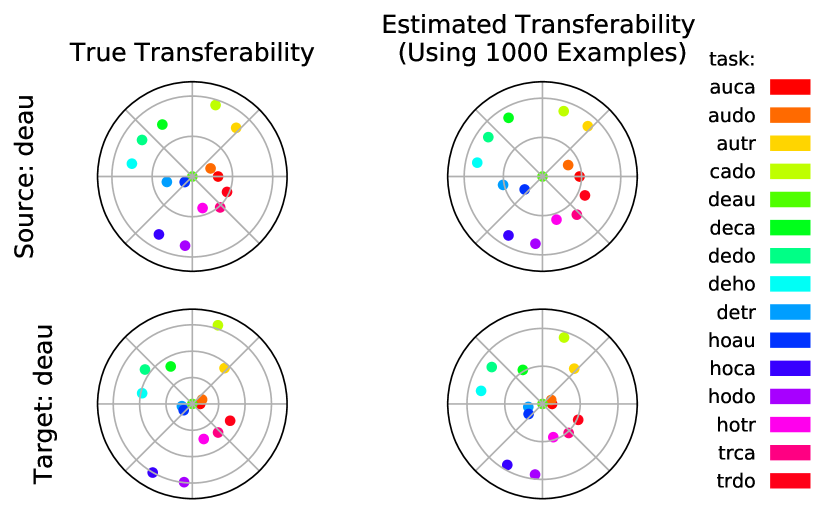

To empirically verify the effectiveness of our transferability estimation method, we created a set of tasks by selecting classes from CIFAR-10 and grouping each pair into a single binary classification task. The classes selected are: cat, automobile, dog, horse, truck, deer. Each new task is named by concatenating the first two letters from the classes involved, e.g., cado refers to the task of classifying dogs from cats.

We trained one ResNet-18 using the proposed modular method for each source task, using AL as the proxy objective. For each model, the output linear layer plus the nonlinearity preceding it is the output module, and everything else belongs to the input module. All networks achieved on average test accuracy on the task they were trained on666Highest accuracy was , achieved on audo. Lowest was , on cado., suggesting that each frozen input module possesses information essential to the source task. Therefore, the question of quantifying input module reusability is essentially the same as describing the transferability between each pair of tasks.

The true transferability between a source and a target task in the task space can be quantified by the test performance of the optimal output module trained for the target task on top of a frozen input module from a source task [10]. Our estimation of transferability is based purely on proxy objective: A frozen input module achieving higher proxy objective value on a target task means that the source and target are more transferable. This estimation is performed using a randomly selected subset of the target task training data.

In Fig. 7, we visualize the target space structure with trdo being the source and the target, respectively. We see from the figures that our method accurately described the target space structure despite its simplicity, using only of target task training data. The discovered transferability relationships also align well with common sense. For example, trdo is more transferable to, e.g., detr, since they both contain the truck class. trdo also transfers well with auca because features useful for distinguishing trucks from dogs are likely also helpful for classifying automobiles from cats.

VIII Conclusions

In this paper, we proposed a simple, alternative view on NNs that turns layers into linear models in feature spaces and showed that they are KMs. Based on this construction, we presented a modular learning framework for classification that does not require between-module propagation. Focusing on the two-module instantiation, we proved its optimality, demonstrated that it matches state-of-the-art performance from end-to-end backpropagation on MNIST and CIFAR-10, and showed that it learns powerful classifiers using almost only implicit, more efficient pairwise labels. This modular learning framework enables fully modularized DL workflows. We then demonstrated the benefit of such a workflow in a transfer learning setting, where the transferability among 15 binary classification tasks from CIFAR-10 were to be estimated. Our simple approach accurately described the task space structure using a fraction of target task training data with practically no computation overhead.

Some future work include a rigorous study of the transferability estimation problem and our proposed solution. Both theoretical and empirical results can be obtained to enhance the understanding of our method. A more in-depth study on how our modular learning method can help produce better un/semi-supervised learning paradigms is also of interest. A study on how the proposed proxy objectives behave in practice and if and how network design choices affect our modular learning can be beneficial especially for practitioners.

IX Acknowledgements

This work was supported by DARPA (FA9453-18-1-0039) and ONR (N00014-18-1-2306).

References

- [1] R. M. Neal, “Bayesian learning for neural networks,” Ph.D. dissertation, University of Toronto, 1995.

- [2] J. Lee, Y. Bahri, R. Novak, S. S. Schoenholz, J. Pennington, and J. Sohl-Dickstein, “Deep neural networks as gaussian processes,” arXiv preprint arXiv:1711.00165, 2017.

- [3] A. Jacot, F. Gabriel, and C. Hongler, “Neural tangent kernel: Convergence and generalization in neural networks,” in Advances in neural information processing systems, 2018, pp. 8571–8580.

- [4] V. Shankar, A. Fang, W. Guo, S. Fridovich-Keil, L. Schmidt, J. Ragan-Kelley, and B. Recht, “Neural kernels without tangents,” arXiv preprint arXiv:2003.02237, 2020.

- [5] Y. Cho and L. K. Saul, “Kernel methods for deep learning,” in Advances in neural information processing systems, 2009, pp. 342–350.

- [6] S. Arora, S. S. Du, Z. Li, R. Salakhutdinov, R. Wang, and D. Yu, “Harnessing the power of infinitely wide deep nets on small-data tasks,” arXiv preprint arXiv:1910.01663, 2019.

- [7] S. Arora, S. S. Du, W. Hu, Z. Li, R. R. Salakhutdinov, and R. Wang, “On exact computation with an infinitely wide neural net,” in Advances in Neural Information Processing Systems, 2019, pp. 8139–8148.

- [8] Z. Li, R. Wang, D. Yu, S. S. Du, W. Hu, R. Salakhutdinov, and S. Arora, “Enhanced convolutional neural tangent kernels,” arXiv preprint arXiv:1911.00809, 2019.

- [9] K. He, X. Zhang, S. Ren, and J. Sun, “Deep residual learning for image recognition,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2016, pp. 770–778.

- [10] A. T. Tran, C. V. Nguyen, and T. Hassner, “Transferability and hardness of supervised classification tasks,” in Proceedings of the IEEE International Conference on Computer Vision, 2019, pp. 1395–1405.

- [11] S. Shalev-Shwartz and S. Ben-David, Understanding machine learning: From theory to algorithms. Cambridge university press, 2014.

- [12] N. Aronszajn, “Theory of reproducing kernels,” Transactions of the American mathematical society, vol. 68, no. 3, pp. 337–404, 1950.

- [13] J. H. Manton, P.-O. Amblard et al., “A primer on reproducing kernel hilbert spaces,” Foundations and Trends® in Signal Processing, vol. 8, no. 1–2, pp. 1–126, 2015.

- [14] B. Scholkopf and A. J. Smola, Learning with kernels: support vector machines, regularization, optimization, and beyond. MIT press, 2001.

- [15] A. Rahimi and B. Recht, “Random features for large-scale kernel machines,” in Advances in neural information processing systems, 2008, pp. 1177–1184.

- [16] Y. LeCun, L. Bottou, Y. Bengio, and P. Haffner, “Gradient-based learning applied to document recognition,” Proceedings of the IEEE, vol. 86, no. 11, pp. 2278–2324, 1998.

- [17] V. Nair and G. E. Hinton, “Rectified linear units improve restricted boltzmann machines,” in Proceedings of the 27th international conference on machine learning (ICML-10), 2010, pp. 807–814.

- [18] M. Gönen and E. Alpaydın, “Multiple kernel learning algorithms,” Journal of machine learning research, vol. 12, no. Jul, pp. 2211–2268, 2011.

- [19] V. Vapnik, “The nature of statistical learning theory,” 2000.

- [20] J. Shawe-Taylor, N. Cristianini et al., Kernel methods for pattern analysis. Cambridge university press, 2004.

- [21] N. Cristianini, J. Shawe-Taylor, A. Elisseeff, and J. S. Kandola, “On kernel-target alignment,” in Advances in neural information processing systems, 2002, pp. 367–373.

- [22] A. R. Zamir, A. Sax, W. Shen, L. J. Guibas, J. Malik, and S. Savarese, “Taskonomy: Disentangling task transfer learning,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2018, pp. 3712–3722.

- [23] A. Achille, M. Lam, R. Tewari, A. Ravichandran, S. Maji, C. C. Fowlkes, S. Soatto, and P. Perona, “Task2vec: Task embedding for meta-learning,” in Proceedings of the IEEE International Conference on Computer Vision, 2019, pp. 6430–6439.

- [24] C. V. Nguyen, T. Hassner, C. Archambeau, and M. Seeger, “Leep: A new measure to evaluate transferability of learned representations,” arXiv preprint arXiv:2002.12462, 2020.

- [25] P. L. Bartlett and S. Mendelson, “Rademacher and gaussian complexities: Risk bounds and structural results,” Journal of Machine Learning Research, vol. 3, no. Nov, pp. 463–482, 2002.

- [26] J. A. Suykens and J. Vandewalle, “Training multilayer perceptron classifiers based on a modified support vector method,” IEEE transactions on Neural Networks, vol. 10, no. 4, pp. 907–911, 1999.

- [27] V. Vapnik and A. Y. Chervonenkis, “On the uniform convergence of relative frequencies of events to their probabilities,” Theory of Probability & Its Applications, vol. 16, no. 2, pp. 264–280, 1971.

- [28] E. R. Kandel, J. H. Schwartz, T. M. Jessell, D. of Biochemistry, M. B. T. Jessell, S. Siegelbaum, and A. Hudspeth, Principles of neural science. McGraw-hill New York, 2000, vol. 4.

- [29] T. Hrycej, Modular learning in neural networks: a modularized approach to neural network classification. John Wiley & Sons, Inc., 1992.

- [30] D. Meunier, R. Lambiotte, and E. T. Bullmore, “Modular and hierarchically modular organization of brain networks,” Frontiers in neuroscience, vol. 4, p. 200, 2010.

- [31] J. Clune, J.-B. Mouret, and H. Lipson, “The evolutionary origins of modularity,” Proceedings of the Royal Society b: Biological sciences, vol. 280, no. 1755, p. 20122863, 2013.

- [32] R. A. Jacobs, M. I. Jordan, S. J. Nowlan, and G. E. Hinton, “Adaptive mixtures of local experts,” Neural computation, vol. 3, no. 1, pp. 79–87, 1991.

- [33] M. I. Jordan and R. A. Jacobs, “Hierarchical mixtures of experts and the em algorithm,” Neural computation, vol. 6, no. 2, pp. 181–214, 1994.

- [34] I. Goodfellow, J. Pouget-Abadie, M. Mirza, B. Xu, D. Warde-Farley, S. Ozair, A. Courville, and Y. Bengio, “Generative adversarial nets,” in Advances in neural information processing systems, 2014, pp. 2672–2680.

- [35] C. Watanabe, K. Hiramatsu, and K. Kashino, “Modular representation of layered neural networks,” Neural Networks, vol. 97, pp. 62–73, 2018.

- [36] S. E. Fahlman and C. Lebiere, “The cascade-correlation learning architecture,” in Advances in neural information processing systems, 1990, pp. 524–532.

- [37] Y. Bengio, “How auto-encoders could provide credit assignment in deep networks via target propagation,” arXiv preprint arXiv:1407.7906, 2014.

- [38] D.-H. Lee, S. Zhang, A. Fischer, and Y. Bengio, “Difference target propagation,” in Joint european conference on machine learning and knowledge discovery in databases. Springer, 2015, pp. 498–515.

- [39] M. Carreira-Perpinan and W. Wang, “Distributed optimization of deeply nested systems,” in Artificial Intelligence and Statistics, 2014, pp. 10–19.

- [40] D. Balduzzi, H. Vanchinathan, and J. Buhmann, “Kickback cuts backprop’s red-tape: biologically plausible credit assignment in neural networks,” in Twenty-Ninth AAAI Conference on Artificial Intelligence, 2015.

- [41] M. Jaderberg, W. M. Czarnecki, S. Osindero, O. Vinyals, A. Graves, D. Silver, and K. Kavukcuoglu, “Decoupled neural interfaces using synthetic gradients,” in Proceedings of the 34th International Conference on Machine Learning-Volume 70. JMLR. org, 2017, pp. 1627–1635.

- [42] Z.-H. Zhou and J. Feng, “Deep forest,” arXiv preprint arXiv:1702.08835, 2017.

- [43] S. Löwe, P. O’Connor, and B. Veeling, “Putting an end to end-to-end: Gradient-isolated learning of representations,” in Advances in Neural Information Processing Systems, 2019, pp. 3033–3045.

- [44] S. Duan, S. Yu, Y. Chen, and J. C. Principe, “On kernel method–based connectionist models and supervised deep learning without backpropagation,” Neural computation, pp. 1–39, 2019.

- [45] S. Ben-David and R. Schuller, “Exploiting task relatedness for multiple task learning,” in Learning Theory and Kernel Machines. Springer, 2003, pp. 567–580.

- [46] S. Ben-David, J. Blitzer, K. Crammer, and F. Pereira, “Analysis of representations for domain adaptation,” in Advances in neural information processing systems, 2007, pp. 137–144.

- [47] Y. Ganin, E. Ustinova, H. Ajakan, P. Germain, H. Larochelle, F. Laviolette, M. Marchand, and V. Lempitsky, “Domain-adversarial training of neural networks,” The Journal of Machine Learning Research, vol. 17, no. 1, pp. 2096–2030, 2016.

- [48] Y. Li, D. E. Carlson et al., “Extracting relationships by multi-domain matching,” in Advances in Neural Information Processing Systems, 2018, pp. 6798–6809.

- [49] P. Liu, X. Qiu, and X. Huang, “Adversarial multi-task learning for text classification,” arXiv preprint arXiv:1704.05742, 2017.

- [50] H. Janati, M. Cuturi, and A. Gramfort, “Wasserstein regularization for sparse multi-task regression,” arXiv preprint arXiv:1805.07833, 2018.

- [51] Y. Bao, Y. Li, S.-L. Huang, L. Zhang, L. Zheng, A. Zamir, and L. Guibas, “An information-theoretic approach to transferability in task transfer learning,” in 2019 IEEE International Conference on Image Processing (ICIP). IEEE, 2019, pp. 2309–2313.

- [52] S. Yu, A. Shaker, F. Alesiani, and J. C. Principe, “Measuring the discrepancy between conditional distributions: Methods, properties and applications,” 2020.

- [53] W. Liu, P. P. Pokharel, and J. C. Príncipe, “Correntropy: Properties and applications in non-gaussian signal processing,” IEEE Transactions on Signal Processing, vol. 55, no. 11, pp. 5286–5298, 2007.

- [54] B. Kulis, M. A. Sustik, and I. S. Dhillon, “Low-rank kernel learning with bregman matrix divergences,” Journal of Machine Learning Research, vol. 10, no. Feb, pp. 341–376, 2009.

- [55] S. Arora, H. Khandeparkar, M. Khodak, O. Plevrakis, and N. Saunshi, “A theoretical analysis of contrastive unsupervised representation learning,” arXiv preprint arXiv:1902.09229, 2019.

- [56] J. Mairal, P. Koniusz, Z. Harchaoui, and C. Schmid, “Convolutional kernel networks,” in Advances in neural information processing systems, 2014, pp. 2627–2635.

- [57] K. Sohn, D. Berthelot, C.-L. Li, Z. Zhang, N. Carlini, E. D. Cubuk, A. Kurakin, H. Zhang, and C. Raffel, “Fixmatch: Simplifying semi-supervised learning with consistency and confidence,” arXiv preprint arXiv:2001.07685, 2020.

1. Proving Theorem IV.1

Lemma .1.

Given an inner product space , a unit vector , and four other vectors , and with , where the norm is the canonical norm induced by the inner product, i.e., . Assume

| (24) |

Then there exists a unit vector such that

| (25) | |||

| (26) |

Proof.

Throughout, we omit the subscript on inner products and norms for brevity, which shall cause no ambiguity since no other inner product or norm will be involved in this proof.

If , then , which would imply

| (27) |

Then we may choose such that and the result holds trivially.

On the other hand, if , would be a valid choice of . Indeed, by Cauchy-Schwarz,

| (28) | |||

| (29) |

Therefore, we may assume that .

For two vectors , we define the “angle” between them, denoted , via . This angle is well-defined since is injective on .

Since

| (30) |

and together implies , which then implies since is strictly decreasing on , where is the angle between and is the angle between .

Let be the angle between and that between . Note that . Define

| (31) | |||

| (32) |

Now, suppose that we have shown

| (33) | ||||

| (34) |

where is the angle between and that between . Define:

| (35) | |||

| (36) |

Then using and the earlier result that , we would have

| (37) | |||

| (38) |

which, together with the fact that is strictly decreasing on and the assumption that , implies

| (39) | |||

| (40) |

proving the result.

To prove Eq. 33, it suffices to show since is decreasing on , to which both and belong. To this end, we have

| (41) | ||||

| (42) |

Since and similarly ,

| (43) | ||||

| (44) | ||||

| (45) | ||||

| (46) |

where the inequality is due to Cauchy-Schwarz.

To prove 34, it suffices to show that there exists such that

-

A

one of is a scalar multiple of the other;

-

B

.

Indeed, A implies , which, together with similar arguments as we used to prove Eq. 33, implies . Also note that B is equivalent to .

We prove existence constructively. Specifically, we set and find such that satisfies A and B simultaneously.

Let . Note that we immediately have:

| (47) |

The assumption implies .

Since is a unit vector, we have the following constraint on :

| (48) |

And some simple algebra yields

| (49) | |||

| (50) |

Define

| (54) | |||

| (55) | |||

| (56) | |||

| (57) |

Then we have

| (58) | |||

| (59) |

Assuming none of is , A is equivalent to

| (60) |

To check that this is always true, we have

| (61) | |||

| (62) | |||

| (63) |

because of Eq. 48. And

| (64) |

Therefore, A is always true.

If at least one of is , we have the following mutually exclusive cases:

-

i

one of the four coefficients is while the other three are not; ✗

-

ii

, the others are not ; ✓

-

iii

, the others are not ; ✓

-

iv

, the others are not ; ✗

-

v

, the others are not ; ✗

-

vi

, the others are not ; ✓

-

vii

, the others are not ; ✓

-

viii

three of the four coefficients are while one is not; ✓

-

ix

, ✓

where the cases marked with ✗ are the ones where A cannot be true (so our choice of cannot fall into these cases) and the ones marked with ✓ are the ones where A is true. Note that A cannot be true in cases iv and v because if A was true, it would imply that and are linearly dependent. However, since , we would have either or , both of which have been excluded from the discussion in the beginning of this proof.

Therefore, finding such that A and B hold simultaneously amounts to finding such that

| (66) | ||||

| (67) | ||||

| none of case i, iv, v is true. | (68) |

Now, substituting into Eq. 67 and solving for , we have

| (69) |

and we choose

| (70) |

This root is real since and therefore .

To verify that

| (71) |

satisfies 68, first note that since this solution of comes from solving and Eq. 67, we have

| (72) | |||

| (73) | |||

| (74) | |||

| (75) |

We now analyze each one of case i, iv, and v individually and show that our choice of does not fall into any of them, proving that this particular satisfies Eq. 66, 67, and condition 68.

case i:

If , then either or , resulting in or , i.e., there must be at least coefficients being .

If , then either , in which case , or . In the latter case, we would then use Eq. 67 and have

| (76) |

Again, we would have at least nonzero coefficients.

If , then either , which would result in , or . Assuming , Eq. 71 would imply and . Either way, we would have and therefore .

Finally, if , then first assuming , we would have . Then Eq. 71 would give . Solving for , we have . would imply that . On the other hand, Eq. 71 also implied . Hence, must be , in which case . If , we would have as well.

case iv:

It is easy to see that this case is impossible since .

case v:

If , then we have already shown in the proof of case i that either or must be , that is, at least coefficients would be .

We now prove Theorem IV.1.

Proof.

The result amounts to proving

| (77) |

Define to be the set of all such that and the set of all such that . Let , we have

| (78) | |||

| (79) | |||

for some from , respectively, where and .

For any , let be parameterized by . We have

| (80) | |||

| (81) | |||

for with maximizing over and minimizing over , where and .

Using the assumption on ,

| (82) |

Then using the earlier Lemma, there exists a unit vector such that

| (83) | |||

| (84) |

Let , then evidently, , and we have,

| (86) | |||

| (87) | |||

| (88) | |||

| (89) | |||

| (90) | |||

| (91) | |||

| (92) | |||

| (93) |

This proves the result. ∎

2. Example Loss Functions Satisfying Conditions of Theorem IV.1

The empirical risk of many popular loss functions can be decomposed into two terms with one nondecreasing and the other nonincreasing such that the condition required by Theorem IV.1 is satisfied.

-

•

softmax + cross-entropy (two-class version):

(94) (95) where is the softmax nonlinearity.

-

•

tanh + mean squared error (this decomposition works for any monotonic nonlinearity with the value of adjusted for the range of the nonlinearity):

(96) (97) where is the hyperbolic tangent nonlinearity.

-

•

hinge loss:

(98) (99)

3. Additional Transferability Results