Modularity based linkage model for neuroevolution

Abstract.

Crossover between neural networks is considered disruptive due to the strong functional dependency between connection weights. We propose a modularity-based linkage model at the weight level to preserve functionally dependent communities (building blocks) in neural networks during mixing. A proximity matrix is built by estimating the dependency between weights, then a community detection algorithm maximizing modularity is run on the graph described by such matrix. The resulting communities/groups of parameters are considered to be mutually independent and used as crossover masks in an optimal mixing EA. A variant is tested with an operator that neutralizes the permutation problem of neural networks to a degree. Experiments were performed on 8 and 10-bit parity problems as the intrinsic hierarchical nature of the dependencies in these problems are challenging to learn. The results show that our algorithm finds better, more functionally dependent linkage which leads to more successful crossover and better performance.

1. Introduction

The building block hypothesis states that Genetic Algorithms (GAs) work well by recombining small schemas of dependent genes into a better solution. The key to efficient recombination is the capability of preserving such schemas or so-called building blocks. Linkage Learning methods(Thierens, 2010)(Thierens and Bosman, 2011)(Przewozniczek and Komarnicki, 2020) have shown success by estimating the dependencies between genes in order not to disrupt them during the recombination, thus improving the performance of the evolutionary process.

Previous research applying EAs on neural networks has focused on the so-called permutation problem (Hancock, 1992)(Dragoni et al., 2014)(Stanley and Miikkulainen, 2002)(Mahmood et al., 2007)(Thierens et al., 1993), which states that a neural network can have many configurations with the same functionality by a reordering of neurons (e.g. in a fully-connected layer). Recombination between two functionally similar neural networks thus does not always produce offspring that is functionally similar to its parents. A non-disruptive crossover can only then happen when the population is extremely converged in the parameter space which will hinder the search process. Solving the permutation problem would mean achieving permutation invariance from parameter space to functionality space and effectively reduce the search space in terms of the network architecture.

In addition to the permutation problem, in this paper we show that the disregard of inter-dependency between parameters is another primary source of disruption of recombination between neural networks. Neural networks typically implement a highly non-linear function, meaning that the optimization of their parameters can be a highly inseparable problem. The functionality of any weight influences, and is influenced by the perturbing of any other weight, as long as there is no disjoint part of the neural network. Performing recombination while preserving this interaction as much as possible while exchanging functional substructures is then a key factor in the success of the evolutionary process.

This issue has been considered in some previous work(Mouret and Doncieux, 2008)(Radcliffe, 1993) on indirect encoding neuroevolution. MENNAG(Mouret and Doncieux, 2008) address this problem by encoding neural network as a generated graph of a Genetic Programming syntax tree, where connections between subgraphs represented by disjoint subtrees are naturally sparse, thus the overall generated neural network has a hierarchical modular structure. Swapping subtrees is then equivalent to swapping relatively independent substructures and such recombination was shown to be beneficial to performance by results in the paper and later empirical study(Qiao and Gallagher, 2020). To our knowledge, no efforts toward non-disruptive recombination have been made on fully connected neural networks with direct encoding.

Linkage Learning attempts to model the dependency structure between the problem variables by observing the existing population. The best-known linkage model is the Linkage Tree (LT) model(Thierens, 2010) where the mutual information between problem variables is estimated from the population and a tree-like linkage structure is learned by iteratively merging variables with the most mutual information. Linkage Learning does not require any domain knowledge of the problem, but this could lead to a problem (as pointed out in (Przewozniczek and Komarnicki, 2020)) in that it does not guarantee functional dependency of learned linkage as it simply captures correlated occurrences in the population.

On the optimization of feedforward neural networks, we are not in a completely black-box situation, as we have a certain amount of domain knowledge of how parameters interact with each other. In this paper, we describe the Modularity-based Linkage model to exploit this fact. First, we build a proximity matrix where each element is the estimated first-order functional dependency between connection weights. A graph is then created by treating the proximity matrix as an adjacency matrix. Then, a community searching algorithm is applied to the graph to minimize the dependency between the searched communities where each community contains neural network weights that are deemed to be dependent within the community but independent to the rest of the neural network.

We carry out experiments on 8-bit parity and 10-bit parity problems since the non-separable nature of this problem enhances the inter-dependency of neural network parameter optimization, making it more challenging than it is. The results show that our algorithm finds better, more functionally dependent linkage which leads to more successful crossover and better performance compared with LT or when no crossover is applied. The results also indicate that solving either the permutation problem or the substructure disruption problem is not enough for efficient recombination, but both are required at the same time.

2. Algorithm

2.1. Family Of Subsets

Following the paradigm of linkage representation in (Thierens and Bosman, 2011), a Family Of Subsets (FOS) is a set of subsets of which are the indices of problem parameters with a size of . Each element is assumed to be independent of the rest of the parameters .

In a Marginal Product FOS, each element is mutually exclusive of the other; for any . In its simplest form, each subset consists of only one parameter which is the univariate FOS. The Linkage Tree FOS describes a hierarchical dependency structure(Thierens, 2010). For any subset with more than one element, there exist two subsets that are mutually exclusive, smaller in size and their union is exactly . The LT FOS can be seen as the result of a hierarchical clustering algorithm. At the start, we have the univariate FOS where each cluster contains one problem variable. New FOS elements are created by merging two existing FOS elements into a binary tree in a bottom-up fashion with each node being a subset of the FOS except the root. The whole Linkage Tree would contain nodes which is also the size of the FOS. The size of the FOS can sometimes be a problem and since most of the bottom-leveled FOS elements do not describe valuable linkage information, a depth threshold is often applied while the linkage tree is being traversed.

2.2. Optimal Mixing

In Optimal Mixing (OM)(Thierens and Bosman, 2011) the FOS is traversed in a random order and an offspring is produced by two parents swapping genetic material using a different FOS element as a crossover mask. Specifically, the FOS element describes a subset of variables to be swapped into the first parent from the second parent. The offspring is only accepted if it outperforms the first parent and when it does it will replace the first parent. For a FOS with good quality, FOS elements are deemed to be independent to each other and OM would explore the outcome of all possible non-disruptive crossover learned by the linkage model.

For Recombinative Optimal Mixing, the same parents are used for each FOS element. For Gene-pool Optimal Mixing, the second parent is re-selected randomly each time a different FOS element is applied. These methods are summarized in Algorithm 1.

2.3. Neuron Similarity

A big issue with recombination in neuroevolution is the permutation problem. A neural network can have many juxtaposes with the same functionality but different orderings of the neurons in the same layers. This means mixing between functionally close neural networks doesn’t always result in functionally close offspring. To attempt to neutralize this to some degree, we implemented the Neuron Similarity operator inspired by (Dragoni et al., 2014).

In this operator, the activation (output) values of the neurons of each individual are recorded for all inputs. During recombination, the neurons of the second parent are rearranged temporarily by comparing their activation values to the first parent. In each layer, each neuron is matched to a neuron of the first parent where the sum of the differences of the activation values over a batch of input is minimal, in a greedy manner. This operator is summarized in Algorithm 2.

2.4. Modularity-based linkage model

Our proposed modularity-based linkage model attempts to preserve interaction between weights by finding a modular decomposition of a graph, where each vertex is a weight in the neural network and each edge represents the estimated strength of the interaction between the two vertices connected to the edge.

Although a fully connected neural network is non-separable in terms of dependency between the parameters, we only consider two weights to be ’connected’ when one weight connects to a neuron and the other weight connects from the same neuron. The strength of interaction of the said pair of weights is estimated to be the absolute value of the product of the weights. This provides a crude approximation as it ignores the non-linearity of the activation function and the effect of other inputs of the neuron. We define a weights proximity matrix as a matrix of interaction values between all pairs of weights in each layer of a network. Algorithm 3 summarizes the calculation of this matrix.

| 0 | 0 | 0 | 0 | 0.2 | 0 | |

| 0 | 0 | 0 | 0 | 0 | 0.3 | |

| 0 | 0 | 0 | 0 | 0.2 | 0 | |

| 0 | 0 | 0 | 0 | 0 | 0.3 | |

| 0.2 | 0 | 0.2 | 0 | 0 | 0 | |

| 0 | 0.3 | 0 | 0.3 | 0 | 0 |

The recalculation of the proximity matrix after merging groups can be done easily with mutual information-based metrics due to the grouping property(Thierens, 2010), making hierarchical clustering a suitable method of estimating the dependency structure. In order to better estimate the dependencies within a group, we apply the concept of modularity: for a given graph and the grouping of its vertices, modularity describes how sparse the edges are between groups than within groups. By treating the proximity matrix as an adjacency matrix of a graph, finding a grouping that maximizes modularity would be close to finding subsets of highly dependent variables while keeping them independent between different subsets, which fits the purpose of FOS.

For two groups,modularity is defined as(Newman, 2006):

| (1) |

where is the number of edges between vertices and , is the degree of vertex , is the total number of edges, indicates the group belongs to, where a value of 1 indicates group 1 and a value of -1 indicates group 2. This formula can be generalized to positive weighted graphs, where the number of edges would represent weights and the total number of edges would represent the sum of weights instead.

The recombination operator requires two individuals, however the aforementioned metric only describes the proximity structure of a single entity. This is the main reason a between-group average hierarchical clustering method like UPGMA as implemented in GOMEA(Bosman and Thierens, 2012), cannot be used directly since we cannot apply this dependency measurement to a population.

The definition of modularity can be shown to be linear with graphs having the same number of vertices and the same sum of edges. Let adjacency matrix describe a graph calculated from individual . Assuming our goal is to find a grouping maximizing modularity for two individuals simultaneously , assuming we have a fixed architecture, the sizes of the networks are the same, we have:

| (2) |

Since we are finding a single grouping for both graphs simultaneously, will be equal to . By normalizing with a factor of we can get:

| (3) |

That is, to maximize the modularity of a graph described by adjacency matrix is to find a single grouping maximizing a linear combination of the modularity of graph 1 and graph 2. With a common assumption that every individual of the population is from the same distribution, the value of should be close to 1. This means the modular decomposition should not lean too much to one side where only one individual’s substructures are being preserved. The linear nature of this approximation also indicates that it is not suitable to be generalized to multiple individuals as the substructures of some individuals are deemed to be ignored. This leads to the fact that a population-wise linkage structure cannot be learned effectively and thus a pair-wise linkage structure must be learned before crossover. Another consequence is that Gene-Pool optimal mixing will not be compatible with this linkage model anymore since changing the second donor would mean recalculating the linkage and the computational overhead would be unacceptable.

We apply the Leiden algorithm to optimize the community decomposition of the graph described by the proximity matrix as it arguably the state of the art algorithm for this task(Traag et al., 2019). Empirical results(Traag et al., 2019) showed that it runs in near linear time in the number of edges, this is exceptionally important as our proximity matrix is very sparse and the graph can be represented as an adjacency list instead. A downside, however, is that the algorithm does not provide a hierarchical structure of the communities, unlike Newman’s algorithm(Newman, 2006). This means we cannot build a linkage tree but have to use the marginal product model. Newman’s algorithm, however, requires eigen-decomposition of the dependency matrix and it would simply not scale when there are more than a few hundred of weights with a time complexity of . Time complexity is also a reason for not using UPGMA as it runs in (Gronau and Moran, 2007).

Our modularity-based linkage EA is summarized in Algorithm 4.

3. Experiments

3.1. Parity Problem

In nature, fully connected feed-forward neural networks have overlapping building blocks: it is hard to find a sub-network that does not interact with any other sub-network. To further enhance this property, we choose the parity problem to test the capability of finding good linkages with our algorithm.

The input of the parity problem is an -bit bitstring, and the target output is the parity of the sum of 1s in the bitstring. This problem cannot be decomposed into a linear combination of sub-problems meaning that any building block found will be overlapping with at least one other. Even if the problem is solved optimally by building a hierarchical XOR function the solution will consist of building blocks with high inter-dependency.

3.2. Setup

On each problem, each experiment is run for 20 repeated trials. For the 8-bit parity problem, the size of the layers of neural networks including input and output layers is:[8,8,8,8,1] with three hidden layers. For the 10-bit parity problem it is [10,10,10,10,1]. All neurons have as the activation function. Weight initialization is Gaussian with a mean of 0 and a standard deviation of 3. The mutation is performed by adding a Gaussian perturbation with a standard deviation of 0.2. The mutation rate is 0.3. The experiments stop after a maximum of evaluations. The first parent is selected by rank-proportional selection. The population size is 100 with an elitism rate of 0.01.

To make a fair comparison to recombination without linkage and mutation-only reproduction, we apply the idea of only accepting successful offspring to setups that do not require FOS in order to give them a similar amount of pressure in the reproduction phase. As a matter of fact, such an operator improves the performance significantly, making our results more convincing. The different algorithmic setups tested are as follows:

- MOD:

-

Recombinative optimal mixing, Modularity-based linkage model.

- MOD_NS:

-

Same as MOD but the neuron similarity operator is applied to the second parent to align its neurons to the first parent.

- Uniform:

-

Only accept successful reproduction, Uniform crossover. In Uniform crossover the offspring inherits each parameter from both parents with equal probability.

- Uniform_NS:

-

Same as Uniform but the neuron similarity operator is applied to the second parent to align its neurons to the first parent.

- No:

-

Only accept successful reproduction, no crossover. This serves as a baseline since if recombination is beneficial, the performance should be at least as good as no crossover at all.

- LT:

-

Gene-pool optimal mixing, Mutual information-based Linkage Tree model. The focus of this work is to compare the quality of linkage found in neural networks, thus we only implemented a minimal version of GOMEA adapted to real value with no covariance adaptation mechanism(Bouter et al., 2017). There is no neuron similarity variant since the linkage tree must be built on mutual information calculated from the whole population. Rearranging neurons before crossover would invalidate the linkages learned beforehand.

3.3. Metrics

To evaluate the results of our experiments, the following metrics are used (averages reported over the 20 runs):

- Fitness:

-

The fitness is the accuracy of the prediction of parity given the bit-string. A negative output of the neural network is treated as 0 and a positive output is treated as 1.

- Population parameter similarity:

-

A measurement of population convergence calculated as the average pairwise cosine similarity of the population. This metric was introduced in (Mouret and Doncieux, 2012).

- Parent child behavior difference:

-

A measurement of recombination disruptiveness. The behavior difference between two neural networks is calculated as the mean absolute value of the difference of output values for all inputs. The parent-child behavior difference is the mean of the behavior difference between the child and the first parent, and between the child and the second parent.

4. Results

As shown in Figure 2(a)(b), for 8-bit parity MOD_NS converges towards good solutions more quickly, though it is slightly worse than the average fitness of MOD. For 10-bit parity, MOD_NS outperforms all other setups by a large margin. An interesting observation is that the neuron similarity operator positively impacts the performance of the modularity-based linkage model but not so much when uniform crossover is performed. In fact, for the 10-bit parity problem, NS has the exact opposite effect. To understand this, we show how the population converges in terms of parameter cosine similarity in Figure2(c)(d). In both problems, MOD_NS has the least converged population. Our interpretation is that since neurons are rearranged according to their behavior before crossover, successful crossovers do not require the same permutation of neurons thus the diversity of neuron permutations is preserved. The NS operator however is not perfect; it effectively induces noise as the difficulty of getting the correct neuron alignment rises. There could be no 1-to-1 alignments in the first place or the complexity of the functionality overwhelms this method of simply comparing neuron activation values. In 2(d) we observed extremely converged populations of UNIFORM_NS, but not so much in 2(c). A possibility is that given a disruptive recombination operator, the population of OMEAs tends to be extremely converged in order to perform non-disruptive crossover. The reason is that during Optimal Mixing, an offspring would only be accepted if outperforms its parents and this is more likely to happen when the crossover is non-disruptive, which requires both parents to be very similar when the crossover operator itself is disruptive. Population diversity can be quickly lost when all offspring are produced by extremely similar parents. This could mean the complexity of 10-bit parity is so much for the NS operator, that it starts to induce such a big noise for the already disruptive uniform crossover. In order to overcome this, the population has to be extremely converged to negate the noise so non-disruptive crossovers can be performed so that the offspring can be accepted. However the NS operator is helpful on both problems for the modularity-based linkage model. We hypothesize that this is because NS works by reducing effective search space by achieving permutation invariance in terms of neuron orders and if the population is already very converged, the permutation problem would not exist anyway thus the contribution of NS would be purely negative. That is, solving the permutation problem can barely help with recombination if high disruption exists to push toward an early-converged population. According to 2(c)(d), MOD has significantly less-converged populations thus it benefits from NS more, despite it causing some degree of disruption to the recombination.

In Figure 2(e)(f), we measure how differently an offspring would behave from its parents to understand how disruptive each recombination operator can be. While MOD_NS has the highest difference in some of the early stages, it gradually achieves the lowest average behavioral difference by the end for both problems while maintaining high diversity in the parameter space. Modularity-based linkage model aims to preserve substructures during recombination, but in the early stage of evolution, substructures might not yet emerge, or, the overall structures are so diverse in the population, attempting to swap substructures between individuals is deemed to be disruptive. This combined with the fact that NS is also disruptive while there is not good alignment between neurons from both parents, explains why MOD_NS performs disruptive recombination in the early stage. As substructures emerge in the process, MOD_NS and MOD become less and less disruptive compared with other settings.

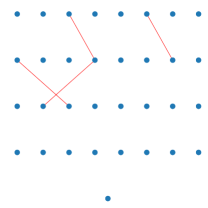

In OM with FOS an offspring is only accepted when crossover with the traversed FOS element results in a performance increase. A big factor of recombination disruptiveness is the amount of genetic material exchanged. Swapping only a tiny part of the neural network should not have as much of an impact on its functionality compared to swapping half of the network. This is also a reason for uniform crossover being such a disruptive operator since its crossover rate is fixed at 0.5. A reasonable deduction is that the size of accepted subsets tells us the quality of the linkage being modeled by the FOS, as a well-separated subset should be functionally independent regardless of its size. Table.2 shows that MOD and MOD_NS have a much higher average rate of genetic material exchange compared to LT, meaning much larger subsets being accepted during OM. Note that when the exchange rate is greater than 0.5, (1 - the exchange rate) is considered to be the crossover rate instead. To further examine the quality of the FOS, Figure.3 shows some of the accepted subsets randomly selected from the experiments. Note that when we show instead since it is hard to see anything when the FOS element contains almost all of the parameters. Although being small in size and containing disjoint connections, LT identifies connected parameters with a higher probability than random, even without prior knowledge of neural networks. A big problem, however, is that LT learns either very large linkage or very small linkage as the population converges. This is expected since the optimization of neural network parameters is such an inseparable problem, that every weight is dependent on each other to some degree. This intractable noise would make it extremely hard to find relatively independent functional substructures without any domain knowledge. The low crossover rate also explains why the convergence of the parameters of the population of LT is even more severe compared to UNIFORM where a disruptive crossover is performed. With our model specifically designed for fully connected feed-forward neural networks, by focusing on first-order interactions only, this noise can be overcome at the price of ignoring more complex interactions. This is reflected by the fact that linkages found are always well-connected as shown in Figure.3, which means the inter-dependency within the subsets is being respected. Combined with the fact that MOD and MOD_NS cause the least amount of behavioral difference during recombination, we conclude that our method finds linkages with good quality.

| 8-bit | 10-bit | |

|---|---|---|

| LT | 1.14 | 0.57 |

| MOD | 9.90 | 5.18 |

| MOD_NS | 8.21 | 5.23 |

5. Conclusion

This paper has identified two main sources of disruption of recombination between fully connected feed-forward neural networks with direct encoding, the permutation problem and the substructure disruption problem. A modularity-based linkage model was proposed to solve the latter problem while the neuron similarity operator, inspired by(Dragoni et al., 2014), was applied in combination, to reduce the former problem. We have shown that this combination outperforms the Linkage Tree model, as it reaches higher fitness in fewer function evaluations on the challenging parity problem. It produces offspring that are functionally close to their parents while maintaining a diverse population in the parameter space. it is also shown that the actual linkage information learned by our model is of much higher quality compared to the linkage learned by LT as the size of the accepted linkage is much larger. Finally, we showed that solving either of the permutation problem or the substructure disruption problem is not enough for efficient recombination, but both need to be assessed at the same time.

6. Future Work

While revealing the important factors toward efficient recombination between fully connected neural networks, the problems are only addressed by crude estimations, despite producing good results. It may well be possible to make further improvements if more sophisticated and accurate methods are applied, but computational demands could become a problem. Future work on better dependency estimation methods and better solutions to the permutation problem is an open area of research towards the feasibility of scaling neuroevolution to large, modular solutions for challenging problems.

References

- (1)

- Bosman and Thierens (2012) Peter AN Bosman and Dirk Thierens. 2012. Linkage neighbors, optimal mixing and forced improvements in genetic algorithms. In Proceedings of the 14th annual conference on Genetic and evolutionary computation. 585–592.

- Bouter et al. (2017) Anton Bouter, Tanja Alderliesten, Cees Witteveen, and Peter AN Bosman. 2017. Exploiting linkage information in real-valued optimization with the real-valued gene-pool optimal mixing evolutionary algorithm. In Proceedings of the Genetic and Evolutionary Computation Conference. 705–712.

- Dragoni et al. (2014) Mauro Dragoni, Antonia Azzini, and Andrea GB Tettamanzi. 2014. SimBa: A novel similarity-based crossover for neuro-evolution. Neurocomputing 130 (2014), 108–122.

- Gronau and Moran (2007) Ilan Gronau and Shlomo Moran. 2007. Optimal implementations of UPGMA and other common clustering algorithms. Inform. Process. Lett. 104, 6 (2007), 205–210.

- Hancock (1992) Peter JB Hancock. 1992. Genetic algorithms and permutation problems: a comparison of recombination operators for neural net structure specification. In [Proceedings] COGANN-92: International Workshop on Combinations of Genetic Algorithms and Neural Networks. IEEE, 108–122.

- Mahmood et al. (2007) Ashique Mahmood, Sadia Sharmin, Debjanee Barua, and Md Monirul Islam. 2007. Graph matching recombination for evolving neural networks. In Advances in Neural Networks–ISNN 2007: 4th International Symposium on Neural Networks, ISNN 2007, Nanjing, China, June 3-7, 2007, Proceedings, Part II 4. Springer, 562–568.

- Mouret and Doncieux (2008) Jean-Baptiste Mouret and Stéphane Doncieux. 2008. MENNAG: a modular, regular and hierarchical encoding for neural-networks based on attribute grammars. Evolutionary Intelligence 1 (2008), 187–207.

- Mouret and Doncieux (2012) J-B Mouret and Stéphane Doncieux. 2012. Encouraging behavioral diversity in evolutionary robotics: An empirical study. Evolutionary computation 20, 1 (2012), 91–133.

- Newman (2006) Mark EJ Newman. 2006. Modularity and community structure in networks. Proceedings of the national academy of sciences 103, 23 (2006), 8577–8582.

- Przewozniczek and Komarnicki (2020) Michal W Przewozniczek and Marcin M Komarnicki. 2020. Empirical linkage learning. In Proceedings of the 2020 Genetic and Evolutionary Computation Conference Companion. 23–24.

- Qiao and Gallagher (2020) Yukai Qiao and Marcus Gallagher. 2020. An Implementation and Experimental Evaluation of a Modularity Explicit Encoding Method for Neuroevolution on Complex Learning Tasks. In AI 2020: Advances in Artificial Intelligence: 33rd Australasian Joint Conference, AI 2020, Canberra, ACT, Australia, November 29–30, 2020, Proceedings 33. Springer, 138–149.

- Radcliffe (1993) Nicholas J Radcliffe. 1993. Genetic set recombination and its application to neural network topology optimisation. Neural Computing & Applications 1 (1993), 67–90.

- Stanley and Miikkulainen (2002) Kenneth O Stanley and Risto Miikkulainen. 2002. Evolving neural networks through augmenting topologies. Evolutionary computation 10, 2 (2002), 99–127.

- Thierens (2010) Dirk Thierens. 2010. The linkage tree genetic algorithm. In International Conference on Parallel Problem Solving from Nature. Springer, 264–273.

- Thierens and Bosman (2011) Dirk Thierens and Peter AN Bosman. 2011. Optimal mixing evolutionary algorithms. In Proceedings of the 13th annual conference on Genetic and evolutionary computation. 617–624.

- Thierens et al. (1993) Dirk Thierens, Johan Suykens, Joos Vandewalle, and Bart De Moor. 1993. Genetic weight optimization of a feedforward neural network controller. In Artificial Neural Nets and Genetic Algorithms: Proceedings of the International Conference in Innsbruck, Austria, 1993. Springer, 658–663.

- Traag et al. (2019) Vincent A Traag, Ludo Waltman, and Nees Jan Van Eck. 2019. From Louvain to Leiden: guaranteeing well-connected communities. Scientific reports 9, 1 (2019), 5233.