Model selection in reconciling hierarchical time series

Abstract.

Model selection has been proven an effective strategy for improving accuracy in time series forecasting applications. However, when dealing with hierarchical time series, apart from selecting the most appropriate forecasting model, forecasters have also to select a suitable method for reconciling the base forecasts produced for each series to make sure they are coherent. Although some hierarchical forecasting methods like minimum trace are strongly supported both theoretically and empirically for reconciling the base forecasts, there are still circumstances under which they might not produce the most accurate results, being outperformed by other methods. In this paper we propose an approach for dynamically selecting the most appropriate hierarchical forecasting method and succeeding better forecasting accuracy along with coherence. The approach, to be called conditional hierarchical forecasting, is based on Machine Learning classification methods and uses time series features as leading indicators for performing the selection for each hierarchy examined considering a variety of alternatives. Our results suggest that conditional hierarchical forecasting leads to significantly more accurate forecasts than standard approaches, especially at lower hierarchical levels.

Key words and phrases:

Hierarchical forecasting, machine learning, time series features, classification1. Introduction and background

Forecasting is essential for supporting decision making, especially in applications that involve a lot of uncertainty. For instance, accurately forecasting the future demand of stock keeping units (SKUs) can significantly improve supply chain management [20], reduce inventory costs [54], and increase service levels [45], particularly under the presence of promotions [7, 3]. In order to obtain more accurate forecasts, forecasters typically try to identify the most appropriate forecasting model for each series from a variety of alternatives. This task, although it can provide significant improvements under perfect foresight [14], is difficult to effectively perform in practice due to model, parameter, and data uncertainty [44]. Thus, many strategies have been proposed in the literature to effectively perform forecasting model selection [15], most of which are based on the in-sample and out-of-sample accuracy of the forecasting models [55], their complexity [27], and the features that time series display [43, 40].

However, in business forecasting applications, data is typically grouped based on its context and characteristics, thus structuring cross-sectional hierarchies. For example, although the demand of an SKU can be reported at a store level, it can be also aggregated (summed) at a regional or national level. Similarly, demand can be aggregated for various SKUs of the same type (e.g., dairy products) or category (e.g., foods). As a result, hierarchical time series introduce additional complexity to the whole forecasting process since, apart from selecting the most appropriate forecasting model for each series, forecasters have also to account for coherence, i.e., make sure that the forecasts produced at the lower hierarchical levels will sum up to those produced at the higher ones [9]. In fact, coherence is a prerequisite in hierarchical forecasting (HF) applications as it ensures that different decisions made across different hierarchical levels will be aligned.

Naturally, the demand recorded at lower hierarchical levels will always add up to the observed demand at higher levels. However, this is rarely the case for forecasts which are usually produced for each series separately and are therefore incoherent. To achieve coherence, various HF methods can be used for reconciling the individual, base forecasts [50]. The most basic HF method is probably the bottom-up (BU), according to which base forecasts are produced just for the series at the lowest level of the hierarchy, being then aggregated to provide forecasts for the series at the higher levels [12]. Top-down (TD) is another option which suggests forecasting just the series at the highest level of the hierarchy and then using proportions to disaggregate these forecasts and predict the series at the lower levels [21, 8]. Middle-out (MO) mixes the above-mentioned methods, producing base forecasts for a middle level of the hierarchy and then aggregating or disaggregating them to forecast the higher and lower levels, respectively. Finally, a variety of HF methods that combine (COM) the forecasts produced at all hierarchical levels have been proposed in the literature succeeding more often than not, along with coherence, better forecasting performance [28, 58, 30].

From the HF methods found in the literature, a COM method, called minimum trace (MinT; [58]), has been distinguished due to the strong theory supporting it and the results of many empirical studies highlighting its merits over other alternatives. However, there are still circumstances under which MinT might fail to provide the most accurate forecasts. For instance, since MinT is based on the estimation of the one-step-ahead error covariance matrix, the method might be proven inappropriate when the in-sample errors of the baseline forecasting models do not represent post-sample accuracy, the assumption that the multi-step forecast error covariance is proportional to the one-step forecast error covariance is unrealistic, or the required estimations are computationally too hard to make. Moreover, since MinT treats all levels equally, it cannot be optimized with respect to certain hierarchical levels of interest. Finally, given that medians are not additive, there is no reason to expect that MinT will always improve forecasting accuracy, at least when measures that are based on averages of forecast errors are used for evaluating forecasting performance.

In such cases, simpler HF methods like the BU and the TD can be proven useful. However, there might still be inadequate evidence about which of the two methods to use [28]. For example, the BU method is typically regarded more suitable for short-term forecasts and for hierarchies which bottom series are not highly correlated but less robust to randomness. On the other hand, the TD method is usually regarded more appropriate for long-term forecasts but less accurate for predicting the series at the lower aggregation levels due to information loss. It seems that no reconciliation method can fit all kinds of HF problems and that, similarly to forecasting model selection, the appropriateness of the different HF methods depends on various factors, including the particularities of the time series [41] and the structure of the hierarchy [16, 17, 21, 1], among others.

The above findings reconfirm George Box’s quote that “all models are wrong, but some are useful” [59], and highlight the potential benefits of conditional HF, i.e., the improvements in terms of forecasting accuracy that could be possibly achieved if forecasters were able to select the most appropriate HF method according to the characteristics of the series that form a hierarchy. In this paper we propose an approach for performing such a conditional selection using time series features as leading indicators [31, 51] and Machine Learning (ML) methods for conducting the classification. Essentially, we suggest that the forecasting accuracy of the different HF methods found in the literature is closely related with the characteristics of the individual series and that, based on these relationships, “horses for courses” can be effectively identified [43].

We benchmark the accuracy of the proposed approach against various HF methods, both standard and state-of-the-art, using a large dataset coming from the retail industry. Our results suggest that conditional HF leads to superior forecasts which are significantly better than those of the individual HF methods examined. Thus, we conclude that selection should not be limited to forecasting models, as done till now, but be expanded to HF methods as well.

The remainder of the paper is organised as follows. Section 2 describes the most popular HF methods found in the literature and Section 3 introduces conditional HF. Section 4 presents the dataset used for the empirical evaluation of the proposed approach and describes the experimental set-up. Section 5 presents the results of the experiment and discusses our findings. Finally, Section 6 concludes the paper.

2. Hierarchical forecasting methods

In this section, we discuss the TD, BU, and COM as three well-established HF methods that are widely used in the literature and in practice for reconciling hierarchical base forecasts. These methods are also the ones considered in this study, both as alternatives of the conditional HF approach to be described in the next section and as benchmarks. For a more detailed discussion on the existing HF methods, their advantages, and drawbacks, please refer to the study of [9].

Before proceeding, we introduce the following notations and parameters that will facilitate the discussion of the three methods:

We can express a hierarchical time series as , where is a summing matrix of order . For example, we can express the three-level hierarchical time series shown in Figure 1 as:

Accordingly, we can express various hierarchical structures with a unified format as , where is a matrix of order which elements depend on the type of the reconciliation method used, in our case the BU, TD, and COM methods [26].

2.1. Bottom-up

BU is the simplest HF method according to which we forecast the series at the bottom level of the hierarchy and then aggregate these forecasts to obtain forecasts at higher levels. In this case, the matrix can be constructed as , where is a null matrix.

2.2. Top-down

In the TD method, base forecasts are produced at the top level of the hierarchy and then disaggregated to the lower levels with appropriate factors. While there are various ways for computing such factors and disaggregating the top level forecasts, we consider the proportions of the historical averages since it is a widely used alternative that provides reasonable results [8]. These proportions are computed as follows

| (1) |

where represents the average of the historical value of the bottom level series relative to the average value of the total aggregate . We can then construct the vector and matrix .

2.3. Optimal combination

The COM method produces base forecasts for all series across all hierarchical levels and then combines them with a linear model to obtain the reconciled forecasts. Suppose

depicts the -step-ahead reconciled forecasts. Then, the covariance matrix of the errors of these forecasts can be given by

where is the variance-covariance matrix of the -step-ahead base forecast errors [58, 29]. It can be shown that the matrix that minimizes the trace of such that it generates unbiased reconciled forecasts, i.e., , is given by

where is the generalized inverse of .

There are a few different ways to estimate . In this study we consider the shrinkage estimation as it has been empirically shown that it yields the most accurate forecasts in many HF applications [1, 53, 58]. Using the shrinkage method, this matrix can be estimated by . The diagonal target of the shrinkage estimator is and the shrinkage parameter is given by

where is the element of the one-step-ahead in-sample correlation matrix [47].

The COM method was implemented using the MinT function of the hts package for R [25].

3. Conditional hierarchical forecasting

Time series often depict different patterns, such as seasonality, randomness, noise, and auto-correlation [31]. As a result, there is no model that can consistently forecast all types of series more accurately than other models, even relatively simple ones [43, 15]. Similarly, although some models may perform better on a time series dataset of particular characteristics, there is no guarantee that this will always be the case [51]. For example, despite that exponential smoothing [19] typically produces accurate forecasts for seasonal series, it might be outperformed by ML methods when a large number of observations is available [48]. Thus, selecting the most appropriate forecasting model for each series becomes a promising, yet challenging task for improving overall forecasting accuracy [40].

Model selection has been extensively studied in the forecasting literature. Although there is no unique way to determine the most appropriate forecasting model for each series, empirical studies have provided effective strategies for performing this task [15]. From these strategies, the approaches that build on time series features are among the most promising ones given that the latter can effectively represent the behaviour of the series in an abstract form and match it with the relative performance of various forecasting models [46, 39, 57, 43, 31, 2].

Expert systems and rule-based forecasting were two of the early approaches to be suggested for forecasting model selection [11, 36]. [11] considered domain knowledge along with 18 time series features and proposed a framework that consisted of 99 rules to select the most appropriate forecasting model from 4 alternatives. In another study, [5] considered 6 features and 64 rules to select the most accurate forecasting model from 3 alternatives. Similarly, [6] proposed an approach to automatically extract time series features and choose the best forecasting model. [43] measured the impact of 7 time series features plus the length of the forecasting horizon on the accuracy of 14 popular forecasting models, while [31] and [51] linked the performance of standard time series forecasting models with that of various indicative features using data from well-known forecasting competitions. More recently, [40] used 42 time series features to determine the weights for optimally combining 9 different forecasting models, winning the second place in the M4 forecasting competition [38].

Inspired by the work done in the area of forecasting model selection, we posit that HF methods can be similarly selected using time series features and, based on such a selection, improve forecasting accuracy for the case of hierarchical series. In this respect, we proceed by computing various time series features across all hierarchical levels and propose using these features for selecting the HF method that best suites the hierarchy, i.e., produces on average the most accurate forecasts for all the series it consists of [2, 43]. The selection is done by employing a popular ML classification method.

The proposed approach, to be called conditional HF (CHF), is summarised below and presented in the flowchart of Figure 2.

Given that CHF builds on time series features and its accuracy is directly connected with the representativeness of the features used, as well as the capacity of the algorithm employed for selecting the most appropriate HF method, it becomes evident that choosing a set of diverse, yet finite features is a prerequisite for enhancing the performance of the proposed classification approach. There are many features that can be used to describe time series patterns. For example, [18] extracted more than 7,700 features for describing the behaviour of the time series and then summarised them into 22 canonical features, losing just 7% of accuracy [35]. Similarly, [56] and [31] suggested that a relatively small number of features can be used for effectively visualising time series and performing forecasting model selection. Based on the above, we decided to consider 32 features for the CHF algorithm so that the patters of the hierarchical series are effectively captured without exaggerating. These features included entropy, lumpiness, hurst, acffeatures, pacffeatures, nperiods, seasonal-period, trend, spike, linearity, curvature,e-acf1, e-acf10, seasonal-strength, x-acf1, x-acf10, diff1-acf1, diff1-acf10, diff2-acf1, diff2-acf10, seas-acf1, x-pacf5, diff1x-pacf5, diff2x-pacf5, seas-pacf, linearity and non-linearity, arch-test, luctanal-prop-r1, and unitroot-kpss, and were computed using the tsfeatures package for R [23]. For more details about these features, please refer to the studies of [56] and [31].

Note that CHF is flexible in terms of the method that will be employed for performing the classification. That is, users can choose their classification method of choice for identifying the most accurate HF method and reconciling the base forecasts produced for the examined hierarchy. In the preliminary experiment, we considered three methods, namely eXtreme Gradient Boosting (XGB), Support Vector Machines (SVM), and Random Forests (RF). However, since XGB performed best, we decided to exclude the last two from our analysis. This is aligned with the mainstream knowledge in data science [42, 10, 13]. XGB is an implementation of gradient boosted decision trees that is based on ensembling and uses a large number of hyper-parameters for performing the classification. Since XGB results are fairly robust to the chosen values of the hyper-parameters, we set their values manually by considering the literature and users’ experience [53]. More specifically, we set the learning rate (eta) to 0.01, colsample-bytree to 1, min-child weight to 5 , max-depth to 5, sub sample size to 0.7, and the number of iterations to 1000. We trained XGB by setting the objective to multi:softprob and evaluated its performance with mlogloss.

Note that CHF is also flexible in terms of the forecasting models that will be used for producing the base forecasts and the HF methods that will be considered for their reconciliation. For the latter case, in the present study we considered three HF methods (BU, TD, and COM), as described in Section 2. The reasoning is two-fold. First, classification methods tend to perform better when the number of classes is limited and, as a result, the key differences between the classes are easier to identify [22]. Second, we believe that the selected HF methods are diverse enough, each one focusing on different levels of the hierarchy and adopting a significantly different approach for reconciling the base forecasts. Although we could have considered more HF methods of those proposed in the literature, they are mostly variants of the examined three methods (especially the COM method) and are therefore sufficiently covered.

We should also highlight that the rolling origin evaluation of the off-line phase can be adjusted to any desirable set-up that might be more suitable to the user. For example, if computational cost is not an issue, instead of updating the forecast origin by periods at a time, a step of one period could be considered to further increase the size of the set used for training the classification method and facilitate learning. The main motivation for considering an -step-ahead update is that this practice suits the way the retail firms operate when forecasting their sales and making their plans, creating also a rich set on which the ML classification method can be effectively trained, without exaggerating in terms of computational cost. Finally, although we chose to train the classification method so that the average accuracy of CHF is minimized across the entire hierarchy, this objective can be easily adjusted in order for CHF to provide more accurate forecasts for a specific level of interest. This choice depends on the decision-makers and can vary based on their focus and objectives. However, we do believe that our choice to optimize forecasting accuracy across the entire hierarchy, weighting equally all hierarchical level, is realistic when dealing with demand forecasting and supply chain management given that in such settings each level supports very different, yet equally important decisions. A similar weighting scheme was adopted in the latest M competition, M5111https://mofc.unic.ac.cy/m5-competition/, whose objective was to produce the most accurate point forecasts for 42,840 time series that represent the hierarchical unit sales of ten Walmart stores.

4. Data and experimental setup

4.1. Data

Although HF is relevant in many applications, such as energy [52] and tourism [34], it is most commonly found in the retail industry where SKU demand can be grouped based on location and product-related information. Therefore, the dataset used in this study for empirically evaluating the accuracy of the proposed HF approach involves the sales and prices recorded for 55 hierarchies, corresponding to 55 fast-moving consumer goods (FMCG) of a food manufacturing company sold in various locations in Australia. Although the exact labels of the products are unknown to us, the products include breakfast cereals, long-life milk, and other breakfast products.

The hierarchical structure is the same for all 55 products of the dataset and is depicted in Figure 3. The number of series at each hierarchical level is provided in Table 1. As seen, each hierarchy consists of three levels, where the top level (level 0) represents total product sales, the middle level (level 1) the product sales recorded for 2 major retailer companies, and the bottom level (level 2) the way the product sales are disaggregated across 12 distribution centers (DC), 6 per retailer company, located in different states of Australia. Thus, each hierarchy includes 15 time series, each containing 120 weeks of observations, spanning from September 2016 to December 2018.

| Hierarchical level | Number of series |

|---|---|

| Level 0 | 1 |

| Level 1 | 2 |

| Level 2 | 12 |

| Total | 15 |

Figure 4 presents the hierarchical time series of an indicative product of the dataset. We observe that sales may experience spikes at different levels of the hierarchy, i.e., different levels of the supply chain. These spikes correspond to promotional periods and their frequency and size vary significantly for different products. Moreover, different nodes in the hierarchy may experience spikes of different extent. As such, the dataset represents a diverse set of demand patterns which are highly affected under the presence of promotions, i.e., price changes.

4.2. Experimental setup

Considering that the examined dataset involves products which sales are highly affected by promotions, we produce base forecasts for all the series of the 55 hierarchies using a regression model with ARMA errors (Reg-ARMA), where product prices are used as a regressor variable. Note that Reg-ARIMA can effectively capture sales both during promotional and non-promotional periods as price inherently carries the impact of promotions and, therefore, explains sufficiently the corresponding variations in sales. Reg-ARMA model is implemented using the forecast package for R [24].

To evaluate the accuracy of the proposed HF approach both in terms of median and mean approximation [32], we consider two measures, namely the mean absolute scaled error (MASE) and the root mean sum of squared scaled error (RMSSE). The measures are calculated as follows:

| RMSSE |

where and are the observation and the forecast for period , is the sample size (observations used for training the forecasting model), and is the forecasting horizon. Note that both measures are scale-independent, thus allowing us to average the results across series.

Once the base forecasts are produced, we use the BU, TD, and COM methods to reconcile them across the three levels of the hierarchy. These methods are used for benchmarking the proposed HF approach as they have been successfully applied in numerous applications and are considered standards in the area of HF [1, 28, 29]. We also use the CHF algorithm to select which of the three benchmarks is more suitable for forecasting each hierarchy.

In order to fit and evaluate the CHF algorithm, we split the original dataset into a train and test set. More specifically, we used the first 26 weeks of data to initially train the Reg-ARMA model and the following 58 periods to produce 4-step-ahead base forecasts on a rolling origin basis [55]. Each time that the forecast origin was updated, the set used for fitting the Reg-ARMA model was accordingly extended so that the base forecasts produced were appropriately adjusted. Moreover, on each step, the BU, TD, and COM methods were applied to reconcile the base forecasts and identify the most accurate alternative according to MASE. Note that the results were consistent when RMSSE was used as an accuracy measure for training the classifiers, so we report them only for the case of MASE for reasons of brevity. In this respect, a total of 14 accuracy measurements (average accuracy of 4-step-ahead forecasts over 58 weeks) 55 hierarchies 15 series = 11,550 evaluations were recorded. We then summarised the results across the hierarchy and constructed a dataset of 14 evaluations 55 hierarchies = 770 observations that was used for training the XGB classification method. The remaining 36 weeks of data were used as a test set to evaluate the actual accuracy of the proposed approach, again on a rolling origin fashion. Thus, our evaluation is based on a total of 9 accuracy measurements (average accuracy of 4-step-ahead forecasts over 36 weeks) 55 hierarchies 15 series = 7,425 observations. Note that XGB is trained iteratively each time that we move across the window, with the values of the time series features being accordingly updated.

5. Empirical results and discussion

Table 2 displays the forecasting accuracy (MASE and RMSSE) of the three HF methods considered in this study as benchmarks as we all as classes for training the CHF algorithm in the retail dataset presented in Section 4.1. The accuracy is reported both per hierarchical level and on average (arithmetic mean of the three levels), while CHF is implemented using the XGB classifier, as described in section 4.2.

| HF Method | Level 0 | Level 1 | Level 2 | Average |

| MASE | ||||

| BU | 0.502 | 0.854 | 0.856 | 0.832 |

| TD | 0.435 | 0.987 | 0.907 | 0.885 |

| COM | 0.455 | 0.844 | 0.844 | 0.817 |

| CHF | 0.466 | 0.820 | 0.828 | 0.802 |

| RMSSE | ||||

| BU | 0.583 | 1.049 | 1.044 | 1.013 |

| TD | 0.507 | 1.180 | 1.100 | 1.071 |

| COM | 0.531 | 1.039 | 1.035 | 1.001 |

| CHF | 0.534 | 1.014 | 1.006 | 0.975 |

The results indicate that, on average, CHF is the best HF method according to both accuracy measures used. More specifically, CHF provides about 4%, 9%, and 2% more accurate forecasts than BU, TD, and COM, respectively, indicating that the XGB method has effectively managed to classify the hierarchies based on the feautures that their series display.

The improvements are similar if not better for the middle and bottom levels of the hierarchy. However, CHF fails to outperform TD and COM for the top hierarchical level, being about 6% and 2% less accurate, despite being still 8% better than the BU method. This finding confirms our initial claim that, depending on the hierarchical level of interest, different HF methods may be more suitable. In this study, we focused on the average accuracy of the hierarchical levels and optimised CHF with such an objective. However, as described in section 3, different objectives could be considered in order to explicitly optimise the top, middle or bottom level of the hierarchy.

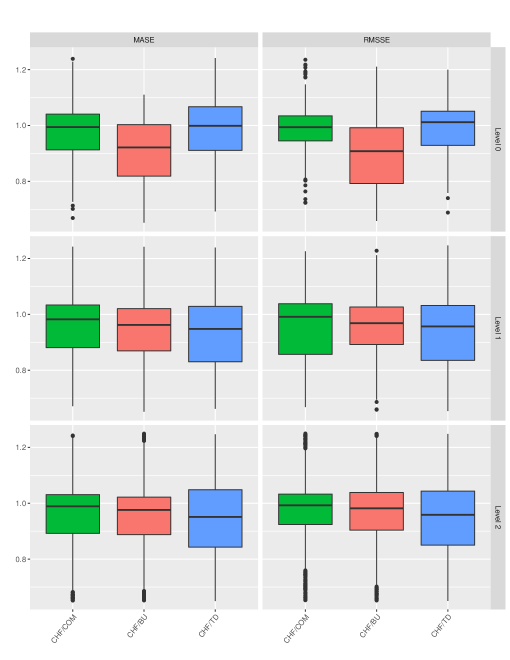

In order to better evaluate the performance of the proposed approach, we proceed by investigating the distribution of the error ratios reported between CHF and the three benchmark methods examined in our study across all the 55 hierarchies of the dataset. The results, presented per hierarchical level and accuracy measure, are visualised in Figure 5 where box-plots are used to display the minimum, 1st quantile, median, 3rd quantile, and maximum values of the ratios, as well as any possible outliers. Values lower than unity indicate that CHF provides more accurate forecasts and vice versa. As observed, in most of the cases, CHF outperforms the rest of the HF methods, having a median ratio value lower than unity. The only exception is when forecasting level 0 and using RMSSE for measuring forecasting accuracy, where the TD method tends to provide superior forecasts. Thus, we conclude that CHF does not only provide the most accurate forecasts on average across all the 55 hierarchies, but also the most accurate forecasts across the individual ones.

To validate this finding, we also examine the significance of the differences reported between the various HF methods using the multiple comparisons with the best (MCB) test, as proposed by [33]. According to MCB, the methods are first ranked based on the accuracy they display for each series of the hierarchy and then their average ranks are compared considering a critical difference, , as follows:

| (2) |

where is the number of the time series, is the number of the examined HF methods, and is the quantile of the confidence interval. In our case, where is set equal to 0.05 (95% confidence), takes a value of 3.219. Accordingly, is equal to 6 and is equal to 7,425.

The results of the MCB test are presented in Figure 6. If the intervals of two methods do not overlap, this indicates a statistically different performance. Thus, methods that do not overlap with the grey zone of Figure 6 are significantly worse than the best, and vice versa. As seen, our results indicate that CHF provides significantly better forecasts than the rest of the HF methods, both in terms of MASE and RMSSE. Moreover, we find that CHF is followed by COM and then by TD and BU. Interestingly, the performance of TD is not significantly different than the one of BU according to RMSSE or MASE. In this regard, we conclude that CHF performs better than the state-of-the-art HF methods found in the literature, being also significantly more accurate than the standards used for HF, such as BU and TD. As such, CHF can be effectively used for selecting the most appropriate HF method from a set of alternatives and improving the overall forecasting accuracy of various hierarchies.

We also investigate the performance of the XGB classifier in terms of precision, recall, and score. The precision metric measures the number of correct predictions in the total number of predictions made for each class. Recall (also known as sensitivity) informs us about the number of times that the classifier has successfully chosen the best HF method for each class. Finally, the score is the harmonic mean of precision and recall, computed by , and is used to combine the information provided by the other two metrics [49].

Before presenting the results, we should first note that the TD, BU, and COM methods have been identified as the most accurate HF method in 1,860, 2,745, and 2,820 cases, respectively. This indicates that the dataset used for training the classification method was equally populated, displaying a uniform probability distribution. Having a balanced training sample is important for our experiment since it facilitates the training of the ML algorithm (enough observations from each class are available and biases can be effectively mitigated) and provides more opportunities for accuracy improvements (if no HF method is dominant, selecting between different HF methods becomes promising).

The performance of the XGB classification method is presented in Table 3. Observe that, according to the precision metric, XGB manages to select quite accurately the BU and COM methods but finds it difficult to make appropriate selections when the TD method is the most preferable one. This indicates that, although XGB identifies the conditions under which the BU or COM methods are more preferable than the TD method, the opposite is not true.

| ML classifier | Class | Precision | Recall | |

|---|---|---|---|---|

| BU | 0.55 | 0.46 | 0.50 | |

| XGB | TD | 0.22 | 0.32 | 0.26 |

| COM | 0.42 | 0.38 | 0.40 |

As a final step in our analysis we investigate the significance of the time series features used by the XGB classifier, i.e., the number of times that each feature was considered by the method for making a prediction. Figure 7 shows the top-5 selected features of the classifier. As seen, the non-linearity at level 2 is the most frequently used feature and, therefore, the most critical variable for selecting a HF method. This is because non-linearity is one of the strongest features in our dataset, with promotions frequently affecting the sales of the products strongly and changing the volatility of their demand not only over promotional periods but over a number of periods [2]. Thus, the non-linearity at level 1 and level 0 are also among the top-selected features. Maximum variance shift at level 0 is another feature that is frequently selected. This may attribute to the sudden changes and spikes in sales data set caused by promotion. Finally, e-acf-a.L1, which contains the first autocorrelation coefficient of the error terms of series at level 1, is also among top selected features. One explanation is because sales are affected by promotions and have a high level of entropy.

Note that when conducting this experiment we considered another training set-up where for the classifiers, apart from time series features, we also provided information about the correlation of the series, both across levels and within each level separately. The results were similar to those reported in Table 2 and therefore we decided to exclude those features from our models for reasons of simplicity. However, we do believe that features related with the structure and the relationships of the series could help further improving the accuracy of CHF and leave this for future research.

Another extension of the proposed approach that could be also examined in a future study, is that it focuses on selecting the most appropriate hierarchical forecasting method per hierarchy. However, numerous empirical studies have shown that combining forecasts from multiple forecasting methods can improve forecasting accuracy [37]. Thus, replacing classifiers with other methods that would combine various HF methods using appropriate weights becomes a promising alternative to CHF. Simple, equal-weighted combinations of standard HF methods have already been proven useful under some settings [4], while feature-based forecast model averaging has demonstrated its potential to generate robust and accurate forecasts [40].

6. Conclusion

This paper introduced conditional hierarchical forecasting, a dynamic approach for effectively selecting the most accurate method for reconciling incoherent hierarchical forecasts. Inspired by the work done in the area of forecasting model selection and the advances reported in the field of Machine Learning, the proposed approach computes various features for the time series of the examined hierarchy and relates their values to the forecasting accuracy achieved by different hierarchical forecasting methods, like bottom-up, top-down, and combination methods, using an appropriate classification method. Based on the lessons learned, and depending on the characteristics of time series in the hierarchy, the most suitable hierarchical forecasting method can be chosen and used to enhance overall forecasting performance.

We exploited various time series features at different levels of the hierarchy that represent their behavior and trained an extreme gradient boosting classification model to choose the most appropriate type of hierarchical forecasting method for a hierarchical time series with the selected features. The accuracy of the proposed approach was evaluated using a large dataset coming from the retail industry and compared to that of three popular hierarchical forecasting methods. Our results indicate that conditional hierarchical forecasting can produce significantly more accurate forecasts than the benchmarks considered, especially at lower hierarchical levels. Thus, we suggest that, when dealing with hierarchical forecasting applications, selection should be expanded from forecasting model to reconciliation methods as well.

References

- [1] Mahdi Abolghasemi, Rob J Hyndman, Garth Tarr and Christoph Bergmeir “Machine learning applications in time series hierarchical forecasting” In arXiv preprint arXiv:1912.00370, 2019

- [2] Mahdi Abolghasemi, Eric Beh, Garth Tarr and Richard Gerlach “Demand forecasting in supply chain: The impact of demand volatility in the presence of promotion” In Computers & Industrial Engineering Elsevier, 2020, pp. 106380

- [3] Mahdi Abolghasemi, Jason Hurley, Ali Eshragh and Behnam Fahimnia “Demand forecasting in the presence of systematic events: Cases in capturing sales promotions” In International Journal of Production Economics Elsevier, 2020, pp. 107892

- [4] Wessam Abouarghoub, Nikos K Nomikos and Fotios Petropoulos “On reconciling macro and micro energy transport forecasts for strategic decision making in the tanker industry” In Transportation Research Part E: Logistics and Transportation Review 113, 2018, pp. 225–238

- [5] Monica Adya, J Scott Armstrong, Fred Collopy and Miles Kennedy “An application of rule-based forecasting to a situation lacking domain knowledge” In International Journal of Forecasting 16.4 Elsevier, 2000, pp. 477–484

- [6] Monica Adya, Fred Collopy, J Scott Armstrong and Miles Kennedy “Automatic identification of time series features for rule-based forecasting” In International Journal of Forecasting 17.2 Elsevier, 2001, pp. 143–157

- [7] Özden Gür Ali, Serpil Sayın, Tom van Woensel and Jan Fransoo “SKU demand forecasting in the presence of promotions” In Expert Systems with Applications 36.10, 2009, pp. 12340–12348 DOI: https://doi.org/10.1016/j.eswa.2009.04.052

- [8] George Athanasopoulos, Roman A Ahmed and Rob J Hyndman “Hierarchical forecasts for Australian domestic tourism” In International Journal of Forecasting 25.1 Elsevier, 2009, pp. 146–166

- [9] George Athanasopoulos et al. “Hierarchical Forecasting” In Macroeconomic Forecasting in the Era of Big Data: Theory and Practice Springer International Publishing, 2020, pp. 689–719

- [10] Sotirios P Chatzis et al. “Forecasting stock market crisis events using deep and statistical machine learning techniques” In Expert Systems with Applications 112 Elsevier, 2018, pp. 353–371

- [11] Fred Collopy and J Scott Armstrong “Rule-based forecasting: Development and validation of an expert systems approach to combining time series extrapolations” In Management Science 38.10 INFORMS, 1992, pp. 1394–1414

- [12] Byron J Dangerfield and John S Morris “Top-down or bottom-up: Aggregate versus disaggregate extrapolations” In International Journal of Forecasting 8.2 Elsevier, 1992, pp. 233–241

- [13] Halil Demolli, Ahmet Sakir Dokuz, Alper Ecemis and Murat Gokcek “Wind power forecasting based on daily wind speed data using machine learning algorithms” In Energy Conversion and Management 198 Elsevier, 2019, pp. 111823

- [14] Robert Fildes “Beyond forecasting competitions” In International Journal of Forecasting 17.4, 2001, pp. 556–560 DOI: https://doi.org/10.1016/S0169-2070(01)00119-4

- [15] Robert Fildes and Fotios Petropoulos “Simple versus complex selection rules for forecasting many time series” Special Issue on Simple Versus Complex Forecasting In Journal of Business Research 68.8, 2015, pp. 1692–1701 DOI: https://doi.org/10.1016/j.jbusres.2015.03.028

- [16] Gene Fliedner “An investigation of aggregate variable time series forecast strategies with specific subaggregate time series statistical correlation” In Computers & Operations Research 26.10-11 Elsevier, 1999, pp. 1133–1149

- [17] Gene Fliedner “Hierarchical forecasting: issues and use guidelines” In Industrial Management & Data Systems 101.1 MCB UP Ltd, 2001, pp. 5–12

- [18] Ben D Fulcher, Max A Little and Nick S Jones “Highly comparative time-series analysis: The empirical structure of time series and their methods” In Journal of the Royal Society Interface 10.83 The Royal Society, 2013, pp. 20130048

- [19] Everette S Gardner Jr “Exponential smoothing: The state of the art” In Journal of forecasting 4.1 Wiley Online Library, 1985, pp. 1–28

- [20] Adel A Ghobbar and Chris H Friend “Evaluation of forecasting methods for intermittent parts demand in the field of aviation: a predictive model” In Computers & Operations Research 30.14 Elsevier, 2003, pp. 2097–2114

- [21] Charles W Gross and Jeffrey E Sohl “Disaggregation methods to expedite product line forecasting” In Journal of Forecasting 9.3 Wiley Online Library, 1990, pp. 233–254

- [22] Trevor Hastie and Robert Tibshirani “Classification by pairwise coupling” In Advances in neural information processing systems, 1998, pp. 507–513

- [23] Rob Hyndman et al. “tsfeatures: Time Series Feature Extraction” R package version 1.0.1, 2019 URL: https://pkg.robjhyndman.com/tsfeatures/

- [24] Rob Hyndman et al. “forecast: Forecasting functions for time series and linear models” R package version 8.12, 2020 URL: http://pkg.robjhyndman.com/forecast

- [25] Rob Hyndman, Alan Lee, Earo Wang and Shanika Wickramasuriya “hts: Hierarchical and Grouped Time Series” R package version 6.0.0, 2020 URL: https://CRAN.R-project.org/package=hts

- [26] Rob J Hyndman and George Athanasopoulos “Forecasting: principles and practice” Melbourne, Australia: OTexts, 2018 URL: http://OTexts.com/fpp2

- [27] Rob J Hyndman, Anne B Koehler, Ralph D Snyder and Simone Grose “A state space framework for automatic forecasting using exponential smoothing methods” In International Journal of Forecasting 18.3, 2002, pp. 439–454 DOI: https://doi.org/10.1016/S0169-2070(01)00110-8

- [28] Rob J Hyndman, Roman A Ahmed, George Athanasopoulos and Han Lin Shang “Optimal combination forecasts for hierarchical time series” In Computational Statistics & Data Analysis 55.9 Elsevier, 2011, pp. 2579–2589

- [29] Rob J Hyndman, Alan J Lee and Earo Wang “Fast computation of reconciled forecasts for hierarchical and grouped time series” In Computational Statistics & Data Analysis 97 Elsevier, 2016, pp. 16–32

- [30] Jooyoung Jeon, Anastasios Panagiotelis and Fotios Petropoulos “Probabilistic forecast reconciliation with applications to wind power and electric load” In European Journal of Operational Research, 2019

- [31] Yanfei Kang, Rob J. Hyndman and Kate Smith-Miles “Visualising forecasting algorithm performance using time series instance spaces” In International Journal of Forecasting 33.2, 2017, pp. 345–358 DOI: https://doi.org/10.1016/j.ijforecast.2016.09.004

- [32] Stephan Kolassa “Evaluating predictive count data distributions in retail sales forecasting” In International Journal of Forecasting 32.3, 2016, pp. 788–803

- [33] Alex J Koning, Philip Hans Franses, Michele Hibon and Herman O Stekler “The M3 competition: Statistical tests of the results” In International Journal of Forecasting 21.3 Elsevier, 2005, pp. 397–409

- [34] Nikolaos Kourentzes and George Athanasopoulos “Cross-temporal coherent forecasts for Australian tourism” In Annals of Tourism Research 75, 2019, pp. 393–409

- [35] Carl H Lubba et al. “catch22: CAnonical Time-series CHaracteristics” In Data Mining and Knowledge Discovery 33.6 Springer, 2019, pp. 1821–1852

- [36] Vijay Mahajan and Yoram Wind “New product forecasting models: Directions for research and implementation” In International Journal of Forecasting 4.3 Elsevier, 1988, pp. 341–358

- [37] Spyros Makridakis, Rob J. Hyndman and Fotios Petropoulos “Forecasting in social settings: The state of the art” In International Journal of Forecasting 36.1, 2020, pp. 15 –28 DOI: https://doi.org/10.1016/j.ijforecast.2019.05.011

- [38] Spyros Makridakis, Evangelos Spiliotis and Vassilios Assimakopoulos “The M4 Competition: 100,000 time series and 61 forecasting methods” In International Journal of Forecasting 36.1 Elsevier, 2020, pp. 54–74

- [39] Nigel Meade “Evidence for the selection of forecasting methods” In Journal of forecasting 19.6 Wiley Online Library, 2000, pp. 515–535

- [40] Pablo Montero-Manso, George Athanasopoulos, Rob J. Hyndman and Thiyanga S. Talagala “FFORMA: Feature-based forecast model averaging” In International Journal of Forecasting 36.1, 2020, pp. 86–92 DOI: https://doi.org/10.1016/j.ijforecast.2019.02.011

- [41] Zlatana D Nenova and Jerrold H May “Determining an optimal hierarchical forecasting model based on the characteristics of the data set” In Journal of Operations Management 44 Elsevier, 2016, pp. 62–68

- [42] Didrik Nielsen “Tree boosting with xgboost-why does xgboost win ”every” machine learning competition?”, 2016

- [43] Fotios Petropoulos, Spyros Makridakis, Vassilios Assimakopoulos and Konstantinos Nikolopoulos “’Horses for Courses’ in demand forecasting” In European Journal of Operational Research 237.1, 2014, pp. 152–163

- [44] Fotios Petropoulos, Rob J. Hyndman and Christoph Bergmeir “Exploring the sources of uncertainty: Why does bagging for time series forecasting work?” In European Journal of Operational Research 268.2, 2018, pp. 545–554 DOI: https://doi.org/10.1016/j.ejor.2018.01.045

- [45] Alireza Pooya, Morteza Pakdaman and Lotfi Tadj “Exact and approximate solution for optimal inventory control of two-stock with reworking and forecasting of demand” In Operational Research 19.2 Springer, 2019, pp. 333–346

- [46] DJ Reid “A comparison of forecasting techniques on economic time series” In Forecasting in Action. Operational Research Society and the Society for Long Range Planning, 1972

- [47] Juliane Schäfer and Korbinian Strimmer “A shrinkage approach to large-scale covariance matrix estimation and implications for functional genomics” In Statistical applications in genetics and molecular biology 4.1 De Gruyter, 2005

- [48] Slawek Smyl “A hybrid method of exponential smoothing and recurrent neural networks for time series forecasting” In International Journal of Forecasting 36.1, 2020, pp. 75–85 DOI: https://doi.org/10.1016/j.ijforecast.2019.03.017

- [49] Marina Sokolova and Guy Lapalme “A systematic analysis of performance measures for classification tasks” In Information processing & management 45.4 Elsevier, 2009, pp. 427–437

- [50] Evangelos Spiliotis, Fotios Petropoulos and Vassilios Assimakopoulos “Improving the forecasting performance of temporal hierarchies” In PloS ONE 14.10, 2019, pp. e0223422

- [51] Evangelos Spiliotis, Andreas Kouloumos, Vassilios Assimakopoulos and Spyros Makridakis “Are forecasting competitions data representative of the reality?” In International Journal of Forecasting 36.1, 2020, pp. 37–53 DOI: https://doi.org/10.1016/j.ijforecast.2018.12.007

- [52] Evangelos Spiliotis, Fotios Petropoulos, Nikolaos Kourentzes and Vassilios Assimakopoulos “Cross-temporal aggregation: Improving the forecast accuracy of hierarchical electricity consumption” In Applied Energy 261, 2020, pp. 114339

- [53] Evangelos Spiliotis et al. “Hierarchical forecast reconciliation with machine learning” In arXiv preprint arXiv:2006.02043, 2020

- [54] Aris A. Syntetos, Konstantinos Nikolopoulos and John E. Boylan “Judging the judges through accuracy-implication metrics: The case of inventory forecasting” In International Journal of Forecasting 26.1, 2010, pp. 134–143

- [55] Leonard J. Tashman “Out-of-sample tests of forecasting accuracy: an analysis and review” In International Journal of Forecasting 16.4, 2000, pp. 437–450 DOI: https://doi.org/10.1016/S0169-2070(00)00065-0

- [56] Xiaozhe Wang, Kate Smith and Rob Hyndman “Characteristic-based clustering for time series data” In Data Mining and Knowledge Discovery 13.3 Springer, 2006, pp. 335–364

- [57] Xiaozhe Wang, Kate Smith-Miles and Rob Hyndman “Rule induction for forecasting method selection: Meta-learning the characteristics of univariate time series” In Neurocomputing 72.10-12 Elsevier, 2009, pp. 2581–2594

- [58] Shanika L Wickramasuriya, George Athanasopoulos and Rob J Hyndman “Optimal forecast reconciliation for hierarchical and grouped time series through trace minimization” In J American Statistical Association 114.526, 2019, pp. 804–819

- [59] Wikipedia “All models are wrong— Wikipedia, The Free Encyclopedia” [Online; accessed 12-October-2020], 2004 URL: \url{https://en.wikipedia.org/wiki/All_models_are_wrong}