Model selection for estimation of causal parameters

Abstract

A popular technique for selecting and tuning machine learning estimators is cross-validation. Cross-validation evaluates overall model fit, usually in terms of predictive accuracy. In causal inference, the optimal choice of estimator depends not only on the fitted models, but also on assumptions the statistician is willing to make. In this case, the performance of different (potentially biased) estimators cannot be evaluated by checking overall model fit.

We propose a model selection procedure that estimates the squared -deviation of a finite-dimensional estimator from its target. The procedure relies on knowing an asymptotically unbiased ”benchmark estimator” of the parameter of interest. Under regularity conditions, we investigate bias and variance of the proposed criterion compared to competing procedures and derive a finite-sample bound for the excess risk compared to an oracle procedure. The resulting estimator is discontinuous and does not have a Gaussian limit distribution. Thus, standard asymptotic expansions do not apply. We derive asymptotically valid confidence intervals that take into account the model selection step.

The performance of the approach for estimation and inference for average treatment effects is evaluated on simulated data sets, including experimental data, instrumental variables settings and observational data with selection on observables.

1 Introduction

Model selection is a fundamental task in statistical practice. Usually, the aim is to find a model that optimizes overall model fit. If the loss function is quadratic, the total error can be decomposed into the error due to variance and the error due to bias. A popular technique to balance the bias-variance trade-off in the context of prediction is cross-validation. However, the performance of common estimators in causal inference does not only depend on prediction performance, but, as we will discuss below, also on assumptions the statistician is willing to make. Thus, methods cannot be reliably compared by checking overall model fit. In the literature, there exist some solutions for parameter-specific model selection, but we currently lack a reliable general-purpose tool. In the context of causal inference, a reliable parameter-specific model selection tool could enable the following applications.

Moving the goalpost.

Estimating average treatment effects from observational data can be unreliable, if conditional treatment assignment probabilities are close to zero or one. To address this issue, some researchers move the goalpost by switching to causal contrasts that can be estimated more reliably, see LaLonde (1986), Heckman et al. (1998), Crump et al. (2006) and references therein. For example, instead of estimating the average treatment effect (ATE), a practitioner might switch to estimating the average treatment effect on the treated (ATT), or other causal contrasts such as the overlap effect. This is often done in an ad-hoc fashion. Using a model selection tool in this context could allow to trade off bias and variance when switching from estimating the ATE to estimators of other causal contrasts.

Data fusion.

Combining evidence across data sets in the context of causal inference has recently attracted increasing interest (Peters et al., 2016; Bareinboim and Pearl, 2016; Athey et al., 2020). However, data quality often varies from data set to data set. In this case, using all data sets can lead to untrustworthy, biased estimators. A reliable model selection tool could make it possible to distinguish which data sets and estimators are useful for solving the estimation problem at hand, and which are not.

Choosing between estimators that are optimal in different models.

If all confounders are observed, semi-parametrically efficient estimators such as augmented inverse probability weighting are attractive as they do not rely on parametric specifications (Robins et al., 1994). On the other hand, if the model is well-specified, parametric estimators such as ordinary least-squares can achieve higher efficiency and the fitted model may be easier to interpret. A reliable model selection procedure would allow practitioners to automatically select interpretable models if the parametric model is a good approximation, and semi-parametric estimators if the parametric model is far from the underlying data-generating process.

Leveraging conditional independencies.

Researchers are often uncomfortable with using statistical procedures that only work under strong assumptions. Using such methods over other procedures may introduce some bias if the assumptions are violated, but has the potential to reduce variance. For example, conditional independence assumptions can be leveraged to improve precision of treatment effect estimators (Athey et al., 2019; Guo and Perković, 2020). A model selection tool as described above would allow to systematically trade off bias and variance when switching between estimation procedures that are optimal under different graphical structures. Doing so can potentially improve precision in scenarios in which researchers are not comfortable with making strong assumptions.

1.1 Related work

Model selection has a long history in statistics and machine learning. For optimizing loss-based estimators, the most commonly used methods include cross-validation, the Akaike information criterion, and the Bayesian information criterion (Akaike, 1974; Schwarz, 1978; Friedman et al., 2001; Arlot and Celisse, 2010).

The focused information criterion is a model selection criterion which, for a given focus parameter, estimates the mean-squared error of submodels (Claeskens and Hjort, 2003, 2008). It relies on knowing an asymptotically unbiased estimator of the parameter of interest. Its theoretical justification is given in a local misspecification framework.

More recently, Cui and Tchetgen-Tchetgen (2019) introduce a model selection tool for finite-dimensional functionals in a semiparametric model if a doubly robust estimation function is available. It is based on a pseudo-risk criterion that has a robustness property if one of the estimators is biased.

For the task of model selection when estimating heterogeneous treatment effects, several methods have been developed (Kapelner et al., 2014; Rolling and Yang, 2014; Athey and Imbens, 2016; Nie and Wager, 2017; Zhao et al., 2017; Powers et al., 2018). Most of the methodologies are specific to the considered model class. A comparison of this line of work for individual treatment effects can be found in Schuler et al. (2018).

Van der Laan and Robins (2003) propose a loss-based approach for parameter-specific model selection. In this work, the authors recommend minimizing an empirical estimate of the overall risk , where , are candidate estimators and is an efficient estimator of the nuisance parameter, computed on the training data set. Our approach is more generic in the sense that we do not assume that parameter of interest minimizes a known loss function.

Closest to our work is the sample-splitting criterion developed by Brookhart and Van Der Laan (2006). Roughly speaking, the data is split into a training and a test data set. Then, estimators are computed on the training and the test data set, and the squared deviation of estimators is aggregated across several splits. The criterion developed by Brookhart and Van Der Laan (2006) can be seen as a form of Monte Carlo cross-validation. In the following, we discuss a variant of this approach that splits the data into folds and thus mimics -fold cross-validation procedures, which are popular in practice. The data is randomly split into disjoint roughly equally-sized folds . Define . Assuming that the data are i.i.d., and are independent for each . Let be an unbiased estimator of the parameter of interest . If several unbiased estimators are available, aggregation procedures such as inverse variance weighting can be used in a pre-processing step to obtain . Let be candidate estimators, . Then, we can compute the risk criterion

| (1) |

Using independence of and ,

As is constant in , the criterion in equation (1) can be used to select an estimator with low mean-squared error for estimating among . The criterion in equation (1) is attractive as it is simple and widely applicable. We will compare the proposed model selection criterion to the criterion in equation (1), both from a theoretical perspective and in simulations.

1.2 Our contribution

In this paper, we work towards making parameter-specific model selection more reliable. We derive a model selection criterion that estimates the squared -deviation of an estimator from its target. We show that the selected model has equal or lower variance than the baseline estimator asymptotically. Compared to the model selection procedure (1), we show that the proposed criterion has equal or lower variance asymptotically. The proposed criterion is flexible in the sense that it can be used for any low-dimensional estimator in both parametric and semi-parametric settings. Theoretical justification of the method is given under the assumption of asymptotic linearity.

Even if the candidate estimators are asymptotically linear, the model selection procedure is discontinuous and will not result in a regular estimator, even for . Thus, the final estimator does not have a Gaussian limit distribution. We derive asymptotically valid confidence intervals for the resulting estimator that takes into account the model selection step.

In previous work, the goal of model selection for estimation is usually to select a nuisance parameter model from a set of candidate models, but the identification strategy is held fixed across models (Van der Laan and Robins, 2003; Brookhart and Van Der Laan, 2006; Cui and Tchetgen-Tchetgen, 2019). In this paper, the goal is to select among different estimands, or identification strategies. Mathematically, this correspond to selecting among different functionals of the underlying data generating distribution. In some situations, this results in dramatic improvements in the mean-squared error. However, there is no free lunch. Compared to the baseline procedure, for fixed , model selection can lead to increased risk in parts of the parameter space. We provide a finite-sample bound that reveals that the excess risk due to model selection becomes negligible as the dimension of the target parameter grows.

In simulations, the proposed criterion exhibits reliable performance in a variety of scenarios, including experiments, instrumental variables settings and data with selection on observables. The code can be found at github.com/rothenhaeusler/tms.

1.2.1 Outline

2 Targeted model selection

This section consists of two parts. We briefly discuss the setting in Section 2.1. Then, we introduce the method in Section 2.2 and discuss basic properties.

2.1 Setting and notation

We observe data , , where the are independently drawn from some unknown distribution . Suppose we have access to estimators , , of some unknown parameter . In the following, to simplify notation, we will write instead of . We assume that the baseline estimator is asymptotically unbiased for , i.e. that . In practice, the data scientist may know several estimators that are asymptotically unbiased for the parameter of interest. In this case, one can use aggregation procedures such as inverse variance weighting to construct an optimally weighted aggregated estimator .

In addition, the data scientist may have access to estimators for which the data scientist is not sure whether they are approximately unbiased for the effect of interest. The goal is to select among the set of estimators, minimizing the mean-squared error with respect to the target of interest . We assume that for some unknown and that converges to a non-degenerate random variable. We write for the asymptotic standard deviation of . Similarly, we assume that converges to a non-degenerate random variable for and write for the asymptotic standard deviation of .

2.2 The method

We aim to find an estimator that minimizes

| (2) |

Here and in the following, we suppress the dependence of and on . As bias and variance of are unknown, the function is unknown and one has to minimize a proxy of the risk instead. We propose to estimate in equation (2) via

| (3) |

where is an estimator of the asymptotic standard deviation of and is an estimator of the asymptotic standard deviation of . If the estimators are asymptotically linear (i.e. in some semi-parametric or low-dimensional parametric settings), consistent estimators and are usually available via plug-in estimators of the variance of the influence function (Van der Vaart, 2000; Tsiatis, 2007). An example will be discussed below. We propose to choose a final estimate by solving

| (4) |

Let us consider a linear regression example. This example was mainly chosen for expository simplicity; the main motivating examples for this method are drawn from causal inference. The causal inference examples need more discussion and will be explained in detail in Section 4.

Example 1 (Model selection for parameter estimation).

Usually, when conducting model selection in the context of prediction, the goal is to find a model that can be estimated well and is a good approximation of some complex model of interest. However, if the purpose is parameter estimation, fitting complex models can reduce variance while potentially introducing bias. Such settings appear in causal inference and will be further discussed in Section 4. Here, we consider the task where the goal is to fit a regression with just one covariate; but there are additional covariates at our disposal that can be used to reduce variance, while potentially introducing some bias for the parameter of interest. Let , where are i.i.d. and the are noise terms that are uncorrelated of the . Furthermore, for simplicity we assume that , and are centered. We are interested in the parameter and consider the baseline estimator

Let us assume that we have access to observations from some additional covariate. One may consider the estimator

Let denote a generic . If is correlated with and only weakly correlated with , this estimator may reduce asymptotic variance compared to since intuitively speaking, adjusting for reduces unexplained variation in the residuals. On the other hand if is strongly correlated with , may converge to a different parameter than . Under regularity conditions (Van der Vaart, 2000, Section 5), is asymptotically linear and unbiased for , i.e.

Similarly, under regularity conditions,

where and where denotes the -th unit vector. Thus,

These quantities can be consistently estimated via plug-in estimators in standard settings. For example, and can be consistently estimated via the sandwich estimator under regularity assumptions (Huber, 1967).

We will compare the risk proxy in equation (3) to sample-splitting based criteria in Section 3. The method is evaluated on simulated data sets in Section 4.

2.2.1 Improving precision

The risk criterion can be decomposed into several parts, i.e. , where

and

As the naming indicates, the first term can be interpreted as an estimate of the squared bias , whereas the second term is an estimate of the variance of . Since we know that squared bias terms are non-negative this motivates defining the following modified risk criterion:

| (5) |

Then the final estimator is chosen such that minimizes equation (5). We take the positive part of the sum (instead of the sum of positive parts) as this allows random errors to cancel out for large . This will be important for the theory developed in Section 3.6. If there are ties, we select as the one that minimizes among the that satisfy . The criterion is not asymptotially unbiased for , but has some favorable statistical properties that we will discuss in the following section.

3 Theory

In this section we discuss the theoretical underpinnings of the method introduced in Section 2. First, we show that the criterion is asymptotically unbiased for estimating the mean-squared error . Secondly, we compare the criterion to cross-validation in terms of asymptotic bias and variance. Then, we discuss the asymptotic risk of the resulting estimator. We derive asymptotically valid confidence intervals for the parameter of interest that takes into account the model selection step. Finally, we present a finite-sample bound that shows that if the dimension of the target parameter is large, the excess risk due to model selection becomes negligible.

3.1 Assumptions

We make two major assumptions, in addition to the assumptions outlined in Section 2.1. The first major assumption is a slightly stronger version of asymptotic linearity. Asymptotic linearity is an assumption that is commonly made to justify asymptotic normality of an estimator (Van der Vaart, 2000; Tsiatis, 2007). As our goal is to estimate the mean-squared error of an estimator, we use a slightly stronger version that guarantees convergence of second moments. The second major assumption is that the variance estimates are consistent.

Assumption 1.

We make two major assumptions.

-

1.

Let , be estimators such that

where are centered and have finite nonzero second moments, and . To avoid trivial special cases, in addition we assume that the covariance matrix of is positive definite.

-

2.

The estimators of variance are consistent, that means

Let us compare the first part of the assumption to asymptotic linearity. Asymptotic linearity assumes that while we assume that . Thus, our assumption is stronger than asymptotic linearity. Let us now turn to our theoretical results.

3.2 Asymptotic unbiasedness

Our first result shows that the proposed criterion is asymptotically unbiased for the mean-squared error of . The convergence rate depends on whether is asymptotically biased for estimating . If the estimator is unbiased, the convergence rate is faster. The proof of the following result can be found in the supplement.

Theorem 1 (Asymptotic unbiasedness of ).

Let Assumption 1 hold.

-

1.

If ,

converges weakly to a random variable with mean zero.

-

2.

If ,

converges weakly to a random variable with mean zero.

This result shows that the estimator is asymptotically unbiased, which is important for the theoretical justification of the approach. The major strength of the proposed approach will become apparant in the next section, where we compare asymptotic bias and variance to the cross-validation criterion (1).

3.3 Comparison to cross-validation

In this section, we will show that the proposed criterion has asymptotically lower variance than the cross-validation criterion (1) if . Furthermore, we show that cross-validation is generally biased for estimating the mean-squared error if . Let us first discuss asymptotic bias of the naive approach. The short proof of this result can be found in the supplement.

Theorem 2 (Asymptotic biasedness of ).

Let Assumption 1 hold and fix .

-

1.

If ,

converges weakly to a random variable with mean zero.

-

2.

If ,

converges weakly to a random variable with mean zero.

Comparing this result with Theorem 1, we see a major difference how the methods behave when . The proposed criterion is asymptotically unbiased for the mean-squared error, while cross-validation is not (even modulo additive constants). The cross-validation approach computes the estimator on randomly chosen subsets of the data. Thus, intuitively, this approach overestimates the asymptotic variance of unbiased estimators compared to the mean-squared error of biased estimators. In practice, this means that the approach tends to choose biased estimators with low variance over unbiased estimators with slighly higher variance. This effect can also be observed in Section 4.

Now, let us turn to the asymptotic variance of the model selection criteria. The proof of the following result can be found in the supplement.

Theorem 3 (Asymptotic variance of model selection criteria).

Let Assumption 1 hold and fix .

-

1.

If , the asymptotic variance of is strictly lower than the asymptotic variance of ,

-

2.

If , the asymptotic variance of is equal to the asymptotic variance of .

Roughly speaking, this theorem shows that the proposed criterion has equal or lower asymptotic variance than the cross-validation criterion . This means that risk estimates based on the cross-validation criterion are harder to interpret than the risk estimates of the proposed criterion, since their variation will be larger. The difference in variance is particularly large if the splits are imbalanced, i.e. if the test data sets have much smaller or much larger sample size than the training data sets. Intuitively, if the validation data sets have small sample size, validation becomes unstable. However, if the validation data set is large, the estimator on the training data sets becomes unstable. This tradeoff can be avoided by estimating bias and variance separately, as done in the proposed approach.

3.4 Asymptotic risk

Here and in the following, we focus on the case where the number of models is small and fixed and . First, we investigate the asymptotic behaviour of the proposed procedure in the case where the number of models is fixed and . The proof of the following result can be found in the supplement.

Corollary 1 (Asymptotic risk of selected model).

In words, for , the proposed method selects models with lower or equal risk than the baseline estimator . Interestingly, an analogous result does not hold for the cross-validation procedure (1). In Section 4 we will see that even for relatively large , the selected model by cross-validation may have risk that is significantly larger than the risk of the baseline procedure.

3.5 Confidence intervals

Deriving confidence intervals that are valid in conjuction with a model selection step is a challenging topic and has attracted substantial interest in recent years, see for example Berk et al. (2013); Taylor and Tibshirani (2015). Generally speaking, statistical inference after a model selection step can be unreliable if the uncertainty induced by the model selection step is ignored. In this section, we describe how to construct confidence intervals that take into account the uncertainty induced by model selection. Intuitively speaking, the challenge is that the final estimator is a discontinuous function of the data. To be more precise, the final estimator is not regular and not asymptotically normal.

The goal in this section is to find and as a function of the data such that

for some pre-determined and and where

The following theorem shows how to construct confidence intervals in low-dimensional settings; i.e. in settings where the number of models is fixed and the sample size goes to infinity.

Theorem 4.

Define and . Assume that

for some positive definite . Let be a consistent estimator of and be a consistent estimator of the asymptotic standard deviation of and be a consistent estimator of the asymptotic standard deviation of . With some abuse of notation, conditionally on the data draw with . We define the event

Now for some fixed define

| (6) |

Then:

-

1.

For , with probability converging to one, the inverse is well-defined.

-

2.

For all ,

Note that by definition the conditional distribution of given is known to the researcher. Also, the researcher often can construct estimators of the variances in parametric and semi-parametric settings via plug-in estimators of the influence function, see e.g. (Van der Vaart, 2000; Tsiatis, 2007). In these cases, can be computed by the researcher, for example by Monte-Carlo simulation. Thus, Theorem 4 allows us to construct asymptotically valid confidence intervals for the final estimator in many parametric and semi-parametric settings.

This result is different from standard asymptotic arguments in the sense that is not asymptotically Gaussian. However, as the result shows, it is possible to recover the exact asymptotic distribution of in low-dimensional scenarios and use this information to conduct asymptotically valid statistical inference. We will evaluate the empirical performance of confidence intervals constructed via Theorem 4 in Section 4.

3.6 Finite-sample bound

As discussed in Section 3.4, the proposed method selects a model that is asymptotically no worse than the baseline estimator. However, there is no free lunch. In transitional regimes, for fixed , the estimator can perform worse than the baseline estimator . This is to be expected from statistical theory, see for example the discussion of the Hodges-Le Cam estimator on page 110 in Van der Vaart (2000). This makes it important to understand in which cases we can expect reliable performance of the proposed model selection procedure. In the case , a Bayesian bound (Gill and Levit, 1995) reveals that improving over the Cramér-Rao bound in some parts of the parameter space must lead to deteriorating performance in other parts of the parameter space. In the following we will provide a finite-sample bound that will show that for large the excess risk becomes negligible, uniformly over a set of distributions.

Theorem 5.

Let where is a constant vector and . We assume that the are centered, independent and sub-Gaussian random variables with variance proxy , i.e. that for all and and . Define as the standard deviation of and as the standard deviation of . We assume that for some constant and . Define . Furthermore, assume that for some constant . Then, for every there exists a constant that may depend on , , and (but not on ) such that with probability exceeding ,

In particular, the excess risk due to model selection goes to zero as and if and . In Section 4 we will see in an example that the excess risk declines for growing dimension of the estimand.

4 Applications

In this section, we discuss applications of the proposed method. Compared to existing literature on model selection for causal effects, instead of selecting among nuisance parameter models, we consider shrinking between different functionals of the data generating distribution. As we will see, doing so can lead to drastic improvements in the mean-squared error. However, there is no free lunch. Compared to the baseline procedure, for fixed , model selection can lead to increased risk in parts of the parameter space. Thus, in this section, we study the excess risk of the procedures across the parameter space.

In the following we will use potential outcomes to define causal effects (Rubin, 1974; Splawa-Neyman et al., 1990). We are interested in the causal effect of a treatment on an outcome . Let denote the potential outcome under treatment and the potential outcome under treatment . We assume a superpopulation model, i.e. and are random variables. In the following, the goal is to estimate the average treatment effect within several subgroups,

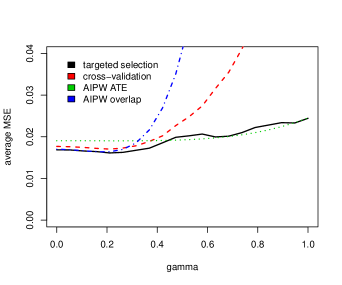

| (7) |

Many methods have been designed to estimate (7) and these methods operate under a variety of assumptions. We present several applications that are based on different sets of assumptions for identifying (7). In each of the cases, we compare the proposed method (5, termed “targeted selection”) with the cross-validation procedure (1) and with a baseline estimator. The code can be found at github.com/rothenhaeusler/tms.

4.1 Observational studies

In observational studies, it is common practice to estimate causal effects under the assumption of unconfoundedness and under the overlap assumption. Roughly speaking, the overlap assumption states that treatment assignment probabilities are bounded away from zero and one, conditional on covariates . If these assumptions are met, it is possible to identify the average treatment effect via matching, inverse probability weighting, regression adjustment, or doubly robust methods (Hernan and Robins, 2020; Imbens and Wooldridge, 2009). However, if the overlap is limited, estimating the average treatment effect can be unreliable.

To deal with the issue of limited overlap, researchers sometimes switch to different estimands such as the average effect on the treated (ATT) or the overlap weighted effect (Crump et al., 2006). In the following, we will focus on the overlap-weighted effect as it is the causal contrast that can be estimated with the lowest asymptotic variance in certain scenarios (Crump et al., 2006). The overlap-weighted effect is defined as

where . Note that if the treatment effect is homogeneous , then the overlap-weighted effect and the average treatment effect coincide, that means . Thus, shrinkage towards an efficient estimator of the overlap effect is potentially beneficial under treatment effect homogeneity.

We investigate shrinking between estimators of the average treatment effect and the overlap-weighted effect in a data-driven way. The proposed model selection tool will be used to trade off bias and variance.

4.1.1 The data set

We observe independent and identically distributed draws of a distribution , where the are covariates. The data generating process was chosen such that there is limited overlap, i.e. and that the unconfoundedness assumptions, that means (Rosenbaum and Rubin, 1983). As discussed above, the causal effect can be estimated via doubly robust methods such as augmented inverse probability weighting, among others (Hernan and Robins, 2020). The data are generated according to the following equations:

| (8) | ||||

where . For , the treatment effect is homogeneous across . Thus, for , the overlap-weighted effect coincides with the average treatment effect.

4.1.2 The estimators

In the following, we compute the estimators for each group separately on the data set . For reasons of readability, notationally we suppress the dependence of the conditional probabilities and conditional expectations on . We can estimate that average treatment effect via augmented inverse probability weighting (Robins et al., 1994),

where

and where is the empirical mean of given and and are empirical probabilities. Similarly as above, we can estimate the overlap effect by

where

For we define

| (9) |

For , due to treatment effect homogeneity we expect . For , we expect . In the first case, the optimal estimator is with . In the second case the optimal estimator is with .

4.1.3 Results

The mean-squared error of the estimator selected by targeted selection and 10-fold cross-validation is depicted in Figure 1. To further improve the stability of cross-validation, we repeat the data splitting 10 times on randomly shuffled data. To study the influence of dimension on the performance of the model selection procedure, we show how the method performs on the full data set (left-hand side) and how the method performs if it only has access to the subset of observations for which (right-hand side). Results are averaged across simulation runs. The method performs better for (left plot) than for (right plot), which is consistent with Theorem 5. For , targeted selection performs similarly or better than the baseline estimator for all . For , the proposed approach performs worse than the baseline estimator for .

We also evaluated the realized coverage of confidence intervals with nominal coverage as described in Section 3.5. Equation 6 is estimated using the non-parametric bootstrap. Across , the minimal realized coverage is . The maximal realized coverage is . Averaged across all , the overall coverage is .

4.2 Instrumental variables and data fusion

The instrumental variables approach is a widely-used method to estimate causal effect of a treatment on a target outcome in the presence of confounding (Wright, 1928; Bowden and Turkington, 1990; Angrist et al., 1996). Roughly speaking, the method relies on a predictor (called the instrument) of the treatment that is not associated with the error term of the outcome . We will not discuss the assumptions behind instrumental variables in detail, but refer the interested reader to Hernan and Robins (2020). We will focus on the case, where , and are one-dimensional. Under IV assumptions and linearity, the target quantity can be re-written as

Estimating this quantity can be challenging if the instrument is weak, i.e. if . In this case, the approach can benefit from shrinkage towards the ordinary least-squares solution (Nagar, 1959; Theil, 1961; Rothenhäusler et al., 2020; Jakobsen and Peters, 2020). Doing so may decrease the variance but generally introduces bias. We will focus on the case where we have some additional observational data, where we observe and , but where the instrument is unobserved.

4.2.1 The data set

We draw i.i.d. observations according to the following equations:

| (10) | ||||

We vary , which corresponds to the strength of confounding between and . We observe for . We also assume that we have access to a larger data set with incomplete observations. To be more precise, on this data set we only observe and , but not the instrument . Formally, for we observe drawn according to equation (10).

4.2.2 The estimators

In the linear case, for each subset , the instrumental variables estimator can be written as

where denotes the empirical covariance over the observations . To deal with the weak instrument, we will consider shrinking the instrumental variables estimator torwards ordinary least-squares,

where denotes the empirical expectation over the observations . Shrinking towards the ordinary least-squares solution will introduce some bias if , but potentially decreases variance. As candidate estimators, for any we consider convex combinations of OLS and IV,

4.2.3 Results

The mean-squared error of the estimator selected by targeted selection and cross-validation is depicted in Figure 2. We use 10-fold cross-validation. To further improve the stability of cross-validation, we repeat the data splitting 10 times on randomly shuffled data. Results are averaged across simulation runs. Over the entire range , the proposed approach outperforms cross-validation. For and , targeted selection outperforms the IV approach. For , the proposed approach performs worse than the IV approach. We evaluate the realized coverage of confidence intervals with nominal coverage as described in Section 3.5. Equation 6 is estimated using the non-parametric bootstrap. Across , the minimal realized coverage was . The maximal realized coverage was . Averaged across all , the overall realized coverage was .

4.3 Experiment with proxy outcome

One of the most popular estimators for causal effects in experimental settings is difference-in-means. To improve variance, it is possible to adjust for pre-treatment covariates, see for example Lin (2013). This raises the question whether post-treatment covariates can be used to improve the precision of causal effect estimates. This is indeed the case under additional assumptions. For example, in some cases, the treatment effect can be written as the product

| (11) |

where is the effect of the treatment on some surrogate or proxy outcome ; and is the effect of the proxy on the outcome. It is well-known that estimators that make use of such decompositions can outperform the standard difference-in-means estimator in terms of asymptotic variance (Tsiatis, 2007; Athey et al., 2019; Guo and Perković, 2020). However, doing so can introduce bias if equation (11) does not hold. We will use the proposed model selection procedure to shrink between difference-in-means and an estimator that is unbiased if the treatment effect decomposition in equation (11) holds.

4.3.1 The data set

We consider a simple experimental setting with a post-treatment variable . For simplicity, let us consider an experiment with binary treatment , a binary proxy outcome and outcome . We draw i.i.d. observations according to the following equations:

| (12) | ||||

For , the outcome is conditionally independent of the treatment, given the proxy . In this case, the average treatment effect can be written in product form, , and this decomposition can be leveraged for estimation. For , this decomposition does not hold.

4.3.2 The estimators

The standard estimator to estimate causal effects from experiments is difference-in-means,

| (13) |

If the proxy outcome is a valid surrogate, i.e. if

we can rewrite as

Thus, in this case, we can also consider the product estimator

| (14) | ||||

On each subset we compute (13) and (14), yielding and for . We shrink between these two vectors, i.e. for we define

4.3.3 Results

The mean-squared error of the estimator selected by targeted selection and cross-validation is depicted in Figure 3. We use 10-fold cross-validation. To improve the stability of cross-validation, we repeat the data splitting 10 times on randomly shuffled data. Results are averaged across simulation runs. Similarly as above, targeted selection performs similar or better than cross-validation. Targeted selection performs better than the difference-in-means for . For , targeted selection approaches the performance of difference-in-means. We evaluated the realized coverage of confidence intervals with nominal coverage as described in Section 3.5. Equation 6 is estimated using the non-parametric bootstrap. Across , the minimal realized coverage was . The maximal realized coverage was . Averaged across all , the overall coverage was .

5 Conclusion

We have introduced a method that allows to conduct targeted parameter selection by estimating the bias and variance of candidate estimators. The theoretical justification of the method relies on a linear expansion of the estimator. The method is very general and can be used in both parametric and semi-parametric settings. Under regularity conditions, we showed that the proposed criterion has asymptotically equal or lower variance than competing procedures based on sample splitting. In addition, we showed that for , the modified risk criterion selects models with lower or equal risk than the baseline estimator . Furthermore, we derived asymptotically valid confidence intervals in low-dimensional settings.

In simulations, we showed that the method selects reasonable models and outperforms the cross-validation procedure in most scenarios. The proposed method can decrease variance if the competing estimators are approximately unbiased. However, there is no free lunch. In transitional regimes, for fixed , the estimator can perform worse than the baseline estimator . This is to be expected from statistical theory, see for example Van der Vaart (2000, page 110). However, as the finite-sample bound and our simulations show, the excess risk goes to zero as the dimension of the target parameter grows.

The theoretical justification of the proposed method relies on a linear approximation of the estimator in a neighborhood of the parameter values . Thus, it would be important to understand the performance of the method in scenarios where parameter estimates of some of the estimators are far from the parameter values. In Section 4.2, we have seen some preliminary evidence that the proposed methodology may be used to combine knowledge across data sets. The proposed method is not tailored to this special case. Thus, we believe that it would be exciting to investigate whether the model selection can be further improved for data fusion tasks.

References

- Akaike [1974] H. Akaike. A new look at the statistical model identification. In Selected Papers of Hirotugu Akaike, pages 215–222. Springer, 1974.

- Angrist et al. [1996] J. Angrist, G. Imbens, and D. Rubin. Identification of causal effects using instrumental variables. Journal of the American Statistical Association, 91(434):444–455, 1996.

- Arlot and Celisse [2010] S. Arlot and A. Celisse. A survey of cross-validation procedures for model selection. Statistics surveys, 2010.

- Athey and Imbens [2016] S. Athey and G. Imbens. Recursive partitioning for heterogeneous causal effects. Proceedings of the National Academy of Sciences, 2016.

- Athey et al. [2020] S. Athey, R. Chetty, and G. Imbens. Combining experimental and observational data to estimate treatment effects on long term outcomes. arXiv preprint arXiv:2006.09676, 2020.

- Athey et al. [2019] Susan Athey, Raj Chetty, Guido W Imbens, and Hyunseung Kang. The surrogate index: Combining short-term proxies to estimate long-term treatment effects more rapidly and precisely. Technical report, National Bureau of Economic Research, 2019.

- Bareinboim and Pearl [2016] E. Bareinboim and J. Pearl. Causal inference and the data-fusion problem. Proceedings of the National Academy of Sciences, 113(27):7345–7352, 2016.

- Berk et al. [2013] R. Berk, L. Brown, A. Buja, K. Zhang, and L. Zhao. Valid post-selection inference. The Annals of Statistics, 41(2):802–837, 2013.

- Bowden and Turkington [1990] R. Bowden and D. Turkington. Instrumental variables, volume 8. Cambridge university press, 1990.

- Brookhart and Van Der Laan [2006] M. Brookhart and M. Van Der Laan. A semiparametric model selection criterion with applications to the marginal structural model. Computational statistics & data analysis, 50(2):475–498, 2006.

- Claeskens and Hjort [2003] G. Claeskens and N. Hjort. The focused information criterion. Journal of the American Statistical Association, 2003.

- Claeskens and Hjort [2008] G. Claeskens and N. Hjort. Model selection and model averaging. Cambridge University Press, 2008.

- Crump et al. [2006] R. Crump, V. Hotz, G. Imbens, and O. Mitnik. Moving the goalposts: Addressing limited overlap in the estimation of average treatment effects by changing the estimand. Technical report, National Bureau of Economic Research, 2006.

- Cui and Tchetgen-Tchetgen [2019] Y. Cui and E. Tchetgen-Tchetgen. Bias-aware model selection for machine learning of doubly robust functionals. arXiv preprint arXiv:1911.02029, 2019.

- Friedman et al. [2001] J. Friedman, T. Hastie, and R. Tibshirani. The elements of statistical learning. Springer, 2001.

- Gill and Levit [1995] Richard D Gill and Boris Y Levit. Applications of the van Trees inequality: a Bayesian Cramér-Rao bound. Bernoulli, 1(1-2):59–79, 1995.

- Guo and Perković [2020] F. Guo and E. Perković. Efficient least squares for estimating total effects under linearity and causal sufficiency. arXiv preprint arXiv:2008.03481, 2020.

- Heckman et al. [1998] J. Heckman, H. Ichimura, and P. Todd. Matching as an econometric evaluation estimator. The review of economic studies, 65(2):261–294, 1998.

- Hernan and Robins [2020] M. Hernan and J. Robins. Causal inference: What If. Chapman & Hall, 2020.

- Huber [1967] P. Huber. The behavior of maximum likelihood estimates under nonstandard conditions. In Proceedings of the fifth Berkeley symposium on mathematical statistics and probability. University of California Press, 1967.

- Imbens and Wooldridge [2009] G. Imbens and J. Wooldridge. Recent developments in the econometrics of program evaluation. Journal of economic literature, 47(1):5–86, 2009.

- Jakobsen and Peters [2020] M. Jakobsen and J. Peters. Distributional robustness of k-class estimators and the PULSE. arXiv preprint arXiv:2005.03353, 2020.

- Kapelner et al. [2014] A. Kapelner, J. Bleich, A. Levine, Zachary D. C., R. DeRubeis, and R. Berk. Inference for the effectiveness of personalized medicine with software. arXiv preprint arXiv:1404.7844, 2014.

- LaLonde [1986] R. LaLonde. Evaluating the econometric evaluations of training programs with experimental data. The American economic review, pages 604–620, 1986.

- Lin [2013] W. Lin. Agnostic notes on regression adjustments to experimental data: Reexamining Freedman’s critique. The Annals of Applied Statistics, 7(1):295–318, 2013.

- Nagar [1959] A. Nagar. The bias and moment matrix of the general k-class estimators of the parameters in simultaneous equations. Econometrica, pages 575–595, 1959.

- Nie and Wager [2017] X. Nie and S. Wager. Quasi-oracle estimation of heterogeneous treatment effects. arXiv preprint arXiv:1712.04912, 2017.

- Peters et al. [2016] Jonas Peters, Peter Bühlmann, and Nicolai Meinshausen. Causal inference by using invariant prediction: identification and confidence intervals. Journal of the Royal Statistical Society: Series B, 78(5), 2016.

- Powers et al. [2018] S. Powers, J. Qian, K. Jung, A. Schuler, N. Shah, T. Hastie, and R. Tibshirani. Some methods for heterogeneous treatment effect estimation in high dimensions. Statistics in medicine, 2018.

- Robins et al. [1994] J. Robins, A. Rotnitzky, and L. Zhao. Estimation of regression coefficients when some regressors are not always observed. Journal of the American Statistical Association, 1994.

- Rolling and Yang [2014] C. Rolling and Y. Yang. Model selection for estimating treatment effects. Journal of the Royal Statistical Society: Series B, 2014.

- Rosenbaum and Rubin [1983] P. Rosenbaum and D. Rubin. Assessing sensitivity to an unobserved binary covariate in an observational study with binary outcome. Journal of the Royal Statistical Society: Series B, 45(2):212–218, 1983.

- Rothenhäusler et al. [2020] D. Rothenhäusler, N. Meinshausen, P. Bühlmann, and J. Peters. Anchor regression: heterogeneous data meets causality. arXiv preprint arXiv:1801.06229, 2020.

- Rubin [1974] D. Rubin. Estimating causal effects of treatments in randomized and nonrandomized studies. Journal of educational Psychology, 66(5):688, 1974.

- Schuler et al. [2018] A. Schuler, M. Baiocchi, R. Tibshirani, and N. Shah. A comparison of methods for model selection when estimating individual treatment effects. arXiv preprint arXiv:1804.05146, 2018.

- Schwarz [1978] G. Schwarz. Estimating the dimension of a model. Annals of Statistics, 6(2), 1978.

- Splawa-Neyman et al. [1990] J. Splawa-Neyman, D. Dabrowska, and T. Speed. On the application of probability theory to agricultural experiments. Statistical Science, pages 465–472, 1990.

- Taylor and Tibshirani [2015] J. Taylor and R. Tibshirani. Statistical learning and selective inference. Proceedings of the National Academy of Sciences, 112(25):7629–7634, 2015.

- Theil [1961] H. Theil. Economic forecasts and policy. North-Holland Pub. Co., 1961.

- Tsiatis [2007] A. Tsiatis. Semiparametric theory and missing data. Springer Science & Business Media, 2007.

- Van der Laan and Robins [2003] M. Van der Laan and J. Robins. Unified methods for censored longitudinal data and causality. Springer, 2003.

- Van der Vaart [2000] A. Van der Vaart. Asymptotic statistics. Cambridge university press, 2000.

- Wainwright [2019] M. Wainwright. High-dimensional statistics: A non-asymptotic viewpoint, volume 48. Cambridge University Press, 2019.

- Wright [1928] P. Wright. Tariff on animal and vegetable oils. Macmillan Company, New York, 1928.

- Zhao et al. [2017] Y. Zhao, X. Fang, and D. Simchi-Levi. Uplift modeling with multiple treatments and general response types. In Proceedings of the 2017 SIAM International Conference on Data Mining. SIAM, 2017.

Appendix A SUPPLEMENTARY MATERIAL

This supplementary material contains proofs for the theoretical results in the main paper.

A.1 Proof of Theorem 1

Proof.

First, note that

Thus, it is sufficient to show that for each with

converges in distribution to a centered random variable and for each with ,

converges in distribution to a centered random variable. Thus, without loss of generality, in the following we will assume that .

Case 1:

As and as ,

By assumption,

Similarly,

Combining these equations with the definition of ,

Using the Central Limit Theorem, converges to a Gaussian random variable with mean zero and variance . Thus,

converges in distribution to a random variable with mean zero. This concludes the proof of the case .

Case 2:

Similarly as above, we can show that

and

Thus,

| (15) |

Using the CLT, converges in distribution to a centered Gaussian random variable with variance . Using this fact in equation (15) concludes the proof.

∎

A.2 Proof of Theorem 2

Let . First, note that

where

and where if and if . Furthermore, using Assumption 1,

Thus, it is sufficient to show the following: For each with

converges in distribution to a centered random variable and for each with ,

converges in distribution to a centered random variable. In the following, without loss of generality we will assume that .

Proof.

Case 1: . Recall that . Using the CLT, for every ,

converges to a centered Gaussian random variable with variance

where . Hence,

converges to a random variable with asymptotic mean

This concludes the proof of case 1.

Case 2:

Similarly as above, we can show that

Thus,

| (16) |

Using the CLT, converges to a centered Gaussian random variable with variance . Using this fact in equation (16) concludes the proof. ∎

A.3 Proof of Theorem 3

Proof.

First, we show that it is sufficient to show the statement in the one-dimensional case, i.e. for . Define and , where the orthonormal matrix is chosen such that the asymptotic covariance of is diagonal. By Assumption 1, converges to a Gaussian random variable with a covariance matrix that is positive definite. Let denote the asymptotic covariance of . Define as the asymptotic standard deviation of and as the asymptotic standard deviation of . Similarly let denote the asymptotic covariance of . Now note that

Analogously,

Thus,

Now, by construction converges to a Gaussian with diagonal covariance matrix. Thus, the components of are asymptotically independent. Thus, the asymptotic mean and variance of is equal to the sums of asymptotic means and asymptotic variances of

Similarly, note that

As the components of are asymptotically independent, the asymptotic mean and variance of is equal to the sums of asymptotic means and asymptotic variances of

Thus, in the following without loss of generality we will focus on the case and , . We will now consider two cases.

Case 1:

Let . Inspecting the proof of Theorem 1 we obtain that

Multiplying with , we can rewrite this as

Writing and and using we obtain

Similarly, by inspecting the proof of Theorem 2,

We have , and . Thus, multiplying with we can rewrite this as

Thus, to complete the proof, we have to show that the asymptotic variance of the first term is larger than the asymptotic variance of the second term:

Using the CLT, for and fixed, converge to a centered multivariate Gaussian vector with non-degenerate variance. Hence, without loss of generality in the following we can assume that the vector

is multivariate Gaussian. Recall that from Assumption 1 it follows that . Thus we also have . Using Lemma 1 completes the proof of case 1.

Case 2:

A.4 Proof of Corollary 1

Proof.

By assumption, we have . If , then

with . On the other hand, if ,

Thus,

Now consider any with and . Then, using Assumption 1,

and

Recall that by assumption . Thus, for . As this holds for all with for , this concludes the proof. ∎

A.5 Proof of Theorem 4

Proof.

We will first prove the first statement. Note that if is positive definite, is continuous and strictly increasing and , . As converges in probability to a positive definite matrix, the inverse is well-defined, except on an event with vanishing probability as .

Let us now turn to the second statement. We use the decomposition

| (18) |

Define the event

It is crucial to understand how this event behaves in the limit. converges to a centered Gaussian distribution with covariance matrix . Thus, define

where , independent of the data . Before we investigate the large-sample behaviour of equation (18), let us fix some notation.

where , conditionally on the data . In the first step, let us consider for which . Recall that , , and . Also, converges weakly to a distribution that is equal to the distribution of . By weak convergence, for all ,

| (19) |

where the limit on the right-hand side is in probability. Now, let us focus on for which . Note that for all with , . Thus, for all with ,

Since and and and , for all with we have . Thus for all with , . Analogously it can be shown that for all with . Thus, for with ,

| (20) |

where the limit on the right-hand side is in probability. By equation (19) and equation (20), for all ,

Now note that as is positive definite, has positive density on and thus

is strictly increasing. By definition, for all , is non-decreasing. Thus, the function

is strictly increasing. Note that by construction form a disjoint partition of the sample space. Thus, using the definition of ,

Similarly, by definition, and are increasing with and . Invoking Polya’s theorem with equation (20), in probability. Analogously, converges to zero in probability.

Consider a sequence such that

Since converges to uniformly, we have that in probability. Since is strictly increasing and continuous, converges in probability to the unique with . Thus, for all ,

Now, letting and using that is continuous and ,

| (21) |

Analogously,

Thus,

| (22) |

Combining equation (21) and equation (22),

Analogously, it can be shown that

Thus,

This completes the proof.

∎

A.6 Proof of Theorem 5

Proof.

First, with probability exceeding ,

for some constants and . Here, we used sub-Gaussian and subexponential tail bounds, see for example Chapter 2 in Wainwright [2019]. More precisely, we used that is sub-Gaussian with variance proxy and that is subexponential with parameter . Using a union bound, for every there exists a constant , such that with probability exceeding ,

Since , for all there exists a constant such that with probability exceeding ,

| (23) |

Analogously, it can be shown that there exists a constant such that with probability exceeding ,

| (24) | ||||

Combining equation (23) and equation (24), with probability ,

for some constant that depends on , , , and . Thus, on that event,

Furthermore, on that event,

Thus,

Here, we used that . ∎

A.7 Auxiliary Lemmas

Lemma 1.

Let be i.i.d. Gaussian with mean zero and nonzero variance and . Let . Then,

| (25) |

Proof.

As are multivariate Gaussian and , , for some , where is centered Gaussian and independent of with nonzero variance. Thus, it suffices to show that

| (26) |

On the left-hand side of equation (26), we have

On the right-hand side of equation (26) we have

Thus, it suffices to show that the following three inequalities hold:

The first two inequalities follow from Lemma 2 and Lemma 3. Let us now deal with the last term.

Thus, it suffices to show that

Or, equivalently,

| (27) |

Dividing by yields

The left term can be written as

This shows the inequality in equation (27), which completes the proof.

∎

Lemma 2.

Let be i.i.d. centered Gaussian random variables with nonzero variance and . Then,

| (28) |

Proof.

Lemma 3.

Let be i.i.d. centered Gaussian random variables and . Then,

Proof.

It suffices to show that

| (29) |

On the left-hand side we have

On the right-hand side of equation (29) we have

Using the two inequalities above, it suffices to show that for all and all ,

Rearranging, it suffices to show that

Multiplying with , this is equivalent to

which is equivalent to

This inequality is equivalent to

| (30) |

Rearranging the left-hand side, we obtain

This proves the inequality of equation (30), which completes the proof.

∎