Yuliang et. al.

*Yuliang Shi.

200 University Ave W, Waterloo, ON, Canada, N2L 3G1.

Model Selection for Causal Modeling in Missing Exposure Problems

Abstract

[Abstract]In causal inference, properly selecting the propensity score (PS) model is an important topic and has been widely investigated in observational studies. There is also a large literature focusing on the missing data problem. However, there are very few studies investigating the model selection issue for causal inference when the exposure is missing at random (MAR). In this paper, we discuss how to select both imputation and PS models, which can result in the smallest root mean squared error (RMSE) of the estimated causal effect in our simulation study. Then, we propose a new criterion, called “rank score” for evaluating the overall performance of both models. The simulation studies show that the full imputation plus the outcome-related PS models lead to the smallest RMSE and the rank score can help select the best models. An application study is conducted to quantify the causal effect of cardiovascular disease (CVD) on the mortality of COVID-19 patients.

keywords:

Causal Inference; Propensity Score; Multiple Imputation Chained Equations; Rank Score.1 Introduction

In application studies, missing data can occur in multiple ways and one of the common cases is that the exposure of interest may not be fully observed 1. For example, a patient’s exposure status may not be fully recorded when the patient suddenly drops out of the clinical study; or an individual may decline to answer a sensitive question regarding his/her health problem in a survey questionnaire. In addressing the challenge of missing data, researchers have proposed various methodologies for handling cases where the outcome is missing 2. However, when the exposure status is missing, few approaches have been provided in literature to deal with this issue 3, 4.

In causal inference, propensity score (PS) analysis is a popular tool to address the confounding issue 5. However, when the exposure status is missing at random (MAR), estimation of the causal effect is challenging. The case of MAR on exposure significantly differs from MAR on the outcome. When the outcome is missing, only the estimation of the outcome model is influenced by the missing data, but the estimation of the PS model will not be affected by the missing outcome. In contrast, when the exposure is missing, the estimation of all three models — imputation, PS, and outcome models— is affected. Consequently, although PS-based methods have been widely discussed when the outcome is MAR, their application to cases where the exposure is missing is not straightforward 3. Careful adjustment regarding both missing and confounding issues are necessary in such scenarios.

One of the common approaches to dealing with missing data is via imputation. Single imputation (SI) is easy to conduct based on the observed data 6. However, imputing data only once may not be reliable and a large variation may be induced 7. In contrast, the multiple imputations chained equations (MICE) approach is proposed through random sampling, and Rubin’s Rules can be applied to account for both the sampling variability and the uncertainty in the imputation of missing values 8, 9.

In addition to the imputation-based method, inverse probability weighting (IPW) or double robust (DR) methods have been widely investigated in the literature 10, 11, 12. In recent literature, when the exposure is MAR, two-layer DR estimators have been proposed to deal with both missingness and confounding issues 3. Later, a triple robust estimator (TR) was proposed to protect against misspecification of the missingness and imputation models 4. Besides that, nonparametric estimators with efficient bounds have been proposed using the efficient influence function 13. Compared with the imputation-based method, DR or TR approach requires more flexible conditions for the model specifications 14, but very few papers discuss the model selection problem when the exposure is MAR. Despite the above-mentioned more sophisticated methods for estimating causal effects when the exposure is MAR, in this article, we focus on the more intuitive and straightforward approach: we impute the missing exposure values first via MICE and employ propensity score-based methods, such as IPW or DR, to estimate causal effects based on the imputed datasets. Therefore, we face the model selection issue for both the imputation model and the PS model.

In the previous literature, researchers have shown that improper model selection for the PS model can result in higher bias and variance 15. However, little attention has been given to addressing how to select both imputation and PS models when the exposure is MAR. As a motivating example, we consider the case in which researchers aim to determine the causal relationship between the incidence of cardiovascular disease (CVD, as the exposure) and COVID-19 patients’ mortality (as the outcome) in an observational study where CVD status is not completely observed. In this situation, we need to make adjustment for both missing CVD status and other confounding factors. However, which variables should be selected for the imputation and PS models is not clear because we do not know the true missing values of CVD.

Another problem from the motivating example is the so-called “double-dipping issue”, which means if we impute the missing values of exposure with all exposure-related covariates on the first step and fit the PS model with the same set of covariates using that imputed dataset on the second step, the bias and variance of the estimated causal effect may be increased due to two mains reasons: (1) we do not clearly distinguish the purpose of fitting the imputation and PS models; (2) we include redundant exposure-related covariates instead of outcome-related variable into two models. More specifically, for the imputation model, we aim to increase its predictive ability to correctly impute the missing exposure. In contrast, to select the proper PS model, we intend to balance the confounders across exposure and non-exposure groups instead of increasing its predictive performance 16. In addition, including all exposure-related covariates in the PS model is also redundant because those variables are not considered as main confounders.

Even though fitting the imputation and PS models with the same set of exposure-related covariates is very common in application studies, it is very important to investigate which variables should be included in the imputation and PS models to achieve the best performance of causal estimates among candidate models. Furthermore, since we generally do not know the true causal effect in the application, evaluating those selected models through some model selection criteria is also essential. Therefore, we propose a new rank-based criterion to combine the performance of the imputation and PS models, compared with the traditional criterion. The rest of this article is organized as follows. In Section 2, we describe the goal of our research and discuss the proposed criteria for conducting model selection. In Section 3, we further design a simulation study to investigate model selection in both imputation and PS models. In Section 4, an application study is conducted to identify the causal effect of CVD on mortality for a cohort of COVID-19 patients. The article ends with a discussion in Section 5.

2 Notation and Methods

2.1 Framework and Assumptions

Based on the counterfactual causal framework 17, we denote as the potential outcomes if individual were exposed or unexposed (or equivalently, treated or untreated), respectively. Let denote the covariates, denote the exposure of interest, denote the binary outcome, and denote the indicator of whether the exposure value is missing or not for individual . In this study, we focus on the exposure variable being missing, so all other variables are assumed to be completely observed. In addition, we study the model selection problem on a finite set of covariates instead of the high-dimensional setting.

For each individual , and cannot be observed at the same time; instead, we only observe and . The observed dataset is , and the relationship between the observed and potential outcomes can be written as , for . The true propensity score (PS) is defined as for individual . We denote and as the average potential outcomes if treated or untreated, respectively. For the binary outcome in our application studies, we define the causal estimand of interest as the causal risk ratio: . Even though the study focuses on the binary outcome, the theory is developed in general scenarios and the key ideas presented in this article will not be largely changed by the different formats of the causal quantities, so the application can be easily expanded to other cases, such as the continuous outcome or count outcome. To estimate the causal risk ratio , three assumptions are required as follows:

-

1.

Strongly ignorable treatment assignment assumption (SITA): .

-

2.

Positivity assumption: .

-

3.

MAR assumption: .

Here, SITA assumption is also known as the assumption of no unmeasured confounders, which cannot be tested in the application studies. The positivity assumption requires the propensity score to be a positive probability, which can be checked after imputing the missing exposure status correctly 17. MAR assumption assumes that the missing indicator is conditionally independent of the exposure itself given all observed covariates and outcomes in the dataset, which also cannot be tested on the observed data due to the unknown missing values 3.

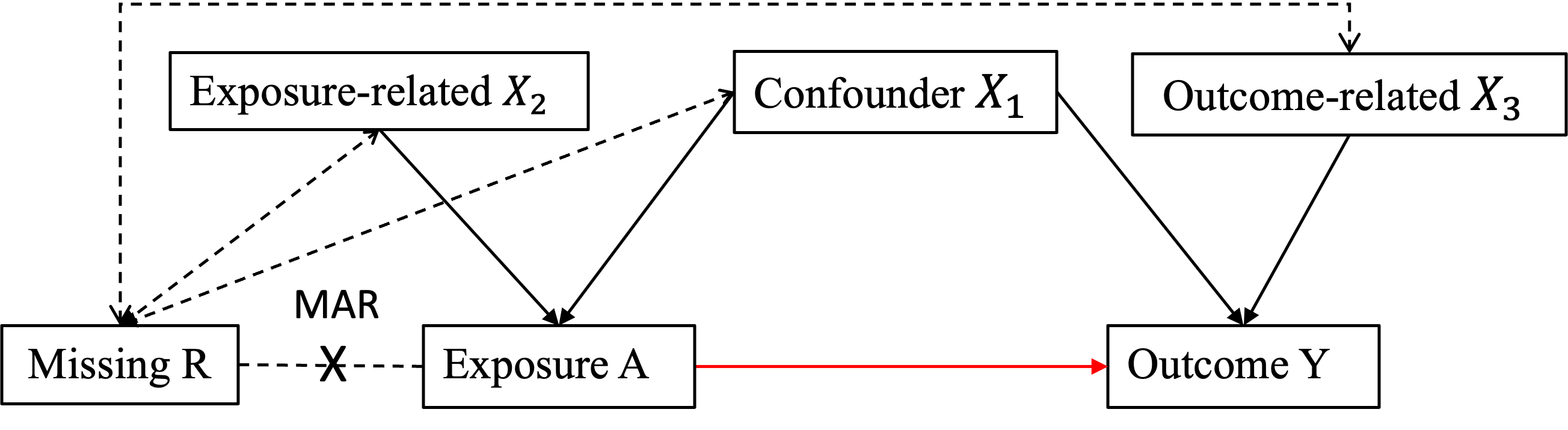

The objective of this study is two-fold. Firstly, since different components of can be different as shown in Figure 1, our primary goal is to find the best selection on both imputation and PS models when the exposure is MAR. Secondly, we aim to find a suitable criterion to evaluate the performance of the two models in the application study when a DAG is not known.

2.2 An Illustrative Example

To study the different roles of covariates in model selection, we consider a simplified scenario with three covariates, which have different effects on the exposure or the outcome. As shown in Figure 1, is the main confounder, is the exposure-related covariate, and is the covariate related only to the outcome. We denote the vector of covariates for subject as . In terms of assumptions described in the general scenario in Section 2.1, we can rewrite three main assumptions based on Figure 1 as follows:

-

1.

Strongly ignorable treatment assignment assumption (SITA): .

-

2.

Positivity assumption: .

-

3.

MAR assumption: .

Since is the “sufficient set” for confounding adjustment in this specific case 18, SITA assumption only requires conditioning on the main confounder instead of all covariates. In addition, we do not include the association between and as shown in Figure 1 since we want to investigate whether adding and will increase the accuracy of the imputation model for the missing exposure. In that way, we only need to condition on for MAR assumption based on Figure 1.

To investigate the role of each variable in the imputation model, one of the approaches is to rely on the theories of directed acyclic graph (DAG), which is a common tool using “d-separation” 19 to check conditional independence among variables without specifying the form of the models. In our specific example in Figure 1, we know that should be included in the imputation model because they affect and directly. The main question is whether should be included in the imputation model or not. Even though we do not include the outcome in the true missingness model in Figure 1, is directly correlated with the exposure variable, so adding may help predict the missing exposure values.

Next, we aim to study the role of in the imputation model. Notice that , but if given , is a function of and is also correlated with , as shown in Figure 1. In addition, if we condition on , will be the collider in the path of , so . In other words, including the outcome and outcome-related variables in the imputation model is expected to improve the predictive ability of the imputation model. In summary, we should include all into the imputation model, which will be verified later in simulation studies.

2.3 Estimation

Before we discuss the model selection strategy, we first discuss how to estimate . As we have previously mentioned, estimating requires us to account for both missing and confounding issues. For the missing data problem, we will impute the exposure status via MICE based on MAR assumption 7, 8. After the missing exposures are imputed, can be estimated using inverse-probability weighting (IPW) or double robust (DR) method to account for the confounding issues.

In summary, we present an algorithm with three key steps as follows:

-

1.

Fit imputation (Imp) model based on MAR assumption using MICE: fit logistic regression model for to obtain as the exposure on the imputed dataset, which includes the original exposure for individuals without missing values and imputed exposure for individuals with missing values.

-

2.

Fit PS model using the logistic model on the imputed dataset: to obtain fitted PS values, denoted as .

-

3.

Apply inverse weighting to adjust for the confounding issue, and the IPW estimator can be written as 20:

(1) Then, an IPW estimator for is: or the DR estimator can be written as:

(2) where is the fitted response for the treatment group, i.e., ; is the fitted response for the control group, i.e., . Here, are estimated parameters for the treatment or control group from the outcome model, written as . Then, a DR estimator for is: .

Notice that when we conduct imputation using MICE, we choose as the number of imputations. Then, IPW and DR estimators for can be constructed on each imputed dataset and Rubin’s Rules is applied to obtain final estimated values based on the algorithm described above 2.3 9. If the imputation model is correctly specified, based on MAR assumption, we can approximate by . Furthermore, if PS model is also correct, due to SITA assumption, and its asymptotic normality holds as 20. For DR estimator, it can protect against misspecification of either PS or the outcome model 12, 11. In other words, if the imputation model is correct and either PS or the outcome model is correct, we know and its asymptotic normality holds as 2.

2.4 Model Selection Criteria

In application studies, since the true causal effect is unknown, we are not able to find which combination of imputation and PS models leads to the best performance in terms of some performance metrics, such as RMSE. In such a case, we need some model selection criteria to choose the best combination of imputation and PS models. In this section, we discuss some traditional criteria for model selection and propose a new criterion that takes into consideration both models.

2.4.1 Weighted Accuracy (

We first discuss a theoretical way to evaluate the performance of the imputation model via weighted accuracy based on the observed data. Due to the missing exposure, even though we can impute the missing data, we do not know the true missing values, which makes it challenging to estimate the accuracy only based on the observed data. A naive approach is to fit the imputation model based on the observed data and compare the imputed exposure with those observed values. However, since the accuracy is a function of and the distribution of on observed data is different from the whole data in general, this approach to estimate accuracy from the observed data is no longer valid.

One approach to deal with this issue is to apply inverse probability weighting on the accuracy when we consider as the propensity for the missingness. Obtaining the weighted accuracy can be described in the following four steps:

-

1.

First, we randomly split the individuals into either the training or the testing data according to a ratio , where . Here, is the sample size for the whole observed data and for the testing data, respectively. Certainly, can be arbitrarily chosen by the user, but the simplest way is to set the ratio equal to the missing rate in the original dataset.

-

2.

Next, we fit the imputation model on the training data and impute the exposure on the testing data to compare with the known exposure status.

-

3.

Then, we fit the full missingness model on the whole dataset, written as , where is the vector of coefficients including the intercept to be estimated so that we can obtain as the estimated propensity of missingness for individual .

-

4.

Finally, we calculate the weighted accuracy based on the observed data, called “”:

(3)

where and is an indicator for whether the imputed value equals to the observed value. Here, is estimated from the full missingness model including all covariates and the outcome. The weighted accuracy is consistent to the true accuracy when the full missingness model is correctly specified and the detailed proof is attached in Appendix A.1.

2.4.2 ASMD, KS, and BIC

To evaluate the PS model, the traditional approaches are based on the balance statistic calculated from the confounders. For example, one can calculate absolute standardized mean difference (ASMD) and Kolmogorov-Smirnov (KS) statistics in the covariates after propensity score adjustment 21, 22, 23.

Another approach to evaluate the PS model is using BIC criterion to select the best model adjusting for both the goodness of fit and the number of parameters in the model. Since in PS model selection, it is recommended to select the outcome-related variables instead of the exposure-related variables 15, 24, 25, we suggest using BIC of the outcome model, i.e., , as the selection criterion. For example, from Figure 1, since , a smaller BIC indicates that we have included outcome-related variables and excluded the exposure-related variables. Notice that we do not recommend using the c-statistics or accuracy of PS model to select variables because in PS analysis, we aim to balance the confounders across the exposure and non-exposure group instead of maximizing the predictability of the PS model 16, 26, 27.

We should also notice that only using one traditional criterion may not be appropriate to select both imputation and PS models, such as either , ASMD, or KS statistics, because they just focus on the performance of a single model. That motivates us to combine the evaluation of and BIC into an integrated criterion, called “rank score”, proposed in the next section.

2.4.3 Rank Score

The idea of this new criterion called “rank score” is to take into account of the performance of both imputation and PS models. We first obtain the values of and BIC for all possible combinations of the candidate models. Then, for a given model, we calculate its rank score value by:

| (4) |

where “Rank()” is the rank of the model based on the value of if we order from the largest to the smallest. “Rank(BIC)” is the rank for a given model based on the value of BIC for regressing on and the covariates selected in the given model. We can calculate the rank score for every possible combination of the imputation and PS models. Then, we select the smallest rank score, which leads to the highest and the smallest BIC, so the best imputation and PS models can be successfully chosen.

In summary, the main advantage of the rank score is to combine the performance of both imputation and PS models and directly find the best model based on this rank-based criterion. In addition, the rank score is a unit-free score, which is not affected by the different magnitudes of the accuracy and BIC. The evaluation of those criteria is shown in Table 4.

2.4.4 Other Criteria

Another possible criterion to combine accuracy and BIC is to re-scale both terms into the range of . Then, we average two rescaled terms and call the following criterion as “ABIC”:

| (5) |

where “min” means the smallest value and “max” means the largest value among all candidates of models. Notice that we cannot directly average over the accuracy and BIC values because of the different magnitude issues. Therefore, ABIC is considered to solve that problem after we rescale the accuracy and BIC between 0 and 1. We want to choose the candidate model with a smaller ABIC value, which usually means that its accuracy will be larger and BIC will become smaller. However, the min and max values of ABIC can still be affected by the extreme values of either BIC or . As a result, the ABIC values among the candidate models may be largely shrunk to a quite small value, which makes it hard for us to distinguish the model performance. The main results are shown in Table 3 and A.10 in Section A.2 of Appendix.

3 The Simulation Studies

3.1 Simulation Setup

To investigate which covariates should be included in the imputation and PS models, a simulation study is conducted based on the causal diagram in Figure 1. The number of replications is , and the sample size is in each data generation. Three covariates are generated from independently. , and the missing indicator are generated by the following three models:

-

•

missingness model: ;

-

•

treatment model: ;

-

•

outcome model:

The missing rate is about 48% in this scenario and the true causal effect . Notice that the true missingness model does not include the outcome , but one of our aims is to investigate whether adding the outcome into the imputation model will improve the performance of the estimated causal effect in the simulation studies.

For each simulated data set, we investigate five different imputation models and four different PS models, which we have specified and named in Tables 3.1, respectively. In total, twenty possible combinations of the imputation and PS models are investigated. We provide some rationale behind the specification of these imputation and PS models. When selecting the imputation model, we start with an exposure-related model, which includes only and as they directly affect . To increase the predictive ability, we gradually modify the imputation model by adding and in a step-by-step process until the full imputation model is obtained.

| Model Name (shortened form) | Model Form |

|---|---|

| Five imputation models | |

| exposure-related model (Exp) | |

| outcome-related model (Out) | |

| all covariates-included model (Covs) | |

| response-included model (Res) | |

| full model (Full) | |

| \hdashline Four PS models | |

| naive model (Naive) | |

| exposure-relate model (Exp) | |

| outcome-relate model (Out) | |

| all covariates-included model (Covs) | |

In contrast, the goal of fitting PS model is not to increase the predictive ability, but to adjust for the confounding issue. Therefore, we start with a naive PS model with only as the main confounder. Then, we gradually add exposure-related or outcome-related variables until PS model includes all covariates.

3.2 Simulation Results for Imputation and PS Selection

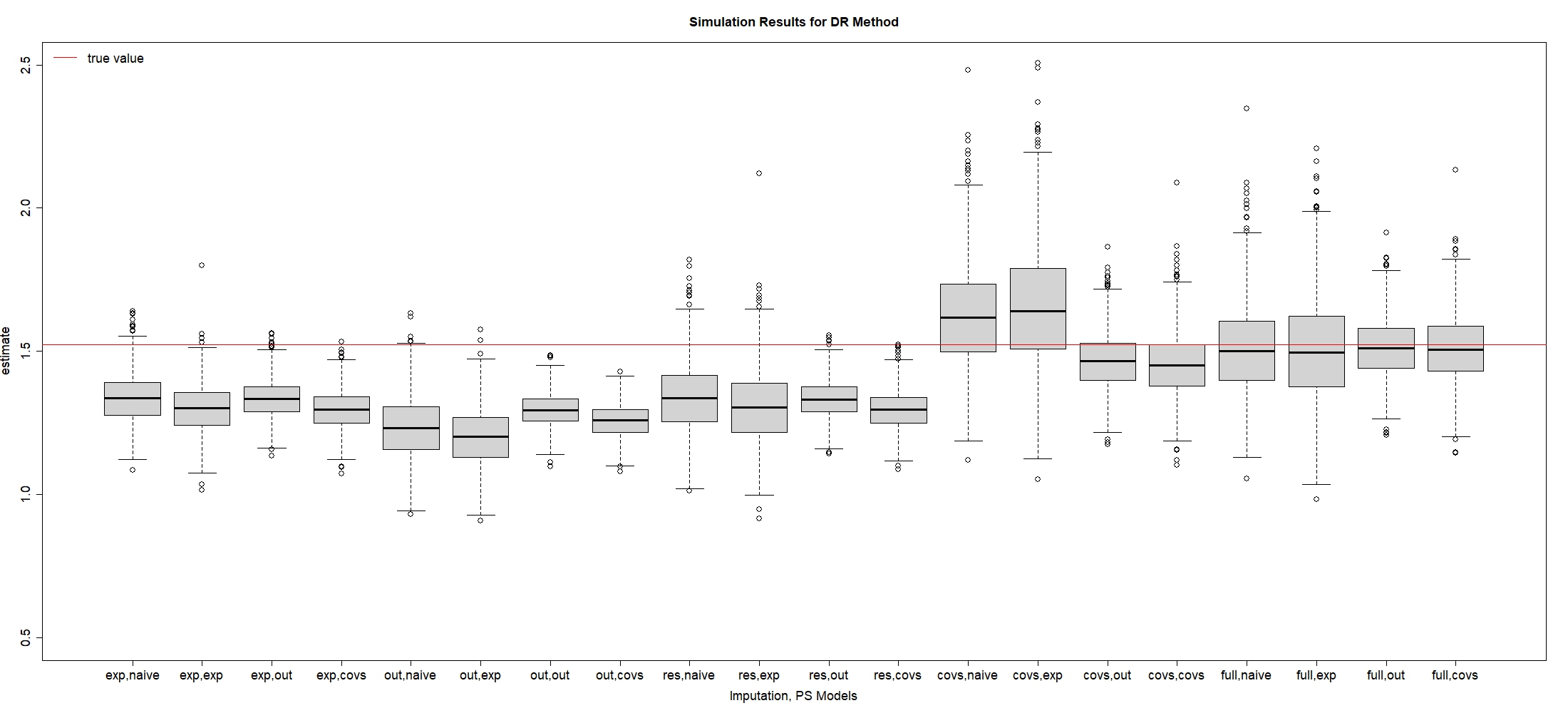

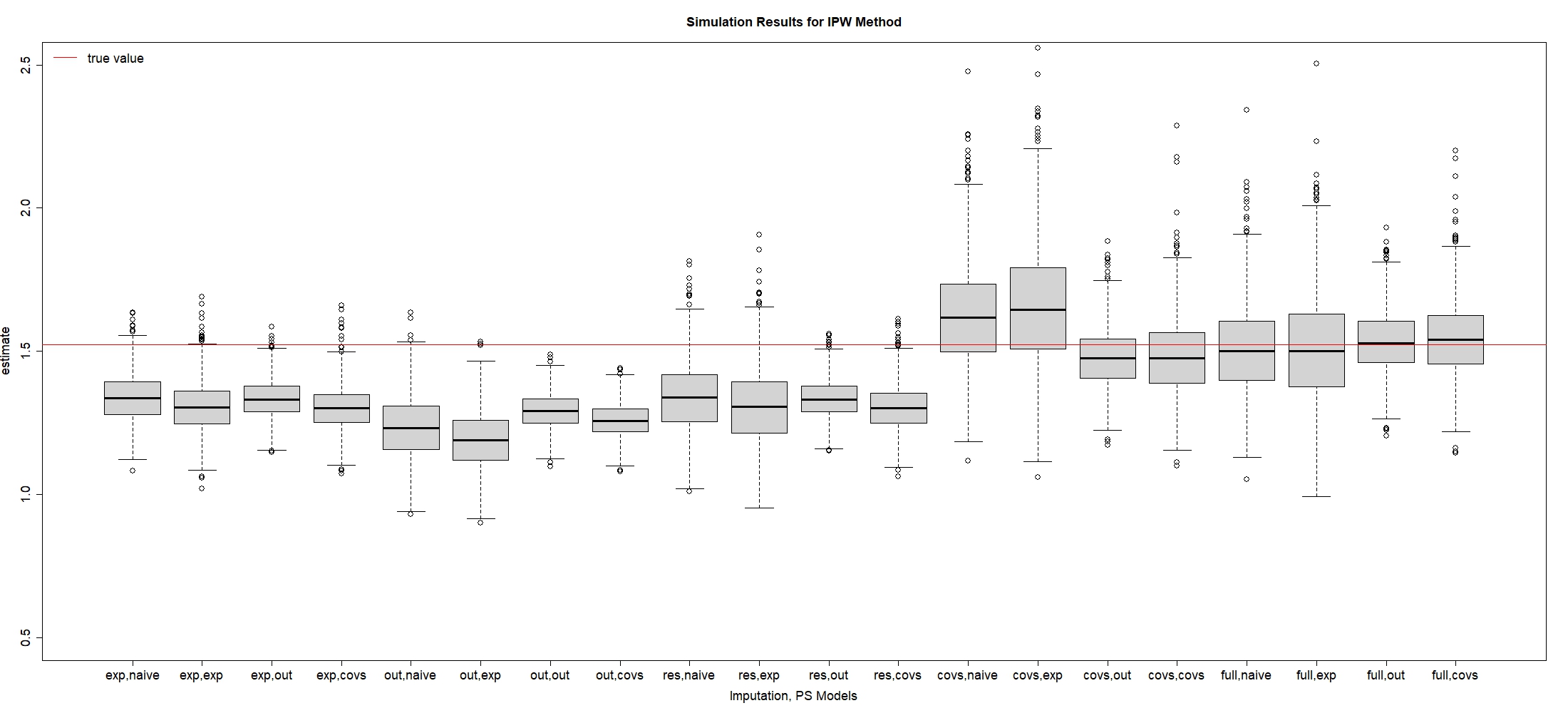

In this subsection, we investigate which combination of imputation and PS models can lead to the optimal RMSE of . Table 2 shows simulation results for IPW estimator when , and Figure 2 is plotted to visualize these results. We also provide the simulation results for the continuous outcome with the goal of estimating the average causal effect in Section S.1 of the supplementary material. To evaluate the performance of different models, we present the bias rate as the quantity of main interest: , where , and is the point estimate in the replication, . The empirical standard error (ESE), which is also called the sampling standard error, is written as . The square root of mean squared error (RMSE) is defined as .

Since are both directly correlated with the exposure from Figure 1, some researchers may argue that we only need to include without into the imputation and PS models as the best results. However, Table 2 shows that the exposure-related imputation model plus the exposure-related PS model leads to higher bias and variance than the full imputation model or the outcome-related PS model. That provides support to compare the performance among different candidates of imputation and PS models in the following sections.

| Imp, PS Models | Bias | Bias Rate | ESE | RMSE |

|---|---|---|---|---|

| Exp, naive | -0.186 | -12.187 | 0.085 | 0.204 |

| Exp, exp | -0.217 | -14.283 | 0.092 | 0.236 |

| Exp, out | -0.188 | -12.324 | 0.067 | 0.199 |

| Exp, covs | -0.219 | -14.384 | 0.080 | 0.233 |

| \hdashlineOut, naive | -0.290 | -19.069 | 0.109 | 0.310 |

| Out, exp | -0.333 | -21.858 | 0.103 | 0.348 |

| Out, out | -0.230 | -15.074 | 0.059 | 0.237 |

| Out, covs | -0.263 | -17.301 | 0.059 | 0.270 |

| \hdashlineCovs, naive | -0.181 | -11.920 | 0.123 | 0.219 |

| Covs, exp | -0.211 | -13.883 | 0.138 | 0.252 |

| Covs, out | -0.188 | -12.335 | 0.067 | 0.199 |

| Covs, covs | -0.218 | -14.295 | 0.080 | 0.232 |

| \hdashlineRes, naive | 0.102 | 6.679 | 0.176 | 0.204 |

| Res, exp | 0.137 | 9.026 | 0.217 | 0.257 |

| Res, out | -0.046 | -2.996 | 0.107 | 0.116 |

| Res, covs | -0.038 | -2.523 | 0.137 | 0.143 |

| \hdashlineFull, naive | -0.017 | -1.096 | 0.160 | 0.161 |

| Full, exp | -0.011 | -0.710 | 0.194 | 0.194 |

| Full, out | 0.010 | 0.640 | 0.110 | 0.111 |

| Full, covs | 0.023 | 1.498 | 0.137 | 0.139 |

-

•

Here, Exp, Out, Res, and Covs, refer to the exposure-related, outcome-related, response-included, and covariates-included models, respectively. The lowest RMSE is bolded above.

3.2.1 Comparison of Imputation Models

Based on Table 2 and Figure 2, if we focus on the same PS model and compare different imputation models, we find that the full imputation model has resulted in the smallest bias of compared to other imputation models. In contrast, the exposure-related, outcome-related, and all covariates-included imputation models generate huge bias rates.

Furthermore, our simulation results indicate that adding into the imputation model is necessary to reduce the bias of the estimated causal effect. For example, comparing the exposure-related model with the response-included imputation model, Table 2 shows that adding into the imputation model reduces about 10% of bias. To study the effect of , if we compare the exposure-related model versus the covariate-included imputation model, adding into the imputation model does not reduce the large bias if we do not condition on . In contrast, when we condition on , compared with the response-included imputation versus the full imputation model, Figure 2 shows that adding leads to a reduction of bias. These findings are consistent with the discussion of DAG in Section 2, which indicate that even though the true missing model does not include and is not directly correlated with , adding both and into the imputation model can improve the predictive ability.

In summary, even though the true missingness model does not include , all exposure-related, outcome-related covariates and the outcome itself should be included in the imputation model, as the full imputation model results in the smallest bias among others.

3.2.2 Comparison of PS Models

Based on the above discussion, we next focus on the full imputation model and compare the performance of different PS models in Table 2 and Figure 2. We find that all PS models that incorporate the main confounder can lead to very small bias as we have previously discussed that is the “sufficient set” in this case 18. Even though directly causes , adding does not reduce the bias because it only causes the change of the exposure and has no direct impact on the outcome , so is not considered as the main confounder.

In terms of standard errors, the outcome-related PS model with can generate the smallest ESE when we fit the full imputation model. In contrast, both exposure-related and all-covariates PS models lead to an increase in ESE because adding exposure-related variable is redundant, which may generate more extreme PS values to enlarge the variation in finite samples 15. Therefore, we recommend choosing the outcome-related PS model over the exposure-related PS model.

The results using DR estimator are presented in Table A.9 and visualized in Figure A.4 in Section A.2 of Appendix. Overall, we observe a similar trend of model selection using DR estimator as IPW estimator, suggesting that full imputation plus outcome-based PS models can effectively reduce RMSE of compared to other candidate models.

In conclusion, the best model selection is to choose the full imputation plus outcome-related PS models for both IPW or DR estimator, which results in the smallest RMSE of . In contrast, using outcome-related imputation plus exposure-related PS models leads to the largest RMSE, thus is not recommended.

3.3 Simulation Results of Selection Criteria

To evaluate the performance of different criteria discussed in Section 2.4, we calculate the correlation between RMSE and the value of each criterion in Table 4. More specifically, after replications, we have obtained RMSE for all candidate models. Within each replication of simulation studies, we can evaluate each candidate model with different criteria. Then, in the replication, we can calculate the Spearman correlation between the proposed criterion and RMSE of . Table 4 shows the average of the Spearman correlations over 1000 replications.

| Imp, PS Models | Out BIC | ASMD | KS | ABIC | Rank Score | Rank(RMSE) | |

|---|---|---|---|---|---|---|---|

| Exp, naive | 0.586 | 677.561 | 0.161 | 0.130 | 0.608 | 11.789 | 10 |

| Exp, exp | 0.586 | 720.437 | 0.022 | 0.130 | 0.675 | 14.272 | 14 |

| Exp, out | 0.586 | 430.074 | 0.149 | 0.130 | 0.225 | 7.849 | 7 |

| Exp, covs | 0.586 | 433.966 | 0.014 | 0.130 | 0.231 | 8.959 | 13 |

| \hdashlineOut, naive | 0.507 | 683.862 | 0.103 | 0.148 | 0.930 | 15.947 | 19 |

| Out, exp | 0.507 | 724.226 | 0.031 | 0.148 | 0.993 | 18.306 | 20 |

| Out, out | 0.507 | 434.737 | 0.075 | 0.148 | 0.545 | 12.460 | 15 |

| Out, covs | 0.507 | 435.659 | 0.008 | 0.148 | 0.546 | 12.918 | 18 |

| \hdashlineCovs, naive | 0.585 | 676.668 | 0.170 | 0.136 | 0.612 | 11.875 | 11 |

| Covs, exp | 0.585 | 719.239 | 0.033 | 0.136 | 0.678 | 14.354 | 16 |

| Covs, out | 0.585 | 430.103 | 0.148 | 0.136 | 0.231 | 8.043 | 8 |

| Covs, covs | 0.585 | 433.958 | 0.014 | 0.136 | 0.237 | 9.136 | 12 |

| \hdashlineRes, naive | 0.609 | 652.471 | 0.182 | 0.124 | 0.484 | 8.103 | 9 |

| Res, exp | 0.609 | 694.546 | 0.040 | 0.124 | 0.549 | 10.450 | 17 |

| Res, out | 0.609 | 411.095 | 0.154 | 0.124 | 0.110 | 3.993 | 2 |

| Res, covs | 0.609 | 415.906 | 0.015 | 0.124 | 0.118 | 4.580 | 4 |

| \hdashlineFull, naive | 0.622 | 662.369 | 0.172 | 0.138 | 0.430 | 7.463 | 5 |

| Full, exp | 0.622 | 705.051 | 0.034 | 0.138 | 0.497 | 9.941 | 6 |

| Full, out | 0.622 | 401.525 | 0.149 | 0.138 | 0.027 | 1.989 | 1 |

| Full, covs | 0.622 | 406.367 | 0.014 | 0.138 | 0.034 | 2.574 | 3 |

-

•

: weighted accuracy on the observed data; Out BIC: BIC of the outcome model; ASMD: absolute standardized mean difference for ; KS: Kolmogorov-Smirnov distance for ; ABIC: rescaled product of Accuracy and BIC. Rank Score: average ranks of and BIC. The lowest rank and RMSE are bolded above.

From Table 4, we find that adding more variables to the imputation model increases the accuracy values, so the full imputation model is preferred over other candidate models. In addition, if we fit the same imputation model, the naive and exposure-related PS models generally have larger BIC than outcome-related and covariates-included PS models. However, using a single criterion to select both models leads to some limitations. For example, if we just adopt to select imputation and PS models, we cannot distinct between different PS models because the same imputation model will lead to the same value. Similarly, if we only employ BIC to select two models and we choose the same exposure-related PS model, we cannot easily distinguish the performance of the exposure-related vs covariates-included imputation models.

Compared with other criteria, the rank score shows a stronger correlation (close to 0.85) with the RMSE of using either IPW method, which indicates that a smaller value of the rank score is more likely to result in a smaller RMSE. In other words, even though we do not know the true causal effect and RMSE in the application, the rank score is valid for researchers to evaluate the performance of imputation and PS models together. In addition, from Table 3 using IPW method and Table A.10 using DR method, we can confirm that full imputation plus outcome-related PS models have a higher chance to be selected via the “rank score” criterion.

In summary, the full imputation plus outcome-related PS models will result in the smallest rank score as the best choice, consistent with the smallest RMSE. Our proposed rank score combines the performance of both imputation and PS models. As a rank-based criterion, the rank score shows a stronger correlation with the RMSE of compared with other traditional criteria. Based on our simulation results, the rank score can also successfully select the full imputation plus the outcome-related PS models with the smallest RMSE as the best choice. In comparison, if we choose the outcome-related imputation plus exposure-related model, the rank score has the largest value, which is also consistent with the highest RMSE.

| Criterion | IPW Method | DR Method |

|---|---|---|

| 1- | 0.678 | 0.655 |

| ASMD | -0.306 | -0.337 |

| KS | 0.212 | 0.191 |

| Out BIC | 0.682 | 0.702 |

| \hdashlineABIC | 0.793 | 0.785 |

| Rank Score | 0.841 | 0.837 |

-

•

: weighted accuracy on the observed data; Out BIC: BIC of the outcome model; ASMD: absolute standardized mean difference for ; KS: Kolmogorov-Smirnov distance for ; ABIC: rescaled product of Accuracy and BIC. Rank Score: average ranks of and BIC.

4 Application Study

4.1 Background

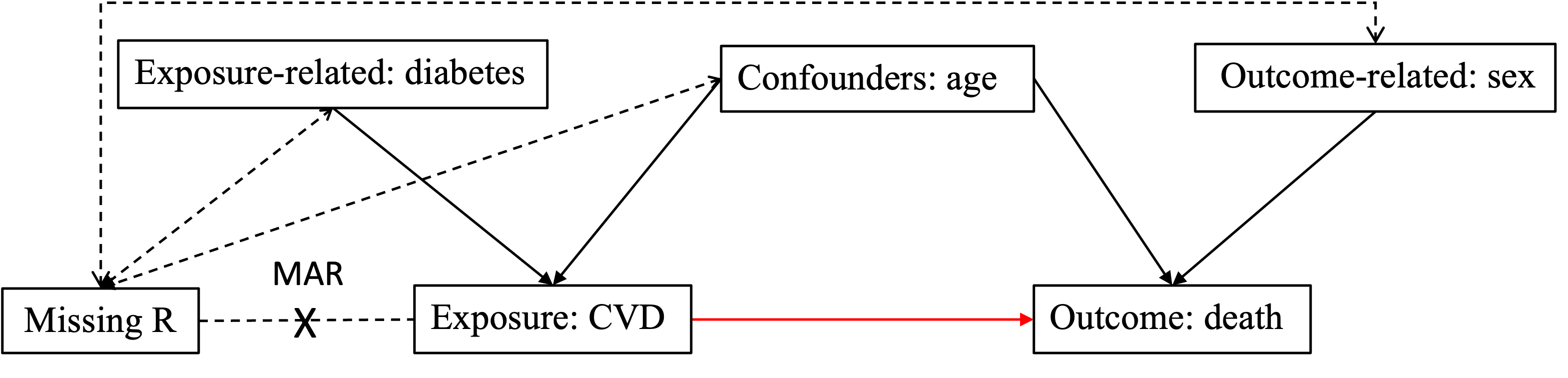

COVID-19 is a highly contagious disease that can lead to severe clinical manifestations and long-term complications for patients. Some researchers have shown that the presence of cardiovascular disease (CVD) is associated with significantly worse mortality in COVID-19 patients 28. However, the associational analysis cannot answer the question of whether CVD directly causes higher mortality or whether other risk factors that lead to CVD are also a common cause of COVID-19 death. In this section, we present an application study on a COVID-19 dataset to investigate the causal effect of CVD (as the exposure) on the mortality of COVID-19 patients (as the outcome) when CVD status is not fully observed. We aim to select both imputation and PS models using the proposed methods and compare their performance.

The data were collected during the second wave of the pandemic in Brazil from June 25th to December 31st, 2020. The dataset contains 2878 COVID-19 patients, of whom 1253 died from COVID-19. Although all patients were exposed to COVID-19, 548 patients had missing information on CVD, resulting in a missing rate of 19%. Demographic and clinical variables were also collected including age, sex, and diabetes without missing data. A summary of the dataset is provided in Tables 5 and6, stratified by the mortality or the missingness, respectively. The independence between mortality and covariates was tested using the Fisher test for categorical variables and the Mann-Whitney test for continuous variables. From Table 5, we find a significant association between age and mortality, which is a well-known risk factor. There are also significant associations between CVD and mortality, which may be influenced by both confounders and missingness in the exposure. However, no significant association is identified between either diabetes or sex with mortality.

| Covariate | Full Sample (n=2878) | Alive (n=1625) | Died (n=1253) | p-value |

|---|---|---|---|---|

| CVD | 0.022 | |||

| No | 802 (34) | 478 (36) | 324 (32) | |

| Yes | 1528 (66) | 835 (64) | 693 (68) | |

| Missing | 548 | 312 | 236 | |

| \hdashlineage | 0.001 | |||

| Mean (sd) | 68.9 (16.3) | 65.4 (16.6) | 73.3 (14.7) | |

| Median (Min,Max) | 72 (0,107) | 67 (0,104) | 76 (0,107) | |

| \hdashlinesex | 0.054 | |||

| Male | 1551 (54) | 850 (52) | 701 (56) | |

| Female | 1327 (46) | 775 (48) | 552 (44) | |

| \hdashlinediabetes | 0.3 | |||

| No | 1117 (39) | 617 (38) | 500 (40) | |

| Yes | 1761 (61) | 1008 (62) | 753 (60) |

| Covariate | Full Sample (n=2878) | Observed (n=2330) | Missing (n=548) | p-value |

|---|---|---|---|---|

| age | 0.099 | |||

| Mean (sd) | 68.9 (16.3) | 69 (16.6) | 68.3 (14.8) | |

| Median (Min,Max) | 72 (0,107) | 72 (0,104) | 71 (1,107) | |

| \hdashlinesex | 0.37 | |||

| Male | 1551 (54) | 1246 (53) | 305 (56) | |

| Female | 1327 (46) | 1084 (47) | 243 (44) | |

| \hdashlinediabetes | 0.001 | |||

| No | 1117 (39) | 1111 (48) | 6 (1) | |

| Yes | 1761 (61) | 1219 (52) | 542 (99) |

In this application, we aim to estimate the causal risk ratio of mortality for patients with CVD versus those without CVD as our main interest in the application study. However, in such observational studies, we cannot simply randomize the exposure status for patients, making it challenging to estimate the causal effect of CVD on COVID-19 mortality in the presence of confounding issues. Meanwhile, since CVD status is not fully observed, we also have to deal with the missing exposure problem, so we need to make assumptions about the missing exposure and confounding issues. From Table 6, we find a significant association between missingness and diabetes. Older COVID-19 patients with chronic diseases, such as diabetes, may be more likely to report whether they have CVD or not, so assuming MCAR in this study is not reasonable. Instead, we adopt the MAR assumption, which assumes that the missing indicator for CVD is conditionally independent of CVD status itself, given other observed variables. In addition, we assume SITA assumption holds, i.e. the potential outcomes are conditionally independent of the CVD given all pre-measured covariates. After that, we intend to apply MICE to impute the missing values and IPW-based estimators to account for confounding issues.

4.2 Model Selection

Since the effect of each clinical variable on CVD status or the outcome may be different, properly selecting imputation and PS models is essential to obtain a valid estimation of the causal effect. However, a DAG in this case is not readily available if we lack sufficient background information in the application. Previous clinical papers show that age and sex are important risk factors for death 29, 30. In addition, many clinical studies find that diabetes and age can affect the risk of CVD 31. Based on the prior knowledge, we can draw a hypothetical causal diagram in Figure 3.

For simplicity, we rename the age variable as , diabetes as , sex as , CVD as the exposure variable , and death as the outcome variable . Then, following the same model setup in the simulation studies described in Section 3, we consider five different imputations and four different PS models as candidate models.

| Imp Model | PS Model | IPW Estimate | DR Estimate | Out BIC | ASMD | KS | Rank Score | |

|---|---|---|---|---|---|---|---|---|

| 0.945 | 0.986 | 0.607 | 3790.876 | 0.050 | 0.292 | 7.5 | ||

| 0.961 | 0.988 | 0.607 | 3796.287 | 0.006 | 0.290 | 12.0 | ||

| 0.950 | 0.991 | 0.607 | 3789.885 | 0.048 | 0.292 | 5.0 | ||

| 0.963 | 0.989 | 0.607 | 3795.815 | 0.008 | 0.290 | 9.5 | ||

| \hdashline | 0.946 | 0.983 | 0.581 | 3790.743 | 0.042 | 0.267 | 12.0 | |

| 0.960 | 0.984 | 0.581 | 3796.138 | 0.006 | 0.264 | 16.5 | ||

| 0.951 | 0.988 | 0.581 | 3789.763 | 0.040 | 0.267 | 9.5 | ||

| 0.962 | 0.986 | 0.581 | 3795.679 | 0.007 | 0.264 | 14.5 | ||

| \hdashline | 0.942 | 0.984 | 0.606 | 3790.866 | 0.050 | 0.294 | 11.0 | |

| 0.958 | 0.985 | 0.606 | 3796.309 | 0.006 | 0.291 | 16.5 | ||

| 0.947 | 0.990 | 0.606 | 3789.882 | 0.049 | 0.294 | 8.5 | ||

| 0.960 | 0.986 | 0.606 | 3795.837 | 0.008 | 0.291 | 14.0 | ||

| \hdashline | 0.942 | 0.985 | 0.606 | 3790.846 | 0.050 | 0.293 | 8.5 | |

| 0.959 | 0.986 | 0.606 | 3796.272 | 0.006 | 0.291 | 13.5 | ||

| 0.947 | 0.990 | 0.606 | 3789.852 | 0.049 | 0.293 | 6.0 | ||

| 0.961 | 0.987 | 0.606 | 3795.797 | 0.008 | 0.291 | 11.0 | ||

| \hdashline | 0.946 | 0.986 | 0.608 | 3790.742 | 0.048 | 0.289 | 3.5 | |

| 0.960 | 0.987 | 0.608 | 3796.144 | 0.006 | 0.287 | 9.0 | ||

| 0.950 | 0.991 | 0.608 | 3789.735 | 0.048 | 0.289 | 1.0 | ||

| 0.962 | 0.988 | 0.608 | 3795.661 | 0.008 | 0.287 | 6.0 |

Without assuming the hypothetical DAG is correct, we apply the rank score to select the best imputation and PS models among the candidates. Table 7 reports the estimated causal effects and the values of each criteria. Based on the proposed rank score, the best imputation model includes “age”, “sex”, “diabetes”, and “death” as the predictors, and the best PS model selects “age” and “sex” as the predictors. This is consistent with the simulation results, i.e., the proposed criterion can still help us select the full imputation model plus the outcome-related PS model as the best choice following the hypothetical DAG we draw in Figure 3.

4.3 Estimation Results

After the model selection, we obtain the estimated causal effect of CVD on mortality using IPW and DR estimators. We also provide confidence intervals (CI) after running bootstrap resamples, as shown in Table 8. We compare two different types of CI including a CI based on bootstrap standard error (BSE) with a normal approximation, called “CI Normal”, and another CI based on bootstrap percentiles (between 2.5% and 97.5% percentiles of bootstrap point estimates), called “CI Percentile” 32.

The estimated causal risk ratio of CVD on the mortality for COVID-19 patients is 0.95 using IPW method with a 95% CI of [0.867, 1.052] using CI Percentile. For DR method, we also obtain a similar result as IPW method.

Although some researchers may presume that patients with CVD status increase the risk of death, our analysis does not support this assumption. Since CI covers the value of 1, we would expect that the statistical test for whether the ratio of patients with CVD is higher than those without CVD is not significant after adjusting for missing data and confounding factors. One possible explanation for this finding is due to the confounding issue, i.e. age is strongly associated with both CVD status and death. Additionally, researchers may have ignored the effect of missing exposure on the estimated results. These results align with recent clinical research 33, 34, which suggests that those CVD risk factors, such as age, rather than CVD status itself, are the primary contributors to mortality.

One of the limitations of the application study is that we only include four clinical variables. Since we lack enough observations of other variables in the real dataset, we may miss some relevant confounders in the analysis. In addition, the model selection and estimated results are only based on the samples in Brazil instead of the world population.

| Method | Selected Imputation, PS Models | Estimate (risk ratio) | BSE | CI Normal | CI Percentile |

|---|---|---|---|---|---|

| IPW | , | 0.950 | 0.048 | (0.856,1.044) | (0.867,1.052) |

| DR | , | 0.991 | 0.050 | (0.897,1.085) | (0.905,1.098) |

-

•

Estimate: causal effect (as the risk ratio) of CVD on mortality. BSE: bootstrap standard errors. CI-Normal: 95% bootstrap normal confidence interval using BSE. CI-Percentile: 95% bootstrap percentile confidence interval.

5 Discussion

In causal inference, when the exposure is MAR, a common practice is to impute the missing values and then estimate causal effects using the imputed data. Model selection on both imputation and PS models is a challenging problem when the exposure is MAR, and simply selecting the imputation and PS models with the same set of exposure-related covariates is not appropriate. In addition, few studies discuss the appropriate criterion for selecting these models when the true value is unknown in the application studies.

In this paper, we conduct simulation studies to investigate the effect of different imputation and PS models on the estimated causal effect. Unexpectedly, we find that even though the true missingness model does not depend on the outcome in our simulation setup, selecting the outcome and outcome-related variables into the imputation model can improve the accuracy of the imputed exposure and reduce the bias of the estimated causal effect. In addition, we propose “rank score” as a rank-based and unit-free criterion to evaluate the performance of both imputation and PS models, which shows a higher correlation with RMSE in simulation studies.

The limitation of the rank score is that it requires ranking both and BIC among all candidate models, which may be affected by the pool of candidate models. If the number of covariates in the dataset is small, we can recommend fitting all possible combinations of models. On the other hand, when the number of candidate models is very large, ranking all candidate models becomes time-consuming. To address this issue, researchers can perform model selection sequentially, i.e. we use to select the best imputation model, and then we select PS model through BIC given that imputation model. However, such a sequential strategy is not ideal in some cases because the selection of PS model is only conditioned on the selected imputation model from the first step, which may not lead to the overall best models with the smallest RMSE. On the other hand, the rank score is similar to the “best subset” strategy because both accuracy and BIC contribute equally to the rank score, ensuring a balance between the rankings of imputation and PS models.

Further research can extend the proposed model selection criterion into multiple areas such as MAR on both exposure and outcome. Besides that, one can also consider other missing mechanisms such as MNAR. Additionally, it may be interesting for researchers to incorporate missing exposure into other complex settings, such as longitudinal or survival data in future studies.

6 Acknowledgements

We appreciate NSERC grants to support our research work and IntegraSUS 111https://integrasus.saude.ce.gov.br/ for publicly sharing the COVID-19 data in Brazil. We would also like to thank Wei Liang for his great suggestions on the Appendix.

References

- 1 Rubin DB. Inference and missing data. Biometrika 1976; 63(3): 581–592.

- 2 Bang H, Robins JM. Doubly robust estimation in missing data and causal inference models. Biometrics 2005; 61(4): 962–973.

- 3 Williamson EJ, Forbes A, Wolfe R. Doubly robust estimators of causal exposure effects with missing data in the outcome, exposure or a confounder. Statistics in Medicine 2012; 31(30): 4382–4400.

- 4 Zhang Z, Liu W, Zhang B, Tang L, Zhang J. Causal inference with missing exposure information: Methods and applications to an obstetric study. Statistical Methods in Medical Research 2016; 25(5): 2053–2066.

- 5 Rosenbaum PR, Rubin DB. The central role of the propensity score in observational studies for causal effects. Biometrika 1983; 70(1): 41–55.

- 6 Donders ART, Van Der Heijden GJ, Stijnen T, Moons KG. A gentle introduction to imputation of missing values. Journal of Clinical Epidemiology 2006; 59(10): 1087–1091.

- 7 Buuren vS, Boshuizen HC, Knook DL. Multiple imputation of missing blood pressure covariates in survival analysis. Statistics in Medicine 1999; 18(6): 681–694.

- 8 van Buuren S, Groothuis-Oudshoorn K. mice: Multivariate Imputation by Chained Equations in R. Journal of Statistical Software 2011; 45(3): 1-67. doi: 10.18637/jss.v045.i03

- 9 Rubin DB. Multiple imputation for nonresponse in surveys. 81. John Wiley & Sons . 2004.

- 10 Neyman JS. On the application of probability theory to agricultural experiments. essay on principles. section 9.(tlanslated and edited by dm dabrowska and tp speed, statistical science (1990), 5, 465-480). Annals of Agricultural Sciences 1923; 10: 1–51.

- 11 Scharfstein DO, Rotnitzky A, Robins JM. Adjusting for nonignorable drop-out using semiparametric nonresponse models. Journal of the American Statistical Association 1999; 94(448): 1096–1120.

- 12 Robins JM, Rotnitzky A, Zhao LP. Estimation of regression coefficients when some regressors are not always observed. Journal of the American Statistical Association 1994; 89(427): 846–866.

- 13 Kennedy EH. Efficient nonparametric causal inference with missing exposure information. The International Journal of Biostatistics 2020; 16(1): 20190087.

- 14 Seaman SR, White IR. Review of inverse probability weighting for dealing with missing data. Statistical Methods in Medical Research 2013; 22(3): 278–295.

- 15 Brookhart MA, Schneeweiss S, Rothman KJ, Glynn RJ, Avorn J, Stürmer T. Variable selection for propensity score models. American Journal of Epidemiology 2006; 163(12): 1149–1156.

- 16 Rubin DB. On principles for modeling propensity scores in medical research.. Pharmacoepidemiology and Drug Safety 2004; 13(12): 855–857.

- 17 Rosenbaum PR, Rubin DB. The central role of the propensity score in observational studies for causal effects. Biometrika 1983; 70(1): 41–55.

- 18 Tanner-Smith EE, Lipsey MW. Identifying baseline covariates for use in propensity scores: A novel approach illustrated for a nonrandomized study of recovery high schools. Peabody Journal of Education 2014; 89(2): 183–196.

- 19 Glymour M, Pearl J, Jewell NP. Causal inference in statistics: A primer. John Wiley & Sons . 2016.

- 20 Horvitz DG, Thompson DJ. A generalization of sampling without replacement from a finite universe. Journal of the American Statistical Association 1952; 47(260): 663–685.

- 21 Lilliefors HW. On the Kolmogorov-Smirnov test for normality with mean and variance unknown. Journal of the American Statistical Association 1967; 62(318): 399–402.

- 22 Berger VW, Zhou Y. Kolmogorov–smirnov test: Overview. Wiley statsref: Statistics reference online 2014.

- 23 Franklin JM, Rassen JA, Ackermann D, Bartels DB, Schneeweiss S. Metrics for covariate balance in cohort studies of causal effects. Statistics in Medicine 2014; 33(10): 1685–1699.

- 24 Bhattacharya J, Vogt WB. Do instrumental variables belong in propensity scores?. Working Paper 343, National Bureau of Economic Research 2007.

- 25 Zhu Y, Schonbach M, Coffman DL, Williams JS. Variable selection for propensity score estimation via balancing covariates. Epidemiology 2015; 26(2): e14–e15.

- 26 Westreich D, Cole SR, Funk MJ, Brookhart MA, Stürmer T. The role of the c-statistic in variable selection for propensity score models. Pharmacoepidemiology and Drug Safety 2011; 20(3): 317–320.

- 27 Patrick AR, Schneeweiss S, Brookhart MA, et al. The implications of propensity score variable selection strategies in pharmacoepidemiology: an empirical illustration. Pharmacoepidemiology and Drug Safety 2011; 20(6): 551–559.

- 28 Kassir R. Risk of COVID-19 for patients with obesity. Obesity Reviews 2020; 21(6).

- 29 Dana PM, Sadoughi F, Hallajzadeh J, et al. An insight into the sex differences in COVID-19 patients: what are the possible causes?. Prehospital and Disaster Medicine 2020; 35(4): 438–441.

- 30 Abdi A, Jalilian M, Sarbarzeh PA, Vlaisavljevic Z. Diabetes and COVID-19: A systematic review on the current evidences. Diabetes Research and Clinical Practice 2020; 166: 108347.

- 31 Bertoluci MC, Rocha VZ. Cardiovascular risk assessment in patients with diabetes. Diabetology & Metabolic Syndrome 2017; 9: 1–13.

- 32 Tibshirani RJ, Efron B. An introduction to the bootstrap. Monographs on Statistics and Applied Probability 1993; 57: 1–436.

- 33 Di Castelnuovo A, Bonaccio M, Costanzo S, et al. Common cardiovascular risk factors and in-hospital mortality in 3,894 patients with COVID-19: survival analysis and machine learning-based findings from the multicentre Italian CORIST Study. Nutrition, Metabolism and Cardiovascular Diseases 2020; 30(11): 1899–1913.

- 34 Vasbinder A, Meloche C, Azam TU, et al. Relationship between preexisting cardiovascular disease and death and cardiovascular outcomes in critically ill patients with COVID-19. Circulation: Cardiovascular Quality and Outcomes 2022; 15(10): e008942.

A.1 Proof of the Validity of Weighted Accuracy

The key idea of weighted accuracy is to create a pseudo sample after weighting on those observed individuals so that the weighted accuracy on the observed dataset can represent the original accuracy on the whole dataset. Based on MAR assumption, if the missingness model is correct, under the true value of , we can prove that,

| (A.6) | ||||

Here the first equality holds because is an indicator of whether the individual is randomly selected into the testing data or not, which is free of . Its expectation is an arbitrarily chosen ratio, which can also be cancelled. The second equality holds because after taking double expectation with the condition of , we know based on the MAR assumption. The third equality holds because the full missingness model is correctly specified, so we know . After taking the double expectation again, we know will converge to the true accuracy on the original data as .

Notice that Equation A.6 also describes why we cannot only use the naive approach to estimate the accuracy on the observed data, written as . In such a way, the missing indicator will largely affect the naive estimator, which cannot be cancelled without the weights . Therefore, to properly evaluate the performance of the imputation model, we need to adopt the inverse weights of the missingness on the estimated accuracy of the observed data.

In the simulation studies, since we know the true missing values, we can also impute all missing values on the whole data via MICE and calculate the standard accuracy, called the “Benchmark-Accuracy” on the original data. In such a way, the bias between and Benchmark-Accuracy is close to 0.008 (the results are omitted in the simulation section), which supports the statement that the weighted accuracy will converge to the true accuracy as . However, we will not know the true accuracy in the application due to the unknown missing data, so will be a useful tool for researchers to evaluate the selected imputation model.

A.2 Estimate Risk Ratio for Binary Outcome using DR method

| Imp, PS Models | Bias | Bias Rate | ESE | RMSE |

|---|---|---|---|---|

| Exp, naive | -0.186 | -12.198 | 0.084 | 0.204 |

| Exp, exp | -0.222 | -14.553 | 0.085 | 0.237 |

| Exp, out | -0.188 | -12.374 | 0.065 | 0.199 |

| Exp, covs | -0.225 | -14.804 | 0.066 | 0.235 |

| \hdashlineOut, naive | -0.291 | -19.087 | 0.109 | 0.310 |

| Out, exp | -0.321 | -21.056 | 0.103 | 0.337 |

| Out, out | -0.226 | -14.859 | 0.058 | 0.233 |

| Out, covs | -0.264 | -17.354 | 0.057 | 0.270 |

| \hdashlineCovs, naive | -0.182 | -11.933 | 0.123 | 0.219 |

| Covs, exp | -0.215 | -14.105 | 0.134 | 0.253 |

| Covs, out | -0.189 | -12.392 | 0.065 | 0.200 |

| Covs, covs | -0.225 | -14.786 | 0.067 | 0.235 |

| \hdashlineRes, naive | 0.102 | 6.679 | 0.176 | 0.204 |

| Res, exp | 0.133 | 8.763 | 0.211 | 0.250 |

| Res, out | -0.056 | -3.654 | 0.100 | 0.114 |

| Res, covs | -0.066 | -4.350 | 0.115 | 0.132 |

| \hdashlineFull, naive | -0.017 | -1.102 | 0.160 | 0.161 |

| Full, exp | -0.015 | -0.977 | 0.189 | 0.189 |

| Full, out | -0.010 | -0.660 | 0.104 | 0.104 |

| Full, covs | -0.011 | -0.691 | 0.120 | 0.120 |

-

•

Here, Exp, Out, Res, and Covs, refer to the exposure-related, outcome-related, response-included, and covariates-included models, respectively. The lowest RMSE is bolded above.

| Imp, PS Models | Out BIC | ASMD | KS | ABIC | Rank Score | Rank(RMSE) | |

|---|---|---|---|---|---|---|---|

| Exp, naive | 0.586 | 677.561 | 0.161 | 0.130 | 0.608 | 11.789 | 10 |

| Exp, exp | 0.586 | 720.437 | 0.022 | 0.130 | 0.675 | 14.272 | 15 |

| Exp, out | 0.586 | 430.074 | 0.149 | 0.130 | 0.225 | 7.849 | 7 |

| Exp, covs | 0.586 | 433.966 | 0.014 | 0.130 | 0.231 | 8.959 | 14 |

| \hdashlineOut, naive | 0.507 | 683.862 | 0.103 | 0.148 | 0.930 | 15.947 | 19 |

| Out, exp | 0.507 | 724.226 | 0.031 | 0.148 | 0.993 | 18.306 | 20 |

| Out, out | 0.507 | 434.737 | 0.075 | 0.148 | 0.545 | 12.460 | 12 |

| Out, covs | 0.507 | 435.659 | 0.008 | 0.148 | 0.546 | 12.918 | 18 |

| \hdashlineCovs, naive | 0.585 | 676.668 | 0.170 | 0.136 | 0.612 | 11.875 | 11 |

| Covs, exp | 0.585 | 719.239 | 0.033 | 0.136 | 0.678 | 14.354 | 17 |

| Covs, out | 0.585 | 430.103 | 0.148 | 0.136 | 0.231 | 8.043 | 8 |

| Covs, covs | 0.585 | 433.958 | 0.014 | 0.136 | 0.237 | 9.136 | 13 |

| \hdashlineRes, naive | 0.609 | 652.471 | 0.182 | 0.124 | 0.484 | 8.103 | 9 |

| Res, exp | 0.609 | 694.546 | 0.040 | 0.124 | 0.549 | 10.450 | 16 |

| Res, out | 0.609 | 411.095 | 0.154 | 0.124 | 0.110 | 3.993 | 2 |

| Res, covs | 0.609 | 415.906 | 0.015 | 0.124 | 0.118 | 4.580 | 4 |

| \hdashlineFull, naive | 0.622 | 662.369 | 0.172 | 0.138 | 0.430 | 7.463 | 5 |

| Full, exp | 0.622 | 705.051 | 0.034 | 0.138 | 0.497 | 9.941 | 6 |

| Full, out | 0.622 | 401.525 | 0.149 | 0.138 | 0.027 | 1.989 | 1 |

| Full, covs | 0.622 | 406.367 | 0.014 | 0.138 | 0.034 | 2.574 | 3 |

-

•

: weighted accuracy on the observed data; Out BIC: BIC of the outcome model; ASMD: absolute standardized mean difference for ; KS: Kolmogorov-Smirnov distance for ; ABIC: rescaled product of Accuracy and BIC. Rank Score: average ranks of and BIC. The lowest rank and RMSE are bolded above.